Abstract

The decomposition-based multi-objective evolutionary algorithm (MOEA/D) has shown remarkable effectiveness in solving multi-objective problems (MOPs). In this paper, we integrate the quantum-behaved particle swarm optimization (QPSO) algorithm with the MOEA/D framework so that QPSO can solve MOPs effectively while its advantages are fully exploited. We also employ a diversity controlling mechanism to avoid premature convergence, especially at the later stage of the search process, and thus further improve the performance of the proposed algorithm. In addition, we use a set of nondominated solutions to generate the global best that guides the other particles in the swarm. Experiments are conducted to compare the proposed algorithm, DMO-QPSO, with four multi-objective particle swarm optimization algorithms and one multi-objective evolutionary algorithm on 15 test functions, including both bi-objective and tri-objective problems. The results show that DMO-QPSO performs better than the other five algorithms on most of these test problems. Moreover, we further study the impact of two different decomposition approaches, i.e., the penalty-based boundary intersection (PBI) and Tchebycheff (TCH) approaches, as well as the polynomial mutation operator, on the algorithmic performance of DMO-QPSO.

1. Introduction

The particle swarm optimization (PSO) algorithm, originally proposed by Kennedy and Eberhart in 1995, is a population-based metaheuristic that imitates the social behavior of flocking birds [1]. In PSO, each particle is treated as a potential solution, and all particles follow their own experiences and the current optimal particle to fly through the solution space. As it requires few parameters to adjust and can be easily implemented, PSO has been widely applied to real-world optimization problems, including circuit design [2], job scheduling [3], data mining [4], path planning [5,6] and protein-ligand docking [7]. In 1999, Moore and Chapman extended PSO to solve multi-objective problems (MOPs) for the first time [8]. Since then, researchers from different communities have shown great interest in tackling MOPs with PSO. For example, Coello and Lechuga [9] introduced a proposal for multi-objective PSO, denoted as MOPSO, which determines particles’ flight directions by using the concept of Pareto dominance and adopts a global repository to store previously found nondominated solutions. Later in 2004, Coello et al. [10] presented an enhanced version of MOPSO which employs a mutation operator and a constraint-handling mechanism to improve the algorithmic performance of the original MOPSO. Raquel and Prospero [11] proposed the MOPSO-CD algorithm that selects the global best and updates the external archive of nondominated solutions by calculating the crowding distance of particles. In 2008, Peng and Zhang developed a new MOPSO algorithm adopting a decomposition approach, called MOPSO/D [12]. It is based on a framework named MOEA/D [13], which converts an MOP into a number of single-objective optimization sub-problems and then solves all these sub-problems simultaneously. In MOPSO/D, the particle’s global best is defined by the solutions located within a certain neighborhood. Moubayed et al. [14] proposed a novel smart MOPSO based on decomposition (SDMOPSO) that realizes the information exchange between neighboring particles with fewer objective function evaluations and stores the leaders of the whole particle swarm using a crowding archive. dMOPSO, proposed by Martinez and Coello [15], selects the global best from a set of solutions according to the decomposition approach and thus updates each particle’s position. Moubayed et al. [16] realized a MOPSO, called D2MOPSO, which hybridizes the approaches of dominance and decomposition and introduces an archiving technique using crowding distance. Several other MOPSOs proposed in recent years have also proved to be effective in solving complex MOPs, such as MPSO/D [17], MMOPSO [18], AgMOPSO [19], CMOPSO [20] and CMaPSO [21].

The quantum-behaved PSO (QPSO), proposed by Sun et al. [22], is a variant of PSO inspired by quantum mechanics and the trajectory analysis of PSO. The trajectory analysis clarified the idea that each particle in PSO is in a bound state. Specifically, each particle in PSO oscillates around and converges to its local attractor [23]. In QPSO, the particle is assumed to have quantum behavior and further assumed to be attracted by a quantum delta potential well centered on its local attractor. Additionally, the concept of the mean best position was defined and employed in this algorithm to update particles’ positions. In terms of the update equation, QPSO differs from PSO in two respects: it has no velocity vector to update for each particle, and it requires fewer parameters to adjust [24]. Owing to these advantages, we incorporate QPSO into the original MOEA/D framework in order to obtain a more effective algorithm for solving MOPs than other decomposition-based MOPSOs that use the canonical PSO.

QPSO and other PSO variants generally converge fast because of the extensive information exchange among particles. This is why such algorithms solve optimization problems more efficiently than other population-based random search algorithms. Fast convergence implies a rapid decline in diversity, which is desirable for finding satisfactory solutions quickly during the early stage of the search process. However, a rapid decline in diversity during the later stage of the search process results in the aggregation of particles around the global best position and, in turn, the stagnation of the whole particle swarm (i.e., premature convergence).

Diversity maintenance is also essential when extending PSO to solve MOPs. During the past decade, researchers have done a lot of work on developing novel techniques to maintain diversity in their MOPSOs. For example, Qiu et al. [25] introduced a novel global best selection method, based on proportional distribution and the K-means algorithm, to make particles converge to the Pareto front quickly while maintaining diversity. Cheng et al. [26] presented the IMOPSO-PS algorithm, in which a preference strategy is applied for optimal distributed generation (DG) integration into the distribution system. This algorithm uses a circular nondominated selection of particles from one iteration to the next and performs mutation on particles to enhance the swarm diversity during the search process.

In this paper, we propose a multi-objective quantum-behaved particle swarm optimization algorithm based on decomposition, named DMO-QPSO, which integrates the QPSO with the original MOEA/D framework and uses a strategy of diversity control. As in the literature [12,13], a neighborhood relationship is defined according to distances between the weight vectors of different sub-problems. Each sub-problem is solved utilizing the information only from its neighboring sub-problems. However, with the increasing number of iterations, the current best solutions to the neighbors of a sub-problem may get close to each other. This may result in a diversity loss of the new population produced in the next iteration, particularly at the later stage of the search process. Therefore, in DMO-QPSO, we do not adopt the neighborhood relationship described in the framework of MOEA/D and MOPSO/D. Meanwhile, we introduce a two-phased diversity controlling mechanism to make particles alternate between attraction and explosion states according to the swarm diversity. Particles move through the search space in the phase of attraction unless the swarm diversity declines to a threshold value that triggers the phase of explosion. Additionally, unlike MOPSO/D in which the global best is updated according to a decomposition approach, the proposed DMO-QPSO uses a vector set to store a pre-defined number of nondominated solutions and then randomly picks one as the current global best. All solutions in this vector set would have a chance to guide the movement of the whole particle swarm. The penalty-based boundary intersection (PBI) approach [27] is used in the algorithm owing to its advantage over other decomposition methods including the weighted sum (WS) and the Tchebycheff (TCH) [13].

The rest of this paper is organized as follows. Some preliminaries of MOP, PSO, QPSO and the framework of MOEA/D are given in Section 2. Section 3 describes the procedure of our proposed DMO-QPSO algorithm in detail. Section 4 presents the experimental results and analysis. Further discussion of DMO-QPSO is presented in Section 5. Finally, the paper is concluded in the last section.

2. Preliminaries

In this section, we first state the definition of MOPs and then describe the basic principles of the canonical PSO and QPSO. After that, some of the most commonly used decomposition methods and the original MOEA/D framework are presented.

2.1. Multi-Objective Optimization

A multi-objective optimization problem (MOP) can be stated as follows.

$$\min F(x) = (f_1(x), \ldots, f_m(x))^T \quad \text{subject to } x \in \Omega \tag{1}$$

where $x = (x_1, \ldots, x_D)$ is the decision variable vector, $\Omega$ is the decision (variable) space, and $m$ is the number of the real-valued objective functions. $F: \Omega \to \mathbb{R}^m$ is the objective function vector, where $\mathbb{R}^m$ is the objective space. The objectives in an MOP are mutually conflicting, so no single solution can minimize all the objectives at the same time. Improvement of one objective may lead to deterioration of another. In this situation, the Pareto optimal solutions become the best tradeoffs among different objectives. Therefore, most multi-objective optimization algorithms are designed to find a finite number of Pareto optimal solutions that approximate the Pareto front (PF) and serve as good representatives of the whole PF [28,29,30,31]. In order to better understand the concept of Pareto optimality [32], some definitions are provided as follows.

Definition 1.

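Let $u, v \in \mathbb{R}^m$. The vector $u$ dominates $v$, denoted as $u \prec v$, if and only if $u_i \le v_i$ for all $i \in \{1, \ldots, m\}$ and $u \ne v$.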

Definition 2.

Let $x^* \in \Omega$. If no solution $x$ exists in $\Omega$ such that $F(x) \prec F(x^*)$, then $x^*$ is a Pareto optimal solution to the MOP in Equation (1), and $F(x^*)$ is a Pareto optimal (objective) vector. The set of all the Pareto optimal solutions is called the Pareto set (PS), denoted by $PS$. The set of all the Pareto optimal (objective) vectors is called the Pareto front (PF), denoted by $PF$.
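
The two definitions above can be illustrated with a minimal Python sketch for a minimization problem. The function names `dominates` and `nondominated` are hypothetical helpers introduced only for this illustration, and the quadratic-time filter is chosen for readability rather than efficiency.

```python
from typing import List, Sequence


def dominates(u: Sequence[float], v: Sequence[float]) -> bool:
    """Return True if objective vector u Pareto-dominates v (minimization)."""
    no_worse = all(a <= b for a, b in zip(u, v))
    strictly_better = any(a < b for a, b in zip(u, v))
    return no_worse and strictly_better


def nondominated(points: List[Sequence[float]]) -> List[Sequence[float]]:
    """Keep only the vectors that no other vector in the list dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


if __name__ == "__main__":
    # (3.0, 3.0) is dominated by (2.0, 2.0); the other three are tradeoffs.
    objs = [(1.0, 4.0), (2.0, 2.0), (3.0, 3.0), (4.0, 1.0)]
    print(nondominated(objs))
```

Note that a vector never dominates itself under this definition, so the filter does not need to exclude the point being tested.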

2.2. Particle Swarm Optimization

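In the canonical PSO algorithm with $N$ particles, each particle $i$ has a position vector $X_i = (X_i^1, \ldots, X_i^D)$ and a velocity vector $V_i = (V_i^1, \ldots, V_i^D)$. $D$ is the dimension of the search space. During each iteration $t$, particle $i$ in the swarm is updated according to its personal best ($pbest$) position $P_i$ and the global best ($gbest$) position $G$ found by the whole swarm. The update strategies are presented as follows.

$$V_i^j(t+1) = w V_i^j(t) + c_1 r_1 \left(P_i^j(t) - X_i^j(t)\right) + c_2 r_2 \left(G^j(t) - X_i^j(t)\right) \tag{2}$$

$$X_i^j(t+1) = X_i^j(t) + V_i^j(t+1) \tag{3}$$

for $i = 1, \ldots, N$ and $j = 1, \ldots, D$, where $w$ is the inertia weight, $c_1, c_2$ are the learning factors, and $r_1, r_2$ are two random variables uniformly distributed on (0, 1).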
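
As a rough illustration of the canonical PSO update described above, the following sketch advances one particle by one iteration. The function name `pso_step` and the default values of the inertia weight and learning factors are assumptions made for this example; they are not settings taken from this paper.

```python
import random


def pso_step(x, v, pbest, gbest, w=0.7, c1=1.5, c2=1.5, rng=random):
    """One velocity and position update for a single particle.

    x, v, pbest and gbest are lists of equal length (one entry per dimension).
    w, c1 and c2 are illustrative defaults, not values from the paper.
    """
    new_x, new_v = [], []
    for j in range(len(x)):
        # Fresh random factors per dimension (rng.random() samples [0, 1)).
        r1, r2 = rng.random(), rng.random()
        vj = (w * v[j]
              + c1 * r1 * (pbest[j] - x[j])    # pull towards the personal best
              + c2 * r2 * (gbest[j] - x[j]))   # pull towards the global best
        new_v.append(vj)
        new_x.append(x[j] + vj)
    return new_x, new_v


if __name__ == "__main__":
    print(pso_step([0.0, 0.0], [0.1, -0.1], [1.0, 1.0], [2.0, 2.0]))
```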

2.3. Quantum-Behaved Particle Swarm Optimization

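In the quantum-behaved PSO (QPSO) algorithm with $N$ particles, each particle $i$ has a position vector $X_i = (X_i^1, \ldots, X_i^D)$ and a personal best ($pbest$) position $P_i = (P_i^1, \ldots, P_i^D)$. $D$ is the dimension of the search space. During each iteration $t$, particle $i$ in the swarm is updated as follows.

$$X_i^j(t+1) = p_i^j(t) \pm \alpha \left| C^j(t) - X_i^j(t) \right| \ln\frac{1}{u_i^j(t)} \tag{4}$$

for $i = 1, \ldots, N$ and $j = 1, \ldots, D$, where $u_i^j(t)$ is a random number distributed on (0, 1) uniformly, and $p_i(t)$ is the local attractor of particle $i$, calculated by

$$p_i^j(t) = \varphi_i^j(t) P_i^j(t) + \left(1 - \varphi_i^j(t)\right) G^j(t) \tag{5}$$

$\varphi_i^j(t)$ is a random number distributed on (0, 1) uniformly and $G(t)$ is the global best ($gbest$) position found by particles during the search process. The contraction-expansion coefficient $\alpha$ was designed to control the convergence speed of the QPSO algorithm. $C(t)$ is the mean of the personal best positions of all the particles, namely, the $mbest$ position, and it can be calculated as below.

$$C(t) = \frac{1}{N} \sum_{i=1}^{N} P_i(t) \tag{6}$$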
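
The QPSO update can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the names `mean_best` and `qpso_step` are hypothetical, and choosing the sign of the step with probability 0.5 is the usual convention in the QPSO literature rather than something spelled out in the text above.

```python
import math
import random


def mean_best(pbests):
    """Mean best position: component-wise average of all personal bests."""
    n = len(pbests)
    return [sum(p[j] for p in pbests) / n for j in range(len(pbests[0]))]


def qpso_step(x, pbest, gbest, mbest, alpha, rng=random):
    """One QPSO position update for a single particle (no velocity needed)."""
    new_x = []
    for j in range(len(x)):
        phi = rng.random()
        u = 1.0 - rng.random()  # lies in (0, 1], so log(1 / u) is finite
        # Local attractor: random convex combination of pbest and gbest.
        attractor = phi * pbest[j] + (1.0 - phi) * gbest[j]
        step = alpha * abs(mbest[j] - x[j]) * math.log(1.0 / u)
        # Assumption: each sign is taken with probability 0.5.
        new_x.append(attractor + step if rng.random() < 0.5 else attractor - step)
    return new_x


if __name__ == "__main__":
    pbests = [[0.0, 2.0], [2.0, 4.0]]
    c = mean_best(pbests)
    print(qpso_step([0.5, 3.5], pbests[0], pbests[1], c, alpha=0.75))
```

Because the step length scales with the distance between the particle and the mean best position, a particle sitting exactly on the mean best position simply jumps to its local attractor.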

2.4. The Decomposition Approaches

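In the state-of-the-art multi-objective optimization algorithms based on decomposition, the most commonly used decomposition approaches are the Tchebycheff (TCH), the weighted sum (WS), and the penalty-based boundary intersection (PBI) approaches [13,28]. These methods decompose an MOP into a finite group of single-objective optimization sub-problems, so that an algorithm can solve these sub-problems effectively and efficiently. Let $\lambda_i = (\lambda_i^1, \ldots, \lambda_i^m)^T$ be a weight vector for the $i$th sub-problem ($i = 1, \ldots, N$), satisfying $\sum_{j=1}^{m} \lambda_i^j = 1$ and $\lambda_i^j \ge 0$ for all $j = 1, \ldots, m$; and let $z^* = (z_1^*, \ldots, z_m^*)^T$ be a reference point. Below are the definitions of the TCH and PBI approaches, which will be used later in this paper.

- Tchebycheff (TCH) approach: In the TCH approach, the sub-problem is defined as

  $$\min\; g^{tch}(x \mid \lambda, z^*) = \max_{1 \le j \le m} \left\{ \lambda^j \left| f_j(x) - z_j^* \right| \right\}, \quad \text{subject to } x \in \Omega$$

- Penalty-based boundary intersection (PBI) approach: In the PBI approach, the sub-problem is defined as

  $$\min\; g^{pbi}(x \mid \lambda, z^*) = d_1 + \theta d_2, \quad \text{subject to } x \in \Omega$$

  $$d_1 = \frac{\left\| (F(x) - z^*)^T \lambda \right\|}{\left\| \lambda \right\|}, \qquad d_2 = \left\| F(x) - \left( z^* + d_1 \frac{\lambda}{\left\| \lambda \right\|} \right) \right\|$$

  where $\| \cdot \|$ means the $L_2$-norm, and $\theta$ is a penalty parameter.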
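
To make the two scalarizing functions concrete, here is a small Python sketch. The names `tchebycheff` and `pbi` are hypothetical; the PBI variant shown is the common form for minimization problems, in which the distance along the weight direction is measured from the reference point, and the default penalty of 5.0 matches the value reported in Section 4.2.

```python
import math


def tchebycheff(f, lam, z):
    """TCH value: largest weighted deviation from the reference point z."""
    return max(l * abs(fj - zj) for fj, l, zj in zip(f, lam, z))


def pbi(f, lam, z, theta=5.0):
    """PBI value d1 + theta * d2 for objective vector f and weight vector lam."""
    norm = math.sqrt(sum(l * l for l in lam))
    diff = [fj - zj for fj, zj in zip(f, z)]
    # d1: length of the projection of (f - z) onto the weight direction.
    d1 = abs(sum(d * l for d, l in zip(diff, lam))) / norm
    # d2: distance from f to its projection on the line through z along lam.
    d2 = math.sqrt(sum((d - d1 * l / norm) ** 2 for d, l in zip(diff, lam)))
    return d1 + theta * d2


if __name__ == "__main__":
    lam, z = (0.5, 0.5), (0.0, 0.0)
    print(tchebycheff((2.0, 0.0), lam, z), pbi((2.0, 0.0), lam, z))
```

A point lying exactly on the weight direction has a perpendicular distance of zero, so its PBI value reduces to its distance from the reference point; points off that line are penalized in proportion to the penalty parameter.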

2.5. MOEA/D

MOEA/D divides an MOP into $N$ single-objective optimization sub-problems and attempts to optimize all these sub-problems simultaneously rather than solving the MOP directly. These sub-problems are linked together by their neighborhoods. The neighborhood of sub-problem $i$ is defined as the $T$ sub-problems whose weight vectors are the closest ones to its weight vector $\lambda_i$, and thus the neighborhood size of sub-problem $i$ is $T$.

The MOEA/D algorithm maintains a population of solutions $x_1, \ldots, x_N$, where $x_i$ is a feasible solution to the sub-problem $i$. $FV_i$ is the $F$-value (i.e., the fitness value) of $x_i$, that is, $FV_i = F(x_i)$. $z = (z_1, \ldots, z_m)^T$ is a reference point and $z_j$ is the minimal value of objective $f_j$ found so far. $EP$ is an external population used to store the nondominated solutions found during the search process. The main framework of MOEA/D is described in Algorithm 1.

| Algorithm 1 Framework of MOEA/D | |
| --- | --- |
| Input: The number of sub-problems, i.e., the population size, $N$; The set of weight vectors, $\{\lambda_1, \ldots, \lambda_N\}$; The neighborhood size, $T$; | |
| Output: $EP$; | |
| 1: | $EP = \emptyset$; |
| 2: | Calculate the Euclidean distances between any two weight vectors; |
| 3: | Successively select $T$ weight vectors which are the closest to the weight vector $\lambda_i$ and store the indexes of these weight vectors in $B(i)$; |
| 4: | Generate an initial population $x_1, \ldots, x_N$ randomly; |
| 5: | Evaluate $FV_i = F(x_i)$; |
| 6: | Initialize $z = (z_1, \ldots, z_m)^T$; |
| 7: | while termination criterion is not fulfilled do |
| 8: | for $i = 1, \ldots, N$ do |
| 9: | Select two indexes $k, l$ randomly from $B(i)$; |
| 10: | Use the genetic operators to produce a new solution $y$ from $x_k$ and $x_l$; |
| 11: | Repair $y$; |
| 12: | for $j = 1, \ldots, m$ do |
| 13: | if $z_j > f_j(y)$ then |
| 14: | $z_j = f_j(y)$; |
| 15: | end if |
| 16: | end for |
| 17: | for each $j \in B(i)$ do |
| 18: | if $g(y \mid \lambda_j, z) \le g(x_j \mid \lambda_j, z)$ then |
| 19: | $x_j = y$, $FV_j = F(y)$; |
| 20: | end if |
| 21: | end for |
| 22: | end for |
| 23: | Update $EP$; |
| 24: | end while |
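
Steps 2 and 3 of Algorithm 1 (building the neighborhood of each sub-problem from the distances between weight vectors) can be sketched as follows. The function name `neighborhoods` is a hypothetical helper; as in the usual MOEA/D formulation, each sub-problem is its own nearest neighbor because its distance to itself is zero.

```python
import math


def neighborhoods(weights, T):
    """For each weight vector, return the indexes of its T closest weight vectors."""
    B = []
    for wi in weights:
        # Euclidean distance from this weight vector to every weight vector.
        dist = [math.dist(wi, wj) for wj in weights]
        # Stable sort of indexes by distance; ties keep the lower index first.
        order = sorted(range(len(weights)), key=lambda k: dist[k])
        B.append(order[:T])
    return B


if __name__ == "__main__":
    weights = [(0.0, 1.0), (0.25, 0.75), (0.5, 0.5), (0.75, 0.25), (1.0, 0.0)]
    for i, b in enumerate(neighborhoods(weights, T=3)):
        print(i, b)
```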

3. The Proposed DMO-QPSO

In this section, we propose an improved multi-objective quantum-behaved particle swarm optimization algorithm based on decomposition, named as DMO-QPSO, which integrates the QPSO algorithm with the MOEA/D framework and adopts a mechanism to control the swarm diversity during the search process so as to avoid premature convergence and escape the local optimal area with a higher probability.

At the beginning of DMO-QPSO, we define a set of well-distributed weight vectors and then use a decomposition approach to convert the original MOP into a group of single-objective sub-problems. More precisely, let $\lambda_1, \ldots, \lambda_N$ be the weight vectors; the PBI approach is employed in this paper owing to its advantage over other decomposition approaches.

In DMO-QPSO, the swarm with $N$ particles is randomly initialized. Each particle $i$ has a position vector $X_i$ and a personal best position $P_i$. $P_i$ is initially set to be equal to $X_i$. Then the mean best position $C$ of all particles can be easily obtained according to Equation (6). The global best position is produced in a natural way according to the Pareto dominance relationship among different personal best positions. More specifically, we first define a vector set $GB$ of global best candidates, and the size of $GB$ is pre-set, denoted as $n_{GB}$. Then, the fast nondominated sorting approach [33] is applied to sort the personal best positions together with the solutions in $GB$. The lower the nondomination rank of a solution, the better it is. Therefore, we only select the ones in the lower nondomination ranks and then store them in $GB$. All of the solutions in $GB$ are regarded as candidates for the global best employed in the next iteration for updating particles’ positions.
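
The selection of global best candidates described above can be sketched as follows. This is only an illustration under stated assumptions: the ranks are computed by repeatedly peeling off nondominated fronts (which yields the same ranks as fast nondominated sorting, although less efficiently), ties within a rank are broken by index order (the text does not specify a rule), and the names `nondomination_ranks` and `select_candidates` are hypothetical.

```python
import random


def dominates(u, v):
    """True if u Pareto-dominates v (minimization)."""
    return all(a <= b for a, b in zip(u, v)) and any(a < b for a, b in zip(u, v))


def nondomination_ranks(objs):
    """Rank 0 is the nondominated front, rank 1 the next front, and so on."""
    remaining = set(range(len(objs)))
    ranks = [0] * len(objs)
    rank = 0
    while remaining:
        front = [i for i in remaining
                 if not any(dominates(objs[k], objs[i]) for k in remaining)]
        for i in front:
            ranks[i] = rank
        remaining -= set(front)
        rank += 1
    return ranks


def select_candidates(objs, size):
    """Indexes of the `size` solutions with the lowest nondomination ranks."""
    ranks = nondomination_ranks(objs)
    order = sorted(range(len(objs)), key=lambda i: ranks[i])  # stable sort
    return order[:size]


if __name__ == "__main__":
    objs = [(1.0, 4.0), (2.0, 2.0), (3.0, 3.0), (4.0, 1.0), (5.0, 5.0)]
    candidates = select_candidates(objs, size=3)
    gbest_index = random.choice(candidates)  # random pick among the candidates
    print(candidates, gbest_index)
```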

It should be noted that the neighborhood in the original MOEA/D framework is formed according to distances between the weight vectors of different sub-problems. That is to say, the neighborhood of a sub-problem includes all sub-problems with the closest weight vectors. Hence, on the basis of this definition, solutions to neighboring sub-problems would be close in the decision space. It may enable the algorithm to converge faster at the early stage but brings the risk of diversity loss and premature convergence at the later stage. For this reason, we do not adopt in DMO-QPSO the neighborhood relationship stated in the original MOEA/D framework.

Furthermore, we measure the swarm diversity during the search process and make the swarm alternate between two phases, i.e., attraction and explosion, according to its diversity. At each iteration, the diversity of the particle swarm is calculated as below.

$$diversity(S) = \frac{1}{|S| \cdot |L|} \sum_{i=1}^{|S|} \sqrt{\sum_{j=1}^{D} \left( x_{ij} - \bar{x}_j \right)^2}$$

where $D$ is the dimensionality of the problem, $|L|$ is the length of the longest diagonal in the search space, $S$ is the particle swarm, $|S|$ is the population size, $x_{ij}$ is the $j$th value of particle $i$ and $\bar{x}_j$ is the $j$th value of the average point. According to the literature [34,35], the particle converges when the contraction-expansion coefficient $\alpha$ is less than 1.778 and otherwise it diverges. Therefore, we set a threshold, denoted as $d_{low}$, on the swarm diversity. When the diversity drops below $d_{low}$ (i.e., in the explosion phase), the value of $\alpha$ is reset to a constant $\alpha_0$, larger than 1.778, to make particles diverge and thus increase the swarm diversity. Otherwise, $\alpha$ decreases linearly over the predefined interval $[\alpha_{\min}, \alpha_{\max}]$ (i.e., in the attraction phase).
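
The diversity measure and the two-phase control of the contraction-expansion coefficient can be sketched as below. The function names are hypothetical, the search space is assumed to be a box with per-dimension bounds, and the explosion value `alpha_explode=2.0` is purely an assumed placeholder (the text only requires it to exceed 1.778). The threshold 0.05 and the linear schedule from 1.0 to 0.5 follow the settings reported in Section 4.2.

```python
import math


def swarm_diversity(positions, lower, upper):
    """Mean distance of particles to the swarm centroid, scaled by the box diagonal."""
    n, dim = len(positions), len(positions[0])
    diag = math.sqrt(sum((u - l) ** 2 for l, u in zip(lower, upper)))
    mean = [sum(p[j] for p in positions) / n for j in range(dim)]
    total = sum(math.sqrt(sum((p[j] - mean[j]) ** 2 for j in range(dim)))
                for p in positions)
    return total / (n * diag)


def contraction_expansion(div, t, t_max, d_low=0.05,
                          alpha_explode=2.0, alpha_start=1.0, alpha_end=0.5):
    """Pick the coefficient for iteration t given the current diversity."""
    if div < d_low:
        # Explosion phase: a value above 1.778 makes particles diverge.
        return alpha_explode  # assumed value, not taken from the paper
    # Attraction phase: linear decrease from alpha_start to alpha_end.
    return alpha_start - (alpha_start - alpha_end) * t / t_max


if __name__ == "__main__":
    swarm = [[0.0, 0.0], [1.0, 1.0]]
    d = swarm_diversity(swarm, lower=[0.0, 0.0], upper=[1.0, 1.0])
    print(d, contraction_expansion(d, t=250, t_max=500))
```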

Like MOEA/D, we also use an external population $EP$ in DMO-QPSO to store the nondominated solutions found during the search process. In each iteration step, we check the Pareto dominance relationship between the newly generated solutions and the solutions in $EP$. Solutions in $EP$ dominated by a newly generated solution are removed from $EP$, and this newly generated solution is added to $EP$ if no solution in $EP$ dominates it. The main process of the DMO-QPSO algorithm is presented in Algorithm 2.
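
The update rule for the external population can be sketched as follows, working on objective vectors only. The name `update_archive` is hypothetical; rejecting exact duplicates is an added assumption, and the sketch does not enforce the size limit on the external population because the truncation rule is not described in the text.

```python
def dominates(u, v):
    """True if u Pareto-dominates v (minimization)."""
    return all(a <= b for a, b in zip(u, v)) and any(a < b for a, b in zip(u, v))


def update_archive(archive, candidate):
    """Return the archive after offering it one newly generated objective vector."""
    # Reject the candidate if an archived solution dominates it.
    # Assumption: exact duplicates are also rejected.
    if any(dominates(a, candidate) or tuple(a) == tuple(candidate) for a in archive):
        return archive
    # Otherwise drop everything the candidate dominates and add it.
    kept = [a for a in archive if not dominates(candidate, a)]
    kept.append(candidate)
    return kept


if __name__ == "__main__":
    ep = []
    for y in [(2.0, 2.0), (3.0, 3.0), (1.0, 3.0), (1.0, 1.0)]:
        ep = update_archive(ep, y)
        print(ep)
```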

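| Algorithm 2 DMO-QPSO | |
| --- | --- |
| Input: The number of sub-problems, i.e., the population size, $N$; The set of weight vectors, $\{\lambda_1, \ldots, \lambda_N\}$; The maximal number of iterations, $t_{\max}$; | |
| Output: $EP$; | |
| 1: | $EP = \emptyset$; |
| 2: | for $i = 1, \ldots, N$ do |
| 3: | Randomly initialize the position vector $X_i$; |
| 4: | Set the personal best position $P_i = X_i$; |
| 5: | Evaluate the fitness value $F(X_i)$; |
| 6: | end for |
| 7: | Initialize the reference point $z = (z_1, \ldots, z_m)^T$; |
| 8: | Initialize the set of global best candidates $GB$; |
| 9: | for $t = 1, \ldots, t_{\max}$ do |
| 10: | Compute the mean best position $C$ of all the particles according to Equation (6); |
| 11: | Measure $diversity(S)$; |
| 12: | if $diversity(S) < d_{low}$ then |
| 13: | $\alpha = \alpha_0$; |
| 14: | else |
| 15: | Set $\alpha$ linearly decreasing between the interval $[\alpha_{\min}, \alpha_{\max}]$; |
| 16: | end if |
| 17: | for $i = 1, \ldots, N$ do |
| 18: | Update the position vector $X_i$ using Equation (4); |
| 19: | Repair $X_i$; |
| 20: | Evaluate $F(X_i)$; |
| 21: | for $j = 1, \ldots, m$ do |
| 22: | if $z_j > f_j(X_i)$ then |
| 23: | $z_j = f_j(X_i)$; |
| 24: | end if |
| 25: | end for |
| 26: | if $g(X_i \mid \lambda_i, z) \le g(P_i \mid \lambda_i, z)$ then |
| 27: | $P_i = X_i$; |
| 28: | end if |
| 29: | end for |
| 30: | Update $EP$; |
| 31: | Update $GB$; |
| 32: | end for |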

4. Experimental Studies

This section presents the experiments conducted to investigate the performance of our proposed DMO-QPSO algorithm. Firstly, we introduce a set of MOPs used as benchmark functions. Next, the parameter settings for the different algorithms and two performance metrics are described in detail. Finally, the comparison experiments and the analysis of the results are presented. More precisely, we compared DMO-QPSO with two recently proposed multi-objective PSOs (i.e., MMOPSO and CMOPSO) and three other multi-objective optimization algorithms, namely, MOPSO, MOPSO/D and MOEA/D-DE [36]. The PBI approach is used in the four decomposition-based algorithms (i.e., DMO-QPSO, MOPSO/D, MOEA/D-DE and MMOPSO).

4.1. Test Functions

We selected 15 test functions whose PFs have different characteristics including concavity, convexity, multi-frontality and disconnections. Twelve of these test functions are bi-objective (i.e., F1, F2, F3, F4, F5, F7, F8, F9 from the F test set [36], UF4, UF5, UF6, UF7 from the UF test set [37]) and the rest of them are tri-objective (i.e., F6 from the F test set and UF9, UF10 from the UF test set). As shown in references [36,37], the F test set and the UF test set are two sets of test instances for facilitating the study of the ability of MOEAs to solve problems with complicated PS shapes. Besides, we used 30 decision variables for the UF test set, problems from F1 to F5, and F9. Problems from F6 to F8 were tested by using 10 decision variables.

4.2. Parameter Setting

The setting of the weight vectors is decided by an integer $H$ [36]. More precisely, each individual weight in $\lambda_1, \ldots, \lambda_N$ takes a value from $\{0/H, 1/H, \ldots, H/H\}$. Therefore, the population size is given by $N = C_{H+m-1}^{m-1}$, where $m$ is the number of objectives. $H$ was 299 for the bi-objective test functions and 33 for the tri-objective ones. Consequently, the population size $N$ was 300 for the bi-objective test functions and 595 for the tri-objective ones. The maximal number of iterations was 500 and each algorithm was run 30 times independently on each test function. The size of the external population $EP$ was set to 100. Besides, the penalty factor $\theta$ in PBI was 5.0.
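
The relation between the lattice resolution and the population size can be checked with a short sketch that enumerates all weight vectors whose components are multiples of one over the resolution and sum to one. The function name `simplex_lattice` is a hypothetical helper introduced for this illustration.

```python
from math import comb


def simplex_lattice(H, m):
    """All m-dimensional weight vectors with components in {0/H, ..., H/H} summing to 1."""
    def compositions(total, parts):
        # Enumerate the ways to split `total` into `parts` non-negative integers.
        if parts == 1:
            yield (total,)
            return
        for first in range(total + 1):
            for rest in compositions(total - first, parts - 1):
                yield (first,) + rest

    return [tuple(k / H for k in c) for c in compositions(H, m)]


if __name__ == "__main__":
    # Bi-objective setting: 299 divisions give 300 weight vectors.
    print(len(simplex_lattice(299, 2)), comb(299 + 2 - 1, 2 - 1))
    # Tri-objective setting: 33 divisions give 595 weight vectors.
    print(len(simplex_lattice(33, 3)), comb(33 + 3 - 1, 3 - 1))
```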

The polynomial mutation [29] was employed in MOPSO, MOPSO/D, MOEA/D-DE, MMOPSO and CMOPSO. Two parameters in this mutation operator were 20 and , respectively.

For DMO-QPSO, the size of the set of global best candidates was 10, and the contraction-expansion coefficient $\alpha$ in the attraction phase varied linearly from 1.0 to 0.5. The lower bound of the diversity, $d_{low}$, was set to 0.05. When the diversity drops below $d_{low}$, $\alpha$ is reset to the explosion-phase constant described in Section 3.

The details are listed in Table 1.

Table 1. Parameter settings for the different algorithms.

4.3. Performance Metrics

In our experiments, the following performance metrics were used.

- The inverted generational distance (IGD) [36]: It is proposed as a method of estimating the distance between the elements in a set of nondominated vectors and those in the Pareto optimal set, and can be stated as:

  $$IGD(P^*, A) = \frac{\sum_{v \in P^*} d(v, A)}{|P^*|}$$

  where $P^*$ is a set of points which are evenly distributed in the objective space along the PF, and $A$ is an approximation to the PF. $d(v, A)$ is the minimal Euclidean distance between $v$ and the points in $A$, and $|P^*|$ is the size of the set $P^*$. $A$ must be as close as possible to the PF of a certain test problem and cannot miss any part of the whole PF, so as to obtain a low value of $IGD(P^*, A)$. It can reflect both the diversity and the convergence of the obtained solutions to the real PF.

- Coverage (C-metric) [13]: It can be stated as:

  $$C(A, B) = \frac{\left| \{ u \in B \mid \exists\, v \in A : v \text{ dominates } u \} \right|}{|B|}$$

  where $A, B$ are two approximations to the real PF of an MOP, and $C(A, B)$ is defined as the proportion of the solutions in $B$ that are dominated by at least one solution in $A$.
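
Both metrics are straightforward to compute from sets of objective vectors. The sketch below is a minimal illustration with hypothetical function names `igd` and `coverage`; it assumes minimization and non-empty input sets.

```python
import math


def dominates(u, v):
    """True if u Pareto-dominates v (minimization)."""
    return all(a <= b for a, b in zip(u, v)) and any(a < b for a, b in zip(u, v))


def igd(reference, approx):
    """Average distance from each reference point to its nearest point in approx."""
    return sum(min(math.dist(v, a) for a in approx) for v in reference) / len(reference)


def coverage(A, B):
    """Fraction of the vectors in B dominated by at least one vector in A."""
    dominated = sum(1 for b in B if any(dominates(a, b) for a in A))
    return dominated / len(B)


if __name__ == "__main__":
    reference = [(0.0, 1.0), (1.0, 0.0)]
    approx = [(0.0, 2.0), (2.0, 0.0)]
    print(igd(reference, approx))
    print(coverage([(1.0, 1.0)], [(2.0, 2.0), (0.0, 3.0)]))
```

The coverage value is not symmetric, so both orderings of the two sets are usually examined when comparing two algorithms.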

4.4. Results and Discussion

Table 2 and Table 3 present the average, minimum and standard deviation (SD) of the IGD values of the 30 final populations produced by MOPSO, MOPSO/D, MOEA/D-DE, MMOPSO, CMOPSO and DMO-QPSO on the different test functions. Our proposed algorithm, DMO-QPSO, performed better than the other five algorithms on most of the test problems. It yielded the best mean IGD values on all the problems except F7, F8, UF4, UF6 and UF10. According to Table 2 and Table 3, both the mean and minimal IGD values on F7, F8, UF4 and UF10 obtained by DMO-QPSO are worse than those obtained by MOEA/D-DE. It should be noted that the performance of DMO-QPSO is still acceptable on UF6: its mean IGD value on UF6 is slightly worse than that of MMOPSO, but DMO-QPSO obtained the lowest minimal IGD value among all of these algorithms. Besides, MOPSO/D performed the worst on almost all of the test problems, the exceptions being F6 and UF4.

Table 2. The mean, minimum and standard deviation of IGD values on F problems, where the best value for each test case is highlighted with a bold background.

Table 3. The mean, minimum and standard deviation of IGD values on UF problems, where the best value for each test case is highlighted with a bold background.

In addition, the Wilcoxon rank sum test statistics in Table 2 and Table 3 also indicate that DMO-QPSO outperformed the other five algorithms. MMOPSO was the second best on the F problems and the third best on the UF problems, while CMOPSO was the second worst on both the F and UF problems. Table 4 lists the total ranks of these algorithms on the F and UF problems and gives their final ranks in the last column. As shown in this table, MOEA/D-DE is the second-best algorithm, followed by MMOPSO. In contrast, MOPSO/D is the worst algorithm, followed by CMOPSO.

Table 4. The final rank of different algorithms on F and UF problems.

The average C-metric values, shown in Tables 5 to 9, confirm the results above. It can be seen from these tables that the final solutions obtained by DMO-QPSO are better than those obtained by MOPSO, MOPSO/D, MOEA/D-DE, MMOPSO and CMOPSO for most of the test functions.

Table 5. Average C-metric between DMO-QPSO (A) and MOPSO (B).

Table 6. Average C-metric between DMO-QPSO (A) and MOPSO/D (B).

Table 7. Average C-metric between DMO-QPSO (A) and MOEA/D-DE (B).

Table 8. Average C-metric between DMO-QPSO (A) and MMOPSO (B).

Table 9. Average C-metric between DMO-QPSO (A) and CMOPSO (B).

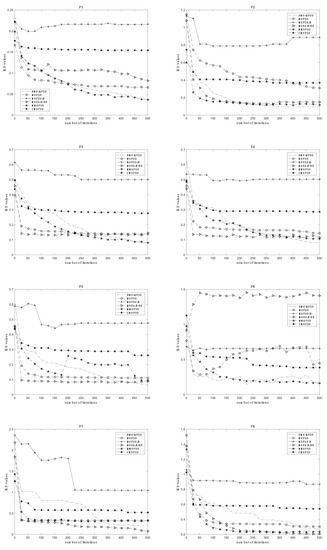

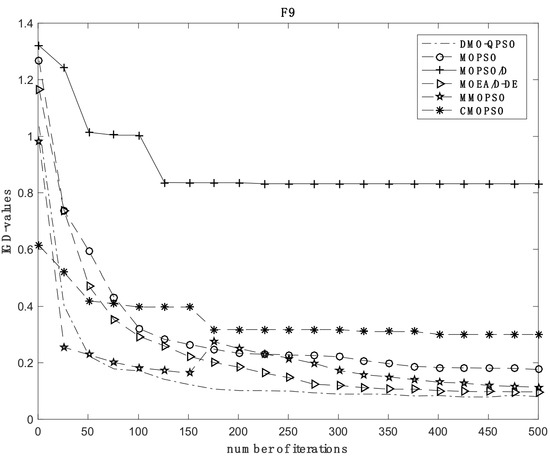

For each of MOPSO, MOPSO/D, MOEA/D-DE, MMOPSO, CMOPSO and DMO-QPSO, the trial run with the lowest IGD value on each of the 15 test functions was selected and plotted in Figure 1 and Figure 2. The panels in Figure 1 and Figure 2 show the evolution of the IGD values of the different algorithms versus the number of iterations on the F and UF problems. The results in these figures are consistent with those in Table 2 and Table 3. For the F problems, DMO-QPSO performed the best except on F6, F7 and F8. As shown in Figure 1, MOEA/D-DE was the best on problems F7 and F8, where its IGD values drop quickly at the early stage and then converge to values close to 0.08 and 0.20, respectively. MMOPSO obtained the second-lowest IGD value on F8, followed by DMO-QPSO, and its IGD value declines even faster than that of MOEA/D-DE during the first 200 iteration steps. On F6, the IGD value of DMO-QPSO fluctuates for about 400 iteration steps and then reaches a value (0.1634) only slightly larger than that of MMOPSO (0.1632); this fluctuation may be related to the variation of the swarm diversity. MOPSO/D had the worst performance on all of the F problems except F6.

Figure 1. Evolution of the IGD values of MOPSO, MOPSO/D, MOEA/D-DE, MMOPSO, CMOPSO and DMO-QPSO versus the number of iterations on F problems.

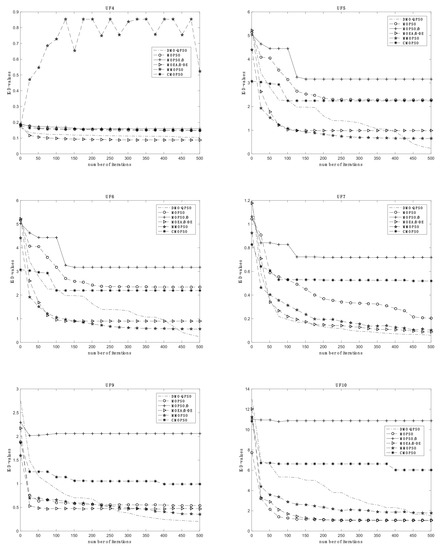

Figure 2. Evolution of the IGD values of MOPSO, MOPSO/D, MOEA/D-DE, MMOPSO, CMOPSO and DMO-QPSO versus the number of iterations on UF problems.

For the UF problems, DMO-QPSO still performed the best except on UF4 and UF10. MOEA/D-DE was the best on UF4, where its IGD value decreases rapidly to a value just below 0.1 while that of DMO-QPSO remains larger than 0.1. By contrast, MMOPSO had the worst performance on UF4, where its IGD value fluctuates significantly between 0.5 and 0.9. On UF10, MOEA/D-DE and MOPSO had similar performance: their IGD values decrease rapidly during the first 80 iteration steps and then gradually converge to values around 1.0. DMO-QPSO was the third best, followed by MMOPSO. MOPSO/D had the worst performance on all of the UF problems except UF4. Additionally, from all the panels in Figure 1 and Figure 2, we can observe that, within the same number of iteration steps, the IGD value of DMO-QPSO declines more slowly than that of MOEA/D-DE at the early stage on several test problems but reaches a much smaller value at the later stage. With more iterations, DMO-QPSO may obtain much better results than the other compared algorithms.

In summary, DMO-QPSO has better performance on most of the test functions compared to other tested algorithms, and it is promising in solving MOPs with complicated PS shapes.

5. Further Discussions

In this section, we further study the impact of different decomposition approaches (i.e., TCH and PBI) and the polynomial mutation on DMO-QPSO. Some comparison experiments were also conducted.

5.1. The Impact of Different Decomposition Approaches

As described in Section 2.4, TCH and PBI are two commonly used approaches for decomposition-based multi-objective optimization algorithms. In terms of solution uniformity, the TCH approach may perform worse than the PBI approach, especially for problems having more than two objectives. Therefore, we tested MOPSO/D, MOEA/D-DE and DMO-QPSO using either TCH or PBI as the decomposition method. The parameter settings for each algorithm are the same as those presented in Section 4.2. Table 10 and Table 11 present the average, minimum and standard deviation (SD) of the IGD values of the 30 final populations produced by each algorithm on the different test functions. In these tables, MOPSO/D-TCH, MOEA/D-DE-TCH and DMO-QPSO-TCH stand for the variants of MOPSO/D, MOEA/D-DE and DMO-QPSO using the TCH approach, respectively.

Table 10. The mean, minimum and standard deviation of IGD values for different algorithms with TCH or PBI on F problems.

Table 11. The mean, minimum and standard deviation of IGD values for different algorithms with TCH or PBI on UF problems.

According to Table 10 and Table 11, the algorithms using PBI (i.e., MOPSO/D and DMO-QPSO) performed better than their counterparts using TCH, particularly on the tri-objective problems. For DMO-QPSO, using PBI helped the algorithm, to some extent, to acquire better Pareto optimal solutions for approximating the entire PF. However, applying PBI to MOEA/D-DE did not show a significant improvement over MOEA/D-DE using TCH. This may be related to the unique characteristics of the DE operators employed in MOEA/D-DE.

5.2. The Impact of Polynomial Mutation

Polynomial mutation was adopted in MOPSO/D, MOEA/D-DE, MMOPSO and CMOPSO for producing new solutions as well as maintaining the population diversity, as stated in the literature [12,18,20,36]. In order to investigate the impact of polynomial mutation on DMO-QPSO, we tested four DMO-QPSO variants, i.e., DMO-QPSO, DMO-QPSO-TCH, DMO-QPSO-pm and DMO-QPSO-TCH-pm, on different problems. DMO-QPSO and DMO-QPSO-TCH used PBI and TCH as the decomposition method, respectively. DMO-QPSO-pm and DMO-QPSO-TCH-pm are the two variants that add the polynomial mutation operator to DMO-QPSO and DMO-QPSO-TCH, respectively. The parameter settings for each algorithm are the same as those presented in Section 4.2. Table 12 and Table 13 present the average, minimum and standard deviation (SD) of the IGD values of the 30 final populations produced by the different variants of DMO-QPSO on the different test functions.

Table 12. The mean, minimum and standard deviation of IGD values for four DMO-QPSO variants on F problems.

Table 13. The mean, minimum and standard deviation of IGD values for four DMO-QPSO variants on UF problems.

As we can see from Table 12 and Table 13, the DMO-QPSO variants using PBI, i.e., DMO-QPSO and DMO-QPSO-pm, outperformed the DMO-QPSO variants using TCH, i.e., DMO-QPSO-TCH and DMO-QPSO-TCH-pm, on most of the test problems. These results also confirm the conclusion presented in Section 5.1. Besides, DMO-QPSO-pm shows an advantage over DMO-QPSO on several test problems. It should be pointed out that adopting the PBI approach and the polynomial mutation at the same time can effectively improve the algorithmic performance of DMO-QPSO.

6. Conclusions

This paper has proposed a multi-objective quantum-behaved particle swarm optimization algorithm based on decomposition, named DMO-QPSO, which integrates the QPSO algorithm with the original MOEA/D framework and adopts a strategy to control the swarm diversity. Without using the neighborhood relationship defined in the MOEA/D framework, we employed a two-phased diversity controlling mechanism to avoid premature convergence and make the algorithm escape sub-optimal solutions with a higher probability. In addition, we used a set of nondominated solutions to produce the global best used for updating the particles’ positions. The comparison experiments were carried out among six algorithms, namely, MOPSO, MOPSO/D, MOEA/D-DE, MMOPSO, CMOPSO and DMO-QPSO, on 15 test functions with complicated PS shapes. The experimental results show that the proposed DMO-QPSO algorithm has an advantage over the other five algorithms on most of the test problems. It converges more slowly than MOEA/D-DE on some test problems at the early stage, but it strikes a better balance between exploration and exploitation and finally obtains better solutions to an MOP. In addition, we further investigated the impact of different decomposition approaches, i.e., the TCH and PBI approaches, as well as the polynomial mutation, on DMO-QPSO. It was shown that using PBI and the polynomial mutation can enhance the algorithmic performance of DMO-QPSO, particularly on the tri-objective problems.

In the future, we will focus on studying new methods for generating a set of weight vectors that are as uniformly distributed as possible, modifying the mechanism of diversity control in the DMO-QPSO algorithm for dealing with more complicated test problems, and improving the quality of the solutions obtained. In addition, we will extend the proposed algorithm to problems having more than three objectives.
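As a point of reference for the weight-vector question raised above, the sketch below generates weight vectors with the conventional simplex-lattice design that is widely used with decomposition-based algorithms. It is only a baseline illustration, not the new generation method envisaged as future work, and the function name `simplex_lattice_weights` and the parameter `divisions` are hypothetical names introduced here.

```python
from itertools import combinations


def simplex_lattice_weights(n_objectives, divisions):
    """Return all weight vectors whose components are multiples of
    1/divisions and sum to 1 (conventional simplex-lattice design)."""
    weights = []
    # Stars and bars: place (n_objectives - 1) bars among the available slots;
    # the gaps between consecutive bars give the integer numerators.
    slots = divisions + n_objectives - 1
    for bars in combinations(range(slots), n_objectives - 1):
        counts = []
        previous = -1
        for bar in bars:
            counts.append(bar - previous - 1)
            previous = bar
        counts.append(slots - previous - 1)
        weights.append([c / divisions for c in counts])
    return weights


if __name__ == "__main__":
    bi_objective = simplex_lattice_weights(2, 4)
    tri_objective = simplex_lattice_weights(3, 12)
    print(len(bi_objective), "vectors for 2 objectives")
    print(len(tri_objective), "vectors for 3 objectives")
```

With this design the number of vectors is fixed by the binomial coefficient C(divisions + n_objectives - 1, n_objectives - 1), so the swarm size cannot be chosen freely and grows quickly with the number of objectives, which is one reason alternative generation methods are of interest.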

Author Contributions

Q.Y., J.S., F.P., V.P. and B.A. contributed to the study conception and design; Q.Y. performed the experiment, analyzed the experimental results and wrote the first draft of the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the National Natural Science Foundation of China (Project Numbers: 61673194, 61672263, 61672265), and in part by the national first-class discipline program of Light Industry Technology and Engineering (Project Number: LITE2018-25).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All of the test functions can be found in the paper with doi: 10.1109/TEVC.2008.925798 and at https://www.researchgate.net/publication/265432807_Multiobjective_optimization_Test_Instances_for_the_CEC_2009_Special_Session_and_Competition (accessed on 1 May 2021).

Acknowledgments

The authors would like to express their sincere thanks to the anonymous referees for their great efforts to improve this paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Eberhart, R.C.; Kennedy, J. A new optimizer using particle swarm theory. In Proceedings of the Sixth International Symposium on Micro Machine and Human Science, Nagoya, Japan, 4–6 October 1995; pp. 39–43.

- Mallick, S.; Kar, R.; Ghoshal, S.P.; Mandal, D. Optimal sizing and design of CMOS analogue amplifier circuits using craziness-based particle swarm optimization. Int. J. Numer. Model.-Electron. Netw. Devices Fields 2016, 29, 943–966.

- Singh, M.R.; Singh, M.; Mahapatra, S.; Jagadev, N. Particle swarm optimization algorithm embedded with maximum deviation theory for solving multi-objective flexible job shop scheduling problem. Int. J. Adv. Manuf. Technol. 2016, 85, 2353–2366.

- Sousa, T.; Silva, A.; Neves, A. Particle swarm based data mining algorithms for classification tasks. Parallel Comput. 2004, 30, 767–783.

- Zhang, Y.; Wu, L.; Wang, S. UCAV path planning by fitness-scaling adaptive chaotic particle swarm optimization. Math. Probl. Eng. 2013, 2013, 705238.

- Zhang, Y.; Jun, Y.; Wei, G.; Wu, L. Find multi-objective paths in stochastic networks via chaotic immune PSO. Expert Syst. Appl. 2010, 37, 1911–1919.

- Ng, M.C.; Fong, S.; Siu, S.W. PSOVina: The hybrid particle swarm optimization algorithm for protein-ligand docking. J. Bioinform. Comput. Biol. 2015, 13, 1541007.

- Moore, J.; Chapman, R. Application of Particle Swarm to Multiobjective Optimization; Unpublished Work; Department of Computer Science and Software Engineering, Auburn University: Auburn, Alabama, 1999.

- Coello, C.A.C.; Lechuga, M.S. MOPSO: A proposal for multiple objective particle swarm optimization. In Proceedings of the 2002 Congress on Evolutionary Computation, CEC’02 (Cat. No.02TH8600), Honolulu, HI, USA, 12–17 May 2002; Volume 2, pp. 1051–1056.

- Coello, C.A.C.; Pulido, G.T.; Lechuga, M.S. Handling multiple objectives with particle swarm optimization. IEEE Trans. Evol. Comput. 2004, 8, 256–279.

- Raquel, C.R.; Prospero, C.N. An effective use of crowding distance in multiobjective particle swarm optimization. In Proceedings of the 7th Annual Conference on Genetic and Evolutionary Computation (GECCO ’05), Washington, DC, USA, 25–29 June 2005.

- Peng, W.; Zhang, Q. A decomposition-based multi-objective particle swarm optimization algorithm for continuous optimization problems. In Proceedings of the 2008 IEEE International Conference on Granular Computing (GrC 2008), Hangzhou, China, 26–28 August 2008.

- Zhang, Q.; Li, H. MOEA/D: A multiobjective evolutionary algorithm based on decomposition. IEEE Trans. Evol. Comput. 2007, 11, 712–731.

- Moubayed, N.; Petrovski, A.; McCall, J. A novel smart multi-objective particle swarm optimisation using decomposition. In International Conference on Parallel Problem Solving from Nature; Springer: Berlin/Heidelberg, Germany, 2010; pp. 1–10.

- Martínez, Z.; Coello, C.A.C. A multi-objective particle swarm optimizer based on decomposition. In Proceedings of the 13th Annual Conference on Genetic and Evolutionary Computation (GECCO ’11), Dublin, Ireland, 12–16 July 2011; Association for Computing Machinery: New York, NY, USA, 2011; pp. 69–76.

- Moubayed, N.; Petrovski, A.; McCall, J. D2MOPSO: MOPSO based on decomposition and dominance with archiving using crowding distance in objective and solution spaces. Evol. Comput. 2014, 22, 47–77.

- Dai, C.; Wang, Y.; Ye, M. A new multi-objective particle swarm optimization algorithm based on decomposition. Inf. Sci. 2015, 325, 541–557.

- Lin, Q.; Li, J.; Du, Z.; Chen, J.; Ming, Z. A novel multi-objective particle swarm optimization with multiple search strategies. Eur. J. Oper. Res. 2015, 247, 732–744.

- Zhu, Q.; Lin, Q.; Chen, W.; Wong, K.; Coello, C.A.C.; Li, J.; Chen, J.; Zhang, J. An external archive-guided multiobjective particle swarm optimization algorithm. IEEE Trans. Cybern. 2017, 47, 2794–2808.

- Zhang, X.; Zheng, X.; Cheng, R.; Qiu, J.; Jin, Y. A competitive mechanism based multi-objective particle swarm optimizer with fast convergence. Inf. Sci. 2018, 427, 63–76.

- Yang, W.; Chen, L.; Wang, Y.; Zhang, M. Multi/many-objective particle swarm optimization algorithm based on competition mechanism. Comput. Intell. Neurosci. 2020, 2020, 5132803.

- Sun, J.; Feng, B.; Xu, W. Particle swarm optimization with particles having quantum behavior. In Proceedings of the 2004 Congress on Evolutionary Computation, Portland, OR, USA, 19–23 June 2004; pp. 325–331.

- Sun, J.; Fang, W.; Wu, X.; Palade, V. Quantum-behaved particle swarm optimization: Analysis of individual particle behavior and parameter selection. Evol. Comput. 2012, 20, 349–393.

- Sun, J.; Wu, X.; Palade, V.; Fang, W.; Lai, C.H.; Xu, W. Convergence analysis and improvements of quantum-behaved particle swarm optimization. Inf. Sci. 2012, 193, 81–103.

- Qiu, C.; Wang, C.; Zuo, X. A novel multi-objective particle swarm optimization with k-means based global best selection strategy. Int. J. Comput. Intell. Syst. 2013, 6, 822–835.

- Cheng, S.; Chen, M.; Peter, J. Improved multi-objective particle swarm optimization with preference strategy for optimal DG integration into the distribution system. Neurocomputing 2015, 148, 23–29.

- Trivedi, A.; Srinivasan, D.; Sanyal, K.; Ghosh, A. A survey of multiobjective evolutionary algorithms based on decomposition. IEEE Trans. Evol. Comput. 2017, 21, 440–462.

- Deb, K. Multi-Objective Optimization Using Evolutionary Algorithms; Wiley: Hoboken, NJ, USA, 2001.

- Coello, C.A.C.; Veldhuizen, D.A.V.; Lamont, G.B. Evolutionary Algorithms for Solving Multi-Objective Problems, 2nd ed.; Springer: Boston, MA, USA, 2007.

- Tan, K.C.; Khor, E.F.; Lee, T.H. Multiobjective Evolutionary Algorithms and Applications (Advanced Information and Knowledge Processing); Springer: Berlin/Heidelberg, Germany, 2005.

- Miettinen, K. Nonlinear Multiobjective Optimization; Springer: Boston, MA, USA, 1999.

- Stadler, W. A survey of multicriteria optimization or the vector maximum problem, part I: 1776–1960. J. Optim. Theory Appl. 1979, 29, 1–52.

- Deb, K.; Pratap, A.; Agarwal, S.; Meyarivan, T. A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 2002, 6, 182–197.

- Sun, J.; Xu, W.; Fang, W. Quantum-behaved particle swarm optimization algorithm with controlled diversity. In International Conference on Computational Science; Springer: Berlin/Heidelberg, Germany, 2006; Volume 2006, pp. 847–854.

- Sun, J.; Xu, W.; Feng, B. Adaptive parameter control for quantum-behaved particle swarm optimization on individual level. In Proceedings of the 2005 IEEE International Conference on Systems, Man and Cybernetics, Waikoloa, HI, USA, 12 October 2005.

- Li, H.; Zhang, Q. Multiobjective optimization problems with complicated Pareto sets, MOEA/D and NSGA-II. IEEE Trans. Evol. Comput. 2009, 13, 284–302.

- Zhang, Q.; Zhou, A.; Zhao, S.; Suganthan, P.N.; Tiwari, S. Multiobjective Optimization Test Instances for the CEC 2009 Special Session and Competition; Mechanical Engineering, University of Essex: Colchester, UK; Nanyang Technological University: Singapore, 2008.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).