Abstract

Supervised classification of 3D point clouds using machine learning algorithms and handcrafted local features as covariates frequently depends on the size of the neighborhood (scale) around each point used to determine those features. It is therefore crucial to estimate the scale or scales providing the best classification results. In this work, we propose three methods to estimate these scales, all of them based on calculating the maximum values of the distance correlation (DC) functions between the features and the label assigned to each point. The performance of the methods was tested using simulated data, and the method presenting the best results was applied to a benchmark data set for point cloud classification. This method consists of detecting the local maxima of DC functions previously smoothed to avoid choosing scales that are very close to each other. Five different classifiers were used: linear discriminant analysis, support vector machines, random forest, multinomial logistic regression and multilayer perceptron neural network. The results obtained were compared with those from other strategies available in the literature, and the comparison favored our approach.

1. Introduction

As a result of the advances in photogrammetry, computer vision and remote sensing, accessing massive unstructured 3D point cloud data is becoming easier and easier. Simultaneously, there is a growing demand for methods for the automatic interpretation of these data. Machine learning algorithms are among the most used methods for 3D point cloud segmentation and classification given their good performance and versatility [1,2,3,4]. Many of these algorithms are based on defining a set of local geometric features obtained through calculations on the vicinity of each point (or the center of a voxel when voxelization is carried out to reduce computing time) as explanatory variables. Local geometry depends on the size of the neighborhood (scale) around each point. Thus, a point can be seen as belonging to an object of different geometry, such as a line, a plane or a volume, depending on the scale [5]. As a result, the label assigned to each point can also change with this variable. The neighborhood around a point is normally defined by a sphere of fixed radius centered on each point [6] or by a volume limited by a fixed number of the closest neighbors to that point [7]. A third method, which is normally applied to airborne LiDAR (Light Detection and Ranging) data, consists of selecting the points in a cylinder of a fixed radius [8]. The local geometry of each point on the point cloud is mainly obtained from the covariance matrix, although other alternatives are possible, such as in [9], where Delaunay triangulation and tensor voting were used to extract object contours from LiDAR point clouds.

Given the importance of the scale in the result of the classification, there has been great interest in estimating the scale (size of the neighborhood) or combination of scales (multiscale approach) [10,11,12,13] that provides the best results (the smallest error in the classification). Unfortunately, this is not a trivial question, and several methods have been proposed to determine optimum scales. A heuristic but widely used method is to try a few scales, considering aspects such as the density of the point cloud, the size of the objects to be classified and the experience of the people solving the problem, and then select the scale or scales that provide the best solution. Differences in density across the point cloud lead to errors in the classification [14]. In [15], the sparseness in the point cloud was addressed through upsampling by a moving least squares method. Another possibility is to select several scales at regular intervals or at intervals determined by a specific function, such as in [16], where a quadratic function of the radius of a sphere centered on each point was used. Obviously, these procedures cannot guarantee an optimal solution, so some more objective and automatic alternatives have been proposed. One of them is to estimate the optimum scales taking into account the structure of the local covariance matrix obtained from the coordinates of the points, and a measure of the uncertainty, such as the Shannon entropy [17,18]. Another alternative is to relate the size of the neighborhood to the point density and the curvature at each point [19]. The size of the neighborhood can be fixed across the point cloud, but the results can improve when it is changed from point to point [20].

There are different approaches for feature selection in classification problems. Some of them, for example, the meta-algorithm optimal feature weighting [21], random forests [22] or regularization methods such as Lasso [23], select the features at the time of performing the classification. By contrast, other methods (known as filter methods), such as the ANOVA, Kruskal and AUC tests [24] or independent component analysis [25], perform the selection prior to establishing the classification model. In this work, we propose a method inside this second category. As in [26], we assume that a good optimum scale selection should be one for which the distance correlation [27] between the features and the labels of the classes has high values, but in this case we look for a combination of scales that provides the best classification, instead of selecting just one scale. In summary, we propose a simple, objective and model-independent approach to address an unsolved problem: the determination of the optimal scales in 3D point cloud multiscale supervised classification.

2. Methodology

Given a sample , where represents the predictors, , and , we are interested in determining a model that assigns values to Y given the corresponding features . Each feature depends on the values of a variable k observed in discretization points. Accordingly, is a vector in . For each , the response variable Y follows a multinomial distribution with L possible levels and associated probabilities , .

In the context of our particular problem, represents each of the features obtained from the point cloud to be classified, whose values are calculated, for each training point, at a finite number of scales , each of them representing the size of the local vicinity around each point. In contrast to the standard procedure, by which only a few scales are used, here the features are calculated at a much larger number of scales in order to be able to select those containing the relevant information to solve the classification problem.

The initial hypothesis is that for each feature only a few values of k (scales) provide useful information to perform the classification, and that these scales correspond to high values of the distance correlation between the features and the labels representing each category. Generally speaking, the distance correlation [27,28] between two random vectors X and Y is defined as

where represents the distance covariance (see [29] for an application of distance covariance to variable selection in functional data classification), a measure of the distance between , the joint characteristic function of random vectors X and Y, and the product of the characteristic functions of X and Y. For their part, and are constants depending on the dimensions p and q, respectively.
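For reference, the standard definition given by Székely et al. [27] can be written as follows. The symbols used here ($\mathcal{R}$ for distance correlation, $\mathcal{V}$ for distance covariance, $f$ for characteristic functions, and $X \in \mathbb{R}^p$, $Y \in \mathbb{R}^q$) are notation assumed for this sketch and may differ from the notation of the original equation.

```latex
\mathcal{R}^2(X, Y) =
  \frac{\mathcal{V}^2(X, Y)}{\sqrt{\mathcal{V}^2(X, X)\,\mathcal{V}^2(Y, Y)}},
\qquad
\mathcal{V}^2(X, Y) =
  \frac{1}{c_p c_q} \int_{\mathbb{R}^{p+q}}
  \frac{\lvert f_{X,Y}(t, s) - f_X(t)\, f_Y(s) \rvert^2}
       {\lvert t \rvert_p^{1+p}\, \lvert s \rvert_q^{1+q}} \, dt \, ds,
\qquad
c_d = \frac{\pi^{(1+d)/2}}{\Gamma\!\left((1+d)/2\right)}
```

The ratio is taken as zero when $\mathcal{V}^2(X, X)\,\mathcal{V}^2(Y, Y) = 0$.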

Distance correlation has some advantages over other correlation coefficients, such as the Pearson correlation coefficient: (1) it measures non-linear dependence, (2) X and Y do not need to be one-dimensional variables, and (3) it is zero if and only if X and Y are independent, that is, independence is a necessary and sufficient condition for the nullity of distance correlation.
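The sample version of the distance correlation can be computed from double-centered pairwise distance matrices. The following is a minimal pure-Python sketch of that standard estimator, not the fda.usc implementation used in this work; the function names and the one-hot encoding helper are hypothetical and chosen only for illustration.

```python
import math


def _centered_distances(points):
    """Double-centered Euclidean distance matrix of a list of vectors."""
    n = len(points)
    dist = [[math.dist(a, b) for b in points] for a in points]
    row_mean = [sum(row) / n for row in dist]
    grand_mean = sum(row_mean) / n
    # The distance matrix is symmetric, so column means equal row means.
    return [
        [dist[i][j] - row_mean[i] - row_mean[j] + grand_mean for j in range(n)]
        for i in range(n)
    ]


def distance_correlation(x, y):
    """Sample distance correlation between two samples of vectors.

    x and y are equally long lists of tuples; their dimensions may differ.
    """
    if len(x) != len(y):
        raise ValueError("x and y must have the same number of observations")
    n = len(x)
    a = _centered_distances(x)
    b = _centered_distances(y)

    def mean_product(u, v):
        return sum(u[i][j] * v[i][j] for i in range(n) for j in range(n)) / n**2

    dcov2_xy = mean_product(a, b)
    dvar2_x = mean_product(a, a)
    dvar2_y = mean_product(b, b)
    if dvar2_x * dvar2_y <= 0.0:
        return 0.0  # a constant sample carries no dependence to measure
    return math.sqrt(max(dcov2_xy, 0.0) / math.sqrt(dvar2_x * dvar2_y))


def one_hot(labels):
    """Encode class labels as dummy vectors (hypothetical helper)."""
    classes = sorted(set(labels))
    return [tuple(1.0 if label == c else 0.0 for c in classes) for label in labels]
```

With this sketch, an exactly linear relationship gives a value of 1, while a symmetric quadratic relationship such as y = x² on x = -2, ..., 2, whose Pearson correlation is zero, still gives a clearly positive value. Because both arguments are lists of vectors, a feature evaluated at several scales can be compared with one-hot encoded labels in a single call.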

Given that is defined for X and Y random vector variables in arbitrary finite dimension spaces, in this study, the distance correlation was calculated either for a single scale or for a set of scales (discretization points) . Our aim is to select a set of critical scales (those which contain essential information for the classification) , with , which maximize the distance correlation. Solving this problem can be time consuming given that it requires calculating the distance correlation for a very high number of combinations. In this work, three different approaches have been proposed to determine the arguments providing the maximum distance correlation values.

The first approach (Algorithm 1) is an iterative method that looks for the best combination among all the combinations of the scales K, taking a number of scales at a time. It is a procedure that in each iteration fixes the last values of vector and randomly selects the remaining value until a maximum of is reached. This is a brute-force procedure that does not look for local maxima directly.

The second approach (Algorithm 2.a) calculates the DC at each value of k for each feature and looks for the local maximums of the distance correlation, sorting them in decreasing order, and finally selecting the first values of k.

Finally, the third approach (Algorithm 2.b) only differs from the second approach in that the distance correlation is smoothed before calculating the local maxima. Then, the values of DC calculated at discrete scales are considered as coming from a smooth function so that , being a zero mean independent error term and . In this way, close local maxima providing redundant information are avoided. In particular, this work follows the same idea as [29] but using a B-spline basis instead of kernel-type smoothing.

Each algorithm is run several times, and the values of k in obtained in each step are stored. Those values of that are the most frequent are considered as the critical points (scales). Once these critical scales have been selected, a classification model is fitted to the features at these scales, avoiding the inherent drawbacks of high-dimensional feature spaces. The algorithms for each of the methods are written below, although the second and third methods are written together, since they only differ in a sentence corresponding to the adjustment of a smooth function to the vector of distance correlation. In each algorithm, n represents the size of the data, K the number of scales used to calculate the features and the number of critical points selected.
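The final voting step described above can be sketched as follows. This is a minimal illustration, assuming that each run returns a list of selected scales; the function name and the tie-breaking rule (smaller scale first) are assumptions made for this example.

```python
from collections import Counter


def most_frequent_scales(runs, d):
    """Return the d scales selected most often across repeated runs.

    runs is a list of selections, each a list of scales chosen in one run.
    Ties are broken in favor of the smaller scale to keep the result stable.
    """
    counts = Counter(scale for selection in runs for scale in selection)
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return sorted(scale for scale, _ in ranked[:d])
```

For instance, if four runs select (50, 120), (50, 125), (55, 120) and (50, 120), the two critical scales retained are 50 and 120.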

Algorithm 1. Scale selection by brute force of the DC.

- Step 0: Randomly select the initial estimates taking a sample of size without replacement.
- Step 1: Cycle calculating the update
- Step 2: Repeat Step 1 replacing by until there is no change between and .
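One possible reading of Algorithm 1 is a cyclic search in which one selected scale is updated at a time while the others are kept fixed. The sketch below follows that reading under stated assumptions: `score` is a hypothetical callable (for example, the distance correlation between the features at the chosen scales and the labels), and each update scans all remaining candidate scales rather than drawing them at random, which is a simplification of the procedure described in the text.

```python
import random


def cyclic_scale_search(scales, d, score, rng=None, max_sweeps=100):
    """Search for d scales that maximize score(selection).

    score is any callable mapping a sorted tuple of scales to a number
    (hypothetical interface, not taken from the original work).
    """
    rng = rng or random.Random()
    scales = list(scales)
    # Step 0: random initial selection, sampled without replacement.
    current = rng.sample(scales, d)
    for _ in range(max_sweeps):
        previous = list(current)
        # Step 1: update one position at a time, keeping the others fixed.
        for j in range(d):
            others = current[:j] + current[j + 1:]
            candidates = [s for s in scales if s not in others]
            current[j] = max(
                candidates,
                key=lambda s: score(tuple(sorted(others + [s]))),
            )
        # Step 2: stop once a full sweep leaves the selection unchanged.
        if current == previous:
            break
    return sorted(current)
```

Like any coordinate-wise search, this can stop at a selection that is only locally optimal, which is one reason to repeat it from several random starts and keep the most frequent scales.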

Algorithm 2. Scale selection by local maxima of the DC (Algorithm 2.a without Step 2, Algorithm 2.b with Step 2).

- Step 1: Calculate for .
- Step 2: Smooth as a function of k (only for the third approach, Algorithm 2.b).
- Step 3: Compute corresponding to local maxima of , sorting them in decreasing order: .
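The steps of Algorithm 2 can be sketched as below for a DC curve that has already been evaluated on a grid of scales. This is an illustration under assumptions: a centered moving average stands in for the B-spline smoothing used in this work, and all function names are hypothetical.

```python
def moving_average(values, window):
    """Centered moving average; the window shrinks near the ends."""
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        chunk = values[max(0, i - half): i + half + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


def local_maxima(values):
    """Indices of interior points strictly higher than both neighbors."""
    return [
        i for i in range(1, len(values) - 1)
        if values[i - 1] < values[i] > values[i + 1]
    ]


def select_scales(scales, dc_values, d, window=None):
    """Pick the d scales at the highest local maxima of a DC curve.

    window=None mimics Algorithm 2.a (no smoothing); an odd integer
    window smooths the curve first, in the spirit of Algorithm 2.b.
    """
    if window is None:
        curve = list(dc_values)
    else:
        curve = moving_average(list(dc_values), window)
    peaks = sorted(local_maxima(curve), key=lambda i: curve[i], reverse=True)
    return [scales[i] for i in peaks[:d]]
```

On a curve with two nearby peaks, the unsmoothed version returns both of them as separate critical scales, whereas the smoothed version merges them into a single peak, which is the redundancy-avoiding effect attributed to Algorithm 2.b.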

3. Simulation Study

This section reports the procedure followed in order to evaluate the practical performance of the proposed methodology using simulated data. We consider each simulated feature drawn from

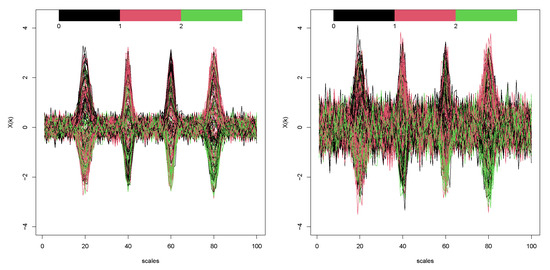

with being the critical points or points of interest to be detected, a measure of how much sharpness is around , and weights that have been simulated independently from a uniform distribution in the interval . The errors were generated from a random Gaussian process with covariance matrix , being a scalar. A set of predictors (features) for and is represented in Figure 1.

Figure 1.

Functional predictors , colored by the corresponding outcome variable ( in black, in red and in green) for two different values of the standard deviation in the error term ( in the left, and in the right).

Given , the corresponding outcome variable was generated from a multinomial distribution with three possible results , and , with associated probabilities , , given by

and . We specifically define and .

A number of independent samples were generated under the above scenario for different variance parameters . Moreover, we randomly split the sample into a training set (), used in the estimation process, and a test set (), for prediction. The curves were discretized in equi-spaced points in .

The performance of the algorithms was evaluated for each of the critical points (as mentioned above, the theoretical critical points in the simulation scenario were ) by means of the mean squared error over a number of repetitions R (we specifically chose ):

where is the estimated optimal subset of scale points in the simulation r.

values for the different proposed algorithms and different values of are summarized in Table 1. For the three algorithms, the mean squared error and the dispersion of the estimated critical points around the mean increase with , as expected. Algorithm 2.a produced the worst results, both in detecting the critical points and in the uncertainty of the points detected. In contrast, Algorithm 2.b presented the best performance, so smoothing the distance correlation functions before calculating the critical points had a positive effect. Although Algorithm 1 produces reasonable results in terms of accuracy in estimating the critical points, its standard deviation is strikingly large compared with that of Algorithm 2.b.

Table 1.

Mean values of (standard deviation in brackets) for different values of .

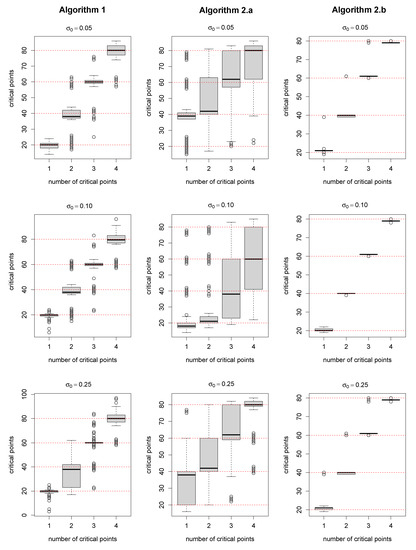

Figure 2 shows boxplots representing the results of the simulation to detect each of the critical points for three different values of and for each algorithm. Clearly Algorithm 2.b is the most accurate, while the worst results corresponded to Algorithm 2.a. Increasing produces uncertainty in the determination of the critical points for Algorithms 1 and 2.a, but hardly affects Algorithm 2.b.

Figure 2.

Boxplots of the estimated critical scales together with the theoretical scales (red lines) for (top), (middle) and (bottom) under Algorithm 1 (left), Algorithm 2.a (middle) and Algorithm 2.b (right).

In addition, to measure the effect of the errors in the detection of the critical points in the estimation of the probabilities, the mean squared error of the probabilities for each class was also calculated:

The results are shown in Table 2, considering a different number of critical points and values of . Again, the best results, that is, small values of MSE (and its standard deviation) and total accuracy close to that of the theoretical model, corresponded to Algorithm 2.b, whereas Algorithm 2.a provided the worst results.

Table 2.

Mean values of and accuracy (standard deviation in brackets) for a different number of critical points. makes reference to the accuracy obtained by using the true model, while represents the accuracy values obtained from the simulation.

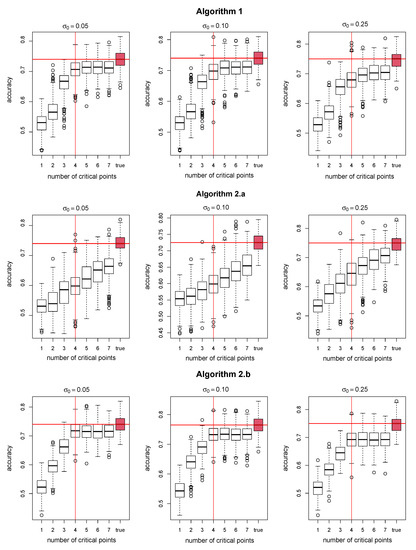

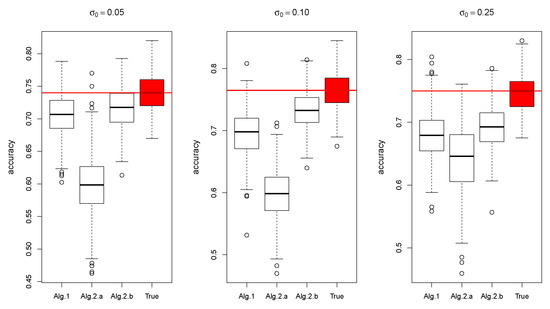

Figure 3 shows boxplots of total accuracy for all the repetitions and for the three algorithms tested, according to the number of critical points. The red items correspond to the theoretical model. Again, the best results correspond to Algorithm 2.b followed by Algorithm 1. Note that more than four critical points were tried, but, either way, for Algorithms 1 and 2.b these two metrics stabilized at .

Figure 3.

Boxplots of the total accuracy obtained with the three algorithms: 1 (top), 2.a (middle), 2.b (bottom). The theoretical value of these metrics is given by the red line. The red boxplot corresponds to the theoretical probabilities.

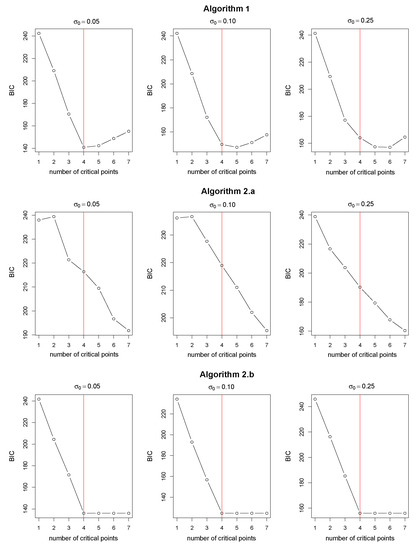

The Bayesian Information Criterion (BIC) for each algorithm is represented versus the number of critical points, for three different values of , in Figure 4. As can be seen, Algorithm 2.a does not work properly: the number of critical points is not detected, and there is a great dispersion in accuracy. Algorithm 2.b reaches a minimum value of BIC at the correct number of critical points , regardless of the value of .

Figure 4.

BIC vs. number of critical points under the Algorithm 1 (left), Algorithm 2.a (middle) and Algorithm 2.b (right), for (top), (middle) and (bottom).

A comparison of the total accuracy for the three algorithms tested with the theoretical model (in red), fixing , for different values of , is shown in Figure 5. A decrease in the accuracy is accompanied by an increase in the standard deviation of the error. Based on the results of the classification for each algorithm it seems that there is a relationship between inaccuracy in the estimation of the critical points and errors in the classification. As before, the best algorithm is Algorithm 2.b, followed by Algorithm 1, while Algorithm 2.a behaves badly. Note that inaccuracy in Algorithm 2.b is mainly due to bias (see right panel of Figure 2), since there is hardly any variability, while for the other two algorithms there is both bias and variability, especially for Algorithm 2.a (see center panel of Figure 2).

Figure 5.

Total accuracy for the three algorithms under study (1, 2.a and 2.b) at (top), (middle) and (bottom). The boxplots on the right (in red) correspond to the theoretical model.

4. Case Study

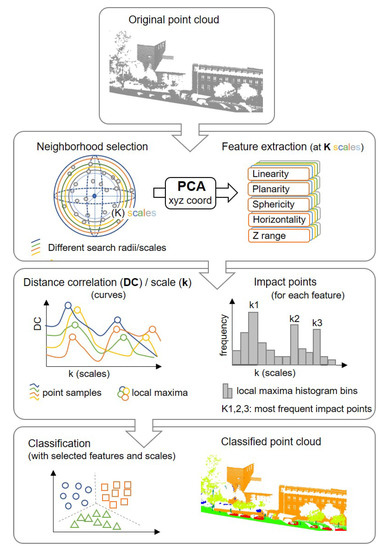

In addition to testing our method on simulated data, we also applied it to a real dataset where the objects to be labeled are elements of an urban environment. Figure 6 shows the operations followed to perform the classification of the point cloud using the proposed methodology.

Figure 6.

Workflow of the proposed methodology to select the optimum scales (impact points) and perform the multiscale classification.

4.1. Dataset and Feature Extraction

The real dataset used to test our approach was the Oakland 3D point cloud [30], a benchmark dataset of 1.6 million points that has been previously used in other studies concerning point cloud segmentation and classification. The objective is to automatically assign a label to each point in the point cloud from a set of features obtained from the coordinates of a training dataset. Specifically, there are six categories (labels) of interest, as is shown in Figure 7.

Figure 7.

Oakland MLS point cloud. The classes to be extracted have been represented in different colors.

The point cloud was collected around the CMU campus in Oakland, Pittsburgh (USA) using a Mobile Laser Scanner (MLS) system that incorporates two-dimensional laser scanners, an Inertial Measurement Unit (IMU) and a Global Navigation Satellite System (GNSS) receiver, all calibrated and mounted on the Navlab 11 vehicle. Figure 7 shows a small part of the point cloud, where a label represented with a color has been assigned to each point. A total of six labels have been considered.

The features representing the local geometry around each point were obtained through the eigendecomposition of the covariance matrix [13,31]:

where vector represents each point in the point cloud, a matrix whose columns are the eigenvectors , , and a diagonal matrix whose non-zero elements are the eigenvalues .
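For reference, the usual form of this decomposition for the N points in a neighborhood can be written as follows. The symbols $\Sigma$, $\mathbf{p}_i$, $\bar{\mathbf{p}}$, $V$ and $\Lambda$ are notation assumed for this sketch, and the normalization by N (rather than N - 1) is also an assumption.

```latex
\Sigma = \frac{1}{N} \sum_{i=1}^{N}
  (\mathbf{p}_i - \bar{\mathbf{p}})(\mathbf{p}_i - \bar{\mathbf{p}})^{\top}
  = V \Lambda V^{\top},
\qquad
\bar{\mathbf{p}} = \frac{1}{N} \sum_{i=1}^{N} \mathbf{p}_i,
\qquad
\Lambda = \operatorname{diag}(\lambda_1, \lambda_2, \lambda_3),
\quad \lambda_1 \ge \lambda_2 \ge \lambda_3 \ge 0
```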

The three eigenvalues and the eigenvector were used to calculate the five features registered in Table 3. The Z range for each point is calculated considering the points in a vertical column of a specific section (scale) around that point. In order to avoid the negative effect of outliers, instead of using the full range of Z coordinates we used the range between the 5th and 95th percentiles. An explanation of the geometrical meaning of these and other local features can be found in [12,16].
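As an illustration of how such features might be computed, the sketch below uses definitions of linearity, planarity and sphericity that are common in the literature; they are assumptions here and may differ from the exact formulas in Table 3. Horizontality is omitted because its formula is not stated in the text. The percentile helper uses linear interpolation, which is also an assumed convention.

```python
import math


def eigen_features(l1, l2, l3):
    """Shape features from eigenvalues ordered as l1 >= l2 >= l3 >= 0.

    Assumed (commonly used) definitions, not confirmed by the source.
    """
    if not (l1 >= l2 >= l3 >= 0.0) or l1 <= 0.0:
        raise ValueError("eigenvalues must satisfy l1 >= l2 >= l3 >= 0 and l1 > 0")
    return {
        "linearity": (l1 - l2) / l1,
        "planarity": (l2 - l3) / l1,
        "sphericity": l3 / l1,
    }


def percentile(sorted_values, q):
    """Linearly interpolated percentile (q in [0, 100]) of sorted data."""
    position = (len(sorted_values) - 1) * q / 100.0
    lower = math.floor(position)
    upper = math.ceil(position)
    weight = position - lower
    return sorted_values[lower] * (1.0 - weight) + sorted_values[upper] * weight


def robust_z_range(z_values, low=5.0, high=95.0):
    """Difference between the high and low percentiles of the Z coordinates."""
    ordered = sorted(z_values)
    return percentile(ordered, high) - percentile(ordered, low)
```

With these definitions the three shape features sum to one, and a single extreme Z value in a column of about one hundred points leaves the robust range unchanged.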

Table 3.

Features extracted from the point cloud.

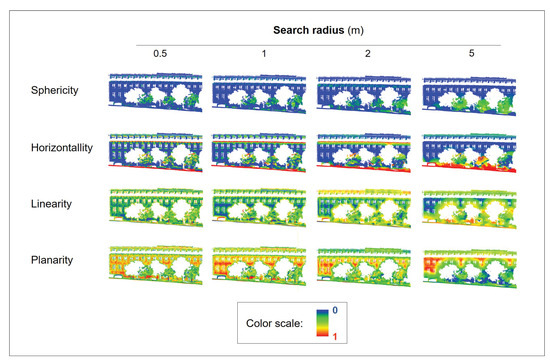

The spatial distribution of these features at different scales is shown in Figure 8.

Figure 8.

Example of the features extracted at different scales.

4.2. Neighborhood Selection

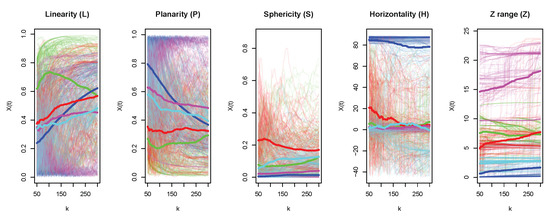

The proposed methodology for optimum scale selection (detection of critical points) was applied to solve the previous classification problem. Thus, we have a vector of input variables representing the features (linearity L, planarity P, sphericity S, horizontality H and Z range Z) measured at different scales, and an output variable , which can also take five discrete values, the labels assigned to each type of object (cars, buildings, canopy, ground and poles). Our aim is to estimate an optimum neighborhood (scale) for each feature by means of distance correlation (DC), taking into account its advantage with respect to the Pearson coefficient. For each sample, each feature was evaluated at a regular grid of scales measured in centimeters in a linear scale from 50 to 300 cm. Figure 9 shows a sample of curves for each feature registered in the interval and the corresponding functional mean , both colored by a class label.

Figure 9.

A sample of curves representing the features measured on scales. Each color represents a label or type of object: poles (green), ground (blue), vegetation (red), buildings (magenta), and vehicles (cyan). Mean values for each class are represented as wider lines.

Note the different behavior of the features for the different classes and scales. For instance, horizontality takes high values for the ground, and it is uniform at different scales. However, this feature shows abrupt jumps at certain scales for the poles, which could correspond to edge effects. As expected, linearity takes high values for the poles and low values for the buildings, while planarity is high for buildings and low for poles.

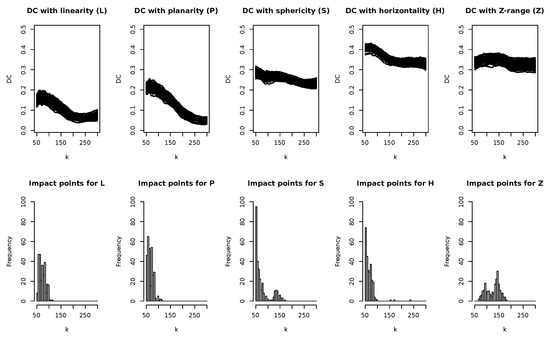

Figure 10 shows the distance correlation functions between a dummy matrix representation of the categorical response and the covariates for 100 repetitions of random samples of size (150 per class), corresponding to each of the features extracted. DC functions were calculated using the fda.usc package [32]. They are quite uniform, with not many peaks, and some of them are close together. This could cause problems in finding the relevant scales, similar to the effect of increasing the standard deviation observed with the simulated data. A histogram of the global maximum of distance correlation curves for those repetitions is depicted at the bottom of the figure. As can be appreciated, most of the relative maximums correspond to low scales (impact points), except for the Z range variable (5th-95th range of z axis).

Figure 10.

Distance correlation functions for each of the features (top) and histogram of critical points. There are 100 curves, and hence the same number of impact points, which were obtained by randomly sampling the data.

Again, smoothing the DC function before searching for the local maxima helped to discriminate the most important points. However, even when the number of local maxima decreases after smoothing DC, some of them have little influence on the classification. In fact, no more than three critical points were needed to obtain the best results of the classification for the different classifiers tested. The three most frequent scales in each histogram are shown in Table 4. As can be seen, the important scales take low values for all the features, with the exception of the Z range, for which important scales correspond to low to medium values.

Table 4.

Most frequent values of scales selected using Algorithm 2.b.

The performance of our approach was contrasted with the proposal in [18], in which the optimal scale was calculated as the minimum of the Shannon entropy E, which depends on the normalized eigenvalues , , of the local covariance matrix :
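A minimal sketch of this entropy-based selection is given below. It assumes the usual eigenentropy form E = -e1 ln e1 - e2 ln e2 - e3 ln e3, with each e_i obtained by dividing an eigenvalue by the sum of the three; the function names and the dictionary interface are hypothetical.

```python
import math


def eigenentropy(eigenvalues):
    """Shannon entropy of the normalized eigenvalues of a covariance matrix."""
    total = sum(eigenvalues)
    normalized = [value / total for value in eigenvalues]
    # Terms with e_i = 0 contribute nothing, following the limit e*ln(e) -> 0.
    return -sum(e * math.log(e) for e in normalized if e > 0.0)


def min_entropy_scale(eigenvalues_by_scale):
    """Scale whose local covariance eigenvalues give the lowest entropy.

    eigenvalues_by_scale maps each candidate scale to a tuple (l1, l2, l3).
    """
    return min(
        eigenvalues_by_scale,
        key=lambda k: eigenentropy(eigenvalues_by_scale[k]),
    )
```

The entropy is largest (ln 3) when the three eigenvalues are equal and approaches zero when one eigenvalue dominates, so this criterion favors the scale at which the neighborhood looks most clearly like a single geometric primitive.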

4.3. Classification

In this step we evaluate how the classifier translates the information of critical points of X (all features) into a classification error. This is quite an interesting question in the functional context or when dealing with high-dimensional spaces. However, new important questions arise related to how representative the selected critical points (scales) shown before are or how to select a useful classifier for the classification problem among all the possibilities. Unfortunately, detecting the critical scales is not a simple task, as was shown in the simulation study by introducing Gaussian errors with standard deviation of different magnitude in a model relating features and classes. The greater the standard deviation, the greater the error in determining the exact values of the relevant scales. This is because these scales correspond to the peaks of , and they are less sharp as the standard deviation of the error increases. In this situation, the best results were obtained when DC was smoothed before searching for the local maxima, and this was the method applied to perform the classification with real data.

Now, we have to deal with a purely multivariate classification problem in dimension (the number of critical points for each functional variable) and many procedures are known to handle this problem (see, for example, [33]). Many classification methods have been described in the literature, but we limit our study to five proven methods that are representative of most of the types of classifiers, linear or non-linear, hard or probabilistic, ensemble or not. Specifically, the chosen methods are: linear discriminant analysis (LDA) [34,35], multiclass logistic regression (LR) [36,37], multiclass support vector machines (SVMs) [38,39], random forest (RF) [22] and feed-forward neural networks with a single hidden layer (ANN) [40]. They are among the top classification algorithms in machine learning, although there are some other classification methods that could be employed here such as, for example, Quadratic Discriminant Analysis (QDA) or Generalized Additive Models (GAMs).

The choice among the different classifiers could be influenced by their theoretical properties and/or the ease of drawing inferences. Better inferences can be drawn from simpler classifiers such as LDA or LR models. Furthermore, as is discussed in [41], such simple classifiers are usually hard to beat in real life scenarios, and they add interpretability to the classification rule, which is sometimes more important than predictability.

We consider three possibilities for the classification, depending on the number of critical scales used, according to Table 4:

- A unique scale (impact point), , for each of the features, corresponding to the most frequent value of the impact points.

- Two scales, , corresponding to the two most frequent critical scales for each of the features.

- Three scales, , corresponding to the three most frequent critical scales for each of the features.

In order to contrast the performance of our approach, the same classification algorithms were applied to the feature values obtained using other scales. Specifically, we used the following values of the scale k:

- , obtained according to Equation (3).

- , linearly spaced scales corresponding to the following values of k in centimeters (cm): .

Training data ( per class) and test data ( per class) were sampled from different areas of the point cloud in order to ensure their independence. Table 5 compares the total accuracy obtained using LR, LDA, SVM, RF and ANN classifiers for all the scales studied: no important discrepancies between them were observed, with SVM having a narrow advantage over the others. A quick look at this table shows that our proposal is better than using sequential scales or . Furthermore, using a multiscale scheme provides a slight improvement in accuracy with respect to using just one scale () for almost all the classifiers. The feature selection and classifier functions available in the R package fda.usc were used with the default parameters (without previous tuning).

Table 5.

Total accuracy (and standard deviation) in % of the classification using five different classifiers depending on the scales evaluated in samples in repetitions. The values were calculated by averaging the total accuracy in each repetition. The numbers in bold represent maximum values.

Table 6 shows the results of the classification for the test sample, in terms of precision and recall, for each of the scales tested, using a multinomial logistic regression LR and SVM classifiers. We limited the results to these two classifiers because there are few differences with the other three classifiers. Although some non-optimal scales provided the best results for some types of objects (ground and buildings), in global terms it can be concluded that the largest values of precision and recall correspond to and , and that they are practically the same in both cases.

Table 6.

Metrics (precision and recall) of the classification by classes using LR and SVM for different scales in a test sample . The numbers in bold represent maximum values in each column. Both metrics correspond to the average values in 100 repetitions.

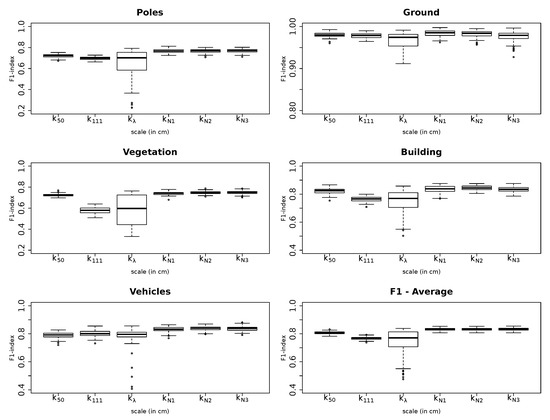

Figure 11 represents boxplots of the F1-index for the LR classifier, for each of the classes depending on the scale. The plot at the bottom right is the average value of F1 for the five classes. With a few exceptions, the highest F1 values correspond to the case where two () or three () optimum scales for each feature were used. However, the values of the median and the interquartile range are almost the same, so we cannot claim that there are significant differences between both options. For its part, led to the worst performance with low mean values and great dispersion because sometimes the extreme values of the scale were selected.

Figure 11.

F1 index for each class and average F1 for all the classes (bottom right).
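For reference, the per-class precision, recall and F1 values reported above follow the standard definitions, which can be sketched as below. This is an illustrative helper with a hypothetical name, not the code used to produce Table 6 or Figure 11.

```python
def per_class_metrics(y_true, y_pred):
    """Precision, recall and F1 for every class found in the labels."""
    metrics = {}
    pairs = list(zip(y_true, y_pred))
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        if precision + recall:
            f1 = 2.0 * precision * recall / (precision + recall)
        else:
            f1 = 0.0
        metrics[label] = {"precision": precision, "recall": recall, "f1": f1}
    return metrics
```

The average F1 shown in the bottom right panel corresponds to the unweighted mean of the per-class F1 values, assuming a macro average is what is plotted.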

A drawback for the practical implementation of the proposed algorithms, especially for Algorithm 1, is the memory consumption. The overall consumption of memory when storing the distance matrices for p covariates with n elements each is . As an example, Table 7 shows the maximum memory consumed (in megabytes) and the execution time (in seconds) when the algorithms are executed for different sample sizes and two different levels of error on an Intel Core i7-1065G7 with 16 GB of RAM.

Table 7.

Computation times (in sec) and memory consumption (in MB) for computing the three algorithms using different parameters in repetitions.
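A rough idea of how memory grows with the sample size can be obtained with the back-of-the-envelope estimate below. It assumes that each of the p distance matrices is stored densely as n × n double-precision values, which is an assumption about the implementation rather than a description of it; the function name is hypothetical.

```python
def distance_matrix_memory_mb(n, p, bytes_per_value=8):
    """Approximate memory in megabytes for p dense n-by-n distance matrices."""
    return p * n * n * bytes_per_value / 1024**2
```

Under this assumption the cost is linear in the number of covariates but quadratic in the sample size, so doubling n multiplies the memory needed by four.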

5. Conclusions

In this work we propose three different algorithms to select optimum scales in multiscale classification problems with machine learning. They are based on determining the arguments (scales) of the features with high distance correlation with the labels assigned to the objects. Distance correlation provides a measure of the association, linear or non-linear, between two random vectors of arbitrary dimensions, so it was expected that high correlations correspond to low classification errors. First, the proposed algorithms were tested by simulating the distance correlation function and its relationship with the labels. The results were encouraging, supporting the validity of our proposal, and allowing us to establish the order of performance of the three algorithms. The best results were obtained when the distance correlation functions for each feature were smoothed before calculating the local maxima. Then, the algorithm that provided the best results with the simulated data was tested in a real classification problem involving a 3D point cloud collected using a mobile laser scanning system. Determining the optimum scales simplifies the classification problem for other point clouds, since they give us important information to limit the scales at which the features are determined, assuming the quality and density of the point clouds are similar to those of the training data and, of course, that we are classifying the same type of objects. The results obtained for the real problem were also positive, outperforming those obtained with other methods reported in the literature that use unique sequentially defined scales or the Shannon entropy. A maximum of three scales for each feature was sufficient to obtain the best results in the classification, measured in terms of precision, recall and F1-index. In addition, no significant differences were found between the five classifiers tested.

Author Contributions

Conceptualization, C.O.; Software, M.O.-d.l.F.; Methodology, M.O.-d.l.F. and J.R.-P.; Resources, C.C.; Data preparation, C.C.; Validation, M.O.-d.l.F. and J.R.-P.; Writing-original draft, C.O. and J.R.-P.; Writing-review & editing, C.C., C.O. and M.O.-d.l.F. All authors have read and agreed to the published version of the manuscript.

Funding

Manuel Oviedo-de la Fuente acknowledges financial support from (1) CITIC, a Research Center accredited by the Galician University System, funded by the “Consellería de Cultura, Educación e Universidade” of the Xunta de Galicia, with 80% supported through the European Regional Development Fund (ERDF), Operational Programme Galicia 2014-2020, and the remaining 20% by the “Secretaría Xeral de Universidades” (Grant ED431G 2019/01); (2) the Spanish Ministry of Science, Innovation and Universities, grant project MTM2016-76969-P; and (3) the Xunta de Galicia (Grupos de Referencia Competitiva ED431C-2020-14), all of them through the ERDF. Celestino Ordóñez acknowledges support from the University of Oviedo (Spain) for recognized research groups. Javier Roca-Pardiñas acknowledges financial support from Grant MTM2017-89422-P (MINECO/AEI/FEDER, UE).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data source is cited in the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bonneau, D.A.; Hutchinson, D.J. The use of terrestrial laser scanning for the characterization of a cliff-talus system in the Thompson River Valley, British Columbia, Canada. Geomorphology 2019, 327, 598–609.

- Zhou, J.; Fu, X.; Zhou, S.; Zhou, J.; Ye, H.; Nguyen, H.T. Automated segmentation of soybean plants from 3D point cloud using machine learning. Comput. Electron. Agric. 2019, 162, 143–153.

- Xie, Y.; Tian, J.; Zhu, X.X. Linking Points with Labels in 3D: A Review of Point Cloud Semantic Segmentation. IEEE Geosci. Remote Sens. Mag. 2020, 8, 38–59.

- de Oliveira, L.M.C.; Lim, A.; Conti, L.A.; Wheeler, A.J. 3D Classification of Cold-Water Coral Reefs: A Comparison of Classification Techniques for 3D Reconstructions of Cold-Water Coral Reefs. Front. Mar. Sci. 2021, 8, 640713.

- Brodu, N.; Lague, D. 3D terrestrial lidar data classification of complex natural scenes using a multi-scale dimensionality criterion: Applications in geomorphology. ISPRS J. Photogramm. Remote Sens. 2012, 68, 121–134.

- Lee, I.; Schenk, T. Perceptual organization of 3D surface points. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2002, 34, 193–198.

- Linsen, L.; Prautzsch, H. Local versus global triangulations. Proc. Eurographics 2001, 1, 257–263.

- Niemeyer, J.; Rottensteiner, F.; Soergel, U. Contextual classification of lidar data and building object detection in urban areas. ISPRS J. Photogramm. Remote Sens. 2014, 87, 152–165.

- Sreevalsan-Nair, J.; Jindal, A.; Kumari, B. Contour extraction in buildings in airborne lidar point clouds using multiscale local geometric descriptors and visual analytics. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 2320–2335.

- Mallet, C.; Bretar, F.; Roux, M.; Soergel, U.; Heipke, C. Relevance assessment of full-waveform lidar data for urban area classification. ISPRS J. Photogramm. Remote Sens. 2011, 66, 71–84.

- Weinmann, M.; Jutzi, B.; Mallet, C. Feature relevance assessment for the semantic interpretation of 3D point cloud data. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, 5, 1.

- Dittrich, A.; Weinmann, M.; Hinz, S. Analytical and numerical investigations on the accuracy and robustness of geometric features extracted from 3D point cloud data. ISPRS J. Photogramm. Remote Sens. 2017, 126, 195–208.

- Thomas, H.; Goulette, F.; Deschaud, J.E.; Marcotegui, B.; LeGall, Y. Semantic classification of 3D point clouds with multiscale spherical neighborhoods. In Proceedings of the 2018 International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; pp. 390–398.

- Du, J.; Jiang, Z.; Huang, S.; Wang, Z.; Su, J.; Su, S.; Wu, Y.; Ca, G. Point Cloud Semantic Segmentation Network Based on Multi-Scale Feature Fusion. Sensors 2021, 21, 1625.

- Kumar, S.; Raval, S.; Banerjee, B. A robust approach to identify roof bolts in 3D point cloud data captured from a mobile laser scanner. Int. J. Min. Sci. Technol. 2021, 31, 303–312.

- Demantké, J.; Mallet, C.; David, N.; Vallet, B. Dimensionality based scale selection in 3D lidar point clouds. In Proceedings of the Laserscanning 2011, Calgary, AB, Canada, 29–31 August 2011.

- Shannon, C. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

- Weinmann, M.; Jutzi, B.; Hinz, S.; Mallet, C. Semantic point cloud interpretation based on optimal neighborhoods, relevant features and efficient classifiers. ISPRS J. Photogramm. Remote Sens. 2015, 105, 286–304.

- Mitra, N.J.; Nguyen, A. Estimating surface normals in noisy point cloud data. In Proceedings of the Nineteenth Annual Symposium on Computational Geometry, San Diego, CA, USA, 8–10 June 2003; pp. 322–328.

- Blomley, R.; Weinmann, M.; Leitloff, J.; Jutzi, B. Shape distribution features for point cloud analysis-a geometric histogram approach on multiple scales. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, 2, 9.

- Gadat, S.; Younes, L. A stochastic algorithm for feature selection in pattern recognition. J. Mach. Learn. Res. 2007, 8, 509–547.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B Methodol. 1996, 58, 267–288.

- Bommert, A.; Sun, X.; Bischl, B.; Rahnenführer, J.; Lang, M. Benchmark for filter methods for feature selection in high-dimensional classification data. Comput. Stat. Data Anal. 2020, 143, 106839.

- Comon, P. Independent component analysis, a new concept? Signal Process. 1994, 36, 287–314.

- de la Fuente, M.O.; Cabo, C.; Ordóñez, C.; Roca-Pardiñas, J. Optimum Scale Selection for 3D Point Cloud Classification through Distance Correlation. In International Workshop on Functional and Operatorial Statistics (IWFOS); Springer: Berlin/Heidelberg, Germany, 2020; pp. 213–220.

- Székely, G.J.; Rizzo, M.L.; Bakirov, N.K. Measuring and testing dependence by correlation of distances. Ann. Stat. 2007, 35, 2769–2794.

- Székely, G.J.; Rizzo, M.L. Partial distance correlation with methods for dissimilarities. Ann. Stat. 2014, 42, 2382–2412.

- Berrendero, J.R.; Cuevas, A.; Torrecilla, J.L. Variable selection in functional data classification: A maxima-hunting proposal. Stat. Sin. 2016, 26, 619–638.

- Munoz, D.; Bagnell, J.A.; Vandapel, N.; Hebert, M. Contextual classification with functional max-margin markov networks. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 975–982.

- Ordóñez, C.; Cabo, C.; Sanz-Ablanedo, E. Automatic detection and classification of pole-like objects for urban cartography using mobile laser scanning data. Sensors 2017, 17, 1465.

- Febrero Bande, M.; Oviedo de la Fuente, M. Statistical Computing in Functional Data Analysis: The R Package fda.usc. J. Stat. Softw. 2012, 51, 1–28.

- Ripley, B.D. Pattern Recognition and Neural Networks; Cambridge University Press: Cambridge, UK, 2007.

- Fisher, R.A. The use of multiple measurements in taxonomic problems. Ann. Eugen. 1936, 7, 179–188.

- Aguilera-Morillo, M.C.; Aguilera, A.M. Multi-class classification of biomechanical data: A functional LDA approach based on multi-class penalized functional PLS. Stat. Model. 2020, 20, 592–616.

- Cox, D.R. The regression analysis of binary sequences. J. R. Stat. Soc. Ser. B Methodol. 1958, 20, 215–232.

- Zelen, M. Multinomial response models. Comput. Stat. Data Anal. 1991, 12, 249–254.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Crammer, K.; Singer, Y. On the algorithmic implementation of multiclass kernel-based vector machines. J. Mach. Learn. Res. 2001, 2, 265–292.

- Venables, W.N.; Ripley, B.D. Modern Applied Statistics with S; Springer: Berlin/Heidelberg, Germany, 2002.

- Hand, D.J. Classifier technology and the illusion of progress. Stat. Sci. 2006, 21, 1–14.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).