Abstract

The problem of out-of-focus image restoration can be modeled as an ill-posed integral equation, which can be regularized into a second-kind integral equation using the Tikhonov method. The multiscale collocation method with a compression strategy has already been developed to discretize this well-posed equation. However, computing the integrals and solving the large multiscale collocation system are both time-consuming. To overcome these difficulties, we propose a fully discrete multiscale collocation method that uses an integral approximation strategy to compute the integrals; this strategy efficiently converts integral evaluation into matrix operations and reduces costs. In addition, we propose a multilevel iteration method (MIM) to solve the fully discrete integral equation obtained from the integral approximation strategy. We give a stopping criterion for the MIM and analyze its computational complexity. Furthermore, we develop an a posteriori parameter choice strategy for this method and establish the resulting convergence order. Three numerical experiments demonstrate the performance and computational efficiency of the proposed methods.

1. Introduction

Continuous integral equations are often used to model practical problems in image processing. However, discrete models are often used instead because they are much easier and more convenient to implement than continuous integral models. Discrete models are piecewise constant approximations of integral equation models, and they introduce a model error that acts as a bottleneck and cannot be removed by any subsequent image processing method. To overcome this accuracy deficiency of conventional discrete reconstruction models, we restore images directly with continuous models, which are more consistent with physical laws. This idea first appeared in [1] and has been widely used, for example in [2,3,4]. In addition to being more physically meaningful, continuous models can be discretized with higher-order accuracy, so the model error can be significantly reduced compared with piecewise constant discretization, especially in image enlargement.

Many researchers have made great contributions to the solution of integral equations; however, many difficulties remain. First, since integral kernels are normally smooth, the corresponding integral operators are compact on Banach spaces, so the solutions of the associated first-kind integral equations do not depend continuously on the known data. To overcome this problem, the Tikhonov [5] and Lavrentiev [6] regularization methods were proposed to regularize the ill-posed integral equation into a second-kind integral equation. Second, the Tikhonov-regularized equation contains a composition of integral operators, which greatly increases the computation time. To reduce this high computational cost, a coupled system that involves the integral operator only once was proposed in [7]. The collocation method [8] and the Galerkin method [9] were proposed to discretize the coupled system; of the two, the collocation method is much easier to implement. The third problem is that discretizing the coupled system with the collocation method generates a dense coefficient matrix. To overcome this issue, Chen et al. [10] represented the integral operator in a multiscale basis, and a matrix compression technique [1,11] is then used to approximate the resulting coefficient matrix by a sparse one without affecting the existing convergence order. Finally, the regularization parameter must be chosen appropriately (see [12,13,14,15,16,17]) to balance approximation accuracy against well-posedness.

The purpose of this paper is to solve the second-kind integral equation efficiently, building on the achievements of previous researchers. Although the equivalent coupled system reduces the computational complexity caused by the composition of integral operators, this is insufficient because computing the integrals still takes a lot of time. Therefore, inspired by [18], we propose a fully discrete multiscale collocation method that uses an integral approximation strategy to compute the integrals. The strategy uses the Gaussian quadrature formula to compute the sparse coefficient matrix efficiently: with piecewise Gauss–Legendre quadrature, the calculation of the integrals becomes a matrix operation, which greatly reduces the computation time. Another challenge is that directly solving the large, fully discrete system obtained from the matrix compression strategy is time-consuming. Inspired by [19], we propose a multilevel iteration method (MIM) to solve this large, fully discrete coupled system, and we analyze its computational complexity. We also propose a stopping criterion for the iteration and prove that it preserves the existing convergence rate. Finally, we adopt an a posteriori choice of the regularization parameter related to the MIM and show that it leads to an optimal convergence order.

The remainder of this paper is organized as follows. In Section 2, an overview flowchart is displayed first; then the first-kind integral equation model for reconstructing an out-of-focus image is reviewed, and an equivalent coupled system is derived. In Section 3, we present the fast multiscale collocation method, which discretizes the coupled system using piecewise polynomial spaces, together with a compression strategy that generates a sparse coefficient matrix so that the coupled equation is easy to solve. We then propose an integral approximation strategy to compute the nonzero entries of the compressed coefficient matrix, which turns the evaluation of integrals into matrix computations, and we provide a convergence analysis of the proposed method. In Section 4, we propose a multilevel iteration method, corresponding to the multiscale method, to solve the integral equations, and we give a complete analysis of the convergence rate of the resulting approximate solution. Section 5 presents an a posteriori choice of the regularization parameter related to the multilevel iteration method, and we prove that the MIM combined with this a posteriori parameter choice yields an optimal convergence order. In Section 6, we report three comparative tests to verify the efficiency of the two proposed methods. The first test compares the time needed to compute the coefficient matrix with the integral approximation strategy and with the numerical quadrature scheme in [1]. The other two tests compare the MIM with the Gaussian elimination method and with the multilevel augmentation method, respectively. These tests demonstrate the high efficiency of the proposed methods.

2. The Integral Equation Model for Image Restoration

In this section, we first depict the overall process of reconstructing out-of-focus images and introduce some common notation used throughout the paper. Then, in the second subsection, we formulate our image restoration problem as an integral equation.

2.1. System Overview

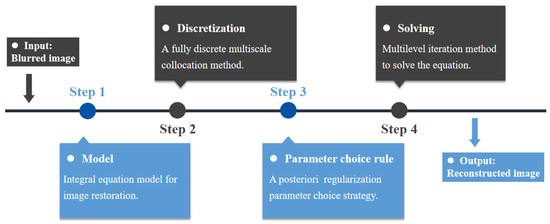

We present an overview flowchart for reconstructing an out-of-focus image in Figure 1. The process consists of four main parts: modeling, discretization, the parameter choice rule, and solving the equation.

Figure 1.

Overview flowchart.

We formulate the input out-of-focus image as a Tikhonov-regularized integral equation, as described in the next subsection. Solving this integral equation requires discretization, for which we propose a fully discrete multiscale collocation method based on an integral approximation strategy; this method converts the calculation of the integrals into matrix operations. The last two parts are the parameter choice rule and the solution of the equation. In practice, these two parts are executed together as a whole; we describe them separately only because describing them together would be too complicated. For a clearer presentation, we first describe the multilevel iteration method in Section 4, assuming that a good regularization parameter has already been selected, and then describe how this parameter is chosen in Section 5.

Some notation is needed. Suppose that represents d-dimensional Euclidean space. In addition, and denote subsets of . is a special kind of space of when , and it is a Banach space. We use x, with or without upper and lower indices, to denote the solution of the equation. Similarly, we use y, with or without upper and lower indices, to denote the known data of the equation. Furthermore, the operator represents the blurring kernel, and represents its adjoint operator. The notation denotes the direct sum of spaces A and B when . The notation means that a and b are of the same order. denotes the range of the operator. Finally, extended and new notation not declared here is defined where it is first used.

2.2. Model Definition

In this subsection, we describe an integral equation model obtained from a reformulation of the Tikhonov regularization equation for image restoration. In addition, an equivalent coupled system is developed for solving this integral equation quickly and efficiently.

Assume that the whole image is the support set. Images are usually rectangular. Let denote the image domain, which is also the support set. The image restoration problem can be modeled by a continuous integral equation of the first kind

where y is the blurred image, and we aim to reconstruct from y a clearer image x, which is therefore also called the reconstructed image. The blurring operator in (1) is a linear compact operator defined in terms of a kernel g by

Let , with . The images may not be in , but can be shaped to by scaling and shifting. According to [20], the out-of-focus image is usually modeled with the kernel

where , , and is the parameter characterizing the degree of blur in the kernel.

The blurring kernel (3) is symmetric and separable, so the two-dimensional integral operator can be written as a composition of two univariate integral operators. Consequently, solving Equation (1) is equivalent to solving the following system:

In this system, one unknown is the final reconstruction and the other is an intermediate result. The two equations mean that we can first process all rows of the image (first equation) and then all columns (second equation), which reduces a two-dimensional problem to a sequence of one-dimensional ones. Furthermore, as the two equations in (4) show, rows and columns are processed in the same way, and both steps can be formulated as the one-dimensional integral equation:

For , is the linear compact operator defined by

with

and is the observed function. Hence, we focus on Equation (5) in the following illustration.
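As a rough illustration of this row-then-column splitting, the sketch below discretizes a Gaussian blur with the midpoint rule on a pixel grid over [0, 1] × [0, 1] and checks that blurring every row and then every column reproduces the direct two-dimensional sum. The Gaussian form and its normalization, the midpoint discretization, and the helper names are assumptions made for illustration only; the paper itself discretizes with the multiscale collocation scheme of Section 3.

```python
import numpy as np


def gaussian_kernel_matrix(n, sigma):
    """Midpoint-rule matrix of an (assumed) 1-D Gaussian kernel on [0, 1]."""
    t = (np.arange(n) + 0.5) / n                      # cell midpoints
    d = t[:, None] - t[None, :]
    g = np.exp(-d**2 / (2.0 * sigma**2)) / (np.sqrt(2.0 * np.pi) * sigma)
    return g / n                                      # 1/n is the quadrature weight


def blur_separable(x, sigma):
    """Blur every row first, then every column, with two 1-D operators."""
    n_rows, n_cols = x.shape
    k_row = gaussian_kernel_matrix(n_cols, sigma)     # acts along each row
    k_col = gaussian_kernel_matrix(n_rows, sigma)     # acts along each column
    intermediate = x @ k_row.T                        # first equation: all rows
    return k_col @ intermediate                       # second equation: all columns


def blur_direct(x, sigma):
    """Reference: direct 2-D midpoint sum with the product Gaussian kernel."""
    n_rows, n_cols = x.shape
    u = (np.arange(n_rows) + 0.5) / n_rows
    v = (np.arange(n_cols) + 0.5) / n_cols
    y = np.empty_like(x)
    for i in range(n_rows):
        for j in range(n_cols):
            r2 = (u[i] - u)[:, None] ** 2 + (v[j] - v)[None, :] ** 2
            w = np.exp(-r2 / (2.0 * sigma**2)) / (2.0 * np.pi * sigma**2)
            y[i, j] = np.sum(w * x) / (n_rows * n_cols)
    return y


rng = np.random.default_rng(0)
image = rng.random((12, 16))
print(np.allclose(blur_separable(image, 0.05), blur_direct(image, 0.05)))  # True
```

The separable version costs two matrix products instead of a four-fold loop, which is the practical payoff of treating rows and columns as independent one-dimensional problems.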

Equation (5) is ill-posed and therefore difficult to solve directly, but Tikhonov regularization can handle this problem. Applying the Tikhonov regularization method, we obtain the following equation:

where is the regularization parameter, and is the adjoint operator of . It is easy to prove that the operator is invertible. In addition, is usually noisy data, with

for a noise level . denotes the solution of Equation (6) when y is replaced by . is self-adjoint; that is, . Note that Equation (6) includes , which is defined by a twofold integral whose evaluation is computationally very expensive. By letting , the authors of [7] split Equation (6) into a coupled system:

The two formulations were proven to be equivalent in [7], and the advantage of system (8) is that it involves only a single integral operator. Thus, instead of Equation (6), we solve system (8) to reduce the computational cost.
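To make the equivalence concrete, the sketch below uses a small matrix analogue: a midpoint-rule matrix K stands in for the integral operator and its transpose for the adjoint. It solves the Tikhonov normal equation (αI + KᵀK)x = Kᵀy directly and through a block system obtained by introducing v = Kx, in which each block applies K or Kᵀ only once. This particular splitting is an illustrative choice (the exact form and scaling used in [7] may differ), and the Gaussian kernel, grid, and noise model are assumptions.

```python
import numpy as np


def gaussian_kernel_matrix(n, sigma):
    """Midpoint-rule matrix of an (assumed) 1-D Gaussian kernel on [0, 1]."""
    t = (np.arange(n) + 0.5) / n
    d = t[:, None] - t[None, :]
    return np.exp(-d**2 / (2.0 * sigma**2)) / (np.sqrt(2.0 * np.pi) * sigma * n)


def tikhonov_normal(K, y, alpha):
    """Solve (alpha I + K^T K) x = K^T y; forming K^T K mirrors the twofold integral."""
    n = K.shape[1]
    return np.linalg.solve(alpha * np.eye(n) + K.T @ K, K.T @ y)


def tikhonov_coupled(K, y, alpha):
    """Equivalent block system with v = K x:
        alpha x + K^T v = K^T y
        K x     -     v = 0
    """
    m, n = K.shape
    A = np.block([[alpha * np.eye(n), K.T],
                  [K, -np.eye(m)]])
    rhs = np.concatenate([K.T @ y, np.zeros(m)])
    return np.linalg.solve(A, rhs)[:n]                # keep only the x-part


n, sigma, alpha, delta = 64, 0.05, 1e-3, 1e-3
K = gaussian_kernel_matrix(n, sigma)
t = (np.arange(n) + 0.5) / n
x_true = np.sin(np.pi * t)
noise = np.random.default_rng(1).standard_normal(n)
y_delta = K @ x_true + delta * noise / np.linalg.norm(noise)   # ||y_delta - y|| = delta
x1 = tikhonov_normal(K, y_delta, alpha)
x2 = tikhonov_coupled(K, y_delta, alpha)
print(np.allclose(x1, x2))  # True
```

Eliminating v from the second block row gives v = Kx, and substituting into the first row recovers the normal equation, which is why the two solutions agree.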

In the next section, we apply a fully discrete method to solve system (8).

3. Fully Discrete Multiscale Collocation Method

In this section, a multiscale method is reviewed first, and then we develop a fully discrete formulation of the multiscale collocation method using an integral approximation strategy. By using this strategy, we turn the computation of the integral into a matrix operation, which will greatly reduce the calculation time.

3.1. Multiscale Collocation Method

Let us begin with a quick review of the fast collocation method [10,21,22,23,24] for solving system (8).

We denote by the set of natural numbers. For , let . Let , denote the subspaces of . Let . More precisely,

A basis construction of can be found in [25,26]. Suppose that is an orthogonal basis of . Let , where is the dimension of . According to (9), we get

In addition, we need multiscale collocation functionals and collocation points. Suppose that is the collocation functional. We also have a sequence of collocation points in E. We define an interpolation projection and an orthogonal projection .

For , let . The multiscale collocation method for system (8) is to find the solutions

and

of the system

For , after the introduction of three definitions,

Equation (9) has the matrix form

Note that is a dense matrix, and its compression is a key ingredient of our method. Following the compression strategy of [7], we obtain the compressed matrix :

Thus, substituting with , we finally need to solve the equation

3.2. Integral Approximation Strategy

The main cost of computing system (11) lies in evaluating the integral operator. We now address this problem by developing an integral approximation strategy, based on the Gaussian quadrature formula, for this coupled system.

Note that the integral operator is

and the orthogonal basis functions are all piecewise polynomial functions. Thus, the piecewise Gauss–Legendre quadrature is used here.

Integral approximation strategy: According to the supports of the basis functions, we divide E equally into parts. Let , for ; then, . Each basis function is continuous on . We then choose Gaussian points in each part and let . Taken in order, all Gaussian points form the set G of piecewise Gaussian points. Let ; then, . Thus, the integral over with accuracy can be written as

where represents a Gaussian point in , and represents the weight corresponding to . Further, the integral over E with accuracy is

which can be written as the vector product

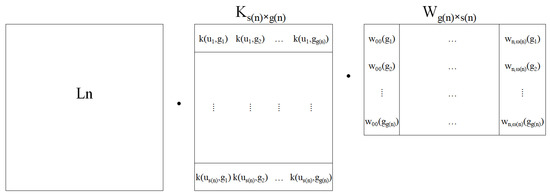

Furthermore, as shown in Figure 2, we can use this strategy to approximate in the matrix form

where is the weighted basis function matrix: its entries are the values of all basis functions at all piecewise Gaussian points in G, multiplied by the corresponding weights . denotes the matrix representation of the point evaluation functional ; see [26] for details.

Figure 2.

The components of matrix .
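The sketch below illustrates how the integral approximation strategy turns a whole table of integrals into one matrix product: the kernel is sampled at (collocation point, Gauss point) pairs, the basis functions are sampled at the Gauss points and scaled by the quadrature weights, and the two matrices are multiplied. The Gaussian kernel, the hat-function basis, the collocation points, and all function names are illustrative assumptions; they are not the multiscale basis or the point-evaluation matrices of [26]. As in the strategy above, the quadrature parts are aligned with the cells on which the basis functions are polynomial.

```python
import numpy as np


def piecewise_gauss(n_parts, m, a=0.0, b=1.0):
    """Nodes G and weights of m-point Gauss-Legendre rules on n_parts equal parts."""
    x, w = np.polynomial.legendre.leggauss(m)         # reference rule on [-1, 1]
    edges = np.linspace(a, b, n_parts + 1)
    half = 0.5 * (edges[1:] - edges[:-1])
    mid = 0.5 * (edges[1:] + edges[:-1])
    nodes = (mid[:, None] + half[:, None] * x[None, :]).ravel()
    weights = (half[:, None] * w[None, :]).ravel()
    return nodes, weights


def hat_basis(points, n_cells):
    """Hypothetical piecewise linear (hat) basis on n_cells equal cells of [0, 1].

    Row j holds basis function j evaluated at all points.
    """
    knots = np.linspace(0.0, 1.0, n_cells + 1)
    return np.maximum(1.0 - np.abs(points[None, :] - knots[:, None]) * n_cells, 0.0)


def kernel(t, s, sigma):
    return np.exp(-(t - s) ** 2 / (2.0 * sigma**2)) / (np.sqrt(2.0 * np.pi) * sigma)


def collocation_matrix(t, n_cells, m, sigma, refine=1):
    """A[i, j] ~ integral over [0, 1] of kernel(t_i, s) * phi_j(s) ds, as one product."""
    G, w = piecewise_gauss(n_cells * refine, m)       # parts aligned with basis cells
    k_tg = kernel(t[:, None], G[None, :], sigma)      # kernel at (collocation, Gauss) pairs
    weighted_basis = w[:, None] * hat_basis(G, n_cells).T   # one row per Gauss point
    return k_tg @ weighted_basis


t = np.linspace(0.0, 1.0, 17)                         # collocation points = knots
A = collocation_matrix(t, n_cells=16, m=3, sigma=0.1)
A_ref = collocation_matrix(t, n_cells=16, m=8, sigma=0.1, refine=4)
print(np.max(np.abs(A - A_ref)))                      # small quadrature error
```

Because every entry comes out of a single dense product, the cost is dominated by one matrix multiplication rather than by many separate calls to a scalar quadrature routine.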

To combine this strategy with the matrix compression strategy, we write Equation (13) in block form. This gives the matrix representation , which approximates by means of the integral approximation strategy.
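The compression strategy of [7] uses level-dependent truncation parameters that are not reproduced here. As a simplified stand-in, the sketch below represents a midpoint-rule Gaussian kernel matrix in an orthonormal Haar basis (piecewise constant wavelets, simpler than the piecewise linear multiscale basis of [25,26]) and zeroes every entry below a single global threshold tau. Each dropped entry is smaller than tau, so the Frobenius norm of the truncation error is at most tau·n. The kernel, the basis, and the threshold are all assumptions.

```python
import numpy as np


def haar_matrix(n):
    """Orthonormal Haar basis as rows, ordered coarse to fine; n must be a power of 2."""
    H = np.array([[1.0]])
    while H.shape[0] < n:
        m = H.shape[0]
        coarse = np.kron(H, [1.0, 1.0])               # refine the existing functions
        detail = np.kron(np.eye(m), [1.0, -1.0])      # new finest-level wavelets
        H = np.vstack([coarse, detail]) / np.sqrt(2.0)
    return H


def gaussian_kernel_matrix(n, sigma):
    """Midpoint-rule matrix of an (assumed) 1-D Gaussian kernel on [0, 1]."""
    t = (np.arange(n) + 0.5) / n
    d = t[:, None] - t[None, :]
    return np.exp(-d**2 / (2.0 * sigma**2)) / (np.sqrt(2.0 * np.pi) * sigma * n)


def compress(A, tau):
    """Set to zero every entry with |a_ij| < tau (one global threshold)."""
    return np.where(np.abs(A) >= tau, A, 0.0)


n, sigma, tau = 128, 0.05, 1e-6
H = haar_matrix(n)
K_multi = H @ gaussian_kernel_matrix(n, sigma) @ H.T   # dense matrix, multiscale basis
K_comp = compress(K_multi, tau)
kept = np.count_nonzero(K_comp)
print(f"kept {kept} of {K_multi.size} entries")
print("Frobenius error:", np.linalg.norm(K_multi - K_comp))   # at most tau * n
```

Entries coupling fine-level wavelets whose supports are far apart are negligibly small for a smooth kernel, which is what makes truncation worthwhile; a level-dependent threshold, as in [7], is what preserves the convergence order.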

Then, Equation (10) becomes a formulation of the fully discrete multiscale collocation equation:

where is as given in Equation (14).

Let , . Assume that is the operator corresponding to the matrix representation using the compression strategy, and define . Additionally, let represent the operator with respect to the matrix representation using the integral approximation strategy and the compression strategy, and then define . Therefore, corresponding to Equation (11), system (9) becomes

With the integral approximation strategy, computing the integrals reduces to the matrix multiplication (14), which greatly reduces the computational cost.

Next, we estimate the convergence order of the fast multiscale collocation method using the integral approximation strategy. Unless explicitly stated otherwise, c denotes a generic constant whose value may differ from one occurrence to the next.

We assume that there exists a constant M such that . According to [5,12], for any , is invertible. We also have the inequality

Assume that is the exact solution of Equation (1), and .

(H1) If with , then there exists an such that .

Following from [5,27], if hypothesis (H1) holds, then we have the convergence rate

Suppose that . In order to estimate the convergence rate of the integral approximation strategy, we propose the following hypothesis:

(H2) For all and some positive constants r,

This hypothesis holds when is a Fredholm integral operator whose kernel has an rth derivative.

Following [28], we have the following remark.

Remark 1.

Let for . The Gauss-Legendre quadrature of function on is:

where is a Gaussian point on . The remainder of the Gauss–Legendre quadrature is given as

where , and for . We can conclude that:

Note that when m Gaussian points are used, the accuracy of the Gauss–Legendre quadrature is . Based on Remark 1, we give the following proposition.

Proposition 1.

Assume that the integral accuracy m satisfies

Then, we can obtain the conclusion that

Proof.

Assume that , the operator . Because polynomials are infinitely differentiable, we obtain for . From Remark 1, we obtain the following inequality:

Summing inequality (21) over all subintervals, we obtain

Thus, the proof has been completed. □

Note that the Gaussian function is infinitely differentiable; thus, assumption (19) is easy to satisfy.
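For reference, the classical remainder of the m-point Gauss–Legendre rule on [a, b] for f ∈ C^{2m}[a, b] is $\frac{(b-a)^{2m+1}(m!)^4}{(2m+1)[(2m)!]^3} f^{(2m)}(\xi)$ for some ξ ∈ (a, b). Summing this over N parts of width h = (b − a)/N gives a composite error of order h^{2m}, which is the mechanism behind Proposition 1. The sketch below checks this rate numerically; the test function e^s on [0, 1] is an arbitrary choice, used only because its exact integral e − 1 is known.

```python
import numpy as np


def composite_gauss(f, n_parts, m, a=0.0, b=1.0):
    """Composite m-point Gauss-Legendre rule on n_parts equal parts of [a, b]."""
    x, w = np.polynomial.legendre.leggauss(m)
    edges = np.linspace(a, b, n_parts + 1)
    half = 0.5 * (edges[1:] - edges[:-1])
    mid = 0.5 * (edges[1:] + edges[:-1])
    nodes = mid[:, None] + half[:, None] * x[None, :]
    return float(np.sum(half[:, None] * w[None, :] * f(nodes)))


m = 2
exact = np.e - 1.0                                    # integral of exp over [0, 1]
errors = [abs(composite_gauss(np.exp, N, m) - exact) for N in (4, 8, 16)]
ratios = [errors[k] / errors[k + 1] for k in range(len(errors) - 1)]
print(ratios)   # each ratio should be close to 2**(2*m) = 16
```

Halving h reduces the error by roughly 2^{2m}, so increasing m is a cheap way to meet an accuracy requirement such as condition (19) when the kernel is smooth.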

Theorem 1.

Let , and . If hypothesis (H2) and condition (19) are true, then for ,

Proof.

First, ; then, we can prove this theorem by estimating and separately.

If hypothesis (H2) holds, then by Lemma 3.2 in [22], for all ,

From condition (19), we have that . At the same time, . Since and are uniformly bounded, we can infer that

Thus, we can obtain . Because , we can get the inequality

This completes the proof. □

Lemma 1.

Assume that hypothesis (H2) holds, and is the same parameter as in Theorem 1. If parameters n and γ are chosen to satisfy

then we can conclude that is invertible. In addition,

Proof.

According to a known result in [29], we conclude that is invertible and

Next, we give two lemmas. The proofs are similar to those in [22], so they are omitted here.

Lemma 2.

Lemma 3.

Suppose that hypothesis (H2) holds, and inequality (24) is satisfied. Then, for and ,

For the remainder of this section, we estimate the error bound for .

Theorem 2.

Proof.

From the triangle inequality, we have

It is apparent that the estimate in this theorem follows directly from the above bound, inequality (18), and Lemmas 2 and 3. □

4. Multilevel Iteration Method

In practice, Equation (16) is solved while the regularization parameter is being chosen: when the parameter selection is finished, the equation has been solved. The two processes thus form a whole and run simultaneously, but for clarity we describe them separately. In this section, we present the multilevel iteration method (MIM) for a fixed regularization parameter, which we assume has already been well selected. In the next section, we present the regularization parameter choice rule.

We first describe the multilevel iteration method, then analyze its computational complexity, and finally prove an error estimate.

4.1. Multilevel Iteration Method

After obtaining the matrices , and , we solve Equation (15). Inverting this equation directly would take considerable time, so we use the MIM instead to obtain a fast algorithm.

First, we introduce the MIM to the coupled system (16). We now assume that the fixed parameter is already selected according to the rule in Section 5.

Using the multiscale decomposition of the solution space, for and , we can write the solutions and of system (16) as two block vectors

where , , and , , for . The operator also has the following matrix form

The MIM for solving the coupled system (16) can be represented as Algorithm 1. Let

We split the operator into two parts, , which correspond to the lower and higher frequencies, respectively. Similarly, we obtain and by splitting analogously. Accordingly, the coupled system (16) can be written as

Algorithm 1: Multilevel Iteration Method (MIM).
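The steps of Algorithm 1 are not reproduced here. The following sketch shows a two-level version of the iteration idea described above under several simplifying assumptions: a single operator equation (αI + K)x = b instead of the coupled system (16), an orthonormal Haar basis ordered from coarse to fine, and K^L and K^H taken as the first n_coarse rows and the remaining rows of K, respectively. Each sweep solves (αI + K^L)x^ℓ = b − K^H x^{ℓ−1}. Because αI + K^L is block upper triangular, only the small coarse block has to be inverted, and only once. All names and parameter values are hypothetical.

```python
import numpy as np


def haar_matrix(n):
    """Orthonormal Haar basis as rows, ordered coarse to fine; n must be a power of 2."""
    H = np.array([[1.0]])
    while H.shape[0] < n:
        m = H.shape[0]
        coarse = np.kron(H, [1.0, 1.0])
        detail = np.kron(np.eye(m), [1.0, -1.0])
        H = np.vstack([coarse, detail]) / np.sqrt(2.0)
    return H


def gaussian_kernel_matrix(n, sigma):
    t = (np.arange(n) + 0.5) / n
    d = t[:, None] - t[None, :]
    return np.exp(-d**2 / (2.0 * sigma**2)) / (np.sqrt(2.0 * np.pi) * sigma * n)


def mim_solve(K, b, alpha, n_coarse, tol=1e-10, max_iter=200):
    """Two-level iteration for (alpha I + K) x = b, K given in a coarse-to-fine basis."""
    n = b.size
    K12 = K[:n_coarse, n_coarse:]
    K_high = K[n_coarse:, :]
    # Only this small block is inverted, and only once for all sweeps.
    coarse_inv = np.linalg.inv(alpha * np.eye(n_coarse) + K[:n_coarse, :n_coarse])
    x = np.zeros(n)
    x[:n_coarse] = coarse_inv @ b[:n_coarse]          # start from the coarse solve
    for it in range(1, max_iter + 1):
        x_new = np.empty(n)
        # Fine rows: the diagonal block of alpha I + K^L there is alpha I.
        x_new[n_coarse:] = (b[n_coarse:] - K_high @ x) / alpha
        # Coarse rows: back-substitute the updated fine components.
        x_new[:n_coarse] = coarse_inv @ (b[:n_coarse] - K12 @ x_new[n_coarse:])
        if np.linalg.norm(x_new - x) <= tol * np.linalg.norm(x_new):
            return x_new, it
        x = x_new
    return x, max_iter


n, n_coarse, sigma, alpha = 128, 32, 0.1, 0.5
H = haar_matrix(n)
K = H @ gaussian_kernel_matrix(n, sigma) @ H.T        # operator in the multiscale basis
t = (np.arange(n) + 0.5) / n
b = H @ np.sin(2.0 * np.pi * t)                        # right-hand side, same basis
x_mim, iters = mim_solve(K, b, alpha, n_coarse)
x_direct = np.linalg.solve(alpha * np.eye(n) + K, b)
print(iters, np.linalg.norm(x_mim - x_direct) / np.linalg.norm(x_direct))
```

The relative-increment test in the loop is only a placeholder stopping rule; the criterion analyzed in this section is tied to the convergence rate of the method.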

4.2. Computation Complexity

We now turn to the computational complexity of this algorithm; specifically, we estimate the number of multiplications it uses. To this end, we write the iterative equation of Algorithm 1 in matrix form. First, we introduce the block matrix and define . Moreover, for a fixed , we define the blocks . Additionally, for , we define , , and . From these definitions, we write . Matrix is partitioned in the same way, which we omit here.

Then, the matrix representations of the operators and are

We also write down the matrix representations of the operators and at the ℓth iteration as and . Furthermore, we define . Using this block form of the matrix, the solutions of the iterative equation in Algorithm 1 become

For ,

For a matrix A, we denote by the number of nonzero entries of A. Let

For , obtaining and from Equation (31) requires multiplications together with an inverse operation. Since the inverse only needs to be computed once for all iterations, we assume that it requires multiplications. Hence, the number of multiplications for computing and from and is

In addition, the first step of Algorithm 1 needs only the same inverse operation to obtain and . We summarize the above discussion in the following proposition.

Proposition 2.

The total number of multiplications required to obtain and is given by

Note that when we solve the coupled system (16) directly, we need to compute the inverse operation . However, when we use the MIM, we only need to compute the inverse operation . This is the key factor that leads to a fast algorithm.

In the next subsection, we estimate the error .

4.3. Error Estimation

From the triangle inequality

we only need to estimate . Let

Then, .

Lemma 4.

Suppose that hypotheses (H1) and (H2) and condition (24) hold. Then, , as , for . Moreover, for , and ,

Proof.

If these two hypotheses are true, then there exists a constant such that

and the same holds for . We rewrite as

From the definition of , (17), and Theorem 1, for any , we have

Corollary 9.9 on page 337 of [10] confirms that the condition numbers cond() and cond() have the same order; in other words, the MIM does not degrade the good conditioning of the original multiscale method. However, selecting an appropriate k is important, because k affects the convergence of the iteration. It follows from the iterative equation in Algorithm 1 that

To ensure that the iteration converges, we choose k such that

and

Theorem 3.

Proof.

We prove this result by induction on ℓ.

First, we prove that Equation (43) holds when . Clearly, . Therefore, by Lemmas 2 and 3, there exists a constant c such that

and then we obtain Equation (43) when .

Meanwhile, from Equation (29), we obtain

On the basis of Lemma 4, for and ,

Next, we estimate , , and . From hypothesis (H2), we have

and

Then, we get

We assume that Equation (43) holds for , and prove that it also holds for .

Theorem 4.

If hypotheses (H1) and (H2) and assumption (19) hold, the parameters n and γ are chosen to satisfy condition (24), and the integer k is chosen to satisfy Equations (40) and (41), then for , , and ,

Moreover, if parameters k and m are chosen on the basis of , and the number of iterations ℓ satisfies

then

Proof.

From the triangle inequality, we have

Note that

and decreases exponentially. Therefore, the stopping criterion is reached after a finite number of steps. The next remark shows that the stopping criterion of Algorithm 1 preserves the convergence rate in Equation (55).

Remark 2.

Proof.

From the triangle inequality, we get

On the one hand, following from the iterative equation in Algorithm 1, we can write as

On the other hand,

Combining Theorem 2, the above inequality, and , we obtain the final result:

□
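Conditions (40) and (41) on k are not reproduced here. As a rough numerical proxy, one can compute the spectral radius of the error-propagation matrix (αI + K^L)^{-1} K^H of a two-level iteration for several coarse dimensions and take the smallest one whose contraction factor is below a target. In the sketch below, the coarse dimension n_coarse = 2^k plays the role of the level k; the Haar basis, the test operator, the target 0.5, and the selection rule itself are assumptions, not the criterion of the paper.

```python
import numpy as np


def haar_matrix(n):
    """Orthonormal Haar basis as rows, ordered coarse to fine; n must be a power of 2."""
    H = np.array([[1.0]])
    while H.shape[0] < n:
        m = H.shape[0]
        coarse = np.kron(H, [1.0, 1.0])
        detail = np.kron(np.eye(m), [1.0, -1.0])
        H = np.vstack([coarse, detail]) / np.sqrt(2.0)
    return H


def gaussian_kernel_matrix(n, sigma):
    t = (np.arange(n) + 0.5) / n
    d = t[:, None] - t[None, :]
    return np.exp(-d**2 / (2.0 * sigma**2)) / (np.sqrt(2.0 * np.pi) * sigma * n)


def mim_contraction(K, alpha, n_coarse):
    """Spectral radius of the error-propagation matrix (alpha I + K^L)^{-1} K^H."""
    n = K.shape[0]
    K_low = K.copy()
    K_low[n_coarse:, :] = 0.0                         # keep only coarse-level rows
    K_high = K - K_low
    M = np.linalg.solve(alpha * np.eye(n) + K_low, K_high)
    return float(np.max(np.abs(np.linalg.eigvals(M))))


def choose_coarse_dimension(K, alpha, candidates, target=0.5):
    """Hypothetical rule: smallest coarse dimension whose contraction factor < target."""
    for n_coarse in candidates:
        rho = mim_contraction(K, alpha, n_coarse)
        if rho < target:
            return n_coarse, rho
    return None


n, sigma, alpha = 128, 0.1, 0.5
H = haar_matrix(n)
K = H @ gaussian_kernel_matrix(n, sigma) @ H.T
for n_coarse in (4, 8, 16, 32, 64):
    print(n_coarse, mim_contraction(K, alpha, n_coarse))
print(choose_coarse_dimension(K, alpha, (4, 8, 16, 32, 64)))
```

A larger coarse level makes each sweep contract faster but enlarges the block that must be inverted, which is the trade-off behind the choice of k.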

5. Regularization Parameter Choice Strategies

Choosing an appropriate regularization parameter is an important step in solving ill-posed integral equations. We present an a posteriori parameter choice strategy [14] for our proposed method.

For any given , we choose the parameters according to Theorem 4. Following from [14], we assume that there exist two increasing continuous functions,

with , such that we can write Equation (53) as

Then, would be the best choice. For constants and , we let the positive integer N be determined by

and then define the set , and define . Clearly, could serve as an approximation of the regularization parameter, but the function involves the unknown smoothness order of the integral operator. Therefore, it cannot be used directly and needs a slight modification. We next present a rule for choosing the regularization parameter .

Rule 1.

As suggested in [14], we choose the parameter as an approximation of , where

This is an a posteriori parameter choice strategy, which does not involve the smoothness order . We next present a crucial lemma, which can be found in Theorem 2.1 of [14].

Lemma 5.

Suppose that assumption (64) holds, and and are the integers corresponding to . If for , we have , , and , for , then we can get the result

Proof.

This lemma can be obtained from [7] with a slight modification. Thus, we omit the details of the proof. □

Then, we estimate the convergence order when we choose the parameter .

Theorem 5.

Suppose that hypotheses (H1) and (H2) and assumption (19) hold, and that the regularization parameter is chosen according to Rule 1. Then, the convergence order of the approximate solution is

Proof.

Substituting Equation (63) into Equation (66) yields Equation (67), so it suffices to verify the hypotheses of Lemma 5. From the definition of , we have . On the one hand, , and , so . On the other hand, and , so . In summary, and . Therefore, , and we also have . Thus, the conditions of Lemma 5 are met, and there exists a constant such that

The proof has been completed. □
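Rule 1 and the functions it relies on are not fully reproduced here. The sketch below shows a generic balancing (Lepskii-type) principle of the kind studied in [14], applied to a small discrete Tikhonov problem. It assumes a geometric parameter grid, the noise-propagation bound ψ(α) = δ/(2√α), which holds for discrete Tikhonov regularization because s/(α + s²) ≤ 1/(2√α) for every singular value s, and the constant κ = 4. These choices, the test problem, and the function names are assumptions rather than the paper's Rule 1. Like Rule 1, the selection uses only δ and computed solutions, never the smoothness order ν.

```python
import numpy as np


def gaussian_kernel_matrix(n, sigma):
    t = (np.arange(n) + 0.5) / n
    d = t[:, None] - t[None, :]
    return np.exp(-d**2 / (2.0 * sigma**2)) / (np.sqrt(2.0 * np.pi) * sigma * n)


def tikhonov(K, y, alpha):
    n = K.shape[1]
    return np.linalg.solve(alpha * np.eye(n) + K.T @ K, K.T @ y)


def balancing_principle(K, y_delta, delta, alphas, kappa=4.0):
    """Largest alpha_i on an increasing grid with ||x_i - x_j|| <= kappa * psi(alpha_j)
    for every j < i, where psi(alpha) = delta / (2 sqrt(alpha)) (assumed bound)."""
    sols = [tikhonov(K, y_delta, a) for a in alphas]
    psi = delta / (2.0 * np.sqrt(alphas))
    best = 0
    for i in range(1, len(alphas)):
        if all(np.linalg.norm(sols[i] - sols[j]) <= kappa * psi[j] for j in range(i)):
            best = i
    return best, alphas[best], sols[best]


n, sigma, delta = 64, 0.05, 1e-3
K = gaussian_kernel_matrix(n, sigma)
t = (np.arange(n) + 0.5) / n
x_true = np.sin(np.pi * t)
noise = np.random.default_rng(2).standard_normal(n)
y_delta = K @ x_true + delta * noise / np.linalg.norm(noise)   # ||y_delta - y|| = delta
alphas = 1e-8 * 2.0 ** np.arange(31)                           # geometric grid, ratio 2
i_star, alpha_star, x_star = balancing_principle(K, y_delta, delta, alphas)
print(i_star, alpha_star, np.linalg.norm(x_star - x_true))
```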

6. Numerical Experiments

In this section, we present three numerical examples of restoring out-of-focus images to verify the performance of the fully discrete multiscale collocation method and the multilevel iteration method. We used MATLAB for all simulations, and all examples were run on a computer with a 3.00 GHz CPU and 8 GB of memory.

To use the integral equation model, we must first transform the discrete matrix (the observed image) into a continuous function; we directly use the method in [1]. Assume that the size of the image is , and the pixels of the image lie on the grid . The function representing the image is

where is the original pixel value. Assume that s is a positive integer. Then, for ,

Then Equation (5) becomes

The noise level is defined as . Note that we employ the piecewise linear functions in [26] for simplicity in the following examples.

Example 1.

In our first experiment, we verified the effectiveness of the integral approximation strategy for the coupled integral equation. We set as the initial level and report in Table 1 the computing time, in seconds, needed to generate the coefficient matrix of the coupled operator Equation (9) for n ranging from to 10. For comparison, we repeated the experiment with the numerical quadrature scheme in [1]. In this example, both integration methods were set to the same accuracy, . For different values of n, denotes the computing time of the numerical quadrature scheme in [1], and denotes that of the proposed integral approximation strategy. As Table 1 shows, the proposed integral approximation strategy needs less time to generate the coefficient matrix , which confirms that it is an efficient fast algorithm.

Table 1.

Computing time for generating matrices .

Example 2.

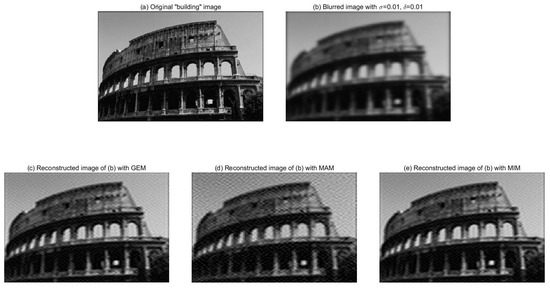

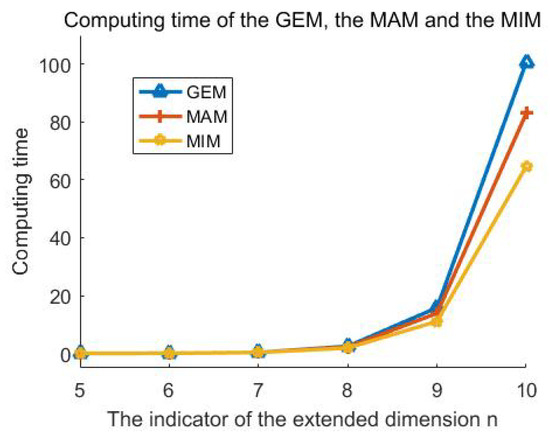

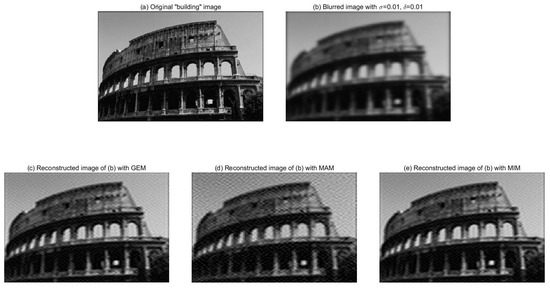

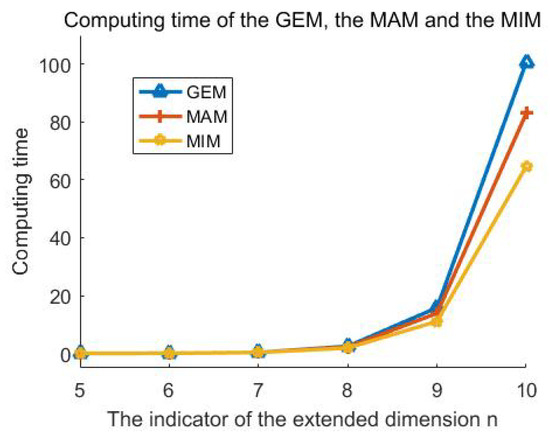

The second simulation verifies the efficiency of the proposed multilevel iteration method. Figure 3a shows the original clear 'building' image of size , and Figure 3b shows the blurred image, obtained with in the blurring kernel and noise level , which we recover using the MIM. For comparison, we also conducted experiments using the Gaussian elimination method (GEM) in [28] and the multilevel augmentation method (MAM) in [10]. , and denote the computing times of the GEM, the MAM, and the MIM, respectively. The value n listed in Table 2 ranges from 5 to 10, and in this case, the continuous intensity function (69) is needed. As shown in Section 2, two one-dimensional integral equations are solved to recover the blurred image; therefore, the computing time here is the total time needed to solve the coupled Equation (8) twice. In our MIM experiments, all runs stopped at the second iteration, which is very fast because of the good choice of the initial values and given in Step 1 of Algorithm 1. Figure 3c–e show the images reconstructed from (b) using the GEM, the MAM, and the MIM. Table 2 and Figure 4 report the computing times of the three methods. Overall, the MIM has the shortest computing time of the three methods, and the difference is most pronounced when the level n is large. These results show that the proposed multilevel iteration method requires fewer computational resources.

Figure 3.

(a) The original clear 'building' image. (b) Blurred image with and . (c–e) Reconstructed images of (b) with the GEM, the MAM, and the MIM.

Table 2.

The computing time of the GEM, the MAM and the MIM.

Figure 4.

The computing time of the GEM, the MAM and the MIM.

Example 3.

In this example, we show that the restoration quality of the MIM is as good as that of the alternative method. As shown in Figure 5b–d, we consider the restoration of the 'Lena' image of size . We use the image with in the blurring kernel and different noise levels δ; when , the image is noise free. We use the peak signal-to-noise ratio (PSNR) to evaluate the blurred and restored images. For comparison, we solved the corresponding integral equation with the proposed multilevel iteration method and with the Gaussian elimination method, using piecewise linear polynomial basis functions at . Tables 3 and 4 list the obtained from the parameter choice using Rule 1, the PSNR value of the blurred image (PB), the PSNR value of the reconstructed image (PR), and the time needed to solve the equation with the GEM and the MIM, respectively, for noise levels δ ranging from 0 to 0.15. Figure 5e–j show the images reconstructed by the two methods for noise levels of 0, 0.03, and 0.1. Comparing Tables 3 and 4, the PSNR values of the images reconstructed by the GEM and the MIM are almost identical, and the MIM performs slightly better in most cases.

Figure 5.

(a) Original clear ‘Lena’ image. (b–d) Blurred images with in the kernel and noise levels δ = 0, 0.03 and 0.1, respectively. (e–g) Reconstructed images of (b–d), respectively, using the GEM. (h–j) Reconstructed images of (b–d), respectively, using the MIM.

Table 3.

Performance of the Gaussian elimination method.

Table 4.

Performance of the multilevel iteration method.

Taken together, Examples 2 and 3 show that the MIM requires less computing time than the alternative methods while achieving comparable restoration quality. We therefore conclude that the multilevel iteration method is an effective fast algorithm for solving the integral equation arising in image restoration.

7. Conclusions

In this paper, we formulate the problem of image restoration as an integral equation. To solve this integral equation efficiently, we propose two fast algorithms. The first is the fully discrete multiscale collocation method, which converts the computation of the integrals into matrix operations. The second is the multilevel iteration method, which guarantees that the approximate solution attains an optimal convergence order. All numerical examples show that the proposed methods are accurate and efficient compared with alternative strategies. In future work, we will continue to seek faster and more efficient methods.
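As a generic illustration of how an integral operator becomes a matrix operation, the sketch below discretizes (Kx)(s) = ∫₀¹ k(s, t) x(t) dt with the composite trapezoidal rule on a uniform grid. Applying K then reduces to multiplying a fixed, precomputed matrix by the vector of samples x(t_j). This trapezoidal discretization is an assumed stand-in, not the paper's integral approximation strategy, which is built on the multiscale collocation framework. The names `trapezoid_matrix` and `apply_operator` are hypothetical.

```python
from typing import Callable, List

Matrix = List[List[float]]


def trapezoid_matrix(k: Callable[[float, float], float], m: int) -> Matrix:
    """Matrix K_h with (K_h x_h)_i ~= integral_0^1 k(s_i, t) x(t) dt.

    Uses m + 1 uniform nodes s_i = t_j = j / m and composite trapezoidal
    weights w_0 = w_m = h / 2 and w_j = h otherwise, where h = 1 / m.
    Entry (i, j) is w_j * k(s_i, t_j).
    """
    h = 1.0 / m
    nodes = [j * h for j in range(m + 1)]
    weights = [h / 2 if j in (0, m) else h for j in range(m + 1)]
    return [[w * k(s, t) for t, w in zip(nodes, weights)] for s in nodes]


def apply_operator(K: Matrix, x: List[float]) -> List[float]:
    """Matrix-vector product that replaces repeated numerical integration."""
    return [sum(kij * xj for kij, xj in zip(row, x)) for row in K]


# Example: k(s, t) = s * t and x(t) = 1 give (Kx)(s) = s / 2; the
# trapezoidal rule is exact here because the integrand is linear in t.
m = 8
K = trapezoid_matrix(lambda s, t: s * t, m)
samples = [1.0] * (m + 1)
print(apply_operator(K, samples))
```

The matrix is assembled once, and every later application of the operator costs one matrix-vector product. This is the kind of saving the fully discrete method targets, though with a far more accurate, multiscale-adapted approximation.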

Author Contributions

B.Z. completed the analysis and designed the paper; H.Y. managed the project and reviewed the paper. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported in part by NSFC under grant 11571386.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Lu, Y.; Shen, L.; Xu, Y. Integral equation models for image restoration: High accuracy methods and fast algorithms. Inverse Probl. 2010, 26, 045006.

- Chan, R.H.; Chan, T.F.; Shen, L.; Shen, Z. Wavelet algorithms for high-resolution image reconstruction. SIAM J. Sci. Comput. 2003, 24, 1408–1432.

- Jiang, Y.; Li, S.; Xu, Y. A Higher-Order Polynomial Method for SPECT Reconstruction. IEEE Trans. Med. Imaging 2019, 38, 1271–1283.

- Liu, Y.; Shen, L.; Xu, Y.; Yang, H. A collocation method solving integral equation models for image restoration. J. Integral Eq. Appl. 2016, 28, 263–307.

- Groetsch, C.W. The Theory of Tikhonov Regularization for Fredholm Equations of the First Kind; Research Notes in Mathematics; Pitman (Advanced Publishing Program): Boston, MA, USA, 1984.

- Engl, H.W.; Hanke, M.; Neubauer, A. Regularization of Inverse Problems; Mathematics and Its Applications; Kluwer Academic Publishers Group: Dordrecht, The Netherlands, 1996.

- Chen, Z.; Ding, S.; Xu, Y.; Yang, H. Multiscale collocation methods for ill-posed integral equations via a coupled system. Inverse Probl. 2012, 28, 025006.

- Russell, R.D.; Shampine, L.F. A collocation method for boundary value problems. Numer. Math. 1972, 19, 1–28.

- Krasnosel’skii, M.A.; Vainikko, G.M.; Zabreiko, P.P.; Rutitskii, Y.B.; Stetsenko, V.Y. Approximate Solution of Operator Equations; Wolters-Noordhoff Pub: Groningen, The Netherlands, 1972.

- Chen, Z.; Micchelli, C.A.; Xu, Y. Multiscale Methods for Fredholm Integral Equations; Cambridge Monographs on Applied and Computational Mathematics; Cambridge University Press: Cambridge, UK, 2015.

- Micchelli, C.A.; Xu, Y.; Zhao, Y. Wavelet Galerkin methods for second-kind integral equations. J. Comput. Appl. Math. 1997, 86, 251–270.

- Groetsch, C.W. Convergence analysis of a regularized degenerate kernel method for Fredholm integral equations of the first kind. Integral Eq. Oper. Theory 1990, 13, 67–75.

- Hämarik, U.; Raus, T. About the balancing principle for choice of the regularization parameter. Numer. Funct. Anal. Optim. 2009, 30, 951–970.

- Pereverzev, S.; Schock, E. On the adaptive selection of the parameter in regularization of ill-posed problems. SIAM J. Numer. Anal. 2005, 43, 2060–2076.

- Bauer, F.; Kindermann, S. The quasi-optimality criterion for classical inverse problems. Inverse Probl. 2008, 24, 035002.

- Jin, Q.; Wang, W. Analysis of the iteratively regularized Gauss-Newton method under a heuristic rule. Inverse Probl. 2018, 34, 035001.

- Rajan, M.P. Convergence analysis of a regularized approximation for solving Fredholm integral equations of the first kind. J. Math. Anal. Appl. 2003, 279, 522–530.

- Ma, Y.; Xu, Y. Computing integrals involved the Gaussian function with a small standard deviation. J. Sci. Comput. 2019, 78, 1744–1767.

- Luo, X.; Fan, L.; Wu, Y.; Li, F. Fast multi-level iteration methods with compression technique for solving ill-posed integral equations. J. Comput. Appl. Math. 2014, 256, 131–151.

- Gonzalez, R.C.; Wintz, P. Digital Image Processing; Addison-Wesley Publishing Co.: Reading, MA, USA; London, UK; Amsterdam, The Netherlands, 1977.

- Chen, Z.; Micchelli, C.A.; Xu, Y. A construction of interpolating wavelets on invariant sets. Math. Comp. 1999, 68, 1569–1587.

- Chen, Z.; Xu, Y.; Yang, H. Fast collocation methods for solving ill-posed integral equations of the first kind. Inverse Probl. 2008, 24, 065007.

- Chen, Z.; Xu, Y.; Yang, H. A multilevel augmentation method for solving ill-posed operator equations. Inverse Probl. 2006, 22, 155–174.

- Chen, Z.; Micchelli, C.A.; Xu, Y. Fast collocation methods for second kind integral equations. SIAM J. Numer. Anal. 2002, 40, 344–375.

- Micchelli, C.A.; Xu, Y. Reconstruction and decomposition algorithms for biorthogonal multiwavelets. Multidimens. Syst. Signal Process. 1997, 8, 31–69.

- Fang, W.; Lu, M. A fast collocation method for an inverse boundary value problem. Int. J. Numer. Methods Eng. 2004, 59, 1563–1585.

- Groetsch, C.W. Uniform convergence of regularization methods for Fredholm equations of the first kind. J. Aust. Math. Soc. Ser. A 1985, 39, 282–286.

- Kincaid, D.; Cheney, W. Numerical Analysis: Mathematics of Scientific Computing; Brooks/Cole Publishing Co.: Pacific Grove, CA, USA, 1991.

- Taylor, A.E.; Lay, D.C. Introduction to Functional Analysis, 2nd ed.; Robert, E., Ed.; Krieger Publishing Co., Inc.: Melbourne, FL, USA, 1986.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).