Abstract

We introduce a new projection and contraction method with inertial and self-adaptive techniques for solving variational inequalities and split common fixed point problems in real Hilbert spaces. The stepsize of the algorithm is selected via a self-adaptive method and does not require a prior estimate of the norm of the bounded linear operator. Moreover, the cost operator of the variational inequality need not satisfy a Lipschitz condition. We prove a strong convergence result under some mild conditions and provide an application of our result to split common null point problems. Some numerical experiments are reported to illustrate the performance of the algorithm and to compare it with some existing methods.

1. Introduction

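Let H be a real Hilbert space endowed with norm $\|\cdot\|$ and inner product $\langle \cdot, \cdot \rangle$. Let $C$ be a nonempty, closed and convex subset of H and $A: H \to H$ be an operator. We study the Variational Inequality Problem (VIP for short): find $x^* \in C$ such that

$$\langle Ax^*, y - x^* \rangle \ge 0 \quad \text{for all } y \in C. \tag{1}$$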

The solution set of (1) is denoted by $VI(C, A)$. The VIP is a powerful tool for studying many nonlinear problems arising in mechanics, optimization, control networks, equilibrium problems, and so forth; see References [1,2,3]. Owing to this importance, the problem has drawn the attention of many researchers, who have studied the existence of its solutions and proposed various iterative methods, such as the extragradient method [4,5,6,7,8,9], subgradient extragradient method [10,11,12,13,14], projection and contraction method [15,16], Tseng’s extragradient method [17,18] and Bregman projection method [19,20], for approximating its solutions in various dimensions.

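The operator $A$ is said to be

- (1) $\alpha$-strongly monotone on $C$ if there exists $\alpha > 0$ such that $\langle Ax - Ay, x - y \rangle \ge \alpha \|x - y\|^2$ for all $x, y \in C$;

- (2) monotone on $C$ if $\langle Ax - Ay, x - y \rangle \ge 0$ for all $x, y \in C$;

- (3) $\beta$-strongly pseudo-monotone on $C$ if there exists $\beta > 0$ such that $\langle Ax, y - x \rangle \ge 0 \Rightarrow \langle Ay, y - x \rangle \ge \beta \|x - y\|^2$ for all $x, y \in C$;

- (4) pseudo-monotone on $C$ if for all $x, y \in C$, $\langle Ax, y - x \rangle \ge 0 \Rightarrow \langle Ay, y - x \rangle \ge 0$;

- (5) $L$-Lipschitz continuous on $C$ if there exists a constant $L > 0$ such that $\|Ax - Ay\| \le L \|x - y\|$ for all $x, y \in C$; when $L \in (0,1)$, $A$ is called a contraction;

- (6) weakly sequentially continuous if for any sequence $\{x_n\} \subset C$, $x_n \rightharpoonup x$ implies $Ax_n \rightharpoonup Ax$.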

It is easy to see that (1) ⇒ (2) ⇒ (4) and (1) ⇒ (3) ⇒ (4), but the converse implications do not hold in general; see, for instance, References [16,19].
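To illustrate that the converse implications can fail, the following minimal sketch checks a hypothetical scalar operator, $A(x) = 1/(1+x^2)$ on the real line, which is our own choice and not taken from the references. It is pseudo-monotone (because it is positive everywhere) but not monotone (because it decreases for $x > 0$). The function names are illustrative only.

```python
import random

def A(x):
    # Hypothetical scalar operator: positive everywhere, decreasing for x > 0.
    return 1.0 / (1.0 + x * x)

def is_monotone_pair(x, y):
    # Monotonicity at the pair (x, y): <Ax - Ay, x - y> >= 0.
    return (A(x) - A(y)) * (x - y) >= 0.0

def is_pseudomonotone_pair(x, y):
    # Pseudo-monotonicity: <Ax, y - x> >= 0 implies <Ay, y - x> >= 0.
    if A(x) * (y - x) >= 0.0:
        return A(y) * (y - x) >= 0.0
    return True  # premise is false, so the implication holds vacuously

rng = random.Random(0)
pairs = [(rng.uniform(-5, 5), rng.uniform(-5, 5)) for _ in range(1000)]
all_pseudo = all(is_pseudomonotone_pair(x, y) for x, y in pairs)
all_mono = all(is_monotone_pair(x, y) for x, y in pairs)
print("pseudo-monotone on all sampled pairs:", all_pseudo)
print("monotone on all sampled pairs:", all_mono)
```

A random sample can only refute a property, not prove it; here pseudo-monotonicity follows directly from $A(x) > 0$, while the pair $(1, 2)$ already violates monotonicity.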

For solving the VIP (1) in finite dimensional spaces, Korpelevich [21] introduced the Extragradient Method (EM) as follows:

where $P_C$ is the metric projection from H onto $C$ and $A$ is a monotone and L-Lipschitz operator. See, for example, References [4,5,22,23] for some extensions of the EM to infinite dimensional Hilbert spaces. A major drawback of the EM is that one needs to calculate two projections onto the closed convex set $C$ in each iteration, which can be very complicated if $C$ does not have a simple structure. Censor et al. [10,11] introduced an improved method called the Subgradient Extragradient Method (SEM) by replacing the second projection in the EM with a projection onto a half-space as follows:

where The authors proved that the sequence generated by (3) converges weakly to a solution of the VIP. Furthermore, He [24] introduced a Projection and Contraction Method (PCM) which does not involve a projection onto the half-space as follows:

where and He [24] also proved that the sequence generated by (4) converges weakly to a solution of the VIP. The PCM (4) has been modified by many authors who proved its strong convergence to a solution of the VIP; see, for instance, References [16,18,25,26]. In particular, Cholamjiak et al. [27] introduced the following inertial PCM for solving the VIP with a pseudomonotone operator:

where such that where for some and is chosen such that and

The authors of Reference [27] proved that the sequence generated by Algorithm (5) converges strongly to a solution of the VIP provided the condition is satisfied. Note that the inertial extrapolation term in (5) is regarded as a means of improving the speed of convergence of the algorithm. This technique was first introduced by Polyak [28] as a discretization of a second-order dynamical system in time and has been employed by many researchers; see, for instance, References [16,17,25,29,30,31,32,33,34].
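For orientation, the two classical schemes recalled above can be sketched as follows. This is a minimal illustration in their usual textbook form (constant stepsize $\lambda < 1/L$, relaxation $\gamma \in (0,2)$) and not the algorithm proposed in this paper. The test operator (a rotation by 90 degrees, which is monotone and 1-Lipschitz), the feasible set (a closed ball) and all function names are assumptions made for the example.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    return math.sqrt(dot(u, u))

def axpy(u, t, v):
    """Return u + t * v componentwise."""
    return [a + t * b for a, b in zip(u, v)]

def project_ball(x, radius=2.0):
    """Metric projection onto the closed ball of the given radius centred at 0."""
    n = norm(x)
    return list(x) if n <= radius else [radius * a / n for a in x]

def A(x):
    # Hypothetical test operator: skew-symmetric rotation, monotone, 1-Lipschitz.
    # The unique solution of the corresponding VIP on the ball is the origin.
    return [x[1], -x[0]]

def extragradient(x, lam=0.5, iters=500):
    for _ in range(iters):
        y = project_ball(axpy(x, -lam, A(x)))    # first projection
        x = project_ball(axpy(x, -lam, A(y)))    # second projection
    return x

def projection_contraction(x, lam=0.5, gamma=1.5, iters=500):
    for _ in range(iters):
        Ax = A(x)
        y = project_ball(axpy(x, -lam, Ax))      # the only projection per step
        r = axpy(x, -1.0, y)                     # r = x - y
        if norm(r) == 0.0:                       # x already solves the VIP
            break
        d = axpy(r, -lam, axpy(Ax, -1.0, A(y)))  # d = (x - y) - lam * (Ax - Ay)
        eta = dot(r, d) / dot(d, d)              # contraction stepsize
        x = axpy(x, -gamma * eta, d)
    return x

print(norm(extragradient([1.0, 1.0])))
print(norm(projection_contraction([1.0, 1.0])))
```

The sketch makes the cost difference visible: the extragradient loop calls the projection twice per iteration, while the projection and contraction loop calls it once and replaces the second projection by an explicit correction step.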

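The viscosity approximation method was introduced by Moudafi [35] for finding a fixed point of a nonexpansive mapping T, that is, finding $x \in H$ such that $Tx = x$. We denote the set of fixed points of T by $F(T)$. Let $f: H \to H$ be a contraction; for an arbitrary $x_0 \in H$, let $\{x_n\}$ be generated recursively by

$$x_{n+1} = \alpha_n f(x_n) + (1 - \alpha_n) T x_n, \quad n \ge 0, \tag{7}$$

where $\{\alpha_n\} \subset (0,1)$. Xu [36] later proved that if $\{\alpha_n\}$ satisfies certain conditions, the sequence generated by (7) converges strongly to the unique fixed point $x^*$ of T that solves the variational inequality

$$\langle (I - f)x^*, x - x^* \rangle \ge 0 \quad \text{for all } x \in F(T). \tag{8}$$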
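The selection effect of the viscosity term can be seen in a one-dimensional sketch. We assume the usual form of the iteration, $x_{n+1} = \alpha_n f(x_n) + (1-\alpha_n) T x_n$, with the hypothetical choices $T$ = projection onto $[1,2]$ (so every point of $[1,2]$ is a fixed point), $f(x) = x/2$ and $\alpha_n = 1/(n+2)$; none of these choices comes from the cited works.

```python
def T(x):
    # Nonexpansive map on the real line: projection onto [1, 2], so F(T) = [1, 2].
    return min(max(x, 1.0), 2.0)

def f(x):
    # Contraction with constant 1/2.
    return 0.5 * x

def viscosity(x, iters=5000):
    for n in range(iters):
        alpha = 1.0 / (n + 2)  # alpha_n -> 0 and the series of alpha_n diverges
        x = alpha * f(x) + (1.0 - alpha) * T(x)
    return x

x_final = viscosity(5.0)
print(x_final)
```

Among all fixed points in $[1,2]$, the iterates approach $x^* = 1$, which is the fixed point of $P_{F(T)} \circ f$ and hence the one singled out by the associated variational inequality.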

Moreover, the problem of finding a common solution of VIP and fixed point problem for a nonlinear mapping T, that is,

has become very important in optimization theory due to its possible applications to mathematical models whose constraints can be modeled as both problems. In particular, such models can be found in practical problems such as signal processing, network resource allocation and image recovery; see, for instance, References [37,38,39].

Recently, Thong and Hieu [25] introduced the following modified SEM for solving (9):

and

where is a -demicontractive mapping with and The authors proved that the sequences generated by (10) and (11) converge strongly to a solution of (9) under certain mild conditions. Also, Dong et al. [32] introduced an inertial PCM for solving (9) for a nonexpansive mapping S as follows:

where is a non-decreasing sequence with and are constants such that

- (i) the stepsize depends on a prior estimate of the Lipschitz constant L, which is very difficult to determine in practice. Moreover, in many practical problems, the cost operator may not even satisfy a Lipschitz condition; see, for example, Reference [19];

- (ii) the condition (13) weakens the convergence of the algorithm;

- (iii) the algorithm converges only weakly to a solution of (9).

Motivated by these results, in this paper, we introduce a new inertial projection and contraction method for finding a common solution of VIP and split common fixed point problem, that is,

where are real Hilbert spaces, is nonempty closed convex set, is a bounded linear operator, and are -demicontractive mappings. It should be observed that when and (identity operator on ), then Problem (14) reduces to (9). Thus (14) is more general than (9). Our algorithm is designed such that the stepsize is determined by an Armijo line-search technique and its convergence does not require a prior estimate of the Lipschitz constant. We also employ a generalized viscosity method and prove a strong convergence result for the sequence generated by our algorithm under certain mild conditions. We then provide some numerical examples to illustrate the performance of our algorithm. We highlight some contributions of this paper as follows:

- The authors of References [18,25,26,27,32] introduced some inertial PCMs which require a prior estimate of the Lipschitz constant of the operator A. It is known that finding such an estimate is very difficult, which also slows down the rate of convergence of the algorithm. In this paper, we propose a new inertial PCM which does not require a prior estimate of the Lipschitz constant of A.

- The authors of Reference [16] proposed an effective PCM for solving pseudomonotone VIPs in real Hilbert spaces. When in our Algorithm 1, we obtain the method of Reference [16].

- In Reference [26], the authors proposed a hybrid inertial PCM for solving monotone VIPs in real Hilbert spaces. This method requires computing an extra projection onto the intersection of two closed convex subsets of H, which can be computationally costly. Our algorithm performs only one projection onto C and no extra projection onto any subset of H.

2. Preliminaries

In this section, we give some basic definitions and results which are needed for establishing our results. In the sequel, H is a real Hilbert space and $C$ is a nonempty, closed and convex subset of H. We write $x_n \to x$ to denote that $\{x_n\}$ converges strongly to $x$ and $x_n \rightharpoonup x$ to denote that $\{x_n\}$ converges weakly to $x$.

The metric projection of $x \in H$ onto C is defined as the necessarily unique vector $P_C x \in C$ satisfying $\|x - P_C x\| = \min\{\|x - y\| : y \in C\}$.

It is well known that $P_C$ has the following properties (see, e.g., Reference [40]).

- (i) For each and

- (ii) For any

- (iii) For any and
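The properties of the metric projection that are most often used in this setting are the variational characterization $\langle x - P_C x, y - P_C x \rangle \le 0$, firm nonexpansiveness $\langle P_C x - P_C z, x - z \rangle \ge \|P_C x - P_C z\|^2$, and the inequality $\|y - P_C x\|^2 + \|x - P_C x\|^2 \le \|x - y\|^2$, for $x, z \in H$ and $y \in C$. We assume here that these are the three properties intended in the list above. The sketch below checks them numerically for a hypothetical set $C = [0,1]^3$, for which the projection is a componentwise clipping.

```python
import random

def project_box(x, lo=0.0, hi=1.0):
    """Metric projection onto the box C = [lo, hi]^n (componentwise clipping)."""
    return [min(max(a, lo), hi) for a in x]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def sub(u, v):
    return [a - b for a, b in zip(u, v)]

def sq(u):
    return dot(u, u)

def check_projection_properties(trials=1000, tol=1e-12, seed=1):
    rng = random.Random(seed)
    for _ in range(trials):
        x = [rng.uniform(-3, 3) for _ in range(3)]
        z = [rng.uniform(-3, 3) for _ in range(3)]
        y = [rng.uniform(0, 1) for _ in range(3)]  # an arbitrary point of C
        px, pz = project_box(x), project_box(z)
        # variational characterization: <x - Px, y - Px> <= 0
        if dot(sub(x, px), sub(y, px)) > tol:
            return False
        # firm nonexpansiveness: <Px - Pz, x - z> >= |Px - Pz|^2
        if dot(sub(px, pz), sub(x, z)) < sq(sub(px, pz)) - tol:
            return False
        # |y - Px|^2 + |x - Px|^2 <= |x - y|^2
        if sq(sub(y, px)) + sq(sub(x, px)) > sq(sub(x, y)) + tol:
            return False
    return True

print(check_projection_properties())
```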

For any real Hilbert space it is known that the following identities hold (see, e.g., Reference [41]).

Lemma 1.

For all then

- (i)

- (ii)

- (iii)

The following are the types of nonlinear mappings we consider:

Definition 1

([42]). A mapping is called

- (i) nonexpansive if

- (ii) quasi-nonexpansive mapping if and

- (iii) μ-strictly pseudocontractive if there exists a constant such that

- (iv) ϱ-demicontractive mapping if there exists and such that

It is well known that demicontractive mappings possess the following property.

Lemma 2.

([38], Remark 4.2, p. 1506) Suppose where T is a ϱ-demicontractive self-mapping on Define where Then

- (i) is a quasi-nonexpansive mapping if ;

- (ii) is closed and convex.
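A small one-dimensional sketch may help to fix ideas. We assume the usual form of the definition, $\|Tx - p\|^2 \le \|x - p\|^2 + \varrho \|x - Tx\|^2$ for all $x$ and all fixed points $p$, and the usual relaxed mapping $T_\lambda = (1-\lambda) I + \lambda T$, which is quasi-nonexpansive for $\lambda \in [0, 1-\varrho]$. The mapping $T(x) = -2x$ is a hypothetical example of ours: it is $\tfrac{1}{3}$-demicontractive with the single fixed point $0$, but it is not nonexpansive.

```python
def T(x):
    # Hypothetical example on the real line; its only fixed point is p = 0.
    return -2.0 * x

RHO = 1.0 / 3.0  # demicontractivity constant of this T (computed by hand)

def is_demicontractive_at(x, p=0.0, rho=RHO, tol=1e-9):
    # |Tx - p|^2 <= |x - p|^2 + rho * |x - Tx|^2
    return (T(x) - p) ** 2 <= (x - p) ** 2 + rho * (x - T(x)) ** 2 + tol

def relaxed(x, lam):
    # T_lam = (1 - lam) * I + lam * T
    return (1.0 - lam) * x + lam * T(x)

def is_quasi_nonexpansive_at(x, lam, p=0.0, tol=1e-9):
    # |T_lam x - p| <= |x - p|
    return abs(relaxed(x, lam) - p) <= abs(x - p) + tol

points = [-4.0, -1.5, -0.1, 0.3, 2.0, 7.0]
print(all(is_demicontractive_at(x) for x in points))
print(all(is_quasi_nonexpansive_at(x, 0.5) for x in points))  # 0.5 <= 1 - RHO
print(all(is_quasi_nonexpansive_at(x, 0.9) for x in points))  # 0.9 >  1 - RHO
```

With $\lambda = 0.5$ the relaxed mapping is $x \mapsto -x/2$, while with $\lambda = 0.9$ it is $x \mapsto -1.7x$, which moves points away from the fixed point; this matches the threshold $1 - \varrho = 2/3$.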

Lemma 3

([7]). Let Ω be a nonempty closed and convex subset of a real Hilbert space H. For any and we denote

then

Lemma 4

([6]). Given and Then we obtain

and

Lemma 5.

([43], Lemma 2.1) Consider the VIP (1) with Ω being a nonempty closed convex subset of H and is pseudomonotone and continuous. Then if and only if

Lemma 6

([44]). Let be a nonexpansive mapping and where F is k-Lipschitz, η-strongly monotone and Then T is a contraction map if that is,

where

Lemma 7.

([45], Lemma 3.1) Let and be sequences of nonnegative real numbers such that

where is a sequence in and is a real sequence. Assume that . Then, the following results hold:

- (i) If for some , then is a bounded sequence.

- (ii) If and , then .

Lemma 8.

([42], Lemma 3.1) Given a sequence of real numbers such that there exists a subsequence of with for all . Let be integers defined by

Then is a non-decreasing sequence verifying and for all the following estimates hold:
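The index construction in this lemma can be made concrete. We assume the usual formulation, in which $\tau(n) = \max\{k \le n : a_k < a_{k+1}\}$ and the two estimates are $a_{\tau(n)} \le a_{\tau(n)+1}$ and $a_n \le a_{\tau(n)+1}$; the symbols $a_n$ and $\tau$ and the test sequence below are our own choices for illustration.

```python
def tau(a, n):
    """Largest index k <= n with a[k] < a[k + 1]; None if no such k exists."""
    for k in range(n, -1, -1):
        if a[k] < a[k + 1]:
            return k
    return None

# A non-monotone sequence tending to zero: it increases at every odd index.
a = [(2 + (-1) ** n) / (n + 1) for n in range(50)]

indices = range(1, 49)
taus = [tau(a, n) for n in indices]

nondecreasing = all(s <= t for s, t in zip(taus, taus[1:]))
first_estimate = all(a[t] <= a[t + 1] for t in taus)
second_estimate = all(a[n] <= a[t + 1] for n, t in zip(indices, taus))
print(nondecreasing, first_estimate, second_estimate)
```

This is the device that allows the proof of a strong convergence theorem to handle the case in which the distance to the solution is not eventually monotone.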

3. Results

In this section, we propose a new inertial projection and contraction method for solving pseudomonotone variational inequality and split common fixed point problems.

Let be real Hilbert spaces, be a nonempty closed convex subset of be a bounded linear operator, be a pseudomonotone operator which is weakly sequentially continuous in and be demicontractive mappings with respectively. Let be a contraction mapping with constant and be a Lipschitz and strongly monotone operator with coefficients and respectively such that for and Suppose the solution set

Let be sequences in and such that

- (C1) and ;

- (C2)

- (C3)

- (C4) that is,

We now present our algorithm as follows:

Remark 1.

Note that we are at a solution of Problem (14) if In our convergence analysis, we implicitly assume that this does not occur after finitely many iterations, so that our algorithm produces infinite sequences. Moreover, we show in the next result that the stepsize defined by (22) is well-defined.
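To indicate how a line search of this kind operates, the following sketch implements a common Armijo-type backtracking rule: starting from a trial stepsize $\gamma$, the stepsize $\lambda = \gamma l^{m}$ is reduced until $\lambda \|A w - A y\| \le \mu \|w - y\|$, where $y = P_C(w - \lambda A w)$. This inequality, the parameter names `gamma`, `l`, `mu`, and the test operator $A(x) = x^3$ (continuous and monotone but not globally Lipschitz) are assumptions made for the illustration and need not coincide with rule (22).

```python
def project_interval(x, lo=-10.0, hi=10.0):
    """Metric projection onto the interval C = [lo, hi]."""
    return min(max(x, lo), hi)

def A(x):
    # Continuous and monotone on the real line, but not globally Lipschitz.
    return x ** 3

def armijo_step(w, gamma=1.0, l=0.5, mu=0.5, max_backtracks=60):
    """Return (lam, y, m) with lam = gamma * l**m for the smallest m >= 0 such
    that lam * |A(w) - A(y)| <= mu * |w - y|, where y = P_C(w - lam * A(w))."""
    for m in range(max_backtracks):
        lam = gamma * l ** m
        y = project_interval(w - lam * A(w))
        if lam * abs(A(w) - A(y)) <= mu * abs(w - y):
            return lam, y, m
    raise RuntimeError("line search did not terminate")

lam, y, m = armijo_step(3.0)
print(lam, y, m)
```

No Lipschitz constant enters the rule; continuity of the operator is what guarantees that the backtracking loop stops after finitely many reductions.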

Lemma 9.

Suppose is generated by Algorithm 1. Then there exists a non-negative integer satisfying (22). In addition

Proof.

Let for some Take for which (22) is satisfied. Suppose for some and assume that (22) does not hold, that is,

Using Lemma 3 and since we have

Recall that is continuous, then as Now, we consider the following possible cases.

Algorithm 1: GVIPCM

Initialization: Choose , and let be picked arbitrarily.

Iterative steps: Given the iterates and , for each calculate the iterate as follows.

Case I: Suppose Then Since and it follows from Lemma 3 that

Passing to the limit as in (20), we obtain

Then, we arrive at a contradiction and so (22) is valid.

Case II: Assume that then

Also

This is a contradiction. Therefore, we conclude that the line search (22) is well defined.

Furthermore, from (22), we have

Also

Lemma 10.

Let be the sequence generated by Algorithm 1. Then is bounded.

Proof.

Let then and Thus, we have

Since A is pseudomonotone and then

Also from (15), we have

This implies that

Hence

Using the definition of , we obtain

More so from (23), we get

Since then we obtain

Furthermore using Lemma 1(i), we have

Using (25), we obtain

Moreover

Using condition (C3), we obtain

Therefore from Lemma 6, we have

Putting

it follows from condition (C4) that this is bounded. Let

Thus from (37), we obtain

Putting and in Lemma 7(i), it follows from (38) that is bounded. This implies that is bounded and consequently, are bounded too. □

Lemma 11.

Let and be subsequences of the sequences and generated by Algorithm 1, respectively, such that Suppose as Then

- (i)

- for all

- (ii)

Proof.

(i) Since , then from (15), we have

Thus, we have

Hence

Next, we consider the following possible cases based on

Case I: Assume that Let Note that hence by using Lemma 4, we obtain

More so, which implies that is a bounded sequence. By the uniform continuity of A, we have

Thus

More so, from (15), we get

Hence

Taking limit of the above inequality as then we get

Case II: On the other hand, suppose Passing to the limit in (40) and noting that as , we have

This establishes (i). Next we show (ii).

Now let such that as For each we denote by N the smallest non-negative integer such that

where the existence of N follows from (i). Thus

for some satisfying Since A is pseudomonotone, we have

This implies that

Then

Hence, in view of Lemma 5, we obtain that □

Lemma 12.

Let be the sequence generated by Algorithm 1. Then the following inequality holds for all and

where

Proof.

Clearly

where Also

Using (44) in the expression above, we get

□

Now, we present our main theorem.

Theorem 1.

Let be the sequence generated by Algorithm 1. Then converges strongly to a point where is the unique solution of the variational inequalities

Proof.

Let and We consider the following two cases.

Case A: Suppose is monotonically non-increasing. Then, since is bounded, we obtain

Since and as thus we have

Using condition (C2) and (C3), we obtain

This implies that

Hence, we have

Since and then we obtain

Hence using (27) in the above expression, we get

This implies that

Clearly,

On the other hand, from (49), we have

Hence

Moreover

and

Hence

Since is bounded, then there exists a subsequence of such that It follows from (52), (53) and (54) that and respectively. Since and it follows from Lemma 11 that Also, since then Since it follows from the demiclosedness of T that Moreover, D is a bounded linear operator, then Then it follows from (49) and the demiclosedness of that Therefore We now show that the sequence converges strongly to a point where It follows from (15) and (56) that

Hence from Lemmas 7 and 12, we have as Thus converges strongly to

Case B: Suppose is not monotonically non-increasing. Let be a function defined by

for all (for some large enough). From Lemma 8, it is clear that is a non-decreasing sequence such that and

for all Hence from Lemma 12, we have

where

for some Then, we have

Following similar proof as in Case A, we can show that

and

Also

This implies that

Moreover, for all we have if (i.e., Since for Therefore, it follows that for all

So This implies that converges strongly to . This completes the proof. □

The following results can be obtained as consequences of our main result.

Corollary 1.

Let be real Hilbert spaces, Ω be a nonempty closed convex subset of be a bounded linear operator, be a pseudomonotone operator which is weakly sequentially continuous in and be quasi-nonexpansive mappings. Let be a contraction mapping with constant and be a Lipschitz and strongly monotone operator with coefficients and respectively such that for and Suppose the solution set Let and be sequences in such that conditions (C1)–(C4) are satisfied. Then the sequence generated by Algorithm 1 converges strongly to a point where is the unique solution of the variational inequalities

Also, by setting (a real Hilbert space), (the identity mapping on ), then we obtain the following result for finding common solution of pseudomonotone VIP (1) and fixed point of demicontractive mappings.

Corollary 2.

Let H be a real Hilbert space, Ω be a nonempty closed convex subset of be a pseudomonotone operator which is weakly sequentially continuous in be a ϱ-demicontractive mapping with and such that is demiclosed at zero. Let be a contraction mapping with constant and be a Lipschitz and strongly monotone operator with coefficients and respectively such that for and Suppose the solution set Let and be sequences in such that conditions (C1)–(C4) are satisfied. Then the sequence generated by the following Algorithm 2 converges strongly to a point where is the unique solution of the variational inequalities

Algorithm 2: GVIPCM

Initialization: Choose , and let be picked arbitrarily.

Iterative steps: Given the iterates and for each calculate the iterate as follows.

4. Application

In this section, we apply our result to finding a solution of the Split Null Point Problem (SNPP) in real Hilbert spaces.

We first recall some basic concepts of monotone operators:

Definition 2.

- A multivalued mapping is called monotone if for all

- The graph of φ is defined by

- When is not properly contained in the graph of any other monotone operator, we say that φ is maximally monotone. Equivalently, φ is maximal if and only if for , for all implies that

The resolvent operator associated with and is the mapping defined by

for all and It is well known that the resolvent operator is single-valued, nonexpansive and the set of zeros of (i.e., ) coincides with the set of fixed points of see for instance Reference [46].
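As a concrete instance, consider the hypothetical choice $\varphi = \partial |\cdot|$ on the real line, which is maximal monotone with zero set $\{0\}$. Its resolvent $(I + \lambda \varphi)^{-1}$ is the well-known soft-thresholding map. The sketch below, with names of our own choosing, checks nonexpansiveness on random samples and confirms that the resolvent fixes the zero of $\varphi$ and moves every other sampled point.

```python
import random

def resolvent_abs(x, lam):
    """Resolvent (I + lam * phi)^(-1) for phi = subdifferential of |.|,
    that is, soft-thresholding with threshold lam."""
    if x > lam:
        return x - lam
    if x < -lam:
        return x + lam
    return 0.0

def check_nonexpansive(lam=0.5, trials=1000, tol=1e-12, seed=2):
    rng = random.Random(seed)
    for _ in range(trials):
        x, y = rng.uniform(-5, 5), rng.uniform(-5, 5)
        if abs(resolvent_abs(x, lam) - resolvent_abs(y, lam)) > abs(x - y) + tol:
            return False
    return True

print(check_nonexpansive())
print(resolvent_abs(0.0, 0.5))   # the zero of phi is a fixed point
print(resolvent_abs(2.0, 0.5))   # a nonzero point is moved towards 0
```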

Let and be real Hilbert spaces and be a bounded linear operator. Let and be maximal monotone operators. The Split Null Point Problem (SNPP) is formulated as

We denote the set of solutions of the SNPP (63) by The SNPP includes many other important problems such as the split variational inequality problem, the split equilibrium problem and the split feasibility problem. The split feasibility problem was first introduced by Censor and Elfving [47] and has found numerous applications in many real-life problems such as intensity-modulated therapy, medical phase retrieval, tomography and image reconstruction; see, for instance, References [46,48,49,50,51,52,53]. By using our Algorithm 1, we obtain the following result for solving the SNPP.

Theorem 2.

Let be real Hilbert spaces, Ω be a nonempty closed convex subset of be a bounded linear operator, be a pseudomonotone operator which is weakly sequentially continuous in and be maximal monotone operators. Let be a contraction mapping with constant and be a Lipschitz and strongly monotone operator with coefficients and respectively such that for and Suppose the solution set

Let and be sequences in such that conditions (C1)–(C4) are satisfied with in (C3). Then the sequence generated by the following Algorithm 3 converges strongly to a point where is the unique solution of the variational inequalities

Algorithm 3: GVIPCM

Initialization: Choose , and let be picked arbitrarily.

Iterative steps: Given the iterates and for each calculate the iterate as follows.

Proof.

Set and in Algorithm 1. Then T and U are nonexpansive and thus, 0-demicontractive. Therefore, we obtain the desired result following the line of proof of Theorem 1. □

5. Numerical Examples

In this section, we give some numerical examples to show the performance and efficiency of the proposed algorithm.

Example 1.

First, we consider a generalized Nash-Cournot oligopolistic equilibrium problem in electricity markets described below:

Suppose there are m companies, each company j possessing generating units. We denote by the vector whose entry corresponds to the power generated by unit j and denotes the price which can be assumed to be a decreasing affine function of where and N is the number of all generating units. Then The profit made by company l is given by where denotes the cost for generating by unit We denote by the strategy set of company that is, for each Thus, we can write the strategy set of the model as Each company l wants to maximize its profit by choosing a corresponding production level under the presumption that the production of the other companies is a parametric input. A commonly used approach for treating the model is the Nash equilibrium concept (see References [54,55]).

Recall that a point is called an equilibrium point of the Nash equilibrium model if

where the vector stands for the vector obtained from by replacing with Defining

with Then the problem of finding a Nash equilibrium point of the model can be formulated as

Furthermore, we suppose that the cost for each unit j used in production and the environmental fee g are increasing convex functions. This implies that both the cost and environmental fee g for producing a unit production by each unit j increase as the quantity of the production increases. Under this assumption, we can formulate problem (66) as

where

and

Note that the function is convex and differentiable for each j. In this case, we test the proposed Algorithm 1 with the cost function given by

The matrix vector d and parameter are randomly generated in the interval and respectively. Also, we use different choices of and 50 with different initial points generated randomly in the interval and More so, we assume that each company j has the same production level with other companies, that is,

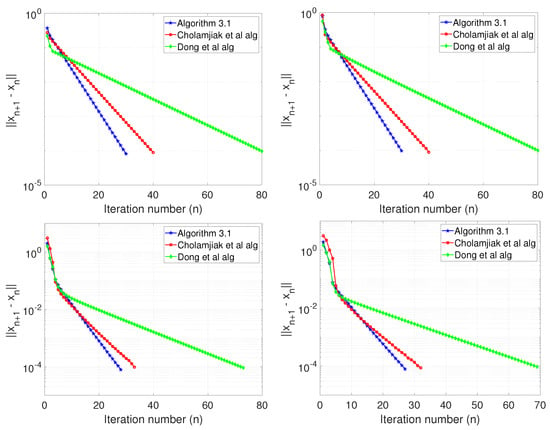

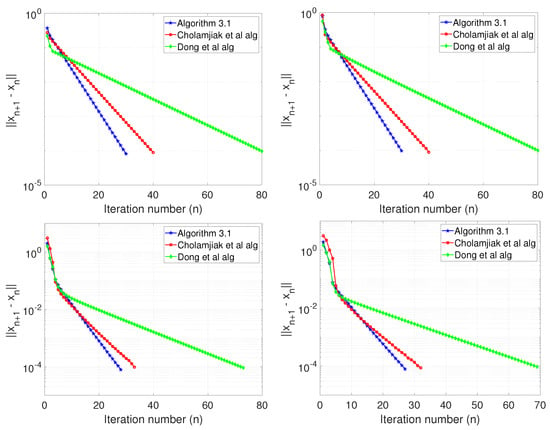

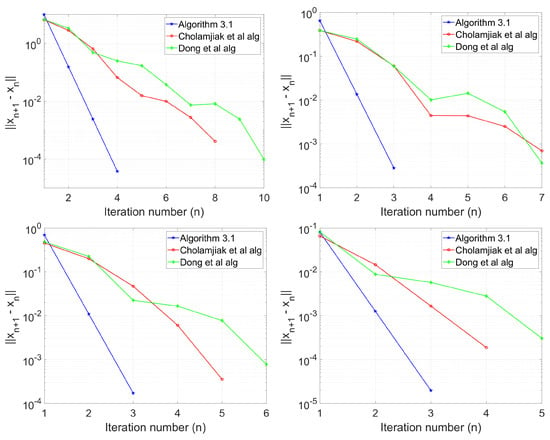

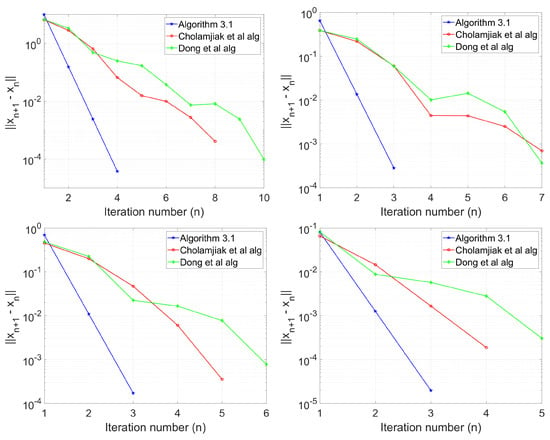

We take which is 0-demicontractive, We compare the performance of our Algorithm 1 with Algorithm (5) of Cholamjiak et al. [27] and Algorithm (12) of Dong et al. [32]. In (5), we take and Also for (12), we choose The computations were stopped when each algorithm satisfies The numerical results are shown in Table 1 and Figure 1. In Figure 1, Algorithm 3.1 refers to Algorithm 1.

Table 1. Computational results for Example 1.

Figure 1. Example 1, Top Left: ; Top Right: ; Bottom Left: ; Bottom Right: .

Example 2.

Next, we consider the min-max problem, which can be formulated as a variational inequality problem with a skew-symmetric matrix. This problem is to determine the shortest network in a given full Steiner topology (see Reference [56], Example 1). The compact form of the min-max problem is given as

where

A is a block matrix of the form

Equation (67) is equivalent to the following linear variational inequality (see Reference [15])

where

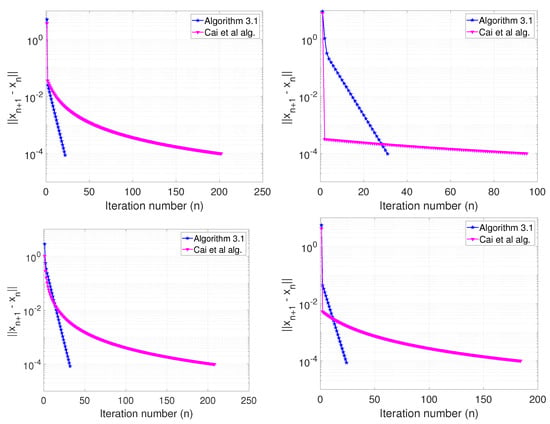

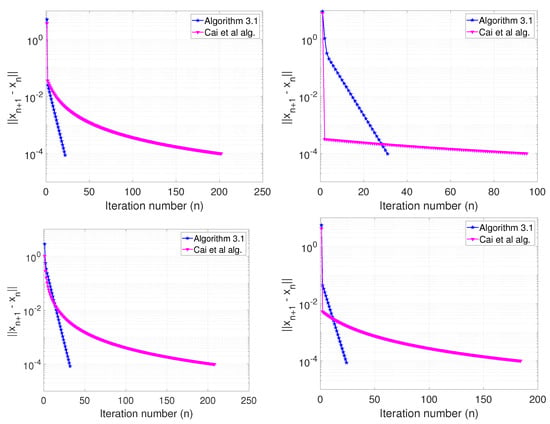

Note that M is skew-symmetric and the LVI is monotone. Also the mapping in (68) is Lipschitz continuous. We set We define the mapping and by

and

where Δ is the closed ball in centered at with radius that is, It is easy to see that T is -demicontractive and not nonexpansive, while U is nonexpansive, and thus, 0-demicontractive. We compare our method with the projection and contraction method of Cai et al. [15]. We take and choose the various initial values as follows:

- Case I:

- Case II:

- Case III:

- Case IV:

For the algorithm of Reference [15], we used the Correction of PC Method 1 and took We used as the stopping criterion. The numerical results are shown in Table 2 and Figure 2.

Table 2. Computational results for Example 2.

Figure 2. Example 2, Top Left: Case I; Top Right: Case II; Bottom Left: Case III; Bottom Right: Case IV.

Finally, we give an example in infinite dimensional spaces to support our strong convergence result.

Example 3.

Let with inner product and norm Let and be given by Then A is monotone and uniformly continuous and

We define the mapping and Then is 0-demicontractive. We take We also compare the performance of our Algorithm 1 with Algorithm (5) of Reference [27] and (12) of Reference [32]. For (5), we take Also for (12), we take We test each algorithm using the following initial values and as stopping criterion:

- Case I:

- Case II:

- Case III:

- Case IV:

Table 3. Computational results for Example 3.

Figure 3. Example 3, Top Left: ; Top Right: ; Bottom Left: ; Bottom Right: .

6. Conclusions

In this paper, we present a new generalized inertial viscosity approximation method for solving pseudomonotone variational inequality and split common fixed point problems in real Hilbert spaces. The algorithm is designed such that the stepsize of the variational inequality is determined by a line search process and its convergence does not require a prior estimate of the norm of the bounded linear operator. A strong convergence result is proved under mild conditions and some numerical experiments are given to illustrate the efficiency and accuracy of the proposed method. This result improves and extends the results of References [16,17,18,26,27,32] and other related results in the literature.

Author Contributions

Conceptualization, L.O.J.; methodology, L.O.J.; validation, M.A. and L.O.J.; formal analysis, L.O.J.; writing—original draft preparation, L.O.J.; writing—review and editing, M.A.; visualization, L.O.J.; supervision, M.A.; project administration, M.A.; funding acquisition, M.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Sefako Makgatho Health Sciences University Postdoctoral research fund, and the APC was funded by the Department of Mathematics and Applied Mathematics, Sefako Makgatho Health Sciences University, Pretoria, South Africa.

Acknowledgments

The authors acknowledge with thanks, the Department of Mathematics and Applied Mathematics at the Sefako Makgatho Health Sciences University for making their facilities available for the research.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

- Alber, Y.; Ryazantseva, I. Nonlinear Ill-Posed Problems of Monotone Type; Springer: Dordrecht, The Netherlands, 2006. [Google Scholar]

- Bigi, G.; Castellani, M.; Pappalardo, M.; Passacantando, M. Nonlinear Programming Technique for Equilibria; Spinger Nature: Cham, Switzerland, 2019. [Google Scholar]

- Shehu, Y. Single projection algorithm for variational inequalities in Banach spaces with applications to contact problems. Acta Math. Sci. 2020, 40, 1045–1063. [Google Scholar] [CrossRef]

- Ceng, L.C.; Hadjisavas, N.; Weng, N.C. Strong convergence theorems by a hybrid extragradient-like approximation method for variational inequalities and fixed point problems. J. Glob. Optim. 2010, 46, 635–646. [Google Scholar] [CrossRef]

- Censor, Y.; Gibali, A.; Reich, S. Extensions of Korpelevich’s extragradient method for variational inequality problems in Euclidean space. Optimization 2012, 61, 119–1132. [Google Scholar] [CrossRef]

- Denisov, S.; Semenov, V.; Chabak, L. Convergence of the modified extragradient method for variational inequalities with non-Lipschitz operators. Cybern. Syst. Anal. 2015, 51, 757–765. [Google Scholar] [CrossRef]

- Fang, C.; Chen, S. Some extragradient algorithms for variational inequalities. In Advances in Variational and Hemivariational Inequalities; Springer: Cham, Switzerland, 2015; Volume 33, pp. 145–171. [Google Scholar]

- Hammad, H.A.; Ur-Rehman, H.; La Sen, M.D. Advanced algorithms and common solutions to variational inequalities. Symmetry 1198, 12, 1198. [Google Scholar] [CrossRef]

- Solodov, M.V.; Svaiter, B.F. A new projection method for variational inequality problems. SIAM J. Control Optim. 1999, 37, 765–776. [Google Scholar] [CrossRef]

- Censor, Y.; Gibali, A.; Reich, S. Strong convergence of subgradient extragradient methods for the variational inequality problem in Hilbert space. Optim. Methods Softw. 2011, 26, 827–845. [Google Scholar] [CrossRef]

- Censor, Y.; Gibali, A.; Reich, S. The subgradient extragradient method for solving variational inequalities in Hilbert spaces. J. Optim. Theory Appl. 2011, 148, 318–335. [Google Scholar] [CrossRef]

- Hieu, D.V. Parallel and cyclic hybrid subgradient extragradient methods for variational inequalities. Afr. Mat. 2017, 28, 677–679. [Google Scholar] [CrossRef]

- Jolaoso, L.O.; Taiwo, A.; Alakoya, T.O.; Mewomo, O.T. A self adaptive inertial subgradient extragradient algorithm for variational inequality and common fixed point of multivalued mappings in Hilbert spaces. Demonstr. Math. 2019, 52, 183–203. [Google Scholar] [CrossRef]

- Yang, J.; Liu, H. The subgradient extragradient method extended to pseudomonotone equilibrium problems and fixed point problems in Hilbert space. Optim. Lett. 2020, 14, 1803–1816. [Google Scholar] [CrossRef]

- Cai, X.; Gu, G.; He, B. On the O(1/t) convergence rate of the projection and contraction methods for variational inequalities with Lipschitz continuous monotone operators. Comput. Optim. Appl. 2014, 57, 339–363. [Google Scholar] [CrossRef]

- Jolaoso, L.O.; Taiwo, A.; Alakoya, T.O.; Mewomo, O.T. A unified algorithm for solving variational inequality and fixed point problems with application to the split equality problem. Comput. Appl. Math. 2019, 39. [Google Scholar] [CrossRef]

- Alakoya, T.O.; Jolaoso, L.O.; Mewomo, O.T. Modified inertial subgradient extragradient method with self-adaptive stepsize for solving monotone variational inequality and fixed point problems. Optimization 2020. [Google Scholar] [CrossRef]

- Thong, D.V.; Vinh, N.T.; Cho, Y.J. New strong convergence theorem of the inertial projection and contraction method for variational inequality problems. Numer. Algorithms 2020, 84, 285–305. [Google Scholar] [CrossRef]

- Jolaoso, L.O.; Aphane, M. Weak and strong convergence Bregman extragradient schemes for solving pseudo-monotone and non-Lipschitz variational inequalities. J. Ineq. Appl. 2020, 2020, 195. [Google Scholar] [CrossRef]

- Jolaoso, L.O.; Taiwo, A.; Alakoya, T.O.; Mewomo, O.T. A strong convergence theorem for solving pseudo-monotone variational inequalities using projection methods in a reflexive Banach space. J. Optim. Theory Appl. 2020, 185, 744–766. [Google Scholar] [CrossRef]

- Korpelevich, G.M. The extragradient method for finding saddle points and other problems. Ekon. Mat. Metody. 1976, 12, 747–756. (In Russian) [Google Scholar]

- Nadezhkina, N.; Takahashi, W. Weak convergence theorem by an extragradient method for nonexpansive mappings and monotone mappings. J. Optim. Theory Appl. 2006, 128, 191–201. [Google Scholar] [CrossRef]

- Vuong, P.T. On the weak convergence of the extragradient method for solving pseudo-monotone variational inequalities. J. Optim. Theory Appl. 2018, 176, 399–409. [Google Scholar] [CrossRef]

- He, B.S. A class of projection and contraction methods for monotone variational inequalities. Appl. Math. Optim. 1997, 35, 69–76. [Google Scholar] [CrossRef]

- Thong, D.V.; Hieu, D.V. Modified subgradient extragdradient algorithms for variational inequalities problems and fixed point algorithms. Optimization 2018, 67, 83–102. [Google Scholar] [CrossRef]

- Tian, M.; Jiang, B.N. Inertial hybrid algorithm for variational inequality problems in Hilbert spaces. J. Ineq. Appl. 2020, 2020, 12. [Google Scholar] [CrossRef]

- Cholamjiak, P.; Thong, D.V.; Cho, Y.J. A novel inertial projection and contraction method for solving pseudomonotone variational inequality problem. Acta Appl. Math. 2020, 169, 217–245. [Google Scholar] [CrossRef]

- Polyak, B.T. Some methods of speeding up the convergence of iteration methods. U.S.S.R. Comput. Math. Math. Phys. 1964, 4, 1–17. [Google Scholar] [CrossRef]

- Bot, R.I.; Csetnek, E.R.; Hendrich, C. Inertial Douglas-Rachford splitting for monotone inclusions. Appl. Math. Comput. 2015, 256, 472–487. [Google Scholar] [CrossRef]

- Chambole, A.; Dossal, C.H. On the convergence of the iterates of the “fast shrinkage/thresholding algorithm”. J. Optim. Theory Appl. 2015, 166, 968–982. [Google Scholar] [CrossRef]

- Dong, Q.-L.; Lu, Y.Y.; Yang, J. The extragradient algorithm with inertial effects for solving the variational inequality. Optimization 2016, 65, 2217–2226. [Google Scholar] [CrossRef]

- Dong, Q.L.; Cho, Y.J.; Zhong, L.L.; Rassias, T.M. Inertial projection and contraction algorithms for variational inequalites. J. Glob. Optim. 2018, 70, 687–704. [Google Scholar] [CrossRef]

- Jolaoso, L.O.; Alakoya, T.O.; Taiwo, A.; Mewomo, T.O. An inertial extragradient method via viscosity approximation approach for solving equilibrium problem in Hilbert spaces. Optimization 2020.

- Jolaoso, L.O.; Oyewole, K.O.; Okeke, C.C.; Mewomo, O.T. A unified algorithm for solving split generalized mixed equilibrium problem and fixed point of nonspreading mapping in Hilbert space. Demonstr. Math. 2018, 51, 211–232.

- Moudafi, A. Viscosity approximation method for fixed-points problems. J. Math. Anal. Appl. 2000, 241, 46–55.

- Xu, H.K. Viscosity approximation method for nonexpansive mappings. J. Math. Anal. Appl. 2004, 298, 279–291.

- Iiduka, H. Acceleration method for convex optimization over the fixed point set of a nonexpansive mapping. Math. Prog. Ser. A 2015, 149, 131–165.

- Maingé, P.E. A hybrid extragradient viscosity method for monotone operators and fixed point problems. SIAM J. Control Optim. 2008, 47, 1499–1515.

- Maingé, P.E. Projected subgradient techniques and viscosity methods for optimization with variational inequality constraints. Eur. J. Oper. Res. 2010, 205, 501–506.

- Rudin, W. Functional Analysis, McGraw-Hill Series in Higher Mathematics; McGraw-Hill: New York, NY, USA, 1991.

- Marino, G.; Xu, H.K. Weak and strong convergence theorems for strict pseudo-contractions in Hilbert spaces. J. Math. Anal. Appl. 2007, 329, 336–346.

- Maingé, P.E. Strong convergence of projected subgradient methods for nonsmooth and nonstrictly convex minimization. Set-Valued Anal. 2008, 16, 899–912.

- Cottle, R.W.; Yao, J.C. Pseudo-monotone complementarity problems in Hilbert space. J. Optim. Theory Appl. 1992, 75, 281–295.

- Yamada, I. The hybrid steepest-descent method for variational inequalities problems over the intersection of the fixed point sets of nonexpansive mappings. In Inherently Parallel Algorithms in Feasibility and Optimization and Their Applications; Butnariu, D., Censor, Y., Reich, S., Eds.; North-Holland: Amsterdam, The Netherlands, 2001; pp. 473–504.

- Maingé, P.E. Approximation methods for common fixed points of nonexpansive mappings in Hilbert spaces. J. Math. Anal. Appl. 2007, 325, 469–479.

- Byrne, C. A unified treatment of some iterative algorithms in signal processing and image reconstruction. Inverse Probl. 2004, 20, 103–120.

- Censor, Y.; Elfving, T. A multiprojection algorithm using Bregman projections in a product space. Numer. Alg. 1994, 8, 221–239.

- Censor, Y.; Bortfeld, T.; Martin, B.; Trofimov, A.A. A unified approach for inversion problems in intensity-modulated radiation therapy. Phys. Med. Biol. 2006, 51, 2353–2365.

- Censor, Y.; Elfving, T.; Kopf, N.; Bortfeld, T. The multiple-sets split feasibility problem and its applications for inverse problems. Inverse Probl. 2005, 21, 2071–2084.

- Dong, Q.L.; Jiang, D.; Cholamjiak, P.; Shehu, Y. A strong convergence result involving an inertial forward-backward algorithm for monotone inclusions. J. Fixed Point Theory Appl. 2017, 19, 3097–3118.

- Cholamjiak, P.; Suantai, S.; Sunthrayuth, P. Strong convergence of a general viscosity explicit rule for the sum of two monotone operators in Hilbert spaces. J. Appl. Anal. Comput. 2019, 9, 2137–2155.

- Cholamjiak, P.; Suantai, S.; Sunthrayuth, P. An explicit parallel algorithm for solving variational inclusion problem and fixed point problem in Banach spaces. Banach J. Math. Anal. 2020, 14, 20–40.

- Kesornprom, S.; Cholamjiak, P. Proximal type algorithms involving linesearch and inertial technique for split variational inclusion problem in Hilbert spaces with applications. Optimization 2019, 68, 2365–2391.

- Shehu, Y. On a modified extragradient method for variational inequality problem with application to industrial electricity production. J. Ind. Appl. Math. 2019, 15, 319–342.

- Yen, L.H.; Muu, L.D.; Huyen, N.T.T. An algorithm for a class of split feasibility problems: Application to a model in electricity production. Math. Meth. Oper. Res. 2016, 84, 549–565.

- Xue, G.L.; Ye, Y.Y. An efficient algorithm for minimizing a sum of Euclidean norms with applications. SIAM J. Optim. 1997, 7, 1017–1036.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).