Abstract

Splitting methods have received a lot of attention lately because many nonlinear problems arising in applied areas, such as signal processing and image restoration, are modeled mathematically as a nonlinear operator equation in which the operator is decomposed as the sum of two nonlinear operators. Most investigations of splitting methods are carried out in Hilbert spaces. This work develops an iterative scheme in Banach spaces. We prove a convergence theorem for our iterative scheme and give applications to common zeros of accretive operators, convexly constrained least square problems, convex minimization problems and signal processing.

1. Introduction

Let $X$ be a real Banach space. The zero point problem is as follows:

$$\text{find } x \in X \text{ such that } 0 \in Ax + Bx, \tag{1}$$

where $A : X \to X$ is an operator and $B : X \to 2^X$ is a set-valued operator. This problem includes, as special cases, convex programming, variational inequalities, split feasibility problems and minimization problems [1,2,3,4,5,6,7]. To be more precise, some concrete problems in machine learning, image processing [4,5], signal processing and linear inverse problems can be modeled mathematically in the form of Equation (1).

Signal processing and numerical optimization are independent scientific fields that have always influenced each other. Perhaps the most convincing example of where the two fields meet is compressed sensing (CS) [2]. Several surveys dedicated to these algorithms and their applications in signal processing have appeared [3,6,7,8].

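Fixed point iteration is an important tool for solving various problems in a Banach space $X$. Let $C$ be a nonempty closed convex subset of $X$ and let $T : C \to C$ be an operator with at least one fixed point. Then, for $x_1 \in C$:

- The Picard iterative scheme [9] is defined by: $x_{n+1} = Tx_n$.

- The Mann iterative scheme [10] is defined by: $x_{n+1} = (1 - \alpha_n)x_n + \alpha_n Tx_n$, where $\{\alpha_n\}$ is a sequence in $(0,1)$.

- The Ishikawa iterative scheme [11] is defined by: $y_n = (1 - \beta_n)x_n + \beta_n Tx_n$, $x_{n+1} = (1 - \alpha_n)x_n + \alpha_n Ty_n$, where $\{\alpha_n\}$ and $\{\beta_n\}$ are sequences in $(0,1)$.

- The S-iterative scheme [12] is defined by: $y_n = (1 - \beta_n)x_n + \beta_n Tx_n$, $x_{n+1} = (1 - \alpha_n)Tx_n + \alpha_n Ty_n$, where $\{\alpha_n\}$ and $\{\beta_n\}$ are sequences in $(0,1)$.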
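The four classical schemes listed above can be compared numerically with a minimal sketch. The standard textbook forms of the Picard, Mann, Ishikawa and S-iterations are assumed here, with constant parameters `alpha` and `beta` (both hypothetical choices) and the test map `math.cos`, which is a contraction on $[0,1]$; none of these choices come from the paper.

```python
import math

def picard(T, x, steps):
    """Picard: x_{n+1} = T(x_n)."""
    for _ in range(steps):
        x = T(x)
    return x

def mann(T, x, steps, alpha=0.5):
    """Mann: x_{n+1} = (1 - alpha) x_n + alpha T(x_n)."""
    for _ in range(steps):
        x = (1 - alpha) * x + alpha * T(x)
    return x

def ishikawa(T, x, steps, alpha=0.5, beta=0.5):
    """Ishikawa: y_n = (1 - beta) x_n + beta T(x_n),
    x_{n+1} = (1 - alpha) x_n + alpha T(y_n)."""
    for _ in range(steps):
        y = (1 - beta) * x + beta * T(x)
        x = (1 - alpha) * x + alpha * T(y)
    return x

def s_iteration(T, x, steps, alpha=0.5, beta=0.5):
    """S-iteration: y_n = (1 - beta) x_n + beta T(x_n),
    x_{n+1} = (1 - alpha) T(x_n) + alpha T(y_n)."""
    for _ in range(steps):
        y = (1 - beta) * x + beta * T(x)
        x = (1 - alpha) * T(x) + alpha * T(y)
    return x

# Illustrative map (an assumption): cos has a unique fixed point in [0, 1].
schemes = {"picard": picard, "mann": mann, "ishikawa": ishikawa, "s": s_iteration}
limits = {name: scheme(math.cos, 1.0, 200) for name, scheme in schemes.items()}

for name, value in limits.items():
    print(f"{name:9s} x = {value:.12f}  residual = {abs(math.cos(value) - value):.2e}")
```

All four schemes should agree on the same fixed point of the map; they differ only in how the averaging between $x_n$, $Tx_n$ and $Ty_n$ is arranged.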

Recently, Sahu et al. [13] and Thakur et al. [14] introduced the same iterative scheme for nonexpansive mappings in uniformly convex Banach spaces:

where and are sequences in . The authors proved that this scheme converges to a fixed point of contraction mapping, faster than all known iterative schemes. In addition, the authors provided an example to support their claim.

In this paper, we first develop an iterative scheme for calculating common solutions and use our results to solve the problem in Equation (1). Secondly, we find common solutions of convexly constrained least square problems and convex minimization problems, and we apply the scheme to signal processing.

2. Preliminaries

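Let $X$ be a real Banach space with norm $\|\cdot\|$ and let $X^*$ be its dual. The value of $f \in X^*$ at $x \in X$ is denoted by $\langle x, f\rangle$. A Banach space $X$ is called strictly convex if $\|(x + y)/2\| < 1$ for all $x, y \in X$ with $\|x\| = \|y\| = 1$ and $x \neq y$. It is called uniformly convex if $\lim_{n\to\infty}\|x_n - y_n\| = 0$ for any two sequences $\{x_n\}, \{y_n\}$ in $X$ such that $\|x_n\| = \|y_n\| = 1$ and $\lim_{n\to\infty}\|x_n + y_n\| = 2$.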

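The (normalized) duality mapping $J$ from $X$ into the family of nonempty (by the Hahn-Banach theorem) weak-star compact subsets of its dual $X^*$ is defined by

$$J(x) = \{f \in X^* : \langle x, f\rangle = \|x\|^2 = \|f\|^2\}$$

for each $x \in X$, where $\langle\cdot,\cdot\rangle$ denotes the generalized duality pairing.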

For an operator we denote its domain, range and graph as follows:

and

respectively. The inverse of is defined by if and only if If and and there is such that then is called accretive.

An accretive operator in a Banach space is said to satisfy the range condition if for all where denotes the closure of the domain of We know that for an accretive operator which satisfies the range condition, for all

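A point $x \in C$ is a fixed point of $T : C \to C$ provided $Tx = x$. Denote by $\mathrm{Fix}(T)$ the set of fixed points of $T$, i.e., $\mathrm{Fix}(T) = \{x \in C : Tx = x\}$.

- The mapping $T$ is called $L$-Lipschitz, $L > 0$, if $\|Tx - Ty\| \le L\|x - y\|$ for all $x, y \in C$.

- The mapping $T$ is called nonexpansive if $\|Tx - Ty\| \le \|x - y\|$ for all $x, y \in C$.

- The mapping $T$ is called quasi-nonexpansive if $\mathrm{Fix}(T) \neq \emptyset$ and $\|Tx - p\| \le \|x - p\|$ for all $x \in C$ and $p \in \mathrm{Fix}(T)$.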

In this case, is a real Hilbert space. If is an accretive operator (see [15,16,17]), then is called maximal accretive operator [18], and for all , if and only if is called maximal monotone [19]. Denote by the domain of a function

The subdifferential of at is the set

where denotes the class of all functions from to with nonempty domains.

Lemma 1

([20]). Let Then, is maximal monotone.

We denote by the closed ball with the center at v and radius

Lemma 2

([21]). Let be a Banach space, and and be two fixed numbers. Then, is uniformly convex if and only if there exists a continuous, strictly increasing, and convex function with such that

for all and

Definition 1

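([22]). A Banach space $X$ is said to satisfy Opial’s condition if, for each sequence $\{x_n\}$ in $X$ which converges weakly to a point $x \in X$, we have $\liminf_{n\to\infty}\|x_n - x\| < \liminf_{n\to\infty}\|x_n - y\|$ for all $y \in X$ with $y \neq x$.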

Lemma 3

([23]). Let be a nonempty subset of a Banach space , let be a uniformly continuous mapping, and let an approximating fixed point sequence of Then, is an approximating fixed point sequence of whenever is in such that

Lemma 4

([16]). Let be a nonempty closed convex subset of a uniformly convex Banach space . If is a nonexpansive mapping, then has the demiclosed property with respect to

A subset of Banach space is called a retract of if there is a continuous mapping from onto such that for all We call such a retraction of onto It follows that, if a mapping is a retraction, then for all v in the range of A retraction is called a sunny if for all and If a sunny retraction is also nonexpansive, then is called a sunny nonexpansive retract of [24].

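Let $X$ be a strictly convex reflexive Banach space and let $C$ be a nonempty closed convex subset of $X$. Denote by $P_C$ the (metric) projection from $X$ onto $C$, namely, for $x \in X$, $P_C x$ is the unique point in $C$ with the property $\|x - P_C x\| = \inf\{\|x - y\| : y \in C\}$.

Let $H$ be a real Hilbert space with inner product $\langle\cdot,\cdot\rangle$ and induced norm $\|\cdot\|$. If $C$ is a nonempty closed convex subset of $H$, then the nearest point projection $P_C$ is the unique sunny nonexpansive retraction of $H$ onto $C$. It is also known that $P_C x \in C$ and $\langle x - P_C x, P_C x - y\rangle \ge 0$ for all $x \in H$ and $y \in C$.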
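The Hilbert space properties of the nearest point projection can be checked numerically. The sketch below assumes $H = \mathbb{R}^3$ and takes the closed unit ball as the convex set; the helper name `project_ball` and the random test points are illustrative choices, not part of the paper.

```python
import numpy as np

def project_ball(x, center, radius):
    """Nearest point projection onto the closed ball B(center, radius) in R^n."""
    d = x - center
    norm = np.linalg.norm(d)
    if norm <= radius:
        return x.copy()          # x already lies in the ball
    return center + radius * d / norm

rng = np.random.default_rng(0)
center = np.zeros(3)
x = 5.0 * rng.normal(size=3)
y = 5.0 * rng.normal(size=3)
px = project_ball(x, center, 1.0)
py = project_ball(y, center, 1.0)

# Nonexpansiveness: ||Px - Py|| <= ||x - y||.
nonexpansive = np.linalg.norm(px - py) <= np.linalg.norm(x - y) + 1e-12

# Variational characterization: <x - Px, z - Px> <= 0 for every z in the ball.
z = project_ball(rng.normal(size=3), center, 1.0)
variational = float(np.dot(x - px, z - px)) <= 1e-12

print(nonexpansive, variational)
```

Both checks hold for any closed convex set in a Hilbert space; the ball is used only because its projection has a closed form.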

3. Main Results

Let be a nonempty closed convex subset of a Banach space with as a sunny nonexpansive retraction. We denote by .

Lemma 5.

Let be a nonempty closed convex subset of a Banach space with as the sunny nonexpansive retraction, let be quasi-nonexpansive mappings which and let and be sequences in for all Let be defined by Algorithm 1. Then, for each exists and

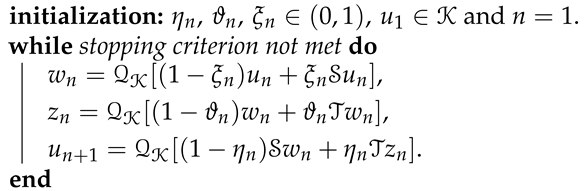

Algorithm 1: Three-step sunny nonexpansive retraction

Proof.

Let Then, we have

and

Therefore,

Since is monotonically decreasing, we have that the sequence is convergent. □

From Lemma 5, we obtain the following results:

Theorem 1.

Let be a nonempty closed convex subset of a Banach space with as the sunny nonexpansive retraction, let be quasi-nonexpansive mappings with and let and be sequences of real numbers, for which for all Let and is defined by Algorithm 1. Then, we have the following:

- (i)

- is in a closed convex bounded set where λ is a constant in such that

- (ii)

- If is uniformly continuous, then and

- (iii)

- If fulfills the Opial’s condition and and are demiclosed at then converges weakly to an element of

Proof.

Since from Equation (6), we obtain

Therefore, is in the closed convex bounded set

Suppose that is uniformly continuous. Using Lemma 5, we get that and are in and hence, from Equation (2), we obtain

Note that: Thus,

In the same way, we obtain

Therefore, we have From the relations in Algorithm 1, we obtain

and

Note that: and Thus,

It follows that Note that:

Since is uniformly continuous, it follows from Lemma 3 that Thus, from we obtain

By assumption, satisfies the Opial’s condition. Let such that From Lemma 5, we have exists. Suppose there are two subsequences and which converge to two distinct points and in respectively. Then, since both and have the demiclosed property at we have and Moreover, using the Opial’s condition:

Similarly, we obtain

which is a contradiction. Therefore, Hence, the sequence converges weakly to an element of □

Theorem 2.

Let be a nonempty closed convex subset of a Banach space with as the sunny nonexpansive retraction, let be nonexpansive mappings with and let and be sequences of real numbers, for which for all Let and is defined by Algorithm 1. Then, we have the following:

- (i)

- is in a closed convex bounded set where λ is a constant in such that

- (ii)

- and

- (iii)

- If fulfills the Opial’s condition, then converges weakly to an element of

Proof.

It follows from Theorem 1. □

Corollary 1.

Let be a nonempty closed convex subset of a real Hilbert space let be nonexpansive mappings with and let and be sequences of real numbers, for which for all Let be defined by

Then, converges weakly to an element of

Proof.

It follows from Theorem 1. □

4. Applications

4.1. Common Zeros of Accretive Operators

Theorem 3.

Let be a nonempty closed convex subset of a Banach space satisfying the Opial’s condition. Let be accretive operators, for which and Let and be sequences of real numbers, for which for all Let and Let be defined by

Then, we have the following:

- (i)

- is in a closed convex bounded set where λ is a constant in such that

- (ii)

- and

- (iii)

- converges weakly to an element of

Proof.

By assumption, we know that are nonexpansive. Note that and hence

Next, set and Hence, Theorem 3 follows in the same way as Theorem 2. □
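The role of the resolvent in this argument can be illustrated in finite dimensions. The sketch below assumes the standard resolvent $J_r = (I + rM)^{-1}$ of a linear operator given by a positive semidefinite matrix `M` (such an operator is monotone, hence accretive in $\mathbb{R}^n$); the matrix, the parameter `r` and the test points are hypothetical.

```python
import numpy as np

def resolvent(M, r, x):
    """Resolvent J_r x = (I + r M)^{-1} x of the linear operator x -> M x."""
    n = M.shape[0]
    return np.linalg.solve(np.eye(n) + r * M, x)

# Positive semidefinite matrix whose zero set is the line {(0, t)}.
M = np.array([[2.0, 0.0],
              [0.0, 0.0]])
r = 0.5

x = np.array([3.0, -1.0])
y = np.array([-2.0, 4.0])
jx, jy = resolvent(M, r, x), resolvent(M, r, y)

# The resolvent is nonexpansive: ||J_r x - J_r y|| <= ||x - y||.
nonexpansive = np.linalg.norm(jx - jy) <= np.linalg.norm(x - y) + 1e-12

# Zeros of the operator coincide with fixed points of the resolvent.
zero_point = np.array([0.0, 7.0])
is_fixed = np.allclose(resolvent(M, r, zero_point), zero_point)

print(jx, nonexpansive, is_fixed)
```

This is why fixed point schemes for nonexpansive mappings can be reused for zero point problems: applying the scheme to the resolvents searches for a common zero of the underlying operators.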

4.2. Convexly Constrained Least Square Problem

We apply Theorem 2 to find common solutions of two convexly constrained least square problems. We consider the following problem:

Let and Define by

where is a real Hilbert space.

Let be a nonempty closed convex subset of The objective is to find such that

where

Proposition 1

([8]). Let be a real Hilbert space, with the adjoint and Let be a nonempty closed convex subset of Let and Then, the following statements are equivalent:

- (i)

- b solves the following problem:

- (ii)

- (iii)

- for all

Theorem 4.

Let be a nonempty closed convex subset of a real Hilbert space , and , for which the solution set of the problem in Equation (17) is nonempty. Let and be sequences of real numbers, for which for all Let and is defined by

where defined by and for all Then, we have the following:

- (i)

- is in the closed ball where λ is a constant in such that

- (ii)

- and

- (iii)

- converges weakly to an element of

Proof.

Note that: for all we obtain that for all Thus, is -ism and hence is nonexpansive from into for Therefore, and are nonexpansive mappings from into itself for and respectively. Hence, Theorem 4 follows in the same way as Theorem 2. □
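The mapping used in this proof, a projection composed with a gradient step of the least square objective, can be sketched for a single problem in $\mathbb{R}^2$. The sketch assumes the objective $\frac{1}{2}\|Ax - b\|^2$, the nonnegative orthant as the constraint set, and a plain Picard iteration rather than the three-step scheme of the theorem; the data `A`, `b` and the step size `gamma` are hypothetical.

```python
import numpy as np

def projected_gradient_map(x, A, b, gamma, project):
    """T x = P_C(x - gamma * A^T (A x - b)) for the objective 0.5 * ||A x - b||^2."""
    return project(x - gamma * (A.T @ (A @ x - b)))

A = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, 1.0]])
b = np.array([1.0, -1.0, 0.0])

def project(v):
    # Projection onto the nonnegative orthant (illustrative constraint set C).
    return np.maximum(v, 0.0)

# Step size inside (0, 2 / ||A||^2), for which T is nonexpansive.
gamma = 1.0 / np.linalg.norm(A, 2) ** 2

x = np.zeros(2)
for _ in range(200):
    x = projected_gradient_map(x, A, b, gamma, project)

print(x)  # fixed point of T, i.e. a constrained least square solution
```

For this data the unconstrained minimizer is $(1, -1)$, which violates the constraint, so the iteration settles on the boundary of the orthant instead.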

4.3. Convex Minimization Problem

We give an application to common solutions to convex programming problems in a Hilbert space . We consider the following problem:

Let be proper functions. The objective is to find such that:

Note that:

Theorem 5.

Let be a nonempty closed convex subset of a real Hilbert space . Let , for which the solution set of the problem in Equation (19) is nonempty. Let and be sequences of real numbers, for which for all Let and Let and is defined by

Then, we have the following:

- (i)

- is in the closed ball where λ is a constant in such that

- (ii)

- and

- (iii)

- converges weakly to an element of

Proof.

Using Lemma 1, we have that is maximal monotone. We know that and using the maximal monotonicity of Thus, is nonexpansive. Similarly, is nonexpansive. Hence, Theorem 5 follows in the same way as Theorem 2. □
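The resolvent of a subdifferential is the proximal mapping of the function, which is what makes this reduction computable. The sketch below assumes two smooth convex functions on $\mathbb{R}^2$ with a common minimizer `c`, namely $f(x) = \frac{1}{2}\|x - c\|^2$ and $g(x) = \frac{1}{2}(\langle a, x\rangle - \langle a, c\rangle)^2$, and simply composes the two resolvents; this composition is an illustrative stand-in, not the scheme of Theorem 5, and all data are hypothetical.

```python
import numpy as np

def prox_f(v, r, c):
    """Resolvent of the gradient of f(x) = 0.5 * ||x - c||^2."""
    return (v + r * c) / (1.0 + r)

def prox_g(v, r, a, c):
    """Resolvent of the gradient of g(x) = 0.5 * (<a, x> - <a, c>)^2.

    Optimality condition: (I + r a a^T) x = v + r <a, c> a.
    """
    n = v.size
    lhs = np.eye(n) + r * np.outer(a, a)
    rhs = v + r * np.dot(a, c) * a
    return np.linalg.solve(lhs, rhs)

c = np.array([1.0, 2.0])   # common minimizer of f and g
a = np.array([1.0, 1.0])
r = 1.0

x = np.array([10.0, -4.0])
for _ in range(60):
    x = prox_g(prox_f(x, r, c), r, a, c)

print(x)  # approaches the common minimizer c
```

Each resolvent is nonexpansive and leaves the common minimizer fixed, so the common minimizers are exactly the common fixed points that a scheme for nonexpansive mappings looks for.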

4.4. Signal Processing

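We consider some applications of our algorithm to inverse problems arising in signal processing. For example, we consider the following underdetermined linear equation system:

$$y = Ax + e, \tag{21}$$

where $x \in \mathbb{R}^N$ is the signal to be recovered, $y \in \mathbb{R}^M$ is the observed or measured data with noise $e$, and $A : \mathbb{R}^N \to \mathbb{R}^M$ is a bounded linear observation operator. It determines a process with loss of information. For finding solutions of the linear inverse problem in Equation (21), one successful model is the convex unconstrained minimization problem:

$$\min_{x \in \mathbb{R}^N} \frac{1}{2}\|y - Ax\|_2^2 + \lambda\|x\|_1, \tag{22}$$

where $\lambda > 0$ and $\|\cdot\|_1$ is the $\ell_1$ norm. Thus, we can find a solution to Equation (22) by applying our method in the case $f(x) = \frac{1}{2}\|y - Ax\|_2^2$ and $g(x) = \lambda\|x\|_1$. For any $\gamma > 0$, the corresponding forward-backward operator is as follows:

$$J_\gamma(x) = \operatorname{prox}_{\gamma g}\big(x - \gamma\nabla f(x)\big),$$

where $f$ is the squared loss function of the Lasso problem in Equation (22). The proximity operator for the $\ell_1$ norm is defined as the shrinkage operator as follows:

$$\big(\operatorname{prox}_{\gamma\lambda\|\cdot\|_1}(x)\big)_i = \max\{|x_i| - \gamma\lambda, 0\}\,\operatorname{sgn}(x_i),$$

where $\operatorname{sgn}$ is the signum function. We apply the algorithm to the problem in Equation (22) as in Algorithm 2: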

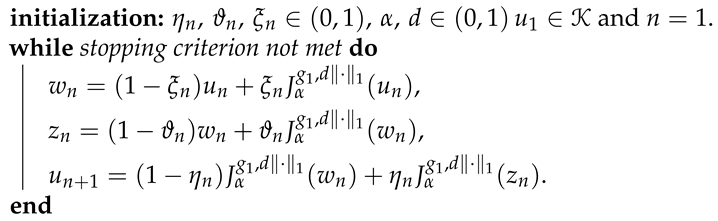

Algorithm 2: Three-step forward-backward operator
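The shrinkage operator and the forward-backward operator described above can be sketched as follows. The sketch assumes the Lasso objective $\frac{1}{2}\|Ax - y\|_2^2 + \lambda\|x\|_1$; the function names and the parameters `lam` (regularization weight) and `gamma` (step size) are hypothetical, and the code shows a single forward-backward step rather than the three-step scheme of Algorithm 2.

```python
import numpy as np

def soft_threshold(v, tau):
    """Shrinkage operator: sign(v_i) * max(|v_i| - tau, 0), applied entrywise."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def forward_backward(x, A, y, lam, gamma):
    """One forward-backward step for 0.5 * ||A x - y||^2 + lam * ||x||_1."""
    grad = A.T @ (A @ x - y)                 # forward (gradient) step
    return soft_threshold(x - gamma * grad, gamma * lam)  # backward (proximal) step

shrunk = soft_threshold(np.array([3.0, -0.5, -2.0]), 1.0)

# With A = I the Lasso minimizer is soft_threshold(y, lam), reached in one step.
A = np.eye(2)
y = np.array([3.0, 0.2])
step = forward_backward(np.zeros(2), A, y, lam=1.0, gamma=1.0)

print(shrunk, step)
```

Entries whose magnitude is below the threshold are set to zero, which is the mechanism that produces sparse recovered signals.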

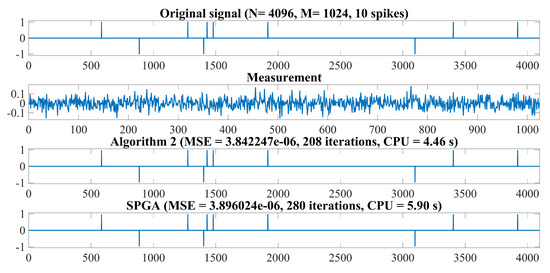

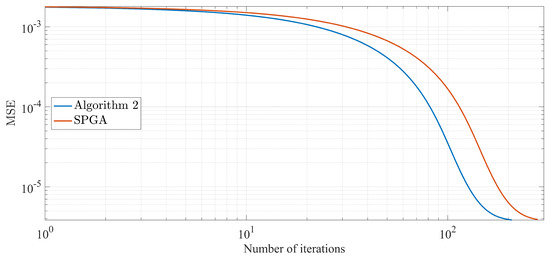

In our experiment, we set the hits of a signal . The matrix was generated from a normal distribution with mean zero and unit variance. The observation y was corrupted by Gaussian noise with mean 0 and variance . We compared our Algorithm 2 with SPGA [12]. Let and in both Algorithm 2 and SPGA. The experiment was initialized by and terminated when The restoration accuracy was measured by the mean squared error: MSE = where is an estimated signal of All codes were written in Matlab 2016b and run on a Dell Core i5 laptop. We present the numerical comparison of the results in Figures 1 to 6.
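An experiment of this kind can be reproduced in outline with the sketch below. It is not the authors' Matlab code: it runs the basic forward-backward (ISTA) iteration rather than Algorithm 2 or SPGA, and the signal length `n`, number of measurements `m`, number of spikes, noise level, regularization weight `lam` and iteration count are all assumed values chosen only for illustration. The mean squared error is taken as the average squared entrywise difference between the estimate and the true signal.

```python
import numpy as np

def soft_threshold(v, tau):
    """Shrinkage operator applied entrywise."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def ista(A, y, lam, steps):
    """Basic forward-backward iteration for 0.5 * ||A x - y||^2 + lam * ||x||_1."""
    gamma = 1.0 / np.linalg.norm(A, 2) ** 2   # step size 1 / ||A||^2
    x = np.zeros(A.shape[1])
    for _ in range(steps):
        x = soft_threshold(x - gamma * (A.T @ (A @ x - y)), gamma * lam)
    return x

def mse(estimate, truth):
    """Mean squared error between an estimated signal and the true signal."""
    return float(np.mean((estimate - truth) ** 2))

rng = np.random.default_rng(0)
n, m, spikes = 128, 64, 5                     # assumed problem sizes

# Sparse signal with a few +/-1 spikes at random positions.
x_true = np.zeros(n)
support = rng.choice(n, size=spikes, replace=False)
x_true[support] = rng.choice([-1.0, 1.0], size=spikes)

A = rng.normal(size=(m, n))                   # Gaussian matrix, mean 0, variance 1
y = A @ x_true + rng.normal(scale=0.01, size=m)  # noisy observation

x_hat = ista(A, y, lam=1.0, steps=3000)
mse_initial = mse(np.zeros(n), x_true)
mse_final = mse(x_hat, x_true)
print(f"MSE at start: {mse_initial:.3e}, MSE after ISTA: {mse_final:.3e}")
```

Swapping the body of the loop for a different update rule is enough to compare iterative schemes on the same data, which is the structure of the comparison reported in the figures.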

Figure 1.

From top to bottom: Original signal, observation data, recovered signal by Algorithm 2 and SPGA with and 10 spikes, respectively.

Figure 2.

Comparison MSE of two algorithms for recovered signal with and 10 spikes, respectively.

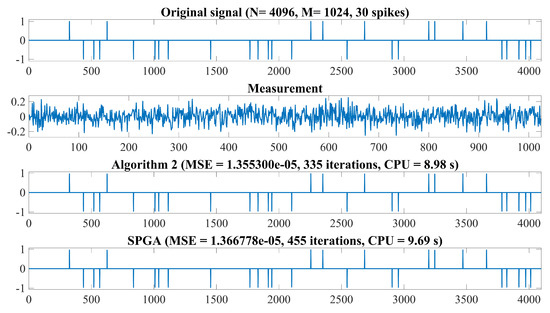

Figure 3.

From top to bottom: Original signal, observation data, recovered signal by Algorithm 2 and SPGA with and 30 spikes, respectively.

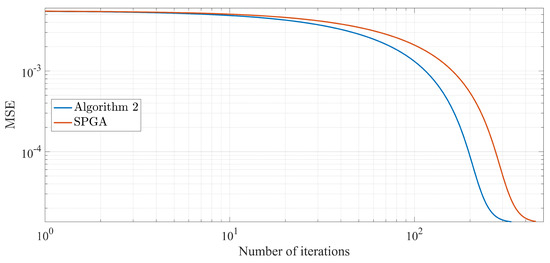

Figure 4.

Comparison MSE of two algorithms for recovered signal with and 30 spikes, respectively.

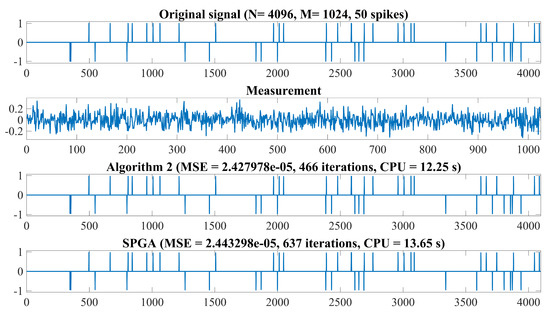

Figure 5.

From top to bottom: Original signal, observation data, recovered signal by Algorithm 2 and SPGA with and 50 spikes, respectively.

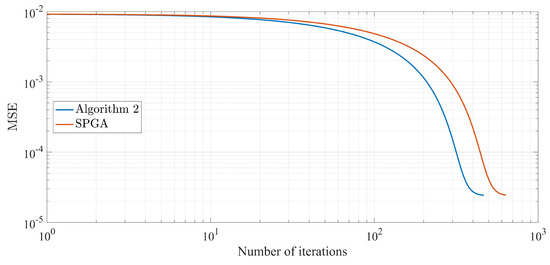

Figure 6.

Comparison MSE of two algorithms for recovered signal with and 50 spikes, respectively.

5. Conclusions

In this work, we introduce a modified iterative scheme in Banach spaces and apply it to common zeros of accretive operators, convexly constrained least square problems, convex minimization problems and signal processing. In the case of signal processing, the forward-backward method in Algorithm 2 is compared with SPGA, as proposed in [12]. The numerical results show that Algorithm 2 has a better convergence behavior than SPGA when using the same step sizes for both.

Author Contributions

Writing original draft, A.P. and P.S.; data analysis, A.P. and P.S.; formal analysis and methodology, A.P. and P.S.

Funding

This research was funded by Rajamangala University of Technology Thanyaburi (RMUTT).

Acknowledgments

The first author thanks Rambhai Barni Rajabhat University for the support. Pakeeta Sukprasert was financially supported by Rajamangala University of Technology Thanyaburi (RMUTT).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Symbols | Display |

| lower semicontinuous, convex | |

| the set of all bounded and linear operators from into itself | |

| real uniformly convex |

References

- Kankam, K.; Pholasa, N.; Cholamjiak, P. On convergence and complexity of the modified forward–backward method involving new linesearches for convex minimization. Math. Meth. Appl. Sci. 2019, 42, 1352–1362.

- Candès, E.J.; Wakin, M.B. An introduction to compressive sampling. IEEE Signal Process. Mag. 2008, 25, 21–30.

- Suantai, S.; Kesornprom, S.; Cholamjiak, P. A new hybrid CQ algorithm for the split feasibility problem in Hilbert spaces and Its applications to compressed Sensing. Mathematics 2019, 7, 789.

- Kitkuan, D.; Kumam, P.; Padcharoen, A.; Kumam, W.; Thounthong, P. Algorithms for zeros of two accretive operators for solving convex minimization problems and its application to image restoration problems. J. Comput. Appl. Math. 2019, 354, 471–495.

- Padcharoen, A.; Kumam, P.; Cho, Y.J. Split common fixed point problems for demicontractive operators. Numer. Algorithms 2019, 82, 297–320.

- Cholamjiak, P.; Shehu, Y. Inertial forward-backward splitting method in Banach spaces with application to compressed sensing. Appl. Math. 2019, 64, 409–435.

- Jirakitpuwapat, W.; Kumam, P.; Cho, Y.J.; Sitthithakerngkiet, K. A general algorithm for the split common fixed point problem with its applications to signal processing. Mathematics 2019, 7, 226.

- Combettes, P.L.; Wajs, V.R. Signal recovery by proximal forward–backward splitting. Multiscale Model Simul. 2005, 4, 1168–1200.

- Picard, E. Mémoire sur la théorie des équations aux dérivées partielles et la méthode des approximations successives. J. Math Pures Appl. 1890, 231, 145–210.

- Mann, W.R. Mean value methods in iteration. Proc. Am. Math. Soc. 1953, 4, 506–510.

- Ishikawa, S. Fixed points by a new iteration method. Proc. Am. Math. Soc. 1974, 44, 147–150.

- Agarwal, R.P.; O’Regan, D.; Sahu, D.R. Iterative construction of fixed points of nearly asymptotically nonexpansive mappings. J. Nonlinear Convex Anal. 2007, 8, 61–79.

- Sahu, V.K.; Pathak, H.K.; Tiwari, R. Convergence theorems for new iteration scheme and comparison results. Aligarh Bull. Math. 2016, 35, 19–42.

- Thakur, B.S.; Thakur, D.; Postolache, M. New iteration scheme for approximating fixed point of non-expansive mappings. Filomat 2016, 30, 2711–2720.

- Chang, S.S.; Wen, C.F.; Yao, J.C. Zero point problem of accretive operators in Banach spaces. Bull. Malays. Math. Sci. Soc. 2019, 42, 105–118.

- Browder, F.E. Nonlinear mappings of nonexpansive and accretive type in Banach spaces. Bull. Am. Math. Soc. 1967, 73, 875–882.

- Browder, F.E. Semicontractive and semiaccretive nonlinear mappings in Banach spaces. Bull. Am. Math. Soc. 1968, 7, 660–665.

- Cioranescu, I. Geometry of Banach Spaces, Duality Mapping and Nonlinear Problems; Kluwer: Amsterdam, The Netherlands, 1990.

- Takahashi, W. Nonlinear Functional Analysis, Fixed Point Theory and Its Applications; Yokohama Publishers: Yokohama, Japan, 2000.

- Rockafellar, R.T. On the maximal monotonicity of subdifferential mappings. Pac. J. Math. 1970, 33, 209–216.

- Xu, H.K. Inequalities in Banach spaces with applications. Nonlinear Anal. 1991, 16, 1127–1138.

- Opial, Z. Weak convergence of the sequence of successive approximations for nonexpansive mappings. Bull. Am. Math. Soc. 1967, 73, 591–597.

- Sahu, D.R.; Pitea, A.; Verma, M. A new iteration technique for nonlinear operators as concerns convex programming and feasibility problems. Numer. Algorithms 2019.

- Goebel, K.; Reich, S. Uniform Convexity, Hyperbolic Geometry and Non Expansive Mappings; Marcel Dekker: New York, NY, USA; Basel, Switzerland, 1984.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).