Abstract

In this article, we consider an inverse problem of determining an unknown source term in a space-time-fractional diffusion equation. Inverse problems are often ill-posed. Using an example, we show that this problem is not well-posed in the Hadamard sense. It violates the last Hadamard condition, which requires the solution to depend continuously on the input data. This motivates a regularization model for the problem. We use the Tikhonov method to solve it. On the theoretical side, we also propose a priori and a posteriori parameter choice rules and analyze them.

Keywords:

fractional diffusion-wave equation; fractional derivative; ill-posed problem; Tikhonov regularization method

MSC:

26A33; 35K05; 35R11; 35K99; 47H10

1. Introduction

Let $\Omega$ be a bounded domain in $\mathbb{R}^d$ with sufficiently smooth boundary $\partial\Omega$. In this paper, we consider the inverse source problem for the time-fractional diffusion-wave equation:

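where $\partial_t^{\alpha}$ is the Caputo fractional derivative of order $\alpha \in (1,2)$, defined as []

$$\partial_t^{\alpha} u(x,t) = \frac{1}{\Gamma(2-\alpha)} \int_0^t (t-s)^{1-\alpha}\, \frac{\partial^2 u(x,s)}{\partial s^2}\, ds,$$

where $\Gamma$ is the Gamma function.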
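As a numerical sanity check on this definition, the following Python sketch evaluates a Caputo derivative of order $\alpha \in (1,2)$ by product integration. It assumes the standard form $\frac{1}{\Gamma(2-\alpha)}\int_0^t (t-s)^{1-\alpha} u''(s)\,ds$. On each subinterval, $u''$ is replaced by a central difference at the midpoint, and the weakly singular kernel is integrated exactly. The function name `caputo_derivative` and the default step count are illustrative choices and are not taken from the paper.

```python
import math
from typing import Callable


def caputo_derivative(u: Callable[[float], float], t: float, alpha: float,
                      n: int = 1000) -> float:
    """Approximate the Caputo derivative of order alpha in (1, 2) at time t.

    Uses D^alpha u(t) = 1/Gamma(2 - alpha) * int_0^t (t - s)^(1 - alpha) u''(s) ds.
    On each of the n subintervals, u'' is replaced by a central difference at
    the midpoint, and the kernel (t - s)^(1 - alpha) is integrated exactly.
    """
    if not 1.0 < alpha < 2.0:
        raise ValueError("alpha must lie in (1, 2)")
    if t <= 0.0:
        return 0.0
    h = t / n
    p = 2.0 - alpha            # exponent of the antiderivative of the kernel
    half = 0.5 * h
    total = 0.0
    for j in range(n):
        s_left = j * h
        s_right = s_left + h
        mid = s_left + half
        # central difference for u''(mid) using the nodes s_left, mid, s_right
        u2 = (u(mid + half) - 2.0 * u(mid) + u(mid - half)) / (half * half)
        # exact integral of (t - s)^(1 - alpha) over [s_left, s_right];
        # max(..., 0) guards against a tiny negative value from rounding
        a = max(t - s_left, 0.0)
        b = max(t - s_right, 0.0)
        total += (a ** p - b ** p) / p * u2
    return total / math.gamma(2.0 - alpha)
```

For $u(t) = t^2$ the exact value is $2t^{2-\alpha}/\Gamma(3-\alpha)$. The scheme reproduces it up to rounding, because the central difference is exact for quadratics. For general smooth $u$ the error decreases as `n` grows.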

It is known that the inverse source problem mentioned above is ill-posed in general. A solution does not always exist, and when a solution does exist, it does not depend continuously on the given data. In practice, the data come from physical measurements and are therefore contaminated by observation noise of some small order.

In this problem, the functions , , and are given data. It is well known that even when the noise levels are small, the sought solution may have a large error. The inverse source problem is to find the source from the noisy data satisfying (3), where $\|\cdot\|$ denotes the norm.

Hence, regularization is required. Inverse source problems for time-fractional diffusion equations with $0 < \alpha < 1$ have been studied. Tuan et al. [] used the Tikhonov regularization method to solve the inverse source problem with later-time data. They derived estimates between the exact and regularized solutions under a priori and a posteriori parameter choice rules. Wei et al. [,,] studied an inverse source problem for the time-fractional diffusion equation using quasi-boundary value and truncation methods. Fan Yang et al. [] used the Landweber iteration regularization method to determine the unknown source for the modified Helmholtz equation. Salih Tatar et al. [] used the analytic Fredholm theorem to prove that the inverse source problem is well-posed: the source can be determined uniquely and depends continuously on the additional data. See also [,], where the authors studied a nonlocal inverse source problem in one-dimensional space-time and proposed a numerical algorithm. Furthermore, backward problems for the diffusion-wave equation remain open and continue to receive attention. In 2017, Tuan et al. [] considered

where ; is a parameter; is a given function; ; are the fractional orders of the time and space derivatives, respectively; and is the final time. The function denotes the concentration of a contaminant at position x and time t, and is the fractional Laplacian. As tends to 2, the fractional Laplacian tends to the standard Laplacian; see [,,,,,,,,,,,]. In this paper, we use the fractional Tikhonov regularization method to identify the source term in the inverse source problem for the fractional diffusion-wave equation with variable coefficients in a general bounded domain. The fractional Tikhonov method itself is not new: in [], Zhi Quan and Xiao Li Feng used it to solve a Cauchy problem for the Helmholtz equation. Here, we assume an a priori bound on the exact solution. Under this bound, we derive one convergence rate for an a priori parameter choice rule and another for an a posteriori parameter choice rule.

Many authors have shown that the fractional diffusion-wave equation plays an important role in describing physical phenomena, such as diffusion processes in media with fractal geometry; see []. Fractional calculus is receiving increasing attention in the scientific community. Its applications are growing in physics, electrochemistry, biophysics, viscoelasticity, biomedicine, control theory, signal processing, and other fields; see []. The Mittag–Leffler function and its properties have been studied in many papers, and the results have been used to model various physical phenomena; see [,].

The rest of this article is organized as follows. In Section 2, we introduce some preliminary results. The ill-posedness of the inverse source problem (1) and a conditional stability estimate are given in Sections 2.1 and 2.2. In Section 3, we propose a fractional Tikhonov regularization method. Under an a priori assumption on the exact solution, we give two convergence estimates for two regularization parameter choice rules: an a priori choice in Section 4 and an a posteriori choice in Section 5. A numerical example is presented in Section 6, and conclusions are drawn in Section 7.

2. Preliminary Results

In this section, we introduce a few properties of the eigenvalues of the operator , see [].

Definition 1

(Eigenvalues of the Laplacian operator).

- 1.

- Each eigenvalue of is real. The eigenvalues satisfy $0 < \lambda_1 \le \lambda_2 \le \cdots \le \lambda_n \le \cdots$ and $\lambda_n \to \infty$ as $n \to \infty$.

- 2.

- We take the eigenvalues and corresponding eigenvectors of the fractional Laplacian operator in Ω with Dirichlet boundary conditions on : for . Then, we define the operator by , which maps into . Let . By , we denote the space of all functions with the property , where . We also define . If , then is

Definition 2

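(See []). The Mittag–Leffler function is

$$E_{\alpha,\beta}(z) = \sum_{k=0}^{\infty} \frac{z^k}{\Gamma(\alpha k + \beta)}, \quad z \in \mathbb{C},$$

where $\alpha > 0$ and $\beta \in \mathbb{R}$ are arbitrary constants.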
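When $|z|$ is moderate, the series can be evaluated directly. The sketch below is restricted to real $z$, $\alpha > 0$ and $\beta > 0$; these restrictions belong to this illustration, not to the paper. It sums the series in log space so that the Gamma function does not overflow. For large negative arguments the alternating series loses accuracy through cancellation. In that regime a dedicated algorithm, such as those of Hilfer and Seybold cited in the references, is preferable. The name `mittag_leffler` is illustrative.

```python
import math


def mittag_leffler(alpha: float, beta: float, z: float,
                   tol: float = 1e-16, max_terms: int = 2000) -> float:
    """Truncated power series E_{alpha,beta}(z) = sum_k z^k / Gamma(alpha*k + beta).

    Restricted to real z, alpha > 0 and beta > 0 so that every Gamma argument
    is positive. Adequate for moderate |z| only: for large negative z the
    alternating series suffers from catastrophic cancellation.
    """
    if alpha <= 0.0 or beta <= 0.0:
        raise ValueError("this sketch requires alpha > 0 and beta > 0")
    if z == 0.0:
        return 1.0 / math.gamma(beta)
    log_abs_z = math.log(abs(z))
    total = 0.0
    prev_log_term = math.inf
    for k in range(max_terms):
        # log of |z|^k / Gamma(alpha*k + beta), computed in log space
        log_term = k * log_abs_z - math.lgamma(alpha * k + beta)
        term = math.exp(log_term)
        if z < 0.0 and k % 2 == 1:
            term = -term
        total += term
        # log_term is concave in k, so once it decreases it keeps decreasing
        if log_term < prev_log_term and abs(term) < tol * max(1.0, abs(total)):
            break
        prev_log_term = log_term
    return total
```

The identities $E_{1,1}(z) = e^z$, $E_{2,1}(-x^2) = \cos x$ and $E_{1,2}(z) = (e^z - 1)/z$ give quick checks of such an implementation.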

Lemma 1

(See []). For , , and , we get

Lemma 2

(See []). If and , suppose ζ satisfies , . Then there exists a constant such that

Lemma 3

(See []). The following equality holds for , and

Lemma 4.

For , , and positive integer , we have

Lemma 5.

For any satisfying , there exist positive constants such that

Lemma 6

(See []). Let . Then we have

where

Lemma 7.

For constant and , one has

where are independent of .

Proof.

Let . Solving the equation , we find that there exists a unique , which gives

□

Lemma 8.

For constants and , we have

Proof.

- If , then from , we get

- If , then it can be seen that . Taking the derivative of with respect to , we obtain

From (16), a simple transformation gives

The function attains its maximum value at , which satisfies . Solving , we obtain .

Hence, we conclude

□

Lemma 9.

Let and , and let be the function defined by

where and .

Proof.

- If , then for we know that

- If , then we have , and hence . Taking the derivative of with respect to , we obtain . The function attains its maximum at , where , which satisfies . Solving , we obtain ; then we have

This completes the proof of Lemma 9. Our main results are described in the following theorem. □

We now use separation of variables to obtain the solution of (1). Suppose that the solution of (1) is given by the Fourier series

That is, problem (1) has a solution of the form . Then is the solution of the following fractional ordinary differential equation with initial conditions:

Following Sakamoto and Yamamoto [], the solution of the initial value problem (22) is given by

Hence, we get

Letting , we obtain

From (25) and the final condition , we get

Denoting , , , and , and using a simple transformation, we have

Then, we obtain the formula for the source function

where .

In the following theorem, we establish the uniqueness of the solution of the inverse source problem.

Theorem 1.

The solution pair of problem (1) is unique.

Proof.

Let and be the source functions corresponding to the final values and , given by (27) and (28), respectively, where

Suppose that , , and . We prove that . Indeed, using the inequality , we get

From (30), we see that if the right-hand side tends to 0, then . Therefore, we have . This completes the proof. □

2.1. The Ill-Posedness of the Inverse Source Problem

Theorem 2.

The inverse source problem is ill-posed.

Define a linear operator as follows:

where is the kernel

We use the fractional Tikhonov regularization method to regularize the problem, where is an orthogonal basis in and

Proof.

Since , the operator is self-adjoint. We now prove that it is compact. Define the finite-rank operators as follows:

Then, from (31), (34), and Lemma 5, we have

Therefore, the finite-rank operators converge to in the operator norm on as . Consequently, is a compact self-adjoint operator, and it admits an orthonormal eigenbasis in . From (31), the inverse source problem introduced above can be formulated as the operator equation

and, by Kirsch [], we conclude that it is ill-posed. To illustrate the ill-posedness, we present an example. We fix and choose the input data

Let us choose other input data . By (28), the source term corresponding to is . The error in norm between the two input final data is

with B as defined in Lemma 5. Therefore,

The error in norm between the two corresponding source terms is

From (41) and using the inequality in Lemma 5, we obtain

From (42), we have
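The mechanism behind this example can be seen in coefficient space. The sketch below assumes, hypothetically, that the source coefficients are recovered from the final-data coefficients as $f_n = g_n / d_n$ with singular values $d_n = 1/n^2$. This choice is a stand-in for a forward operator whose singular values decay like $1/\lambda_n$ with $\lambda_n = n^2$; it is not the kernel of (31). Perturbing the $k$-th data coefficient by $1/k$ gives a data error of $1/k$ but a source error of $k$. The data error therefore tends to zero while the source error blows up. The names `d` and `perturbation_errors` are illustrative.

```python
import math


def d(n: int) -> float:
    """Hypothetical singular values of the forward operator, decaying like 1/n^2."""
    return 1.0 / n ** 2


def perturbation_errors(k: int, n_modes: int) -> tuple[float, float]:
    """Perturb the k-th final-data coefficient by 1/k and return
    (data error, source error) in the l2 norm of the coefficients,
    which equals the function norm by Parseval's identity."""
    data_pert = [0.0] * n_modes
    data_pert[k - 1] = 1.0 / k
    # the source coefficients depend linearly on the data: f_n = g_n / d_n
    source_pert = [data_pert[n - 1] / d(n) for n in range(1, n_modes + 1)]
    data_err = math.sqrt(sum(x * x for x in data_pert))
    source_err = math.sqrt(sum(x * x for x in source_pert))
    return data_err, source_err


for k in (1, 10, 100, 1000):
    data_err, source_err = perturbation_errors(k, n_modes=1000)
    print(f"k={k:5d}  data error={data_err:.1e}  source error={source_err:.1e}")
```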

2.2. Conditional Stability of Source Term

In this section, we establish a conditional stability estimate for the source function .

Theorem 3.

If for , then

3. Regularization of the Inverse Source Problem for the Time-Fractional Diffusion-Wave Equation by the Fractional Tikhonov Method

As mentioned above, we solve the inverse source problem with the fractional Tikhonov regularization method. The method is based on the singular value decomposition (33) of the compact self-adjoint operator . If the measured data and , with noise level , satisfy

then we define the regularized solution as follows:

where is the regularization parameter.

where
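A minimal coefficient-space sketch of a fractional Tikhonov reconstruction follows. The explicit formula for the regularized solution is not reproduced here. The sketch therefore assumes one common form of the fractional Tikhonov filter, $F_\mu(d_n) = \big(d_n^2/(d_n^2+\mu)\big)^{\gamma}$ with $1/2 \le \gamma \le 1$, applied as $f_n^{\mu} = F_\mu(d_n)\, g_n^{\varepsilon}/d_n$; $\gamma = 1$ gives classical Tikhonov. The following are all hypothetical: the singular values $d_n = 1/n^2$, the exact coefficients $f_n = 1/n^2$, the noise pattern, the choice $\mu = \varepsilon$, and the names `fractional_tikhonov`, `mu` and `gamma`.

```python
import math
from typing import Sequence


def fractional_tikhonov(g: Sequence[float], d: Sequence[float],
                        mu: float, gamma: float) -> list[float]:
    """Regularized coefficients f_n = (d_n^2 / (d_n^2 + mu))^gamma * g_n / d_n.

    g     : data coefficients (possibly noisy) in the eigenbasis
    d     : positive singular values of the forward operator
    mu    : regularization parameter, mu >= 0 (mu = 0 gives the naive inverse)
    gamma : fractional order in [1/2, 1]; gamma = 1 is classical Tikhonov
    """
    if not 0.5 <= gamma <= 1.0:
        raise ValueError("gamma must lie in [1/2, 1]")
    out = []
    for g_n, d_n in zip(g, d):
        filt = (d_n * d_n / (d_n * d_n + mu)) ** gamma   # filter factor in (0, 1]
        out.append(filt * g_n / d_n)
    return out


def l2_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance of coefficient vectors (an L2 distance by Parseval)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Hypothetical test problem: d_n = 1/n^2 and exact source coefficients f_n = 1/n^2.
N = 200
d = [1.0 / n ** 2 for n in range(1, N + 1)]
f_exact = [1.0 / n ** 2 for n in range(1, N + 1)]
g_exact = [dn * fn for dn, fn in zip(d, f_exact)]
eps = 1e-3
# deterministic noise with l2 norm eps, spread evenly over all modes
g_noisy = [gn + eps * (-1) ** n / math.sqrt(N) for n, gn in enumerate(g_exact)]

naive = [gn / dn for gn, dn in zip(g_noisy, d)]
# mu = eps is an arbitrary illustrative choice, not a parameter choice rule
regularized = fractional_tikhonov(g_noisy, d, mu=eps, gamma=0.8)
print("naive error      :", l2_dist(naive, f_exact))
print("regularized error:", l2_dist(regularized, f_exact))
```

Where $d_n^2 \gg \mu$ the filter is close to 1, so well-determined modes are kept. Where $d_n^2 \ll \mu$ the amplification factor $F_\mu(d_n)/d_n = d_n^{2\gamma-1}/(d_n^2+\mu)^{\gamma}$ stays bounded because $2\gamma - 1 \ge 0$. This removes the $1/d_n$ growth of high-frequency noise that makes the naive inversion useless.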

4. A Priori Parameter Choice

We now estimate the error of and derive a convergence rate under a suitable choice of the regularization parameter.

Theorem 4.

- If , since we have

- If , by choosing we have

where

Proof.

By the triangle inequality, we have

The proof falls naturally into two steps.

Step 1: Estimate of . We obtain

Combining (50), (51), and Lemma 5, it is easily seen that . From (58), applying the inequality and Lemma 7, we obtain

Applying Lemma 1 to the above, we obtain

Therefore, we conclude that

where

□

Step 2: We next estimate . From (28), (50), and Parseval's equality, we get

From (63), we obtain the following estimate for

Hence, is estimated as

Next, using Lemmas 5 and 8, we continue to estimate . Indeed, we get

Next, combining the above two inequalities, we obtain

Choose the regularization parameter as follows:

Hence, we conclude that

Case 1: If , since we have

Case 2: If , since we have
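An a priori rule makes the regularization parameter depend only on the noise level, and its effect can be illustrated numerically. The sketch uses classical Tikhonov ($\gamma = 1$) in coefficient space with a hypothetical test problem ($d_n = f_n = 1/n^2$). It also uses the hypothetical rule $\mu = \varepsilon^{p}$ with $p = 1$, which does not reproduce the exponent of the paper's parameter choice. Since $d/(d^2+\mu) \le 1/(2\sqrt{\mu})$, the propagated noise under this rule is at most $\varepsilon/(2\sqrt{\mu}) = \sqrt{\varepsilon}/2$. The regularization bias also vanishes as $\mu \to 0$, so the total error should decrease with $\varepsilon$. The function names are illustrative.

```python
import math
from typing import Sequence


def tikhonov_coeffs(g: Sequence[float], d: Sequence[float], mu: float) -> list[float]:
    """Classical Tikhonov (gamma = 1) in coefficient space: f_n = d_n g_n / (d_n^2 + mu)."""
    return [dn * gn / (dn * dn + mu) for gn, dn in zip(g, d)]


def error_for_noise_level(eps: float, p: float = 1.0, N: int = 400) -> float:
    """Reconstruction error for the hypothetical a priori rule mu = eps**p."""
    d = [1.0 / n ** 2 for n in range(1, N + 1)]          # hypothetical singular values
    f_exact = [1.0 / n ** 2 for n in range(1, N + 1)]    # hypothetical exact source
    # exact data d_n f_n plus deterministic noise of l2 norm eps
    g_noisy = [dn * fn + eps * (-1) ** n / math.sqrt(N)
               for n, (dn, fn) in enumerate(zip(d, f_exact))]
    f_reg = tikhonov_coeffs(g_noisy, d, mu=eps ** p)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(f_reg, f_exact)))


for eps in (1e-2, 1e-3, 1e-4, 1e-5):
    print(f"eps = {eps:.0e}   error = {error_for_noise_level(eps):.3e}")
```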

5. A Posteriori Parameter Choice

In this section, we consider an a posteriori choice of the regularization parameter based on Morozov’s discrepancy principle (see []). We use the discrepancy principle in the following form:

where , , and is the regularization parameter.

Lemma 10.

Let

If , then the following results hold:

- (a)

- is a continuous function;

- (b)

- as ;

- (c)

- as ;

- (d)

- is a strictly increasing function.
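Properties (a) and (d) make the discrepancy equation easy to solve numerically. A continuous, strictly increasing residual crosses a target value at most once, so once the target is bracketed, bisection on $\log\mu$ finds the parameter. The sketch makes three assumptions, none of which is the paper's exact formula. It uses the equation $\|K f_\mu^\varepsilon - g^\varepsilon\| = \tau\varepsilon$ with a hypothetical constant $\tau = 1.1$. It uses the fractional Tikhonov filter $F_\mu(d_n) = \big(d_n^2/(d_n^2+\mu)\big)^\gamma$. It writes the residual in coefficient space as $\big(\sum_n (1 - F_\mu(d_n))^2 (g_n^\varepsilon)^2\big)^{1/2}$. The function names and the test data are illustrative.

```python
import math
from typing import Sequence


def residual(mu: float, g: Sequence[float], d: Sequence[float],
             gamma: float = 1.0) -> float:
    """Discrepancy ||K f_mu - g|| in coefficient space.

    With f_mu = F(d_n) g_n / d_n and K acting as multiplication by d_n,
    K f_mu - g has coefficients (F(d_n) - 1) g_n, where
    F(d) = (d^2 / (d^2 + mu))^gamma is the assumed fractional Tikhonov filter.
    """
    total = 0.0
    for g_n, d_n in zip(g, d):
        filt = (d_n * d_n / (d_n * d_n + mu)) ** gamma
        total += ((1.0 - filt) * g_n) ** 2
    return math.sqrt(total)


def discrepancy_mu(g: Sequence[float], d: Sequence[float], eps: float,
                   tau: float = 1.1, gamma: float = 1.0,
                   lo: float = 1e-16, hi: float = 1e4, iters: int = 200) -> float:
    """Solve residual(mu) = tau * eps by bisection on log(mu).

    The residual is continuous and increasing in mu, so a root exists in
    [lo, hi] as soon as residual(lo) < tau * eps < residual(hi).
    """
    target = tau * eps
    if not residual(lo, g, d, gamma) < target < residual(hi, g, d, gamma):
        raise ValueError("tau * eps is not bracketed by the residual on [lo, hi]")
    log_lo, log_hi = math.log(lo), math.log(hi)
    for _ in range(iters):
        log_mid = 0.5 * (log_lo + log_hi)
        if residual(math.exp(log_mid), g, d, gamma) < target:
            log_lo = log_mid   # residual too small: increase mu
        else:
            log_hi = log_mid   # residual too large: decrease mu
    return math.exp(0.5 * (log_lo + log_hi))


# Hypothetical data: d_n = 1/n^2, exact source f_n = 1/n^2, so g_n = d_n * f_n,
# plus deterministic noise of l2 norm eps.
N = 200
d = [1.0 / n ** 2 for n in range(1, N + 1)]
eps = 1e-3
g_noisy = [dn * dn + eps * (-1) ** n / math.sqrt(N) for n, dn in enumerate(d)]
mu_star = discrepancy_mu(g_noisy, d, eps)
print(f"mu = {mu_star:.3e}, residual = {residual(mu_star, g_noisy, d):.3e}")
```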

Lemma 11.

Proof.

Step 1: First, we estimate the error between and . Indeed, using the inequality , we get

Step 2: Using the inequality , we obtain the following estimate

From (76), we get

where

From (78), we get

From (79), using Lemma 9, we have

Therefore, combining (77)–(79), we obtain

From (80), it is easy to see that

So,

which gives the required result. The estimate of is established in the next theorem. □

Theorem 5.

Assume that the a priori condition and the noise assumption hold, and that there exists such that . Then the following convergence estimates between the exact solution and the regularized solution hold:

- If , we have the convergence estimate

- If , we have the convergence estimate

where

Proof.

Applying the triangle inequality, we get

Case 1: If . First, we recall the estimate (61) and, by Lemma 9 Part (a), we have

Next, we estimate . From (28), (50), and Parseval's equality, we get

Using Hölder's inequality, we obtain

Next, using the a priori condition, we have

Case 2: Our next goal is to estimate when . We get

Next, for , we get

From (96), repeated application of Lemma 11 Part (b) enables us to write , and it is easy to check that

In the same way as in , it follows easily that ; we now proceed by induction

This completes the proof. □

6. Simulation Example

In this section, we present an example to illustrate the theory. To this end, we consider the following problem:

where the Caputo fractional derivative of order is defined as

where $\Gamma$ is the Gamma function.

We choose the operator on the domain with the Dirichlet boundary condition for ; its eigenvalues and corresponding eigenvectors are given by and , respectively.

We consider the following assumptions:

In this example, we choose the following solution

Before presenting the main results of this section, we describe the numerical approximation methods used.

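- Composite Simpson’s rule: Suppose that the interval $[a,b]$ is split into $n$ subintervals, with $n$ an even number. Then the composite Simpson’s rule is given by

$$\int_a^b f(x)\,dx \approx \frac{h}{3}\left[f(x_0) + 4\sum_{j=1}^{n/2} f(x_{2j-1}) + 2\sum_{j=1}^{n/2-1} f(x_{2j}) + f(x_n)\right],$$

where $x_j = a + jh$ for $j = 0, 1, \ldots, n$ with $h = (b-a)/n$; in particular, $x_0 = a$ and $x_n = b$.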

- For two given positive integers , we use the finite difference method to discretize the time and space variables for as follows:

- Explicit forward Euler method: Let . Then the finite difference approximations are given by
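The composite Simpson’s rule in the first item above can be sketched in Python as follows. The nodes follow the convention $x_j = a + jh$ with $h = (b-a)/n$ for even $n$. The function name `composite_simpson` is illustrative.

```python
import math
from typing import Callable


def composite_simpson(f: Callable[[float], float], a: float, b: float, n: int) -> float:
    """Composite Simpson's rule on [a, b] with n (even) subintervals, x_j = a + j*h."""
    if n <= 0 or n % 2 != 0:
        raise ValueError("n must be a positive even integer")
    h = (b - a) / n
    odd = sum(f(a + j * h) for j in range(1, n, 2))    # interior odd nodes, weight 4
    even = sum(f(a + j * h) for j in range(2, n, 2))   # interior even nodes, weight 2
    return h / 3.0 * (f(a) + 4.0 * odd + 2.0 * even + f(b))


print(composite_simpson(math.sin, 0.0, math.pi, 100))   # close to the exact value 2
```

Because the rule integrates cubic polynomials exactly, `composite_simpson(lambda x: x**3 - 2*x, 0.0, 2.0, 2)` returns 0.0, matching the exact integral.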

Instead of the exact data , we have approximate data of , i.e., the input data are contaminated by observation noise of order , which satisfies

where, in MATLAB, the function rand generates arrays of random numbers whose elements are uniformly distributed in the interval (0, 1).

The absolute error is defined by

where and .
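The noise model and the error measure can be sketched as follows. The exact formulas are not reproduced here, so the sketch makes two assumptions. First, the noisy data are generated pointwise as $g^\varepsilon(x_k) = g(x_k) + \varepsilon\,(2U_k - 1)$ with $U_k$ uniform on $[0,1)$, a Python analogue of a MATLAB `rand`-based perturbation, so that $|g^\varepsilon(x_k) - g(x_k)| \le \varepsilon$. Second, the error is measured as the discrete root-mean-square difference over the grid points. The grid, the stand-in datum $\sin x$, and the function names are hypothetical.

```python
import math
import random
from typing import Sequence


def add_uniform_noise(values: Sequence[float], eps: float, seed: int = 0) -> list[float]:
    """Perturb each value by eps * (2U - 1) with U uniform on [0, 1) (assumed noise model)."""
    rng = random.Random(seed)   # seeded generator for reproducible noise
    return [v + eps * (2.0 * rng.random() - 1.0) for v in values]


def rms_error(approx: Sequence[float], exact: Sequence[float]) -> float:
    """Discrete root-mean-square error over the grid points (assumed error measure)."""
    if len(approx) != len(exact) or not exact:
        raise ValueError("need two non-empty sequences of equal length")
    return math.sqrt(sum((a - e) ** 2 for a, e in zip(approx, exact)) / len(exact))


# Example on a uniform grid of [0, pi]; sin(x) stands in for a hypothetical exact datum.
grid = [math.pi * k / 100 for k in range(101)]
g_exact = [math.sin(x) for x in grid]
g_noisy = add_uniform_noise(g_exact, eps=1e-2)
print("max perturbation:", max(abs(a - b) for a, b in zip(g_noisy, g_exact)))
print("rms error       :", rms_error(g_noisy, g_exact))
```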

From the above analysis, we present some results as follows.

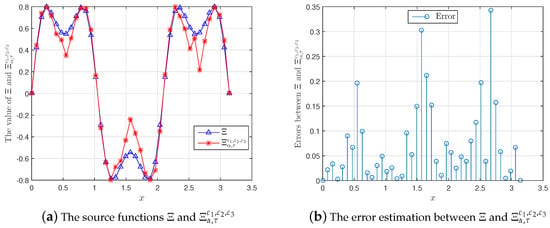

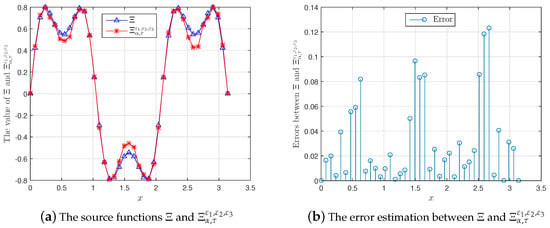

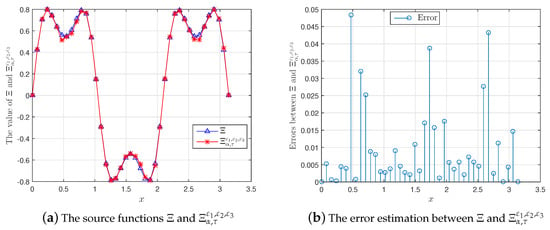

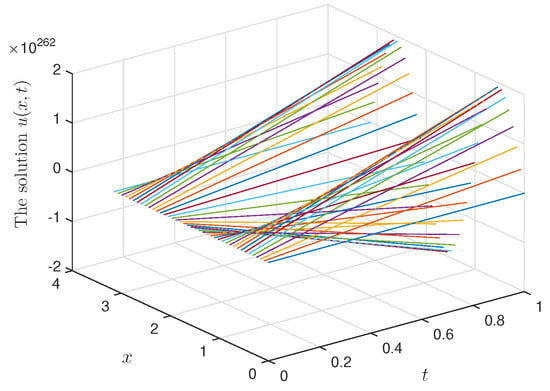

Table 1 shows the errors between and under the a priori and a posteriori parameter choice rules, and it indicates that the approximation is acceptable. Figures 1, 2 and 3 show the source functions for several noise levels in the input data, together with the corresponding errors. Figure 4 shows the solution for and .

Table 1.

Errors between and at with , .

Figure 1.

A comparison between and for , , := , .

Figure 2.

A comparison between and for , , := , .

Figure 3.

A comparison between and for , , := , .

Figure 4.

The solution for .

7. Conclusions

In this study, we use the Tikhonov method to regularize the inverse problem of determining an unknown source term in a space-time-fractional diffusion equation. Using an example, we show that this problem is ill-posed in the sense of Hadamard. Under both a priori and a posteriori parameter choice rules, we derive convergence estimates between the exact solution and the regularized solution. In addition, we present a numerical example to illustrate the proposed regularization.

Author Contributions

Project administration, Y.Z.; Resources, L.D.L.; Methodology, N.H.L.; Writing—review, editing and software, C.N.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Podlubny, I. Fractional Differential Equations. In Mathematics in Science and Engineering; Academic Press Inc.: San Diego, CA, USA, 1999; Volume 198.

- Nguyen, H.T.; Le, D.L.; Nguyen, T.V. Regularized solution of an inverse source problem for a time fractional diffusion equation. Appl. Math. Model. 2016, 40, 8244–8264.

- Wei, T.; Wang, J. A modified quasi-boundary value method for an inverse source problem of the time-fractional diffusion equation. Appl. Numer. Math. 2014, 78, 95–111.

- Wang, J.G.; Zhou, Y.B.; Wei, T. Two regularization methods to identify a space-dependent source for the time-fractional diffusion equation. Appl. Numer. Math. 2013, 68, 39–57.

- Zhang, Z.Q.; Wei, T. Identifying an unknown source in time-fractional diffusion equation by a truncation method. Appl. Math. Comput. 2013, 219, 5972–5983.

- Yang, F.; Liu, X.; Li, X.X. Landweber iterative regularization method for identifying the unknown source of the modified Helmholtz equation. Bound. Value Probl. 2017, 2017, 91.

- Tatar, S.; Ulusoy, S. An inverse source problem for a one-dimensional space-time fractional diffusion equation. Appl. Anal. 2015, 94, 2233–2244.

- Tatar, S.; Tinaztepe, R.; Ulusoy, S. Determination of an unknown source term in a space-time fractional diffusion equation. J. Frac. Calc. Appl. 2015, 6, 83–90.

- Tatar, S.; Tinaztepe, R.; Ulusoy, S. Simultaneous inversion for the exponents of the fractional time and space derivatives in the space-time fractional diffusion equation. Appl. Anal. 2016, 95, 1–23.

- Tuan, N.H.; Long, L.D. Fourier truncation method for an inverse source problem for space-time fractional diffusion equation. Electron. J. Differ. Equ. 2017, 2017, 1–16.

- Lakestani, M.; Dehghan, M. The use of Chebyshev cardinal functions for the solution of a partial differential equation with an unknown time-dependent coefficient subject to an extra measurement. J. Comput. Appl. Math. 2010, 235, 669–678.

- Pollard, H. The completely monotonic character of the Mittag-Leffler function Eα(-x). Bull. Am. Math. Soc. 1948, 54, 1115–1116.

- Yang, M.; Liu, J.J. Solving a final value fractional diffusion problem by boundary condition regularization. Appl. Numer. Math. 2013, 66, 45–58.

- Kilbas, A.A.; Srivastava, H.M.; Trujillo, J.J. Theory and Applications of Fractional Differential Equations; Elsevier Science Limited: Amsterdam, The Netherlands, 2006.

- Luchko, Y. Initial-boundary-value problems for the one-dimensional time-fractional diffusion equation. Fract. Calc. Appl. Anal. 2012, 15, 141–160.

- Quan, Z.; Feng, X.L. A fractional Tikhonov method for solving a Cauchy problem of Helmholtz equation. Appl. Anal. 2017, 96, 1656–1668.

- Sandev, T.; Tomovski, Ž. The general time fractional wave equation for a vibrating string. J. Phys. A Math. Theor. 2010, 43, 055204.

- Sandev, T.; Metzler, R.; Tomovski, Ž. Fractional diffusion equation with a generalized Riemann–Liouville time fractional derivative. J. Phys. A Math. Theor. 2011, 44, 255203.

- Hilfer, R.; Seybold, H.J. Computation of the generalized Mittag-Leffler function and its inverse in the complex plane. Integral Transforms Spec. Funct. 2006, 17, 637–652.

- Seybold, H.; Hilfer, R. Numerical Algorithm for Calculating the Generalized Mittag-Leffler Function. SIAM J. Numer. Anal. 2008, 47, 69–88.

- Kilbas, A.A.; Srivastava, H.M.; Trujillo, J.J. Theory and Applications of Fractional Differential Equations. In North-Holland Mathematics Studies; Elsevier Science B.V.: Amsterdam, The Netherlands, 2006; Volume 204.

- Sakamoto, K.; Yamamoto, M. Initial value/boundary value problems for fractional diffusion-wave equations and applications to some inverse problems. J. Math. Anal. Appl. 2011, 382, 426–447.

- Kirsch, A. An Introduction to the Mathematical Theory of Inverse Problems; Springer: Berlin, Germany, 1996.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).