Abstract

Style classification, a task in the digital preservation of calligraphy stone inscriptions (an invaluable cultural heritage), faces two prominent challenges: the insufficient feature representation of single-channel rubbings, and the difficulty of effectively capturing the complex strokes and spatial layouts inherent to calligraphic works. To tackle these issues, this paper proposes an efficient deep learning model that integrates the dual-path attention mechanism of the Bottleneck Attention Module (BAM) to achieve accurate and efficient classification of calligraphy styles. With the lightweight network EfficientNet-B2 as its backbone, the model realizes channel-spatial collaborative attention in calligraphy analysis, with the weight of stroke structure features increased to over 85%. Through the synergistic effect of channel attention and spatial attention, the model’s ability to extract stroke structure and spatial layout features from calligraphy images is significantly enhanced. Experimental results on the stratified-sampling dataset show that the model achieves an accuracy of 98.44% on the test set, a recall of 94.80% computed from the confusion matrix, an F1-score of 0.9675, a precision of 0.8690, and a macro-averaged Area Under the Curve (AUC) of 0.9694. To further validate the effectiveness of the BAM and the necessity of its dual-path design, we conducted a systematic ablation study that uses EfficientNet-B2 as the baseline model and sequentially compares the contributions of different attention mechanisms. The results show that the proposed method balances efficiency and performance and holds practical significance in fields such as ancient book authentication and calligraphy research.

Keywords:

calligraphy classification; inscription identification; attention mechanism; EfficientNet; digitalization of cultural heritage

MSC:

68T07; 68T10

1. Introduction

With the accelerating digitization of calligraphy stone inscriptions, the demand for their automatic identification has become increasingly prominent. However, traditional computer vision methods (such as SIFT and HOG features [1]) struggle to capture deep style features in single-channel rubbing images because of the uniformity of ink color, the diversity of brush-tip morphology, and the complexity of paper texture. Therefore, developing a high-precision classification system is of urgent practical significance for the protection of calligraphy heritage.

From the perspective of the overall research context, deep learning technology has become the driving force promoting research in the field of calligraphy. It has shown excellent performance in key tasks such as calligraphy text detection, character recognition and classification, style analysis, and aesthetic evaluation (see Table 1).

In the direction of calligraphy text detection, several studies have proposed solutions to the problem of text extraction in complex scenarios. Mask TextSpotter v3 [2], proposed by Peking University in 2021, achieves an F1 value of 86.3% in curved text detection through the fusion of instance segmentation and semantic segmentation; however, the performance of such methods drops significantly on calligraphy text with severe occlusion, blurring, complex backgrounds, or widely varying styles. For example, rubbings of calligraphy stone inscriptions may exhibit fading, staining, and cracks. Point Gathering Network (PGNet) [3], developed by the Institute of Automation of the Chinese Academy of Sciences in the same period, significantly improves positioning accuracy in scenes such as street-view signboards by means of pixel-level geometric modeling. In addition, Huang et al. [4] replaced the convolutional neural network embedded in the main structure of the Connectionist Text Proposal Network (CTPN) and of Efficient and Accurate Scene Text Detection (EAST) with a Residual Network (ResNet), which offers better feature extraction sensitivity. Their results indicate that the modified EAST with ResNet101 achieves the highest accuracy, and that accuracy benefits from a deeper and wider ResNet. The text segmentation accuracy on ICDAR 2015 is 83.4%, which is 7% higher than that of the original PVANET-EAST, and the text detection accuracy on untrained scanned documents is 83.9%. The method also achieved an accuracy of 86.3% when applied to self-collected Chinese calligraphy. However, general-purpose networks such as ResNet are not designed for calligraphy images and may not optimally capture the subtle structure and stroke characteristics of calligraphy characters. Based on a dual-generator framework of deep adversarial networks, Wang et al. [5] proposed a method for robotic calligraphy reproduction. 
In this model, an encoder-decoder module is employed as one generator for style learning, while a robotic arm serves as the other generator for motion learning. These two generators work together to optimize the network and obtain optimal robotic calligraphy works. The experimental results demonstrate that this method is efficient and applicable in robotic calligraphy, achieving state-of-the-art performance: the average structural similarity index reaches 75.91%, the coverage rate is 70.25%, and the intersection over union (IoU) is 80.68%. Wang et al. [6] proposed a new detector named EMANet. First, they replaced the feature extraction module at the core of EfficientNet-B3 with an improved feature extraction module (MBConv++) to better capture the interdependencies between channels; with this module, the network can focus on the key features in the entire dataset. Second, they designed a Multi-Dimensional Scale Fusion (MDSF) module, which enhances the scale robustness of the network. Finally, they developed a mobile ancient text digitization application suitable for the Internet of Things (IoT). The experimental results show that EMANet achieves an F-measure of 94.9% on the handwritten ancient book dataset, which demonstrates its effectiveness. Qi et al. [7] proposed a deep learning framework for detecting ancient Chinese characters in complex scenarios, with the goal of improving detection accuracy. First, the framework introduces an Ancient Character Haar Wavelet Transform Downsampling Block (ACHaar), which reduces the spatial resolution of feature maps while preserving key features of ancient characters. Second, it incorporates a Glyph Focus Module (GFM), which uses an attention mechanism to enhance the processing of deep semantic information and generates feature maps of ancient characters that emphasize horizontal and vertical features through a four-path parallel strategy. 
To train and validate the model, a dedicated dataset was constructed. The experimental results show that this method outperforms previous text detection methods on the HUSAM-SinoCDCS dataset: the accuracy increases by 1.36% to 92.84%, the recall by 2.24% to 85.61%, and the F1-score by 1.84% to 89.08%.

In the field of calligraphy recognition and classification, research focuses on algorithm improvement and model adaptation to improve recognition robustness in complex scenarios. Huang et al. [8] trained and tested Convolutional Neural Networks (CNNs) on the four main calligraphy fonts of the Tang Dynasty (690–907 AD) and achieved accuracies of 89.5–96.2% in the calligraphy classification task, which highlights the importance of CNNs in font and art style classification. However, the scope of the testing is limited to a specific dynasty or font. Wang et al. [9] proposed a calligraphy recognition method based on an improved EfficientNet network, which efficiently extracts single calligraphy characters and expands the training set through a character box algorithm, and introduces the Convolutional Block Attention Module (CBAM) together with a Self-Attention module during training to strengthen the network’s attention mechanism; however, its structure is relatively complex, which may increase the computational cost of model training and inference. In addition, attention mechanisms such as BAM and CBAM may not fully consider spatial information at different scales in calligraphy characters, and may fail to make full use of contextual information from different receptive fields to enrich the feature space. The Hierarchical Universal Network (HUNet) designed by Wang et al. [10] achieves efficient recognition of different types by means of a parameter-sharing architecture and multi-stage feature extraction and fusion, and obtains better recognition performance than other evaluated efficient models while maintaining high computational throughput. After training on MTACCR, HUNet’s Top-1 accuracy on the MACR test set improved by 9.64%. However, the computational overhead of its parameter-sharing architecture and multi-stage feature extraction and fusion still needs to be considered. 
For special scenarios, Han et al. [11] proposed a crowdsourcing-based expert recognition method that restores severely degraded calligraphy characters through a novel character similarity reasoning (CSI) algorithm, and developed a crowdsourcing repair task system, LanT. The experiment confirmed that CSI can reliably identify experts; however, relying on crowdsourcing and expert identification can be costly and difficult to scale. Zerdoumi et al. [12] focused on offline Arabic text separation and performed well in distinguishing Arabic texts: using Osmani and Arabic scripts based on verses 84 and 117, the method achieved accuracy rates greater than 98.14% and 90.16%, respectively. In addition, to address problems specific to calligraphy characters, Gao et al. [13] proposed a Faster R-CNN-based method for multi-scale and small-object detection that resolves the component segmentation problem caused by partial adhesion and overlap in incoherent handwritten calligraphy characters. The experimental results demonstrate that the method accurately segments adhering and overlapping components. These components could also be retrieved accurately in the retrieval system, where the mean Average Precision of the top 30 retrieval results reached 95.7%; the higher retrieval accuracy indirectly reflects a better segmentation effect. Chen et al. [14] constructed an improved algorithm, YOLOv7-PDM, for regular calligraphy recognition; after training and testing on a self-made square-character single-character dataset, the accuracy of the method in orthographic feature detection reached 94.19%. However, because it was trained and tested on a self-made, style-specific calligraphy dataset, the model may generalize poorly to other styles, to works by different calligraphers, or to different periods. Ye et al. 
[15] proposed two recognition methods: one integrates feature sequence extraction, sequence modeling, and transcription in a unified architecture, and the other is designed to overcome the limitations of traditional algorithms. The recognition accuracy of both methods is 84.70%, and the latter shows higher robustness in multi-style Chinese character recognition. Yan et al. [16] proposed a novel one-shot learning model called SMFNet for Chinese character font recognition. The model was trained and tested on the XIKE-CFS Chinese font style dataset, on which it achieved a recognition accuracy of 97.50%; it also supports one-shot recognition, with an accuracy of 92.84% for this specific task. Zhang et al. [17] proposed an end-to-end Chinese character generation model based on dense blocks and capsule networks. The generator of this model adopts a self-attention mechanism and densely connected blocks, while the discriminator is composed of a capsule network and a fully connected network. To verify the effectiveness of the model, they conducted comparative and ablation experiments on the Yan Zhenqing Zhengwen dataset, the Deng Shiru Wenshuwen dataset, and the Wang Xizhi Yunxing Wenshu dataset. The experimental results show that, relative to the comparison models, this model increases the SSIM by an average of 0.07, decreases the MSE by an average of 1.95, and increases the PSNR by 0.92.

In terms of style analysis and aesthetic evaluation, Sun et al. [18] proposed a Siamese regression aesthetic fusion method based on calligraphy aesthetics and deep learning, called SRAFE. To further enhance the performance of calligraphy evaluation, the SRAFE method combines deep features from SRN with hand-designed aesthetic features. Sun et al. further investigated the effectiveness of the SRAFE method through ablation experiments: compared with SRN, SRAFE improved the Mean Absolute Error (MAE) by 0.170 and the Pearson correlation coefficient (PCC) by 0.041, and compared with using manual features alone, it improved the MAE by 0.323 and the PCC by 0.261. Yoshida et al. [19] used the deep learning method TabNet to extend previous research and improve the accuracy and interpretability of the aesthetic quality assessment of Chinese calligraphy; however, how to make deep learning models better understand and integrate higher-level abstract concepts such as calligraphy aesthetic principles, charm, and qi charm remains a challenge. Xu et al. [20] proposed a shear-guided filter to extract calligraphy art information; experiments showed that the filter could extract form and spirit information more accurately, and that the new evaluation parameters performed better in performance evaluation. In each image, RGF performs best, while OTSU is the least effective, with a maximum MER value of 0.0268. In terms of MER values and their averages, FFCM is better than OTSU but inferior to MCGF. Si et al. [21] identified the unique characteristics of each character through the HOG method, combined with the Euclidean distance to measure the spatial relationship between the target and the background points and so capture unique strokes and patterns, and then used the GoogLeNet Inception-v3 model to exploit the capabilities of CNNs. The results of the in-depth analysis revealed a recognition rate of 93.12%, illustrating the potential of their approach. 
Even in the presence of Gaussian white noise, accuracies of 91.3%, 90.9%, and 89.4% are obtained at noise levels of 0.02, 0.04, and 0.06, respectively. In terms of style generation and calligraphy application, Liang et al. [22] proposed a calligraphy writing method based on a style transfer algorithm to achieve Chinese font art style transfer while maintaining calligraphy imitation accuracy, and proposed a similarity evaluation method covering balance, tilt, and writing strength similarity to analyze the writing effect. The experimental results show that the minimum similarity between the robot’s writing style and the target character style is greater than 0.75. The proposed method shows a good calligraphy writing effect and can be applied to various calligraphy training processes and trajectory planning applications. Wang et al. [5] designed a dual-generator framework based on a deep adversarial network for calligraphy reproduction, with an average structural similarity index of 75.91%, a coverage rate of 70.25%, and an intersection over union (IoU) of 80.68%. Lin et al. [23] proposed a stroke generation module based on a generative adversarial learning model that learns to decompose the writing style of strokes, so that it can generate stroke trajectories in the learned style through character decomposition and apply the writing style to any Chinese character in a given database. Liu et al. [24] employed the Latent Diffusion Model (LDM) to generate Chinese calligraphy characters. Using ready-made SimSun font character images as guiding conditions, their method learns reference styles at the component level and demonstrates state-of-the-art performance that surpasses comparison methods both quantitatively and qualitatively. Zhao et al. [25] proposed a self-supervised style learning (SSL) method that aims to increase the skeletal similarity between the output image and the target. 
An evaluation of the proposed method using a public Chinese handwriting database shows that the handwritten images generated by its method have higher quality, stroke correctness, and calligraphy similarity compared to other popular font imitation methods. Xin et al. [26] proposed a novel detection network based on dynamic feature fusion upsampling and text region focusing, named DRA-Net. The core innovations of this method include: (1) a Dynamic Fusion Upsampling Module, which adaptively assigns weights to effectively fuse multi-scale features while preserving key information during feature propagation; (2) an Adaptive Text Region Focusing Module, which integrates an axial attention mechanism to enhance the model’s ability to locate text regions and suppress background interference; and (3) the integration of deformable convolution, which improves the network’s capability to model irregular text shapes and extreme aspect ratios. Additionally, a dataset named ACST was constructed. The experimental results show that the detection accuracy of DRA-Net on the ACST dataset is significantly higher than that of existing methods, and it performs robustly in scenarios with complex backgrounds and extreme text aspect ratios. Its F1-score reaches 72.9%, precision is 82.8%, and recall rate is 77.5%.

In conclusion, in image-based calligraphy style classification tasks, especially for unique image carriers such as rubbing images of stone inscriptions, existing methods are confronted with significant challenges.

First, rubbing images are essentially single-channel grayscale images, whose feature representation dimension is far lower than that of color images. This single-channel characteristic determines that rubbing images mainly rely on information such as stroke morphology, ink shade, and paper texture for representation. During the digitization process, affected by factors including ambient light, rubbing quality, and paper aging, the images usually contain a large amount of low-quality noise, uneven ink distribution, or damage marks. These non-semantic noises are mixed with the genuine structural features of calligraphy, which greatly limits the extraction of effective style features and the robustness of traditional convolutional neural networks (CNNs).

Second, the style discrimination of calligraphy art is highly dependent on subtle local features (e.g., the pressing and lifting of brush tips, the force at turning points, and the angles of stroke initiation and termination) as well as global spatial layouts across character scales. For instance, the differences in clerical script styles among different dynasties may only be reflected in the delicate handling of the wavy stroke (the extended right-falling stroke) or the degree of outward expansion of the overall character structure. Traditional CNNs perform excellently in extracting local textures, but they often struggle to achieve an efficient balance when required to simultaneously capture these fine-grained local variations and long-range global dependencies. This deficiency makes it difficult for the models to accurately distinguish calligraphy works with similar styles but different ages, thereby compromising the final classification accuracy.

Based on the two key challenges mentioned above, we propose an efficient deep learning model integrated with a dual-path attention mechanism. The model is designed to overcome the limitations of single-channel features and guide the network to accurately focus on the key brushstrokes and spatial regions critical for style discrimination during the feature extraction process, thus achieving accurate and efficient calligraphy style classification.

Table 1.

A Summary of Relevant Research in Calligraphy Image Analysis.

| Research Directions | Representative Literature | Dataset | Core Approach | Key Performance |
|---|---|---|---|---|
| Calligraphy text detection | [2] Mask TextSpotter v3 | Curved text in natural scenes | Instance segmentation and semantic segmentation | Curved text detection F1: 86.3% |
| Calligraphy text detection | [4] Huang et al. | Universal text | CTPN/EAST + ResNet | Improved feature extraction to improve positioning accuracy |
| Calligraphy identification and classification | [8] Huang et al. | Four regular script fonts in the Tang Dynasty | CNN | Classification accuracy: 89.5–96.2% |
| Calligraphy identification and classification | [9] Wang et al. | Single calligraphy character | Improved EfficientNet + CBAM | Introduce attention mechanisms |
| Calligraphy identification and classification | [14] Chen et al. | Self-built square italic single-word dataset | Improved YOLOv7 | Accuracy of orthographic feature detection: 94.19% |
| Style analysis and generation | [19] Yoshida et al. | Calligraphy aesthetic evaluation | TabNet | Improve the accuracy and interpretability of aesthetic evaluation |
| Style analysis and generation | [5] Wang et al. | Calligraphy reproduction | Deep adversarial network dual-generator framework | Average structural similarity index: 75.91% |

2. Materials and Methods

2.1. Data Acquisition and Preprocessing

The data utilized in this paper is derived from a self-constructed calligraphy image dataset. This dataset comprises calligraphy works by different calligraphers across four dynasties, specifically the Han Dynasty (299 images), Tang Dynasty (138 images), Yuan Dynasty (246 images), and Qing Dynasty (282 images), amounting to a total of 965 images. Random stratified sampling was adopted to divide the dataset into a training set of 579 images, a validation set of 193 images, and a test set of 193 images.
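The stratified split described above can be sketched in a few lines of standard-library Python. This is only an illustration: the 60/20/20 fractions are inferred from the reported split sizes, and the function name `stratified_split`, the rounding rule, and the random seed are assumptions rather than details given in the paper.

```python
import random

# Per-dynasty image counts as reported in the text.
CLASS_COUNTS = {"Han": 299, "Tang": 138, "Yuan": 246, "Qing": 282}


def stratified_split(labels, fractions=(0.6, 0.2, 0.2), seed=0):
    """Split sample indices into train/val/test while keeping class ratios.

    The 60/20/20 fractions and the rounding rule are assumptions.
    """
    rng = random.Random(seed)
    by_class = {}
    for index, label in enumerate(labels):
        by_class.setdefault(label, []).append(index)

    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = round(len(indices) * fractions[0])
        n_val = round(len(indices) * fractions[1])
        train.extend(indices[:n_train])
        val.extend(indices[n_train:n_train + n_val])
        test.extend(indices[n_train + n_val:])  # the remainder goes to test
    return train, val, test


labels = [name for name, count in CLASS_COUNTS.items() for _ in range(count)]
train_idx, val_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(val_idx), len(test_idx))  # prints: 579 193 193
```

Because every dynasty is split separately, each of the four classes appears in all three subsets in roughly the same proportion as in the full dataset.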

The images in the calligraphy dataset primarily encompass scanned images of calligraphy stone inscriptions, handwritten Chinese characters, and copied calligraphy works. Selected samples from this dataset are presented in Figure 1.

Figure 1.

Partial dataset (in order of dynasties).

The core of calligraphy style analysis lies in stroke structure rather than color information; thus, all color images were converted into grayscale images. Subsequently, all images were uniformly resized to 260 × 260 pixels to meet the input requirements of the EfficientNet model. This preprocessing step retains the core features of calligraphy works while mitigating the interference of color information on the model’s judgment. To enhance the model’s generalization ability, a multi-level data augmentation scheme was designed for the training set:

- (1) Geometric transformation: This includes random rotation (±10°) and affine transformation (translation ±10%, scaling ±10%), which is designed to simulate variations in actual observation angles.

- (2) Visual perturbation: This encompasses color jitter (brightness ±30%, contrast ±30%), Gaussian blur (to simulate paper texture), and random erasure (with a 20% probability of occluding local regions).

- (3) Structural deformation: Elastic transformation (α = 50, δ = 5) was adopted to simulate the natural bending effect of paper. The standard deviation (δ) of the Gaussian kernel controls the smoothness of the displacement field. A larger δ value leads to a larger Gaussian kernel, resulting in a smoother image deformation that resembles a global bending (e.g., large-area distortion of paper). In contrast, a smaller δ value restricts the deformation to local minor jitters. The scaling factor (α) governs the deformation intensity. A higher α value corresponds to a greater maximum displacement distance of pixels, making the image appear more distorted. Conversely, a smaller α value yields a more subtle deformation.

- (4) Channel adjustment: Single-channel grayscale images were converted to three-channel images to match the input requirements of the pre-trained EfficientNet model.
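The roles of the two elastic-transformation parameters can be illustrated with a simplified one-dimensional sketch. It is not the augmentation code used in the paper: a real elastic transformation smooths a two-dimensional displacement field and resamples the image, whereas this example only builds a 1D field to show how the Gaussian standard deviation (δ in the text, `sigma` in the code) and the scaling factor α act. All function names are hypothetical.

```python
import math
import random


def gaussian_kernel(sigma):
    """Normalized 1D Gaussian kernel truncated at three standard deviations."""
    radius = max(1, int(3 * sigma))
    weights = [math.exp(-(k * k) / (2 * sigma * sigma))
               for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def displacement_field(length, alpha, sigma, seed=0):
    """Random offsets in [-1, 1], Gaussian-smoothed, then scaled by alpha."""
    rng = random.Random(seed)
    noise = [rng.uniform(-1.0, 1.0) for _ in range(length)]
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    field = []
    for i in range(length):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), length - 1)  # clamp at the borders
            acc += w * noise[j]
        field.append(alpha * acc)
    return field


def roughness(field):
    """Mean absolute difference between neighboring displacements."""
    return sum(abs(a - b) for a, b in zip(field, field[1:])) / (len(field) - 1)


smooth = displacement_field(260, alpha=50, sigma=5)     # setting from the text
jittery = displacement_field(260, alpha=50, sigma=1)    # smaller sigma
stronger = displacement_field(260, alpha=100, sigma=5)  # larger alpha
print(roughness(smooth) < roughness(jittery))
```

With the same underlying noise, the field built with the larger standard deviation varies more slowly from pixel to pixel, and doubling α doubles every displacement without changing its smoothness.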

The data preprocessing process starts with raw calligraphy images and proceeds through feature retention, augmentation optimization, scientific partitioning, and efficient loading, ultimately providing high-quality inputs for model training. Each step is tailored to the specific demands of calligraphy style analysis, ensuring the model can accurately capture stylistic nuances across different dynasties.

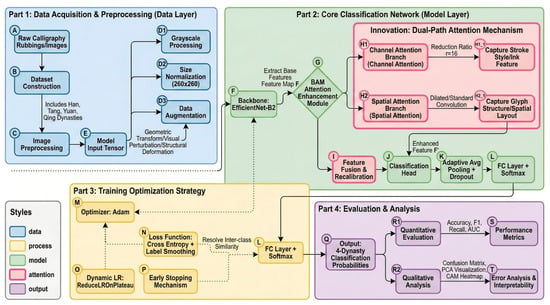

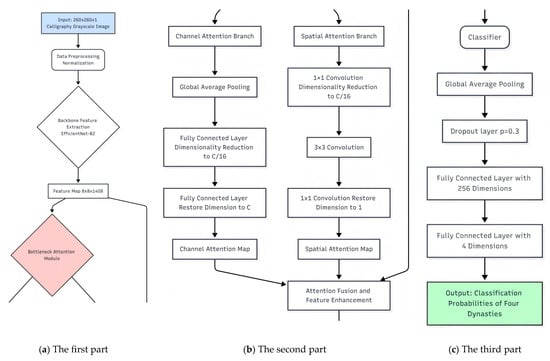

2.2. Methods

To provide a clear overview of the proposed approach, the overall research framework is illustrated in Figure 2. The framework consists of four main components: data acquisition and preprocessing, the core classification network, the training optimization strategy, and the evaluation procedure. Raw calligraphy rubbings are first processed through grayscale conversion, size normalization, and augmentation to generate standardized input tensors. These tensors are then fed into an EfficientNet-B2 backbone, upon which a dual-path BAM attention module is incorporated as the central innovation of this study. The channel-attention branch emphasizes stroke-level and ink-distribution characteristics, while the spatial-attention branch captures structural and layout-related information. The enhanced features are subsequently passed through the classification head for dynasty-level prediction. Finally, both quantitative and qualitative evaluations are performed to assess model performance and interpretability.

Figure 2.

Overall framework of the proposed Lishu calligraphy style classification model.

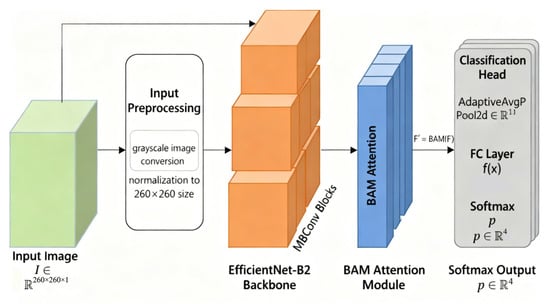

2.2.1. Design of the Model

The EfficientNet model incorporating the attention mechanism adopts EfficientNet-B2 as its backbone and embeds the BAM to form a pipeline of “input preprocessing-backbone feature extraction-attention enhancement-classification output” (see Figure 3).

Figure 3.

Model Structure.

- (1) Input preprocessing:

The input calligraphy image is converted to a grayscale image and resized to 260 × 260, resulting in the preprocessed image $X$, which matches the input requirements of the EfficientNet-B2 backbone.

- (2) Backbone feature extraction:

The preprocessed image is fed into the EfficientNet-B2 network, whose MBConv blocks extract basic features such as stroke edges and textures. This process yields a feature map $F \in \mathbb{R}^{8 \times 8 \times 1408}$, where 8 × 8 is the spatial size and 1408 is the number of channels.

- (3) Attention reinforcement:

The BAM module is embedded between the backbone network and the classification head. It is deployed immediately after all the feature extraction layers of EfficientNet-B2 (including all MBConv blocks, the Stem module, and the Head Conv layer), but prior to the global average pooling (GAP) operation. It adaptively weights the channel and spatial dimensions of the feature map to enhance attention on key features. The output is an enhanced feature map $F' \in \mathbb{R}^{8 \times 8 \times 1408}$.

- (4) Classification output:

The enhanced feature map is compressed to $1 \times 1 \times 1408$ via adaptive average pooling. After flattening, a vector $v \in \mathbb{R}^{1408}$ is obtained. This vector is then passed through a fully connected layer defined as $o = Wv + b$ (where $W \in \mathbb{R}^{4 \times 1408}$, $b \in \mathbb{R}^{4}$). Finally, the class probability $p = \mathrm{softmax}(o)$ is output, where $p \in \mathbb{R}^{4}$ represents the probability distribution across the four dynasties.
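The classification output step (average pooling, a fully connected layer, and a softmax over the four dynasties) can be traced on a toy example. The sketch below uses plain Python lists and random weights; the reduced sizes (8 channels, 2 × 2 spatial grid) and all function names are assumptions chosen to keep the example small, and it is not the paper's implementation.

```python
import math
import random


def global_average_pool(feature):
    """Average each channel of a [C][H][W] feature map down to one value."""
    return [sum(map(sum, channel)) / (len(channel) * len(channel[0]))
            for channel in feature]


def linear(vector, weights, bias):
    """Fully connected layer: one output per weight row."""
    return [sum(w * x for w, x in zip(row, vector)) + b
            for row, b in zip(weights, bias)]


def softmax(logits):
    """Numerically stable softmax."""
    peak = max(logits)
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]


rng = random.Random(0)
channels, height, width, num_classes = 8, 2, 2, 4  # toy sizes, not 1408 x 8 x 8
feature = [[[rng.uniform(0, 1) for _ in range(width)] for _ in range(height)]
           for _ in range(channels)]
weights = [[rng.gauss(0, 0.5) for _ in range(channels)] for _ in range(num_classes)]
bias = [0.0] * num_classes

pooled = global_average_pool(feature)                # length = number of channels
probabilities = softmax(linear(pooled, weights, bias))
predicted_class = probabilities.index(max(probabilities))
```

The resulting `probabilities` list has one entry per dynasty and sums to one, and the predicted dynasty is the index of its largest entry.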

2.2.2. BAM Attention Module Embedding

The key to classifying calligraphy by dynasty lies in capturing the distinctive features of each dynasty, whereas the traditional EfficientNet model gives insufficient attention to such features. By integrating channel attention and spatial attention, the BAM module can adaptively adjust feature weights and enhance the model’s capacity to capture these distinctive features.

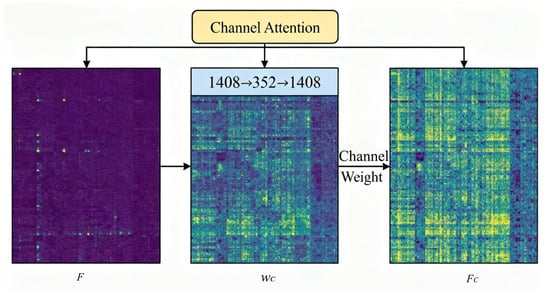

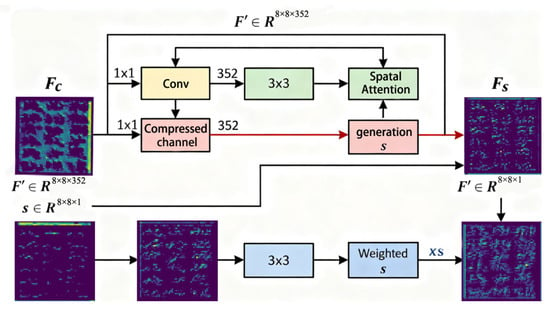

The BAM module consists of a channel attention branch and a spatial attention branch, and the specific process is as follows:

- (1) Channel attention branch:

Global average pooling is performed on the feature map $F$ to obtain the channel description vector $z \in \mathbb{R}^{C}$, where $C$ is the number of channels. The channel weights $c$ are generated from $z$ through two fully connected layers. The channel weights are multiplied with the original feature map to obtain the channel-weighted feature map $F_c = F \otimes c$ ($\otimes$ indicates channel-by-channel multiplication) (see Figure 4).

Figure 4.

Structure of Channel attention branches.

- (2) Spatial attention branch:

A 1 × 1 convolution is applied to the feature map $F$ to obtain an intermediate feature map $F_r$, from which the spatial attention map $s$ is generated with 3 × 3 convolutions. The spatial attention map is multiplied with $F$ to obtain the spatially weighted feature map $F_s = F \odot s$ (see Figure 5).

Figure 5.

Structure of spatial attention branches.
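The two branches can be made concrete with a small sketch on nested Python lists. It is a minimal illustration rather than the paper's implementation: the ReLU and sigmoid activations, the 1 × 1 convolution that maps directly to a single channel, the zero padding, and the product used to combine the two attention maps are all assumptions, as are the function names and the toy tensor sizes.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(feature, w1, w2):
    """GAP -> FC (reduce) -> ReLU -> FC (expand) -> sigmoid: one weight per channel."""
    pooled = [sum(map(sum, channel)) / (len(channel) * len(channel[0]))
              for channel in feature]
    hidden = [max(0.0, sum(w * z for w, z in zip(row, pooled))) for row in w1]
    return [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w2]


def spatial_attention(feature, w_reduce, kernel):
    """1x1 conv to one channel, then a zero-padded 3x3 conv and a sigmoid."""
    height, width = len(feature[0]), len(feature[0][0])
    reduced = [[sum(w * feature[c][y][x] for c, w in enumerate(w_reduce))
                for x in range(width)] for y in range(height)]
    attention = []
    for y in range(height):
        row = []
        for x in range(width):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < height and 0 <= xx < width:
                        acc += kernel[dy + 1][dx + 1] * reduced[yy][xx]
            row.append(sigmoid(acc))
        attention.append(row)
    return attention


def apply_attention(feature, channel_w, spatial_w):
    """Scale each activation by its channel weight and its spatial weight."""
    return [[[value * channel_w[c] * spatial_w[y][x]
              for x, value in enumerate(row)]
             for y, row in enumerate(channel)]
            for c, channel in enumerate(feature)]


rng = random.Random(0)
C, H, W, R = 16, 4, 4, 16  # toy sizes; R is the 1/16 channel reduction ratio
feature = [[[rng.uniform(0, 1) for _ in range(W)] for _ in range(H)] for _ in range(C)]
w1 = [[rng.gauss(0, 0.5) for _ in range(C)] for _ in range(C // R)]
w2 = [[rng.gauss(0, 0.5) for _ in range(C // R)] for _ in range(C)]
w_reduce = [rng.gauss(0, 0.5) for _ in range(C)]
kernel = [[rng.gauss(0, 0.5) for _ in range(3)] for _ in range(3)]

channel_w = channel_attention(feature, w1, w2)           # C weights
spatial_w = spatial_attention(feature, w_reduce, kernel)  # H x W weights
enhanced = apply_attention(feature, channel_w, spatial_w)
```

The channel branch produces one weight per channel and the spatial branch one weight per location, so the enhanced map keeps the shape of the input while down-weighting channels and positions that receive low attention.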

As shown in Figure 6a–c (from top to bottom and from left to right), the proposed deep learning architecture is specifically designed for the dynasty classification of grayscale Chinese calligraphy images. The core innovation lies in the integration of a Bottleneck Attention Module (BAM) into a robust framework to enhance feature representation and discrimination capabilities. The input calligraphy image first undergoes standard preprocessing and normalization before being fed into the EfficientNet-B2 backbone network, which performs fundamental feature extraction and yields a 512-channel feature map. This feature map then enters the BAM, which is structured into two parallel paths: the channel attention branch and the spatial attention branch. The channel attention path uses global average pooling followed by two fully connected layers (with an intermediate dimension reduction to 1/16) to calculate the importance weights for each feature channel. Simultaneously, the spatial attention path employs a sequence of 1 × 1 and 3 × 3 convolutions to derive a 2D weight map that highlights critical spatial locations within the feature map. The outputs of these two branches are combined, and the resulting attention map is multiplied with the original 512-channel feature map, which enhances the features by adaptively recalibrating them. This mechanism compels the model to focus on the most discriminative characteristics, such as specific brush strokes and structural traits relevant to dynasty classification. Finally, the enhanced feature map is processed by the classification head, which includes a final global average pooling layer, a dropout layer with a rate of 0.3 to mitigate overfitting, and two consecutive fully connected layers (with 256 and 4 dimensions, respectively), ultimately outputting the probability scores for classification across the four calligraphy dynasties.

Figure 6.

Framework of the EfficientNet-B2-BAM Classification Pipeline.

2.2.3. Details of Model Training

After determining the model architecture, we performed detailed hyperparameter configuration and designed optimization strategies for the training process to ensure the model attains adequate learning and strong generalization capabilities.

All models in this paper were implemented in PyTorch 2.5.1, using PyCharm 2025.2 as the development environment, and trained on a single NVIDIA GeForce RTX 2060 GPU. The training hyperparameters were set as follows: the batch size was set to 16 to strike a balance between GPU memory capacity and training stability; the Adam optimizer was employed with an initial learning rate of 1 × 10⁻³; and the maximum number of training epochs was set to 50. Additionally, an early stopping mechanism with a patience of 5 was introduced to monitor the validation set accuracy: if the validation accuracy did not improve for 5 consecutive epochs, training was terminated early. The final model stopped training after approximately 35 epochs.
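The early stopping rule can be sketched as a small helper that tracks the best validation accuracy seen so far. The class name `EarlyStopping`, its interface, and the accuracy values in the example are hypothetical and serve only to exercise the logic with the patience of 5 stated in the text.

```python
class EarlyStopping:
    """Stop when validation accuracy has not improved for `patience` epochs."""

    def __init__(self, patience=5):
        self.patience = patience
        self.best = float("-inf")
        self.best_epoch = None
        self.stale_epochs = 0

    def step(self, epoch, val_accuracy):
        """Record one epoch; return True when training should stop."""
        if val_accuracy > self.best:
            self.best = val_accuracy
            self.best_epoch = epoch
            self.stale_epochs = 0
        else:
            self.stale_epochs += 1
        return self.stale_epochs >= self.patience


# Made-up validation accuracies, used only to exercise the logic.
history = [0.70, 0.81, 0.88, 0.90, 0.89, 0.90, 0.88, 0.89, 0.87, 0.86]
stopper = EarlyStopping(patience=5)
stopped_at = None
for epoch, accuracy in enumerate(history, start=1):
    if stopper.step(epoch, accuracy):
        stopped_at = epoch
        break
print(stopped_at, stopper.best_epoch)  # prints: 9 4
```

In this example the best accuracy is reached at epoch 4, and training stops at epoch 9 after five epochs without a strict improvement. In practice the weights from the best epoch would typically be kept.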

The experimental environment is shown in Table 2:

Table 2.

Experimental Environment and Configuration.

The experimental parameters in this chapter are shown in Table 3:

Table 3.

Hyperparameter Configuration for Model Training.

2.2.4. Training Strategy Optimization

The calligraphy dataset exhibits class imbalance and subtle stylistic differences, rendering traditional training strategies prone to overfitting or slow convergence rates. To address these issues, training strategy optimization encompasses loss function design, optimizer selection, learning rate scheduling, and the incorporation of an early stopping mechanism.

- (1) Loss function design

The cross-entropy loss function is:

$$
L_{CE} = -\frac{1}{N}\sum_{i=1}^{N}\sum_{c=1}^{C} y_{i,c}\,\log p_{i,c}
$$

where $y_{i,c}$ denotes the c-th element of the ground-truth label y corresponding to the i-th sample; $p_{i,c}$ denotes the probability that the i-th sample is predicted as class c by the proposed model; N denotes the batch size; C denotes the total number of classes.

Because the dataset is imbalanced, label smoothing (soft labels) is applied to the targets. For example, if Qing samples are under-represented, the soft label lowers the target probability of the true category (Qing) from 1.0 to 0.9 and raises the target probability of each other category from 0.0 to about 0.03. This reduces the model’s overfitting to minority classes. In addition, since the “square structure” of Han and Qing clerical scripts is easy to confuse, the soft label lets the model attend not only to the characteristics of Han clerical script (Hanli) but also to the simple characteristics of Qing clerical script (Qingli), thereby reducing misjudgment.
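The soft-label cross-entropy described above can be illustrated with a minimal pure-Python sketch. It assumes a smoothing coefficient of 0.1 spread evenly over the three non-target classes, which reproduces the 0.9 / ≈0.03 targets mentioned in the text; the function names and the class order are illustrative and not taken from the authors' code.

```python
import math

CLASSES = ["Han", "Tang", "Yuan", "Qing"]  # illustrative class order (assumption)

def smooth_labels(true_class, num_classes, eps=0.1):
    """Soft target: 1 - eps on the true class, eps / (C - 1) on every other class."""
    off_value = eps / (num_classes - 1)
    return [1.0 - eps if c == true_class else off_value for c in range(num_classes)]

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(batch_logits, batch_targets):
    """Batch mean of -sum_c y_ic * log(p_ic); targets may be hard or soft."""
    loss = 0.0
    for logits, target in zip(batch_logits, batch_targets):
        probs = softmax(logits)
        loss -= sum(y * math.log(p) for y, p in zip(target, probs) if y > 0)
    return loss / len(batch_logits)

# Soft target for a Qing sample: [0.033..., 0.033..., 0.033..., 0.9]
qing_soft = smooth_labels(CLASSES.index("Qing"), len(CLASSES))

# Loss of a confident (made-up) prediction against the soft target
example_loss = cross_entropy([[0.2, 0.1, 0.1, 3.0]], [qing_soft])
```

Note that other conventions exist: the `label_smoothing` argument of PyTorch's `CrossEntropyLoss`, for instance, spreads the smoothing mass over all C classes including the true one, which would give slightly different target values.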

- (2) Optimizer and learning rate scheduling

The selection of the optimizer exerts a direct impact on the model’s convergence speed and stability. This study adopts the Adam optimizer, which integrates the advantages of the momentum term and adaptive learning rate, and its formulas are as follows:

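$$
\begin{aligned}
m_t &= \beta_1 m_{t-1} + (1-\beta_1)\, g_t,\\
v_t &= \beta_2 v_{t-1} + (1-\beta_2)\, g_t^2,\\
\hat{m}_t &= \frac{m_t}{1-\beta_1^t}, \qquad \hat{v}_t = \frac{v_t}{1-\beta_2^t},\\
\theta_t &= \theta_{t-1} - \eta\,\frac{\hat{m}_t}{\sqrt{\hat{v}_t}+\epsilon},
\end{aligned}
$$

where $m_t$ and $v_t$ are the estimates of the first moment and the second moment of the gradient, respectively, and $\hat{m}_t$ and $\hat{v}_t$ are their bias-corrected values; $\beta_1$ and $\beta_2$ are the attenuation coefficients; $\eta$ is the initial learning rate; $\epsilon$ is a numerical stability term; $\theta$ is a model parameter; and $g_t$ is the gradient at step t.

The initial learning rate is set to $\eta = 1 \times 10^{-3}$, which balances convergence speed and stability and avoids the model divergence caused by an excessively large learning rate.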

The Adam optimizer adapts the learning rate independently for each parameter, enabling rapid adaptation to the feature distribution of calligraphy data. Additionally, its momentum term accelerates convergence and mitigates training fluctuations, which makes it well suited to the medium-sized dataset (965 samples) used in this paper.
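To make the update rule concrete, the following is a minimal scalar sketch of one Adam step, applied to a toy quadratic objective. Only the learning rate of 1 × 10⁻³ comes from this paper; the values β₁ = 0.9, β₂ = 0.999 and ε = 10⁻⁸ are the commonly used defaults and are assumptions here, as is the toy objective.

```python
import math

def adam_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a single scalar parameter; t is the 1-based step index."""
    m = beta1 * m + (1 - beta1) * grad          # first-moment estimate
    v = beta2 * v + (1 - beta2) * grad * grad   # second-moment estimate
    m_hat = m / (1 - beta1 ** t)                # bias correction
    v_hat = v / (1 - beta2 ** t)
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v

# Toy objective f(theta) = (theta - 3)^2 with gradient 2 * (theta - 3)
theta, m, v = 0.0, 0.0, 0.0
for t in range(1, 101):
    grad = 2.0 * (theta - 3.0)
    theta, m, v = adam_step(theta, grad, m, v, t)
```

Because the update is normalized by the square root of the second-moment estimate, each early step moves the parameter by roughly the learning rate regardless of the gradient's scale, which is the per-parameter adaptivity referred to above.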

- (3) Learning rate scheduling strategy

In order to address the convergence stagnation induced by a fixed learning rate in the late stage of training, this study employs the ReduceLROnPlateau learning rate scheduler to dynamically adjust the learning rate based on validation set performance. The specific parameter settings are as follows:

Mode: monitors the validation set accuracy and triggers adjustment when the accuracy ceases to improve;

Factor: 0.5, reduces the learning rate to half of its current value;

Patience: 3, triggers learning rate decay if the validation accuracy does not increase for three consecutive rounds;

Minimum learning rate: 10⁻⁵, serves as the minimum learning rate threshold to prevent the model from failing to update due to an excessively low learning rate.

During training, the validation set accuracy is computed after each validation round. If the accuracy does not improve for three consecutive rounds, the scheduler multiplies the current learning rate by the decay factor (0.5), and this process continues until the learning rate reaches the minimum threshold (10⁻⁵).

ReduceLROnPlateau adaptively responds to the model’s convergence status, eliminating the subjectivity associated with manual learning rate adjustment. In calligraphy classification, a large learning rate in the late training stage may prevent the model from capturing subtle stroke features; dynamic learning rate adjustment effectively mitigates this issue and enhances the model’s generalization performance.
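The scheduling rule described above can be mimicked by a simplified pure-Python sketch. The class below is a stand-in written for illustration, not PyTorch's `ReduceLROnPlateau` itself: it follows the wording of this section (decay after three consecutive non-improving rounds), whereas the library class also applies an improvement threshold and may count the triggering epoch slightly differently. The accuracy values are made up.

```python
class PlateauScheduler:
    """Halve the learning rate when validation accuracy stalls (simplified sketch)."""

    def __init__(self, lr=1e-3, factor=0.5, patience=3, min_lr=1e-5):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.min_lr = min_lr
        self.best = float("-inf")
        self.bad_rounds = 0

    def step(self, val_acc):
        """Record one validation accuracy and return the (possibly reduced) learning rate."""
        if val_acc > self.best:
            self.best = val_acc
            self.bad_rounds = 0
        else:
            self.bad_rounds += 1
        if self.bad_rounds >= self.patience:
            # Decay, but never go below the minimum learning rate
            self.lr = max(self.lr * self.factor, self.min_lr)
            self.bad_rounds = 0
        return self.lr

# Hypothetical validation accuracies for six rounds
scheduler = PlateauScheduler()
history = [scheduler.step(acc) for acc in [0.80, 0.85, 0.85, 0.84, 0.85, 0.90]]
```

In this trace the learning rate stays at 1 × 10⁻³ for the first four rounds and drops to 5 × 10⁻⁴ at the fifth, once three consecutive rounds have failed to beat the best accuracy of 0.85.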

- (4) Early stopping mechanism

To prevent overfitting, an early stopping mechanism is incorporated in this study to monitor the validation set accuracy: training is terminated and the currently optimal model is saved if the accuracy ceases to improve over multiple consecutive rounds. The specific parameter settings are as follows:

Patience: 5, training is terminated if the validation set accuracy does not improve for five consecutive rounds;

Optimal model save path: best_model.pth, saves the model corresponding to the highest validation set accuracy achieved during training.

After each training epoch, the validation set accuracy is computed. If the current accuracy exceeds the best accuracy recorded thus far, the best accuracy is updated, the model is saved, and the counter is reset to zero; otherwise, the counter is incremented by 1. Training is terminated once the counter reaches the patience value of 5.
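The bookkeeping described above can be sketched as follows. This is a minimal illustration that operates on a list of validation accuracies; the class and function names are hypothetical, the accuracy values are made up, and the point where a real training loop would write `best_model.pth` is marked only by a comment.

```python
class EarlyStopping:
    """Track the best validation accuracy and signal when to stop."""

    def __init__(self, patience=5):
        self.patience = patience
        self.best_acc = float("-inf")
        self.best_epoch = None
        self.counter = 0

    def update(self, epoch, val_acc):
        """Return (improved, should_stop) for the given epoch."""
        if val_acc > self.best_acc:
            self.best_acc = val_acc
            self.best_epoch = epoch
            self.counter = 0
            return True, False
        self.counter += 1
        return False, self.counter >= self.patience

def run(val_accs, patience=5):
    """Replay a sequence of validation accuracies; return (last_epoch, best_epoch, best_acc)."""
    stopper = EarlyStopping(patience)
    for epoch, acc in enumerate(val_accs, start=1):
        improved, should_stop = stopper.update(epoch, acc)
        # if improved: a real loop would save the weights to best_model.pth here
        if should_stop:
            return epoch, stopper.best_epoch, stopper.best_acc
    return len(val_accs), stopper.best_epoch, stopper.best_acc

result = run([0.70, 0.80, 0.90, 0.89, 0.90, 0.88, 0.90, 0.90, 0.95])
```

With these values the best accuracy is reached at epoch 3, the following five epochs fail to exceed it, and training stops at epoch 8 before the final value is ever evaluated.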

3. Results

3.1. Evaluation Indicators

The loss value of the model on the validation set quantifies the discrepancy between the model’s predictions and the true labels for unseen validation data.

Validation Loss (Val Loss) serves as a core indicator reflecting the model’s generalization ability. Specifically, if Val Loss is significantly higher than Training Loss (Train Loss), it indicates the model is overfitting; if Val Loss is close to Train Loss, the model demonstrates strong generalization ability.

Accuracy: Defined as the ratio of correctly predicted samples to the total number of samples, it is the most intuitive indicator of classification performance.
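Accuracy and the macro-averaged precision, recall, and F1-score reported in the result tables can all be derived from a confusion matrix. The sketch below shows one common way to compute them; the 4 × 4 matrix is a made-up example for illustration only and does not reproduce the results of this paper.

```python
def macro_metrics(cm):
    """cm[i][j] = number of samples of true class i predicted as class j."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[k][k] for k in range(n)) / total

    precisions, recalls, f1_scores = [], [], []
    for k in range(n):
        tp = cm[k][k]
        predicted_k = sum(cm[i][k] for i in range(n))  # column sum
        actual_k = sum(cm[k])                          # row sum
        p = tp / predicted_k if predicted_k else 0.0
        r = tp / actual_k if actual_k else 0.0
        f1 = 2 * p * r / (p + r) if (p + r) else 0.0
        precisions.append(p)
        recalls.append(r)
        f1_scores.append(f1)

    return {
        "accuracy": accuracy,
        "macro_precision": sum(precisions) / n,
        "macro_recall": sum(recalls) / n,
        "macro_f1": sum(f1_scores) / n,  # mean of per-class F1 scores
    }

# Hypothetical confusion matrix; rows and columns ordered Han, Tang, Yuan, Qing
toy_cm = [
    [8, 0, 0, 2],
    [0, 10, 0, 0],
    [0, 1, 9, 0],
    [1, 0, 0, 9],
]
metrics = macro_metrics(toy_cm)
```

Macro averaging weights every dynasty equally regardless of its sample count, which is why it is informative for an imbalanced dataset.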

To validate the effectiveness of the proposed method, we compare it against conventional CNN models and ResNet-50 as baseline models.

3.2. Experimental Results

The EfficientNet-B2 + BAM model attained a classification accuracy of 98.44% on the test set, significantly outperforming ResNet-50 (92.34%) and conventional CNN models (84.67%). More importantly, its training loss (0.1049) and validation loss (0.0625) were the lowest among all compared models. This indicates that the model not only possesses strong fitting capability but also demonstrates superior generalization performance, with overfitting effectively mitigated (see Table 4).

Table 4.

Comprehensive Performance Comparison of Different Models.

In terms of comprehensive evaluation metrics, the proposed model also exhibits notable advantages. Its F1-score (0.9675) and AUC (Area Under the Curve) value (0.9694) rank the highest among the three models, confirming that it achieves an optimal trade-off between precision and recall while demonstrating robust inter-category discrimination capability.

To verify the effectiveness of the BAM attention module and the necessity of its dual-path design, a series of systematic ablation experiments were conducted, with the results presented in Table 5. The experiments employed EfficientNet-B2 as the baseline model (test set accuracy: 76.68%) and sequentially integrated different attention mechanisms for comparison—encompassing standalone channel attention, standalone spatial attention, SE attention, ECA attention, CBAM, and the proposed BAM module.

Table 5.

Ablation Study on Attention Mechanisms for Calligraphy Image Classification.

The experimental results show that the proposed EfficientNet-B2 + BAM model achieved an excellent accuracy of 98.44% on the test set, significantly outperforming all other variants. Meanwhile, its extremely low validation loss (0.0625) and training loss (0.1049) indicate the model exhibited excellent generalization ability and optimization efficiency. In terms of macro evaluation metrics, the BAM model performed remarkably well: it achieved a Macro F1-score of 0.9675, a Macro Recall of 0.9480, a Macro AUC of 0.9694, and a Macro Precision of 0.8690, delivering balanced and leading overall performance.

Standalone spatial attention significantly increased accuracy from 76.68% to 87.62% (a 10.94 percentage point gain), whereas standalone channel attention yielded only a marginal improvement (78.24%, a 1.56 percentage point increase). This explicitly demonstrated that in calligraphy style classification, geometric information—such as character stroke structure and spatial layout—is more discriminative of style differences than channel feature responses. The spatial attention model’s F1-score (0.8614), Recall (0.8527), and AUC (0.9258) were all significantly higher than those of the channel attention model, further corroborating the importance of geometric features.

Although spatial attention contributed substantially, the complete BAM model (accuracy: 98.44%) substantially outperformed any single-path model. BAM’s F1-score (0.9675) was 10.61 percentage points higher than that of standalone spatial attention, and its AUC (0.9694) was 4.36 percentage points higher. This robustly validated the synergistic effect between channel and spatial attention: through adaptive recalibration of feature channel weights, the channel path assisted the spatial path in focusing more precisely on style-relevant regions.

Compared with the well-established CBAM, the BAM model was 5.69 percentage points higher in accuracy (98.44% vs. 92.75%) and held advantages in F1-score (0.9675 vs. 0.9138), Recall (0.9480 vs. 0.9054), and AUC (0.9694 vs. 0.9689), with only a lower Precision (0.8690 vs. 0.9237). This result underscores the BAM module’s advantages in calligraphy image feature extraction: its dual-path integration is better suited to capturing the complex features of such cultural heritage data. Furthermore, BAM’s validation loss (0.0625) was lower than CBAM’s (0.0802), indicating stronger generalization ability.

Performance showed a gradual improvement from SE attention (accuracy: 89.43%) to ECA attention (90.67%), then to CBAM (92.75%), and finally to BAM (98.44%), mirroring the iterative optimization of attention mechanism design. BAM achieved the highest accuracy while maintaining low training and validation losses, showcasing a favorable balance between model complexity and performance.

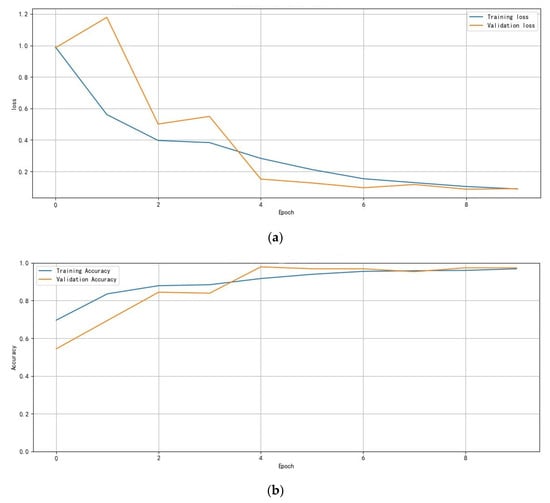

The loss and accuracy curves recorded during training are shown in Figure 7.

Figure 7.

Loss and accuracy curve. (a) Train and validation loss curve, (b) Train and validation accuracy curve.

As shown in Figure 7a, in the early stage of training (Epoch 0–5), the model quickly captures the high-frequency features of the training data with efficient parameter updates. The validation loss rises initially before declining: this is because the model tends to overfit the training data at first, leading to an increase in validation loss. However, as training progresses, the model gradually develops generalized representations, and the validation loss drops sharply.

In the middle stage of training (Epoch 5–10), both the training loss and validation loss decrease concurrently, indicating that the model enters a stable learning phase. During this period, it not only refines its fitting to the training data but also enhances its generalization ability.

In the late stage of training (Epoch 10–35), both losses stabilize, with the validation loss being slightly lower than the training loss. At this point, the model has fully grasped the core features of the data. A lower validation loss suggests that the model’s generalization error on the validation set is smaller than the fitting error on the training set.

As shown in Figure 7b, during the initial training phase (Epoch 0–15), the training accuracy rises rapidly. In this period, the model quickly captures the discriminative features of the training data, resulting in a marked enhancement in its classification capability.

Subsequently (Epoch 15–35), the validation accuracy aligns closely with the training accuracy—even reaching parity with or slightly exceeding the latter. This phenomenon fully reflects the model’s strong generalization ability.

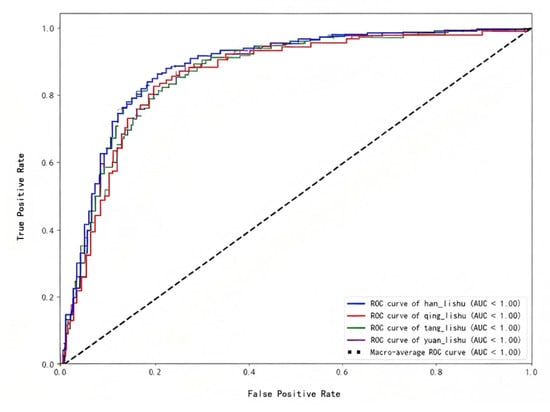

Figure 8 shows the multi-class ROC curves of the proposed calligraphy dynasty classification model on the test set. Key observations from the figure are as follows:

Figure 8.

Receiver Operating Characteristic (ROC) Curves for the Four-Dynasty Classification.

The individual ROC curves for all four dynasties assume a shape close to the ideal, with their AUC values all approaching 1.00. This demonstrates that the model has highly accurate recognition capability for calligraphy works of each dynasty. While the performance across all categories is exceptional, minor differences are still observable. For example, the ROC curves of Tang Dynasty Lishu (green) and Yuan Dynasty Lishu (purple) are closer to the upper-left corner than those of Han Dynasty Lishu (blue) and Qing Dynasty Lishu (orange), with their AUC values also being marginally higher.
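The per-dynasty AUC values and their macro average can be obtained with a one-vs-rest scheme, in which each class in turn is treated as positive and the remaining three as negative. The sketch below computes the AUC through its rank interpretation (the probability that a positive sample receives a higher score than a negative one); the predicted probabilities are invented for illustration and are not outputs of the proposed model.

```python
def binary_auc(scores, labels):
    """AUC as P(score of a positive > score of a negative); ties count as 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))

def macro_ovr_auc(probs, y_true, num_classes):
    """One-vs-rest AUC per class and their unweighted mean."""
    per_class = []
    for k in range(num_classes):
        scores = [row[k] for row in probs]
        labels = [1 if y == k else 0 for y in y_true]
        per_class.append(binary_auc(scores, labels))
    return sum(per_class) / num_classes, per_class

# Hypothetical predictions; class indices 0..3 = Han, Tang, Yuan, Qing
y_true = [0, 1, 2, 3, 0]
probs = [
    [0.7, 0.1, 0.1, 0.1],
    [0.1, 0.6, 0.2, 0.1],
    [0.1, 0.2, 0.6, 0.1],
    [0.4, 0.1, 0.1, 0.4],
    [0.3, 0.1, 0.1, 0.5],  # a Han sample that leans toward Qing
]
macro_auc, class_aucs = macro_ovr_auc(probs, y_true, 4)
```

In this toy example the single Han sample that leans toward Qing lowers the AUC of both the Han and the Qing curves while leaving Tang and Yuan at 1.0, the same kind of class-specific difference that the ROC plot makes visible.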

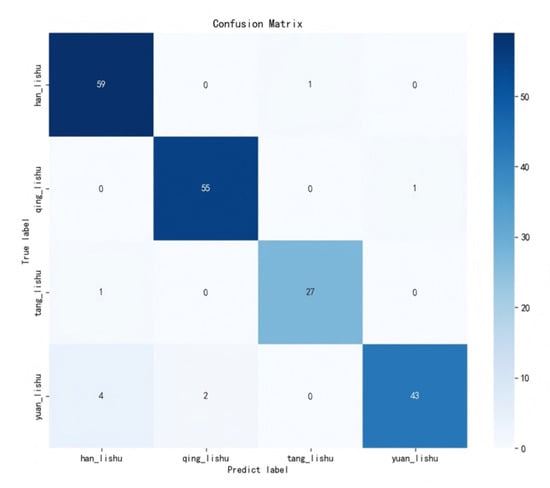

Figure 9 shows the normalized confusion matrix of the proposed model on the test set. Overall, the values on the main diagonal are significantly higher than those on the off-diagonals—indicating the model has a robust ability to discriminate between the calligraphy styles of the four dynasties. Nevertheless, the matrix also reveals notable error patterns, as detailed below:

Figure 9.

Normalized Confusion Matrix on the Test Set.

First, the most prominent confusion occurs between Han and Qing. Fifty-five Han samples were incorrectly classified as Qing, while four Qing samples were misclassified as Han. This mutual confusion strongly suggests a high degree of visual stylistic similarity between the two.

Second, a certain degree of confusion is also observed between Tang and Yuan, with 27 Tang samples misclassified as Yuan. Tang is characterized by rigorous regularity, while Yuan often integrates the brushwork of regular scripts. This confusion may stem from two factors: inherent structural commonalities between the two styles, or unrepresentative stylistic features in some samples of the dataset.

These findings not only validate the model’s effectiveness but also, more importantly, shed light on the model’s decision-making mechanism. They further offer directions for future optimization—for instance, developing more refined feature extraction strategies specifically for these easily confused dynasty style pairs.

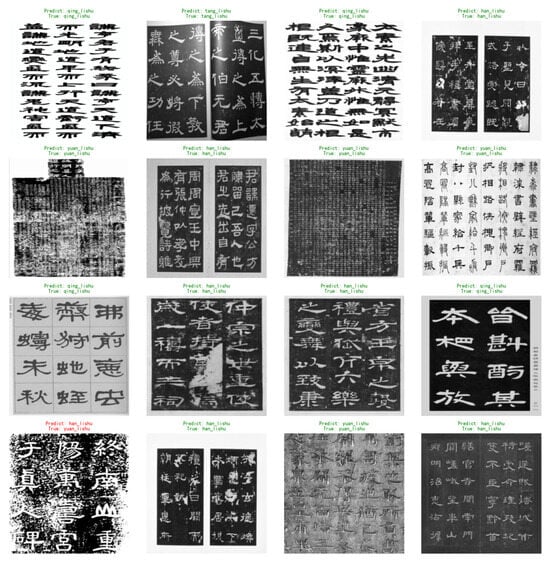

This section presents the classification results for calligraphy samples (specifically Lishu) from four dynasties: Han, Tang, Yuan, and Qing. As shown in Figure 10, the model demonstrates outstanding classification performance for samples from these four dynasties and is capable of accurately identifying unseen samples from the test set.

Figure 10.

Visualization of test set classification results.

3.3. Discussion

As shown in Table 4, a noteworthy finding requiring in-depth analysis is that ResNet-50 achieved the highest recall rate, whereas the conventional CNN model yielded the highest precision. This reflects the inherent characteristics of different model architectures: ResNet-50’s deep structure is more adept at “capturing” positive samples, yet this capability comes at the cost of potentially introducing more misclassifications; in contrast, the conventional CNN model is more “cautious,” with higher confidence levels for its positive predictions.

In comparison, the proposed model achieves a high-level balance across all metrics while maintaining exceptionally high accuracy and F1-score. This is attributable to the BAM attention mechanism’s precise focusing on key features, enabling overall performance that comprehensively outperforms the baseline models.

To evaluate the performance stability and statistical reliability of the attention-enhanced calligraphy image classification model, the model was further validated by 10-fold cross-validation. Table 6 shows the mean performance indicators and standard deviations of the model across the 10 folds.

Table 6.

Ten-fold cross-validation results.

The statistical results show that the model’s performance across the 10 folds is stable at a high level of around 98%, with a standard deviation of less than 0.015. The low standard deviation indicates that the model’s performance is minimally affected by how the data are partitioned: it shows no significant fluctuations across different splits and does not overfit any specific subset, which demonstrates strong generalization ability and robustness. Both precision and recall remain high, further confirming the model’s ability to keep false positives low while maintaining an extremely low false negative rate.
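The cross-validation protocol can be sketched in pure Python as follows. This is an illustrative outline, not the authors' implementation: the stratified splitting scheme, the function names, the toy label counts, and the use of the sample standard deviation are assumptions, and the fold scores in the usage example are made up.

```python
import random
import statistics

def stratified_kfold(labels, k=10, seed=0):
    """Split sample indices into k folds while keeping class proportions similar."""
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)  # deal samples round-robin
    return folds

def summarize(fold_scores):
    """Mean and sample standard deviation of a metric over the folds."""
    return statistics.mean(fold_scores), statistics.stdev(fold_scores)

# Toy label list (hypothetical class counts, not the real dataset)
labels = ["Han"] * 12 + ["Tang"] * 10 + ["Yuan"] * 10 + ["Qing"] * 8
folds = stratified_kfold(labels, k=5)

# In each round one fold serves as the held-out set and the rest as training data;
# the per-fold metric values are then aggregated:
mean_acc, std_acc = summarize([0.98, 0.97, 0.99])
```

Reporting the mean together with the standard deviation, as in Table 6, separates the model's typical performance from its sensitivity to a particular data partition.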

3.4. Error Analysis

The error analysis shows that the small number of misclassifications occur mainly on samples with blurred stylistic boundaries or similar character structures. For example:

Han dynasty clerical script (Hanli) and Qing dynasty clerical script (Qingli) share similar stylistic features, so some samples of the two are confused with each other.

Some Tang dynasty clerical script (Tangli) characters are structurally similar to Han clerical script (Hanli), resulting in occasional misclassification.

These confusions are reasonable from the standpoint of calligraphic style and are closely related to the brushwork, structure, and evolution of the different calligraphy styles.

Although the misclassifications identified in the error analysis can be partially attributed to the historical continuity and stylistic proximity between certain dynasties, several methodological improvements could further reduce these errors and enhance the robustness of the proposed model. A key direction is the incorporation of character-level geometric normalization, as variations in stroke proportion, rubbing distortion, and carving depth may obscure essential structural cues; techniques such as skeleton extraction, affine-invariant preprocessing, or stroke-width standardization could help the model better capture dynasty-specific morphology. In addition, expanding the dataset with transitional or stylistically ambiguous samples—either through targeted data collection or controlled synthetic augmentation—would allow the classifier to learn finer boundaries where historical styles overlap. Strengthening the feature extractor with multi-scale or fine-grained modules may also mitigate confusions such as Han–Tang or Han–Qing, where differences lie in subtle stroke angularity and serif formation. Moreover, contrastive learning paradigms that explicitly enforce larger inter-dynasty feature margins could improve separability in the embedding space, particularly for historically adjacent styles. Finally, integrating domain-specific constraints from calligraphy studies, such as stroke entry/exit patterns or radical-level structural rules, may provide discriminative cues that conventional CNN architectures do not explicitly model. Together, these improvements offer promising pathways for addressing the observed misclassifications and further enhancing the system’s interpretive reliability.

4. Conclusions

We constructed a high-quality Lishu rubbing dataset spanning four dynasties and implemented systematic preprocessing and normalization procedures. This dataset provides a reliable foundation for both training and objective comparison, addressing the lack of standardized Lishu classification resources in previous research. We designed an EfficientNet-B2 backbone integrated with a dual-path Bottleneck Attention Module (BAM), enabling the model to better capture the stroke-geometry and structural features characteristic of Lishu calligraphy. This tailored architecture significantly improves discriminative feature extraction while maintaining computational efficiency.

Through multi-fold validation, ablation studies, and cross-dynasty consistency analysis, the proposed model demonstrates stable performance and strong generalization across different writing styles, carving qualities, and rubbing variations. These experiments verify the practicality of the framework in real-world Lishu style analysis. The visualization results, including saliency-based attention maps and feature-space clustering, show that the model learns meaningful stylistic structures, reflecting historical evolution patterns within Lishu. This interpretability not only strengthens confidence in the model’s decisions but also suggests potential applications in digital humanities and calligraphy studies.

In future work, more lightweight or hierarchical attention architectures will be explored to reduce computational cost while preserving discriminative power, enabling deployment in mobile or museum-oriented digital-heritage platforms. Incorporating affine-transformation-invariant image classification through the Differentiable Arithmetic Distribution Module (ADM) [28] or generative augmentation strategies inspired by recent works on transformation-robust representation learning could strengthen the model’s adaptability to real-world variations such as distortion, partial erosion, and rubbing artifacts.

Author Contributions

Conceptualization, Y.L. and Y.M.; methodology, T.Z.; validation, Y.M.; writing original draft preparation, T.Z. and Y.L.; writing review and editing, Y.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Social Science Foundation of Shaanxi Province (No. 2022J043), and the College Students’ Innovative Entrepreneurial Training Plan Program (Nos. S202510702129 and X202510702200).

Data Availability Statement

The original contributions presented in this study are included in this article. Further inquiries can be directed to the corresponding author.

Acknowledgments

Special thanks are extended to the reviewers. The constructive comments put forward by the reviewers have helped us refine our research ideas and improve the quality of this paper.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Parashivamurthy, R.; Naveena, C.; Sharath Kumar, Y.H. SIFT and HOG features for the retrieval of ancient Kannada epigraphs. IET Image Process. 2020, 14, 4657–4662.

- Liao, M.; Pang, G.; Huang, J.; Hassner, T.; Bai, X. Mask textspotter v3: Segmentation proposal network for robust scene text spotting. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 706–722.

- Wang, P.; Zhang, C.; Qi, F.; Liu, S.; Zhang, X.; Lyu, P.; Shi, G. Pgnet: Real-time arbitrarily-shaped text spotting with point gathering network. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; Volume 35, pp. 2782–2790.

- Huang, L.K.; Tseng, H.T.; Hsieh, C.C.; Yang, C.S. Deep learning based text detection using resnet for feature extraction. Multimed. Tools Appl. 2023, 82, 46871–46903.

- Wang, X.; Gong, Z. RoDAL: Style generation in robot calligraphy with deep adversarial learning. Appl. Intell. 2024, 54, 7913–7923.

- Wang, P.; Hu, Y.; Peng, S.; Zhou, L. EMANet: An ancient text detection method based on enhanced-EfficientNet and multidimensional scale fusion. IEEE Internet Things J. 2024, 11, 32105–32116.

- Qi, H.; Yang, H.; Wang, Z.; Ye, J.; Xin, Q.; Zhang, C.; Lang, Q. AncientGlyphNet: An advanced deep learning framework for detecting ancient Chinese characters in complex scene. Artif. Intell. Rev. 2025, 58, 88.

- Huang, Q.; Li, M.; Agustin, D.; Li, L.; Jha, M. A novel CNN model for classification of Chinese historical calligraphy styles in regular script font. Sensors 2023, 24, 197.

- Wang, W.; Jiang, X.; Yuan, H.; Chen, J.; Wang, X.; Huang, Z. Research on algorithm for authenticating the authenticity of calligraphy works based on improved EfficientNet network. Appl. Sci. 2023, 14, 295.

- Wang, Z.; Zhang, C.; Lang, Q.; Jin, L.; Qi, H. HUNet: Hierarchical universal network for multi-type ancient Chinese character recognition. npj Herit. Sci. 2025, 13, 281.

- Han, K.; You, W.; Deng, H.; Sun, L.; Song, J.; Hu, Z.; Yi, H. LanT finding experts for digital calligraphy character restoration. Multimed. Tools Appl. 2024, 83, 64963–64986.

- Zerdoumi, S.; Jhanjhi, N.Z.; Habeeb, R.A.A.; Hashem, I.A.T. A deep learning based approach for extracting Arabic handwriting: Applied calligraphy and old cursive. PeerJ Comput. Sci. 2023, 9, e1465.

- Gao, X.; Yang, F.; Chen, T.; Si, J. Chinese character components segmentation method based on faster RCNN. IEEE Access 2022, 10, 98095–98103.

- Chen, J.; Huang, Z.; Jiang, X.; Yuan, H.; Wang, W.; Wang, J.; Xu, Z. Authenticity identification method for calligraphy regular script based on improved YOLOv7 algorithm. Front. Phys. 2024, 12, 1404448.

- Ye, X.L.; Zhang, H.B.; Yang, L.J.; Du, J.X. Radical Constraint-Based Generative Adversarial Network for Handwritten Chinese Character Generation. Comput. Inform. 2024, 43, 482–504.

- Yan, F.; Zhang, H. SMFNet: One-Shot Recognition of Chinese Character Font Based on Siamese Metric Model. IEEE Access 2024, 12, 38473–38489.

- Zhang, W.; Sun, Z.; Wu, X. An End-to-End Generation Model for Chinese Calligraphy Characters Based on Dense Blocks and Capsule Network. Electronics 2024, 13, 2983.

- Sun, M.; Gong, X.; Nie, H.; Iqbal, M.M.; Xie, B. Srafe: Siamese regression aesthetic fusion evaluation for Chinese calligraphic copy. CAAI Trans. Intell. Technol. 2023, 8, 1077–1086.

- Yoshida, S.; Yatoh, N.; Muneyasu, M. Aesthetic Evaluation of Chinese Calligraphy Using TabNet: Interpretability and Novel Features for Improved Accuracy. IEICE Trans. Fundam. Electron. Commun. Comput. Sci. 2025, 108, 357–361.

- Xu, P.; Zheng, X.; Chang, X.; Miao, Q.; Tang, Z.; Chen, X.; Fang, D. Artistic information extraction from Chinese calligraphy works via shear-guided filter. J. Vis. Commun. Image Represent. 2016, 40, 791–807.

- Si, H. Analysis of calligraphy Chinese character recognition technology based on deep learning and computer-aided technology. Soft Comput. 2024, 28, 721–736.

- Liang, D.T.; Liang, D.; Xing, S.M.; Li, P.; Wu, X.C. A robot calligraphy writing method based on style transferring algorithm and similarity evaluation. Intell. Serv. Robot. 2020, 13, 137–146.

- Lin, G.; Guo, Z.; Chao, F.; Yang, L.; Chang, X.; Lin, C.M.; Shang, C. Automatic stroke generation for style-oriented robotic Chinese calligraphy. Future Gener. Comput. Syst. 2021, 119, 20–30.

- Liu, L.; Xiong, X.; Wan, M.; Tao, C. Chinese calligraphy character generation with component-level style learning and structure-aware guidance. Appl. Soft Comput. 2025, 176, 113159.

- Zhao, B.; Feng, G.; Zhang, W.; Li, Y.; Miao, Q.; Liu, X.; Liu, R. One-shot handwriting imitation via self-supervised cross spatial transformer networks. Neurocomputing 2025, 642, 129969.

- Xin, Q.; Zhang, C.; Wang, Y.; Fan, C.; Yang, H.; Lang, Q.; Qi, H. DRA-Net: Dynamic Feature Fusion Upsampling and Text-Region Focus for Ancient Chinese Scene Text Detection. Electronics 2025, 14, 3324.

- Gao, P.C.; Gu, G.; Wu, J.Q.; Wei, B.G. Chinese calligraphic style representation for recognition. Int. J. Doc. Anal. Recognit. 2017, 20, 59–68.

- Tan, Z.; Dong, G.; Zhao, C.; Basu, A. Affine-transformation-invariant image classification by differentiable arithmetic distribution module. In Proceedings of the International Conference on Smart Multimedia, Los Angeles, CA, USA, 28–30 March 2024; pp. 78–90.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.