Abstract

Quantum entanglement plays a fundamental role in quantum mechanics, with applications in quantum computing. This study introduces a new approach that integrates quantum simulations, noise analysis, and fuzzy clustering to classify and evaluate the stability of quantum entangled states under noisy conditions. The Fuzzy C-Means (FCM) clustering model is applied to identify different categories of quantum states based on fidelity and entropy trends, allowing for a structured assessment of the impact of noise. The methodology follows five key phases: Bell-state simulation, introduction of noise channels (depolarization and phase damping), noise suppression using corrective operators, clustering-based state classification, and interpretability analysis using Explainable Artificial Intelligence (XAI) techniques. The results indicate that while moderate noise levels allow for partial state recovery, strong decoherence, particularly under depolarization, remains a major challenge. Rather than relying solely on noise suppression, a classification-based strategy is proposed to identify states that retain computational feasibility despite the effects of noise. This hybrid approach, combining quantum-state classification with AI-based interpretability, offers a new framework for assessing the resilience of quantum systems. The results have practical implications for quantum error correction, quantum cryptography, and the optimization of quantum technologies under realistic conditions.

Keywords:

quantum entanglement; quantum decoherence mitigation; fuzzy clustering in quantum systems; Explainable Artificial Intelligence (XAI)

MSC:

81P73

1. Introduction

Quantum entanglement, first highlighted by Einstein, Podolsky, and Rosen (1935) in their paper on what became known as the “EPR paradox” [1], and later described by Schrödinger as “the characteristic trait of quantum mechanics”, is a central phenomenon in quantum physics. Decades later, Bell’s theorem (1964) provided the theoretical foundation for experimentally testing the nonlocal correlations that define this phenomenon, showing that the correlations predicted by quantum mechanics cannot be reproduced by any local realistic theory [2].

Entanglement has played a fundamental role in the development of advanced quantum technologies, including quantum teleportation [3], quantum cryptography [4], and quantum computing [5]. Quantum teleportation leverages entanglement, together with classical communication, to transfer a quantum state between distant parties without physically transporting the particle that carries it. However, the practical implementation of these technologies faces significant challenges due to quantum noise, which degrades entanglement and reduces system fidelity.

Quantum noise, formally described using Kraus operators [6], arises from the unwanted interaction between quantum systems and their environment, leading to decoherence effects. Ensuring the viability of quantum teleportation and other quantum processes requires modeling, understanding, and mitigating these effects. Early work on quantum error-correction codes [7] demonstrated that noise effects can be minimized through corrective operators and stabilizer codes.

In this study, a five-phase methodological framework is proposed to address the challenges posed by quantum noise. This framework integrates advanced techniques such as Fuzzy C-Means for the fuzzy classification of quantum states and Explainable Artificial Intelligence (XAI) tools to interpret and justify the model’s decisions. Additionally, key quantum metrics, including fidelity and von Neumann entropy, are evaluated to analyze entanglement quality and the ability to recover initial states after noise introduction. The key contributions of this paper are as follows:

- Quantum Noise Modeling: Building on previous studies of quantum channels such as depolarization and phase noise [8], the effects of noise on Bell states are modeled, and their impact on fidelity and entropy is analyzed.

- Noise Suppression: Inspired by Steane’s work on error-correction codes and corrective operators [9], methods are implemented to restore the initial quantum state under different noise levels.

- Fuzzy and Hard Clustering: Models such as Fuzzy C-Means are applied [10] to classify quantum states based on fidelity and entropy, providing a probabilistic perspective on state transitions.

- XAI Integration: Techniques such as SHAP [11] are incorporated to explain which variables most influence state recovery and cluster classification.

- Practical Case Study: Detailed simulations based on modern quantum-computing platforms (Qiskit v. 0.7.2, 2023) are conducted to evaluate the impact of noise and the effectiveness of corrective operators in preserving quantum entanglement and mitigating decoherence effects [12].

The remainder of this article is structured as follows: Section 2 reviews the state of the art related to quantum noise and fuzzy classification models. Section 3 details the proposed methodology, structured into five phases, integrating both mathematical and computational aspects. Section 4 presents the simulations and the results obtained, followed by a comprehensive analysis. Section 5 discusses the practical implications, conclusions, and future directions. Finally, Section 6 presents some brief conclusions.

2. Related Work

The study of quantum entanglement has been an active area of research since its conceptualization, as it underpins various technological and theoretical applications. This phenomenon enables non-local correlations between particles, facilitating processes such as quantum computation and quantum cryptography. However, real-world quantum systems are susceptible to decoherence and noise, which degrade entanglement and hinder practical implementations.

This study explores recent advances in quantum noise analysis, state classification, and error-correction techniques. In particular, a new approach is introduced that combines Fuzzy C-Means clustering for quantum-state classification with Explainable Artificial Intelligence (XAI) to assess the impact of noise and corrective measures. This methodology enables a deeper understanding of quantum-state resilience, identifying optimal conditions to mitigate decoherence and improve quantum system stability.

2.1. Quantum Noise and Decoherence

Quantum noise, caused by the interaction of quantum systems with their environment, is one of the main practical limitations in maintaining entanglement. Quantum channels, such as the depolarizing channel, phase noise channel, and amplitude damping channel, are the most studied models for simulating this effect.

- Depolarizing Channel: Introduces uniform noise by replacing the state, with a given probability, by the maximally mixed state, and has been widely used to model the degradation of entanglement in experimental systems [8]. Depolarizing noise represents a worst-case scenario: in the limit of full depolarization, the quantum state loses all coherence and population information due to random errors. This type of noise is common in noisy intermediate-scale quantum (NISQ) devices and in situations where the system is subjected to uncontrolled interactions.

- Phase Noise: Models the loss of relative coherence between basis states, primarily affecting quantum interference [13]. Phase damping occurs when a quantum system loses coherence but retains population information: the diagonal elements (populations) of the density matrix remain unchanged, while the off-diagonal elements (coherences) decay, degrading superposition. This type of noise is less destructive than depolarization and appears in systems where phase information is lost due to environmental coupling without energy exchange.

- Amplitude Damping: Simulates the loss of energy from an excited state to the ground state [14]. It is especially relevant in physical systems where quantum information is stored in energy levels, such as trapped atoms and ions, superconducting qubits, and quantum dots.

The impact of quantum noise has been evaluated using metrics such as von Neumann entropy, which measures the degree of disorder or uncertainty in a quantum system [8].

2.2. Quantum Error Correction

Quantum error correction helps mitigate the effects of noise and maintain the fidelity of quantum systems. Stabilizer codes and corrective operators are key tools used for this purpose.

- Corrective Operators: These reverse the effects of specific noise channels by applying inverse operations in Hilbert space [7].

- Error-Correction Codes: Shor and Steane codes are examples of protocols that protect quantum systems against bit-flip and phase-flip errors [9].

However, implementing these techniques in quantum hardware involves high computational cost and the need for advanced physical resources.

2.3. Quantum-State Classification

The classification of quantum states under different levels of noise is a continuously evolving field. Clustering models, both hard clustering (K-Means) and fuzzy clustering (Fuzzy C-Means), have been used to analyze the transition between high-, moderate-, and low-fidelity states.

- K-Means: Identifies hard clusters and is useful for the fast analysis of large volumes of quantum data [14].

- Fuzzy C-Means: Provides degrees of membership to multiple clusters, better capturing the probabilistic nature of quantum states [15].

These models allow for a deeper understanding of how key metrics, such as fidelity and entropy, are distributed across different noise configurations.

2.4. XAI Techniques Applied to Quantum Systems

The integration of Explainable Artificial Intelligence (XAI) into quantum system analysis has recently attracted considerable attention. Methods such as SHAP (SHapley Additive Explanations) offer insights by quantifying the contribution of each feature in classification models, thereby enhancing interpretability.

- SHAP: Assesses the global impact of individual variables within the classification model, emphasizing key metrics such as fidelity and entropy [16].

Through these tools, researchers can better understand complex quantum behaviors, refine correction strategies and design more robust quantum systems capable of mitigating noise and decoherence.

2.5. Quantum Teleportation in the Presence of Noise

Quantum teleportation has been experimentally demonstrated under ideal conditions; however, its implementation in noisy systems remains a challenge. Recent studies have evaluated how teleportation fidelity varies depending on noise levels and the application of correction techniques [3].

Bell states have been identified as ideal for such processes owing to their robustness against noise [4]. However, their fidelity rapidly deteriorates in the presence of depolarizing or phase-noise channels, emphasizing the need for hybrid approaches that combine error-correction techniques with advanced analysis methods.

2.6. Web of Science

Next, a Web of Science analysis is carried out based on the following searches, in order to gain a deeper temporal perspective on how these topics have been integrated into quantum computing research:

- “Quantum noise AND entanglement”: Identifying articles that examine the impact of quantum noise on entangled systems.

- “Clustering AND quantum systems”: Searching for applications of Fuzzy C-Means in quantum-state classification.

- “Explainable AI AND quantum computing”: Identifying recent studies that integrate Explainable AI (XAI) into quantum analysis.

- “Quantum teleportation AND decoherence”: Reviewing both experimental and theoretical studies on quantum teleportation in the presence of noise.

This analysis will allow us to establish a solid foundation for understanding recent advances and identifying research gaps in these areas of quantum computing.

2.6.1. Quantum Noise and Entanglement

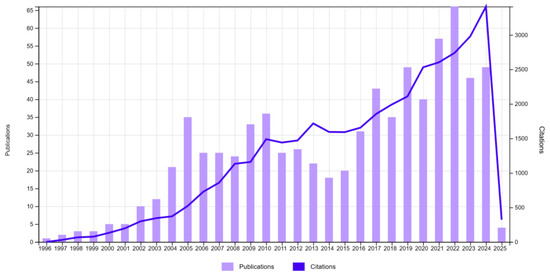

Figure 1 shows the evolution of publications and citations related to the topic “Quantum noise AND entanglement” on the Web of Science. The trends indicate a steady increase in research interest and impact over the past few decades.

Figure 1.

Publications (1312) and citations. TS = ((“QUANTUM NOISE”) AND (“ENTANGLEMENT”)).

2.6.2. Clustering and Quantum System

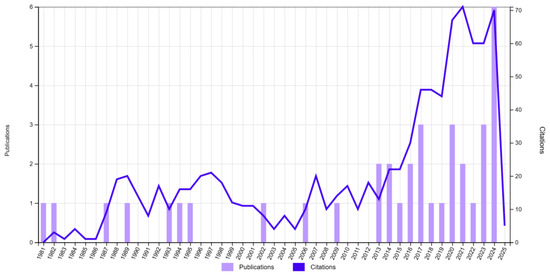

Figure 2 shows the evolution of publications and citations related to “Clustering AND quantum systems” on the Web of Science. The results indicate a low volume of publications compared with the other topics analyzed, which points to a research gap.

Figure 2.

Publications (37) and citations. TS = ((“CLUSTERING”) AND (“QUANTUM SYSTEM”)).

The scarcity of studies applying fuzzy clustering techniques highlights a significant research gap. Given the probabilistic nature of quantum states, fuzzy clustering could offer a more accurate representation of quantum classifications. Future research should explore the potential advantages of fuzzy methods in analyzing quantum-state distributions, entanglement classifications, and decoherence effects.

This finding reinforces the relevance of our approach to the integration of Fuzzy C-Means in the classification of quantum systems.

2.6.3. Explainable AI and Quantum Computing

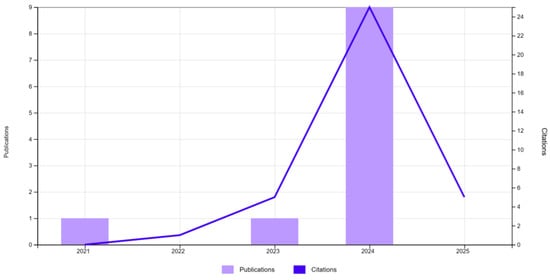

Figure 3 shows the evolution of the publications and citations related to “Explainable AI (XAI) and Quantum Computing”, which is an emerging field with very few publications until 2023. In 2024, there is a peak in publications and citations, suggesting growing interest in the scientific community.

Figure 3.

Publications (11) and citations. TS = ((“EXPLAINABLE AI”) AND (“QUANTUM COMPUTING”)).

This scenario presents a great research opportunity: as there are still few established studies, researchers can position themselves as pioneers in this field.

A Web of Science search using TS = (“EXPLAINABLE AI”) AND (“QUANTUM COMPUTING”) yielded a collection of studies focused on the integration of explainability within quantum computation. However, none of these papers specifically address the use of explainable AI (XAI) to analyze quantum entanglement or mitigate the effects of quantum noise. The retrieved publications cover various applications, such as quantum fuzzy expert systems [17], interpretability in quantum neural networks [18], and quantum graph-based learning [19]. In addition, some studies analyze the role of Boolean logic in explainable AI [20], quantum transfer learning [21] and the application of AI to hydrological sciences [22].

Despite the presence of research on quantum-enhanced AI models [23] and error correction in conversational AI [24], there is a notable absence of studies applying XAI to quantum entanglement analysis, noise suppression, or fidelity preservation. The existing literature focuses primarily on optimizing AI models rather than on employing explainability techniques for quantum-state characterization. This highlights an important research gap: no current work combines XAI and clustering techniques to analyze entanglement degradation under noise.

3. Methodology

This study is structured into five main phases, each designed to address key aspects of quantum noise analysis and mitigation in entangled systems. First, in Phase 1, initial quantum states are generated by preparing ideal Bell states and verifying their quality by means of fidelity and von Neumann entropy.

In Phase 2, quantum noise is introduced by simulating the degradation of quantum states through common noise channels, such as depolarization and phase damping, evaluating the impact in terms of entropy increase and fidelity reduction.

To counteract these effects, Phase 3 focuses on noise suppression and state recovery by implementing corrective operators and comparing the recovered states with the initial ones, using fidelity and entropy reduction as evaluation metrics.

In Phase 4, the quantum states are classified by applying clustering techniques such as Fuzzy C-Means, categorizing states into high, moderate, and low fidelity, while analyzing membership degrees and identifying the most relevant variables within each cluster.

Finally, in Phase 5, the time evolution of quantum states under different noise and correction configurations is evaluated, incorporating Explainable Artificial Intelligence (XAI) techniques to interpret the results and justify the model’s decisions.

This methodological framework provides a comprehensive approach to understanding the effects of quantum noise, state recovery mechanisms and classification strategies, thus contributing to the development of more robust quantum technologies.

3.1. Initial Quantum-State Generation

The generation of initial quantum states is the starting point of any analysis of entangled systems. The aim of this phase is to prepare an ideal state, free of noise, which allows the effects of the later stages of the process to be evaluated. The Bell state is one of the most studied entangled states due to its fundamental role in applications such as quantum teleportation and quantum cryptography [2].

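The Bell state $|\Phi^+\rangle$ for two qubits is defined as:

$$|\Phi^+\rangle = \frac{1}{\sqrt{2}}\left(|00\rangle + |11\rangle\right) \tag{1}$$

In terms of its density matrix $\rho$, the Bell state is represented as:

$$\rho = |\Phi^+\rangle\langle\Phi^+| = \frac{1}{2}\left(|00\rangle\langle 00| + |00\rangle\langle 11| + |11\rangle\langle 00| + |11\rangle\langle 11|\right) \tag{2}$$

where $|00\rangle$ and $|11\rangle$ are basis states in the Hilbert space, and the coherence between them (the off-diagonal elements) indicates perfect entanglement.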

The Bell state can be generated using a basic quantum circuit that employs two operations: the Hadamard gate (H), which acts on the first qubit to create a superposition, and the CNOT gate (CX), which correlates the state of the first qubit with the second, generating entanglement.

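The process begins by initializing both qubits in the ground state $|0\rangle$, such that the combined initial state is $|00\rangle$. Applying the Hadamard gate to the first qubit transforms it into an equal superposition of $|0\rangle$ and $|1\rangle$, leading to the intermediate state:

$$(H \otimes I)\,|00\rangle = \frac{1}{\sqrt{2}}\left(|00\rangle + |10\rangle\right) \tag{3}$$

Subsequently, the application of the CNOT gate, which flips the second qubit if and only if the first qubit is in state $|1\rangle$, results in the final Bell state:

$$\text{CNOT}\,\frac{1}{\sqrt{2}}\left(|00\rangle + |10\rangle\right) = \frac{1}{\sqrt{2}}\left(|00\rangle + |11\rangle\right) = |\Phi^+\rangle \tag{4}$$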

This process ensures the creation of a maximally entangled quantum state, where the measurement of one qubit instantaneously determines the state of the other, a key property exploited in quantum information protocols such as quantum teleportation and superdense coding.
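The two-gate preparation can be checked with a small statevector sketch. This is a minimal pure-Python illustration, not the Qiskit circuit used in the case study; the helper names `hadamard_on_q0` and `cnot_q0_to_q1` are hypothetical, and amplitudes are stored in the order |00⟩, |01⟩, |10⟩, |11⟩ with the first qubit as the left label.

```python
import math

# Basis ordering |q0 q1>: index = 2*q0 + q1, i.e. |00>, |01>, |10>, |11>.
# (Qiskit labels qubits in the opposite, little-endian order; the Bell state
#  (|00> + |11>)/sqrt(2) reads the same in both conventions.)

def hadamard_on_q0(psi):
    """Apply H to the first qubit: mixes the amplitudes of |0 q1> and |1 q1>."""
    s = 1 / math.sqrt(2)
    out = [0j] * 4
    for q1 in (0, 1):
        a0, a1 = psi[q1], psi[2 + q1]      # amplitudes of |0 q1> and |1 q1>
        out[q1] = s * (a0 + a1)
        out[2 + q1] = s * (a0 - a1)
    return out

def cnot_q0_to_q1(psi):
    """CNOT with control q0 and target q1: swaps the |10> and |11> amplitudes."""
    return [psi[0], psi[1], psi[3], psi[2]]

psi0 = [1 + 0j, 0j, 0j, 0j]                # |00>
psi_mid = hadamard_on_q0(psi0)             # (|00> + |10>)/sqrt(2)
bell = cnot_q0_to_q1(psi_mid)              # (|00> + |11>)/sqrt(2)
print([round(abs(a) ** 2, 3) for a in bell])   # probabilities of 00, 01, 10, 11
```

The printed probabilities are equal for |00⟩ and |11⟩ and zero for |01⟩ and |10⟩, which is the measurement signature of |Φ+⟩ reported later in the case study.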

The initial Bell state exhibits key quantum properties that determine its robustness against noise and its role in quantum protocols [25].

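- Quantum fidelity measures how close a state $\rho$ is to an ideal reference state $\sigma$ and is defined as:

$$F(\rho, \sigma) = \operatorname{Tr}\sqrt{\sqrt{\rho}\,\sigma\,\sqrt{\rho}} \tag{5}$$

It equals 1 for identical states; in the case of the ideal Bell state, $F = 1$.

- Von Neumann entropy evaluates the degree of disorder or uncertainty in a quantum system:

$$S(\rho) = -\operatorname{Tr}(\rho \ln \rho) = -\sum_i \lambda_i \ln \lambda_i \tag{6}$$

where $\lambda_i$ are the eigenvalues of $\rho$. For the pure Bell state $|\Phi^+\rangle$, entropy reaches its minimum value, $S = 0$.

- Concurrence quantifies the degree of entanglement in a two-qubit system. For the Bell state $|\Phi^+\rangle$, concurrence is maximized, $\mathcal{C} = 1$.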

The preparation of the Bell state serves as a primary benchmark for analyzing the impact of quantum noise in subsequent phases. Its fidelity, entropy, and concurrence provide metrics for evaluating the performance of the quantum system throughout the methodological process.
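For pure states, these metrics reduce to simple formulas: the fidelity with a pure reference is the overlap magnitude |⟨ψ|φ⟩| (unsquared convention), the entropy of any normalized pure state is 0, and the concurrence of a|00⟩ + b|01⟩ + c|10⟩ + d|11⟩ is 2|ad − bc|. The sketch below, with hypothetical helper names, evaluates them for |Φ+⟩ and, for contrast, for the product state |00⟩; it is an illustration, not the code used in the study.

```python
import math

def fidelity_pure(psi, phi):
    """Unsquared fidelity |<psi|phi>| between two normalized pure states."""
    return abs(sum(a.conjugate() * b for a, b in zip(psi, phi)))

def concurrence_pure(psi):
    """Concurrence 2|ad - bc| of the pure state a|00> + b|01> + c|10> + d|11>."""
    a, b, c, d = psi
    return 2 * abs(a * d - b * c)

s = 1 / math.sqrt(2)
bell = [complex(s), 0j, 0j, complex(s)]   # |Phi+> = (|00> + |11>)/sqrt(2)
product = [1 + 0j, 0j, 0j, 0j]            # |00>, not entangled

print(round(fidelity_pure(bell, bell), 6))    # fidelity of |Phi+> with itself
print(round(concurrence_pure(bell), 6))       # maximal entanglement
print(round(concurrence_pure(product), 6))    # no entanglement
```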

3.2. Introduction of Quantum Noise

The introduction of quantum noise in entangled systems is a useful tool to evaluate the robustness of quantum properties to decoherence. In this phase, the effects of two main types of noise are simulated: depolarization and phase noise, using quantum channel models. These channels directly affect the density matrix of the quantum system, modifying its coherence and entanglement properties.

3.2.1. Depolarizing Channel

The depolarizing channel introduces uniform noise into the quantum system, replacing the state with a completely mixed state with probability $p$. Mathematically, its effect on an initial density matrix $\rho$ is described as:

$$\mathcal{E}_{\text{dep}}(\rho) = (1 - p)\,\rho + p\,\frac{I}{d} \tag{7}$$

where $\mathcal{E}_{\text{dep}}$ represents the depolarizing channel operator, $p$ is the depolarization probability, $d$ is the dimension of the Hilbert space ($d = 4$ for two qubits), and $I$ is the identity matrix.

The depolarizing channel reduces entanglement by mixing the state with the maximally mixed state $I/d$, leading to a degradation of coherence and fidelity.
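The depolarizing channel can be sketched directly on a density matrix stored as nested lists. This is a minimal illustration under two stated assumptions: fidelity is computed in the unsquared form sqrt(⟨ψ|ρ|ψ⟩), which is valid because the reference |Φ+⟩ is pure, and the entropy uses the closed-form eigenvalues of a depolarized pure state, 1 − p + p/d (once) and p/d (d − 1 times). The helper names are hypothetical.

```python
import math

def outer(psi):
    """Density matrix |psi><psi| as nested lists."""
    return [[a * b.conjugate() for b in psi] for a in psi]

def depolarize(rho, p):
    """Depolarizing channel: (1 - p) rho + p I/d."""
    d = len(rho)
    return [[(1 - p) * rho[i][j] + (p / d if i == j else 0.0) for j in range(d)]
            for i in range(d)]

def fidelity_to_pure(rho, psi):
    """Unsquared fidelity sqrt(<psi|rho|psi>), valid when the reference psi is pure."""
    n = len(psi)
    val = sum(psi[i].conjugate() * rho[i][j] * psi[j] for i in range(n) for j in range(n))
    return math.sqrt(max(val.real, 0.0))

def entropy_depolarized_pure(p, d=4):
    """Entropy (natural log) of a depolarized pure state from its known eigenvalues."""
    eigs = [1 - p + p / d] + [p / d] * (d - 1)
    return max(0.0, -sum(x * math.log(x) for x in eigs if x > 0))  # clamp -0.0

s = 1 / math.sqrt(2)
bell = [complex(s), 0j, 0j, complex(s)]
for p in (0.0, 0.5, 1.0):
    rho = depolarize(outer(bell), p)
    print(p, round(fidelity_to_pure(rho, bell), 4), round(entropy_depolarized_pure(p), 4))
```

At p = 1 the state is I/4, so the entropy reaches ln 4 ≈ 1.386, the largest value possible for two qubits.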

3.2.2. Phase-Damping Channel

Phase damping is a type of quantum noise that selectively destroys quantum coherence without affecting the energy of the system. The phase-damping channel affects the relative coherence between the system’s basis states by introducing a random phase shift. Its effect on the initial density matrix $\rho$ is given by:

$$\mathcal{E}_{\text{pd}}(\rho) = (1 - p)\,\rho + p\,Z \rho Z \tag{8}$$

where $Z$ is the Pauli-Z operator and $p$ is the phase noise probability.

Phase noise primarily affects quantum interference, reducing coherence between superposed states while preserving population probabilities. This effect is important in quantum communication and computation, where maintaining coherence is a primary requirement for preserving quantum states.
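A minimal sketch of the phase-damping channel on the two-qubit Bell density matrix, assuming (the text does not specify this) that the single-qubit channel (1 − p)ρ + pZρZ is applied independently to each qubit. Because Z is diagonal, ZρZ only flips the sign of some off-diagonal entries, so the populations stay fixed while the |00⟩⟨11| coherence shrinks by a factor (1 − 2p) per qubit. The helper name `phase_damp_qubit` is hypothetical.

```python
import math

def phase_damp_qubit(rho, p, qubit):
    """Apply (1 - p) rho + p Z rho Z with Z acting on `qubit` (0 = first qubit).

    Basis order is |00>, |01>, |10>, |11>; Z is diagonal with entry -1
    wherever the chosen qubit is 1, so (Z rho Z)[i][j] = z_i * z_j * rho[i][j].
    """
    shift = 1 - qubit                        # bit position of the chosen qubit
    z = [1 - 2 * ((i >> shift) & 1) for i in range(4)]
    return [[((1 - p) + p * z[i] * z[j]) * rho[i][j] for j in range(4)]
            for i in range(4)]

bell_rho = [[0.5, 0.0, 0.0, 0.5],
            [0.0, 0.0, 0.0, 0.0],
            [0.0, 0.0, 0.0, 0.0],
            [0.5, 0.0, 0.0, 0.5]]            # |Phi+><Phi+|

p = 0.3
rho = phase_damp_qubit(phase_damp_qubit(bell_rho, p, 0), p, 1)
print([rho[i][i] for i in range(4)])         # populations are unchanged
print(rho[0][3])                             # coherence 0.5 * (1 - 2p)^2
```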

3.2.3. Noise Impact Evaluation

To quantify the impact of quantum noise on entangled states, two key metrics are used.

Von Neumann entropy, defined as:

$$S(\rho) = -\operatorname{Tr}(\rho \ln \rho) \tag{9}$$

measures the degree of disorder or uncertainty within a quantum system. For a pure state, the entropy remains at $S = 0$, whereas increasing noise levels lead to higher entropy values, indicating a progressive loss of quantum information.

On the other hand, quantum fidelity assesses the similarity between two quantum states, $\rho$ and $\sigma$, and is mathematically expressed as:

$$F(\rho, \sigma) = \operatorname{Tr}\sqrt{\sqrt{\rho}\,\sigma\,\sqrt{\rho}} \tag{10}$$

A fidelity value of $F = 1$ signifies that both states are identical, while lower values indicate increasing divergence caused by noise-induced alterations. These two metrics provide a detailed assessment of how noise affects entangled states, serving as a foundation for further corrective strategies.
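Evaluating the entropy of an arbitrary noisy state requires the eigenvalues of ρ. The sketch below is a minimal pure-Python version that assumes a real symmetric density matrix (true for the Bell state under the two channels considered here) and uses the cyclic Jacobi eigenvalue method; in practice a library routine such as QuTiP's `entropy_vn` and `fidelity` would be used instead. Fidelity is restricted to a pure reference state, where the general formula reduces to sqrt(⟨ψ|ρ|ψ⟩). All helper names are hypothetical.

```python
import math

def jacobi_eigenvalues(a, max_sweeps=100):
    """Eigenvalues of a real symmetric matrix via cyclic Jacobi rotations."""
    n = len(a)
    a = [list(map(float, row)) for row in a]
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < 1e-20:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(a[p][q]) < 1e-15:
                    continue
                # rotation angle chosen so that the (p, q) entry becomes zero
                theta = (a[q][q] - a[p][p]) / (2 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1))
                c = 1 / math.sqrt(t * t + 1)
                s = t * c
                for k in range(n):           # A <- A P (columns p and q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):           # A <- P^T A (rows p and q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return [a[i][i] for i in range(n)]

def von_neumann_entropy(rho):
    """S(rho) = -sum_i lambda_i ln lambda_i, ignoring numerically zero eigenvalues."""
    eigs = jacobi_eigenvalues(rho)
    return max(0.0, -sum(x * math.log(x) for x in eigs if x > 1e-12))

def fidelity_to_pure(rho, psi):
    """Unsquared fidelity sqrt(<psi|rho|psi>) for a real pure reference psi."""
    n = len(psi)
    val = sum(psi[i] * rho[i][j] * psi[j] for i in range(n) for j in range(n))
    return math.sqrt(max(val, 0.0))

s = 1 / math.sqrt(2)
bell = [s, 0.0, 0.0, s]
bell_rho = [[bell[i] * bell[j] for j in range(4)] for i in range(4)]
mixed = [[0.25 if i == j else 0.0 for j in range(4)] for i in range(4)]
print(round(von_neumann_entropy(bell_rho), 6), round(von_neumann_entropy(mixed), 6))
```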

3.3. Noise Suppression and State Recovery

Suppressing quantum noise is a necessary step to preserve quantum properties in real-world systems. The goal of this phase is to implement corrective operators to mitigate the effects of previously introduced noise and, as much as possible, restore the initial quantum state.

3.3.1. Corrective Operator

A corrective operator acts on a noisy quantum state to counteract the effect of noise:

$$\rho_{\text{rec}} = C\,\rho_{\text{noisy}}\,C^{\dagger} \tag{11}$$

where $C$ is the corrective operator designed to reverse the effect of the applied noise channel and $C^{\dagger}$ is the Hermitian adjoint of $C$. The design of $C$ depends on the type of noise channel:

- Depolarization: Transformations are applied to reinforce correlations between the system’s basis states.

- Phase Noise: Specific corrective operators realign the relative phases of the affected basis states.
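As a concrete, deliberately simple instance of ρ_rec = CρC†, suppose the noise acted as a single known phase flip on the first qubit, turning |Φ+⟩ into |Φ−⟩ = (|00⟩ − |11⟩)/√2. Choosing C = Z ⊗ I then realigns the relative phase and restores the Bell state. This is an illustrative assumption, not the corrective operator used in the study, and the helper names are hypothetical.

```python
import math

def matmul(a, b):
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]

def dagger(a):
    """Conjugate transpose of a square matrix."""
    n = len(a)
    return [[a[j][i].conjugate() for j in range(n)] for i in range(n)]

def apply_corrective(rho, c):
    """rho_rec = C rho C^dagger."""
    return matmul(matmul(c, rho), dagger(c))

def fidelity_to_pure(rho, psi):
    """Unsquared fidelity sqrt(<psi|rho|psi>) for a real pure reference psi."""
    n = len(psi)
    val = sum(psi[i] * rho[i][j] * psi[j] for i in range(n) for j in range(n))
    return math.sqrt(max(val.real, 0.0))

s = 1 / math.sqrt(2)
phi_plus = [s, 0.0, 0.0, s]
phi_minus = [s, 0.0, 0.0, -s]                 # |Phi+> after a Z flip on qubit 0
rho_noisy = [[a * b for b in phi_minus] for a in phi_minus]
z_on_q0 = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, -1, 0], [0, 0, 0, -1]]  # Z (x) I

rho_rec = apply_corrective(rho_noisy, z_on_q0)
print(round(fidelity_to_pure(rho_noisy, phi_plus), 6))   # before correction
print(round(fidelity_to_pure(rho_rec, phi_plus), 6))     # after correction
```

Because a unitary C only conjugates ρ, it leaves the eigenvalues of ρ, and therefore the von Neumann entropy, unchanged; full fidelity is recovered here only because the error was a known unitary rather than a probabilistic mixture.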

3.3.2. Evaluation Metrics

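Quantum fidelity measures the similarity between two quantum states $\rho$ and $\sigma$. In this phase, fidelity is evaluated between the initial state $\rho_{\text{initial}}$ and the recovered state $\rho_{\text{rec}}$ using Equation (10), that is, $F(\rho_{\text{initial}}, \rho_{\text{rec}})$.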

A value close to 1 indicates a near-perfect recovery of the initial state.

On the other hand, the von Neumann entropy is used to measure system disorder before and after correction.

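The entropy difference between the initial and recovered states provides a metric of correction success:

$$\Delta S = S(\rho_{\text{rec}}) - S(\rho_{\text{initial}}) \tag{12}$$

A significant reduction in entropy relative to the noisy state, reflected in a value of $\Delta S$ close to zero, indicates effective noise suppression.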

This phase aims to determine how well noise correction techniques can restore entangled quantum states, providing insight into their feasibility for real-world quantum technologies.

3.4. Quantum-State Classification

In this phase, quantum states are classified based on key characteristics such as noise level, fidelity, and entropy, allowing for the identification of patterns in their evolution and categorization according to performance metrics. The Fuzzy C-Means (FCM) algorithm classifies quantum states based on their fidelity and entropy, assigning degrees of membership to different clusters instead of forcing a hard classification [26].

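Given a set of $N$ quantum states represented by feature vectors $x_i = (F_i, S_i)$ of fidelity $F_i$ and entropy $S_i$, the algorithm minimizes the following objective function:

$$J_m = \sum_{i=1}^{N} \sum_{j=1}^{K} u_{ij}^{m}\,\lVert x_i - c_j \rVert^{2} \tag{13}$$

where $u_{ij}$ is the membership degree of state $i$ to cluster $j$, $c_j$ represents the centroid of cluster $j$, $K$ is the number of clusters, and $m > 1$ is the fuzziness parameter, which controls how diffuse the clustering is.

The centroid of each cluster is updated iteratively based on the weighted average of the assigned states:

$$c_j = \frac{\sum_{i=1}^{N} u_{ij}^{m}\, x_i}{\sum_{i=1}^{N} u_{ij}^{m}} \tag{14}$$

The membership degree of each quantum state to a given cluster is computed as:

$$u_{ij} = \frac{1}{\sum_{k=1}^{K} \left( \dfrac{\lVert x_i - c_j \rVert}{\lVert x_i - c_k \rVert} \right)^{\frac{2}{m-1}}} \tag{15}$$

where the denominator ensures that the membership degrees of each state sum to 1 across all clusters.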
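The alternating centroid and membership updates can be sketched in a few lines of Python. This is a minimal illustration (hypothetical function name `fuzzy_c_means`, random initial memberships with a fixed seed), not the implementation used in the study; the (fidelity, entropy) pairs are made-up values chosen only to form two visibly separated groups.

```python
import math
import random

def fuzzy_c_means(points, k, m=2.0, max_iter=200, tol=1e-8, seed=0):
    """Alternate the centroid and membership updates of Fuzzy C-Means."""
    rng = random.Random(seed)
    n, dim = len(points), len(points[0])
    u = []
    for _ in range(n):                       # random memberships, each row sums to 1
        row = [rng.random() + 1e-9 for _ in range(k)]
        total = sum(row)
        u.append([x / total for x in row])
    for _ in range(max_iter):
        # centroid update: weighted mean of the points with weights u_ij^m
        centroids = []
        for j in range(k):
            w = [u[i][j] ** m for i in range(n)]
            centroids.append(tuple(sum(w[i] * points[i][d] for i in range(n)) / sum(w)
                                   for d in range(dim)))
        # membership update; distances are floored to avoid division by zero
        new_u = []
        for i in range(n):
            dist = [max(math.dist(points[i], c), 1e-12) for c in centroids]
            new_u.append([1.0 / sum((dist[j] / dist[l]) ** (2 / (m - 1)) for l in range(k))
                          for j in range(k)])
        change = max(abs(new_u[i][j] - u[i][j]) for i in range(n) for j in range(k))
        u = new_u
        if change < tol:
            break
    return centroids, u

# Hypothetical (fidelity, entropy) pairs: three high-fidelity and three low-fidelity states.
states = [(0.98, 0.05), (0.95, 0.10), (0.97, 0.08),
          (0.55, 1.20), (0.50, 1.30), (0.60, 1.10)]
centroids, u = fuzzy_c_means(states, k=2)
for point, memberships in zip(states, u):
    print(point, [round(x, 3) for x in memberships])
```

Each printed row is a graded membership rather than a single label, which is the property that motivates FCM over hard clustering in the next paragraph.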

FCM was chosen over K-Means and Gaussian Mixture Models (GMMs) due to its ability to handle quantum uncertainty by assigning degrees of membership instead of forcing hard classification. Unlike K-Means, which assumes sharp cluster boundaries, and a GMM, which relies on probabilistic density functions that may not always align with quantum-state distributions, FCM provides a more flexible clustering approach [27]. This is particularly useful in quantum-state classification, where entanglement degradation occurs progressively rather than discretely.

Clustering provides a structured framework for understanding the impact of noise on quantum states, allowing for a data-driven approach to improving quantum-error-correction mechanisms. The classification of states enables the identification of transition thresholds, guiding the selection of corrective measures based on the observed fidelity and entropy levels. The use of Fuzzy C-Means further enhances this process by accommodating quantum uncertainty, ensuring a more nuanced understanding of quantum-state evolution under noisy conditions.

3.5. Temporal Evaluation and Advanced Metrics

3.5.1. Temporal Evolution

This phase analyzes the time evolution of quantum states under the effects of noise and correction. This evaluation provides insight into the dynamical behavior of quantum systems and their ability to preserve important properties such as fidelity and entanglement over time.

The time evolution of a quantum state under a noise channel can be modeled using the Kraus operator formalism:

$$\rho(t+1) = \sum_{k} E_k\,\rho(t)\,E_k^{\dagger}, \qquad \sum_{k} E_k^{\dagger} E_k = I \tag{16}$$

where $E_k$ represents the Kraus operators that characterize the noise channel, such as depolarization or phase damping.

To mitigate accumulated noise, corrective operators are applied at periodic intervals, modifying the state as follows:

$$\rho(t) \rightarrow C\,\rho(t)\,C^{\dagger} \tag{17}$$

The effect of corrective operators is evaluated using the previously defined metrics, quantum fidelity and von Neumann entropy. These metrics help determine the degree of degradation and the effectiveness of correction techniques.
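The Kraus-operator evolution with periodic correction can be sketched for a single qubit. Two assumptions, both purely illustrative: phase damping is written in Kraus form with E₀ = √(1 − p) I and E₁ = √p Z, and a hypothetical coherent phase drift Rz(θ) per step is used to show a case where a unitary correction Rz(−3θ), applied every three steps, restores the state exactly. Helper names are hypothetical.

```python
import cmath
import math

def matmul(a, b):
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]

def dagger(a):
    n = len(a)
    return [[a[j][i].conjugate() for j in range(n)] for i in range(n)]

def apply_kraus(rho, kraus):
    """One noise step: rho -> sum_k E_k rho E_k^dagger."""
    n = len(rho)
    out = [[0j] * n for _ in range(n)]
    for e in kraus:
        term = matmul(matmul(e, rho), dagger(e))
        for i in range(n):
            for j in range(n):
                out[i][j] += term[i][j]
    return out

def evolve(rho, kraus, steps, corrective=None, every=None):
    """Apply the channel `steps` times, conjugating by `corrective` every `every` steps."""
    history = [rho]
    for t in range(1, steps + 1):
        rho = apply_kraus(rho, kraus)
        if corrective is not None and every and t % every == 0:
            rho = matmul(matmul(corrective, rho), dagger(corrective))
        history.append(rho)
    return history

plus = [[0.5, 0.5], [0.5, 0.5]]                      # single qubit in |+>

# Phase damping in Kraus form: E_0 = sqrt(1 - p) I, E_1 = sqrt(p) Z.
p = 0.1
dephasing = [[[math.sqrt(1 - p), 0], [0, math.sqrt(1 - p)]],
             [[math.sqrt(p), 0], [0, -math.sqrt(p)]]]
hist = evolve(plus, dephasing, steps=5)              # coherence decays as 0.5 (1 - 2p)^t

# Hypothetical coherent drift Rz(theta) per step, undone by Rz(-3 theta) every 3 steps.
theta = 0.2
drift = [[cmath.exp(-0.5j * theta), 0], [0, cmath.exp(0.5j * theta)]]
undo = [[cmath.exp(1.5j * theta), 0], [0, cmath.exp(-1.5j * theta)]]
hist2 = evolve(plus, [drift], steps=6, corrective=undo, every=3)
print(abs(hist[5][0][1]), abs(hist2[2][0][1] - 0.5), abs(hist2[3][0][1] - 0.5))
```

In this toy setting the dephasing coherence decays monotonically, whereas the coherent drift is undone exactly at steps 3 and 6.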

3.5.2. Explainable Artificial Intelligence (XAI)

To understand the contribution of different features (noise level, entropy, correction intensity, and time step) to the fidelity of the quantum state, SHAP values are used, which provide a quantitative measure of feature importance in the prediction model.

To this end, a Random Forest Regressor is trained on a dataset containing key parameters of the quantum system: noise level, entropy, correction intensity, and time step. This model predicts the fidelity of quantum states from these input features and makes it possible to determine which factors most significantly affect state degradation and recovery.

The process begins with the simulation of quantum states subjected to depolarization noise, where noise levels range from 0 to 1. Corrective operators with varying intensities (from 0.5 to 2.0) are applied at different steps to counteract the noise effects. The von Neumann entropy and quantum fidelity are computed after each correction, creating a dataset that encapsulates the temporal evolution of quantum-state degradation and recovery. By training a Random Forest model, SHAP values can be extracted, which provide a quantitative measure of feature importance in determining fidelity restoration. This allows us to pinpoint which parameters have the most significant influence on quantum-state preservation.

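SHAP assigns an importance value to each feature by computing its impact on the model’s output. The SHAP value $\phi_j$ for a given feature $j$ is computed as:

$$\phi_j = \sum_{\mathcal{S} \subseteq \mathcal{F} \setminus \{j\}} \frac{|\mathcal{S}|!\,\left(|\mathcal{F}| - |\mathcal{S}| - 1\right)!}{|\mathcal{F}|!} \left[ f(\mathcal{S} \cup \{j\}) - f(\mathcal{S}) \right] \tag{18}$$

where $\mathcal{S}$ is a subset of the set $\mathcal{F}$ of all input features excluding feature $j$, $f(\mathcal{S})$ is the prediction of the model when only the features in $\mathcal{S}$ are used, and the factorial weight normalizes the sum over all subsets to ensure fair contribution allocation.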
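The Shapley sum can be evaluated exactly when the number of features is small. The sketch below computes Shapley values by brute force over all subsets for a hypothetical toy fidelity function of the four features named above; it is not the Random Forest model of the study, and the feature effects are invented for illustration. Libraries such as SHAP approximate or specialize this computation (for tree ensembles, via TreeSHAP) rather than enumerating every subset.

```python
from itertools import combinations
from math import factorial

def shapley_values(features, value):
    """Exact Shapley values of a set function value(frozenset) -> float."""
    n = len(features)
    phi = {}
    for j in features:
        others = [f for f in features if f != j]
        total = 0.0
        for r in range(n):                       # subset sizes 0 .. n-1
            for subset in combinations(others, r):
                s = frozenset(subset)
                weight = factorial(len(s)) * factorial(n - len(s) - 1) / factorial(n)
                total += weight * (value(s | {j}) - value(s))
        phi[j] = total
    return phi

# Hypothetical fidelity "model": baseline 0.9, additive feature effects,
# plus a small noise-level x correction interaction. Absent features contribute nothing.
effects = {"noise_level": -0.30, "entropy": -0.15, "correction": 0.10, "time_step": -0.05}

def toy_fidelity(subset):
    v = 0.9 + sum(effects[f] for f in subset)
    if "noise_level" in subset and "correction" in subset:
        v += 0.04
    return v

phi = shapley_values(list(effects), toy_fidelity)
print({f: round(v, 4) for f, v in phi.items()})
```

The interaction term is split equally between the two features involved, and the values sum to the difference between the full-feature and empty-feature predictions (the efficiency property that makes the allocation "fair").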

By applying SHAP values to our dataset, the contribution of each characteristic (noise level, entropy, correction applied, and time step) to the predicted fidelity can be quantified, allowing us to identify dominant trends in quantum-state evolution under noisy conditions.

To further assess the behavior of quantum states under noise and correction, two advanced temporal metrics are introduced:

- Temporal Degradation Rate ($R_{\text{deg}}$) measures how quickly fidelity declines due to noise accumulation over time:

$$R_{\text{deg}} = -\frac{\Delta F}{\Delta t} \tag{19}$$

where $\Delta F$ is the fidelity change over $\Delta t$ time steps. A higher degradation rate indicates rapid quantum information loss.

- Corrective Recovery Rate ($R_{\text{rec}}$) evaluates the effectiveness of noise suppression after applying a corrective operator:

$$R_{\text{rec}} = \frac{F_{\text{after}} - F_{\text{before}}}{F_{\text{before}}} \tag{20}$$

where $F_{\text{after}}$ is the fidelity after correction and $F_{\text{before}}$ is the fidelity before correction. This metric quantifies the efficacy of noise-suppression strategies over time.
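Both temporal metrics are simple to compute from a fidelity trace. This is a minimal sketch assuming R_deg = −ΔF/Δt and the relative-gain reading R_rec = (F_after − F_before)/F_before; the function names and the fidelity trace are hypothetical and not data from the study.

```python
import math

def degradation_rates(fidelities, dt=1):
    """R_deg = -(F[t + dt] - F[t]) / dt for every window of dt time steps."""
    return [-(fidelities[t + dt] - fidelities[t]) / dt for t in range(len(fidelities) - dt)]

def recovery_rate(f_before, f_after):
    """R_rec = (F_after - F_before) / F_before: relative fidelity gain from one correction."""
    return (f_after - f_before) / f_before

trace = [1.00, 0.90, 0.80, 0.75]   # hypothetical fidelity trace, not data from the study
print([round(r, 3) for r in degradation_rates(trace)])
print(round(recovery_rate(0.60, 0.90), 3))
```

Positive R_deg values correspond to fidelity loss, so a slowing decline shows up as a shrinking rate; R_rec is dimensionless, which makes corrections applied at different fidelity levels comparable.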

By integrating SHAP analysis, temporal modeling, and corrective operations, this phase provides a comprehensive assessment of quantum decoherence and its mitigation. The explainability derived from SHAP allows us to distinguish the parameters that most affect fidelity, optimize noise-correction strategies, and develop more robust approaches to preserve quantum coherence. These insights help to improve the stability of quantum protocols for communication, teleportation, and computation, ensuring that quantum systems can operate effectively even under realistic noise conditions.

4. Case Study

4.1. Initial Quantum-State Generation

The first step in our study is to generate an ideal Bell state, which serves as a reference for evaluating quantum noise and corrective strategies in subsequent phases. To achieve this, a quantum circuit that creates the Bell state $|\Phi^+\rangle$, a maximally entangled two-qubit state, is implemented using Qiskit.

The quantum circuit consists of the following operations:

- Hadamard gate ($H$) on Qubit 0: creates an equal superposition of $|0\rangle$ and $|1\rangle$.

- CNOT gate ($CX$) between Qubit 0 (control) and Qubit 1 (target): correlates the first qubit with the second, establishing quantum entanglement.

After the circuit was executed on Qiskit’s AerSimulator, the resulting state vector was extracted.

The state vector in quantum simulation provides a complete description of the quantum system in the computational basis. In this case, after applying the Hadamard and CNOT gates, the system is in the Bell state $|\Phi^+\rangle$, mathematically defined in Equation (4).

This representation indicates that the system exists in a superposition of the $|00\rangle$ and $|11\rangle$ states with equal probability amplitudes.

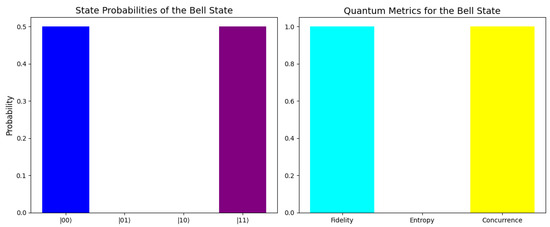

To confirm the accuracy of the generated Bell state, three key metrics were computed. Quantum fidelity measures the similarity between the simulated and theoretical Bell states, with a value close to 1 indicating accurate state preparation. Von Neumann entropy evaluates the system’s uncertainty, where a pure state like the Bell state should have an entropy of zero. Finally, concurrence quantifies the degree of entanglement, with a value of 1 representing maximum entanglement, which is a defining characteristic of an ideal Bell state, Figure 4.

Figure 4.

State probabilities and quantum metrics of the Bell state.

- A fidelity of 1 indicates that the generated state perfectly matches the ideal Bell state. This validates that the quantum circuit and the simulation work optimally in a noise-free environment.

- A value of 0 in the von Neumann entropy calculation confirms that the state is pure, which is a key feature of Bell states in an ideal environment.

- A concurrence value of 1 indicates that the state is maximally entangled, which is characteristic of Bell states.

The quantum circuit successfully generated the Bell state, as confirmed by the state probability distribution. The unit fidelity, zero entropy, and unit concurrence confirm that the prepared state is pure and maximally entangled.

4.2. Impact of Quantum Noise on Entropy and Fidelity

Quantum systems are inherently fragile and susceptible to environmental noise, which affects their coherence and entanglement properties. Understanding the effects of different noise channels on quantum states is important for advancing quantum computing and quantum communication.

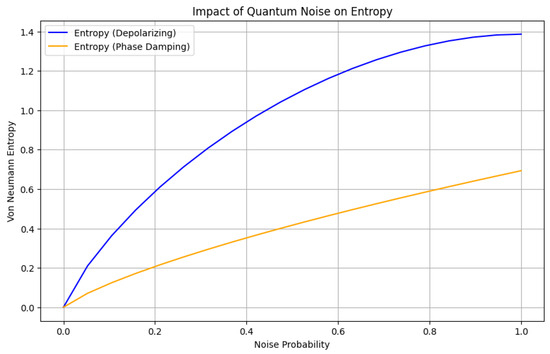

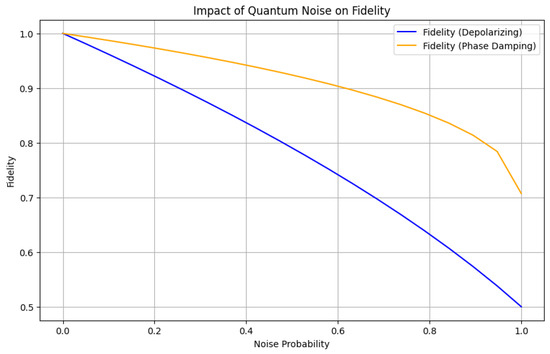

Using the QuTiP library, the depolarizing and phase-damping channels defined in Equations (7) and (8) are simulated, with the noise probability varied from 0 to 1. Figure 5 and Figure 6 show the resulting effects of noise on entropy and fidelity, respectively.

Figure 5.

Impact of quantum noise on entropy.

Figure 6.

Impact of quantum noise on fidelity.

Figure 5 shows that von Neumann entropy increases with noise probability. Depolarizing noise (blue curve) leads to a rapid entropy increase, reaching approximately 1.4 at , indicating complete randomization of the state. In contrast, phase damping (orange curve) increases entropy more gradually, peaking at , demonstrating that it primarily affects coherence rather than completely mixing the state.

Figure 6 highlights the decline in fidelity as noise probability increases. Depolarizing noise causes a steep decrease in fidelity, reaching at , confirming severe degradation of the quantum state. Phase damping, however, preserves fidelity for longer, maintaining values above , even at high noise probabilities. This result suggests that depolarizing noise is significantly more disruptive to quantum entanglement than phase damping.
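The qualitative contrast between the two channels can be illustrated with closed-form two-qubit models. This sketch makes two assumptions:

- a global depolarizing channel $\rho \mapsto (1-p)\,|\Phi^+\rangle\langle\Phi^+| + p\,I/4$;
- a dephasing model that multiplies the $|00\rangle\langle 11|$ coherences of the Bell state by $(1-p)$.

These are illustrative choices and may not coincide with Equations (7) and (8) or with the QuTiP operators used in the study. Both models keep the state diagonal in the Bell basis, so its eigenvalues are simply the four Bell-basis weights. The entropy (natural logarithm), the fidelity with $|\Phi^+\rangle$ and the Bell-diagonal concurrence $\max(0, 2\lambda_{\max} - 1)$ all follow directly from those weights.

```python
import math

# Assumed channel models (a sketch, not necessarily the paper's Equations (7)-(8)):
#   depolarizing:  rho -> (1 - p) |Phi+><Phi+| + p I/4
#   phase damping: coherences |00><11| and |11><00| scaled by (1 - p)
# Both keep the state Bell-diagonal: it is fully described by its weights on
# (Phi+, Phi-, Psi+, Psi-), which are also its eigenvalues.


def depolarized_weights(p):
    return [1 - 3 * p / 4, p / 4, p / 4, p / 4]


def dephased_weights(p):
    return [1 - p / 2, p / 2, 0.0, 0.0]


def entropy_nats(weights):
    """Von Neumann entropy -sum(l ln l) over the nonzero eigenvalues."""
    return sum(-w * math.log(w) for w in weights if w > 0)


def fidelity_with_phi_plus(weights):
    """<Phi+|rho|Phi+> is simply the Phi+ weight."""
    return weights[0]


def concurrence_bell_diagonal(weights):
    """Closed form for Bell-diagonal states: max(0, 2 * largest weight - 1)."""
    return max(0.0, 2 * max(weights) - 1)


for p in (0.0, 0.25, 0.5, 0.75, 1.0):
    dep, deph = depolarized_weights(p), dephased_weights(p)
    print(f"p={p:.2f} | depolarizing S={entropy_nats(dep):.3f} "
          f"F={fidelity_with_phi_plus(dep):.3f} C={concurrence_bell_diagonal(dep):.3f} "
          f"| phase damping S={entropy_nats(deph):.3f} "
          f"F={fidelity_with_phi_plus(deph):.3f} C={concurrence_bell_diagonal(deph):.3f}")
```

At $p = 1$ the two assumed models behave very differently:

- The depolarized state is maximally mixed, with $S = \ln 4 \approx 1.386$ and $F = 0.25$. This entropy is consistent with the value of about 1.4 reported for Figure 5 if entropy is measured in nats.
- The dephased state reaches only $S = \ln 2 \approx 0.693$, with $F = 0.5$.

The concurrence of the depolarized state vanishes for $p \ge 2/3$, whereas under dephasing it decreases linearly as $1 - p$.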

The results indicate that depolarizing noise leads to a faster loss of entanglement and coherence. Phase damping, although detrimental, allows quantum correlations to be partially retained, making it less destructive for certain quantum protocols. Both results highlight the need for quantum-error-correction techniques to mitigate the impact of noise and ensure the stability of quantum states in real applications. Understanding how these noise channels differ can inform the design of more resilient quantum algorithms and hardware architectures, paving the way for practical quantum computing.

4.3. Noise Suppression and Effectiveness Analysis

To assess the impact of noise mitigation, a corrective operator designed to partially restore quantum coherence was introduced. This operator was applied after noise injection, aiming to counteract the decoherence effects induced by depolarizing noise and phase damping. The effectiveness of this approach was evaluated by comparing fidelity and von Neumann entropy before and after correction.

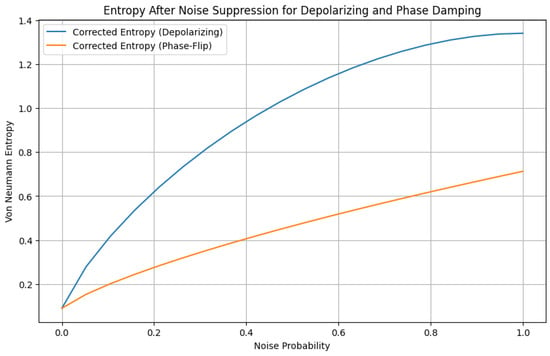

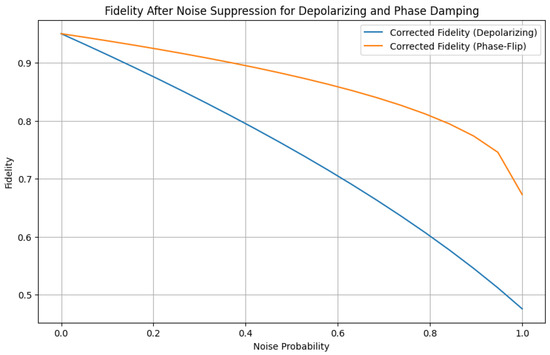

Figure 7 and Figure 8 show the results of the noise-suppression process, from which the following observations can be made:

Figure 7.

Entropy after noise suppression for depolarizing and phase damping.

Figure 8.

Fidelity after noise suppression for depolarizing and phase damping.

- Depolarizing Noise Remains Highly Disruptive. Fidelity continued to decrease rapidly, even after correction, indicating that depolarizing noise introduces randomness that cannot be easily reversed. Entropy remained high, confirming that the quantum state was still highly mixed and unsuitable for computation.

- Phase Damping Demonstrates Greater Stability. Fidelity declined more gradually, suggesting that some coherence was retained after noise was applied. Entropy increased steadily but remained significantly lower than under depolarizing noise, meaning that the system retained more structure despite decoherence.

- Noise Suppression Had a Limited Effect. The corrective operator did not significantly alter the fidelity or entropy trends. This suggests that a simple corrective operator of this kind is insufficient to counteract quantum noise, particularly depolarization. Phase damping proved more manageable, but even in this case coherence recovery was not substantial.

Noise suppression alone does not fully restore entanglement, especially in the presence of strong decoherence. Depolarizing noise remains the most destructive, requiring advanced error-correction techniques to mitigate its effects. Phase damping, while less damaging, still imposes coherence loss, though some entangled states may remain useful post-noise.
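This section does not specify the form of the corrective operator. Suppose, as an assumption made only for this remark, that it is unitary. Two standard identities then explain why such a correction cannot lower the entropy and, for the depolarized Bell state, cannot raise the fidelity. First, the spectrum of $\rho$ is invariant under $U$. Second, the best fidelity any unitary can reach with a pure target is the largest eigenvalue of $\rho$:

$$
S\left(U\rho U^{\dagger}\right) = -\operatorname{Tr}\left[U\rho U^{\dagger}\,U(\ln\rho)U^{\dagger}\right] = -\operatorname{Tr}\left[\rho\ln\rho\right] = S(\rho),
\qquad
\max_{U}\,\langle\Phi^{+}|\,U\rho U^{\dagger}\,|\Phi^{+}\rangle = \lambda_{\max}(\rho).
$$

For a depolarized Bell state of the assumed form $\rho_p = (1-p)\,|\Phi^+\rangle\langle\Phi^+| + p\,I/4$, the largest eigenvalue is already the fidelity of the uncorrected state:

$$
\lambda_{\max}(\rho_p) = 1 - \tfrac{3p}{4} = \langle\Phi^{+}|\rho_p|\Phi^{+}\rangle, \qquad 0 \le p \le 1.
$$

Genuine recovery therefore requires non-unitary operations, such as the measurement- and ancilla-based procedures of quantum error correction.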

Rather than pursuing further noise suppression, a classification-based approach is therefore proposed. Fuzzy C-Means clustering is applied to categorize entangled states by their fidelity and entropy trends. This separates quantum states that remain viable for computation from those that have undergone irreversible degradation.

Shifting the focus from noise elimination to state classification gives a deeper understanding of quantum-state resilience. It also helps optimize strategies for mitigating quantum decoherence in practical applications.

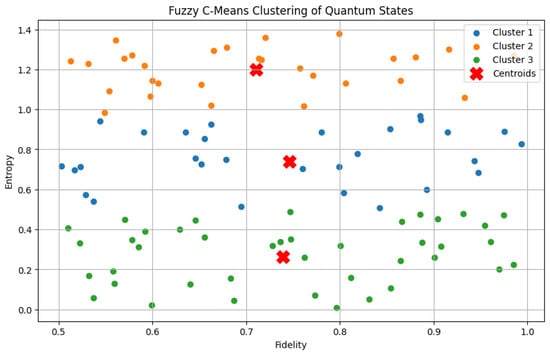

4.4. Analysis of Clustering Results

Applying Fuzzy C-Means clustering to the fidelity and entropy values of the quantum states yielded three distinct clusters. These clusters reflect different behaviors in the evolution of quantum states under noise. The fuzziness parameter m was set to a typical value within the standard range (1.1–2.0) to balance classification sharpness against overlapping membership. While this choice aligns with prior applications of FCM in uncertain environments, investigating the effect of other values of m could improve clustering performance.
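The clustering step can be sketched with Bezdek's alternating updates for FCM. Memberships follow $u_{ji} = \left[\sum_{k} (d_{ji}/d_{jk})^{2/(m-1)}\right]^{-1}$, where $d_{ji}$ is the distance from state $j$ to centre $i$. Each centre is the $u^m$-weighted mean of the states. The code below is a minimal pure-Python sketch, not the implementation used in the study. The farthest-first initialization, the tolerance, the choice $m = 2$ and the (fidelity, entropy) points are hypothetical values chosen only to make the example self-contained.

```python
import math


def memberships(points, centers, m):
    """FCM membership update: u[j][i] = 1 / sum_k (d_ji / d_jk) ** (2 / (m - 1))."""
    exp = 2.0 / (m - 1.0)
    u = []
    for x in points:
        d = [math.dist(x, v) for v in centers]
        if min(d) < 1e-12:
            # The point coincides with a centre: give it crisp membership there.
            k = d.index(min(d))
            u.append([1.0 if i == k else 0.0 for i in range(len(centers))])
        else:
            u.append([1.0 / sum((di / dk) ** exp for dk in d) for di in d])
    return u


def update_centers(points, u, m):
    """FCM centre update: average of the points weighted by u[j][i] ** m."""
    dim = len(points[0])
    centers = []
    for i in range(len(u[0])):
        w = [row[i] ** m for row in u]
        total = sum(w)
        centers.append(tuple(sum(wj * x[k] for wj, x in zip(w, points)) / total
                             for k in range(dim)))
    return centers


def fuzzy_c_means(points, c, m=2.0, max_iter=300, tol=1e-9):
    """Alternate membership and centre updates until the centres stop moving."""
    # Deterministic farthest-first seeding: an illustrative choice, since FCM is
    # often started from random memberships instead.
    centers = [tuple(points[0])]
    while len(centers) < c:
        centers.append(tuple(max(points, key=lambda x: min(math.dist(x, v) for v in centers))))
    for _ in range(max_iter):
        u = memberships(points, centers, m)
        new_centers = update_centers(points, u, m)
        shift = max(math.dist(a, b) for a, b in zip(centers, new_centers))
        centers = new_centers
        if shift < tol:
            break
    return centers, memberships(points, centers, m)


# Hypothetical (fidelity, entropy) pairs in three loose groups; not the study's data.
points = [
    (0.96, 0.08), (0.94, 0.12), (0.95, 0.10), (0.97, 0.11),
    (0.72, 0.70), (0.70, 0.74), (0.69, 0.69), (0.71, 0.72),
    (0.40, 1.30), (0.42, 1.28), (0.38, 1.33), (0.41, 1.31),
]
centers, u = fuzzy_c_means(points, c=3, m=2.0)
labels = [max(range(3), key=lambda i: row[i]) for row in u]  # hard label = arg-max membership
for fid, ent in sorted(centers, key=lambda v: v[1]):
    print(f"centre: fidelity={fid:.3f}, entropy={ent:.3f}")
```

Taking the arg-max of each row of `u` gives a hard label per state. The membership degrees themselves are what allow borderline states to be ranked within a cluster.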

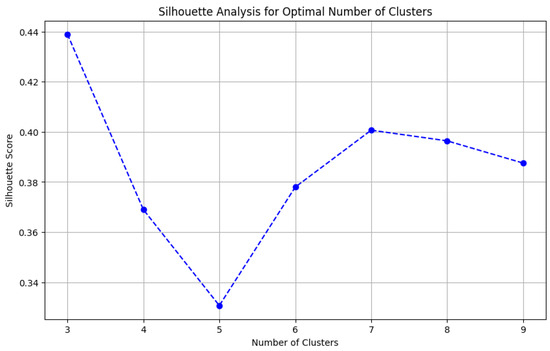

The silhouette analysis indicates that three clusters provide a meaningful partition of the dataset. Using more clusters does not significantly improve separability, which supports the conclusion that three groups capture the underlying patterns in the data (Figure 9).

Figure 9.

Silhouette analysis for optimal number of clusters.
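The silhouette criterion used to choose the number of clusters can be computed directly from its definition, $s(i) = (b_i - a_i)/\max(a_i, b_i)$. Here $a_i$ is the mean distance from state $i$ to the other members of its cluster, and $b_i$ is the smallest mean distance to any other cluster. This sketch assumes hard labels, for example the arg-max of the FCM memberships. That is one common way to apply silhouette analysis to fuzzy clusters; the study does not state which variant was used. The points and labels in the usage example are hypothetical.

```python
import math


def silhouette_score(points, labels):
    """Mean silhouette over all points (Euclidean distance, hard labels)."""
    n = len(points)
    clusters = sorted(set(labels))
    if len(clusters) < 2:
        raise ValueError("silhouette analysis needs at least two clusters")
    total = 0.0
    for i in range(n):
        own = labels[i]
        if labels.count(own) == 1:
            continue  # singleton cluster: s(i) = 0 by convention
        # Mean distance from point i to the other members of each cluster.
        mean_d = {}
        for c in clusters:
            ds = [math.dist(points[i], points[j]) for j in range(n)
                  if labels[j] == c and j != i]
            mean_d[c] = sum(ds) / len(ds)
        a = mean_d[own]
        b = min(mean_d[c] for c in clusters if c != own)
        total += (b - a) / max(a, b)
    return total / n


# Hypothetical (fidelity, entropy) points in three groups; not the study's data.
points = [
    (0.96, 0.08), (0.94, 0.12), (0.95, 0.10), (0.97, 0.11),
    (0.72, 0.70), (0.70, 0.74), (0.69, 0.69), (0.71, 0.72),
    (0.40, 1.30), (0.42, 1.28), (0.38, 1.33), (0.41, 1.31),
]
three_groups = [0] * 4 + [1] * 4 + [2] * 4
two_groups = [0] * 4 + [1] * 8  # merges the last two groups
print(round(silhouette_score(points, three_groups), 3))
print(round(silhouette_score(points, two_groups), 3))
```

In practice the score is compared across candidate numbers of clusters, and the partition with the highest mean silhouette is preferred.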

Figure 10.

Fuzzy C-Means clustering of quantum states.

Table 1.

Clusters’ centroids.

- Cluster 1 (Centroid: Fidelity = 0.746, Entropy = 0.741). Represents states with moderate fidelity and moderate entropy. They retain some coherence but show signs of increased randomness. These states may be partially recoverable after noise, suggesting that targeted error-correction strategies could improve their usability.

- Cluster 2 (Centroid: Fidelity = 0.711, Entropy = 1.200). Has the highest entropy, indicating highly mixed, degraded states that are likely not viable for quantum computation because of their low coherence. These states have probably undergone significant decoherence, possibly from depolarizing noise. This suggests that certain noise types, such as depolarization, have an irreversible impact on quantum coherence.

- Cluster 3 (Centroid: Fidelity = 0.739, Entropy = 0.264). Represents states with low entropy and moderate fidelity, suggesting minimal decoherence. These states are the most stable and usable for quantum operations and likely correspond to milder noise sources such as phase damping. This implies that phase-damping effects need not completely degrade quantum information.

For each cluster, the five quantum states with the highest membership degree were selected as its most representative members. This selection makes it possible to analyze how individual states are classified according to their fidelity and entropy trends (Table 2).

Table 2.

Quantum states per cluster membership degree.
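Selecting the most representative states amounts to ranking the membership matrix column by column. A minimal sketch follows. It assumes a membership matrix `u` with one row per state and one column per cluster; this layout is hypothetical, and a toy matrix stands in for the study's results.

```python
def top_members(u, k=5):
    """Indices of the k states with the highest membership in each cluster."""
    n_clusters = len(u[0])
    return {
        i: sorted(range(len(u)), key=lambda j: u[j][i], reverse=True)[:k]
        for i in range(n_clusters)
    }


# Toy membership matrix (rows: states, columns: clusters); each row sums to 1.
u = [
    [0.90, 0.05, 0.05],
    [0.20, 0.70, 0.10],
    [0.10, 0.10, 0.80],
    [0.60, 0.30, 0.10],
    [0.05, 0.90, 0.05],
]
print(top_members(u, k=2))  # {0: [0, 3], 1: [4, 1], 2: [2, 1]}
```

Because Python's sort is stable, ties in membership keep their original order, which makes the selection reproducible.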

4.5. Temporal Evolution of Quantum States Under Noise and Correction

This section analyzes how quantum states evolve over time when subjected to noise and corrective measures. The primary objective is to assess the dynamic behavior of quantum systems and their ability to retain fundamental properties such as fidelity and entanglement over multiple time steps. A noise-suppression technique with adjustable correction intensity is introduced to evaluate whether quantum states can recover coherence after decoherence. Additionally, SHAP (SHapley Additive exPlanations) is applied to determine the most critical factors influencing quantum-state stability.

At each time step, the noise level, correction intensity, entropy, and fidelity are recorded. The resulting dataset is used to analyze the effectiveness of the correction techniques under increasing noise.

To understand the relationship between noise, correction, and quantum-state stability, a Random Forest regression model is used to predict fidelity. The model is trained using the following key features:

- Noise Level—the intensity of noise applied at each time step.

- Entropy—the level of uncertainty in the quantum state.

- Correction Applied—the magnitude of correction implemented to counteract noise effects.

- Time Step—the evolution of the quantum state over time.

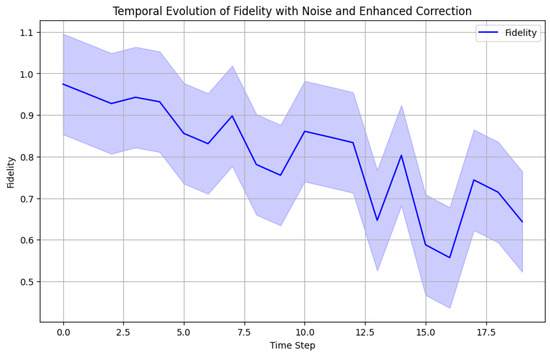

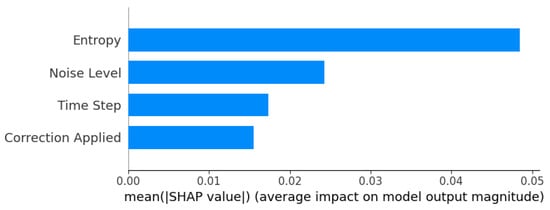

Using SHAP, an importance score is extracted for each feature to identify which factors have the most significant impact on fidelity preservation. The SHAP summary shows how noise intensity, entropy growth, and corrective interventions influence the retention of quantum coherence; see Figure 11 and Figure 12.
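The importance scores in Figure 12 rest on Shapley values. The sketch below computes them exactly by enumerating all $2^n$ feature coalitions and replacing features outside a coalition with a single baseline. This is a simplification of what the `shap` library does: it averages over background data and, for tree ensembles such as Random Forests, uses the TreeSHAP algorithm rather than brute-force enumeration. The model `toy_fidelity`, its coefficients and the sample feature vectors are hypothetical stand-ins for the trained Random Forest. The resulting ranking follows from those made-up coefficients and says nothing about the study's data.

```python
import itertools
import math

FEATURES = ["noise_level", "entropy", "correction", "time_step"]


def shapley_values(f, x, baseline):
    """Exact Shapley values of f at x; absent features take their baseline value."""
    n = len(x)

    def value(coalition):
        return f([x[k] if k in coalition else baseline[k] for k in range(n)])

    phi = []
    for i in range(n):
        others = [k for k in range(n) if k != i]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size.
            weight = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
            for subset in itertools.combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def toy_fidelity(z):
    """Hypothetical fidelity model with a noise-entropy interaction term."""
    noise, entropy, correction, t = z
    return (1.0 - 0.2 * noise - 0.5 * entropy - 0.1 * noise * entropy
            + 0.05 * correction - 0.002 * t)


baseline = [0.0, 0.0, 0.0, 0.0]  # noise-free, pure state, no correction, t = 0
samples = [[0.2, 0.3, 0.5, 5], [0.5, 0.8, 0.5, 10], [0.8, 1.2, 0.7, 15]]

# Global importance as in SHAP summary plots: mean absolute Shapley value per feature.
phis = [shapley_values(toy_fidelity, x, baseline) for x in samples]
importance = {name: sum(abs(p[i]) for p in phis) / len(phis)
              for i, name in enumerate(FEATURES)}
for name, score in sorted(importance.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:12s} {score:.4f}")
```

By construction, the Shapley values of each sample add up to $f(x) - f(\text{baseline})$ (the efficiency property). The $2^n$ enumeration is why exact computation becomes expensive as the number of features grows.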

Figure 11.

Temporal evolution of fidelity with noise and enhanced correction.

Figure 12.

SHAP feature importance.

To gain further insights into quantum-state evolution, two key visualizations are generated:

- Temporal Fidelity Evolution Plot. A time-series graph that illustrates how fidelity changes over time when subjected to noise and correction. The confidence intervals (shaded region) represent the 95% confidence range for fidelity variations over multiple simulation runs. This plot helps determine whether correction strategies effectively preserve coherence or if the state remains irreversibly degraded; see Figure 11.

- SHAP Feature Importance Plot. The SHAP analysis of the model reveals that entropy is the most influential factor in predicting quantum-state degradation, followed by noise level, the applied correction, and time step. These findings are consistent with the idea that classifying quantum states by entropy and noise resistance is a more effective strategy than relying solely on noise suppression; see Figure 12.
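The section does not state how the shaded 95% bands in Figure 11 were obtained. One common construction, assumed here, uses the per-time-step mean across runs plus or minus $1.96\,s/\sqrt{n}$, where $s$ is the sample standard deviation over $n$ runs. This is a normal approximation; with few runs, a Student-$t$ quantile gives wider and more appropriate bands. If the bands instead describe the spread of individual runs, percentiles of the runs would be used. The function name and the run data below are hypothetical.

```python
import math
import statistics


def fidelity_bands(runs, z=1.96):
    """Per-time-step mean and normal-approximation 95% CI across simulation runs.

    runs[r][t] is the fidelity of run r at time step t (all runs equally long).
    """
    n = len(runs)
    bands = []
    for values in zip(*runs):  # fidelities of all runs at one time step
        mean = statistics.fmean(values)
        half = z * statistics.stdev(values) / math.sqrt(n)
        bands.append((mean, mean - half, mean + half))
    return bands


# Hypothetical fidelities from three runs over four time steps (not the study's data).
runs = [
    [1.00, 0.93, 0.88, 0.80],
    [1.00, 0.90, 0.84, 0.78],
    [1.00, 0.95, 0.86, 0.83],
]
for t, (mean, lo, hi) in enumerate(fidelity_bands(runs)):
    print(f"t={t}: mean={mean:.3f}  95% CI=[{lo:.3f}, {hi:.3f}]")
```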

The following conclusions can be drawn from the results obtained in this section.

- Entropy as the Dominant Factor Affecting Fidelity. The SHAP analysis indicates that entropy has the highest impact on the model's prediction of fidelity. This suggests that, as quantum systems evolve under noise, entropy plays an important role in determining whether fidelity can be preserved. Higher entropy values correlate with a loss of coherence.

- Noise Level Is the Second Most Influential Factor. Noise level also has a significant impact but is less dominant than entropy. This implies that while noise is a major contributor to fidelity degradation, the way entropy accumulates over time is even more critical. This is consistent with the idea that quantum noise introduces randomness that fundamentally alters the structure of the system.

- Limited Impact of the Applied Correction. The corrective measures have a relatively low impact compared with entropy and noise. This suggests that the current correction technique may not be sufficient to restore coherence effectively. More sophisticated error-correction mechanisms may need to be explored, such as dynamically adapting the correction intensity to the real-time fidelity loss.

- Time-Step Influence Is Minimal. The time step has the least impact on the predicted fidelity. This suggests that, once entropy and noise level are known, elapsed time adds little information, and that degradation is not necessarily linear in time. Instead, fluctuations in fidelity suggest that correction effects may vary unpredictably, potentially depending on other underlying interactions within the quantum system.

- Temporal Evolution of Fidelity Shows High Variability. The fidelity plot over time demonstrates a steady decline with oscillatory behavior. While some short-term recoveries are observed, the overall trend indicates a long-term irreversible loss of coherence. The error bands suggest significant variations in fidelity, possibly due to the stochastic nature of noise or variations in correction effectiveness.

5. Discussion and Future Work

This study provides significant insights into the behavior of quantum states affected by depolarizing and phase-damping noise, analyzing both their susceptibility to decoherence and the effectiveness of correction mechanisms. The results demonstrate that depolarizing noise remains highly disruptive, as even after correction, fidelity deteriorates significantly, indicating that the noise introduces non-reversible randomness. The entropy values confirm this, showing that the quantum state increasingly resembles a maximally mixed state, making it unsuitable for quantum computation. In contrast, phase-damping noise exhibits greater stability, with a more gradual fidelity decline, suggesting that some coherence remains even after the noise is introduced. While entropy increases steadily in the phase-damping scenario, it remains considerably lower than in the depolarizing noise case, indicating that the system retains more structure despite decoherence.

The correction operator, although intended to mitigate the effects of noise, showed limited effectiveness in restoring coherence. Fidelity and entropy trends remained virtually unchanged after correction. This suggests that the simple noise-suppression technique used here is insufficient, especially for depolarizing noise. Although phase damping appears more manageable, correction alone did not lead to a substantial recovery of coherence. In light of these observations, the study introduces a classification-based methodology. Exhaustive error correction remains a possible alternative; instead, Fuzzy C-Means (FCM) clustering is proposed to classify quantum states according to their fidelity and entropy trends. This approach supports structured decisions about the feasibility of quantum states for computation.

5.1. Key Contributions and Novel Aspects

This study introduces several contributions to quantum noise analysis and mitigation:

- The application of Fuzzy C-Means (FCM) for quantum-state classification: In contrast to previous research, which mainly focuses on direct noise suppression, this study employs clustering techniques to classify quantum states by their resistance to noise.

- The integration of Explainable Artificial Intelligence (XAI) using SHAP analysis: By identifying the key factors influencing fidelity loss, an interpretable framework is provided to assess the degradation of quantum states. The results show that entropy has the largest impact, followed by noise level, correction intensity, and time evolution.

- Adaptive noise-mitigation strategies: SHAP can inform the dynamic selection of corrective operators, tailoring error correction to state-specific characteristics rather than relying on predefined mechanisms. This could improve the efficiency of quantum error correction (QEC) by focusing on states that exhibit lower entropy and higher resilience to noise.

- Classification-driven approach to quantum-state management: Instead of applying uniform noise suppression, SHAP-guided classification can support the grouping of states by stability level. This aligns with the Fuzzy C-Means (FCM) strategy employed in this study, offering a structured alternative to direct correction.

- An analysis of corrective operators and their limitations: The results indicate that the simple correction operator tested here is ineffective against depolarizing noise, which shifts the emphasis towards classification and selective error mitigation.

- The identification of naturally resistant quantum states: Instead of attempting to correct all states, the proposed approach allows for the selection of states that naturally maintain computational feasibility despite noise.

5.2. Limitations of the Study

Although the results provide new insights, this study has the following limitations:

- Limited scope to two noise models: The noise models used in this study (depolarization and phase damping) represent standard theoretical frameworks but do not capture the full complexity of real-world quantum hardware noise, such as T1 relaxation, T2 dephasing, and crosstalk effects.

- Simplified correction operators: The correction techniques explored in this work are relatively simple and may not reflect more sophisticated quantum-error-correction (QEC) methods used in practical quantum computing.

- Experimental validation: The simulations are performed in a controlled computational environment, and real-world quantum hardware implementation is needed to validate these findings.

- Limited analysis of time evolution: Although the study explores the temporal behavior of quantum states after noise, longer time scales and different dynamical models could provide additional information.

- Fixed fuzziness parameter in FCM: The FCM clustering method was applied with a fixed fuzziness parameter m, without a systematic sensitivity analysis. Further exploration is required to determine its optimal value for quantum-state clustering.

- Expanding quantum-state classification metrics: Although fidelity and von Neumann entropy provide relevant information on quantum-state degradation, alternative metrics such as quantum mutual information or distinguishability measures could further improve classification accuracy.

- The formalization of correction operators in quantum-error-correction frameworks: The effect of the correction operator on fidelity has been evaluated empirically, but a formal mathematical derivation of its structure within quantum-error-correction frameworks is still missing. Developing one remains an open avenue for future research and could strengthen both its theoretical foundation and its applicability in practical quantum-computing scenarios.

- The scalability of SHAP in high-dimensional quantum systems: The computational cost of SHAP analysis grows with the number of input features, which is likely to increase with the size of the quantum system. Exact Shapley values require evaluating the model on all $2^n$ coalitions of $n$ features. While SHAP provided meaningful insights in our two-qubit experiments, its feasibility in larger Hilbert spaces remains an open question.

5.3. Future Work

To further develop these conclusions, the following future orientations are proposed:

- Extension to Additional Noise Models: Investigate how different types of quantum noise (e.g., amplitude damping, bit-flip, phase-flip) impact state classification and correction strategies.

- Refinement of Corrective Operators: Explore adaptive and optimized correction techniques, integrating machine learning to dynamically adjust correction strategies based on real-time state evolution.

- Hybrid Error-Mitigation Approaches: Combine clustering-based classification with quantum-error-correction (QEC) codes to enhance robustness against decoherence [28]. FCM-based classification could guide adaptive QEC strategies, applying minimal correction to resilient states and stronger error correction, such as Steane or surface codes, to highly decohered states. SHAP-based interpretability could further optimize this process by helping to select the most effective correction approach dynamically.

- Quantum Hardware Implementation: Validate the proposed methodology using real quantum processors (e.g., IBM Quantum, Rigetti) to assess its practical applicability. This will require adapting noise models to specific hardware characteristics, addressing measurement errors, and optimizing the computational complexity of the clustering approach in high-dimensional quantum systems.

- Reinforcement Learning for Noise Mitigation: Train reinforcement learning models to optimize correction intensity dynamically, improving state resilience.

- Quantum-State Selection Strategies: Investigate methods for identifying and preparing naturally resilient entangled states that exhibit high stability under specific noise conditions.

- Optimization of FCM Clustering Parameters: Conduct a detailed study of the impact of the fuzziness parameter and of different distance metrics on the classification of quantum states.

- Expansion of XAI Analysis: Extend the use of interpretability techniques beyond SHAP, incorporating additional explainability frameworks for a deeper understanding of noise impact. Future work should explore computationally efficient SHAP approximations or alternative XAI techniques tailored to high-dimensional quantum-state analysis.

- Extension to Multi-Qubit Systems and Complex Entangled States: Assess whether the proposed methodology remains effective for multi-qubit systems and other entangled states, such as GHZ (Greenberger–Horne–Zeilinger) states [29] and W states [30]. This expansion could help identify generalizable noise patterns and improve corrective strategies in larger quantum networks.

- Additional Classification Metrics: Integrate metrics beyond fidelity and entropy. Quantum mutual information could provide a more complete picture of entanglement loss, while distinguishability measures, such as trace distance, could refine the ability to differentiate quantum states under noise [31].

6. Conclusions

This study lays the foundation for a new paradigm in quantum noise mitigation, moving from a correction-centric approach to one based on classification and adaptive error management. By leveraging Fuzzy C-Means clustering and explainable AI, it provides a data-driven framework for understanding quantum-state resilience. The results highlight the limitations of simple corrective operators and suggest that AI-assisted adaptive strategies may offer a more efficient path towards robust quantum computing. Future advances could redefine quantum noise management through adaptive, AI-driven strategies that classify, predict, and selectively mitigate noise effects in quantum systems. Integrating explainable AI with clustering techniques may pave the way for more efficient quantum computation and robust entanglement preservation in practical applications.

Funding

This research received no external funding.

Data Availability Statement

The data were obtained from simulations and are not openly available.

Conflicts of Interest

The author declares no conflicts of interest.

References

- Einstein, A.; Podolsky, B.; Rosen, N. Can Quantum-Mechanical Description of Physical Reality Be Considered Complete? Phys. Rev. 1935, 47, 777–780.

- Bell, J.S. On the Einstein Podolsky Rosen paradox. Phys. Phys. Fiz. 1964, 1, 195–200.

- Bennett, C.H.; Brassard, G.; Crépeau, C.; Jozsa, R.; Peres, A.; Wootters, W.K. Teleporting an unknown quantum state via dual classical and Einstein-Podolsky-Rosen channels. Phys. Rev. Lett. 1993, 70, 1895–1899.

- Ekert, A.K. Quantum Cryptography Based on Bell’s Theorem. Phys. Rev. Lett. 1991, 67, 661–663.

- Shor, P.W. Algorithms for quantum computation: Discrete logarithms and factoring. In Proceedings of the 35th Annual Symposium on Foundations of Computer Science, Washington, DC, USA, 20–22 November 1994; pp. 124–134.

- Kraus, K. States, Effects, and Operations: Fundamental Notions of Quantum Theory; Springer: Berlin/Heidelberg, Germany, 1983; Volume 190.

- Shor, P.W. Scheme for reducing decoherence in quantum computer memory. Phys. Rev. A 1995, 52, R2493–R2496.

- Nielsen, M.A.; Chuang, I.L. Quantum Computation and Quantum Information: 10th Anniversary Edition; Cambridge University Press: Cambridge, UK, 2010.

- Steane, A.M. Error Correcting Codes in Quantum Theory. Phys. Rev. Lett. 1996, 77, 793–797.

- Díaz, G.M.; Medina, R.G.; Jiménez, J.A.A. Integrating Fuzzy C-Means Clustering and Explainable AI for Robust Galaxy Classification. Mathematics 2024, 12, 2797.

- Molnar, C. Interpretable Machine Learning. A Guide for Making Black Box Models Explainable. Book. 2019, p. 247. Available online: https://christophm.github.io/interpretable-ml-book (accessed on 24 February 2025).

- Qiskit Development Team. Qiskit: An Open-Source Quantum Computing Framework. 2023. Available online: https://zenodo.org/records/2562111 (accessed on 24 February 2025).

- Preskill, J. Lecture Notes for Physics 229: Quantum Information and Computation; California Institute of Technology: Pasadena, CA, USA, 1998.

- Arthur, D.; Vassilvitskii, S. K-means++: The advantages of careful seeding. In Proceedings of the Annual ACM-SIAM Symposium on Discrete Algorithms (SODA), New Orleans, LA, USA, 7–9 January 2007; pp. 1027–1035.

- Bezdek, J.C. Pattern Recognition with Fuzzy Objective Function Algorithms; Plenum Press: New York, NY, USA, 1981.

- Lundberg, S.M.; Lee, S.I. A unified approach to interpreting model predictions. Adv. Neural Inf. Process. Syst. 2017, 30, 4766–4775.

- Tightiz, L.; Yoo, J. Quantum-Fuzzy Expert Timeframe Predictor for Post-TAVR Monitoring. Mathematics 2024, 12, 2625.

- Pira, L.; Ferrie, C. On the interpretability of quantum neural networks. Quantum Mach. Intell. 2024, 6, 52.

- Jaouni, T.; Arlt, S.; Ruiz-Gonzalez, C.; Karimi, E.; Gu, X.; Krenn, M. Deep quantum graph dreaming: Deciphering neural network insights into quantum experiments. Mach. Learn. Sci. Technol. 2024, 5, 015029.

- Rosenberg, G.; Brubaker, J.K.; Schuetz, M.J.A.; Salton, G.; Zhu, Z.; Zhu, E.Y.; Kadıoğlu, S.; Borujeni, S.E.; Katzgraber, H.G. Explainable Artificial Intelligence Using Expressive Boolean Formulas. Mach. Learn. Knowl. Extr. 2023, 5, 1760–1795.

- Buonaiuto, G.; Guarasci, R.; Minutolo, A.; De Pietro, G.; Esposito, M. Quantum transfer learning for acceptability judgements. Quantum Mach. Intell. 2024, 6.

- Maity, R.; Srivastava, A.; Sarkar, S.; Khan, M.I. Revolutionizing the future of hydrological science: Impact of machine learning and deep learning amidst emerging explainable AI and transfer learning. Appl. Comput. Geosci. 2024, 24, 100206.

- Cuéllar, M.P.; Pegalajar, M.C.; Cano, C. Automatic evolutionary design of quantum rule-based systems and applications to quantum reinforcement learning. Quantum Inf. Process. 2024, 23, 179.

- Izadi, S.; Forouzanfar, M. Error Correction and Adaptation in Conversational AI: A Review of Techniques and Applications in Chatbots. AI 2024, 5, 803–841.

- Jiráková, K.; Černoch, A.; Lemr, K.; Bartkiewicz, K.; Miranowicz, A. Experimental hierarchy and optimal robustness of quantum correlations of two-qubit states with controllable white noise. Phys. Rev. A 2021, 104, 062436.

- Bezdek, J.C.; Ehrlich, R.; Full, W. FCM: The fuzzy c-means clustering algorithm. Comput. Geosci. 1984, 10, 191–203.

- Pérez-Ortega, J.; Roblero-Aguilar, S.S.; Almanza-Ortega, N.N.; Solís, J.F.; Zavala-Díaz, C.; Hernández, Y.; Landero-Nájera, V. Hybrid Fuzzy C-Means Clustering Algorithm Oriented to Big Data Realms. Axioms 2022, 11, 377.

- Hayden, P.; Nezami, S.; Popescu, S.; Salton, G. Error Correction of Quantum Reference Frame Information. PRX Quantum 2021, 2, 010326.

- Greenberger, D.M.; Horne, M.A.; Zeilinger, A. Going Beyond Bell’s Theorem. In Bell’s Theorem, Quantum Theory and Conceptions of the Universe; Springer: Berlin, Germany, 1989; pp. 69–72.

- Dür, W.; Vidal, G.; Cirac, J.I. Three qubits can be entangled in two inequivalent ways. Phys. Rev. A 2000, 62, 062314.

- Audenaert, K.M. Comparisons between quantum state distinguishability measures. Quantum Inf. Comput. 2014, 14, 31–38.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).