Abstract

In this paper, a completely smooth lower-order penalty method for solving a second-order cone mixed complementarity problem (SOCMCP) is studied. Four distinct types of smoothing functions are taken into account. According to this method, SOCMCP is approximated by asymptotically completely smooth lower-order penalty equations (CSLOPEs), which include penalty and smoothing parameters. Under mild assumptions, the main results show that as the penalty parameter approaches positive infinity and the smoothing parameter monotonically decreases to zero, the solution sequence of asymptotic CSLOPEs converges exponentially to the solution of SOCMCP. An algorithm based on this approach is developed, and numerical experiments demonstrate its feasibility. Performance profiles of the four specific smooth functions are given. The final results show that the numerical performance of CSLOPEs is better than that of a smooth-like lower-order penalty method.

Keywords:

mixed complementarity problem; second-order cone programming; exponential convergence rate; lower-order penalty approach

MSC:

90C25; 90C30; 90C33

1. Introduction

This paper focuses on the second-order cone mixed complementarity problem (SOCMCP) [1], which is to find the vectors and such that

where and are vector-valued functions that are continuously differentiable, and represents a Cartesian product of second-order cones (SOCs), also known as Lorentz cones [2]. That is to say,

where , and is the -dimensional SOC, i.e.,

where denotes the Euclidean norm, and denotes (the semicolon denotes the concatenation of two column vectors). If , denotes , which is a set of non-negative real numbers. It is evident that constitutes a closed, convex, and self-dual cone within .
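As a concrete illustration of the cone definition above, the following minimal Python sketch tests membership in a single SOC and in a Cartesian product of SOCs. It assumes the standard definition, in which a vector (x1; x2) belongs to the cone exactly when the Euclidean norm of x2 does not exceed x1, and the one-dimensional cone is the set of non-negative reals. The function names, the block-size list `dims`, and the tolerance `tol` are illustrative choices and do not come from the paper.

```python
import math

def in_soc(x, tol=1e-12):
    """Return True if x = (x1; x2) satisfies ||x2|| <= x1 (up to tol)."""
    x1, x2 = x[0], x[1:]
    return math.sqrt(sum(v * v for v in x2)) <= x1 + tol

def in_product_cone(y, dims, tol=1e-12):
    """Check membership of y in a Cartesian product of SOC blocks.

    dims lists the block dimensions (hypothetical argument name); the
    blocks of y are tested one after another.
    """
    assert sum(dims) == len(y), "block dimensions must add up to len(y)"
    start = 0
    for d in dims:
        if not in_soc(y[start:start + d], tol):
            return False
        start += d
    return True
```

For a block of dimension 1 the vector part is empty, so the test reduces to the sign check x1 >= 0, in line with the remark that the one-dimensional cone is the set of non-negative real numbers.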

SOCMCP (1) is closely connected with convex second-order cone programming (SOCP) and encompasses a diverse array of problems. If x and are removed from (1), then SOCMCP simplifies to the second-order cone nonlinear complementarity problem (SOCNCP), which is to find such that

Specifically, when the mapping F is affine, SOCNCP (3) reduces to the second-order cone linear complementarity problem (SOCLCP). Meanwhile, if in (2), then reduces to the non-negative orthant , and SOCMCP, SOCNCP, and SOCLCP reduce to the classical mixed nonlinear complementarity problem (MNCP), nonlinear complementarity problem (NCP), and linear complementarity problem (LCP), respectively.

Over the past two decades, SOCP and the generalized second-order cone complementarity problem (SOCCP) have found extensive applications in engineering design, finance, mechanics, economics, management science, control, and other fields (see [3,4,5,6]). The convex SOCP encompasses quadratically constrained convex quadratic programs, convex quadratic programs, linear programs, and other related problems. SOCP and SOCCP are collectively called second-order cone optimization. Various algorithms have been introduced to address second-order cone optimization problems, including the interior-point method [3,4,7], smoothing Newton method [2,8,9], semismooth Newton method [10,11], smoothing regularization method [1], matrix splitting method [12,13], and merit function method [14,15,16]. While the effectiveness of some methods has improved substantially in recent years, many complementarity problems still call for effective and accurate numerical techniques.

It is well known that penalty function algorithms play a significant role in solving constrained optimization problems [17,18,19,20]. The penalty function and the generalized lower-order penalty functions possess numerous advantageous properties, which have attracted considerable attention from scholars [17,18,19,20]. In [21], Wang and Yang introduced a power penalty function algorithm for solving LCP. This approach transforms LCP into asymptotically nonlinear equations. The authors demonstrated that, under certain mild assumptions, the sequence of solutions to the asymptotically nonlinear equations converges exponentially to the solution of LCP as the penalty parameter approaches positive infinity. In [22,23], Huang and Wang employed the power penalty function algorithm to solve NCP and MNCP. By transforming these problems into asymptotically nonlinear equations, they demonstrated that, under certain assumptions, the sequence of solutions to these nonlinear equations converges exponentially. In [24,25], the power penalty algorithm was used to solve SOCLCP and SOCNCP, building upon the foundations established in [21,22]. In [26], Chen and Mangasarian introduced a smoothing method, which will be elaborated on in Section 3. This method generates smooth approximations of the plus and minus functions via convolution. Building upon the foundations laid in [26], the methodologies in [24,25] were further refined and expanded upon in [27,28]. In these latter works, the convolution-based smoothing of the plus and minus functions was combined with the power penalty algorithm and applied to SOCLCP and SOCNCP. However, it is important to note that the power penalty algorithm is merely a special instance of the broader class of generalized lower-order penalty algorithms. In solving SOCP, SOCMCP (1) offers a more versatile and appropriate framework for tackling the Karush-Kuhn-Tucker (KKT) conditions associated with general SOCP. Furthermore, SOCMCP fundamentally belongs to the category of asymmetric cone complementarity problems, thereby distinguishing it from both SOCLCP and SOCNCP.

In [29], Hao et al. indicated that SOCMCP (1) can be seen as a ‘customized’ complementarity problem tailored to SOCP. They employed the power penalty algorithm to transform SOCMCP into the lower-order penalty equations (LOPEs)

where is a penalty parameter, is a power parameter, and is the projection of on (which will be introduced in (12)). Under certain assumptions, both the LOPEs (4) and SOCMCP (1) possess unique solutions. The convergence analysis reveals that, as , converges exponentially to the solution of SOCMCP (1), where is the solution of the power penalty Equation (4) associated with the penalty parameter .

In [30], Hao employed the convolution-based smoothing method for the plus and minus functions in [26] to construct a smooth function of the projection function , and transformed SOCMCP (1) into the smooth-like lower-order penalty equations (SLOPEs)

where is a penalty parameter, is a power parameter, and is a smoothing parameter. According to the convergence analysis, when the penalty parameter and the smoothing parameter , the solution sequence of asymptotic SLOPEs (5) converges to the solution of SOCMCP (1) at an exponential rate. Under certain assumptions, the solutions of SLOPEs (5) and SOCMCP (1) are unique. In [30], four smooth functions provided by [27] are used to solve SLOPEs (5). In designing the solution algorithm, a simple criterion is given to estimate the parameter . When , the parameter is sufficiently small; when , the parameter is not small enough and a smaller parameter should be chosen.

In this paper, the method presented in [30] is further enhanced and extended. First, when the index of SLOPEs (5) is not equal to 1, SLOPEs (5) are not smooth at individual points. Setting the index equal to 1 therefore allows SOCMCP (1) to be transformed into completely smooth lower-order penalty equations. Second, the algorithm presented in [30] relies on a simple criterion for estimating the parameter . In this paper, we solve the completely smooth lower-order penalty equations without adding this criterion to the algorithm. Furthermore, we allow the parameters and to vary concurrently within the steps of the algorithm. Finally, by employing the four specific smooth functions introduced in [31,32], we construct a completely smooth lower-order penalty algorithm, whose penalty equations are solved using the smoothing Newton method. Numerical experiments have been conducted on the examples presented in [29,33,34], and the results of these experiments are analyzed. These results show that the method is feasible.

The remainder of the article is structured as follows: In Section 2, we present some foundational knowledge essential for understanding the subsequent discussions. In Section 3, we construct a smooth approximation method tailored for a specific class of lower-order penalty equations. In Section 4, we investigate the completely smooth lower-order penalty equations aimed at solving SOCMCP (1), along with an analysis of their convergence properties. In Section 5, we construct the corresponding algorithm, implement it, and report the numerical results. Finally, Section 6 concludes with a summary of our findings. In this paper, for any , the notation denotes the norm . In particular, it is the Euclidean norm when .

2. Preliminary Results

This section presents the fundamental operations pertaining to a single SOC block , and these results can be seamlessly extended to a general case (2). For any , , their Jordan product [4,33,34] is defined as

The Jordan product is commutative, as . Additionally, the vector serves as the identity element, satisfying for any x. Here, denotes , and represents the standard vector addition. Some basic concepts [2,4,32,33,34,35] of the Jordan product are listed below.
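The Jordan product can be made concrete with a short Python sketch. It assumes the standard definition on a single SOC block, in which the first component of the product is the inner product of the two vectors and the remaining components are x1 times the vector part of y plus y1 times the vector part of x; the identity element is taken to be (1; 0; ...; 0). The function names are illustrative.

```python
def jordan_product(x, y):
    """Jordan product on one SOC block: (<x, y>; x1*y2 + y1*x2)."""
    assert len(x) == len(y)
    first = sum(a * b for a, b in zip(x, y))
    rest = [x[0] * b + y[0] * a for a, b in zip(x[1:], y[1:])]
    return [first] + rest

def identity(n):
    """Identity element e = (1; 0; ...; 0) of the Jordan product."""
    return [1.0] + [0.0] * (n - 1)
```

For instance, x = (1; 1; 0) and y = (1; -1; 0) both lie on the boundary of the three-dimensional cone and are orthogonal, and `jordan_product(x, y)` returns the zero vector.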

Proposition 1.

For any , with the Jordan product (6), then:

- (a) The Jordan product does not satisfy associativity for in general, but it does satisfy power associativity, i.e., .

- (b) For any positive integer k, the power of the element is recursively defined as , and when , is defined.

- (c) For any positive integers m and n, holds.

- (d) The inverse of x is denoted as , satisfying .

- (e) If , then for any positive integer k, the inverse of is denoted as .

- (f) When , the Jordan product about does not satisfy the closure in general; i.e., there are , .

- (g) The trace is . The determinant is .

- (h) When , the square root exists, denoted as , and the square root is unique, satisfying , .

- (i) The absolute value vector of x is denoted by satisfying . Clearly .

By the properties of SOC, for any , if and only if . Therefore, the complementary conditions can be equivalently expressed through Jordan products.

Subsequently, we present the spectral factorization of vectors belonging to in relation to [2,32,34,35]. For any vector , the vector can be decomposed as

where are the spectral values of x, and are the spectral vectors of x. They are respectively given by

where is any unit vector in . Obviously, , and the spectral decompositions (7) and (8) are unique when . The following will list some basic properties of spectral decomposition [2,32,35].
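The spectral factorization can be computed directly. The sketch below assumes the standard formulas: the spectral values are x1 minus and plus the Euclidean norm of x2, and the spectral vectors are one half of (1; -w) and one half of (1; w), where w is x2 normalized, or an arbitrary unit vector when x2 vanishes. The helper name `spectral` and the particular unit vector chosen in the degenerate case are illustrative assumptions.

```python
import math

def spectral(x):
    """Return (lam1, lam2, u1, u2) such that x = lam1*u1 + lam2*u2."""
    x1, x2 = x[0], x[1:]
    nrm = math.sqrt(sum(v * v for v in x2))
    if nrm > 0.0:
        w = [v / nrm for v in x2]
    else:
        # x2 = 0: any unit vector works; pick the first coordinate direction
        # (an empty list when the block has dimension 1)
        w = [1.0] + [0.0] * (len(x2) - 1) if x2 else []
    lam1, lam2 = x1 - nrm, x1 + nrm
    u1 = [0.5] + [-0.5 * v for v in w]
    u2 = [0.5] + [0.5 * v for v in w]
    return lam1, lam2, u1, u2
```

With x = (1; 3; 4), the spectral values are -4 and 6. Their sum equals 2·x1 and their product equals x1 squared minus the squared norm of x2, the usual expressions for the trace and determinant.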

Proposition 2.

- (a) , .

- (b) .

- (c) are non-negative (positive) if and only if .

For the sake of brevity and clarity, we often express the spectral decomposition of x simply as . Spectral decompositions (7) and (8) and Proposition 2 offer a valuable tool for analyzing power functions in the context of the Jordan product. For example, we have for any , so . Conversely, for any , according to spectral decompositions (7) and (8), we know . Let ; then . By the uniqueness of the square root, we have . These results indicate that when squaring or taking the square root of a vector, only the spectral values of the vector need to undergo the respective operation, while the corresponding spectral vectors remain unchanged.

By utilizing spectral decompositions (7) and (8), we can extend a scalar function to a vector-valued function within the SOC space associated with [2,32,36], which is given by

where and are the spectral values and spectral vectors related to x, respectively, as shown in (8).
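The extension of a scalar function through the spectral values can be sketched as follows. The code assumes the standard construction, in which the scalar function is applied to each spectral value and the spectral vectors are kept, and it checks numerically that the square root obtained this way reproduces x under the Jordan product. All function names are illustrative.

```python
import math

def spectral(x):
    """Spectral values and vectors of x on a single SOC block."""
    x1, x2 = x[0], x[1:]
    nrm = math.sqrt(sum(v * v for v in x2))
    if nrm > 0.0:
        w = [v / nrm for v in x2]
    else:
        w = [1.0] + [0.0] * (len(x2) - 1) if x2 else []
    u1 = [0.5] + [-0.5 * v for v in w]
    u2 = [0.5] + [0.5 * v for v in w]
    return x1 - nrm, x1 + nrm, u1, u2

def jordan_product(x, y):
    """Jordan product (<x, y>; x1*y2 + y1*x2)."""
    first = sum(a * b for a, b in zip(x, y))
    return [first] + [x[0] * b + y[0] * a for a, b in zip(x[1:], y[1:])]

def soc_function(f, x):
    """Apply the scalar function f to the spectral values of x."""
    lam1, lam2, u1, u2 = spectral(x)
    return [f(lam1) * a + f(lam2) * b for a, b in zip(u1, u2)]

def soc_sqrt(x):
    """Square root of x; requires non-negative spectral values."""
    return soc_function(math.sqrt, x)
```

For x = (3; 1; 2), both spectral values are positive, and `jordan_product(soc_sqrt(x), soc_sqrt(x))` agrees with x up to rounding.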

For any , under the Euclidean norm, the nearest point of x onto is called the projection of x, denoted by , i.e., and satisfying

Clearly, when , a projection function reduces to .

The subsequent lemma demonstrates that and have the form of (9) (see [2], Proposition 3.3).

Lemma 1.

- (a) .

- (b) The representation of the projection functions for x onto can be expressed as

where for any scalar , .

Analogous to the concept of projection functions on , for any vector x with spectral factorizations (7) and (8), we define [8]

where . Clearly, represents the projection functions of on , and .
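The projection formulas can be illustrated with a small Python sketch. It assumes the scalar conventions that the plus function is max(0, t) and the minus function is max(0, -t), so that x equals its plus part minus its minus part; the sign convention for the minus part is an assumption and should be checked against the definitions used in this paper. Function names are illustrative.

```python
import math

def spectral(x):
    """Spectral values and vectors of x on a single SOC block."""
    x1, x2 = x[0], x[1:]
    nrm = math.sqrt(sum(v * v for v in x2))
    if nrm > 0.0:
        w = [v / nrm for v in x2]
    else:
        w = [1.0] + [0.0] * (len(x2) - 1) if x2 else []
    u1 = [0.5] + [-0.5 * v for v in w]
    u2 = [0.5] + [0.5 * v for v in w]
    return x1 - nrm, x1 + nrm, u1, u2

def plus_part(x):
    """Projection of x onto the cone: max(0, lam) applied to spectral values."""
    lam1, lam2, u1, u2 = spectral(x)
    return [max(0.0, lam1) * a + max(0.0, lam2) * b for a, b in zip(u1, u2)]

def minus_part(x):
    """Assumed convention: max(0, -lam) applied to the spectral values,
    which is the projection of -x onto the cone."""
    lam1, lam2, u1, u2 = spectral(x)
    return [max(0.0, -lam1) * a + max(0.0, -lam2) * b for a, b in zip(u1, u2)]
```

For x = (1; 3; 4), the plus part is (3; 1.8; 2.4) and the minus part is (2; -1.2; -1.6); the two parts are orthogonal and their difference recovers x.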

The analysis of the aforementioned single SOC block can be extended to general situations (2). Specifically, for any , their Jordan product is defined as

Let and respectively denote the projection functions of x and onto ; then

where and for respectively denote the projection functions of and onto the single SOC block .

3. C–M Smooth Function of the Projection Function

In this section, we will introduce a method proposed by Chen and Mangasarian [26], which involves utilizing convolution to generate smooth functions from the plus function and the minus function . To begin, we define the piecewise continuous function , termed the density (kernel) function [26], which satisfies

Next, we define , where is a positive parameter. If , then there forms a smoothing approximation of , i.e.,

The subsequent proposition delineates the properties of . For a detailed proof, refer to Proposition 2.2 in [26].

Proposition 3.

Suppose that is a density function that satisfies (13) and for positive parameter μ. If is the piecewise continuous function, and satisfies , then (14) exhibits the following properties:

- (a) is continuously differentiable.

- (b) , where

- (c) is bounded and satisfies .

Under the assumptions of this proposition, according to Proposition 3(b), we have

By applying the aforementioned method for generating smooth functions to , we obtain

Analogous to Proposition 3, the following properties hold for .

Proposition 4.

Let and be as stated in Proposition 3; then the function (16) has the following properties:

- (a) is continuously differentiable.

- (b) , where

- (c) is bounded and satisfies

Similar to Proposition 3, for Proposition 4(b), we have

From (15) and (17), it can be seen that the functions and defined by (14) and (16) are smooth functions of and , respectively.
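To make the smoothing idea tangible, the following Python sketch evaluates one well-known smoothing function of the plus function, the Chen–Harker–Kanzow–Smale (CHKS) function, which arises from the convolution construction of [26] with the density 2/(s^2+4)^(3/2). It is used here only as an illustration; it is not claimed to coincide with any of the specific functions considered in this paper. The minus-function smoothing is obtained by reflection, which assumes the convention that the minus function is max(0, -t).

```python
import math

def plus(t):
    """Plus function max(0, t)."""
    return max(0.0, t)

def chks_plus(mu, t):
    """CHKS smoothing of max(0, t): (t + sqrt(t^2 + 4*mu^2)) / 2."""
    return 0.5 * (t + math.sqrt(t * t + 4.0 * mu * mu))

def chks_minus(mu, t):
    """Smoothing of max(0, -t) by reflection (assumed sign convention)."""
    return chks_plus(mu, -t)

def max_gap(mu, points):
    """Largest deviation of the smoothing from the plus function on a grid."""
    return max(chks_plus(mu, t) - plus(t) for t in points)
```

For this particular function the deviation from the plus function is largest at t = 0, where it equals mu, so the approximation error vanishes linearly as the smoothing parameter tends to zero. In addition, `chks_plus(mu, t) - chks_minus(mu, t)` equals t for every mu > 0.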

According to [31,32], the four specific smooth functions of are

where the corresponding kernel functions are

In [30], Hao et al. propose four smooth functions, of which the smooth function closest to is

where the corresponding kernel functions are

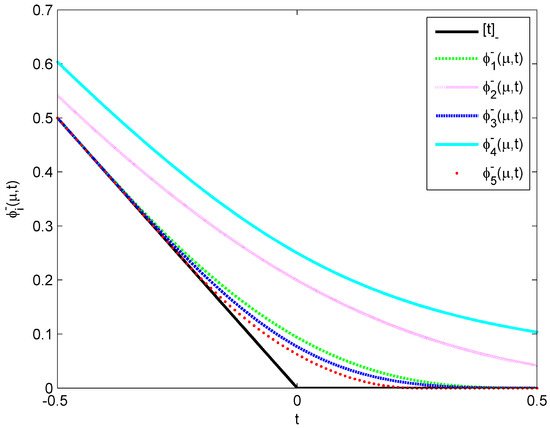

The smoothing functions (18)–(22) satisfy Proposition 4. The graphs of and at are shown in Figure 1.

Figure 1.

Graphs of and with .

From Figure 1, it can be seen that, for a fixed parameter , function is the closest function to among all . In fact, for a fixed parameter and all , we have

In this paper, based on the SOC vector-valued function (9), the smooth functions (18)–(22) are applied to the projection function in (4) to form a completely smooth lower-order penalty equation, which will be introduced in (27), and then an algorithm is constructed to solve SOCMCP (1).

For any , construct a smoothing function for the projection function associated with . First, utilizing the smoothing functions defined by (14) and (16), we will explore the construction of a smoothing function for the projection function related to a single SOC block , where and are given by (10) and (11), respectively. The spectral values and spectral vectors of with respect to are and , respectively. We define a vector-valued function

where is a smoothing parameter. According to [37], is smooth on , and

Finally, we construct smooth functions for projection functions and related to a general cone (2). Define a vector-valued function as

where are defined by (23) and (24), respectively. In order to facilitate the proof of subsequent convergence analysis, by [27], Lemma 3.2, we have

Based on the above smoothing idea, a completely smooth lower-order penalty equation can be established to solve SOCMCP (1). This will be described in the next section.
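The construction of a smoothed projection on a single SOC block can be sketched in Python as follows. The sketch applies a scalar smoothing function to the two spectral values and keeps the spectral vectors. As the scalar smoothing it uses the CHKS function (t + sqrt(t^2 + 4·mu^2))/2, which is an illustrative stand-in and not necessarily one of the functions (18)–(22); all function names are hypothetical.

```python
import math

def spectral(x):
    """Spectral values and vectors of x on a single SOC block."""
    x1, x2 = x[0], x[1:]
    nrm = math.sqrt(sum(v * v for v in x2))
    if nrm > 0.0:
        w = [v / nrm for v in x2]
    else:
        w = [1.0] + [0.0] * (len(x2) - 1) if x2 else []
    u1 = [0.5] + [-0.5 * v for v in w]
    u2 = [0.5] + [0.5 * v for v in w]
    return x1 - nrm, x1 + nrm, u1, u2

def chks_plus(mu, t):
    """CHKS smoothing of max(0, t) (illustrative choice of smoothing)."""
    return 0.5 * (t + math.sqrt(t * t + 4.0 * mu * mu))

def projection(x):
    """Exact projection onto the cone via the spectral values."""
    lam1, lam2, u1, u2 = spectral(x)
    return [max(0.0, lam1) * a + max(0.0, lam2) * b for a, b in zip(u1, u2)]

def smooth_projection(mu, x):
    """Smoothed projection: scalar smoothing applied to each spectral value."""
    lam1, lam2, u1, u2 = spectral(x)
    return [chks_plus(mu, lam1) * a + chks_plus(mu, lam2) * b
            for a, b in zip(u1, u2)]

def distance(a, b):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))
```

Because the two spectral vectors are orthogonal with norm 1/sqrt(2), and the CHKS function deviates from the plus function by at most mu, the Euclidean distance between `smooth_projection(mu, x)` and `projection(x)` is at most mu for this choice of smoothing.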

4. Completely Smooth Lower-Order Penalty Approach and Convergence Analysis

This section will introduce a completely smooth lower-order penalty method for solving SOCMCP (1), and it will also provide a comprehensive convergence analysis. In the following text, unless otherwise specified, is always represented as (2). We consider the completely smooth lower-order penalty equations (CSLOPEs)

where is a penalty parameter, is a smoothing parameter, and is defined as (26). If is violated, then the penalty term in (27) penalizes the ‘negative’ part of y. With a penalty parameter and a smooth parameter , it will force y to approach . In (27), it is straightforward to observe that always holds because . We expect the solution sequence of (27) to tend towards the solution of (1). To achieve this, let

where is defined in (2), and let us make the following assumptions.

Assumption 1.

The function is -monotone on ; i.e., there exist constants and , such that

It is apparent that a strongly monotone function must also be a -monotone function, though the converse is not guaranteed. Under Assumption 1, we will undertake an analysis of convergence.

First, we consider the uniqueness of the solutions of SOCMCP (1) and CSLOPEs (27). Using Proposition 1.1.3 and Theorem 2.2.3 from [5], Section 3.1 of [29] demonstrates that SOCMCP (1) can be equivalently transformed into a variational inequality. When the underlying mapping is continuous and -monotone, the variational inequality has a unique solution. Therefore, under Assumption 1, SOCMCP (1) is uniquely solvable.

In [30], SLOPEs (5) consider the approximate smooth case of . In this paper, we further explore the completely smooth case where based on that. Therefore, the remaining results in this section are similar to the propositions and theorems in [30].

Proposition 5.

For any smoothing parameter , the function is defined as (26); then about y is monotone on , i.e.,

According to Proposition 5, for any parameter , function is monotone on . Suppose that

Since nonlinear equations can be regarded as unconstrained variational inequalities, the unique solvability of CSLOPEs (27) can be established through variational inequalities.

Proposition 6.

Under Assumption 1, for any parameter , CSLOPEs (27) have a unique solution.

Proposition 7.

For any , when μ is sufficiently small, the solution of CSLOPEs (27) is bounded. Specifically, there exists a positive constant M that does not depend on , , such that .

Proposition 8.

For any , when μ is sufficiently small, there exists a positive constant C that does not depend on , such that

5. Algorithm and Numerical Experiments

In this section, we construct a completely smooth lower-order penalty algorithm for solving SOCMCP (1) based on Theorem 1. Additionally, we present several numerical experiments to demonstrate its effectiveness.

Algorithm 1 offers a completely smooth lower-order penalty method for solving SOCMCP (1). In the following, we present several numerical examples to demonstrate the effectiveness of Algorithm 1. In the numerical experiments, the smooth functions (18)–(21) are employed, and all nonlinear equations are solved using the smoothing Newton method. For each numerical example, denotes the initial point, denotes the number of iterations, records the computation time, and denotes , where denotes the approximate optimal solution obtained through Algorithm 1, and denotes the exact solution of the problem. For all subsequent numerical examples, unless otherwise stated, we adopt a termination criterion of . In the numerical experiment tables, ‘-’ indicates that the results could not be obtained (due to matrix singularity or other reasons). All the numerical experiments in this paper were run in MATLAB 2012a.

| Algorithm 1: Completely Smooth Lower-Order Penalty Algorithm |

|
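Since the statement of Algorithm 1 is not reproduced here, the following Python code is only a minimal sketch of a penalty loop of this general kind, not the authors' algorithm. It rests on several assumptions that should be read as such: the problem has no x-variable, the mapping is F(y) = y - c on a single SOC block (so that the exact solution is the projection of c onto the cone), the penalty equation has the form F(y) - alpha·Phi(mu, y) = 0 with Phi a CHKS-smoothed projection of -y, the inner solver is a plain Newton iteration with a forward-difference Jacobian, and alpha and mu are updated geometrically. All names, update factors, and tolerances are hypothetical.

```python
import math

def spectral(x):
    x1, x2 = x[0], x[1:]
    nrm = math.sqrt(sum(v * v for v in x2))
    if nrm > 0.0:
        w = [v / nrm for v in x2]
    else:
        w = [1.0] + [0.0] * (len(x2) - 1) if x2 else []
    u1 = [0.5] + [-0.5 * v for v in w]
    u2 = [0.5] + [0.5 * v for v in w]
    return x1 - nrm, x1 + nrm, u1, u2

def smooth_minus(mu, y):
    """CHKS-smoothed projection of -y onto the cone (assumed penalty term)."""
    lam1, lam2, u1, u2 = spectral(y)
    f = lambda t: 0.5 * (-t + math.sqrt(t * t + 4.0 * mu * mu))
    return [f(lam1) * a + f(lam2) * b for a, b in zip(u1, u2)]

def residual(y, c, alpha, mu):
    """Assumed penalty equation for F(y) = y - c: F(y) - alpha*Phi(mu, y)."""
    pen = smooth_minus(mu, y)
    return [yi - ci - alpha * pi for yi, ci, pi in zip(y, c, pen)]

def solve_linear(A, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for k in range(n):
        piv = max(range(k, n), key=lambda i: abs(M[i][k]))
        M[k], M[piv] = M[piv], M[k]
        for i in range(k + 1, n):
            fac = M[i][k] / M[k][k]
            for j in range(k, n + 1):
                M[i][j] -= fac * M[k][j]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def newton(y, c, alpha, mu, tol=1e-8, max_iter=50, h=1e-7):
    """Undamped Newton iteration with a forward-difference Jacobian."""
    n = len(y)
    for _ in range(max_iter):
        r = residual(y, c, alpha, mu)
        if math.sqrt(sum(v * v for v in r)) <= tol:
            break
        J = [[0.0] * n for _ in range(n)]
        for j in range(n):
            yp = y[:]
            yp[j] += h
            rp = residual(yp, c, alpha, mu)
            for i in range(n):
                J[i][j] = (rp[i] - r[i]) / h
        step = solve_linear(J, [-v for v in r])
        y = [yi + si for yi, si in zip(y, step)]
    return y

def penalty_method(c, y0, alpha=10.0, mu=0.1, outer=4):
    """Increase alpha and decrease mu together, warm-starting each solve."""
    y = y0[:]
    for _ in range(outer):
        y = newton(y, c, alpha, mu)
        alpha *= 10.0   # hypothetical update factor
        mu *= 0.1       # hypothetical update factor
    return y
```

For c = (1; 3; 4), the complementarity problem with F(y) = y - c is solved by the projection of c onto the cone, (3; 1.8; 2.4). Starting from the origin, `penalty_method(c, [0.0, 0.0, 0.0])` approaches this point as alpha grows and mu shrinks. The sketch omits any globalization of the Newton step and any of the safeguards a production solver would need.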

Example 1.

Consider SOCMCP (1) on , where , and

This example is derived from [29]. The function is a linear function, and is -monotonic but not strongly monotonic due to , which is composed of a cubic term and a constant term. According to [29], the precise solution for Example 1 is . The effect of individual parameter changes on the numerical results is considered below. Taking the initial point , the numerical experiments were conducted following these two distinct steps:

- First, we set and consider various values . The corresponding numerical results are presented in Table 1.

Table 1. The numerical results corresponding to variations in ().

- Second, we set and consider various values . The corresponding numerical results are presented in Table 2.

Table 2. The numerical results corresponding to variations in ().

From these tables, we can draw the following conclusions:

- In Table 1, as the penalty parameter increases, Err gradually decreases. When employing for solving the problem, the value of Val transitions from negative to positive. For instance, when utilizing to solve, the penalty parameter increases to 168,070; we observed that Val shifts from a negative to a positive value. This indicates that the penalty parameter 168,070 is already large enough, and simply increasing its value will not yield better results. At this juncture, it becomes essential to consider adopting a smaller smoothing parameter .

- In Table 2, as the smoothing parameter decreases, the overall trend of exhibits a gradual decline. However, the subsequent changes are not significant, primarily due to the simple reduction in the smoothing parameter , without taking into account the influence of penalty parameters on numerical calculations. For instance, when employing for solving purposes, the value of the smoothing parameter changes from 0.1 to 0.01; changes from positive to negative. This indicates that the smoothing parameter is sufficiently small; thus further reductions will not yield improved outcomes. In fact, what is truly required at this point is an increase in the penalty parameter .

In Example 1, a fixed initial point was chosen to investigate the influence of various parameters on numerical experiments. In fact, when different initial points are employed, the numerical outcomes remain consistent with those presented in Table 1 and Table 2. Thus, we will not reiterate them here. The subsequent example serves to illustrate the effects of varying initial points on the numerical results.

Example 2.

For nonlinear SOCP with convex objective functions

the KKT condition is

where ,

This example is derived from [29]. According to [29], the approximate optimal solution for Example 2 is . When applying different initial points, by taking , , initial , and proper initial , the test results are summarized in Table 3. From Table 3, it can be seen that, for all initial points listed in the table, Example 2 can be solved by using Algorithm 1, which indicates that Algorithm 1 is not very sensitive to the initial points.

Table 3.

The numerical results with different initial points ().

In the aforementioned numerical experiments, the smooth functions (18)–(21) were utilized. A natural question is which smooth function has the better numerical performance, so a comparative analysis of the functions is needed. For this purpose, we employ the performance profile method introduced in [38] to evaluate and compare the performances of these functions.

Assuming that there are solvers from the solver set and test problems from the test set , we use computation time and the number of iterations as performance metrics. Here, we adopt computation time as the primary measure. For each problem p and solver s, define

and utilize the performance ratio

Assume that a parameter is selected such that for all , with if and only if solver s fails to solve problem p. The selection of does not influence the performance evaluation. To gain a comprehensive evaluation for each solver, we define

The function represents the cumulative performance ratio, also referred to as the performance profile.
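The performance profile computation can be sketched in a few lines of Python. The sketch assumes the usual definition: the performance ratio of a solver on a problem is its cost divided by the smallest cost achieved by any solver on that problem, and the profile at a threshold tau is the fraction of problems whose ratio does not exceed tau. Failures are represented by `None` and mapped to an infinite ratio, which plays the role of the large parameter mentioned above. The function name, argument layout, and the timing data in the usage example are made up for illustration.

```python
def performance_profile(times, taus):
    """Compute performance profiles.

    times maps a solver name to a list of costs, one per problem, with
    None marking a failure (hypothetical data layout). Returns a dict
    mapping each solver to its profile values at the thresholds in taus.
    """
    solvers = list(times)
    n_p = len(next(iter(times.values())))
    best = []
    for p in range(n_p):
        ok = [times[s][p] for s in solvers if times[s][p] is not None]
        best.append(min(ok) if ok else None)
    profile = {}
    for s in solvers:
        ratios = []
        for p in range(n_p):
            t = times[s][p]
            if t is not None and best[p] is not None:
                ratios.append(t / best[p])
            else:
                ratios.append(float("inf"))  # failure: ratio above any tau
        profile[s] = [sum(r <= tau for r in ratios) / n_p for tau in taus]
    return profile
```

With the made-up timings `{"A": [1.0, 2.0, None], "B": [2.0, 1.0, 3.0]}` and thresholds `[1.0, 2.0]`, solver A has ratios 1, 2, and infinity, while solver B has ratios 2, 1, and 1. A solver whose profile curve lies higher solves a larger share of the problems within a given factor of the best cost.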

According to [30], is the smooth function with the best performance. To compare the effectiveness of the smooth functions proposed in this paper with from [30], we will consider two numerical examples, which belong to SOCP with both equality and inequality constraints and SOCP with inequality constraint. For the performance profile, the smooth functions from (18)–(22) are treated as five solvers. Additionally, 20 initial points are randomly generated as 20 test problems to evaluate the performance of these solvers on the numerical examples.

Example 3.

Consider nonlinear SOCP with equality and inequality constraints, and convex objective functions

where . The KKT conditions of this SOCP can be transformed into the following SOCMCP:

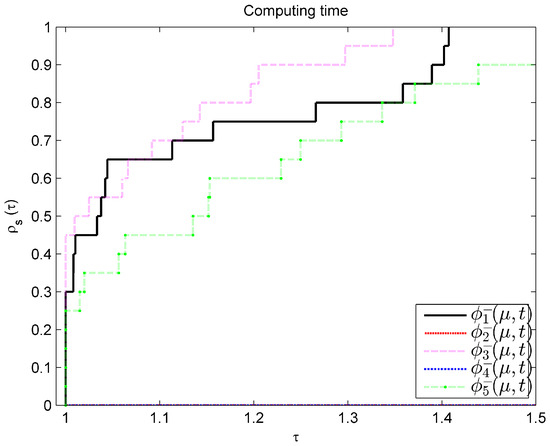

This example is derived from [29]. According to [29], the exact optimal solution for Example 3 is . Randomly selecting 20 initial points, by taking , , initial , and initial , the performance profile based on calculation time is shown in Figure 2.

Figure 2.

Performance profile of for Example 3.

Example 4.

Consider the following nonlinear SOCP:

where and

Let ; then the KKT conditions for the above SOCP are the following SOCMCP:

where

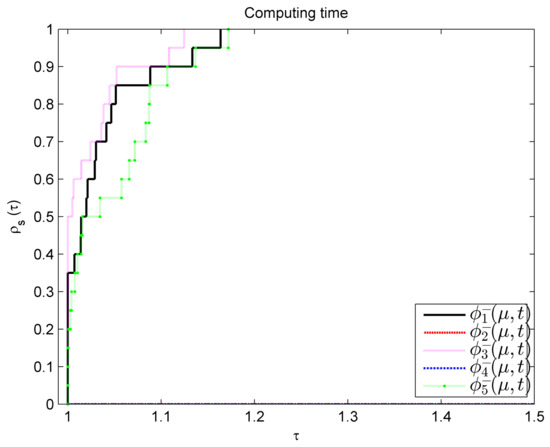

This example is derived from [33]. According to [33], the approximate optimal solution for Example 4 is . Randomly selecting 20 initial points, by taking , , initial , and initial , the performance profile based on calculation time is shown in Figure 3.

Figure 3.

Performance profile of for Example 4.

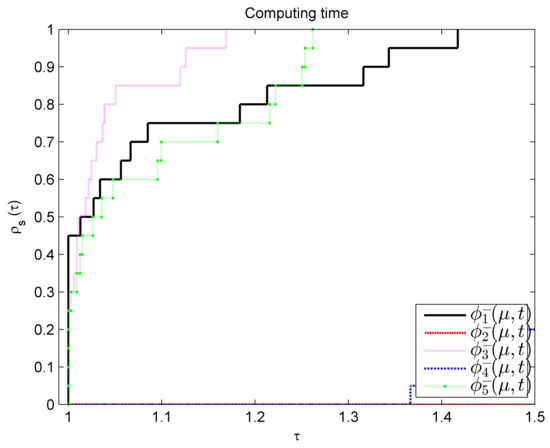

In [33], , , , , and . Randomly selecting and 20 initial points, by taking , , initial , and initial , the performance profile based on calculation time is shown in Figure 4.

Figure 4.

Performance profile of for Example 4.

From Figures 2–4, it is evident that exhibits the best performance, followed by and then . Under the parameter selection of Examples 3 and 4, and cannot be solved well. Therefore, when using Algorithm 1 to solve SOCMCP, the smooth function is the best choice.

In [30], the smooth-like lower-order penalty equations (SLOPEs) algorithm transforms SOCMCP (1) into smooth-like lower-order penalty equations (5) with smooth functions. We attempt to use the smoothing Newton method to solve the nonlinear equations when the SLOPEs index . In Algorithm 1 of this paper, the projection function is approximated by the smooth functions , and is the best choice. Therefore, the performance of Algorithm 1 is compared with that of SLOPEs below, using the best smooth function .

Examples 2–4 are numerical examples that satisfy Assumption 1. In Algorithm 1 and SLOPEs, we take , , initial , and initial . In SLOPEs, we take . The numerical performance comparison results for different termination criteria are shown in Table 4.

Table 4.

Comparison results of the value of .

It can be concluded from Table 4 that, in the case of , Algorithm 1 has better numerical performance than SLOPEs, which is mainly reflected in the following two aspects: (1) In terms of the termination criterion, Algorithm 1 attains higher accuracy than SLOPEs. When the termination criterion is , Example 2 can be solved by Algorithm 1 but not by SLOPEs. Algorithm 1 therefore remains usable under tighter termination criteria. (2) Under termination criteria for which both methods succeed, Algorithm 1 has a slight advantage over SLOPEs in iteration time. Algorithm 1 fixes the power parameter of SLOPEs at 1, which further smooths the penalty equations. SLOPEs add a criterion to the algorithm steps that determines whether the iteration point lies within the second-order cone, whereas Algorithm 1 omits this step, making the algorithm more concise. According to the results in Table 4, there is no significant difference between the values of Val of the two algorithms. From this point of view, Algorithm 1 does have better numerical performance than SLOPEs.

The numerical comparisons above are based on termination criteria. Since Examples 1 and 3 have exact solutions, the numerical performances of Algorithm 1 and SLOPEs are also compared by using the distance between the numerical solutions and the exact solutions as a new termination criterion. The new termination criterion for both algorithms is set to . For both Algorithm 1 and SLOPEs, we take , , initial , and initial ; for SLOPEs, we additionally take equal to 1. The numerical performance comparison results are shown in Table 5. Judging by how close the numerical solution is to the exact solution, Algorithm 1 has better numerical performance than SLOPEs.

Table 5.

Comparison results of the value of .

The functions in Examples 1–4 all satisfy Assumption 1 or nearly so. The following example will attempt to solve SOCP with a non-convex objective function.

Example 5.

Consider nonlinear SOCP

The KKT conditions of this SOCP are the following SOCMCP:

where ,

This example is derived from [34]. Since the objective function of the nonlinear SOCP is non-convex, does not satisfy -monotonicity. For different initial points, we attempt to solve Example 5 using Algorithm 1 with , taking , , initial , and initial . For the initial points or , the approximate optimal solution is 0.63661, which is consistent with the computational results in [34]. However, for the initial points or , the numerical solution is 0.12991. It can be observed that this solution is also a local optimal solution of the original SOCP. This indicates that Algorithm 1 is also applicable to some SOCMCPs that are not -monotone.

6. Conclusions

Based on the completely smooth lower-order penalty equations (CSLOPEs) (27), this paper proposes a completely smooth lower-order penalty method for solving SOCMCP (1). As the main result, Theorem 1 proves that, under Assumption 1, the solution sequence of CSLOPEs (27) converges to the solution of SOCMCP (1) at an exponential rate. In the numerical experiments, five smooth functions corresponding to are considered. The numerical experimental results show that has better numerical performance. Meanwhile, the accuracy of Algorithm 1 is generally higher than that of SLOPEs, and Algorithm 1 is also applicable to some SOCMCPs without Assumption 1.

Author Contributions

Conceptualization, Z.H.; methodology, Q.W.; software, Q.W.; validation, Q.W. and Z.H.; formal analysis, Z.H.; investigation, Q.W.; resources, Z.H.; data curation, Q.W.; writing—original draft preparation, Q.W.; writing—review and editing, Q.W.; visualization, Z.H.; supervision, Z.H.; project administration, Z.H.; funding acquisition, Z.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the author’s Natural Science Fund of Ningxia 2025.

Data Availability Statement

No new data were created or analyzed in this study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Hayashi, S.; Yamashita, N.; Fukushima, M. A combined smoothing and regularization method for monotone second-order cone complementarity problems. SIAM J. Optim. 2005, 15, 593–615.

- Fukushima, M.; Luo, Z.Q.; Tseng, P. Smoothing functions for second-order-cone complementarity problems. SIAM J. Optim. 2002, 12, 436–460.

- Lobo, M.S.; Vandenberghe, L.; Boyd, S.; Lebret, H. Applications of second order cone programming. Linear Algebra Its Appl. 1998, 284, 193–228.

- Alizadeh, F.; Goldfarb, D. Second-order cone programming. Math. Program. 2003, 95, 3–51.

- Facchinei, F.; Pang, J.S. Finite-Dimensional Variational Inequalities and Complementarity Problems; Springer: New York, NY, USA, 2003.

- Wilmott, P.; Dewynne, J.; Howison, S. Option Pricing: Mathematical Models and Computation; Oxford Financial Press: Oxford, UK, 1993.

- Monteiro, R.D.C.; Tsuchiya, T. Polynomial convergence of primal–dual algorithms for the second-order cone programs based on the MZ-family of directions. Math. Program. 2000, 88, 61–83.

- Chen, X.D.; Sun, D.; Sun, J. Complementarity functions and numerical experiments for second-order cone complementarity problems. Comput. Optim. Appl. 2003, 25, 39–56.

- Huang, Z.H.; Ni, T. Smoothing algorithms for complementarity problems over symmetric cones. Comput. Optim. Appl. 2010, 45, 557–579.

- Kanzow, C.; Ferenczi, I.; Fukushima, M. On the local convergence of semismooth Newton methods for linear and nonlinear second-order cone programs without strict complementarity. SIAM J. Optim. 2009, 20, 297–320.

- Pan, S.; Chen, J.S. A damped Gauss-Newton method for the second-order cone complementarity problem. Appl. Math. Optim. 2009, 59, 293–318.

- Hayashi, S.; Yamaguchi, T.; Yamashita, N.; Fukushima, M. A matrix-splitting method for symmetric affine second-order cone complementarity problems. J. Comput. Appl. Math. 2005, 175, 335–353.

- Zhang, L.H.; Yang, W.H. An efficient matrix splitting method for the second-order cone complementarity problem. SIAM J. Optim. 2014, 24, 1178–1205.

- Chen, J.S.; Tseng, P. An unconstrained smooth minimization reformulation of the second-order cone complementarity problem. Math. Program. 2005, 104, 293–327.

- Chen, J.S. Two classes of merit functions for the second-order cone complementarity problem. Math. Methods Oper. Res. 2006, 64, 495–519.

- Chen, J.S.; Pan, S. A descent method for a reformulation of the second-order cone complementarity problem. J. Comput. Appl. Math. 2008, 213, 547–558.

- Di Pillo, G.; Grippo, L. An exact penalty function method with global convergence properties for nonlinear programming problems. Math. Program. 1986, 36, 1–18.

- Zangwill, W.I. Nonlinear programming via penalty functions. Manag. Sci. 1967, 13, 344–358.

- Han, S.P.; Mangasarian, O.L. Exact penalty functions in nonlinear programming. Math. Program. 1979, 17, 251–269.

- Bertsekas, D.; Nedić, A.; Ozdaglar, A. Convex Analysis and Optimization; Athena Scientific: Belmont, MA, USA, 2003.

- Wang, S.; Yang, X. A power penalty method for linear complementarity problems. Oper. Res. Lett. 2008, 36, 211–214.

- Huang, C.; Wang, S. A power penalty approach to a nonlinear complementarity problem. Oper. Res. Lett. 2010, 38, 72–76.

- Huang, C.; Wang, S. A penalty method for a mixed nonlinear complementarity problem. Nonlinear Anal. Theory Methods Appl. 2012, 75, 588–597.

- Hao, Z.; Wan, Z.; Chi, X. A power penalty method for second-order cone linear complementarity problems. Oper. Res. Lett. 2015, 43, 137–142.

- Hao, Z.; Wan, Z.; Chi, X.; Chen, J. A power penalty method for second-order cone nonlinear complementarity problems. J. Comput. Appl. Math. 2015, 290, 136–149.

- Chen, C.; Mangasarian, O.L. A class of smoothing functions for nonlinear and mixed complementarity problems. Comput. Optim. Appl. 1996, 5, 97–138.

- Hao, Z.; Nguyen, C.T.; Chen, J.S. An approximate lower order penalty approach for solving second-order cone linear complementarity problems. J. Glob. Optim. 2022, 83, 671–697.

- Chieu, T.N.; Jan, H.A.; Hao, Z.; Chen, J.S. Smoothing penalty approach for solving second-order cone complementarity problems. J. Glob. Optim. 2025, 91, 39–58.

- Hao, Z.; Wan, Z.; Chi, X.; Jin, Z.F. Generalized lower-order penalty algorithm for solving second-order cone mixed complementarity problems. J. Comput. Appl. Math. 2021, 385, 113168.

- Hao, Z.; Wu, Q.; Zhao, C. Smooth-like lower order penalty approach for solving second-order cone mixed complementarity problems. J. Comput. Appl. Math. 2024, 91, 39–58.

- Nguyen, C.T.; Saheya, B.; Chang, Y.L. Unified smoothing functions for absolute value equation associated with second-order cone. Appl. Numer. Math. 2019, 135, 206–227.

- Yong, L.Q. Uniformly smooth approximation function and its properties. J. Shaanxi Univ. Technol. (Natural Sci. Ed.) 2018, 34, 74–79. (In Chinese)

- Okuno, T.; Yasuda, K.; Hayashi, S. SL1QP Based algorithm with trust region technique for solving nonlinear second-order cone programming problems. Interdiscip. Inf. Sci. 2015, 21, 97–107.

- Miao, X.; Chen, J.S.; Ko, C.H. A smoothed NR neural network for solving nonlinear convex programs with second-order cone constraints. Inf. Sci. 2014, 268, 255–270.

- Faraut, J.; Korányi, A. Analysis on Symmetric Cones; Clarendon Press: Oxford, UK, 1994.

- Chen, J.S. SOC Functions and their Applications. In Springer Optimization and Its Applications; Springer: Singapore, 2019; Volume 143.

- Chen, J.S.; Chen, X.; Tseng, P. Analysis of nonsmooth vector-valued functions associated with second-order cones. Math. Program. 2004, 101, 95–117.

- Dolan, E.D.; Moré, J.J. Benchmarking optimization software with performance profiles. Math. Program. 2002, 91, 201–213.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).