Abstract

Few-shot instance segmentation (FSIS) addresses instance segmentation when labeled data for novel classes is scarce. Existing methods, however, are limited both in how flexibly they can add novel classes and in how much memory they consume: the workflow for integrating novel classes is rigid, and class exemplars must be retained during both training and inference, which incurs considerable memory overhead. To overcome these limitations, this study introduces a framework built on a two-stage “base training, novel class fine-tuning” paradigm that learns discriminative instance-level embeddings. Instance embeddings are aggregated into class prototypes, and storing embedding vectors rather than images inherently reduces the memory footprint. A Region of Interest (RoI)-level cosine similarity matching mechanism allows novel classes to be added flexibly, without additional training and without access to historical data. Experiments show that the approach significantly outperforms state-of-the-art techniques on mainstream benchmarks. More importantly, its memory efficiency enables, for the first time, the joint evaluation of FSIS performance across all classes of the COCO dataset. Visualized examples (colored masks and class labels for objects in diverse scenes) further support the effectiveness of the method in complex real-world settings.

Keywords:

few-shot instance segmentation; incremental learning; embedding representation; memory optimization

MSC:

68Q85; 68T27

1. Introduction

In the field of computer vision, instance segmentation emerges as a pivotal task [1,2], demanding the simultaneous identification of individual objects and precise delineation of their pixel-level boundaries within images [3,4]. Its practical significance spans diverse domains: in autonomous driving, it enables accurate segmentation of pedestrians, vehicles, and traffic signs to ensure safe navigation; in medical imaging, it facilitates precise detection and segmentation of tumors, organs, or cellular structures, supporting diagnostic decisions and treatment planning; and in industrial automation, it powers object identification and sorting in manufacturing processes [5]. However, traditional instance segmentation models heavily rely on large-scale, fully annotated datasets for training. Acquiring such datasets is often expensive, time-consuming, or even infeasible due to privacy restrictions, which has catalyzed research into few-shot learning for instance segmentation. In this paradigm, models are required to segment novel object classes using only a minimal number of labeled samples [6]. Furthermore, in dynamic environments where new object classes continuously emerge, the capability to incrementally learn these classes while retaining previously acquired knowledge—known as incremental few-shot instance segmentation—becomes critical for real-time system adaptation and long-term usability.

Over the past few years, significant progress has been made in few-shot instance segmentation, with many methods building on foundational frameworks such as Mask R-CNN [7,8,9]. Some approaches leverage meta-learning to adapt to new classes by learning generalizable features during meta-training on base classes. Nevertheless, existing methods face notable challenges: performance tends to degrade when novel classes exhibit significant intra-class variance [10,11,12], and in incremental learning scenarios, catastrophic forgetting occurs, where models lose proficiency in recognizing previously learned classes as they adapt to new ones. While knowledge distillation has been explored as a potential solution for few-shot learning, effectively handling the incremental addition of new classes while preserving prior knowledge remains an unresolved issue.

Driven by these limitations, this study aims to develop a framework that enables efficient adaptation to novel classes with few samples while preserving prior knowledge. Addressing the unresolved challenges of intra-class [2] variance in novel classes and catastrophic forgetting during incremental learning is central to this endeavor. Enabling accurate incremental few-shot [13] instance segmentation can significantly enhance the adaptability and robustness of computer vision systems in real-world applications, for example, allowing industrial inspection systems to learn new defect classes without the need to retrain on entire historical datasets. Our approach adopts a two-stage training process [8]. In the first stage, base training is conducted on a large-scale base-class dataset using the Mask2Former architecture. The model is optimized to learn generalizable features through a loss function that combines classification and mask losses [14]. In the second stage, novel fine-tuning, the CNN backbone and pixel decoder are frozen to preserve the knowledge acquired from base classes, while the projection layer, cosine similarity classifier, and novel object queries are fine-tuned. To prevent forgetting, local POD knowledge distillation [7] is employed to align the multi-scale features of the novel-adapted model with those of the base model [15]. For the incremental addition of new classes, class representatives for novel classes are computed from the embeddings of few samples and appended to the weight matrix of the cosine similarity classifier, enabling immediate recognition of new classes without retraining the entire model.

The contributions of this work are as follows:

1. We propose a novel framework that integrates a cosine similarity classifier, novel object queries, and local POD distillation, effectively addressing the challenges of intra-class variance in novel classes and catastrophic forgetting in incremental learning. The cosine similarity classifier enhances discriminability by focusing on angular relationships in the feature space, while novel object queries improve adaptation to new classes, and local POD distillation preserves base class knowledge.
2. We design an efficient two-stage training process (base training and novel fine-tuning) that enables the model to learn generalizable base-class features and adapt to novel classes with few samples, all while preserving prior knowledge. This balance between plasticity and stability is critical for incremental learning.
3. We introduce a computationally efficient method for incremental class addition, where new classes can be integrated by simply appending their class representatives to the cosine similarity classifier. This eliminates the need for retraining, making the framework suitable for real-world scenarios where new classes emerge continuously.

The remainder of this paper is organized as follows: Section 2 reviews related work in few-shot instance segmentation and incremental learning. Section 3 details the proposed framework, including the overall architecture, two-stage training process, and key technical components. Section 4 describes the experimental setup, including datasets, evaluation metrics, and implementation details. Finally, Section 5 concludes the study and outlines future research directions.

2. Related Work

2.1. Instance Segmentation

Instance segmentation, a cornerstone of computer vision, demands the concurrent detection of individual objects and the precise delineation of their pixel-level masks [11], serving as a linchpin for applications ranging from autonomous navigation to medical image analysis, where granular object localization is paramount [16]. Early methodological advancements in this field can be categorized into two distinct paradigms: grouping-based [17] and proposal-based approaches [18]. Grouping-based methods, exemplified by frameworks such as MaskLab and ShapeMask [19], operate by first generating dense per-pixel feature representations, which are subsequently refined through post-processing mechanisms to cluster pixels into coherent object instances [20]. These methods leverage semantic priors (e.g., contextual relationships between object categories) and shape priors (e.g., geometric constraints of typical object forms) to enhance the robustness of pixel grouping, particularly in scenarios involving overlapping objects [21] where disambiguating individual instances is inherently challenging. In contrast, proposal-based methods adopt a two-stage pipeline: the initial stage generates candidate regions (often referred to as region proposals) that are likely to contain objects [22], while the second stage processes these proposals to classify object categories, refine bounding box coordinates, and predict pixel-accurate masks [23]. A seminal contribution to this paradigm is Mask R-CNN, which extends the Faster R-CNN architecture by integrating a dedicated mask prediction head. A key innovation of Mask R-CNN [24] is the employment of RoI Align, a technique that preserves the spatial resolution of region-of-interest (RoI) features during pooling, thereby enabling the generation of high-fidelity masks critical for precise instance segmentation. 
In recent years, research has gravitated toward the development of universal architectures capable of unifying instance, semantic, and panoptic segmentation tasks under a single framework [25], aiming to leverage shared representational learning for improved efficiency and performance across diverse segmentation challenges. Mask2Former, a state-of-the-art example of such a universal architecture and the foundation of our work, employs a transformer decoder equipped with masked attention—a mechanism [7,26] that restricts computational operations to regions corresponding to predicted masks, thereby optimizing resource utilization. By formulating segmentation as a mask classification task [27], where each mask is associated with a specific class label, Mask2Former achieves superior performance across multiple segmentation benchmarks [28]. Building upon this robust framework, our approach introduces targeted modifications to the classification head to address the unique constraints of few-shot scenarios, where labeled data for novel classes is severely limited. Specifically, we replace the conventional fully connected layer with a cosine similarity classifier [19], which normalizes both input features and class weight vectors to emphasize angular differences in the feature space [29]. This design choice is rooted in the observation that angular relationships between features and class prototypes are more discriminative in low-data regimes, fostering enhanced generalization to novel classes with minimal labeled examples [30]. Importantly, this adaptation retains Mask2Former’s inherent capability to handle multiple segmentation tasks while directly mitigating the challenges of few-shot instance segmentation, such as overfitting to scarce novel class data [31] and limited transferability from base to novel categories.

2.2. Few-Shot Instance Segmentation (FSIS)

Few-shot instance segmentation (FSIS) [14] emerges as a critical paradigm in computer vision, tasked with addressing the challenge of segmenting novel object classes from extremely limited labeled samples—typically 1 to 5 annotated instances per class [32]. This framework bridges the inherent gap between the scarcity of labeled data for emerging categories and the growing demand for precise [33], instance-level understanding in real-world applications, where acquiring large-scale annotations for every potential class is often infeasible. Unlike traditional instance segmentation methods [34], which rely heavily on massive annotated datasets to achieve robust performance, FSIS mandates that models generalize effectively to novel classes by leveraging prior knowledge learned from base classes, i.e., categories with abundant labeled data [35]. This transfer learning capability is central to FSIS, as it enables models to adapt to new classes without re-training from scratch, even when only a handful of examples are available. Existing approaches to FSIS primarily focus on integrating information from the “support set”—the limited samples of novel classes—into segmentation frameworks to guide predictions on “query images” containing unseen instances of both base and novel classes. Meta R-CNN, a pioneering method in this space, employs meta-learning strategies to compute class-attentive vectors from cropped regions of support set images [36]. These vectors, which encapsulate discriminative features specific to the novel class, are concatenated with feature maps extracted from the query image, thereby enhancing the model’s ability to predict accurate masks for novel instances by infusing class-specific guidance into the segmentation pipeline. Siamese Mask R-CNN, another notable approach, adopts a siamese network architecture to extract embeddings from both support and query images via shared weights. 
By performing subtraction operations on these embeddings [37], the model emphasizes discriminative regions that are unique to the novel class, effectively isolating the spatial extent of novel instances within the query image. This design leverages the similarity between support and query features to highlight class-specific patterns, aiding in the differentiation of novel objects from background or base-class instances. The fully guided network (FGN) [9] takes a more comprehensive approach by injecting support set feature embeddings into multiple stages of the segmentation pipeline. This includes the region proposal network (RPN) [23] for generating candidate object regions, the RoI detector for refining object localization, and the mask upsampling layers responsible for producing high-resolution segmentation masks [38]. Through guided operations that align support set embeddings with query image features at each stage, FGN aims to refine both the localization accuracy of bounding boxes and the precision of pixel-level masks, ensuring that novel class instances are both correctly identified and precisely delineated. Despite their contributions, these methods suffer from critical limitations that impede their practical applicability [39]. Most notably, they rely on episodic training, a regime where each training episode simulates a few-shot task by sampling a subset of base and novel classes, along with corresponding support and query sets. This episodic setup, while effective for meta-learning, introduces significant computational overhead, as the model must repeatedly adapt to new task configurations during training [40]. Furthermore, these approaches demand persistent access to support set samples during testing: at inference time, the model requires the support set to compute class-specific features or embeddings, which are then used to guide predictions on the query image. 
This dependency not only increases latency in real-time applications but also leads to substantial memory usage, particularly when handling multiple novel classes or larger support sets (e.g., 10-shot scenarios) [41]. Such constraints make these methods impractical for scenarios requiring the flexible, on-the-fly addition of novel classes—for example, in dynamic environments where new object categories emerge unpredictably [42]. These limitations underscore the pressing need for more efficient FSIS frameworks that minimize reliance on support set data during inference, reduce computational and memory burdens, and enable seamless integration of novel classes into pre-trained models.

2.3. Incremental FSIS

Incremental few-shot instance segmentation (incremental FSIS) represents a pivotal advancement in the field of few-shot learning, extending the capabilities of traditional few-shot instance segmentation (FSIS) by enabling models to sequentially learn novel classes while preserving previously acquired knowledge of base classes. This paradigm directly addresses the critical “catastrophic forgetting” challenge—a phenomenon where models trained on new data tend to degrade performance on previously learned tasks—making it indispensable for dynamic real-world scenarios [43]. In applications such as autonomous driving, where object categories continuously expand (e.g., newly defined road users or obstacles), or industrial inspection, where new product variants emerge over time, retraining models on the entire dataset (encompassing both base and novel classes) is often impractical due to computational constraints, data privacy issues [44], or the sheer volume of cumulative data. Thus, incremental FSIS bridges the gap between the need for continuous adaptation and the limitations of resource-intensive retraining. iMTFA (incremental Mask-TFA) [45], introduced in Ganea et al.’s work, stands as the first method dedicated to tackling incremental FSIS [46], building upon the foundational Mask R-CNN architecture to achieve this adaptive flexibility. At its core, iMTFA reimagines the network’s functionality by integrating an instance feature extractor (IFE), a specialized module designed to generate discriminative, class-agnostic instance embeddings. These embeddings capture essential visual characteristics of objects in a high-dimensional feature space [47], enabling the model to encode both base and novel class instances into a shared representational framework. For each novel class, given K labeled samples (typically 1–10 shots), the method computes class representatives as the mean of embeddings derived from these K instances. 
These representatives are stored as compact vector representations within a cosine similarity classifier, a strategic choice that eliminates the need to retain raw support set images, thus drastically reducing memory overhead compared to non-incremental FSIS [46] methods which often require storing entire support set samples for reference during inference. A key innovation of iMTFA lies in its adoption of class-agnostic box regressors and mask predictors. Unlike class-specific modules, these components operate independently of the target class, focusing solely on predicting bounding box coordinates and pixel-level masks based on general instance features. This design decouples localization and segmentation from class identity [48], ensuring that the model retains these capabilities when adapting to new classes. Complementing this, the cosine similarity classifier computes classification scores by measuring angular distances between region-of-interest (RoI) embeddings (extracted from query images) and precomputed class representatives [49]. This emphasis on angular relationships in the feature space enhances discriminability, especially for novel classes with limited samples, as it prioritizes directional similarities over magnitude differences [50]. Crucially, this architecture enables the seamless addition of new classes: to incorporate a novel category, one need only compute its class representative from the available K shots and append this vector to the classifier’s weight matrix. This process requires no retraining of the underlying network [51], eliminating the computational burden of episodic training—a staple of many non-incremental FSIS methods—and removing the dependency on support set access during testing. 
By doing so, iMTFA [45] resolves critical limitations of prior approaches: it mitigates memory bottlenecks associated with storing support data, streamlines the integration of new classes, and maintains performance on base classes while adapting to novel ones. In essence, iMTFA [45] strikes a balance between plasticity (the ability to learn new classes) and stability (the retention of old knowledge), laying the groundwork for practical, scalable incremental FSIS frameworks that can operate in dynamic environments with evolving object categories.

2.4. Key Technologies

Cosine similarity classifiers stand as a cornerstone in enabling effective incremental few-shot learning, offering a robust mechanism with which to handle the challenges of limited data and dynamic class expansion. By normalizing both the input feature vectors (extracted from object instances) and the class weight vectors (representing learned category prototypes) [52], these classifiers shift the focus from magnitude-based differences to angular relationships within the feature space. This normalization ensures that the similarity metric is inherently invariant to feature scaling, making it particularly adept at capturing subtle yet discriminative inter-class differences—an essential property when generalizing to novel classes with only 1–5 labeled samples. In the iMTFA [45] framework (Ganea et al., CVPR 2021), this mechanism is strategically employed to match region-of-interest (RoI) instance embeddings (derived from query images) with precomputed class representatives. These representatives, calculated as the mean of embeddings from the limited support shots of novel classes, serve as compact proxies for their respective categories. A key advantage of this design is its [8] support for incremental class addition: new classes can be seamlessly integrated by simply appending their mean embeddings to the classifier’s repository of class representatives, eliminating the need for retraining the entire network. This not only drastically reduces computational overhead but also unifies the recognition process for both base classes (with abundant data) and novel classes (with scarce data) under a single framework, ensuring consistent performance across all categories. Knowledge distillation, and in particular the local POD (pooled output distillation) technique, plays a critical role in mitigating catastrophic forgetting—a major hurdle in incremental learning where models often show degraded performance on previously learned base classes when adapting to new ones [12]. 
Local POD distillation operates by preserving the fine-grained spatial relationships inherent to base classes through a multi-scale pooling strategy [53]. This approach extracts local embeddings at varying levels of granularity from the teacher model, which has been trained on base classes, capturing both global context and local details that are vital for accurate segmentation [54]. During the novel fine-tuning phase, the student model (adapted to new classes) is constrained to align its local embeddings with those of the teacher model via an L2 loss function [55]. This alignment ensures that the structural information specific to base classes is retained, even as the student learns to recognize novel categories, thereby maintaining segmentation accuracy for old classes without sacrificing the ability to adapt to new ones [46]. Despite their contributions, existing few-shot instance segmentation (FSIS) methods often lack the incremental flexibility required for real-world applications, as they typically rely on episodic training or require persistent access to support sets during inference. In contrast, while iMTFA [45] pioneers incremental FSIS through its cosine similarity classifier, its use of class-agnostic heads for bounding box regression and mask prediction introduces limitations in segmentation precision, as these heads are not tailored to the unique characteristics of individual classes [56]. To address these shortcomings, our proposed framework integrates the strengths of cosine similarity classifiers and local POD distillation, aiming to strike a balanced trade-off between the ability to incrementally incorporate new classes and the maintenance of high segmentation accuracy across both base and novel categories. 
This synergy leverages the discriminative power of angular similarity for class generalization while preserving critical spatial knowledge of base classes [57], thereby advancing the practicality of incremental few-shot instance segmentation systems [45].
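The scale invariance attributed to cosine similarity classifiers in this section can be checked with a minimal sketch. It is an illustration only, not the implementation used in this work: the function names, the 3-dimensional embeddings, and the two class representatives are hypothetical, and real embeddings are much higher-dimensional.

```python
import math

def l2_normalize(v):
    """Return v rescaled to unit L2 norm (v is assumed to be non-zero)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cosine_scores(embedding, class_representatives):
    """Cosine similarity between one RoI embedding and every class representative."""
    e = l2_normalize(embedding)
    return [sum(a * b for a, b in zip(e, l2_normalize(w)))
            for w in class_representatives]

# Hypothetical toy data: two class representatives and one RoI embedding.
representatives = [[1.0, 0.0, 0.0], [0.0, 2.0, 2.0]]
roi_embedding = [0.5, 3.0, 3.0]

scores = cosine_scores(roi_embedding, representatives)
# Rescaling the embedding changes its magnitude but not its direction.
scaled_scores = cosine_scores([10.0 * x for x in roi_embedding], representatives)
predicted_class = max(range(len(scores)), key=scores.__getitem__)
```

Because both the embedding and the representatives are normalized, `scores` and `scaled_scores` agree up to floating-point error, so the predicted class depends only on direction in the feature space.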

3. Methodology

This section details our proposed framework for incremental few-shot instance segmentation, which builds on the Mask2Former architecture with critical modifications to enable flexible learning of novel classes while preserving base class knowledge. We first outline the overall architecture, followed by a detailed description of the two-stage training process and key technical components.

3.1. Framework Overview

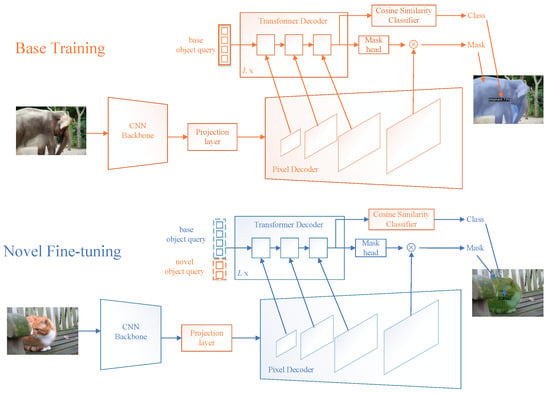

Our framework retains the core structure of Mask2Former, which consists of a CNN backbone, pixel decoder, and transformer decoder. To adapt it to incremental few-shot scenarios, three key modifications are introduced. Specifically, we replace the original fully connected classification head with a cosine similarity classifier [9]. This replacement aims to enhance the discriminability between the embeddings of base and novel classes, enabling more precise differentiation in the context of few-shot learning. Additionally, a dedicated set of novel object queries is added within the transformer decoder. These queries are distinct from the base object queries that are trained on base classes, and their role is to capture features that are specific to novel classes, facilitating the model’s ability to learn about these new categories. Moreover, local POD knowledge distillation is applied [12]. During the fine-tuning process, this technique performs feature alignment between the base and novel models, which is crucial for mitigating catastrophic forgetting—a common issue where learning new knowledge causes the model to lose previously acquired information about base classes. Overall, the framework operates through two sequential stages. First, base training is carried out on large-scale base-class data. This stage is designed to enable the model to learn generalizable features that can serve as a foundation [58]. Second, novel fine-tuning is performed on limited novel class samples. The goal of this stage is to adapt the model to new classes while preserving the prior knowledge gained from the base training stage (Figure 1).

Figure 1. Flowchart of the two-stage process: “base training”, which trains a series of modules including a backbone network, predictor, and pixel decoder, and “novel fine-tuning”, which uses a transformer decoder together with the existing components to adapt to new data.

3.2. Base Training Stage

In the base training stage, the overarching objective is to establish a robust feature extraction foundation via end-to-end training of the model on base-class data [13]. This process entails the joint optimization of core architectural components, namely the CNN backbone, pixel decoder, transformer decoder [59], projection layer, and cosine similarity classifier. A pivotal element in directing the learning dynamics is the total loss function [60], which integrates classification and mask losses to enforce comprehensive supervision during model parameter updates.

The total loss for the base training phase, denoted $\mathcal{L}_{\text{base}}$, is the sum of two components: the classification loss ($\mathcal{L}_{\text{cls}}$) and the mask loss ($\mathcal{L}_{\text{mask}}$). Formally, this relationship is expressed by the following equation:

$$\mathcal{L}_{\text{base}} = \mathcal{L}_{\text{cls}} + \mathcal{L}_{\text{mask}}$$

Within this formulation, $\mathcal{L}_{\text{cls}}$ is a sigmoid focal loss, chosen for its ability to mitigate class imbalance within the base-class distribution. By down-weighting well-classified examples, which tend to come from overrepresented categories, the focal loss keeps underrepresented classes from being swamped during training and thereby encourages balanced feature learning across all classes.

The mask loss ($\mathcal{L}_{\text{mask}}$) is formulated as a weighted linear combination of the binary cross-entropy (BCE) loss ($\mathcal{L}_{\text{bce}}$) and the dice loss ($\mathcal{L}_{\text{dice}}$). Mathematically, this is defined as

$$\mathcal{L}_{\text{mask}} = \lambda_{\text{bce}} \mathcal{L}_{\text{bce}} + \lambda_{\text{dice}} \mathcal{L}_{\text{dice}}$$

Here, $\lambda_{\text{bce}}$ and $\lambda_{\text{dice}}$ are hyperparameters, empirically set to 1.0 and 0.5, respectively. The BCE loss penalizes per-pixel classification errors, while the dice loss rewards overlap between predicted and ground-truth masks. Weighted in this way, the two terms jointly enforce accurate pixel-level segmentation.
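The composition of the base training loss described above can be sketched in plain Python. This is a minimal illustration under stated assumptions rather than the training code of this work: the focal-loss parameters `alpha=0.25` and `gamma=2.0` are the commonly used defaults and are not given in the text, the assignment of the weights 1.0 and 0.5 to the BCE and dice terms follows the order in which the two losses are listed, and all function names and toy inputs are hypothetical.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_focal_loss(logits, targets, alpha=0.25, gamma=2.0):
    """Mean sigmoid focal loss over binary (one-vs-rest) class targets.

    alpha and gamma are assumed defaults, not values stated in the paper.
    """
    total = 0.0
    for z, t in zip(logits, targets):
        p = sigmoid(z)
        p_t = p if t == 1 else 1.0 - p              # probability of the true label
        alpha_t = alpha if t == 1 else 1.0 - alpha
        # (1 - p_t)^gamma shrinks the loss of well-classified examples.
        total += -alpha_t * (1.0 - p_t) ** gamma * math.log(max(p_t, 1e-12))
    return total / len(logits)

def bce_loss(probs, targets, eps=1e-7):
    """Mean per-pixel binary cross-entropy on mask probabilities."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)             # avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(probs)

def dice_loss(probs, targets, eps=1.0):
    """One minus the (smoothed) dice overlap between prediction and ground truth."""
    intersection = sum(p * t for p, t in zip(probs, targets))
    return 1.0 - (2.0 * intersection + eps) / (sum(probs) + sum(targets) + eps)

def base_loss(cls_logits, cls_targets, mask_probs, mask_targets,
              w_bce=1.0, w_dice=0.5):
    """Classification loss plus weighted BCE and dice mask losses."""
    l_cls = sigmoid_focal_loss(cls_logits, cls_targets)
    l_mask = (w_bce * bce_loss(mask_probs, mask_targets)
              + w_dice * dice_loss(mask_probs, mask_targets))
    return l_cls + l_mask

# Hypothetical toy example: a flattened 4-pixel mask and two class logits.
good_loss = base_loss([3.0, -3.0], [1, 0], [0.9, 0.1, 0.8, 0.2], [1, 0, 1, 0])
bad_loss = base_loss([-3.0, 3.0], [1, 0], [0.1, 0.9, 0.2, 0.8], [1, 0, 1, 0])
```

In this toy setting, predictions that agree with the targets yield a smaller total loss than predictions that contradict them, and a confidently correct class logit contributes far less to the focal term than a confidently wrong one.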

Through this integrated training regimen [61], the base training stage yields a critical output: a base model instantiation. This model comprises pre-trained base object queries, a projection layer engineered to output multi-scale feature representations (critical for capturing contextual information across varying spatial resolutions), and a cosine similarity classifier [62]. The classifier is initialized with base-class representatives, which are derived from the learned feature distributions of the base classes. This foundational configuration establishes the necessary prerequisites for subsequent stages, wherein the model adapts to novel classes while preserving the knowledge accretion pertaining to base categories.

3.3. Novel Fine-Tuning Stage

The second stage adapts the base model to novel classes using limited samples (K shots per class) while freezing the CNN backbone and pixel decoder to preserve base class knowledge. Three components are fine-tuned: the projection layer, cosine similarity classifier, and newly added novel object queries.

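We introduce a set of novel object queries in the transformer decoder, distinct from the base object queries, to focus on learning novel class characteristics. These queries are initialized randomly and optimized during fine-tuning. To prevent forgetting of base-class features, we align multi-scale features from the novel model (student) with those of the base model (teacher) using local POD (pooled output distillation). First, the projection layer of each model outputs a multi-scale feature set, $\{F_l^{b}\}_{l=1}^{L}$ (base) and $\{F_l^{n}\}_{l=1}^{L}$ (novel), where each $F_l \in \mathbb{R}^{H_l \times W_l \times C}$. For each feature map $F_l$, we compute local POD embeddings across scales $s \in \mathcal{S}$. At scale $s$, $F_l$ is divided into $s \times s$ subregions, and each subregion’s embedding is computed as the concatenation of its width-pooled and height-pooled features. Then, we align student and teacher local embeddings using an L2 loss:

$$\mathcal{L}_{\text{kd}} = \frac{1}{L} \sum_{l=1}^{L} \left\lVert \Psi(F_l^{b}) - \Psi(F_l^{n}) \right\rVert_2^2$$

where $\Psi(F_l)$ denotes the concatenation of local POD embeddings of $F_l$ across all scales.

The loss function combines classification, mask, and distillation losses to balance novel class adaptation and base class retention:

$$\mathcal{L}_{\text{novel}} = \mathcal{L}_{\text{cls}} + \mathcal{L}_{\text{mask}} + \lambda_{\text{kd}} \mathcal{L}_{\text{kd}}$$

where the classification loss $\mathcal{L}_{\text{cls}}$ and the mask loss $\mathcal{L}_{\text{mask}}$ are defined as in the base stage, and the distillation loss $\mathcal{L}_{\text{kd}}$ is weighted by $\lambda_{\text{kd}}$.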
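The local POD distillation described above can be sketched on small nested-list feature maps. This is an illustrative sketch under several assumptions, not the implementation used in this work: pooling is taken to be a mean, a scale `s` is taken to split the map into `s × s` subregions, the scale set `(1, 2)` is arbitrary, the loss is averaged over feature levels, and every function name and toy value is hypothetical.

```python
def pod_embedding(region):
    """Width-pooled and height-pooled features of one region, concatenated.

    region is indexed as region[h][w][c]; mean pooling is an assumption.
    """
    H, W, C = len(region), len(region[0]), len(region[0][0])
    width_pooled = [sum(region[h][w][c] for w in range(W)) / W
                    for h in range(H) for c in range(C)]
    height_pooled = [sum(region[h][w][c] for h in range(H)) / H
                     for w in range(W) for c in range(C)]
    return width_pooled + height_pooled

def local_pod(feature_map, scales=(1, 2)):
    """Concatenate POD embeddings of all s x s subregions over all scales."""
    H, W = len(feature_map), len(feature_map[0])
    embedding = []
    for s in scales:
        for i in range(s):
            for j in range(s):
                rows = feature_map[i * H // s:(i + 1) * H // s]
                region = [row[j * W // s:(j + 1) * W // s] for row in rows]
                embedding.extend(pod_embedding(region))
    return embedding

def distillation_loss(teacher_maps, student_maps, scales=(1, 2)):
    """Squared L2 distance between local POD embeddings, averaged over levels."""
    total = 0.0
    for t_map, s_map in zip(teacher_maps, student_maps):
        t_emb = local_pod(t_map, scales)
        s_emb = local_pod(s_map, scales)
        total += sum((a - b) ** 2 for a, b in zip(t_emb, s_emb))
    return total / len(teacher_maps)

def fine_tuning_loss(l_cls, l_mask, l_kd, lambda_kd):
    """Classification + mask + weighted distillation loss; lambda_kd is user-chosen."""
    return l_cls + l_mask + lambda_kd * l_kd

# Hypothetical 4 x 4 x 1 feature maps for a single feature level.
teacher = [[[float(h * 4 + w)] for w in range(4)] for h in range(4)]
student = [[[v for v in pixel] for pixel in row] for row in teacher]
student[0][0][0] += 1.0  # perturb one activation of the student

same_loss = distillation_loss([teacher], [teacher])
drift_loss = distillation_loss([teacher], [student])
```

With these settings, a 4 × 4 × 1 map yields a 24-dimensional local POD embedding (8 values at scale 1 and 16 at scale 2). Identical teacher and student maps give zero loss, while a single perturbed activation gives a positive loss that penalizes drift away from the base model.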

3.4. Incremental Class Addition

To add new novel classes incrementally, we use the fine-tuned model to compute class representatives. For each new class with K shots, we first extract instance embeddings from the projection layer; these embeddings capture the feature representations of the novel class instances. We then compute the class representative $w_c$ as the normalized mean of these embeddings, defined by the following formula:

$$w_c = \frac{\frac{1}{K} \sum_{i=1}^{K} e_i}{\left\lVert \frac{1}{K} \sum_{i=1}^{K} e_i \right\rVert_2}$$

where $e_i$ denotes the embedding of the i-th shot. The normalization gives the class representative unit length, so that classification depends only on angular relationships in the feature space. Finally, we append the computed $w_c$ to the weight matrix of the cosine similarity classifier. The model can then recognize the new class immediately, without retraining. This process extends the model to novel classes incrementally while maintaining computational efficiency.
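The incremental class addition described above amounts to a mean, a normalization, and an append. The following sketch illustrates this with toy data; the `CosineClassifier` class, its method names, the class names, and the 3-dimensional embeddings are all hypothetical and stand in for the projection-layer embeddings and classifier weight matrix of the actual model.

```python
import math

def class_representative(shot_embeddings):
    """Normalized mean of the K shot embeddings of one class."""
    k = len(shot_embeddings)
    dim = len(shot_embeddings[0])
    mean = [sum(e[d] for e in shot_embeddings) / k for d in range(dim)]
    norm = math.sqrt(sum(x * x for x in mean))
    return [x / norm for x in mean]

class CosineClassifier:
    """Toy cosine similarity classifier whose weight rows are unit-norm representatives."""

    def __init__(self, representatives, names):
        self.weights = [list(w) for w in representatives]
        self.names = list(names)

    def add_class(self, name, shot_embeddings):
        """Append a new class representative; no existing weight is modified."""
        self.weights.append(class_representative(shot_embeddings))
        self.names.append(name)

    def predict(self, embedding):
        """Return the name of the class with the highest cosine similarity."""
        norm = math.sqrt(sum(x * x for x in embedding))
        scores = [sum(a * b for a, b in zip(embedding, w)) / norm
                  for w in self.weights]
        return self.names[max(range(len(scores)), key=scores.__getitem__)]

# Hypothetical base classes and a 2-shot novel class.
classifier = CosineClassifier([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]],
                              ["base_a", "base_b"])
query = [0.2, 0.1, 2.0]
before = classifier.predict(query)

classifier.add_class("novel_c", [[0.1, 0.0, 0.9], [0.0, 0.2, 1.1]])
after = classifier.predict(query)
```

Before the novel class is added, the query is forced onto the closest base class; once the representative is appended, the same query is assigned to the novel class, and the base-class rows of the weight matrix remain untouched.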

This two-stage approach capitalizes on the universal segmentation prowess inherent in Mask2Former, bolsters discriminative power through cosine similarity-based classification, and safeguards prior knowledge via local POD distillation. In so doing, it facilitates efficient and accurate incremental few-shot instance segmentation, surmounting the challenges of knowledge retention and novel class adaptation in dynamic learning scenarios.

4. Experiment

This section validates the proposed incremental few-shot instance segmentation framework through a series of controlled experiments. The evaluations cover multiple datasets and few-shot settings, compare against state-of-the-art baselines, and analyze the impact of core components and hyperparameters.

4.1. Experiment Setup

To validate the performance of the proposed incremental few-shot instance segmentation framework, we design a series of experiments that follow standardized protocols. The setup comprises four components: datasets, baselines, implementation details, and experimental environment, as described below.

4.2. Datasets

We utilize two benchmark datasets to evaluate the framework’s generalization capability in both intra-dataset and cross-dataset scenarios.

COCO Dataset [46]: As the primary evaluation benchmark, COCO contains 80 object classes with dense annotations. Following the widely adopted split in FSIS research [10,45], we divide the classes into 60 base classes (e.g., “bear”, “zebra”, “umbrella”) and 20 novel classes (e.g., “airplane”, “bus”, “dining table”), where the novel classes are those shared with the VOC dataset, to ensure consistency with existing evaluations. The dataset includes 80,000 training images (1.5 million instances), 35,000 validation images [63], and 5000 test images, covering diverse scenes such as urban streets, rural landscapes, and indoor spaces. This diversity allows us to assess the model’s performance across varying object scales (from small objects like “cup” to large ones like “truck”), occlusion levels, and lighting conditions.

COCO2VOC Cross-Dataset Scenario: To test cross-dataset generalization, an essential property for real-world applications, we train the model on COCO base classes and evaluate it on the union of the VOC2007 and VOC2012 validation sets [12]. VOC contains 20 classes (e.g., “cat”, “cow”, “potted plant”, “sofa”), all of which have counterparts in COCO and correspond to the novel classes of the COCO split. This setup introduces distribution shifts in image resolution (VOC images are generally smaller), annotation density (VOC has sparser instance labels), and object appearance, challenging the model’s adaptability to unseen data characteristics.

4.3. Baselines

We compare our framework against four representative methods to highlight its advantages in incremental few-shot learning.

MRCN+ft-full [14]: A non-incremental baseline based on Mask R-CNN. For each new task, the entire model is retrained on the combined data of base and novel classes. This approach represents the performance upper bound when computational resources are unrestricted. However, it is highly susceptible to catastrophic forgetting, where the model forgets previously learned base-class knowledge while adapting to new classes. Additionally, the high retraining costs, both in terms of time and computational resources, render it impractical for dynamic real-world scenarios where new classes emerge continuously.

Meta R-CNN [10]: A meta-learning approach that employs episodic training. During meta-training on base classes, it learns transferable knowledge. Each episode mimics a few-shot task, allowing the model to quickly adapt to novel classes through a class-specific classifier. While it demonstrates effectiveness in few-shot generalization [64], it has a significant drawback in incremental learning. For every new class, the meta-learner needs to be re-initialized, and it lacks the ability to retain knowledge of previously added novel classes, making it unsuitable for scenarios requiring continuous learning.

iMTFA [45]: The first dedicated incremental FSIS method. It uses an instance feature extractor (IFE) to generate class-agnostic embeddings. Novel class representatives, which are the mean of support embeddings, are stored in a cosine similarity classifier, enabling incremental class addition without the need for full retraining. Nevertheless, its class-agnostic mask predictor limits the segmentation precision for novel classes. Moreover, aside from embedding alignment [65], it lacks explicit and robust mechanisms to effectively mitigate catastrophic forgetting, especially when dealing with a large number of incremental class additions.

ONCE [66]: ONCE is the first detector designed to address the incremental few-shot detection (IFSD) problem. It adapts the efficient CentreNet detector [67] to the few-shot learning scenario.

4.4. Implementation Details

Evaluation Scenarios: We evaluate under 1-shot, 5-shot, and 10-shot settings, where each novel class is provided with $K = 1$, $K = 5$, or $K = 10$ labeled samples, respectively. These settings simulate varying levels of data scarcity: 1-shot tests extreme few-shot learning, 5-shot represents typical low-resource scenarios, and 10-shot serves as a near-saturated case. For each scenario, support samples are randomly selected from the validation split, with no overlap with base-class training data to avoid data leakage.

Training Protocols: The model is trained on COCO base classes for 368,750 iterations with a batch size of 8 (4 images per GPU), using the Adam optimizer with an initial learning rate of . Novel Fine-tuning: For each shot setting, the CNN backbone and pixel decoder are frozen, while the projection layer, cosine similarity classifier, and novel object queries are fine-tuned for 3000 (1-shot), 5000 (5-shot), or 7000 (10-shot) iterations. The learning rate is reduced to to prevent overfitting to scarce novel data.

Evaluation Metrics: Following COCO standards [46], we report AP (average precision averaged over IoU thresholds [0.5:0.05:0.95]), which measures overall segmentation accuracy, and AP50 (average precision at an IoU threshold of 0.5), which emphasizes coarse localization and is critical for real-time applications.
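To make the threshold sweep concrete, the sketch below computes the mask IoU of one prediction against one ground-truth mask and checks it against the ten COCO thresholds. It covers only the matching criterion: a full AP computation also ranks predictions by score and integrates the precision-recall curve, which is omitted here. The toy masks and the helper name `mask_iou` are illustrative assumptions.

```python
import numpy as np

def mask_iou(pred, gt):
    """Intersection over union of two binary masks of equal shape."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    union = np.logical_or(pred, gt).sum()
    return float(np.logical_and(pred, gt).sum() / union) if union else 0.0

# The ten thresholds 0.50, 0.55, ..., 0.95 used for COCO-style AP.
iou_thresholds = np.linspace(0.5, 0.95, 10)

gt = np.zeros((10, 10), dtype=bool)
gt[2:8, 2:8] = True                        # 6 x 6 ground-truth square
pred = np.zeros((10, 10), dtype=bool)
pred[2:8, 3:9] = True                      # same square shifted by one column

iou = mask_iou(pred, gt)                   # intersection 30 px, union 42 px
matched = iou >= iou_thresholds            # True where the prediction counts as a hit
```

Here the one-column shift still counts as a match for AP50 but fails the stricter thresholds, which is why AP50 is described as a measure of coarse localization.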

Metrics are reported for three categories: All Classes (base + novel), Base Classes (to quantify forgetting), and Novel Classes (to assess few-shot generalization). Statistical Validation: Each experiment is repeated 10 times with different random seeds to account for variability in support sample selection. Results are presented as mean ± standard deviation, with statistical significance (p < 0.05) verified via paired t-tests against baselines.
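The reporting protocol (mean ± standard deviation over seeds, paired t-test against a baseline) can be sketched with the Python standard library alone. The per-seed values below are hypothetical placeholders, not results from this study, and the helper `paired_t_statistic` is an illustrative name; the decision uses the standard two-sided critical value for 9 degrees of freedom.

```python
import math
import statistics

def paired_t_statistic(a, b):
    """t statistic and degrees of freedom for paired samples a and b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)         # sample standard deviation
    return mean_d / (sd_d / math.sqrt(n)), n - 1

# Hypothetical AP values for 10 seeds (NOT results from the paper).
ours = [10.1, 9.8, 10.3, 9.9, 10.0, 10.2, 9.7, 10.1, 10.0, 9.9]
base = [9.0, 8.9, 9.2, 8.7, 9.1, 9.0, 8.8, 9.1, 8.9, 8.8]

t_stat, dof = paired_t_statistic(ours, base)
summary = f"{statistics.mean(ours):.2f} ± {statistics.stdev(ours):.2f}"

T_CRIT_DOF9 = 2.262   # two-sided critical value for p = 0.05 with 9 degrees of freedom
significant = abs(t_stat) > T_CRIT_DOF9
```

Pairing by seed matters because both methods see the same randomly drawn support samples in each run, so the test compares per-seed differences rather than two independent groups.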

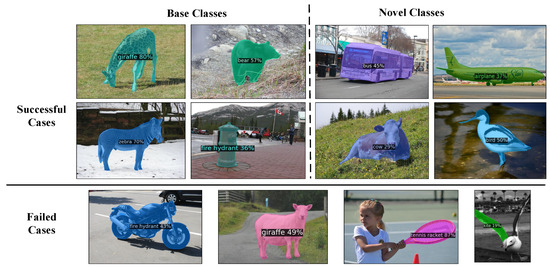

Qualitative Analysis: We visualize segmentation results for key cases, including successful novel class segmentation, failure modes (e.g., small objects, heavy occlusion), and cross-dataset transfers. These visualizations complement quantitative metrics by revealing the model’s behavior in complex scenarios.

4.5. Experimental Environment

The framework is implemented in PyTorch 1.10.1 with torchvision 0.11.2. Training and inference are conducted on a workstation with the following configuration:

- GPUs: Two NVIDIA RTX 3090 (24 GB GDDR6X each), enabling parallel processing and large batch training.

- CPU: Intel Xeon W-1290 (10 cores, 3.2 GHz) with 32 GB DDR4 RAM, supporting data preprocessing and model deployment.

- Software: CUDA 11.3, cuDNN 8.2, and Python 3.8. Dependencies include OpenCV 4.5.3 (image augmentation), matplotlib 3.5.1 (visualization), and scikit-learn 1.0.2 (statistical analysis).

To optimize resource usage, we employ mixed-precision training (FP16) to reduce memory consumption by 40% without performance loss, and gradient accumulation (4 steps) to simulate larger batch sizes when GPU memory is constrained.
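Why gradient accumulation simulates a larger batch can be checked numerically. The sketch below uses a toy linear model with a mean-squared-error loss in NumPy rather than the actual segmentation network; the model, data, and names are assumptions chosen only to show that averaging four micro-batch gradients reproduces the gradient of one batch four times as large.

```python
import numpy as np

def mse_grad(w, x, y):
    """Gradient of the mean squared error for a linear model y_hat = x @ w."""
    return 2.0 * x.T @ (x @ w - y) / len(y)

rng = np.random.default_rng(0)
x = rng.normal(size=(32, 5))
y = rng.normal(size=32)
w = np.zeros(5)

accum_steps = 4
full_grad = mse_grad(w, x, y)              # one large batch of 32 samples

accumulated = np.zeros_like(w)
for xb, yb in zip(np.split(x, accum_steps), np.split(y, accum_steps)):
    # Scale each micro-batch gradient so the running sum is an average.
    accumulated += mse_grad(w, xb, yb) / accum_steps

# A single optimizer step would now be taken with `accumulated`.
```

The equivalence is exact only for losses averaged over samples with equal-sized micro-batches; layers whose statistics depend on the batch (for example, batch normalization) break it.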

4.6. Comparative Experiments

This section compares our framework with state-of-the-art methods on COCO and on the cross-dataset COCO2VOC scenario; the results are reported in Table 1, Table 2, Table 3, and Table 4. On COCO, our method outperforms the baselines across shot settings. In the 5-shot setting, it reaches 6.75 AP, exceeding iMTFA and Meta R-CNN (2.80); in the 10-shot setting, its 14.47 AP50 is 45.3% higher than that of iMTFA, indicating stronger coarse localization. In the COCO2VOC cross-dataset evaluation, our method generalizes robustly and outperforms iMTFA, achieving 3.61 AP/5.75 AP50 (1-shot), 9.17 AP/14.39 AP50 (5-shot), and 12.65 AP/19.75 AP50 (10-shot). We attribute these gains to the combination of cosine similarity classification, which improves discriminability, and local POD distillation, which mitigates forgetting; iMTFA, by contrast, relies on class-agnostic heads that limit precision. Together, these components balance adaptability and knowledge retention for incremental few-shot instance segmentation.

Table 1.

Performance metrics of detection and segmentation tasks with varied settings.

Table 2.

COCO dataset: comprehensive performance comparison across methods.

Table 3.

COCO2VOC: This table compares the AP and AP50 of methods using 1-shot, 5-shot, and 10-shot settings in the COCO2VOC cross-dataset scenario.

Table 4.

FSIS results on the COCO dataset.

4.7. Ablation Studies

To validate the core components, we conduct ablations under the COCO 5-shot setting (Table 5). Removing the novel object queries reduces AP by 1.82 (6.75→4.93), confirming their role in capturing novel class-specific features. Disabling local POD distillation causes a 2.15 AP drop (6.75→4.60) and a 3.21 AP50 drop (10.23→7.02), highlighting its efficacy in preserving base-class knowledge. Replacing the cosine similarity classifier with a linear classifier decreases AP by 1.54 (6.75→5.21), as angular similarity better captures inter-class differences in few-shot scenarios. These results confirm that each component is necessary and that the components contribute jointly to incremental few-shot segmentation performance.

Table 5.

Results of the ablation experiment. ✓ indicates that the component is enabled.

4.8. Parameter Sensitivity

To analyze the influence of critical hyperparameters on our framework’s performance, we conduct experiments under the COCO 5-shot setting, focusing on three parameters. First, for the learning rate during novel fine-tuning, we test three values and find that the selected rate yields the best average precision (6.75 AP): it balances adaptation to novel classes against retention of base-class knowledge, whereas lower rates risk insufficient adaptation and higher rates can disrupt learned representations. Second, for the number of novel object queries, we evaluate 20, 40, and 60 queries. Performance peaks at 40 queries (6.75 AP); fewer queries (20) underfit (5.92 AP) because they capture too little of the novel-class variation, while more queries (60) overfit (6.21 AP) as the model learns sample idiosyncrasies. Third, for the distillation weight, we assess values of 0.05, 0.1, and 0.3. A weight of 0.1 achieves the best trade-off (6.75 AP); a higher weight (0.3) suppresses novel-class learning (5.89 AP) by over-emphasizing base-knowledge preservation, and a lower weight (0.05) increases catastrophic forgetting (5.47 AP) due to insufficient distillation. Collectively, these experiments support our selected hyperparameters and offer guidance for tuning the framework in other experimental and application scenarios (Figure 2).

Figure 2.

Visualized instances of various objects (e.g., a bus, a bear, and a deer) in different scenes, each shown with a colored mask and a label indicating the object category.

5. Conclusions

This study introduces an incremental few-shot instance segmentation framework that addresses key challenges in adaptability and knowledge retention. Building on Mask2Former with cosine similarity classification and local POD distillation, our method achieves state-of-the-art performance on COCO and in the cross-dataset COCO2VOC evaluation. On COCO, it outperforms baselines such as iMTFA and Meta R-CNN across the 1-shot, 5-shot, and 10-shot settings, with a 28.8% AP gain in the 5-shot setting and a 45.3% AP50 improvement in the 10-shot setting. Cross-dataset results confirm robust generalization to unseen domains, with metrics surpassing those of prior incremental methods. Ablation studies validate the core components: novel object queries capture class-specific features, local POD distillation mitigates forgetting, and cosine similarity classification enhances discriminability. Parameter sensitivity analyses support the chosen hyperparameters and guide future tuning. The framework advances incremental few-shot segmentation by balancing plasticity (adapting to novel classes) and stability (retaining base knowledge), providing a scalable solution for real-world applications that require lifelong learning and cross-domain generalization.

Author Contributions

Conceptualization, Q.Z., Y.Z. and P.X.; Methodology, Q.Z.; Software, P.X. and M.Y.; Validation, Q.Z. and M.Y.; Formal analysis, Y.Z.; Investigation, Y.Z. and P.X.; Resources, C.Z.; Data curation, Y.Z. and M.Y.; Writing—original draft, Q.Z.; Writing—review & editing, Q.Z., P.X., L.Z. and C.Z.; Supervision, L.Z.; Project administration, C.Z.; Funding acquisition, L.Z. and C.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported in part by the National Natural Science Foundation of China under Grant 62472161, Grant 62202163, Grant 62072166, and Grant 62372150; the Natural Science Foundation of Hunan Province under Grant 2022JJ30231 and Grant 2023JJ30169; the Hunan Provincial Teaching Reform Research Project for Ordinary Institutions of Higher Learning under Grant HNJG-20230396; and the Hunan Provincial Degree and Postgraduate Teaching Reform Research Project under Grant 2023JGYB140.

Data Availability Statement

The data presented in this study are openly available in the iMTFA repository at https://github.com/danganea/iMTFA.

Conflicts of Interest

The authors declare no conflicts of interest.

Correction Statement

This article has been republished with a minor correction to the Data Availability Statement. This change does not affect the scientific content of the article.

References

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Tian, C.; Zheng, M.; Li, B.; Zhang, Y.; Zhang, S.; Zhang, D. Perceptive self-supervised learning network for noisy image watermark removal. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 7069–7079.

- Milletari, F.; Navab, N.; Ahmadi, S.-A. V-Net: Fully convolutional neural networks for volumetric medical image segmentation. arXiv 2016, arXiv:1606.04797.

- Tian, C.; Song, M.; Fan, X.; Zheng, X.; Zhang, B.; Zhang, D. A Tree-guided CNN for image super-resolution. IEEE Trans. Consum. Electron. 2025, 71, 3631–3640.

- Meng, D.; Chen, X.; Fan, Z.; Zeng, G.; Li, H.; Yuan, Y.; Sun, L.; Wang, J. Conditional DETR for fast training convergence. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021.

- Zhang, C.; Wang, Y.; Zhu, L.; Song, J.; Yin, H. Multi-graph heterogeneous interaction fusion for social recommendation. ACM Trans. Inf. Syst. (TOIS) 2021, 40, 1–26.

- Zhu, L.; Wu, R.; Zhu, X.; Zhang, C.; Wu, L.; Zhang, S.; Li, X. Bi-direction label-guided semantic enhancement for cross-modal hashing. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 3983–3999.

- Zhu, L.; Zhang, C.; Song, J.; Zhang, S.; Tian, C.; Zhu, X. Deep multigraph hierarchical enhanced semantic representation for cross-modal retrieval. IEEE MultiMedia 2022, 29, 17–26.

- Wang, X.; Huang, T.E.; Darrell, T.; Gonzalez, J.E.; Yu, F. Frustratingly simple few-shot object detection. arXiv 2020, arXiv:2003.06957.

- Yan, X.; Chen, Z.; Xu, A.; Wang, X.; Liang, X.; Lin, L. Meta R-CNN: Towards general solver for instance-level low-shot learning. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9577–9586.

- Michaelis, C.; Ustyuzhaninov, I.; Bethge, M.; Ecker, A.S. One-shot instance segmentation. arXiv 2018, arXiv:1811.11507.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 91–99.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

- Zhu, L.; Wu, R.; Liu, D.; Zhang, C.; Wu, L.; Zhang, Y.; Zhang, S. Textual semantics enhancement adversarial hashing for cross-modal retrieval. Knowl.-Based Syst. 2025, 317, 113303.

- Tian, C.; Zheng, M.; Lin, C.-W.; Li, Z.; Zhang, D. Heterogeneous window transformer for image denoising. IEEE Trans. Syst. Man. Cybern. Syst. 2024, 54, 6621–6632.

- Chen, L.-C.; Wang, H.; Qiao, S. Scaling wide residual networks for panoptic segmentation. arXiv 2020, arXiv:2011.11675.

- Du, X.; Zoph, B.; Hung, W.-C.; Lin, T.-Y. Simple training strategies and model scaling for object detection. arXiv 2021, arXiv:2107.00057.

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Tian, C.; Liu, K.; Zhang, B.; Huang, Z.; Lin, C.-W.; Zhang, D. A Dynamic Transformer Network for Vehicle Detection. IEEE Trans. Consum. Electron. 2025, 71, 2387–2394.

- Chen, K.; Pang, J.; Wang, J.; Xiong, Y.; Li, X.; Sun, S.; Feng, W.; Liu, Z.; Shi, J.; Ouyang, W.; et al. Hybrid task cascade for instance segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Kang, B.; Liu, Z.; Wang, X.; Yu, F.; Feng, J.; Darrell, T. Few-shot object detection via feature reweighting. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8420–8429.

- Tian, C.; Zheng, M.; Jiao, T.; Zuo, W.; Zhang, Y.; Lin, C.-W. A self-supervised CNN for image watermark removal. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 7566–7576.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Fan, Z.; Yu, J.-G.; Liang, Z.; Ou, J.; Gao, C.; Xia, G.-S.; Li, Y. FGN: Fully guided network for few-shot instance segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9172–9181.

- Kirillov, A.; Wu, Y.; He, K.; Girshick, R. PointRend: Image segmentation as rendering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Bolya, D.; Zhou, C.; Xiao, F.; Lee, Y.J. YOLACT++: Better Real-Time Instance Segmentation. Ph.D. Thesis, University of California, Berkeley, CA, USA, 2019.

- Arbeláez, P.; Pont-Tuset, J.; Barron, J.T.; Marques, F.; Malik, J. Multiscale combinatorial grouping. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.

- Bao, H.; Dong, L.; Wei, F. BEiT: BERT pretraining of image transformers. arXiv 2021, arXiv:2106.08254.

- Zhu, L.; Cai, L.; Song, J.; Zhu, X.; Zhang, C.; Zhang, S. MSSPQ: Multiple semantic structure-preserving quantization for cross-modal retrieval. In Proceedings of the 2022 International Conference on Multimedia Retrieval, Newark, NJ, USA, 27–30 June 2022; pp. 631–638.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020.

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the ECCV 2018 15th European Conference, Munich, Germany, 8–14 September 2018.

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Chen, L.-C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587.

- Cheng, B.; Collins, M.D.; Zhu, Y.; Liu, T.; Huang, T.S.; Adam, H.; Chen, L.-C. Panoptic-DeepLab: A simple, strong, and fast baseline for bottom-up panoptic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Cheng, B.; Girshick, R.; Dollár, P.; Berg, A.C.; Kirillov, A. Boundary IoU: Improving object-centric image segmentation evaluation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

- Cheng, B.; Parkhi, O.; Kirillov, A. Pointly-supervised instance segmentation. arXiv 2021, arXiv:2104.06404.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2021, arXiv:2010.11929.

- Zhu, L.; Yu, W.; Zhu, X.; Zhang, C.; Li, Y.; Zhang, S. MvHAAN: Multi-view hierarchical attention adversarial network for person re-identification. World Wide Web 2024, 27, 59.

- Everingham, M.; Eslami, S.M.A.; Gool, L.V.; Williams, C.K.I.; Winn, J.; Zisserman, A. The PASCAL visual object classes challenge: A retrospective. Int. J. Comput. Vis. 2015, 111, 98–136.

- Fang, Y.; Yang, S.; Wang, X.; Li, Y.; Fang, C.; Shan, Y.; Feng, B.; Liu, W. Instances as queries. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021.

- Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Ganea, D.A.; Boom, B.; Poppe, R. Incremental few-shot instance segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 1185–1194.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014.

- Gao, P.; Zheng, M.; Wang, X.; Dai, J.; Li, H. Fast convergence of DETR with spatially modulated co-attention. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Huang, Z.; Wang, X.; Huang, L.; Huang, C.; Wei, Y.; Liu, W. CCNet: Criss-cross attention for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019.

- Kirillov, A.; Girshick, R.; He, K.; Dollár, P. Panoptic feature pyramid networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Ghiasi, G.; Cui, Y.; Srinivas, A.; Qian, R.; Lin, T.-Y.; Cubuk, E.D.; Le, Q.V.; Zoph, B. Simple copy-paste is a strong data augmentation method for instance segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

- Kirillov, A.; He, K.; Girshick, R.; Rother, C.; Dollár, P. Panoptic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Kirillov, A.; Levinkov, E.; Andres, B.; Savchynskyy, B.; Rother, C. InstanceCut: From edges to instances with multicut. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Gidaris, S.; Komodakis, N. Dynamic few-shot visual learning without forgetting. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4367–4375.

- Li, Y.; Zhao, H.; Qi, X.; Chen, Y.; Qi, L.; Wang, L.; Li, Z.; Sun, J.; Jia, J. Fully convolutional networks for panoptic segmentation with point-based supervision. arXiv 2021, arXiv:2108.07682.

- Tian, C.; Zhang, X.; Liang, X.; Li, B.; Sun, Y.; Zhang, S. Knowledge distillation with fast CNN for license plate detection. IEEE Trans. Intell. Vehicles 2023.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

- Zhu, L.; Zhang, C.; Song, J.; Liu, L.; Zhang, S.; Li, Y. Multi-graph based hierarchical semantic fusion for cross-modal representation. In Proceedings of the 2021 IEEE International Conference on Multimedia and Expo (ICME), Shenzhen, China, 5–9 July 2021; IEEE Computer Society: Washington, DC, USA, 2021; pp. 1–6.

- Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2019, arXiv:1711.05101.

- Huang, S.; Lu, Z.; Cheng, R.; He, C. FaPN: Feature-aligned pyramid network for dense image prediction. arXiv 2021, arXiv:2108.07058.

- Li, Z.; Wang, W.; Xie, E.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Lu, T.; Luo, P. Panoptic segformer. arXiv 2021, arXiv:2109.03814.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. arXiv 2021, arXiv:2103.14030.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.

- Neuhold, G.; Ollmann, T.; Rota Bulo, S.; Kontschieder, P. The mapillary vistas dataset for semantic understanding of street scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Perez-Rua, J.M.; Zhu, X.; Hospedales, T.M.; Xiang, T. Incremental few-shot object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 13846–13855.

- Cheng, B.; Schwing, A.G.; Kirillov, A. Per-pixel classification is not all you need for semantic segmentation. In Proceedings of the 35th Conference on Neural Information Processing Systems (NeurIPS 2021), Online, 6–14 December 2021.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).