Abstract

The basic theoretical properties of the approximate symmetric chordal metric (ASCM) for two real or complex numbers are studied, and reliable, accurate, and efficient algorithms are proposed for its computation. ASCM is defined as the minimum between the moduli of the differences of the two numbers and of their reciprocals. It differs from the chordal metric by including the modulus of the difference of the numbers. ASCM is not a true mathematical distance, but is a useful replacement for a distance in some applications. For instance, sensitivity analysis or block diagonalization of matrix pencils benefit from a measure of closeness of eigenvalues and also of their reciprocals; ASCM is ideal for this purpose. The proposed algorithms can be easily implemented on various architectures and compilers. Extensive numerical tests were performed to assess the performance of the associated implementation. The results were compared to those obtained in MATLAB, but with appropriate modifications for numbers very close to the bounds of the range of representable values, where the usual formulas give wrong results.

MSC:

15A20; 15A22; 65Y04; 68-04; 68Q25; 93B11

1. Introduction

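The approximate symmetric chordal metric for two real or complex numbers a₁ and a₂ is defined by

$$ d(a_1, a_2) = \min\{d_1, d_2\}, \qquad d_1 = |a_1 - a_2|, \qquad d_2 = \left| \frac{1}{a_1} - \frac{1}{a_2} \right|. \tag{1} $$
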
It differs from the Euclidean distance by also considering the absolute difference of the reciprocals of the given numbers. It is not a true mathematical distance, but can be used instead of a distance in some applications.
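As an illustration only, (1) can be evaluated directly in Python. This minimal sketch assumes that a₁ and a₂ are nonzero and far from the limits of the floating point range; near those limits the direct formula is unreliable, which is what the rest of the paper addresses. The name ascm_direct is hypothetical.

```python
def ascm_direct(a1: complex, a2: complex) -> float:
    """Direct evaluation of (1): d = min(|a1 - a2|, |1/a1 - 1/a2|).

    Illustrative only: assumes a1, a2 nonzero and far from overflow/underflow.
    """
    d1 = abs(a1 - a2)            # modulus of the difference of the numbers
    d2 = abs(1 / a1 - 1 / a2)    # modulus of the difference of the reciprocals
    return min(d1, d2)


print(ascm_direct(1e6, 2e6))      # about 5e-07: close through the reciprocals
print(ascm_direct(1e-6, 2e-6))    # about 1e-06: close as numbers
```

Two large numbers that are far apart in the Euclidean sense can still be close in the sense of (1), because their reciprocals are close.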

The approximate symmetric chordal metric in (1) measures the closeness of two numbers. In mathematics, a measure is a function that assigns a non-negative number to certain subsets of a set. Using the pairwise values (1) for a set of numbers, it is possible to build subsets (clusters) and a measure of their closeness. Measures are used in various fields, including analysis, probability theory, and geometry, as well as in computational techniques.

There are quite a number of references on the chordal metric, but no references to the approximate symmetric chordal metric could be found. Klamkin and Meir [1] have shown that the chordal metric on a normed linear space, defined for any two of its elements x and y, satisfies the triangle inequality. Let S be the Riemann sphere with diameter 1 and center (0, 0, 1/2). With z, w ∈ ℂ, and the Euclidean metric in ℝ³, the chordal metric of ℂ is [2,3,4]

$$ \chi(z, w) = |\pi(z) - \pi(w)| = \frac{|z - w|}{\sqrt{1 + |z|^2}\,\sqrt{1 + |w|^2}}, \tag{2} $$

where is the stereographic projection, is the complex plane, and N is the north pole of . Clearly, and . If , then . This way, maps the extended complex plane to . The chordal metric is indeed a distance, since satisfies triangle inequality. If , then , and if too, then . If a sphere with unit radius and center in the origin is used, the right hand side in (2) is multiplied by 2 [3,5].
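The relation between (2) and the two terms of (1) can be checked numerically. The sketch below evaluates (2) directly (so it assumes finite arguments whose squared moduli do not overflow) and compares it with d₁ = |z − w| and d₂ = |1/z − 1/w|: for moduli much smaller than 1, (2) is close to d₁, and for moduli much larger than 1, it is close to d₂. The function names are illustrative.

```python
import math


def chordal(z, w):
    """Chordal metric (2) on the Riemann sphere of diameter 1, for finite z, w.

    Direct formula: abs(z)**2 can overflow for huge arguments.
    """
    return abs(z - w) / (math.sqrt(1.0 + abs(z) ** 2) * math.sqrt(1.0 + abs(w) ** 2))


def d1(z, w):
    return abs(z - w)            # first term of (1)


def d2(z, w):
    return abs(1 / z - 1 / w)    # second term of (1)


for z, w in [(1e-3, 2e-3), (1e3, 2e3)]:
    print(chordal(z, w), d1(z, w), d2(z, w))
```

Multiplying chordal by 2 gives the version for the sphere with unit radius centered at the origin.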

Several authors present theoretical results and various applications of the chordal metric. Rainio and Vuorinen [4] use (2) to identify cases where the distortion caused by symmetrization of a quadruple of objects, mapped by a Möbius transformation, can be measured in terms of its Lipschitz constant in the Euclidean or the chordal metric. Álvarez-Tuñón et al. [6] investigate various pose representations in visual odometry networks, and show that the chordal distance between two rotations ensures faster convergence of the parameters than distances measured by Euler angles or quaternions. Xu et al. [7] provide sharper bounds of the chordal metric between generalized singular values of Grassmann matrix pairs. Sun [8] shows that the generalized singular values of , with , and A and B complex and , are insensitive to strong perturbations in the elements of A and B, if the chordal metric is used to describe such perturbations. Sasane [2] defines an abstract chordal metric on linear control systems described by transfer functions and shows that strong stabilizability is a robust property in this metric. A refinement of this metric for stable transfer functions is introduced in [9].

A possible application of the approximate symmetric chordal metric is ordering the (generalized) eigenvalues of a matrix (pencil), according to their pairwise distances measured using (1). This is important in numerical linear algebra, control theory, and other domains. For instance, it is essential for computing invariant or deflating subspaces of matrices or matrix pencils, respectively, or their block-diagonal form [10]. The available algorithms for finding this form use non-unitary transformation matrices with a controlled numerical condition, but they can be inefficient for a matrix (pencil) with dense clusters of eigenvalues. Since the numerical condition is related to the sensitivity of the spectrum associated with the current block (pair) that should be split, a clever strategy for ordering the eigenvalues may increase the efficiency. The sensitivity of eigenvalue problems is investigated in [11,12,13,14,15,16]. Efficient and reliable implementations of the theoretical results are available in the LAPACK package [17] and in recent releases of interactive environments like MATLAB [18] or Mathematica [19]. The applications mentioned in this paragraph do not need the triangle inequality, but it is important that close eigenvalues be included in the same cluster. With (1), an eigenvalue λ will be added to a cluster containing a value λ̃ if either |λ − λ̃| or |1/λ − 1/λ̃| is smaller than a given threshold. If , , coincides with the chordal metric in [1], and it is an approximation of the value given by (2). So, an approach based on the chordal metric will choose or its approximation, even if . If λ₁ and λ₂ are eigenvalues of a matrix pencil A − λB, then 1/λ₁ and 1/λ₂ are eigenvalues of the matrix pencil B − λA. Since A and B play symmetric roles, it is reasonable to consider the closeness of eigenvalues for both pencils.
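The clustering rule stated above can be sketched as follows. This is a hypothetical greedy strategy written only to illustrate the rule, not the block-diagonalization algorithm of [10]; it assumes finite, nonzero eigenvalues and uses the direct formula (1).

```python
def ascm(a1, a2):
    # Direct evaluation of (1); assumes finite, nonzero values of moderate size.
    return min(abs(a1 - a2), abs(1 / a1 - 1 / a2))


def cluster(eigs, tol):
    """Greedy clustering: add each eigenvalue to the first cluster containing a
    value within ASCM distance tol; otherwise, start a new cluster."""
    clusters = []
    for lam in eigs:
        for c in clusters:
            if any(ascm(lam, mu) < tol for mu in c):
                c.append(lam)
                break
        else:
            clusters.append([lam])
    return clusters


print(cluster([1.0, 1.001, 5.0, 1e6, 2e6], 1e-2))
# [[1.0, 1.001], [5.0], [1000000.0, 2000000.0]]
```

The eigenvalues 1e6 and 2e6 end up in the same cluster because their reciprocals are close; with |λ − λ̃| alone they would be kept apart.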

The idea of decoupling a dynamical system to simpler subsystems, preserving the essential dynamics, has been recently extended to certain nonlinear systems, mainly described by ordinary differential equations (ODE) or difference equations. A so-called normal form is obtained using a near-identity transformation of coordinates, preserving the essential system behavior near an equilibrium point. Higher-order Taylor expansion of the system model can capture the major nonlinear effects. An application of the normal form theory to power systems described by differential algebraic equations (DAE) is studied in [20]. The new, structure-preserving method allows direct application of the theory to the DAE system.

A main contribution of the paper is the systematic presentation of the properties of the approximate symmetric chordal metric and of reliable, accurate, and efficient algorithms for its computation. A factored representation of the modulus of complex numbers is introduced, which allows obtaining very accurate results for the entire range of the floating point system. Two different approaches to evaluate the modulus of the difference of the reciprocals are described. The most efficient approach needs only the moduli of the two numbers and of their difference, but it cannot be used if that difference is not in the range of representable numbers. The proposed algorithms were implemented in Fortran and extensively tested in MATLAB.

The rest of the paper is organized as follows. Section 2 summarizes the notation and the main issues concerning the floating point system of computers, needed in the sequel. Section 3 investigates the properties of the approximate symmetric chordal metric, while Section 4 presents reliable, accurate, and efficient algorithms for its computation. Section 5 discusses the numerical results obtained using a careful implementation of these algorithms. Section 6 summarizes the conclusions.

2. Preliminaries

For technical convenience, ℝ̄ will denote the real numbers field extended by the ∞ and −∞ symbols, and similarly for ℂ̄. A complex number a = x + ıy, with x, y ∈ ℝ̄, is infinite if |x| = ∞ and/or |y| = ∞; otherwise, a is finite. Let a₁, a₂ be two finite complex numbers. For performing computations with/on a₁ and a₂, it should be assumed that Re aᵢ and Im aᵢ, i = 1, 2, are in the range of the computer floating point system, that is, a₁ and a₂ are (approximately) representable by their real and imaginary parts. Hence, |Re aᵢ| ≤ K, |Im aᵢ| ≤ K, where K is the maximum representable number,

$$ K = (1 - \varepsilon)\, b^{e_{\max}}, \tag{3} $$

with b the base of the numeral system, e_max the maximum exponent, and ε the relative machine precision. In a common binary double precision floating point system, b = 2, e_max = 1024, ε = 2^−52 (or ε = 2^−53 for machines with proper rounding), and t = 53 is the number of base b digits in the mantissa. The number εb is called precision. Representable real numbers in a digital computer are rational approximations of values in the range [−K, K]. An overflow is generated if an arithmetic operation would produce a result outside this range. Compilers and interactive environments may represent such a result as ±Infinity or ±Inf, and if this happens in a sequence of computations, the final result might be completely wrong. Hence, overflows should be avoided. Very small (in magnitude) numbers may also not be representable, and if this so-called underflow happens after an arithmetic operation, the result is set to zero. The underflow threshold is defined by k = b^(e_min − 1), where e_min is the minimum exponent before underflow. For the machine considered above, e_min = −1021 and k = 2^−1022 ≈ 2.2251e−308. Many computer systems allow for gradual underflow, i.e., they may also use numbers smaller than k in magnitude, down to 2^−1074 ≈ 4.9407e−324 in IEEE double precision. Algorithms for discovering the properties of the floating point arithmetic, as in [21,22], are implemented, e.g., in the SLAMCH and DLAMCH routines of the LAPACK package [17], for single and double precision computations, respectively.
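The quantities K and k above can be inspected from Python, assuming (as on all mainstream platforms) that Python floats are IEEE binary64 numbers. The snippet also shows an overflow to infinity and gradual underflow. Note that sys.float_info.epsilon is 2^−52; with round-to-nearest, the unit roundoff is half of it, 2^−53.

```python
import sys

K = sys.float_info.max        # overflow threshold (realmax in MATLAB)
k = sys.float_info.min        # underflow threshold 2**-1022 (realmin in MATLAB)
eps = sys.float_info.epsilon  # 2**-52, the gap between 1.0 and the next double

overflowed = K * 2.0          # IEEE overflow: the result becomes inf
subnormal = k / 2.0           # gradual underflow: still nonzero
tiny = k * eps                # smallest positive subnormal, 2**-1074
flushed = tiny / 2.0          # 2**-1075 rounds (to even) to 0.0

print(K, k, eps)
print(overflowed, subnormal, tiny, flushed)
```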

The assumption that |Re aᵢ|, |Im aᵢ| ≤ K does not imply that |aᵢ| ≤ K. If Re aᵢ = K and Im aᵢ = K, then |aᵢ| = √2 K, which is not representable. The specific case of our problem allows working with a factored form of |a₁| and |a₂|.

3. Basic Properties of the Approximate Symmetric Chordal Metric

In some applications using the (approximate symmetric) chordal metric, and/or or the terms in (1) may not be numbers, but 0/0 or . For instance, some eigenvalues can be infinite even for regular matrix pencils. If in (1), for , , then is . A Not-a-Number entity is represented by NaN in compilers and interactive environments, and special computer rules are used for mathematical operations. Specifically, in the paper context, the basic rules are The properties below follow directly from (1) and these rules.

- (i)

- ;

- (ii)

- ;

- (iii)

- ;

- (iv)

- ;

- (v)

- , if is infinite;

- (vi)

- if and or , or both, are finite.

Remark 1.

The three properties of a distance or metric, namely, symmetry (i), identity (iv), and non-negativity (iii) and (vi), are satisfied by d. But it is easy to verify that the fourth needed property, the triangle inequality, does not hold for (1), in general. Consequently, d is not a distance or metric function. Moreover, may differ from , with . Such a property holds for d₁ and for d₂, but not necessarily for their minimum.
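A concrete counterexample to the triangle inequality, checked with the direct formula (1) (the name ascm is illustrative): for a = 0.1, b = 1, and c = 10, d(a, c) = 9.9, while d(a, b) + d(b, c) = 0.9 + 0.9 = 1.8. The number a is close to b through d₁, and c is close to b through d₂, but a and c are far apart in both senses.

```python
def ascm(a1, a2):
    """Direct evaluation of (1) for nonzero numbers of moderate size."""
    return min(abs(a1 - a2), abs(1 / a1 - 1 / a2))


a, b, c = 0.1, 1.0, 10.0
lhs = ascm(a, c)                    # d1 = d2 = 9.9
rhs = ascm(a, b) + ascm(b, c)       # 0.9 (via d1) + 0.9 (via d2)
print(lhs, rhs, lhs <= rhs)         # the triangle inequality fails: False
```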

Definition 1.

(Factored representation of the absolute value of a complex number a) Let a = x + ıy with x, y ∈ ℝ and a ≠ 0. Let M = max(|x|, |y|), m = min(|x|, |y|), and μ = √(1 + (m/M)²). Then, Mμ, with the factors M and μ kept separate, is called the factored representation of |a|.

From Definition 1, it follows that |a| = Mμ, with 1 ≤ μ ≤ √2, since μ = 1 if m = 0, μ = √2 if m = M, and 1 < μ < √2 for 0 < m < M. The factored representation is useful when M is very close to, or coincides with, K. Then, |a| could exceed K and cannot be computed. Still, its factors can be used for finding the approximate symmetric chordal metric.
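A minimal sketch of the factored representation of Definition 1, assuming a ≠ 0 and finite parts (the function name factored_abs is hypothetical). It shows that the factors of |a| remain representable when |a| > K, and that naive squaring overflows even when |a| itself is representable.

```python
import math


def factored_abs(x, y):
    """Factors (M, mu) of |x + iy| = M * mu, 1 <= mu <= sqrt(2) (Definition 1).

    Assumes a = x + iy != 0 with finite parts; M * mu is never formed here.
    """
    M = max(abs(x), abs(y))
    m = min(abs(x), abs(y))
    mu = math.sqrt(1.0 + (m / M) ** 2)   # m/M <= 1, so this cannot overflow
    return M, mu


# |a| > K: both factors are representable, although their product is not.
M, mu = factored_abs(1.16e308, 1.66e308)
print(M, mu)          # M = 1.66e308, mu close to 1.219965042368649
print(M * mu)         # inf

# Naive squaring overflows even when |a| itself is representable.
x = y = 1e200
print(math.sqrt(x * x + y * y))   # inf
M2, mu2 = factored_abs(x, y)
print(M2 * mu2)                   # about 1.4142135623730951e+200
```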

4. Algorithms for Computing the Approximate Symmetric Chordal Metric

A mathematical-style pseudocode is used for each algorithm. A MATLAB-style function construct, with possibly optional arguments, is adopted for calling lower level algorithms. See Remark 3 in Section 4.2. The symbols ∧, ∨, and ¬, appearing in the algorithms and their description, are the logical operators AND, OR (binary), and NOT (unary), respectively. Moreover, and denote the sets of complex and real representable numbers, respectively. However, the algorithms below will also work correctly for a₁ and/or a₂ set to ±Infinity or ±Infinity±Infinity𝚤, if IEEE arithmetic is available on the computer. Although the computational problem for the approximate symmetric chordal metric is mathematically very simple, a reliable, accurate, and efficient implementation needs a thorough analysis. These desirable properties are supported by careful consideration of all possible special cases and lower level algorithms. Efficiency can be measured by an estimate of the number of floating point operations, or flops, which have been used especially for numerical linear algebra algorithms [11,23]. There are several definitions for a flop, but counting each arithmetic operation is suitable in the paper context.

4.1. Main Algorithm

This subsection presents the main algorithm, chordal (Algorithm 1). The lower level algorithms are introduced in the next subsections.

Algorithm 1 chordal computes ASCM for two complex numbers

In the first part of Algorithm chordal, the value of d is computed for special cases. If the maximum absolute value, M, of the real and imaginary parts of a₁ and a₂ is zero, or if a₁ = a₂, then d = 0, according to the basic property (iii). Otherwise, if a₁ = 0, then d = |a₂|, using (i) and (ii). Similarly, if a₂ = 0, then d = |a₁|. The absolute values |a₁| and |a₂| are computed by a lower level algorithm, called absa (Algorithm 2), introduced in the next subsection.

Algorithm absa needs on input the auxiliary parameters M and or , set in Algorithm chordal, using . If on input to absa, but also on its output, then the absolute value has been obtained. If on output from absa, then the absolute value would exceed K in (3); hence, a factored representation has to be used. The chosen is the largest number for which the absolute value is at most K. The algorithm will work correctly for smaller values of , but the computations would be somewhat more expensive.

Another special case is when one of the numbers a₁ or a₂ is negligible compared to the other. Then, as shown below, d can be found using , , or both. Detecting this situation involves the logical variable f, with . See Remark 2 below. If f is true, then it is easier to obtain the terms d₁ and d₂ of d in (1). Specifically, if and , , it follows that: if , then ; else if , then ; else . The tests involving m and M are motivated by the following reasoning: implies that is of the order of M, and is of the order of ; if , and have comparable values, and d should be found as ; if m is sufficiently smaller than , then and so . Similarly, if m is sufficiently larger than , then , and so . The bounds 0.1 and 1.1 were found after several trials, so that all tests returned the correct results. Note that, in the discussion above and in Algorithm chordal, the product is not used, since it may overflow if and are close to K.
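The special cases can be sketched as follows. This is a simplified, hypothetical version, not Algorithm chordal itself: it uses Python's built-in abs (so it ignores the overflow issues handled by absa), and it replaces the test on f and the bounds 0.1 and 1.1 by the direct approximation d ≈ min(max(|a₁|, |a₂|), 1/min(|a₁|, |a₂|)), with an assumed negligibility threshold equal to the unit roundoff. The approximation holds because, if |a₂| is negligible compared to |a₁|, then d₁ ≈ |a₁| and d₂ = d₁/(|a₁||a₂|) ≈ 1/|a₂|.

```python
import sys

TOL = sys.float_info.epsilon / 2    # assumed negligibility threshold (unit roundoff)


def chordal_special(a1: complex, a2: complex):
    """Return d for the special cases, or None for the general case.

    Hypothetical sketch; abs() may overflow for parts near the maximum float.
    """
    if a1 == a2:                 # includes a1 = a2 = 0: d = 0
        return 0.0
    if a1 == 0:                  # 1/a1 is infinite, so d = d1 = |a2|
        return abs(a2)
    if a2 == 0:
        return abs(a1)
    big = max(abs(a1), abs(a2))
    small = min(abs(a1), abs(a2))
    if small <= TOL * big:
        # one number is negligible: d1 ~ big and d2 ~ 1/small
        return min(big, 1.0 / small)
    return None                  # general case: d1 and d2 are needed
```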

Remark 2.

Using Definition 1, the absolute value of , , can be expressed as , where . This factored form of is valid even if , when the factors cannot be multiplied without producing an overflow. If , it follows that

since and . Therefore, if is negligible compared to , the same is true for and . The last inequality in (4) assumes that the base of the floating point system is . For the previous paragraph, and .

The definition of the logical variable f uses the value of . This value guarantees that one of the given numbers is indeed negligible compared to the other. Larger values, such as might not do that. Smaller values can always be used, but the computations would be somewhat more expensive, since simplified formulas will not be used in all possible cases.

If no special case appears in Algorithm chordal, it follows that a₁ and a₂ are nonzero, distinct, and relatively “close” to each other, in the sense that neither of them is negligible compared to the other. This is the general case, for which it is often necessary to compute both d₁ = |a₁ − a₂| and d₂ = |1/a₁ − 1/a₂|. Finally, d = min(d₁, d₂). These more involved computational steps of the main algorithm are detailed in the next subsections.

An account of the needed computations can be given here for the part involving special cases, but additional details, including those for general case, will be available in the remaining subsections of this section. At the beginning of Algorithm chordal, the computation of the absolute values for four real scalars, as well as three standard max operations (i.e., with two arguments) are needed for getting , , and M. If or , which requires three comparisons of real scalars, the result is . Otherwise, if , then , with and or ; each of these cases needs an evaluation of one norm (either of or of ); in addition, a division and two tests are needed to set , . If for , the special case when or is tried. The initialization of this pseudocode segment involves a standard min operation, two divisions, and a comparison, setting the value of the logical variable f. If and , then there are three cases: if , then ; else, if , then , for ; else, . Hence, in addition to the initialization part, the computations involve three or four tests, one or three divisions, one or two evaluations of the absolute value, and—for the third case—one standard min operation.

4.2. Computation of |a₁| and |a₂|

If the magnitudes of the real and imaginary parts of a are close to the maximum representable number, K, then |a| may overflow. For the computation of the approximate symmetric chordal metric d, it is possible to use the factored form of |a| given in Definition 1, namely, |a| = Mμ, with M = max(|Re a|, |Im a|) and 1 ≤ μ ≤ √2.

For example, for with K in (3), the direct computation of abs(a) in MATLAB gives Inf, the machine infinity, while can very accurately be represented by the product factors and . Actually, abs(a), i.e., , is also evaluated internally in a factored form in MATLAB or in other available routines, like DLAPY2 from LAPACK [17], but the result is returned as a product, . However, in most cases, is far from K, and always using the factored form would involve some unnecessary computations. For efficiency, a bound is used to detect a case when the factored form might be needed. Specifically, if , with defined in Algorithm chordal, then one can safely use abs(a) in MATLAB, or DLAPY2 from LAPACK to obtain . Otherwise, it is safer to employ the factored form. Since is a conservative bound, it is possible that is representable. The needed test is included in the Algorithm absa, listed below, and explained in Remark 3; the logical variable u, externally initialized to true if , is updated internally as .

Algorithm 2 absa computes the modulus (or its factored representation) of a complex number

If u is true on entry, Algorithm absa needs, in principle, to square two real numbers and make an addition and a square root. This computation can be performed using abs in MATLAB, or by a call to the LAPACK routine DLAPY2. This is preferable since there are professional implementations of this routine on many computing platforms that could return a more accurate result than a direct evaluation of the formula √((Re a)² + (Im a)²). If u is false on entry to Algorithm absa, then the computations involve a standard min operation, the absolute values of two real numbers, two divisions, an addition, a square of a number, a square root, and a test. If u is true after this test, then a multiplication is performed, in order to simplify the subsequent calculations. Actually, DLAPY2 uses the two factors of Definition 1, as in the else part of the algorithm, but then always multiplies them; therefore, the actual operations are the same as above, but, in extreme cases, the product may overflow.
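The logic of Algorithm absa, as described above and in Remark 3, can be sketched in Python under several assumptions: the return convention (r, mu, u), with |a| = r·mu in both branches; the entry test M ≤ K/2 in the hypothetical helper initial_u, which is conservative since μ ≤ √2 < 2; and an overflow check that relies on IEEE overflow of M*mu to inf, rather than on the division-based test of the paper. Here math.hypot plays the role of abs in MATLAB or DLAPY2.

```python
import math
import sys

K = sys.float_info.max


def initial_u(x, y):
    """Hypothetical entry test: M <= K/2 guarantees M*mu <= K, since mu < 2."""
    return max(abs(x), abs(y)) <= K / 2


def absa(x, y, u):
    """Sketch of absa for a = x + iy; returns (r, mu, u) with |a| = r*mu.

    u True on entry: |a| is computed directly and returned with mu = 1.
    u False on entry: the factors of Definition 1 are formed; if their product
    is representable, it is returned and u becomes True; else (M, mu, False).
    """
    if u:
        return math.hypot(x, y), 1.0, True
    M = max(abs(x), abs(y))
    m = min(abs(x), abs(y))
    if M == 0.0:
        return 0.0, 1.0, True
    mu = math.sqrt(1.0 + (m / M) ** 2)
    r = M * mu                   # becomes inf on IEEE overflow
    if r <= K:
        return r, 1.0, True
    return M, mu, False
```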

The algorithm absa can be used to compute , , , and in Algorithm chordal. For , it is necessary to first evaluate and . This topic will be dealt with in Section 4.5.

Remark 3.

The logical variable u is initialized to true in Algorithm chordal for , if , . If u is false on entry to Algorithm absa, but if, after computing the corresponding , it follows that , then u is reset to true, using , and hence . Otherwise, is represented and used in the factored form. For instance, for finding or its factored representation, the following MATLAB-like command can be used,

where , if true on exit from absa, and and define the factored representation of , if false on exit. If it is known in advance that will remain true on exit, then and K can be omitted as input arguments, and can be omitted as an output argument. This is possible in all cases when true. For instance, if and , then and , since . The first inequality assumes that , with k the underflow threshold. With this assumption, ; hence, the factored form is not needed for numbers close to underflow. However, the computation of in such a case should still be performed using its factors and , for accuracy. For instance, , while the naïve computation will return 0.
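The accuracy issue for numbers close to underflow can be illustrated with x = y = 1e−200, a choice made for this sketch only: the naive formula squares numbers whose squares underflow to zero, while the scaled evaluation based on the factors of Definition 1 is accurate. The function names are hypothetical.

```python
import math


def modulus_naive(x, y):
    # x*x and y*y underflow to 0.0 when |x|, |y| are tiny
    return math.sqrt(x * x + y * y)


def modulus_scaled(x, y):
    # |a| = M * sqrt(1 + (m/M)**2): no squares of tiny numbers are formed
    M, m = max(abs(x), abs(y)), min(abs(x), abs(y))
    if M == 0.0:
        return 0.0
    return M * math.sqrt(1.0 + (m / M) ** 2)


print(modulus_naive(1e-200, 1e-200))    # 0.0
print(modulus_scaled(1e-200, 1e-200))   # about 1.4142135623730951e-200
```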

4.3. Computation of a₁ − a₂ and d₁

The computation of needs the real and imaginary parts of , and these parts are computed separately. Using the triangle inequality for the Euclidean distance between the points and in the complex plane, it follows that . Denoting , the same inequality expressed in the infinity norm, , , implies that . Clearly, even simple operations like or may produce overflow or underflow, if any of these scalars is close to K or k, respectively, and the other has appropriate sign and magnitude. These exceptions can be avoided in a professional implementation, but it is worth estimating their possible occurrence. This is performed in an algorithm that computes the difference between two real numbers, and . If IEEE arithmetic is available, and either or are/is ±Infinity, then the corresponding could not be computed, but will certainly be. Without IEEE arithmetic, the computation is more involved, as shown in Algorithm subtract (Algorithm 3) below.

Algorithm subtract uses the sign function, defined as sign(x) = 1 if x > 0, sign(x) = 0 if x = 0, and sign(x) = −1 if x < 0. Note that the value 0 cannot appear in Algorithm subtract, since the sign function is used there only when its argument is nonzero.

Three special cases are dealt with by Algorithm subtract. If , then . This case needs the absolute values of two real numbers, a max operation, a test, and setting . The second special case is when one of the values and is negligible compared to the other, i.e., when , with . In such a case, if , or , otherwise. In addition to the operations performed for the first special case, a min operation, two divisions, and two tests are needed. The third special case is when and have the same sign, which implies that the result is . This case needs two sign operations, a subtraction, and a test. The general case may involve a few additional operations: a multiplication, an addition, one or two logical operations, and a test. If the test is satisfied, then and is set to false. Otherwise, .

Algorithm 3 subtract computes the difference of two real numbers
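A sketch of the case analysis described above for Algorithm subtract, for finite x and y. The negligibility threshold (the unit roundoff) and the form of the overflow test, |x| > K − |y| for operands of opposite signs, are assumptions of this sketch; the test is conservative up to one rounding. When the difference would overflow, the value ±inf is returned with the flag set to False, mirroring what the text does when IEEE arithmetic is available.

```python
import math
import sys

K = sys.float_info.max
EPS = sys.float_info.epsilon / 2    # unit roundoff 2**-53 (assumed threshold)


def subtract(x, y):
    """Sketch of subtract: returns (x - y, ok) for finite x, y.

    ok is False when |x - y| would exceed K; the value is then +-inf.
    """
    if x == y:                               # includes x = y = 0
        return 0.0, True
    ax, ay = abs(x), abs(y)
    if min(ax, ay) <= EPS * max(ax, ay):     # one value is negligible
        return (x, True) if ax >= ay else (-y, True)
    if (x > 0) == (y > 0):                   # same sign: |x - y| < max(ax, ay)
        return x - y, True
    # Opposite signs: |x - y| = ax + ay, which exceeds K iff ax > K - ay.
    if ax > K - ay:
        return math.copysign(math.inf, x), False
    return x - y, True
```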

To obtain , Algorithm subtract has to be called for both the real and imaginary parts of . If it succeeds in obtaining representable values for and , then can be obtained using Algorithm absa. Let be the value of M on input of Algorithm absa applied to , and let or and be its outputs. If is true, then ; otherwise, and are the factored representation of . If the parameter is false on exit of the first or the second call of the algorithm, it means that or , respectively, would exceed K in magnitude and therefore could not be computed. In such a case, and/or are set to , or to , if the IEEE arithmetic is available.
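A sketch of the IEEE branch mentioned above: the real and imaginary parts of a₁ − a₂ are formed directly, an overflow appears as an infinite part, and otherwise d₁ is returned in the factored form of Definition 1, so that even d₁ > K remains usable. Without IEEE arithmetic, a test like the one in Algorithm subtract would be needed instead. The function name is hypothetical.

```python
import math


def d1_factored(a1: complex, a2: complex):
    """Return (M, mu) with d1 = |a1 - a2| = M*mu, or (inf, 1.0) on overflow."""
    dx = a1.real - a2.real       # IEEE: an overflowing difference becomes +-inf
    dy = a1.imag - a2.imag
    if math.isinf(dx) or math.isinf(dy):
        return math.inf, 1.0
    M = max(abs(dx), abs(dy))
    m = min(abs(dx), abs(dy))
    if M == 0.0:
        return 0.0, 1.0
    return M, math.sqrt(1.0 + (m / M) ** 2)
```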

4.4. Computation of d₂ Using d₁

Algorithm chordal has to compute d₂ only when a₁ ≠ 0 and a₂ ≠ 0, since the special cases a₁ = 0 or a₂ = 0 have already been solved, resulting in d = |a₂| or d = |a₁|, respectively. If both calls of Algorithm subtract made in Algorithm chordal for the real and imaginary parts of a₁ − a₂ succeed, i.e., if d₁ = |a₁ − a₂| is a representable result (possibly in a factored form), then d₂ can be obtained more efficiently than with the direct formula, based on evaluating 1/a₁ − 1/a₂, as described in Section 4.5. Indeed, since 1/a₁ − 1/a₂ = (a₂ − a₁)/(a₁a₂), it follows that

$$ d_2 = \frac{d_1}{|a_1|\,|a_2|}. \tag{5} $$

The absolute values of a₁ and a₂ are easily computed using Algorithm absa. Then, d₂ can be obtained using (5), taking into account that all factors, |a₁|, |a₂|, and d₁, can be in factored form or not. From (5), it follows that d₂ > d₁ if |a₁||a₂| < 1; hence, d₂ should not be evaluated in this case, since then d = d₁. The computations are summarized in Algorithm d2byd1 (Algorithm 4) shown below.

The order of the arithmetic operations in Algorithm d2byd1 is important; changing it could produce overflows or underflows. Parentheses are used to enforce that order, and avoid possible optimizations made by compilers. Note that if , i.e., in the two last else cases of the test branches for , then M and m are already available from Algorithm chordal. Another observation is that if and , it follows that , since , where also ; therefore . Note that in the other three combinations of and for , since either or (or both) are larger than K, and the other value must exceed , because otherwise the special case , already detected by Algorithm chordal, appears.

Algorithm 4 d2byd1 computes in (1) using
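A sketch of the computation of d from (5), with every quantity in the factored form of Definition 1. It does not reproduce the case analysis of Algorithm d2byd1; it only illustrates the two ideas stated above: d₂ is skipped when |a₁||a₂| ≤ 1, and otherwise it is obtained by successive divisions, so that the product |a₁||a₂| is never formed. It assumes the general case of Algorithm chordal (nonzero numbers, neither negligible compared to the other). The helper names factored and ascm_from_d1 are hypothetical.

```python
import math


def factored(z):
    """(M, mu) with |z| = M*mu as in Definition 1; z assumed nonzero and finite."""
    M = max(abs(z.real), abs(z.imag))
    m = min(abs(z.real), abs(z.imag))
    return M, math.sqrt(1.0 + (m / M) ** 2)


def ascm_from_d1(fa1, fa2, fd1):
    """d = min(d1, d2) using (5), d2 = d1 / (|a1| |a2|); inputs are (M, mu) pairs.

    Assumes the general case of Algorithm chordal.
    """
    (M1, mu1), (M2, mu2), (Md, mud) = fa1, fa2, fd1
    d1 = Md * mud                          # inf if d1 exceeds K
    # |a1| |a2| <= 1 implies d2 >= d1, so d = d1; testing |a1| <= 1/|a2|
    # avoids forming the product |a1| |a2|.
    if M1 * mu1 <= (1.0 / M2) / mu2:
        return d1
    # Divide step by step, so that |a1| |a2| is never formed.
    d2 = ((Md / M1) / M2) * (mud / (mu1 * mu2))
    return min(d1, d2)


a1 = complex(1.16e308, 1.66e308)           # the number of Example 2
a2 = complex(1.16e308 / 2, 1.66e308 / 2)
print(ascm_from_d1(factored(a1), factored(a2), factored(a1 - a2)))  # about 1/|a1|
```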

In the usual case, with , Algorithm d2byd1 needs three, four or four tests, and three, three or four divisions, for the cases when only or , or when , respectively; the case needs three tests, one ∧, and a max operation, if , and another min operation, and two divisions, otherwise. If , an additional multiplication with is also required in all these four cases.

If a part of a₁ − a₂ cannot be represented (Algorithm subtract signals an overflow), d₂ cannot be computed by Algorithm d2byd1, since d₁ is not available, and therefore d₂ has to be found using the direct formula, which involves more computations.

4.5. Direct Computation of d₂

The direct computation of d₂ using its definition in (1),

$$ d_2 = \left| \frac{1}{a_1} - \frac{1}{a_2} \right|, \tag{6} $$

needs to evaluate the reciprocals of a₁ and a₂, then the difference 1/a₁ − 1/a₂, and finally, the absolute value of this difference. The following algorithm shows how the reciprocal of a complex number a can be computed safely. Note that if a ≠ 0, then 1/a = ā/|a|², where ā is the complex conjugate of a, and |a| can be given in the factored form of Definition 1, but the product of its factors might not be representable. However, squaring |a| is prone to overflow, and therefore it must be avoided, as shown in Algorithm inva (Algorithm 5).

Algorithm 5 inva computes the reciprocal of a complex number given its modulus or factored representation
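A sketch of a safe reciprocal in the spirit of Algorithm inva, whose exact operation order is not reproduced here. With M = max(|Re a|, |Im a|) and s = (x/M)² + (y/M)² = μ² ∈ [1, 2], one has 1/a = (x/M − ı y/M)/(M s), so neither |a|² nor M² is formed. The name inva_sketch is hypothetical, and a is assumed nonzero and finite.

```python
import math


def inva_sketch(x: float, y: float):
    """Return (re, im) of 1/(x + iy) without squaring |a| (a != 0, finite)."""
    M = max(abs(x), abs(y))
    xs, ys = x / M, y / M          # scaled parts, |xs|, |ys| <= 1
    s = xs * xs + ys * ys          # mu**2 of Definition 1, between 1 and 2
    return (xs / s) / M, (-ys / s) / M


a = complex(1.16e308, 1.66e308)    # the number of Example 2
re, im = inva_sketch(a.real, a.imag)
print(re, im)                      # tiny (subnormal) but nonzero parts
print(a * complex(re, im))         # close to 1
```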

Remark 4.

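The minus sign in front of ν is not necessary in this context, since the real and imaginary parts will be used to evaluate d₂ using Algorithm absa. Changing the signs of the imaginary parts of both 1/a₁ and 1/a₂ replaces 1/a₁ − 1/a₂ by its complex conjugate, which has the same modulus. Therefore, the signs of the imaginary parts of 1/a₁ and 1/a₂ do not matter, provided that the same convention is used for both.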

Algorithm inva needs one test and four divisions if , or eight divisions if , but it should be applied to both a₁ and a₂. Hence, two tests and eight, twelve, or sixteen divisions are necessary if , and , for , , or , respectively. The remaining computations are the same as those for computing d₁, but applied to 1/a₁ − 1/a₂. Therefore, the computational effort for computing d₂ using Algorithm inva should be compared to that of Algorithm d2byd1, which needs at most four tests and four divisions, or at most three tests, two divisions, one ∧, one max, and one min operation, if ; if , another multiplication is needed.

Clearly, the direct approach needs more computations than the first approach, based on d₁. Note that the first approach can easily and efficiently detect the case when d₂ is not needed, since its value will exceed d₁. However, the direct approach has to be used when the first approach cannot be applied, namely, when the real or imaginary part of a₁ − a₂ overflows.

Remark 5.

The implementation inlines the low level algorithms, except for Algorithm subtract, which is more involved and is called twice, if d₂ can be found using d₁, or four times, otherwise. Similarly, Algorithm inva is either not called, or called twice. The very simple Algorithm absa is called at most four times, and Algorithm d2byd1 is inlined once. Inlining increases the efficiency, since it avoids passing input and output arguments, and duplicating some evaluations of internal variables. It may also increase the accuracy, since values stored in registers might be used.

5. Numerical Results

This section starts by presenting four examples that show the significance of the proposed approach. Then, the numerical results obtained in a large experiment with randomly generated examples, which cover the entire range of representable numbers, are discussed. Finally, the block diagonalization of matrix pencils is considered as an application, and two examples are shown. The performance of two solution approaches for a matrix pencil of order 999 is analyzed, illustrating the advantages of using a good eigenvalue reordering strategy and fast algorithms for computing the approximate symmetric chordal metric. The computations were performed in double precision on an Intel Core i7-3820QM portable computer (2.7 GHz, 16 GB RAM) under a Windows operating system and a recent version of MATLAB. The algorithms were implemented in Fortran and MATLAB, and a MEX-file calling the Fortran routines was used for the tests. The author’s preliminary implementations are already available in the SLICOT Library (Version v5.9) on GitHub (see https://github.com/SLICOT/SLICOT-Reference, accessed on 3 May 2025), and they will soon be updated.

5.1. Detailed Examples

The numerical results for four examples are presented and compared with those obtained through direct use of the MATLAB computations.

Example 1.

Let , . Implementing formula (1) in MATLAB, the result is , while Algorithm chordal, together with the lower level algorithms, returns 7.010041250456554e−309. Both and computed using (1) in MATLAB are wrong. Specifically, , with 1.617923821376084e+308, and the MATLAB command gives . Moreover, evaluating , it follows that , and therefore . On the other hand, the factored form of has and . Moreover, and , so that 1.004987562112089; therefore, .

Example 2.

Let a = 1.16e308 + 1.66e308ı. The MATLAB command 1/a gives the result 0. While this is close to the true value, it is qualitatively wrong, since it does not satisfy the mathematical condition that a·(1/a) = 1. But using the factored form of |a|, |a| = Mμ, with M = 1.66e308 and μ = 1.219965042368649, as m = 1.16e308, it follows that

and then a·(1/a) = 9.999999999999996e−01 − 1.665334536937735e−16ı, which is very close to 1; the error is of the order of ε. Note that the denominator in (7) should be evaluated as shown there (or with permuted factors), but not as ; multiplying would give Inf. Clearly, the computed 1/a is very close to 0, but it can be accurately obtained, and it numerically verifies the condition on the reciprocal of a complex number, while 0 does not. Note also that can be obtained directly as .

For computing d₂ in (1), it is necessary to evaluate both 1/a₁ and 1/a₂, and then the absolute value of their difference. If a₁ and/or a₂ have real and imaginary parts with very large magnitude, as in Example 2, then using (1) will give an inaccurate result for d, as shown in the next example.

Example 3.

Let a₁ = a from Example 2, and . Implementing (1) in MATLAB, the result is 3.933412034978399e−309, while the implementation of Algorithm chordal gives 1.267129104195721e−309. The absolute difference between these values is very small, about 2.66628e−309. However, their relative difference, with respect to the MATLAB result, is about 0.67785, and it is even larger when the result of Algorithm chordal is taken as reference. These large relative differences are due to the fact that, when (1) is used in MATLAB, 1/a₁ is evaluated as 0 (see Example 2), so that the computed d₂ reduces to |1/a₂|. Both MATLAB and Algorithm chordal compute d₁ = 6.523894033771084e+307, but Algorithm chordal uses Algorithm d2byd1 to obtain d₂ = d₁/(|a₁||a₂|), where the factored representations are used for |a₁| and |a₂|.

Example 4.

Let . Using MATLAB, it follows that 1/a = 0, which is wrong, since it implies that a·(1/a) = 0, while the true result is 1. On the contrary, Algorithm inva gives 5.134785827324312e−309 − 3.423190551549538e−309ı. With this value, it follows that a·(1/a) = 1.000000000000000e+00 + 3.885780586188048e−16ı, which has absolute and relative errors less than .

5.2. Large Experiment with Randomly Generated Examples

In a run with 4,188,166 random examples, the first three examples had a₂ = 0, a₁ = 0, and a₁ = a₂ = 0, respectively. Specifically, all examples were generated using the following MATLAB commands, where a1 and a2 are used for a₁ and a₂.

```matlab
a1 = randn + 1i*randn; a2 = 0;   % first test:  a2 = 0
a2 = a1; a1 = 0;                 % second test: a1 = 0
a2 = 0;                          % third test:  a1 = a2 = 0
for ii = -1022 : 1023
   s1 = 2^ii; re = s1*randn; im = s1*randn;
   % s1*randn may overflow to +-Inf; such values are reset to +-realmax
   if abs( re ) > realmax, re = sign( re )*realmax; end
   if abs( im ) > realmax, im = sign( im )*realmax; end
   a1 = re + 1i*im;
   for jj = -1022 : 1023
      s2 = 2^jj; re = s2*randn; im = s2*randn;
      if abs( re ) > realmax, re = sign( re )*realmax; end
      if abs( im ) > realmax, im = sign( im )*realmax; end
      a2 = re + 1i*im;           % a test is performed for each (a1, a2)
   end
end
s1 = realmax; re = s1*randn; im = s1*randn;   % a1 with parts up to realmax
if abs( re ) > realmax, re = sign( re )*realmax; end
if abs( im ) > realmax, im = sign( im )*realmax; end
a1 = re + 1i*im;
for jj = -1022 : 1023
   s2 = 2^jj; re = s2*randn; im = s2*randn;
   if abs( re ) > realmax, re = sign( re )*realmax; end
   if abs( im ) > realmax, im = sign( im )*realmax; end
   a2 = re + 1i*im;              % a test is performed for each (a1, a2)
end
s2 = realmax; re = s2*randn; im = s2*randn;   % last test: both numbers large
if abs( re ) > realmax, re = sign( re )*realmax; end
if abs( im ) > realmax, im = sign( im )*realmax; end
a2 = re + 1i*im;
```

The for loop with counter ii generates numbers s1 between (realmin) and 8.988465674311580e+307, which is very close to, but smaller than , (realmax), the relative error being smaller than 5.552e-17. The number s1 multiplies pseudorandom double precision values drawn from the standard normal distribution (with mean 0 and standard deviation 1), to obtain the real and imaginary parts of . If any of the computed parts of exceed K in magnitude, i.e., if is obtained, that value is reset to . The number is obtained in the same way in the internal loop with counter jj. The second part of the code segment has and it is used to obtain ; is generated as above. The last part also sets , and uses it to obtain . Clearly, complex numbers with and/or are generated.

For reproducibility of the results, the command sequence above was run after the MATLAB statement

```matlab
rng( 'default' )
```

which ensures that the (initial) seed of the random number generator is the same, so that the same sequence of random numbers is generated after this command. For some further tests, however, the generating sequence was run several times in succession, with the rng( 'default' ) command placed only before the first run. In this way, a larger number of tests was performed.
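The MATLAB sequence above can be transcribed into Python to make the structure of the test set explicit. This is a hedged sketch: Python's `random` module uses a different generator from MATLAB's `rng( 'default' )`, so only the order of the examples, the power-of-two scales, and the reset of overflowing parts to ±realmax are reproduced, not the random numbers themselves. The names `REALMAX`, `clamped_sample`, and `generate_pairs` are illustrative.

```python
import math
import random
import sys

REALMAX = sys.float_info.max  # realmax in MATLAB


def clamped_sample(scale: float, rng: random.Random) -> complex:
    """scale*(randn + 1i*randn), with each part reset to +-REALMAX if it overflows."""
    parts = []
    for _ in range(2):
        v = scale * rng.gauss(0.0, 1.0)        # may overflow to +-inf
        if abs(v) > REALMAX:                    # same test as in the MATLAB code
            v = math.copysign(REALMAX, v)
        parts.append(v)
    return complex(parts[0], parts[1])


def generate_pairs(exponents=range(-1022, 1024), seed=0):
    """Yield (a1, a2) in the order of the MATLAB sequence (different random numbers)."""
    rng = random.Random(seed)
    a1 = complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0))
    yield a1, 0j                                # a2 = 0
    yield 0j, a1                                # a2 = a1; a1 = 0
    yield 0j, 0j                                # a2 = 0, so both are zero
    for i in exponents:                         # s1 = 2^ii
        a1 = clamped_sample(math.ldexp(1.0, i), rng)
        for j in exponents:                     # s2 = 2^jj
            yield a1, clamped_sample(math.ldexp(1.0, j), rng)
    a1 = clamped_sample(REALMAX, rng)           # s1 = realmax
    for j in exponents:
        yield a1, clamped_sample(math.ldexp(1.0, j), rng)
    yield a1, clamped_sample(REALMAX, rng)      # s2 = realmax
```

With the default range of 2046 exponents, the generator yields 3 + 2046² + 2046 + 1 = 4,188,166 pairs, the number of examples reported above. Iterating over all of them in pure Python is slow, so a narrower exponent range is convenient for quick checks.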

After each of the first three MATLAB commands, inside the (internal) loops, and after the last command, an implementation of Algorithm chordal is called, and its results are compared both with those obtained by a direct MATLAB evaluation of (1) and with those of a more sophisticated MATLAB code based on the algorithms described in Section 4. The latter comparison is needed, since using (1) directly might return inaccurate results, as shown in Section 5.1.

The maximum relative error in this run was 6.3088e−16; the relative error is defined as , where d and denote the results obtained by evaluating (1) in MATLAB and by using an implementation of Algorithm chordal, respectively. With another definition, , if , and , otherwise, the maximum value was 0.2649; however, this value became zero when the modified MATLAB code, based on the algorithms described in the previous section, was used. After ten consecutive runs (without reinitializing the random number generator between runs), the maximum relative error and its mean value were 7.6144e−16 and 6.3663e−16, respectively; with the second definition, these values were 0.73077 and 0.35080, and they became zero with the modified MATLAB code.

In this test sequence, there were 3,970,109 examples with or negligible compared to the other; hence, the logical variable f in Algorithm chordal was true. This represents 94.794% of the total number of examples. Specifically, 1,985,994 and 1,984,115 examples have and , respectively. Of those with , 987,937 have , 994,601 have , and 3456 have . Of the examples with , 987,834 have , 992,853 have , and 3428 have . These categories correspond to the three cases, with , , and , respectively. For , the logical variables and , , were true for all examples satisfying and , respectively. Moreover, for examples satisfying , when as well as needed to be computed, both and were true. A factored representation was never needed for any of these 3,970,109 examples.

A summary of the number of examples needing only to compute and/or is presented in Table 1.

Table 1.

Number of examples needing only and/or (case , 94.794% of all examples).

For the remaining 218,055 examples, representing 5.206% of all examples, and are nonzero, distinct and relatively “close” to each other, since . All these examples needed both and values to compute and . A factored representation for was required for 56 examples. Although 21 examples were expected to require a factored representation for , the factors could be multiplied without overflow for nine of these examples. Table 2 summarizes the number of examples with and without a factored representation for and .

Table 2.

Number of examples with/without a factored representation for and (case , 5.206% of all examples).

To get (also denoted in the pseudocodes), Algorithm subtract was used to obtain the real and imaginary parts, and , of . For three and two examples, and , respectively, would exceed K in magnitude. For the other 218,050 examples, was evaluated using Algorithm absa, and could be obtained from via Algorithm d2byd1. Specifically, was true on input to absa for 217,980 examples, and it was false for the remaining 70 examples with expected overflow; but for 12 out of 70 examples, was reset to true, since ; therefore, needed the factored representation defined by and for only 58 examples. Moreover, 107,475 out of the 218,050 examples satisfied the conditions and , which implies that ; hence, , and was not needed. For the remaining 110,575 examples, , with evaluated using (or and ), (or and ) and (or and ). Specifically, for the four cases with in Algorithm d2byd1 ( and , and , and , and ), there were 110,514, zero, two, and one examples, respectively. Similarly, for the four cases with , there were zero, six, 51, and one examples, respectively.

Clearly, for the five examples for which could not be computed, ; hence, was evaluated by calling Algorithm inva twice (for and ) and Algorithm subtract (for the real and imaginary parts of ). The logical variables and were true for four and one examples, respectively, and false for one and four examples. Therefore, all formulas for and in Algorithm inva were applied. Then, was obtained using Algorithm subtract separately for the real and imaginary parts of ; the variable remained true for each of these examples. The result was found by Algorithm absa.
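The role of the factored representation in these counts can be illustrated with a different but related device. In the following Python sketch, which is an assumption of this edit and not the paper's two-factor representation, each modulus is stored as a mantissa/exponent pair (m, e) with |z| = m·2^e, so that moduli up to √2·realmax remain usable. The distance between the reciprocals is then obtained from |a1 − a2| by exponent arithmetic, in the spirit of Algorithm d2byd1. The names `scaled_abs`, `to_float`, `ascm_factored`, and `REALMAX` are hypothetical, and the paper's Algorithms subtract, absa, and d2byd1 use their own case analysis.

```python
import math
import sys

REALMAX = sys.float_info.max  # K (realmax) in the text


def scaled_abs(x: float, y: float) -> tuple[float, int]:
    """Return (m, e) with |x + iy| = m * 2**e and 0.5 <= m < 1, or (0.0, 0) for zero.

    Moduli up to sqrt(2) * REALMAX, which overflow as doubles, stay representable.
    """
    big = max(abs(x), abs(y))
    if big == 0.0:
        return 0.0, 0
    _, k = math.frexp(big)
    # The larger part scales exactly into [0.5, 1), so hypot returns r in [0.5, sqrt(2)).
    r = math.hypot(math.ldexp(x, -k), math.ldexp(y, -k))
    m, e = math.frexp(r)
    return m, e + k


def to_float(m: float, e: int) -> float:
    """m * 2**e as a double (rounded into the subnormal range if needed), or inf."""
    try:
        return math.ldexp(m, e)
    except OverflowError:
        return math.inf


def ascm_factored(a1: complex, a2: complex) -> float:
    """min(|a1 - a2|, |1/a1 - 1/a2|) for finite a1, a2 (hypothetical sketch)."""
    if a1 == a2:
        return 0.0
    dx, dy, shift = a1.real - a2.real, a1.imag - a2.imag, 0
    if abs(dx) > REALMAX or abs(dy) > REALMAX:
        # A component difference overflowed; halving first is exact for such large parts.
        dx = 0.5 * a1.real - 0.5 * a2.real
        dy = 0.5 * a1.imag - 0.5 * a2.imag
        shift = 1
    md, ed = scaled_abs(dx, dy)
    ed += shift                          # |a1 - a2| = md * 2**ed
    if a1 == 0 or a2 == 0:
        return to_float(md, ed)          # one reciprocal is infinite
    m1, e1 = scaled_abs(a1.real, a1.imag)
    m2, e2 = scaled_abs(a2.real, a2.imag)
    # |1/a1 - 1/a2| = |a1 - a2| / (|a1| |a2|); the mantissa ratio lies in (0.5, 4).
    mq, k = math.frexp(md / (m1 * m2))
    eq = ed - e1 - e2 + k
    # Both mantissas are in [0.5, 1): compare exponents first, then mantissas.
    if (ed, md) <= (eq, mq):
        return to_float(md, ed)
    return to_float(mq, eq)
```

For a1 = realmax(1 + i) and a2 = a1/2, the modulus |a1| exceeds realmax, yet the sketch returns 1/(√2·realmax) ≈ 3.93e−309, because no product of moduli is ever formed as a double.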

A summary of the number of examples needing to compute and/or is presented in Table 3. Table 4 shows the number of examples with/without a factored representation for and . Note that is always representable.

Table 3.

Number of examples needing to compute and/or (case , 5.206% of all examples).

Table 4.

Number of examples with/without a factored representation for and (case , 5.206% of all examples).

The results presented and summarized in the tables show that each example was solved using the simplest formulas applicable to it, which indicates the efficiency of the implementation. Moreover, it was checked that the results were practically the same as those obtained when was always computed using (6). This demonstrates that accuracy was preserved when the simplified formulas were used.

5.3. Application: Block Diagonalization of Matrix Pencils

This subsection presents the results obtained for the block diagonalization of matrix pencils. The approximate symmetric chordal metric is used to measure the “distances” between eigenvalues and, based on these, to reorder the eigenvalues and group them into clusters, in order to reduce the condition numbers of the transformation matrices involved in the process.

Two examples with different levels of difficulty are described.

A random example. A matrix pencil of order n = 100 was generated from normally distributed pseudorandom matrices, scaled as in the following MATLAB commands:

```matlab
rng( 'default' ); n = 100;
A = randn( n ); B = randn( n );
p = 10^3*rand( n, 1 ); q = rand( n, 1 )/10^2;
P = diag( p ); Q = diag( q ); A = P*A*Q; B = P*B*Q;
```

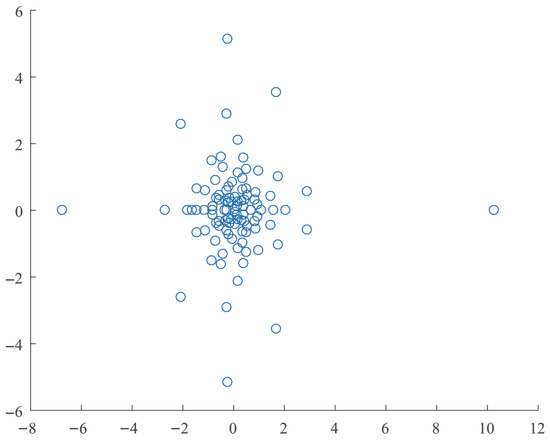

The vectors p and q, drawn from a uniform distribution, are used to scale the rows and columns, respectively, of A and B. The spectrum of the scaled matrix pencil is shown in Figure 1.

Figure 1.

The spectrum of the randomly generated matrix pencil of order 100.

The Euclidean norms of A and B and the ranges of their elements are listed in Table 5. The values are rounded to five significant digits.

Table 5.

Euclidean norms of A and B and the ranges of their elements for the randomly generated matrix pencil.

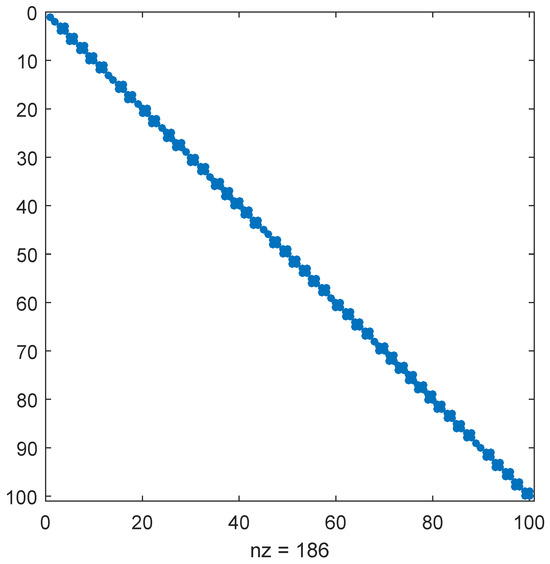

A MATLAB executable developed by the author was used to perform the block diagonalization of the scaled matrix pencil . The executable can work either on general matrices or on matrices already reduced to the generalized real Schur form [17], , with , , where Q and Z are orthogonal (hence, and , with the identity matrix of order n); is upper triangular, and is block upper triangular with diagonal blocks of order 1 or 2, corresponding to real or complex conjugate eigenvalues, respectively. (All elements under the diagonal of and under the diagonal blocks of are zero.) The block diagonalization algorithm acts on and . An essential part of the computations is the solution of a generalized Sylvester equation. Since this involves non-unitary transformations, their elements should be bounded to ensure good accuracy. Specifically, if any of these elements exceeds a given threshold , the process is stopped, and the solution of an enlarged Sylvester equation is attempted. If many attempts fail, the computational effort might become unacceptably high. Using the bound and default options (the diagonal blocks are not reordered before each reduction step, and the left and right transformations are updated), the algorithm computed the transformed matrix pencil , with , , , , where and are the global transformations applied to and by the block diagonalization algorithm. The reduced matrix is block diagonal (see Figure 2), with diagonal blocks of order 1 and 2, while matrix is diagonal. There are 14 block pairs of order 1 and 43 block pairs of order 2. Since the original pencil has 14 real eigenvalues, the maximal possible reduction (in real arithmetic) was obtained.

Figure 2.

The form of the reduced matrix of the matrix pencil of order 100.

Let and be the absolute errors of and with respect to the transformed matrices and , respectively, and let and be their relative errors, i.e., , , with the Euclidean norm. Similarly, let and be the absolute and relative errors of the eigenvalues of the matrix pencil with respect to the eigenvalues of the matrix pencil . If the eigenvalue problem is well conditioned, then are very close to the true eigenvalues of the matrix pencil . These errors are reported in Table 6.

Table 6.

Absolute and relative errors of the transformed matrices and eigenvalues.

All these errors are as small as can be expected for such a problem, which shows that the block diagonalization was successful. The reduced matrix pencil can be used efficiently instead of the original pencil, e.g., for computing matrix functions or the responses of a linear descriptor system. In this case, the input and output matrices of the system should be updated with the computed transformation matrices and .

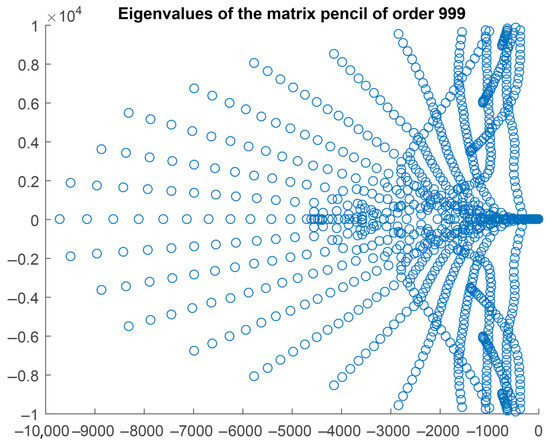

An example with dense clusters of eigenvalues. A matrix pencil of order 999 with many clustered eigenvalues is considered now. The matrices A and B are already reduced to the generalized real Schur form. The spectrum is shown in Figure 3. It has 107 real eigenvalues and 446 pairs of complex conjugate eigenvalues.

Figure 3.

The spectrum of the matrix pencil of order 999 with dense clusters.

The Euclidean norms of A and B and the ranges of their elements are listed in Table 7. The values are rounded to five significant digits.

Table 7.

Euclidean norms of A and B and the ranges of their elements for the matrix pencil of order 999 with dense clusters.

Using the approach described for the randomly generated example, a solution with 135 diagonal block pairs was computed in 62.68s. The largest block pair, of order 734, appeared in the 89th block position on the diagonals; in addition, there are three 1 × 1 and 131 2 × 2 diagonal block pairs. The remaining 104 real eigenvalues are included in the largest block pair.

Using an eigenvalue reordering strategy based on the pairwise “distances” between eigenvalues, measured by the approximate symmetric chordal metric, another solution was computed in 4.6905s, that is, about 13.36 times faster. Specifically, 763 selected eigenvalues were moved to the leading positions, giving the matrices and . The needed transformation matrices, and , were computed. This reordering process, including the computation of and , took 2.4826s. The block diagonalization was then applied to the matrices and , and it took 2.2078s. The relative errors in the transformed matrices and eigenvalues for the two computational stages are shown in Table 8.

Table 8.

Relative errors of the transformed matrices and eigenvalues.

Compared to the first approach, more block pairs, namely 141, were found, and the largest block pair, of order 719, appeared in the last position. This is the preferred situation, since well-separated eigenvalues can be quickly placed in small block pairs in the leading part, while big clusters remain to be placed in the trailing part of the matrix pencil. There were 140 2 × 2 block pairs, so all real eigenvalues were contained in the largest block pair. The efficiency gain is mainly due to the better eigenvalue reordering, which reduced the number of failed attempts to solve the generalized Sylvester equations. However, it is worth noting that the number of pairwise “distances” between eigenvalues increases quadratically with the problem size. Therefore, it is important that the algorithms for computing the approximate symmetric chordal metric be as fast as possible.

The computed matrix has 140 2 × 2 diagonal blocks followed by a 719 × 719 diagonal block. The matrix is diagonal with positive diagonal elements.
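The eigenvalue reordering strategy itself is not detailed here. As a hedged illustration of how the pairwise values of the approximate symmetric chordal metric can drive clustering, the following Python sketch groups eigenvalues whose metric value is below a tolerance (single linkage, via a union-find structure), evaluating all n(n−1)/2 pairs. The tolerance, the grouping rule, and the names `ascm` and `cluster_eigenvalues` are assumptions for illustration, not the author's strategy; the `ascm` helper uses the textbook formula and would need the careful treatment of Section 4 near the limits of the floating-point range.

```python
def ascm(a: complex, b: complex) -> float:
    """Textbook min(|a - b|, |1/a - 1/b|); adequate away from the overflow/underflow limits."""
    d1 = abs(a - b)
    if a == 0 or b == 0:
        return d1                            # one reciprocal is infinite
    return min(d1, abs(1 / a - 1 / b))


def cluster_eigenvalues(eigs, tol):
    """Group indices of eigs whose pairwise ASCM is <= tol (single linkage)."""
    n = len(eigs)
    parent = list(range(n))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]    # path halving
            i = parent[i]
        return i

    for i in range(n):                       # n(n-1)/2 metric evaluations
        for j in range(i + 1, n):
            if ascm(eigs[i], eigs[j]) <= tol:
                ri, rj = find(i), find(j)
                if ri != rj:
                    parent[max(ri, rj)] = min(ri, rj)
    groups = {}
    for i in range(n):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values(), key=lambda g: g[0])
```

For example, with tol = 1e−6, the values 1e12 and 2e12 differ by 1e12 in modulus, but their reciprocals differ only by 5e−13, so they fall into one cluster; this is the behavior that makes the metric suitable for pencils with eigenvalues of very large magnitude.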

6. Conclusions

The basic theoretical properties of the approximate symmetric chordal metric for two real or complex numbers are investigated, and reliable, accurate, and efficient algorithms for its computation are proposed. A factored representation of the modulus of a complex number is introduced, which allows very accurate results to be obtained over the entire range of the floating-point number system of a computer. Two different ways to evaluate the distance between the reciprocals of the given numbers are described. The algorithms can be easily implemented on various architectures and compilers. Extensive numerical tests were performed to assess the performance of the associated implementation. The results were compared to those obtained in MATLAB, with appropriate modifications for numbers very close to the bounds of the range of representable values, where the usual formulas give wrong results, as shown in several detailed numerical examples. As an application, the block diagonalization of matrix pencils is considered and two examples are investigated. The larger example illustrates the efficiency improvement made possible by a proper eigenvalue reordering strategy and fast algorithms for computing the approximate symmetric chordal metric. The algorithms can also be used in applications of the chordal metric where the triangle inequality is not required.

Funding

This research received no external funding.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the author on request.

Conflicts of Interest

The author declares no conflicts of interest.

References

1. Klamkin, M.S.; Meir, A. Ptolemy’s inequality, chordal metric, multiplicative metric. Pac. J. Math. 1982, 101, 389–392.
2. Sasane, A. A generalized chordal metric in control theory making strong stabilizability a robust property. Complex Anal. Oper. Theory 2013, 7, 1345–1356.
3. Papadimitrakis, M. Notes on Complex Analysis. 2019. Available online: http://fourier.math.uoc.gr/~papadim/complex_analysis_2019/gca_vn_r.pdf (accessed on 3 May 2025).
4. Rainio, O.; Vuorinen, M. Lipschitz constants and quadruple symmetrization by Möbius transformations. Complex Anal. Its Synerg. 2024, 20, 8.
5. Bishop, C. Complex Analysis I, MAT 536, Spring 2024; Stony Brook University, Department of Mathematics, 100 Nicolls Road: Stony Brook, NY, USA, 2024.
6. Álvarez Tuñón, O.; Brodskiy, Y.; Kayacan, E. Loss it right: Euclidean and Riemannian metrics in learning-based visual odometry. arXiv 2023, arXiv:2401.05396.
7. Xu, W.; Pang, H.K.; Li, W.; Huang, X.; Guo, W. On the explicit expression of chordal metric between generalized singular values of Grassmann matrix pairs with applications. SIAM J. Matrix Anal. Appl. 2018, 39, 1547–1563.
8. Sun, J.-G. Perturbation theorems for generalized singular values. J. Comput. Math. 2025, 1, 233–242.
9. Sasane, A. A refinement of the generalized chordal distance. SIAM J. Control Optim. 2014, 52, 3538–3555.
10. Bavely, C.; Stewart, G.W. An algorithm for computing reducing subspaces by block diagonalization. SIAM J. Numer. Anal. 1979, 16, 359–367.
11. Golub, G.H.; Van Loan, C.F. Matrix Computations, 4th ed.; The Johns Hopkins University Press: Baltimore, MD, USA, 2013.
12. Wilkinson, J.H. The Algebraic Eigenvalue Problem; Oxford University Press (Clarendon): Oxford, UK, 1965.
13. Wilkinson, J.H. Kronecker’s canonical form and the QZ algorithm. Linear Algebra Its Appl. 1979, 28, 285–303.
14. Stewart, G.W. On the sensitivity of the eigenvalue problem Ax = λBx. SIAM J. Numer. Anal. 1972, 9, 669–686.
15. Demmel, J.W. The condition number of equivalence transformations that block diagonalize matrix pencils. SIAM J. Numer. Anal. 1983, 20, 599–610.
16. Kågström, B.; Westin, L. Generalized Schur methods with condition estimators for solving the generalized Sylvester equation. IEEE Trans. Autom. Control 1989, 34, 745–751.
17. Anderson, E.; Bai, Z.; Bischof, C.; Blackford, S.; Demmel, J.; Dongarra, J.; Du Croz, J.; Greenbaum, A.; Hammarling, S.; McKenney, A.; et al. LAPACK Users’ Guide, 3rd ed.; Software·Environments·Tools, SIAM: Philadelphia, PA, USA, 1999.
18. MathWorks®. MATLAB™, Release R2024b; The MathWorks, Inc.: Natick, MA, USA, 2024.
19. Wolfram, S. The Story Continues: Announcing Version 14 of Wolfram Language and Mathematica. 2024. Available online: http://writings.stephenwolfram.com/2024/01/the-story-continues-announcing-version-14-of-wolfram-language-and-mathematica (accessed on 3 May 2025).
20. Wang, B.; Kestelyn, X.; Kharazian, E.; Grolet, A. Application of Normal Form Theory to Power Systems: A Proof of Concept of a Novel Structure-Preserving Approach. In Proceedings of the 2024 IEEE Power & Energy Society General Meeting (PESGM), Seattle, WA, USA, 21–25 July 2024; pp. 1–5.
21. Malcolm, M.A. Algorithms to reveal properties of floating-point arithmetic. Commun. ACM 1972, 15, 949–951.
22. Gentleman, W.M.; Marovich, S.B. More on algorithms that reveal properties of floating point arithmetic units. Commun. ACM 1974, 17, 276–277.
23. Boyd, S.; Vandenberghe, L. Convex Optimization; Cambridge University Press: Cambridge, UK, 2004.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).