Abstract

The least trimmed squares (LTS) estimator is popular in the location, regression, machine learning, and AI literature. Although the empirical version of the LTS has been studied repeatedly, its population version has never been introduced or studied. The lack of a population version hinders the study of the large sample properties of the LTS via empirical process theory. Novel properties of the objective function of the LTS, in both the empirical and population settings, are established for the first time in this article. These primary properties facilitate the establishment of other original results, including the influence function and Fisher consistency. Strong consistency is established for the first time with the help of a generalized Glivenko–Cantelli Theorem over a class of functions. Differentiability and stochastic equicontinuity lead to asymptotic normality via a concise and novel approach.

Keywords:

trimmed squares of residuals; continuity and differentiability of objective function; influence function; Fisher consistency; asymptotics

MSC:

62J05; 62G36; 62J99; 62G99

1. Introduction

In classical multiple linear regression analysis, it is assumed that there is a relationship for a given data set :

where and (an error term, a random variable, and is assumed to have a zero mean and unknown variance in the classic regression theory) are in , ⊤ stands for the transpose, , the true unknown parameter, and is in () and could be random. It is seen that is the intercept term. Writing , one has . The classic assumptions such as linearity and homoscedasticity are implicitly assumed here. Others will be considered later when they are needed.

The goal is to estimate the based on the given sample (hereafter, it is implicitly assumed that they are i.i.d. from parent ). For a candidate coefficient vector , call the difference between (observed) and (predicted), the ith residual, , ( is often suppressed). That is,

To estimate , the classic least squares (LS) minimizes the sum of squares of residuals,

Alternatively, one can replace the square above with the absolute value to obtain the least absolute deviations estimator (also known as the L1 estimator, in contrast to the L2 (LS) estimator).

Due to its great computability and optimal properties when the error follows a Gaussian distribution, the LS estimator is popular in practice across multiple disciplines. It can, however, misbehave when the error distribution departs even slightly from the Gaussian assumption, particularly when the errors are heavy-tailed or contain outliers. Both the L1 and L2 estimators have the worst possible asymptotic breakdown point, 0%, in sharp contrast to the 50% of the least trimmed squares estimator [1]. The latter is one of the most robust alternatives to the LS estimator. Robust alternatives to the LS estimator are abundant in the literature. The most popular are M-estimators [2], least median squares (LMS) and least trimmed squares (LTS) estimators [3], S-estimators [4], MM-estimators [5], τ-estimators [6], and maximum depth estimators [7,8,9], among others.

Although the M-estimator is the first robust alternative to the LS estimator, it has a poor breakdown point, 0%, just like the LS estimator. The MM-estimator can have a higher breakdown point, but this depends on the initial estimator, which must itself have high breakdown robustness, such as the LTS. So the MM-estimator can be more efficient than the LTS, but not more robust.

Due to the cube-root consistency of LMS in R84 and its other drawbacks, LTS is preferred over LMS (see [10]). LTS is popular in the literature in view of its fast computability and high robustness and often serves as the initial estimator for many high breakdown iterative procedures (e.g., S- and MM- estimators). The LTS is defined as the minimizer of the sum of h trimmed squares of residuals. Namely,

where are the ordered squared residuals and and is the ceiling function.
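The trimmed objective described above can be sketched in a few lines. This is a minimal illustration, assuming simple regression with an intercept and a slope; the function name `lts_objective`, the toy data, and the particular choice of h are hypothetical and are not taken from the article.

```python
import math

def lts_objective(beta, xs, ys, h):
    """Sum of the h smallest squared residuals of the line b0 + b1 * x."""
    b0, b1 = beta
    squared = sorted((y - b0 - b1 * x) ** 2 for x, y in zip(xs, ys))
    return sum(squared[:h])

xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [0.0, 1.0, 2.0, 3.0, 4.0, 50.0]      # the last point is a vertical outlier
h = math.ceil(len(xs) / 2) + 1            # illustrative choice only (h = 4 here)

full_sum = lts_objective((0.0, 1.0), xs, ys, len(xs))  # h = n: ordinary sum of squares
trimmed_sum = lts_objective((0.0, 1.0), xs, ys, h)     # the outlier is trimmed away
print(full_sum, trimmed_sum)
```

With h = n the function reduces to the LS criterion, which is dominated by the single outlier, whereas the trimmed criterion at the same candidate line ignores it.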

There are copious studies on the LTS in the literature. Most are focused on its computation, e.g., [1,10,11,12,13,14,15,16,17,18].

The LTS has been extended to the penalized regression setting with a sparse model where the dimension p (in thousands) is much larger than the sample size n (in tens or hundreds); see, e.g., [19,20]. The resulting estimator performs outstandingly, especially in terms of robustness.

Other studies on the LTS sporadically addressed the asymptotics. For example, Refs. [1,21] addressed the asymptotic normality of the LTS, but limited to the location case, that is, when p = 1. Refs. [22,23,24] also addressed the asymptotics of the LTS without employing advanced technical tools, in a series of three lengthy articles on consistency, root-n consistency, and asymptotic normality, respectively. The analysis is technically demanding and relies on difficult-to-verify assumptions. Furthermore, the analysis is limited to the case of non-random covariate vectors. In this article, without those assumptions and limitations, those results are established concisely with the help of advanced empirical process theory.

Replacing by an unspecified nonlinear function , Refs. [25,26,27] discussed the asymptotics of the LTS in a nonlinear regression setting. Now that the more general non-linear case has been addressed, one might wonder if there are any merits to discussing the special linear case in this article.

There are at least three merits: (i) the nonlinear function cannot always cover the linear case of for the usual LTS (e.g., in the exponential and power regression cases); (ii) many assumptions for the nonlinear case (see A1, A2, A3, A4 in [25]; H1, H2, H3, H4, H5, H6; D1, D2; I1, I2 in [26,27]) (which are usually difficult to verify) can be dropped for the linear case as demonstrated in this article. (iii) A key assumption that form a VC class of functions over a compact parameter space (see [25,26,27]) can be verified directly in this article.

To avoid all the drawbacks and limitations discussed above and take advantage of the standard results of the empirical process theory, this article defines the population version of the LTS (Section 2.1), introduces the novel partition of the parameter space (Section 2.2), and investigates the primary properties of the objective function for the LTS both in the empirical and population settings (Section 2) for the first time. The obtained novel results facilitate the verification of some fundamental assumptions conveniently made previously in the literature. The major contributions of this article thus include the following:

- (a) Introducing a novel partition of the parameter space and defining an original population version of the LTS for the first time;

- (b) Investigating primary properties of the sample and population versions of the objective function for the LTS, obtaining original results;

- (c) For the first time, obtaining the influence function () and Fisher consistency for the LTS;

- (d) For the first time, establishing the strong consistency of the sample LTS via a generalized Glivenko–Cantelli Theorem without artificial assumptions; and

- (e) For the first time, employing a novel and concise approach based on the empirical process theory to establish the asymptotic normality of the sample LTS.

The rest of the article is organized as follows. Section 2 introduces, for the first time, the population version of the LTS and addresses the properties of the LTS estimator in both empirical and population settings, including the global continuity and the local differentiability and convexity of its objective function; its influence function (in cases) and Fisher consistency are also established for the first time. Section 3 establishes strong consistency via a generalized Glivenko–Cantelli Theorem and re-establishes the asymptotic normality of the estimator with a concise approach (via stochastic equicontinuity) that differs markedly from previous approaches in the literature. Section 4 addresses the asymptotic inference procedures based on the asymptotic normality and bootstrapping. Concluding remarks in Section 5 end the article. Major proofs are deferred to Appendix A.

2. Definition and Properties of the LTS

2.1. Definition

Denote by the joint distribution of and y in model (1). Throughout, stands for the distribution function of the random vector . For a given and an , , let be the th quantile of with , where . The constant case is excluded to avoid unbounded and the LS cases. Define an objective function

and a regression functional

where is the indicator of A (i.e., it is one if A holds and zero otherwise). Let be the sample version of the based on a sample . and will be used interchangeably. Using , one obtains the sample versions

where is the floor function. Further

It is readily seen that the above is identical to the in (3) with . Henceforth, we prefer to treat the rather than the in (3).

The first natural question is the existence of the minimizer in the right-hand side (RHS) of (7), or the existence of the . Does it always exist? If it exists, will it be unique? Unique existence is a key precondition for the study of asymptotics of an estimator.

One might take the existence for granted since the objective is non-negative and has a finite infimum that can be approximated by objective values of a sequence of s. There is a sub-sequence of s with its objective values converging to the infimum that is a minimum due to the continuity of the objective function. The sub-sequence of s converges to a point , which is the minimizer of the RHS. However, there are multiple issues with the arguments above. The existence and the convergence of the sub-sequence (to a minimum) and continuity of objective function need to be proved. In the sequel, we take a different approach.

2.2. Properties in the Empirical Case

Write and for the and the , respectively. It is seen that

where . The fraction will often be ignored in the following discussion.

- Existence and uniqueness

- Partition parameter space

For a given sample , an (or h), and any , let for an integer . Note that and depend on , i.e., , . Obviously . Call -h-integer set. If for any distinct i and j, then the h-integer set is unique. Hereafter, we assume (A0): has a density for any given . Then, almost surely (a.s.), the h-integer set is unique.

Consider the unique cases. There are other s in that share the same h-integer set as that of the . Denote the set of such points that have the same h integers as by

If (A0) holds, then (a.s.). If it is , then we have a trivial case (see Remark 1 below). Otherwise, there are only finitely many such sets (for a fixed n) that partition . Let , where s are defined similarly to (9) and are disjoint for different l, , and is the closure of the set A. Write , an matrix. Assume (A1): and any h of its rows have full rank . As in the R function ltsReg in the R package robustbase (version 0.99-4-1), hereafter, we assume that .
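The partition idea can be made concrete with a small sketch: two parameter values belong to the same piece exactly when they select the same h indices with the smallest squared residuals. The code below assumes simple regression; the helper name `h_integer_set` and the data are hypothetical illustrations.

```python
def h_integer_set(beta, xs, ys, h):
    """Indices of the h smallest squared residuals at the candidate line beta."""
    b0, b1 = beta
    squared = [(y - b0 - b1 * x) ** 2 for x, y in zip(xs, ys)]
    order = sorted(range(len(squared)), key=lambda i: squared[i])
    return frozenset(order[:h])

xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [0.1, 0.8, 2.3, 2.6, 9.0, -4.0]
h = 4

base = h_integer_set((0.0, 1.0), xs, ys, h)      # a reference parameter value
nearby = h_integer_set((0.01, 0.99), xs, ys, h)  # a small perturbation of it
far = h_integer_set((-4.0, 0.0), xs, ys, h)      # a distant parameter value
print(sorted(base), sorted(nearby), sorted(far))
```

The perturbed parameter keeps the same index set as the reference value, while the distant one selects a different set and therefore lies in a different piece of the partition.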

Lemma 1.

Assume that (A0) and (A1) hold, then

- (i) (a) For any l (), over . (b) For any , there exists an open ball centered at η with a radius such that for any

- (ii) The graph of over is composed of the L closures of graphs of the quadratic function of β: for and any l (), joined together.

- (iii) is continuous in .

- (iv) is differentiable and strictly convex over each for any .

Proof.

See Appendix A. □

Remark 1.

(a) If , then is a twice differentiable and strictly convex quadratic function of β; the existence and the uniqueness of are trivial as long as has a full rank.

(b) Replacing by a nonlinear , conveniently assuming that (i) is twice differentiable around the points corresponding to the square roots of the α-quantiles of W, (ii) is continuous over parameter space B, (iii) is twice differentiable in β for a.s., and (iv) is continuous in β, Refs. [26,27] also addressed the continuity and differentiability of the objective function of the LTS. None of these assumptions was verified in [26,27]; here, they are proved (or not required) in Lemma 1.

(c) Continuity and differentiability inferred just based on being the sum of h continuous and differentiable functions (squares of residual) without (i) or (10) might not be flawless. In general, is neither differentiable nor convex in β globally.

Let , . Note that depends on .

Theorem 1.

Assume that (A0) and (A1) hold. Then,

- (i) exists and is the local minimum of over for some ().

- (ii) Over , is the solution of the system of equations

- (iii) Over , the unique solution is

Proof.

The given conditions and Lemma 1 allow one to focus on a piece , ; all results then follow in a straightforward fashion. For more details, see Appendix A. □
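Because the objective is an ordinary least squares criterion on each piece, a global minimizer can be found, for tiny samples, by fitting least squares on every h-subset and keeping the fit with the smallest trimmed sum. The following is a brute-force sketch under that assumption, restricted to simple regression with distinct x values; the names `ls_fit`, `trimmed_sum`, and `exact_lts` are hypothetical, and this enumeration is not the algorithm used by practical software.

```python
from itertools import combinations

def ls_fit(points):
    """Closed-form least squares line (intercept, slope) through the points."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points)
    sxy = sum((x - mx) * (y - my) for x, y in points)
    slope = sxy / sxx
    return my - slope * mx, slope

def trimmed_sum(beta, points, h):
    """Sum of the h smallest squared residuals over all points."""
    b0, b1 = beta
    return sum(sorted((y - b0 - b1 * x) ** 2 for x, y in points)[:h])

def exact_lts(points, h):
    """Enumerate all h-subsets; keep the LS fit with the smallest trimmed sum."""
    best = None
    for subset in combinations(points, h):
        beta = ls_fit(subset)
        value = trimmed_sum(beta, points, h)
        if best is None or value < best[0]:
            best = (value, beta)
    return best[1]

# Five points on the line y = 1 + 2x plus two gross outliers.
points = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0), (4.0, 9.0),
          (5.0, 40.0), (6.0, -30.0)]
lts_beta = exact_lts(points, h=5)
ls_beta = ls_fit(points)
print(lts_beta, ls_beta)
```

On this toy sample the enumeration recovers the line through the five clean points, while the least squares fit on all seven points is pulled far away from it. The cost grows combinatorially in n, so the sketch is only meant to illustrate the piecewise structure.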

Remark 2.

(a) Unique existence, which is often implicitly assumed or ignored in the literature, is central for the discussion of asymptotics of . Existence of could also be established under the assumption that there are no sample points of contained in any -dimensional hyperplane, similarly to that of Theorem 2.2 for LST in [28]. Here, it is nevertheless established without such an assumption.

(b) A sufficient condition for the invertibility of is that any h rows of form a full rank sub-matrix. The latter is true if (A1) holds.

(c) Ref. [22] also addressed the existence of (Assertion 1) for non-random covariates (carriers) satisfying many demanding assumptions () that are never verified. The uniqueness was left unaddressed, though.

2.3. Properties in the Population Case

The best breakdown point of the LTS (see p. 132 of [1]) reflects its global robustness. We now examine its local robustness via the influence function to complete the picture of its robustness.

2.3.1. Definition of Influence Function

For a distribution F on and an , the version of F contaminated by an amount of an arbitrary distribution G on is denoted by (an amount deviation from the assumed F). is actually a convex contamination of F. There are other types of contamination such as contamination by total variation or Hellinger distances. We cite the definition given in [29].

Definition 1

([29]). The influence function (IF) of a functional at a given point for a given F is defined as

where is the point-mass probability measure at .

The function describes the relative effect (influence) on of an infinitesimal point-mass contamination at and measures the local robustness of .
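A finite-sample analogue of the influence function is the sensitivity curve, which measures the rescaled change in an estimate when one extra point x is added to a sample. The sketch below assumes the location case only and uses one common scaling convention; the helper names are hypothetical. It relies on the known fact that, in the location case, the LTS estimate is the mean of the block of h consecutive order statistics with the smallest sum of squared deviations.

```python
def lts_location(data, h):
    """Location LTS: mean of the h consecutive order statistics with least spread."""
    ys = sorted(data)
    best = None
    for start in range(len(ys) - h + 1):
        window = ys[start:start + h]
        center = sum(window) / h
        spread = sum((y - center) ** 2 for y in window)
        if best is None or spread < best[0]:
            best = (spread, center)
    return best[1]

def lts_half(data):
    """Location LTS with h = floor(n / 2) + 1 (an illustrative choice)."""
    return lts_location(data, len(data) // 2 + 1)

def mean(data):
    return sum(data) / len(data)

def sensitivity(estimator, sample, x):
    """Rescaled effect on the estimate of adding the single point x."""
    n = len(sample)
    return (n + 1) * (estimator(sample + [x]) - estimator(sample))

sample = [-1.5, -0.7, -0.2, 0.1, 0.5, 0.9, 1.6]
for x in (10.0, 1000.0):
    print(x, sensitivity(mean, sample, x), sensitivity(lts_half, sample, x))
```

For the mean, the sensitivity grows linearly in x, whereas for the location LTS it stops changing once x is far enough out to be trimmed. This mirrors, in the location case only, the bounded influence of points with large residuals.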

To establish the IF for the functional , we need to first show its existence and uniqueness with or without point-mass contamination. To that end, write

with , , as the corresponding random vector (i.e., ). The versions of (4) and (5) at the contaminated are, respectively,

with being the th quantile of the distribution function of , , and

2.3.2. Existence and Uniqueness

Write for in (4). To have a counterpart of Lemma 1, we need (A2): has a positive density around a small neighborhood of for the given , .

Lemma 2.

Assume (A2) holds and exists. Then,

(i) is continuous in ;

(ii) is twice differentiable in ,

(iii) is strictly convex in .

Proof.

The boundedness of the integrand in (4), the given conditions, and the Lebesgue dominated convergence theorem lead to the desired results. For the details, see Appendix A. □

Note that (ii) and (iii) above are global in , stronger than the empirical counterparts, and all are attributed to the boundary of issue. We now treat the existence and uniqueness of , which is central to the study of the asymptotics.

Theorem 2.

Assume (A2) holds and exists, is continuous in β, and for any and the given α. Then,

(i) and exist.

(ii) Furthermore, they are the solution of a system of equations, respectively,

(iii) and are unique provided that

are invertible for β in a small neighborhood of and , respectively.

Proof.

In light of Lemma 2, the proof is straightforward; see Appendix A. □

The continuity of in is necessary for the differentiability of . In the non-contaminated case, the continuity of is guaranteed by (A2).

Does the population version of the LTS, , defined in (5), have something to do with ? It turns out that under some conditions, they are identical, which is called Fisher consistency.

2.3.3. Fisher Consistency

Theorem 3.

Assume (A2) holds and exists, then, provided that

- (i) is invertible, and

- (ii) , where .

Proof.

Theorem 2 leads directly to the desired result; see Appendix A. □

2.3.4. Influence Function

Theorem 4.

Assume that the assumptions in Theorem 2 hold. Set . Then, for any , we have that

provided that is invertible, where .

Proof.

The connection to the derivative of a functional is the key; see Appendix A. □

Remark 3.

(a) When p = 1, the problem in our model (1) becomes a location problem (see p. 158 of [1]) and the IF of the LTS estimation functional is given on p. 191 of [1]. In the location setting, Ref. [30] also studied the IF of the LTS. When p = 2, namely in the simple regression case, Ref. [31] studied the IF of the sparse-LTS functional under the assumption that and e are independent and normally distributed. Under stringent assumptions on the error terms and on , Ref. [21] also addressed the IF of the LTS for any p, but with the point mass at with z being the error term, an unusual contaminating point. The above result is much more general and valid for any , , and e.

(b) The influence function of remains bounded if the contaminating point does not follow the model (i.e., its residual is extremely large), in particular for bad leverage points and vertical outliers. This shows the good robust properties of the LTS.

(c) The influence function of , unfortunately, might be unbounded (in case), sharing the drawback of the sparse-LTS (in the p = 2 case). The latter was shown in [31]. Trimming based on the residuals (or squared residuals) will have this type of drawback since the term can be bounded, but might not.

3. Asymptotic Properties

Refs. [22,23,24] rigorously addressed the consistency, root-n consistency, and normality of the LTS under a restrictive setting (s are non-random covariates) plus many assumptions on s and on the distribution of in a series of three lengthy papers.

Refs. [26,27] also addressed asymptotic properties of an extended LTS under -mixing conditions for with nonlinear regression function , a seemingly more general setting, but with numerous artificial assumptions (H1, H2, H3, H4, H5, H6; D1, D2; I1, I2) that are never verified in any concrete example and not even for the linear LTS case. That is, Refs. [26,27] do not cover the LTS in (3).

Here, we address asymptotic properties of without the artificial assumptions that were made in the literature for LTS with a nonlinear regression function. Strong consistency has been addressed in [25] in a nonlinear setting without verifying any of their conveniently assumed key assumptions for the linear LTS. We now rigorously establish strong consistency.

3.1. Strong Consistency

Following the notations of [32], write

where , and are fixed.

Under corresponding assumptions in Theorems 1 and 2, and are unique minimizers of and , respectively.

To show that converges to a.s., one can take the approach given in Section 4.2 of [28]. However, here, we take a different and more direct approach.

To show that converges to a.s., it will suffice to prove that a.s., because is bounded away from outside each neighborhood of in light of continuity and compactness. Let be a closed ball centered at with a radius . Define a class of functions

If we prove uniform almost sure convergence of to P over (see Lemma 3 below), then we can deduce that a.s. from

The above discussions and arguments have led to the following

Theorem 5.

Under corresponding assumptions in Theorems 1 and 2 for the uniqueness of and , respectively, we have converges a.s. to (i.e., , a.s.).

The above is based on the following generalized Glivenko–Cantelli Theorem.

Lemma 3.

a.s., provided that (A2) holds.

Proof.

Verifying two requirements in Theorem 24 in II.5 of [32] leads to the result. Showing the covering number for functions in is bounded is challenging. Essentially, one needs to show that the graphs of functions in form a VC class of sets (this was avoided in the literature, e.g., in [22,23,24,25,26,27]). For details, see Appendix A. □

3.2. Root-n Consistency and Asymptotic Normality

Instead of treating the root-n consistency separately as in [22,23,24], we will establish the asymptotic normality of directly via stochastic equicontinuity (see p. 139 of [32]).

Stochastic equicontinuity refers to a sequence of stochastic processes whose shared index set T comes equipped with a semi-metric .

Definition 2

(VII.1, Def. 2 of [32]). Call stochastically equicontinuous at if for each and there exists a neighborhood U of for which

It is readily seen (see [32]) that if is a sequence of random elements of T that converges in probability to , then,

because, with probability tending to one, will belong to each U. The form above will be easier to apply, especially when the behavior of a particular sequence is under investigation.

Suppose , with T a subset of , is a collection of real, P-integrable functions on the set S where P (probability measure) lives. Denote by the empirical measure formed from n independent observations on P, and define the empirical process as the signed measure . Define

Suppose has a linear approximation near the at which takes on its minimum value:

For completeness, set , where ∇ (differential operator) is a vector of k real functions on S. We cite Theorem 5 in VII.1 of [32] (p. 141) for the asymptotic normality of .

Lemma 4

([32]). Suppose is a sequence of random vectors converging in probability to the value at which has its minimum. Define and the vector of functions by (22). If

- (i) is an interior point of the parameter set T;

- (ii) has a non-singular second derivative matrix V at ;

- (iii) ;

- (iv) The components of all belong to ;

- (v) The sequence is stochastically equicontinuous at ;

then,

By Theorems 1 and 5, assume, without loss of generality (w.l.o.g.), that and belong to a ball centered at with a large enough radius , , and that is our parameter space of , hereafter. In order to apply the Lemma, we first realize that in our case, and correspond to and (assume, w.l.o.g. that in light of regression equivariance, see Section 4); and correspond to t and T; . In our case,

We will have to assume that exists to meet (iv) of the lemma, where and . It is readily seen that a sufficient condition for this assumption to hold is the existence of . In our case, , and we will have to assume that it is invertible when is replaced by (this is covered by (18)) to meet (ii) of the lemma. In our case,

We will assume that and are the minimum and maximum eigenvalues of positive semidefinite matrix V over all and .

Theorem 6.

Assume that

- (i) The uniqueness assumptions for and in Theorems 1 and 2 hold respectively;

- (ii) exists with ;

then

where β in V and ∇ is replaced by (which could be assumed to be zero).

Proof.

The key to applying this Lemma is to verify (v). For details, see Appendix A. □

Remark 4.

(a) In the case of p = 1, that is, in the location case, the asymptotic normality of the LTS has been studied in [21,33,34].

(b) Ref. [35], under the rank-based optimization framework and stringent assumptions on error term (even density that is strictly decreasing for positive value, bounded absolute first moment) and on (bounded fourth moment), covers the asymptotic normality of the LTS. Ref. [24] also treated the general case and obtained the asymptotic normality of the LTS under many stringent conditions on the non-random covariates s and the distributions of in a 27-page article. The assumption is quite artificial and never verified there. Refs. [26,27] also addressed the asymptotic normality of the LTS in nonlinear regression under a dependence setting. For these extensions, many artificial assumptions (D1, D2, H1, H2, H3, H4, H5, H6, I1, I2) are imposed, but they are never verified even for the linear LTS case. So those results do not cover the LTS in (3).

(c) Furthermore, since there was no population version like (4) and (5) before, empirical process theory could not be employed to verify the VC class of functions in [22,23,24,26,27]. Our approach here is quite different from former classical analyses and is much neater and more concise (employing the standard empirical process theory that was asserted to be not applicable in [26,27]).

4. Inference Procedures

In order to utilize the asymptotic normality result in Theorem 6, we need to figure out the asymptotic covariance. For simplicity, assume that follows elliptical distributions with density

where and is a positive definite matrix of size p which is proportional to the covariance matrix if the latter exists. We assume is unimodal.

4.1. Equivariance

A regression estimation functional is said to be regression, scale, and affine equivariant (see [8]) if, respectively,

Theorem 7.

is regression, scale, and affine equivariant.

Proof.

See the empirical version treatment given in [1] (p. 132). □
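The three equivariance properties can be checked numerically on a small sample. The sketch below assumes simple regression and reuses a brute-force LTS that enumerates h-subsets; the helper names, the data, and the transformation constants are hypothetical. For the affine case, only a rescaling of the single covariate is checked, under which the slope is divided by the scaling constant and the intercept is unchanged.

```python
from itertools import combinations
import math

def ls_fit(points):
    """Closed-form least squares line (intercept, slope) through the points."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points)
    sxy = sum((x - mx) * (y - my) for x, y in points)
    slope = sxy / sxx
    return my - slope * mx, slope

def exact_lts(points, h):
    """Brute-force LTS: best LS fit over all h-subsets by trimmed sum of squares."""
    best = None
    for subset in combinations(points, h):
        b0, b1 = ls_fit(subset)
        value = sum(sorted((y - b0 - b1 * x) ** 2 for x, y in points)[:h])
        if best is None or value < best[0]:
            best = (value, (b0, b1))
    return best[1]

pts = [(0.0, 1.0), (1.0, 3.5), (2.0, 4.6), (3.0, 7.2), (4.0, 9.1),
       (5.0, 40.0), (6.0, -30.0)]
h = 5
b0, b1 = exact_lts(pts, h)

v0, v1 = 2.0, -3.0   # regression equivariance: add v0 + v1 * x to each y
r0, r1 = exact_lts([(x, y + v0 + v1 * x) for x, y in pts], h)

c = -4.0             # scale equivariance: multiply each y by c
s0, s1 = exact_lts([(x, c * y) for x, y in pts], h)

a = 2.5              # affine equivariance (one covariate): multiply each x by a
a0, a1 = exact_lts([(a * x, y) for x, y in pts], h)

print(math.isclose(r0, b0 + v0), math.isclose(r1, b1 + v1))
print(math.isclose(s0, c * b0), math.isclose(s1, c * b1))
print(math.isclose(a0, b0), math.isclose(a1, b1 / a))
```

Agreement is checked up to floating-point tolerance, since the transformed fits are computed independently of the original one.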

4.2. Transformation

Assume the Cholesky decomposition of yields a non-singular lower triangular matrix of the form

with . Hence, . Now, transform to with . It is readily seen that the distribution of follows .

Note that with . That is,

Equivalently,

where

It is readily seen that (25) is an affine transformation on and (26) is first an affine transformation on then a regression transformation on y followed by a scale transformation on y. In light of Theorem 7, we can assume, hereafter, w.l.o.g. that follows an (spherical) distribution and is the covariance matrix of .
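The reduction to the spherical case can be illustrated with a small whitening sketch. It assumes that the transformation takes the usual form of centering a covariate vector and applying the inverse of the lower triangular Cholesky factor of the scatter matrix; the matrix `sigma`, the center `mu`, and the helper names are hypothetical examples, not values from the article.

```python
import math

def cholesky(a):
    """Lower triangular factor L with L L^T = a, for a symmetric positive definite a."""
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(a[i][i] - s)
            else:
                L[i][j] = (a[i][j] - s) / L[j][j]
    return L

def forward_solve(L, b):
    """Solve L z = b for lower triangular L, i.e., z = L^{-1} b."""
    z = []
    for i in range(len(b)):
        s = sum(L[i][k] * z[k] for k in range(i))
        z.append((b[i] - s) / L[i][i])
    return z

sigma = [[4.0, 2.0], [2.0, 3.0]]   # hypothetical scatter matrix
mu = [1.0, -1.0]                   # hypothetical center
L = cholesky(sigma)

x = [3.0, 2.0]
z = forward_solve(L, [x[i] - mu[i] for i in range(2)])
print(L, z, sum(v * v for v in z))
```

The squared Euclidean norm of the transformed point equals the quadratic form of the centered point in the inverse scatter matrix, which is why an elliptical density becomes spherical after this transformation.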

Theorem 8.

Assume that , e, and are independent. Then,

- (1) and , with , where and is the CDF of , is a chi-square random variable with one degree of freedom.

- (2) with .

- (3) , where C and are defined in (1) and (2) above.

Proof.

See Appendix A. □

4.3. Approximate Confidence Region

(i) Based on the asymptotic normality. Under the setting of Theorem 8, an approximate confidence region for the unknown regression parameter is:

where stands for the Euclidean distance. Without asymptotic normality, one can appeal to the next procedure.

(ii) Based on bootstrapping scheme and depth-median and depth-quantile. Here, no assumptions on the underlying distribution are needed. This approximate procedure first re-samples n points with replacement from the given original sample points and calculates an . Repeat this m (a large number, say ) times and obtain m such s.

The next step is to calculate the depth, with respect to a location depth function (e.g., halfspace depth [36] or projection depth [37,38]), of these m points in the parameter space of . After the least deep points among the m points are trimmed, the remaining points form a convex hull, which is an approximate confidence region for the unknown regression parameter in the location case and low dimensions.
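In the one-dimensional location case the bootstrap procedure takes a particularly simple form, because the halfspace depth of a point among m real numbers is determined by how many points lie on either side of it. Trimming the least deep points then removes equal numbers from both tails, and the convex hull of the rest is an interval. The sketch below is written under that simplifying assumption; the function names, the number of resamples, and the data are hypothetical.

```python
import random

def lts_location(data, h):
    """Location LTS: mean of the h consecutive order statistics with least spread."""
    ys = sorted(data)
    best = None
    for start in range(len(ys) - h + 1):
        window = ys[start:start + h]
        center = sum(window) / h
        spread = sum((y - center) ** 2 for y in window)
        if best is None or spread < best[0]:
            best = (spread, center)
    return best[1]

def bootstrap_interval(data, h, m=300, level=0.95, seed=0):
    """Resample with replacement m times, then trim the least deep estimates."""
    rng = random.Random(seed)
    n = len(data)
    estimates = sorted(
        lts_location([data[rng.randrange(n)] for _ in range(n)], h)
        for _ in range(m)
    )
    k = int(m * (1 - level) / 2)   # number of estimates trimmed from each tail
    return estimates[k], estimates[m - 1 - k]

gen = random.Random(1)
data = [gen.gauss(0.0, 1.0) for _ in range(40)] + [100.0, 100.0, 100.0]
h = len(data) // 2 + 1
lo, hi = bootstrap_interval(data, h)
print(lo, hi)
```

In higher dimensions the tail trimming would be replaced by an actual depth computation and a convex hull, which this sketch does not attempt.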

Example 1.

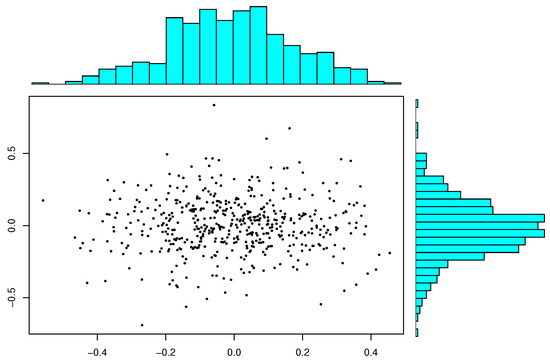

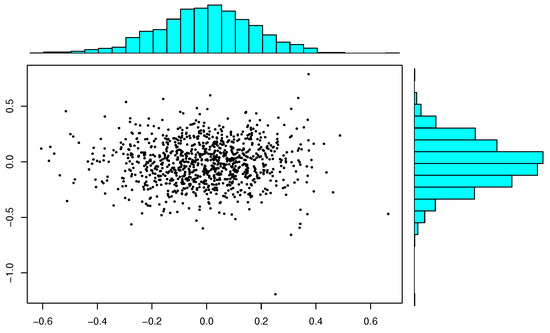

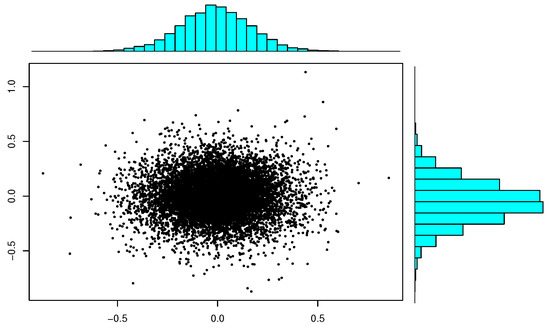

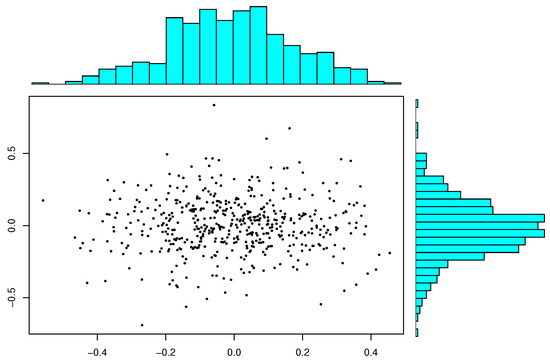

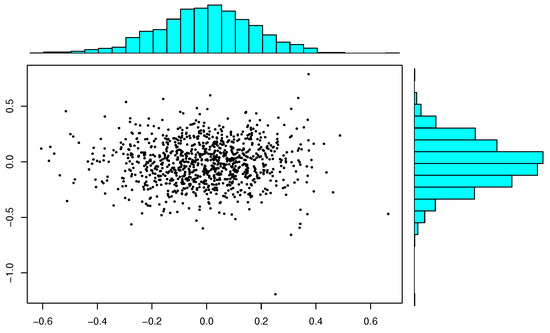

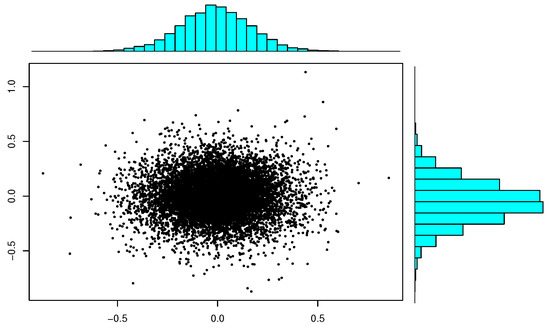

To illustrate the normality of , here, we carry out a small-scale simulation. We will generate s, each obtained based on a bivariate standard normal sample of size . For each N, we provide scatter plots of N s and marginal histograms. Inspecting Figure 1, Figure 2 and Figure 3 reveals that the plots of s increasingly resemble a bivariate normal pattern as the number of increases. The marginal histograms confirm the normality.

Figure 1.

Marginal histograms and scatter plot of 500 with sample size .

Figure 2.

Marginal histograms and scatter plot of 1000 with sample size .

Figure 3.

Marginal histograms and scatter plot of 10000 with sample size .
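A much simplified version of such a simulation can be sketched with the standard library alone. The code below is an assumption-laden stand-in, not the setup behind the figures: it uses the one-dimensional location LTS on standard normal samples rather than the bivariate regression setting, and the sample sizes, replication counts, and seeds are hypothetical.

```python
import random
import statistics

def lts_location(data, h):
    """Location LTS: mean of the h consecutive order statistics with least spread."""
    ys = sorted(data)
    best = None
    for start in range(len(ys) - h + 1):
        window = ys[start:start + h]
        center = sum(window) / h
        spread = sum((y - center) ** 2 for y in window)
        if best is None or spread < best[0]:
            best = (spread, center)
    return best[1]

def simulate(n, replications, seed):
    """Draw `replications` standard normal samples of size n; return LTS estimates."""
    rng = random.Random(seed)
    h = n // 2 + 1
    return [lts_location([rng.gauss(0.0, 1.0) for _ in range(n)], h)
            for _ in range(replications)]

small = simulate(n=50, replications=200, seed=1)
large = simulate(n=200, replications=200, seed=2)
print(statistics.mean(small), statistics.stdev(small))
print(statistics.mean(large), statistics.stdev(large))
```

The estimates should center near zero, and their spread should shrink as the sample size grows, in line with root-n consistency. A histogram of either list can be inspected for approximate normality.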

5. Concluding Remarks

Without the population version of the LTS (see (5)), it is difficult to apply the empirical process theory to study the asymptotics of the LTS; e.g., verifying the key result, the VC-class property of the regression function class (indexed by ), is challenging. To avoid this challenge, some authors addressed the asymptotics of the nonlinear LTS, where, without an explicit regression function (unlike the linear case), they can conveniently assume this VC-class property. Refs. [26,27] even believed that the standard empirical process theory does not apply to the asymptotics of the LTS, while Refs. [22,23,24] addressed the asymptotics with elementary tools only, at the cost of numerous artificial, difficult-to-verify assumptions and lengthy articles.

By partitioning the parameter space and introducing the population version for the LTS, this article establishes some fundamental and primary properties for the objective function of the LTS in both empirical and population settings. These newly obtained original results verify some key facts that were conveniently assumed (but never verified) in nonlinear regression literature for the LTS and facilitate the application of standard empirical process theory to the establishment of asymptotic normality for the sample LTS concisely and neatly. Some of the newly obtained results, such as Fisher and strong consistency, and influence function, are original and obtained as by-products.

The asymptotic normality is applied in Theorem 8 for the practical inference procedure of confidence regions of the regression parameter . There are open problems left here; one is the estimation of the variance of e, which is now unrealistically assumed to be known, and the other is the testing of the hypothesis on .

Funding

The author declares that no funding was received for this study.

Data Availability Statement

The data will be made available by the author on request.

Acknowledgments

Insightful comments and useful suggestions from Wei Shao and Derek Young have significantly improved the manuscript and are highly appreciated. Special thanks go to Derek Young for making the technical report of Chen, Stromberg, and Zhou available.

Conflicts of Interest

The author declares no conflicts of interest or competing interests.

Abbreviations

The following abbreviations are used in this manuscript:

| LTS | Least trimmed squares |

| LS | Least squares |

| LMS | Least median squares |

| IF | Influence function |

| RHS | Right-hand side |

| CDF | Cumulative distribution function |

| a.s. | Almost surely |

| w.l.o.g. | Without loss of generality |

Appendix A. Proofs

Proof of Lemma 1.

(i) Based on the definition in (9), over , there is no tie among the smallest h squared residuals. The assertion (a) follows straightforwardly.

The first and the last equalities in (b) are trivial; it suffices to focus on the middle one. Let ( in light of (9)). By (6), we have that

Let and . Then, (a.s.).

Based on the continuity of in , for any and for any given , we can fix a small so that for any . Now, we have for any , (assume below )

Thus, , forms the h-integer set for any . Part (b) follows.

(ii) The domain of function is the union of the pieces of and the function of over is a quadratic function of : , the statement follows.

(iii) By (ii), it is clear that is continuous in over each piece of . We only need to show that this holds for any that is on the boundary of .

Let lie on the common boundary of and , then, for any [this is obviously true if , it is also true if on the boundary of since in this case the -h-integer set is not unique, there are at least two; one of them is ] and for any . Let be a sequence approaching , where could be on or on . We show that approaches . Note that . Partition into and so that all members of the former belong to while the latter are all within . By continuity of the sum of h squared residuals in , both and approach since both and approach as .

(iv) Note that for any l, , over , one has a least squares problem with n reduced to h, is a quadratic function and hence, is twice differentiable and strictly convex in light of the following

where , , and . Strict convexity follows from the positive definiteness of the Hessian matrix: (an invertible matrix due to (A1); see (iii) in the proof of Theorem 1). □
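As a rough numerical illustration of parts (ii) and (iv), the following Python sketch evaluates the sum of the h smallest squared residuals at a point and checks that, with the h-subset held fixed, the objective is an exact quadratic whose Hessian is proportional to the Gram matrix of the selected rows. The function names, the simulated data, and the normalization (no constant factor in front of the sum) are assumptions made for illustration and are not taken from the manuscript.

```python
import numpy as np

def h_subset(beta, X, y, h):
    """Indices (sorted) of the h smallest squared residuals at beta."""
    r2 = (y - X @ beta) ** 2
    return np.sort(np.argsort(r2, kind="stable")[:h])

def lts_objective(beta, X, y, h):
    """Sum of the h smallest squared residuals at beta (assumed normalization)."""
    r2 = (y - X @ beta) ** 2
    return np.sort(r2)[:h].sum()

def subset_sum_of_squares(beta, X, y, idx):
    """Sum of squared residuals over a fixed index set idx."""
    return ((y[idx] - X[idx] @ beta) ** 2).sum()

# Simulated data (hypothetical): intercept plus two continuous covariates.
rng = np.random.default_rng(0)
n, p, h = 30, 3, 20
X = np.column_stack([np.ones(n), rng.normal(size=(n, p - 1))])
y = X @ np.array([1.0, 2.0, -1.0]) + rng.normal(size=n)

beta = np.zeros(p)
idx = h_subset(beta, X, y, h)
Xh, yh = X[idx], y[idx]

# Gradient and Hessian of the quadratic piece attached to idx.
grad = -2.0 * Xh.T @ (yh - Xh @ beta)
hess = 2.0 * Xh.T @ Xh
min_eig = np.linalg.eigvalsh(hess).min()

# With idx fixed, the second-order expansion is exact for any displacement d.
d = np.array([0.3, -0.2, 0.1])
exact = subset_sum_of_squares(beta + d, X, y, idx)
taylor = subset_sum_of_squares(beta, X, y, idx) + grad @ d + 0.5 * d @ hess @ d
```

A strictly positive `min_eig` corresponds to the positive definiteness used for strict convexity, and the agreement of `exact` with `taylor` reflects that each piece is a least squares problem on h points.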

Proof of Theorem 1.

(i) Over each , an open set, is twice differentiable and strictly convex in light of the given condition; hence, it has a unique minimizer (otherwise, one can show that, by openness and strict convexity, there is a third point in that attains a strictly smaller objective value than the two minimizers). Since there are only finitely many , the assertion follows if we can prove that the minimum is not attained at a boundary point of some .

Assume it is otherwise. That is, reaches its global minimum at point which is a boundary point of for some l. Assume that over , attains its local minimum value at the unique point . Then, . If equality holds, then, we have the desired result (since there are points besides in which also attain the minimum value as , a contradiction). Otherwise, there is a point in the small neighborhood of so that . A contradiction appears.

(ii) It is seen from (i) that is twice continuously differentiable; hence, its first derivative evaluated at the global minimum must be zero. By (i), we obtain Equation (11).

(iii) This part follows directly from (ii) and the invertibility of . The latter follows from (A1), which implies that the p columns of the matrix are linearly independent and also that any h rows of have full rank. □
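The estimating equation in (ii) and the closed form in (iii) can be checked numerically at a fixed point of the h-subset selection. The sketch below uses concentration steps (alternating between selecting the h smallest squared residuals and refitting least squares on them), a technique swapped in here for illustration and not the argument of the proof. It only reaches a local fixed point, which need not be the global minimizer. The function name `c_steps`, the data, and the starting value are hypothetical.

```python
import numpy as np

def c_steps(X, y, h, beta0, max_iter=100):
    """Alternate h-subset selection and least squares refitting until the subset repeats."""
    beta = np.asarray(beta0, dtype=float)
    idx = None
    for _ in range(max_iter):
        r2 = (y - X @ beta) ** 2
        new_idx = np.sort(np.argsort(r2, kind="stable")[:h])
        if idx is not None and np.array_equal(new_idx, idx):
            return beta, idx, True  # subset is stable: beta is the LS fit on its own h-subset
        idx = new_idx
        beta, *_ = np.linalg.lstsq(X[idx], y[idx], rcond=None)
    return beta, idx, False

# Simulated data (hypothetical): simple regression with five gross outliers.
rng = np.random.default_rng(1)
n, p, h = 40, 2, 30
X = np.column_stack([np.ones(n), rng.normal(size=n)])
y = X @ np.array([0.5, 2.0]) + 0.1 * rng.normal(size=n)
y[:5] += 20.0

beta_hat, idx, converged = c_steps(X, y, h, np.zeros(p))

# Gradient of the sum of squares over the selected rows; it should vanish at beta_hat.
grad = -2.0 * X[idx].T @ (y[idx] - X[idx] @ beta_hat)

# Closed-form least squares solution on the selected rows.
beta_closed = np.linalg.solve(X[idx].T @ X[idx], X[idx].T @ y[idx])
```

At the returned point, `grad` is numerically zero and `beta_hat` agrees with `beta_closed`, which mirrors the first-order condition and the role of the invertible Gram matrix of the selected rows.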

Proof of Lemma 2.

Label , the integrand in (4), for a point . Write .

(i) By the strict monotonicity of around , we have the continuity of the . Consequently, is obviously continuous and so is in .

(ii) For arbitrary points and in , there are three cases for the relationship between the squared residual and its quantile: (a) (b) , and (c) . Case (c) happens with probability zero; we thus skip this case and treat only (a) and (b). By the continuity in , there is a small neighborhood of : , centered at with radius such that (a) (or (b)) holds for all . This implies that

and

Hence, we have that

Note that exists. Then, by the Lebesgue dominated convergence theorem, the desired result follows.

(iii) The strict convexity follows from the twice differentiability and the positive definiteness of the second-order derivative of . □

Proof of Theorem 2.

We will treat ; the counterpart for can be treated analogously.

(i) Existence follows from the positive semi-definiteness of the Hessian matrix (see proof of (ii) of Lemma 2) and the convexity of .

(ii) The equation follows from the differentiability and the first-order derivative of given in the proof of (ii) of Lemma 2.

(iii) The uniqueness follows from the positive definiteness of the Hessian matrix based on the given condition (invertibility). □

Proof of Theorem 3.

By Theorem 2, (i) and given conditions guarantee the existence and the uniqueness of , which is the unique solution of the system of the equations

Notice that . Inserting this into the above equation, we have

By (ii), it is readily seen that is a solution of the above system of equations. Uniqueness leads to the desired result. □

Proof of Theorem 4.

Write for , insert it for into (17), take the derivative with respect to on both sides of (17), and let ; we obtain (in light of the dominated convergence theorem)

where in the first term on the LHS and in the second term on the LHS. Call the two terms on the LHS and , respectively, and call the integrand in ; then, it is seen that (see the proof of (i) of Theorem 1)

Focusing on , it is readily seen that

In light of (16), we have

This, , and display (A2) lead to the desired result. □

Proof of Lemma 3.

We invoke Theorem 24 in II.5 of [32]. The first requirement of the theorem is the existence of an envelope of . The latter is , which is bounded since is compact and is continuous in , and is non-decreasing in . To complete the proof, we only need to verify the second requirement of the theorem.

For the second requirement, that is, to bound the covering numbers, it suffices to show that the graphs of functions in have only polynomial discrimination (see Theorem 25 and Example 26 in II.5 of [32]).

The graph of a real-valued function f on a set S is defined as the subset (see p. 27 of [32])

The graph of a function in contains a point if and only if or . The latter case can be excluded since the function is always nonnegative (and the case where it equals 0 is covered by the former case). The former case happens if and only if or .

Given a collection of n points (), the graph of a function in picks out only points that belong to . Introduce n new points in . On define a vector space of functions

where , , and and which is a -dimensional vector space.

It is clear now that the graph of a function in picks out only points that belong to the sets of for (ignoring the union and intersection operations for the moment). By Lemma 18 in II.4 of [32] (p. 20), the graphs of functions in pick out only a polynomial number of subsets of ; those sets corresponding to with , , and pick out even fewer subsets from . This, in conjunction with Lemma 15 in II.4 of [32] (p. 18), yields that the graphs of functions in have only polynomial discrimination. By Theorem 24 in II.5 of [32], the proof is complete. □

Proof of Theorem 6.

To apply Lemma 4, we need to verify the five conditions; among them, only (iii) and (v) need to be addressed, and all others are satisfied trivially. For (iii), it holds automatically since our is defined to be the minimizer of over .

So, the only condition that needs to be verified is (v), the stochastic equicontinuity of at . For that, we will appeal to the Equicontinuity Lemma (VII.4 of [32], p. 150). To apply the Lemma, we verify that the random covering numbers satisfy the uniformity condition. To that end, we look at the class of functions

Obviously, is an envelope for the class in , where is the radius of the ball . We now show that the covering numbers of are uniformly bounded, which amply suffices for the Equicontinuity Lemma. For this, we will invoke Lemmas II.25 and II.36 of [32]. To apply Lemma II.25, we need to show that the graphs of functions in have only polynomial discrimination. The graph of contains a point , if and only if for all and .

Equivalently, the graph of contains a point , if and only if . For a collection of n points with , the graph picks out those points satisfying . Construct from , a point in . On , define a vector space of functions

By Lemma 18 of [32], the sets , for , pick out only a polynomial number of subsets from ; those sets corresponding to functions in with and pick out even fewer subsets from . Thus, the graphs of functions in have only polynomial discrimination. □

Proof of Theorem 8.

In order to invoke Theorem 6, we only need to check the uniqueness of and . The former is guaranteed by (iii) of Theorem 1 since (A1) holds true a.s. This is because any p columns of the or any of its h rows could be regarded as a sample from a continuous random vector with dimension n or h. The probability that these p points lie in a dimensional non-degenerate hyperplane (the normal vector is non-zero) is zero.

The latter is guaranteed by (iii) of Theorem 2 since is the square of a normal distribution with mean and hence, has a positive density and (18) becomes , hence, is invertible, where c is defined in Theorem 8. By Theorems 3 and 7, we can assume, w.l.o.g., that . Utilizing the independence between e and and Theorem 6, a straightforward calculation leads to the results. □
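The rank argument used above for the empirical uniqueness can be illustrated on a small design. The sketch below checks by brute force whether every set of h rows has full column rank, once for covariates drawn from a continuous distribution and once for a design with repeated rows. The function name, the sample sizes, and the reading of (A1) as a full-rank requirement on every h-row submatrix are assumptions made for illustration.

```python
import itertools
import numpy as np

def all_h_row_subsets_full_rank(X, h):
    """True if every h-row submatrix of X has rank equal to the number of columns."""
    n, p = X.shape
    return all(
        np.linalg.matrix_rank(X[list(rows)]) == p
        for rows in itertools.combinations(range(n), h)
    )

rng = np.random.default_rng(2)
n, p, h = 8, 2, 5

# Intercept plus a covariate drawn from a continuous (normal) distribution.
X_cont = np.column_stack([np.ones(n), rng.normal(size=n)])

# Degenerate design: the first five rows are identical, so one h-subset has rank 1.
X_tied = np.column_stack([np.ones(n), np.r_[np.zeros(5), rng.normal(size=3)]])

ok_cont = all_h_row_subsets_full_rank(X_cont, h)
ok_tied = all_h_row_subsets_full_rank(X_tied, h)
```

The brute-force enumeration is only practical for very small n, since the number of h-subsets grows combinatorially.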

References

1. Rousseeuw, P.J.; Leroy, A. Robust Regression and Outlier Detection; Wiley: New York, NY, USA, 1987.

2. Huber, P.J. Robust estimation of a location parameter. Ann. Math. Stat. 1964, 35, 73–101.

3. Rousseeuw, P.J. Least median of squares regression. J. Am. Stat. Assoc. 1984, 79, 871–880.

4. Rousseeuw, P.J.; Yohai, V.J. Robust regression by means of S-estimators. In Robust and Nonlinear Time Series Analysis; Lecture Notes in Statistics; Springer: New York, NY, USA, 1984; Volume 26, pp. 256–272.

5. Yohai, V.J. High breakdown-point and high efficiency estimates for regression. Ann. Stat. 1987, 15, 642–656.

6. Yohai, V.J.; Zamar, R.H. High breakdown estimates of regression by means of the minimization of an efficient scale. J. Am. Stat. Assoc. 1988, 83, 406–413.

7. Rousseeuw, P.J.; Hubert, M. Regression depth (with discussion). J. Am. Stat. Assoc. 1999, 94, 388–433.

8. Zuo, Y. On general notions of depth for regression. Stat. Sci. 2021, 36, 142–157.

9. Zuo, Y. Robustness of the deepest projection regression depth functional. Stat. Pap. 2021, 62, 1167–1193.

10. Rousseeuw, P.J.; Van Driessen, K. Computing LTS Regression for Large Data Sets. Data Min. Knowl. Discov. 2006, 12, 29–45.

11. Stromberg, A.J. Computation of High Breakdown Nonlinear Regression Parameters. J. Am. Stat. Assoc. 1993, 88, 237–244.

12. Hawkins, D.M. The feasible solution algorithm for least trimmed squares regression. Comput. Stat. Data Anal. 1994, 17, 185–196.

13. Hössjer, O. Exact computation of the least trimmed squares estimate in simple linear regression. Comput. Stat. Data Anal. 1995, 19, 265–282.

14. Rousseeuw, P.J.; Van Driessen, K. A fast algorithm for the minimum covariance determinant estimator. Technometrics 1999, 41, 212–223.

15. Hawkins, D.M.; Olive, D.J. Improved feasible solution algorithms for high breakdown estimation. Comput. Stat. Data Anal. 1999, 30, 1–11.

16. Agulló, J. New algorithms for computing the least trimmed squares regression estimator. Comput. Stat. Data Anal. 2001, 36, 425–439.

17. Hofmann, M.; Gatu, C.; Kontoghiorghes, E.J. An Exact Least Trimmed Squares Algorithm for a Range of Coverage Values. J. Comput. Graph. Stat. 2010, 19, 191–204.

18. Klouda, K. An Exact Polynomial Time Algorithm for Computing the Least Trimmed Squares Estimate. Comput. Stat. Data Anal. 2015, 84, 27–40.

19. Alfons, A.; Croux, C.; Gelper, S. Sparse least trimmed squares regression for analyzing high-dimensional large data sets. Ann. Appl. Stat. 2013, 7, 226–248.

20. Kurnaz, F.S.; Hoffmann, I.; Filzmoser, P. Robust and sparse estimation methods for high-dimensional linear and logistic regression. Chemom. Intell. Lab. Syst. 2018, 172, 211–222.

21. Mašíček, L. Optimality of the Least Weighted Squares Estimator. Kybernetika 2004, 40, 715–734.

22. Víšek, J.Á. The least trimmed squares. Part I: Consistency. Kybernetika 2006, 42, 1–36.

23. Víšek, J.Á. The least trimmed squares. Part II: √n-consistency. Kybernetika 2006, 42, 181–202.

24. Víšek, J.Á. The least trimmed squares. Part III: Asymptotic normality. Kybernetika 2006, 42, 203–224.

25. Chen, Y.; Stromberg, A.; Zhou, M. The Least Trimmed Squares Estimate in Nonlinear Regression; Technical Report, 1997/365; Department of Statistics, University of Kentucky: Lexington, KY, USA, 1997.

26. Čížek, P. Asymptotics of Least Trimmed Squares Regression; CentER Discussion Paper 2004-72; Tilburg University: Tilburg, The Netherlands, 2004.

27. Čížek, P. Least Trimmed Squares in nonlinear regression under dependence. J. Stat. Plan. Inference 2005, 136, 3967–3988.

28. Zuo, Y.; Zuo, H. Least sum of squares of trimmed residuals regression. Electron. J. Stat. 2023, 17, 2447–2484.

29. Hampel, F.R.; Ronchetti, E.M.; Rousseeuw, P.J.; Stahel, W.A. Robust Statistics: The Approach Based on Influence Functions; John Wiley & Sons: New York, NY, USA, 1986.

30. Tableman, M. The influence functions for the least trimmed squares and the least trimmed absolute deviations estimators. Stat. Probab. Lett. 1994, 19, 329–337.

31. Öllerer, V.; Croux, C.; Alfons, A. The influence function of penalized regression estimators. Statistics 2015, 49, 741–765.

32. Pollard, D. Convergence of Stochastic Processes; Springer: Berlin, Germany, 1984.

33. Bednarski, T.; Clarke, B.R. Trimmed likelihood estimation of location and scale of the normal distribution. Aust. J. Stat. 1993, 35, 141–153.

34. Butler, R.W. Nonparametric interval point prediction using data trimmed by a Grubbs type outlier rule. Ann. Stat. 1982, 10, 197–204.

35. Hössjer, O. Rank-Based Estimates in the Linear Model with High Breakdown Point. J. Am. Stat. Assoc. 1994, 89, 149–158.

36. Zuo, Y. A new approach for the computation of halfspace depth in high dimensions. Commun. Stat. Simul. Comput. 2018, 48, 900–921.

37. Zuo, Y. Projection-based depth functions and associated medians. Ann. Stat. 2003, 31, 1460–1490.

38. Shao, W.; Zuo, Y.; Luo, J. Employing the MCMC Technique to Compute the Projection Depth in High Dimensions. J. Comput. Appl. Math. 2022, 411, 114278.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).