Abstract

The conditioning theory of the generalized inverse is considered in this article. First, we introduce three kinds of condition numbers for this generalized inverse, i.e., normwise, mixed and componentwise ones, and present their explicit expressions. Then, using an intermediate result, namely the derivative of the generalized inverse, we recover the explicit condition number expressions for the solution of the equality constrained indefinite least squares problem. Furthermore, using the augmented system, we carry out a componentwise perturbation analysis of the solution and residual of the equality constrained indefinite least squares problem. To estimate these condition numbers with high reliability, we use the probabilistic spectral norm estimator to devise the first algorithm and the small-sample statistical condition estimation method for the other two algorithms. Finally, numerical examples illustrate the obtained results.

Keywords:

generalized inverse

MSC:

65F20; 65F35; 65F30; 15A12; 15A60

1. Introduction

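Throughout this paper, $\mathbb{R}^{m\times n}$ denotes the set of real $m \times n$ matrices. For a matrix $A \in \mathbb{R}^{m\times n}$, $A^T$ is the transpose of A, $\mathrm{rank}(A)$ denotes the rank of A, $\|A\|_2$ is the spectral norm of A, and $\|A\|_F$ is the Frobenius norm of A. For a vector a, $\|a\|_\infty$ is its ∞-norm, and $\|a\|_2$ the 2-norm. The notation $|A|$ is a matrix whose components are the absolute values of the corresponding components of A. For any matrix A, the following four equations uniquely define the Moore–Penrose inverse $A^{\dagger}$ of A [1]:

$$A A^{\dagger} A = A, \quad A^{\dagger} A A^{\dagger} = A^{\dagger}, \quad (A A^{\dagger})^T = A A^{\dagger}, \quad (A^{\dagger} A)^T = A^{\dagger} A.$$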
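As a small illustration, the four Penrose conditions (A X A = A, X A X = X, and symmetry of both A X and X A) can be checked directly for a candidate X. The sketch below is not part of the paper: the helper names `matmul`, `transpose` and `satisfies_penrose` are hypothetical, and the example uses a column vector a, whose Moore–Penrose inverse is known to be a^T / (a^T a). Exact rational arithmetic avoids rounding issues.

```python
from fractions import Fraction

def matmul(X, Y):
    """Plain matrix product of two list-of-lists matrices."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def transpose(X):
    return [list(col) for col in zip(*X)]

def satisfies_penrose(A, X):
    """Return True if X satisfies all four Penrose conditions for A."""
    AX, XA = matmul(A, X), matmul(X, A)
    return (matmul(AX, A) == A          # A X A = A
            and matmul(XA, X) == X      # X A X = X
            and transpose(AX) == AX     # (A X)^T = A X
            and transpose(XA) == XA)    # (X A)^T = X A

# Illustrative data: a 3 x 1 column vector a with a^T a = 9.
A = [[Fraction(1)], [Fraction(2)], [Fraction(2)]]
scale = matmul(transpose(A), A)[0][0]
A_pinv = [[entry / scale for entry in transpose(A)[0]]]  # a^T / (a^T a)
```

Here `satisfies_penrose(A, A_pinv)` holds, whereas a left inverse such as `[[1, 0, 0]]` fails the symmetry condition on A X, which is why all four equations are needed for uniqueness.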

The generalized inverse is defined by

where , denotes weight matrix and is the orthogonal projection onto the null space of C and may not have full rank and J is a signature matrix defined by

The generalized inverse originated from the equality constrained indefinite least squares problem (EILS), which is stated as follows [2,3,4,5]:

where and . The EILS problem has a unique solution:

under the following condition:

The above condition implies

then (5) ensures the existence and uniqueness of the generalized inverse (see [2,6]). The generalized inverse has significant applications in the study of EILS algorithms, the analysis of large-scale structures, error analysis, perturbation theory, and the solution of the EILS problem [2,3,4,5,7,8,9,10]. The EILS problem was first studied by Bojanczyk et al. [5]. We now review some related work on the perturbation analysis of this problem. The perturbation theory of the EILS problem was discussed by Wang [11] and extended by Shi and Liu [8] based on the hyperbolic MGS elimination method. Diao and Zhou [12] derived a linearized estimate of the backward error of this problem. Later, Li et al. [13] investigated the componentwise condition numbers for the EILS problem. Recently, Wang and Meng [14] studied the condition numbers and normwise perturbation analysis of the EILS problem.

Componentwise perturbation analysis has received significant attention in recent years; see [15,16,17,18,19]. It is worth studying because, if the perturbation in the input data is measured componentwise rather than by norm, the sensitivity of a function can be measured more accurately [15], which in turn can improve the accuracy and effectiveness of the computed EILS solution. Componentwise perturbation analyses have been carried out for, among others, the least squares problem [16] and the weighted least squares problem [17]. In this article, we continue this line of research for the EILS problem. With the intermediate result, we can also recover the componentwise perturbation bounds of the indefinite least squares problem.

The generalized inverse reduces to the K-weighted pseudoinverse in a special case where K has full row rank (see Remark 1). This pseudoinverse was extended to the MK-weighted pseudoinverse by Wei and Zhang [6], who described its structure and uniqueness. An algorithm for the weighted pseudoinverse was developed by Eldén [20]. Wei [21] investigated its expression based on the GSVD, and a perturbation equation for it was given by Gulliksson et al. [22]. The condition numbers for the K-weighted pseudoinverse and their statistical estimation were recently provided by Samar et al. [23].

The condition number is a well-known research topic in numerical analysis; it measures the worst-case sensitivity of the output of a problem to small perturbations in its input data (see [24,25,26] and references therein). The normwise condition number [25] has the disadvantage of disregarding the scaling structure of both input and output data. To address this issue, mixed and componentwise condition numbers were introduced [26]. Mixed condition numbers employ componentwise error analysis for input data and normwise error analysis for output data, whereas componentwise condition numbers employ componentwise error analysis for both input and output data. In fact, due to rounding errors and data storage difficulties, it is more practical to measure input errors componentwise rather than normwise. However, the condition numbers of the generalized inverse have not been discussed until now. Motivated by this, and by their importance in EILS research, we present explicit expressions of the normwise, mixed and componentwise condition numbers for the generalized inverse, as well as their statistical estimation.

The rest of this manuscript is organized as follows. Section 2 provides some preliminaries that will be helpful for the upcoming discussions. Section 3 presents the condition numbers of the generalized inverse and, with the intermediate result, i.e., its derivative, recovers the explicit expressions of the condition numbers for the solution of the EILS problem. Section 4 presents the componentwise perturbation analysis for the EILS problem. In Section 5, we propose the first two algorithms for the normwise condition number by using the probabilistic spectral norm estimator [27] and the small-sample statistical condition estimation method [28], and we construct the third algorithm for the mixed and componentwise condition numbers by using the small-sample statistical condition estimation method [28]. Section 6 examines the efficiency of these algorithms through numerical experiments.

2. Preliminaries

In this section, we introduce some definitions and important results that will be used in the upcoming sections.

Firstly, we define the entrywise division between two vectors and by with

Following [1,26,29], the componentwise distance between v and w is defined by

Note that when , gives the relative distance from v to w with respect to w, while the absolute distance for . We describe the distance between the matrices as follows:

In order to define the mixed and componentwise condition numbers, we also need to define the set and for given

Definition 1

([29]). Let be a continuous mapping defined on an open set , and such that .

- (i) The normwise condition number of ℵ at v is given by

- (ii) The mixed condition number of ℵ at v is given by

- (iii) The componentwise condition number of ℵ at v is given by

When the map ℵ in Definition 1 is Fréchet differentiable, the following lemma given in [29] makes the computation of condition numbers easier.

Lemma 1

([29]). Under the assumptions of Definition 1, and supposing ℵ is differentiable at v, we have

where stands for the Fréchet derivative of ℵ at v.
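To make the role of the Fréchet derivative concrete, the following sketch evaluates the three condition numbers from a Jacobian. It assumes the closed forms usually stated for a differentiable map in the cited literature [26,29]: normwise ‖dℵ(v)‖₂‖v‖₂/‖ℵ(v)‖₂, mixed ‖|dℵ(v)||v|‖∞/‖ℵ(v)‖∞, and componentwise ‖|dℵ(v)||v|/|ℵ(v)|‖∞. The toy map ℵ(v) = (v₁v₂, v₁ + v₂), the function names, and the power-iteration norm routine are illustrative assumptions, not taken from the paper.

```python
import math

def matvec(M, x):
    return [sum(m * t for m, t in zip(row, x)) for row in M]

def norm2(x):
    return math.sqrt(sum(t * t for t in x))

def spectral_norm(M, iters=200):
    """Largest singular value of M via power iteration on M^T M."""
    Mt = [list(col) for col in zip(*M)]
    x = [1.0] * len(M[0])
    for _ in range(iters):
        y = matvec(Mt, matvec(M, x))
        ny = norm2(y)
        if ny == 0.0:
            return 0.0
        x = [t / ny for t in y]
    return norm2(matvec(M, x))

def condition_numbers(jac, v, fv):
    """Normwise, mixed and componentwise condition numbers from a Jacobian.

    Assumes every component of fv is nonzero (needed for the componentwise one).
    """
    abs_jac = [[abs(t) for t in row] for row in jac]
    weighted = matvec(abs_jac, [abs(t) for t in v])       # |J| |v|
    normwise = spectral_norm(jac) * norm2(v) / norm2(fv)
    mixed = max(weighted) / max(abs(t) for t in fv)
    componentwise = max(w / abs(f) for w, f in zip(weighted, fv))
    return normwise, mixed, componentwise

# Toy map f(v) = (v1 * v2, v1 + v2) at v = (3, 4); its Jacobian is [[v2, v1], [1, 1]].
v = [3.0, 4.0]
fv = [v[0] * v[1], v[0] + v[1]]
jac = [[v[1], v[0]], [1.0, 1.0]]
```

Calling `condition_numbers(jac, v, fv)` returns the three numbers for this toy map. For the generalized inverse, the Jacobian would be the matrix representation of the Fréchet derivative obtained in Section 3.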

To obtain the explicit expressions of the above condition numbers, we need some properties of the Kronecker product [30] between X and Y:

where the matrix Z has a suitable dimension, and is the vec-permutation matrix, which depends only on the dimensions m and n.
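The Kronecker-product properties can be checked numerically on small matrices. The sketch below assumes that the properties meant here include the two standard identities vec(XYZ) = (Zᵀ ⊗ X) vec(Y) and Π vec(X) = vec(Xᵀ), with vec stacking columns. The function names (`vec`, `kron`, `vec_permutation`) and the test matrices are hypothetical.

```python
def transpose(X):
    return [list(col) for col in zip(*X)]

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def matvec(M, x):
    return [sum(m * t for m, t in zip(row, x)) for row in M]

def vec(X):
    """Stack the columns of X into one long vector."""
    return [X[i][j] for j in range(len(X[0])) for i in range(len(X))]

def kron(A, B):
    """Kronecker product: block (i, j) of the result equals A[i][j] * B."""
    return [[a * b for a in row_a for b in row_b] for row_a in A for row_b in B]

def vec_permutation(m, n):
    """Permutation matrix P with P vec(X) = vec(X^T) for every m x n matrix X."""
    P = [[0] * (m * n) for _ in range(m * n)]
    for i in range(m):
        for j in range(n):
            # X[i][j] sits at position j*m + i in vec(X) and i*n + j in vec(X^T)
            P[i * n + j][j * m + i] = 1
    return P

X = [[1, 2], [3, 4]]            # 2 x 2
Y = [[0, 1, 2], [3, 4, 5]]      # 2 x 3
Z = [[1, 0], [2, 1], [0, 3]]    # 3 x 2

lhs = vec(matmul(matmul(X, Y), Z))
rhs = matvec(kron(transpose(Z), X), vec(Y))
```

The permutation depends only on the dimensions m and n, as the text notes, so `vec_permutation(2, 3)` can be reused for any 2 × 3 matrix.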

Now, we present the following two lemmas, which will be helpful for obtaining condition numbers and their upper bounds.

Lemma 2

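([31], p. 174, Theorem 5). Let S be an open subset of $\mathbb{R}^{n\times q}$, and let $F: S \to \mathbb{R}^{m\times p}$ be a matrix function defined and $k \geq 1$ times (continuously) differentiable on S. If $\mathrm{rank}(F(X))$ is constant on S, then $F^{\dagger}: S \to \mathbb{R}^{p\times m}$ is k times (continuously) differentiable on S, and

$$\mathrm{d}F^{\dagger} = -F^{\dagger}(\mathrm{d}F)F^{\dagger} + F^{\dagger}F^{\dagger T}(\mathrm{d}F)^{T}(I_m - FF^{\dagger}) + (I_p - F^{\dagger}F)(\mathrm{d}F)^{T}F^{\dagger T}F^{\dagger}.$$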

Lemma 3

([1]). For any matrices and with dimensions making the following well defined

we have

and

3. Condition Numbers

First, we define a mapping by

Here, , , and for matrix , and

Then, using Definition 1, we present the definitions of the normwise, mixed, and componentwise condition numbers for generalized inverse as given in [32]:

With the help of the vec operator and the Frobenius, spectral, and max norms, we can rewrite the definitions of normwise, mixed and componentwise condition numbers as follows:

In the following, we derive the expression of the Fréchet derivative of ϕ at u.

Lemma 4.

Let the mapping ϕ be continuous. Then, the Fréchet differential at u is:

where

Proof.

Differentiating both sides of (2), we obtain

From ([3], Theorem 2.2), we obtain

Thus, substituting (20) into (19) and differentiating both sides of the equation, we can deduce

Further, using (9), we have

Noting (20), (2), and , the previous equation may be expressed as

Further, by the fact , (21), and

the above equation may be simplified as follows:

Considering , we obtain

Substituting this fact into (23) implies

We can rewrite the above equation by using (2) and (20) as

By applying “vec” operator on (25), and using (6) and (7), we obtain

That is,

Thus, we have obtained the required result by using the definition of Fréchet derivative. □

Remark 1.

Setting , , and C as full row rank, we have and

where the latter is just the result of ([23], Lemma 3.1), with which we can recover the condition numbers for K-weighted pseudoinverse [23].

As a direct consequence of Lemmas 1 and 4, we obtain the following condition numbers for the generalized inverse.

Next, we provide upper bounds that are cheaper to compute than the condition numbers above. The quality of these upper bounds is examined by numerical experiments in Section 6.

Corollary 1.

The upper bounds of normwise, mixed and componentwise condition numbers for are

Proof.

For any two matrices X and Y, it is well-known that . With the help of Theorem 1, and (8), we obtain

Secondly, by using Lemma 3 and Theorem 1, we obtain

and finally, we have

□

Remark 2.

Using the GHQR factorization [3] on A and C in (2) and (5):

where and and a -orthogonal matrix, (i.e., ), and are lower triangular and non-singular. We have

where , ; and are, respectively, the submatrices of U and H obtained by taking the first r columns. Putting all the above terms into (18) leads to

Remark 3.

We can obtain using the expression, where (4) is the solution of EILS problem (3). By differentiating (4), we obtain

Thus, using (20), we obtain

Substituting (25) into above equation and using (9), we have

which together with (20)–(22) give

Noting (24), the above equation can be rewritten as

Further, by (20) and (4), we have

where , . By utilizing operator “vec” on (30), and using (6) and (7), we obtain

From the above result, we can recover the condition numbers of the EILS problem provided in [3,13,14]. Further, we observe that . Applying the same procedure, we can determine and condition numbers for residuals of EILS.

4. Componentwise Perturbation Analysis

In this section, we derive a componentwise perturbation analysis of the augmented system for the EILS problem.

Let the perturbations , , and satisfy , and for a small and . Suppose that the perturbed augmented system is

Denoting

and the perturbations

When A is of full column rank and C has full row rank, S is invertible. It can be verified that

If the spectral radius

then is invertible. Clearly, the condition

implies (31). The following results [24] are important for Theorem 2.

Lemma 5.

The perturbed system of a linear system is defined as follows:

where is the solution to the perturbed system, when the perturbations and are sufficiently small such that is invertible, the perturbation in the solution v satisfies

which implies

Furthermore, when the spectral radius , we have

Now, we have the following bounds for the perturbations in the equality constrained indefinite least squares solution and residual.

Theorem 2.

Under the above assumption, for any satisfying the condition (32), when the componentwise perturbations , and , the error in the solution is bounded by

and error in the residual is bounded by

Proof.

Furthermore, we can obtain the componentwise perturbation bounds of the indefinite least squares solution and its residual.
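The flavour of such componentwise bounds can be illustrated on a small linear system. The sketch below uses the standard bound for a perturbed system (S + ΔS)(v + Δv) = b + Δb: if the spectral radius of |S⁻¹||ΔS| is below 1, then |Δv| ≤ (I − |S⁻¹||ΔS|)⁻¹ |S⁻¹| (|ΔS||v| + |Δb|) componentwise. It is offered as an assumption-laden illustration in the spirit of Lemma 5, not a reproduction of its exact statement. The 2 × 2 data and all function names are hypothetical.

```python
from fractions import Fraction as Fr

def inv2(M):
    """Inverse of a 2 x 2 matrix with Fraction entries."""
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def matvec(M, x):
    return [sum(m * t for m, t in zip(row, x)) for row in M]

def absm(M):
    return [[abs(t) for t in row] for row in M]

def componentwise_bound(S, b, dS, db):
    """Bound on |dv| from (I - |S^-1||dS|)^-1 |S^-1| (|dS||v| + |db|)."""
    S_inv_abs = absm(inv2(S))
    v = matvec(inv2(S), b)
    G = matmul(S_inv_abs, absm(dS))                  # |S^-1| |dS|
    # An infinity norm below 1 is a sufficient check for spectral radius < 1.
    assert max(sum(row) for row in G) < 1
    data_term = [s + abs(t) for s, t in
                 zip(matvec(absm(dS), [abs(t) for t in v]), db)]
    rhs = matvec(S_inv_abs, data_term)
    I_minus_G = [[(1 if i == j else 0) - G[i][j] for j in range(2)]
                 for i in range(2)]
    return matvec(inv2(I_minus_G), rhs)

def actual_error(S, b, dS, db):
    """Exact |v_perturbed - v|, computed in rational arithmetic."""
    v = matvec(inv2(S), b)
    S_pert = [[S[i][j] + dS[i][j] for j in range(2)] for i in range(2)]
    v_pert = matvec(inv2(S_pert), [x + y for x, y in zip(b, db)])
    return [abs(p - q) for p, q in zip(v_pert, v)]

S = [[Fr(4), Fr(1)], [Fr(2), Fr(3)]]
b = [Fr(1), Fr(2)]
dS = [[Fr(1, 100), Fr(-1, 100)], [Fr(0), Fr(1, 100)]]
db = [Fr(1, 100), Fr(-1, 100)]
bound = componentwise_bound(S, b, dS, db)
err = actual_error(S, b, dS, db)
```

Each entry of `err` stays below the matching entry of `bound`. In the EILS setting the role of S is played by the augmented matrix, whose solution vector contains both the solution and the residual.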

Remark 4.

Assume that C is a zero matrix, , and . Using the above notations, for any , if the componentwise perturbations satisfy and , then the error in the solution is bounded by

and the error in the residual is bounded by

5. Statistical Condition Estimates

This section proposes three algorithms for estimating the normwise, mixed and componentwise condition numbers for the generalized inverse. Algorithm 1 is based on the probabilistic condition estimator [27], which has been used to estimate the normwise condition number for the K-weighted pseudoinverse [23], the ILS problem [33], the constrained and weighted least squares problem [34], and the Tikhonov regularization of the total least squares problem [35]. Based on the small-sample statistical condition estimation (SSCE) method [28], we develop Algorithm 2 to estimate the normwise condition number; for details, see [23,33,36,37,38].

Algorithm 1: Probabilistic condition estimator for the normwise condition number

Algorithm 2: Small-sample statistical condition estimation method for the normwise condition number

To estimate the mixed and componentwise condition numbers, we need the following SSCE method, which is from [28] and has been applied to many problems (see, e.g., [23,32,33,34,35]).
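The core idea of small-sample statistical condition estimation [28] can be sketched as follows. For a differentiable scalar function with gradient g in Rⁿ, one draws k orthonormal random directions z₁, …, z_k, evaluates the directional derivatives gᵀzᵢ, and scales their root-sum-of-squares by ω_k/ω_n, where ω_n is the Wallis factor, commonly approximated by √(2/(π(n − 1/2))). The code below is a minimal sketch under these assumptions. The function names are hypothetical, and the directional derivative is supplied as a callable rather than computed from the generalized inverse.

```python
import math
import random

def wallis_factor(n):
    """Common approximation omega_n ~ sqrt(2 / (pi * (n - 1/2)))."""
    return math.sqrt(2.0 / (math.pi * (n - 0.5)))

def orthonormal_samples(n, k, rng):
    """Draw k Gaussian vectors in R^n and orthonormalize them (modified Gram-Schmidt)."""
    basis = []
    while len(basis) < k:
        z = [rng.gauss(0.0, 1.0) for _ in range(n)]
        for q in basis:
            proj = sum(a * b for a, b in zip(q, z))
            z = [a - proj * b for a, b in zip(z, q)]
        nz = math.sqrt(sum(a * a for a in z))
        if nz > 1e-12:                      # skip an (unlikely) degenerate draw
            basis.append([a / nz for a in z])
    return basis

def ssce_norm_estimate(directional_derivative, n, k, seed=0):
    """Estimate the gradient norm from k <= n orthonormal random directions."""
    rng = random.Random(seed)
    samples = orthonormal_samples(n, k, rng)
    total = sum(directional_derivative(z) ** 2 for z in samples)
    return wallis_factor(k) / wallis_factor(n) * math.sqrt(total)

# Illustrative gradient with Euclidean norm 13.
g = [3.0, -4.0, 12.0, 0.0, 0.0]

def directional(z):
    return sum(a * b for a, b in zip(g, z))

estimate_full = ssce_norm_estimate(directional, n=5, k=5)   # uses a full orthonormal basis
estimate_small = ssce_norm_estimate(directional, n=5, k=2)  # small-sample estimate
```

With k = n the directions form an orthonormal basis and the scaling factor equals 1, so the estimate reproduces the norm up to rounding. With small k the result is only a statistical estimate, which is the regime used in practice.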

6. Numerical Experiments

In this section, we present two examples. The first compares the normwise, mixed and componentwise condition numbers with their upper bounds. The second demonstrates the efficiency of the statistical condition estimators.

Example 1.

In this example, we first compute the condition numbers and their upper bounds for the matrix pair given below, and then we examine the reliability of Algorithms 1–3. All numerical experiments were performed in MATLAB R2018a. We test 200 matrix pairs generated by repeatedly applying the construction from [33] given below.

where and are unit random vectors obtained from the MATLAB function randn( and . It is simple to determine that the condition number of A, i.e., , is . , where is a nonsymmetric Gaussian random Toeplitz matrix generated by the MATLAB function with , . Table 1 reports the numerical results for the ratios given by

Table 1.

Comparison of condition numbers and their upper bounds by choosing different values of and n.

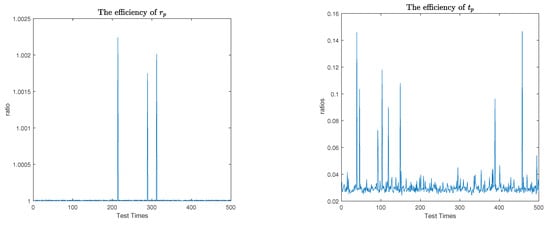

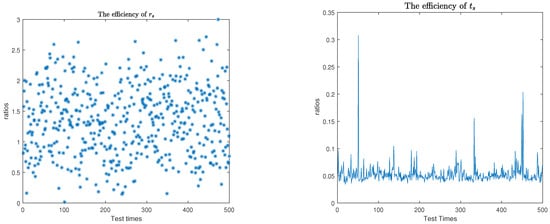

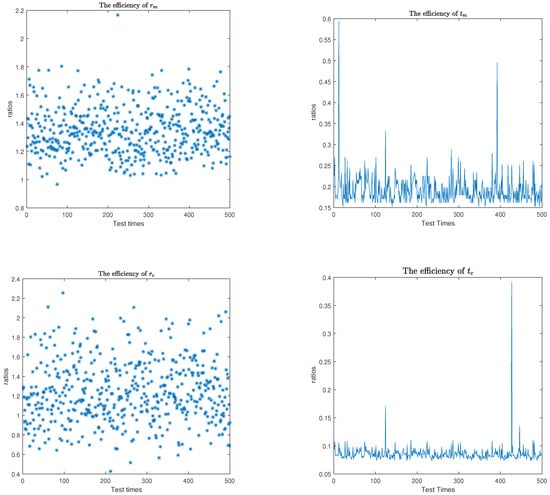

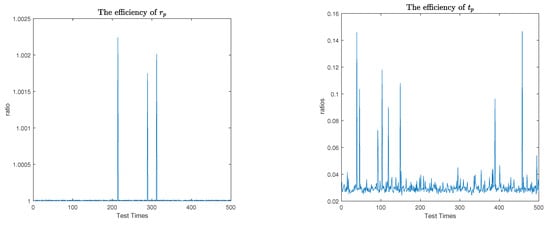

To show the efficiency of the three algorithms discussed above, we run some numerical tests and choose parameters and for Algorithm 1 and for Algorithms 2 and 3. The ratios between the exact condition numbers and their estimated values are determined as follows:

where is the ratio between the exact normwise condition number and the estimated value of Algorithm 1, is the ratio between the exact normwise condition number and the estimated value of Algorithm 2, and and are the ratios between the exact mixed and componentwise condition numbers and estimated values of Algorithm 3.

The results in Table 2 demonstrate that Algorithms 1–3 can reliably estimate the condition numbers in most situations, supporting the statement in ([39], Chapter 15) that an estimate of the condition number that is correct to within a factor 10 is generally appropriate because it is the magnitude of an error bound that is of interest, not its precise value. For the normwise condition number, Algorithm 1 works more effectively and stably.

Table 2.

Results by choosing different values of and n for Algorithms 1–3.

Algorithm 3: Small-sample statistical condition estimation method for the mixed and componentwise condition numbers

Example 2.

Following the pattern of the constructions in [2,3,5], we generate the matrices A and C using the GHQR factorization.

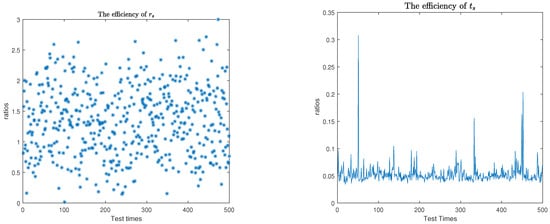

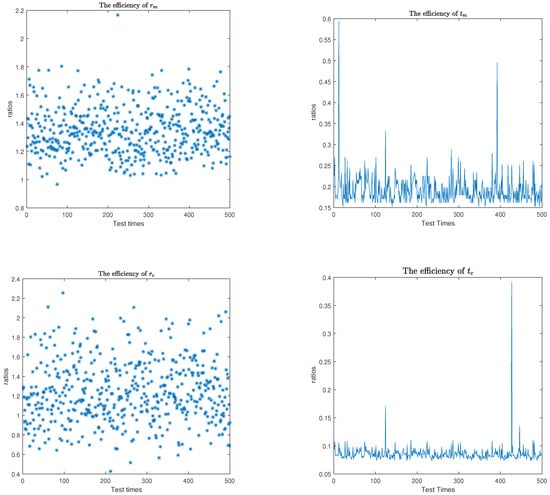

where is J-orthogonal, i.e., , Q is orthogonal, and and are lower triangular and non-singular. In our experiment, we let be random matrices. H is a random J-orthogonal matrix with a specified condition number generated using the method described in [40]. and are generated randomly (by MATLAB's gallery('qmult', …)), , and are generated by QR factorization of random matrices with specified condition numbers and pre-assigned singular value distributions (generated via MATLAB's gallery('randsvd', …)). To examine the performance of the above algorithms, we use 500 matrix pairs, vary the condition numbers of A and C, and set , , , and . The ratios between the exact condition numbers and their estimated values are given below.

where the parameters δ, ϵ, k, and ratios , , and are the same as given in Example 1. We present these numerical results and CPU time in Figure 1 and Figure 2. The time ratios are defined by

where t is the CPU time of computing the generalized inverse by GHQR decomposition [20]. is the CPU time of Algorithm 1, is the CPU time of Algorithm 2, and and are the CPU times of Algorithm 3. From Figure 1 and Figure 2, we can see that these three algorithms are highly efficient in estimating condition numbers. However, Table 3 shows that the CPU times of Algorithms 1 and 2 are smaller than that of Algorithm 3.

Figure 1.

Efficiency of normwise condition estimators and CPU times of Algorithms 1 and 2.

Figure 2.

Efficiency of mixed and componentwise condition estimators and CPU time of Algorithm 3.

Table 3.

CPU times for Algorithms 1–3 by choosing different values of and n.

7. Conclusions

In this paper, we provided explicit expressions and upper bounds for the normwise, mixed, and componentwise condition numbers for the generalized inverse. The corresponding results for the K-weighted pseudoinverse can be obtained as a special case. We also showed how to recover the previous condition numbers of the EILS solution from the generalized inverse condition numbers, and we developed a componentwise perturbation analysis of the EILS problem. Moreover, we designed three algorithms that efficiently estimate the normwise, mixed, and componentwise condition numbers for the generalized inverse using the probabilistic condition estimation method and the small-sample statistical condition estimation method. Finally, numerical results demonstrated the performance of these algorithms. In the future, we will continue our research on the MK-weighted generalized inverse.

Author Contributions

Methodology, M.S.; Investigation, M.S. and X.Z.; writing—original draft, M.S.; review and editing, A.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Zhejiang Normal University Postdoctoral Research Fund (Grant No. ZC304022938), the Natural Science Foundation of China (Project No. 61976196) and the Zhejiang Provincial Natural Science Foundation of China under Grant No. LZ22F030003.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Cucker, F.; Diao, H.; Wei, Y. On mixed and componentwise condition numbers for Moore–Penrose inverse and linear least squares problems. Math. Comput. 2007, 76, 947–963.

- Liu, Q.; Pan, B.; Wang, Q. The hyperbolic elimination method for solving the equality constrained indefinite least squares problem. Int. J. Comput. Math. 2010, 87, 2953–2966.

- Liu, Q.; Wang, M. Algebraic properties and perturbation results for the indefinite least squares problem with equality constraints. Int. J. Comput. Math. 2010, 87, 425–434.

- Bojanczyk, A.; Higham, N.J.; Patel, H. Solving the indefinite least squares problem by hyperbolic QR factorization. SIAM J. Matrix Anal. Appl. 2003, 24, 914–931.

- Bojanczyk, A.; Higham, N.J.; Patel, H. The equality constrained indefinite least squares problem: Theory and algorithms. BIT Numer. Math. 2003, 43, 505–517.

- Wei, M.; Zhang, B. Structures and uniqueness conditions of MK-weighted pseudoinverses. BIT Numer. Math. 1994, 34, 437–450.

- Björck, Å. Algorithms for indefinite linear least squares problems. Linear Algebra Appl. 2021, 623, 104–127.

- Shi, C.; Liu, Q. A hyperbolic MGS elimination method for solving the equality constrained indefinite least squares problem. Commun. Appl. Math. Comput. 2011, 25, 65–73.

- Mastronardi, N.; Dooren, P.V. An algorithm for solving the indefinite least squares problem with equality constraints. BIT Numer. Math. 2014, 54, 201–218.

- Mastronardi, N.; Dooren, P.V. A structurally backward stable algorithm for solving the indefinite least squares problem with equality constraints. IMA J. Numer. Anal. 2015, 35, 107–132.

- Wang, Q. Perturbation analysis for generalized indefinite least squares problems. J. East China Norm. Univ. Nat. Sci. Ed. 2009, 4, 47–53.

- Diao, H.; Zhou, T. Linearised estimate of the backward error for equality constrained indefinite least squares problems. East Asian J. Appl. Math. 2019, 9, 270–279.

- Li, H.; Wang, S.; Yang, H. On mixed and componentwise condition numbers for indefinite least squares problem. Linear Algebra Appl. 2014, 448, 104–129.

- Wang, S.; Meng, L. A contribution to the conditioning theory of the indefinite least squares problems. Appl. Numer. Math. 2022, 17, 137–159.

- Skeel, R.D. Scaling for numerical stability in Gaussian elimination. J. ACM 1979, 26, 494–526.

- Björck, Å. Component-wise perturbation analysis and error bounds for linear least squares solutions. BIT Numer. Math. 1991, 31, 238–244.

- Diao, H.; Liang, L.; Qiao, S. A condition analysis of the weighted linear least squares problem using dual norms. Linear Algebra Appl. 2018, 66, 1085–1103.

- Diao, H.; Zhou, T. Backward error and condition number analysis for the indefinite linear least squares problem. Int. J. Comput. Math. 2019, 96, 1603–1622.

- Diao, H. Condition numbers for a linear function of the solution of the linear least squares problem with equality constraints. J. Comput. Appl. Math. 2018, 344, 640–656.

- Eldén, L. A weighted pseudoinverse, generalized singular values, and constrained least squares problems. BIT Numer. Math. 1982, 22, 487–502.

- Wei, M. Algebraic properties of the rank-deficient equality-constrained and weighted least squares problem. Linear Algebra Appl. 1992, 161, 27–43.

- Gulliksson, M.E.; Wedin, P.A.; Wei, Y. Perturbation identities for regularized Tikhonov inverses and weighted pseudoinverses. BIT Numer. Math. 2000, 40, 513–523.

- Samar, M.; Li, H.; Wei, Y. Condition numbers for the K-weighted pseudoinverse and their statistical estimation. Linear Multilinear Algebra 2021, 69, 752–770.

- Bürgisser, P.; Cucker, F. Condition: The geometry of numerical algorithms. In Grundlehren der Mathematischen Wissenschaften; Springer: Heidelberg, Germany, 2013; Volume 349.

- Rice, J. A theory of condition. SIAM J. Numer. Anal. 1966, 3, 287–310.

- Gohberg, I.; Koltracht, I. Mixed, componentwise, and structured condition numbers. SIAM J. Matrix Anal. Appl. 1993, 14, 688–704.

- Hochstenbach, M.E. Probabilistic upper bounds for the matrix two-norm. J. Sci. Comput. 2013, 57, 464–476.

- Kenney, C.S.; Laub, A.J. Small-sample statistical condition estimates for general matrix functions. SIAM J. Sci. Comput. 1994, 15, 36–61.

- Xie, Z.; Li, W.; Jin, X. On condition numbers for the canonical generalized polar decomposition of real matrices. Electron. J. Linear Algebra 2013, 26, 842–857.

- Horn, R.A.; Johnson, C.R. Topics in Matrix Analysis; Cambridge University Press: New York, NY, USA, 1991.

- Magnus, J.R.; Neudecker, H. Matrix Differential Calculus with Applications in Statistics and Econometrics, 3rd ed.; John Wiley and Sons: Chichester, UK, 2007.

- Diao, H.; Xiang, H.; Wei, Y. Mixed, componentwise condition numbers and small sample statistical condition estimation of Sylvester equations. Numer. Linear Algebra Appl. 2012, 19, 639–654.

- Li, H.; Wang, S. On the partial condition numbers for the indefinite least squares problem. Appl. Numer. Math. 2018, 123, 200–220.

- Samar, M. Condition numbers for a linear function of the solution to the constrained and weighted least squares problem and their statistical estimation. Taiwan J. Math. 2021, 25, 717–741.

- Samar, M.; Lin, F. Perturbation and condition numbers for the Tikhonov regularization of total least squares problem and their statistical estimation. J. Comput. Appl. Math. 2022, 411, 114230.

- Diao, H.; Wei, Y.; Xie, P. Small sample statistical condition estimation for the total least squares problem. Numer. Algor. 2017, 75, 435–455.

- Samar, M.; Zhu, X. Structured conditioning theory for the total least squares problem with linear equality constraint and their estimation. AIMS Math. 2023, 8, 11350–11372.

- Baboulin, M.; Gratton, S.; Lacroix, R.; Laub, A.J. Statistical estimates for the conditioning of linear least squares problems. In Parallel Processing and Applied Mathematics: 10th International Conference, PPAM 2013, Warsaw, Poland, September 8–11, 2013, Revised Selected Papers, Part I 10; Springer: Berlin/Heidelberg, Germany, 2014; Lecture Notes in Computer Science; Volume 8384, pp. 124–133.

- Higham, N.J. Accuracy and Stability of Numerical Algorithms, 2nd ed.; SIAM: Philadelphia, PA, USA, 2002.

- Higham, N.J. J-Orthogonal matrices: Properties and generation. SIAM Rev. 2003, 45, 504–519.


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).