Abstract

A novel flexible extension of the Chen distribution is defined and studied in this paper. Relevant statistical properties of the novel model are derived. For the actuarial risk analysis and evaluation, the maximum likelihood, weighted least squares, ordinary least squares, Cramér–von Mises, moments, and Anderson–Darling methods are utilized. A comprehensive simulation study is presented using various combinations to evaluate the performance of the six methods in analyzing insurance risks. These six methods are also used in evaluating actuarial risks using insurance claims data. Two applications on bimodal data are presented to highlight the flexibility and relevance of the new distribution, and the new distribution is compared to several competing distributions. Finally, the ability of the models to disclose actuarial risks is compared through a comprehensive simulation study using a wide range of well-known models.

Keywords:

actuarial risks; asymmetric data set; left skewed claims; likelihood; bimodal data; value-at-risk; quantitative risks analysis

MSC:

60E05; 62H05; 62E10; 62F10; 62F15; 62P05

1. Introduction

There are several justifications for creating a risk measurement system; such a technique is often used to compare and measure risk. Considering this, we must make sure that any risk measuring system generates coherent findings or at the very least can spot situations where it might not be. The two independent random variables (RVs) employed in each property claim procedure are the claim-count RV and the claim-size RV. The aggregate-loss RV (ALRV), which is produced by combining the first two fundamental claim RVs, represents the total claim amount produced by the underlying claim process. Following Chen [1], a novel flexible extension of the Chen distribution called the generalized Rayleigh Chen (GRC) is defined and studied in this work.

The new risk-density function is used in risk exposure analysis, risk assessment, and risk analysis under some actuarial indicators. Actuaries employ a broad variety of parametric families of continuous distributions to model the size of property and casualty insurance claim amounts. An examination of the actuarial literature finds that insurance claims data are mostly right skewed (see Cooray and Ananda [2], Shrahili et al. [3] and Mohamed et al. [4]). This work analyzes and models some data sets, including a new actuarial data set of negatively skewed insurance claims. Additionally, the risk exposure is an actuarial estimation of the potential loss that could develop in the future because of a specific action or occurrence. As part of an assessment of the business’s risk exposure, risks are often ranked according to their likelihood of happening in the future multiplied by the potential loss if they did. By assessing the likelihood of expected losses in the future, insurance and reinsurance companies may distinguish between minor and major losses. Speculative risks frequently lead to losses, including breaking rules, losing brand value, having security flaws, and having liability problems. Generally, the risk exposure can be calculated from:

$$\text{risk exposure} = \text{total loss of the risk occurrence} \times \text{probability of the risk occurring}.$$

On the other hand, extensive research has been put into employing time series analysis, regression modelling, or continuous distributions to analyze historical insurance data. Actuaries have recently used continuous distributions, especially ones with long tails, to reflect actual insurance data (see, for example, Mohamed et al. [4] and Shrahili et al. [3]). Using continuous heavy-tailed probability distributions, real data have been modeled in a number of real-world applications, including engineering, risk management, reliability, and the actuarial sciences. Due to its monotonically decreasing density form, the Pareto model does not offer a good fit for many actuarial applications when the frequency distributions are hump-shaped. The lognormal is frequently used to model these data sets in these circumstances. However, neither the lognormal nor the Pareto can account for left-skewed payment data. To address this flaw in the conventional models, we provide the GRC distribution for the left-skewed actuarial claims.

Distributions based on probabilities can provide an accurate depiction of the risk exposure and have been recently used for this actuarial purpose (see Shrahili et al. [3] and Mohamed et al. [4]). The levels of exposure are functions frequently referred to as major risk indicators (RIs) (Klugman et al. [5]). Such RIs provide risk managers and actuaries with information on the level of risk that the firm is exposed to. There are a variety of RIs that can be taken into consideration and researched, including the value-at-risk (VAR) (see Wirch [6], Tasche [7], and Acerbi [8]), the tailed-value-at-risk (TVAR), also known as the conditional tail expectation (CTE), the conditional-VAR (CVAR), and the tail-variance (TV) (see Furman and Landsman [9] and Landsman [10]). We also study how the mean excess loss (MEL) function may be used to reduce actuarial and economic risks (see Wirch [6]).

The VAR quantifies the probability distribution of large losses in particular. Actuaries and risk managers usually concentrate on calculating the probability of a bad outcome, which the VAR indicator represents at a given probability/confidence level. The VAR indicator is also typically used to calculate the amount of capital required to withstand these potentially devastating circumstances.

The capacity of the insurance firm to handle such occurrences is of importance to actuaries, regulators, investors, and rating agencies. The GRC model, which is a novel model, takes into account certain RIs, including VAR, TMV, TVAR, and TV, for the left-skewed actuarial claims data (see Artzner [11]).

2. The New Model

Due to Chen [1], the cumulative distribution function (CDF) of the Chen (C) model is given by

where control the shape of the C distribution. For , the C model reduces to a one-parameter C model. The hazard rate function (HRF) of the C model has a U-shape (decreasing–constant–increasing) for all and is “monotonically increasing” when . The CDF of the generalized Rayleigh (GR-G) family (see Yousof et al. [12] and Cordeiro et al. [13]) is defined as

where controls the shape of the GR-G family of distributions, ; refers to the CDF of the selected baseline model with baseline parameter vector and refers to the survival function (SF) of the baseline model with baseline parameter vector . Inserting Equation (1) into Equation (2), the CDF of the GRC distribution can be expressed as

where , . The probability density function (PDF) corresponding to (3) can then be derived as

where and The following quantile function can be used for simulating the GRC model:

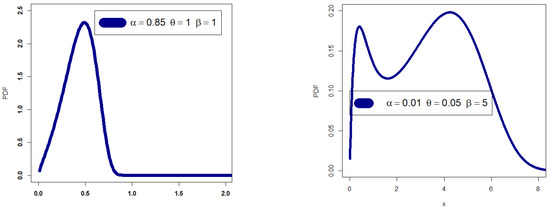

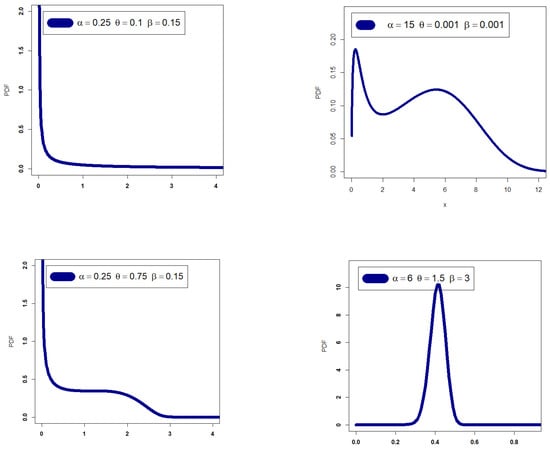

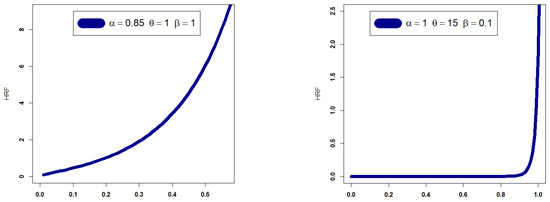

Figure 1 shows several PDF plots for the GRC model and illustrates the flexibility of the new density. Some HRF plots for the GRC model are shown in Figure 2.

Figure 1.

Some PDF plots.

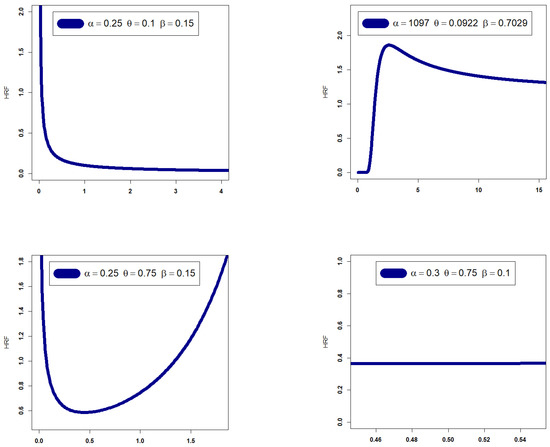

Figure 2.

Some HRF plots.

We are motivated to define and study the GRC for the following reasons:

- (1) The new PDF in (4) can be a “symmetric density”, “unimodal with right skewed shape”, “bimodal with right skewed shape”, and “left skewed density with no peaks” (see Figure 1).

- (2) The HRF of the GRC model can be “monotonically increasing”, “J-HRF”, “upside down”, “decreasing–constant–increasing (bathtub)”, “monotonically decreasing”, and “constant-HRF” (see Figure 2).

- (3) The GRC model may be selected as the optimum probabilistic model for reliability analysis, particularly when modelling right heavy-tailed bimodal asymmetric and left heavy-tailed bimodal asymmetric real data.
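As a minimal numerical sketch of the baseline model that the GRC extends, the two-parameter Chen distribution can be coded from its closed-form CDF, $F(x) = 1 - \exp\{\lambda(1 - e^{x^{\beta}})\}$ for $x > 0$. The symbols `lam` and `beta` follow Chen's original notation and are an assumption here, since they may not match the parameter names used in this paper; the function names are illustrative, and the GR-G transformation itself is not applied.

```python
import math
import random

def chen_cdf(x, lam, beta):
    """Chen CDF: F(x) = 1 - exp(lam * (1 - exp(x**beta))), x > 0."""
    return 1.0 - math.exp(lam * (1.0 - math.exp(x ** beta)))

def chen_pdf(x, lam, beta):
    """Chen PDF, the derivative of chen_cdf with respect to x."""
    e = math.exp(x ** beta)
    return lam * beta * x ** (beta - 1.0) * e * math.exp(lam * (1.0 - e))

def chen_hrf(x, lam, beta):
    """Chen hazard rate pdf / (1 - cdf), which simplifies to the form below."""
    return lam * beta * x ** (beta - 1.0) * math.exp(x ** beta)

def chen_quantile(u, lam, beta):
    """Inverse CDF for 0 < u < 1, obtained by solving F(x) = u for x."""
    return math.log(1.0 - math.log(1.0 - u) / lam) ** (1.0 / beta)

def chen_sample(n, lam, beta, seed=0):
    """Inverse-transform sampling; the uniform draws avoid the endpoints 0 and 1."""
    rng = random.Random(seed)
    return [chen_quantile(rng.uniform(1e-12, 1.0 - 1e-12), lam, beta)
            for _ in range(n)]

# Hazard shapes for two illustrative (hypothetical) parameter choices.
bathtub = [chen_hrf(x, 1.0, 0.5) for x in (0.1, 1.0, 9.0)]      # falls, then rises
increasing = [chen_hrf(x, 1.0, 1.5) for x in (0.5, 1.0, 2.0)]   # rises throughout
```

With a power parameter below one the hazard first falls and then rises, and with a power parameter above one it rises throughout, which matches the two baseline HRF shapes described for the C model.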

3. Properties

3.1. Linear Representation

Using various algebraic techniques, such as the power series, the binomial expansion, and the extended binomial expansion, we summarize and simplify the PDF and the CDF of the GRC model in this section. Simplifying the PDF and CDF allows us to obtain several of the GRC model’s mathematical and statistical features readily. The general goal of this section is to express the PDF and CDF of the GRC model in terms of the exponentiated Chen (Ex-C) model. After that, the relevant mathematical and statistical characteristics of the GRC model can be deduced directly from those of the exponentiated baseline model. Following Yousof et al. [12], the GRC density can then be expressed as

where

is the Ex-C pdf with power parameter , and

This formula can be derived directly from (6) as

where . Two theorems related to the Ex-C model are presented in Dey et al. [14]. These two theorems are used in this paper, especially in actuarial indicators calculations. Using Equation (6) and the theorems of Dey et al. [14], one can derive many related properties for the new model. Equation (6) is the main result of this subsection and without deriving it, we will face many difficulties in deriving the properties of the new model.

3.2. Moments

Based on Theorem 1 of Dey et al. [14], the ordinary moment of the GRC model can then be expressed as

3.3. The Conditional Moments

The conditional moments can be expressed as

3.4. Rényi Entropy

The Rényi entropy is a measure of entropy, a concept in information theory, which is widely used in various fields, including actuarial risk analysis. In actuarial risk analysis, entropy measures are used to quantify the uncertainty or randomness associated with a set of data or events. The Rényi entropy is defined as a generalization of the Shannon entropy and can be used to capture the distributional properties of a random variable more effectively in certain cases. For instance, the Rényi entropy provides a more robust measure of entropy for distributions that are heavy tailed, have high kurtosis, or have other non-standard distributional properties.

In actuarial risk analysis, the Rényi entropy is particularly useful in the modeling of extreme events, such as catastrophic losses. For example, it can be used to quantify the risk associated with natural disasters, pandemics, or other extreme events that may have a significant impact on the insurance industry. The Rényi entropy can also be used to evaluate the uncertainty associated with the future development of the insured population or the underlying economic conditions, which can impact the performance of the insurance business. Generally, the Rényi entropy is a useful tool in actuarial risk analysis as it provides a more comprehensive measure of uncertainty and can be used to evaluate various types of risks. The Rényi entropy of the RV is defined by

Using (4), we have

where

Then,
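As a numerical illustration, the standard Rényi entropy of order $\delta$ ($\delta > 0$, $\delta \neq 1$), $I_{\delta}(X) = (1-\delta)^{-1}\log\int f(x)^{\delta}\,dx$, can be approximated by simple quadrature. The sketch below is an assumption-laden stand-in: it uses the baseline Chen density in Chen's original notation (`lam`, `beta`) rather than the GRC density, the parameter values are hypothetical, and the integration range is truncated where the density is negligible.

```python
import math

def chen_pdf(x, lam, beta):
    """Baseline Chen density (stand-in for the GRC density, which is not coded here)."""
    e = math.exp(x ** beta)
    return lam * beta * x ** (beta - 1.0) * e * math.exp(lam * (1.0 - e))

def renyi_entropy(pdf, delta, lower, upper, steps=20000):
    """(1 / (1 - delta)) * log of the integral of pdf(x)**delta over [lower, upper].

    The midpoint rule is used so the integrand is never evaluated at the endpoints.
    """
    if delta <= 0 or delta == 1:
        raise ValueError("delta must be positive and different from 1")
    h = (upper - lower) / steps
    total = sum(pdf(lower + (i + 0.5) * h) ** delta for i in range(steps))
    return math.log(total * h) / (1.0 - delta)

# Sanity check on a density with a known answer: for the unit exponential
# and delta = 2 the integral is 1/2, so the entropy equals log(2).
exp_entropy = renyi_entropy(lambda x: math.exp(-x), 2.0, 0.0, 40.0)

# Hypothetical Chen parameters; the density is negligible beyond x = 5.
chen_entropy = renyi_entropy(lambda x: chen_pdf(x, 0.75, 1.25), 2.0, 0.0, 5.0)
```

The exponential check is included only to confirm that the quadrature reproduces a closed-form value before it is applied to a density without one.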

4. Actuarial Risk Indicators

4.1. VAR Indicator

Any insurance company will inevitably experience risk exposure. Actuaries developed a range of risk indicators as a result for statistically estimating risk exposure. VAR is an effort to calculate the most likely maximum amount of capital that might be lost over a predetermined amount of time. The maximum loss, which may be infinite or at least equal to the portfolio’s value, is not particularly instructive. Portfolios with the same maximum loss may differ greatly in their risk profiles. VAR therefore typically depends on the loss random variable’s probability distribution. The combined distribution of the risk variables impacting the portfolio determines how losses are distributed. There is a wealth of research on modelling risk factors and portfolio loss distributions that depend on the combined distribution of risk factors. Then, for GRC distributions, we can simply write

4.2. TVAR Risk Indicator

Let X denote a loss random variable (LRV). Then, the TVAR at a given confidence level is the expected loss given that the loss exceeds the VAR, that is, the corresponding quantile of the distribution of X. The TVAR can then be expressed as

Then, depending on Theorem 2 of Dey et al. [14], we have

where , and is the coefficient of in the expansion of and is the coefficient of in the expansion of . Thus, TVAR can be considered as the average of all VAR values above the confidence level . This means that the TVAR indicator provides us more information about the tail of the GRC distribution. Finally, TVAR can also be expressed as

where is the mean excess loss (MEL) function evaluated at the quantile.

4.3. TV Risk Indicator

Let X denote an LRV. The TV risk indicator can be expressed as

Then, depending on Theorem 2 of Dey et al. [14] and (15), we have

where , and TVAR is given in (13).

4.4. TMV Risk Indicator

Let X denote an LRV. The TMV risk indicator can then be expressed as

Then, for any LRV, , , for the and for the .
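The indicators of this section can be illustrated empirically from simulated losses. The sketch below uses the usual definitions: VAR as the q-quantile, TVAR as the mean loss beyond the VAR, TV as the variance of losses beyond the VAR, TMV as TVAR plus a weight times TV, and MEL as TVAR minus VAR. Several choices are assumptions made for illustration only: losses are drawn from the baseline Chen model (in Chen's notation, with hypothetical parameters) rather than from the GRC, the TMV weight `pi` is set to 0.5, and the indicators are estimated from the sample instead of the closed-form expressions.

```python
import math
import random

def chen_quantile(u, lam, beta):
    """Inverse of the Chen CDF F(x) = 1 - exp(lam * (1 - exp(x**beta)))."""
    return math.log(1.0 - math.log(1.0 - u) / lam) ** (1.0 / beta)

def risk_indicators(losses, q, pi=0.5):
    """Empirical VAR, TVAR, TV, TMV and MEL at confidence level q (0 < q < 1)."""
    xs = sorted(losses)
    n = len(xs)
    var_q = xs[min(math.ceil(q * n), n) - 1]        # empirical q-quantile
    tail = [x for x in xs if x > var_q]             # losses beyond the VAR
    if not tail:
        raise ValueError("no observations above the VAR; use a larger sample")
    tvar = sum(tail) / len(tail)                    # mean of the tail losses
    tv = sum((x - tvar) ** 2 for x in tail) / len(tail)   # variance of the tail losses
    return {"VAR": var_q, "TVAR": tvar, "TV": tv,
            "TMV": tvar + pi * tv, "MEL": tvar - var_q}

rng = random.Random(2023)
losses = [chen_quantile(rng.uniform(1e-12, 1.0 - 1e-12), 0.75, 1.25)
          for _ in range(100_000)]
levels = [0.60, 0.70, 0.90, 0.95, 0.99, 0.999]
table = {q: risk_indicators(losses, q) for q in levels}
for q in levels:
    print(q, {name: round(value, 4) for name, value in table[q].items()})
```

Because the TVAR averages only losses above the VAR, it is never smaller than the VAR at the same level, which is why it carries more information about the tail.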

5. Applications for Asymmetrical Bimodal Data

Two real data sets are examined in this section using the maximum likelihood estimation (MLE) approach to demonstrate the applicability of the GRC model. The criteria we consider when evaluating models are the Akaike Information Criterion (AIC), the Consistent Akaike Information Criterion (CAIC), the Bayesian Information Criterion (BIC), the Cramér–von Mises (CVM) statistic, and the Anderson–Darling (AD) statistic. The Kolmogorov–Smirnov (K.S) test and its associated p-value (p-v) are also reported. The first data set contains the relief times of 20 patients: 1.1, 1.3, 1.4, 1.7, 1.8, 1.6, 1.9, 2.2, 1.7, 4.1, 2.7, 1.8, 1.2, 1.4, 1.5, 3, 1.7, 1.6, 2, 2.3. The second data set, referred to as the minimum flow data, includes the following values: 43.86, 44.97, 46.27, 51.29, 61.19, 61.20, 67.80, 69.00, 71.84, 77.31, 85.39, 86.59, 86.66, 88.16, 96.03, 102.00, 108.29, 113.00, 115.14, 116.71, and 126.00. The competing Chen extensions are the GRC (the proposed model), Gamma-Chen (GC), Kumaraswamy Chen (KUMC), Beta-Chen (BC), Marshall–Olkin Chen (MOC), Transmuted Chen (TC), and the traditional two-parameter Chen. Table 1 presents the statistical tests comparing the competing models for the relief times data, and Table 2 lists the corresponding MLEs and standard errors (SEs). Table 3 presents the statistical tests comparing the competing models for the minimum flow data, and Table 4 lists the corresponding MLEs and SEs. The two real data sets are explored using box plots, quantile–quantile (Q–Q), total time in test (TTT), and nonparametric kernel density estimation (NKDE) plots.

Table 1.

Comparing results using the relief data set.

Table 2.

The MLEs and their corresponding SEs for the relief data set.

Table 3.

Comparing models under the flow data set.

Table 4.

The MLEs and SEs for the minimum flow data.

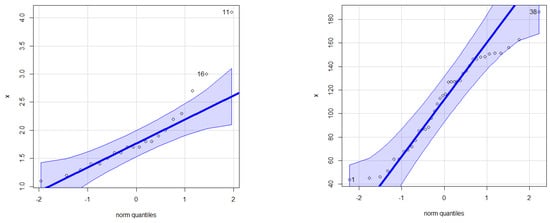

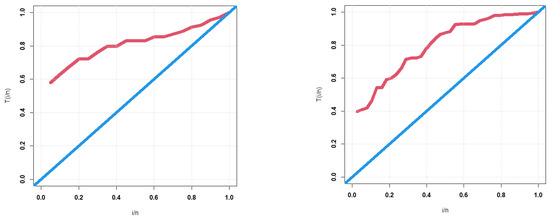

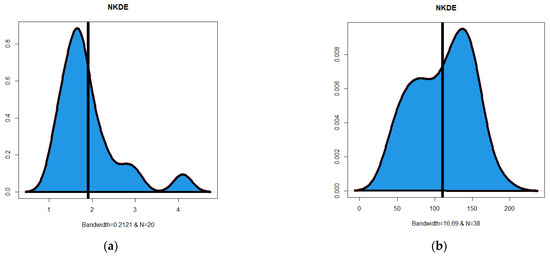

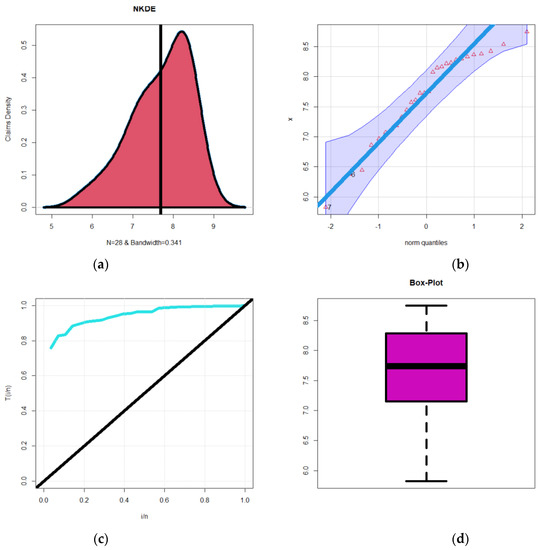

The Q–Q plots are shown in Figure 3, the TTT plots in Figure 4, and the NKDE plots in Figure 5. The Q–Q plots show that the first data set has some extreme values. The TTT plots show that the HRFs for the two data sets are “monotonically increasing”. The NKDE of the minimum flow data is an “asymmetric bimodal density” with a right tail (see Figure 5a), and the NKDE of the relief data is also an “asymmetric bimodal density” (see Figure 5b).
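The TTT diagnostic mentioned above can be reproduced with the usual scaled total-time-on-test transform, $T(r/n) = \left[\sum_{i \le r} x_{(i)} + (n-r)\,x_{(r)}\right] / \sum_{i \le n} x_{(i)}$. The sketch below applies it to the relief times data listed in this section; the function name is illustrative, and the reading of the curve (points above the diagonal suggest an increasing HRF) is the standard rule of thumb rather than a formal test.

```python
def scaled_ttt(data):
    """Return the points (r/n, T(r/n)) of the scaled TTT transform."""
    xs = sorted(data)
    n = len(xs)
    total = sum(xs)
    points = []
    running = 0.0
    for r, x in enumerate(xs, start=1):
        running += x                                  # sum of the r smallest values
        points.append((r / n, (running + (n - r) * x) / total))
    return points

# Relief times of 20 patients, as listed in the text.
relief = [1.1, 1.3, 1.4, 1.7, 1.8, 1.6, 1.9, 2.2, 1.7, 4.1,
          2.7, 1.8, 1.2, 1.4, 1.5, 3, 1.7, 1.6, 2, 2.3]

ttt = scaled_ttt(relief)
above_diagonal = all(t >= u - 1e-12 for u, t in ttt)   # curve on or above the diagonal
```

For these data every point lies on or above the diagonal, which agrees with the monotonically increasing HRF reported for the relief times.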

Figure 3.

Q–Q plots.

Figure 4.

TTT plots.

Figure 5.

(a) The NKDE plot for the relief times data with suitable bandwidth, and (b) the NKDE plot for the minimum flow data with suitable bandwidth.

Table 1 indicates that the GRC model yields the best outcomes for the relief times data: AIC = 37.505, BIC = 40.492, CVM = 0.402, AD = 0.2321, K.S = 0.1515, and p-value = 0.9524. The MLEs (SEs) for the relief times data are shown in Table 2. Table 3 indicates that the GRC model also yields the best outcomes for the minimum flow data: AIC = 389.436, BIC = 394.349, CVM = 0.0545, AD = 0.443, K.S = 0.1106, and p-value = 0.766.
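The reported AIC and BIC for the relief times data can be cross-checked with the standard definitions, AIC $= 2k - 2\ell$ and BIC $= k\log n - 2\ell$, where $\ell$ is the maximized log-likelihood. The sketch below assumes $k = 3$ estimated parameters for the GRC model, which is an assumption rather than a value stated in this section; the log-likelihood is backed out from the reported AIC, not taken from the tables.

```python
import math

def aic(loglik, k):
    """Akaike Information Criterion."""
    return 2 * k - 2 * loglik

def bic(loglik, k, n):
    """Bayesian Information Criterion."""
    return k * math.log(n) - 2 * loglik

n_relief = 20          # number of relief times
k_assumed = 3          # assumed number of GRC parameters (hypothetical)
reported_aic = 37.505  # value reported for the GRC on the relief times data

# Log-likelihood implied by the reported AIC under the assumed k.
implied_loglik = (2 * k_assumed - reported_aic) / 2

# BIC implied by that log-likelihood; compare with the reported 40.492.
implied_bic = bic(implied_loglik, k_assumed, n_relief)
print(round(implied_loglik, 4), round(implied_bic, 3))
```

Under this assumption the implied BIC agrees with the reported value to three decimals, so the two criteria are mutually consistent for the relief times data.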

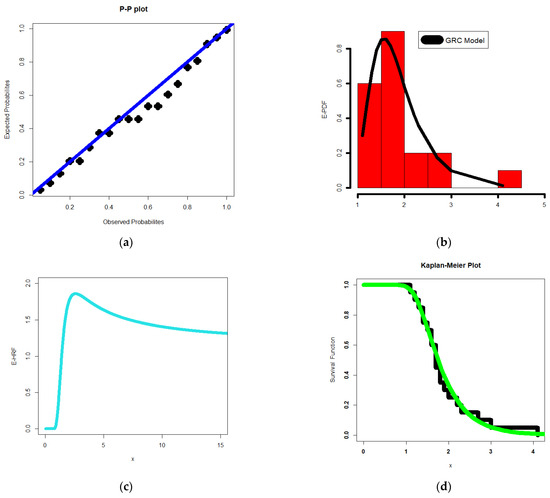

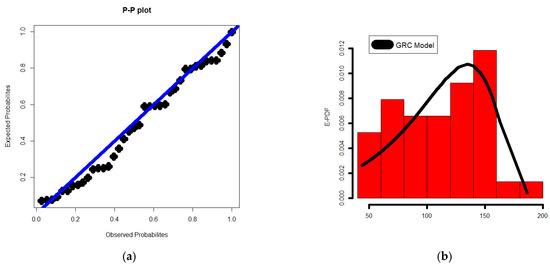

For the relief times and minimum flow data sets, Figure 6 and Figure 7, respectively, show the probability–probability (P–P), estimated-PDF (E-PDF), estimated-HRF (E-HRF), and Kaplan–Meier survival plots.

Figure 6.

The P–P (a); E-PDF (b); E-HRF (c); Kaplan–Meier plot (d); for the relief times data.

Figure 7.

The P–P (a); E-PDF (b); E-HRF (c); Kaplan–Meier plot (d); for the minimum flow data.

6. Risk Analysis

6.1. Risk Analysis under Artificial Experiments

For risk analysis and evaluation, the maximum likelihood, weighted least squares, ordinary least squares, Cramér–von Mises, moments, and Anderson–Darling approaches are considered. We omit the algebraic derivations and theoretical results of these methods since they are already available in the statistical literature. Instead, we focus on the practical results and statistical applications of mathematical modeling in general, and on risk disclosure, risk analysis, and the evaluation of actuarial risks in particular. For computing the abovementioned KRIs, the following estimation techniques are discussed in these sections: the MLE method, the OLS method, the WLSE method, and the ADE method. Six confidence levels (CLs) (q = 60, 70, 90, 95, 99, and 99.9%) and N = 1000 with various sample sizes (n = 20, 50, 150, 300, 500) are considered under . All results are reported in Table A1, Table A2, Table A3, Table A4 and Table A5, which give the KRIs for the GRC under artificial data where n = 20, 50, 150, 300, and 500, respectively. The simulation’s primary objective is to evaluate the efficacy of the risk analysis methodologies and select the most appropriate and efficient ones. Table A1, Table A2, Table A3, Table A4 and Table A5 allow us to draw the following conclusions:

- The (), and increase when q increases for all estimation methods.

- The and decrease when q increases for all estimation methods.

- The results of these tables allow us to confirm that all methods work properly and that it is impossible to clearly advocate one method over another. Given this basic finding, an application based on real data is needed in the hope that it will help us choose one approach over another and identify the best and most relevant methods. In other words, while the approaches gave comparable findings in risk assessment, the simulation study did not help us decide how to rank them. These convergent findings reassure us that all techniques perform well and within acceptable limits when modelling actuarial data and assessing risk. More specifically, some main results can be highlighted from Table A1, Table A2, Table A3, Table A4 and Table A5.

Based on Table A1 (n = 20) and Table A2 (n = 50), we conclude that it is not easy to determine the best method for assessing and analyzing risks. It can be said (in general) that in the case of small samples, all estimation methods give risk assessments that are close to each other. However, based on Table A3 (n = 150), we conclude that the MLE is the best method for all risk indicators; for example, the VAR starts at 0.577686 and ends at 1.125096. The same conclusions can be obtained for the other risk indicators. Based on Table A4 and Table A5 (n = 300 and n = 500), we conclude that the moment method is the worst one; however, all other methods perform well. Table A1, Table A2, Table A3, Table A4 and Table A5 are given in Appendix A.
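Since the derivations of the estimation methods are omitted, a short sketch of the objective functions that the distance-based estimators minimize may help. The forms below are the textbook ordinary least squares, weighted least squares, Cramér–von Mises, and Anderson–Darling criteria built from the fitted CDF at the ordered observations. As assumptions for illustration, the baseline Chen CDF (in Chen's notation) stands in for the GRC CDF, the relief times data are reused, and the two parameter pairs are hypothetical values chosen only to contrast a reasonable fit with a poor one.

```python
import math

def chen_cdf(x, lam, beta):
    """Baseline Chen CDF, used here as a stand-in for the GRC CDF."""
    return 1.0 - math.exp(lam * (1.0 - math.exp(x ** beta)))

def objectives(data, lam, beta):
    """Objective values minimized by the OLS, WLS, CVM and AD estimators."""
    xs = sorted(data)
    n = len(xs)
    F = [chen_cdf(x, lam, beta) for x in xs]        # fitted CDF at order statistics
    ols = sum((F[i - 1] - i / (n + 1)) ** 2 for i in range(1, n + 1))
    wls = sum((n + 1) ** 2 * (n + 2) / (i * (n - i + 1))
              * (F[i - 1] - i / (n + 1)) ** 2 for i in range(1, n + 1))
    cvm = 1.0 / (12 * n) + sum((F[i - 1] - (2 * i - 1) / (2 * n)) ** 2
                               for i in range(1, n + 1))
    # Requires 0 < F < 1 at every observation, otherwise the logarithms fail.
    ad = -n - sum((2 * i - 1) * (math.log(F[i - 1]) + math.log(1.0 - F[n - i]))
                  for i in range(1, n + 1)) / n
    return {"OLS": ols, "WLS": wls, "CVM": cvm, "AD": ad}

relief = [1.1, 1.3, 1.4, 1.7, 1.8, 1.6, 1.9, 2.2, 1.7, 4.1,
          2.7, 1.8, 1.2, 1.4, 1.5, 3, 1.7, 1.6, 2, 2.3]

good = objectives(relief, lam=0.1, beta=1.0)     # hypothetical, roughly tracks the data
poor = objectives(relief, lam=0.01, beta=0.5)    # hypothetical, clearly mismatched
```

Each estimator is obtained by minimizing its criterion over the parameters with a numerical optimizer, which is not shown here; the comparison of `good` and `poor` only shows that smaller values correspond to a closer fit.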

6.2. Risk Analysis for Insurance Claims Data

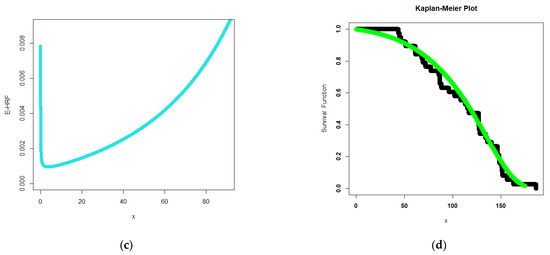

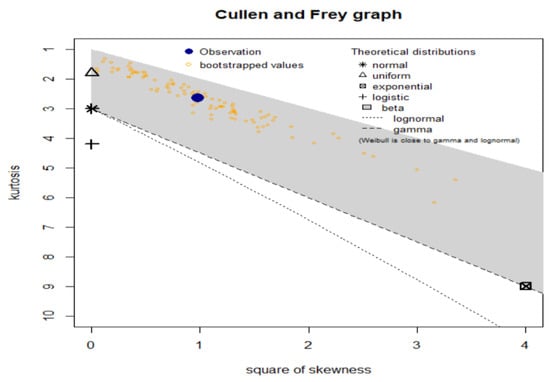

In this part, we use a U.K. motor non-comprehensive account as a practical example of an insurance claims payment triangle. For convenience, we take the origin period to be 2007 to 2013 (see Mohamed et al. [4]). The claims data are presented in a data frame in the same way that a database would typically store them: the columns list the origin year, which spans from 2007 to 2013, the development year, and the incremental payments. It is worth noting that these insurance claims data were first analyzed using a probability-based distribution. Real data analysis can be performed numerically, graphically, or by combining the two. When examining initial fits of theoretical distributions, such as the normal, uniform, exponential, logistic, beta, lognormal, and Weibull, a few graphical tools, such as the skewness-kurtosis plot (or the Cullen and Frey plot), are taken into consideration alongside the numerical techniques (see Figure 8). Figure 8 shows that our data are left skewed with a kurtosis less than three. The initial shape of the insurance claims density is examined using the NKDE approach (see Figure 9a), the “normality” of the data is examined using the Q–Q plot (see Figure 9b), the initial shape of the empirical HRF is examined using the TTT plot (see Figure 9c), and extreme values are checked using the box plot (see Figure 9d).

Figure 8.

Cullen–Frey plot for the actuarial claims data.

Figure 9.

NKDE (a); Q–Q (b); TTT (c); box plots (d).

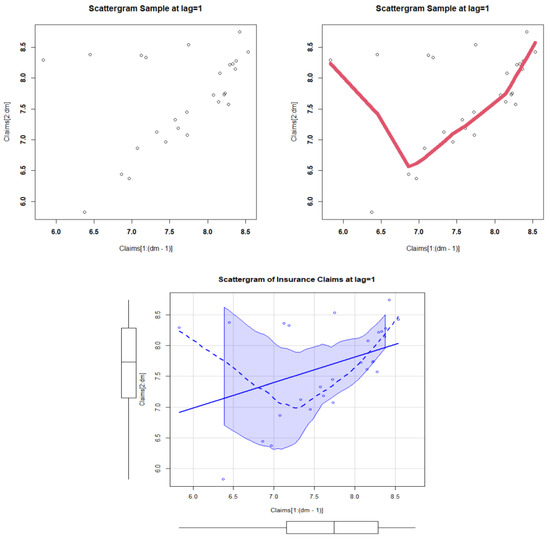

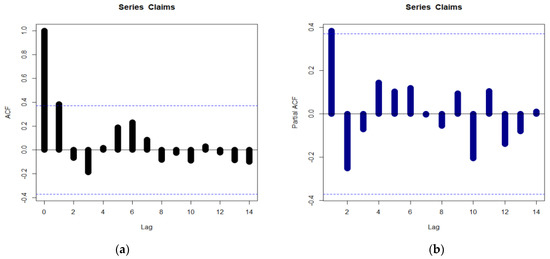

The initial density is depicted as an asymmetric function with a left tail in Figure 9a. No extreme values are apparent in Figure 9d. The HRF for the models that account for the present data should also be monotonically increasing (see Figure 9c). The scattergrams for the insurance claims data are shown in Figure 10. For the insurance claims data, Figure 11a shows the autocorrelation function (ACF), and Figure 11b shows the partial autocorrelation function (partial ACF). The ACF can be used to show how the correlation between any two values of the series changes as the distance (lag) between them changes. The theoretical ACF is a time domain measure of the stochastic process memory and offers no insight into the frequency content of the process. With Lag = k = 1, it offers some details on the distribution of hills and valleys on the surface (see Figure 11a). The theoretical partial ACF with Lag = k = 1 is also included (see Figure 11b). Figure 11b shows that the first lag value is statistically significant, in contrast to the partial autocorrelations for all other lags. Since the initial NKDE has an asymmetric density with a left tail (see Figure 9a), matching the data with a density that accommodates the left-tail shape, as the novel model does, is important in statistical modelling. Therefore, it is advised to use the GRC model to model the payments for insurance claims. We present an application for risk analysis under the VAR, TVAR, TV, TMV, and MEL measures for the insurance claims data. The risk analysis is performed for some confidence levels as follows:

Figure 10.

The scattergrams.

Figure 11.

(a) The ACF plot for the insurance claims data, and (b) the partial ACF plot for the insurance claims data.

The five measures are estimated for the GRC and C models. Table 5 gives the KRIs for the GRC under insurance claims data. Table 6 lists the KRIs for the C model under insurance claims data. Table 7 provides the estimators and ranks for the GRC model under the claims data for all estimation methods. Table 8 provides the estimators and ranks for the C model under the claims data for all estimation methods. The C distribution was chosen because it is the baseline distribution on which the new distribution is based. Based on these tables, the following results can be highlighted:

Table 5.

The KRIs for the GRC under insurance claims data.

Table 6.

The KRIs for the C model under insurance claims data.

Table 7.

The estimators and ranks for the GRC model under the claims data for all estimation methods.

Table 8.

The estimators and ranks for the C model under insurance claims data for all estimation methods.

1. For all risk assessment methods:

2. For all risk assessment methods:

3. For most risk assessment methods:

4. For all risk assessment methods:

5. For all risk assessment methods:

6. Under the GRC model and the MLE method, the VAR is a monotonically increasing indicator starting with 2995.433059 and ending with 8156.146208. The TVAR is a monotonically increasing indicator starting with 4275.474776 and ending with 4275.474776. However, the TV, the TMV, and the MEL are monotonically decreasing.

7. Under the C model and the MLE method, the VAR is a monotonically increasing indicator starting with 3018.151137 and ending with 7749.241041. The TVAR is a monotonically increasing indicator starting with 4228.504423 and ending with 8177.125483. However, the TV, the TMV, and the MEL are monotonically decreasing.

8. Under the GRC model and the OLSE method, the VAR is a monotonically increasing indicator starting with 2995.79625 and ending with 11,699.26883. The TVAR is a monotonically increasing indicator starting with 4823.79565 and ending with 12,740.63136. However, the TV, the TMV, and the MEL are monotonically decreasing.

9. Under the C model and the OLSE method, the VAR is a monotonically increasing indicator starting with 3057.31723 and ending with 9449.44305. The TVAR is a monotonically increasing indicator starting with 4616.08258 and ending with 10,070.24175. However, the TV, the TMV, and the MEL are monotonically decreasing.

10. Under the GRC model and the WLSE method, the VAR is a monotonically increasing indicator starting with 2993.45166 and ending with 8505.4338. The TVAR is a monotonically increasing indicator starting with 4368.83178 and ending with 9010.49496. However, the TV, the TMV, and the MEL are monotonically decreasing.

11. Under the C model and the WLSE method, the VAR is a monotonically increasing indicator starting with 8361.65005 and ending with 8859.53398. The TVAR is a monotonically increasing indicator starting with 4357.54867 and ending with 8859.53398. However, the TV, the TMV, and the MEL are monotonically decreasing.

12. Under the GRC model and the CVME method, the VAR is a monotonically increasing indicator starting with 2975.27503 and ending with 10,984.15414. The TVAR is a monotonically increasing indicator starting with 4684.45314 and ending with 11,922.07451. However, the TV, the TMV, and the MEL are monotonically decreasing.

13. Under the C model and the CVME method, the VAR is a monotonically increasing indicator starting with 3042.58341 and ending with 9292.25602. The TVAR is a monotonically increasing indicator starting with 4571.44196 and ending with 9896.43605. However, the TV, the TMV, and the MEL are monotonically decreasing.

14. Under the GRC model and the ADE method, the VAR is a monotonically increasing indicator starting with 2977.72629 and ending with 9548.8129. The TVAR is a monotonically increasing indicator starting with 4499.40165 and ending with 10,227.62167. However, the TV, the TMV, and the MEL are monotonically decreasing.

15. Under the C model and the ADE method, the VAR is a monotonically increasing indicator starting with 3025.58204 and ending with 8445.96804. The TVAR is a monotonically increasing indicator starting with 4382.96196 and ending with 8952.25142. However, the TV, the TMV, and the MEL are monotonically decreasing.

16. Under the GRC model and the moment method, the VAR is a monotonically increasing indicator starting with 2938.57331 and ending with 8197.24619. The TVAR is a monotonically increasing indicator starting with 4201.12314 and ending with 8712.92223. However, the TV, the TMV, and the MEL are monotonically decreasing.

17. Under the C model and the moment method, the VAR is a monotonically increasing indicator starting with 966.65702 and ending with 24,938.9476. The TVAR is a monotonically increasing indicator starting with 4103.34393 and ending with 29,594.42541. However, the TV, the TMV, and the MEL are monotonically decreasing.

18. For the GRC model, the OLSE approach is recommended since it offers the most acceptable risk exposure analysis, followed by the CVME method, and finally the ADE method. The other three techniques work well, though.

19. For the GG model, the moment technique is suggested for all q values since it offers the most acceptable risk exposure analysis. The LSE method is suggested next as a backup, and finally the CVME approach. The other three techniques work nicely, though.

20. The GRC model performs better than the GG model for all q values and for all risk methodologies. The new distribution performs the best when modelling insurance claims reimbursement data and determining actuarial risk, despite the fact that the probability distributions have the same number of parameters. In upcoming actuarial and applied research, we anticipate that actuaries and practitioners will be very interested in the new distribution.

6.3. Quantitative Risk Analysis Based on the Quantiles

To compare the effectiveness of the estimators of VAR based on the quantiles of the proposed GRC distribution, the Peaks Over Random Threshold (PORT) Mean-of-Order-p (MOp) methodology is recommended. In this work, however, we conduct a simulation study to compare the performance of the proposed distribution. We consider two cases of sample generation: samples of size n (n = 50, 200, 500, 1000) are generated for selected parameter values, first from the Chen and then from the proposed GRC distributions. In each case, our interest is to compare the estimation of the two basic parameters of the Chen, β and θ, using the maximum likelihood method. Recall that all the other distributions are variants of the Chen distribution; hence, it is natural to check how well the two parameters of the Chen distribution are estimated by the other variants. We assess the performance using the mean square error (MSE) and the bias. Table 9, Table 10, Table 11 and Table 12 show the results of the simulation study for samples of different sizes generated from the Chen distribution with parameters β = 1.25 and θ = 0.75. The estimators of the two parameters from the MOC and GC are the most appropriate as they have the smallest bias and MSE values. In addition, the proposed GRC estimator has smaller bias and MSE values in the estimation of θ than the Chen estimator. On the other hand, in the estimation of β, the Chen estimator is better.

Table 9.

Results for samples generated from the Chen distribution with n = 50.

Table 10.

Results for samples generated from the Chen distribution with n = 200.

Table 11.

Results for samples generated from the Chen distribution with n = 500.

Table 12.

Results for samples generated from the Chen distribution with n = 1000.

For samples generated from the GRC distribution, the results are shown in Tables 13–16. The estimation of β and θ from the GRC distribution offers the lowest bias and MSE in all cases. In addition, the estimators of the two distributions, GRC and Chen, show empirical consistency, as the MSE decreases with increasing sample size.

Table 13.

Results for samples generated from the GRC distribution with n = 50.

Table 14.

Results for samples generated from the GRC distribution with n = 200.

Table 15.

Results for samples generated from the GRC distribution with n = 500.

Table 16.

Results for samples generated from the GRC distribution with n = 1000.

Therefore, we conclude that the GRC provides competitive estimators of the two parameters of the Chen distribution. In particular, it is uniformly the best for samples generated from the GRC distribution, and it shows smaller bias and MSE than the Chen estimator in estimating θ even for samples generated from the Chen distribution.
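As an illustration of the design described in this section, the following Python sketch covers only the Chen-generated case. It assumes the Chen CDF takes the form F(x) = 1 − exp{θ(1 − exp(x^β))} for x > 0; the roles assigned to β and θ, the function names, the golden-section search over β in [0.1, 5], and the replication count are assumptions made for this example and are not taken from the paper. For fixed β, the likelihood equation for θ has the closed-form solution θ̂(β) = n / Σ(exp(x_i^β) − 1), so the sketch maximizes the resulting profile log-likelihood in β alone, treating it as unimodal on the search interval.

```python
import math
import random


def chen_quantile(u, beta, theta):
    """Inverse of the assumed Chen CDF F(x) = 1 - exp(theta * (1 - exp(x**beta)))."""
    return math.log1p(-math.log1p(-u) / theta) ** (1.0 / beta)


def chen_sample(n, beta, theta, rng):
    """Inverse-transform sampling; u = 0 is skipped so every draw is strictly positive."""
    out = []
    while len(out) < n:
        u = rng.random()
        if u > 0.0:
            out.append(chen_quantile(u, beta, theta))
    return out


def profile_loglik(beta, xs, sum_log_x):
    """Chen log-likelihood with theta replaced by its maximiser for the given beta."""
    n = len(xs)
    powers = [x ** beta for x in xs]
    theta_hat = n / sum(math.expm1(p) for p in powers)
    ll = (n * math.log(theta_hat) + n * math.log(beta)
          + (beta - 1.0) * sum_log_x + sum(powers) - n)
    return ll, theta_hat


def fit_chen_mle(xs, lo=0.1, hi=5.0, tol=1e-5):
    """Golden-section search for beta (assumed search interval), then theta in closed form."""
    sum_log_x = sum(math.log(x) for x in xs)
    g = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    c, d = b - g * (b - a), a + g * (b - a)
    fc = profile_loglik(c, xs, sum_log_x)[0]
    fd = profile_loglik(d, xs, sum_log_x)[0]
    while b - a > tol:
        if fc > fd:  # the maximum lies in [a, d]
            b, d, fd = d, c, fc
            c = b - g * (b - a)
            fc = profile_loglik(c, xs, sum_log_x)[0]
        else:        # the maximum lies in [c, b]
            a, c, fc = c, d, fd
            d = a + g * (b - a)
            fd = profile_loglik(d, xs, sum_log_x)[0]
    beta_hat = 0.5 * (a + b)
    return beta_hat, profile_loglik(beta_hat, xs, sum_log_x)[1]


def simulate(n, reps, beta=1.25, theta=0.75, seed=0):
    """Monte Carlo bias and MSE of the MLEs of beta and theta for samples of size n."""
    rng = random.Random(seed)
    est = [fit_chen_mle(chen_sample(n, beta, theta, rng)) for _ in range(reps)]
    result = {}
    for name, true_value, idx in (("beta", beta, 0), ("theta", theta, 1)):
        errs = [e[idx] - true_value for e in est]
        result[name] = {"bias": sum(errs) / reps,
                        "mse": sum(err * err for err in errs) / reps}
    return result


if __name__ == "__main__":
    for n in (50, 200, 500, 1000):
        print(n, simulate(n, reps=50))
```

Running the script prints the bias and MSE for each sample size. The values depend on the seed and the replication count, and they are not the values reported in Tables 9–12.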

7. Conclusions and Discussions

In this work, a novel flexible extension of the Chen model, called the generalized Rayleigh Chen (GRC) distribution, is defined and studied. It accommodates “monotonically increasing”, “J-HRF”, “decreasing–constant–increasing (bathtub)”, “monotonically decreasing”, “upside down”, and “constant” failure rates. The new model is motivated by its wide applicability in modeling real data with a “symmetric shape”, a “unimodal with right skewed shape”, a “bimodal with right skewed shape”, and a “left skewed density with no peaks”. Relevant statistical properties of the novel model are derived. The maximum likelihood, weighted least squares, ordinary least squares, Cramér–von Mises, moments, and Anderson–Darling methods are considered for risk analysis and assessment. For this actuarial purpose, a comprehensive simulation study was presented using various combinations in order to evaluate the performance of the six methods in analyzing insurance risks. These six methods were also used in evaluating actuarial risks using insurance claims data. The new model has multiple applications in the field of statistical modeling and forecasting, as outlined below:

- In the field of insurance and actuarial sciences, the GRC distribution can be used to examine insurance claims payments. The standard exponential distribution, the standard Chen distribution, the Rayleigh Chen distribution, the exponential Chen distribution, the Marshall–Olkin generalized Chen distribution, the reduced Burr-X Chen distribution, the Weibull Chen distribution, the Lomax Chen distribution, the standard exponentiated distribution, and the Burr-X exponentiated Chen distribution are just a few of the competing distributions for statistical modelling of insurance and actuarial sciences data. With minimum values of the Akaike information criterion, Bayesian information criterion, Cramér–von Mises test, Anderson–Darling test, Kolmogorov–Smirnov test, and its corresponding p-value, the GRC distribution demonstrated its superiority in statistically modelling the planning and management of the use of water resources data.

- The new density accommodates the “symmetric shape”, “unimodal with right skewed shape”, “bimodal with right skewed shape”, and “left skewed density with no peaks”. The new hazard function can be “monotonically increasing”, “J-HRF”, “decreasing–constant–increasing (bathtub)”, “monotonically decreasing”, “upside down”, and “constant”. Under the proposed distribution, five risk indicators are considered and examined in reference to the insurance claims payments data. The GRC distribution has an advantage over the standard exponentiated distribution, the Burr-X exponentiated Chen distribution, the Burr-XII Chen distribution, the reduced Burr-X Chen distribution, the Weibull Chen distribution, the Lomax Chen distribution, and the Marshall–Olkin generalized Chen distribution as a result of the variety of the HRFs.

Regarding the analysis and assessment of actuarial risks, the following results can be highlighted:

- For all risk assessment methodsand

- Under the GRC model and the MLE method, the VAR is a monotonically increasing indicator starting with 2995.433059 and ending with 8156.146208. The TVAR is a monotonically increasing indicator starting with 4275.474776 and ending with 4275.474776.

- Under the GRC model and the LS method, the VAR is a monotonically increasing indicator starting with 2995.79625 and ending with 11699.26883. The TVAR is a monotonically increasing indicator starting with 4823.79565 and ending with 12740.63136. However, the TV, the TMV, and the MEL are monotonically decreasing.

- Under the GRC model and the WLSE method, the VAR is a monotonically increasing indicator starting with 2993.45166 and ending with 8505.4338. The TVAR is a monotonically increasing indicator starting with 4368.83178 and ending with 9010.49496. However, the TV, the TMV, and the MEL are monotonically decreasing.

- Under the GRC model and the CVM method, the VAR is a monotonically increasing indicator starting with 2975.27503 and ending with 10984.15414. The TVAR is a monotonically increasing indicator starting with 4684.45314 and ending with 11922.07451. However, the TV, the TMV, and the MEL are monotonically decreasing.

- Under the GRC model and the ADE method, the VAR is a monotonically increasing indicator starting with 2977.72629 and ending with 9548.8129. The TVAR is a monotonically increasing indicator starting with 4499.40165 and ending with 10227.62167.

- Under the GRC model and the moment method, the VAR is a monotonically increasing indicator starting with 2938.57331 and ending with 8197.24619. The TVAR is a monotonically increasing indicator starting with 4201.12314 and ending with 8712.92223.

- For the GRC model, the OLSE approach is recommended since it offers the most acceptable risk exposure analysis, followed by the CVME method and finally the ADE method. The other three techniques work well, though.

- The GRC model performs better than the Chen model for all q values and for all risk methodologies. The new distribution performs the best when modelling insurance claims reimbursement data and determining actuarial risk, despite the fact that the probability distributions have the same number of parameters. In upcoming actuarial and applied research, we anticipate that actuaries and practitioners will be very interested in the new distribution.

- For samples generated from the Chen distribution, the proposed GRC estimator has smaller bias and MSE values in the estimation of the parameter θ than the Chen estimator, whereas the Chen estimator is better in the estimation of the parameter β. For samples generated from the GRC distribution, the estimation of β and θ from the GRC distribution offers the lowest bias and MSE in all cases. In addition, the estimators of the two distributions, GRC and Chen, show empirical consistency, as the MSE decreases with increasing sample size. Overall, the GRC provides competitive estimators of the two parameters of the Chen distribution.

Author Contributions

H.M.Y.: review and editing, software, validation, writing the original draft preparation, conceptualization, supervision; W.E.: validation, writing the original draft preparation, conceptualization, data curation, formal analysis, software; Y.T.: methodology, conceptualization, software; M.I.: review and editing, software, validation, writing the original draft preparation, conceptualization; R.M.: validation, conceptualization; M.M.A.: review and editing, conceptualization, supervision. All authors have read and agreed to the published version of the manuscript.

Funding

The study was funded by Researchers Supporting Project number (RSP2023R488), King Saud University, Riyadh, Saudi Arabia.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data set can be provided upon request.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| Abbreviation | Meaning |
|---|---|
| ALRV | Aggregate-loss RV |
| AIC | Akaike information criterion |
| ADE | Anderson–Darling estimation |
| BIC | Bayesian information criterion |
| BC | Beta-Chen |
| CTE | Conditional tail expectation |
| CAIC | Consistent Akaike information criterion |
| CVME | Cramér–von Mises estimation |
| CDF | Cumulative distribution function |
| E-HRF | Estimated hazard rate function |
| E-PDF | Estimated probability density function |
| GC | Gamma-Chen |
| GRC | Generalized Rayleigh Chen |
| HQIC | Hannan–Quinn information criterion |
| HRF | Hazard rate function |
| K.S | Kolmogorov–Smirnov |
| KUMC | Kumaraswamy Chen |
| MOC | Marshall–Olkin Chen |
| MLE | Maximum likelihood estimation |
| MEL | Mean excess loss |
| MSE | Mean square error |
| NKDE | Nonparametric kernel density estimation |
| OLSE | Ordinary least squares estimation |
| p-v | p-value |
| P–P | Probability–probability |
| Q–Q | Quantile–quantile |
| RV | Random variable |
| RIs | Risk indicators |
| SEs | Standard errors |
| SF | Survival function |
| TVAR | Tailed-value-at-risk |
| TTT | Total time in test |
| TC | Transmuted Chen |
| VAR | Value-at-risk |
| WLSE | Weighted least squares estimation |

Appendix A

Table A1.

The KRIs for the GRC under artificial data where n = 20.

| Method | VAR | TVAR | TV | TMV | MEL |
|---|---|---|---|---|---|
| MLE | 0.575558 | 0.717965 | 0.012018 | 0.723974 | 0.142407 |
| | 0.630413 | 0.75645 | 0.010016 | 0.761458 | 0.126037 |
| | 0.694374 | 0.804036 | 0.008061 | 0.808067 | 0.109662 |
| | 0.782051 | 0.873112 | 0.00595 | 0.876087 | 0.091061 |
| | 0.853078 | 0.931657 | 0.004631 | 0.933972 | 0.078578 |
| | 0.981683 | 1.04207 | 0.002912 | 1.043527 | 0.060387 |
| | 1.116905 | 1.162705 | 0.001734 | 1.163573 | 0.0458 |
| LS | 0.57494 | 0.718544 | 0.012192 | 0.72464 | 0.143604 |
| | 0.630321 | 0.757342 | 0.01015 | 0.762417 | 0.12702 |
| | 0.694848 | 0.80528 | 0.016736 | 0.813648 | 0.110432 |
| | 0.783204 | 0.874802 | 0.006009 | 0.877807 | 0.091598 |
| | 0.854695 | 0.933664 | 0.00467 | 0.935999 | 0.078969 |
| | 0.983946 | 1.044539 | 0.002929 | 1.046004 | 0.060594 |
| | 1.119606 | 1.165499 | 0.00174 | 1.166369 | 0.045893 |
| WLS | 0.58276 | 0.72621 | 0.01211 | 0.73227 | 0.14345 |
| | 0.63821 | 0.76494 | 0.01006 | 0.76997 | 0.12673 |
| | 0.70271 | 0.81274 | 0.00807 | 0.81677 | 0.11003 |
| | 0.79084 | 0.88194 | 0.00593 | 0.8849 | 0.0911 |
| | 0.86201 | 0.94044 | 0.0046 | 0.94274 | 0.07843 |
| | 0.99038 | 1.05042 | 0.00287 | 1.05186 | 0.06004 |
| | 1.12476 | 1.17013 | 0.00169 | 1.17098 | 0.04537 |
| CVM | 0.57329 | 0.71613 | 0.01207 | 0.72217 | 0.14284 |
| | 0.62835 | 0.75473 | 0.01006 | 0.75975 | 0.12637 |
| | 0.69252 | 0.80243 | 0.00809 | 0.80647 | 0.1099 |
| | 0.78043 | 0.87163 | 0.00596 | 0.87461 | 0.0912 |
| | 0.85159 | 0.93025 | 0.00464 | 0.93256 | 0.07865 |
| | 0.98032 | 1.04071 | 0.00291 | 1.04217 | 0.06039 |
| | 1.11553 | 1.16129 | 0.00173 | 1.16216 | 0.04576 |
| ADE | 0.57539 | 0.71919 | 0.01222 | 0.7253 | 0.1438 |
| | 0.63085 | 0.75804 | 0.01017 | 0.76313 | 0.12719 |
| | 0.69547 | 0.80604 | 0.00818 | 0.81013 | 0.11057 |
| | 0.78394 | 0.87564 | 0.00602 | 0.87865 | 0.0917 |
| | 0.85552 | 0.93457 | 0.00468 | 0.93691 | 0.07905 |
| | 0.9849 | 1.04555 | 0.00293 | 1.04702 | 0.06065 |
| | 1.12069 | 1.16661 | 0.00174 | 1.16749 | 0.04593 |
| Moment | 0.628173 | 0.763601 | 0.011684 | 0.769442 | 0.135428 |
| | 0.660538 | 0.787498 | 0.010577 | 0.792786 | 0.12696 |
| | 0.696556 | 0.81483 | 0.009459 | 0.819559 | 0.118274 |
| | 0.738432 | 0.847483 | 0.008298 | 0.851632 | 0.109051 |
| | 0.790834 | 0.88951 | 0.007035 | 0.893028 | 0.098676 |
| | 0.867614 | 0.953064 | 0.005508 | 0.955819 | 0.085451 |
| | 1.007441 | 1.073498 | 0.0035 | 1.075248 | 0.066057 |

Table A2.

The KRIs for the GRC under artificial data where n = 50.

| Method | VAR | TVAR | TV | TMV | MEL |
|---|---|---|---|---|---|
| MLE | 0.578158 | 0.722400 | 0.012275 | 0.728538 | 0.144242 |
| | 0.633844 | 0.761359 | 0.01021 | 0.766464 | 0.127515 |
| | 0.698678 | 0.809469 | 0.008197 | 0.813567 | 0.11079 |
| | 0.787371 | 0.879185 | 0.006029 | 0.882199 | 0.091813 |
| | 0.859065 | 0.938165 | 0.00468 | 0.940505 | 0.0791 |
| | 0.988534 | 1.049155 | 0.002929 | 1.05062 | 0.060622 |
| | 1.124239 | 1.170102 | 0.001733 | 1.170969 | 0.045864 |
| LS | 0.57787 | 0.72244 | 0.01232 | 0.72861 | 0.14458 |
| | 0.63371 | 0.76149 | 0.01024 | 0.76661 | 0.12778 |
| | 0.69871 | 0.80969 | 0.00822 | 0.8138 | 0.11099 |
| | 0.78758 | 0.87952 | 0.00604 | 0.88254 | 0.09193 |
| | 0.85939 | 0.93856 | 0.00469 | 0.94091 | 0.07917 |
| | 0.98898 | 1.04962 | 0.00293 | 1.05109 | 0.06064 |
| | 1.12472 | 1.17057 | 0.00173 | 1.17144 | 0.04585 |
| WLS | 0.57791 | 0.72267 | 0.01234 | 0.72884 | 0.14476 |
| | 0.63386 | 0.76176 | 0.01025 | 0.76689 | 0.1279 |
| | 0.69896 | 0.81 | 0.00822 | 0.81411 | 0.11104 |
| | 0.78791 | 0.87983 | 0.00603 | 0.88285 | 0.09193 |
| | 0.85973 | 0.93886 | 0.00468 | 0.9412 | 0.07913 |
| | 0.98925 | 1.04981 | 0.00292 | 1.05127 | 0.06056 |
| | 1.1248 | 1.17055 | 0.00173 | 1.17142 | 0.04576 |
| CVM | 0.57823 | 0.72227 | 0.01224 | 0.72839 | 0.14404 |
| | 0.63384 | 0.76117 | 0.01018 | 0.76626 | 0.12733 |
| | 0.69859 | 0.80921 | 0.00817 | 0.81329 | 0.11062 |
| | 0.78715 | 0.87881 | 0.00601 | 0.88182 | 0.09167 |
| | 0.85873 | 0.9377 | 0.00466 | 0.94003 | 0.07897 |
| | 0.98798 | 1.0485 | 0.00292 | 1.04996 | 0.06051 |
| | 1.12345 | 1.16922 | 0.00173 | 1.17009 | 0.04578 |
| ADE | 0.57913 | 0.72364 | 0.01231 | 0.72979 | 0.1445 |
| | 0.63494 | 0.76266 | 0.01023 | 0.76778 | 0.12772 |
| | 0.6999 | 0.81084 | 0.00821 | 0.81495 | 0.11094 |
| | 0.78873 | 0.88063 | 0.00604 | 0.88365 | 0.0919 |
| | 0.86051 | 0.93966 | 0.00468 | 0.942 | 0.07915 |
| | 0.99006 | 1.05069 | 0.00293 | 1.05216 | 0.06063 |
| | 1.12578 | 1.17163 | 0.00173 | 1.17249 | 0.04585 |
| Moment | 0.63385 | 0.76648 | 0.01112 | 0.77204 | 0.13263 |
| | 0.66569 | 0.78987 | 0.01005 | 0.79489 | 0.12418 |
| | 0.70107 | 0.81658 | 0.00896 | 0.82107 | 0.11552 |
| | 0.74211 | 0.84845 | 0.00784 | 0.85237 | 0.10634 |
| | 0.79336 | 0.88939 | 0.00663 | 0.89271 | 0.09604 |
| | 0.86822 | 0.95116 | 0.00517 | 0.95375 | 0.08294 |
| | 1.00396 | 1.06779 | 0.00326 | 1.06941 | 0.06382 |

Table A3.

The KRIs for the GRC under artificial data where n = 150.

| Method | VAR | TVAR | TV | TMV | MEL |
|---|---|---|---|---|---|
| MLE | 0.577686 | 0.72254 | 0.012356 | 0.728717 | 0.144854 |
| | 0.633662 | 0.761654 | 0.010268 | 0.766788 | 0.127992 |
| | 0.698793 | 0.809929 | 0.008235 | 0.814046 | 0.111136 |
| | 0.787813 | 0.879831 | 0.006048 | 0.882854 | 0.092018 |
| | 0.859702 | 0.93892 | 0.004689 | 0.941265 | 0.079219 |
| | 0.98937 | 1.050009 | 0.002929 | 1.051473 | 0.060639 |
| | 1.125096 | 1.170922 | 0.001731 | 1.171787 | 0.045826 |
| LS | 0.57931 | 0.72407 | 0.01234 | 0.73024 | 0.14476 |
| | 0.63525 | 0.76316 | 0.01026 | 0.76828 | 0.12791 |
| | 0.70033 | 0.8114 | 0.00823 | 0.81551 | 0.11107 |
| | 0.78929 | 0.88126 | 0.00604 | 0.88428 | 0.09197 |
| | 0.86114 | 0.94032 | 0.00468 | 0.94266 | 0.07918 |
| | 0.99074 | 1.05136 | 0.00293 | 1.05282 | 0.06062 |
| | 1.12642 | 1.17223 | 0.00173 | 1.1731 | 0.04581 |
| WLS | 0.58012 | 0.72476 | 0.01231 | 0.73091 | 0.14464 |
| | 0.63605 | 0.76381 | 0.01022 | 0.76892 | 0.12777 |
| | 0.70109 | 0.81199 | 0.00819 | 0.81609 | 0.1109 |
| | 0.78995 | 0.88173 | 0.00601 | 0.88474 | 0.09178 |
| | 0.86167 | 0.94066 | 0.00466 | 0.94299 | 0.07899 |
| | 0.99097 | 1.0514 | 0.00291 | 1.05285 | 0.06043 |
| | 1.12622 | 1.17187 | 0.00172 | 1.17273 | 0.04565 |
| CVM | 0.57811 | 0.72292 | 0.01235 | 0.7291 | 0.14482 |
| | 0.63407 | 0.76203 | 0.01026 | 0.76716 | 0.12796 |
| | 0.69918 | 0.81029 | 0.00823 | 0.8144 | 0.11111 |
| | 0.78818 | 0.88017 | 0.00604 | 0.88319 | 0.09199 |
| | 0.86005 | 0.93925 | 0.00469 | 0.94159 | 0.0792 |
| | 0.98968 | 1.05031 | 0.00293 | 1.05177 | 0.06062 |
| | 1.12537 | 1.17119 | 0.00173 | 1.17205 | 0.04582 |
| ADE | 0.57831 | 0.72327 | 0.01237 | 0.72946 | 0.14496 |
| | 0.63434 | 0.76242 | 0.01028 | 0.76756 | 0.12808 |
| | 0.69952 | 0.81072 | 0.00824 | 0.81484 | 0.1112 |
| | 0.7886 | 0.88066 | 0.00605 | 0.88369 | 0.09206 |
| | 0.86053 | 0.93978 | 0.00469 | 0.94213 | 0.07925 |
| | 0.99025 | 1.05091 | 0.00293 | 1.05238 | 0.06066 |
| | 1.12602 | 1.17185 | 0.00173 | 1.17272 | 0.04583 |
| Moment | 0.63382 | 0.76479 | 0.01079 | 0.77018 | 0.13097 |
| | 0.66536 | 0.78787 | 0.00973 | 0.79274 | 0.12252 |
| | 0.70035 | 0.81422 | 0.00867 | 0.81855 | 0.11387 |
| | 0.7409 | 0.84561 | 0.00757 | 0.8494 | 0.10471 |
| | 0.79146 | 0.8859 | 0.00639 | 0.88909 | 0.09444 |
| | 0.86518 | 0.94658 | 0.00498 | 0.94907 | 0.0814 |
| | 0.99842 | 1.06087 | 0.00311 | 1.06242 | 0.06245 |

Table A4.

The KRIs for the GRC under artificial data where n = 300.

| Method | VAR | TVAR | TV | TMV | MEL |
|---|---|---|---|---|---|
| MLE | 0.578834 | 0.723928 | 0.012394 | 0.730125 | 0.145094 |
| | 0.634911 | 0.763106 | 0.010298 | 0.768255 | 0.128196 |
| | 0.700153 | 0.811455 | 0.008258 | 0.815584 | 0.111303 |
| | 0.789313 | 0.881458 | 0.006063 | 0.88449 | 0.092145 |
| | 0.861306 | 0.940627 | 0.0047 | 0.942977 | 0.079321 |
| | 0.991142 | 1.05185 | 0.002935 | 1.053317 | 0.060708 |
| | 1.127019 | 1.172891 | 0.001734 | 1.173758 | 0.045872 |
| LS | 0.578709 | 0.723708 | 0.012376 | 0.729896 | 0.144999 |
| | 0.634752 | 0.76286 | 0.010283 | 0.768001 | 0.128108 |
| | 0.699953 | 0.811175 | 0.008245 | 0.815298 | 0.111223 |
| | 0.789051 | 0.881126 | 0.006054 | 0.884153 | 0.092075 |
| | 0.860991 | 0.940249 | 0.004692 | 0.942595 | 0.079258 |
| | 0.990724 | 1.051381 | 0.00293 | 1.052846 | 0.060657 |
| | 1.126486 | 1.172317 | 0.001731 | 1.173182 | 0.045831 |
| WLS | 0.58055 | 0.72519 | 0.0123 | 0.73134 | 0.14463 |
| | 0.63648 | 0.76423 | 0.01022 | 0.76934 | 0.12775 |
| | 0.70152 | 0.81241 | 0.00819 | 0.8165 | 0.11089 |
| | 0.79037 | 0.88214 | 0.00601 | 0.88514 | 0.09177 |
| | 0.86208 | 0.94105 | 0.00466 | 0.94338 | 0.07897 |
| | 0.99135 | 1.05176 | 0.00291 | 1.05321 | 0.06041 |
| | 1.12656 | 1.17219 | 0.00171 | 1.17304 | 0.04563 |
| CVM | 0.57826 | 0.72333 | 0.01239 | 0.72953 | 0.14508 |
| | 0.63433 | 0.76251 | 0.01029 | 0.76765 | 0.12818 |
| | 0.69957 | 0.81085 | 0.00825 | 0.81497 | 0.11128 |
| | 0.78872 | 0.88083 | 0.00606 | 0.88386 | 0.09211 |
| | 0.86069 | 0.93998 | 0.0047 | 0.94233 | 0.07929 |
| | 0.99047 | 1.05115 | 0.00293 | 1.05261 | 0.06067 |
| | 1.12627 | 1.17211 | 0.00173 | 1.17298 | 0.04584 |
| ADE | 0.57847 | 0.72362 | 0.0124 | 0.72982 | 0.14515 |
| | 0.63457 | 0.76281 | 0.0103 | 0.76796 | 0.12823 |
| | 0.69984 | 0.81117 | 0.00826 | 0.8153 | 0.11132 |
| | 0.78903 | 0.88118 | 0.00606 | 0.88421 | 0.09215 |
| | 0.86103 | 0.94035 | 0.0047 | 0.9427 | 0.07932 |
| | 0.99086 | 1.05156 | 0.00293 | 1.05302 | 0.06069 |
| | 1.12671 | 1.17256 | 0.00173 | 1.17343 | 0.04585 |
| Moment | 0.63452 | 0.76457 | 0.01061 | 0.76988 | 0.13005 |
| | 0.66587 | 0.78749 | 0.00958 | 0.79228 | 0.12162 |
| | 0.70064 | 0.81364 | 0.00853 | 0.8179 | 0.113 |
| | 0.74091 | 0.84479 | 0.00744 | 0.84851 | 0.10388 |
| | 0.7911 | 0.88475 | 0.00627 | 0.88788 | 0.09365 |
| | 0.86423 | 0.94491 | 0.00487 | 0.94734 | 0.08068 |
| | 0.99628 | 1.0581 | 0.00305 | 1.05963 | 0.06183 |

Table A5.

The KRIs for the GRC under artificial data where n = 500.

| Method | VAR | TVAR | TV | TMV | MEL |
|---|---|---|---|---|---|
| MLE | 0.578635 | 0.723537 | 0.012361 | 0.729718 | 0.144903 |
| | 0.634639 | 0.762663 | 0.01027 | 0.767798 | 0.128025 |
| | 0.699795 | 0.810948 | 0.008236 | 0.815065 | 0.111153 |
| | 0.788836 | 0.880856 | 0.006047 | 0.883879 | 0.09202 |
| | 0.860731 | 0.939945 | 0.004687 | 0.942288 | 0.079214 |
| | 0.990391 | 1.051016 | 0.002927 | 1.05248 | 0.060625 |
| | 1.126083 | 1.171892 | 0.001729 | 1.172756 | 0.045809 |
| LS | 0.578565 | 0.723659 | 0.01239 | 0.729854 | 0.145094 |
| | 0.63465 | 0.762835 | 0.010294 | 0.767982 | 0.128186 |
| | 0.699895 | 0.811178 | 0.008253 | 0.815305 | 0.111283 |
| | 0.789048 | 0.881164 | 0.006058 | 0.884193 | 0.092117 |
| | 0.861023 | 0.940312 | 0.004695 | 0.942659 | 0.079288 |
| | 0.990806 | 1.051478 | 0.002931 | 1.052943 | 0.060672 |
| | 1.1266 | 1.172436 | 0.001731 | 1.173302 | 0.045837 |
| WLS | 0.58097 | 0.72551 | 0.01229 | 0.73165 | 0.14454 |
| | 0.63687 | 0.76453 | 0.0102 | 0.76963 | 0.12766 |
| | 0.70187 | 0.81267 | 0.00818 | 0.81676 | 0.1108 |
| | 0.79065 | 0.88234 | 0.006 | 0.88534 | 0.09169 |
| | 0.86231 | 0.94121 | 0.00465 | 0.94354 | 0.07891 |
| | 0.99146 | 1.05182 | 0.0029 | 1.05327 | 0.06036 |
| | 1.12655 | 1.17213 | 0.00171 | 1.17299 | 0.04558 |
| CVM | 0.57891 | 0.72388 | 0.01237 | 0.73007 | 0.14498 |
| | 0.63494 | 0.76303 | 0.01028 | 0.76817 | 0.12809 |
| | 0.70013 | 0.81133 | 0.00824 | 0.81545 | 0.1112 |
| | 0.78921 | 0.88127 | 0.00605 | 0.8843 | 0.09206 |
| | 0.86114 | 0.94038 | 0.00469 | 0.94273 | 0.07924 |
| | 0.99085 | 1.05149 | 0.00293 | 1.05296 | 0.06064 |
| | 1.12658 | 1.1724 | 0.00173 | 1.17327 | 0.04582 |
| ADE | 0.57897 | 0.72398 | 0.01238 | 0.73017 | 0.14501 |
| | 0.63502 | 0.76313 | 0.01028 | 0.76828 | 0.12812 |
| | 0.70023 | 0.81145 | 0.00825 | 0.81558 | 0.11123 |
| | 0.78933 | 0.88141 | 0.00605 | 0.88443 | 0.09208 |
| | 0.86127 | 0.94053 | 0.00469 | 0.94287 | 0.07926 |
| | 0.991 | 1.05165 | 0.00293 | 1.05312 | 0.06065 |
| | 1.12675 | 1.17258 | 0.00173 | 1.17344 | 0.04583 |
| Moment | 0.634903 | 0.76443 | 0.010529 | 0.769694 | 0.129527 |
| | 0.666138 | 0.787257 | 0.009492 | 0.792003 | 0.121119 |
| | 0.700776 | 0.813294 | 0.00845 | 0.817519 | 0.112519 |
| | 0.740894 | 0.844309 | 0.007374 | 0.847996 | 0.103416 |
| | 0.790872 | 0.884086 | 0.006211 | 0.887192 | 0.093214 |
| | 0.863675 | 0.943957 | 0.004819 | 0.946367 | 0.080282 |
| | 0.995081 | 1.056576 | 0.003013 | 1.058083 | 0.061495 |
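The five indicators tabulated in this appendix can also be computed empirically from a sample of losses. The sketch below is a minimal illustration and not the procedure used to produce Tables A1–A5: it takes VAR as the empirical q-quantile, TVAR and TV as the mean and variance of the losses exceeding VAR, TMV = TVAR + π·TV, and MEL = TVAR − VAR. The weight π = 0.5 is an assumption inferred from the tabulated values (for example, the first MLE row of Table A1 gives 0.717965 + 0.5 × 0.012018 = 0.723974 and 0.717965 − 0.575558 = 0.142407). The function name `risk_indicators` and the quantile rule are hypothetical choices made for this example.

```python
import math


def risk_indicators(losses, q, pi=0.5):
    """Empirical VAR, TVAR, TV, TMV and MEL at level q (pi = 0.5 is an assumed weight)."""
    if not 0.0 < q < 1.0:
        raise ValueError("q must lie strictly between 0 and 1")
    xs = sorted(losses)
    n = len(xs)
    # Inverse-CDF rule: smallest order statistic whose empirical CDF is at least q.
    k = min(n - 1, max(0, math.ceil(q * n) - 1))
    var_q = xs[k]
    tail = [x for x in xs if x > var_q]
    if not tail:
        raise ValueError("no observations exceed the empirical VAR at this level")
    tvar = sum(tail) / len(tail)                              # mean of the tail losses
    tv = sum((x - tvar) ** 2 for x in tail) / len(tail)      # variance of the tail losses
    return {
        "VAR": var_q,
        "TVAR": tvar,
        "TV": tv,
        "TMV": tvar + pi * tv,   # tail mean-variance
        "MEL": tvar - var_q,     # mean excess loss
    }


if __name__ == "__main__":
    # Losses 1, 2, ..., 10 at q = 0.5: VAR = 5, TVAR = 8, TV = 2, TMV = 9, MEL = 3.
    print(risk_indicators(range(1, 11), q=0.5))
```

Because TVAR averages only the losses strictly above VAR, MEL is positive by construction, which matches the sign of the last column in every row of Tables A1–A5.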

References

- Chen, Z. A new two-parameter lifetime distribution with bathtub shape or increasing failure rate function. Stat. Probab. Lett. 2000, 49, 155–161.

- Cooray, K.; Ananda, M.M. Modeling actuarial data with a composite lognormal-Pareto model. Scand. Actuar. J. 2005, 2005, 321–334.

- Shrahili, M.; Elbatal, I.; Yousof, H.M. Asymmetric Density for Risk Claim-Size Data: Prediction and Bimodal Data Applications. Symmetry 2021, 13, 2357.

- Mohamed, H.S.; Cordeiro, G.M.; Minkah, R.; Yousof, H.M.; Ibrahim, M. A size-of-loss model for the negatively skewed insurance claims data: Applications, risk analysis using different methods and statistical forecasting. J. Appl. Stat. Forthcom. 2022.

- Klugman, S.A.; Panjer, H.H.; Willmot, G.E. Loss Models: From Data to Decisions; John Wiley & Sons: Hoboken, NJ, USA, 2012; Volume 715.

- Wirch, J.L. Raising value at risk. N. Am. Actuar. J. 1999, 3, 106–115.

- Tasche, D. Expected Shortfall and Beyond. J. Bank. Financ. 2002, 26, 1519–1533.

- Acerbi, C.; Tasche, D. On the coherence of expected shortfall. J. Bank. Financ. 2002, 26, 1487–1503.

- Furman, E.; Landsman, Z. Tail Variance premium with applications for elliptical portfolio of risks. ASTIN Bull. J. IAA 2006, 36, 433–462.

- Landsman, Z. On the tail mean–Variance optimal portfolio selection. Insur. Math. Econ. 2010, 46, 547–553.

- Artzner, P. Application of coherent risk measures to capital requirements in insurance. N. Am. Actuar. J. 1999, 3, 11–25.

- Yousof, H.M.; Afify, A.Z.; Hamedani, G.G.; Aryal, G. The Burr X generator of distributions for lifetime data. J. Stat. Theory Appl. 2017, 16, 288–305.

- Cordeiro, G.M.; Afify, A.Z.; Yousof, H.M.; Pescim, R.R.; Aryal, G.R. The exponentiated Weibull-H family of distributions: Theory and Applications. Mediterr. J. Math. 2017, 14, 155.

- Dey, S.; Kumar, D.; Ramos, P.L.; Louzada, F. Exponentiated Chen distribution: Properties and estimation. Commun. Stat. Simul. Comput. 2017, 46, 8118–8139.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).