Abstract

In matrix analysis, the scaling technique reduces the risk of ill-conditioning of a matrix. This article proposes a one-parameter scaling memoryless Davidon–Fletcher–Powell (DFP) algorithm for solving a system of monotone nonlinear equations with convex constraints. A measure function that involves all the eigenvalues of the memoryless DFP matrix is minimized to obtain the optimal value of the scaling parameter. The resulting algorithm is matrix-free and derivative-free, has low memory requirements, and is globally convergent under some mild conditions. A numerical comparison shows that the algorithm is efficient in terms of the number of iterations, function evaluations, and CPU time. The performance of the algorithm is further illustrated by solving problems arising from image restoration.

Keywords:

one-parameter scaling; memoryless DFP algorithm; measure function; convex constraints; image restoration

MSC:

90C30; 90C26; 90C06; 90C56

1. Introduction

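The classical quasi–Newton methods are numerically efficient because they work with approximations of the Jacobian matrix rather than the exact Jacobian. Consider the following system of monotone nonlinear equations (SMNE) with convex constraints

$$F(x) = 0, \quad x \in \chi, \tag{1}$$

where $x \in \mathbb{R}^n$, $\chi \subseteq \mathbb{R}^n$ is a nonempty closed convex set and $F:\mathbb{R}^n \to \mathbb{R}^n$ is a continuous and monotone function. The system is monotone if

$$(F(x) - F(y))^T (x - y) \geq 0, \quad \forall x, y \in \mathbb{R}^n. \tag{2}$$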

The solution of system (1) is important in many fields of science and engineering such as control systems [1], signal recovery and image restoration in compressive sensing [2,3,4,5], communications and networking [6], data estimation and modeling [7], and geophysics [8].

Newton’s method is one of the oldest techniques for solving the system (1) when the Jacobian matrix is invertible [9]. Davidon [10] originally derived a method, later reformulated by Fletcher and Powell [11] and now known as the Davidon–Fletcher–Powell (DFP) method, for approximating the Hessian matrix in unconstrained optimization problems. The method has been widely used for solving nonlinear optimization problems [12,13,14,15].

In recent years, there has been a lot of interest in developing methods for solving convex-constrained nonlinear monotone problems. Some examples include the Levenberg–Marquardt method [16], scaled trust–region method [17], interior global method [18], Dogleg method [19], Polak–Ribière–Polyak (PRP) method [20], Dai and Yuan method [21], descent derivative–free method [22], projection–based method [23], double projection method [24], multivariate spectral gradient method [25], extension of the CG_DESCENT projection method [26], new derivative–free SCG–type projection method [27], partially symmetrical derivative–free Liu–Storey projection method [28], modified spectral gradient projection method [29], efficient generalized conjugate gradient (CG) algorithm [30], modified Fletcher–Reeves method [31], modified descent three–term CG method [32], new hybrid CG projection method [33], self–adaptive three–term CG method [34], efficient three–term CG method [35], derivative–free RMIL CG method [36], and spectral three–term conjugate descent method [37]. Solodov and Svaiter [9] introduced an inexact Newton method with the interesting property that the whole sequence of iterates converges to a solution of the system without any regularity assumptions. Later on, Zhou and Toh [38] proved that the Solodov and Svaiter method converges superlinearly under some assumptions. Furthermore, this algorithm was extended by Zhou and Li [39,40] to the Broyden–Fletcher–Goldfarb–Shanno (BFGS) and limited memory BFGS methods. Zhang and Zhou [41] proposed the spectral gradient projection method for solving the system (1), which combines the spectral gradient method [42] with the projection method [9]. Wang et al. [43] extended the work of Solodov and Svaiter [9] to monotone equations with convex constraints. Yu et al. [44] extended the spectral gradient projection method to convex-constrained problems. Xiao and Zhu [45] extended the CG_DESCENT method [46,47] to large–scale nonlinear convex-constrained monotone equations. Moreover, Awwal et al. [48] derived a new hybrid spectral gradient projection method for the solution of the system (1). Recently, Sabi’u et al. [49] modified the Hager–Zhang CG method by using singular value analysis for solving the system (1).

Inspired by the work of Sabi’u et al. [50,51] on optimal choices of the non–negative constants involved in some nonlinear CG methods, and by the measure function scaling techniques introduced by Andrei [52,53], this paper makes the following contributions:

- We scale one term of the DFP update formula and find the optimal value of the scaling parameter using the idea of a measure function.

- Based on the optimal value of the scaling parameter, we derive a new search direction for the DFP algorithm.

- We propose a projection-based DFP algorithm for solving large-scale systems of nonlinear monotone equations.

- We establish the global convergence of the proposed algorithm under some mild assumptions.

- We apply the algorithm successfully to some image restoration problems.

The remainder of this paper is organized as follows. The derivation of the proposed algorithm is given in Section 2. The global convergence of the algorithm is established in Section 3. The numerical results are presented in Section 4. Section 5 presents applications to image restoration together with their physical explanation. The conclusion is provided in Section 6.

2. One-Parameter Scaled DFP Algorithm

Quasi-Newton schemes are efficient because they use an approximation of the Jacobian in place of the exact Jacobian. The DFP update is one of the popular quasi-Newton schemes used for solving large-scale systems of nonlinear algebraic equations. In this section, we present a one-parameter scaled DFP algorithm for solving the system (1). The standard iterative formula for the DFP algorithm is as follows

where is the previous iteration, is the current iteration, is the step–length and is the DFP direction defined as

with and is the DFP matrix at . The updating formula for DFP can be found in [10,54] as

where , and . The symmetry of is inherited directly from the symmetry of . One of the well-known properties of the DFP update is that is positive definite for [54]. The main contribution of the scaling technique in the DFP update formula is that it reduces the risk of ill–conditioning of the matrix for all . Now, multiplying the third term on the right-hand side of (5) by a positive scalar , we have

where needs to be determined.

The memoryless concept is applied to the DFP formula to avoid computing and storing the matrix at each iteration. To this end, we replace in (6) with the identity matrix (where n is the dimension of the problem), so that formula (6) becomes

In addition, we apply to (7) the measure function introduced by Byrd and Nocedal [55]:

$$\psi(A) = \mathrm{tr}(A) - \ln(\det(A)), \tag{8}$$

where tr denotes the trace of a positive definite matrix $A$, ln is the natural logarithm, and det is the determinant of $A$. The measure function works with the trace and the determinant of $A$ at the same time, both to modify the quasi–Newton method and to collect information about its behavior. It is a measure of matrices that involves all the eigenvalues of $A$ [56]. For positive definite $A$, the function $\psi$ is strictly convex [57], while it becomes unbounded when $A$ is either singular or infinite.
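As a hedged numerical illustration of this idea, the Python sketch below evaluates the Byrd–Nocedal measure $\psi(A) = \mathrm{tr}(A) - \ln(\det(A))$ on a scaled memoryless DFP matrix. It assumes the standard memoryless form $H = I - \frac{y y^T}{y^T y} + \gamma \frac{s s^T}{y^T s}$ with $y^T s > 0$; the symbols `s`, `y`, `gamma` and all function names are illustrative choices and may differ from the notation of (7). Under that assumption, $\det(H) = \gamma\, y^T s / (y^T y)$ and $\mathrm{tr}(H) = n - 1 + \gamma\, s^T s / (y^T s)$, so $\psi$ is convex in $\gamma$ with stationary point $\gamma^* = y^T s / (s^T s)$.

```python
import numpy as np

def measure(A):
    """Byrd-Nocedal measure psi(A) = tr(A) - ln(det(A)) for a symmetric positive definite A."""
    sign, logdet = np.linalg.slogdet(A)
    if sign <= 0:
        raise ValueError("A must have a positive determinant")
    return np.trace(A) - logdet

def scaled_memoryless_dfp(s, y, gamma):
    """Assumed scaled memoryless DFP matrix (illustrative notation, not taken from the paper)."""
    n = s.size
    return np.eye(n) - np.outer(y, y) / (y @ y) + gamma * np.outer(s, s) / (y @ s)

rng = np.random.default_rng(0)
n = 5
s = rng.standard_normal(n)
B = rng.standard_normal((n, n))
y = s + B @ (B.T @ s)            # guarantees y^T s = ||s||^2 + ||B^T s||^2 > 0

# Stationary point of psi with respect to gamma under the assumed form
gamma_star = (y @ s) / (s @ s)

# Evaluate psi on a small grid of scalings around gamma_star
gammas = gamma_star * np.array([0.25, 0.5, 1.0, 2.0, 4.0])
values = [measure(scaled_memoryless_dfp(s, y, g)) for g in gammas]
print("grid index of the smallest measure:", int(np.argmin(values)))
```

The grid is only a sanity check of the closed-form stationary point; a real implementation would use the formula directly and never build the matrix.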

Now, the determinant of can be calculated by the Sherman–Morrison formula [58], i.e.,

to have

Using simple algebra on (7), the trace of is given by

Differentiating (12) with respect to , we have

Furthermore, Solodov and Svaiter [9] proposed a predictor-corrector method, in which

and

where

In this work, we use an iterative scheme as

where is any positive constant and P is the projection operator on a convex subset defined by

We refer readers to [59] for further details on the advantages and applications of the projection operator. The one-parameter scaled memoryless DFP (SMDFP) algorithm for solving system (1) is summarized in Algorithm 1:

Algorithm 1: Scaled Memoryless DFP (SMDFP) Method

Step 0. Given a starting point .

Step 1. Choose values for with . Compute , set .

Step 2. Compute and test the stopping criterion: if , then stop; otherwise, move to the next step.

Step 3. Compute the step size satisfying the line search (23).

Step 4. Compute the differences and .

Step 5. Compute the search direction using (17).

Step 6. Compute the next iterate using (21).

Step 7. Set and go to Step 2.

Remark 1.

The proposed SMDFP algorithm is both matrix-free and derivative-free. These properties make it well suited to solving large-scale problems.
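The overall structure of such a matrix-free, derivative-free projection method can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the backtracking condition $-F(x+\alpha d)^T d \geq \sigma \alpha \|d\|^2$, the hyperplane projection step in the style of Solodov and Svaiter, the scaling $\gamma = y^T s / (s^T s)$, the choice $s = x_{k+1} - x_k$, $y = F(x_{k+1}) - F(x_k)$, the sufficient-descent safeguard, and every parameter value are assumptions chosen for illustration and may differ from (17), (21), and (23).

```python
import numpy as np

def smdfp_sketch(F, project, x0, tol=1e-6, max_iter=1000,
                 sigma=1e-4, rho=0.5, c=1e-8):
    """Projection iteration with a scaled memoryless DFP-type direction (illustrative)."""
    x = project(np.asarray(x0, dtype=float))
    Fx = F(x)
    d = -Fx                                  # no (s, y) pair yet, start with -F(x)
    for k in range(max_iter):
        if np.linalg.norm(Fx) <= tol:
            return x, k
        # Assumed line search: backtrack until -F(z)^T d >= sigma * alpha * ||d||^2
        alpha = 1.0
        z = x + alpha * d
        Fz = F(z)
        while -(Fz @ d) < sigma * alpha * (d @ d) and alpha > 1e-12:
            alpha *= rho
            z = x + alpha * d
            Fz = F(z)
        # Project x onto the separating hyperplane through z, then onto the feasible set
        nFz2 = Fz @ Fz
        if nFz2 == 0.0:
            x_new = project(z)
        else:
            zeta = (Fz @ (x - z)) / nFz2
            x_new = project(x - zeta * Fz)
        F_new = F(x_new)
        s, y = x_new - x, F_new - Fx
        ys, yy = y @ s, y @ y
        d = -F_new                           # fallback direction
        if ys > 1e-12 and yy > 0.0:
            gamma = ys / (s @ s)
            # Matrix-free product -(I - y y^T/(y^T y) + gamma s s^T/(y^T s)) F
            cand = -(F_new - (y @ F_new) / yy * y + gamma * (s @ F_new) / ys * s)
            if F_new @ cand <= -c * (F_new @ F_new):   # keep sufficient-descent directions only
                d = cand
        x, Fx = x_new, F_new
    return x, max_iter

# Illustrative monotone test problem on the nonnegative orthant: F_i(x) = exp(x_i) - 1
F = lambda x: np.exp(x) - 1.0
project = lambda x: np.maximum(x, 0.0)
x_star, iters = smdfp_sketch(F, project, np.ones(100))
print(iters, np.linalg.norm(F(x_star)))
```

Only vectors and inner products are stored, which is the point of the memoryless construction: the cost per iteration is a few function evaluations plus O(n) arithmetic.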

3. Convergence Analysis

This section provides the global convergence of the SMDFP algorithm using the following assumptions:

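- (a)

- (b) For a constant $L > 0$, the function F is Lipschitz continuous on $\mathbb{R}^n$, i.e.,

$$\|F(x) - F(y)\| \leq L \|x - y\|, \quad \forall x, y \in \mathbb{R}^n.$$

- (c) Let $x^*$ be the solution of the problem (1) such that $F(x^*) = 0$.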

We first recall the non–expansive property of the projection operator [60].

Lemma 1.

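Suppose χ is a nonempty closed and convex subset of $\mathbb{R}^n$. Then the projection operator P satisfies

$$\|P(x) - P(y)\| \leq \|x - y\|, \quad \forall x, y \in \mathbb{R}^n,$$

which shows that P is Lipschitz continuous on $\mathbb{R}^n$ with Lipschitz constant $L = 1$.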
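The non-expansive property can be checked numerically for a simple convex set. The Python sketch below uses a box as an illustrative choice of χ (the helper name `project_box` and the bounds are hypothetical), for which the Euclidean projection reduces to componentwise clipping, and verifies $\|P(a) - P(b)\| \leq \|a - b\|$ on random pairs.

```python
import numpy as np

def project_box(x, lower, upper):
    """Euclidean projection onto the box {x : lower <= x <= upper}, a closed convex set."""
    return np.clip(x, lower, upper)

rng = np.random.default_rng(1)
lower, upper = np.zeros(4), np.ones(4)

# Random pairs of points, many of them outside the box
pairs = [(3 * rng.standard_normal(4), 3 * rng.standard_normal(4)) for _ in range(1000)]

# Check ||P(a) - P(b)|| <= ||a - b|| up to rounding
nonexpansive = all(
    np.linalg.norm(project_box(a, lower, upper) - project_box(b, lower, upper))
    <= np.linalg.norm(a - b) + 1e-12
    for a, b in pairs
)
print(nonexpansive)
```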

Lemma 2.

Proof.

Multiplying (17) by , we have

where the second inequality follows from the Cauchy–Schwarz inequality. Hence

□

Lemma 3.

Let the assumptions (a), (b), and (c) hold, then the sequences and generated by the SMDFP algorithm are bounded. Moreover, we have

and

Proof.

Firstly, we will show that the sequences and are bounded. Let be any solution of the problem (1). By monotonicity of F, we get

and from the definition of and line search (23), we have

Hence the sequence is decreasing and convergent. Moreover, the sequence is bounded. Furthermore, from (36), we can write

and repeating the same process, we get the result that

Moreover, from assumption (b), we have

We suppose that , then the sequence is bounded, i.e.,

From the inequality (41), it implies that

which shows that the sequence is also bounded. Moreover, from inequality (36), it follows that

which implies that

Now using the Cauchy–Schwarz inequality on (45), we have

Thus, by using (30), we have

Hence, the proof of (31) is completed. □

Lemma 4.

The direction generated by the SMDFP algorithm is bounded. That is

where q is some positive constant.

Proof.

□

Theorem 1.

Let the sequences and be generated by the SMDFP algorithm, then

Proof.

To prove that (51) holds, we consider the following two cases:

Case 1. Suppose the sequence is generated by the SMDFP method. Then we have

Assume that if

then

Then by continuity property of F, there will be some accumulation point in sequence such that . Since is going to converge and has an accumulation point , is going to converge to .

Case 2. Suppose (51) is not true, and then there exists some positive constant such that

By using Cauchy–Schwarz inequality on descent condition, we have

which implies that

4. Numerical Experimentation

In this section, we perform some numerical experiments to validate the SMDFP algorithm by comparing the computed results with the conjugate gradient hybrid (CGH) method [61] and with the generalized hybrid CGPM–based (GHCGP) method [62]. All algorithms are written in Matlab R2014 and run on an HP personal computer with an 8th Gen Intel Core i5 processor. In our experiments, all methods share a uniform stopping condition, and we used the published initial values for the comparison algorithms. However, we used and in the SMDFP algorithm. The numerical simulations are stopped upon either exceeding the dimension or . For the following problems see [63,64,65,66,67,68].

Problem 1

([63,64]). Set and function is described as

, for .

Problem 2

([65]). Set and function is described as

,

, for .

Problem 3

([66]). Set and function is described as

, for ,

.

Problem 4

([67]). Set and function is described as

, for .

Problem 5

([68]). Set and function is described as

, for .

Problem 6

([63]). Set and function is described as

, for .

Problem 7

([63,64]). Set and function is described as

, for .

Problem 8

([63]). Set and function is described as

,

, for .

In Tables 1–8, we used the initial points , , , , , , and . Moreover, the terms ITER, FEV, CPUT, and NORM stand for the number of iterations, the number of function evaluations, the CPU time (in seconds), and the norm of the function value, respectively. The failure of an algorithm is denoted by “_”. We further compare the numerical performance of the SMDFP algorithm with that of the CGH [61] and GHCGP [62] methods in terms of the number of iterations, function evaluations, CPU times, and error estimation.

Tables 1–8.

Numerical comparison of the SMDFP, CGH [61], and GHCGP [62] methods on Problems 1–8, respectively.

In Table 1 and Table 2, the SMDFP algorithm has fewer iterations and function evaluations, shorter CPU times, and smaller errors compared to the CGH [61] and GHCGP [62] methods. In Table 3, Table 5 and Table 6, the SMDFP algorithm requires more iterations and function evaluations than the two compared methods, but it still achieves shorter CPU times and smaller errors. The CGH method failed for Problems 4 and 7, as shown in Table 4 and Table 7. Furthermore, Table 8 shows that the GHCGP algorithm failed for Problem 8. Overall, the SMDFP algorithm is more efficient than both the CGH and GHCGP algorithms in terms of the number of iterations, function evaluations, CPU times, and error estimation, as shown in Tables 1–8.

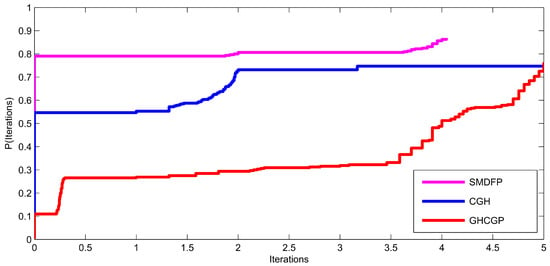

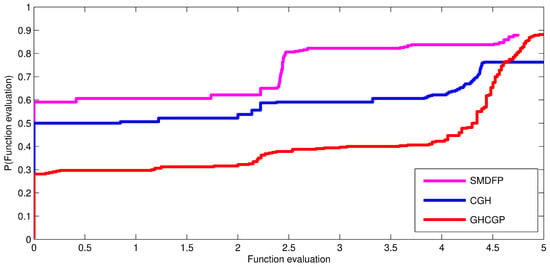

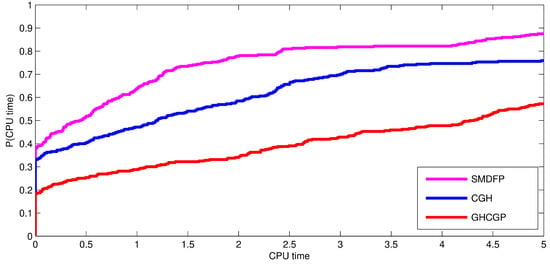

Next, the robustness of the SMDFP algorithm is illustrated by Figure 1, Figure 2 and Figure 3, which show the performance profiles based on the Dolan and Moré procedure [69]. In Figure 1, the top curve corresponds to the SMDFP algorithm with respect to the number of iterations, and it lies above the curves of the CGH [61] and GHCGP [62] methods. Similarly, in the case of function evaluations and CPU times, the SMDFP algorithm has better performance, as shown in Figure 2 and Figure 3, respectively. These profiles indicate that the proposed algorithm outperforms the two compared methods on this test set in terms of iterations, function evaluations, and CPU times.

Figure 1.

Performance of the SMDFP, CGH [61], and GHCGP [62] methods for the number of iterations.

Figure 2.

Performance of the SMDFP, CGH [61], and GHCGP [62] methods for the number of function evaluations.

Figure 3.

Performance of the SMDFP, CGH [61], and GHCGP [62] methods for the CPU time.
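For readers unfamiliar with performance profiles of the Dolan and Moré type, the Python sketch below shows how such curves are typically computed: each solver's cost on a problem is divided by the best cost on that problem, and the profile value at τ is the fraction of problems whose ratio is at most τ. The cost table is made-up illustrative data, not the results of Tables 1–8, and the function name `performance_profile` is hypothetical. The sketch assumes every problem is solved by at least one solver.

```python
import numpy as np

def performance_profile(costs, taus):
    """Return rho[i, s] = fraction of problems with ratio r_{p,s} <= taus[i].

    costs: array of shape (n_problems, n_solvers); np.inf marks a failure.
    """
    costs = np.asarray(costs, dtype=float)
    best = costs.min(axis=1, keepdims=True)      # best cost per problem
    ratios = costs / best                        # performance ratios r_{p,s}
    return np.array([(ratios <= tau).mean(axis=0) for tau in taus])

# Hypothetical iteration counts for 4 problems and 3 solvers (inf = failure)
costs = [
    [10.0, 12.0, 15.0],
    [20.0, np.inf, 18.0],
    [5.0, 5.0, 9.0],
    [30.0, 45.0, np.inf],
]
taus = [1.0, 1.5, 2.0]
rho = performance_profile(costs, taus)
print(rho)
```

The value at τ = 1 is the share of problems on which a solver is the best (ties included), and the limit for large τ is the share of problems it solves at all, which is why a curve that stays on top indicates both efficiency and robustness.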

5. Applications

5.1. Image Restoration

This section describes techniques for reducing noise and recovering lost image resolution. Such techniques have applications in medical imaging [70], astronomical imaging [71], file restoration [72], and image coding [73]. Let be the original sparse signal, and be an observation satisfying

where is a linear operator. This problem is used for finding sparse solutions to ill-conditioned linear systems of equations. According to Bruckstein et al. [74], a function containing a quadratic error term as well as a sparse $\ell_1$–regularization term is minimized as,

where , and is a nonnegative balance parameter, $\|x\|_2$ denotes the Euclidean norm of x, and $\|x\|_1$ is the $\ell_1$–norm of x. Problem (62) is a convex unconstrained minimization problem commonly used in compressive sensing if the original signal is sparse or nearly sparse in some orthogonal basis.

Many iterative techniques for finding a solution to (62) have been introduced in the literature, such as those of Figueiredo et al. [75], Hale et al. [76], Figueiredo et al. [77], Van Den Berg et al. [78], Beck et al. [79], and Hager et al. [80]. The GPSR method expresses (62) as a quadratic problem through the following steps.

Let be split into its positive and negative parts:

where for all , and . By the definition of the $\ell_1$–norm, we have , where . Thus, (62) can be written as:

which is a bound–constrained and quadratic problem. Moreover, following Figueiredo et al. [77], a standard form of problem (64) can be written as:

where , and . Problem (65) is a convex quadratic problem because A is a positive semi-definite matrix, as proved by Xiao et al. [5]. They also transformed (65) into a linear variational inequality problem, which is equivalent to a linear complementarity problem. Moreover, v is a solution to the linear complementarity problem if and only if it is a solution to the nonlinear equation

which is continuous and monotone, as shown by Xiao et al. [5]. So, problem (66) can be solved using the SMDFP method.
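To make the reformulation concrete, the Python sketch below evaluates a residual of the form $F(v) = \min\{v, Av + c\}$ (componentwise minimum) without forming the matrix A. It assumes the usual GPSR construction, in which v stacks the positive and negative parts of x, $A = \begin{pmatrix} U^T U & -U^T U \\ -U^T U & U^T U \end{pmatrix}$, and $c = \mu \mathbf{1} + (-U^T t,\; U^T t)$. The names `U`, `t`, `mu`, `c` and `make_residual` are illustrative and may not match the notation of (65) and (66).

```python
import numpy as np

def make_residual(U, t, mu):
    """Return F(v) = min(v, A v + c) for the assumed GPSR-type reformulation."""
    n = U.shape[1]
    Ut = U.T @ t
    c = mu * np.ones(2 * n) + np.concatenate([-Ut, Ut])

    def F(v):
        # A v = [w, -w] with w = U^T U (x_plus - x_minus), computed without forming U^T U
        w = U.T @ (U @ (v[:n] - v[n:]))
        return np.minimum(v, np.concatenate([w, -w]) + c)

    return F

# Sanity check with U = I, where the l1-regularized least-squares solution
# is given by soft-thresholding of t
t = np.array([2.0, -0.3, -1.5, 0.1])
mu = 0.5
x_soft = np.sign(t) * np.maximum(np.abs(t) - mu, 0.0)
v_soft = np.concatenate([np.maximum(x_soft, 0.0), np.maximum(-x_soft, 0.0)])

F = make_residual(np.eye(4), t, mu)
residual = F(v_soft)
print(np.linalg.norm(residual))
```

Each evaluation costs two matrix-vector products with U, so a derivative-free solver can be applied even when the 2n × 2n matrix A would be too large to store.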

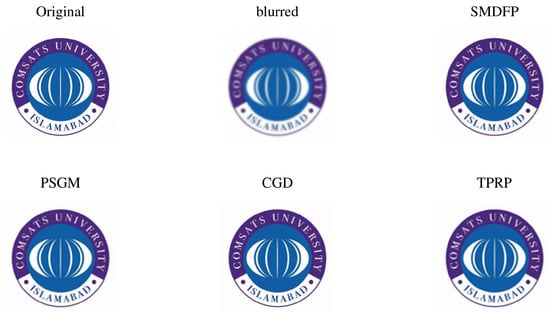

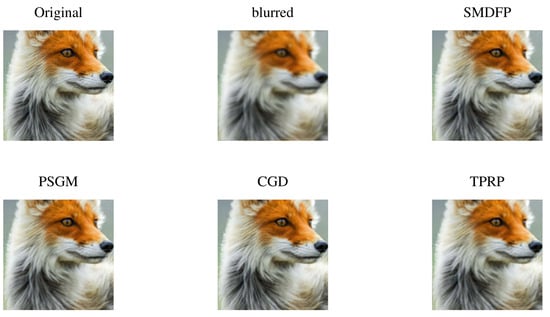

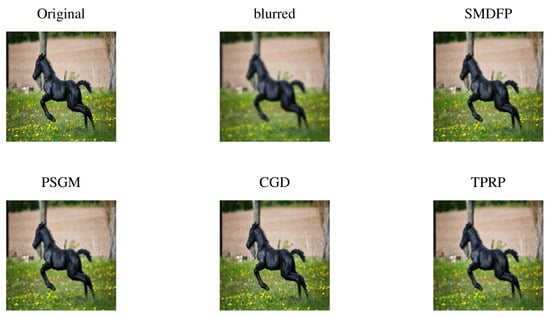

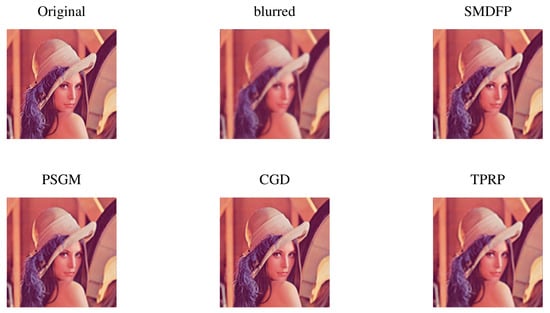

5.2. Implementation

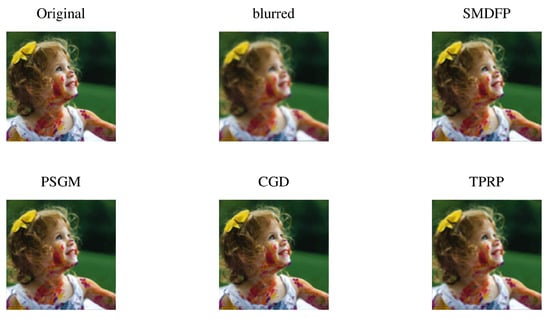

Table 9 shows the numerical comparison of four different methods, namely the SMDFP method, CGD method [45], PSGM method [81], and TPRP method [82], applied to seven different images, namely, baby, COMSATS, fox, horse, Lena, and Thai-Culture. The mean squared error (MSE) [45] between the blurred and restored x images is

and the signal–to–noise ratio (SNR) [45] of the recovered images is

Table 9.

Numerical comparison of SMDFP, CGD [45], PSGM [81], and TPRP [82] methods.
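The two quality measures reported in Table 9 can be computed as in the Python sketch below. It assumes the commonly used definitions $\mathrm{MSE} = \frac{1}{n}\|\bar{x} - x\|^2$ and $\mathrm{SNR} = 20 \log_{10}\left(\|\bar{x}\| / \|x - \bar{x}\|\right)$, where $\bar{x}$ is the reference image and $x$ the restored one; these formulas, the function names, and the toy data are assumptions for illustration and should be checked against [45].

```python
import numpy as np

def mse(reference, restored):
    """Mean squared error between a reference image and a restored image."""
    reference = np.asarray(reference, dtype=float)
    restored = np.asarray(restored, dtype=float)
    return np.mean((reference - restored) ** 2)

def snr_db(reference, restored):
    """Signal-to-noise ratio in decibels, 20*log10(||reference|| / ||restored - reference||)."""
    reference = np.asarray(reference, dtype=float)
    restored = np.asarray(restored, dtype=float)
    return 20.0 * np.log10(np.linalg.norm(reference) / np.linalg.norm(restored - reference))

# Toy data: a two-pixel "image" and a slightly perturbed restoration
reference = np.array([3.0, 4.0])
restored = np.array([3.0, 4.5])
print(mse(reference, restored), snr_db(reference, restored))
```

A lower MSE and a higher SNR both indicate a restoration closer to the reference.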

We measure the image restoration quality by both MSE and SNR, taking and . We studied a compressive sensing situation in which the aim was to reconstruct a length-n sparse signal from m observations, where . Due to the PC’s storage restrictions, we test a modest-size signal with , , and the original signal has 26 randomly placed non-zero elements. The random matrix U is the Gaussian matrix created by the Matlab command . The measurement t in this test involves noise,

where is the Gaussian noise distributed as with . We used as the merit function, , is compelled to decrease as adopted in [75], and the iteration terminates if

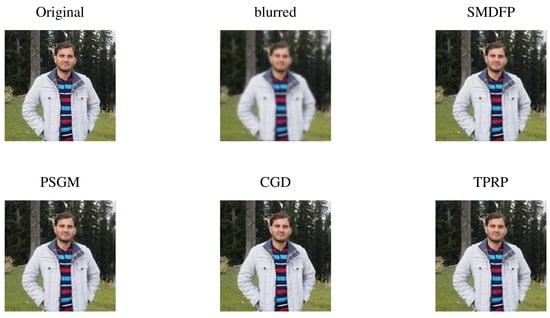

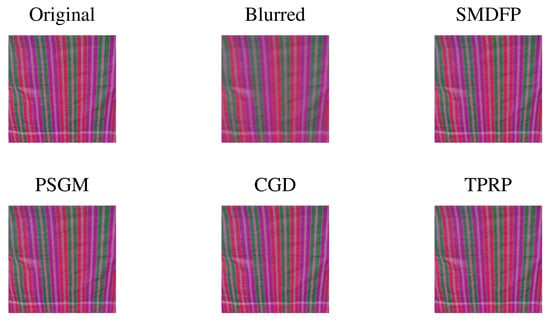

All of the codes in this section are also executed on the same machine and software as described in Section 4. In summary, the SMDFP method performs better than the CGD [45], PSGM [81], and TPRP [82] methods in terms of the number of iterations, CPU time, and SNR quality of the recovered images, as is clear from Table 9. Likewise, Figures 4–10 show that the proposed method restored all the test images with fewer iterations and less CPU time.
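A compressive sensing test problem of the kind described in this section can be generated as in the Python sketch below. Only the number of non-zero entries (26) comes from the text; the signal length, the number of observations, the noise level, and the helper name `make_sparse_problem` are hypothetical placeholders, and NumPy's random generator stands in for the Matlab command used by the authors.

```python
import numpy as np

def make_sparse_problem(n, m, k, noise_std, seed=0):
    """Build a random sparse-recovery instance t = U x_true + noise."""
    rng = np.random.default_rng(seed)
    x_true = np.zeros(n)
    support = rng.choice(n, size=k, replace=False)   # k distinct random positions
    x_true[support] = rng.standard_normal(k)
    U = rng.standard_normal((m, n))                  # Gaussian measurement matrix
    t = U @ x_true + noise_std * rng.standard_normal(m)
    return U, t, x_true

# n, m, and noise_std are placeholder values, not the ones used in the experiments
U, t, x_true = make_sparse_problem(n=256, m=64, k=26, noise_std=1e-2)
print(U.shape, t.shape, np.count_nonzero(x_true))
```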

Figures 4–10.

Comparison of the original image, blurred image, SMDFP image, CGD image, PSGM image, and TPRP image for the number of iterations, CPU time, MSE, and SNR.

6. Conclusions

This paper presents a one-parameter SMDFP method for solving a system of monotone nonlinear equations with convex constraints. We scaled one term of the DFP update formula and found the optimal value of the scaling parameter using the idea of a measure function. Based on this optimal value, we derived a modified search direction for the DFP algorithm. The proposed method is globally convergent under the monotonicity and Lipschitz continuity assumptions. The method’s robustness is demonstrated by solving large-scale monotone nonlinear equations and by comparisons with the related CGH and GHCGP methods. Lastly, the algorithm is successfully applied to some image restoration problems. The proposed scaled DFP direction can further be applied to unconstrained optimization problems, motion control of two coplanar joint robot manipulators, and many other problems.

Author Contributions

Conceptualization, N.U., J.S., X.J., A.M.A., N.P. and B.P.; Methodology, N.U., A.S., X.J., A.M.A. and N.P.; Software, J.S., X.J., A.M.A. and N.P.; Validation, J.S., X.J., N.P. and S.K.S.; Formal analysis, A.S.; Investigation, N.U., A.S. and S.K.S.; Resources, A.M.A.; Data curation, N.U. and S.K.S.; Writing—original draft, N.U., J.S., X.J., A.M.A., N.P., S.K.S. and B.P.; Writing—review & editing, N.U., A.S., N.P. and B.P.; Visualization, A.M.A. and N.P.; Supervision, A.S., J.S., X.J. and B.P.; Project administration, N.P. and B.P.; Funding acquisition, N.P. and B.P. All authors have read and agreed to the published version of the manuscript.

Funding

The sixth author was supported by Phetchabun Rajabhat University and Thailand Science Research and Innovation (grant number 182093). The eighth author was partially supported by Chiang Mai University and Fundamental Fund 2023, Chiang Mai University, and the NSRF via the Program Management Unit for Human Resources and Institutional Development, Research and Innovation (grant number B05F640183).

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Prajna, S.; Parrilo, P.A.; Rantzer, A. Nonlinear control synthesis by convex optimization. IEEE Trans. Autom. Control 2004, 49, 310–314. [Google Scholar] [CrossRef]

- Abubakar, A.B.; Kumam, P.; Mohammad, H.; Awwal, A.M. An efficient conjugate gradient method for convex constrained monotone nonlinear equations with applications. Mathematics 2019, 7, 767. [Google Scholar] [CrossRef]

- Hu, Y.; Wang, Y. An efficient projected gradient method for convex constrained monotone equations with applications in compressive sensing. J. Appl. Math. Phys. 2020, 8, 983–998. [Google Scholar] [CrossRef]

- Liu, J.K.; Du, X.L. A gradient projection method for the sparse signal reconstruction in compressive sensing. Appl. Anal. 2018, 97, 2122–2131. [Google Scholar] [CrossRef]

- Xiao, Y.; Wang, Q.; Hu, Q. Non–smooth equations based method for (l1)–norm problems with applications to compressive sensing. Nonlinear Anal. Theory Methods Appl. 2011, 74, 3570–3577. [Google Scholar] [CrossRef]

- Luo, Z.Q.; Yu, W. An introduction to convex optimization for communications and signal processing. IEEE J. Sel. Areas Commun. 2006, 24, 1426–1438. [Google Scholar]

- Evgeniou, T.; Pontil, M.; Toubia, O. A convex optimization approach to modelling consumer heterogeneity in conjoint estimation. Mark. Sci. 2007, 26, 805–818. [Google Scholar] [CrossRef]

- Bello, L.; Raydan, M. Convex constrained optimization for the seismic reflection tomography problem. J. Appl. Geophys. 2007, 62, 158–166. [Google Scholar] [CrossRef]

- Solodov, M.V.; Svaiter, B.F. A globally convergent inexact Newton method for systems of monotone equations. In Reformulation: Nonsmooth, Piecewise Smooth, Semismooth and Smoothing Methods; Fukushima, M., Qi, L., Eds.; Springer: Boston, MA, USA, 1998; Volume 22, pp. 355–369. [Google Scholar]

- Davidon, W.C. Variable metric method for minimization. SIAM J. Optim. 1991, 1, 1–17. [Google Scholar] [CrossRef]

- Fletcher, R.; Powell, M.J.D. A rapidly convergent descent method for minimization. Comput. J. 1963, 6, 163–168. [Google Scholar] [CrossRef]

- Dingguo, P. Superlinear convergence of the DFP algorithm without exact line search. Acta Math. Appl. Sin. 2001, 17, 430–432. [Google Scholar] [CrossRef]

- Dingguo, P.; Weiwen, T. A class of Broyden algorithms with revised search directions. Asia–Pac. J. Oper. Res. 1997, 14, 93–109. [Google Scholar]

- Pu, D. Convergence of the DFP algorithm without exact line search. J. Optim. Theory Appl. 2002, 112, 187–211. [Google Scholar] [CrossRef]

- Pu, D.; Tian, W. The revised DFP algorithm without exact line search. J. Comput. Appl. Math. 2003, 154, 319–339. [Google Scholar] [CrossRef]

- Kanzow, C.; Yamashita, N.; Fukushima, M. Levenberg–Marquardt methods with strong local convergence properties for solving nonlinear equations with convex constraints. J. Comput. Appl. Math. 2005, 173, 321–343. [Google Scholar] [CrossRef]

- Bellavia, S.; Macconi, M.; Morini, B. A scaled trust–region solver for constrained nonlinear equations. Comput. Optim. Appl. 2004, 28, 31–50. [Google Scholar] [CrossRef]

- Bellavia, S.; Morini, B. An interior global method for nonlinear systems with simple bounds. Optim. Methods Softw. 2005, 20, 453–474. [Google Scholar] [CrossRef]

- Bellavia, S.; Morini, B.; Pieraccini, S. Constrained Dogleg methods for nonlinear systems with simple bounds. Comput. Optim. Appl. 2012, 53, 771–794. [Google Scholar] [CrossRef]

- Yu, G. A derivative–free method for solving large–scale nonlinear systems of equations. J. Ind. Manag. Optim. 2010, 6, 149–160. [Google Scholar] [CrossRef]

- Liu, J.; Feng, Y. A derivative–free iterative method for nonlinear monotone equations with convex constraints. Numer. Algorithms 2019, 82, 245–262. [Google Scholar] [CrossRef]

- Mohammad, H.; Abubakar, A.B. A descent derivative–free algorithm for nonlinear monotone equations with convex constraints. RAIRO–Oper. Res. 2020, 54, 489–505. [Google Scholar] [CrossRef]

- Wang, C.; Wang, Y. A super–linearly convergent projection method for constrained systems of nonlinear equations. J. Glob. Optim. 2009, 44, 283–296. [Google Scholar] [CrossRef]

- Ma, F.; Wang, C. Modified projection method for solving a system of monotone equations with convex constraints. J. Appl. Math. Comput. 2010, 34, 47–56. [Google Scholar] [CrossRef]

- Yu, G.; Niu, S.; Ma, J. Multivariate spectral gradient projection method for nonlinear monotone equations with convex constraints. J. Ind. Manag. Optim. 2013, 9, 117–129. [Google Scholar] [CrossRef]

- Liu, J.K.; Li, S.J. A projection method for convex constrained monotone nonlinear equations with applications. Comput. Math. Appl. 2015, 70, 2442–2453. [Google Scholar] [CrossRef]

- Ou, Y.; Li, J. A new derivative–free SCG–type projection method for nonlinear monotone equations with convex constraints. J. Appl Math Comput. 2018, 56, 195–216. [Google Scholar] [CrossRef]

- Liu, J.K.; Xu, J.L.; Zhang, L.Q. Partially symmetrical derivative–free Liu–Storey projection method for convex constrained equations. Int. J. Comput. Math. 2019, 96, 1787–1798. [Google Scholar] [CrossRef]

- Zheng, L.; Yang, L.; Liang, Y. A modified spectral gradient projection method for solving non–linear monotone equations with convex constraints and its application. IEEE Access. 2020, 8, 92677–92686. [Google Scholar] [CrossRef]

- Liu, Y.; Storey, C. Efficient generalized conjugate gradient algorithms, Part 1: Theory. J. Optim. Theory Appl. 1991, 69, 129–137. [Google Scholar] [CrossRef]

- Dai, Y.H.; Yuan, Y. A nonlinear conjugate gradient method with a strong global convergence property. SIAM J. Optim. 1999, 10, 177–182. [Google Scholar] [CrossRef]

- Liu, S.Y.; Huang, Y.Y.; Jiao, H.W. Sufficient decent conjugate gradient methods for solving convex constrained nonlinear monotone equations. Abstr. Appl. Anal. 2014, 2014, 305643. [Google Scholar]

- Sun, M.; Liu, J. New hybrid conjugate gradient projection method for the convex constrained equations. Calcolo 2016, 53, 399–411. [Google Scholar] [CrossRef]

- Wang, X.Y.; Li, S.J.; Kou, X.P. A self–adaptive three–term conjugate gradient method for monotone nonlinear equations with convex constraints. Calcolo 2016, 53, 133–145. [Google Scholar] [CrossRef]

- Gao, P.; He, C. An efficient three–term conjugate gradient method for nonlinear monotone equations with convex constraints. Calcolo 2018, 55, 53. [Google Scholar] [CrossRef]

- Ibrahim, A.H.; Garba, A.I.; Usman, H.; Abubakar, J.; Abubakar, A.B. Derivative–free RMIL conjugate gradient method for convex constrained equations. Thai J. Math. 2019, 18, 212–232. [Google Scholar]

- Abubakar, A.B.; Rilwan, J.; Yimer, S.E.; Ibrahim, A.H.; Ahmed, I. Spectral three–term conjugate descent method for solving nonlinear monotone equations with convex constraints. Thai J. Math. 2020, 18, 501–517. [Google Scholar]

- Zhou, G.; Toh, K.C. Superlinear convergence of a Newton type algorithm for monotone equations. J. Optim. Theory Appl. 2005, 125, 205–221. [Google Scholar] [CrossRef]

- Zhou, W.J.; Li, D.H. A globally convergent BFGS method for nonlinear monotone equations without any merit functions. Math. Comput. 2008, 77, 2231–2240. [Google Scholar] [CrossRef]

- Zhou, W.; Li, D. Limited memory BFGS method for nonlinear monotone equations. J. Comput. Math. 2007, 25, 89–96. [Google Scholar]

- Zhang, L.; Zhou, W. Spectral gradient projection method for solving nonlinear monotone equations. J. Comput. Appl. Math. 2006, 196, 478–484. [Google Scholar] [CrossRef]

- Barzilai, J.; Borwein, J.M. Two–point step size gradient methods. IMA J. Numer. Anal. 1988, 8, 141–148. [Google Scholar] [CrossRef]

- Wang, C.; Wang, Y.; Xu, C. A projection method for a system of nonlinear monotone equations with convex constraints. Math. Methods Oper. Res. 2007, 66, 33–46. [Google Scholar] [CrossRef]

- Yu, Z.; Lin, J.; Sun, J.; Xiao, Y.; Liu, L.; Li, Z. Spectral gradient projection method for monotone nonlinear equations with convex constraints. Appl. Numer. Math. 2009, 59, 2416–2423. [Google Scholar] [CrossRef]

- Xiao, Y.; Zhu, H. A conjugate gradient method to solve convex constrained monotone equations with applications in compressive sensing. J. Math. Anal. Appl. 2013, 405, 310–319. [Google Scholar] [CrossRef]

- Hager, W.W.; Zhang, H. A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 2005, 16, 170–192. [Google Scholar] [CrossRef]

- Hager, W.W.; Zhang, H. CG_DESCENT, a conjugate gradient method with guaranteed descent. ACM Trans. Math. Softw. 2006, 32, 113–137. [Google Scholar] [CrossRef]

- Muhammed, A.A.; Kumam, P.; Abubakar, A.B.; Wakili, A.; Pakkaranang, N. A new hybrid spectral gradient projection method for monotone system of nonlinear equations with convex constraints. Thai J. Math. 2018, 16, 125–147. [Google Scholar]

- Sabi’u, J.; Shah, A.; Waziri, M.Y.; Ahmed, K. Modified Hager–Zhang conjugate gradient methods via singular value analysis for solving monotone nonlinear equations with convex constraint. Int. J. Comput. Methods 2021, 18, 2050043. [Google Scholar] [CrossRef]

- Sabi’u, J.; Shah, A.; Waziri, M.Y. A modified Hager–Zhang conjugate gradient method with optimal choices for solving monotone nonlinear equations. Int. J. Comput. Math. 2021, 99, 332–354. [Google Scholar] [CrossRef]

- Sabi’u, J.; Shah, A. An efficient three–term conjugate gradient–type algorithm for monotone nonlinear equations. RAIRO Oper. Res. 2021, 55, 1113–1127. [Google Scholar] [CrossRef]

- Andrei, N. A double parameter self–scaling memoryless BFGS method for unconstrained optimization. Comput. Appl. Math. 2020, 39, 1–14. [Google Scholar] [CrossRef]

- Andrei, N. A note on memory–less SR1 and memory–less BFGS methods for large–scale unconstrained optimization. Numer. Algorithms 2021, 99, 223–240.

- Fletcher, R. Practical Methods of Optimization, 2nd ed.; John Wiley & Sons: New York, NY, USA, 1990.

- Byrd, R.H.; Nocedal, J. A tool for the analysis of quasi–Newton methods with application to unconstrained minimization. SIAM J. Numer. Anal. 1989, 26, 727–739.

- Andrei, N. A double parameter scaled BFGS method for unconstrained optimization. J. Comput. Appl. Math. 2018, 332, 26–44.

- Fletcher, R. An overview of unconstrained optimization. In Algorithms for Continuous Optimization: The State of the Art; Spedicato, E., Ed.; Kluwer Academic Publishers: Boston, MA, USA, 1994; pp. 109–143.

- Sun, W.; Yuan, Y.X. Optimization Theory and Methods, Nonlinear Programming; Springer Science + Business Media: New York, NY, USA, 2006.

- Behrens, R.T.; Scharf, L.L. Signal processing applications of oblique projection operators. IEEE Trans. Signal Process. 1994, 42, 1413–1424.

- Zarantonello, E.H. Projections on convex sets in Hilbert space and spectral theory: Part I. Projections on convex sets: Part II. Spectral theory. Contrib. Nonlinear Funct. Anal. 1971, 5, 237–424.

- Halilu, A.S.; Majumder, A.; Waziri, M.Y.; Ahmed, K. Signal recovery with convex constrained nonlinear monotone equations through conjugate gradient hybrid approach. Math. Comput. Simul. 2021, 187, 520–539.

- Yin, J.H.; Jian, J.B.; Jiang, X.Z. A generalized hybrid CGPM–based algorithm for solving large–scale convex constrained equations with applications to image restoration. J. Comput. Appl. Math. 2021, 391, 113423.

- Sabi’u, J.; Shah, A.; Waziri, M.Y. Two optimal Hager–Zhang conjugate gradient methods for solving monotone nonlinear equations. Appl. Numer. Math. 2020, 153, 217–233.

- Ullah, N.; Sabi’u, J.; Shah, A. A derivative–free scaling memoryless Broyden–Fletcher–Goldfarb–Shanno method for solving a system of monotone nonlinear equations. Numer. Linear Algebra Appl. 2021, 28, e2374.

- Abubakar, A.B.; Sabi’u, J.; Kumam, P.; Shah, A. Solving nonlinear monotone operator equations via modified SR1 update. J. Appl. Math. Comput. 2021, 67, 343–373.

- Halilu, A.S.; Waziri, M.Y. A transformed double step length method for solving large–scale systems of nonlinear equations. J. Numer. Math. Stoch. 2017, 9, 20–32.

- Waziri, M.Y.; Muhammad, L.; Sabi’u, J. A simple three-term conjugate gradient algorithm for solving symmetric systems of nonlinear equations. Int. J. Adv. Appl. Sci. 2016, 5, 118–127.

- Birgin, E.G.; Martínez, J.M. A spectral conjugate gradient method for unconstrained optimization. Appl. Math. Optim. 2001, 43, 117–128.

- Dolan, E.D.; Moré, J.J. Benchmarking optimization software with performance profiles. Math. Program. 2002, 91, 201–213.

- Yasrib, A.; Suhaimi, M.A. Image processing in medical applications. J. Info. Tech. 2003, 3, 63–68.

- Collins, K.A.; Kielkopf, J.F.; Stassun, K.G.; Hessman, F.V. Image processing and photometric extraction for ultra-precise astronomical light curves. Astron. J. 2017, 153, 177.

- Mishra, R.; Mittal, N.; Khatri, S.K. Digital image restoration using image filtering techniques. IEEE Int. Conf. Autom. Comput. Tech. Manag. 2019, 6, 268–272.

- Sun, S.; He, T.; Chen, Z. Semantic structured image coding framework for multiple intelligent applications. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 3631–3642.

- Bruckstein, A.M.; Donoho, D.L.; Elad, M. From sparse solutions of systems of equations to sparse modeling of signals and images. SIAM Rev. 2009, 51, 34–81.

- Figueiredo, M.A.; Nowak, R.D. An EM algorithm for wavelet–based image restoration. IEEE Trans. Image Process. 2003, 12, 906–916.

- Hale, E.T.; Yin, W.; Zhang, Y. A Fixed–Point Continuation Method for (l1)–Regularized Minimization with Applications to Compressed Sensing; Technical Report TR07–07; Rice University: Houston, TX, USA, 2007; Volume 43, 44p.

- Figueiredo, M.A.; Nowak, R.D.; Wright, S.J. Gradient projection for sparse reconstruction: Application to compressed sensing and other inverse problems. IEEE J. Sel. Top. Signal Process. 2007, 1, 586–597.

- Van Den Berg, E.; Friedlander, M.P. Probing the Pareto frontier for basis pursuit solutions. SIAM J. Sci. Comput. 2008, 31, 890–912.

- Beck, A.; Teboulle, M. A fast iterative shrinkage–thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2009, 2, 183–202.

- Hager, W.W.; Phan, D.T.; Zhang, H. Gradient–based methods for sparse recovery. SIAM J. Imaging Sci. 2011, 4, 146–165.

- Awwal, A.M.; Kumam, P.; Mohammad, H.; Watthayu, W.; Abubakar, A.B. A Perry–type derivative–free algorithm for solving nonlinear system of equations and minimizing l1 regularized problem. Optimization 2021, 70, 1231–1259.

- Ibrahim, A.H.; Deepho, J.; Abubakar, A.B.; Adamu, A. A three–term Polak–Ribière–Polyak derivative–free method and its application to image restoration. Sci. Afr. 2021, 13, e00880.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).