Abstract

An optimal control for a dynamical system optimizes a certain objective function. Here, we consider the construction of an optimal control that makes a stochastic dynamical system with a random structure, Poisson perturbations and random jumps stable in probability. Sufficient conditions for stability in probability are obtained using the second Lyapunov method, in which the construction of the corresponding functions plays an important role. We provide a solution to the problem of optimal stabilization in the general case. For a linear system with a quadratic quality functional, we give a method for synthesizing an optimal control based on the solution of Riccati equations. Finally, in the autonomous case, a system of differential equations is constructed to obtain the unknown matrices used to construct an optimal control. A small-parameter method is justified for the algorithmic search for an optimal control. This approach provides a new solution to the problem of optimal stabilization for a stochastic dynamical system with a random structure, Markov switches and Poisson perturbations.

Keywords:

optimal control; Lyapunov function; system of stochastic differential equations; Markov switches; Poisson perturbations

MSC:

60J25; 93C73; 93E03; 93E15

1. Introduction

The main problem considered in this paper is the synthesis of an optimal control for a controlled dynamical system described by a stochastic differential equation (SDE) with Poisson perturbations and external random jumps [1,2]. This problem is important because the dynamics of many real processes cannot be described by continuous models such as ordinary differential equations or Ito stochastic differential equations [3]. More complex systems exhibit jumps, which can occur at random or at deterministic time moments. In the first case, the jump-like change can be described by point processes [4,5] or, more specifically, by generalized Poisson processes, whose dynamics are characterized only by the intensity of the jumps. The jumps of the system at deterministic time moments can be described by the relation:

where x is a random process describing the dynamics of the system and g is a finite-valued function giving the magnitude of the jump, which depends on the time t and on the state of the process x at the jump moment. According to the works of Kats I.Ya. [1] and of Yasinsky V.K., Yurchenko I.V. and Lukashiv T.O. [6], jumps at deterministic time moments are described quite accurately by Equation (1). This description allows the basic properties of stochastic differential systems without jumps to be transferred relatively simply to systems with jumps. As noted below, these properties include the Markov property with respect to the natural filtration and the martingale properties [7,8]. The description of real dynamical systems is not limited to the Wiener process and point processes (such as the Poisson process). A more general approach is based on semimartingales [9]. The disadvantage of this approach is that it cannot be directly linked with the methods used for systems described by ordinary differential equations or Ito stochastic differential equations. The second approach to describing jump processes is based on semi-Markov processes, considered in the works of Korolyuk V.S. [10] and Malyk I.V. [2,11]. In particular, the works of Malyk I.V. are devoted to the convergence of semi-Markov evolutions in the averaging scheme and the diffusion approximation. Together with results on large deviations (e.g., [12]), these results can also be used to investigate the problems considered here.

Since we consider generalizations of classical differential equations, the approaches used are also classical. The main research tool is the Lyapunov function method described in the works of Kats I.Ya. [1] and Lukashiv T.O. and Malyk I.V. [13]. This method makes it possible to find the optimal control for linear systems with a quadratic quality functional, as in the case of classical dynamical systems [14].

Many works are devoted to the stability of systems with jumping Markov processes. For example, the works [15,16] consider sufficient conditions for the stability of Ito stochastic differential equations with Markov switching and variable delay. In [15], this theory is applied to modeling neural networks with a decentralized event-triggered mechanism, and sufficient conditions are found for stabilizing the process that describes the dynamics of the neural network. The authors of that work also considered systems of stochastic differential equations whose deterministic (drift) term is quasi-linear, that is, the linear component plays the main role. Under additional conditions on the size of the nonlinear part, this quasi-linearity assumption makes it possible to derive sufficient stability conditions by constructing a suboptimal control. Similar results were obtained in [16], where the authors described a stabilization algorithm based on constructing a non-fragile event-triggered controller for Ito stochastic differential equations with varying delay. The authors chose a specific class of admissible controls, which makes it possible to solve the optimization problem of finding a suboptimal control.

The structure of the paper is as follows. In Section 2, we consider the mathematical model of a dynamical system with jumps, described by a system of stochastic differential equations with a Poisson integral and external jumps, and give sufficient conditions for the existence and uniqueness of its solution. In Section 3, we investigate the stability in probability of the solution: we introduce the notion of a Lyapunov function and prove sufficient conditions for stability in probability (Theorem 1). The algorithm for computing the quality functional for a given control is presented in Section 4, together with sufficient conditions for the existence of an optimal control (Theorem 2), which are based on the existence of a Lyapunov function for the given system. Section 5 considers the construction of an optimal control for linear non-autonomous systems from the coefficients of the system; the optimal control is found by solving auxiliary Riccati equations (Theorem 3). In Section 6, for linear autonomous systems, we consider the construction of a quadratic functional, formulate sufficient conditions for the existence of an optimal control (Theorem 4), and present the explicit form of such a control in the case of a quadratic quality functional. Section 7 gives a model example.

2. Task Definition

On a probability basis [7], consider a stochastic dynamical system of random structure given by the Ito stochastic differential equation (SDE) with Poisson perturbations:

with Markov switches

for and initial conditions

Here, is a homogeneous continuous-time Markov process with a finite number of states and generator Q; is a Markov chain with values in the space and transition probability matrix ; ; is an m-dimensional standard Wiener process; is a centered Poisson measure; and the processes and are independent [3,7]. We denote by

the minimal σ-algebra with respect to which is measurable for all and for .
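The switching process in (3) enters the model only through its finite state space and generator Q. As a hedged illustration, not the authors' code, the following Python sketch samples a path of a finite-state continuous-time Markov chain from a generator matrix: the chain stays in state i for an exponential time with rate $-q_{ii}$ and then jumps to $j \ne i$ with probability $q_{ij}/(-q_{ii})$. The function names and the two-state generator are hypothetical.

```python
import random

def simulate_ctmc(Q, x0, T, rng):
    """Sample one path of a finite-state continuous-time Markov chain on [0, T].

    Q  -- generator (list of rows): off-diagonal entries >= 0, each row sums to 0
    x0 -- initial state index
    Returns a list of (time, state) pairs: the initial state and every jump.
    """
    t, state = 0.0, x0
    path = [(t, state)]
    while True:
        rate = -Q[state][state]              # total exit intensity of the current state
        if rate <= 0.0:                      # absorbing state: no more jumps
            break
        t += rng.expovariate(rate)           # exponential holding time
        if t >= T:
            break
        u, acc, nxt = rng.random() * rate, 0.0, state
        for j, q in enumerate(Q[state]):     # pick j != state with prob. q_ij / rate
            if j != state and q > 0.0:
                nxt, acc = j, acc + q
                if u < acc:
                    break
        state = nxt
        path.append((t, state))
    return path

def occupation_fractions(path, T, n_states):
    """Fraction of [0, T] that the sampled path spends in each state."""
    time_in = [0.0] * n_states
    ends = [t for t, _ in path[1:]] + [T]    # each sojourn ends at the next jump (or at T)
    for (start, s), end in zip(path, ends):
        time_in[s] += end - start
    return [x / T for x in time_in]

# Hypothetical generator; its stationary distribution is (2/3, 1/3).
Q = [[-1.0, 1.0], [2.0, -2.0]]
path = simulate_ctmc(Q, x0=0, T=5000.0, rng=random.Random(1))
print(len(path) - 1, occupation_fractions(path, 5000.0, 2))
```

Over a long horizon, the time fractions should approach the stationary distribution of Q, which is a simple sanity check for any simulator of the switching process.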

The process is and the control is an m-measure function from the class of admissible controls U [14].

The following mappings are measurable with respect to the set of variables , , and the function satisfies the Lipschitz condition

where is defined by , , for , and the condition

3. Stability in Probability

Here, we use definitions from classical works in this area [14,17].

Definition 1.

When applying the second Lyapunov method to the SDE (2) with Markov switches (3), special sequences of the above-mentioned functions are required.

Definition 2.

The Lyapunov function for the system of the random structure (2)–(4) is a sequence of non-negative functions for which

- for all the discrete Lyapunov operator is defined (7);

- for

- for

Moreover, and are continuous and strictly monotone.

Definition 3.

Lemma 1.

Proof of Lemma 1

Using the integral form of the strong solution of Equation (2) [8], for all the following inequality holds:

Introduce the notation:

Then, according to the last inequality, satisfies the relation:

Using the Gronwall inequality, we obtain the estimate:

as required.

□
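The Gronwall step in the proof of Lemma 1 relies on the lemma in its standard integral form, recalled here for a generic continuous function $u$ on $[0,T]$; the symbols $u$, $\alpha$ and $\beta$ are generic and are not the notation of (2):

```latex
u(t) \le \alpha + \int_{0}^{t} \beta(s)\,u(s)\,ds \quad (0 \le t \le T),
\qquad \alpha \ge 0,\ \beta \ge 0
\quad\Longrightarrow\quad
u(t) \le \alpha \exp\!\Big( \int_{0}^{t} \beta(s)\,ds \Big) \quad (0 \le t \le T).
```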

Remark 1.

Theorem 1.

Let:

- (1)

- Interval lengths do not exceed , i.e.,

- (2)

- (3)

- There exist Lyapunov functions such that the following inequality holds:

Remark 2.

Proof of Theorem 1

The conditional expectation of the Lyapunov function is:

Then, by the definition of the discrete Lyapunov operator (see (7)) and from Equation (11), taking into account (10), we obtain the following inequality:

From Lemma 1 and the properties of the function , it follows that the conditional expectation of the left-hand side of inequality (12) exists.

Using (11) and (12), let us write the discrete Lyapunov operator , defined by the solutions of (2)–(4):

Then, when , the following inequality is satisfied:

This means that the sequence of random variables is a supermartingale with respect to [5].

Thus, the following inequality holds:

Since the random variable is independent of the events of the σ-algebra [4], we have

i.e., the inequality (9) also holds for the usual expectation

at , assuming that the stability of the trivial solution is investigated.

Then,

If , then, by the definition of the Lyapunov function, the following inequality holds:

Using the inequality for non-negative supermartingales [5,7], we obtain an estimate of the right-hand side of (14):
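For reference, the standard maximal inequality for non-negative supermartingales (Ville's inequality) is stated here for a generic non-negative supermartingale $(Y_k)_{k \ge 0}$; in the proof it is presumably applied to the sequence of Lyapunov-function values shown above to be a supermartingale:

```latex
\mathsf{P}\Big\{ \sup_{k \ge 0} Y_k \ge \lambda \Big\} \le \frac{\mathsf{E}\,Y_0}{\lambda},
\qquad \lambda > 0 .
```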

4. Stabilization

The optimal stabilization problem is as follows: for the SDE (2) with switches (3), construct a control such that the unperturbed motion of the system (2)–(4) is stable in probability in the whole.

We assume that the control u is determined by the full feedback principle. In addition, the continuity of in t is assumed in the region

for every fixed and .

It is also assumed that the structure of the system at time , which is independent of the Markov chain ( corresponds to time ), is known.

In general, there are infinitely many such controls. A single control should be chosen from the requirement of the best quality of the process, which is expressed as the condition of minimizing the functional:

where is a non-negative function defined in the region , .

The algorithm for calculating the functional (18) for a given control, , is as follows:

- (A)

- Find the trajectory of the SDE (2) for the given control, for example, by the Euler–Maruyama method [20];

- (B)

- Substitute , and into the functional (18);

- (C)

- Calculate the value of the functional (18) by statistical modeling (Monte Carlo);

- (D)

- The choice of the functional , which estimates the quality of the process as a strong solution of the SDE (2), depends on the specific features of the problem; the following three conditions can be identified:

- The value of the integral should satisfactorily estimate the computation time spent on generating the control, ;

- The value of the quality functional should satisfactorily estimate the computation time spent on forming the control, ;

- The functional must be such that the solution of the stabilization problem can be constructed.
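Steps (A)–(C) can be sketched in Python for a scalar toy version of (2)–(3). This is an illustration under stated assumptions, not the authors' implementation: we assume a drift $a_i x + b_i u$, a multiplicative diffusion $\sigma_i x\,dw$, a compensated Poisson term $\gamma x\,(dN - \lambda\,dt)$, a two-state switching chain advanced on the time grid, a linear feedback $u = -k_i x$, and the quadratic running cost $x^2 + d\,u^2$ on a finite horizon $T$. All coefficient values and the name `estimate_cost` are hypothetical.

```python
import math
import random

def estimate_cost(gain, T=5.0, dt=2e-3, n_paths=100, seed=0):
    """Monte Carlo estimate of E int_0^T (x^2 + d*u^2) dt for a scalar switching SDE.

    Toy model (all coefficients hypothetical):
        dx = (a_i x + b_i u) dt + sigma_i x dw + gamma x (dN - lam dt),
    where i is the mode of a two-state Markov chain with generator Q,
    N is a Poisson process with intensity lam, and u = -gain[i] * x.
    """
    a, b, sigma = [1.0, 0.5], [1.0, 1.0], [0.3, 0.2]
    gamma, lam, d = 0.1, 2.0, 0.1
    Q = [[-1.0, 1.0], [2.0, -2.0]]
    rng = random.Random(seed)
    n_steps = int(round(T / dt))
    total = 0.0
    for _ in range(n_paths):                      # step (C): average over sample paths
        x, mode, cost = 1.0, 0, 0.0               # x(0) = 1, initial mode 0
        for _ in range(n_steps):                  # step (A): Euler-Maruyama time stepping
            u = -gain[mode] * x                   # full state feedback
            cost += (x * x + d * u * u) * dt      # step (B): accumulate the running cost
            dw = rng.gauss(0.0, math.sqrt(dt))    # Wiener increment
            dn = 1.0 if rng.random() < lam * dt else 0.0   # Poisson increment, first order in dt
            x += ((a[mode] * x + b[mode] * u) * dt + sigma[mode] * x * dw
                  + gamma * x * (dn - lam * dt))  # compensated (centred) jump term
            if rng.random() < -Q[mode][mode] * dt:
                mode = 1 - mode                   # two modes: switch to the other one
        total += cost
    return total / n_paths

print(estimate_cost([0.0, 0.0]))   # no control
print(estimate_cost([3.0, 3.0]))   # linear feedback u = -3x in both modes
```

The Bernoulli approximation of the Poisson increment and the grid-based mode switching are only first-order in dt; they keep the sketch short, and a smaller dt or more paths trades run time for accuracy.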

Remark 3.

For a linear SDE (2), a quadratic form in the variables x and u is satisfactory in many cases:

where is a symmetric non-negative definite matrix of size and is a positive-definite matrix of size for all .

Remark 4.

Note that according to the feedback principle, and depend indirectly on the values of and . Therefore, in the examples below, we will calculate the values of and for fixed and .

For a quadratic form in the variables x and u, the value estimates the quality of the transition process quite well on average. The presence of the term together with the minimum condition limits the magnitude of the control action .

Remark 5.

If the jump conditions of the phase trajectory are linear, then the solution of the stabilization problem belongs to the class of controls that are linear in the phase vector. Such problems are called linear-quadratic stabilization problems.

Definition 4.

Theorem 2.

Suppose that for the system (2)–(4) there exist a scalar function and an r-vector function in the region (17) that satisfy the following conditions:

- 1.

- The sequence of the functions is the Lyapunov functional;

- 2.

- The sequence of r-dimensional control functions is measurable in all arguments, where ;

- 3.

- 4.

- The sequence of infinitesimal operators , calculated for , satisfies the condition for

- 5.

- The value of reaches a minimum at , i.e.,

- 6.

- The series converges.

Proof of Theorem 2

I. Stability in probability in the whole of the dynamical system of random structure (2)–(4) for follows immediately from Theorem 1, since the functionals for any satisfy the conditions of that theorem.

II. Equality (25) is also a direct consequence of Theorem 1.

III. We prove by contradiction that the control is optimal for the stabilization of the strong solution of the dynamical system of random structure (2)–(4).

Suppose that there exists a control which, when substituted into the SDE (2), yields a solution with initial conditions (3) and (4) such that the inequality

holds.

Under conditions 1–6 of Theorem 2, we obtain inequality (27), which leads to a contradiction with (26).

Averaging (27) over random variables over intervals and integrating over t from 0 to T, we obtain n inequalities:

Taking into account the martingale property of the Lyapunov functions along the solutions of the system (2)–(4) (see condition 1 of the theorem), by the definition of a martingale we obtain n equalities that hold with probability one:

According to the assumption (26), it follows that for , the integrals on the right-hand side of (32) converge and, taking into account the convergence of the series (24) (condition (6)), we obtain the inequality:

Indeed, from the convergence of the series (32) (under condition (6)), it follows that the integrands in (33) tend to zero as . In this way, .

Note that it makes sense to consider natural cases when from the condition

it follows that .

Thus, the inequality (33) contradicts the inequality (26). This contradiction proves the statement regarding the optimality of the control .

□

When the Markov process with a finite number of states admits an expansion of the conditional transition probability

we obtain an equation that must be satisfied by the optimal Lyapunov functions, , and the optimal control, .

Note that according to [6,21], the weak infinitesimal operator (7) has the form:

where is the scalar product, , , , “T” denotes transposition, is the trace of a matrix, and is the conditional probability density:

assuming that .

Taking into account Formula (35), the first equation for can be obtained by replacing the left side of (23) with the expression for the averaged infinitesimal operator, [1].

Then, the desired equation at the points has the form:

The second equation, for the optimal control , can be obtained from (36) by differentiation with respect to u, since delivers the minimum of the left-hand side of (36):

where is the Jacobi matrix with elements , .

Thus, according to Theorem 2, the problem of optimal stabilization consists of solving the complex nonlinear system of partial differential equations (23) to determine the unknown Lyapunov functions .

Since such a system is quite difficult to solve, we next consider linear stochastic systems, for which convenient solution schemes can be constructed.
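In the linear-quadratic setting treated next, the minimization that leads to (37) can be done by hand. The following worked calculation is a sketch under simplifying assumptions that are ours, not the paper's: the control enters only the drift, through a term $B(t)u$; the Lyapunov function is $v = x^{T}P(t)x$ with $P$ symmetric; the running cost contains $u^{T}D(t)u$ with $D$ symmetric positive definite; and all terms that do not depend on $u$ are collected in $R(t,x)$. The $u$-dependent part of the expression to be minimized and its stationarity condition are then

```latex
\Phi(u) = R(t,x) + 2\,x^{T} P B\,u + u^{T} D\,u ,
\qquad
\frac{\partial \Phi}{\partial u} = 2\,B^{T} P\,x + 2\,D\,u = 0
\;\Longrightarrow\;
u^{0}(t,x) = -D^{-1} B^{T} P\,x .
```

Because $D$ is positive definite, $\Phi$ is strictly convex in $u$, so this stationary point is the unique minimizer, and the resulting control is linear in the phase vector, in line with Remark 5.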

5. Stabilization of Linear Systems

Consider a controlled stochastic system defined by a linear Ito SDE with Markov parameters and Poisson perturbations:

with Markov switching

for and initial conditions

Here, and C are piecewise continuous integrable matrix functions of appropriate dimensions.

Assume that the jump conditions for the phase vector at the moment when the structure of the system changes due to the transition in are linear and given in the form:

where are independent random variables for which and and are given as -matrices.

Note that equality (41) covers the following cases of the general jump conditions [6]:

- -

- Non-random jumps correspond to , i.e.,

- -

- The continuous change in the phase vector means that and (identity -matrix).

The quality of the transition process will be estimated by the quadratic functional

where are symmetric matrices of dimensions and , respectively.

According to Theorem 2, we need to find optimal Lyapunov functions and a control for

The optimal Lyapunov functions are sought in the form:

where is a positive-definite symmetric matrix of the size .

Hereafter, when describes a Markov chain with a finite number of states , and describes a Markov chain with values in metric space and with transition probability at the k-th step , we introduce the following notation:

Let us substitute the functional (43) into Equations (36) and (38) to find an optimal Lyapunov function, , and an optimal control, , for . Given the form of a weak infinitesimal operator (35), we obtain:

Note that the partial derivative with respect to u of the operator is equal to zero, which confirms the conjecture about constructing an optimal control that does not depend on switching (39) for the system (40).

Given the matrix equality

and eliminating from (44) and setting the matrix of the resulting quadratic form equal to zero, we obtain a system of Riccati-type matrix differential equations for the matrices , where , , corresponding to the interval :

Thus, we obtain the following statement, which is actually a corollary to Theorem 2.

6. Stabilization of Autonomous Systems

Consider the case of an autonomous system that is given by the SDE

with Markov switching (39) and initial conditions (40). Here, , , , , and are known matrix functions defined on the set of possible values of the Markov chain , and is a Poisson process with intensity [4].

In the case of phase vector jumps (41) and the quadratic quality functional (42), the systems (47) and (48) for finding unknown matrices will take the form:

Remark 6.

Note that any differential system written in normal form (such as the system (38), where the dependence on t is indicated explicitly) can be reduced to an autonomous system by increasing the number of unknown functions (coordinates) by one: for example, $dx/dt = f(t, x)$ becomes autonomous after introducing the extra coordinate $x_{m+1} = t$ with $dx_{m+1}/dt = 1$.
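The Riccati systems (47)–(48) and their autonomous form are not reproduced in this text, so the following Python sketch works with an assumed, simpler model rather than the paper's equations: a two-mode Markov jump linear system $dx = (A_i x + B_i u)\,dt + C_i x\,dw$ with generator $(q_{ij})$, running cost $x^{T}M_i x + u^{T}D_i u$ and zero terminal cost, with no Poisson term and no jump conditions (41). For that model, the standard coupled Riccati differential equations are $-\dot P_i = A_i^{T}P_i + P_iA_i + C_i^{T}P_iC_i - P_iB_iD_i^{-1}B_i^{T}P_i + M_i + \sum_j q_{ij}P_j$, and the optimal feedback is $u = -D_i^{-1}B_i^{T}P_i x$. The sketch integrates them backward from $P_i(T) = 0$ with the classical fourth-order Runge–Kutta scheme; the data, the weight name $M_i$ and the function names are hypothetical. For stabilizable constant (autonomous) data such as these, $P_i(0)$ settles to a stationary value as the horizon grows, and that value solves the corresponding algebraic equations.

```python
import numpy as np

def reversed_rhs(P, A, B, C, M, Dinv, Q):
    """dP_i/ds in reversed time s = T - t for the assumed coupled Riccati equations."""
    n_modes = len(P)
    out = []
    for i in range(n_modes):
        coupling = sum(Q[i][j] * P[j] for j in range(n_modes))   # generator coupling term
        out.append(A[i].T @ P[i] + P[i] @ A[i] + C[i].T @ P[i] @ C[i]
                   - P[i] @ B[i] @ Dinv[i] @ B[i].T @ P[i] + M[i] + coupling)
    return out

def riccati_backward(A, B, C, M, D, Q, T, h=1e-2):
    """Integrate from P_i(T) = 0 back to t = 0 with classical RK4; returns [P_i(0)]."""
    n = A[0].shape[0]
    P = [np.zeros((n, n)) for _ in A]
    Dinv = [np.linalg.inv(Di) for Di in D]
    f = lambda P: reversed_rhs(P, A, B, C, M, Dinv, Q)
    for _ in range(int(round(T / h))):
        k1 = f(P)
        k2 = f([p + 0.5 * h * k for p, k in zip(P, k1)])
        k3 = f([p + 0.5 * h * k for p, k in zip(P, k2)])
        k4 = f([p + h * k for p, k in zip(P, k3)])
        P = [p + h / 6.0 * (q1 + 2 * q2 + 2 * q3 + q4)
             for p, q1, q2, q3, q4 in zip(P, k1, k2, k3, k4)]
        P = [0.5 * (p + p.T) for p in P]          # symmetrise to remove round-off drift
    return P

# Hypothetical two-mode data: 2-dimensional state, scalar control.
A = [np.array([[0.0, 1.0], [-1.0, 0.5]]), np.array([[0.0, 1.0], [1.0, -1.0]])]
B = [np.array([[0.0], [1.0]])] * 2
C = [0.1 * np.eye(2)] * 2
M = [np.eye(2)] * 2
D = [np.array([[1.0]])] * 2
Q = [[-1.0, 1.0], [2.0, -2.0]]

P0 = riccati_backward(A, B, C, M, D, Q, T=20.0)
K = [np.linalg.inv(Di) @ Bi.T @ Pi for Di, Bi, Pi in zip(D, B, P0)]   # u = -K_i x
print(K)
```

Comparing runs with different horizons T is a cheap check that the stationary solution has been reached.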

Small Parameter Method for Solving the Optimal Stabilization Problem

The algorithmic solution to the problem of optimal stabilization of a linear autonomous system of random structure ((43), (39) and (40)) is achieved by introducing a small parameter [1]. There are two ways to introduce the small parameter:

Case I. The transition probabilities of the Markov chains are small, i.e., the transition intensities can be represented via the small parameter () as:

Case II. The jumps of the phase vector are small, i.e., the matrices and from (41) can be represented in the form:

In both cases, we seek the optimal Lyapunov function in the form of a convergent power series in the small parameter:

According to (46), the optimal control, , should be sought in the form of a convergent series:

Equating the coefficients of the same powers of , we obtain:

Note that the system (55) consists of independent matrix equations, each of which, for a fixed , gives a solution to the problem of optimal stabilization of the system

with the quality criterion

A necessary and sufficient condition for the solvability of the system (55) is the existence of a linear admissible control in the system (57) that provides exponential stability in the mean square of the unperturbed motion of this system [17].

Let us assume that the system of matrix quadratic Equation (55) has a unique positive definite solution, .

Equation (56) for is linear and has a unique solution for a fixed and any matrices on the right-hand side of (56).

Indeed, the system

is obtained by closing the system (57) with the optimal control

which provides exponential stability in the mean square. Hence, the system (56) has a unique solution. Note that, for linear autonomous systems, asymptotic stability is equivalent to exponential stability [2]. Consider the following theorem, which is based on the results of that work.

Theorem 4.

If a strong solution of the system (57) is exponentially stable in the mean square, then there exist Lyapunov functions that satisfy the conditions:

Thus, the system of matrix Equations (55) and (56) allows us to find the coefficients of the corresponding series (53) and (54) successively, starting with a positive-definite solution of the system (55).
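As a hedged illustration of how (55) and (56) are used successively in Case I, the following Python sketch takes a scalar, two-mode toy problem without diffusion, Poisson term or jump conditions, which is our simplification rather than the system (57). With transition intensities $\varepsilon q_{ij}$, the coupled algebraic Riccati equations become $0 = 2a_i p_i - k_i p_i^2 + c_i + \varepsilon \sum_j q_{ij} p_j$ with $k_i = b_i^2/d_i$. Substituting $p_i = p_i^{(0)} + \varepsilon p_i^{(1)} + \dots$ gives a decoupled quadratic equation for $p_i^{(0)}$ (the analogue of (55)) and the linear equation $2(k_i p_i^{(0)} - a_i)\,p_i^{(1)} = \sum_j q_{ij} p_j^{(0)}$ (the analogue of (56)). The sketch compares the two-term series with a numerically computed exact solution; all data and function names are hypothetical.

```python
import math

def positive_root(k, beta, gamma):
    """Positive root of k*p**2 - beta*p - gamma = 0 (k > 0, gamma >= 0)."""
    return (beta + math.sqrt(beta * beta + 4.0 * k * gamma)) / (2.0 * k)

def exact_solution(a, k, c, Q, eps, iters=500):
    """Solve 0 = 2 a_i p_i - k_i p_i^2 + c_i + eps * sum_j q_ij p_j by Gauss-Seidel sweeps."""
    n = len(a)
    p = [positive_root(k[i], 2 * a[i], c[i]) for i in range(n)]   # start from eps = 0
    for _ in range(iters):
        for i in range(n):
            beta = 2 * a[i] + eps * Q[i][i]                          # diagonal coupling term
            gamma = c[i] + eps * sum(Q[i][j] * p[j] for j in range(n) if j != i)
            p[i] = positive_root(k[i], beta, gamma)
    return p

def first_order_expansion(a, k, c, Q):
    """Coefficients p0 (decoupled Riccati) and p1 (linear equation) of p = p0 + eps*p1 + ..."""
    n = len(a)
    p0 = [positive_root(k[i], 2 * a[i], c[i]) for i in range(n)]
    p1 = [sum(Q[i][j] * p0[j] for j in range(n)) / (2 * (k[i] * p0[i] - a[i]))
          for i in range(n)]
    return p0, p1

a, k, c = [1.0, -0.5], [1.0, 1.0], [1.0, 2.0]   # hypothetical scalar data per mode
Q = [[-1.0, 1.0], [2.0, -2.0]]
p0, p1 = first_order_expansion(a, k, c, Q)
for eps in (0.1, 0.01):
    exact = exact_solution(a, k, c, Q, eps)
    approx = [x + eps * y for x, y in zip(p0, p1)]
    print(eps, max(abs(e - ap) for e, ap in zip(exact, approx)))
```

Reducing the small parameter tenfold shrinks the error of the two-term series by roughly two orders of magnitude, consistent with an $O(\varepsilon^2)$ remainder.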

The next step is to prove the convergence of the series (53) and (54). Without loss of generality, we simplify the notation by fixing . Let . Then, from (56), it follows that there is a constant such that, for any , the following estimate holds:

Next, we use the method of majorant series.

Consider the quadratic equation

where the coefficients a and b are chosen such that the power series expansion of one of the roots of this equation is a majorant series for (53).

We obtain

Substituting (62) into (61) and equating the coefficients of equal powers of , we obtain an expression for through :

where should be found from the equation

With a and b from (61), the majorant series for (53) is the expansion of one of the roots of (61), namely the root whose values are determined by

Convergence of the series (53) for follows from the obvious inequality

Thus, we have proved the assertion which is formalized below as Theorem 5:

Theorem 5.

- 2.

Then,

- 1.

- 2.

Case II. Substituting the series into (44), equating the coefficients of the same powers of , and taking into account (52), we obtain the following equations:

where ,

Based on the equations above, the following theorem holds:

Theorem 6.

- 2.

7. Model Example

To illustrate the above theoretical results, consider an example with the following parameters:

- The continuous-time Markov chain is defined by the generator

- The values of the function g at the times depend only on the value of x: where . In the example below, we use ;

- The intensity of the Poisson process is ;

- The values of the matrices for are

- The values of the matrices for are

- The values of the matrices for are

- The values of the matrices for are

- The control parameters are

For simplicity, we will assume that the random variables are constants and the solution, , and optimal control, , depend only on the random process, .

The main difficulty in constructing the optimal control is solving the Riccati Equation (50). There are several basic approaches to finding an approximate solution of this equation; in our example, we used the particle swarm optimization method, which finds a solution of Equation (50) relatively quickly. The resulting matrices are

Both solutions are positive definite, so, by Theorem 3, there exists an optimal control that stabilizes system (57) and is defined by

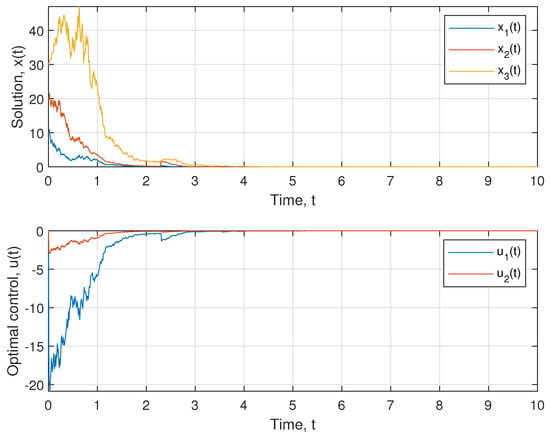

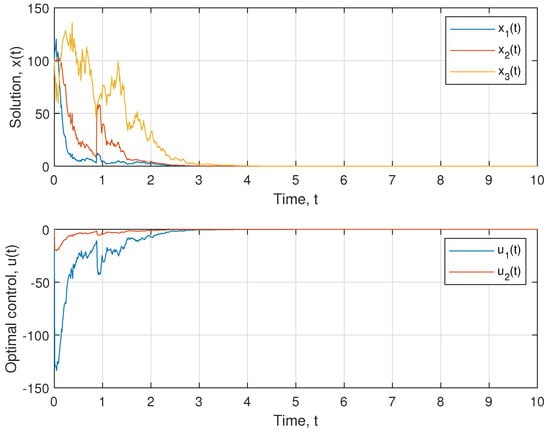

for . Two realizations of the solution, , and of the corresponding control, , are shown in Figures 1 and 2.

Figure 1.

Realizations of the solution and optimal control with initial conditions .

Figure 2.

Realizations of the solution and optimal control with initial conditions .

As can be seen from these realizations, the resulting optimal control stabilizes the system while minimizing the functional . In addition, considering the form of the matrix D from (42), we can see that is close to 0, because

Thus, the optimal control found agrees with the given quality functional .
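The text does not state the particle swarm settings used for Equation (50). As a hedged illustration only, the following Python sketch applies a basic global-best particle swarm optimizer (inertia weight plus cognitive and social terms) to the squared residual of a scalar algebraic Riccati equation $2ap - (b^2/d)p^2 + c = 0$ on $p \ge 0$ and compares the result with the closed-form positive root. The scalar data, swarm parameters and function names are hypothetical; the data are chosen with $a < 0$ so that the residual has a single minimizer on the search interval. For matrix equations such as (50), the same loop would run over the entries of the unknown symmetric matrices.

```python
import math
import random

def pso_minimize(f, lo, hi, n_particles=30, n_iter=200, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Global-best particle swarm optimisation of a scalar function f on [lo, hi]."""
    rng = random.Random(seed)
    pos = [rng.uniform(lo, hi) for _ in range(n_particles)]
    vel = [0.0] * n_particles
    best_pos = pos[:]                                   # personal best positions
    best_val = [f(x) for x in pos]                      # personal best values
    i_best = min(range(n_particles), key=lambda i: best_val[i])
    g_pos, g_val = best_pos[i_best], best_val[i_best]   # swarm (global) best
    for _ in range(n_iter):
        for i in range(n_particles):
            r1, r2 = rng.random(), rng.random()
            vel[i] = (w * vel[i] + c1 * r1 * (best_pos[i] - pos[i])
                      + c2 * r2 * (g_pos - pos[i]))    # inertia + cognitive + social terms
            pos[i] = min(max(pos[i] + vel[i], lo), hi)  # keep the particle in [lo, hi]
            val = f(pos[i])
            if val < best_val[i]:
                best_pos[i], best_val[i] = pos[i], val
                if val < g_val:
                    g_pos, g_val = pos[i], val
    return g_pos, g_val

A_COEF, B_COEF, C_COEF, D_COEF = -0.5, 1.0, 1.0, 1.0   # hypothetical scalar data

def riccati_residual(p):
    """Squared residual of 2*a*p - (b^2/d)*p^2 + c = 0 for the scalar data above."""
    k = B_COEF ** 2 / D_COEF
    return (2 * A_COEF * p - k * p * p + C_COEF) ** 2

p_pso, res = pso_minimize(riccati_residual, lo=0.0, hi=5.0)
k = B_COEF ** 2 / D_COEF
p_exact = (A_COEF + math.sqrt(A_COEF ** 2 + k * C_COEF)) / k   # closed-form positive root
print(p_pso, p_exact)
```

A swarm method needs only residual evaluations, which is convenient when no dedicated Riccati solver is at hand, but it gives no convergence guarantee, so checking positive definiteness and the residual of the returned solution, as done in the text, remains necessary.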

An analysis of the solution showed that an optimal control exists for an arbitrary . The case corresponds to compression, since in this case the solution is compressed by the coefficient at each jump moment . The case is not compressive, but the existence of an optimal control can still be established from Theorem 3. The case is not covered by the theory of this work, since in this case the solution of Equation (50) either does not exist or is not positive definite. This case needs further investigation.
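The role of compression can be illustrated by a deterministic toy calculation that is not taken from the paper: between equally spaced jump times $t_k = kh$, the state follows $\dot x = a x$, and at each $t_k$ it is multiplied by a factor $\rho$ (a hypothetical linear jump map). Over one period, $x(t_{k+1}) = \rho\, e^{a h} x(t_k)$, so the sampled sequence decays exactly when $|\rho|\, e^{a h} < 1$; a sufficiently strong compression can therefore offset an unstable continuous part ($a > 0$). The function name and parameter values are hypothetical.

```python
import math

def sampled_states(x0, a, h, rho, n_jumps):
    """States right after each jump for dx/dt = a*x with x(t_k) = rho * x(t_k-), t_k = k*h."""
    xs = [x0]
    for _ in range(n_jumps):
        xs.append(rho * math.exp(a * h) * xs[-1])    # flow for time h, then apply the jump
    return xs

growth = sampled_states(1.0, a=0.5, h=1.0, rho=0.9, n_jumps=20)   # 0.9 * e^0.5 > 1
decay = sampled_states(1.0, a=0.5, h=1.0, rho=0.5, n_jumps=20)    # 0.5 * e^0.5 < 1
print(growth[-1], decay[-1])
```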

8. Discussion

In this work, we have obtained sufficient conditions for the existence of an optimal control for a stochastic dynamical system with jumps that makes the system stable in probability. The second Lyapunov method was used to investigate the existence of an optimal control. This method is efficient both for ordinary differential equations (ODEs) and for stochastic differential equations (SDEs). As can be seen from the proof of Theorem 2, the existence of finite bounds for jumps at non-random time moments, (), does not affect the stability of the solution. On the other hand, was used to prove the existence of the optimal control (Theorem 3). This restriction is also present in the works of other authors. Thus, a goal of future work could be to construct an optimal control without the assumption , which would considerably expand the scope of the second Lyapunov method.

The main limitation of the proposed method is the need to solve Riccati equations, which can be computationally heavy. For small dimensions m, the Riccati equations can be solved either by iteration or by genetic algorithms, but for large m, only genetic algorithms work.

9. Conclusions

In this work, we obtained sufficient conditions for the existence of a solution to an optimal stabilization problem for dynamical systems with jumps. We considered the case of a linear system with a quadratic quality functional and showed that designing an optimal control that makes the system stable in probability reduces to solving Riccati equations. Additionally, for a linear autonomous system, a small-parameter method is justified for solving the problem of optimal stabilization. The obtained results can be used to describe stock markets in economics, biological systems (including models of the response to cancer treatment), and other complex dynamical systems.

In addition, this work serves as a basis for studying systems of type (3)–(4) in the presence of a condensation point, i.e.,

Systems satisfying this condition are mathematical models of real phenomena in which exceptional events accumulate very quickly over a finite period of time, which can lead to a collapse of the system. For example, paper [22] examines a mathematical model of the collapse of the Tacoma Narrows Bridge. However, the authors of that work took into account only deterministic influences and did not include random events affecting the dynamics of the bridge. Considering both deterministic and random influences could provide a more precise understanding of such dramatic effects.

Author Contributions

Conceptualization, T.L. and I.V.M.; methodology, T.L. and I.V.M.; validation, T.L., Y.L., I.V.M. and P.V.N.; formal analysis, T.L., Y.L., I.V.M. and P.V.N.; writing—original draft preparation, T.L., Y.L. and I.V.M.; writing—review and editing, T.L., P.V.N. and A.G.; supervision, I.V.M. and P.V.N.; project administration, P.V.N. and A.G.; funding acquisition, A.G. and P.V.N. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Luxembourg National Research Fund C21/BM/15739125/DIOMEDES to T.L., P.V.N. and A.G.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We would like to acknowledge the administrations of the Luxembourg Institute of Health (LIH) and the Luxembourg National Research Fund (FNR) for their support in organizing scientific contacts between research groups in Luxembourg and Ukraine.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| ODE | ordinary differential equation |
| SDE | stochastic differential equation |

References

- Kats, I.Y. Lyapunov Function Method in Problems of Stability and Stabilization of Random-Structure Systems; Izd. Uralsk. Gosakademii Putei Soobshcheniya: Yekaterinburg, Russia, 1998. (In Russian)

- Tsarkov, Y.F.; Yasinsky, V.K.; Malyk, I.V. Stability in impulsive systems with Markov perturbations in averaging scheme. 2. Averaging principle for impulsive Markov systems and stability analysis based on averaged equations. Cybern. Syst. Anal. 2011, 47, 44–54.

- Oksendal, B. Stochastic Differential Equations; Springer: New York, NY, USA, 2013.

- Doob, J.L. Stochastic Processes; Wiley: New York, NY, USA, 1953.

- Jacod, J.; Shiryaev, A.N. Limit Theorems for Stochastic Processes. Vols. 1 and 2; Fizmatlit: Moscow, Russia, 1994. (In Russian)

- Lukashiv, T.O.; Yurchenko, I.V.; Yasinskii, V.K. Lyapunov function method for investigation of stability of stochastic Ito random-structure systems with impulse Markov switchings. I. General theorems on the stability of stochastic impulse systems. Cybern. Syst. Anal. 2009, 45, 281–290.

- Dynkin, E.B. Markov Processes; Academic Press: New York, NY, USA, 1965.

- Korolyuk, V.S.; Tsarkov, E.F.; Yasinskii, V.K. Probability, Statistics, and Random Processes. Theory and Computer Practice, Vol. 3, Random Processes. Theory and Computer Practice; Zoloti Lytavry: Chernivtsi, Ukraine, 2009. (In Ukrainian)

- Protter, P.E. Stochastic Integration and Differential Equations, 2nd ed.; Springer: New York, NY, USA, 2004.

- Koroliouk, V.S.; Samoilenko, I.V. Asymptotic expansion of a functional constructed from a semi-Markov random evolution in the scheme of diffusion approximation. Theory Probab. Math. Stat. 2018, 96, 83–100.

- Tsarkov, Y.F.; Yasinsky, V.K.; Malyk, I.V. Stability in impulsive systems with Markov perturbations in averaging scheme. I. Averaging principle for impulsive Markov systems. Cybern. Syst. Anal. 2010, 46, 975–985.

- Koroliuk, V.S.; Limnios, N. Stochastic Systems in Merging Phase Space; World Scientific Publishing Company: Singapore, 2005.

- Lukashiv, T.O.; Malyk, I.V. Stability of controlled stochastic dynamic systems of random structure with Markov switches and Poisson perturbations. Bukovinian Math. J. 2022, 10, 85–99.

- Andreeva, E.A.; Kolmanovskii, V.B.; Shaikhet, L.E. Control of Hereditary Systems; Nauka: Moscow, Russia, 1992. (In Russian)

- Vadivel, R.; Ali, M.S.; Alzahrani, F. Robust H∞ synchronization of Markov jump stochastic uncertain neural networks with decentralized event-triggered mechanism. Chin. J. Phys. 2019, 60, 68–87.

- Vadivel, R.; Hammachukiattikul, P.; Zhu, Q.; Gunasekaran, N. Event-triggered synchronization for stochastic delayed neural networks: Passivity and passification case. Asian J. Control. 2022.

- Hasminsky, R.Z. Stability of Systems of Differential Equations under Random Parameter Perturbations; Nauka: Moscow, Russia, 1969. (In Russian)

- Skorokhod, A.V. Asymptotic Methods in the Theory of Stochastic Differential Equations; Naukova Dumka: Kyiv, Ukraine, 1987. (In Russian)

- Sverdan, M.L.; Tsar’kov, E.F. Stability of Stochastic Impulse Systems; RTU: Riga, Latvia, 1994. (In Russian)

- Kloeden, P.E.; Platen, E. Numerical Solution of Stochastic Differential Equations; Springer: Berlin, Germany, 1992.

- Lukashiv, T. One Form of Lyapunov Operator for Stochastic Dynamic System with Markov Parameters. J. Math. 2016, 2016, 1694935.

- Arioli, G.; Gazzola, F. A new mathematical explanation of what triggered the catastrophic torsional mode of the Tacoma Narrows Bridge. Appl. Math. Model. 2015, 39, 901–912.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).