Abstract

In this paper, we present a new inertial method with a self-adaptive technique for solving split variational inclusion and fixed point problems in real Hilbert spaces. The algorithm selects an optimal inertial term at every iteration, and the stepsize is defined self-adaptively without a prior estimate of the Lipschitz constant. A strong convergence theorem is proved under mild conditions, and numerical tests are given to showcase the efficiency and accuracy of the proposed method. Moreover, the significance of the proposed method is demonstrated through its application to an image reconstruction problem.

Keywords: split variational inclusion; inertial term; self-adaptive algorithm; maximal monotone; Hilbert spaces

MSC: 65K15; 47J25; 65J15; 90C33

1. Introduction

In this paper, we consider the Split Variational Inclusion Problem (SVIP) introduced by Moudafi [1], which is the problem of finding a null point of a maximal monotone operator in one Hilbert space whose image under a bounded linear operator is a null point of another maximal monotone operator in a second Hilbert space. Mathematically, the problem is defined as follows: find

and

where 0 is called the zero vector, with and being both real Hilbert spaces together with the multivalued maximal monotone mappings, where . Furthermore, the bounded linear operator is denoted by . We denote the solution set of (1) and (2) by .

An operator is called:

- (i)

- Monotone if

- (ii)

- Maximal monotone if m is monotone and the graph of no other monotone mapping properly contains the graph of m, where:

- (iii)

- The resolvent of m, associated with a given parameter greater than zero, is denoted by :
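To make item (iii) concrete, the following is a minimal sketch assuming the standard definition of the resolvent as the inverse of the identity plus a positive multiple of m. The names `lam`, `resolvent_abs`, and `resolvent_linear` are hypothetical and chosen only for illustration. On the real line, the resolvent of the subdifferential of the absolute value is the soft-thresholding map, and the resolvent of m(x) = c·x with c ≥ 0 is a simple rescaling. The sketch also checks numerically, on a few sample points, that both resolvents are firmly nonexpansive.

```python
def resolvent_abs(x, lam):
    """Resolvent of the subdifferential of |.| on the real line (soft-thresholding)."""
    if x > lam:
        return x - lam
    if x < -lam:
        return x + lam
    return 0.0


def resolvent_linear(x, lam, c):
    """Resolvent of the monotone map m(x) = c * x with c >= 0: solves y + lam*c*y = x."""
    return x / (1.0 + lam * c)


def firmly_nonexpansive_on(resolvent, points, tol=1e-12):
    """Check (Jx - Jy)(x - y) >= (Jx - Jy)^2 for every pair of sample points."""
    for x in points:
        for y in points:
            d = resolvent(x) - resolvent(y)
            if d * (x - y) < d * d - tol:
                return False
    return True


# Hypothetical sample points, used only to exercise the inequality.
samples = [-3.0, -1.0, -0.25, 0.0, 0.5, 2.0, 4.0]
soft_ok = firmly_nonexpansive_on(lambda x: resolvent_abs(x, 1.0), samples)
linear_ok = firmly_nonexpansive_on(lambda x: resolvent_linear(x, 1.0, 3.0), samples)
```

The zeros of m are exactly the fixed points of its resolvent, which is why resolvents appear in every iteration discussed in this paper.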

Other nonlinear optimization problems, such as split feasibility problems, split minimization problems, split variational inequality problems, split zero problems, and split equilibrium problems, are all special cases of the SVIP; see [2,3,4]. The reduction of SVIPs to split feasibility problems is important in modeling intensity-modulated radiation therapy (IMRT) treatment planning. Moreover, SVIPs play important roles in formulating many problems arising in engineering, economics, medicine, data compression, and sensor networks [5,6].

Several authors have introduced iterative methods for solving SVIPs, and these methods have been improved over time. In 2012, Byrne et al. [7] introduced the following weakly convergent method for solving SVIPs:

for some parameter , with denoting the adjoint of B together with , and being the resolvent operator of (with ). The sequence generated by was proved to converge weakly to under certain conditions. Moudafi [1] proposed an iterative method for solving the SVIP with inverse strongly monotone operators and obtained weak convergence results using the following iteration:

where with L being the spectral radius of the operator , and , and together with are the resolvent operators of and , respectively. Lastly, let and be single-valued operators. Marino and Xu [8] presented an iterative scheme that establishes the strong convergence of the viscosity approximation method introduced by Moudafi [9]:

where f is a contraction on H with coefficient , G is a strongly positive bounded linear operator on H with constant , the parameter satisfies , F is a nonexpansive mapping, and is a sequence in . The sequence generated by (5) was proved to converge strongly to the fixed point . Furthermore, is the unique solution of the variational inequality:

This variational inequality is the optimality condition for the following minimization problem:

where the function h is a potential function for , i.e.,

In 2014, Kazmi and Rizvi [10] were inspired by the work of Byrne et al. (3) to propose the following iteration for solving SVIPs. For a given ,

where , L is the spectral radius of the operator and the sequence satisfies the conditions: , , and . The sequence generated by (7) converges strongly to . Note that algorithms (3), (4), and (7) contain a stepsize , which requires the computation of the norm of the bounded linear operator; this norm is generally not easy to compute, which makes these algorithms difficult to implement. The inertial technique has been gaining attention from researchers as a way to enhance the accuracy and performance of various algorithms. This technique plays a vital role in improving the convergence rate of algorithms and is based on a discrete version of a second-order dissipative dynamical system; see, for instance [11,12,13,14,15,16,17,18,19,20]. In Hilbert spaces, Chuang [21] introduced a hybrid inertial proximal algorithm for solving SVIPs in 2017:

It was proved that if and the following condition holds, then the sequence generated by Algorithm 1 converges weakly to a solution of the SVIP:

| Algorithm 1 Hybrid inertial proximal algorithm. |

|

It is easy to see that Algorithm 1 depends on a prior estimate of the norm of the bounded operator, and that Condition (8) is difficult to verify before computation.
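The fixed-stepsize schemes surveyed above share a common pattern: an optional inertial extrapolation, a forward step through the bounded linear operator and its adjoint, and a backward step through a resolvent. The following is a minimal finite-dimensional sketch of that pattern, not a reproduction of any of the cited algorithms. It assumes the toy operators m1(a) = a − c1 and m2(y) = y − c2 with c2 = B c1, so that c1 solves the SVIP. The names `gamma`, `theta`, `lam`, and all helper functions are hypothetical. Note that the admissible range of `gamma` depends on the norm of B, which is precisely the dependence that self-adaptive rules are designed to avoid.

```python
def matvec(M, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def transpose(M):
    """Transpose of a matrix stored as a list of rows (the adjoint in R^n)."""
    return [list(col) for col in zip(*M)]


def resolvent_shift(v, center, lam):
    """Resolvent of m(v) = v - center, namely (v + lam * center) / (1 + lam)."""
    return [(x + lam * c) / (1.0 + lam) for x, c in zip(v, center)]


def svip_fixed_step(B, c1, c2, a0, gamma, lam=1.0, theta=0.0, iters=100):
    """Fixed-stepsize iteration with optional constant inertial weight theta."""
    Bt = transpose(B)
    prev, cur = list(a0), list(a0)
    for _ in range(iters):
        # Inertial extrapolation from the last two iterates.
        w = [x + theta * (x - p) for x, p in zip(cur, prev)]
        Bw = matvec(B, w)
        # Residual (I - J2) B w in the second space, pulled back by the adjoint.
        residual = [y - j for y, j in zip(Bw, resolvent_shift(Bw, c2, lam))]
        pulled_back = matvec(Bt, residual)
        u = [x - gamma * g for x, g in zip(w, pulled_back)]
        # Backward (resolvent) step in the first space.
        prev, cur = cur, resolvent_shift(u, c1, lam)
    return cur


B = [[2.0, 0.0], [0.0, 1.0]]
c1 = [1.0, -2.0]
c2 = matvec(B, c1)
gamma = 0.25  # needs 0 < gamma < 2 / ||B||^2 = 0.5 for this particular B
plain = svip_fixed_step(B, c1, c2, [5.0, 5.0], gamma)
inertial = svip_fixed_step(B, c1, c2, [5.0, 5.0], gamma, theta=0.3)
```

Both runs approach c1 on this toy problem. The constant inertial weight used here is a simplification; the methods discussed in this paper choose the inertial parameter adaptively.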

Furthermore, Kesornprom and Cholamjiak [22] improved the contraction step in Algorithm 1 and introduced the following algorithm for solving the SVIP:

| Algorithm 2 Proximal type algorithms with linesearch and inertial methods. |

|

They also proved a weak convergence result under conditions similar to those of Algorithm 1. Note that both Algorithms 1 and 2 involve a line search procedure, which consumes extra computation time and memory during implementation. To overcome this drawback, Tang [23] recently introduced a self-adaptive technique for selecting the stepsize that requires neither a prior estimate of the Lipschitz constant nor a line search procedure, as follows (Algorithm 3):

where , and . The author proved that the sequence generated by Algorithm 3 converges weakly to a solution of the SVIP. Tan, Qin, and Yao [24] introduced four self-adaptive iterative algorithms with inertial effects to solve SVIPs in real Hilbert spaces. These algorithms do not need any prior information about the operator norm; that is, their stepsizes are self-adaptive. The conditions assumed in proving the strong convergence of the four algorithms are as follows:

| Algorithm 3 Self-adaptive technique method. |

|

- (C1)

- Let the solution set of (SVIP) be nonempty, i.e., .

- (C2)

- Let and be assumed to be two real Hilbert spaces with a bounded linear operator and its adjoint denoted by and , respectively.

- (C3)

- Let be the set-valued maximal monotone mappings and is a mapping which satisfies the p-contractive property with a constant .

- (C4)

- Let the sequence be positive such that where satisfies and .

The first algorithm is inspired by the method of Byrne et al. [7], the viscosity-type method, and the projection and contraction method. It is a self-adaptive inertial projection and contraction method for solving the SVIP and is described below (Algorithm 4):

| Algorithm 4 Viscosity type with projection and contraction method. |

|

Strong convergence was obtained. The second proposed algorithm is an inertial Mann-type projection and contraction algorithm to solve the SVIP, which is presented as follows (Algorithm 5):

| Algorithm 5 Mann-type with projection and contraction method. |

|

Strong convergence was obtained. The third proposed algorithm is an inertial Mann-type algorithm whereby the new stepsize does not require any line search process, making it a self-adaptive algorithm. The details of the iterative scheme are described below (Algorithm 6):

| Algorithm 6 Inertial Mann-type with self-adaptive method. |

|

Strong convergence was obtained. The fourth algorithm is a variant of Algorithm 6 that uses the viscosity-type approach to obtain strong convergence. We present the algorithm as follows (Algorithm 7):

| Algorithm 7 New inertial viscosity method. |

|

Strong convergence was obtained. Each of the four algorithms contains an inertial term that influences the rate of convergence of Algorithms 4–7. Note that the strong convergence theorems for Algorithms 4–7 proposed by Tan, Qin, and Yao were obtained under weaker conditions. Zhou, Tan, and Li [25] proposed a pair of adaptive hybrid steepest descent algorithms with an inertial extrapolation term for split monotone variational inclusion problems in infinite-dimensional Hilbert spaces. First, these algorithms combine the hybrid steepest descent method with the inertial method, which yields strong convergence theorems. Second, the stepsizes of the two proposed algorithms are self-adaptive, which overcomes the difficulty of computing the operator norm. The details of the first algorithm are presented as follows (Algorithm 8):

| Algorithm 8 Inertial hybrid steepest descent algorithm. |

Requirements: Take arbitrary starting points . Choose sequences and in and .

|

Strong convergence was obtained. The second proposed algorithm is presented as follows (Algorithm 9):

| Algorithm 9 Self-adaptive hybrid steepest descent method. |

Requirements: Two arbitrary starting points . Choose sequences and in and .

|

Strong convergence was obtained. The assumptions applied to Algorithms 8 and 9 are as follows: Let and denote two Hilbert spaces, and suppose that is a bounded linear operator. Additionally, let be the adjoint operator of B. Let be a -inverse strongly monotone mapping with and be set-valued maximal monotone mappings with . is a -Lipschitz continuous and -strongly monotone mapping with . Let be a -Lipschitz continuous mapping with . Moreover, Alakoya et al. [26] introduced a method that combines an inertial extrapolation technique, viscosity approximation, and a self-adaptive stepsize; the method is thus known as an inertial self-adaptive algorithm for solving the SVIP (Algorithm 10):

where is a quasi-nonexpansive mapping, is a strongly positive mapping, and is a contraction mapping. The authors proved that the sequence generated by Algorithm 10 converges strongly to a common solution, provided that and satisfy . It is clear that Algorithm 10 performs better than Algorithms 1–3 and other related methods. However, there is a need to improve the performance of Algorithm 10 by using an optimal choice of parameters for the inertial extrapolation term.

| Algorithm 10 General viscosity with self-adaptive and inertial method. |

|

Motivated by the results above, this paper presents a novel approach that uses an optimal selection of the inertial term together with a self-adaptive technique for solving the SVIP and fixed point problems involving multivalued demicontractive mappings in real Hilbert spaces. Our algorithm improves on Algorithms 1–3 and 10 and on other related results in the literature. We prove a strong convergence result under mild conditions and provide numerical experiments to showcase the efficiency of the proposed method. We also apply our algorithm to image deblurring problems to demonstrate the applicability of our results.

2. Preliminaries

In this section, we present definitions and basic results that will be used in the subsequent analysis. Suppose that H is a real Hilbert space and that C is a nonempty, closed, and convex subset of H. We use and to denote the strong and weak convergence, respectively, of a sequence to a point .

For every vector , there exists a unique element in the set C such that

The metric projection from H onto C is denoted by and satisfies the following properties:

- (i)

- For and

- (ii)

- (iii)

- For each and
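As an illustration of the metric projection, the following minimal sketch takes C to be the closed unit ball of the Euclidean plane (an assumption made only for this example) and checks the standard variational characterization of the projection: the inner product of x − P(x) with y − P(x) is nonpositive for every y in C. The function names and sample points are hypothetical.

```python
import math


def project_ball(x, radius=1.0):
    """Metric projection of x onto the closed Euclidean ball of the given radius."""
    norm = math.sqrt(sum(xi * xi for xi in x))
    if norm <= radius:
        return list(x)
    return [radius * xi / norm for xi in x]


def inner(u, v):
    """Euclidean inner product."""
    return sum(ui * vi for ui, vi in zip(u, v))


x = [3.0, 4.0]
p = project_ball(x)  # the nearest point of the unit ball to x

# Hypothetical points of C used to test the characterization.
points_in_c = [[1.0, 0.0], [0.0, -1.0], [0.5, 0.5], [-0.6, 0.3]]
x_minus_p = [xi - pi for xi, pi in zip(x, p)]
gaps = [inner(x_minus_p, [yi - pi for yi, pi in zip(y, p)]) for y in points_in_c]
```

Every entry of `gaps` is nonpositive, which reflects the fact that the ball lies entirely on one side of the hyperplane through P(x) that is orthogonal to x − P(x).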

An operator is called:

- (i)

- -Lipschitz if there is a positive value of such that and a contraction if ;

- (ii)

- Nonexpansive if F is 1-Lipschitz;

- (iii)

- Quasi-nonexpansive when its fixed point set is not empty and

- (iv)

- -demicontractive if and there exists a constant such that

Note that nonexpansive mappings with a nonempty fixed point set and quasi-nonexpansive mappings are contained in the class of -demicontractive mappings; we also follow the same conditions for the Hausdorff mapping, .
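The inclusion noted above is strict. The following minimal sketch assumes the usual single-valued form of the demicontractive inequality, ‖Fx − p‖² ≤ ‖x − p‖² + k‖x − Fx‖² for every fixed point p, with k in [0, 1). The mapping F(x) = −2x on the real line (a hypothetical example chosen for illustration) has 0 as its only fixed point and satisfies the inequality exactly when k ≥ 1/3, so it is demicontractive without being quasi-nonexpansive.

```python
def is_demicontractive(F, fixed_point, k, samples, tol=1e-12):
    """Check |F(x) - p|^2 <= |x - p|^2 + k * |x - F(x)|^2 on the sample points."""
    for x in samples:
        lhs = (F(x) - fixed_point) ** 2
        rhs = (x - fixed_point) ** 2 + k * (x - F(x)) ** 2
        if lhs > rhs + tol:
            return False
    return True


def F(x):
    """Hypothetical example mapping on the real line with fixed point 0."""
    return -2.0 * x


samples = [-2.0, -0.5, 0.0, 1.0, 3.0]
holds_with_half = is_demicontractive(F, 0.0, 0.5, samples)      # k above 1/3
holds_with_fifth = is_demicontractive(F, 0.0, 0.2, samples)     # k below 1/3
quasi_nonexpansive = is_demicontractive(F, 0.0, 0.0, samples)   # the case k = 0
```

With k = 0 the inequality reduces to quasi-nonexpansiveness, which fails here because |F(x)| = 2|x|.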

Suppose we have a metric space and a family of subsets that are both closed and bounded. We can induce the Hausdorff metric using the metric d on any two subsets . This metric is defined as follows:

where . A fixed point of a multivalued mapping is a point that belongs to . If only contains a, then we refer to a as a strict fixed point of . The study of strict fixed points for a specific type of contractive mappings was first conducted by Aubin and Siegel [27]. Since then, this condition has been rapidly applied to various multivalued mappings, such as those in [28,29,30].
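For finite subsets of the real line, the Hausdorff metric and the two notions of fixed point can be computed directly. The sketch below assumes the standard formula, the larger of the two one-sided distances sup over a in A of d(a, B) and sup over b in B of d(b, A). The multivalued mapping `G` and all function names are hypothetical examples.

```python
def dist_point_set(x, S):
    """Distance from the point x to the finite set S."""
    return min(abs(x - s) for s in S)


def hausdorff(A, B):
    """Hausdorff distance between two finite nonempty subsets of the real line."""
    a_to_b = max(dist_point_set(a, B) for a in A)
    b_to_a = max(dist_point_set(b, A) for b in B)
    return max(a_to_b, b_to_a)


def G(a):
    """Hypothetical multivalued mapping a -> {0, a}."""
    return {0.0, a}


def is_fixed_point(mapping, a):
    """a is a fixed point when it belongs to its own image."""
    return a in mapping(a)


def is_strict_fixed_point(mapping, a):
    """a is a strict fixed point when its image is exactly {a}."""
    return mapping(a) == {a}


d = hausdorff([0.0, 1.0], [0.0, 3.0])
```

In this example every point is a fixed point of `G`, but only 0 is a strict fixed point.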

Lemma 1.

The following inequalities hold in a real Hilbert space H:

- (i)

- (ii)

We also use the lemmas in [31,32,33] to establish our main results.

3. Main Results

In this section, we introduce our algorithm and provide its convergence analysis. First, we state the setting of our algorithm as follows:

Let be two real Hilbert spaces, and let , where , be multivalued maximal monotone operators. Let be a bounded linear operator with adjoint . For , let be a finite family of -demicontractive mappings such that is demiclosed at zero with and . Suppose that the solution set:

Let be a contraction mapping with constant , and let be a strongly positive operator with coefficient such that the condition is satisfied. Moreover, let be non-negative sequences such that . Define the following functions:

and

Now, we present our algorithm as follows (Algorithm 11):

| Algorithm 11 Proposed new inertial and self-adaptive method. |

|

To establish our convergence results, we assume that the control parameters satisfy the following conditions:

- (C1)

- and

- (C2)

- (C3)

- i.e.,

Remark 1.

Convergence Analysis

We begin the convergence analysis of Algorithm 11 by proving the following results.

Lemma 2.

Consider the function and defined in ; then, the functions T and H defined on are Lipschitz continuous.

Proof.

Since , therefore

where . On the other hand,

Combining the above formulas, we have:

Hence T is inverse strongly monotone, which implies that T is L-Lipschitz continuous. Furthermore,

hence

Likewise, it can be observed that the function H exhibits Lipschitz continuity. □

Lemma 3.

The sequence generated by Algorithm 11 is bounded.

Proof.

Given , then

Since , then , , and . Note that , is firmly nonexpansive, therefore we obtain the following:

and

Since then . We use Lemma [31] to obtain the following results:

thus, we apply condition (C2) and have the following:

Follow from , , and to obtain:

Note that exists by Remark 1 and let

Therefore, we have the following:

By Lemma [32] (i), is bounded, and therefore is also bounded. Consequently, the sequences , , and are bounded. □

Lemma 4.

Given as the sequence generated by the proposed Algorithm 11, put , , , for some and where and .

Then, the following conclusions hold:

- (i)

- .

- (ii)

Proof.

From Algorithm 11, we have

Let us use Lemma 1(i) in order to determine the following results:

thus, substituting into , we obtain

Now, we follow from Lemma 1(ii) and have that

Follow from , , and to obtain

Also,

Furthermore, substitute into and have that

for some , we have that

Furthermore, from the boundedness of , it is easy to see that

Our next objective is to demonstrate that . To do so, we will assume the opposite and suppose that , which implies that there exists where . Therefore, according to (i), we can conclude that

By induction, we obtain

Taking the limit superior of both sides of the last inequality and noting that approaches 0, we obtain

This contradicts the fact that is a non-negative real sequence. Therefore, we can conclude that . □

Remark 2.

Since , it is easy to verify that also approaches zero. In addition, according to Remark 1, approaches zero as n increases.

Now, we present our strong convergence theorem.

Theorem 1.

Given as the sequence generated by the proposed Algorithm 11, suppose that Assumptions (C1)–(C3) are satisfied. Then, converges strongly to a unique point , which solves the variational inequality

Proof.

Given . We will use to denote . Below are the possible cases we are considering.

CASE A: We start by assuming that there exists a such that is monotonically decreasing . Then, . Our first aim is to demonstrate that .

thus, . From Equations and we have that:

Note that and T together with H are Lipschitz continuous, so we obtain that:

Therefore, and as . From , , , and , we obtain the following results:

Hence,

Thus, by applying condition (C2), we obtain

We also have that

thus . Therefore,

Finally,

which results in the following:

As , the subsequence weakly converges to . Denote , since is firmly nonexpansive, hence and are averaged and nonexpansive. So the subsequence converges weakly to a fixed point of the operator . We now show that that is with and From we have

since T and H are Lipschitz continuous, thus and are bounded. In addition, , hence as . Since the subsequence converges weakly to , therefore, the function f is lower semi-continuous and as , then we can determine

That is,

This implies that is a fixed point of or , then we can have or . Moreover, the point is a fixed point of the operator , which means that . Since hence , consequently , This implies that is a stationary (fixed) point of , in fact, . Furthermore, from (48) and the fact that is demiclosed at zero, then for Hence

First, we show that strongly converges to , where is the unique solution of the variational inequality (VI):

For us to achieve our goal, we prove that . Choose a subsequence of such that . Since and using (10), we have

Furthermore, we make use of Lemma 4, Lemma 4(i), and (51) to obtain that , implying that sequence strongly converges to . Case A is concluded.

CASE B: Now we assume that is not monotonically decreasing. Then for some and , we define by the following:

Moreover, is increasing with and

We can apply a similar argument to the one used in Case A and conclude that

Thus, , where is the weak subsequential limit of . Also, we have

Thus, we follow from Lemma 4(i) and we have

for some and where

Since then from , we obtain the following results:

Hence, we obtain:

Therefore, we obtain the results below:

Since the sequence is bounded and , it follows from Equation and Remark 1.

We can conclude that , the following statement holds:

Hence, . Therefore, the sequence converges strongly to q. This completes the proof. □

The result presented in Theorem 1 improves on the findings of [26]. Recall that every quasi-nonexpansive mapping is 0-demicontractive. Therefore, the same result can be used to approximate a common solution of the SVIP and the fixed point problem for a finite family of multivalued quasi-nonexpansive mappings. The following remark highlights the contributions of this paper:

Remark 3.

- (i)

- A new optimal choice of the inertial extrapolation technique is introduced. This can also be adapted for other iterative algorithms to perform better.

- (ii)

- The algorithm achieves a strong convergence result without imposing strong conditions on the control parameters.

- (iii)

- The self-adaptive technique removes the need for a prior estimate of the norm of the bounded linear operator at every iteration.

- (iv)

- The algorithm produces suitable solutions that approximate the entire set of solutions Γ as stated in , using appropriate starting points. This feature sets it apart from Tikhonov-type regularization methods, which always converge to the same solution sequence. We find this attribute particularly intriguing.
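To illustrate the idea behind item (iii), the following is a minimal sketch of one self-adaptive stepsize rule of the type found in the SVIP literature. It is not claimed to be the exact rule of Algorithm 11. The assumptions are: the toy operators m1(a) = a − c1 and m2(y) = y − c2 with c2 = B c1; the functions f(a) = ½‖(I − J2)Ba‖², F(a) = Bᵀ(I − J2)Ba, and H(a) = (I − J1)a; and the stepsize τ = ρ f(a) / (‖F(a)‖² + ‖H(a)‖²). The relaxation parameter `rho` and all function names are hypothetical. The point of the sketch is that the norm of B is never computed.

```python
def matvec(M, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def transpose(M):
    """Transpose of a matrix stored as a list of rows (the adjoint in R^n)."""
    return [list(col) for col in zip(*M)]


def resolvent_shift(v, center, lam):
    """Resolvent of m(v) = v - center, namely (v + lam * center) / (1 + lam)."""
    return [(x + lam * c) / (1.0 + lam) for x, c in zip(v, center)]


def self_adaptive_svip(B, c1, c2, a0, rho=1.0, lam=1.0, iters=200):
    """Iterate a <- a - tau * (F(a) + H(a)) with a stepsize built from the iterate."""
    Bt = transpose(B)
    a = list(a0)
    for _ in range(iters):
        Ba = matvec(B, a)
        res2 = [y - j for y, j in zip(Ba, resolvent_shift(Ba, c2, lam))]
        F = matvec(Bt, res2)                                           # B^T (I - J2) B a
        H = [x - j for x, j in zip(a, resolvent_shift(a, c1, lam))]    # (I - J1) a
        f = 0.5 * sum(r * r for r in res2)
        denom = sum(g * g for g in F) + sum(h * h for h in H)
        if denom == 0.0:
            break  # a already solves both inclusions
        tau = rho * f / denom  # no operator norm is needed here
        a = [x - tau * (g + h) for x, g, h in zip(a, F, H)]
    return a


B = [[2.0, 0.0], [0.0, 1.0]]
c1 = [1.0, -2.0]
c2 = matvec(B, c1)
solution = self_adaptive_svip(B, c1, c2, [5.0, 5.0])
```

On this toy problem the iterates approach c1. Replacing B by a matrix with a much larger norm requires no retuning of the stepsize, in contrast to a fixed-stepsize scheme.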

4. Numerical Illustrations

We provide numerical examples that demonstrate the effectiveness and efficiency of the proposed algorithm. We compare the performance of Algorithm 11 with Algorithms 1, 2, 3, and 10. All codes were written in MATLAB R2020b and run on a desktop PC with an Intel(R) Core(TM) i7-6600U CPU @ 3.00 GHz and 32.00 GB of RAM.

Example 1.

Let and be defined by

It is easy to check that the resolvent operators concerning and are defined by

for and Also, let be defined by

It is clear that and Thus,

Furthermore,

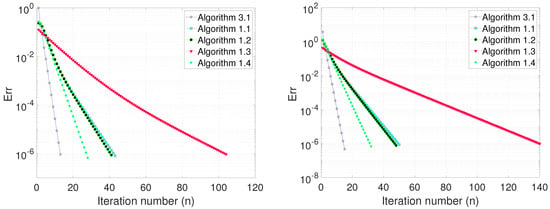

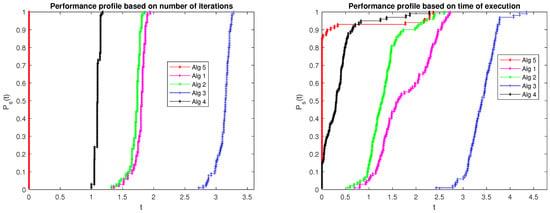

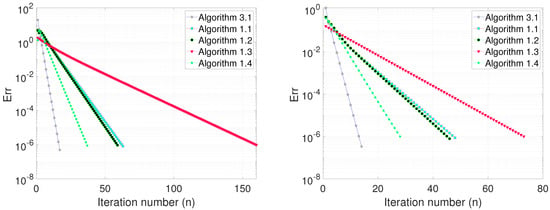

Hence, is -demicontractive with . Moreover, the solution set . We choose the following parameters for Algorithm 11: . For Algorithm 1, we take ; for Algorithm 2, we take ; for Algorithm 3, we take ; and for Algorithm 10, we take . We test the algorithms using the following initial points:

- Case I: eye(3,1) and rand(3,1),

- Case II: rand(3,1) and rand(3,1),

- Case III: randn(3,1) and randn(3,1),

- Case IV: ones(3,1) and rand(3,1),

where "eye", "randn", "rand", and "ones" are MATLAB functions. We used as the stopping criterion for all implementations. The numerical results are shown in Table 1 and Figure 1. Furthermore, we ran the algorithms from 100 randomly generated starting points and compared them using the performance profile metric introduced by Dolan and Moré [34], which is widely accepted as a benchmark for comparing the performance of algorithms. The details of the setup of the performance profile can be found in [34]. In particular, for each algorithm and case , we define a parameter , which is the computational cost of algorithm for solving problem case , such as the number of iterations, the time of execution, or the error value. The performance of each algorithm is scaled relative to the best performance of any algorithm in , which yields the performance ratio

Table 1.

Numerical results for Example 1.

Figure 1.

Example 1, (Top Left): Case I; (Top Right): Case II, (Bottom Left): Case III; (Bottom Right): Case IV.

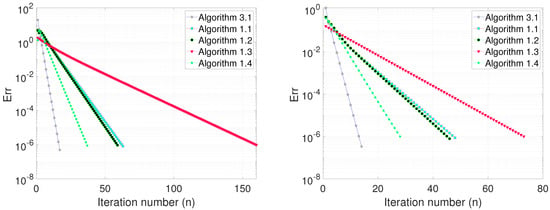

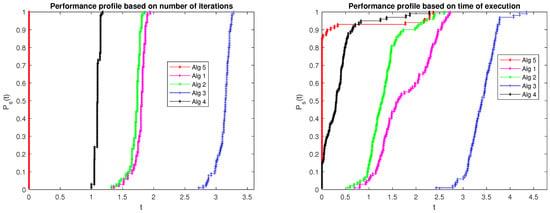

We select a parameter such that for all p and s, and only if solver s is unable to solve problem p. It is worth noting that the choice of does not affect the performance evaluation, as explained in [34]. To determine an overall assessment of each solver’s performance, we use the following measurement:

where the probability represents the likelihood that solver achieves a performance ratio within a factor of the best possible ratio. The performance profile for a solver is a non-decreasing, piecewise constant function that is continuous from the right at each breakpoint, with defined as the cumulative distribution function of the performance ratio. The probability denotes the chance that the solver achieves the best performance among all solvers. The performance profile results (Figure 2) show that Algorithm 11 has the best performance for 100% of the cases considered in terms of the number of iterations. In contrast, Algorithm 3 has the worst performance. Moreover, Algorithm 10 performs better than Algorithms 1–3 even in the worst-case scenarios. Also, Algorithm 11 has the best performance for about 82% of the cases in terms of the time of execution, followed by Algorithm 10 for about 18% of the cases. In contrast, Algorithm 3 has the worst performance in terms of the time of execution. It is worth noting that despite the self-adaptive technique used in selecting the stepsize for Algorithm 3, its performance is worse than that of the other methods.
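The performance profile computation described above can be sketched as follows, assuming the Dolan and Moré construction: each cost is divided by the best cost on the same problem, and the profile value at a factor `tau` is the fraction of problems on which the ratio is at most `tau`. The cost table below is made-up illustrative data, not the results of this paper, and the names `costs`, `solver_a`, and `solver_b` are hypothetical. A failure is encoded as an infinite cost.

```python
import math


def performance_profile(costs, tau):
    """Fraction of problems each solver handles within a factor tau of the best.

    costs maps a solver name to a list of costs, one per problem
    (math.inf marks a failure on that problem).
    """
    solvers = list(costs)
    n_problems = len(costs[solvers[0]])
    best = [min(costs[s][p] for s in solvers) for p in range(n_problems)]
    profile = {}
    for s in solvers:
        within = sum(1 for p in range(n_problems) if costs[s][p] <= tau * best[p])
        profile[s] = within / n_problems
    return profile


# Made-up iteration counts for two hypothetical solvers on four problems.
costs = {
    "solver_a": [10.0, 20.0, 30.0, 40.0],
    "solver_b": [20.0, 20.0, 15.0, math.inf],
}
at_one = performance_profile(costs, 1.0)
at_two = performance_profile(costs, 2.0)
```

The value at `tau = 1.0` is the fraction of problems on which a solver is the best (ties included), and the limit for large `tau` is the fraction of problems the solver manages to solve at all.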

Figure 2.

Performance profile results for Example 1 in terms of number of iterations (left) and time of execution (right).

Example 2.

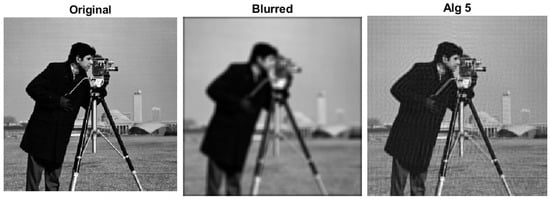

We apply our algorithm to an image reconstruction problem that can be modeled as the Least Absolute Shrinkage and Selection Operator (LASSO) problem described in Tibshirani’s work [35]. Alternatively, it can be modeled as an underdetermined linear system given by

where a is the original image in , m is the blurring operator in , ϵ is noise, and z is the degraded or blurred data from which the original image must be recovered. Typically, this can be reformulated as a convex unconstrained minimization problem given by

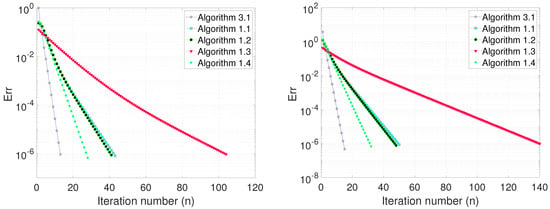

where is the Euclidean norm of a and is the -norm of a. This problem has proved to be a valuable tool in various scientific and engineering fields. Over the years, several iterative techniques have been developed to solve Equation (62), with the earliest being the projection approach introduced by Figueiredo et al. [36]. Equivalently, the LASSO problem (62) can be expressed as an SVIP when and , where and are the indicator functions on C and Q, respectively. We aim to reconstruct the original image a from the blurred image z. The image is in greyscale and has a width of M pixels and a height of N pixels, with each pixel value within the range. The total number of pixels in the image is . The signal-to-noise ratio (SNR), which reflects the amount of noise present in the restored image, is used to evaluate the quality of the resulting image, and it is defined by

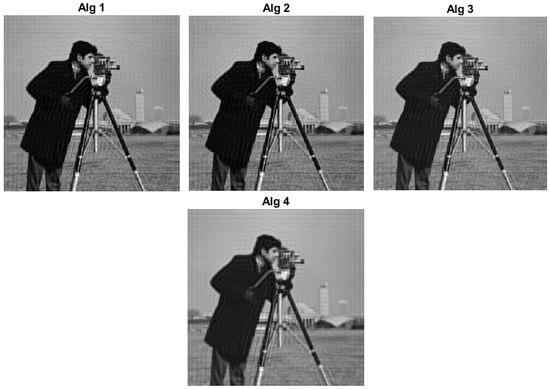

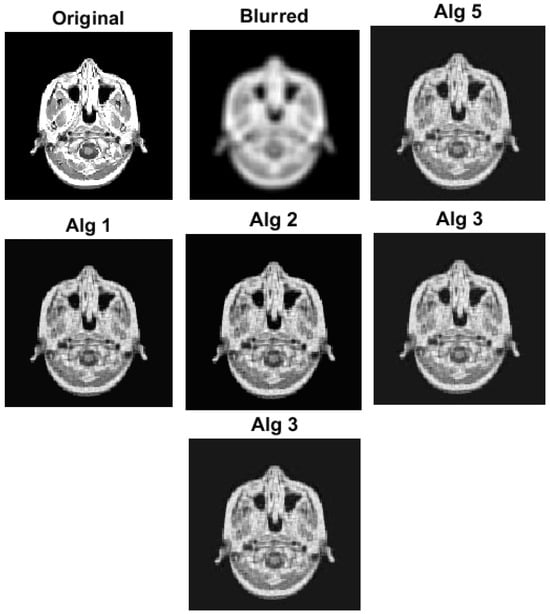

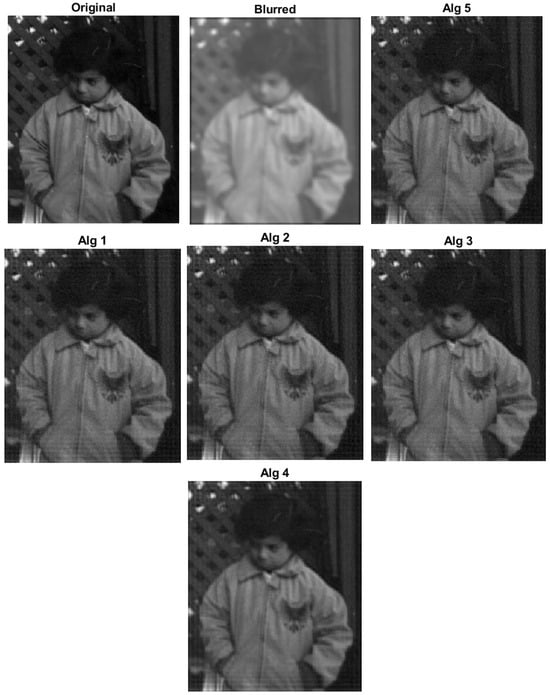

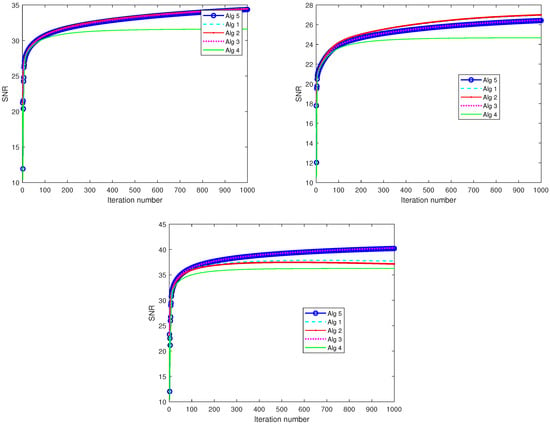

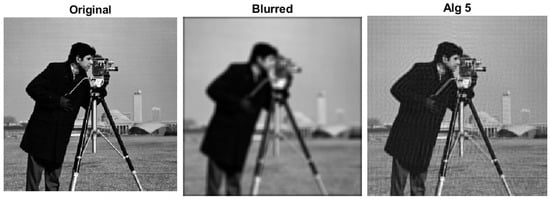

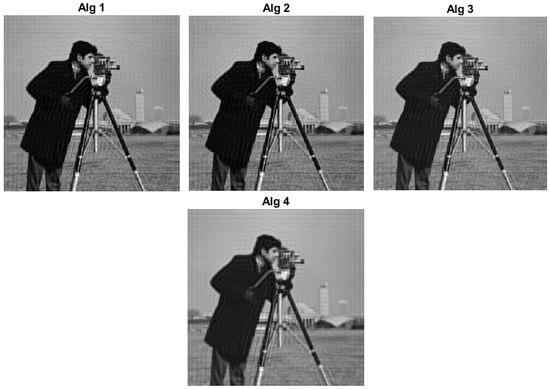

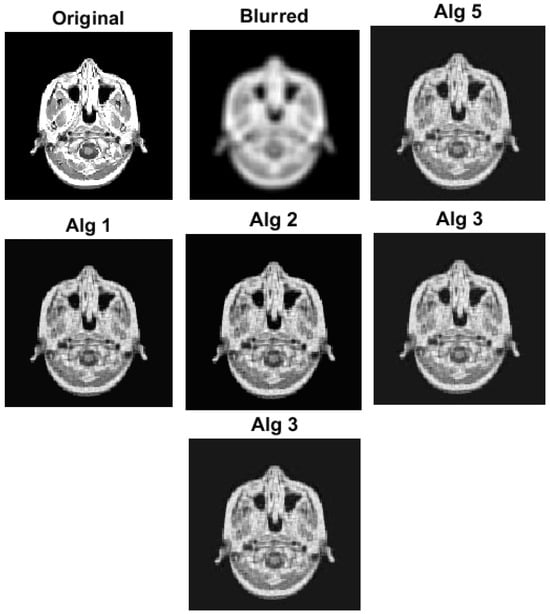

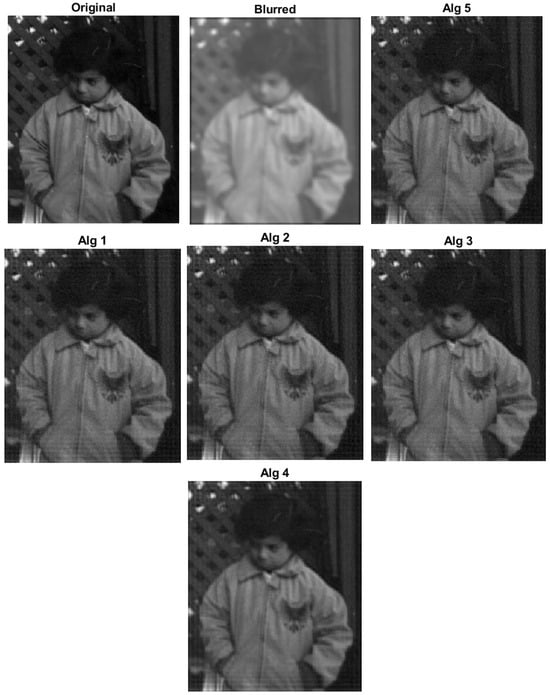

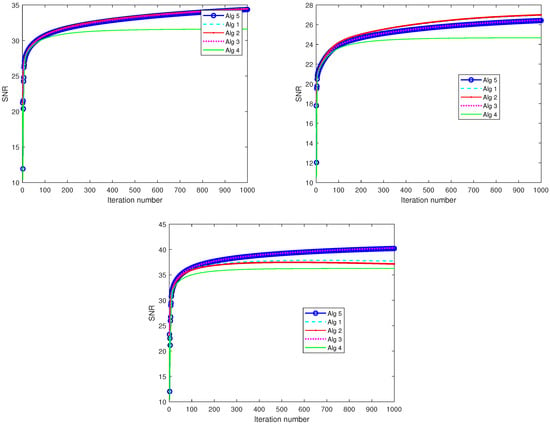

with a and being the original and restored images, respectively. In image restoration, the quality of the restored image is typically measured by its signal-to-noise ratio (SNR), where a higher SNR indicates better quality. To evaluate the effectiveness of our approach, we conducted experiments using three test images: Cameraman (256 × 256), Magnetic Resonance Imaging (MRI) (128 × 128), and Pout (291 × 240), all of which were obtained from the Image Processing Toolbox in MATLAB. Specifically, we degraded each test image using a Gaussian 7 × 7 blur kernel with a standard deviation of 4. We ran the algorithms using the following control parameters. For Algorithm 11, we take ; for Algorithm 1, we take ; for Algorithm 2, we take ; for Algorithm 3, we take ; and for Algorithm 10, we take . We also choose the initial values as and . The numerical results are shown in Figures 3–6 and Table 2. It is easy to see that all the algorithms efficiently reconstruct the blurred image. Though the performance of the algorithms varies in terms of the quality of the reconstructed image, we note that Algorithm 11 was able to reconstruct the images faster than the other algorithms used in the experiments. This also emphasizes the importance of the proposed algorithm.
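Two ingredients of this experiment can be sketched in a few lines. The following is an illustration under stated assumptions, not the authors' MATLAB code. The blur kernel is built as a normalized 7 × 7 Gaussian with standard deviation 4, and the SNR is computed with a commonly used definition, 20 log10(‖a‖ / ‖a − a*‖), which is assumed here because the displayed formula did not survive in the text above. The function names and the two-pixel example are hypothetical.

```python
import math


def gaussian_kernel(size, sigma):
    """Normalized size-by-size Gaussian blur kernel centered on the middle cell."""
    half = (size - 1) / 2.0
    raw = [
        [math.exp(-((i - half) ** 2 + (j - half) ** 2) / (2.0 * sigma ** 2))
         for j in range(size)]
        for i in range(size)
    ]
    total = sum(sum(row) for row in raw)
    return [[v / total for v in row] for row in raw]


def snr_db(original, restored):
    """SNR in decibels for flattened images given as equal-length lists."""
    signal = math.sqrt(sum(a * a for a in original))
    error = math.sqrt(sum((a - r) ** 2 for a, r in zip(original, restored)))
    return 20.0 * math.log10(signal / error)


kernel = gaussian_kernel(7, 4.0)
kernel_mass = sum(sum(row) for row in kernel)

# Hypothetical two-pixel "image": norm 5, reconstruction error 0.5.
example_snr = snr_db([3.0, 4.0], [3.0, 4.5])
```

Because the kernel is normalized to unit mass, blurring preserves the mean intensity of the image. With this definition, a tenfold reduction of the reconstruction error raises the SNR by 20 dB.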

Figure 3.

Image reconstruction using cameraman (256 × 256) image.

Figure 4.

Image reconstruction using MRI (128 × 128) image.

Figure 5.

Image reconstruction using Pout (291 × 240) image.

Figure 6.

Graphs of SNR against iteration number. Top Left: Cameraman; Top Right: MRI; and Bottom: Pout.

Table 2.

Computational results for Example 2.

5. Conclusions

Our paper proposes a novel inertial self-adaptive iterative technique that utilizes viscosity approximation to obtain a common solution for split variational inclusion problems and fixed point problems in real Hilbert spaces. We have selected an optimal inertial extrapolation term to enhance the algorithm’s accuracy. Additionally, we incorporated a self-adaptive technique that allows for stepsize adjustment without relying on prior knowledge of the norm of the bounded linear operator. Our method has been proven to converge strongly, and we have included numerical implementations to demonstrate its efficiency and effectiveness.

Author Contributions

Conceptualization, M.D.N. and L.O.J.; methodology, M.D.N. and L.O.J.; software, I.O.A.; validation, M.A. and L.O.J.; formal analysis, M.D.N. and L.O.J.; investigation, M.D.N.; resources, M.A.; data curation, I.O.A.; writing—original draft preparation, M.D.N.; writing—review and editing, L.O.J.; visualization, L.O.J. and I.O.A.; supervision, M.A. and L.O.J.; project administration, M.D.N.; funding acquisition, M.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Moudafi, A. Split monotone variational inclusions. J. Optim. Theory Appl. 2011, 150, 275–283. [Google Scholar] [CrossRef]

- Censor, Y.; Elfving, T. A multiprojection algorithms using Bregman projection in a product space. Numer. Algorithms 1994, 8, 221–239. [Google Scholar] [CrossRef]

- Censor, Y.; Bortfeld, T.; Martin, B.; Trofimov, A. A unified approach for inversion problems in intensity-modulated radiation therapy. Phys. Med. Biol. 2006, 51, 2353–2365. [Google Scholar] [CrossRef] [PubMed]

- Censor, Y.; Gibali, A.; Reich, S. Algorithms for the split variational inequality problem. Numer. Algorithms 2012, 59, 301–323. [Google Scholar] [CrossRef]

- Byrne, C. Iterative oblique projection onto convex subsets and the split feasibility problem. Inverse Probl. 2002, 18, 441–453. [Google Scholar] [CrossRef]

- Combettes, P.L. The convex feasibility problem in image recovery. In Advances in Imaging and Electron Physics; Hawkes, P., Ed.; Academic Press: New York, NY, USA, 1996; pp. 155–270. [Google Scholar]

- Byrne, C.; Censor, Y.; Gibali, A.; Reich, S. Weak and strong convergence of algorithms for the split common null point problem. J. Nonlinear Convex. Anal. 2012, 13, 759–775. [Google Scholar]

- Marino, G.; Xu, H.K. A general iterative method for nonexpansive mapping in Hilbert spaces. J. Math. Anal. Appl. 2006, 318, 43–52. [Google Scholar] [CrossRef]

- Moudafi, A. Viscosity approximation methods for fixed-points problems. J. Math. Anal. Appl. 2000, 241, 46–55. [Google Scholar] [CrossRef]

- Kazmi, K.R.; Rizvi, S.H. An iterative method for split variational inclusion problem and fixed point problem for a nonexpansive mapping. Optim. Lett. 2014, 8, 1113–1124. [Google Scholar] [CrossRef]

- Alvarez, F.; Attouch, H. An inertial proximal method for maximal monotone operators via discretization of a nonlinear oscillator with damping. Set-Valued Anal. 2001, 9, 3–11. [Google Scholar] [CrossRef]

- Polyak, B.T. Some methods of speeding up the convergence of iterarive methods. Z. Vychisl. Mat. Mat. Fiz. 1964, 4, 1–17. [Google Scholar]

- Moudafi, A.; Oliny, M. Convergence of a splitting inertial proximal method for monotone operators. J. Comput. Appl. Math. 2003, 155, 447–454. [Google Scholar] [CrossRef]

- Deepho, J.; Kumam, P. The hybrid steepest descent method for split variational inclusion and constrained convex minimization problem. Abstr. Appl. Anal. 2014, 2014, 365203. [Google Scholar] [CrossRef]

- Anh, P.K.; Thong, D.V.; Dung, V.T. A strongly convergent Mann-type inertial algorithm for solving split variational inclusion problems. Optim. Eng. 2021, 22, 159–185. [Google Scholar] [CrossRef]

- Maingé, P.E. Regularized and inertial algorithms for common fixed points of nonlinear operators. J. Math. Anal. Appl. 2008, 34, 876–887. [Google Scholar] [CrossRef]

- Wangkeeree, R.; Rattanaseeha, K. The general iterative methods for split variational inclusion problem and fixed point problem in Hilbert spaces. J. Comput. Anal. Appl. 2018, 25, 19–31. [Google Scholar]

- Long, L.V.; Thong, D.V.; Dung, V.T. New algorithms for the split variational inclusion problems and application to split feasibility problems. Optimization 2019, 68, 2339–2367.

- Dong, Q.L.; Lu, Y.Y.; Yang, J. The extragradient algorithm with inertial effects for solving the variational inequality. Optimization 2016, 65, 2217–2226.

- Alakoya, T.O.; Jolaoso, L.O.; Mewomo, O.T. Modified inertial subgradient extragradient method with self-adaptive stepsize for solving monotone variational inequality and fixed point problems. Optimization 2020, 545–574.

- Chuang, C.S. Hybrid inertial proximal algorithm for the split variational inclusion problem in Hilbert spaces with applications. Optimization 2017, 66, 777–792.

- Kesornprom, S.; Cholamjiak, P. Proximal type algorithms involving linesearch and inertial technique for split variational inclusion problem in Hilbert spaces with applications. Optimization 2019, 68, 2369–2395.

- Tang, Y. Convergence analysis of a new iterative algorithm for solving split variational inclusion problem. J. Ind. Manag. Optim. 2020, 16, 235–259.

- Tan, B.; Qin, X.; Yao, J.C. Strong convergence of self-adaptive inertial algorithms for solving split variational inclusion problems with applications. J. Sci. Comput. 2021, 87, 20.

- Zhou, Z.; Tan, B.; Li, S. Adaptive hybrid steepest descent algorithms involving an inertial extrapolation term for split monotone variational inclusion problems. Math. Methods Appl. Sci. 2022, 45, 8835–8853.

- Alakoya, T.O.; Jolaoso, L.O.; Mewomo, O.T. A self adaptive inertial algorithm for solving split variational inclusion and fixed point problems with applications. J. Ind. Manag. Optim. 2022, 18, 239–265.

- Aubin, J.P.; Siegel, J. Fixed points and stationary points of dissipative multivalued maps. Proc. Am. Math. Soc. 1980, 78, 391–398.

- Panyanak, B. Endpoints of multivalued nonexpansive mappings in geodesic spaces. Fixed Point Theory Appl. 2015, 2015, 147.

- Kahn, M.S.; Rao, K.R.; Cho, Y.J. Common stationary points for set-valued mappings. Int. J. Math. Math. Sci. 1993, 16, 733–736.

- Jailoka, P.; Suantai, S. The split common fixed point problem for multivalued demicontractive mappings and its applications. RACSAM 2019, 113, 689–706.

- Chidume, C.E.; Ezeora, J.N. Krasnoselskii-type algorithm for family of multi-valued strictly pseudo-contractive mappings. Fixed Point Theory Appl. 2014, 2014, 111.

- Maingé, P.E. Convergence theorems for inertial KM-type algorithms. J. Comput. Appl. Math. 2008, 219, 223–236.

- Maingé, P.E. A hybrid extragradient viscosity method for monotone operators and fixed point problems. SIAM J. Control Optim. 2008, 47, 1499–1515.

- Dolan, E.D.; Moré, J.J. Benchmarking optimization software with performance profiles. Math. Program. 2002, 91, 201–213.

- Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B 1996, 58, 267–288.

- Figueiredo, M.A.T.; Nowak, R.D.; Wright, S.J. Gradient projection for sparse reconstruction: Application to compressed sensing and other inverse problems. IEEE J. Sel. Top. Signal Process. 2007, 1, 586–597.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).