Abstract

Recently, the split inverse problem has received great research attention due to its several applications in diverse fields. In this paper, we study a new class of split inverse problems called the split variational inequality problem with multiple output sets. We propose a new Tseng extragradient method, which uses self-adaptive step sizes for approximating the solution to the problem when the cost operators are pseudomonotone and non-Lipschitz in the framework of Hilbert spaces. We point out that while the cost operators are non-Lipschitz, our proposed method does not involve any linesearch procedure for its implementation. Instead, we employ a more efficient self-adaptive step size technique with known parameters. In addition, we employ the relaxation method and the inertial technique to improve the convergence properties of the algorithm. Moreover, under some mild conditions on the control parameters and without the knowledge of the operators’ norm, we prove that the sequence generated by our proposed method converges strongly to a minimum-norm solution to the problem. Finally, we apply our result to study certain classes of optimization problems, and we present several numerical experiments to demonstrate the applicability of our proposed method. Several of the existing results in the literature in this direction could be viewed as special cases of our results in this study.

Keywords:

split inverse problems; non-Lipschitz operators; pseudomonotone operators; Tseng’s extragradient method; relaxation and inertial techniques

MSC:

65K15; 47J25; 65J15; 90C33

1. Introduction

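Let $H$ be a real Hilbert space endowed with inner product $\langle \cdot, \cdot \rangle$ and induced norm $\|\cdot\|$. Let $C$ be a nonempty, closed and convex subset of $H$, and let $A: H \to H$ be an operator. Recall that the variational inequality problem (VIP) is formulated as finding an element $x^* \in C$ such that

$$\langle Ax^*, y - x^* \rangle \geq 0 \quad \forall y \in C. \tag{1}$$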

The solution set of the VIP (1) is denoted by $VI(C, A)$. Fichera [1] and Stampacchia [2] independently introduced variational inequality theory and initiated its study. The variational inequality model provides a general and useful framework for solving problems in engineering, optimal control, data science, mathematical programming, economics, etc. (see [3,4,5,6,7,8] and the references therein). In recent times, the VIP has received considerable research attention owing to its applications in diverse fields, such as economics, operations research, optimization theory, structural analysis, science and engineering (see [9,10,11,12,13,14] and the references therein). Several methods have been proposed and analyzed for solving the VIP (see [15,16,17,18,19] and the references therein).
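A standard fact that is useful for numerical checks is that a point solves a VIP over $C$ if and only if it is a fixed point of the map $x \mapsto P_C(x - \lambda Ax)$ for any $\lambda > 0$, where $P_C$ is the metric projection onto $C$. The following minimal sketch evaluates the corresponding residual. The box-shaped feasible set, the affine operator `A` and all function names are illustrative assumptions, not objects taken from this paper.

```python
def project_box(x, lo, hi):
    """Metric projection onto the box C = [lo, hi]^n (componentwise clipping)."""
    return [min(max(xi, lo), hi) for xi in x]


def vip_residual(A, x, lo, hi, lam=1.0):
    """Return ||x - P_C(x - lam * A(x))||, which vanishes exactly at VIP solutions."""
    Ax = A(x)
    p = project_box([xi - lam * ai for xi, ai in zip(x, Ax)], lo, hi)
    return sum((xi - pi) ** 2 for xi, pi in zip(x, p)) ** 0.5


# Hypothetical test operator A(x) = x - b on C = [0, 1]^2, with b outside C.
b = [2.0, -0.5]


def A(x):
    return [xi - bi for xi, bi in zip(x, b)]


x_star = project_box(b, 0.0, 1.0)  # for this particular A, the VIP solution is P_C(b)
res_at_solution = vip_residual(A, x_star, 0.0, 1.0)
res_elsewhere = vip_residual(A, [0.5, 0.5], 0.0, 1.0)
print(res_at_solution, res_elsewhere)
```

The residual is zero at the solution and strictly positive at the other test point, so it can serve as a simple stopping measure in experiments.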

One of the well-known and highly efficient methods is the Tseng extragradient method [20] (also known as the forward–backward–forward algorithm). It is a two-step iterative method that requires only a single computation of the projection onto the feasible set per iteration. Several authors have modified and improved the Tseng extragradient method to approximate the solution of the VIP (1) (for instance, see [19,21,22,23] and the references therein).
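To make the two-step structure concrete, here is a minimal sketch of the classical forward–backward–forward iteration $y_n = P_C(x_n - \lambda Ax_n)$, $x_{n+1} = y_n - \lambda(Ay_n - Ax_n)$ in its textbook setting: a monotone, $L$-Lipschitz operator and a fixed step $\lambda \in (0, 1/L)$. This is not the relaxed inertial method proposed in this paper. The operator, the box constraint and the step size are assumptions chosen for illustration.

```python
def project_box(x, lo=-1.0, hi=1.0):
    """Projection onto the box [lo, hi]^n."""
    return [min(max(xi, lo), hi) for xi in x]


def A(x):
    """Illustrative monotone operator A(x) = Mx with M = [[1, 2], [-2, 1]] (Lipschitz constant sqrt(5))."""
    return [x[0] + 2.0 * x[1], -2.0 * x[0] + x[1]]


def tseng(A, project, x0, lam, iters=200):
    """Classical Tseng iteration: one projection and two operator evaluations per step."""
    x = list(x0)
    for _ in range(iters):
        Ax = A(x)
        # Forward-backward step: the only projection in the iteration.
        y = project([xi - lam * ai for xi, ai in zip(x, Ax)])
        Ay = A(y)
        # Second forward step: a correction that needs no further projection.
        x = [yi - lam * (ayi - axi) for yi, ayi, axi in zip(y, Ay, Ax)]
    return x


# lam = 0.3 is below 1 / sqrt(5), the bound required in the Lipschitz setting.
x_final = tseng(A, project_box, [1.0, -1.0], lam=0.3)
print(x_final)
```

For this operator the unique solution of the VIP over the box is the origin, and the iterates approach it.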

Another active area of research interest in recent years is the split inverse problem (SIP). The SIP finds applications in various fields, such as in medical image reconstruction, intensity-modulated radiation therapy, signal processing, phase retrieval, data compression, etc. (for instance, see [24,25,26,27]). The SIP model is presented as follows:

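$$\text{find } x^* \in H_1 \text{ that solves } IP_1 \tag{2}$$

such that

$$y^* := Tx^* \in H_2 \text{ solves } IP_2, \tag{3}$$

where $H_1$ and $H_2$ are real Hilbert spaces, $IP_1$ denotes an inverse problem formulated in $H_1$, $IP_2$ denotes an inverse problem formulated in $H_2$, and $T: H_1 \to H_2$ is a bounded linear operator.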

The first instance of the SIP, called the split feasibility problem (SFP), was introduced in 1994 by Censor and Elfving [26] for modeling inverse problems that arise from medical image reconstruction. The SFP has numerous areas of applications, for instance, in signal processing, biomedical engineering, control theory, approximation theory, geophysics, communications, etc. [25,27,28]. The SFP is formulated as follows:

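$$\text{find } x^* \in C \text{ such that } y^* = Tx^* \in Q, \tag{4}$$

where $C$ and $Q$ are nonempty, closed and convex subsets of Hilbert spaces $H_1$ and $H_2$, respectively, and $T: H_1 \to H_2$ is a bounded linear operator.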

A well-known method for solving the SFP is the CQ method proposed by Byrne [29]. The CQ method has been improved and extended by several researchers. Moreover, many authors have proposed and analyzed several other iterative methods for approximating the solution of SFP (4) both in the framework of Hilbert and Banach spaces (for instance, see [25,27,28,30,31]).
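As an illustration of the CQ method, the sketch below implements the iteration $x_{n+1} = P_C\big(x_n - \gamma T^{*}(I - P_Q)Tx_n\big)$ with a fixed step $\gamma \in (0, 2/\|T\|^2)$ in a finite-dimensional setting. The matrix `T`, the two boxes and the helper names are assumptions made for this example only.

```python
def matvec(T, x):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(tij * xj for tij, xj in zip(row, x)) for row in T]


def transpose(T):
    return [list(col) for col in zip(*T)]


def clip(x, lo, hi):
    """Projection onto the box [lo, hi]^n."""
    return [min(max(xi, lo), hi) for xi in x]


def cq(T, P_C, P_Q, x0, gamma, iters=300):
    """CQ iteration with a fixed step gamma in (0, 2 / ||T||^2)."""
    Tt = transpose(T)
    x = list(x0)
    for _ in range(iters):
        Tx = matvec(T, x)
        r = [a - b for a, b in zip(Tx, P_Q(Tx))]   # (I - P_Q) T x
        g = matvec(Tt, r)                          # T^T (I - P_Q) T x
        x = P_C([xi - gamma * gi for xi, gi in zip(x, g)])
    return x


T = [[2.0, 0.0], [0.0, 3.0]]               # ||T||^2 = 9, so gamma must be below 2/9


def P_C(x):
    return clip(x, 0.0, 1.0)               # C = [0, 1]^2


def P_Q(y):
    return clip(y, 2.0, 3.0)               # Q = [2, 3]^2


x_sfp = cq(T, P_C, P_Q, [0.0, 0.0], gamma=0.1)
Tx_sfp = matvec(T, x_sfp)
print(x_sfp, Tx_sfp)
```

The fixed step requires knowledge of $\|T\|$, which is the kind of requirement that self-adaptive step sizes are designed to avoid.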

Censor et al. [32] introduced an important generalization of the SFP called the split variational inequality problem (SVIP). The SVIP is defined as follows:

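$$\text{find } x^* \in C \text{ that solves } \langle A_1 x^*, x - x^* \rangle \geq 0 \quad \forall x \in C \tag{5}$$

such that

$$y^* = Tx^* \in Q \text{ solves } \langle A_2 y^*, y - y^* \rangle \geq 0 \quad \forall y \in Q, \tag{6}$$

where $A_1: H_1 \to H_1$ and $A_2: H_2 \to H_2$ are single-valued operators. Many authors have proposed and analyzed iterative techniques for solving the SVIP (e.g., see [33,34,35,36]).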

Very recently, Reich and Tuyen [37] introduced and studied a new split inverse problem called the split feasibility problem with multiple output sets (SFPMOS) in the framework of Hilbert spaces. Let $C$ and $Q_i$ be nonempty, closed and convex subsets of Hilbert spaces $H$ and $H_i$, $i = 1, 2, \ldots, N$, respectively. Let $T_i: H \to H_i$, $i = 1, 2, \ldots, N$, be bounded linear operators. The SFPMOS is formulated as follows: find an element $x^\dagger$ such that

$$x^\dagger \in C \cap \Big( \bigcap_{i=1}^{N} T_i^{-1}(Q_i) \Big) \neq \emptyset. \tag{7}$$

Reich and Tuyen [38] proposed and analyzed two iterative methods for solving the SFPMOS (7) in the framework of Hilbert spaces. The proposed algorithms are presented as follows:

and

where is a strict contraction, and The authors obtained weak and strong convergence results for Algorithm (8) and Algorithm (9), respectively.

Motivated by the importance and the many applications of split inverse problems, in this paper we examine a new class of split inverse problems called the split variational inequality problem with multiple output sets. Let $H, H_i$, $i = 1, 2, \ldots, N$, be real Hilbert spaces and let $C, C_i$ be nonempty, closed and convex subsets of $H$ and $H_i$, respectively. Let $T_i: H \to H_i$ be bounded linear operators and let $A: H \to H$, $A_i: H_i \to H_i$ be mappings. The split variational inequality problem with multiple output sets (SVIPMOS) is formulated as finding a point $x^* \in C$ such that

$$x^* \in VI(C, A) \cap \Big( \bigcap_{i=1}^{N} T_i^{-1}\big(VI(C_i, A_i)\big) \Big) \neq \emptyset. \tag{10}$$
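One way to read the SVIPMOS is that a single point must solve a variational inequality in the base space while each of its images under the bounded linear operators solves a variational inequality in the corresponding output space. The following minimal sketch measures how far a candidate point is from satisfying all of these at once by summing the natural residuals $\|u - P(u - Fu)\|$. The finite-dimensional data, the single output space and all names are hypothetical and serve only to illustrate the problem structure.

```python
def matvec(T, x):
    return [sum(tij * xj for tij, xj in zip(row, x)) for row in T]


def norm(v):
    return sum(vi * vi for vi in v) ** 0.5


def natural_residual(F, project, u):
    """||u - P(u - F(u))||: zero exactly when u solves the VI for (F, feasible set of P)."""
    Fu = F(u)
    p = project([ui - fi for ui, fi in zip(u, Fu)])
    return norm([ui - pi for ui, pi in zip(u, p)])


def svipmos_residual(x, A, P_C, outputs):
    """Sum of residuals in the base space and in every output space.

    outputs is a list of triples (T_i, A_i, P_Ci).
    """
    total = natural_residual(A, P_C, x)
    for T_i, A_i, P_i in outputs:
        total += natural_residual(A_i, P_i, matvec(T_i, x))
    return total


# Hypothetical data: H = R^2 with C = [-1, 1]^2, one output space H_1 = R with C_1 = [0, 2].
def identity_op(u):
    return list(u)


def P_C(u):
    return [min(max(ui, -1.0), 1.0) for ui in u]


def P_C1(u):
    return [min(max(ui, 0.0), 2.0) for ui in u]


T1 = [[1.0, 1.0]]
outputs = [(T1, identity_op, P_C1)]

res_solution = svipmos_residual([0.0, 0.0], identity_op, P_C, outputs)
res_other = svipmos_residual([0.5, 0.5], identity_op, P_C, outputs)
print(res_solution, res_other)
```

In this toy instance the origin solves both variational inequalities, so its residual is zero, while the second test point violates both.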

In recent times, developing algorithms with high rates of convergence for solving optimization problems has become of great interest to researchers. Two techniques are commonly employed to improve the rate of convergence of iterative methods: the inertial technique and the relaxation technique. The inertial technique, first introduced by Polyak [39], originates from an implicit time discretization method (the heavy ball method) of second-order dynamical systems. The main feature of an inertial algorithm is that it uses the previous two iterates to generate the next iterate. This small change can significantly improve the speed of convergence of an iterative method (for instance, see [21,23,40,41,42,43,44,45]). The relaxation method is another well-known technique for improving the rate of convergence of iterative methods (see, e.g., [46,47,48]). The influence of these two techniques on the convergence properties of iterative methods was investigated in [46].
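The two techniques can be sketched generically as follows: an inertial step forms $w_n = x_n + \theta(x_n - x_{n-1})$ from the previous two iterates, and a relaxation step sets $x_{n+1} = (1 - \rho)w_n + \rho S(w_n)$ for some base update $S$. In the toy example below, $S$ is a gradient step on an ill-conditioned quadratic. The objective, the parameter values $\theta = 0.5$, $\rho = 0.9$ and the function names are assumptions for illustration and are not the parameters of the proposed algorithm.

```python
def grad_step(w, step=0.01):
    """Base update S: one gradient step for f(x) = 0.5 * (x1^2 + 100 * x2^2)."""
    return [w[0] - step * w[0], w[1] - step * 100.0 * w[1]]


def run(theta, rho, tol=1e-6, max_iter=10000):
    """Return the number of iterations needed to bring ||x_n|| below tol (the minimizer is 0)."""
    x_prev = [1.0, 1.0]
    x = [1.0, 1.0]
    for n in range(1, max_iter + 1):
        # Inertial extrapolation using the previous two iterates.
        w = [xi + theta * (xi - pi) for xi, pi in zip(x, x_prev)]
        s = grad_step(w)
        # Relaxation: convex combination of w and the base update S(w).
        x_prev, x = x, [(1.0 - rho) * wi + rho * si for wi, si in zip(w, s)]
        if (x[0] ** 2 + x[1] ** 2) ** 0.5 < tol:
            return n
    return max_iter


plain_iters = run(theta=0.0, rho=1.0)      # no inertia, no relaxation
inertial_iters = run(theta=0.5, rho=0.9)   # inertial and relaxed variant
print(plain_iters, inertial_iters)
```

In this toy run the inertial variant needs fewer iterations than the plain one. This illustrates the idea only and is not a general guarantee.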

In this study, we introduce and analyze the convergence of a relaxed inertial Tseng extragradient method for solving the SVIPMOS (10) in the framework of Hilbert spaces when the cost operators are pseudomonotone and non-Lipschitz. Our proposed algorithm has the following key features:

- The proposed method does not require the Lipschitz continuity condition often imposed on the cost operator in the literature when solving variational inequality problems. In addition, although the cost operators are non-Lipschitz, the design of our algorithm does not involve any linesearch procedure, which could be time-consuming and expensive to implement.

- Our proposed method does not require knowledge of the operators’ norm for its implementation. Rather, we employ a very efficient self-adaptive step size technique with known parameters. Moreover, some of the control parameters are relaxed to enlarge the range of values of the step sizes of the algorithm.

- Our algorithm combines the relaxation method and the inertial techniques to improve its convergence properties.

- The sequence generated by our proposed method converges strongly to a minimum-norm solution to the SVIPMOS (10). Finding the minimum-norm solution to a problem is very important and useful in several practical problems.

Finally, we apply our result to study certain classes of optimization problems, and we carry out several numerical experiments to illustrate the applicability of our proposed method.

This paper is organized as follows: In Section 2, we present some definitions and lemmas needed to analyze the convergence of the proposed algorithm, while in Section 3, we present the proposed method. In Section 4, we discuss the convergence of the proposed method, and in Section 5, we apply our result to study certain classes of optimization problems. In Section 6, we present several numerical experiments with graphical illustrations. Finally, in Section 7, we give a concluding remark.

2. Preliminaries

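Definition 1 ([21,22]). An operator $A: H \to H$ is said to be

- (i) α-strongly monotone, if there exists $\alpha > 0$ such that $\langle Ax - Ay, x - y \rangle \geq \alpha \|x - y\|^2$ for all $x, y \in H$;

- (ii) monotone, if $\langle Ax - Ay, x - y \rangle \geq 0$ for all $x, y \in H$;

- (iii) pseudomonotone, if $\langle Ay, x - y \rangle \geq 0$ implies $\langle Ax, x - y \rangle \geq 0$ for all $x, y \in H$;

- (iv) L-Lipschitz continuous, if there exists a constant $L > 0$ such that $\|Ax - Ay\| \leq L \|x - y\|$ for all $x, y \in H$;

- (v) uniformly continuous, if for every $\epsilon > 0$ there exists $\delta = \delta(\epsilon) > 0$ such that $\|Ax - Ay\| < \epsilon$ whenever $\|x - y\| < \delta$, for all $x, y \in H$;

- (vi) sequentially weakly continuous, if for each sequence $\{x_n\}$ we have that $x_n \rightharpoonup x$ implies $Ax_n \rightharpoonup Ax$.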

Remark 1.

It is known that the following implications hold: (i) $\Longrightarrow$ (ii) $\Longrightarrow$ (iii), but the converses are not generally true. We also note that uniform continuity is a weaker notion than Lipschitz continuity.
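A simple one-dimensional example shows that pseudomonotonicity does not imply monotonicity: the operator $A(x) = 1/(1 + x^2)$ on $\mathbb{R}$ is positive everywhere, hence pseudomonotone, but it is decreasing on $[0, \infty)$, hence not monotone. The sketch below checks both properties on a grid. This operator is a standard textbook illustration and is not taken from this paper.

```python
def A(x):
    """A(x) = 1 / (1 + x^2): strictly positive, decreasing for x > 0."""
    return 1.0 / (1.0 + x * x)


grid = [k / 10.0 for k in range(-50, 51)]

# Monotone: (A(x) - A(y)) * (x - y) >= 0 for all pairs.
monotone = all((A(x) - A(y)) * (x - y) >= 0.0 for x in grid for y in grid)

# Pseudomonotone: A(y) * (x - y) >= 0 implies A(x) * (x - y) >= 0.
pseudomonotone = all(
    A(x) * (x - y) >= 0.0
    for x in grid
    for y in grid
    if A(y) * (x - y) >= 0.0
)

print(monotone, pseudomonotone)
```

For instance, the pair $x = 1$, $y = 0$ gives $(A(1) - A(0))(1 - 0) = -0.5 < 0$, which violates monotonicity.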

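It is well known that if $D$ is a convex subset of $H$, then $A: D \to H$ is uniformly continuous if and only if, for every $\epsilon > 0$, there exists a constant $K < +\infty$ such that

$$\|Ax - Ay\| \leq K \|x - y\| + \epsilon \quad \forall x, y \in D. \tag{11}$$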

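Lemma 1 ([49]). Suppose $\{a_n\}$ is a sequence of nonnegative real numbers, $\{\alpha_n\}$ is a sequence in $(0, 1)$ with $\sum_{n=1}^{\infty} \alpha_n = \infty$ and $\{b_n\}$ is a sequence of real numbers. Assume that

$$a_{n+1} \leq (1 - \alpha_n) a_n + \alpha_n b_n \quad \text{for all } n \geq 1.$$

If $\limsup_{k \to \infty} b_{n_k} \leq 0$ for every subsequence $\{a_{n_k}\}$ of $\{a_n\}$ satisfying $\liminf_{k \to \infty} (a_{n_k + 1} - a_{n_k}) \geq 0$, then $\lim_{n \to \infty} a_n = 0$.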

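Lemma 2 ([50]). Suppose $\{\lambda_n\}$ and $\{\phi_n\}$ are two nonnegative real sequences such that

$$\lambda_{n+1} \leq \lambda_n + \phi_n \quad \forall n \geq 1.$$

If $\sum_{n=1}^{\infty} \phi_n < +\infty$, then $\lim_{n \to \infty} \lambda_n$ exists.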

Lemma 3

([51]).Let H be a real Hilbert space. Then, the following results hold for all and

- (i)

- (ii)

- (iii)

3. Main Results

In this section, we present our proposed iterative method for solving the SVIPMOS (10). We establish our convergence result for the proposed method under the following conditions:

Let be nonempty, closed and convex subsets of real Hilbert spaces respectively, and let be bounded linear operators with adjoints Let be uniformly continuous pseudomonotone operators satisfying the following property:

Moreover, we assume that the solution set and the control parameters satisfy the following conditions:

Assumption B:

- (A1)

- (A2)

- (A3)

- for each

Algorithm 1 is presented as follows:

| Algorithm 1. A Relaxed Inertial Tseng’s Extragradient Method for Solving SVIPMOS (10). |

|

| Set and return to Step 1. |

Remark 2.

Remark 3.

We also note that while the cost operators are non-Lipschitz, our method does not require any linesearch procedure, which could be computationally very expensive to implement. Rather, we employ self-adaptive step size techniques that only require simple computations of known parameters per iteration. Moreover, some of the parameters are relaxed to accommodate larger intervals for the step sizes.
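To illustrate why a self-adaptive rule is cheap compared with a linesearch, the sketch below implements one update of a type that is common in the literature on Tseng-type methods: $\lambda_{n+1} = \min\{\mu \|w_n - y_n\| / \|Aw_n - Ay_n\|, \lambda_n + \phi_n\}$ when $Aw_n \neq Ay_n$, and $\lambda_{n+1} = \lambda_n + \phi_n$ otherwise, with $\{\phi_n\}$ summable. This is an assumed, generic rule and not necessarily the exact update used in Algorithm 1. The names `next_step`, `mu` and `phi` are hypothetical.

```python
def next_step(lam, phi, mu, w, y, Aw, Ay):
    """Generic self-adaptive step update using only quantities already computed in the iteration."""
    num = sum((wi - yi) ** 2 for wi, yi in zip(w, y)) ** 0.5
    den = sum((ai - bi) ** 2 for ai, bi in zip(Aw, Ay)) ** 0.5
    if den == 0.0:
        return lam + phi          # no information from the operator: allow a small increase
    return min(mu * num / den, lam + phi)


# Toy data: A(x) = 3x on the real line, so ||Aw - Ay|| = 3 ||w - y||.
w, y = [1.0], [0.5]
Aw, Ay = [3.0], [1.5]

lam_next = next_step(lam=1.0, phi=0.25, mu=0.5, w=w, y=y, Aw=Aw, Ay=Ay)
lam_flat = next_step(lam=1.0, phi=0.25, mu=0.5, w=w, y=y, Aw=[2.0], Ay=[2.0])
print(lam_next, lam_flat)
```

Each update costs two norm evaluations and no additional operator evaluations, whereas a linesearch may need several operator evaluations per iteration.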

Remark 4.

We remark that condition (12) is a weaker assumption than the sequential weak continuity condition. We present the following example satisfying condition (12), which also illustrates that the condition is weaker than sequential weak continuity.

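Let $A: \ell_2(\mathbb{R}) \to \ell_2(\mathbb{R})$ be an operator defined by

$$Ax = x\|x\| \quad \forall x \in \ell_2(\mathbb{R}).$$

Suppose $\{z_n\} \subset \ell_2(\mathbb{R})$ such that $z_n \rightharpoonup z$. Then, by the weak lower semicontinuity of the norm, we obtain

$$\|z\| \leq \liminf_{n \to \infty} \|z_n\|.$$

Thus, we have

$$\|Az\| = \|z\|^2 \leq \Big( \liminf_{n \to \infty} \|z_n\| \Big)^2 \leq \liminf_{n \to \infty} \|z_n\|^2 = \liminf_{n \to \infty} \|Az_n\|.$$

Therefore, $A$ satisfies condition (12).

On the other hand, to establish that $A$ is not sequentially weakly continuous, choose $z_n = e_n + e_1$, where $\{e_n\}$ is the standard basis of $\ell_2(\mathbb{R})$, that is, $e_n = (0, 0, \ldots, 1, \ldots)$ with 1 at the n-th position. It is clear that $z_n \rightharpoonup e_1$ and $Az_n = (e_n + e_1)\|e_n + e_1\| \rightharpoonup \sqrt{2}\, e_1$, but $Ae_1 = e_1 \|e_1\| = e_1$. Consequently, $A$ is not sequentially weakly continuous. Therefore, condition (12) is strictly weaker than the sequential weak continuity condition.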
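The example can be explored numerically under the assumption that the operator is $Ax = \|x\|x$ on $\ell_2(\mathbb{R})$ and that the sequence is $e_n + e_1$. In the sketch below, vectors are truncated to $\mathbb{R}^m$ and weak convergence is probed by pairing with one fixed vector $v$ with square-summable entries. The truncation, the choice of $v$ and all names are illustrative assumptions, and a finite-dimensional computation can only suggest the infinite-dimensional behaviour.

```python
import math


def A(x):
    """Assumed operator A(x) = ||x|| * x."""
    nx = math.sqrt(sum(xi * xi for xi in x))
    return [nx * xi for xi in x]


def inner(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


m = 2000


def e(k):
    """k-th standard basis vector (1-based), truncated to R^m."""
    return [1.0 if j == k - 1 else 0.0 for j in range(m)]


v = [1.0 / (j + 1) for j in range(m)]   # fixed test vector with square-summable entries
e1 = e(1)

for n in (10, 100, 2000):
    zn = [a + b for a, b in zip(e(n), e1)]
    # <z_n, v> approaches <e_1, v> = 1, while <A z_n, v> approaches sqrt(2), not <A e_1, v> = 1.
    print(n, inner(zn, v), inner(A(zn), v))

z_last = [a + b for a, b in zip(e(m), e1)]
pair_limit = inner(A(z_last), v)
pair_at_e1 = inner(A(e1), v)
norm_A_e1 = math.sqrt(inner(A(e1), A(e1)))
norm_A_z = math.sqrt(inner(A(z_last), A(z_last)))
```

The pairings of $Az_n$ with $v$ settle near $\sqrt{2}$ rather than 1, while $\|Ae_1\| = 1 \leq 2 = \|Az_n\|$, in line with the norm inequality in condition (12).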

4. Convergence Analysis

First, we prove some lemmas needed for our strong convergence theorem.

Lemma 5.

Let be the sequence generated by Algorithm 1 such that Assumption B holds. Then is well-defined for each and where

Proof.

Observe that since is uniformly continuous for each it follows from (11) that for any given there exists such that Thus, for the case for all , we obtain

where for some and Therefore, by the definition of the sequence has lower bound and has upper bound By Lemma 2, the limit exists and is denoted by Clearly, for each □

Lemma 6.

Proof.

If it follows that for each

Since is a bounded linear operator and for each we have

which implies that is a lower bound of for each □

Lemma 7.

Suppose Assumption B of Algorithm 1 holds. Then, there exists a positive integer N such that

Proof.

Since and for each there exists a positive integer such that

Similarly, since and for each we have

Thus, for each there exists a positive integer such that

Now, setting we have the required result. □

Lemma 8.

Let be a sequence generated by Algorithm 1 under Assumption B. Then the following inequality holds for all

Proof.

From the definition of we have

Observe that (15) holds both for and Let Then, it follows that Using the definition of and applying Lemma 3, we have

Since and by the property of the projection map we have

which is equivalent to

Furthermore, since we have

By the pseudomonotonicity of it follows that Since we obtain

Lemma 9.

Suppose is a sequence generated by Algorithm 1 such that Assumption B holds. Then is bounded.

Proof.

Let By the definition of and applying the triangle inequality, we have

By Remark 2, we obtain

Thus, there exists such that for all It follows that

By Lemma 7, there exists a positive integer N such that Consequently, it follows from (19) that for all and

Next, since the function is convex, we have

By Lemma 7, there exists a positive integer N such that for all From (22) and by applying Lemma 3 and (21), we obtain

If then by the definition of , we have

Lemma 10.

Let and be two sequences generated by Algorithm 1 with subsequences and respectively, such that Suppose then

Proof.

From (25), we have

From the last inequality, we obtain

By the definition of we have

From this, we obtain

Since is bounded, it follows that

Hence, we have

From (19), we obtain

Consequently, we have

Since by the property of the projection map, we obtain

which implies that

From the last inequality, it follows that

Observe that

By the continuity of from (31) we obtain

Next, let be a decreasing sequence of positive numbers such that as For each let denote the smallest positive integer such that

where the existence of follows from (36). Since is decreasing, then is increasing. Moreover, since for each we can suppose (otherwise, ) and let

Then, for each From (37), we have

It follows from the pseudomonotonicity of that

which is equivalent to

In order to complete the proof, we need to establish that Since and is a bounded linear operator for each we have Thus, from (31), we obtain Since we have If then which implies that On the contrary, we suppose Since satisfies condition (12), we have for all

Applying the facts that and as we have

which implies that Applying the facts that is continuous, and are bounded and from (38) we get

From the last inequality, we have

By Lemma 4, we obtain

which implies that

Consequently, we have which implies that as desired. □

Lemma 11.

Suppose is a sequence generated by Algorithm 1 under Assumption B. Then, the following inequality holds for all

Proof.

Let By applying Lemma 3 together with the definition of , we obtain

Theorem 1.

Let be a sequence generated by Algorithm 1 under Assumption B. Then, converges strongly to where

Proof.

Let that is, Then, from Lemma 11, we obtain

where

Next, we claim that the sequence converges to zero. To do this, in view of Lemma 1 it suffices to show that for every subsequence of satisfying

Suppose that is a subsequence of such that (41) holds. Again, from Lemma 11, we obtain

By (41), Remark 2 and the fact that we obtain

Consequently, we obtain

From the definition of and by Remark 2, we have

Since is bounded, We choose an element arbitrarily. Then, there exists a subsequence of such that From (42), it follows that Now, by invoking Lemma 10 and applying (42), we obtain Since was selected arbitrarily, it follows that

Next, by the boundedness of there exists a subsequence of such that and

Since it follows from the property of the metric projection map that

5. Applications

In this section, we apply our result to study related optimization problems.

5.1. Generalized Split Variational Inequality Problem

First, we apply our result to study and approximate the solution of the generalized split variational inequality problem (see [37]). Let be nonempty, closed and convex subsets of real Hilbert spaces and let be bounded linear operators, such that Let be single-valued operators. The generalized split variational inequality problem (GSVIP) is formulated as finding a point such that

that is, such that

We note that by setting and then the SVIPMOS (10) becomes the GSVIP (49). Consequently, we obtain the following strong convergence theorem for finding the solution of GSVIP (49) in Hilbert spaces when the cost operators are pseudomonotone and uniformly continuous.

Theorem 2.

Let be nonempty, closed and convex subsets of real Hilbert spaces and suppose are bounded linear operators with adjoints such that Let be uniformly continuous pseudomonotone operators that satisfy condition (12), and suppose Assumption B of Theorem 1 holds and the solution set Then, the sequence generated by the following Algorithm 2 converges in norm to where

| Algorithm 2. A Relaxed Inertial Tseng’s Extragradient Method for Solving GSVIP (49). |

|

| Set and return to Step 1. |

5.2. Split Convex Minimization Problem with Multiple Output Sets

Let $C$ be a nonempty, closed and convex subset of a real Hilbert space $H$. The convex minimization problem is defined as finding a point $x^* \in C$ such that

$$g(x^*) = \min_{x \in C} g(x), \tag{50}$$

where g is a real-valued convex function. The solution set of Problem (50) is denoted by

Let be nonempty, closed and convex subsets of real Hilbert spaces respectively, and let be bounded linear operators with adjoints Let be convex and differentiable functions. In this subsection, we apply our result to find the solution of the following split convex minimization problem with multiple output sets (SCMPMOS): Find such that

The following lemma is required to establish our next result.

Lemma 12 ([53]). Suppose $C$ is a nonempty, closed and convex subset of a real Banach space $E$ and let $g$ be a convex function of $E$ into $\mathbb{R}$. If $g$ is Fréchet differentiable, then $x$ is a solution of Problem (50) if and only if $x \in VI(C, \nabla g)$, where $\nabla g$ is the gradient of $g$.

Applying Theorem 1 and Lemma 12, we obtain the following strong convergence theorem for finding the solution of the SCMPMOS (51) in the framework of Hilbert spaces.

Theorem 3.

Let be nonempty, closed and convex subsets of real Hilbert spaces respectively, and suppose are bounded linear operators with adjoints Let be Fréchet differentiable convex functions such that are uniformly continuous. Suppose that Assumption B of Theorem 1 holds and the solution set Then, the sequence generated by the following Algorithm 3 converges strongly to where

| Algorithm 3. A Relaxed Inertial Tseng’s Extragradient Method for Solving SCMPMOS (51). |

|

| Set and return to Step 1. |

Proof.

Since $g, g_i$ are convex, their gradients $\nabla g, \nabla g_i$ are monotone [53] and, hence, pseudomonotone. Therefore, the required result follows by applying Lemma 12 and taking $A = \nabla g$ and $A_i = \nabla g_i$ in Theorem 1. □
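The key step in the proof above is that the gradient of a differentiable convex function is a monotone operator. The sketch below checks the inequality $\langle \nabla g(x) - \nabla g(y), x - y \rangle \geq 0$ on random pairs for one convex function. The function $g(x) = \log(1 + e^{x_1}) + \tfrac{1}{2} x_2^2$, the sampling range and the names are assumptions chosen for illustration.

```python
import math
import random


def grad_g(x):
    """Gradient of the convex function g(x) = log(1 + exp(x1)) + 0.5 * x2^2."""
    return [1.0 / (1.0 + math.exp(-x[0])), x[1]]


random.seed(0)
worst = float("inf")
for _ in range(10000):
    x = [random.uniform(-5.0, 5.0), random.uniform(-5.0, 5.0)]
    y = [random.uniform(-5.0, 5.0), random.uniform(-5.0, 5.0)]
    gx, gy = grad_g(x), grad_g(y)
    # Monotonicity pairing <grad g(x) - grad g(y), x - y>.
    value = sum((a - b) * (c - d) for a, b, c, d in zip(gx, gy, x, y))
    worst = min(worst, value)

print(worst)
```

The smallest sampled pairing is nonnegative, as monotonicity requires. A random check of this kind supports the property but does not prove it.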

6. Numerical Experiments

Here, we carry out some numerical experiments to demonstrate the applicability of our proposed method (Proposed Algorithm 1). For simplicity, in all the experiments, we consider the case when All numerical computations were carried out using MATLAB R2021b.

In all the computations, we choose

Now, we consider the following numerical examples both in finite and infinite dimensional Hilbert spaces for the proposed algorithm.

Example 1.

For each we define the feasible set and where M is a square matrix given by

We note that M is a Hankel-type matrix with a nonzero reverse diagonal.

Example 2.

Let and We define , and the cost operator is defined by

Finally, we consider the last example in infinite dimensional Hilbert spaces.

Example 3.

Let Let be such that for some The feasible sets are defined as follows for each

The cost operators are defined by

Then are pseudomonotone and uniformly continuous. We choose and we define

We test Examples 1–3 under the following experiments:

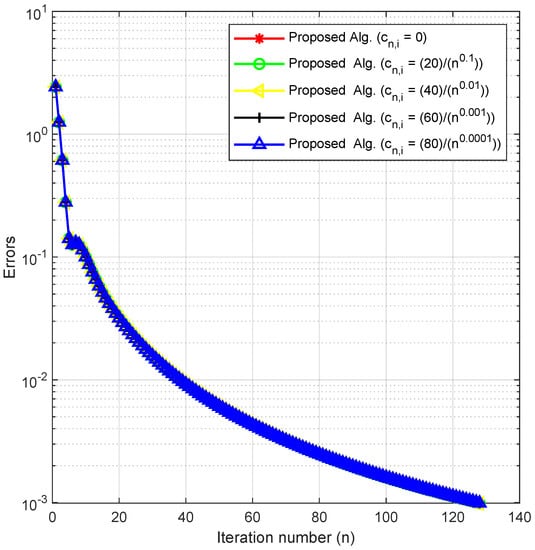

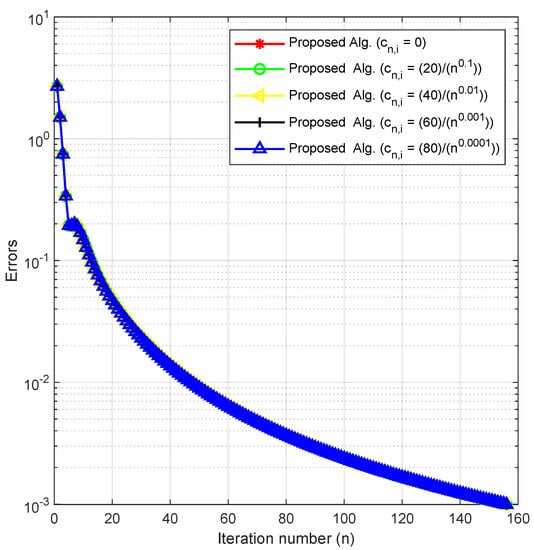

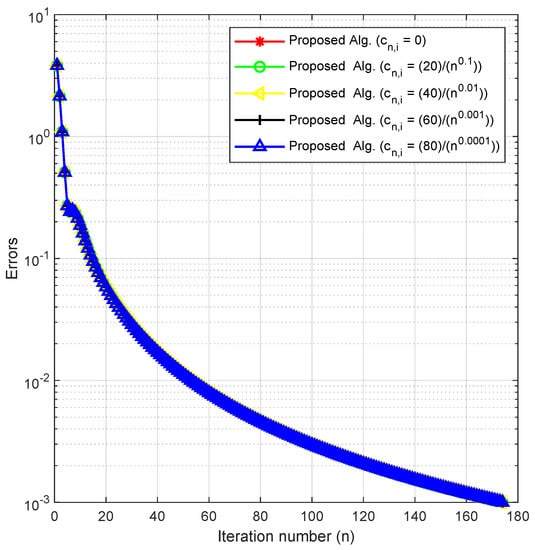

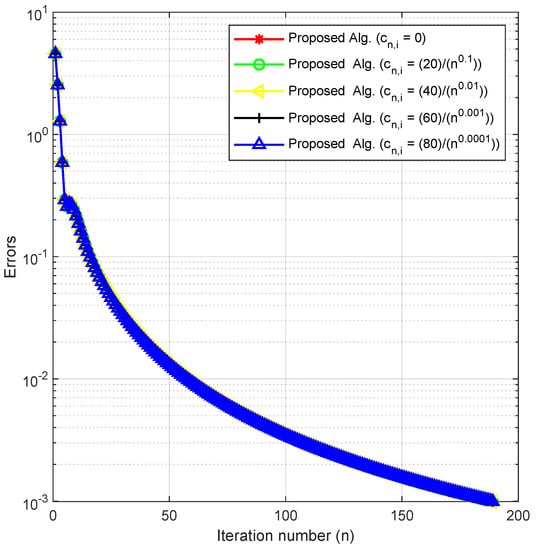

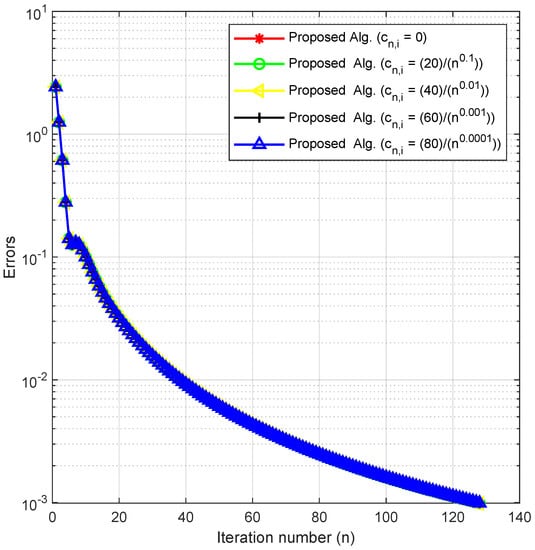

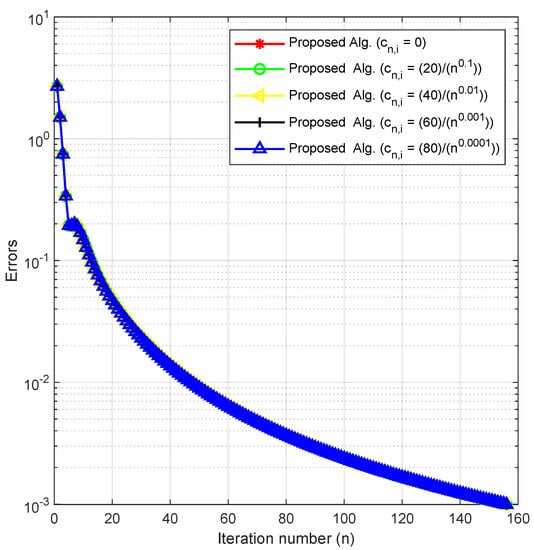

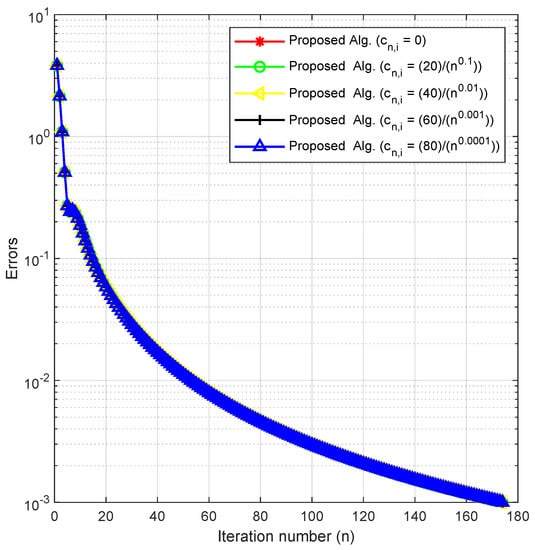

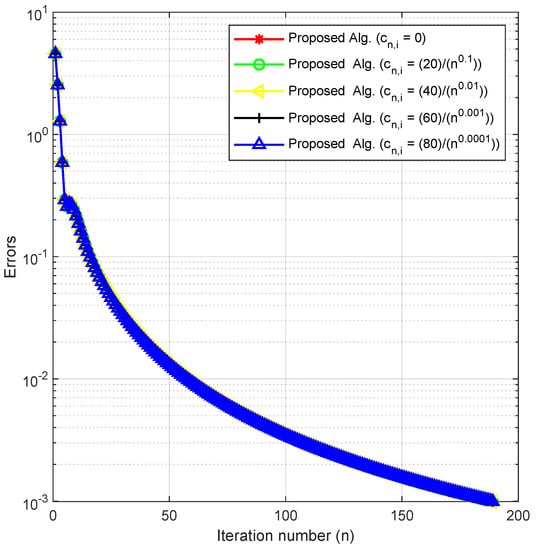

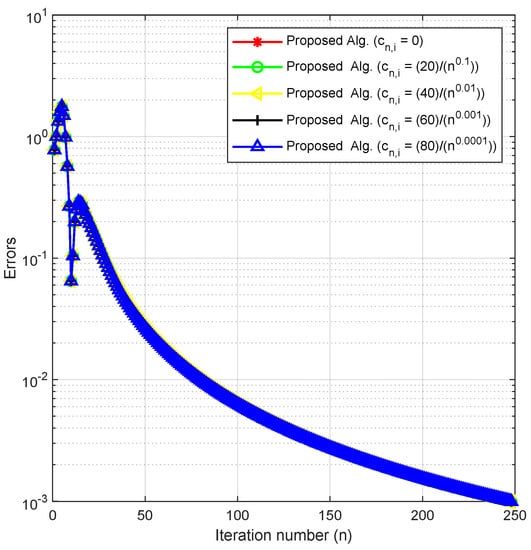

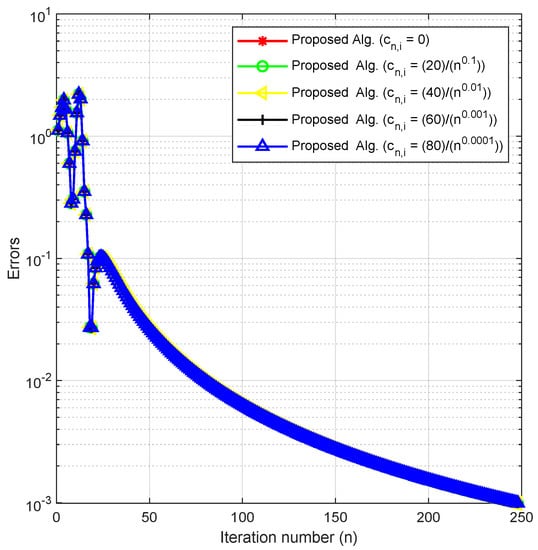

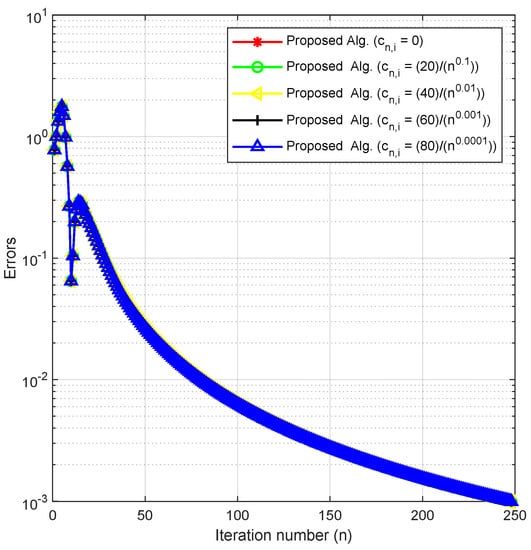

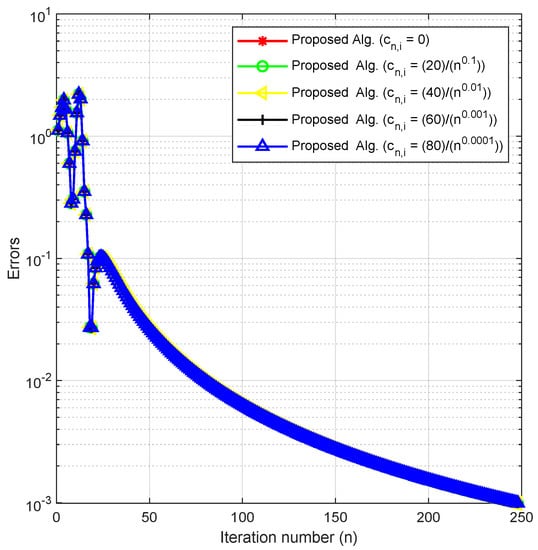

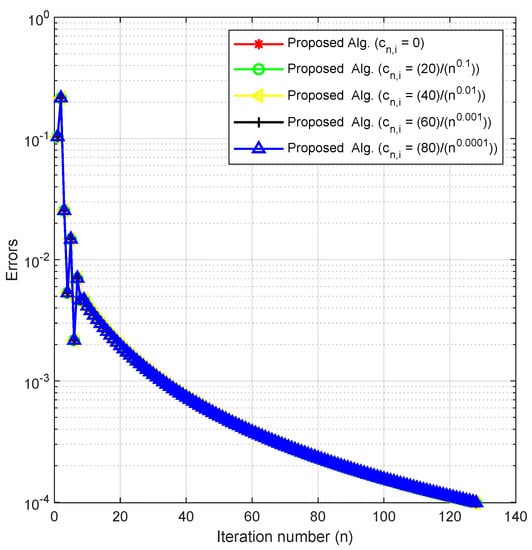

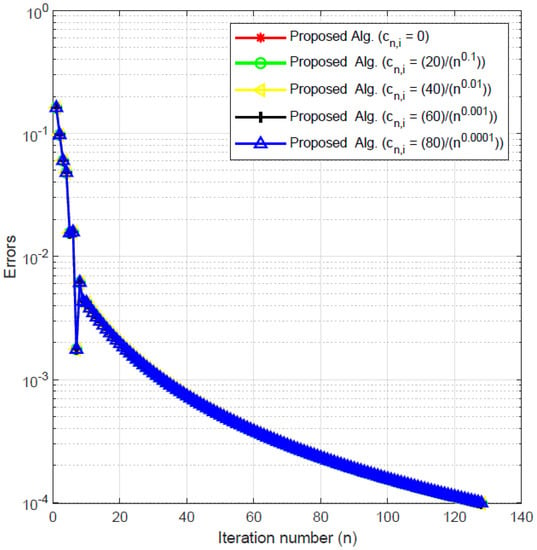

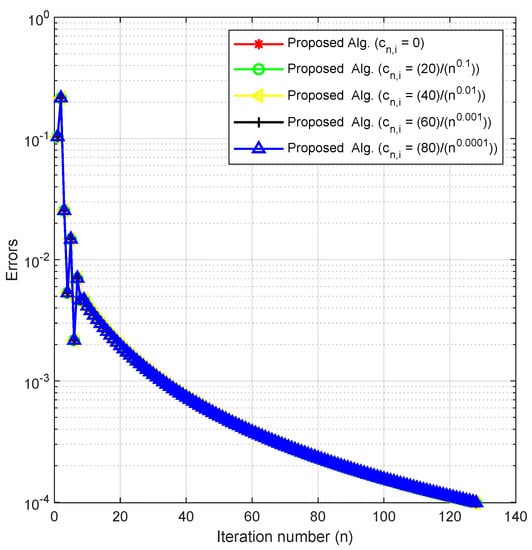

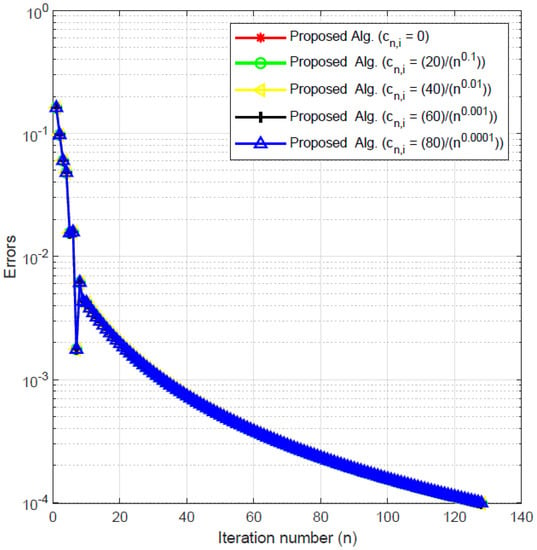

Experiment 1.

In this experiment, we check the behavior of our method by fixing the other parameters and varying in Example 1. We do this to check the effects of this parameter and the sensitivity of our method to it.

We consider with and

Using as the stopping criterion, we plot the graphs of against the number of iterations for each The numerical results are reported in Figures 1–4 and Table 1.

Figure 1.

Experiment 1: .

Figure 2.

Experiment 1: .

Figure 3.

Experiment 1: .

Figure 4.

Experiment 1: .

Table 1.

Numerical results for Experiment 1.

Experiment 2.

In this experiment, we check the behavior of our method by fixing the other parameters and varying in Example 2. We do this to check the effects of this parameter and the sensitivity of our method to it.

We consider with the following two cases of initial values and

- Case I:

- Case II:

Using as the stopping criterion, we plot the graphs of against the number of iterations in each case. The numerical results are reported in Figures 5 and 6 and Table 2.

Figure 5.

Experiment 2: Case I.

Figure 6.

Experiment 2: Case II.

Table 2.

Numerical results for Experiment 2.

Finally, we test Example 3 under the following experiment:

Experiment 3.

In this experiment, we check the behavior of our method by fixing the other parameters and varying in Example 3. We do this to check the effects of this parameter and the sensitivity of our method to it.

We consider with the following two cases of initial values and

- Case I:

- Case II:

Using as the stopping criterion, we plot the graphs of against the number of iterations in each case. The numerical results are reported in Figures 7 and 8 and Table 3.

Figure 7.

Experiment 3: Case I.

Figure 8.

Experiment 3: Case II.

Table 3.

Numerical results for Experiment 3.

Remark 5.

By using different initial values, cases of m and varying the key parameter in Experiments 1–3, we obtained the numerical results displayed in Tables 1–3 and Figures 1–8. In Figures 1–4, we considered different initial values and cases of m with varying values of the key parameter for Experiment 1 in As observed from the figures, these varying choices do not have a significant effect on the behavior of the algorithm. Similarly, Figures 5 and 6 show that the behavior of our algorithm is consistent under varying initial starting points and different values of the key parameter for Experiment 2 in Likewise, Figures 7 and 8 reveal that the behavior of the algorithm is not affected by varying starting points and values of for Experiment 3 in From these results, we conclude that our method is well-behaved, since the choice of the key parameter and of the initial starting points does not significantly affect the number of iterations or the CPU time in any of the experiments.

7. Conclusions

In this article, we studied a new class of split inverse problems called the split variational inequality problem with multiple output sets. We introduced a relaxed inertial Tseng extragradient method with self-adaptive step sizes for finding the solution to the problem when the cost operators are pseudomonotone and non-Lipschitz in the framework of Hilbert spaces. Moreover, we proved a strong convergence theorem for the proposed method under some mild conditions. Finally, we applied our result to study and approximate the solutions of certain classes of optimization problems, and we presented several numerical experiments to demonstrate the applicability of our proposed algorithm. The results of this study open up several opportunities for future research. As part of our future research, we would like to extend the results in this paper to a more general space, such as the reflexive Banach space. Furthermore, we would consider extending the results to a larger class of operators, such as the classes of quasimonotone and non-monotone operators. Moreover, in our future research, we would be interested in investigating the stochastic variant of our results in this study.

Author Contributions

Conceptualization, T.O.A.; Methodology, T.O.A.; Validation, O.T.M.; Formal analysis, T.O.A.; Investigation, T.O.A.; Resources, O.T.M.; Writing—original draft, T.O.A.; Writing —review & editing, O.T.M.; Visualization, O.T.M.; Supervision, O.T.M.; Project administration, O.T.M.; Funding acquisition, O.T.M. All authors have read and agreed to the published version of the manuscript.

Funding

The research of the first author is wholly supported by the University of KwaZulu-Natal, Durban, South Africa, Postdoctoral Fellowship. He is grateful for the funding and financial support. The second author is supported by the National Research Foundation (NRF) of South Africa Incentive Funding for Rated Researchers (Grant Number 119903). Opinions expressed and conclusions arrived at are those of the authors and are not necessarily to be attributed to the NRF.

Acknowledgments

The authors thank the Reviewers and the Editor for the time spent carefully going through the manuscript and pointing out typos and areas needing correction, and for their constructive comments and suggestions, all of which have helped to improve the quality of the manuscript.

Conflicts of Interest

The authors declare that they have no competing interests.

References

- Fichera, G. Sul problema elastostatico di Signorini con ambigue condizioni al contorno. Atti Accad. Naz. Lincei VIII. Ser. Rend. Cl. Sci. Fis. Mat. Nat. 1963, 34, 138–142. [Google Scholar]

- Stampacchia, G. Formes bilineaires coercitives sur les ensembles convexes. C. R. Acad. Sci. Paris 1964, 258, 4413–4416. [Google Scholar]

- Alakoya, T.O.; Uzor, V.A.; Mewomo, O.T. A new projection and contraction method for solving split monotone variational inclusion, pseudomonotone variational inequality, and common fixed point problems. Comput. Appl. Math. 2022, 42, 1–33. [Google Scholar] [CrossRef]

- Ansari, Q.H.; Islam, M.; Yao, J.C. Nonsmooth variational inequalities on Hadamard manifolds. Appl. Anal. 2020, 99, 340–358. [Google Scholar] [CrossRef]

- Cubiotti, P.; Yao, J.C. On the Cauchy problem for a class of differential inclusions with applications. Appl. Anal. 2020, 99, 2543–2554. [Google Scholar] [CrossRef]

- Eskandari, Z.; Avazzadeh, Z.; Ghaziani, K.R.; Li, B. Dynamics and bifurcations of a discrete-time Lotka–Volterra model using nonstandard finite difference discretization method. Math. Meth. Appl. Sci. 2022, 1–16. [Google Scholar] [CrossRef]

- Li, B.; Liang, H.; He, Q. Multiple and generic bifurcation analysis of a discrete Hindmarsh-Rose model, Chaos. Solitons Fractals 2021, 146, 110856. [Google Scholar] [CrossRef]

- Vuong, P.T.; Shehu, Y. Convergence of an extragradient-type method for variational inequality with applications to optimal control problems. Numer. Algorithms 2019, 81, 269–291. [Google Scholar] [CrossRef]

- Aubin, J.; Ekeland, I. Applied Nonlinear Analysis; Wiley: New York, NY, USA, 1984. [Google Scholar]

- Baiocchi, C.; Capelo, A. Variational and Quasivariational Inequalities; Applications to Free Boundary Problems; Wiley: New York, NY, USA, 1984. [Google Scholar]

- Gibali, A.; Reich, S.; Zalas, R. Outer approximation methods for solving variational inequalities in Hilbert space. Optimization 2017, 66, 417–437. [Google Scholar] [CrossRef]

- Kinderlehrer, D.; Stampacchia, G. An introduction to variational inequalities and their applications. In Classics in Applied Mathematics; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 2000. [Google Scholar]

- Li, B.; Liang, H.; Shi, L.; He, Q. Complex dynamics of Kopel model with nonsymmetric response between oligopolists. Solitons Fractals 2022, 156, 111860. [Google Scholar] [CrossRef]

- Ogwo, G.N.; Alakoya, T.O.; Mewomo, O.T. Iterative algorithm with self-adaptive step size for approximating the common solution of variational inequality and fixed point problems. Optimization 2021. [Google Scholar] [CrossRef]

- Alakoya, T.O.; Jolaoso, L.O.; Mewomo, O.T. Modified inertial subgradient extragradient method with self adaptive stepsize for solving monotone variational inequality and fixed point problems. Optimization 2021, 70, 545–574. [Google Scholar] [CrossRef]

- Ceng, L.C.; Coroian, I.; Qin, X.; Yao, J.C. A general viscosity implicit iterative algorithm for split variational inclusions with hierarchical variational inequality constraints. Fixed Point Theory 2019, 20, 469–482. [Google Scholar] [CrossRef]

- Hai, T.N. Continuous-time ergodic algorithm for solving monotone variational inequalities. J. Nonlinear Var. Anal. 2021, 5, 391–401. [Google Scholar]

- Khan, S.H.; Alakoya, T.O.; Mewomo, O.T. Relaxed projection methods with self-adaptive step size for solving variational inequality and fixed point problems for an infinite family of multivalued relatively nonexpansive mappings in Banach spaces. Math. Comput. Appl. 2020, 25, 54. [Google Scholar] [CrossRef]

- Mewomo, O.T.; Alakoya, T.O.; Yao, J.-C.; Akinyemi, L. Strong convergent inertial Tseng’s extragradient method for solving non-Lipschitz quasimonotone variational Inequalities in Banach spaces. J. Nonlinear Var. Anal. 2023, 7, 145–172. [Google Scholar]

- Tseng, P. A modified forward-backward splitting method for maximal monotone mappings. SIAM J. Control Optim. 2000, 38, 431–446. [Google Scholar] [CrossRef]

- Alakoya, T.O.; Mewomo, O.T.; Shehu, Y. Strong convergence results for quasimonotone variational inequalities. Math. Methods Oper. Res. 2022, 2022, 47. [Google Scholar] [CrossRef]

- Godwin, E.C.; Alakoya, T.O.; Mewomo, O.T.; Yao, J.-C. Relaxed inertial Tseng extragradient method for variational inequality and fixed point problems. Appl. Anal. 2022. [Google Scholar] [CrossRef]

- Uzor, V.A.; Alakoya, T.O.; Mewomo, O.T. Strong convergence of a self-adaptive inertial Tseng’s extragradient method for pseudomonotone variational inequalities and fixed point problems. Open Math. 2022, 20, 234–257. [Google Scholar] [CrossRef]

- Alakoya, T.O.; Uzor, V.A.; Mewomo, O.T.; Yao, J.-C. On System of Monotone Variational Inclusion Problems with Fixed-Point Constraint. J. Inequal. Appl. 2022, 2022, 47. [Google Scholar] [CrossRef]

- Censor, Y.; Borteld, T.; Martin, B.; Trofimov, A. A unified approach for inversion problems in intensity-modulated radiation therapy. Phys. Med. Biol. 2006, 51, 2353–2365. [Google Scholar] [CrossRef] [PubMed]

- Censor, Y.; Elfving, T. A multiprojection algorithm using Bregman projections in a product space. Numer. Algorithms 1994, 8, 221–239. [Google Scholar] [CrossRef]

- López, G.; Martín-Márquez, V.; Xu, H.K. Iterative algorithms for the multiple-sets split feasibility problem. In Biomedical Mathematics: Promising Directions in Imaging, Therapy Planning and Inverse Problems; Medical Physics Publishing: Madison, WI, USA, 2010; pp. 243–279.

- Moudafi, A.; Thakur, B.S. Solving proximal split feasibility problems without prior knowledge of operator norms. Optim. Lett. 2014, 8, 2099–2110.

- Byrne, C. Iterative oblique projection onto convex sets and the split feasibility problem. Inverse Probl. 2002, 18, 441–453.

- Censor, Y.; Motova, A.; Segal, A. Perturbed projections and subgradient projections for the multiple-sets split feasibility problem. J. Math. Anal. Appl. 2007, 327, 1244–1256.

- Godwin, E.C.; Izuchukwu, C.; Mewomo, O.T. Image restoration using a modified relaxed inertial method for generalized split feasibility problems. Math. Methods Appl. Sci. 2022.

- Censor, Y.; Gibali, A.; Reich, S. Algorithms for the split variational inequality problem. Numer. Algorithms 2012, 59, 301–323.

- He, H.; Ling, C.; Xu, H.K. A relaxed projection method for split variational inequalities. J. Optim. Theory Appl. 2015, 166, 213–233.

- Kim, J.K.; Salahuddin, S.; Lim, W.H. General nonconvex split variational inequality problems. Korean J. Math. 2017, 25, 469–481.

- Ogwo, G.N.; Izuchukwu, C.; Mewomo, O.T. Inertial methods for finding minimum-norm solutions of the split variational inequality problem beyond monotonicity. Numer. Algorithms 2022, 88, 1419–1456.

- Tian, M.; Jiang, B.-N. Weak convergence theorem for a class of split variational inequality problems and applications in Hilbert space. J. Inequal. Appl. 2017, 2017, 123.

- Reich, S.; Tuyen, T.M. Iterative methods for solving the generalized split common null point problem in Hilbert spaces. Optimization 2020, 69, 1013–1038.

- Reich, S.; Tuyen, T.M. The split feasibility problem with multiple output sets in Hilbert spaces. Optim. Lett. 2020, 14, 2335–2353.

- Polyak, B.T. Some methods of speeding up the convergence of iteration methods. USSR Comput. Math. Math. Phys. 1964, 4, 1–17.

- Chang, S.-S.; Yao, J.-C.; Wang, L.; Liu, M.; Zhao, L. On the inertial forward-backward splitting technique for solving a system of inclusion problems in Hilbert spaces. Optimization 2021, 70, 2511–2525.

- Ogwo, G.N.; Alakoya, T.O.; Mewomo, O.T. Inertial iterative method with self-adaptive step size for finite family of split monotone variational inclusion and fixed point problems in Banach spaces. Demonstr. Math. 2022, 55, 193–216.

- Uzor, V.A.; Alakoya, T.O.; Mewomo, O.T. On split monotone variational inclusion problem with multiple output sets with fixed point constraints. Comput. Methods Appl. Math. 2022.

- Wang, Z.-B.; Long, X.; Lei, Z.-Y.; Chen, Z.-Y. New self-adaptive methods with double inertial steps for solving splitting monotone variational inclusion problems with applications. Commun. Nonlinear Sci. Numer. Simul. 2022, 114, 106656.

- Yao, Y.; Iyiola, O.S.; Shehu, Y. Subgradient extragradient method with double inertial steps for variational inequalities. J. Sci. Comput. 2022, 90, 71.

- Godwin, E.C.; Alakoya, T.O.; Mewomo, O.T.; Yao, J.-C. Approximation of solutions of split minimization problem with multiple output sets and common fixed point problem in real Banach spaces. J. Nonlinear Var. Anal. 2022, 6, 333–358.

- Iutzeler, F.; Hendrickx, J.M. A generic online acceleration scheme for optimization algorithms via relaxation and inertia. Optim. Methods Softw. 2019, 34, 383–405.

- Alvarez, F. Weak convergence of a relaxed-inertial hybrid projection-proximal point algorithm for maximal monotone operators in Hilbert space. SIAM J. Optim. 2004, 14, 773–782.

- Attouch, H.; Cabot, A. Convergence of a relaxed inertial forward-backward algorithm for structured monotone inclusions. Appl. Math. Optim. 2019, 80, 547–598.

- Saejung, S.; Yotkaew, P. Approximation of zeros of inverse strongly monotone operators in Banach spaces. Nonlinear Anal. 2012, 75, 742–750.

- Tan, K.K.; Xu, H.K. Approximating fixed points of nonexpansive mappings by the Ishikawa iteration process. J. Math. Anal. Appl. 1993, 178, 301–308.

- Chuang, C.S. Strong convergence theorems for the split variational inclusion problem in Hilbert spaces. Fixed Point Theory Appl. 2013, 2013, 350.

- Cottle, R.W.; Yao, J.C. Pseudomonotone complementarity problems in Hilbert space. J. Optim. Theory Appl. 1992, 75, 281–295.

- Tian, M.; Jiang, B.-N. Inertial Haugazeau’s hybrid subgradient extragradient algorithm for variational inequality problems in Banach spaces. Optimization 2021, 70, 987–1007.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).