Abstract

This paper proposes an improved method, called EGBO, for solving diverse optimization problems. EGBO stands for the extended gradient-based optimizer; it improves the local search of the standard gradient-based optimizer (GBO) using expanded and narrowed exploration behaviors. This improvement aims to increase the ability of the GBO to explore a wide area of the search domain for the given problems. To this end, the local escaping operator of the GBO is modified to apply the expanded and narrowed exploration behaviors. The effectiveness of the EGBO is evaluated using global optimization functions, namely CEC2019, and twelve benchmark feature selection datasets. The results are analyzed and compared to a set of well-known optimization methods using six performance measures, including the average, minimum, maximum, and standard deviation of the fitness function and the computation time. The EGBO shows promising results in terms of these performance measures: it solves global optimization problems, records high accuracies when selecting significant features, and outperforms the compared methods and the standard version of the GBO.

MSC: 68T20

1. Introduction

Data volumes are growing rapidly; datasets include many features and attributes, some of which may be irrelevant or redundant. Nevertheless, such data must be saved, processed, and retrieved with reasonable computational time and effort. Data processing techniques that address volume and compatibility are therefore essential; one of them is feature selection, which is used to select the essential subset of features. In this context, searching for the best attributes among many features is a challenge, and many feature selection models have been proposed to address it. For instance, the authors of [1] proposed a modified version of the salp swarm algorithm (SSA), called DSSA, to reduce the features of different benchmark datasets; the results showed the effectiveness of the DSSA and good classification accuracy compared to the other methods. In another work, the authors of [2] introduced a feature selection stage based on the sine–cosine algorithm (SCA) to detect facial expressions in a real dataset; their method reduced the number of features by 87%.

Furthermore, the authors of [3] applied particle swarm optimization (PSO) and a fuzzy rough set to reduce the feature set of benchmark datasets. The obtained feature sets were evaluated using the decision tree and naive Bayes classifiers; the results showed their effectiveness at evaluating the selected features. The e-Jaya optimization algorithm was also utilized effectively by the authors of [4] to select the essential features in a group of essays so that they could be graded in less time and with more accuracy. Feature selection has also been used in medical applications, such as [5], where it helped classify influenza A virus cases; the results showed good performance compared to the classic methods. The whale optimization algorithm (WOA) was applied to feature selection by the authors of [6]. In that study, the WOA was enhanced by a pooling mechanism. The performance of the enhanced WOA was evaluated in two experiments, global optimization and feature selection, and in detecting the coronavirus disease (COVID-19). The results showed the efficiency of the enhanced WOA in solving different problems. More applications of feature selection can be found in [7,8,9,10,11].

Motivated by these studies, this paper proposes a new method for feature selection. The proposed method, called EGBO, improves the gradient-based optimizer (GBO) using the expanded and narrowed exploration behaviors of the Aquila optimizer (AO) [12]. The effectiveness of the EGBO was evaluated using twelve well-known benchmark feature selection datasets and compared with several optimization algorithms.

The gradient-based optimizer is a recent optimization algorithm motivated by the gradient-based Newton method [13]. It has been successfully applied in several applications, such as identifying the parameters of photovoltaic systems [14]. The GBO was also applied by [15] to predict the infected, recovered, and death cases of the COVID-19 pandemic in the US. The Aquila optimizer (AO), proposed by [12], is another recent optimization algorithm; it simulates the hunting behaviors of the Aquila. The AO has been used in several applications, such as forecasting oil production [16] and forecasting China’s rural community population [17]. Furthermore, the authors of [18] applied the GBO to identify the parameters of photovoltaic models. Their method’s performance was evaluated using the single-diode, double-diode, and three-diode models as well as the photovoltaic module model. The results showed that their method obtained the best results and was highly competitive with the compared techniques. Similarly, the authors of [19] used an improved version of the GBO, called IGBO, for parameter extraction of photovoltaic models. The IGBO used two strategies, adaptive weights and chaotic behavior, to identify the adaptive parameters. The results showed competitive performance compared to the other methods. The GBO was also modified by the authors of [20], who called their method GBOSMA. They applied the slime mould algorithm (SMA) as a local search to improve the ability of the GBO to explore the search space; the experimental results demonstrated the superiority of their method. The difference between the GBOSMA and this paper can be summarized as follows: the GBOSMA uses a probability to apply the operator of the SMA, whereas this paper extends the local search operator of the GBO using the expanded and narrowed exploration behaviors of the AO algorithm.

From the above studies, the GBO was used in different applications and showed promising results; it also has some advantages, such as ease of use and not having many predefined parameters that need to be optimized. However, it may show slow convergence in some cases and can be trapped in local optima; therefore, this paper introduces a new version of the GBO called EGBO to improve the local search phase of the standard GBO method.

2. Gradient-Based Optimizer

This section introduces a brief description of the gradient-based optimizer (GBO). The GBO is an optimization algorithm proposed by [13]. The optimization process of the GBO contains two main phases: gradient search rule (GSR) and local escaping operator (LEO). These phases are explained in the following subsections.

2.1. Gradient Search Rule (GSR)

The GSR is used to promote the GBO exploration. The following equations are applied to perform the GSR and to update the position .

where and . l and L denote the current and total iterations. is a random value . denotes a normally distributed number. is computed using Equation (5).

where , , , and denote random numbers . In this stage, the is computed as in Equation (9).

After that, the solution of the next loop is computed, as in Equation (12) based on and and the current solution .

2.2. Local Escaping Operator (LEO)

The LEO is applied to improve the efficiency of the GBO algorithm. This phase starts if a random number () exceeds . It also uses another random number () to update the solutions using Equation (14) if ; otherwise, it will update the solution using Equation (15).

where , , and are random solutions. and are random numbers. , , and are generated as in Equations (16)–(18).

where and are generated randomly . is computed as in Equation (19).

where is a binary variable generated randomly. Algorithm 1 shows the structure of the GBO.

Algorithm 1. Gradient-based optimizer.

3. Proposed Method

This section explains the structure of the proposed EGBO method. The EGBO aims to improve the exploration phase of the standard GBO by using Lévy flight as well as the expanded and narrowed exploration behaviors of the AO algorithm [12]. To this end, the local escaping operator of the GBO is extended to include the expanded and narrowed exploration behaviors. This modification adds flexibility and reliability to the GBO when exploring hidden areas of the search space, which helps it reach the optimal point more effectively.

The EGBO starts by generating its population as in Equation (20), then evaluates it to determine the best solutions.

where N and D denote the population size and dimension, respectively, of the given problem. r is a random function. and denote the lower and upper bounds.
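As a rough illustration of this initialization step, the sketch below draws each of the N candidate solutions uniformly between the lower and upper bounds. The uniform form `lb + r * (ub - lb)` is the usual convention and is assumed here, since Equation (20) itself is not reproduced above. The function name `init_population`, the dimension of 10, and the bounds of ±100 are hypothetical values chosen only for the example; the population size of 25 follows the experimental setup in Section 4.

```python
import random


def init_population(n, d, lb, ub, rng=None):
    """Return n candidate solutions of dimension d, uniform in [lb, ub].

    Assumption: x[i][j] = lb + r * (ub - lb) with r drawn from U(0, 1).
    """
    rng = rng or random.Random()
    return [[lb + rng.random() * (ub - lb) for _ in range(d)] for _ in range(n)]


# Hypothetical problem: 25 agents, 10 dimensions, bounds [-100, 100].
population = init_population(n=25, d=10, lb=-100.0, ub=100.0, rng=random.Random(0))
print(len(population), len(population[0]))
```

Passing a seeded `random.Random` instance keeps independent runs reproducible without touching the global random state.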

Then, the GSR is applied to accelerate the convergence behavior. After that, the local escaping operator phase is used to maintain the current solution. In this stage, a random variable () is checked to run the improvement phase. This phase runs an expanded or narrowed exploration (using a random variable). Therefore, if , run the expanded exploration; else, run the narrowed exploration.

The EGBO uses Equation (21) to apply the expanded exploration as follows:

where denotes the best solution so far. l and L denote the current and total iterations. is the average of the current solution, and it is calculated as:
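The expanded exploration step can be sketched as follows. Because Equation (21) is not reproduced above, the update below assumes the form given for expanded exploration in the AO study [12], namely the best solution scaled by (1 − l/L) plus the difference between the population mean and a randomly scaled best solution; the exact expression used by the EGBO may differ. The function names are hypothetical.

```python
import random


def mean_solution(population):
    """Component-wise mean of all solutions in the current population."""
    n = len(population)
    return [sum(column) / n for column in zip(*population)]


def expanded_exploration(best, population, l, L, rng=None):
    """Candidate from the expanded exploration behavior.

    Assumed form (after the AO study):
        x_new = best * (1 - l / L) + (x_mean - best * r),  r ~ U(0, 1)
    where l and L are the current and total iterations.
    """
    rng = rng or random.Random()
    x_mean = mean_solution(population)
    r = rng.random()
    return [b * (1 - l / L) + (m - b * r) for b, m in zip(best, x_mean)]


# Hypothetical two-dimensional population with three solutions.
pop = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
candidate = expanded_exploration(best=pop[0], population=pop, l=10, L=100)
print(candidate)
```

Under this assumed form, the weight on the best solution shrinks as l approaches L, so the population mean contributes relatively more in late iterations.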

Furthermore, the EGBO uses Equation (23) to apply the narrowed exploration. It also applies the Lévy flight distribution to update the solutions as follows:

where applies the Lévy flight distribution as in Equations (24) and (25). is a random solution.

where and . u and denote random numbers ∈ [0,1]. y and q are two variables that simulate the spiral shape, and they are calculated as in Equations (26)–(28), respectively.

where is a random value . and , these values are selected based on the recommendation of the AO study [12].
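A minimal sketch of the Lévy flight step used by the narrowed exploration is given below. It follows the description in the text, where u and the second random number are drawn uniformly from [0, 1]; note that the widely used Mantegna algorithm draws these numbers from normal distributions instead. The constants `s = 0.01` and `beta = 1.5` and the expression for `sigma` are assumptions taken from common practice in the AO literature, because Equations (24) and (25) are not reproduced above.

```python
import math
import random


def levy_step(dim, beta=1.5, s=0.01, rng=None):
    """Return a list of dim Lévy-style step sizes.

    Assumed form: step = s * u * sigma / |v| ** (1 / beta),
    with u, v uniform random numbers and sigma computed from beta.
    """
    rng = rng or random.Random()
    sigma = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
             / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)))
    steps = []
    for _ in range(dim):
        u = rng.random()
        v = 1.0 - rng.random()  # lies in (0, 1], which avoids division by zero
        steps.append(s * u * sigma / abs(v) ** (1 / beta))
    return steps


print(levy_step(dim=5, rng=random.Random(42)))
```

Most steps produced this way are small, while values of `v` close to zero occasionally yield a much larger step; this heavy-tailed behavior is what lets a Lévy-based update leave the neighborhood of the current best solution.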

After that, the best solution is determined and saved using the fitness function. This sequence is repeated until the stop condition is reached. Finally, the best result is presented. Algorithm 2 shows the pseudo-code of the proposed EGBO method.

Algorithm 2. Pseudo-code of the proposed EGBO method.

4. Experiment and Results

In this section, the proposed EGBO is evaluated in two experiments; the first is global optimization, and the second is feature selection. All results of the proposed EGBO are compared to six algorithms: GBO, particle swarm optimization (PSO) [21], genetic algorithms (GA) [22], differential evolution (DE) [23], dragonfly algorithm (DA) [24], and moth-flame optimization (MFO) [25]. These algorithms are selected because they have shown stability and good results in the literature. They have different behaviors in exploring the search space; for instance, the PSO uses the particle’s position and velocity, whereas the GA uses three phases: selection, crossover, and mutation.

The parameters of all algorithms were set as given in their original papers, whereas the global parameters were as follows: the number of search agents was 25 and the maximum number of iterations was 100. The stop condition was therefore 2500 fitness function evaluations (25 agents × 100 iterations), and each algorithm was run 30 independent times.

4.1. First Experiment: Solving Global Optimization Problem

In this section, the proposed EGBO method is assessed using CEC2019 [26] benchmark functions to solve global optimization functions and the results are compared to some well-known algorithms. This experiment aims to evaluate the proposed EGBO in solving different types of test functions.

The comparison is performed using a set of performance measures: the mean (Equation (29)), min, max, and standard deviation (Equation (30)) of the fitness function values, and the computation time of each algorithm. All experimental results are listed in Tables 1–5.

where f is the produced fitness value and denotes the mean value of f. N indicates the size of the sample.
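The four fitness-based measures can be computed from the final fitness values of the independent runs as sketched below. The sample (N − 1) form of the standard deviation is an assumption, since Equation (30) is not reproduced above; `statistics.pstdev` would give the population (N) form instead. The function name and the input values are hypothetical.

```python
import statistics


def summarize_runs(fitness_values):
    """Mean, min, max, and standard deviation over independent runs."""
    return {
        "mean": statistics.fmean(fitness_values),
        "min": min(fitness_values),
        "max": max(fitness_values),
        # Assumption: sample standard deviation with N - 1 in the denominator.
        "std": statistics.stdev(fitness_values),
    }


# Hypothetical final fitness values from eight runs of one algorithm.
summary = summarize_runs([2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0])
print(summary)
```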

Table 1. Results of the average measure of the fitness function values.

Table 2. Results of the Std measure of the fitness function values.

Table 3. Results of the max measure of the fitness function values.

Table 4. Results of the min measure of the fitness function values.

Table 5. Results of the computation times of the fitness function values.

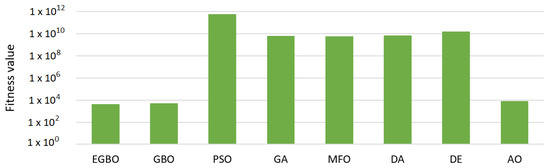

Table 1 reports the mean of the fitness function values. In that table, the proposed EGBO outperformed the other methods in six out of ten functions (i.e., F1–F5 and F9); therefore, it was ranked first. The GA was ranked second by obtaining the best values in two functions, F7 and F8. The GBO came third with the best values in three functions, F1–F3, followed by the MFO. The remaining algorithms ranked in the order AO, DE, DA, and PSO. Figure 1 illustrates the average fitness function values for all methods over all functions.

Figure 1. Average fitness function values for all methods in all functions.

The results of the standard deviation measure are reported in Table 2 to show the stability of each method. For this measure, a smaller value indicates more stable behavior across the independent runs. The proposed EGBO showed the best stability in 70% of the functions, followed by the DE and GBO. The GA, AO, and MFO came in the fourth, fifth, and sixth ranks, respectively.

The results of the max measure are listed in Table 3. In this table, the worst value of each algorithm for each function is recorded. As seen in the table, the worst values of the proposed EGBO were better than the compared methods in six out of ten functions (i.e., F1–F3, F5, and F8–F9). The GA was ranked second by obtaining the best values in both F6 and F10. The MFO and GBO came in the third and fourth ranks, followed by the DE and AO.

Furthermore, the results of the min measure are presented in Table 4. This measure records the best value obtained by each algorithm. As seen in the results, the proposed EGBO obtained the best values in four out of ten functions (i.e., F1, F2, F5, and F9) and obtained good results in the rest of the functions. The GA ranked second by obtaining the minimum values in the F4 and F8 functions. The GBO came in third, followed by the MFO, DA, DE, PSO, and AO.

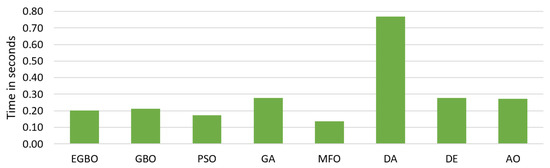

The computation time is reported in Table 5. In this measure, the MFO algorithm was the fastest among all methods, followed by the PSO and AO. The EGBO consumed an acceptable computation time in each function and was ranked fourth, followed by the GBO, GA, and DE. The slowest algorithm was the DA; it recorded the longest computation time in each function. Figure 2 illustrates the average computation times for all methods over all functions.

Figure 2. Average computation times for all methods in all functions.

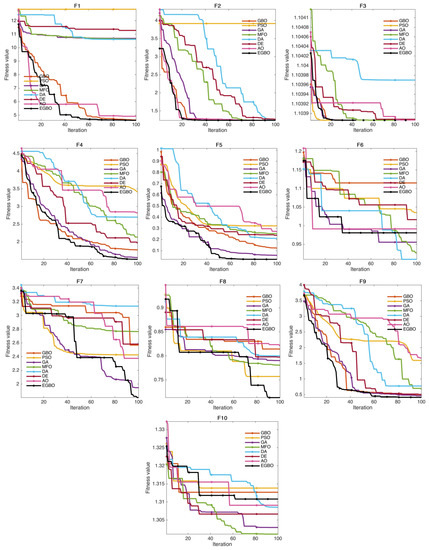

Moreover, Figure 3 illustrates the convergence curves for all methods during the optimization process. The figure shows that the proposed EGBO effectively drives the population toward the optimal value, as seen in F1, F4, F5, and F9. The PSO, GBO, and MFO showed the second-best convergence. In contrast, the DA algorithm showed the worst convergence in most functions.

Figure 3. Convergence curves for the proposed EGBO and the compared methods.

Furthermore, Table 6 records the results of the Wilcoxon rank-sum test, which is used to check whether there is a statistically significant difference between the EGBO and the compared algorithms at the 0.05 significance level (p-value < 0.05). The results show significant differences between the proposed EGBO and the compared algorithms, especially the MFO, DA, DE, and AO, which indicates the effectiveness of the EGBO in solving global optimization problems.
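For readers unfamiliar with the test, the sketch below shows one common way to compute a two-sided Wilcoxon rank-sum p-value from two sets of run results, using the normal approximation with average ranks for ties and no tie correction of the variance. It is an illustrative implementation under these assumptions, not necessarily the procedure used to produce Table 6; libraries such as SciPy provide a comparable routine (`scipy.stats.ranksums`). The function name and sample values are hypothetical.

```python
import math


def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank-sum test (normal approximation).

    Returns (z, p). Tied values receive the average of their ranks.
    """
    pooled = sorted([(v, 0) for v in a] + [(v, 1) for v in b])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg = (i + j) / 2 + 1  # average 1-based rank of the tie group
        for k in range(i, j + 1):
            ranks[k] = avg
        i = j + 1
    n1, n2 = len(a), len(b)
    w = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return z, p


# Hypothetical fitness values from five runs of two algorithms.
z, p = rank_sum_test([1.2, 1.5, 1.1, 1.4, 1.3], [2.2, 2.5, 2.1, 2.4, 2.3])
print(z, p, p < 0.05)
```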

Table 6. Results of the Wilcoxon rank-sum test for global optimization.

In light of the above results, the proposed EGBO method can effectively solve global optimization problems and provide promising results compared to the other methods. Therefore, in the next section, it will be evaluated in solving different feature selection problems.

4.2. Second Experiment: Solving Feature Selection Problem

In this part, the proposed EGBO method is assessed in solving different feature selection problems using twelve well-known feature selection datasets from [27]; Table 7 lists their descriptions.

Table 7. Datasets description.

The performance of the proposed method is evaluated by five measures, namely the fitness value (Equation (31)), accuracy (Acc) as in Equation (32), standard deviation (Std) as in Equation (30), number of selected features, and the computation time for each algorithm. The results are recorded in Tables 8–13.

where denotes the error value of the classification process (kNN is used in this paper as a classifier). The second term defines the selected feature number. is used to balance the number of the selected features and the classification error. c and C are the current and ’all-feature’ numbers, respectively.

where the number of positive classes correctly classified is (), whereas the number of negative classes correctly classified is (). Both and are the numbers of positive and negative classes incorrectly classified, respectively.
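The two measures described above can be sketched as follows. The fitness is assumed to take the common weighted form λ · error + (1 − λ) · (c / C), and the accuracy the standard ratio of correctly classified samples to all samples; Equations (31) and (32) are not reproduced above, so both forms are assumptions. The names `fs_fitness`, `accuracy`, `tp`, `tn`, `fp`, `fn` and the default weight `lam=0.99` are hypothetical, as the balancing value used in the paper is not stated in the text.

```python
def fs_fitness(error_rate, n_selected, n_total, lam=0.99):
    """Weighted sum of classification error and selected-feature ratio.

    Assumed form: lam * error_rate + (1 - lam) * (n_selected / n_total).
    Lower values are better.
    """
    if n_total <= 0:
        raise ValueError("n_total must be positive")
    return lam * error_rate + (1 - lam) * (n_selected / n_total)


def accuracy(tp, tn, fp, fn):
    """Share of correctly classified samples among all samples."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("confusion counts must not all be zero")
    return (tp + tn) / total


# Hypothetical case: 10% error with 5 of 20 features kept.
print(fs_fitness(error_rate=0.10, n_selected=5, n_total=20, lam=0.9))
print(accuracy(tp=40, tn=45, fp=5, fn=10))
```

A weight close to 1 makes the classification error dominate, so the feature ratio mainly breaks ties between subsets of similar accuracy.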

Table 8. Results of the fitness function values.

Table 9. Results of the standard deviation.

Table 10. Ratios of the selected features.

Table 11. Results of the accuracy measure.

Table 12. Computation times for all algorithms.

Table 13. Results of the Wilcoxon rank-sum test.

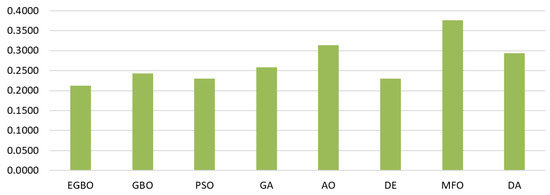

Table 8 shows the results of the fitness function values for all algorithms over all datasets. These results indicate that the proposed EGBO obtained the best fitness value among the compared algorithms and performed well in all datasets. The DE obtained the second-best results in 75% of the datasets. The PSO came in third, followed by GBO, GA, DA, AO, and MFO. Figure 4 illustrates the average of this measure over all datasets, which shows that the EGBO obtained the smallest average among all methods.

Figure 4. Average of the fitness function values for all methods.

The stability results of all algorithms are listed in Table 9. As shown in that table, the proposed EGBO was the most stable algorithm in 7 out of 12 datasets: glass, tic-tac-toe, waveform, clean1data, SPECT, Zoo, and heart. The GA and DE ranked second and third for stability, respectively, followed by the PSO, DA, and GBO.

As mentioned above, this experiment aims to reduce each dataset’s features by deleting the redundant descriptors and keeping the best ones. Therefore, Table 10 reports the number of selected features in each dataset. From Table 10, we can see that the EGBO chose the smallest number of features in 9 out of 12 datasets and showed good performance in the remaining datasets. The GBO was ranked second, followed by the MFO, AO, DE, PSO, and GA. However, the smallest number of features is not always the best; therefore, we used the classification accuracy measure to evaluate the features obtained by each algorithm.

Consequently, the classification accuracy measure was used to evaluate the proposed method’s ability to classify the samples of each dataset correctly. The results of this measure were recorded in Table 11. This table shows that the EGBO ranked first; it outperformed the other methods and obtained high-accuracy results in all datasets. The DE came in the second rank, whereas the PSO obtained the third, followed by the GBO, GA, DA, and AO. In contrast, the MFO recorded the worst accuracy measures in most datasets.

The computation times for all methods were also measured. Table 12 records the time consumed by each algorithm. By analyzing the results, we can note that all algorithms consumed similar times to some extent. In detail, the EGBO was the fastest in 59% of the datasets, followed by PSO, MFO, DE, GBO, and DA, respectively, whereas the GA and AO recorded the longest computation time.

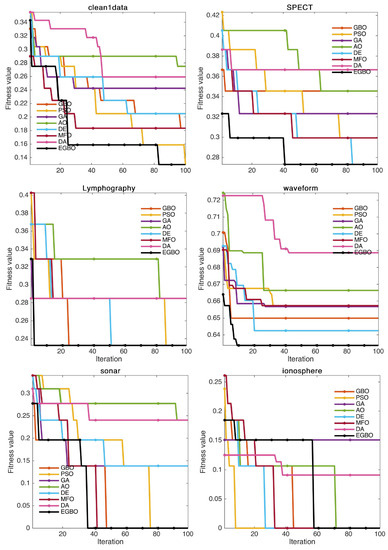

Furthermore, Figure 5 illustrates example convergence curves for all methods on a sample of the datasets. The proposed EGBO converged to the optimal values faster than the compared algorithms, which indicates its good convergence behavior when solving feature selection problems.

Figure 5. Example of the convergence curves for the proposed EGBO and the compared methods.

In addition, Table 13 shows the results of the Wilcoxon rank-sum test, which is used to check whether there is a statistically significant difference between the proposed method and the other algorithms at the 0.05 significance level (p-value < 0.05). From this table, we can see that there are significant differences between the proposed method and the other algorithms in most datasets, indicating the EGBO’s effectiveness in solving different feature selection problems.

From the previous results, the proposed EGBO method outperformed the compared algorithms in most cases in solving global optimization problems and selecting the most relevant features. These promising results can be attributed to the extension of the local escaping operator of the GBO with the expanded and narrowed exploration behaviors, which added flexibility and reliability to the traditional GBO algorithm. This extension increased the ability of the GBO to explore more areas of the search space, which helps it reach the optimal point more effectively. On the other hand, although the EGBO obtained good results in most cases, it failed to show the best results in the computation time measure, particularly in the global optimization experiment. This drawback may be because the traditional GBO itself consumes a relatively longer time than the compared algorithms when performing an optimization task; it can be studied in future work. Overall, the exploration behaviors used in the EGBO improved the traditional algorithm in terms of the performance measures.

5. Conclusions

This paper proposed a new version of the gradient-based optimizer (GBO), called EGBO, to solve diverse optimization problems, namely global optimization and feature selection. The local search of the GBO was extended with expanded and narrowed exploration behaviors to increase its ability to explore broad areas of the search space. The effectiveness of the EGBO was evaluated using the CEC2019 global optimization functions and twelve benchmark feature selection datasets. The results were analyzed and compared to a set of well-known optimization algorithms using six performance measures. The EGBO showed promising results in solving global optimization problems, recording high accuracy, selecting the most significant features, and outperforming the compared methods and the traditional version of the GBO in terms of the performance measures. However, the computation time of the EGBO needs to be improved. In future work, the EGBO will be evaluated and applied in parameter estimation, image segmentation, and classifier optimization. In addition, the complexity of the EGBO will be maintained, and its initial population will be improved using chaotic maps to add more diversity to the search space.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are openly available in [26,27].

Conflicts of Interest

The author declares no conflict of interest.

References

1. Tubishat, M.; Ja’afar, S.; Alswaitti, M.; Mirjalili, S.; Idris, N.; Ismail, M.A.; Omar, M.S. Dynamic salp swarm algorithm for feature selection. Expert Syst. Appl. 2021, 164, 113873.

2. Ewees, A.A.; ElLaban, H.A.; ElEraky, R.M. Features selection for facial expression recognition. In Proceedings of the 2019 10th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kanpur, India, 6–8 July 2019; pp. 1–6.

3. Huda, R.K.; Banka, H. Efficient feature selection methods using PSO with fuzzy rough set as fitness function. Soft Comput. 2022, 26, 2501–2521.

4. Gaheen, M.M.; ElEraky, R.M.; Ewees, A.A. Automated students arabic essay scoring using trained neural network by e-jaya optimization to support personalized system of instruction. Educ. Inf. Technol. 2021, 26, 1165–1181.

5. Ewees, A.A.; Al-qaness, M.A.; Abualigah, L.; Oliva, D.; Algamal, Z.Y.; Anter, A.M.; Ali Ibrahim, R.; Ghoniem, R.M.; Abd Elaziz, M. Boosting Arithmetic Optimization Algorithm with Genetic Algorithm Operators for Feature Selection: Case Study on Cox Proportional Hazards Model. Mathematics 2021, 9, 2321.

6. Nadimi-Shahraki, M.H.; Zamani, H.; Mirjalili, S. Enhanced whale optimization algorithm for medical feature selection: A COVID-19 case study. Comput. Biol. Med. 2022, 148, 105858.

7. Zhang, Y.; Liu, R.; Wang, X.; Chen, H.; Li, C. Boosted binary Harris hawks optimizer and feature selection. Eng. Comput. 2021, 37, 3741–3770.

8. Banerjee, D.; Chatterjee, B.; Bhowal, P.; Bhattacharyya, T.; Malakar, S.; Sarkar, R. A new wrapper feature selection method for language-invariant offline signature verification. Expert Syst. Appl. 2021, 186, 115756.

9. Sathiyabhama, B.; Kumar, S.U.; Jayanthi, J.; Sathiya, T.; Ilavarasi, A.; Yuvarajan, V.; Gopikrishna, K. A novel feature selection framework based on grey wolf optimizer for mammogram image analysis. Neural Comput. Appl. 2021, 33, 14583–14602.

10. Ewees, A.A.; Ismail, F.H.; Ghoniem, R.M. Wild Horse Optimizer-Based Spiral Updating for Feature Selection. IEEE Access 2022, 10, 106258–106274.

11. Bandyopadhyay, R.; Basu, A.; Cuevas, E.; Sarkar, R. Harris Hawks optimisation with Simulated Annealing as a deep feature selection method for screening of COVID-19 CT-scans. Appl. Soft Comput. 2021, 111, 107698.

12. Abualigah, L.; Yousri, D.; Abd Elaziz, M.; Ewees, A.A.; Al-qaness, M.A.; Gandomi, A.H. Aquila Optimizer: A novel meta-heuristic optimization Algorithm. Comput. Ind. Eng. 2021, 157, 107250.

13. Ahmadianfar, I.; Bozorg-Haddad, O.; Chu, X. Gradient-based optimizer: A new metaheuristic optimization algorithm. Inf. Sci. 2020, 540, 131–159.

14. Ahmadianfar, I.; Gong, W.; Heidari, A.A.; Golilarz, N.A.; Samadi-Koucheksaraee, A.; Chen, H. Gradient-based optimization with ranking mechanisms for parameter identification of photovoltaic systems. Energy Rep. 2021, 7, 3979–3997.

15. Khalilpourazari, S.; Doulabi, H.H.; Çiftçioğlu, A.Ö.; Weber, G.W. Gradient-based grey wolf optimizer with Gaussian walk: Application in modelling and prediction of the COVID-19 pandemic. Expert Syst. Appl. 2021, 177, 114920.

16. AlRassas, A.M.; Al-qaness, M.A.; Ewees, A.A.; Ren, S.; Abd Elaziz, M.; Damaševičius, R.; Krilavičius, T. Optimized ANFIS model using Aquila Optimizer for oil production forecasting. Processes 2021, 9, 1194.

17. Ma, L.; Li, J.; Zhao, Y. Population Forecast of China’s Rural Community Based on CFANGBM and Improved Aquila Optimizer Algorithm. Fractal Fract. 2021, 5, 190.

18. Zhou, W.; Wang, P.; Heidari, A.A.; Zhao, X.; Turabieh, H.; Chen, H. Random learning gradient based optimization for efficient design of photovoltaic models. Energy Convers. Manag. 2021, 230, 113751.

19. Jiang, Y.; Luo, Q.; Zhou, Y. Improved gradient-based optimizer for parameters extraction of photovoltaic models. IET Renew. Power Gener. 2022, 16, 1602–1622.

20. Ewees, A.A.; Ismail, F.H.; Sahlol, A.T. Gradient-based optimizer improved by Slime Mould Algorithm for global optimization and feature selection for diverse computation problems. Expert Syst. Appl. 2023, 213, 118872.

21. Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

22. Mitchell, M. An Introduction to Genetic Algorithms; MIT Press: Cambridge, MA, USA, 1998.

23. Storn, R.; Price, K. Differential evolution – A simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

24. Mirjalili, S. Dragonfly algorithm: A new meta-heuristic optimization technique for solving single-objective, discrete, and multi-objective problems. Neural Comput. Appl. 2016, 27, 1053–1073.

25. Mirjalili, S. Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowl.-Based Syst. 2015, 89, 228–249.

26. Price, K.; Awad, N.; Ali, M.; Suganthan, P. Problem definitions and evaluation criteria for the 100-digit challenge special session and competition on single objective numerical optimization. In Technical Report; Nanyang Technological University: Singapore, 2018.

27. Dua, D.; Graff, C. UCI Machine Learning Repository, 2019; University of California, Irvine, School of Information and Computer Sciences: Irvine, CA, USA, 2019.


© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).