Abstract

We introduce a novel iterative algorithm, termed the Heavy-Ball-Based Hard Thresholding Pursuit for sparse phase retrieval problem (SPR-HBHTP), to reconstruct a sparse signal from a small number of magnitude-only measurements. Our algorithm is obtained via a natural combination of the Hard Thresholding Pursuit for sparse phase retrieval (SPR-HTP) and the classical Heavy-Ball (HB) acceleration method. The robustness and convergence for the proposed algorithm were established with the help of the restricted isometry property. Furthermore, we prove that our algorithm can exactly recover a sparse signal with overwhelming probability in finite steps whenever the initialization is in the neighborhood of the underlying sparse signal, provided that the measurement is accurate. Extensive numerical tests show that SPR-HBHTP has a markedly improved recovery performance and runtime compared to existing alternatives, such as the Hard Thresholding Pursuit for sparse phase retrieval problem (SPR-HTP), the SPARse Truncated Amplitude Flow (SPARTA), and Compressive Phase Retrieval with Alternating Minimization (CoPRAM).

Keywords:

sparse phase retrieval; Heavy-Ball method; Hard Thresholding Pursuit; restricted isometry property MSC:

90C26; 65K05; 49M37

1. Introduction

In many engineering problems, one wishes to reconstruct signals from the (squared) modulus of its Fourier (or any linear) transform. This is called phase retrieval (PR). Particularly, if the target signal is sparse, this is referred to a sparse phase retrieval problem. The corresponding mathematical model is used to recover a sparse vector from a system of phaseless quadratic equations taking the form

where are a set of n-dimensional sensing vectors, for are observed modulus data, and s is the sparsity level (s is much less than n and is assumed to be known a priori for theoretical analysis purposes). As a matter of fact, solving the above problem is conducted to solve the following optimization system with the optimal value equal to zero

where the measurement matrix A and observed data y are processed as follows:

The phase retrieval problem arises naturally in many important applications, such as X-ray crystallography, microscopy, and astronomical imaging, etc. Interested readers are referred to [1] and its references for a more detailed discussion of the scientific and engineering background of the model.

Although several heuristic methods [2,3,4,5] are commonly used to solve (1), it is generally accepted that (1) is a very challenging, ill-posed, nonlinear inverse problem in both theory and practice. In particular, (1) is an NP-hard problem [6]. At present, phase retrieval approaches can be mainly categorized as convex and nonconvex. A popular class of nonconvex approaches is based on alternating projections, e.g., the groundbreaking works by Gerchberg and Saxton [2], Fienup [3], Chen et al. [7], and Waldspurger [8]. The convex alternatives either rely on the so-called Shor’s relaxation to obtain a solver based on semidefinite programming (SDP), known simply as PhaseLift [9] and PhaseCut [10], or solve the problem of basis pursuit in dual domains, as conducted in PhaseMax [11,12]. Next, a series of breakthrough results [9,13,14,15] provided provably valid algorithmic processes for special cases, where the measurement vectors are randomly derived from some certain multivariate probability distribution, such as the Gaussian distributions. Phase retrieval problems [9,13,14] require the number of observations m to exceed the problem dimension n. However, for the sparse phase retrieval problem, the true signal can be successfully recovered, even if the number of measurements m is less than the length of the signal n. In particular, a recent paper [16] utilized the random sampling technique to achieve the best empirical sampling complexity; in other words, it requires less measurements than state-of-the-art algorithms for sparse phase retrieval problems. For more on phase recovery, interested readers can refer to [17,18,19,20,21,22,23,24,25,26,27].

There is a close relationship between the phase recovery problem and the compressed sensing problem [28,29,30]. Compressed sensing, also known as compressed sampling, is a new information technology used to find sparse solutions to underdetermined linear systems. Its corresponding mathematical model can be expressed as finding a sparse vector x from the following linear problem with sparsity constraints

Some mainstream algorithms for solving this problem have been proposed, including Iterative Hard Thresholding (IHT) [31], the Orthogonal Matching Pursuit (OMP) [32], the Compressive Sampling Matching Pursuit (CoSaMP) [33], the Subspace Pursuit (SP) [34], and the Hard Thresholding Pursuit (HTP) [29]. The optimization model for the problem (4) is

Comparing two problems, (1) and (4), we see that problem (1) has an extra absolute value over problem (4). This makes the objective function of system (2) nonsmooth compared to system (5). Accordingly, this leads to a huge difference in the design of our algorithms. How can we naturally modify the compressed sensing algorithm to solve the phase recovery problem? Recently, a breakthrough in this direction was the algorithm (we call it SPR-HTP) proposed in [15], which is a modification of HTP from linear measurements to phaseless measurements. Inspired by [15], we think that an acceleration method could be used to accelerate SPR-HTP. The Heavy-Ball was first introduced by Polyak [35], and it is an old as well as efficient way to speed things up. More recently, in [36], the authors combined the Heavy-Ball acceleration method with the classic HTP to obtain the HBHTP for solving compressed sensing. Their numerical experiment shows that the HBHTP performs better than the classical HTP in terms of the recovery capability and runtime.

Considering the above motivation, we propose, in this paper, a novel nonconvex algorithm to offset the disadvantages of existing algorithms. The new algorithm is called the Heavy-Ball-Based Hard Thresholding Pursuit for sparse phase retrieval problem (SPR-HBHTP). Similar to the most of the existing nonconvex algorithms, our proposed algorithm is divided into two stages: the initialization part and the iteration part. The optimization model (2) could have multiple local minimizers due to its nonconvexity. Hence, the initialization step is crucial to ensure the initial point can fall within a certain neighborhood of the real signal. Common initialization methods are orthogonality-promoting initialization, spectral initialization, variant of the spectral initialization, etc. In our algorithm, we adopt off-the-shelf spectral initialization as the initial step. For more details, please refer to reference [15]. For the iterative refinement stage, the search direction for SPR-HTP is

where ⊙ represents the Hadamard product (the definition is given below) of two vectors, and is a positive parameter. Our algorithm SPR-HBHTP is a combination of SPR-HTP and the Heavy-Ball method, and the search direction is represented as

with two parameters , . Clearly SPR-HBHTP reduces to SPR-HTP as . The following theoretical and numerical experiments show that the modified algorithm (SPR-HBHTP) using the momentum term matches the best available state-of-the-art sparse phase retrieval methods. With the help of the restricted isometry property (RIP) [37], the convergence of our algorithm is established, and our algorithm is proved to have robust sparse signal recovery under inaccurate measurement conditions. Moreover, our algorithm can exactly recover a s-sparse signal in finite steps if the measurement is accurate.

Our contributions in this paper are as follows: we propose a new class of HTP-type algorithms called the Heavy-Ball-Based Hard Thresholding Pursuit for sparse phase retrieval problem (SPR-HBHTP), which is a natural combination of the Hard Thresholding Pursuit for sparse phase retrieval (SPR-HTP) and the classical Heavy-Ball (HB) acceleration method. In the theoretical analysis, a new theoretical analysis framework is introduced to establish the theoretical performance results of the proposed algorithm by resorting to the restricted isometry property of measurement matrices. The local convergence of our algorithm is established regardless of whether there is noise in the measurement. An estimation of the iteration number is established under the framework of accurate measurements. For the aspect of numerical experiments, the phase transition curves, grayscale maps, and algorithm selection maps demonstrate that the new algorithm SPR-HBHTP is numerically much more efficient than existing alternatives, such as SPR-HTP, SPARTA, and CoPRAM in terms of both the recovery success rate and the recovery time.

The rest of the paper is organized as follows: we describe the SPR-HBHTP in Section 2. A theoretical analysis of the proposed algorithm is conducted in Section 3. Numerical experiments to illustrate the performance of the algorithm are given in Section 4, and conclusions are drawn in the last section.

2. Preliminary and Algorithms

2.1. Notations

We introduce some notations that are used throughout the paper. Let denote the set and be the cardinality of a set S. Denote by the complement of a set S in . At , the signum function is defined as

The Hard Thresholding operator keeps the s largest absolute entries and sets other ones to zeros. For an index set , denotes the matrix obtained from by keeping only the columns indexed by , and stands for the vector obtained by retaining the components of x indexed by and zeroing out the remaining components of x. The set is called the support of x, and stands for the support of . For two sets, S and , is the symmetric difference between S and . For , the distance between x and z is defined as

The notation ⊙ represents the Hadamard product of two vectors, i.e.,

2.2. New Algorithms

The alternative initialization methods include orthogonality-promoting initialization [38], spectral initialization [39], or the other initialization [40], etc. In this paper, we use spectral initialization to constitute the initial step of the algorithm.

| Algorithm 1: Heavy-Ball-Based Hard Thresholding Pursuit for sparse phase retrieval (SPR-HBHTP). |

Input: a matrix , a vector , and two parameters and . Initialization: where is the unit principal eigenvector of W. Set . Repeat: Output: the s-sparse vector |

The following is a brief explanation of initialization in the Algorithm 1. For a more rigorous and in-depth discussion of this aspect, please refer to [15,39].

Estimate the support: Since = , contains a mass of correct support, it could be a good approximation of the support of x.

Compute the signal: Note that

Hence, the principal eigenvector of the matrix W in Algorithm 1 gives a good initial direction guess of the true signal x. We select the principal eigenvector vector with a length of as the initial point to ensure that the power of the initial estimate is close to that of the true signal x.

The most important conclusion of the initialization process is that the initial point can fall within a certain neighborhood of the real signal relative to the distance measurement defined in (6).

Lemma 1

([39] [Theorem IV.1]). Let be i.i.d Gaussian random vectors with a mean of 0 and variance matrix of I. Let be generated by initialization in Algorithm 1 with the input for , where is a signal satisfying . Then, for any , there exists a positive constant C depending only on , such that if , we have

with a probability of at least .

3. Convergence Analysis

We first list some of the main Lemmas and present our results on the local convergence of the proposed algorithm (SPR-HBHTP).

Lemma 2

([30] [Theorem 9.27]). Let be i.i.d Gaussian random vectors with a mean of 0 and variance matrix of I. Let A be defined in (3). Some universal positive constants , exist, such that for any natural number and any , if , then A satisfies the following r-RIP with a probability of at least ,

Lemma 3

([36] [Lemma 3.1]). Suppose that the non-negative sequence satisfies

where and . Then,

with

Lemma 4

- (i).

- If , then

- (ii).

- If , then

Lemma 5

([15] [Lemma 2]). Let be i.i.d. Gaussian random vectors with a mean of 0 and variance matrix of I. Let be any constant in . After fixing any given , the universal positive constants , exist. If

then with a probability of at least , it holds that

whenever satisfies and .

Lemma 6.

Let be i.i.d. Gaussian random vectors with a mean of 0 and variance matrix of I. Let be given as

For any , the universal positive constants and exist, such that if

then the following inequality holds with a probability of at least

where and with .

Proof of Lemma 6.

Pick , where comes from Lemmas 2 and 5. The condition (12) ensures the validity of (7) with a probability of at least and (11) with a probability of at least , respectively. Let

It is easy to see that . In other words, the probability of (7) and (11) being true is at least . By replacing , and used in [15] [Equation (16)] by , z and x, we obtain

This, together with (10) in Lemma 4, leads to

□

The local convergence property of Algorithm 1 is established in the following result.

Theorem 1.

(Local convergence). Let be i.i.d. Gaussian random vectors with a mean of 0 and variance matrix of I. Let be any constant in . Suppose that the RIC, , of matrix A and the parameters α and β obey

where

and is the unique root in the interval of the equation with

For a s-sparse signal , the universal positive constants , exist, such that if

then the sequence generated by Algorithm 1 with input measured data satisfies

with a probability of at least , where

and is guaranteed under the conditions (13) and (14).

Proof of Theorem 1.

Note that in the design of Algorithm 1 and is generated by utilizing the initialization process. Hence, by Lemma 1. Since , we can assume without a loss of generalization that (the case of can be proved by following a similar argument). Hence, , . Our proof is based on mathematical induction, and hence, we further assume that , .

The first step of the proof is a consequence of the pursuit step of Algorithm 1. Recall that

As the best -approximation to from the space , the vector is characterized by

i.e., or . We derive, in particular,

Due to , and . It follows from Lemmas 4 and 6 that if , then

with a probability of at least and

with a probability of at least . Combining (18), (19) with (20) leads to

Hence,

Denote . This reads as for the quadratic polynomial defined by

Hence, is bounded by the largest root of g, i.e.,

Based on for , we obtain

The second step of the proof is a consequence of the Hard Thresholding step of Algorithm 1. Denote

Since and , we have

According to and , we yield

Taking and into consideration, we write

Hence,

Note from (23) that

Then,

where the second inequality is based on the fact that

with a probability of at least as by (9) in Lemma 4 due to , and

with a probability of at least as by Lemma 6 due to . Combining (24) with (25), we have

Putting (22) and (28) together yields

where b is given by (17). The recursive inequality (29) is in the form (8) in Lemma 3 by letting . Note that, to ensure the validity of (19), (20), (26) and (27) with a corresponding probability of at least , the number of measurements must satisfy

Hence, we can pick and , satisfying

and

In other words, the above choices of and guarantee that (19), (20), (26), and (27) hold with a probability of at least as . This, in turn, means that the (29) holds accordingly.

In the following, we need to show that the coefficients of the right-hand side of the (29) satisfy the conditions required for Lemma 3, i.e., .

First, we clarify that the parameters appearing in (13) and (14) are well-defined. On the interval , define

Note that and the root of is . It is easy to see

Thus,

Note that

Then, is equivalent to saying that . Taking the square on both sides yields . The roots of the above equation in the interval are . By substituting these into (31), we obtain the root of in (0,1) is . It is easy to see that h is monotonically decreasing on and increasing on (,1). Due to , as by the monotonicity of h. On the other hand, is monotonically increasing and as . Hence for the given and , there is a unique solution in the interval , denoted by , satisfying . In addition according to the decreasing monotonicity of h on this interval, we know for all . Thus, as . This, together with (30), yields , i.e.,

Multiplying on both sides of (33) yields

where the last step comes from

due to . Note that (35) can be rewritten as

Using the fact again, (36) rearranges into

This means that the range for in (14) is also well-defined.

To show , let us consider the following two cases.

- Case 1:

- ifthenand hence

- Case 2:

- ifthenand hence

On both sides, we always obtain , i.e., . Therefore, by Lemma 3.

According to the recursive relation (29) and , as shown above, we obtain

Hence, it follows from (29) and Lemma 3 that

The above analysis assumes that the measurements are noiseless. The following result demonstrates that the Algorithm 1 is robust in the presence of noise.

Theorem 2.

(The noisy case): Let be i.i.d. Gaussian random vectors with a mean of 0 and variance matrix of I. Suppose that the RIC, , of matrix A and the parameters α and β satisfy the conditions (13) and (14). Take a s-sparse signal and , satisfying

where , b, η, are given in Theorem 1. The universal positive constants , exist, such that if

then the sequence generated by Algorithm 1 with the input measured data satisfies

with a probability of at least , where is given by (17).

Proof of Theorem 2.

Let be the iterate sequence generated by Algorithm 1 in the framework of noisy data. Thus, in this case . Following a similar argument to that in [15] [Equation (27)], we obtain from Lemma 6 that

Now, we need to modify the proof of Theorem 1 for the noisy case, i.e., some formula appearing in the proof of Theorem 1 should be replaced. Precisely, (21) and (22) take the following forms:

and

where and . In addition, (25) is modified to

Putting (39) and (41) together and recalling the definitions of and above, we then have

where b, , are given exactly as shown in Theorem 1. Hence, , as , satisfy conditions (13) and (14). This further ensures that by applying Lemma 3 to the recursive Formula (42).

It remains to be shown that the iterative sequence belongs into a neighbor of x. First, , . Now, assume , . We claim that . Indeed, recall that

i.e.,

Since and , we obtain

Taking into account (42) and applying Lemma 3, we obtain

Moreover, this inequality holds true by following symmetrical arguments, such as replacing x by . Thus, according to the definition of ’dist’ given in (6), the desired result follows. □

Finite Termination

In the framework of accurate measurements, the true signal can be recovered after a finite number of iterations. An estimation of the iteration number is given below.

Theorem 3.

Let be i.i.d. Gaussian random vectors with a mean of 0 and variance matrix of I. Suppose that the RIC, , of the matrix A and the parameters α and β satisfy the conditions (13) and (14) with . For a s-sparse signal and an initial point given in Algorithm 1, the universal positive constants , exist, such that if

then the s-sparse signal is recovered by Algorithm 1 with in, at most,

iterations with a probability of at least , where , η and τ, b are given by (15) and (17), respectively, in Theorem 1, and , are the smallest nonzero entries of x, y in the modulus.

Proof of Theorem 3.

The case of is trivial. Indeed, under this case, the initial point generated by the initial step of the Algorithm 1 is 0. This means that the true signal x is recovered in, at most, one step. Therefore, we only need to consider the case of .

We first assume that . Now, we need to determine an integer n such that . Recall that is given as shown in (23). According to the definitions of , for all and , we have

Note that

where is the smallest nonzero entry of x in the modulus, and

where due to . Combining the above two inequalities yields

It follows from (17) that

According to (29), we have

Taking into account the definition of in (23), we know that is satisfied as soon as (45) holds. Due to (47), this can be ensured, provided that

We next demonstrate that by contradiction. If , i.e., , according to Lemma 2, then A satisfies with a probability of at least as . Due to and , we can deduce that This contradicts the hypothesis.

Now, we also need to determine an integer n, such that . Denote

and

where and are given by (15) and (17), respectively, in Theorem 1, and is the minimum nonzero entry of y. According to the argument of proof given in part (b) of [15] [Theorem 1], we can obtain that as . Since it is already known from the previous proof that is the smallest positive integer, such that , then, as , we have both and . This ensures that, in the Algorithm 1,

Thus, due to . Therefore, Algorithm 1 successfully recovers the s-sparse signal after a finite number of iterations.

Similarly, if , we can obtain that and as . In this case, (48) takes the form

Thus, due to . This completes the proof. □

4. Numerical Experiments

In order to make our paper more convenient and clear to read, we give Table 1 before the numerical experiments.

Table 1.

The descriptions of the parameters and the abbreviations of the algorithm names.

All experiments were performed on a laptop with an Apple M1 processor and 8GB memory by using MATLAB R2021a. In terms of the recovery capability and average runtime, a comparison of SPR-HBHTP with three popular algorithms, including SPR-HTP [15] (an extension of HTP from traditional compressed sensing to sparse phase recovery), CoPRAM [39] (a combination of the classical alternating minimization approach for phase retrieval with the CoSaMP algorithm for sparse recovery) and SPARTA [38] (a combination of TWF and TAF) for the sparse phase retrieval problem is shown in this section.

In the following experiments, the s-sparse vector with a fixed dimension of is randomly generated, whose nonzero entries are independent identically distributed (i.i.d) and follow the standard normal distribution . Particularly, the indices in support of x follow a uniform distribution. The measurement matrix A is the i.i.d Gaussian matrix whose elements follow , which satisfies the RIP with a high probability as m is large enough (Chapter 9, [30]). On the other hand, the observed modulus data y are expressed as follows: in noiseless environments, and in noisy environments, where e is the noise vector with elements following and is the noise level.

The maximum number of iterations for all algorithms was set as 50. All initial points of the algorithms were generated by initialization in SPR-HBHTP, in which two initial points were produced for SPR-HBHTP, while the other algorithms just needed an initial point . The algorithmic parameters of SPARTA were , , and [38], and the stepsize of SPR-HTP was set as [15]. The choice of algorithmic parameters for SPR-HBHTP is discussed in Section 4.1. For each sparsity level s, 100 independent trials were used to test the success rates of the algorithms. The trial was said to be a ’success’ if the following recovery condition

was satisfied, where x is the target signal, is the corresponding approximated value generated by the algorithm, and the distance is given by (6).

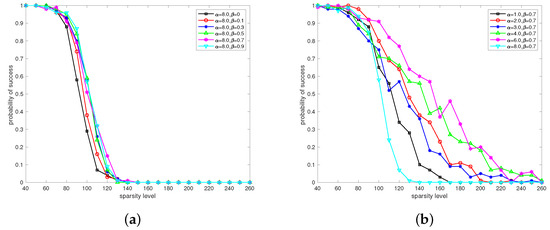

4.1. Choices of Parameters and

In order to choose suitable algorithmic parameters in terms of the recovery capability, we considered the success rates of SPR-HBHTP for recovery with a fixed value of in noiseless environments. The numerical results are displayed in Figure 1, in which the sparsity level s ranges from 40 to 260 with a stepsize of 10. Figure 1a corresponds to the case with and , while Figure 1b corresponds to the case with and . Figure 1a shows that the recovery ability of SPR-HBHTP becomes stronger with an increase in the coefficient of the momentum. Note that SPR-HBHTP reduces to SPR-HTP as , which indicates that an important role played by the momentum is to enlarge the range of the stepsize of SPR-HBHTP. From Figure 1b, we can see that SPR-HBHTP is sensitive to the stepsize , and its recovery capability with is stronger than that of . However, the recovery effect of SPR-HBHTP with is worse than that of for large sparsity levels s, while the former is slightly better than the latter for small s. Thus, we use and for the rest of the article.

Figure 1.

Comparison of the success rates of SPR-HBHTP with different parameters. (a) Different momentum coefficients . (b) Different stepsizes .

4.2. Phase Transition

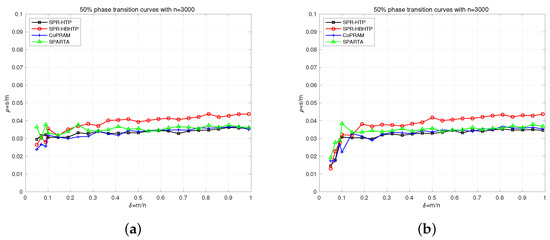

In this section, we use the phase transition curve (PTC) [42,43] and grayscale map to compare the recovery capabilities of the algorithms.

4.2.1. Phase Transition Curves

The phase transition curve is a logistic regression curve identifying a 50% success rate for the given algorithms in this paper. Indeed, a ratio of 50% can be replaced by other values in the interval based on the practical background. Denote and The -plane is separated by the PTC of an algorithm into success and failure regions (see Figure 2). The former corresponds to the region below the PTC, wherein the algorithm can reconstruct the target sparse signal successfully; the latter corresponds to the region above the PTC. In other words, the higher the PTC, the stronger the recovery capability of the algorithm.

Figure 2.

Comparison of the PTCs for the algorithms with accurate/inaccurate measurements. (a) Accurate measurements. (b) Inaccurate measurements with = 0.01.

To generate the PTC, are taken as follows

where the interval is equally divided into 20 parts. For each given in (49), the ‘glmfit’ function in Matlab is used to generate the logistic regression curve based on the success rates with different sparsity levels s. The PTC is obtained directly by identifying the point in the logistic regression curve with a 50% success rate. This technique is almost the same as that shown in [36], except that the ‘glmfit’ function is replaced by the logistic regression model. Thus, the process of the generation of the PTC is omitted here, and the interested reader can consult [36] for detailed information.

The comparison of the PTCs for algorithms including SPR-HBHTP, SPR-HTP, CoPRAM, and SPARTA is shown in Figure 2, wherein Figure 2a,b correspond to accurate and inaccurate measurements, respectively. From Figure 2a, we see that SPR-HBHTP has the highest PTC as , which indicates that the recovery capability of SPR-HBHTP is stronger than that of the other three algorithms, especially for larger . The PTCs in Figure 2b are similar to those in Figure 2a as ; that is, all algorithms are stable under small disturbances. However, when , all PTCs in Figure 2b decrease rapidly with a decrease in , which is different from the phenomena shown in Figure 2a. This indicates that all algorithms require more measurements to ensure the recovery effect under the disturbance for the sparse phase retrieval problem. Finally, it should be noted that the PTCs of SPR-HTP, CoPRAM, and SPARTA are close to each other in Figure 2a,b, which means that the reconstruction capability of these three algorithms is almost the same. Comparatively speaking, the recovery capability of SPR-HTP is slightly better than those of CoPRAM and SPARTA.

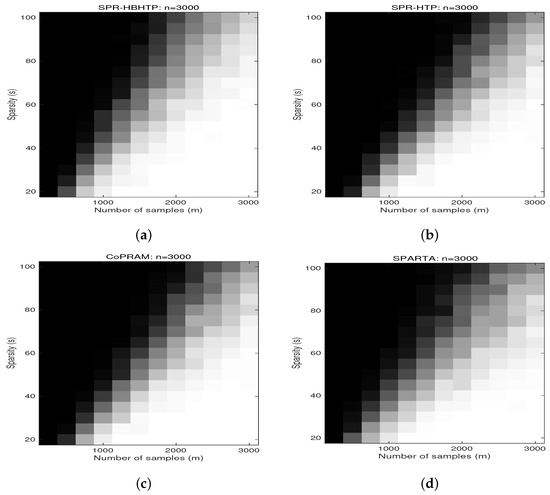

4.2.2. Greyscale Maps

A grayscale map is an image that contains only brightness information and does not contain color information. In grayscale maps, the successful recovery rate of the algorithms is expressed by the different gray levels of the corresponding blocks, where black indicates a successful recovery rate of 0%, white is 100%, and gray is 0% to 100%.

Grayscale maps for different algorithms, such as SPR-HBHTP, SPR-HTP, CoPRAM and SPARTA, with signal lengths of n = 3000 are displayed in Figure 3. In our experiments, the sample size m ranged from 250 to 3000 with a stepsize of 250 and the sparsity s ranged from 20 to 100 with a stepsize of 5. By comparing the four graphs in Figure 3, it can be seen that when the sparsity is relatively large, the recovery ability of SPR-HBHTP is stronger than that of other algorithms. For smaller sparsity s values, SPR-HTP and CoPRAM have almost the same recovery ability, and similarly, SPARTA and SPR-HBHTP have comparable recovery abilities. This is consistent with our results for phase transition curves.

Figure 3.

Grayscale map for different algorithms with n = 3000. (a) SPR-HBHTP. (b) SPR-HTP. (c) CoPRAM. (d) SPARTA.

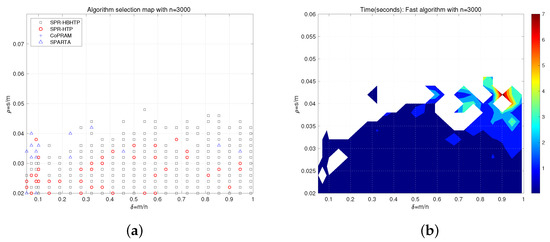

4.3. Algorithm Selection Maps

In practice, the algorithm that consumes the least amount of time should be selected naturally when more than one algorithm can reconstruct the target signal successfully. Hence, it is meaningful to choose the fastest algorithm at the intersection of the success regions of the algorithms, which builds the algorithm selection maps (ASM) proposed in [42,43]. Note that the algorithm will be selected automatically if it is the only one that can recover the signal successfully.

Denote and Next, we establish the ASM in the -plane with given as follows

where the intervals and are equally divided into 20 and 40 parts, respectively. For each , we tested 10 problem instances at for each algorithm with an increase in until the success frequency was less than 50%. The ASM and average runtime of the fastest algorithm with accurate measurements are summarized in Figure 4. Figure 4a indicates that SPR-HBHTP or SPR-HTP is the fastest algorithm in most areas, and CoPRAM is a slower algorithm relatively, since it does not appear in the ASM. Figure 4b shows us that the average runtime of the fastest algorithm is less than one second in most regions, and it increases by up to 3–7 s for larger and . This demonstrates that all algorithms will take more time, as the sparsity level s becomes larger.

Figure 4.

ASM and the shortest average runtime with accurate measurements. (a) ASM. (b) Average runtime of the fastest algorithm.

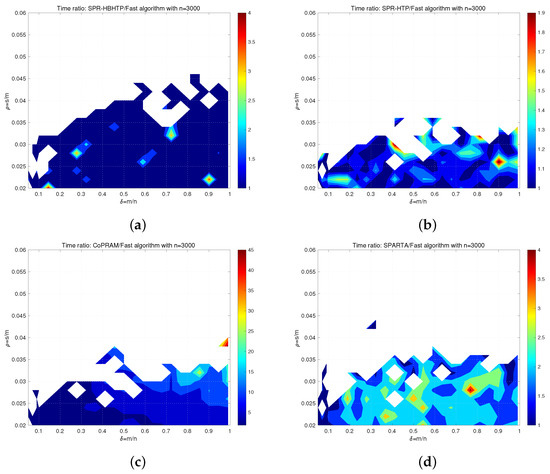

For comparing the algorithms thoroughly, we collected detailed information about their average runtimes, as shown in Figure 5, and the ratios of the average runtimes for the algorithms against the fastest one are shown in Figure 4b. Figure 5a,b reveal that the ratios of SPR-HBHTP and SPR-HTP are close to 1 in most areas, which indicates that they are faster than CoPRAM and SPARTA. In particular, the advantage value of SPR-HBHTP lies in the region with a larger compared to SPR-HTP. In Figure 5c, the ratio of CoPRAM is about 5–20 in most regions, and the minimum value is 5, which means that it is slower than the other three algorithms. Finally, by comparing Figure 5d with Figure 5a–c, we find that SPARTA is slower than SPR-HBHTP and SPR-HTP in most cases, but it is much faster than CoPRAM.

Figure 5.

The ratios of average runtimes for the algorithms against the fastest one. (a) SPR-HBHTP. (b) SPR-HTP. (c) CoPRAM. (d) SPARTA.

5. Conclusions

We introduced a second-order accelerated method for the Hard Thresholding Pursuit, named the Heavy-Ball-Based Hard Thresholding Pursuit, to reconstruct a sparse signal from phaseless measurements. Under the restricted isometry property, SPR-HBHTP enjoys an exact provable recovery in the finite steps as soon as the number of noiseless Gaussian measurements exceeds a certain bound. It is remarkably different from the existing phase recovery algorithms in terms of theoretical analysis. Moreover, numerical experiments on random problem instances indicate that our algorithm outperforms the state-of-the-art algorithms, such as SPR-HTP, CoPRAM, and SPARTA, in terms of its recovery success rate and computational times through the phase transition analysis. Conducting experiments on realistic situations to truly verify the practical performance of SPR-HBHTP is worthy of further research. In addition, studying the random alternating minimization method based on Heavy-Ball acceleration is an interesting research topic.

Author Contributions

Conceptualization, J.Z. and Z.S.; Methodology, Z.S. and J.T.; Investigation, Y.L. and J.Z.; writing—original draft, Y.L. and J.T.; Supervision, J.Z. and Z.S.; Funding acquisition, J.Z. and J.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (11771255), the Young Innovation Teams of Shandong Province (2019KJI013), the Shandong Province Natural Science Foundation (ZR2021MA066), the Natural Science Foundation of Henan Province (222300420520), and the Key Scientific Research Projects of Higher Education of Henan Province (22A110020).

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

We declare that we do not have any commercial or associative interest that represents a conflict of interest in connection with the work submitted.

References

- Shechtman, Y.; Eldar, Y.C.; Cohen, O.; Chapman, H.N.; Miao, J.W.; Segev, M. Phase retrieval with application to optical imaging: A contemporary overview. IEEE Signal Process Mag. 2015, 32, 87–109. [Google Scholar] [CrossRef]

- Gerchberg, R.; Saxton, W. A practical algorithm for the determination of plane from image and diffraction pictures. Optik 1972, 35, 237–246. [Google Scholar]

- Fienup, J.R. Phase retrieval algorithms: A comparison. Appl. Opt. 1982, 21, 2758–2769. [Google Scholar] [CrossRef] [PubMed]

- Marchesini, S. Phase retrieval and saddle-point optimization. J. Opt. Soc. Am. A 2007, 24, 3289–3296. [Google Scholar] [CrossRef] [PubMed]

- Nugent, K.; Peele, A.; Chapman, H.; Mancuso, A. Unique phase recovery for nonperiodic objects. Phys. Rev. Lett. 2003, 91, 203902. [Google Scholar] [CrossRef] [PubMed]

- Fickus, M.; Mixon, D.G.; Nelson, A.A.; Wan, Y. Phase retrieval from very few measurements. Linear Algebra Appl. 2014, 449, 475–499. [Google Scholar] [CrossRef]

- Chen, P.; Fannjiang, A.; Liu, G.R. Phase retrieval with one or two diffraction patterns by alternating projections with the null initialization. J. Fourier Anal. Appl. 2018, 24, 719–758. [Google Scholar] [CrossRef]

- Waldspurger, I. Phase retrieval with random gaussian sensing vectors by alternating projections. IEEE Trans. Inf. Theory 2018, 64, 3301–3312. [Google Scholar] [CrossRef]

- Candès, E.J.; Strohmer, T.; Voroninski, V. Phaselift: Exact and stable signal recovery from magnitude measurements via convex programming. Commun. Pure Appl. Math. 2013, 66, 1241–1274. [Google Scholar] [CrossRef]

- Waldspurger, I.; d’Aspremont, A.; Mallat, S. Phase recovery, maxcut and complex semidefinite programming. Math. Program. 2015, 149, 47–81. [Google Scholar] [CrossRef]

- Goldstein, T.; Studer, C. Phasemax: Convex phase retrieval via basis pursuit. IEEE Trans. Inf. Theory 2018, 64, 2675–2689. [Google Scholar] [CrossRef]

- Hand, P.; Voroninski, V. An elementary proof of convex phase retrieval in the natural parameter space via the linear program PhaseMax. Commun. Math. Sci. 2018, 16, 2047–2051. [Google Scholar] [CrossRef]

- Candès, E.J.; Li, X.; Soltanolkotabi, M. Phase retrieval via wirtinger flow: Theory and algorithms. IEEE Trans. Inf. Theory 2015, 61, 1985–2007. [Google Scholar] [CrossRef]

- Netrapalli, P.; Jain, P.; Sanghavi, S. Phase retrieval using alternating minimization. IEEE Trans. Signal Process 2015, 18, 4814–4826. [Google Scholar] [CrossRef]

- Cai, J.F.; Li, J.; Lu, X.; You, J. Sparse signal recovery from phaseless measurements via hard thresholding pursuit. Appl. Comput. Harmon. Anal. 2022, 56, 367–390. [Google Scholar] [CrossRef]

- Cai, J.F.; Jiao, Y.L.; Lu, X.L.; You, J.T. Sample-efficient sparse phase retrieval via stochastic alternating minimization. IEEE Trans. Signal Process 2022, 70, 4951–4966. [Google Scholar] [CrossRef]

- Yang, M.H.; Hong, Y.W.P.; Wu, J.Y. Sparse affine sampling: Ambiguity-free and efficient sparse phase retrieval. IEEE Trans. Inf. Theory 2022, 68, 7604–7626. [Google Scholar] [CrossRef]

- Bakhshizadeh, M.; Maleki, A.; Jalali, S. Using black-box compression algorithms for phase retrieval. IEEE Trans. Inf. Theory 2020, 66, 7978–8001. [Google Scholar] [CrossRef]

- Cha, E.; Lee, C.; Jang, M.; Ye, J.C. DeepPhaseCut: Deep relaxation in phase for unsupervised fourier phase retrieval. IEEE Trans. Pattern. Anal. Mach. Intell. 2022, 44, 9931–9943. [Google Scholar] [CrossRef]

- Wang, B.; Fang, J.; Duan, H.; Li, H. PhaseEqual: Convex phase retrieval via alternating direction method of multipliers. IEEE Trans. Signal Process 2020, 68, 1274–1285. [Google Scholar] [CrossRef]

- Liu, T.; Tillmann, A.M.; Yang, Y.; Eldar, Y.C.; Pesavento, M. Extended successive convex approximation for phase retrieval with dictionary learning. IEEE Trans. Signal Process 2022, 70, 6300–6315. [Google Scholar] [CrossRef]

- Fung, S.W.; Di, Z.W. Multigrid optimization for large-scale ptychographic phase retrieval. SIAM J. Imaging Sci. 2020, 13, 214–233. [Google Scholar] [CrossRef]

- Chen, Y.; Chi, Y.; Fan, J.; Ma, C. Gradient descent with random initialization: Fast global convergence for nonconvex phase retrieval. Math. Program. 2019, 176, 5–37. [Google Scholar] [CrossRef]

- Cai, J.F.; Huang, M.; Li, D.; Wang, Y. Solving phase retrieval with random initial guess is nearly as good as by spectral initialization. Appl. Comput. Harmon. Anal. 2022, 58, 60–84. [Google Scholar] [CrossRef]

- Soltanolkotabi, M. Structured signal recovery from quadratic measurements: Breaking sample complexity barriers via nonconvex optimization. IEEE Trans. Inf. Theory 2019, 65, 2374–2400. [Google Scholar] [CrossRef]

- Jaganathan, K.; Oymak, S.; Hassibi, B. Sparse phase retrieval: Uniqueness guarantees and recovery algorithms. IEEE Trans. Signal Process. 2017, 65, 2402–2410. [Google Scholar] [CrossRef]

- Killedar, V.; Seelamantula, C.S. Compressive phase retrieval based on sparse latent generative priors. In Proceedings of the ICASSP 2022—2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 1596–1600. [Google Scholar]

- Wen, J.; He, H.; He, Z.; Zhu, F. A pseudo-inverse-based hard thresholding algorithm for sparse signal recovery. IEEE Trans. Intell. Transp. Syst. 2022. [Google Scholar] [CrossRef]

- Foucart, S. Hard thresholding pursuit: An algorithm for compressive sensing. SIAM J. Numer. Anal. 2011, 49, 2543–2563. [Google Scholar] [CrossRef]

- Foucart, S.; Rauhut, H. An Invitation to Compressive Sensing. In A Mathematical Introduction to Compressive Sensing; Springer: Berlin/Heidelberg, Germany, 2013; pp. 1–39. [Google Scholar]

- Blumensath, T.; Davies, M.E. Iterative hard thresholding for compressed sensing. Appl. Comput. Harmon. Anal. 2009, 27, 265–274. [Google Scholar] [CrossRef]

- Tropp, J.A.; Gilbert, A.C. Signal recovery from random measurements via orthogonal matching pursuit. IEEE Trans. Inf. Theory 2007, 53, 4655–4666. [Google Scholar] [CrossRef]

- Needell, D.; Tropp, J. CoSaMP: Iterative signal recovery from incomplete and inaccurate samples. Appl. Comput. Harmon. Anal. 2009, 26, 301–321. [Google Scholar] [CrossRef]

- Dai, W.; Milenkovic, O. Subspace pursuit for compressive sensing signal reconstruction. IEEE Trans. Inf. Theory 2009, 55, 2230–2249. [Google Scholar] [CrossRef]

- Polyak, B.T. Some methods of speeding up the convergence of iteration methods. Comp. Math. Math. Phys. 1964, 4, 1–17. [Google Scholar] [CrossRef]

- Sun, Z.F.; Zhou, J.C.; Zhao, Y.B.; Meng, N. Heavy-ball-based hard thresholding algorithms for sparse signal recovery. J. Comput. Appl. Math. 2023, 430, 115264. [Google Scholar] [CrossRef]

- Candès, E.J. The restricted isometry property and its implications for compressed sensing. Crendus. Math. 2008, 346, 589–592. [Google Scholar] [CrossRef]

- Wang, G.; Zhang, L.; Giannakis, G.B.; Akçakaya, M.; Chen, J. Sparse phase retrieval via truncated amplitude flow. IEEE Trans. Signal Process 2018, 66, 479–491. [Google Scholar] [CrossRef]

- Jagatap, G.; Hegde, C. Sample-efficient algorithms for recovering structured signals from magnitude-only measurements. IEEE Trans. Inf. Theory 2019, 65, 4434–4456. [Google Scholar] [CrossRef]

- Cai, T.T.; Li, X.; Ma, Z. Optimal rates of convergence for noisy sparse phase retrieval via thresholded wirtinger flow. Ann. Stat. 2016, 44, 2221–2251. [Google Scholar] [CrossRef]

- Zhao, Y.B. Optimal k-thresholding algorithms for sparse optimization problems. SIAM J. Optim. 2020, 30, 31–55. [Google Scholar] [CrossRef]

- Blanchard, J.D.; Tanner, J. Performance comparisons of greedy algorithms in compressed sensing. Numer. Linear Algebra Appl. 2015, 22, 254–282. [Google Scholar] [CrossRef]

- Blanchard, J.D.; Tanner, J.; Wei, K. CGIHT: Conjugate gradient iterative hard thresholding for compressed sensing and matrix completion. Inf. Inference 2015, 4, 289–327. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).