Abstract

In this work, we prove the strong convergence of the sequence generated by a modified inertial parallel viscosity-type algorithm for finding a common fixed point of a finite family of nonexpansive mappings under mild conditions in real Hilbert spaces. Moreover, we present numerical experiments on linear systems and differential problems using the Gauss–Seidel, weighted Jacobi, and successive over-relaxation methods. Furthermore, we apply our algorithm to LASSO problems in signal recovery to demonstrate its efficiency and implementation. The novelty of our work is that the proposed algorithm is shown to be more efficient than existing algorithms.

MSC:

47H10; 65K05; 94A12

1. Introduction

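Let $C$ be a nonempty closed and convex subset of a real Hilbert space $H$ and $T: C \to C$ be a mapping with the fixed point set $F(T)$, i.e., $F(T) = \{x \in C : Tx = x\}$. A mapping $T$ is said to be:

- (1) Contractive if there exists a constant $c \in (0,1)$ such that $\|Tx - Ty\| \le c\|x - y\|$ for all $x, y \in C$;

- (2) Nonexpansive if $\|Tx - Ty\| \le \|x - y\|$ for all $x, y \in C$.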

Many problems in optimization, such as minimization problems, variational inequalities, and variational inclusions, can be solved by transforming them into a fixed point problem for a nonexpansive mapping [1,2]. Thus, nonexpansive mappings have been studied extensively, including the construction of algorithms to find their fixed points [3,4]. Constructing an algorithm that achieves strong convergence is a critical issue on which many mathematicians focus. One such approach is the viscosity approximation method, proposed by Moudafi [5] in 2000, which selects a particular fixed point of a given nonexpansive mapping. Later, a class of viscosity-type methods was introduced by many authors; see [6,7]. One of these modified viscosity methods, introduced by Aoyama and Kimura [8], is the viscosity approximation method (VAM) for a countable family of nonexpansive mappings. The VAM was applied to variational problems and zero point problems. When the contraction mappings are replaced by fixed vectors, the VAM reduces to a Halpern-type iteration (HTI). One of the most commonly used techniques for improving convergence is the inertial method. In 1964, Polyak [9] introduced an algorithm to speed up gradient descent, and a modification of it was made immensely popular by the accelerated gradient algorithm proposed by Nesterov in 1983 [10]. This well-known technique, which improves the convergence rate, is known as the inertial iteration. Many researchers have proposed various acceleration techniques [11,12] to obtain faster convergence.

Many real-world problems can be modeled as common fixed point problems. Therefore, the study of methods for solving such problems is important and has received the attention of many mathematicians. In 2015, Anh and Hieu [13] introduced a parallel monotone hybrid method (PMHM), and Khatibzadeh and Ranjbar [14] introduced Halpern-type iterations to approximate a finite family of quasi-nonexpansive mappings in Banach spaces. Recently, there has been further research on parallel methods for solving many problems, and these methods have been shown to apply to real-world problems such as image deblurring and related applications [15,16].

Inspired by previous works, we present a viscosity modification combined with the parallel monotone algorithm for a finite family of nonexpansive mappings. We establish a strong convergence theorem for the proposed algorithm. We provide numerical experiments of our algorithm for solving linear system problems, differential problems, and signal recovery problems. The efficiency of the proposed algorithm is shown by comparison with existing algorithms.

2. Preliminaries

In this section, we give some definitions and lemmas that play an essential role in our analysis. The strong and weak convergence of a sequence $\{x_n\}$ to $x$ are denoted by $x_n \to x$ and $x_n \rightharpoonup x$, respectively. The projection of $s$ onto $A$ is defined by

$$P_A s = \arg\min_{a \in A} \|s - a\|,$$

where $A$ is a nonempty, closed, and convex set.
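For simple sets, the projection has a closed form. The following minimal Python/NumPy sketch (the function names are illustrative, not from the paper) projects onto a closed ball and onto a box, and checks the characterization $\langle s - P_A s, a - P_A s\rangle \le 0$ for sampled points $a$ of the ball, which holds for any nonempty closed convex set in a Hilbert space.

```python
import numpy as np

def project_ball(s, center, radius):
    """Projection of s onto the closed ball {a : ||a - center|| <= radius}."""
    d = s - center
    dist = np.linalg.norm(d)
    if dist <= radius:
        return s.copy()                       # s already belongs to the ball
    return center + (radius / dist) * d       # move s radially onto the sphere

def project_box(s, lower, upper):
    """Projection onto the box {a : lower <= a <= upper}: componentwise clipping."""
    return np.clip(s, lower, upper)

rng = np.random.default_rng(0)
s = np.array([3.0, 4.0])
p = project_ball(s, np.zeros(2), 1.0)
q = project_box(np.array([-2.0, 0.5, 7.0]), 0.0, 1.0)

# Variational inequality <s - Ps, a - Ps> <= 0 for sampled points a of the ball.
samples = [project_ball(2.0 * rng.normal(size=2), np.zeros(2), 1.0) for _ in range(200)]
vi_ok = all(np.dot(s - p, a - p) <= 1e-12 for a in samples)
```

Here `p` is `(0.6, 0.8)` and `q` is `(0.0, 0.5, 1.0)`.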

Lemma 1

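([17]). Let $\{\Gamma_n\}$ be a sequence of nonnegative real numbers such that there exists a subsequence $\{\Gamma_{n_j}\}$ of $\{\Gamma_n\}$ satisfying $\Gamma_{n_j} < \Gamma_{n_j+1}$ for all $j \in \mathbb{N}$. Then, there exists a nondecreasing sequence $\{\tau(n)\}$ of $\mathbb{N}$ such that $\lim_{n\to\infty}\tau(n) = \infty$ and the following properties are satisfied for all (sufficiently large) numbers $n \in \mathbb{N}$:

$$\Gamma_{\tau(n)} \le \Gamma_{\tau(n)+1}, \qquad \Gamma_n \le \Gamma_{\tau(n)+1}.$$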

Lemma 2

([2]). Let be a sequence of nonnegative real numbers such that

where with and satisfies or . Then, .

Lemma 3

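([18]). Assume $\{s_n\}$ is a sequence of nonnegative real numbers such that

$$s_{n+1} \le (1 - \gamma_n)s_n + \gamma_n \delta_n, \quad n \ge 0,$$

and

$$s_{n+1} \le s_n - \eta_n + \alpha_n, \quad n \ge 0,$$

where $\{\gamma_n\} \subset (0,1)$, $\{\eta_n\}$ is a sequence of nonnegative real numbers, and $\{\delta_n\}$ and $\{\alpha_n\}$ are real sequences such that:

- $\sum_{n=0}^{\infty} \gamma_n = \infty$;

- $\lim_{n\to\infty} \alpha_n = 0$;

- $\lim_{k\to\infty} \eta_{n_k} = 0$ implies $\limsup_{k\to\infty} \delta_{n_k} \le 0$ for any subsequence $\{n_k\}$ of $\{n\}$.

Then, $\lim_{n\to\infty} s_n = 0$.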

Proposition 1

([19]). Let H be a real Hilbert space. Let be fixed. Let and for all with . Then, we have

3. Main Results

In this section, we introduce a viscosity modification combined with the inertial parallel monotone algorithm for a finite family of nonexpansive mappings. Before proving the strong convergence theorem, we give the following definition.

Definition 1.

Let C be a nonempty subset of a real Hilbert space H. Let be nonexpansive mappings for all . Then, is said to satisfy Condition (A)

if, for each bounded sequence , there exists a sequence such that for all with implying that for all .

As an example of a family satisfying Condition (A), we can set , where , is an -inverse strongly monotone operator, is a maximal monotone operator, and satisfies the assumptions in Theorem 3.1 of [1]. Assume that the following conditions hold:

- C1.

- ;

- C2.

- , ;

- C3.

- , .

Theorem 1.

Let be defined by Algorithm 1, and let satisfy Condition (A) such that . Then, the sequence converges strongly to

Algorithm 1: Let C be a nonempty closed convex subset of a real Hilbert space H, and let be a contraction mapping. Let be nonexpansive mappings for all .

Suppose that with and , , and are sequences in . For , let be a sequence generated by , and define the following:

Step 1. Calculate the inertial step:

Step 2. Compute:

Step 3. Construct by

Step 4. Set , and go to Step 1.

Proof.

For each , we set to be defined by and

Let . Then, for each , we have

This shows that is bounded for all . From the definition of , there exist such that

Therefore, we obtain

By our assumptions and Lemma 2, we conclude that

By Proposition 1 and (2), we obtain

Setting implies that

Assume that ; we then show that . We consider two possible cases for the sequence :

Case 1. Suppose that there exists such that for all . This implies that exists. From (5), we have

From Condition (A), (6), and the boundedness of , we obtain

From Condition C3 and (7), this implies that

As and from (3), this implies that . Since satisfies Condition (A), for all . By the demiclosedness principle for nonexpansive mappings, we obtain .

Case 2. Suppose that there exists a subsequence of such that for all . In this case, it follows from Lemma 1 that there is a nondecreasing subsequence of N such that , and the following inequalities hold for all :

Similar to Case 1, we obtain . It is known that , which implies . Therefore, . We next show that . From (4), we see that

Since is bounded, there exists a subsequence of such that

For this purpose, assume that is a subsequence of such that . This implies that

From the definition of , we obtain

By Cases 1 and 2, there exists a subsequence of such that

and

Since is bounded, there exists a subsequence of converging weakly to some . Without loss of generality, we replace by , and we have

Since , . From (11), we obtain

Therefore, by Lemma 3 and (5), . Since , this implies that . This completes the proof. □
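To make the structure of a parallel inertial viscosity scheme concrete, here is a minimal Python sketch. It is not Algorithm 1 itself: the inertial rule, the relaxation parameter `beta`, the choice of the candidate farthest from the inertial point (in the spirit of parallel monotone methods), and $\alpha_n = 1/(n+1)$ are all assumptions for illustration. The usage example takes two projections onto lines in $\mathbb{R}^2$ whose only common fixed point is $(1, 1)$.

```python
import numpy as np

def parallel_inertial_viscosity(T_list, f, x0, x1, n_iter=5000, theta=0.5, beta=0.5):
    """Hypothetical parallel inertial viscosity iteration (illustration only).

    w_n     = x_n + theta_n (x_n - x_{n-1})            inertial step
    y_n^i   = (1 - beta) w_n + beta T_i w_n, i=1..N     independent, parallelizable
    y_n     = the y_n^i farthest from w_n               parallel-monotone-style choice
    x_{n+1} = alpha_n f(x_n) + (1 - alpha_n) y_n        viscosity step
    """
    x_prev, x = np.asarray(x0, dtype=float), np.asarray(x1, dtype=float)
    for n in range(1, n_iter + 1):
        alpha = 1.0 / (n + 1)               # alpha_n -> 0 and sum alpha_n = infinity
        eps = 1.0 / (n + 1) ** 2            # summable bound on the inertial term
        diff = np.linalg.norm(x - x_prev)
        theta_n = theta if diff == 0.0 else min(theta, eps / diff)
        w = x + theta_n * (x - x_prev)
        candidates = [(1.0 - beta) * w + beta * T(w) for T in T_list]
        y = max(candidates, key=lambda v: np.linalg.norm(v - w))
        x_prev, x = x, alpha * f(x) + (1.0 - alpha) * y
    return x

T1 = lambda v: np.array([1.0, v[1]])     # projection onto the line {x : x_1 = 1}
T2 = lambda v: np.array([v[0], 1.0])     # projection onto the line {x : x_2 = 1}
f = lambda v: 0.5 * v                    # contraction with constant 1/2
x = parallel_inertial_viscosity([T1, T2], f, [5.0, -3.0], [4.0, -2.0])
```

The candidates $y_n^i$ do not depend on each other, which is where parallel evaluation would enter; the sketch evaluates them sequentially for simplicity.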

4. Numerical Experiments

In this section, we apply our algorithm to linear systems and differential problems. All computational experiments were written in MATLAB R2022b and run on an Intel(R) Core(TM) i7-9700 CPU @ 3.00 GHz with 8 cores and 8 logical processors.

4.1. Linear System Problems

We now consider the linear system:

where is a linear and positive operator and . Then, the linear system (12) has a unique solution. There are many different ways of rearranging Equation (12) into the form of a fixed point equation . For example, the well-known weighted Jacobi (WJ) and successive over-relaxation (SOR) methods [12,20,21] rewrite the linear system (12) as the fixed point equations and .

In Table 1, is the weight parameter, D is the diagonal part of matrix A, and L is the lower triangular part of matrix .

Table 1.

The different ways of rearranging the linear systems (12) into the form .

Setting , where , we can see that

where S is an operator with . We write the operators and in the form , where

and where

It follows from (13) that and are nonexpansive mappings when their weight parameters are appropriately chosen. The weight parameters implemented for the operators of the WJ and SOR methods give a norm less than one. Moreover, the optimal weight parameter that yields the smallest norm for each type of operator is indicated in Table 2.
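The rearrangement into fixed point form can be checked numerically. The sketch below assumes the splitting $A = D + L + U$ with $L$ the strictly lower triangular part of $A$ itself (if Table 1 uses $A = D - L - U$, replace $L$ by $-L$) and a hypothetical tridiagonal test matrix, not the matrix of (16). It builds the affine WJ and SOR maps $T(x) = Mx + c$ and verifies that the solution of $Ax = b$ is a fixed point. Since $T$ is affine, it is nonexpansive in the Euclidean norm exactly when the spectral norm of $M$ is at most one.

```python
import numpy as np

def laplacian_1d(n):
    """Hypothetical SPD test matrix tridiag(-1, 2, -1)."""
    return 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)

def wj_operator(A, b, omega):
    """Weighted Jacobi map T(x) = x - omega D^{-1}(Ax - b), returned as (M, c), T(x) = Mx + c."""
    D_inv = np.diag(1.0 / np.diag(A))
    M = np.eye(len(b)) - omega * D_inv @ A
    return M, omega * D_inv @ b

def sor_operator(A, b, omega):
    """One SOR sweep T(x) = x - omega (D + omega L)^{-1}(Ax - b), L = strictly lower part of A."""
    P_inv = np.linalg.inv(np.diag(np.diag(A)) + omega * np.tril(A, -1))
    M = np.eye(len(b)) - omega * P_inv @ A
    return M, omega * P_inv @ b

n = 10
A, b = laplacian_1d(n), np.ones(n)
x_star = np.linalg.solve(A, b)
M_wj, c_wj = wj_operator(A, b, 1.0)
M_gs, c_gs = sor_operator(A, b, 1.0)     # SOR with omega = 1 is Gauss-Seidel

# Spectral norms of the linear parts; T is nonexpansive iff ||M||_2 <= 1.
norm_wj = np.linalg.norm(M_wj, 2)
norm_gs = np.linalg.norm(M_gs, 2)
```

For this test matrix with $\omega = 1$, `norm_wj` equals $\cos(\pi/11) < 1$; the same check applies to `norm_gs` or to any other weight.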

Table 2.

Implemented weight parameter and optimal weight parameter of operator S.

The parameters and are the maximum and minimum eigenvalues of matrix , respectively, and is the spectral radius of the iteration matrix of the Jacobi method ( with ). Thus, we can convert this linear system into fixed point equations to obtain the solution of the linear system (12).

where is the common solution of Equation (14). Using the nonexpansive mapping , we propose a new parallel iterative method for finding the common solution of Equation (14). The sequence is generated iteratively from two initial points and

where are appropriate real sequences in and f is a contraction mapping. The following stopping criterion is employed:

and after that, set and .
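Assuming Table 2 lists the classical formulas, the optimal weights can be computed as $\omega_{WJ} = 2/(\lambda_{\max} + \lambda_{\min})$ for the eigenvalues of $D^{-1}A$ and, by Young's theorem, $\omega_{SOR} = 2/(1 + \sqrt{1 - \rho_J^2})$, the latter valid for consistently ordered matrices with real Jacobi eigenvalues and $\rho_J < 1$ (e.g., tridiagonal SPD matrices). For the hypothetical test matrix tridiag(−1, 2, −1) of size $n$, these give $\omega_{WJ} = 1$ and $\omega_{SOR} = 2/(1 + \sin(\pi/(n+1)))$.

```python
import numpy as np

def optimal_weights(A):
    """Classical optimal relaxation parameters (assumed to correspond to Table 2).

    WJ : omega = 2 / (lambda_max + lambda_min), eigenvalues of D^{-1} A
    SOR: omega = 2 / (1 + sqrt(1 - rho_J**2)), rho_J = spectral radius of I - D^{-1} A
    """
    DinvA = A / np.diag(A)[:, None]            # divide row i by a_ii, i.e. D^{-1} A
    lam = np.linalg.eigvals(DinvA).real
    omega_wj = 2.0 / (lam.max() + lam.min())
    rho_j = np.max(np.abs(np.linalg.eigvals(np.eye(len(A)) - DinvA)))
    omega_sor = 2.0 / (1.0 + np.sqrt(1.0 - rho_j ** 2))
    return omega_wj, omega_sor, rho_j

n = 20
A = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)   # hypothetical test matrix
omega_wj, omega_sor, rho_j = optimal_weights(A)
```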

Next, the proposed method (15) was compared with the well-known WJ, SOR, and Gauss–Seidel (GS, i.e., the SOR method with ) methods for solving the linear system:

and , with . For simplicity, in the proposed method (15) we take , and the nonexpansive mappings T are chosen from , and , and . The results of the WJ, GS, SOR, and proposed methods are given for the following cases:

- Case 1.

- The proposed method with ;

- Case 2.

- The proposed method with ;

- Case 3.

- The proposed method with ;

- Case 4.

- The proposed method with –;

- Case 5.

- The proposed method with –;

- Case 6.

- The proposed method with –;

- Case 7.

- The proposed method with ––.

These cases are demonstrated and discussed for solving the linear system (16). The weight parameter of the proposed methods is set to its optimal value () given in Table 2. We used the following parameters:

, and

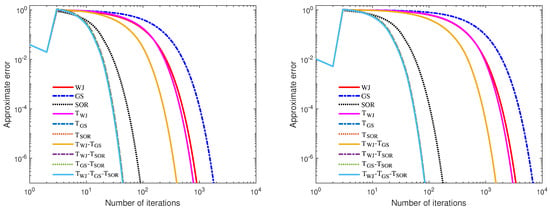

where is the number of iterations at which we want to stop with . The estimated error per iteration step for all cases was measured by using the relative error . Figure 1 shows the estimated error per iteration step for all cases with and .
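A minimal sketch of the comparison protocol for the classical methods: each is run as the fixed point iteration $x_{k+1} = Tx_k$ from $x_0 = 0$ until the relative error $\|x_k - x^*\|/\|x^*\|$ falls below a tolerance. The test matrix, right-hand side, tolerance $10^{-6}$, and iteration cap are hypothetical choices, not those of (16)–(19), and the proposed parallel method is not included.

```python
import numpy as np

def relaxed_operator(A, b, omega, use_lower):
    """(M, c) for T(x) = x - omega P^{-1}(Ax - b): P = D gives WJ, P = D + omega L gives SOR."""
    P = np.diag(np.diag(A)) + (omega * np.tril(A, -1) if use_lower else 0.0)
    P_inv = np.linalg.inv(P)
    return np.eye(len(b)) - omega * P_inv @ A, omega * P_inv @ b

def iterations_to_tol(M, c, x_star, tol=1e-6, max_iter=10_000):
    """Iterate x_{k+1} = M x_k + c from x_0 = 0 until ||x_k - x*|| / ||x*|| < tol."""
    x = np.zeros_like(c)
    for k in range(1, max_iter + 1):
        x = M @ x + c
        if np.linalg.norm(x - x_star) / np.linalg.norm(x_star) < tol:
            return k
    return max_iter

n = 20
A = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)   # hypothetical test matrix
b = np.ones(n)
x_star = np.linalg.solve(A, b)
omega_sor = 2.0 / (1.0 + np.sin(np.pi / (n + 1)))        # optimal SOR weight for this A
counts = {
    "WJ": iterations_to_tol(*relaxed_operator(A, b, 1.0, False), x_star),
    "GS": iterations_to_tol(*relaxed_operator(A, b, 1.0, True), x_star),
    "SOR": iterations_to_tol(*relaxed_operator(A, b, omega_sor, True), x_star),
}
```

For this matrix, the counts follow the familiar ordering SOR < GS < WJ.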

Figure 1.

Relative error of the GS, WJ, and SOR methods and all cases of the proposed methods in solving the problem (16) with and , respectively.

The trend of the number of iterations for the WJ, GS, and SOR methods and all case studies of the proposed methods in solving the linear system (16) with and is shown in Figure 2.

Figure 2.

The progression in the number of iterations for the GS, WJ, and SOR methods and the proposed methods in solving the problem (12) with and , respectively.

Figure 1 and Figure 2 show that, in terms of convergence speed and number of iterations, the proposed method using was better than the WJ method, the proposed method with was better than the GS method, and the proposed method with was better than the SOR method. We also found that, when the proposed method with was used (parallel algorithm), its number of iterations was determined by the minimum number of iterations of the nonparallel proposed methods. That is, Figure 2 shows that the number of iterations of the proposed method with – was the same as that of the proposed method with , and the number of iterations of the proposed methods with –, –, and –– was the same as that of the proposed method with . Consequently, the parallel algorithms that use as a component (the proposed methods with –, –, and ––) give the fastest convergence.

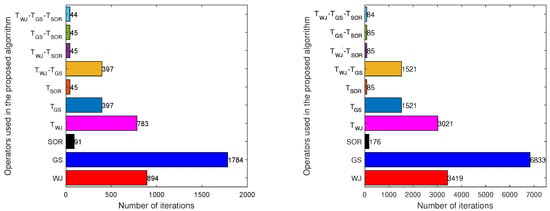

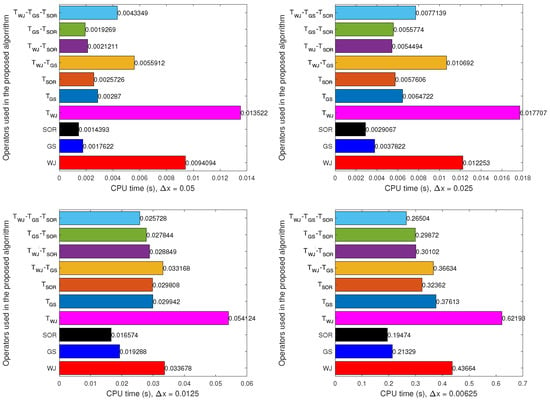

Figure 3 shows that the SOR method had the best CPU time. However, as the size of matrix A increased, the CPU time of the proposed parallel method with –– approached that of the SOR method and was better than that of the other methods.

Figure 3.

The progression of the CPU time for the GS, WJ, and SOR methods and the proposed methods in solving the problem (12) with and , respectively.

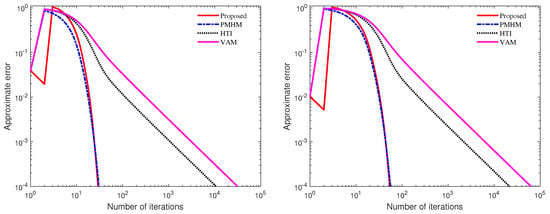

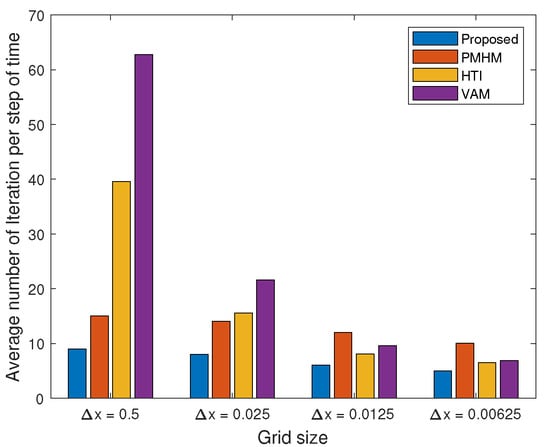

Next, we compare the proposed algorithm with the PMHM, HTI, and VAM (where is the W-mapping introduced by Shimoji and Takahashi [22], with ). For the parameter in the HTI and VAM, we chose . Let in the VAM algorithm and in the HTI algorithm. The results are reported in Table 3 and Figure 4.
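The W-mapping used in the HTI and VAM comparisons can be sketched for a finite family with the recursion $U_{N+1} = I$, $U_k = \lambda_k T_k U_{k+1} + (1 - \lambda_k) I$ for $k = N, \dots, 1$, and $W = U_1$. The values $\lambda_k = 1/2$, the two projections, the anchor $u = 0$, and $\alpha_n = 1/(n+1)$ in the Halpern-type loop are assumptions for illustration, not the paper's settings.

```python
import numpy as np

def w_mapping(T_list, lambdas):
    """Finite W-mapping (sketch): U_{N+1} = I, U_k = lambda_k T_k U_{k+1} + (1 - lambda_k) I, W = U_1."""
    def W(x):
        u = x                                   # U_{N+1} x = x
        for T, lam in zip(reversed(T_list), reversed(lambdas)):
            u = lam * T(u) + (1.0 - lam) * x    # U_k x computed from U_{k+1} x
        return u
    return W

T1 = lambda v: np.array([1.0, v[1]])   # projection onto {x : x_1 = 1}
T2 = lambda v: np.array([v[0], 1.0])   # projection onto {x : x_2 = 1}
W = w_mapping([T1, T2], [0.5, 0.5])    # hypothetical lambda_k = 1/2

# Halpern-type iteration x_{n+1} = alpha_n u + (1 - alpha_n) W x_n with anchor u = 0.
u_anchor, x = np.zeros(2), np.array([5.0, -3.0])
for n in range(1, 2001):
    alpha = 1.0 / (n + 1)
    x = alpha * u_anchor + (1.0 - alpha) * W(x)
```

With $\lambda_k \in (0,1)$, the fixed points of $W$ are the common fixed points of the family, here only $(1, 1)$, so the Halpern-type iterate approaches that point.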

Table 3.

The progression of the CPU time for the linear system problem.

Figure 4.

The progression of the number of iterations for the proposed methods and the PMHM, HTI, and VAM in solving the problem (12) with and , respectively.

4.2. Differential Problems

Consider the following simple and well-known periodic heat problem with Dirichlet boundary conditions (DBCs) and initial data:

where is constant, represents the temperature at points and , and and are sufficiently smooth functions. Below, we use the notations and to represent the approximate numerical values of and , and , where denotes the size of the temporal mesh. The following well-known Crank–Nicolson-type scheme [12,21] is the foundation for a set of schemes used to solve the heat problem (20):

with initial data:

and DBCs:

To approximate the term , we used the standard centered difference in space. The matrix form of the well-known second-order finite difference scheme (FDS) for solving the heat problem (20) can be written as

where :

, , and , . According to Equation (24), matrix A is square, symmetric, and positive definite. This scheme uses a three-point stencil and is second-order accurate in both time and space. The scheme (21) is consistent with the problem (20). The necessary and sufficient condition for the stability of the scheme (21) is (see [12]).
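As a sketch of how a Crank–Nicolson matrix system of this kind arises, the code below assumes the heat equation $u_t = \beta u_{xx}$ on $[0, 1]$ with homogeneous Dirichlet data and $u(x, 0) = \sin(\pi x)$, whose exact solution is $e^{-\beta\pi^2 t}\sin(\pi x)$; this test problem and all parameter values are assumptions, not necessarily problem (27). With $r = \beta\Delta t/\Delta x^2$, the scheme reads $A u^{n+1} = B u^n$ with tridiagonal $A$ and $B$. Here `np.linalg.solve` serves only as a reference solver in place of the iterative methods.

```python
import numpy as np

def crank_nicolson_matrices(m, r):
    """Crank-Nicolson matrices on m interior nodes for u_t = beta u_xx, r = beta dt / dx^2.

    A u^{n+1} = B u^n with A = tridiag(-r/2, 1 + r, -r/2), B = tridiag(r/2, 1 - r, r/2).
    Homogeneous Dirichlet data are assumed, so no boundary terms appear on the right.
    """
    K = np.eye(m, k=1) + np.eye(m, k=-1)
    A = (1.0 + r) * np.eye(m) - 0.5 * r * K
    B = (1.0 - r) * np.eye(m) + 0.5 * r * K
    return A, B

beta, n_nodes, dt, t_end = 1.0, 101, 1e-3, 0.1     # hypothetical test setting
x = np.linspace(0.0, 1.0, n_nodes)
dx = x[1] - x[0]
A, B = crank_nicolson_matrices(n_nodes - 2, beta * dt / dx**2)
u = np.sin(np.pi * x[1:-1])                         # interior values of u(x, 0)
for _ in range(int(round(t_end / dt))):
    u = np.linalg.solve(A, B @ u)                   # one step: solve A u^{n+1} = B u^n
exact = np.exp(-beta * np.pi**2 * t_end) * np.sin(np.pi * x[1:-1])
max_err = np.max(np.abs(u - exact))
```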

The discretization of the considered problem (24) has traditionally been solved using iterative methods. Here, the well-known WJ and SOR methods were chosen as examples (see Table 4).

Table 4.

The specific forms of and for solving the discretization of the considered problem (24).

The weight parameter is ; D is the diagonal part of matrix A; L is the lower triangular part of matrix . Moreover, the optimal weight parameter is given by the same formula as in Table 2. The time step sizes play an important role in the stability of the WJ and SOR methods for solving the linear systems (24) generated from the discretization of the considered problem (20). The stability of the WJ and SOR methods for the linear systems (24) is discussed in [20,21].

Let us consider the linear system:

where is a linear and positive operator and . We transform this linear system into a fixed point equation to determine the solution of the linear system (25). For example, the well-known WJ, SOR, and GS approaches rewrite the linear system (25) as a fixed point equation (see Table 5).

Table 5.

The alternative method of rearranging the linear systems (25) into the form .

We introduce a new parallel iterative method using the nonexpansive mapping . The sequence is generated iteratively from two initial points and

where the second superscript “s”, , denotes the number of iterations, are appropriate real sequences in , and f is a contraction mapping. The following stopping criteria were employed:

where denotes the last iteration at time , and then, we set

Next, the proposed method (26) for solving the problem (24), generated from the discretization of the heat problem (20) with DBCs and initial data, was compared with the well-known WJ, GS, and SOR methods with their optimal parameters. The proposed methods (26) with and with the nonexpansive mappings T chosen from , and were compared.

Let us consider the simple heat problems:

The results of the WJ, GS, and SOR methods were compared with all the case studies of the proposed methods, which are the same as those in Section 4.1. Because we focused on the convergence of the proposed method, the stability analysis for selecting the time step sizes is not described in depth. The time step size of the proposed methods was set to the smallest step size selected for the WJ and SOR methods in solving the problem (24) obtained from the discretization of the considered problem (27).

All computations were carried out on a uniform grid of N nodes, which corresponds to the solution of the problem (24) with sizes of matrix A and . The weight parameter of the proposed method is defined as the optimum weight parameter () in Table 2.

We used , , , the default parameters , and the function f set as Equations (17)–(19) and

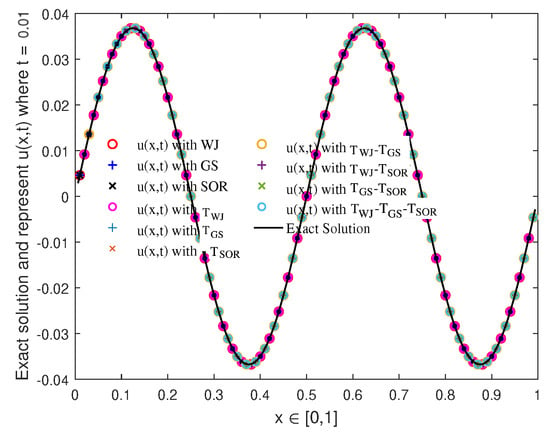

where is the number of iterations at which we want to stop. For testing purposes only, all computations were carried out for (when ). Figure 5 shows the approximate solution of the problem (27) with 101 nodes at by using the WJ, GS, and SOR methods and the proposed methods.

Figure 5.

Approximate solutions of the GS, WJ, and SOR methods and all cases of the proposed methods in solving the problem (27) with 101 nodes.

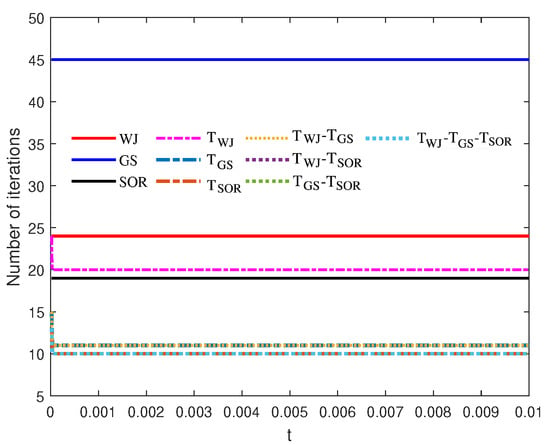

It can be seen from Figure 5 that all numerical solutions matched the analytical solution reasonably well. Figure 6 shows the trend of the iteration number for the WJ, GS, and SOR methods and the proposed methods in solving the problem (24) generated from the discretization of the considered problem (27) with 101 nodes. It was found that the proposed method with was better than the WJ method, the proposed method with was better than the GS method, and the proposed method with was better than the SOR method when the number of iterations was compared.

Figure 6.

The evolution of the number of iterations for the WJ, GS, and SOR methods and the proposed methods in solving the problem (20) with 101 nodes and .

We see that the number of iterations of the proposed method with depends on the minimum number of iterations of the nonparallel proposed methods used. That is, the number of iterations of the proposed method at each time step with – was the same as the proposed method with , and the number of iterations at each time step of the proposed methods with –, –, and –– was the same as the proposed method with .

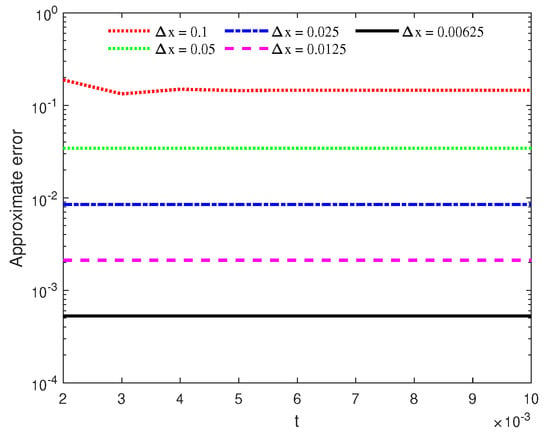

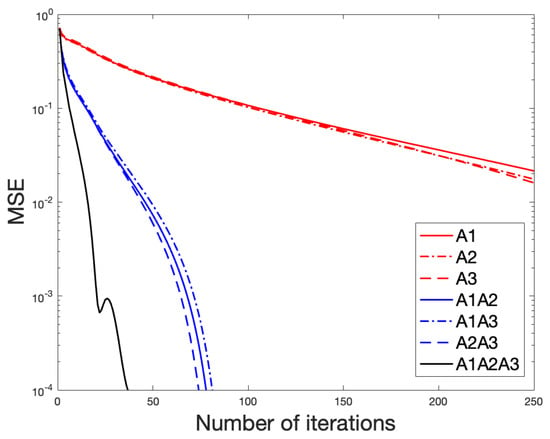

Next, the proposed method with –– was used to solve the problem (24) generated from the discretization of the considered problem (27), in order to verify the order of accuracy of the presented FDS for the heat problem. All computations were carried out on uniform grids of 11, 21, 41, 81, and 161 nodes, which correspond to the discretization of the heat problem (27) with = 0.1, 0.05, 0.025, 0.0125, and 0.00625, respectively. Figure 7 shows the evolution of the relative error at each time step, after the iteration reached the accepted tolerance, for the numerical solution of the heat problem with various grid sizes.

Figure 7.

The evolution of the relative error in obtaining the numerical solution of the problem (27) with various grid sizes by using the proposed method with ––.

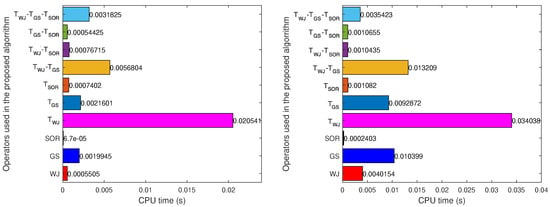

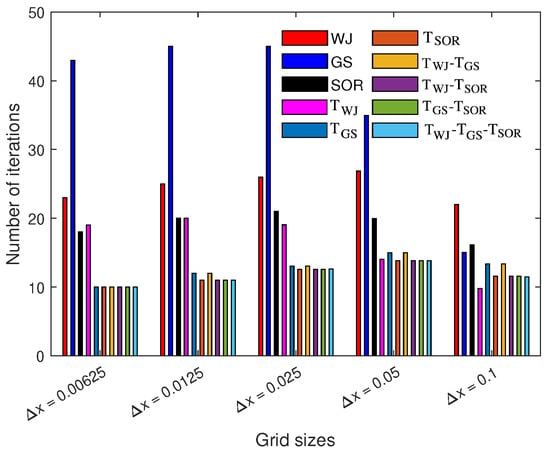

Examining the spacing between the error curves for the different grid sizes shows that the proposed method using –– has second-order accuracy. That is, the order of accuracy of the proposed method using –– agrees with that of the FDS construction. Figure 8 shows the trend of the iteration number for the WJ, GS, and SOR methods compared with all cases of the proposed methods in solving the discretization of the considered problem (27) with varying grid sizes.
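The observed order of accuracy can be estimated from the maximum errors on successively halved grids as $p \approx \log_2(e_h / e_{h/2})$. The sketch below assumes the test problem $u_t = u_{xx}$ on $[0, 1]$ with $u(x, 0) = \sin(\pi x)$, zero Dirichlet data, and final time $t = 0.1$, and refines $\Delta t$ together with $\Delta x$ (here $\Delta t = \Delta x$, a hypothetical choice) so that both the time and space errors scale like $h^2$. It uses a direct solve rather than the proposed iterative method.

```python
import numpy as np

def cn_max_error(n_nodes, t_end=0.1, beta=1.0):
    """Max nodal error of Crank-Nicolson for u_t = beta u_xx on [0, 1] with
    u(x, 0) = sin(pi x), u(0, t) = u(1, t) = 0, refining dt together with dx."""
    x = np.linspace(0.0, 1.0, n_nodes)
    dx = x[1] - x[0]
    dt = dx                                   # hypothetical choice: dt proportional to dx
    r = beta * dt / dx**2
    m = n_nodes - 2                           # number of interior nodes
    K = np.eye(m, k=1) + np.eye(m, k=-1)
    A = (1.0 + r) * np.eye(m) - 0.5 * r * K
    B = (1.0 - r) * np.eye(m) + 0.5 * r * K
    u = np.sin(np.pi * x[1:-1])
    for _ in range(int(round(t_end / dt))):
        u = np.linalg.solve(A, B @ u)
    exact = np.exp(-beta * np.pi**2 * t_end) * np.sin(np.pi * x[1:-1])
    return np.max(np.abs(u - exact))

nodes = [11, 21, 41, 81, 161]
errors = [cn_max_error(n) for n in nodes]
# Observed order between consecutive grids: p = log2(e_h / e_{h/2}).
orders = [np.log2(e_coarse / e_fine) for e_coarse, e_fine in zip(errors, errors[1:])]
```

The estimated orders should cluster near 2, which is the behavior that the spacing of the error curves in Figure 7 is described as showing.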

Figure 8.

The evolution of the iteration number for the GS, WJ, and SOR methods and the proposed methods in solving the problem (20) with and .

It can be seen that, when the grid size was small, the parallel algorithm in which the or was used as its partial components gave us the lowest number of iterations under the accepted tolerance.

Figure 9 shows that the SOR method had the best CPU time. However, as the size of matrix A increased, the CPU time of the proposed parallel method with –– approached that of the SOR method and was better than that of the other methods.

Figure 9.

The evolution of the CPU time for the GS, WJ, and SOR methods and the proposed method in solving problem (20) with and .

Next, we compare the proposed algorithm with the PMHM, HTI, and VAM (where is the W-mapping introduced by Shimoji and Takahashi [22], with ). For the parameter in the HTI and VAM, we chose . Let in the VAM algorithm and in the HTI algorithm. The results are reported in Figure 10 and Figure 11.

Figure 10.

The average number of iterations for the proposed methods and the PMHM, HTI, and VAM.

Figure 11.

The CPU time for the proposed methods and the PMHM, HTI, and VAM.

From Figure 10 and Figure 11, we see that the CPU time and number of iterations of the proposed algorithm were better than the PMHM, HTI, and VAM.

Moreover, our method can be applied to many real-world problems, such as image and signal processing, optimal control, regression, and classification problems, by setting as the proximal gradient operator. Therefore, we present examples of signal recovery in the next subsection.

4.3. Signal Recovery

In this part, we present some numerical examples of the signal recovery by the proposed methods. A signal recovery problem can be modeled as the following underdetermined linear equation system:

where is the original signal and is the observed signal, which is degraded by the filter matrix and noise . It is well known that the problem (29) can be solved via the LASSO problem:

where . Various techniques and iterative schemes have been developed over the years to solve the LASSO problem; see [15,16]. In this case, we set , where and . It is known that T is a nonexpansive mapping when , where L is the Lipschitz constant of .
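For the LASSO problem written as $\min_u \frac{1}{2}\|Au - y\|^2 + \lambda\|u\|_1$ (the scaling of the data term is an assumption), the forward-backward operator $T(u) = \mathrm{prox}_{c\lambda\|\cdot\|_1}(u - cA^T(Au - y))$ is nonexpansive for $0 < c \le 2/L$ with $L = \|A\|_2^2$. The sketch below builds $T$ with soft thresholding, and the plain iteration $u_{k+1} = Tu_k$ (ISTA) is run with $c = 1/L$, for which the objective decreases monotonically. The random data and $\lambda = 0.1$ are hypothetical.

```python
import numpy as np

def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1: componentwise shrinkage toward zero."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def forward_backward_operator(A, y, lam, c):
    """T(u) = prox_{c*lam*||.||_1}(u - c * A^T (A u - y)) for 0.5||Au - y||^2 + lam ||u||_1."""
    def T(u):
        return soft_threshold(u - c * (A.T @ (A @ u - y)), c * lam)
    return T

def lasso_objective(A, y, lam, u):
    """Value of 0.5 ||Au - y||^2 + lam ||u||_1."""
    return 0.5 * np.sum((A @ u - y) ** 2) + lam * np.sum(np.abs(u))

rng = np.random.default_rng(0)
A = rng.standard_normal((30, 60))
y = rng.standard_normal(30)
lam = 0.1
L = np.linalg.norm(A, 2) ** 2        # Lipschitz constant of the gradient A^T(Au - y)
T = forward_backward_operator(A, y, lam, c=1.0 / L)

# Plain fixed point iteration u_{k+1} = T u_k (ISTA), recording the objective.
u = np.zeros(60)
objective = [lasso_objective(A, y, lam, u)]
for _ in range(300):
    u = T(u)
    objective.append(lasso_objective(A, y, lam, u))
```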

Our goal is to remove noise without knowing the type of filter and noise. Thus, we are interested in the following problem:

where u is the original signal, is a bounded linear operator, and is an observed signal with noise for all

We can apply Algorithm 1 to solve the problem (31) by setting .

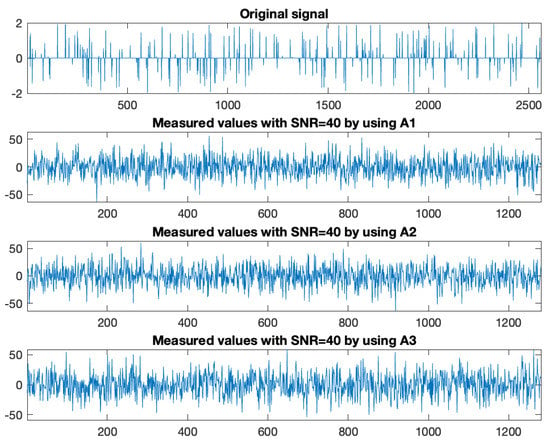

In our experiment, the sparse vector was generated by the uniform distribution in [−2, 2] with n nonzero elements. were generated by the normal distribution matrix , respectively, with white Gaussian noise such that the signal-to-noise ratio (SNR) = 40. The initial point was picked randomly. We used the mean-squared error (MSE) for estimating the restoration accuracy, which is defined as follows:

where is the estimated signal of u.
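The data generation and error measure described above can be sketched as follows. The sketch assumes that the SNR is measured in decibels as $20\log_{10}(\|Au\|/\|\text{noise}\|)$ and that the MSE is $\frac{1}{N}\|u - u^*\|^2$; the sizes $N$, $k$, $M$ and the random seed are hypothetical.

```python
import numpy as np

def make_sparse_signal(N, k, rng):
    """Length-N signal with k nonzeros drawn uniformly from [-2, 2] at random positions."""
    u = np.zeros(N)
    idx = rng.choice(N, size=k, replace=False)
    u[idx] = rng.uniform(-2.0, 2.0, size=k)
    return u

def add_noise_at_snr(clean, snr_db, rng):
    """Add white Gaussian noise scaled so that 20 log10(||clean|| / ||noise||) = snr_db."""
    noise = rng.standard_normal(clean.shape)
    noise *= np.linalg.norm(clean) / (np.linalg.norm(noise) * 10 ** (snr_db / 20))
    return clean + noise

def mse(u, u_hat):
    """Mean-squared error (1/N) ||u - u_hat||^2 (assumed normalization)."""
    return np.mean((u - u_hat) ** 2)

rng = np.random.default_rng(0)
N, k, M = 512, 20, 256                     # hypothetical sizes
u = make_sparse_signal(N, k, rng)
A = rng.standard_normal((M, N))            # Gaussian filter matrix
y = add_noise_at_snr(A @ u, 40.0, rng)     # observation with SNR = 40 dB
snr = 20 * np.log10(np.linalg.norm(A @ u) / np.linalg.norm(y - A @ u))
```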

In what follows, let the step size parameter be for all , and let the contraction mapping be defined by . We study the convergence behavior of the sequence when

where K is the number of iterations at which we want to stop.

The iterative scheme was varied by choosing different in the following cases:

- Case 1.

- ;

- Case 2.

- ;

- Case 3.

- ;

- Case 4.

- ;

- Case 5.

- ;

- Case 6.

- .

We set the maximum number of iterations to 10,000, and in all cases, we set , , and . The results are reported in Table 6.

Table 6.

The convergence of Algorithm 1 with each for parameter .

Table 6 shows that the parameter affects the number of iterations and the CPU time of our algorithm more than the other . Furthermore, in all cases, inputting required fewer iterations and less CPU time than inputting and . This means that the efficiency of the proposed algorithm improves as the number of subproblems increases.

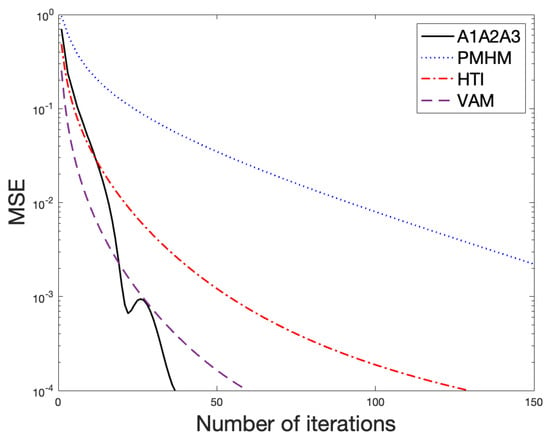

Next, we compare the proposed algorithm with the PMHM, HTI, and VAM (where is the W-mapping introduced by Shimoji and Takahashi [22], with ). We set the parameter in the PMHM algorithm as . The parameters in the HTI and VAM were chosen as and . Let in the VAM algorithm and in the HTI algorithm. We plot the number of iterations versus the mean-squared error (MSE), as well as the original signal, observation data, and recovered signal, for one case with , and . The results are reported in Table 7.

Table 7.

The computational results for solving the LASSO problem.

From Table 7, the MSE values show that our algorithm using the parallel method was faster than the PMHM, HTI, and VAM in terms of both the number of iterations and the CPU time.

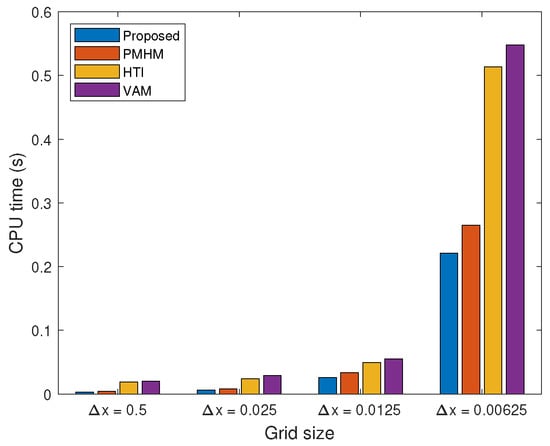

From Figure 12, it is shown that the MSE value of Algorithm 1 with decreased faster than that of Algorithm 1 with , and that with decreased faster than that of Algorithm 1 with .

Figure 12.

The graphs of the MSE for Algorithm 1 with the input .

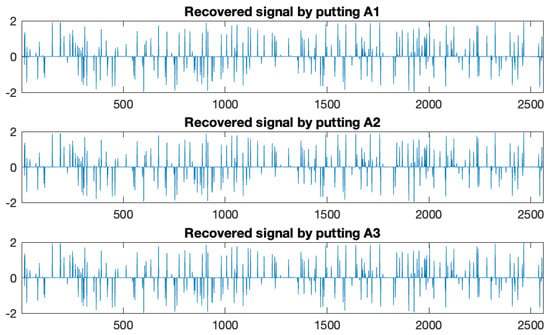

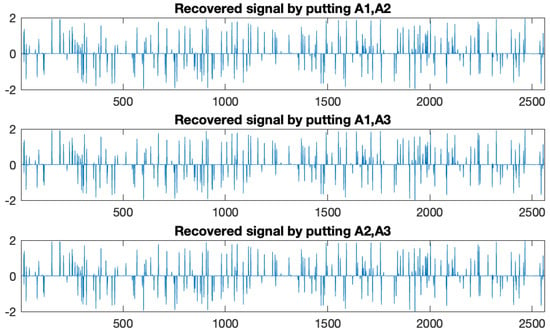

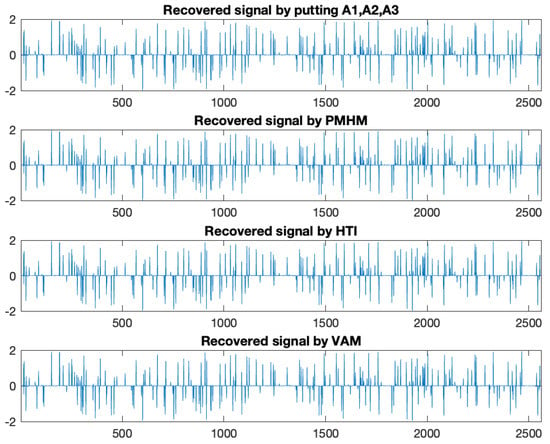

The original signal, observation data, and recovered signal are shown in Figure 13, Figure 14, Figure 15 and Figure 16.

Figure 13.

The original signal size , and 250 spikes and the measured values with , SNR = 40, respectively.

Figure 14.

The recovered signal with by A1 (503 Iter, CPU = 1.6346), A2 (474 Iter, CPU = 1.5908), and A3 (427 Iter, CPU = 1.3930), respectively.

Figure 15.

The recovered signal with by A1A2 (78 Iter, CPU = 1.5538), A1A3 (82 Iter, CPU = 1.3578), and A2A3 (75 Iter, CPU = 1.2515), respectively.

Figure 16.

The recovered signal with by A1A2A3 (37 Iter, CPU = 1.8094), PMHM (264 Iter, CPU = 2.4789), HTI (130 Iter, CPU = 2.0365), and VAM (60 Iter, CPU = 2.0194), respectively.

From Figure 16 and Figure 17, it is shown that Algorithm 1 with A1A2A3 converged faster than the PMHM, HTI, and VAM.

Figure 17.

The graphs of the MSE for Algorithm 1 with A1A2A3 and the PMHM algorithm, respectively.

5. Conclusions

In this work, we introduced a viscosity modification combined with the parallel monotone algorithm for a finite family of nonexpansive mappings and established a strong convergence theorem. We provided numerical experiments of our algorithm for solving linear system problems and differential problems, which showed the efficiency of the proposed algorithm. In the signal recovery problem, our algorithm showed better convergence behavior than the other algorithms. In the future, the proposed algorithm could be extended to generalized nonexpansive mappings and applied to many real-world problems, such as image and signal processing, optimal control, regression, and classification problems.

Author Contributions

Funding acquisition and supervision, S.S.; writing—review and editing, W.C.; writing—original draft, K.K.; software, D.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by Chiang Mai University, Thailand and the NSRI via the program Management Unit for Human Resources & Institutional Development, Research and Innovation (Grant No. B05F640183). W. Cholamjiak was supported by the Thailand Science Research and Innovation Fund and University of Phayao (Grant No. FF66-UoE). D. Yambangwai was supported by the School of Science, University of Phayao (Grant No. PBTSC65008).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to thank reviewers and the editor for valuable comments for improving the original manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Cholamjiak, W.; Dutta, H. Viscosity modification with parallel inertial two steps forward-backward splitting methods for inclusion problems applied to signal recovery. Chaos Solitons Fractals 2022, 157, 111858.

- Osilike, M.O.; Aniagbosor, S.C. Weak and strong convergence theorems for fixed points of asymptotically nonexpansive mappings. Math. Comput. Model. 2000, 32, 1181–1191.

- Browder, F.E. Convergence of approximants to fixed points of nonexpansive nonlinear mappings in Banach spaces. Arch. Ration. Mech. Anal. 1967, 24, 82–90.

- Halpern, B. Fixed points of nonexpanding maps. Bull. Am. Math. Soc. 1967, 73, 957–961.

- Moudafi, A. Viscosity approximation methods for fixed-points problems. J. Math. Anal. Appl. 2000, 241, 46–55.

- Kankam, K.; Cholamjiak, P. Strong convergence of the forward–backward splitting algorithms via linesearches in Hilbert spaces. Appl. Anal. 2021, 1–20.

- Xu, H.K. Viscosity approximation methods for nonexpansive mappings. J. Math. Anal. Appl. 2004, 298, 279–291.

- Aoyama, K.; Kimura, Y. Viscosity approximation methods with a sequence of contractions. Cubo 2014, 16, 9–20.

- Polyak, B.T. Some methods of speeding up the convergence of iteration methods. USSR Comput. Math. Math. Phys. 1964, 4, 1–17.

- Nesterov, Y.E. A method for solving the convex programming problem with convergence rate O(1/k²). Dokl. Akad. Nauk SSSR 1983, 269, 543–547.

- Maingé, P.E. Inertial iterative process for fixed points of certain quasi-nonexpansive mappings. Set-Valued Anal. 2007, 15, 67–79.

- Yambangwai, D.; Moshkin, N. Deferred correction technique to construct high-order schemes for the heat equation with Dirichlet and Neumann boundary conditions. Eng. Lett. 2013, 21, 61–67.

- Anh, P.K.; Van Hieu, D. Parallel and sequential hybrid methods for a finite family of asymptotically quasi ϕ-nonexpansive mappings. J. Appl. Math. Comput. 2015, 48, 241–263.

- Khatibzadeh, H.; Ranjbar, S. Halpern type iterations for strongly quasi-nonexpansive sequences and its applications. Taiwan. J. Math. 2015, 19, 1561–1576.

- Suantai, S.; Kankam, K.; Cholamjiak, W.; Yajai, W. Parallel Hybrid Algorithms for a Finite Family of G-Nonexpansive Mappings and Its Application in a Novel Signal Recovery. Mathematics 2022, 10, 2140.

- Suantai, S.; Kankam, K.; Cholamjiak, P.; Cholamjiak, W. A parallel monotone hybrid algorithm for a finite family of G-nonexpansive mappings in Hilbert spaces endowed with a graph applicable in signal recovery. Comput. Appl. Math. 2021, 40, 1–17.

- Maingé, P.E. Strong convergence of projected subgradient methods for nonsmooth and nonstrictly convex minimization. Set-Valued Anal. 2008, 16, 899–912.

- He, S.; Yang, C. Solving the variational inequality problem defined on intersection of finite level sets. Abstr. Appl. Anal. 2013, 2013, 942315.

- Cholamjiak, P. A generalized forward-backward splitting method for solving quasi inclusion problems in Banach spaces. Numer. Algorithm. 2016, 71, 915–932.

- Grzegorski, S.M. On optimal parameter not only for the SOR method. Appl. Comput. Math. 2019, 8, 82–87.

- Yambangwai, D.; Cholamjiak, W.; Thianwan, T.; Dutta, H. On a new weight tridiagonal iterative method and its applications. Soft Comput. 2021, 25, 725–740.

- Shimoji, K.; Takahashi, W. Strong convergence to common fixed points of infinite nonexpansive mappings and applications. Taiwan. J. Math. 2001, 5, 387–404.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).