Abstract

The paper develops a modified inertial subgradient extragradient method for finding a solution to the variational inequality problem over the set of common solutions to the variational inequality and null point problems. The proposed method adopts a nonmonotonic stepsize rule without any linesearch procedure. We describe how to combine the regularization technique with the subgradient extragradient method, and we establish the strong convergence of the proposed method under appropriate conditions. Several numerical experiments are also provided to illustrate the efficiency of the introduced method compared with previous methods.

Keywords:

Hilbert space; strong convergence; regularization method; subgradient extragradient method; monotone operator

MSC:

90C25; 47H05; 65K15; 47J25

1. Introduction

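Let H be a real Hilbert space with scalar product $\langle \cdot, \cdot \rangle$ and induced norm $\|\cdot\|$. Let $A: H \to H$ be a nonlinear operator and let C be a nonempty, closed, and convex subset of H. Recall that the variational inequality problem (VIP) for the operator A on C is described as follows:

$$\text{find } x^* \in C \text{ such that } \langle A x^*, x - x^* \rangle \ge 0 \quad \forall x \in C. \qquad (1)$$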

Denote by $VI(C, A)$ the set of solutions of the VIP (1). The VIP theory, which was first proposed independently by Fichera [1] and Stampacchia [2], is a powerful and effective tool in mathematics. It has a wide range of applications in fields such as engineering mechanics, nonlinear equations, necessary optimality conditions, fractional differential equations, and economics [3,4,5,6,7,8,9,10,11,12,13,14,15,16].

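Let C be a nonempty, closed, and convex subset of a real Hilbert space H. A mapping $T: C \to H$ is:

- (i) monotone, if $\langle Tx - Ty, x - y \rangle \ge 0$ for all $x, y \in C$;

- (ii) $\eta$-strongly monotone, if there exists a number $\eta > 0$ such that $\langle Tx - Ty, x - y \rangle \ge \eta \|x - y\|^2$ for all $x, y \in C$;

- (iii) $\gamma$-inverse strongly monotone, if there exists a positive number $\gamma$ such that $\langle Tx - Ty, x - y \rangle \ge \gamma \|Tx - Ty\|^2$ for all $x, y \in C$;

- (iv) k-Lipschitz continuous, if there exists $k > 0$ such that $\|Tx - Ty\| \le k \|x - y\|$ for all $x, y \in C$;

- (v) nonexpansive, if $\|Tx - Ty\| \le \|x - y\|$ for all $x, y \in C$.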

Remark 1.

Notice that any γ-inverse strongly monotone mapping is $\frac{1}{\gamma}$-Lipschitz continuous, and both strongly monotone and inverse strongly monotone mappings are monotone. It is also known that the set of fixed points of a nonexpansive mapping is closed and convex.
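The first claim of Remark 1 follows from the Cauchy–Schwarz inequality, and the same definition shows that $I - \mu T$ is nonexpansive for $\mu \in (0, 2\gamma]$, which is the form in which inverse strong monotonicity of S is used in Section 3. For a γ-inverse strongly monotone mapping $T: C \to H$ and $x, y \in C$ (the case $Tx = Ty$ in the first line is trivial):

$$\gamma\|Tx-Ty\|^2 \le \langle Tx-Ty,\,x-y\rangle \le \|Tx-Ty\|\,\|x-y\| \;\Longrightarrow\; \|Tx-Ty\| \le \tfrac{1}{\gamma}\|x-y\|,$$

$$\|(I-\mu T)x-(I-\mu T)y\|^2 = \|x-y\|^2 - 2\mu\langle Tx-Ty,\,x-y\rangle + \mu^2\|Tx-Ty\|^2 \le \|x-y\|^2 - \mu(2\gamma-\mu)\|Tx-Ty\|^2 \le \|x-y\|^2.$$

Moreover, $x = (I - \mu T)x$ if and only if $Tx = 0$, so $\mathrm{Fix}(I - \mu T) = T^{-1}(0)$.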

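For each $p \in H$, since C is nonempty, closed, and convex, there exists a unique point in C, denoted by $P_C p$, that is nearest to p:

$$\|p - P_C p\| = \min_{x \in C} \|p - x\|.$$

The mapping $P_C: H \to C$ is called the metric projection from H onto C. We give two explicit formulas for the projection of a point onto a ball and onto a half-space.

- The projection of p onto the ball $B = \{x \in H : \|x - c\| \le r\}$ with center $c \in H$ and radius $r > 0$ is computed by
$$P_B p = \begin{cases} p, & \|p - c\| \le r, \\ c + \dfrac{r}{\|p - c\|}(p - c), & \|p - c\| > r. \end{cases}$$

- The projection of p onto the half-space $Q = \{x \in H : \langle a, x \rangle \le b\}$ with $a \in H \setminus \{0\}$ and $b \in \mathbb{R}$ is computed by
$$P_Q p = p - \frac{\max\{0, \langle a, p \rangle - b\}}{\|a\|^2}\, a.$$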
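Both formulas translate directly into code. The following is a minimal NumPy sketch that takes H to be $\mathbb{R}^d$; the function names `project_ball` and `project_halfspace` are our own and only illustrative.

```python
import numpy as np

def project_ball(p, c, r):
    """P_B p for the closed ball B = {x : ||x - c|| <= r}."""
    d = p - c
    dist = np.linalg.norm(d)
    if dist <= r:
        return p.copy()                  # p already lies in B
    return c + (r / dist) * d            # pull p radially onto the sphere

def project_halfspace(p, a, b):
    """P_Q p for the half-space Q = {x : <a, x> <= b}, with a != 0."""
    excess = np.dot(a, p) - b
    if excess <= 0:
        return p.copy()                  # p already satisfies <a, p> <= b
    return p - (excess / np.dot(a, a)) * a   # move along the normal a

p = np.array([3.0, 4.0])
print(project_ball(p, np.zeros(2), 1.0))                 # [0.6 0.8]
print(project_halfspace(p, np.array([1.0, 0.0]), 1.0))   # [1. 4.]
```

Both maps are computed in closed form, which is why the subgradient extragradient methods below replace a projection onto C by a projection onto a half-space.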

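The problem studied in this work is stated as follows:

$$\text{find } x^* \in \Omega := VI(C, A) \cap S^{-1}(0) \text{ such that } \langle F x^*, x - x^* \rangle \ge 0 \quad \forall x \in \Omega, \qquad (2)$$

where $S^{-1}(0) = \{x \in H : Sx = 0\}$ is the set of null points of S, S is inverse strongly monotone, F is strongly monotone, and A is monotone.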

Recently, many iterative methods for solving variational inequality problems have been proposed and studied; see, e.g., [6,9,11,12,14,15] and the references therein. The simplest and oldest is the projected-gradient method, $x_{n+1} = P_C(x_n - \lambda A x_n)$, which performs only one projection onto the feasible set per iteration. Its convergence, however, requires a strong hypothesis: A must be strongly monotone or inverse strongly monotone. To weaken this hypothesis, Korpelevich [9] suggested the following extragradient method (EGM):

$$y_n = P_C(x_n - \lambda A x_n), \qquad x_{n+1} = P_C(x_n - \lambda A y_n), \qquad (3)$$

where A is monotone and L-Lipschitz continuous. It is known that the sequence $\{x_n\}$ generated by the EGM converges weakly to a solution of the VIP provided that $\lambda \in (0, 1/L)$. However, the EGM needs to compute the projection onto the feasible set twice in each iteration, which may be expensive if C has a complicated structure and can seriously affect the efficiency of the method. In 2011, Censor et al. [15] modified the EGM and first proposed the subgradient extragradient method (SEGM) (Algorithm 1):

| Algorithm 1: The subgradient extragradient algorithm (SEGM). |

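Initialization: Set $\lambda \in (0, 1/L)$ and $n = 0$, and let $x_0 \in H$ be arbitrary. Step 1. Given $x_n$, compute

$$y_n = P_C(x_n - \lambda A x_n),$$

and construct the half-space $T_n$ the bounding hyperplane of which supports C at $y_n$,

$$T_n = \{ w \in H : \langle x_n - \lambda A x_n - y_n, \, w - y_n \rangle \le 0 \}.$$

Step 2. Calculate the next iterate

$$x_{n+1} = P_{T_n}(x_n - \lambda A y_n).$$

Step 3. If $x_n = y_n$, then stop. Otherwise, set $n := n + 1$ and return to Step 1. |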
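To illustrate why the extragradient step matters, the following NumPy sketch compares the projected-gradient method, the EGM, and the SEGM (Algorithm 1) on an assumed toy problem in $\mathbb{R}^2$: $A(x) = Mx$ with M a rotation by 90 degrees (monotone and 1-Lipschitz, but neither strongly nor inverse strongly monotone) and $C = [-1, 1]^2$, for which $x^* = 0$ is the unique solution. The problem, starting point, and stepsize are our choices, not taken from the paper. Here the projected-gradient iterates never get closer than distance 1 to $x^*$, while the EGM and SEGM converge; in the SEGM the second projection uses the explicit half-space formula.

```python
import numpy as np

# Assumed toy problem: <Ax - Ay, x - y> = 0, so A is monotone with L = 1.
M = np.array([[0.0, 1.0], [-1.0, 0.0]])

def A(x):
    return M @ x

def P_C(x):
    return np.clip(x, -1.0, 1.0)         # C = [-1, 1]^2

def project_halfspace(u, a, y):
    """Project u onto T = {w : <a, w - y> <= 0}; T is all of H when a = 0."""
    aa = np.dot(a, a)
    if aa == 0.0:
        return u.copy()
    return u - max(0.0, np.dot(a, u - y)) / aa * a

def projected_gradient(x, lam, iters):
    for _ in range(iters):
        x = P_C(x - lam * A(x))
    return x

def egm(x, lam, iters):
    for _ in range(iters):
        y = P_C(x - lam * A(x))          # first projection onto C
        x = P_C(x - lam * A(y))          # second projection onto C
    return x

def segm(x, lam, max_iter=1000, tol=1e-10):
    for n in range(max_iter):
        v = x - lam * A(x)
        y = P_C(v)                       # Step 1: the only projection onto C
        if np.linalg.norm(x - y) <= tol: # stopping test x_n = y_n
            return x, n
        # Step 2: T_n has normal v - y and supports C at y_n.
        x = project_halfspace(x - lam * A(y), v - y, y)
    return x, max_iter

x0, lam = np.array([1.0, 1.0]), 0.5      # lam < 1/L with L = 1
x_pg = projected_gradient(x0, lam, 200)
x_eg = egm(x0, lam, 200)
x_sg, n_sg = segm(x0, lam)
print(np.linalg.norm(x_pg), np.linalg.norm(x_eg), np.linalg.norm(x_sg), n_sg)
```

Without projection, one projected-gradient step multiplies $\|x\|$ by $\sqrt{1 + \lambda^2}$ for this operator, which is why that method cycles along the boundary of C instead of approaching $x^*$.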

The main advantage of the SEGM is that it replaces the second projection onto the closed convex set C by a projection onto the half-space $T_n$, which can be computed by an explicit formula. Notice that both the EGM and the SEGM have been proven to converge only weakly in real Hilbert spaces, whereas strong convergence is more desirable than weak convergence in infinite-dimensional spaces. On the other hand, the stepsize of the EGM and SEGM plays a significant role in the convergence properties of these iterative methods. A constant stepsize, which must satisfy $\lambda < 1/L$ and therefore requires knowledge of the Lipschitz constant, is often very small and slows down convergence. By contrast, suitably chosen variable stepsizes often yield better numerical results. Recently, Yang et al. [17] introduced a self-adaptive subgradient extragradient method (Algorithm 2) for solving the VIP.

Strong convergence theorems can be obtained for Algorithm 2 in real Hilbert spaces. However, the stepsize used in Algorithm 2 is monotonically decreasing, which may also affect the execution efficiency of the method. Following the ideas of the EGM, the SEGM, and the SSEGM, Tan and Qin [18] proposed an inertial subgradient extragradient algorithm with nonmonotonic stepsizes (Algorithm 3) for solving monotone variational inequality problems in real Hilbert spaces.

| Algorithm 2: Self-adaptive subgradient extragradient method (SSEGM). |

Initialization: Set , , and , and let be arbitrary. Step 1. Given , compute

If , then stop: is a solution. Otherwise, go to Step 2. Step 2. Construct the half-space the bounding hyperplane of which supports C at ,

and calculate

Step 3. Compute

where is updated by

Step 4. Set and return to Step 1. |

Under suitable conditions, Tan and Qin [18] proved that the sequence generated by Algorithm 3 converges strongly to a solution of the VIP (1).

| Algorithm 3: Self adaptive viscosity-type inertial subgradient extragradient method with nonmonotonic stepsizes (SSEGMN). |

Initialization: Set , , , and , and let be arbitrary. Step 1. Given and , compute

where

Step 2. Compute

and construct the half-space the bounding hyperplane of which supports C at ,

Step 3. Compute

Step 4. Compute

where is updated by

Step 5. Set and return to Step 1. |
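The two ingredients that distinguish Algorithm 3 from Algorithm 2, inertial extrapolation and a nonmonotonic stepsize, can be sketched as follows. Because the exact update formulas of Algorithm 3 did not survive in this text, the sketch assumes the forms commonly used in this line of work: $\theta_n = \min\{\epsilon_n / \|x_n - x_{n-1}\|, \theta\}$ (or $\theta$ if $x_n = x_{n-1}$), $w_n = x_n + \theta_n (x_n - x_{n-1})$, and $\lambda_{n+1} = \min\{\mu \|w_n - y_n\| / \|A w_n - A y_n\|, \lambda_n + \xi_n\}$ (or $\lambda_n + \xi_n$ if $A w_n = A y_n$) with summable $\xi_n$. The sketch also omits the viscosity step (it sets $x_{n+1} = z_n$), so it is an inertial SEGM with nonmonotonic stepsizes, not Algorithm 3 itself. The toy operator, C, and all parameter values are assumptions.

```python
import numpy as np

# Assumed toy problem: A(x) = M x (monotone, L = 1), C = [-1, 1]^2, x* = 0.
M = np.array([[0.0, 1.0], [-1.0, 0.0]])

def A(x):
    return M @ x

def P_C(x):
    return np.clip(x, -1.0, 1.0)

def project_halfspace(u, a, y):
    """Project u onto {w : <a, w - y> <= 0}; the whole space when a = 0."""
    aa = np.dot(a, a)
    if aa == 0.0:
        return u.copy()
    return u - max(0.0, np.dot(a, u - y)) / aa * a

def inertial_segm_nonmonotone(x0, lam=1.0, mu=0.5, theta=0.3, iters=2000):
    x_prev, x = x0.copy(), x0.copy()
    lams = [lam]
    for n in range(iters):
        eps = xi = 1.0 / (n + 1) ** 2          # summable sequences (assumed)
        diff = x - x_prev
        nd = np.linalg.norm(diff)
        theta_n = min(eps / nd, theta) if nd > 0 else theta
        w = x + theta_n * diff                  # inertial extrapolation
        v = w - lam * A(w)
        y = P_C(v)                              # projection onto C
        z = project_halfspace(w - lam * A(y), v - y, y)  # projection onto T_n
        # Nonmonotonic rule: lam may grow by xi_n; it is cut back only when the
        # local estimate ||A w - A y|| / ||w - y|| requires it.
        dA = np.linalg.norm(A(w) - A(y))
        lam_next = lam + xi
        if dA > 0:
            lam_next = min(mu * np.linalg.norm(w - y) / dA, lam_next)
        x_prev, x, lam = x, z, lam_next         # x_{n+1} = z_n (no viscosity step)
        lams.append(lam)
    return x, lams

x_final, lams = inertial_segm_nonmonotone(np.array([1.0, 1.0]))
print(np.linalg.norm(x_final), min(lams))
```

For this particular operator $\|A w - A y\| = \|w - y\|$, so the stepsize drops from $\lambda_1 = 1$ to $\mu = 0.5$ after the first iteration and then stays at that value. For operators whose local Lipschitz estimate varies, the $\lambda_n + \xi_n$ branch lets the stepsize increase again, which is the point of a nonmonotonic rule; no linesearch and no knowledge of L is needed.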

Motivated by Korpelevich [9], Censor et al. [15], Yang et al. [17], and Tan and Qin [18], we introduce a modified inertial subgradient extragradient method with a nonmonotonic stepsize rule for finding a solution to the variational inequality problem over the set of common solutions to the variational inequality and null point problems. Unlike methods based on the Halpern iteration or on viscosity approximation, the new method obtains strong convergence through the regularization technique. We also give some numerical examples to illustrate the efficiency of the proposed method compared with some existing ones.

2. Preliminaries

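In this work, we denote the strong and weak convergence of a sequence $\{x_n\}$ to $x$ by $x_n \to x$ and $x_n \rightharpoonup x$, respectively. We denote by $\mathrm{Fix}(T)$ the set of fixed points of a mapping $T$ (i.e., $\mathrm{Fix}(T) = \{x : Tx = x\}$). We use $\omega_w(x_n)$ to denote the weak $\omega$-limit set of $\{x_n\}$, that is, the set of all weak limits of subsequences of $\{x_n\}$. By Alaoglu's theorem, every bounded sequence in H has a weakly convergent subsequence (see [19]).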

Lemma 1

([20]). Let C be a nonempty closed convex subset of a real Hilbert space H. For $x \in H$ and $z \in C$, $z = P_C x$ if and only if

$$\langle x - z, y - z \rangle \le 0 \quad \forall y \in C.$$
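The characterization of the projection by $\langle x - z, y - z \rangle \le 0$ for all $y \in C$ can be checked numerically. In this minimal NumPy sketch (the ball, the point x, and the sample size are arbitrary choices of ours), z is computed with the ball-projection formula, and the largest value of $\langle x - z, y - z \rangle$ over sampled points $y \in C$ is nonpositive for $z = P_C x$ but positive for another point of C, here the center.

```python
import numpy as np

rng = np.random.default_rng(0)
c, r = np.zeros(3), 1.0                 # C = closed unit ball in R^3 (assumed)
x = np.array([2.0, -1.0, 0.5])          # a point outside C

def project_ball(p, c, r):
    d = p - c
    dist = np.linalg.norm(d)
    return p.copy() if dist <= r else c + (r / dist) * d

def sample_ball(n):
    """n points drawn uniformly from C (Gaussian direction, r * U^(1/3) radius)."""
    y = rng.normal(size=(n, 3))
    y /= np.linalg.norm(y, axis=1, keepdims=True)
    return c + r * rng.random((n, 1)) ** (1 / 3) * y

Y = sample_ball(10_000)

def max_inner(z):
    """Largest <x - z, y - z> over the sampled points y in C."""
    return np.max((Y - z) @ (x - z))

z = project_ball(x, c, r)
print(max_inner(z))   # <= 0 up to rounding, since z = P_C x
print(max_inner(c))   # > 0: the center lies in C but is not P_C x
```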

Lemma 2

([19]). Assume C is a nonempty closed convex subset of a real Hilbert space H, and $T: C \to C$ is a nonexpansive mapping. Then the mapping $I - T$ is demiclosed at zero, i.e., if $\{x_n\}$ is a sequence in C such that $x_n \rightharpoonup x$ and $(I - T)x_n \to 0$, then $x = Tx$.

Lemma 3

([19]). Let $\{x_n\}$ be a sequence in H. If $x_n \rightharpoonup x$ and $\|x_n\| \to \|x\|$, then $x_n \to x$.

Lemma 4

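([21]). Suppose $A: C \to H$ is a hemicontinuous and (pseudo)monotone operator. Then $x^*$ is a solution of the VIP (1) if and only if $x^*$ is a solution of the following problem:

$$\text{find } x^* \in C \text{ such that } \langle A x, x - x^* \rangle \ge 0 \quad \forall x \in C.$$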

Lemma 5

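([22,23]). Let $\{a_n\}$ be a sequence of nonnegative real numbers. Assume that

$$a_{n+1} \le (1 - \sigma_n) a_n + \delta_n, \quad n \ge 0,$$

where $\{\sigma_n\} \subset (0, 1)$ and $\{\delta_n\} \subset \mathbb{R}$ satisfy the conditions

- (i) $\sum_{n=0}^{\infty} \sigma_n = \infty$;

- (ii) $\limsup_{n \to \infty} \delta_n / \sigma_n \le 0$ or $\sum_{n=0}^{\infty} |\delta_n| < \infty$.

Then $\lim_{n \to \infty} a_n = 0$.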
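A quick numerical illustration of the kind of recursion covered by Lemma 5, assuming the lemma has the standard form $a_{n+1} \le (1 - \sigma_n) a_n + \delta_n$ with $\sum \sigma_n = \infty$ and $\limsup \delta_n / \sigma_n \le 0$. The choice $\sigma_n = 1/(n+2)$ and $\delta_n = \sigma_n / \sqrt{n+1}$ (called `s` and `d` in the code), with equality in the recursion, is ours; for it, $(n+1) a_n$ telescopes, which gives a closed form to compare against.

```python
import math

def lemma5_sequence(N, a0=1.0):
    """Run a_{n+1} = (1 - s_n) a_n + d_n with s_n = 1/(n+2), d_n = s_n/sqrt(n+1)."""
    a = a0
    for n in range(N):
        s = 1.0 / (n + 2)
        d = s / math.sqrt(n + 1)        # d_n / s_n = 1/sqrt(n+1) -> 0
        a = (1.0 - s) * a + d
    return a

def closed_form(N, a0=1.0):
    # (n+1) a_n telescopes to a0 + sum_{k=1}^{n} k^(-1/2)
    return (a0 + sum(1.0 / math.sqrt(k) for k in range(1, N + 1))) / (N + 1)

for N in (100, 10_000, 100_000):
    print(N, lemma5_sequence(N), closed_form(N))
```

The sequence tends to zero, but only at a rate of roughly $2/\sqrt{N}$: the lemma guarantees convergence, not speed.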

3. Main Results

In this section, let C be a nonempty, closed, and convex subset of H, let $S: H \to H$ be $\gamma$-inverse-strongly monotone, and let $F: H \to H$ be strongly monotone and k-Lipschitz continuous. Let $A: H \to H$ be L-Lipschitz continuous and monotone such that $\Omega := VI(C, A) \cap S^{-1}(0) \neq \emptyset$. It is easily seen that the mapping $I - \mu S$ is nonexpansive for any $\mu \in (0, 2\gamma]$ (see also [24]). Furthermore, one finds from Remark 1 that $S^{-1}(0) = \mathrm{Fix}(I - \mu S)$ is closed and convex. Since $VI(C, A)$ is closed and convex, $\Omega$ is also closed and convex. Together with the strong monotonicity and Lipschitz continuity of F, these facts ensure that problem (2) has a unique solution. Let $\alpha$ be a fixed positive real number. We now construct a regularization solution $x_\alpha$ for problem (2) by solving the following general variational inequality problem:

The following lemmas play an important role in proving the main results.

Lemma 6.

For each $\alpha > 0$, problem (6) has a unique solution $x_\alpha$.

Proof.

Since A is monotone and Lipschitz continuous, S is inverse strongly monotone (hence monotone and Lipschitz continuous), and F is strongly monotone and Lipschitz continuous, we deduce that the operator defining problem (6) is strongly monotone and Lipschitz continuous. It is well known that if a mapping is Lipschitz continuous and strongly monotone, then the corresponding VIP has a unique solution (see [25] for more details). Hence, applying this fact to the operator of problem (6) in place of A, we derive that problem (6) has a unique solution for each $\alpha > 0$. □
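One standard way to see the fact quoted from [25] is a contraction argument, sketched here for a generic operator $B: C \to H$ that is $\eta$-strongly monotone and $\ell$-Lipschitz (the names B, η, and ℓ are introduced only for this illustration):

$$\|(x-\lambda Bx)-(y-\lambda By)\|^2 = \|x-y\|^2 - 2\lambda\langle Bx-By,\,x-y\rangle + \lambda^2\|Bx-By\|^2 \le \left(1-2\lambda\eta+\lambda^2\ell^2\right)\|x-y\|^2.$$

For $0 < \lambda < 2\eta/\ell^2$ the factor is less than 1, so, since $P_C$ is nonexpansive, the map $x \mapsto P_C(x - \lambda Bx)$ is a contraction on C and has a unique fixed point by Banach's theorem. By Lemma 1, $x = P_C(x - \lambda Bx)$ holds exactly when $\langle Bx, y - x \rangle \ge 0$ for all $y \in C$, so this fixed point is the unique solution of the VIP for B.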

Lemma 7.

It holds that .

Proof.

Namely,

Since by substituting it into (9), we find

If , then it holds that

which implies

If , then the above inequality obviously holds. In particular, one also obtains

Thus, we deduce that is bounded. Furthermore, there is a subsequence of and some point such that . Utilizing the inverse strongly monotone property of S, the monotone property of A, and noting (6), one has

which leads to

By using the -inverse-strongly monotone property of S, noticing and (11), one obtains

which yields that

Since the mapping $I - \mu S$ is nonexpansive for any $\mu \in (0, 2\gamma]$ (see [24]), Lemma 2 applied to $I - \mu S$ shows that $\mu S$, and hence $S$, is demiclosed at zero. Therefore, one deduces that which implies

We now prove that . It follows from the monotonicity of A, S, and F and from (6) that

or, equivalently,

Since as , one deduces from (13) that

Hence, one obtains from Lemma 4 that . This together with (12) implies

We prove ( One deduces from (9) that

Passing to the limit in (15) as , and noting the fact one infers

which implies by Lemma 4 that is the solution of problem (2). Since the solution of problem (2) is unique, one deduces that Thus, the set only has one element ; that is, Therefore, the whole sequence converges weakly to . Again, using (9) with , one finds

Passing in (16) to the limit as , and noting the fact one obtains

We take another subsequence of and some point such that By following a similar proof to the above, one derives , and Therefore, one deduces that . This completes the proof. □

Lemma 8.

for all , where .

Proof.

Suppose , are two solutions of the problem (6). Without loss of generality, we assume that . Then,

and

Summing up the above two inequalities, one finds that

Noting the monotonicity of A and S, one obtains

or, equivalently,

It follows from the strong monotonicity of F that

which yields

Thus, one obtains

The mappings S and F are Lipschitz continuous and therefore bounded on bounded sets. Applying Lagrange's mean-value theorem to a continuous function on , one deduces that

This, together with (17), implies that

where . If , by interchanging and in the above proof, one can also obtain the same results. This finishes the proof. □

Now, the iterative method is presented as follows:

Lemma 9

([18]). The sequence generated by (20) is well defined, and and , where

Assumption 1.

- (C1)

- , where τ is as in Lemma 9;

- (C2)

- , ;

- (C3)

- ;

- (C4)

- ;

- (C5)

- .

Theorem 1.

The sequence generated by Algorithm 4 converges strongly to the unique solution of problem (2) under Assumption 1 (C1)–(C5).

| Algorithm 4: Modified inertial subgradient extragradient method with regularization (MSEMR). |

Initialization: Set , , , , , and . Choose a nonnegative real sequence such that . Let be arbitrary.

Step 1. Compute

where

Step 2. Compute

and construct the half-space the bounding hyperplane of which supports C at ,

Step 3. Calculate

where is updated by

Step 4. Set and return to Step 1. |

Proof.

Denote by the solution of the problem (6) with . By (C2) and Lemma 7, one obtains as . Thus, it is sufficient to prove

Setting , and noting for any , one obtains

It follows from Lemma 1 that

This, together with (21), implies

Noticing the definition of and , one derives

This, together with (26), leads to

Now, we estimate the last term in inequality (27). Observe that

Since S and A are monotone, and is the solution of the problem (6) with one finds that

and which, by (28) and the strong monotonicity of F, imply that

Let , and be positive real numbers such that

By virtue of Lemma 9, (C1), (C2), and (C4), there exists such that

Observe that

It follows from (33), (34), (35), and (37) that

where , and From (C2), one obtains that and . Furthermore, one derives by (C3) and (C4) that

By using Lemma 5, one deduces . Thus, one has . □

Remark 2.

Compared with the EGM (3), Algorithm 1 (SEGM), Algorithm 2 (SSEGM), and Algorithm 3 (SSEGMN), our Algorithm 4 (MSEMR) differs in the following aspects.

- Note that the stepsizes adopted in the , , , and are monotonically decreasing, which may affect the execution efficiency of such methods. In contrast, the MSEMR adopts a new nonmonotonic stepsize criterion that overcomes this drawback of monotonically decreasing stepsize sequences.

- One of the advantages of the MSEMR is its strong convergence, which is preferable to the weak convergence of the EGM and SEGM in infinite-dimensional spaces.

- The strong convergence of the MSEMR comes from the regularization technique, which is different from the Halpern iteration and the viscosity method used in the SSEGM and SSEGMN, respectively.

4. Application to Split Minimization Problems

Let C be a nonempty closed convex subset of H. The constrained convex minimization problem is to find a point $x^* \in C$ such that

$$f(x^*) = \min_{x \in C} f(x), \qquad (39)$$

where $f: H \to \mathbb{R}$ is a continuously differentiable function. The following lemma is useful in proving Theorem 2.

Lemma 10

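([26]). A necessary condition of optimality for a point $x^* \in C$ to be a solution of the minimization problem (39) is that $x^*$ solves the variational inequality

$$\langle \nabla f(x^*), x - x^* \rangle \ge 0 \quad \forall x \in C. \qquad (40)$$

Equivalently, $x^*$ solves the fixed point equation

$$x^* = P_C\big(x^* - \lambda \nabla f(x^*)\big)$$

for every constant $\lambda > 0$. If, in addition, f is convex, then the optimality condition (40) is also sufficient.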
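The fixed-point form of the optimality condition can be illustrated on a small convex quadratic. In this NumPy sketch (an assumed example, not from the paper), $f(x) = \frac{1}{2}\|x - b\|^2$ on the box $C = [0, 1]^2$ with $b = (2, -1)$, so the minimizer is the clipped point $(1, 0)$. The projected-gradient iteration $x \leftarrow P_C(x - \lambda \nabla f(x))$ reaches it, and the residual $\|x - P_C(x - \lambda \nabla f(x))\|$ vanishes there for every tested $\lambda > 0$, while it is positive at a non-optimal point.

```python
import numpy as np

b = np.array([2.0, -1.0])

def grad_f(x):
    return x - b                 # f(x) = 0.5 * ||x - b||^2, gradient is 1-Lipschitz

def P_C(x):
    return np.clip(x, 0.0, 1.0)  # C = [0, 1]^2 (assumed)

def residual(x, lam):
    """||x - P_C(x - lam * grad f(x))||; zero exactly at minimizers of f over C."""
    return float(np.linalg.norm(x - P_C(x - lam * grad_f(x))))

x = np.array([0.5, 0.5])
for _ in range(100):             # projected gradient with stepsize 0.5 < 2/L
    x = P_C(x - 0.5 * grad_f(x))

print(x)                                           # [1. 0.]
print([residual(x, lam) for lam in (0.1, 1.0, 10.0)])   # [0.0, 0.0, 0.0]
print(residual(np.array([0.5, 0.5]), 1.0))         # positive: not optimal
```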

Theorem 2.

Suppose $f: H \to \mathbb{R}$ is a differentiable convex function whose gradient is L-Lipschitz continuous. Assume that the optimization problem (39) is consistent (i.e., its solution set is nonempty). Then the sequence constructed by Algorithm 5 converges to a solution of the minimization problem (39) under Assumption 1 (C1)–(C4).

| Algorithm 5: Modified inertial subgradient extragradient method to split minimization problems (MSESM). |

Initialization: Set , , , , , and . Choose a nonnegative real sequence such that . Let be arbitrary.

Step 1. Compute

where

Step 2. Compute

and construct the half-space the bounding hyperplane of which supports C at ,

Step 3. Calculate

where is updated by

Step 4. Set and return to Step 1. |

5. Numerical Illustrations

In the sequel, we provide some numerical experiments to demonstrate the advantages of the proposed method and compare it with the EGM, SEGM, SSEGM, and SSEGMN.

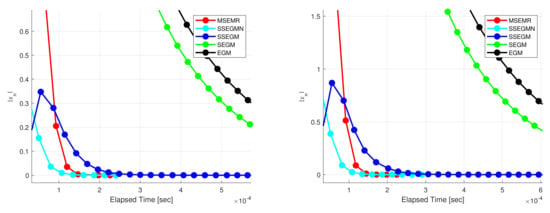

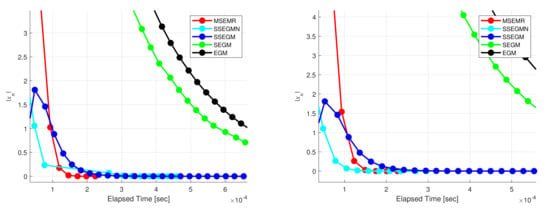

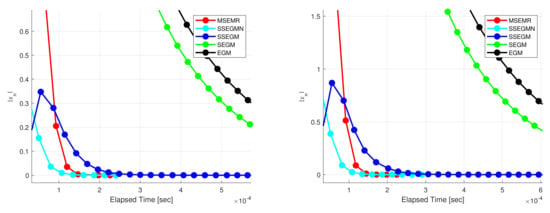

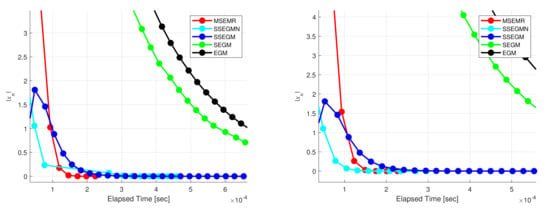

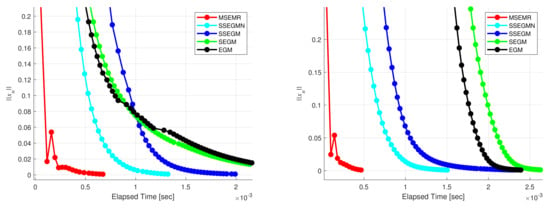

Example 3.

Let , and . Let be given by and be given by for all . It is easy to verify that 0 is the unique solution of problem (2). Let us choose , and . We test the MSEMR for different values of and ; see Figure 1 and Figure 2.

Figure 1.

Example 3. Left: and . Right: and .

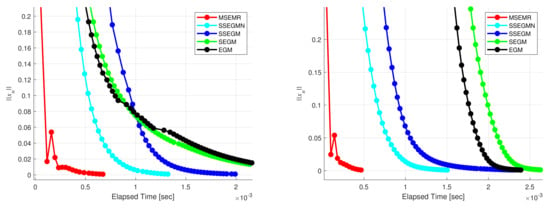

Figure 2.

Example 3. Left: and . Right: and .

Example 4.

Let , and . Assume the operator is given by , and is given by for all . It is obvious that A is monotone and Lipschitz continuous. In this experiment, all methods use the same randomly generated starting points. The control parameters are chosen as , and . Figure 3 describes the numerical results for Example 4 in and , respectively.

Figure 3.

Example 4. Left: . Right: .

In Examples 3 and 4, the MSEMR appears to perform better than the other methods considered. We attribute this to the combined use of inertial terms and the new nonmonotonic stepsize rule.

6. Conclusions

The paper proposed an inertial subgradient extragradient method for finding a solution to the variational inequality problem over the set of common solutions to the variational inequality and null point problems. The method used a nonmonotonic stepsize rule without any linesearch procedure, so it can be run without prior knowledge of the Lipschitz constants. The regularization technique was incorporated into the method to obtain strong convergence. Finally, several numerical experiments were provided to illustrate the efficiency of the introduced method compared with previous methods.

Author Contributions

Conceptualization, Y.S.; Data curation, O.B.; Funding acquisition, Y.S.; Methodology, Y.S. and O.B.; Supervision, O.B.; Writing—original draft, Y.S. and O.B.; Writing—review & editing, Y.S. and O.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Key Scientific Research Project for Colleges and Universities in Henan Province (No.20A110038), Key Young Teachers of Colleges and Universities in Henan Province (No.2019GGJS143).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to thank the reviewers and the editor for the valuable comments to improve the original manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Fichera, G. Sul problema elastostatico di Signorini con ambigue condizioni al contorno. Atti Accad. Naz. Lincei Rend. Cl. Sci. Fis. Mat. Nat. 1963, 34, 138–142.

- Hartman, P.; Stampacchia, G. On some nonlinear elliptic differential functional equations. Acta Math. 1966, 115, 271–310.

- Song, Y.; Chen, X. Analysis of Subgradient Extragradient Method for Variational Inequality Problems and Null Point Problems. Symmetry 2022, 14, 636.

- Yao, Y.; Shehu, Y.; Li, X.H.; Dong, Q.L. A method with inertial extrapolation step for split monotone inclusion problems. Optimization 2021, 70, 741–761.

- Ogwo, G.N.; Izuchukwu, C.; Mewomo, O.T. Inertial methods for finding minimum-norm solutions of the split variational inequality problem beyond monotonicity. Numer. Algor. 2021, 88, 1419–1456.

- Kazmi, K.R.; Rizvi, S.H. Iterative approximation of a common solution of a split equilibrium problem, a variational inequality problem and a fixed point problem. J. Egypt. Math. Soc. 2013, 21, 44–51.

- Song, Y.L.; Ceng, L.C. Convergence theorems for accretive operators with nonlinear mappings in Banach spaces. Abstr. Appl. Anal. 2014, 12, 1–12.

- Jolaoso, L.O.; Karahan, I. A general alternative regularization method with line search technique for solving split equilibrium and fixed point problems in Hilbert spaces. Comput. Appl. Math. 2020, 39, 1–22.

- Korpelevich, G.M. An extragradient method for finding saddle points and for other problems. Matecon 1976, 12, 747–756.

- Akashi, S.; Takahashi, W. Weak convergence theorem for an infinite family of demimetric mappings in a Hilbert space. J. Nonlinear Convex Anal. 2016, 10, 2159–2169.

- Yao, Y.; Cho, Y.J.; Liou, Y.C. Algorithms of common solutions for variational inclusions, mixed equilibrium problems and fixed point problems. Eur. J. Oper. Res. 2011, 212, 242–250.

- Alvarez, F.; Attouch, H. An inertial proximal method for monotone operators via discretization of a nonlinear oscillator with damping. Set-Valued Anal. 2001, 9, 3–11.

- Song, Y.L.; Ceng, L.C. Strong convergence of a general iterative algorithm for a finite family of accretive operators in Banach spaces. Fixed Point Theory Appl. 2015, 2015, 1–24.

- Tan, B.; Qin, X.; Yao, J.C. Strong convergence of self-adaptive inertial algorithms for solving split variational inclusion problems with applications. J. Sci. Comput. 2021, 87, 1–34.

- Censor, Y.; Gibali, A.; Reich, S. The subgradient extragradient method for solving variational inequalities in Hilbert space. J. Optim. Theory Appl. 2011, 148, 318–335.

- Fulga, A.; Afshari, H.; Shojaat, H. Common fixed point theorems on quasi-cone metric space over a divisible Banach algebra. Adv. Differ. Equations 2021, 2021, 1–15.

- Yang, J.; Liu, H.; Liu, Z. Modified subgradient extragradient algorithms for solving monotone variational inequalities. Optimization 2018, 67, 2247–2258.

- Tan, B.; Qin, X. Self adaptive viscosity-type inertial extragradient algorithms for solving variational inequalities with applications. Math. Model. Anal. 2022, 27, 41–58.

- Goebel, K.; Kirk, W.A. Topics in Metric Fixed Point Theory; Cambridge University Press: Cambridge, UK, 1990.

- Goebel, K.; Reich, S. Uniform Convexity, Hyperbolic Geometry, and Nonexpansive Mappings; Dekker: New York, NY, USA, 1984.

- Cottle, R.W.; Yao, J.C. Pseudo-monotone complementarity problems in Hilbert space. J. Optim. Theory Appl. 1992, 75, 281–295.

- Xu, H.K. Iterative algorithm for nonlinear operators. J. Lond. Math. Soc. 2002, 2, 1–17.

- Xu, H.K. Viscosity approximation methods for nonexpansive mappings. J. Math. Anal. Appl. 2004, 298, 279–291.

- Iiduka, H.; Takahashi, W. Strong convergence theorems for nonexpansive mappings and inverse-strongly monotone mappings. Nonlinear Anal. Theory Methods Appl. 2005, 61, 341–350.

- Zhou, H.; Zhou, Y.; Feng, G. Iterative methods for solving a class of monotone variational inequality problems with applications. J. Inequalities Appl. 2015, 2015, 1–17.

- Meng, S.; Xu, H.K. Remarks on the gradient-projection algorithm. J. Nonlinear Anal. Optim. 2010, 1, 35–43.


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).