Abstract

The k-means problem has been paid much attention for many applications. In this paper, we define the uncertain constrained k-means problem and propose a -approximate algorithm for the problem. First, a general mathematical model of the uncertain constrained k-means problem is proposed. Second, the random sampling properties of the uncertain constrained k-means problem are studied. This paper mainly studies the gap between the center of random sampling and the real center, which should be controlled within a given range with a large probability, so as to obtain the important sampling properties to solve this kind of problem. Finally, using mathematical induction, we assume that the first cluster centers are obtained, so we only need to solve the j-th center. The algorithm has the elapsed time , and outputs a collection of size of candidate sets including approximation centers.

1. Introduction

The k-means problem has received much attention in the past several decades. The k-means problems consists of partitioning a set P of points in d-dimensional space into k subsets such that is minimized, where is the center of , and is the distance between two points of p and q. The k-means problem is one of the classical NP-hard problems, and has been paid much attention in the literature [1,2,3].

For many applications, each cluster of the point set may satisfy some additional constraints, such as chromatic clustering [4], r-capacity clustering [5], r-gather clustering [6], fault tolerant clustering [7], uncertain data clustering [8], semi-supervised clustering [9], and l-diversity clustering [10]. The constrained clustering problems was studied by Ding and Xu, who presented the first unified framework in [11]. Given a point set , and a positive integer k, a list of constraints , the constrained k-means problem is to partition P into k clusters , such that all constraints in are satisfied and is minimized, where denotes the centroid of .

In recent years, particular research has been focused on the constrained k-means problem. Ding and Xu [11] showed the first polynomial time approximation scheme with running time for the constrained k-means problem, and obtained a collection of size of candidate approximate centers. The existing fastest approximation schemes for the constrained k-means problem takes time [12,13], which was first shown by Bhattacharya, Jaiswai, and Kumar [12]. Their algorithm gives a collection of size of candidate approximate centers. In this paper, we propose the uncertain constrained k-means problem, which supposes that all points are random variables with probabilistic distributions. We present a stochastic approximate algorithm for the uncertain constrained k-means problem. The uncertain constrained k-means problem can be regarded as a generalization of the constrained k-means problem. We prove the random sampling properties of the uncertain constrained k-means problem, which are fundamental for our proposed algorithm. By applying random sampling and mathematical induction, we propose a stochastic approximate algorithm with lower complexity for the uncertain constrained k-means problem.

This paper is organized as follows. Some basic notations are given in Section 2. Section 3 provides an overview of the new algorithm for the uncertain constrained k-means problem. In Section 4, we discuss the detailed algorithm for the uncertain constrained k-means problem. In Section 5, we investigate the correctness, success probability, and running time analysis of the algorithm. Section 6 concludes this paper and gives possible directions for future research.

2. Preliminaries

Definition 1

(Uncertain constrained k-means problem). Given a random variable set , the probability density function for every random variable , a list of constraints , and a positive integer k, the uncertain constrained k-means problem is to partition into k clusters , such that all constraints in are satisfied and is minimized, where denotes the centroid of .

Definition 2

([13]). Let be a set of random variables in , be probability density function for every random variable , and and P be a set of points in , .

- Define .

- Define .

- Define .

Definition 3

([13]). Let be a set of random variables in , be the probability density function for every random variable , and be a partition of .

- Define .

- .

- Define .

- Define.

- Define .

Lemma 1.

For any point and a random variable set , .

Proof.

Let be the probability density function for every random variable .

The (3) equality follows from the fact that . □

Lemma 2.

Let be a set of random variables in and be the probability density function for every random variable . Assume that is a set of random variables obtained by sampling random variables from uniformly and independently. For ∀, we have:

where .

Proof.

First, observe that

where . Then apply the Markov inequality to obtain the following.

□

Lemma 3.

Let be a set of random variables in , be the probability density function for every random variable , and be an arbitrary subset of with random variables for some . Then , where .

Proof.

Lemma 4

([12]). For any , then .

Theorem 1

([14]). Let be s, an independent random variable, where takes 1 with a probability of at least p for . Let . Then, for any , .

3. Overview of Our Method

In this section, we first introduce the main idea of our methodology to solve the uncertain constrained k-means problem.

Considering the optimal partition of , since , if we could sample a set of size from uniformly and independently, then at least random variables in are from with a certain probability. All subsets of of size could be enumerated to discover the approximate center of .

We assume that is the set including approximate centers of the . Let , where . The set is divided into two parts: and , where and . For each random variable X, let be the nearest point (particular random variable) in to X. Let , and .

If most of the random variables of are in , our idea is to use the center of to approximate the center of . The center of is found based on . If most of the random variables of are in , our ideal is to replace the center of with the center of . For seeking out the approximate center of , we should find out a subset by uniformly sampling from . However, the set is unknown. We need to find the set . We apply a branching strategy to find a set such that , and . Then, a random variables set is obtained by sampling random variables from independently and uniformly. And the set can be replaced by a subset of from . Based on and , the approximation center of could be obtained. Therefore, the algorithm presented in this paper outputs a collection of size of candidate sets containing approximation centers, and has the running time .

4. Our Algorithm cMeans

Given an instance of the uncertain constrained k-means problem, denotes an optimal partition of . There exist six parameters (, , g, k, C, U) in our , where is the approximate factor, is the input random variable set, g is the number of centers, k is the number of the clusters, C is the set of approximate cluster centers, and U is a collection of candidate sets including the approximate center. Let , where M is the size of subsets of the sampling set and N is the size of the sampling set. Without loss of generality, assume that values of M and N are integers.

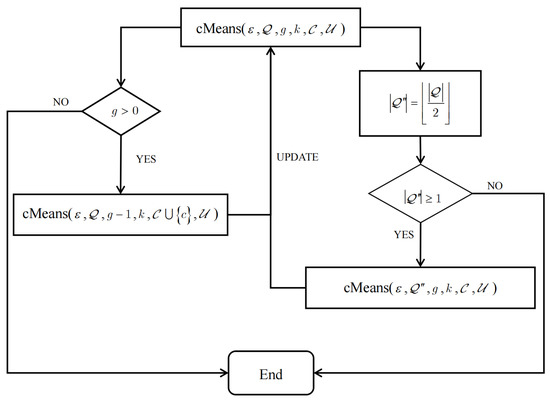

We use the branching strategy to seek out the approximate centers of clusters in . There exist two branches in our algorithm , which can be seen in Figure 1. On one branch, a size N set is obtained by sampling from uniformly and independently; is constructed by and M copies of each point in C. Moreover, we consider each subset of size M of , and the centroid c of is solved to represent the approximate center of , and our algorithm () is used to obtain the remaining cluster centers.

Figure 1.

Flow chart of our algorithm .

On the other branch, for each random variable , we calculate the distance between X and C first. H denotes the set of all distances of random variables in to C, where H is a multi-set. We should obtain the median value m for all values in H, which is the -th element if all of the values in H are sorted. In the second branch, is divided into two parts, and , based on m such that for , , , where , . Subroutine () is used to obtain the remaining g cluster centers. Therefore, we present the specific algorithm for seeking out a collection of candidate sets in the Algorithm 1.

| Algorithm 1:() |

|

5. Analysis of Our Algorithm

We investigate the success probability, correctness, and time complexity analysis of the algorithm in this section.

Lemma 5.

There exists a candidate set, with a probability of at least , including the approximate center in U satisfying .

The following Lemmas from Lemma 6 to 16 are used to prove Lemma 5. We prove Lemma 5 via induction on j. For , we can obtain easily, and prove the success probability first.

Lemma 6.

In the process of finding in our algorithm , by sampling a set of random variables from independently and uniformly, denoted by , the probability that at least random variables in are from is at least .

Proof.

In our algorithm , we assume that , where . Let be the corresponding random variables of elements in . If , then . Otherwise . It is known easily that . Let . We obtain that . Then,

□

From Lemma 6, an with size of can be obtained, and the probability that all points in are from is at least . Let denote the centroid of , and . For , by Lemma 2, we conclude that holds with a probability of at least . Then, the probability that a subset of size of can be found such that holds is at least . Therefore, we conclude that Lemma 5 holds for .

Moreover, we assume that for , Lemma 5 holds with a probability of at least . Considering the case , we prove Lemma 5 by the following two cases: (1); (2).

5.1. Analysis for Case 1:

Since , most of the random variables of are in . Our idea is to replace the center of with the center of . Thus, we need to find the approximate center of and the bound distance . We divide the distance into the following three parts: , , and . We first study the distance between and .

Lemma 7.

.

Proof.

Since and , the proportion of in is at least . By Lemma 3, . □

Lemma 8.

.

Proof.

Since , and , we can obtain the following:

□

Lemma 9.

.

Proof.

Since , by 1, we have . Then,

□

Lemma 10.

In the process of finding in our algorithm , for the set in step 5, a subset of size of can be obtained such that all random variables in are from . Let be the centroid of . Then, the inequality holds with a probability of at least .

Proof.

For each point , copies of p are added to in step 9 in our algorithm . Thus, a subset of size of can be obtained such that all random variables in are from . Let . Since , by Lemma 2, holds with a probability of at least . Assume that . Then,

□

Lemma 11.

If satisfies , then .

Proof.

Assume that satisfies . Then,

□

5.2. Analysis for Case 2:

Let , and denote the centroid of . Our idea is to replace the center of with the center of . But it is difficult to seek out the center of . Thus, we try to find an approximate center of .

Lemma 12.

.

Proof.

□

Lemma 13.

.

Proof.

□

Lemma 14.

.

Proof.

□

Lemma 15.

In the process of finding in our algorithm , we assume that satisfies and . For the set in step 5, a subset of size of can be obtained such that all random variables in are from with a probability of . Let denotes the centroid of . Then, the inequality holds with a probability of at least .

Proof.

In our algorithm , we assume that , where . Let be the corresponding random variables of elements in . If , obtain , or else . It is known easily that by Lemma 12. Let . We obtain that , and

Then, the probability that at least random variables in are from is at least . Since copies of each point in , a subset of size of can be obtained, and the probability that all random variables in are from is at least . Let denote the centroid of and . For and , by Lemma 2, holds with a probability of at least . Assume that . Then,

□

Lemma 16.

If satisfies , then .

Proof.

Assume that satisfies . Then,

□

Lemma 17.

Given an instance of the uncertain constrained k-means problem, where the size of is n, for , we assume that by using our algorithm (ϵ, , k, C, U) (C and U are initialized as empty sets), a collection U of candidate sets including approximate centers is obtained. If there exists a set in U satisfying that , then is a -approximation for the uncertain constrained k-means problem.

Proof.

Assume that is a set in U satisfying that . Then,

□

5.3. Time Complexity Analysis

We analyze the time complexity for our algorithm in this section.

Lemma 18.

The time complexity of our algorithm is .

Proof.

Let , which , . By the Stirling formula,

In our algorithm , steps 5–9 have a run time of , step 11 have a run time of , and steps 13–16 have a run time of . Let denote the time complexity of algorithm , where g is the number of cluster centers, and n is the size of .

If , . When , . Because , . Therefore, , where .

For and , the recurrence of could be obtained as follows:

Because , two constants and with and could be obtained to arrive at the following recurrence.

Now we claim that . If , then . If , then , and the claim holds. Suppose that if , the claim holds for , and if , the claim holds for . We need to prove that:

The above formula can be simplified as , which holds for . For , . □

Thus, we can obtain the following Theorem 2.

Theorem 2.

Given an instance of the uncertain constrained k-means problem, where the size of is n, for , by using our algorithm , a collection U of candidate sets including approximate centers can be obtained with a probability of at least such that U includes at least one candidate set including approximate centers that is a -approximation for the uncertain constrained k-means problem, and the time complexity of our algorithm is .

6. Conclusions

In this paper, we defined the uncertain constrained k-means problem first, and then presented a stochastic approximate algorithm for the problem in detail. We proposed a general mathematical model of the uncertain constrained k-means problem, and studied the random sampling properties, which are very important to deal with the uncertain constrained k-means problem. By applying a random sampling technique, we obtained a -approximate algorithm for the problem. Then, we investigated the success probability, correctness and time complexity analysis of our algorithm , whose running time is . However, there also exists a big gap between the current algorithms for the uncertain constrained k-means problem and the practical algorithms for the problem, which has been mentioned in [13] similarly.

We will try to explore a much more practical algorithm for the uncertain constrained k-means problem in future. It is known that the 2-means problem is the smallest version of the k-means problem, and remains NP-hard. The approximation schemes for the 2-means problem can be generalized to solve the k-means problem. Due to the particularity of the uncertain constrained 2-means problem, we will study approximation schemes for the uncertain constrained 2-means problem and reduce the algorithm complexity of approximation schemes for the uncertain constrained k-means problem through approximation schemes of the uncertain constrained 2-means problem. Additionally, we will apply the proposed algorithm to some practical problems in the future.

Author Contributions

J.L. and J.T. contributed to supervision, methodology, validation and project administration. B.X. and X.T. contributed to review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the Science and Technology Foundation of Guizhou Province ([2021]015), in part by the Open Fund of Guizhou Provincial Public Big Data Key Laboratory (2017BDKFJJ019), in part by the Guizhou University Foundation for the introduction of talent ((2016) No. 13), in part by the GuangDong Basic and Applied Basic Research Foundation (No. 2020A1515110554), and in part by the Science and Technology Program of Guangzhou (No. 202002030138), China.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Feldman, D.; Monemizadeh, M.; Sohler, C. A PTAS for k-means clustering based on weak coresets. In Proceedings of the 23rd ACM Symposium on Computational Geometry, SoCG, Gyeongju, Korea, 6–8 June 2007; pp. 11–18. [Google Scholar]

- Ostrovsky, R.; Rabani, Y.; Schulman, L.J.; Swamy, C. The effectiveness of lloyd-type methods for the k-means problem. J. ACM 2012, 59, 28:1–28:22. [Google Scholar] [CrossRef]

- Jaiswal, R.; Kumar, A.; Sen, S. A simple D2-sampling based PTAS for k-means and other clustering problems. Algorithmica 2014, 71, 22–46. [Google Scholar] [CrossRef]

- Arkin, E.M.; Diaz-Banez, J.M.; Hurtado, F.; Kumar, P.; Mitchell, J.S.; Palop, B.; Perez-Lantero, P.; Saumell, M.; Silveira, R.I. Bichromatic 2-center of pairs of points. Comput. Geom. 2015, 48, 94–107. [Google Scholar] [CrossRef]

- Yhuller, S.; Sussmann, Y.J. The capacitated k-center problem. SIAM J. Discrete Math. 2000, 13, 403–418. [Google Scholar]

- Har-Peled, S.; Raichel, B. Net and prune: A linear time algorithm for Euclidean distance problems. J. ACM 2015, 62, 4401–4435. [Google Scholar] [CrossRef]

- Swamy, C.; Shmoys, D.B. Fault-tolerant facility location. ACM Trans. Algorithms 2008, 4, 1–27. [Google Scholar] [CrossRef]

- Xu, G.; Xu, J. Efficient approximation algorithms for clustering point-sets. Comput. Geom. 2010, 43, 59–66. [Google Scholar] [CrossRef][Green Version]

- Valls, A.; Batet, M.; Lopez, E.M. Using expert’s rules as background knowledge in the clusdm methodology. Eur. J. Oper. Res. 2009, 195, 864–875. [Google Scholar] [CrossRef]

- Li, J.; Yi, K.; Zhang, Q. Clustering with deversity. In Proceedings of the 37th International Colloquium on Automata, Languages and Programming, ICALP, Bordeaux, France, 6–10 July 2010; pp. 188–200. [Google Scholar]

- Ding, H.; Xu, J. A unified framework for clustering constrained data without locality property. In Proceedings of the 26th Annual ACM-SIAM Symposium on Discrete Algorithms, SODA, San Diego, CA, USA, 4–6 January 2015; pp. 1471–1490. [Google Scholar]

- Bhattacharya, A.; Jaiswal, R.; Kumar, A. Faster algorithms for the constrained k-means problem. Theory Comput. Syst. 2018, 62, 93–115. [Google Scholar] [CrossRef]

- Feng, Q.; Hu, J.; Huang, N.; Wang, J. Improved PTAS for the constrained k-means problem. J. Comb. Optim. 2019, 37, 1091–1110. [Google Scholar] [CrossRef]

- Hoeffding, W. Probability inequalities for sums of bounded random variables. J. Am. Stat. Assoc. 1963, 58, 13–30. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).