Abstract

Artificial intelligence (AI) holds tremendous potential for promoting equity and access to science, technology, engineering, and mathematics (STEM) education, particularly for students with disabilities. This conceptual review explores how AI can address the barriers faced by this underrepresented group by enhancing accessibility and supporting STEM practices like critical thinking, inquiry, and problem solving, as evidenced by tools like adaptive learning platforms and intelligent tutors. Results show that AI can positively influence student engagement, achievement, and motivation in STEM subjects. By aligning AI tools with Universal Design for Learning (UDL) principles, this paper highlights how AI can personalize learning, improve accessibility, and close achievement gaps in STEM content areas. Furthermore, the natural intersection of STEM principles and standards with the AI4K12 guidelines justifies the logical need for AI–STEM integration. Ethical concerns, such as algorithmic bias (e.g., unequal representation in training datasets leading to unfair assessments) and data privacy risks (e.g., potential breaches of sensitive student data), require critical attention to ensure AI systems promote equity rather than exacerbate disparities. The findings suggest that while AI presents a promising avenue for creating inclusive STEM environments, further research conducted with intentionality is needed to refine AI tools and ensure they meet the diverse needs of students with disabilities to access STEM.

1. Introduction

Despite rapid technological advancements, science, technology, engineering, and mathematics (STEM) fields remain inaccessible to over seven million students with disabilities in the U.S., perpetuating economic and occupational inequities (National Center for Education Statistics, 2022; National Center for Science and Engineering Statistics, 2023; U.S. Department of Education, 2019). While a growing body of research explores artificial intelligence (AI) in educational settings (see Table 1 and Table 2), there remains a notable gap in its application to STEM accessibility (National Center for Education Statistics, 2022; U.S. Department of Education, 2019). The current article addresses this gap by reviewing how AI applications can enhance STEM accessibility and support Universal Design for Learning (UDL) for students with disabilities in K-12 settings. While the existing literature extensively explores the use of AI in education (Kavitha & Joshith, 2024; Zhai et al., 2021), AI in STEM education (Chng et al., 2023; Hwang & Tu, 2021; Jia et al., 2024; Mohamed et al., 2022; Ouyang et al., 2023; Xu & Ouyang, 2022), and AI for supporting students with disabilities (Barua et al., 2022; Bhatti et al., 2024; Hopcan et al., 2022; Pierrès et al., 2024; Rice & Dunn, 2023), insufficient attention has been paid to the specific intersection of AI in STEM education for students with disabilities.

1.1. The Unfortunate Reality of STEM Education for Individuals with Disabilities

In 2020, only 3% of the U.S. STEM workforce consisted of individuals with disabilities, highlighting a systemic issue that begins in K-12 education (Hall & Rathbun, 2020; National Center for Science and Engineering Statistics, 2023). As the demand for STEM knowledge continues to escalate, driven by rapid technological advancements and evolving global challenges, proficiency in these fields has become increasingly indispensable for everyday functions and will remain so in the foreseeable future (Executive Office of the President, 2020; Marino et al., 2021). Science, technology, engineering, and mathematics skills are crucial for specialized careers, navigating modern society, contributing to informed decision making, and fostering innovation. These skills, such as critical thinking and problem solving, are foundational for informed citizenship and future employability for all students, regardless of disability status (Maass et al., 2022; National Academies of Sciences et al., 2021; National Research Council, 2012).

Despite nearly seven million students with disabilities receiving special education services (Office of Special Education and Rehabilitative Services, 2024), they consistently underperform in STEM content areas compared to their peers without disabilities, exhibiting significant gaps in science and mathematics achievement across grade levels (National Center for Education Statistics, 2022; U.S. Department of Education, 2019). Students with disabilities score on average 36% lower in STEM assessments than their peers by 12th grade, a disparity exacerbated by inaccessible instructional methods (National Center for Education Statistics, 2022; U.S. Department of Education, 2019). Given the importance of STEM skills in daily life and global competitiveness, ensuring high-quality STEM education for students with and without disabilities is imperative (Executive Office of the President, 2020). The underrepresentation of individuals with disabilities in STEM fields constitutes both an economic and occupational injustice (Kolne & Lindsay, 2020; National Center for Science and Engineering Statistics, 2023; Paul et al., 2020).

Furthermore, a diverse and robust workforce, inclusive of traditionally marginalized communities such as individuals with disabilities, is essential for the vitality of STEM professions (Executive Office of the President, 2020; National Academies of Sciences et al., 2021). Increasing representation from individuals with disabilities enriches STEM fields with diverse perspectives and enhances national competitiveness (Basham et al., 2020; Marino et al., 2021; National Academies of Sciences et al., 2021). Despite national recognition of the importance of STEM skills and a diverse STEM workforce, students with disabilities continue to fall behind their peers (National Center for Education Statistics, 2022; U.S. Department of Education, 2019), indicating potential deficiencies in delivering effective STEM instruction to these students that leave lasting effects (Hall & Rathbun, 2020).

Barriers to STEM Content

Students with disabilities face ongoing and multifaceted barriers when attempting to access STEM education. Barriers include inaccessible teaching methods, limited teacher training, and systemic biases in STEM instruction (Doabler et al., 2021; Klimaitis & Mullen, 2021b; Marino et al., 2021; Therrien et al., 2017). For example, students with disabilities often struggle with language, knowledge acquisition, retention, and fundamental academic skills (e.g., reading and writing) inherent in STEM learning (Marino et al., 2021; Therrien et al., 2017). Traditional methods of STEM instruction, primarily lecture-based, tend to emphasize skills that may not cater to the needs of all students, particularly those with disabilities (Friedensen et al., 2021; Therrien et al., 2017).

Consequently, these methods may hinder the accessibility of STEM education for traditionally underrepresented groups, including students with disabilities (Basham et al., 2020). Moreover, a lack of familiarity among teachers with inclusive practices and technologies could further impede the success of students with disabilities (Klimaitis & Mullen, 2021a, 2021b). Furthermore, barriers exist at the organizational level (e.g., access to facilities and resources, time limitations, and departmental isolation; Friedensen et al., 2021; Klimaitis & Mullen, 2021a, 2021b; Marino et al., 2021). Artificial intelligence offers possible solutions for addressing the STEM barriers faced by students with disabilities (Marino et al., 2023). Tools such as Microsoft’s Immersive Reader, which converts text to audio, and Google’s Lookout (Google LLC, 2019), which supports visually impaired students in navigating digital content, illustrate how AI can help overcome these challenges.

1.2. Artificial Intelligence: What It Is and Its Not-So-New History

Artificial intelligence is a branch of computer science that aims to create systems capable of performing tasks that typically require human intelligence, such as learning, reasoning, problem solving, perception, and language understanding. While AI has recently become a topic of concern in education, its history is long. The history of AI has seen significant milestones and evolving definitions influenced by philosophical inquiries (Copeland & Proudfoot, 2007), technological advancements (Kaul et al., 2020), and interdisciplinary collaborations (Hassabis et al., 2017).

The modern era of AI began in the mid-20th century, with pivotal contributions from figures like Alan Turing, who proposed the concept of a machine that could mimic human intelligence (Copeland & Proudfoot, 2007). In 1956, John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon organized the Dartmouth Conference, often cited as the official birth of AI as a field of study. The organizers ambitiously conjectured that “every aspect of learning or any other feature of intelligence can, in principle, be so precisely described that a machine can be made to simulate it” (Crevier, 1993, p. 50).

The following decades saw substantial progress and setbacks in AI. Early successes in the 1950s and 1960s included programs that could play chess and solve algebra problems. Advances in machine learning, particularly neural networks and deep learning, drove the resurgence of AI in the late 20th and early 21st centuries. These technologies have enabled significant breakthroughs in image and speech recognition, autonomous vehicles, and natural language processing (Hamet & Tremblay, 2017). Artificial intelligence encompasses various applications, from medical diagnostics to autonomous vehicles (Groumpos, 2023).

1.3. Alignment of AI to STEM Practices and Standards

Jia and colleagues found 88% (67 out of 76) of the reviewed articles reported positive educational outcomes and findings from applying AI techniques in science education (Jia et al., 2024). The implementation of AI naturally aligns with STEM practices and supports STEM learning. Artificial intelligence supports and improves inquiry and skill application, facilitates interdisciplinary knowledge and creativity, and promotes higher-order thinking skills such as computational thinking, problem solving, and critical thinking. Additionally, educators can utilize AI to identify student learning patterns and behaviors unique to the STEM setting, such as higher-order thinking and problem solving (Xu & Ouyang, 2022).

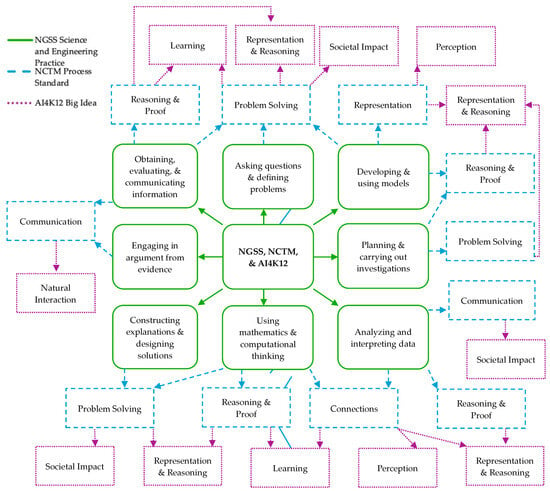

Moreover, STEM academic standards, such as the National Council of Teachers of Mathematics (NCTM) process standards and the Next Generation Science Standards (NGSS), naturally align with one another (Holman & Kohnke, 2024). For example, the NGSS science and engineering practice of using mathematics and computational thinking directly overlaps with mathematics standards. Furthermore, mathematics and science standards and practices align with the AI educational guidelines (e.g., AI4K12 guidelines and big ideas). For example, the NGSS science and engineering practice of constructing explanations and designing solutions aligns with the NCTM process standard of problem solving. Both then align with AI4K12’s big idea of societal impact. Figure 1 further illustrates the alignment between the AI4K12 guidelines, the NGSS science and engineering practices, and the NCTM process standards. While the transdisciplinary intersection needs further research, the intersection of AI4K12 and NGSS is evident in the recent literature. Touretzky and colleagues illustrate how the NGSS crosscutting concepts (i.e., patterns, systems, and system models) and the science and engineering practices (i.e., asking questions and defining problems, developing and using models, planning and carrying out investigations, analyzing and interpreting data, and constructing explanations and designing solutions) align with the skills required for STEM and AI education (Touretzky et al., 2023).

Figure 1.

Transdisciplinary Relationship between NGSS, NCTM, and AI4K12.

Ongoing Emphasis on Access and Equity

The emphasis on improving equity and access in STEM areas is ongoing (Executive Office of the President, 2020). The NGSS highlights the importance of creating access for traditionally underserved groups in science (NGSS Lead States, 2013). Additionally, equity is the first of the six NCTM mathematics principles (Ferrini-Mundy, 2000; National Council of Teachers of Mathematics, 2000). Furthermore, societal impact, AI4K12’s fifth big idea in AI, is closely related to equity and access in terms of recognizing bias and reaching various underrepresented populations (e.g., geographic, socioeconomic, race, ethnicity, disability, resource availability for schools and communities, formal and informal learning). Using AI can create equity of access (AI4K12, 2020, 2024). Denying students experiences with AI technologies in a STEM setting limits their ability to thrive in a technology-driven future (Yang, 2022). Additionally, AI and STEM education emphasize the importance of creative inquiry and problem-solving skills, both of which will be highly important in the future workforce (Yang, 2022).

2. Methodology

Early in the process, we identified a significant gap in research addressing the intersection of AI, STEM education, and students with disabilities. To address this, we shifted focus toward identifying emerging themes in AI’s application in STEM education, emphasizing accessibility and personalized learning for students with disabilities and universal design. Given the limited research directly exploring this intersection, we adopted a conceptual review approach (Hulland, 2020), uncovering patterns and insights across related fields. This approach provides a comprehensive understanding of current research trends and gaps, informing future investigations (Hulland, 2020).

In late June 2024, we conducted a targeted search to identify relevant literature across EBSCOhost, PsycINFO, ERIC (EBSCO), and Google Scholar. To ensure comprehensive coverage, we categorized our search into five key areas: (1) AI and education, (2) AI and STEM education, including individual disciplines of science, mathematics, technology, and engineering, (3) AI and students with disabilities or special education, (4) AI and Universal Design for Learning (UDL), and (5) combinations of these categories (e.g., AI, STEM, and students with disabilities or AI, mathematics, disabilities, and UDL).

The search process began with descriptors from the ERIC Thesaurus, such as “Artificial Intelligence” and “Special Education”, to ensure consistency and alignment with standardized terms and enhance discoverability. We paired these descriptors with synonyms (e.g., A.I. or AI or machine learning or deep learning) to identify relevant literature. We included studies that addressed AI in K-12 or post-secondary educational contexts. We reviewed abstracts for relevance and excluded articles unavailable through our universities’ library systems, not written in English, or focused on non-educational applications of AI. Our conceptual analysis focused on article type, general findings, types and purposes of AI, educational settings, populations studied, and AI’s impacts. See Table 1 for a summary of the review articles examined.
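The pairing of descriptors with synonyms described above can be sketched as a set of Boolean search strings. This is a minimal illustration and not the authors' actual search syntax: only the AI synonyms and the two ERIC descriptors are quoted from the text, while the remaining terms, the `CONCEPTS` dictionary, and the function names are hypothetical placeholders.

```python
from itertools import combinations

# Hypothetical concept groups. Only the AI synonyms and the descriptors
# "Artificial Intelligence" and "Special Education" come from the text;
# every other term is an illustrative assumption.
CONCEPTS = {
    "AI": ["Artificial Intelligence", "A.I.", "AI", "machine learning", "deep learning"],
    "education": ["education"],
    "STEM": ["STEM education", "science", "mathematics", "technology", "engineering"],
    "disability": ["Special Education", "students with disabilities"],
    "UDL": ["Universal Design for Learning", "UDL"],
}


def or_group(terms):
    """Join the synonyms for one concept into a parenthesized OR clause."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(concept_names):
    """Combine the OR clauses of several concepts with AND."""
    return " AND ".join(or_group(CONCEPTS[name]) for name in concept_names)


def ai_combinations():
    """Pair the AI concept with every non-empty combination of the other concepts."""
    others = [name for name in CONCEPTS if name != "AI"]
    queries = {}
    for size in range(1, len(others) + 1):
        for combo in combinations(others, size):
            names = ("AI",) + combo
            queries[" + ".join(names)] = build_query(names)
    return queries


if __name__ == "__main__":
    for label, query in ai_combinations().items():
        print(f"{label}: {query}")
```

Each generated string would still need to be adapted to the syntax and thesaurus of the individual database before use.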

Table 1.

Highlighted Reviews.

| Citation | Topic | Article Type | Number of Articles Reviewed | Types of Articles Reviewed |
|---|---|---|---|---|
| Ahmad et al. (2020) | AI and Education | Bibliometric analysis and systematic review | N = 3246 | Articles from top venues from 2014 to 2020 |
| Dai and Ke (2022) | AI and Education | Systematic mapping review and thematic synthesis | N = 59 | Peer-reviewed journal articles, book chapters, and conference proceedings. Years not specified |
| Kavitha and Joshith (2024) | AI and Education | Bibliometric analysis | N = 324 | Articles from 2003 to 2023 in Scopus |
| Misra et al. (2023) | AI and Education | Thematic review | N = 280 | Articles from 1976 to 2023 |
| Paek and Kim (2021) | AI and Education | Bibliometric analysis | N = 5035 | Articles from 2001 to 2021 |
| Yousuf and Wahid (2021) | AI and Education | Review study | Not specified | Not specified |
| Zhai et al. (2021) | AI and Education | Systematic review | N = 142 | Research articles, review papers, interview papers, and book reviews from 2010 to 2020 |
| Chng et al. (2023) | AI and STEM | Literature review | N = 82 | Empirical articles. Years not specified |
| Ouyang et al. (2023) | AI and STEM | Systematic review | N = 17 | Empirical research articles from January 2011 to April 2023 |
| Xu and Ouyang (2022) | AI and STEM | Systematic review | N = 63 | Empirical articles from 2011 to 2021 |
| Hwang and Tu (2021) | AI and Mathematics | Bibliometric analysis and systematic review | N = 43 | Publications from 1996 to 2020 |
| Mohamed et al. (2022) | AI and Mathematics | Systematic review | N = 20 | Articles from 2017 to 2021 in indexed journals |
| Jia et al. (2024) | AI and Science | Systematic review | N = 76 | Studies from 2013 to 2023 |
| Barua et al. (2022) | AI and Students with disabilities | Systematic review | N = 26 | Peer-reviewed articles from 2011 to 2021 |
| Bhatti et al. (2024) | AI and Students with disabilities | Systematic review | N = 16 | Journal articles and conference proceedings from 2015 to 2022 |
| Hopcan et al. (2022) | AI and Students with disabilities | Systematic review | N = 29 | Studies between 2008 and 2020 |
| Kharbat et al. (2021) | AI and Students with disabilities | Systematic review | N = 105 | Peer-reviewed articles published between January 2000 and 2020 |
| Pierrès et al. (2024) | AI and Students with disabilities | Systematic review | N = 72 | Articles from 2018 to 2022 |
| Rice and Dunn (2023) | AI and Students with disabilities | Systematic review | N = 18 | Publications from 2009 to 2022 |
| Zdravkova et al. (2022) | AI and Students with disabilities | Narrative literature review | Not stated | Articles from 2012 to 2022 |
| Bray et al. (2024) | UDL and Technology | Systematic review | N = 15 | Peer-reviewed publications. Years not specified |

Note. AI: artificial intelligence; STEM: science, technology, engineering, and mathematics education; UDL: Universal Design for Learning.

To address gaps in the available literature reviews, we included primary studies, book chapters, and practitioner articles when existing reviews lacked comprehensive coverage of key areas, such as AI and UDL or AI, STEM, and students with disabilities. This approach allowed us to integrate diverse sources to capture emerging themes and provide a multifaceted understanding of the current state of research (see Table 2).

Table 2.

Resources Other than Reviews.

| Citation | Topic | Article Type | Population Focus |
|---|---|---|---|
| Gawande et al. (2020) | AI and Education | Research article | Higher education |
| Ivanović et al. (2022) | AI and Education | Book chapter | All levels |
| How and Hung (2019) | AI and STEM/STEAM | Application article | K-12 STEAM settings |
| Ogunkunle and Qu (2020) | AI and STEM | Research article | Students in STEM subjects |
| Vasconcelos and Santos (2023) | AI and STEM | Research article | Students in simulated STEM learning experience |
| Yelamarthi et al. (2024) | AI and engineering | Position article | Students in engineering education |
| McMahon and Walker (2019) | AI and UDL | Practitioner article | Not specified |
| Saborío-Taylor and Rojas-Ramírez (2024) | AI and UDL | Practitioner article | Not specified |
| Almufareh et al. (2024) | AI and Students with disabilities | Conceptual paper | Individuals with disabilities |
| Ivanović et al. (2019) | AI and Students with disabilities | Position article | Students with disabilities in virtual environments |
| Lamb et al. (2023) | AI and Students with disabilities | Opinion paper | Students with disabilities |
| Marino et al. (2023) | AI and Students with disabilities | Position article | Students receiving special education in K-12 settings |
| Rai et al. (2023) | AI and Students with disabilities | Research article | Students with learning disabilities |
| Center for Innovation, Design, and Digital Learning (2024) | AI and all learners | Report | All learners, early childhood–higher education |
| Hyatt and Owenz (2024) | AI, UDL, and Students with disabilities | Research article | Graduate students with disabilities |
| Shukla et al. (2016) | AI, STEM, and Students with disabilities | Research article | Individuals with profound and multiple learning disabilities |
| Hughes et al. (2022) | AI, STEM, UDL, and Students with disabilities | Research article | Elementary students with autism spectrum disorder |

Note. AI: artificial intelligence; STEM: science, technology, engineering, and mathematics education; UDL: Universal Design for Learning.

3. Results: Current Educational Trends of Artificial Intelligence

Artificial intelligence is rapidly transforming education, with applications ranging from intelligent tutoring systems (e.g., ASSISTments) to automated grading (e.g., Graded Pro) and personalized learning tools like Knewton Alta or DreamBox (Ivanović et al., 2022; Yousuf & Wahid, 2021). Recent trends include the use of AI in simulation-based learning, affective computing, and assessment (Dai & Ke, 2022). Emerging technologies such as hologram technology, ubiquitous learning, and green computing are shaping the future of higher education (Gawande et al., 2020). Research on AI in education has significantly increased since 2015. Paek and Kim identified eight main topics: changes in teaching content, feedback on assessment, interaction in online learning, learning strategies and achievement, language learning, AI-driven educational technology, medical education, and machine learning algorithms (Paek & Kim, 2021). Despite its potential, AI in education faces challenges related to ethical concerns, changing roles of teachers and students, and the need for proper implementation (Zhai et al., 2021). As the field evolves, there is a growing focus on explainable AI, AI literacy, and the application of AI in lifelong learning (Ahmad et al., 2020; Misra et al., 2023).

3.1. Artificial Intelligence Within STEM Education

Science, technology, engineering, and mathematics educators encounter challenges in teaching complex problems inherent within STEM content and can benefit from the advantages provided by AI (How & Hung, 2019; Xu & Ouyang, 2022). Likely due to the natural alignment of technological advancements and the innovative characteristics of STEM education, several recent literature reviews and bibliometric analyses have investigated the relationships between AI and STEM education (Chng et al., 2023; Ouyang et al., 2023; Xu & Ouyang, 2022), science education (Jia et al., 2024), and mathematics education (Hwang & Tu, 2021; Mohamed et al., 2022).

As machine learning has become readily available, the focus on AI in STEM education and research and the varieties of AI have expanded (Hwang & Tu, 2021; Jia et al., 2024; Mohamed et al., 2022; Ouyang et al., 2023; Xu & Ouyang, 2022). The most common types are considered to be machine learning (Hwang & Tu, 2021; Jia et al., 2024; Ouyang et al., 2023), predictive analytics (Chng et al., 2023; Jia et al., 2024; Xu & Ouyang, 2022), educational robots (Hwang & Tu, 2021; Jia et al., 2024; Mohamed et al., 2022; Ouyang et al., 2023; Xu & Ouyang, 2022), and intelligent tutoring systems (Chng et al., 2023; Jia et al., 2024; Xu & Ouyang, 2022). Additional types of AI include deep learning (Hwang & Tu, 2021; Ouyang et al., 2023), natural language processing (Chng et al., 2023; Ouyang et al., 2023), and computer vision (Chng et al., 2023). AI-related technologies, such as augmented reality and mixed reality, are also integrated into STEM education to enhance learning experiences (Chng et al., 2023). Furthermore, educators and students implement AI at various education levels (e.g., Kindergarten–12th grade, higher education) in STEM education. Most reviews (Hwang & Tu, 2021; Jia et al., 2024; Xu & Ouyang, 2022) highlighted higher education as the most common setting for AI implementation. However, Chng and colleagues found K-12 settings to be more common, though they focused on broader “emerging technologies” terminology rather than specifically focusing on AI (Chng et al., 2023).

Artificial intelligence in STEM education serves several key purposes. Chng et al. (2023) categorized the purposes as conceptual, epistemic, social, and other. Artificial intelligence enhances instructional design (How & Hung, 2019; Hwang & Tu, 2021; Jia et al., 2024; Xu & Ouyang, 2022), reviews assessments (i.e., academic performance, instructional quality; Ouyang et al., 2023), predicts student learning performance and behavior to help shape instruction, and provides personalized support to students (Ouyang et al., 2023; Xu & Ouyang, 2022). With this personalization and monitoring, AI improves student learning experiences (How & Hung, 2019; Hwang & Tu, 2021; Jia et al., 2024; Xu & Ouyang, 2022), learning achievements, motivation, and attitudes (Hwang & Tu, 2021). Artificial intelligence also addresses various educational aspects, including conceptual understanding, the advantages and disadvantages of different methods, factors influencing learning, idea suggestions, strategies, and overall effectiveness (Mohamed et al., 2022). Engineering and computer science education also view generative AI as a transformative tool that can design and deliver current content, personalize learning, and optimize educator time (Yelamarthi et al., 2024).

3.2. Artificial Intelligence for Students with Disabilities

Much like STEM education, the interest in AI for special education and serving students with disabilities has increased, evidenced by recent literature reviews (Barua et al., 2022; Bhatti et al., 2024; Hopcan et al., 2022; Pierrès et al., 2024; Rice & Dunn, 2023) and position articles (Marino et al., 2023). Marino and colleagues highlight the potential of AI to revolutionize special education and emphasize the need for rigorous research to assess its effectiveness for students with disabilities (Marino et al., 2023). Furthermore, AI-enabled tools, such as text-to-speech software and voice recognition systems, significantly enhance accessibility and personalized learning, particularly for individuals with physical challenges or with speech, language, or visual impairments (Marino et al., 2023).

The AI technologies supporting students with disabilities include adaptive learning systems, facial expression analysis, chatbots, communication aids, mastery learning systems, intelligent tutoring systems, and interactive robots. Adaptive learning systems utilize predictive, screening, and diagnostic tools for personalized education (Bhatti et al., 2024). Facial expression analysis helps recognize and respond to students’ emotional states (Barua et al., 2022). Chatbots and communication aids facilitate interaction and provide immediate assistance (Barua et al., 2022), while initiatives like Project RAISE, which pairs AI companions with students, show promise in developing STEM skills and addressing communication barriers for students with autism (Hughes et al., 2022). Mastery learning and intelligent tutoring systems ensure personalized, pace-appropriate instruction (Bhatti et al., 2024), demonstrating a 67% greater engagement rate than static online platforms (Shukla et al., 2016). Interactive robots engage students in dynamic learning activities (Hopcan et al., 2022). These technologies leverage general AI software, such as neural networks, adaptive software, and expert systems, to enhance their effectiveness in educational settings (Barua et al., 2022; Bhatti et al., 2024; Hopcan et al., 2022).

Artificial intelligence in special education serves multiple purposes. It delivers tailored learning support by assessing engagement and offering accessibility resources, personalized feedback, and communication assistance (Bhatti et al., 2024). It monitors progress, supports the relearning process, and targets skill improvement while identifying individual learning difficulties and proficiency levels, thereby offering personalized learning strategies (Bhatti et al., 2024; Pierrès et al., 2024). Artificial intelligence technologies in special education enhance learning through profiling, predictions, assessment, evaluation, intelligent tutoring systems, and recommenders (Hopcan et al., 2022; Pierrès et al., 2024). They support students’ intellectual and emotional development, streamline data collection and evaluation processes, enhance educational enjoyment, and test instruments for broader use with various disabilities (Rice & Dunn, 2023). Furthermore, AI is associated with positive outcomes for students with disabilities (Barua et al., 2022; Rice & Dunn, 2023).

Recent literature reviews highlight various disabilities studied in AI applications in special education. Autism spectrum disorder (ASD) is a frequent focus (Barua et al., 2022; Hopcan et al., 2022; Rice & Dunn, 2023). Intellectual and learning disabilities are also significant areas of study (Barua et al., 2022; Bhatti et al., 2024; Hopcan et al., 2022; Rice & Dunn, 2023). Attention-deficit/hyperactivity disorder (ADHD) is another prevalent focus (Barua et al., 2022; Hopcan et al., 2022). Additionally, researchers investigated individuals with visual impairments and multiple disabilities (Barua et al., 2022; Rice & Dunn, 2023). These studies underline the diverse applications of AI in enhancing educational outcomes for students with various disabilities.

3.2.1. Providing Universal Design Through AI

Universal Design for Learning (UDL) is a proactive approach to designing classroom instruction that provides multiple means of engagement (i.e., the WHY of learning), representation (i.e., the WHAT of learning), and action and expression (i.e., the HOW of learning; CAST, 2018). This approach is critical for ensuring the success of all students in an inclusive classroom by addressing diverse learning needs and removing barriers (Basham et al., 2020; Basham & Marino, 2013; Marino et al., 2021). Additionally, the National Educational Technology Plan (NETP) recognizes UDL as a key strategy for addressing the digital access, design, and use divides (Center for Innovation, Design, and Digital Learning, 2024). Artificial intelligence can be a means of providing UDL (Bray et al., 2024; Saborío-Taylor & Rojas-Ramírez, 2024). Together, AI and UDL provide a pedagogical framework for improving access for individuals with disabilities (Hyatt & Owenz, 2024; McMahon & Walker, 2019; Saborío-Taylor & Rojas-Ramírez, 2024).

Artificial intelligence aligns with the UDL principles (i.e., multiple means of representation, action and expression, and engagement) and improves how educators present, provide access, assess, and tailor content for personalized learning. For example, when considering multiple means of representation, AI provides personalization and ready access to various modalities (e.g., text to speech; Saborío-Taylor & Rojas-Ramírez, 2024). Furthermore, AI allows users to adapt and refine content to meet specific needs. For example, a user might take complex text and alter the Lexile level or the overall formatting (e.g., bullet list, table, graphic). Users can use various means of demonstrating knowledge to incorporate multiple means of action and expression (Bray et al., 2024; Saborío-Taylor & Rojas-Ramírez, 2024). Table 3 further illustrates how AI facilitates STEM learning by aligning with UDL principles, relevant mathematics and science standards, and AI education guidelines.

Table 3.

Alignment of AI in STEM Education with UDL Principles and Content Standards.

The UDL framework is starting to be employed to make STEM education more accessible to students with disabilities. Hyatt and Owenz (2024) investigated how graduate students, with and without disabilities, perceive using AI-based assignments combined with UDL to create more equitable assignments. The results indicate students appreciate the flexibility and options of universally designed assignments incorporating AI (Hyatt & Owenz, 2024). By leveraging AI, educators can create more inclusive learning environments that cater to a wide range of learning needs.

Consider a high school chemistry teacher who wants to assess their students’ knowledge without using a formal test, written lab report, or worksheet but is unsure of the direction they want to take. Therefore, the teacher turns to AI, in this case, Ludia, a chatbot specifically aligned with the UDL framework (Rostan & Stark, 2023; Stark & Rostan, 2023), for recommendations on assessing students’ knowledge. See Table 4 for the specific input and output of this example. The multiple means of engagement principle is represented in AI through its ability to adapt and personalize in real time (Bray et al., 2024; Saborío-Taylor & Rojas-Ramírez, 2024). For example, users can ask for multiple real-world examples, align content to interests, or ask clarifying questions. The symbiosis between AI and UDL transforms educational settings into inclusive environments (Table 4). Key advantages include customizing and personalizing content and reducing learning obstacles. Educators can leverage these practical applications of AI and UDL to advance inclusive education (Saborío-Taylor & Rojas-Ramírez, 2024).

Table 4.

Ludia Example of AI for Universal Design.

3.2.2. Providing Personalization Through Artificial Intelligence

Artificial intelligence offers significant advantages in STEM education, particularly through personalized and adaptive learning (Hwang & Tu, 2021; Jia et al., 2024; Mohamed et al., 2022; Ouyang et al., 2023; Xu & Ouyang, 2022; Yelamarthi et al., 2024). Artificial intelligence technologies can provide personalized guidance and support, such as creating interfaces that support individuals with visual impairments (Hwang & Tu, 2021). These advancements make courses more accessible, improve teacher–student communication, and free up students’ time to pursue interests outside of school (Mohamed et al., 2022). Additionally, AI algorithms can analyze students’ performance data to identify struggles and provide personalized recommendations (Ouyang et al., 2023). Artificial intelligence fosters student-centered learning by offering adaptive tutoring, recommending personalized resources, diagnosing learning gaps, and assessing STEM learning performances automatically (Xu & Ouyang, 2022). For instance, a study by an adaptive learning company reported that students using its AI-powered program improved their test scores by 62% compared to those who did not (MMD, 2021). This personalized, interactive, and innovative approach holds promise for enhancing educational experiences (Jia et al., 2024; Yelamarthi et al., 2024).

3.2.3. Technological Advancements and Inclusivity

Recent technological advancements have significantly impacted education outcomes for students with disabilities. Artificial intelligence and assistive technologies have transformed inclusive education by creating adaptive learning environments, enhancing communication, and improving accessibility (Almufareh et al., 2024; Zdravkova et al., 2022). Artificial intelligence-driven solutions, such as voice recognition and smart glasses, have transformed various domains, including healthcare and mobility (Almufareh et al., 2024). In education, AI tools adapt to individual learning styles, supporting academic success for students with disabilities (Lamb et al., 2023; Rai et al., 2023). Data mining algorithms optimize the selection of educational resources based on students’ learning traits (Ogunkunle & Qu, 2020). Virtual environments and innovative educational tools have emerged, offering equal opportunities for students with diverse abilities (Ivanović et al., 2019). However, developers and policymakers must address ethical concerns, including data privacy and algorithmic bias, to fully harness AI’s potential to empower individuals with disabilities (Almufareh et al., 2024; Rai et al., 2023).

3.2.4. Artificial Intelligence as Assistive Technology

Students with disabilities face numerous barriers to accessing education despite legal protections and increasing enrollment rates (Amaral, 2020; Reed et al., 2006). These barriers include physical inaccessibility, lack of appropriate resources, and insufficient support services (Beyene et al., 2023; West & Kregel, 1993). Additionally, attitudinal barriers from faculty, staff, and peers and inadequate institutional policies hinder full inclusion (Nash et al., 2022; Paul et al., 2020). International authorities recognize the right to inclusive education, but implementation remains challenging (Kanter, 2019; Sandoval-Gomez et al., 2020). To address these issues, institutions should adopt universal design principles, improve communication with stakeholders, and provide better training for educators (Amaral, 2020; Reed et al., 2006). Furthermore, revitalizing libraries as learning commons and enhancing disability resource professionals’ roles can support students with disabilities (Beyene et al., 2023; Nash et al., 2022). A coordinated approach involving all stakeholders is crucial to ensure equal access and success for students with disabilities in higher education.

Research indicates that while many students with disabilities use computers and the Internet, many require adaptations to use these technologies effectively (Fichten et al., 2000; Mack et al., 2023; Zorec et al., 2024). Barriers include a lack of appropriate assistive technologies (ATs) and insufficient training for students and educators (Sarasola Sánchez-Serrano et al., 2020). Studies show that ATs can positively impact educational engagement, academic self-efficacy, well-being, and psychosocial outcomes for students with disabilities in higher education (McNicholl et al., 2021, 2023). However, people with disabilities are less likely to use computers and the Internet, partly due to socioeconomic factors (Bastien et al., 2020; Dobransky & Hargittai, 2006; Scanlan, 2022). To address these issues, schools and universities should provide multimedia elements with subtitles, transcriptions, and editable formats (Rodrigo & Tabuenca, 2020). Additionally, adaptive technology laboratories can facilitate equitable access to education for students with disabilities (Grodzinsky, 1997; Lunsford & Bargerhuff, 2006). Overall, meeting AT needs is crucial for enhancing the educational experience of students with disabilities. Artificial intelligence-driven assistive technologies can provide personalized and adaptive learning environments, improving accessibility for students with learning or sensory disabilities. These technologies can reduce cognitive load, enhance understanding, and boost student confidence (Al Omoush & Mehigan, 2023).

Artificial intelligence-driven assistive technologies that come pre-installed in mainstream devices, such as screen readers and text-to-speech functions, offer practical solutions for enhancing accessibility without additional costly equipment. These technologies support sensory, physical, learning, and attention disabilities (Koch, 2017). For example, Apple’s VoiceOver© screen reader enables users with visual impairments to navigate their devices using auditory feedback, and Microsoft’s Math Solver© helps students with mathematics-related learning disabilities by providing step-by-step solutions and explanations for various mathematics problems (Koch, 2017).

Artificial intelligence has the potential to significantly mitigate access issues for students with disabilities by providing personalized, adaptive, and accessible educational experiences. Integrating AI-driven tools and assistive technologies into educational settings can empower students with disabilities, creating more inclusive learning environments that cater to the diverse needs of all students (Koch, 2017).

3.3. Artificial Intelligence for Students with Disabilities in STEM Education

Artificial intelligence has immense potential to support students with disabilities in STEM education by offering personalized learning experiences and enhancing accessibility (How & Hung, 2019; Martiniello et al., 2020). Developers have designed AI-powered tools to assist students with various disabilities, such as visual impairments, dyslexia, and speech disorders (Gupta et al., 2024). Artificial intelligence in STEM education enhances personalized learning, advanced analytics, and instructional automation. Tailoring educational resources to individual needs addresses practical challenges and improves learning outcomes for students with learning disabilities (Ogunkunle & Qu, 2020). Applying data mining algorithms to optimize the selection of educational resources ensures that students receive content best suited to their learning needs. This personalized approach enhances engagement and supports more effective learning, particularly for students struggling with traditional instructional methods (Ampadu, 2023).

However, ethical concerns surrounding data bias, privacy, and potential discrimination against students with disabilities raise important issues the field must address (Pierrès et al., 2024). To ensure that AI benefits all students, inclusive AI development must consider the specific needs of students with disabilities (Kharbat et al., 2021; Rice & Dunn, 2023). Despite the promising potential of AI, there is a notable gap in comprehensive studies evaluating its impact on educational outcomes for students with disabilities in STEM fields (Bhatti et al., 2024; Leddy, 2010; Zhai et al., 2021). Addressing these challenges and involving students with disabilities in the AI development process is essential for maximizing the benefits of AI in education (Thurston et al., 2017).

The use of AI in STEM education for students with disabilities is an emerging and growing area of interest. While there is extensive literature on AI applications in STEM education, a limited focus remains on leveraging AI to support students with disabilities in STEM settings specifically (Koch, 2017). However, educators are increasingly adopting AI technologies to enhance the learning experiences of students with disabilities in STEM education. The integration of assistive robotics has shown great promise in empowering these students and promoting their inclusion in scientific and technological fields (Demirbilek & Talan, 2022). Assistive robots provide interactive, hands-on experiences that make STEM subjects more accessible, allowing students with disabilities to engage in ways that meet their individual needs. In addition, these technologies foster the development of critical thinking and problem-solving skills essential for success in STEM (Demirbilek & Talan, 2022).

Artificial intelligence-driven assistive technologies, such as virtual learning environments, intelligent tutoring systems, and assistive robotics, further facilitate access to STEM education for students with intellectual disabilities. These technologies help overcome communication barriers and offer real-time support to enhance learning experiences (Rice & Dunn, 2023). Furthermore, the RAISE (Robotics & AI to Improve STEM) program has demonstrated success in helping children with autism develop STEM skills, such as coding, while also improving their social–emotional and communication abilities (Hughes et al., 2022). The program uses an AI companion that adapts to the needs of each student, providing personalized support and feedback, which fosters peer communication and engagement. By incorporating UDL principles, the RAISE program ensures multiple means of engagement, representation, and expression, allowing students to develop and express their knowledge and skills more effectively over time (Hughes et al., 2022).

As exemplified by the RAISE program, integrating advanced technologies significantly enhances educational outcomes for students with disabilities by offering personalized support and fostering essential skills. By aligning with UDL principles, these technologies provide varied ways of engaging students, presenting information, and allowing students to demonstrate their learning, which ultimately support a more inclusive and effective learning environment (Hughes et al., 2022).

4. Precautions for AI Implementation and Research

Implementing AI technologies involves various challenges requiring careful planning and execution to ensure safety, effectiveness, and ethical compliance. In sensitive areas like healthcare and education, where AI systems collect vast amounts of personal data, stakeholders must address concerns about privacy rights and potential data breaches. They should implement robust encryption, access controls, and data anonymization techniques to prevent unauthorized access. Additionally, organizations must establish transparent data practices, enforce stringent data protection measures, and clarify data ownership and control policies (He et al., 2019; Vasquez et al., 2024).
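As one illustration of the data protection measures described above, the following minimal Python sketch shows how a student record might be pseudonymized before analysis. It is a sketch under stated assumptions rather than a recommended implementation: the field names (`student_id`, `name`, `email`, `quiz_score`), the placeholder key, and the `pseudonymize` function are hypothetical and are not drawn from the cited sources. Keyed hashing of this kind is pseudonymization rather than full anonymization, because remaining fields may still allow re-identification.

```python
import hashlib
import hmac

# Hypothetical secret key; in practice it would be stored separately from the data.
SECRET_KEY = b"replace-with-a-secret-kept-outside-the-dataset"

# Hypothetical names of fields that directly identify a student.
DIRECT_IDENTIFIERS = {"name", "email"}


def pseudonymize(record: dict, key: bytes = SECRET_KEY) -> dict:
    """Return a copy of the record with direct identifiers removed and
    the student ID replaced by a keyed hash (HMAC-SHA256)."""
    student_id = str(record["student_id"]).encode("utf-8")
    token = hmac.new(key, student_id, hashlib.sha256).hexdigest()
    cleaned = {
        field: value
        for field, value in record.items()
        if field not in DIRECT_IDENTIFIERS and field != "student_id"
    }
    cleaned["student_token"] = token
    return cleaned


# Illustrative record with made-up values.
example_record = {
    "student_id": 1042,
    "name": "Example Student",
    "email": "student@example.org",
    "quiz_score": 87,
}
print(pseudonymize(example_record))
```

Because the same key always yields the same token for a given student ID, records can still be linked across datasets for analysis without exposing names or email addresses, provided the key itself is protected by the access controls discussed above.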

Transparency in AI algorithms is also crucial for building trust among users and stakeholders. Explainable AI (XAI) techniques can provide clear insights into decision making, thus fostering confidence in the technology’s outcomes (Wiencierz & Lünich, 2022). These techniques aim to make the operations of AI systems more transparent and interpretable so that users, developers, or stakeholders can understand how and why an AI system reaches a particular decision or outcome (Ali et al., 2023).

Regulatory compliance is another significant consideration in settings where AI must adhere to stringent standards. Navigating the regulatory landscape is essential to ensure that AI applications meet legal and ethical requirements (Nilsen et al., 2023). Furthermore, interoperability and standardization are critical for seamlessly integrating AI systems into existing infrastructure. Standardized protocols and formats facilitate efficient data exchange and operation across different platforms, ensuring AI applications function cohesively within the broader technological ecosystem (Svedberg et al., 2022).

Artificial intelligence in education offers potential benefits but raises significant ethical concerns regarding fairness, confidentiality, and algorithmic prejudice (Barnes & Hutson, 2024; Vasquez et al., 2024; Weber, 2020). Ensuring equitable access to AI technologies is crucial to prevent exacerbating existing educational and social inequalities (Baker & Hawn, 2022; Vasquez et al., 2024; Weber, 2020). For example, students in well-funded schools may have greater access to cutting-edge AI tools. Algorithmic bias stemming from biased training data can perpetuate inequalities and unfair admissions, grading, and assessments, highlighting the importance of designing AI systems that are transparent, explainable, and developed with a critical awareness of potential biases (Slimi & Carballido, 2023; Vasquez et al., 2024).

Researchers emphasize the need for comprehensive ethical frameworks (Caccavale et al., 2022) and strategies to mitigate bias, such as diverse datasets and adherence to ethical guidelines (Barnes & Hutson, 2024). Addressing these challenges requires interdisciplinary collaboration (Barnes & Hutson, 2024), policy regulation (Slimi & Carballido, 2023), and education about AI ethics (Weber, 2020). Efforts to ensure algorithmic fairness in education should consider various notions of fairness (Kizilcec & Lee, 2023) and focus on moving from unknown to known bias and from fairness to equity (Baker & Hawn, 2022). Continuous vigilance and proactive strategies are crucial for responsible AI integration in education (Barnes & Hutson, 2024; Massala, 2023).
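To make the idea of differing fairness notions concrete, the following minimal Python sketch computes two commonly used measures for a set of automated pass/fail predictions: the gap in selection rates between groups (often called demographic parity) and the gap in true positive rates (often called equal opportunity). The choice of these two measures, the group labels, and the data are assumptions made for illustration and are not taken from the cited works.

```python
def rate(values):
    """Mean of a list of 0/1 values, or 0.0 for an empty list."""
    return sum(values) / len(values) if values else 0.0


def fairness_gaps(records):
    """Compute two fairness gaps from (group, actual, predicted) triples,
    where actual and predicted are 0 or 1."""
    groups = sorted({group for group, _, _ in records})
    selection_rate = {}
    true_positive_rate = {}
    for g in groups:
        # Share of students in group g who received a positive prediction.
        selection_rate[g] = rate([p for grp, _, p in records if grp == g])
        # Share of students in group g with a positive actual outcome
        # who also received a positive prediction.
        true_positive_rate[g] = rate(
            [p for grp, a, p in records if grp == g and a == 1]
        )
    return {
        "demographic_parity_gap": max(selection_rate.values()) - min(selection_rate.values()),
        "equal_opportunity_gap": max(true_positive_rate.values()) - min(true_positive_rate.values()),
    }


# Hypothetical data: (group, actual outcome, predicted outcome).
example_records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 0, 1), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 1), ("B", 0, 0), ("B", 0, 0),
]
print(fairness_gaps(example_records))
```

In this made-up example, the two groups have identical true positive rates but different selection rates, so a system could appear fair under one notion and unfair under the other. This is one reason evaluations may need to report more than a single fairness measure.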

Integrating AI in education also presents opportunities to enhance instructional methodologies and personalize learning experiences. However, viewing AI as a tool to augment rather than replace teachers’ roles is essential, emphasizing the importance of soft skills such as emotional intelligence and creativity. Ongoing professional development and support for teachers are crucial to effectively integrate AI tools into their teaching practices and address ethical considerations. Educators play a vital role in teaching students about the nature of AI, its potential biases, and its impact on society, fostering students’ critical perspective and ethical awareness. Ultimately, navigating the ethical implications of AI integration in education requires a conscientious approach that prioritizes equitable access, ethical awareness, and the cultivation of technical and soft skills to prepare students for a technologically advanced future while upholding shared values of equity, inclusivity, and human dignity (Vasquez et al., 2024).

Closed vs. Open Bot: Information Source and Transparency

The debate between closed and open bots primarily revolves around their transparency and the source of information they utilize. Maslej and colleagues delineate several key differences between open and closed AI bots, focusing on accessibility, performance, transparency, and security in the 2024 Artificial Intelligence Index Report (Maslej et al., 2024).

Developers retain exclusive access to closed models (e.g., Google’s Gemini), keeping them fully closed. In contrast, some models, including OpenAI’s GPT-4 and Anthropic’s Claude 2, provide limited access via an API without releasing their model weights for independent scrutiny or modification. Open models (e.g., Meta’s Llama 2, Stability AI’s Stable Diffusion) release their model weights publicly, allowing broader use and independent modifications (Maslej et al., 2024). Although it is a closed bot, the Education and Learning in an Inclusive Environment (EL) chatbot promotes transparency by allowing users to see where its output information comes from (Zaugg, 2024).

In terms of performance, closed models consistently surpass open models across various benchmarks. Closed models exhibit a median performance advantage of 24.2% on ten selected AI benchmarks, with performance differences ranging from 4.0% on tasks like GSM8K to 317.7% on tasks like AgentBench. While open models offer accessibility and modifiability, they generally lag behind closed models in performance, as evidenced by significant performance gaps across various benchmarks (Maslej et al., 2024).

Transparency represents another critical distinction. Closed models typically score lower on transparency metrics, with an average transparency score of 30.9%, indicating limited access to information about their development and operations. Conversely, open models achieve significantly higher transparency scores, averaging 51.3%. The openness of these models allows for greater auditability and a broader range of perspectives in their use and development (Maslej et al., 2024).

Security considerations further differentiate these models. Proponents of closed models argue that controlled access reduces the likelihood of their use for malicious purposes, such as creating disinformation or bioweapons. This controlled access helps mitigate security risks associated with powerful AI capabilities. However, while open models foster innovation and transparency, they also pose significant security risks. Their accessibility can facilitate the creation and dissemination of harmful content, necessitating robust security measures to prevent misuse (Maslej et al., 2024).

These differences between open and closed AI bots hold important implications for AI policy and practice. The superior performance of closed models suggests a trade-off between accessibility and performance. Policymakers and practitioners must balance the benefits of transparency and innovation associated with open models against the enhanced performance and potentially reduced security risks of closed models. This balance is crucial in shaping the future landscape of AI development and deployment (Maslej et al., 2024).

5. Conclusions

Despite federal policies to improve access (e.g., Rehabilitation Act of 1973, Americans with Disabilities Act of 1990, Individuals with Disabilities Education Improvement Act of 2004) and national initiatives to increase underrepresented populations in STEM fields (Executive Office of the President, 2020), significant achievement gaps in STEM content areas remain between students with and without disabilities (National Center for Education Statistics, 2022; U.S. Department of Education, 2019). While these policies and initiatives have laid the groundwork for improving access, they have not fully addressed the persistent disparities in STEM achievement for students with disabilities. However, recent advancements in AI offer promising solutions to enhance accessibility and support for students with disabilities to reduce educational barriers (Educating All Learners Alliance & New America, 2024; Marino et al., 2023).

This conceptual review highlights the underexplored intersection of AI, STEM education, and students with disabilities. We uncovered patterns and insights across related disciplines, offering a deeper understanding of current research trends and gaps to inform research, practice, and technological developments. Our findings emphasize AI’s potential to enhance equity and accessibility in STEM education. Additionally, we advocate for the intentional integration of UDL principles, STEM standards (e.g., AI4K12, NGSS, NCTM), and AI to promote greater access for students with disabilities. Ultimately, this article contributes evidence-based insights to inform future research and guide practical applications.

Current research highlights AI’s transformative potential in education, particularly for special education and STEM fields. Artificial intelligence can personalize learning, adapt content, and promote inclusion for students with disabilities (Nixon et al., 2024; Santos et al., 2024). However, challenges persist, including ethical concerns related to privacy, bias, and transparency (Holmes et al., 2022; Ifenthaler et al., 2024). Policymakers must prioritize AI research funding, update curricula, provide professional development for educators (Dieker et al., 2024; Mosher et al., 2024), and develop assessment metrics (Huong, 2024; Saxena, 2024). Experts recommend interdisciplinary collaboration, innovative methodologies, and long-term impact evaluations to maximize AI’s potential while maintaining ethical standards (Hwang et al., 2020; Tanveer et al., 2020). They stress developing clear ethical guidelines, enhancing teacher training, and creating frameworks that balance technological advancements with human-centered approaches (Holmes et al., 2022; Ifenthaler et al., 2024) to ensure equity and accessibility for all students.

Integrating AI with UDL principles enhances inclusivity and accessibility in education. By personalizing learning experiences and addressing diverse student needs, this approach would particularly benefit students with disabilities in STEM fields and improve academic outcomes through AI-based assignments with UDL options (Basham & Marino, 2013; Hyatt & Owenz, 2024; Izzo, 2012; Mrayhi et al., 2023; Roshanaei et al., 2023; Saborío-Taylor & Rojas-Ramírez, 2024; Song et al., 2024; Southworth et al., 2023).

6. Implications and a Call for Research

Artificial intelligence has great potential for enhancing the learning experiences and outcomes for students with disabilities, particularly in STEM courses. However, realizing this potential requires careful consideration of the implications for educational practice, future research, and technology development. The following subsections will explore these implications in detail, offering a roadmap for educators, researchers, and developers.

6.1. Implications for Practice

Incorporating AI in STEM education to serve students with disabilities requires clear guidelines for identifying AI tools that align with UDL principles (Center for Innovation, Design, and Digital Learning, 2024). Teachers must grasp best practices and strategies for implementing AI in the classroom to support individualized learning and ensure that AI tools meet diverse student needs (Center for Innovation, Design, and Digital Learning, 2024; Mohamed et al., 2022).

To provide a concrete example of how AI (e.g., AI4K12) can align with STEM standards and UDL principles, we highlight a lesson developed by the Education and Learning in an Inclusive Environment (EL) chatbot (Zaugg, 2024). The initial prompt was the following: “I need a lesson plan that incorporates the use of AI. Address NGSS MS-LS2-1 and MS-LS2-4 and one of the AI4K12’s big ideas. Provide recommendations for UDL and supporting students with disabilities.” Refer to Table 5 for the output of the Exploring Ecosystems with AI lesson plan. In this lesson, students utilize AI tools to analyze and interpret data on ecosystems, simulate the effects of changes in resource availability, and construct evidence-based arguments on how these changes impact populations, enhancing their understanding of ecological dynamics and the application of technology in environmental science.

Table 5.

EL Chatbot Output: Exploring Ecosystems with AI Lesson Plan.

To facilitate effective AI integration, it is essential to provide teachers with comprehensive resources, guidelines, ongoing coaching, and professional development tailored to varying levels of AI incorporation (Center for Innovation, Design, and Digital Learning, 2024; Dieker et al., 2024; Marino et al., 2023; Mosher et al., 2024). This support should accommodate teachers’ and students’ comfort and proficiency levels, as well as differing institutional policies and resources. Establishing clear ethical guidelines for AI usage is crucial (Center for Innovation, Design, and Digital Learning, 2024; Dieker et al., 2024; Marino et al., 2023; Mosher et al., 2024); teacher preparation programs and instructional coaches should equip educators to facilitate informed discussions about AI with students, parents, and colleagues. Additionally, teachers must understand the potential risks associated with AI and develop strategies to mitigate them (Center for Innovation, Design, and Digital Learning, 2024; Dieker et al., 2024; Marino et al., 2023; Mosher et al., 2024) while staying informed about emerging trends and technological advancements. Educators should explicitly teach students how to use AI tools, highlighting their limitations and promoting critical thinking and responsible usage.

Teacher preparation programs and professional development coaches must prioritize equipping educators with the knowledge and skills to select, adapt, and implement AI-driven solutions that enhance accessibility and foster inclusive learning environments (Center for Innovation, Design, and Digital Learning, 2024; Dieker et al., 2024; Mosher et al., 2024). This approach empowers educators to leverage AI’s potential while addressing the unique needs of students with disabilities in STEM fields. Furthermore, educators require dedicated time to explore and effectively utilize AI tools, allowing them to intentionally design instruction, modify activities, and support all students, including those with disabilities, in their use of AI. Effective assessment of AI tools is vital for measuring their impact on learning outcomes (Ouyang et al., 2023). Yet, educators need instruction and support in utilizing assessment data to inform their instruction while efficiently using time to reduce teacher burnout. Ultimately, educators play an irreplaceable role in facilitating learning, even in a technology- and AI-driven culture (Center for Innovation, Design, and Digital Learning, 2024; Kohnke, 2023; Ouyang et al., 2023).

6.2. Implications for Technology Developers

Understanding the diverse needs of public school systems is critical for technology developers aiming to create effective and inclusive educational tools. Developers must account for the significant differences in Internet connectivity between urban and rural districts, as well as the varying patterns of day-to-day technology use (Center for Innovation, Design, and Digital Learning, 2024). For example, the Federal Communications Commission (FCC) reported in 2021 that 23% of rural Americans lack broadband coverage compared to only 1.5% of urban Americans (Federal Communications Commission, 2021). Options for offline functioning should therefore be available. Developers should also consider cost limitations, particularly in underfunded districts (Hopcan et al., 2022; Mohamed et al., 2022). Collaboration between technology developers, educational practitioners, and researchers is crucial to ensure that AI tools are responsive to educational needs while adhering to ethical standards (Center for Innovation, Design, and Digital Learning, 2024; Nixon et al., 2024; Pierrès et al., 2024).

Moreover, developers should prioritize the creation of AI-embedded curricula and educational tools that integrate Universal Design for Learning (UDL) principles, providing accommodation and support for students with diverse abilities and knowledge levels. Research shows that students value interactive elements and support within various technology programs (Kohnke, 2023; Pierrès et al., 2024). Furthermore, integrating AI into extended reality environments could enhance these tools by offering virtual intelligent tutoring systems for content support, inquiry guidance, real-time feedback, and coaching (Kohnke, 2023). These features would enable educators and students to tailor virtual support, making extended reality and other virtual curriculum tools more inclusive and adaptive (Kohnke, 2023; Mohamed et al., 2022).

6.3. Future Research Directions and Their Implications

While AI education research has been an increasing trend, more of that research focuses on STEM education than on special education. Therefore, more researchers should focus on studying the use of AI in special education and serving individuals with disabilities (Bhatti et al., 2024; Marino et al., 2023; Zhai et al., 2021). Furthermore, future research should focus on the intersection of AI as a means for students with disabilities to access STEM content areas, exploring how AI-driven tools can bridge gaps in learning and engagement (Hopcan et al., 2022; Nixon et al., 2024). One initial avenue is researching AI’s role as a scaffold to enhance students’ learning processes (How & Hung, 2019; Rice & Dunn, 2023) and support teachers (Marino et al., 2023) rather than focusing on disability identification (Rice & Dunn, 2023). An additional opportunity would be to investigate the use of AI to support students’ social, emotional, behavioral, and academic development, which is essential for STEM careers (Hopcan et al., 2022; Hughes et al., 2012). As the attention to AI for supporting students with disabilities in STEM education matures, systematic and thematic literature reviews and meta-analyses should analyze this developing intersection. Once promising evidence is established, these AI applications should be studied longitudinally to determine the sustained impact on student learning.

Researchers should also prioritize multidisciplinary collaborations in which experts from fields such as educational technology, STEM education, and special education work together on the same project (Center for Innovation, Design, and Digital Learning, 2024; Jia et al., 2024). Collaborating with and utilizing the perspectives of educators, parents, and students with disabilities can foster inclusive, innovative, and pertinent applications (Marino et al., 2023; Pierrès et al., 2024; Rice & Dunn, 2023). Furthermore, researchers must give dissemination to practitioners through social media, blogs, podcasts, and practitioner articles the same importance as publication in peer-reviewed research journals (Center for Innovation, Design, and Digital Learning, 2024).

Researchers must address the ethical concerns surrounding AI, particularly data privacy, algorithmic bias, and equitable access, so that AI solutions benefit all students, including those from diverse backgrounds and with varying disabilities (Barnes & Hutson, 2024; Vasquez et al., 2024). Incorporating representative samples in AI research is essential to reflect the unique experiences of students with disabilities, enabling the development of inclusive and effective AI tools (Center for Innovation, Design, and Digital Learning, 2024; Jia et al., 2024; Marino et al., 2023). This requires researchers and policymakers to prioritize diversity in datasets, establish clear ethical guidelines, and collaborate across disciplines to ensure that AI integration in education upholds the principles of fairness, inclusivity, and equity. These efforts will help mitigate the risk of exacerbating existing inequalities and foster the creation of AI technologies that are accessible, transparent, and equitable for all learners.

Funding

This research received no external funding.

Data Availability Statement

No new data were created or analyzed in this study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Ahmad, K., Qadir, J., Al-Fuqaha, A., Iqbal, W., Elhassan, A., Benhaddou, D., & Ayyash, M. (2020). Artificial intelligence in education: A comprehensive review. Preprint, 1–42.

- AI4K12. (2020). Big ideas poster. Available online: https://ai4k12.org/resources/big-ideas-poster/ (accessed on 24 July 2024).

- AI4K12. (2024). Artificial intelligence (AI) for K-12 initiative (AI4K12). Available online: https://ai4k12.org/ (accessed on 24 July 2024).

- Ali, S., Abuhmed, T., El-Sappagh, S., Muhammad, K., Alonso-Moral, J. M., Confalonieri, R., Guidotti, R., Del Ser, J., Diaz-Rodriguez, N., & Herrera, F. (2023). Explainable artificial intelligence (XAI): What we know and what is left to attain trustworthy artificial intelligence. Information Fusion, 99, 101805.

- Almufareh, M. F., Kausar, S., Humayun, M., & Tehsin, S. (2024). A conceptual model for inclusive technology: Advancing disability inclusion through artificial intelligence. Journal of Disability Research, 3(1), 1–11.

- Al Omoush, M., & Mehigan, T. (2023). Personalised presentation of mathematics for visually impaired or dyslexic students: Challenges and benefits. Ubiquity Proceedings, 3(1), 409–415.

- Amaral, M. (2020). Wheelchair access and inclusion barriers on campus: Exploring Universal Design models in higher education. In Accessibility and Diversity in Education: Breakthroughs in Research and Practice (pp. 509–534). IGI Global.

- Ampadu, Y. (2023). Handling big data in education: A review of educational data mining techniques for specific educational problems. AI, Computer Science and Robotics Technology, 2023(2), 1–16.

- Baker, R. S., & Hawn, A. (2022). Algorithmic bias in education. International Journal of Artificial Intelligence in Education, 32(4), 1052–1092.

- Barnes, E., & Hutson, J. (2024). Navigating the ethical terrain of AI in higher education: Strategies for mitigating bias and promoting fairness. Forum for Education Studies, 2(2), 1229.

- Barua, P. D., Vicnesh, J., Gururajan, R., Oh, S. L., Palmer, E., Azizan, M. M., Kadri, N. A., & Acharya, U. R. (2022). Artificial intelligence enabled personalised assistive tools to enhance education of children with neurodevelopmental disorders—A review. International Journal of Environmental Research and Public Health, 19(3), 1192.

- Basham, J. D., & Marino, M. T. (2013). Understanding STEM education and supporting students through Universal Design for Learning. Teaching Exceptional Children, 45(4), 8–15.

- Basham, J. D., Marino, M. T., Hunt, C. L., & Han, K. (2020). Considering STEM for learners with disabilities and other diverse needs. In C. C. Johnson, M. J. Mohr-Schroeder, T. J. Moore, & L. D. English (Eds.), Handbook of Research on STEM Education (1st ed., pp. 128–137). Routledge.

- Bastien, F., Koop, R., Small, T. A., Giasson, T., & Jansen, H. (2020). The role of online technologies and digital skills in the political participation of citizens with disabilities. Journal of Information Technology & Politics, 17(3), 218–231.

- Beyene, W. M., Mekonnen, A. T., & Giannoumis, G. A. (2023). Inclusion, access, and accessibility of educational resources in higher education institutions: Exploring the Ethiopian context. International Journal of Inclusive Education, 27(1), 18–34.

- Bhatti, I., Mohi-U-din, S. F., Hayat, Y., & Tariq, M. (2024). Artificial intelligence applications for students with learning disabilities: A systematic review. European Journal of Science, Innovation and Technology, 4(2), 2.

- Bray, A., Devitt, A., Banks, J., Sanchez Fuentes, S., Sandoval, M., Riviou, K., Byrne, D., Flood, M., Reale, J., & Terrenzio, S. (2024). What next for Universal Design for Learning? A systematic literature review of technology in UDL implementations at second level. British Journal of Educational Technology, 55(1), 113–138.

- Caccavale, F., Caccavale, C. L., Gernaey, K., & Krühne, U. (2022). To be fAIr: Ethical and fair application of artificial intelligence in virtual laboratories. In SEFI 50th Annual Conference of The European Society for Engineering Education: Towards a new future in engineering education, new scenarios that European alliances of tech universities open up (pp. 1022–1030). Universitat Politècnica de Catalunya.

- CAST. (2018). UDL: The UDL guidelines version 2.2. Available online: http://udlguidelines.cast.org/ (accessed on 24 July 2024).

- Center for Innovation, Design, and Digital Learning. (2024). Inclusive intelligence: The impact of AI on education for all learners (pp. 1–99). Available online: https://ciddl.org/wp-content/uploads/2024/04/InclusiveIntelligence_a11y_navadded.pdf (accessed on 18 October 2024).

- Chng, E., Tan, A. L., & Tan, S. C. (2023). Examining the use of emerging technologies in schools: A review of artificial intelligence and immersive technologies in STEM education. Journal for STEM Education Research, 6(3), 385–407.

- Copeland, B. J., & Proudfoot, D. (2007). Artificial intelligence: History, foundations, and philosophical issues. In Philosophy of psychology and cognitive science (pp. 429–482). North-Holland.

- Crevier, D. (1993). AI: The tumultuous history of the search for artificial intelligence. Basic Books. Available online: https://research.ebsco.com/linkprocessor/plink?id=a8db9061-709f-3e7a-b47a-a7e07b3033d6 (accessed on 24 July 2024).

- Dai, C.-P., & Ke, F. (2022). Educational applications of artificial intelligence in simulation-based learning: A systematic mapping review. Computers and Education: Artificial Intelligence, 3, 100087.

- Demirbilek, M., & Talan, T. (2022). Integrating assistive robotics in STEM education to empower people with disabilities. In Designing, constructing, and programming robots for learning (pp. 179–200). IGI Global.

- Dieker, L., Hines, R., Wilkins, I., Hughes, C., Hawkins Scott, K., Smith, S., Ingraham, K., Ali, K., Zaugg, T., & Shah, S. (2024). Using an artificial intelligence (AI) agent to support teacher instruction and student learning. Journal of Special Education Preparation, 4(2), 78–88.

- Doabler, C. T., Therrien, W. J., Longhi, M. A., Roberts, G., Hess, K. E., Maddox, S. A., Uy, J., Lovette, G. E., Fall, A.-M., Kimmel, G. L., Benson, S., VanUitert, V. J., Emily Wilson, S., Powell, S. R., Sampson, V., & Toprac, P. (2021). Efficacy of a second-grade science program: Increasing science outcomes for all students. Remedial and Special Education, 42(3), 140–154.

- Dobransky, K., & Hargittai, E. (2006). The disability divide in internet access and use. Information, Communication & Society, 9(3), 313–334.

- Educating All Learners Alliance & New America. (2024). Prioritizing students with disabilities in AI policy (pp. 1–15). Policy Brief. Available online: https://drive.google.com/file/d/1iaY6s466mlvzo-9SmcuKbohxVF1Pc274/view?usp=sharing&usp=embed_facebook (accessed on 18 October 2024).

- Executive Office of the President. (2020). Progress report on the federal implementation of the STEM education strategic plan. Available online: https://trumpwhitehouse.archives.gov/wp-content/uploads/2017/12/Progress-Report-Federal-Implementation-STEM-Education-Strategic-Plan-Dec-2020.pdf (accessed on 27 July 2024).

- Federal Communications Commission. (2021). Fourteenth broadband deployment report (FCC 21-18; Broadband Progress Reports, pp. 1–209). Federal Communications Commission. Available online: https://www.fcc.gov/reports-research/reports/broadband-progress-reports/fourteenth-broadband-deployment-report (accessed on 23 December 2024).

- Ferrini-Mundy, J. (2000). Principles and standards for school mathematics: A guide for mathematicians. Notices of the American Mathematical Society, 47(8), 868–876.

- Fichten, C. S., Asuncion, J. V., Barile, M., Fossey, M., & de Simone, C. (2000). Access to educational and instructional computer technologies for post-secondary students with disabilities: Lessons from three empirical studies. Journal of Educational Media, 25(3), 179–201.

- Friedensen, R., Lauterbach, A., Kimball, E., & Mwangi, C. G. (2021). Students with high-incidence disabilities in STEM: Barriers encountered in postsecondary learning environments. Journal of Postsecondary Education and Disability, 34(1), 77–90.

- Gawande, V., Badi, H., & Al Makharoumi, K. (2020). An empirical study on emerging trends in artificial intelligence and its impact on higher education. International Journal of Computer Applications, 175, 43–47.

- Google LLC. (2019). Lookout—Accessibility app (Version 12/5/2024) [Computer software]. Available online: https://play.google.com/store/apps/details?id=com.google.android.apps.accessibility.reveal&hl=en_US (accessed on 22 December 2024).

- Grodzinsky, F. S. (1997). Computer access for students with disabilities: An adaptive technology laboratory. SIGCSE Bulletin, 29(1), 292–295.

- Groumpos, P. P. (2023). A critical historic overview of artificial intelligence: Issues, challenges, opportunities, and threats. Artificial Intelligence and Applications, 1(4), 4.

- Gupta, S., Gupta, S. B., & Gupta, M. (2024). Importance of artificial intelligence in achieving SDGs in India. International Journal of Built Environment and Sustainability, 11(2), 1–26.

- Hall, M., & Rathbun, A. (2020). Health and STEM career expectations and science literacy achievement of U.S. 15-year-old students: Stats in brief (NCES 2020-034; Stats in Brief, pp. 1–23). National Center for Education Statistics. Available online: https://nces.ed.gov/pubs2020/2020034.pdf (accessed on 26 December 2024).

- Hamet, P., & Tremblay, J. (2017). Artificial intelligence in medicine. Metabolism: Clinical & Experimental, 69, S36–S40.

- Hassabis, D., Kumaran, D., Summerfield, C., & Botvinick, M. (2017). Neuroscience-inspired artificial intelligence. Neuron, 95(2), 245–258.

- He, J., Baxter, S. L., Xu, J., Xu, J., Zhou, X., & Zhang, K. (2019). The practical implementation of artificial intelligence technologies in medicine. Nature Medicine, 25(1), 30.

- Holman, K., & Kohnke, S. (2024). Melding mindsets: Isolated content, same destination, hidden opportunity. Constellations: Online STEM Teacher Education Journal, 1(1), 3.

- Holmes, W., Porayska-Pomsta, K., Holstein, K., Sutherland, E., Baker, T., Shum, S. B., Santos, O. C., Rodrigo, M. T., Cukurova, M., Bittencourt, I. I., & Koedinger, K. R. (2022). Ethics of AI in education: Towards a community-wide framework. International Journal of Artificial Intelligence in Education, 32(3), 504–526.

- Hopcan, S., Polat, E., Ozturk, M. E., & Ozturk, L. (2022). Artificial intelligence in special education: A systematic review. Interactive Learning Environments, 31(10), 7335–7353.

- How, M.-L., & Hung, W. L. D. (2019). Educing AI-thinking in science, technology, engineering, arts, and mathematics (STEAM) education. Education Sciences, 9(3), 184.

- Hughes, C. E., Dieker, L. A., Glavey, E. M., Hines, R. A., Wilkins, I., Ingraham, K., Bukaty, C. A., Ali, K., Shah, S., Murphy, J., & Taylor, M. S. (2022). RAISE: Robotics & AI to improve STEM and social skills for elementary school students. Frontiers in Virtual Reality, 3.

- Hughes, S., Pennington, J. L., & Makris, S. (2012). Translating autoethnography across the AERA standards: Toward understanding autoethnographic scholarship as empirical research. Educational Researcher, 41(6), 209–219.

- Hulland, J. (2020). Conceptual review papers: Revisiting existing research to develop and refine theory. AMS Review, 10(1–2), 27–35.

- Huong, X. V. (2024). The implications of artificial intelligence for educational systems: Challenges, opportunities, and transformative potential. The American Journal of Social Science and Education Innovations, 6(3), 101–111.

- Hwang, G.-J., & Tu, Y.-F. (2021). Roles and research trends of artificial intelligence in mathematics education: A bibliometric mapping analysis and systematic review. Mathematics, 9(6), 584.

- Hwang, G.-J., Xie, H., Wah, B. W., & Gašević, D. (2020). Vision, challenges, roles and research issues of artificial intelligence in education. Computers and Education: Artificial Intelligence, 1, 100001.

- Hyatt, S. E., & Owenz, M. B. (2024). Using Universal Design for Learning and artificial intelligence to support students with disabilities. College Teaching, 1–8.