Abstract

Early detection of cancer is critical for effective treatment, particularly for aggressive malignancies such as skin cancer and brain tumors. This research presents an integrated deep learning approach that combines augmentation, segmentation, and classification techniques to identify diverse tumor types in skin lesions and brain MRI scans. Our method employs a fine-tuned InceptionV3 convolutional neural network trained on a multi-modal dataset comprising dermatoscopy images from the Human Against Machine (HAM10000) archive and brain MRI scans from the BraTS 2020 challenge dataset. To address image artifacts and class imbalance, we apply modality-specific preprocessing and Generative Adversarial Network (GAN)-based augmentation. The model achieves 97% accuracy in classifying images across ten categories: seven skin lesion types, two brain MRI classes, and an “undefined” class. These results suggest potential clinical applicability for multi-cancer detection.

1. Introduction

Cancer begins when damaged cells in our body grow uncontrollably instead of dying off and being replaced. Some unusual growths are harmless lumps called benign tumors, but others become dangerous, cancerous growths known as malignant tumors. These harmful tumors are serious because they can grow into nearby healthy tissues and spread to other parts of the body, creating new tumors called metastases [1].

Skin cancer is common and comes in three main types: melanoma, basal cell carcinoma (BCC), and squamous cell carcinoma (SCC). Melanoma is the most aggressive and dangerous type, whereas the non-melanoma skin cancers, BCC and SCC, are less likely to be fatal [2]. Early detection of melanoma is therefore critical: research indicates that it can raise survival chances by up to 90% compared with diagnosis at a later stage [3]. Unfortunately, detecting skin cancer early can be challenging. Even with medical imaging, malignant skin cancers and harmless spots often appear remarkably similar, making them difficult to distinguish [4].

Brain tumors are difficult to detect and can be dangerous even when they are not cancerous, because they can grow in critical areas of the brain. They fall into two broad types [5]: primary tumors, which originate in the brain, and secondary tumors, which spread to the brain from cancer in another part of the body [6]. There are over forty distinct types, and dangerous forms such as glioblastomas, astrocytomas, and oligodendrogliomas are common [7]. Early detection is also vital here, because malignant brain tumors can quickly invade surrounding brain tissue, leading to severe health decline [8].

Artificial Intelligence (AI), specifically deep learning, offers significant assistance in analyzing medical images such as skin photographs and brain scans. Convolutional Neural Networks (CNNs), which work like advanced pattern-recognition systems, are a key tool for identifying skin cancer: they can recognize suspicious skin lesions in images with accuracy comparable to that of dermatologists [9]. For brain tumors, CNNs help analyze MRI scans to detect tumors, precisely outline their boundaries (segmentation), and identify their type (classification), providing fast and accurate results [10]. These techniques can make early cancer diagnosis more accurate and efficient in hospitals, for both specialists and non-specialists [11].

Using AI on medical images also has challenges. These images often contain noise or unwanted marks that can hide vital details. In skin photographs, features such as hairs, air bubbles, or uneven lighting can obscure key parts of a lesion [12]. In brain MRI scans, signal from the skull and other non-brain tissue, or uneven signal intensity from the scanner, can make it harder to pinpoint and correctly classify tumors [13]. To overcome this, the images should first be cleaned with noise-reduction and artifact-removal techniques, a step that is essential for good model performance [14].

Training AI systems to recognize medical images can be challenging, especially for rare cancers, for which enough labeled images are not always available. Researchers have developed several ways to address this issue. One method is transfer learning: instead of building a new model from scratch, they start from an existing model that has already learned basic visual patterns from millions of everyday photographs, such as a model trained on ImageNet, and then adapt it to identify cancer in medical images. This approach allows AI to perform well even with limited medical data [15].

Another challenge is that some cancer types appear much less frequently in image collections than others (class imbalance). To prevent the AI from being biased toward common types, researchers use data augmentation, creating extra, slightly varied training images. A powerful technique for this purpose uses GANs, specialized AI systems that can generate realistic synthetic images of rare cancers. Adding these synthetic images helps the AI learn more effectively and improves its accuracy across all types, including rare ones [16].

1.1. Motivation

Early detection of aggressive cancers such as skin cancer and brain tumors greatly improves patient survival. Current clinical practice relies on a specialized computational tool for each cancer type, which fragments workflows in time-sensitive medical settings. The main challenge is the fundamental difference between these cancers, in both tissue type and imaging modality: the dermatoscopic features of skin lesions do not resemble the neuroradiological appearance of brain tumors. Creating a unified diagnostic system that can handle such morphologically distinct cancers remains an unresolved problem in computational oncology.

Compounding this challenge, medical imaging data often contain artifacts such as noise, occlusions, and scanner distortions, and they also suffer from imbalanced representation of rare cancer subtypes, which leads to inconsistent diagnostic performance across different populations. Our research tackles these two issues through a new artificial intelligence framework that pioneers cross-tissue diagnostic unification. Unlike existing siloed approaches, our solution combines multi-modal image analysis within a single computational architecture, specifically designed to overcome the histological heterogeneity barrier between epithelial (skin) and neural (brain) malignancies. This approach demonstrates for the first time that a unified system can maintain diagnostic accuracy across fundamentally different cancer types while accounting for real-world data imperfections. The scalability of our framework’s architecture creates a foundation for including additional cancer types, a crucial step toward comprehensive oncology screening tools that improve diagnostic accessibility for both specialists and primary care providers.

1.2. Contribution

There is a critical need for a fast, reliable, and accurate framework for early cancer diagnosis, especially for complex types such as skin cancer [1,3,4] and brain tumors [17]. To overcome limitations of current systems, such as hardware fragmentation, image artifacts [12,13,14], and class imbalance [16], we present a unified framework with the following innovative contributions:

1. First Unified Architecture for Multi-Source Oncology Imaging:

Unlike isolated diagnostic tools, we introduce an end-to-end pipeline that integrates conditional GANs (cGANs), U-Net, and InceptionV3 to handle both dermatoscopy images and brain MRIs within a single computational system. This removes cross-platform compatibility issues in clinical workflows.

2. Domain-Adapted Transfer Learning Protocol:

We developed a new two-phase training method in which InceptionV3 (pre-trained on natural images) is fine-tuned using task-specific feature extraction. By fine-tuning its convolutional filters on the combined dermatological (HAM10000) and neuroimaging (BraTS 2020) datasets [15], we enable faster convergence while maintaining discriminative ability across tissue types.

3. Modality-Specific Artifact Suppression:

We introduce dual-path preprocessing:

- Dermatoscopy branch: Suppresses non-biological artifacts (hairs, air bubbles) through inpainting-based masking.

- Neuroimaging branch: Applies skull stripping and bias field correction to isolate parenchyma [16].

This removes confounding noise without affecting pathological signatures.

4. Hybrid Data Augmentation for Rare Classes:

We address dataset imbalance through synthetic minority oversampling: Conditional GANs generate anatomically realistic tumor variants [12], while geometric transformations increase lesion diversity. This combined approach reduces bias toward common cancers.

5. Validation of High-Precision Multi-Class Diagnosis:

Our system achieves 97% accuracy in ten-class discrimination (seven skin lesion types, two brain MRI classes covering tumor and healthy tissue, and one indeterminate class), demonstrating for the first time that a single system can reliably identify histologically different malignancies.
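The inpainting-based hair suppression named in contribution 3 is not specified further at this point. As a minimal sketch under that gap, the code below uses a morphological black-hat filter (grayscale closing minus the image), a common way to highlight thin dark structures such as hairs, and then fills the flagged pixels with the closed image as a crude stand-in for true inpainting. The window size `k`, the threshold `thresh`, and the helper names are illustrative assumptions; the sketch assumes a grayscale image scaled to [0, 1] and needs only NumPy (1.20 or later, for `sliding_window_view`).

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def grey_closing(img: np.ndarray, k: int) -> np.ndarray:
    """Grayscale closing (max filter, then min filter) with a k x k window; k must be odd."""
    pad = k // 2
    dilated = sliding_window_view(np.pad(img, pad, mode="edge"), (k, k)).max(axis=(-2, -1))
    return sliding_window_view(np.pad(dilated, pad, mode="edge"), (k, k)).min(axis=(-2, -1))


def remove_hair(gray: np.ndarray, k: int = 9, thresh: float = 0.1):
    """Hypothetical helper: flag thin dark structures and fill them in.

    Black-hat = closing - image is large where a dark structure is narrower
    than the k x k window (hairs) and near zero on wide dark regions (lesions).
    """
    closed = grey_closing(gray, k)
    mask = (closed - gray) > thresh
    cleaned = np.where(mask, closed, gray)  # crude fill, not true inpainting
    return cleaned, mask
```

The window must be wider than a hair but narrower than the lesion; otherwise the lesion itself is flagged. For a more faithful fill, OpenCV's `cv2.inpaint` (for example with the `cv2.INPAINT_TELEA` flag) could replace the `np.where` step.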

1.3. Paper Structure

The remainder of this paper is organized as follows. Section 2 (Related Work) reviews recent studies on detecting skin cancer and brain tumors using deep learning, with a particular focus on how tools such as CNNs currently assist physicians and the general public. This review highlights both progress and areas where more work is needed.

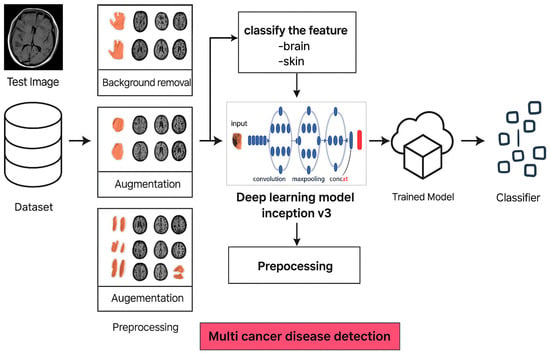

Section 3 (Methodology and Processing) describes how the proposed framework operates. Figure 1 provides a visual roadmap of the entire process. It starts with raw medical images (skin photographs or brain scans), which are first cleaned. Then, a U-Net strips away the background and precisely highlights tumor areas. Images are then resized for consistency. Because some cancers rarely appear in image collections (datasets), conditional GANs (cGANs) are used to generate realistic additional examples so that the system learns all types equally well. Finally, the prepared datasets are split into training, testing, and validation sets. InceptionV3, a powerful pattern-recognition network pre-trained on everyday objects, is then retrained on the combined skin and brain cancer data to detect subtle signs of disease across these diverse cancer types.

Figure 1.

Overview of the image processing and classification workflow in the proposed cancer detection model.

Section 4 (Results and Discussion) presents and discusses the test results. The proposed framework proved highly accurate, correctly classifying 97% of cases across all ten categories, including difficult distinctions among seven skin lesion types, brain MRI classes, and indeterminate cases. Importantly, it remained dependable on new medical images it had never encountered, demonstrating its real-world usefulness. While the framework excels at identifying common melanomas, the analysis also reveals opportunities to improve the detection of rare tumors and of low-quality scans. These results confirm that the system works effectively as a single diagnostic tool for multiple cancers.

Section 5 (Conclusion and Future Prospects) summarizes the key achievements, showing how a single, adaptable framework successfully detects both skin and brain cancers. The framework is offered as an online, real-time service for cancer screening and diagnosis, simplifying its use in clinics or at home. This section also explains the plan for generalizing and enhancing the proposed framework to detect and classify all types of cancer.

2. Related Work

Lack of data is one of the major problems in medical image analysis, especially in cancer detection tasks such as identifying brain tumors and skin cancer. Building an effective diagnostic framework requires training an AI model on vast numbers of labeled examples, which are often scarce. Researchers overcome this limitation with techniques that artificially expand these datasets, such as modifying existing images or generating synthetic ones.

Skin Cancer Classification: Soyal et al. [18] used GANs to create synthetic skin lesion images that closely mimic real data distributions. They further employed Enhanced Super-Resolution Generative Adversarial Networks (ESRGANs) to improve the resolution of existing images. This two-pronged approach produced a more diverse and informative training dataset for their CNN models. The authors paired these models with a classic machine learning classifier, the Support Vector Machine (SVM), reaching 96% accuracy with VGG19 combined with an SVM on the ISIC 2019 dataset. This result shows how combining deep learning with established machine learning methods can sharpen the precision of skin cancer diagnosis.

Meanwhile, Lembhe et al. [19] investigated methods to enhance low-quality medical images using advanced image processing techniques and paired these with convolutional neural networks (CNNs) for skin cancer detection. Their results showed a strong 95.1% accuracy rate; however, the research does not fully explain the details of their process, such as exactly how they refined images or evaluated outcomes.

Yu et al. [20] emphasized the importance of effective data enhancement in improving model performance. They used a two-step approach to enhance their training data. First, they created realistic synthetic images of skin lesions using GANs. Next, they applied simple adjustments, such as cropping, rotating, or flipping, to add variety. Combining this approach with pre-trained CNN models such as VGG19 and InceptionV3, they achieved a top accuracy of 96.90% on the HAM10000 dataset. Nasr-Esfahani et al. [21] investigated a deep learning architecture that combines the Inception-ResNetV2 and NASNet-Mobile models. These models were fine-tuned for skin cancer classification by training only the later layers, with tuned hyperparameters, while keeping the earlier layers frozen to preserve their learned features. This method achieved an accuracy of 97.1% on the HAM10000 dataset, although it was not evaluated on the more recent ISIC 2023 dataset.

Guo et al. [22] developed a two-part system to address hair occluding skin lesions in medical images. First, their method locates and outlines the lesion areas. Then, a generative model produces realistic, hair-free images of those areas. This process minimizes errors caused by hair covering essential details, which can otherwise lead to incorrect diagnoses. Bhara et al. [23] developed a generative model that improves the realism of synthetic skin lesion images. However, they did not report specific performance statistics, making it hard to assess its value for real-world diagnostics.

Li et al. [24] emphasized the importance of hair removal as a preprocessing step before feeding images into a CNN architecture for classification. They utilized a hair removal algorithm in conjunction with an Efficient Attention Net CNN, achieving an accuracy of 94.1%. This approach effectively removes hair from images while preserving essential information about skin lesions, although its accuracy is slightly lower than that reported in other studies.

Brain Tumor Classification: Data augmentation techniques have also proven effective for brain tumor classification. Ishan et al. [25] developed a framework using Conditional GANs (cGANs) to generate synthetic brain tumor MR images from various tumor grades. This approach addresses the class imbalance in medical datasets and enriches the training data for deep learning models. Their method, when combined with a 3D CNN architecture, showed promising results in brain tumor segmentation.

Khan [17] presents an approach that leverages diverse networks to detect brain tumors on the BraTS 2020 dataset. Although this method requires significant computational resources, it achieved an accuracy of 98.34%. A.A. Asiri [6] fine-tuned ResNet50 and U-Net models for brain tumor classification on the TCGA-LGG and TCIA datasets, achieving an accuracy of 94.21%. This approach performs well with limited data but may not generalize well to unseen data. S. Şengör [2] achieved an accuracy of 99.04% by applying transfer learning with a pre-trained VGG16 model to classify brain tumors on public datasets such as Rembrandt. This outcome highlights the potential of pre-trained models, particularly when high-quality models are used.

Recent research on skin cancer and brain tumor diagnosis shows that data augmentation, precise preprocessing, and transfer learning can together overcome the twin obstacles of scarce annotations and heterogeneous modalities. Synthetic lesion generation enriches training sets but often demands heavy computation, whereas targeted preprocessing, such as careful hair-artifact removal in dermatoscopy, offers a lightweight alternative when the algorithms are rigorously tuned to avoid spurious distortions. Meanwhile, repurposing convolutional backbones pre-trained on large natural-image corpora speeds up convergence and raises baseline performance, provided that architectural and hyperparameter adjustments align the model’s representations with clinical nuances; for instance, what works for surface skin photography may not be effective on T1-weighted brain scans. As Table 1 illustrates, the most robust pipelines integrate these components to balance computational cost against diagnostic accuracy in both dermatological and neuroradiological cancer classification tasks.

Table 1.

Summary of the most recent research on cancer classification, detailing the methods, accuracy, strengths, and limitations of each approach.

3. Methodology and Processing

Identifying cancers such as those affecting the skin and brain is a complex challenge, and current diagnostic results can be inconsistent. To address it, we propose a specialized three-phase framework using optimized neural networks. The framework was designed around the current real-world challenges in cancer diagnosis summarized in Table 1. This approach significantly enhances detection accuracy and is offered as a free online cancer detection tool. As illustrated in the proposed pipeline in Figure 2, the process unfolds in three distinct phases.

Figure 2.

Proposed Multi-Cancer Recognition Pipeline.

Phase 1. Data Augmentation and Class Balancing: Medical image datasets frequently lack sufficient examples of specific cancers. We use a specialized generative model (a cGAN) to generate realistic synthetic images for these underrepresented classes. The augmented dataset provides a stronger foundation for training accurate classifiers.

Phase 2. Feature Extraction: A U-Net model is employed to isolate the lesion or tumor region and extract the most telling visual characteristics from the enhanced images. These features capture the distinctive signatures of different cancer types, allowing each image to be assigned to one of ten broad categories.

Phase 3. Fine-tuned Classification for Specific Cancer Types: Finally, the features identified in Phase 2 are fed into a fine-tuned InceptionV3 model (a powerful image recognition network). This last stage delivers the final diagnosis, differentiating between specific skin lesion types (Melanoma (MEL), Nevus (NV), Basal Cell Carcinoma (BCC), Actinic Keratosis (AKIEC), Benign Keratosis (BKL), Dermatofibroma (DF), and Vascular Lesion (VASC)) and distinguishing brain tumors from healthy brain tissue.

3.1. Data Collection and Preprocessing

Our dataset encompasses various modalities to represent different types of cancer.

- Skin Cancer: We utilize dermoscopic images from publicly available datasets, such as the Human Against Machine (HAM10000) archive [26]. These images are close-up, magnified views of skin lesions captured with a specialized device called a dermatoscope. The HAM archive provides dermoscopic pictures from a diverse population with various skin conditions, including the following classifications:

  - MEL: A severe form of skin cancer arising from pigment-producing cells.

  - NV: Commonly known as a mole; a common, usually benign growth of pigment cells.

  - BCC: The most frequent type of skin cancer, typically slow-growing and treatable.

  - AKIEC: A precancerous skin lesion that may develop into squamous cell carcinoma if left untreated.

  - BKL: A noncancerous, scaly growth on the skin.

  - DF: A benign skin tumor composed of fibrous tissue.

  - VASC: A noncancerous abnormality of blood vessels in the skin.

- Brain Tumors: Brain tumor data is obtained from MRI scans sourced from the BraTS 2020 challenge dataset [27]. MRI scans provide detailed anatomical information about the brain and surrounding tissues. The BraTS 2020 dataset specifically focuses on gliomas, a common type of brain tumor. It includes multi-modal MRI scans (e.g., T1-weighted, T2-weighted, contrast-enhanced) that capture different aspects of the tumor and surrounding brain tissue. Our system is designed to distinguish between brain scans with tumors and those with normal, healthy brain tissue.
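The source does not describe how single-channel MRI slices were adapted to InceptionV3, whose standard ImageNet-pretrained configuration expects 299×299 RGB input. One common option, sketched here purely as an assumption, is to rescale three co-registered modalities of the same slice (for example T1, T2, and contrast-enhanced T1) independently to [0, 1] and stack them as the three channels. Nearest-neighbour resizing keeps the example dependency-free; all helper names are hypothetical.

```python
import numpy as np


def minmax(x: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Rescale one MRI slice to [0, 1]; raw MRI intensities have no fixed scale."""
    x = x.astype(np.float64)
    return (x - x.min()) / (x.max() - x.min() + eps)


def resize_nearest(img: np.ndarray, size: int) -> np.ndarray:
    """Nearest-neighbour resize of a 2-D array to (size, size)."""
    rows = (np.arange(size) * img.shape[0] / size).astype(int)
    cols = (np.arange(size) * img.shape[1] / size).astype(int)
    return img[rows[:, None], cols[None, :]]


def mri_to_three_channels(t1, t2, t1ce, size: int = 299) -> np.ndarray:
    """Stack three co-registered modalities of one slice as an (size, size, 3) image."""
    return np.stack([resize_nearest(minmax(m), size) for m in (t1, t2, t1ce)], axis=-1)
```

In practice a library resampler with bilinear interpolation would usually replace `resize_nearest`, and a single modality could instead be repeated across all three channels.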

This study utilizes publicly available medical image datasets for the analysis of skin cancer [26] and brain tumors [27]. It is assumed that informed consent was obtained from participants during the initial collection of these datasets. We acknowledge the importance of using ethically sourced data for research purposes. Our research adheres to the ethical principles regarding the use of human subjects.

Dermatologist and Neurologist Annotations: Dermatologists and neurologists, experts in skin cancer and brain tumors, respectively, carefully marked the tumor areas within the images from both datasets. These annotations serve as ground truth for training and evaluating our proposed multi-cancer recognition system.

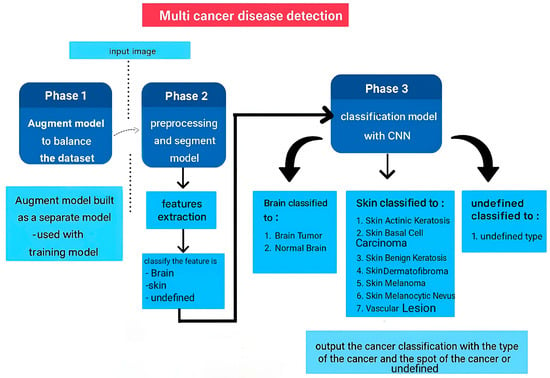

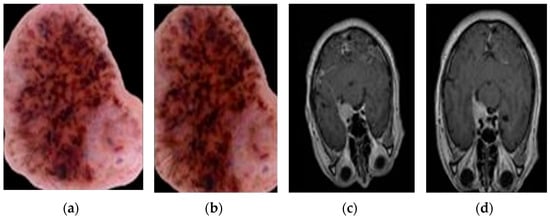

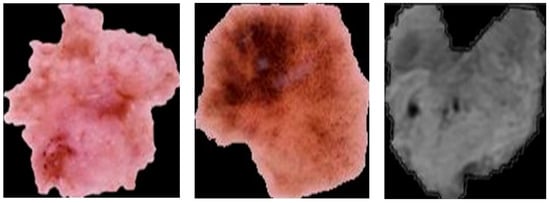

Figure 3 presents sample images from the datasets used in this work. Figure 3a,b depict examples of dermoscopic skin lesions obtained from the HAM archive. Figure 3c shows an MRI brain scan from the BraTS 2020 dataset.

Figure 3.

Sample images from public datasets. (a,b) are dermoscopic skin lesions, and (c) MRI brain scan.

Image Preprocessing and Normalization

In the data preprocessing stage, we performed image normalization to standardize the pixel intensity values within a specific range. This step plays a crucial role in enhancing the training process of deep learning models. Normalization ensures all pixel values fall within a similar range, leading to several advantages:

- Improved Training Stability: Deep learning models rely on gradient descent for optimization. Normalization prevents features with large values from dominating the gradients, leading to smoother and more stable training.

- Faster Convergence: When pixel intensities are on a similar scale, the model can learn the optimal weights and biases more efficiently, resulting in faster convergence during training.

- Reduced Sensitivity to Acquisition Conditions: Normalization minimizes the impact of minor variations in image acquisition conditions (e.g., lighting, camera settings) on pixel intensities. This makes the model less sensitive to acquisition variations within the datasets.

- Enhanced Activation Function Performance: Certain activation functions used in deep learning models, such as sigmoid or tanh, perform best within a limited input range and saturate outside it. Normalization helps keep inputs within these ranges, improving the effectiveness of these activation functions.

In our multi-cancer recognition system, dealing with both skin cancer (dermoscopic images) and brain tumors (MRI scans), normalization is significant for the following reasons:

- Bridging the Gap Between Modalities: Dermoscopic images and MRI scans employ different technologies, resulting in a range of pixel intensities. Normalization helps bridge this gap and ensures both types of images are processed on a similar scale within the model.

- Managing Data Variability: Skin lesions and brain tumors can exhibit diverse appearances. Normalization helps manage this variability by focusing on the relative relationships between pixel intensities within each image, allowing the model to learn more effectively from both datasets combined.

We compared the performance of our classifier under three normalization techniques:

- Mean and Standard Deviation Normalization: We first subtracted each image's own mean RGB values and divided by its standard deviation, following the approach suggested by [28].

- Training Set Mean Normalization: Another approach subtracted the mean RGB values computed from the training-set images only, followed by division by the training-set standard deviation [29].

- ImageNet Mean Subtraction: We also employed pre-computed ImageNet mean subtraction as a normalization step. This method utilizes a constant value derived from the extensive ImageNet image database [30].
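The three normalization variants above differ mainly in where the statistics come from. The NumPy sketch below makes that explicit for images stored as float arrays of shape (H, W, 3) scaled to [0, 1]. The ImageNet channel means (0.485, 0.456, 0.406) are the values commonly used for [0, 1]-scaled RGB input; the source does not state which constants it used, so treat them, and the helper names, as assumptions.

```python
import numpy as np

# Commonly used ImageNet per-channel means for RGB images scaled to [0, 1] (assumed constants)
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406])


def per_image_standardize(img: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Variant 1: subtract this image's own per-channel mean and divide by its std."""
    mean = img.mean(axis=(0, 1), keepdims=True)
    std = img.std(axis=(0, 1), keepdims=True)
    return (img - mean) / (std + eps)


def fit_train_stats(train_images):
    """Variant 2, fitting step: per-channel mean and std over the training set only."""
    stack = np.stack(train_images).astype(np.float64)  # (N, H, W, C)
    return stack.mean(axis=(0, 1, 2)), stack.std(axis=(0, 1, 2))


def train_set_standardize(img: np.ndarray, mean: np.ndarray, std: np.ndarray,
                          eps: float = 1e-8) -> np.ndarray:
    """Variant 2, applying step: reuse the training-set statistics for any split."""
    return (img - mean) / (std + eps)


def imagenet_mean_subtract(img: np.ndarray) -> np.ndarray:
    """Variant 3: subtract fixed ImageNet channel means (no division)."""
    return img - IMAGENET_MEAN
```

Variant 2 fits its statistics on the training split only and reuses them unchanged for validation and test images, which avoids leaking information from held-out data.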

3.2. Data Augmentation

To address the data imbalances described in Section 3.1, we used techniques such as rotation, flipping, cropping, and color jittering to artificially expand the dataset. For datasets with severe class disparity, we also used GAN-based synthetic data generation to create realistic samples for underrepresented classes. We carefully balanced oversampling of minority classes against undersampling of majority classes to avoid overfitting while retaining valuable information. We applied and evaluated various augmentation methods to ensure that the model gave balanced weight to every class while improving its performance.
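The mix of oversampling and undersampling described above can be planned per class. The sketch below is an illustration under stated assumptions, not the authors' procedure: it picks a single target count (the hypothetical parameter `target`), undersamples classes above it, and tops up classes below it with synthetic images from the GAN or from geometric augmentation.

```python
from collections import Counter


def balancing_plan(labels, target):
    """Hypothetical helper: per-class number of real images to keep and of
    synthetic images to generate so that every class ends up with `target`."""
    plan = {}
    for cls, n in sorted(Counter(labels).items()):
        keep = min(n, target)  # undersample classes above the target
        # oversample classes below the target with synthetic images
        plan[cls] = {"keep": keep, "synthesize": target - keep}
    return plan
```

Choosing `target` between the minority and majority class sizes is the trade-off the text describes: a low value discards many real majority images, while a high value makes minority classes mostly synthetic.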

3.2.1. Deep Convolutional Generative Adversarial Network (DCGAN)

We leveraged DCGANs to address the class imbalance in our image datasets. Class imbalance is a common challenge where some image types (classes) are significantly less frequent than others. This can lead to problems during model training as the model might prioritize the more frequent classes.

A DCGAN is a type of GAN designed explicitly for generating realistic images [31]. It works by pitting two neural networks against each other in an iterative training process:

- Generator: This network attempts to generate new images that resemble the training data (Figure 4b,c).

- Discriminator: This network aims to distinguish real images from the dataset (Figure 4a) from images produced by the generator (Figure 4b,c).

Figure 4. Original image (a) and images generated by the DCGAN (b,c), conditioned on the class information from the original image in (a).

Through this competition, both networks improve their functionalities. The generator learns to create increasingly realistic images while the discriminator becomes more adept at identifying fake ones.
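The competition described above is conventionally written as a two-player minimax game. The source does not state the exact loss it optimized, so the standard GAN objective is shown here for reference, followed by the class-conditional form in which both networks also receive the label $y$, matching the class-conditioned DCGAN described next. Here $D(\cdot)$ is the discriminator's estimated probability that its input is real, and $z$ is the random noise vector fed to the generator.

$$
\min_G \max_D V(D,G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

$$
\min_G \max_D V(D,G) = \mathbb{E}_{(x,y) \sim p_{\text{data}}}\big[\log D(x \mid y)\big] + \mathbb{E}_{z \sim p_z,\; y \sim p_y}\big[\log\big(1 - D(G(z \mid y) \mid y)\big)\big]
$$

The discriminator maximizes $V$ by scoring real images high and generated ones low, while the generator minimizes it by making $D(G(z \mid y) \mid y)$ approach 1.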

In our case, we trained a single DCGAN conditioned on the class label. During training, the DCGAN starts from random noise to create images, but it also receives information about the type of image it should produce, such as a specific lesion or tumor class. This class label guides the DCGAN toward the features that belong in the generated images. By creating new, realistic images, we can increase the number of examples for less common classes, yielding a more balanced dataset that can improve the model’s performance. Figure 4 compares an original image from an underrepresented class with images generated by the DCGAN: Figure 4a shows the original image, and Figure 4b,c present images created by the DCGAN, conditioned on the class information of the original image in Figure 4a. This process enables the DCGAN to learn the characteristics of the underrepresented class and generate new, similar images.
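One common way to condition a DCGAN generator on the class label, sketched here as an assumption since the source does not give the exact mechanism, is to concatenate the noise vector $z$ with a one-hot encoding of the label before the first generator layer. The noise dimension of 100 and the helper name `generator_input` are illustrative choices.

```python
import numpy as np


def generator_input(labels, num_classes, noise_dim=100, rng=None):
    """Hypothetical helper: build conditional generator inputs of shape
    (batch, noise_dim + num_classes) as [Gaussian noise | one-hot class label]."""
    rng = np.random.default_rng() if rng is None else rng
    labels = np.asarray(labels)
    z = rng.standard_normal((labels.shape[0], noise_dim))  # random noise vector z
    one_hot = np.eye(num_classes)[labels]                    # class information y
    return np.concatenate([z, one_hot], axis=1)
```

To oversample a minority class, the same class index is simply repeated for every row of the batch, so each generated image is drawn for that class while the noise part keeps the samples varied.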

3.2.2. Augmentor Library

We also employed Augmentor, a Python image augmentation library (run under Python 3.11), to further expand our image datasets [32]. Augmentation refers to techniques for creating new variations of existing images, which is particularly helpful for datasets with limited sample sizes or when the data lack certain variations.

Augmentor provides various image manipulation techniques. Depending on the specific dataset and its characteristics, we utilized Augmentor for operations such as:

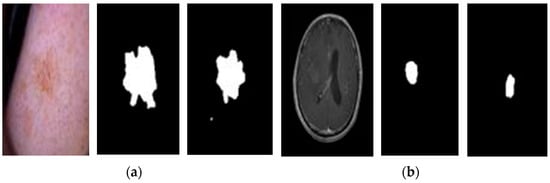

- Horizontal and vertical flips: Mirroring the image horizontally or vertically (as shown in Figure 5d).

- Contrast adjustments: Increasing or decreasing the contrast between light and dark areas in the image (as shown in Figure 5b).

- Brightness corrections: Making the image brighter or darker (as shown in Figure 5b).

Figure 5. Augmentation examples using Augmentor. (a) Original skin image, (b) Augmented skin image, (c) Original brain image, and (d) Augmented brain image.

Figure 5 shows how Augmentor can change images. Figure 5a shows the original skin image, and Figure 5b shows the same image after contrast and brightness adjustment. Figure 5c shows an original brain image, and Figure 5d shows that image after a vertical flip and brightness correction. Augmentor offers many such modifications, which can help expand a dataset and strengthen machine learning models.
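The flip, contrast, and brightness operations listed above are simple array transforms. The NumPy sketch below approximates them for illustration; it is not Augmentor's implementation (Augmentor's `Pipeline` provides operations such as `flip_left_right`, `flip_top_bottom`, `random_brightness`, and `random_contrast`). The flip probability, the factor ranges, and the helper names are assumptions, since the exact settings are not stated; images are assumed to be scaled to [0, 1].

```python
import numpy as np


def adjust_brightness(img: np.ndarray, factor: float) -> np.ndarray:
    """Scale intensities: factor > 1 brightens, factor < 1 darkens."""
    return np.clip(img * factor, 0.0, 1.0)


def adjust_contrast(img: np.ndarray, factor: float) -> np.ndarray:
    """Stretch (factor > 1) or compress (factor < 1) intensities around the image mean."""
    mean = img.mean()
    return np.clip(mean + factor * (img - mean), 0.0, 1.0)


def random_augment(img, rng, p_flip=0.5, brightness=(0.8, 1.2), contrast=(0.8, 1.2)):
    """Hypothetical pipeline: random flips, then random brightness and contrast."""
    if rng.random() < p_flip:
        img = img[:, ::-1]  # horizontal flip (mirror left-right)
    if rng.random() < p_flip:
        img = img[::-1, :]  # vertical flip (mirror top-bottom)
    img = adjust_brightness(img, rng.uniform(*brightness))
    return adjust_contrast(img, rng.uniform(*contrast))
```

Clipping keeps outputs in the valid [0, 1] range, so strong factors saturate rather than produce out-of-range pixels.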

To address dataset limitations noted in [33], we made three essential adaptations:

- Class-Conditioned Focus: Our DCGAN used explicit class labels during training, guiding feature learning toward specific cancer types despite limited data.

- Augmentation Synergy: We combined DCGAN outputs with traditional augmentations (rotation/flipping from Section 3.2) to make the most of the limited samples.

- Progressive Training: We started with a higher sampling of the minority class before fine-tuning with balanced batches.
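The progressive strategy can be expressed as a sampling schedule. In the sketch below, which is an assumption rather than the documented setup, each image from class $c$ is drawn with weight $n_c^{-\alpha}$, so the class as a whole is drawn with probability proportional to $n_c^{1-\alpha}$: $\alpha > 1$ over-samples minority classes, and $\alpha = 1$ yields balanced batches. The hypothetical parameters `start_alpha` and `warmup_epochs` control how quickly the schedule moves from the first regime to the second.

```python
import numpy as np


def class_sampling_probs(class_counts, epoch, warmup_epochs=5, start_alpha=1.5):
    """Hypothetical schedule: probability of drawing each class at a given epoch.

    alpha decays linearly from start_alpha to 1.0 over `warmup_epochs`:
      alpha > 1 -> minority classes drawn more often than majority classes
      alpha = 1 -> every class equally likely (balanced batches)
    """
    counts = np.asarray(class_counts, dtype=float)
    frac = min(epoch / warmup_epochs, 1.0)
    alpha = start_alpha + (1.0 - start_alpha) * frac
    class_mass = counts ** (1.0 - alpha)  # total weight of class c = n_c * n_c**(-alpha)
    return class_mass / class_mass.sum()
```

To build a batch, one would sample a class from these probabilities and then an image uniformly within that class.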

3.3. Data Segmentation

Deep learning models can encounter challenges due to the presence of unnecessary information in medical images.

- Skin Lesions: In skin lesion images, hair and surrounding healthy skin can introduce noise, making it difficult for the model to distinguish the lesion itself. The model may focus on irrelevant features instead of the lesion characteristics that are crucial for accurate classification.

- Brain Tumors: Similarly, in brain MRI scans, the model may be overwhelmed by information from healthy brain tissue rather than focusing solely on the tumor region. This can negatively influence the model’s ability to segment the tumor accurately.

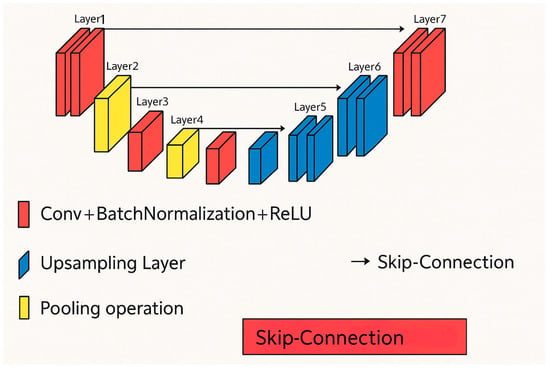

To address these challenges and improve model accuracy, we employ a U-Net for lesion segmentation.

U-Net is a CNN architecture designed specifically for biomedical image segmentation [34]. It was developed at the Computer Science Department of the University of Freiburg. A standard CNN architecture may struggle with limited training data, which is often the case in medical imaging. U-Net addresses this challenge through skip connections that combine high-resolution features from the contracting path with upsampled features in the expanding path, allowing precise segmentation even with few training images. The architecture of the U-Net is illustrated in Figure 6.

Figure 6.

U-Net architecture [33].

The U-Net model takes an image as input and predicts the location of the lesion. Its output is a mask image that highlights the segmented lesion area, as depicted in Figure 7. Figure 7a,b show, for skin and brain images respectively, the original image containing the lesion, the ground truth mask (a manually created mask indicating the exact lesion area), and the predicted mask generated by the U-Net model.

Figure 7.

Segmentation examples. (a) Original and mask skin images, and (b) Original and mask brain images.

3.4. Feature Extraction and Deep Learning Architectures

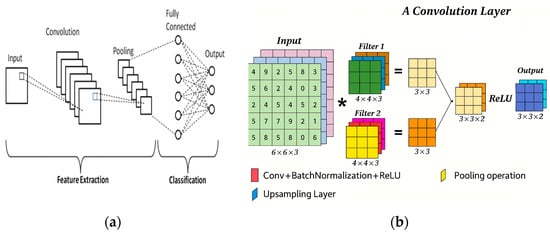

CNNs are the leading models in deep learning for tasks involving image analysis, including the classification of medical images [35]. Unlike traditional shallow networks, CNNs are particularly effective at uncovering complex relationships within multidimensional data, such as medical images, where pixels carry essential spatial information. This section will examine the fundamental functions of CNNs and review notable deep-learning architectures.

3.5. The Power of Convolution

A typical CNN architecture operates like a structured pipeline, transforming raw images into refined representations for classification. Early layers focus on extracting low-level features, such as edges and textures. In contrast, later layers identify complex object parts and their relationships. As illustrated in Figure 8a, a standard CNN consists of several key layers: the input layer feeds image data into the network; convolutional layers extract features from input images; pooling layers reduce dimensionality to simplify data; activation layers introduce non-linearity to enhance learning; and fully connected layers integrate extracted features for final classification. Each component is essential for effectively processing and classifying images [31].

Figure 8.

(a) A typical CNN architecture consists of several layers, and (b) Convolution operation visualization [31].

- Input Layer: This is the starting point, where the preprocessed medical image data is fed into the network. It specifies the image size (width and height) and the number of channels (e.g., RGB for color images).

- Convolutional Layer: The convolutional layer is the primary component of a CNN, extracting features from the input image. A small filter, called a kernel, moves across the image and calculates the dot product between its weights and the corresponding pixel values. The kernel can be thought of as a magnifying glass that examines small patches of the image closely to identify patterns. The kernel size determines the scale of the patterns a layer captures:

- Smaller kernels are adept at capturing localized features like edges and textures.

- Larger kernels can learn more complex patterns that span larger image regions.

Stride and padding help improve the process of applying a kernel to an image. Stride controls how far the kernel moves each time it is applied, while padding prevents information loss at the edges of the image. As shown in Figure 8b, the convolution operation involves sliding the kernel across the image, calculating the dot product at each position, and creating a feature map that highlights specific features based on the kernel’s design.

- Activation Function: Not all extracted features are equally important. The activation function acts as a gatekeeper that decides which activations pass through, and it introduces the non-linearity the network needs. A popular choice, ReLU (Rectified Linear Unit), sets negative values to zero and passes positive values unchanged; because its gradient does not shrink for positive inputs, it also helps mitigate the vanishing gradient problem that can hinder training in deep networks [36].

- Pooling Layer: This layer helps to simplify the data, making it easier for the network to process. Techniques like max-pooling focus on the strongest activation in a small area, which summarizes the local information. This not only speeds up processing time but also helps the network recognize objects even if they shift slightly in the image [37].

- Fully Connected Layer: After the convolutional and pooling layers have extracted and summarized local features, the fully connected layer becomes essential. In this layer, every neuron connects to all neurons in the previous layer, unlike the localized connections seen before. This connection allows the network to combine the extracted features and understand the entire image. The last fully connected layer typically employs a SoftMax activation function. This function changes the network’s output into class probabilities for image classification tasks [38].
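The layer sequence above can be traced on a toy example. The NumPy sketch below is illustrative only: it implements single-channel convolution with stride and zero-padding, ReLU, 2 x 2 max-pooling, and a softmax over the output of a dense layer whose random weights and three output classes are hypothetical stand-ins for a trained classifier.

```python
import numpy as np


def conv2d(image, kernel, stride=1, padding=0):
    """Single-channel 2D convolution as used in CNN layers (no kernel flip)."""
    if padding > 0:
        image = np.pad(image, padding, mode="constant")  # zero-padding at the borders
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i * stride:i * stride + kh, j * stride:j * stride + kw]
            out[i, j] = np.sum(patch * kernel)  # dot product of kernel and patch
    return out


def relu(x):
    return np.maximum(x, 0.0)  # negative responses are set to zero


def max_pool(x, size=2):
    h, w = x.shape[0] // size, x.shape[1] // size
    # Keep the strongest activation in each non-overlapping size x size window.
    return x[:h * size, :w * size].reshape(h, size, w, size).max(axis=(1, 3))


def softmax(z):
    e = np.exp(z - np.max(z))  # subtract the max for numerical stability
    return e / e.sum()


# Toy 6x6 image: bright left half, dark right half (a vertical edge).
image = np.zeros((6, 6))
image[:, :3] = 1.0
edge_kernel = np.array([[1.0, 0.0, -1.0]] * 3)  # responds to vertical edges

feature_map = relu(conv2d(image, edge_kernel))  # 4x4 map, strongest at the edge
pooled = max_pool(feature_map)                   # 2x2 summary
rng = np.random.default_rng(0)
weights = rng.normal(size=(pooled.size, 3))      # hypothetical dense layer, 3 classes
probs = softmax(pooled.ravel() @ weights)        # class probabilities sum to 1
```

Each row of `feature_map` is `[0, 3, 3, 0]`: the kernel responds only where its window straddles the bright-to-dark boundary, which is exactly the edge-detecting behavior early layers learn.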

CNN Architectures: InceptionV3 and ResNet

Two prominent CNN architectures, InceptionV3 and ResNet, are well-suited for image classification tasks and have been successfully applied to various datasets.

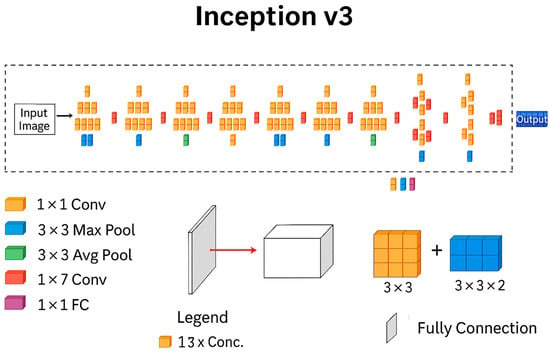

- InceptionV3 [39]:

InceptionV3 is a refinement of GoogLeNet (Inception v1), the network that won the 2014 ImageNet Large Scale Visual Recognition Challenge. It is known for its high accuracy and efficient design: it is forty-eight layers deep, as shown in Figure 9, and outperforms earlier models such as AlexNet. Its main feature is the Inception module, which allows the network to extract features of varied sizes using parallel convolutional layers. This design improves efficiency and lowers computational costs.

Figure 9.

InceptionV3 architecture [39].

The network utilizes both symmetric and asymmetric Inception modules, along with standard convolutional and pooling layers (including max and average pooling), to gather several types of spatial information. It combines features using concatenation, applies dropout to prevent overfitting, and uses batch normalization to improve training stability.

Figure 9 provides a detailed view of the Inception module, the core building block of InceptionV3. Notice the parallel convolution layers with different filter sizes. This allows the network to extract features at various scales (small, medium, large) from the input image in a single step, contributing to InceptionV3’s efficiency.
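A simplified Inception-style module can be written in Keras as parallel branches concatenated along the channel axis. This sketch is an assumption-laden illustration, not the exact InceptionV3 block: the filter counts are hypothetical, batch normalization is omitted, and it shows one symmetric factorization (two stacked 3 x 3 convolutions in place of a 5 x 5) and one asymmetric factorization (1 x 3 followed by 3 x 1).

```python
from tensorflow import keras


def conv_relu(x, filters, kernel_size):
    return keras.layers.Conv2D(filters, kernel_size, padding="same", activation="relu")(x)


def inception_module(x, f1, f3_reduce, f3, f5_reduce, f5, pool_proj):
    # Branch 1: 1x1 convolution (small receptive field).
    b1 = conv_relu(x, f1, 1)
    # Branch 2: 1x1 reduction, then a 3x3 factorized into asymmetric 1x3 and 3x1.
    b2 = conv_relu(x, f3_reduce, 1)
    b2 = conv_relu(b2, f3, (1, 3))
    b2 = conv_relu(b2, f3, (3, 1))
    # Branch 3: 1x1 reduction, then two stacked 3x3 convs (a 5x5 receptive field).
    b3 = conv_relu(x, f5_reduce, 1)
    b3 = conv_relu(b3, f5, 3)
    b3 = conv_relu(b3, f5, 3)
    # Branch 4: average pooling followed by a 1x1 projection.
    b4 = keras.layers.AveragePooling2D(pool_size=3, strides=1, padding="same")(x)
    b4 = conv_relu(b4, pool_proj, 1)
    # Concatenate all branches along the channel axis.
    return keras.layers.Concatenate(axis=-1)([b1, b2, b3, b4])


inputs = keras.Input(shape=(35, 35, 192))
outputs = inception_module(inputs, f1=64, f3_reduce=48, f3=64, f5_reduce=64, f5=96, pool_proj=32)
module = keras.Model(inputs, outputs)
```

Because every branch uses "same" padding, the branches keep the spatial size and only the channel counts add up (64 + 64 + 96 + 32 = 256 here).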

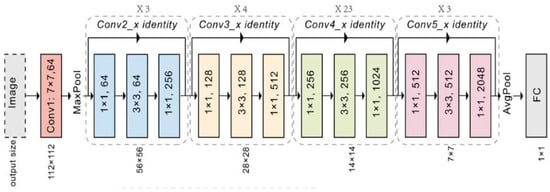

- ResNet-50 [40]:

ResNet, introduced in 2015, addresses a key problem in deep CNNs: the vanishing gradient problem. As a network becomes deeper, the gradients used to update the weights in its earlier layers can become extremely small or vanish altogether during backpropagation, which makes those early layers hard to train.

ResNet solves this issue by using residual connections. These connections create a shortcut for information to flow directly from the input of a layer to its output, as illustrated in Figure 10. This bypasses the usual processing steps within the layer. The output then combines the original input with the transformed features. This approach enables the gradient signal to propagate effectively through deep networks.

Figure 10.

ResNet50 Architecture [40].

Figure 10 depicts a simplified example of a residual connection. The input (X) is added directly to the output of the convolutional layers (F(X)), producing the final output Y = F(X) + X. This simple addition makes it possible to train much deeper networks than traditional CNNs (ResNet-50 has fifty weight layers, forty-eight of which are convolutional layers inside its residual blocks), allowing the extraction of more complex features from images.
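The residual connection Y = F(X) + X can be sketched in Keras as a bottleneck block of the kind ResNet-50 stacks. This is a minimal illustration under stated assumptions: stride 1 throughout, a hypothetical filter count, and a 1 x 1 projection on the shortcut only when the channel counts differ.

```python
from tensorflow import keras


def bottleneck_block(x, filters):
    """Residual block computing Y = F(X) + X (ResNet-50 style bottleneck)."""
    shortcut = x
    # F(X): 1x1 reduce -> 3x3 -> 1x1 expand (4x filters), each followed by batch norm.
    y = keras.layers.Conv2D(filters, 1)(x)
    y = keras.layers.BatchNormalization()(y)
    y = keras.layers.Activation("relu")(y)
    y = keras.layers.Conv2D(filters, 3, padding="same")(y)
    y = keras.layers.BatchNormalization()(y)
    y = keras.layers.Activation("relu")(y)
    y = keras.layers.Conv2D(4 * filters, 1)(y)
    y = keras.layers.BatchNormalization()(y)
    # If channel counts differ, a 1x1 projection makes X addable to F(X).
    if shortcut.shape[-1] != 4 * filters:
        shortcut = keras.layers.Conv2D(4 * filters, 1)(shortcut)
        shortcut = keras.layers.BatchNormalization()(shortcut)
    y = keras.layers.Add()([y, shortcut])  # the residual (shortcut) connection
    return keras.layers.Activation("relu")(y)


inputs = keras.Input(shape=(56, 56, 64))
outputs = bottleneck_block(inputs, filters=64)
block = keras.Model(inputs, outputs)
```

The `Add` layer is the shortcut: during backpropagation its gradient flows to the block input unchanged, which is why stacking many such blocks remains trainable.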

3.6. Transfer Learning for Skin and Brain Tumor Classification

In medical image analysis, transfer learning has become a cornerstone technique for tasks such as skin and brain tumor classification. The approach reuses the knowledge a deep convolutional neural network (CNN) has gained by pre-training on a large image dataset (the source task) and applies it to classifying tumors in medical images (the target task) [41].

Popular pre-trained CNN architectures, such as InceptionV3 and ResNet-50, have been trained on massive datasets like ImageNet. These models learn valuable features for recognizing shapes, colors, and textures within images. These learned features provide a solid foundation for transfer learning in medical image analysis.

Here is a breakdown of how transfer learning can be applied in skin and brain tumor classification:

- Source Task: A pre-trained CNN, like InceptionV3 or ResNet-50, serves as the starting point.

- Target Task: Our dataset of labeled skin lesion and brain MRI images (described in Section 3.1) represents the target task.

- Transfer: The initial layers of the pre-trained CNN, which have learned general image recognition features, are retained.

- Fine-tuning: The final layers of the pre-trained CNN are fine-tuned on our skin lesion and brain MRI dataset. This fine-tuning enables the model to specialize in the patterns that distinguish the target classes, such as benign and malignant skin lesions.

Benefits of Transfer Learning in Medical Imaging

- Reduced Training Data Requirements: Acquiring and labeling large datasets of medical images is challenging. Transfer learning makes it possible to build effective models for skin and brain tumor classification even with limited labeled data.

- Faster Training Time: By leveraging pre-trained knowledge from the initial layers, transfer learning significantly reduces training time compared to training a model from scratch.

- Improved Performance: Transfer learning can lead to enhanced performance, particularly when working with limited medical image datasets. The pre-trained CNN provides a solid foundation for learning features relevant to medical images, even if the source task (ImageNet) involves general images.

Considerations

- Level of Transfer: The depth of fine-tuning can be adjusted, ranging from retraining only the new classification layer to updating every layer of the pre-trained network. With limited medical data, keeping most pre-trained layers frozen and fine-tuning only the final layers is often more effective than retraining the entire model.

- Task Similarity: While the source task (general image recognition) might seem quite different from the target task (medical image classification), both tasks involve recognizing patterns within images. This underlying similarity allows transfer learning to be effective in this scenario.

Transfer learning can significantly improve both the accuracy and the training speed of models that classify skin and brain tumors. This method has considerable potential to help doctors detect and diagnose these conditions early.

4. Results

This study proposes a novel deep learning framework for early cancer classification that differentiates cancer types in skin lesion images and brain MRI scans. Each image is assigned to one of ten classes: seven skin lesion classes (MEL, NV, BCC, AKIEC, BKL, DF, VASC), two brain classes (Normal, Brain Tumor), or an undefined class. The approach integrates data augmentation, segmentation with U-Net, transfer learning with InceptionV3, and fine-tuning to identify the cancer type with high accuracy.

The proposed approach consists of three distinct phases, each crucial to the overall classification pipeline:

4.1. Data Augmentation and Class Balancing

- Combined Dataset (described in Section 3.1): We leverage a rich dataset that combines skin lesion images from the publicly accessible Human Against Machine (HAM) archive, and brain MRI scans from the BraTS 2020 challenge [26,27]. All training and test images were uniformly formatted in RGB with a fixed size of 600 × 450 pixels. Figure 3a,b illustrate dermoscopic skin lesions from the HAM archive. Figure 3c depicts an MRI brain scan from the BraTS 2020 dataset.

- Data Augmentation: To address potential limitations in dataset size and variations in image quality (e.g., illumination errors, inconsistent staining, image noise), we employ the data augmentation techniques described in Section 3.2. These techniques, such as rotation, flipping, and scaling, artificially expand the dataset and help the model learn from a wider range of image presentations. The effectiveness of data augmentation is visually demonstrated in Figures 4 and 5, which show the original images alongside their augmented counterparts.

- Class Balancing: The data is carefully balanced to ensure each cancer type (e.g., Melanoma, Basal Cell Carcinoma) has enough representative images (details in Table 2). This is crucial to prevent the model from biasing its predictions towards more frequent classes.
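Rotation, flipping, and scaling can be expressed with Keras preprocessing layers. The sketch below is illustrative: the rotation and zoom ranges are hypothetical values, since the exact parameters of Section 3.2 are not restated here, and the random batch stands in for real 600 × 450 images.

```python
import tensorflow as tf
from tensorflow import keras

# Hypothetical ranges; the parameters actually used in Section 3.2 may differ.
augment = keras.Sequential(
    [
        keras.layers.RandomFlip("horizontal_and_vertical"),
        keras.layers.RandomRotation(0.1),  # rotate by up to +/-10% of a full turn (36 degrees)
        keras.layers.RandomZoom(0.1),      # zoom in or out by up to 10%
    ],
    name="augmentation",
)

# A stand-in batch at the dataset's 600 x 450 resolution (height 450, width 600).
images = tf.random.uniform((4, 450, 600, 3))
augmented = augment(images, training=True)  # random transforms apply only when training=True
```

Because these layers are inactive at inference time, the same model can include them during training without altering evaluation on the test set.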

Table 2. Number of images in each class before and after augmentation.


4.2. Feature Extraction with U-Net Segmentation

- U-Net Segmentation (described in Section 3.3): A U-Net architecture is employed to extract informative features from the preprocessed images. This segmentation step identifies the region of interest (ROI) within the image, which contains the potential cancerous tissue (skin lesion or brain tumor). This targeted approach allows the model to concentrate on the most relevant image area for classification. The segmentation results for sample images are illustrated in Figure 7, demonstrating the U-Net’s ability to identify potential problem regions.

- ROI Cropping: Following U-Net segmentation, the generated mask is used to crop the image to a smaller size (400 × 400 pixels), as demonstrated in Figure 11. This reduces the computational burden on the classification model without compromising essential information.
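One way to use the mask for cropping is to center a fixed 400 × 400 window on the mask’s bounding box. The NumPy sketch below assumes that strategy, a 0.5 threshold on the U-Net output, and a center-crop fallback when nothing is segmented; the name `crop_roi` and these choices are hypothetical, not the documented procedure.

```python
import numpy as np


def crop_roi(image, mask, size=400, threshold=0.5):
    """Crop a size x size window centred on the segmented region.

    image: (H, W, C) array; mask: (H, W) U-Net output (probabilities or 0/1).
    """
    h, w = mask.shape
    if h < size or w < size:
        raise ValueError("image is smaller than the requested crop")
    ys, xs = np.nonzero(mask > threshold)
    if ys.size == 0:
        cy, cx = h // 2, w // 2  # nothing segmented: fall back to a centre crop
    else:
        cy = (ys.min() + ys.max()) // 2  # centre of the mask's bounding box
        cx = (xs.min() + xs.max()) // 2
    # Clamp the window so it stays entirely inside the image.
    top = int(np.clip(cy - size // 2, 0, h - size))
    left = int(np.clip(cx - size // 2, 0, w - size))
    return image[top:top + size, left:left + size]


# Example: a 450 x 600 RGB image with a lesion near the top-right corner.
image = np.zeros((450, 600, 3))
mask = np.zeros((450, 600))
mask[10:51, 550:591] = 1.0
image[mask > 0.5] = 1.0
roi = crop_roi(image, mask)
```

Clamping keeps the window inside the image, so a lesion near a border is still fully contained in the crop rather than being cut off.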

Figure 11. Skin cancer and brain tumor after segmentation using the U-Net model.


Table 3 compares the accuracy obtained with different image normalization and preprocessing techniques: simple blurring and scaling of the images, and U-Net segmentation combined with the AdamW optimizer.

Table 3.

Outcome accuracy of preprocessing techniques.

4.3. Deep Learning Classification with Transfer Learning

- Transfer Learning with InceptionV3 (described in Section 3.4): We apply transfer learning using the pre-trained InceptionV3 architecture. Having been trained on the large-scale ImageNet dataset, InceptionV3 has already learned valuable features for recognizing shapes, textures, and patterns within images.

- Fine-tuning: The pre-trained weights of InceptionV3 are fine-tuned for the specific task of cancer type classification. This involves adjusting the final layers of the model to adapt to the new dataset and cancer classification problem.

- Multi-class Classification: The fine-tuned InceptionV3 model differentiates between the skin lesion classes (Melanoma (MEL), Nevus (NV), Basal Cell Carcinoma (BCC), etc.), the brain classes, and the undefined class. The output layer has one neuron per class, enabling the model to predict the class of a given image.

5. Discussion

5.1. Experimental Setup and Hyperparameter Tuning

To build a reliable cancer detection system, the data was divided within each class: 75% of the images of every class were used to train the model, 10% formed a validation set used to tune it during development, and 15% were held back for the final, unbiased test. Each image was randomly assigned to exactly one of these subsets, so no image appeared in more than one group; this prevents overly optimistic estimates and yields trustworthy performance results.
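A per-class (stratified) 75/10/15 split of this kind can be sketched in NumPy as below. The function name `stratified_split` and the toy labels are hypothetical; the sketch only shows how shuffling within each class yields disjoint subsets with the stated proportions.

```python
import numpy as np


def stratified_split(labels, fractions=(0.75, 0.10, 0.15), seed=0):
    """Return disjoint train/validation/test index arrays, split within each class."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    train, val, test = [], [], []
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))  # shuffle this class's samples
        n_train = int(round(fractions[0] * idx.size))
        n_val = int(round(fractions[1] * idx.size))
        train.extend(idx[:n_train])
        val.extend(idx[n_train:n_train + n_val])
        test.extend(idx[n_train + n_val:])  # the remainder (about 15%) is the test set
    return np.array(train), np.array(val), np.array(test)


labels = np.repeat(np.arange(10), 100)  # toy labels: 10 classes x 100 images
train_idx, val_idx, test_idx = stratified_split(labels)
```

Applying such a split to the original images, before any augmentation, keeps augmented copies of a test image out of the training set.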

All development was conducted using Python [42] with Keras [43] and TensorFlow [44], widely adopted tools that enable researchers to build complex AI models efficiently. Experiments were conducted on a standard research computer (Intel i5 CPU, 8GB RAM), demonstrating that the approach is practical without specialized hardware.

Finding the best settings for the InceptionV3 cancer detector involved systematic testing:

- A batch size of thirty-two balanced training speed and memory usage.

- A learning rate of 0.001, a common default, provided stable improvement.

- Freezing 70% of the layers proved critical: it kept most of the patterns learned from general images unchanged while updating only the later layers for cancer-specific details. This reduced the number of trainable parameters from twenty-one million to 12.9 million (Table 4), speeding up training while reducing the risk of overfitting.

Table 4. The summary of the inception model.

- Activation Function: The ReLU (Rectified Linear Unit) activation function [45] was employed in all hidden layers due to its computational efficiency and effectiveness in deep learning architectures.

- Dropout Layers: Dropout layers with a rate of 0.5 [46] were incorporated after the convolutional layers to randomly drop half of the activations during training. This helps mitigate overfitting by preventing co-adaptation of features.

- Optimizer: The Adam optimizer [47], with a momentum of 0.99 and a learning rate of 0.001, was used for training. Adam is an adaptive learning rate optimization algorithm that has proven effective in training various deep learning models.

- Epochs: The model was trained for a maximum of 20 epochs.

- Classification Layer: The final layer of the pre-trained InceptionV3 was replaced with a new fully connected layer with ten output neurons, one for each of the ten classes. A SoftMax activation function was applied to this layer to normalize the output probabilities.

Figure 12 depicts the following modifications made to the original InceptionV3 architecture:

Figure 12.

The fine-tuned architecture for cancer classification.

- Freezing Layers (70%): These layers are not trainable, and their weights remain unchanged during the fine-tuning process. Freezing layers help leverage the pre-trained features for general image recognition tasks while reducing the number of trainable parameters.

- Removing the Final Layer: The final classification layer of the pre-trained InceptionV3, which is designed for the ImageNet categories, is removed.

- Adding a New Fully Connected Layer: A new fully connected layer is added at the end of the architecture, with one neuron per class (ten neurons in total).

- Activation Function: The new fully connected layer uses a SoftMax activation function to normalize the output probabilities.
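The modifications above can be sketched with `keras.applications.InceptionV3`. This is a minimal illustration under assumptions not fixed by the text: a 400 × 400 input (the ROI crop size), a global-average-pooling head with dropout 0.5 before the softmax layer, integer labels, and mapping the reported momentum of 0.99 to Adam’s `beta_1`.

```python
from tensorflow import keras


def build_classifier(num_classes=10, input_shape=(400, 400, 3),
                     freeze_fraction=0.7, weights="imagenet"):
    # Pre-trained InceptionV3 without its 1000-class ImageNet head.
    base = keras.applications.InceptionV3(
        include_top=False, weights=weights, input_shape=input_shape)
    # Freeze the first 70% of layers; only the later layers are fine-tuned.
    n_frozen = int(freeze_fraction * len(base.layers))
    for layer in base.layers[:n_frozen]:
        layer.trainable = False
    # New classification head: pooling, dropout, and a 10-way softmax layer.
    x = keras.layers.GlobalAveragePooling2D()(base.output)
    x = keras.layers.Dropout(0.5)(x)
    outputs = keras.layers.Dense(num_classes, activation="softmax")(x)
    model = keras.Model(base.input, outputs)
    model.compile(
        # Assumption: the reported "momentum of 0.99" is mapped to Adam's beta_1.
        optimizer=keras.optimizers.Adam(learning_rate=1e-3, beta_1=0.99),
        loss="sparse_categorical_crossentropy",  # integer class labels 0..9
        metrics=["accuracy"],
    )
    return model
```

Usage would follow the settings in Section 5.1, e.g. `model = build_classifier()` and then `model.fit(train_data, validation_data=val_data, epochs=20)` with batches of thirty-two, where `train_data` and `val_data` are hypothetical dataset objects.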

5.2. Model Training Progress

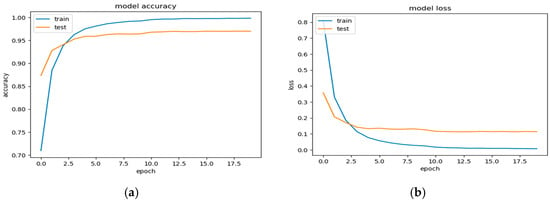

Monitoring a model’s training process is crucial for achieving optimal performance. In the case of our fine-tuned InceptionV3 model for early cancer classification, visualizing the accuracy and loss curves over training epochs provides valuable insights into its learning behavior.

The accuracy curves in Figure 13a plot the model’s accuracy on the training and validation datasets over 20 epochs. Ideally, the training accuracy rises steadily towards 1.0, indicating the model’s increasing ability to classify the training images correctly. The validation accuracy curve is equally important: a validation accuracy that increases alongside the training accuracy suggests the model is generalizing well rather than overfitting to the training data. Conversely, a stagnant or even decreasing validation accuracy curve might indicate overfitting.

Figure 13.

(a) The accuracy curves, and (b) the loss curves over 20 epochs.

The loss curve in Figure 13b ideally shows a consistent decrease for both the training and validation sets. This signifies that the model is effectively learning from the training data and reducing its classification error with each epoch. A significant decrease in the training loss accompanied by a similar trend in the validation loss is an encouraging sign.

The accuracy and loss curves offer promising results. The increasing accuracy on both the training and validation datasets suggests that the fine-tuned InceptionV3 model is effectively learning to classify early-stage cancer. The decreasing loss curves on both datasets imply that the model is reducing its classification error. These observations are encouraging and suggest that the model has the potential to generalize well for unseen data during evaluation.
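Curves like those in Figure 13 can be drawn from the `History.history` dictionary that Keras `fit` returns when accuracy is tracked as a metric. The helper name `plot_history` is hypothetical and the plotting choices are illustrative.

```python
import matplotlib

matplotlib.use("Agg")  # render off-screen; drop this line in an interactive session
import matplotlib.pyplot as plt


def plot_history(history):
    """Plot accuracy and loss curves from a Keras History.history dict."""
    epochs = range(1, len(history["loss"]) + 1)
    fig, (ax_acc, ax_loss) = plt.subplots(1, 2, figsize=(10, 4))
    for ax, metric, title in ((ax_acc, "accuracy", "Accuracy"), (ax_loss, "loss", "Loss")):
        ax.plot(epochs, history[metric], label="training")
        ax.plot(epochs, history["val_" + metric], label="validation")
        ax.set_xlabel("epoch")
        ax.set_title(title)
        ax.legend()
    fig.tight_layout()
    return fig


# Usage (hypothetical): history = model.fit(..., validation_data=..., epochs=20)
#                       plot_history(history.history).savefig("curves.png")
```

A widening gap between the training and validation lines is the visual signature of overfitting discussed above.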

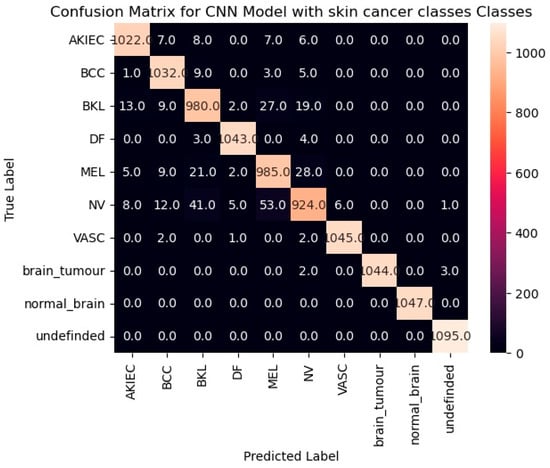

5.3. Examining Model Performance

Our fine-tuned InceptionV3 model classifies images into ten distinct categories: seven types of skin cancer, two brain MRI classes (normal and tumor), and an additional class for images that fall outside the scope of skin or brain (labeled “undefined”). To assess how well the model distinguishes between these classes, we use a confusion matrix (Figure 14), which displays the number of correct and incorrect predictions made by the model for each class.

Figure 14.

The confusion matrix for the fine-tuned InceptionV3 model.

By analyzing the confusion matrix (Figure 14), we can gain insights into the model’s performance for each class. High values along the diagonal indicate a large number of correct predictions for each category, while off-diagonal cells reveal which classes the model confuses with one another.
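A confusion matrix is a count of (true class, predicted class) pairs. The NumPy sketch below builds one from integer labels; the toy labels are hypothetical and serve only to show the layout (rows are true classes, columns are predicted classes).

```python
import numpy as np


def confusion_matrix(y_true, y_pred, num_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    # Count each (true, predicted) pair; np.add.at handles repeated pairs correctly.
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


y_true = [0, 0, 1, 2, 2, 2]
y_pred = [0, 1, 1, 2, 2, 0]
cm = confusion_matrix(y_true, y_pred, num_classes=3)
# cm -> [[1, 1, 0],
#        [0, 1, 0],
#        [1, 0, 2]]
correct = np.trace(cm)  # diagonal = correct predictions (4 of 6 here)
```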

Model evaluation is a cornerstone of the development process, ensuring that a model is effective and generalizes to unseen data. Evaluation not only gauges a model’s overall performance but also pinpoints its strengths and weaknesses, paving the way for targeted improvements.

A rich arsenal of metrics exists for model evaluation, each offering a unique perspective on performance. In our study, we employed two primary metrics: accuracy and F1-score.

Accuracy: This metric, a cornerstone of evaluation, reflects the proportion of correct predictions made by the model relative to the total number of predictions. It provides a general sense of how often the model makes accurate classifications. However, for imbalanced datasets with a significant skew towards one class, accuracy alone might not be the most robust indicator.

F1-Score: This metric balances two crucial concepts: precision and recall. Precision measures the proportion of positive predictions that are truly positive, while recall measures the proportion of actual positive cases that the model correctly identifies. The F1-score, calculated as the harmonic mean of precision and recall, provides a more balanced view of performance, particularly for imbalanced datasets.
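For reference, these are the standard definitions, written for a single class $c$ treated as the positive class (one-vs-rest). Here $TP_c$, $FP_c$, and $FN_c$ count the true positives, false positives, and false negatives for class $c$, and $N$ is the total number of evaluated images:

$$
\text{Accuracy} = \frac{\sum_{c} TP_c}{N}, \qquad
\text{Precision}_c = \frac{TP_c}{TP_c + FP_c}, \qquad
\text{Recall}_c = \frac{TP_c}{TP_c + FN_c}, \qquad
F_{1,c} = \frac{2\,\text{Precision}_c\,\text{Recall}_c}{\text{Precision}_c + \text{Recall}_c}
$$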

Table 5 provides a deeper examination of the performance of our fine-tuned InceptionV3 model for early cancer classification. It presents a classification report that analyzes the model’s effectiveness for each of the ten classes it was trained to identify: seven types of skin cancer, two brain MRI classes (normal and tumor), and an additional class for images that fall outside the scope of skin or brain (“undefined”).

Table 5.

Classification report on the model.

The table focuses on three key metrics for each class:

- Precision: This metric reflects the proportion of positive predictions that were truly positive for a specific class. In simpler terms, it indicates how often the model correctly identified a cancer type out of all the images it predicted as that cancer type (e.g., a precision of 0.97 for AKIEC signifies that 97% of AKIEC predictions were truly AKIEC).

- Recall: This metric signifies the proportion of actual positive cases (images with a specific cancer type) that the model correctly identified. In other words, it indicates how often the model identified a particular cancer type out of all the images that had that cancer (e.g., a recall of 0.97 for AKIEC means the model identified 97% of the actual AKIEC images).

- F1-score: This metric strikes a balance between precision and recall, providing a more comprehensive view of the model’s performance for each class (ideally close to 1.00).
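The per-class values in a report like Table 5 follow directly from the confusion matrix: column sums give how often each class was predicted and row sums give how many images truly belong to it. The NumPy sketch below is illustrative; the function name `per_class_metrics` and the toy matrix are hypothetical.

```python
import numpy as np


def per_class_metrics(cm):
    """Precision, recall, and F1 per class, plus overall accuracy, from a confusion matrix."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    predicted = cm.sum(axis=0)  # column sums: how often each class was predicted
    actual = cm.sum(axis=1)     # row sums: how many true samples each class has
    zeros = np.zeros_like(tp)
    precision = np.divide(tp, predicted, out=zeros.copy(), where=predicted > 0)
    recall = np.divide(tp, actual, out=zeros.copy(), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=zeros.copy(), where=denom > 0)
    accuracy = tp.sum() / cm.sum()
    return precision, recall, f1, accuracy


cm = [[1, 1, 0],
      [0, 1, 0],
      [1, 0, 2]]  # toy 3-class matrix: rows = true, columns = predicted
precision, recall, f1, accuracy = per_class_metrics(cm)
```

Guarding each division avoids NaN values for a class that is never predicted or never present, which can happen with small test sets.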

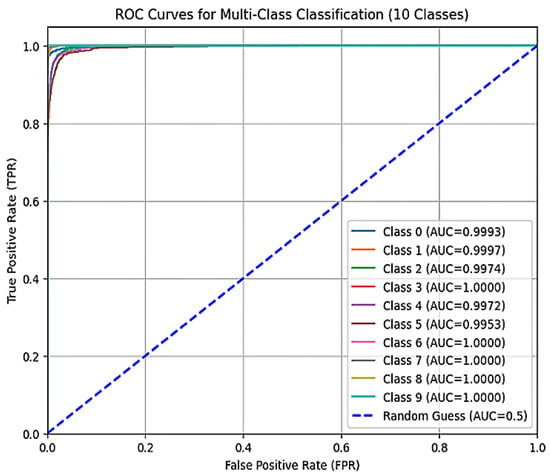

Complementing the insights from Table 5 (classification report), Figure 15 depicts the ROC (Receiver Operating Characteristic) curve for our fine-tuned InceptionV3 model. The ROC curve serves as a powerful tool for visualizing and evaluating the performance of a classification model across all classes.

Figure 15.

The ROC curve of the model.

The ROC curve plots the True Positive Rate (TPR) on the y-axis against the False Positive Rate (FPR) on the x-axis.

- True Positive Rate (TPR): This metric represents the proportion of actual positive cases (images with a specific cancer type) that the model correctly identified. It is also known as recall.

- False Positive Rate (FPR): This metric represents the proportion of negative cases (images without the specific cancer type) that the model incorrectly classified as positive.

An ideal ROC curve hugs the upper left corner of the graph, meaning that the model achieves a high TPR (correctly identifying most positive cases) with a low FPR (rarely classifying negative cases as positive).
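For a multi-class model, an ROC curve is typically computed per class by treating that class as positive and all others as negative (one-vs-rest), sweeping a threshold over the softmax score. The NumPy sketch below illustrates this with toy scores; the function name `roc_one_vs_rest` is hypothetical, and the one-vs-rest construction is an assumption about how the per-class curves in Figure 15 were produced.

```python
import numpy as np


def roc_one_vs_rest(scores, y_true, positive_class):
    """ROC points (FPR, TPR) and AUC for one class, treating all others as negative.

    scores: (n_samples, n_classes) softmax outputs; y_true: integer labels.
    """
    s = np.asarray(scores)[:, positive_class]
    is_pos = np.asarray(y_true) == positive_class
    order = np.argsort(-s, kind="stable")  # sweep the threshold from high to low
    s, is_pos = s[order], is_pos[order]
    # Keep the last index of each run of tied scores so ties share one threshold.
    last = np.r_[np.flatnonzero(np.diff(s)), s.size - 1]
    tps = np.cumsum(is_pos)[last]
    fps = np.cumsum(~is_pos)[last]
    tpr = np.r_[0.0, tps / is_pos.sum()]     # true positive rate (recall)
    fpr = np.r_[0.0, fps / (~is_pos).sum()]  # false positive rate
    auc = np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0)  # trapezoidal area
    return fpr, tpr, auc


scores = [[0.1, 0.9], [0.2, 0.8], [0.7, 0.3], [0.9, 0.1]]
fpr, tpr, auc = roc_one_vs_rest(scores, [1, 1, 0, 0], positive_class=1)
```

In this toy case every positive outranks every negative, so the curve passes through the upper left corner and the area under it is 1.0.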

By analyzing the ROC curve in conjunction with the classification report (Table 5), we gain a comprehensive understanding of the model’s performance across all classes. The ROC curve visually depicts the trade-off between correctly classifying positive cases and avoiding false positives. Our fine-tuned InceptionV3 model achieved an accuracy of 99.7% on the training set, indicating a high level of proficiency in classifying the cancer images it was trained on. However, the real test lies in generalizability: how well the model performs on unseen data. Here, the model’s accuracy of 97% on the evaluation set is encouraging. The drop of 2.7 percentage points from the training accuracy falls within an acceptable range, suggesting the model can effectively adapt to previously unseen cancer images.

5.4. Comparison with Recent Models

To further evaluate our model, we compared its ROC curve with those presented in recent studies that utilized similar datasets. The ROC curve for our fine-tuned InceptionV3 model demonstrates competitive performance, closely approaching the ideal upper left corner, which indicates high sensitivity and specificity. Compared with recent models, such as those proposed by Yu et al. [19] and Nasr-Esfahani et al. [21], our model exhibits comparable or superior true positive rates while maintaining a low false positive rate. For instance, Yu et al.’s model used a dataset with greater class imbalance, in which certain cancer types were underrepresented; this may have lowered its true positive rate for those classes relative to our balanced training approach. Such differences in dataset composition affect the resulting ROC curves and highlight the importance of addressing class imbalance in training data. This comparison underscores the robustness of our model in distinguishing between the various cancer types and highlights its potential for practical clinical application.

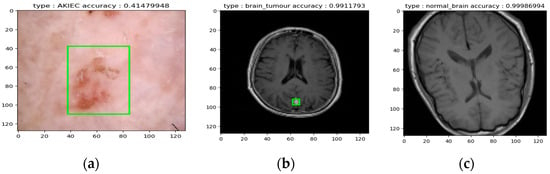

5.5. Evaluation of the Inception Model for Cancer Classification and Localization

This section presents the evaluation of a fine-tuned Inception model for classifying and localizing cancerous lesions in skin and brain tissue. The Inception architecture serves as a pre-trained deep learning framework, further optimized for this specific task.

Methodology:

- Image Acquisition: Single test images were presented to the model.

- Image Processing: The fine-tuned Inception model performed the following actions on each image:

- Classification: The model classified the image into one of ten categories:

- Seven classes of Skin Cancer: If a cancerous lesion was identified (e.g., potentially AKIEC skin cancer, as suggested in Figure 16a), the model attempted to classify the specific cancer type.

Figure 16. (a) Example of AKIEC skin cancer detection, (b) Example of brain tumor detection, and (c) Example of a normal brain classification.

- Undefined Image: Images falling outside the expected range for skin or brain tissue were classified as “undefined.”

- Localization (if applicable): For classified skin or brain cancer images (as exemplified in Figure 16a,b), the model aimed to localize the potential lesion within the image.

Overall, the test demonstrates the model’s ability to categorize various scenarios, including different skin cancer types, brain tumors, healthy brain tissue, and unrecognizable images. Figure 16a,b specifically illustrate the model’s potential for successful cancer classification alongside the localization of the lesion, and Figure 16c shows an image classified as a normal brain. However, further evaluation with a diverse dataset is necessary to comprehensively assess the model’s accuracy and generalizability across all seven skin cancer classes and various brain tumor presentations.

6. Conclusions and Future Prospects

This study presents a novel deep-learning approach for multi-class cancer classification using medical images. We propose a framework that leverages the strengths of Inception and U-Net architectures for feature extraction and segmentation, respectively. The system aims to automate the process of distinguishing between healthy tissues and seven distinct skin cancer types, as well as potentially identifying images that are out of distribution. This could significantly enhance physician decision-making by automating initial screening and potentially flagging suspicious lesions for further investigation. Additionally, the localization capability of the U-Net component could be valuable for treatment planning, as it localizes the suspected cancerous region.

Our investigation involved evaluating individual well-tuned Inception and U-Net models, as well as exploring their ensemble configurations. The proposed framework extracts informative features from pre-trained and fine-tuned deep Convolutional Neural Networks (CNNs). These features are then utilized for classification or fed into an ensemble network for improved accuracy. Our preliminary findings indicate the efficacy of this approach in differentiating between healthy and cancerous tissues, potentially achieving high accuracy across various skin cancer types.

6.1. Strengths of the Proposed Approach

- Leverages Complementary Architectures: Inception excels at capturing intricate spatial relationships, while U-Net is well-suited for segmentation tasks. This combination could offer robust feature extraction and precise localization of cancer regions, aiding in both classification and treatment planning.

- Data Augmentation and Transfer Learning: The framework incorporates data augmentation techniques to address the limitations of potentially smaller datasets. Additionally, transfer learning from pre-trained models helps expedite the learning process and enhance network performance.

6.2. Future Work

- Interpretability Enhancement: We aim to integrate techniques like Grad-CAM (Gradient-weighted Class Activation Mapping) to enhance model interpretability, allowing for better understanding of the decision-making process behind classifications.

- Improved Discrimination Accuracy: We will continue to refine our model architecture and training strategies to achieve even higher accuracy in differentiating between various skin cancer types (including potentially rarer types) and healthy tissues. We will also explore techniques for handling imbalanced datasets, which are common in medical image classification tasks.

- Generalization to Diverse Cancers: We plan to expand the applicability of our framework by evaluating it on datasets encompassing a broader range of cancer types, demonstrating its potential for broader clinical utility. Additionally, we will investigate the feasibility of implementing the model in a real-time or near-real-time setting for potential use in clinical settings.

Author Contributions

Conceptualization, M.A.S., A.G.G., R.M.E., Z.G.H. and A.A.A.; Methodology, A.G.G. and Z.G.H.; Software, A.G.G. and A.A.A.; Validation, M.A.S., Z.G.H. and A.A.A.; Formal analysis, A.G.G.; Data curation, M.A.S., R.M.E. and Z.G.H.; Writing—original draft, M.A.S. and A.G.G.; Writing—review & editing, M.A.S., A.G.G., R.M.E., Z.G.H. and A.A.A.; Visualization, R.M.E.; Supervision, A.A.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author(s).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AKIEC | Actinic Keratoses and Intraepithelial Carcinoma |
| BCC | Basal Cell Carcinoma |
| BKL | Benign Keratosis-like Lesions |
| BraTS | Brain Tumor Segmentation |
| cGANs | Conditional Generative Adversarial Networks |
| CNN | Convolutional Neural Network |
| DCGAN | Deep Convolutional Generative Adversarial Network |
| DF | Dermatofibroma |
| ESRGANs | Enhanced Super-Resolution Generative Adversarial Networks |
| GANs | Generative Adversarial Networks |
| HAM10000 | Human Against Machine with 10000 training images |
| ISIC | International Skin Imaging Collaboration |
| MDPI | Multidisciplinary Digital Publishing Institute |
| MEL | Melanoma |
| MRI | Magnetic Resonance Imaging |
| NV | Melanocytic Nevus |
| RGB | Red, Green, Blue |
| SCC | Squamous Cell Carcinoma |
| SVM | Support Vector Machine |
| VASC | Vascular Lesion |

References

- Patel, R.H.; Foltz, E.A.; Witkowski, A.; Ludzik, J. Analysis of artificial intelligence-based approaches applied to non-invasive imaging for early detection of melanoma: A systematic review. Cancers 2023, 15, 4694.

- Güler, M.; Namlı, E. Brain Tumor Detection with Deep Learning Methods’ Classifier Optimization Using Medical Images. Appl. Sci. 2024, 14, 642.

- Melarkode, N.; Srinivasan, K.; Qaisar, S.M.; Plawiak, P. AI-Powered Diagnosis of Skin Cancer: A Contemporary Review, Open Challenges and Future Research Directions. Cancers 2023, 15, 1183.

- Schadendorf, D.; van Akkooi, A.C.J.; Berking, C.; Griewank, K.G.; Gutzmer, R.; Hauschild, A.; Stang, A.; Roesch, A.; Ugurel, S. Melanoma. Lancet 2018, 392, 971–984.

- Bernal, J.; Kushibar, K.; Asfaw, D.S.; Valverde, S.; Oliver, A.; Martí, R.; Lladó, X. Deep convolutional neural networks for brain image analysis on magnetic resonance imaging: A review. Artif. Intell. Med. 2019, 95, 64–81.

- Asiri, A.A.; Shaf, A.; Ali, T.; Aamir, M.; Irfan, M.; Alqahtani, S.; Mehdar, K.M.; Halawani, H.T.; Alghamdi, A.H.; Alshamrani, A.F.A.; et al. Brain Tumor Detection and Classification Using Fine-Tuned CNN with ResNet50 and U-Net Model: A Study on TCGA-LGG and TCIA Dataset for MRI Applications. Life 2023, 13, 1449.

- Kulkarni, A.J.; Satapathy, S.C. Optimization techniques for machine learning. In Optimization in Machine Learning and Applications; Springer: Singapore, 2020; pp. 31–50.

- Gao, Y.; Li, J.; Zhou, Y.; Xiao, F.; Liu, H. Optimization Methods for Large-Scale Machine Learning. In Proceedings of the 2021 18th International Computer Conference on Wavelet Active Media Technology and Information Processing (ICCWAMTIP), Chengdu, China, 17–19 December 2021; pp. 304–308.

- Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118.

- Oliveira, R.B.; Filho, E.M.; Ma, Z.; Papa, J.P.; Pereira, A.S.; Tavares, J.M.R.S. Computational methods for the image segmentation of pigmented skin lesions: A review. Comput. Methods Programs Biomed. 2016, 131, 127–141.

- Sayedelahl, M.A. A novel edge detection filter based on fractional order Legendre-Laguerre functions. Int. J. Intell. Syst. Technol. Appl. 2023, 21, 321–343.

- Xu, M.; Yoon, S.; Fuentes, A.; Park, D.S. A Comprehensive Survey of Image Augmentation Techniques for Deep Learning. Pattern Recognit. 2023, 137, 109347.

- Lee, T.; Ng, V.; Gallagher, R.; Coldman, A.; McLean, D. Dullrazor®: A Software Approach to Hair Removal from Images. Comput. Biol. Med. 1997, 27, 533–543.

- Toossi, M.T.B.; Pourreza, H.R.; Zare, H.; Sigari, M.H.; Layegh, P.; Azimi, A. An effective hair removal algorithm for dermoscopy images. Skin Res. Technol. 2013, 19, 230–235.

- Mir, A.N.; Nissar, I.; Rizvi, D.R.; Kumar, A. LesNet: An Automated Skin Lesion Deep Convolutional Neural Network Classifier through Augmentation and Transfer Learning. Procedia Comput. Sci. 2024, 235, 112–121.

- Smith, S.M. Fast Robust Automated Brain Extraction. Hum. Brain Mapp. 2002, 17, 143–155.

- Daimary, D.; Bora, M.B.; Amitab, K.; Kandar, D. Brain Tumor Segmentation from MRI Images using Hybrid Convolutional Neural Networks. Procedia Comput. Sci. 2020, 167, 2419–2428.

- Innani, S.; Dutande, P.; Baid, U.; Pokuri, V.; Bakas, S.; Talbar, S.; Baheti, B.; Guntuku, S.C. Generative Adversarial Networks for Skin Lesion Classification. Sci. Rep. 2023, 13, 13467.

- Vega-Huerta, H.; Rivera-Obregón, M.; Maquen-Niño, G.L.E.; De-la-Cruz-VdV, P.; Lázaro-Guillermo, J.C.; Pantoja-Collantes, J.; Cámara-Figueroa, A. Classification model of skin cancer using convolutional neural network. Ingénierie Des Systèmes D’information 2025, 30, 387–394.

- Yu, L.; Chen, H.; Dou, Q.; Qin, J.; Heng, P.-A. Automated Melanoma Recognition in Dermoscopy Images via Very Deep Residual Networks. IEEE Trans. Med. Imaging 2016, 36, 994–1004.

- Kavitha, C.; Priyanka, S.; Kumar, M.P.; Kusuma, V. Skin Cancer Detection and Classification using Deep Learning Techniques. Sensors 2020, 20, 3206.

- El-Shafai, W.; El-Fattah, I.A.; Taha, T.E. Deep learning-based hair removal for improved diagnostics of skin diseases. Multimed. Tools Appl. 2024, 83, 27331–27355.

- Qin, Z.; Liu, Z.; Zhu, P.; Xue, Y. A GAN-based image synthesis method for skin lesion classification. Comput. Methods Programs Biomed. 2020, 195, 105568.

- Quishpe-Usca, A.; Cuenca-Dominguez, S.; Arias-Viñansaca, A.; Bosmediano-Angos, K.; Villalba-Meneses, F.; Ramírez-Cando, L.; Tirado-Espín, A.; Cadena-Morejón, C.; Almeida-Galárraga, D.; Guevara, C. The effect of hair removal and filtering on melanoma detection: A comparative deep learning study with AlexNet CNN. PeerJ Comput. Sci. 2024, 10, e1953.

- Ali, A.; Sharif, M.; Faisal, C.M.S.; Rizwan, A.; Atteia, G.; Alabdulhafith, M. Brain Tumor Segmentation Using Generative Adversarial Networks. IEEE Access 2024, 12, 183525–183541.

- Tschandl, P.; Rosendahl, C.; Kittler, H. The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. In Data Repository (Harvard Dataverse); Harvard University: Cambridge, MA, USA, 2018; pp. 1–3. Available online: https://dataverse.harvard.edu/dataset.xhtml?persistentId=doi:10.7910/DVN/DBW86T (accessed on 2 January 2024).

- Musthafa, N.; Memon, Q.A.; Masud, M.M. Advancing Brain Tumor Analysis: Current Trends, Key Challenges, and Perspectives in Deep Learning-Based Brain MRI Tumor Diagnosis. Eng 2025, 6, 82.

- Roy, S.; Jain, A.K.; Lal, S.; Kini, J. A study about color normalization methods for histopathology images. Micron 2018, 114, 42–61.

- Picon, A.; Bereciartua-Perez, A.; Eguskiza, I.; Romero-Rodriguez, J.; Jimenez-Ruiz, C.J.; Eggers, T.; Klukas, C.; Navarra-Mestre, R. Deep convolutional neural network for damaged vegetation segmentation from RGB images based on virtual NIR-channel estimation. Artif. Intell. Agric. 2022, 6, 199–210.

- Deng, J.; Dong, W.; Socher, R.; Li, L.; Li, K.; Li, F.-F. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; Chapter 20: Deep Generative Models; MIT Press: Cambridge, MA, USA, 2016; Available online: https://www.deeplearningbook.org/ (accessed on 2 January 2024).

- Augmentor Team. Augmentor: Image Augmentation Library for Python. Available online: https://augmentor.readthedocs.io/ (accessed on 2 January 2024).

- Al-Kababji, A.; Bensaali, F.; Dakua, S.P.; Himeur, Y. Automated liver tissues delineation techniques: A systematic survey on machine learning current trends and future orientations. Eng. Appl. Artif. Intell. 2023, 117, 105532.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015, Proceedings of the 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18; Springer International Publishing: Berlin/Heidelberg, Germany, 2015.

- Suganyadevi, S.; Seethalakshmi, V.; Balasamy, K. A review of deep learning on medical image analysis. Int. J. Multimed. Inf. Retr. 2021, 11, 19–38.

- Ravanmehr, R.; Mohamadrezaei, R. Deep Learning Overview. In Session-Based Recommender Systems Using Deep Learning; Springer: Cham, Switzerland, 2024; pp. 27–72.

- Nair, V.; Hinton, G.E. Rectified linear units improve restricted Boltzmann machines. In Proceedings of the 27th International Conference on Machine Learning (ICML-10), Haifa, Israel, 21–24 June 2010.

- Khanvilkar, G.; Vora, D. Activation functions and training algorithms for deep neural network. UGC Approv. J. Int. J. Comput. Eng. Res. Trends 2018, 5, 98–104.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Weiss, K.; Khoshgoftaar, T.M.; Wang, D. A survey of transfer learning. J. Big Data 2016, 3, 9.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Gulli, A.; Pal, S. Deep Learning with Keras; Packt Publishing Ltd.: Birmingham, UK, 2017.

- Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A system for Large-Scale machine learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16), Savannah, GA, USA, 2–4 November 2016.

- Agarap, A.F. Deep learning using rectified linear units (ReLU). arXiv 2018, arXiv:1803.08375.

- Park, S.; Kwak, N. Analysis on the dropout effect in convolutional neural networks. In Computer Vision–ACCV 2016, Proceedings of the 13th Asian Conference on Computer Vision, Taipei, Taiwan, 20–24 November 2016; Revised Selected Papers, Part II 13; Springer International Publishing: Berlin/Heidelberg, Germany, 2017.

- Zhang, Z. Improved Adam optimizer for deep neural networks. In Proceedings of the 2018 IEEE/ACM 26th International Symposium on Quality of Service (IWQoS), Banff, AB, Canada, 4–6 June 2018.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).