Abstract

The rapid development and increasing availability of high-resolution satellite (HRS) images provide increased opportunities to monitor large-scale land cover. However, inefficiency and excessive dependence on expert knowledge limit the use of HRS images on a large scale. As a knowledge organization and representation method, ontology can help improve the efficiency of automatic or semi-automatic land cover information extraction, especially for HRS images. This paper presents an ontology-based framework that was used to model land cover extraction knowledge and interpret HRS remote sensing images at the regional level. The land cover ontology structure is explicitly defined, accounting for spectral, textural, and shape features, and allowing for the automatic interpretation of the extracted results. With the help of regional prototypes for land cover classes stored in a Web Ontology Language (OWL) file, automated land cover extraction of the study area is then attempted. Experiments are conducted using ZY-3 (Ziyuan-3) imagery acquired for the Jiangxia District, Wuhan, China, in the summers of 2012 and 2013. The experimental method provided good land cover extraction results, with an overall accuracy of 65.07%, especially for bare surfaces, highways, ponds, and lakes, whose producer and user accuracies were both higher than 75%. The results highlight the capability of the ontology-based method to automatically extract land cover using ZY-3 HRS images.

1. Introduction

High-resolution satellite (HRS) data are widely used to monitor land cover by different application departments [1]. For instance, the China Geographic National Conditions Monitoring (CGNCM) mission monitors fundamental geographic information in China using HRS images, such as ZY-3 images [2]. The majority of the geographic information monitored by this mission is associated with land cover, which supports both short- and long-term land planning and government decision-making.

The interpretation of satellite images is challenging [1]. To achieve high image interpretation accuracy, most applications and studies use HRS remote sensing data because of their high spatial resolution [2]. Land use and land cover reflect the impact of human activities on the natural environment and ecosystem [3]. Remote sensing and geographic information system techniques are very useful for research such as land cover change detection and the prediction of future scenarios [4]. As mentioned above, the CGNCM mission, which was proposed and is implemented by the National Administration of Surveying, Mapping, and Geoinformation of China, requires annual land cover monitoring via HRS remote sensing data. Moreover, manual land cover delineations are needed to validate the accuracy of the remotely sensed data. Implementing these manual land cover delineations, which cover the entirety of China, is time consuming and challenging, even on an annual basis. Therefore, a fast and effective automatic or semi-automatic land cover extraction method is needed.

Pixel-based image extraction has been a commonly used extraction process for a long time. However, it does not fully utilize the information contained in an image and is unsuitable for high-resolution images [1,5]. Object-oriented feature extraction uses segmentation, spectral, texture, and shape information and is widely used for image feature extraction over given periods of time [5,6,7].

However, object-oriented feature extraction also possesses some limitations. Geographic object-based image analysis (GEOBIA) methods are rarely transferable because they are based on expert knowledge and the implementation of uncontrolled processing chains [8]. Thus, land cover image analyses require expert knowledge from remote sensing professionals, which is rarely formalized and difficult to automate [1].

Moreover, the object-oriented method is limited in its reusability and automation, especially when used by non-professionals or in batch processes [5,8]. Commercial software that mainly uses the object-oriented method has proven relatively inefficient for such extraction work, largely due to an inherent trial-and-error process. These processes become particularly cumbersome at large scales, such as the national-scale CGNCM mission.

The application of ontology to remote sensing image extraction has been discussed in the geographic information system (GIS) and remote sensing communities [5,9]. Ontology includes the representation of concepts, instances, relationships and axioms, and permits the inference of implicit knowledge [10]. Ontologies have effectively expressed different domain knowledge in the computer science field [1].

Ontology can be used to provide expert knowledge and improve the automation of satellite image extraction by describing image segments based on spectral, textural, and shape features [1]. Many studies have shown that ontology can feasibly support the semantic representation of remote sensing images [1,6,11,12,13]. Arvor et al. [8] suggested that ontologies will be beneficial in GEOBIA, especially for data discovery, automatic image interpretation, data interoperability, workflow management, and data publication.

However, these studies have limitations. First, the feature values stored by different ontological classes are based on an expert’s prior knowledge and experience in a study area. Thus, the results are highly dependent on the expert’s knowledge and prior experience of a specific area, which may vary significantly between experts and users. The second limitation is related to object diversity, which includes variations in the shapes and spectral characteristics of land cover objects. Shape variations may be caused by differences between segmentation algorithms or by the quality of the remotely sensed image. Spectral variations may be due to different materials covering the objects, even for objects in the same class. In addition, current studies generally ignore temporal land cover variations, which may result in spectral features that vary with the time or season [14].

This study attempts to use a hierarchical ontology with formalized knowledge to extract regional land cover data. A fundamental geographic information category (FGIC) has been proposed by the Chinese Administration of Surveying, Mapping and Geo-information. The FGIC contains almost all of the classes that appear on topographic maps, including land cover. Some researchers have created ontologies based on the FGIC. Wang and Li created an FGIC ontology, which included shape, location, and function parameters [15]. Following the FGIC ontology, remotely sensed geographic information classes can be formalized based on the associated properties and on additional remotely sensed feature properties, such as spectral and texture features. Although numerous studies have examined land cover using remote sensing applications, only a small portion of the associated knowledge has been stored, reused, or shared. Thus, a remotely sensed land cover knowledge base is needed.

Building a knowledge base is difficult because the knowledge is often implicit and possessed by experts [16]. Therefore, the semantic gap between experts and non-expert users must be narrowed [17]. Prototype theory in cognitive psychology provides a potential starting point for the knowledge base [18]. Medin and Smith [19] suggested that a concept is the abstraction of different classes, and the prototype is the idealized representation of this concept. In other words, the prototype is an abstraction that contains all of the centralized feature characteristics of the concept or class. The prototype can be used to classify other unknown objects. Creating a land cover class prototype may also benefit the remote sensing knowledge organization and image extraction processes. Land cover extraction knowledge can be stored for each land cover class and utilized by future analyses.

The CGNCM mission uses knowledge in the automatic or semi-automatic extraction processes. This knowledge significantly improves the efficiency and effectiveness of land cover information extraction. As required by the CGNCM, annual geographic conditions should be monitored during the summer, which can serve as a starting point for ontological land cover knowledge.

In this paper, we examine the ability of ontologies to facilitate land cover classification using satellite images. We focus on the use of a land cover ontology and regional prototype, which are used to perform the regional land cover extraction. This study introduces an ontology-based framework and method for land cover extraction. The method uses the created land cover ontology and creates a regional land cover class prototype for automated image extraction. This paper is organized into four sections. The image data and methodology are described in Section 2. Section 3 discusses the experimental results of the proposed method. Finally, conclusions are provided in Section 4.

2. Data and Methods

2.1. Data

As the first civil high-resolution satellite in China, ZY-3 (Ziyuan-3, Resource-3) was launched on 19 January 2012 by the Satellite Surveying and Mapping Application Center (SASMAC), National Administration of Surveying, Mapping and Geo-information (NASG), China, with the purpose of surveying and monitoring land resources. ZY-3 is equipped with 2.1 m panchromatic Time Delay and Integration Charge-Coupled Device (TDI-CCD) cameras and a 5.8 m multispectral camera. The latter includes four bands: blue, green, red, and near-infrared [20]. ZY-3 imagery is widely used for land management, surveying, and environmental studies in China, and represents the main data source for the CGNCM mission. The ZY-3 images used in this study were provided by SASMAC, NASG. Orthorectification and radiometric corrections have been completed for the reflectance image, providing a quantitative result and avoiding effects related to temporal and environmental variations [21]. Panchromatic images (2.1 m spatial resolution) are merged with multispectral images (5.8 m spatial resolution) using the Gram–Schmidt method, resulting in a 2.1 m spatial resolution image with four spectral bands. The ZY-3 images were acquired on 20 July 2012 and 7 August 2013. Together with the 2012 land cover map, the former image is used to analyze land cover features, and the feature attribute data are stored as prototypes. Land cover is then extracted from the 2013 image for the experiment. In line with the CGNCM requirement for land cover extraction, we conduct the extraction experiment in the summer season, when land cover features are most distinct, especially for vegetation.

This experiment focuses on land cover monitoring of the Jiangxia District in Wuhan, which lies in the Eastern Jianghan Plain at the intersection of the middle reaches of the Yangtze and Han Rivers. The summer is long and hot in Wuhan, lasting from May to August each year, with an average temperature of 28.7 °C. Urban areas in the Jiangxia District have recently expanded, leading to significant land cover variations each year. The study area includes urban and suburban areas, some of which are under construction.

2.2. Methods

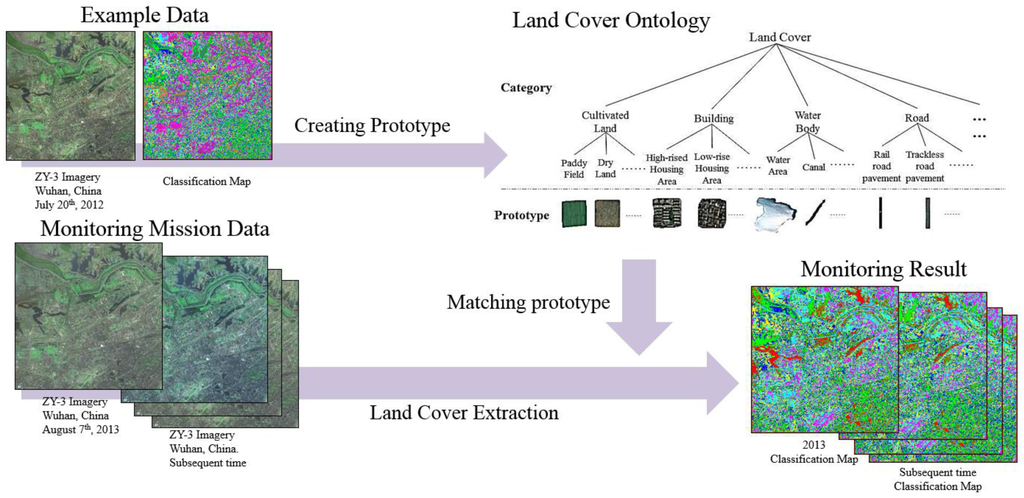

Our study first establishes an ontology based on land cover classes, properties, and a hierarchical system. The land cover ontology is based on the Chinese FGIC land cover [22]. The ontology utilizes the spectral, textural, spatial, and segmentation features of land cover classes in remote sensing images. The 2012 land cover map and ZY-3 image are used to analyze the land cover features and estimate a centralized value range for each feature. A land cover ontology prototype is then created. Finally, the ontology and prototype are used to extract land cover from the 2013 data.

2.2.1. Land Cover Hierarchy

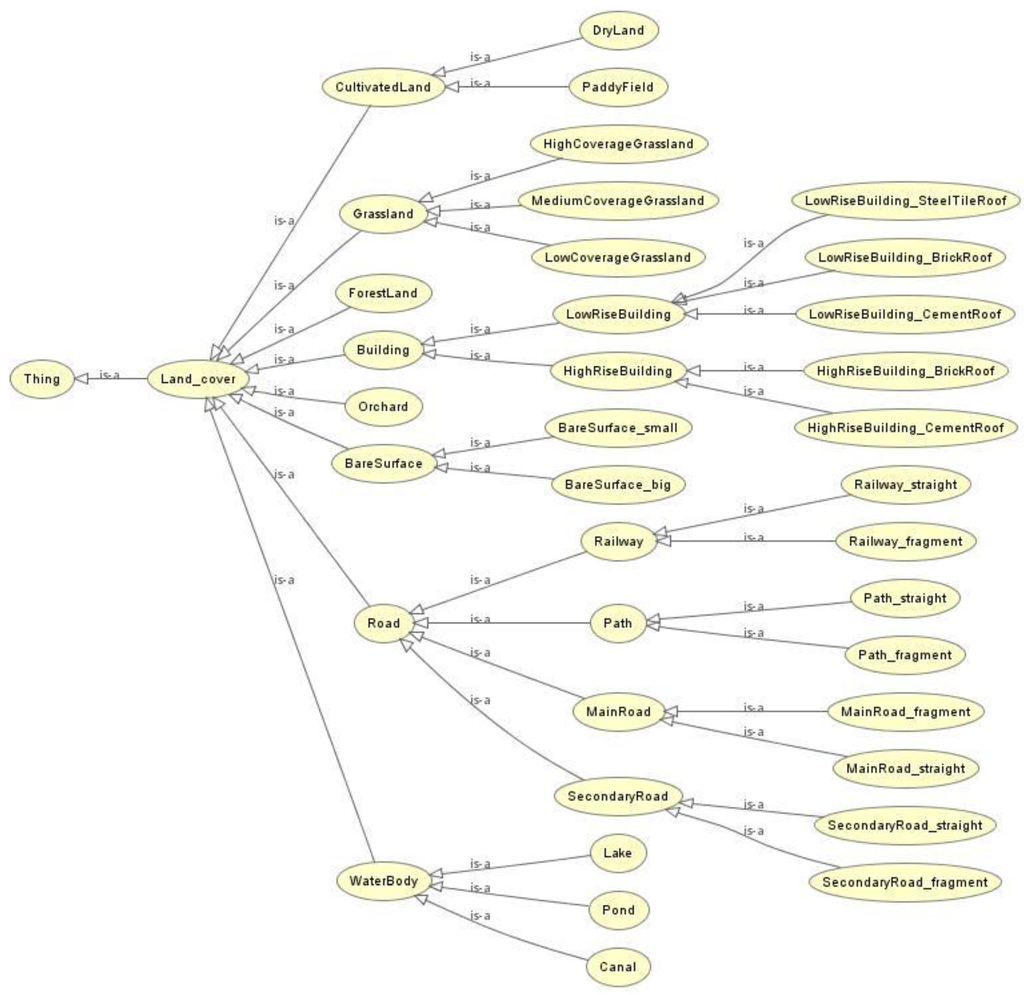

The land cover in the study area consists of eight classes: building, road, bare surface, grassland, cultivated land, forest, orchard, and water bodies. Some of these classes include subclasses, such as high-rise and low-rise buildings for the building class. Based on the FGIC ontology proposed by Wang and Li [15], the land cover classes are selected to create the land cover ontology, and their hierarchy is established via Protégé 3.4.7, which is provided by Stanford University, California, USA. The main relationship among these classes is “is-a”, which builds the hierarchical land cover structure (Figure 1).

Figure 1.

Hierarchy of land cover ontology. According to the China Fundamental Geographic Information Category, land cover classes are selected for the land cover ontology. Land cover classes have the relationship of “is-a” in the ontology hierarchy.

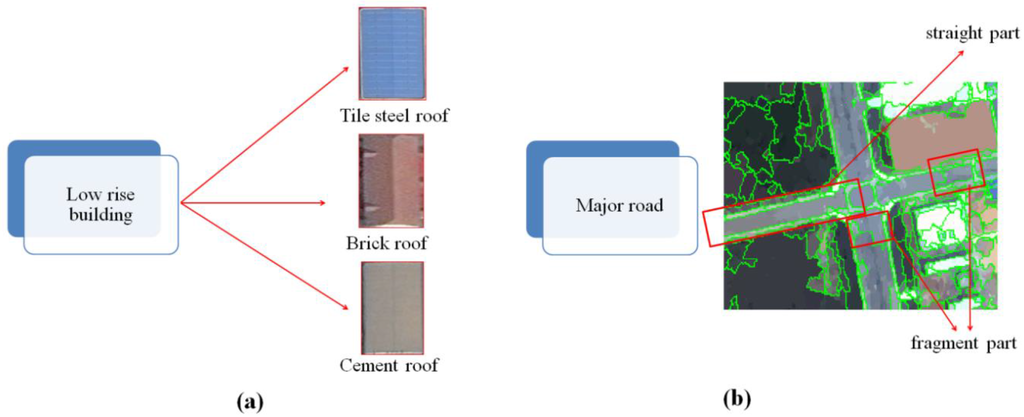

An increase in land cover object diversity may decrease the feature extraction accuracy. This may occur for objects within the same class that are composed of different materials. In this case, the spectral and textural features of two objects may be completely different despite belonging to the same class, and the objects can be mistakenly extracted into different classes (Figure 2a). For example, low-rise building roofs may be covered with different materials, such as steel tiles, bricks, or cement, and may be classified into different classes. A similar scenario may occur for objects in the same class with different shapes, which may be due to the segmentation algorithm or image quality. For example, major roads can be segmented into different sections, including straight and fragmented portions. These sections exhibit similar spectral and textural characteristics but distinct shapes, which may cause shape feature calculation errors and affect the extraction results (Figure 2b).

Figure 2.

Diversity of land cover objects. (a) Spectral diversity resulting from differences in materials, e.g., low-rise buildings; (b) shape diversity, which may result from image quality or the segmentation algorithm, e.g., a major road may be over-segmented.

These problems are avoided by creating subclasses. For instance, low-rise buildings are classified into three subclasses based on their roof material: steel tile, brick, and cement. Major roads are divided into straight and fragmented portions based on shape. Subclasses are stored in the ontology and are invisible to users. After extraction, subclasses are merged back into their original classes.
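The merging of subclasses after extraction can be illustrated with a minimal Python sketch. The subclass and class names below are hypothetical placeholders chosen for illustration; they are not taken from the authors' OWL file, and in practice the mapping would be read from the "is-a" relations stored in the ontology.

```python
# Hypothetical subclass-to-class mapping (illustrative names only).
PARENT_OF = {
    "low_rise_building_steel_tile": "low_rise_building",
    "low_rise_building_brick": "low_rise_building",
    "low_rise_building_cement": "low_rise_building",
    "major_road_straight": "major_road",
    "major_road_fragmented": "major_road",
}


def merge_subclasses(labels):
    """Map each extracted label to its original class.

    Labels that have no entry in PARENT_OF are returned unchanged.
    """
    return [PARENT_OF.get(label, label) for label in labels]


extracted = ["low_rise_building_brick", "major_road_fragmented", "pond"]
merged = merge_subclasses(extracted)
```

Keeping the mapping separate from the extraction rules means that users only ever see the original classes, while the rules can still be tuned per subclass.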

2.2.2. Land Cover Properties

Many attributes usually need to be considered to identify objects in satellite images, e.g., the spectral response, shape, texture, and spatial relationships [8]. Reference [1] provides a semantic description of image objects contained in a Landsat TM image from the Brazilian Amazon, which was used to classify the area using spectral rules defined in an ontology. References [12,23] successfully combined ontologies with spectral and geometric attributes to interpret urban objects (e.g., roads, vegetation, water, and houses).

In the ontology, the remote sensing characteristics of land cover are represented as properties of the land cover ontology; that is, spectral, textural, and shape attributes are used to represent land cover features and are organized as ontological properties.

Spectral features are computed for each band of the input image. The attribute value for a particular pixel cluster is computed from the pixels of the input band that share the same segmentation label. Spectral attributes include the mean, the average value of the pixels comprising the region in band x; the standard deviation, the standard deviation of the pixels comprising the region in band x; the maximum value, the maximum value of the pixels comprising the region in band x; and the minimum value, the minimum value of the pixels comprising the region in band x.
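As a minimal sketch of the spectral attributes described above, the following Python code groups the pixels of one band by segmentation label and computes the four statistics per segment. It assumes the band and the label raster are equally sized 2-D lists; the function name and the use of the population standard deviation are assumptions, since the source does not state which form is used.

```python
from statistics import mean, pstdev


def spectral_attributes(band, labels):
    """Return {segment_label: {"mean", "std", "min", "max"}} for one band.

    band and labels are equally sized 2-D lists: pixel values and the
    segmentation label of each pixel.
    """
    pixels = {}
    for band_row, label_row in zip(band, labels):
        for value, label in zip(band_row, label_row):
            pixels.setdefault(label, []).append(value)
    return {
        label: {
            "mean": mean(values),
            "std": pstdev(values),  # population form; an assumption
            "min": min(values),
            "max": max(values),
        }
        for label, values in pixels.items()
    }


# Toy reflectance values for two segments (made-up numbers).
band = [[0.10, 0.12, 0.40],
        [0.11, 0.13, 0.42]]
labels = [[1, 1, 2],
          [1, 1, 2]]
stats = spectral_attributes(band, labels)
```

The function would be called once per band, so a four-band ZY-3 image yields sixteen spectral attributes per segment.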

Texture features are computed for each band of the input image. Texture attributes require a two-step process. The first step applies a square kernel of pre-defined size to the input image band. The attributes are calculated for all pixels in the kernel window, and the result is referenced to the center kernel pixel. In our study, the kernel window size is set to 3. Next, the attribute results are averaged across each pixel in the pixel cluster to create the attribute value for that band’s segmentation label. There are four texture attributes: the mean, variance, data range, and entropy. Mean is the average value of the pixels inside the kernel; variance is the average variance of the pixels inside the kernel; range is the average data range of the pixels inside the kernel; and entropy is the average entropy value of the pixels inside the kernel.
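The two-step texture computation can be sketched in Python as follows. This is an illustration under stated assumptions rather than the ENVI implementation: the window is clipped at image edges, the entropy is the Shannon entropy of the exact values in the window (real tools typically quantize grey levels first), and all function names are hypothetical.

```python
from collections import Counter
from math import log2


def kernel_texture(band, row, col, size=3):
    """Step 1: texture measures for the window centred on (row, col).

    The window is clipped at the image edges (an assumption).
    """
    half = size // 2
    window = [
        band[r][c]
        for r in range(max(0, row - half), min(len(band), row + half + 1))
        for c in range(max(0, col - half), min(len(band[0]), col + half + 1))
    ]
    n = len(window)
    mean = sum(window) / n
    variance = sum((v - mean) ** 2 for v in window) / n
    counts = Counter(window)
    entropy = -sum((k / n) * log2(k / n) for k in counts.values())
    return {
        "mean": mean,
        "variance": variance,
        "range": max(window) - min(window),
        "entropy": entropy,
    }


def segment_texture(band, labels, size=3):
    """Step 2: average the per-pixel kernel results over each segment."""
    sums, counts = {}, Counter()
    for r, label_row in enumerate(labels):
        for c, label in enumerate(label_row):
            tex = kernel_texture(band, r, c, size)
            acc = sums.setdefault(label, dict.fromkeys(tex, 0.0))
            for name, value in tex.items():
                acc[name] += value
            counts[label] += 1
    return {
        label: {name: total / counts[label] for name, total in acc.items()}
        for label, acc in sums.items()
    }
```

With `size=3`, each pixel contributes the statistics of its 3 × 3 neighbourhood, matching the kernel window size used in this study.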

Spatial features (shapes) are computed using the polygon that defines the pixel cluster boundary. Spatial attributes include the area, compactness, convexity, solidity, roundness, form factor, elongation, and rectangular fit, all of which reflect the shape of the object. Area is the total area of the polygon minus the area of any holes; compactness is a shape measure that indicates how compact the polygon is; convexity measures how convex the polygon is, as polygons are either convex or concave; solidity is a shape measure that compares the area of the polygon to the area of a convex hull surrounding the polygon; roundness is a shape measure that compares the area of the polygon to the square of the maximum diameter of the polygon; form factor is a shape measure that compares the area of the polygon to the square of the total perimeter; elongation is a shape measure that indicates the ratio of the major axis of the polygon to the minor axis, where both axes are derived from an oriented bounding box containing the polygon; and rectangular fit is a shape measure that indicates how well the shape is described by a rectangle by comparing the area of the polygon to the area of the oriented bounding box enclosing the polygon. The calculation methods for all of these features are described in [24].
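Three of these shape attributes can be sketched in Python for a simple polygon without holes. The code is illustrative only: the normalization of the form factor by 4π (so that a circle scores 1) is an assumption, the exact definitions used in the study are those of [24], and all function names are hypothetical.

```python
from math import hypot, pi


def _edges(points):
    """Consecutive vertex pairs of a closed polygon."""
    return zip(points, points[1:] + points[:1])


def polygon_area(points):
    """Shoelace area of a simple polygon given as [(x, y), ...]."""
    return abs(sum(x1 * y2 - x2 * y1 for (x1, y1), (x2, y2) in _edges(points))) / 2.0


def perimeter(points):
    return sum(hypot(x2 - x1, y2 - y1) for (x1, y1), (x2, y2) in _edges(points))


def convex_hull(points):
    """Andrew's monotone chain; hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def shape_attributes(points):
    """Area, form factor, and solidity of a non-degenerate simple polygon."""
    area = polygon_area(points)
    return {
        "area": area,
        # 4*pi normalization is an assumption (a circle then scores 1).
        "form_factor": 4 * pi * area / perimeter(points) ** 2,
        "solidity": area / polygon_area(convex_hull(points)),
    }


# An L-shaped polygon: concave, so its solidity is below 1.
l_shape = [(0, 0), (2, 0), (2, 1), (1, 1), (1, 2), (0, 2)]
attrs = shape_attributes(l_shape)
```

An over-segmented road fragment and a straight road section would produce clearly different values for such attributes, which is why shape-based subclasses are useful.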

Remotely sensed land cover class features represent the extracted properties, which are stored in OWL, with the help of Protégé 3.4.7.

2.2.3. Create Land Cover Class Prototype

Land cover extraction knowledge can be accumulated over time when conducting various tasks. A suitable land cover knowledge representation and organization method is needed to efficiently reuse this knowledge. A prototype approach, which is rooted in the knowledge representation theory of cognitive psychology, is used in this study. Numerous land cover examples were input for the study area. A statistical method was then used to calculate the centralized ranges of every feature for each class, which were used to create a prototype for each class.

The land cover vector layer can be generated from the land cover map. IDL is then used to segment the ZY-3 image into objects according to the land cover vector layer. These objects belong to the corresponding land cover classes. The features of these objects are then analyzed for each class.

At the region level, the local variability of spatially-explicit land-cover/use changes displays different types of natural resource depletion [25]. The land cover features may differ in different regions, especially spectral features. For example, oak spectral features may vary between Northern and Southern China in June. In contrast, land cover in the same area or similar areas may display similar characteristic features. Therefore, it is possible to create a land cover prototype at the regional scale.

HRS images and classified maps are used to create the prototype. The entire Wuhan area exhibits similar geographic and climatic conditions. Therefore, the land cover prototype can be established for this area. Only the Jiangxia District is selected to create a prototype in this experiment. The 2012 ZY-3 reflectance image and land cover map are used in this portion of the analysis. Therefore, 2012 can be considered the base year for the Jiangxia District land cover monitoring. Based on the prototype, land cover extraction analyses can be conducted in the summer of the subsequent year (2013), which meets the requirement of the CGNCM mission.

The land cover reflectance characteristics may vary seasonally, especially for the vegetation class [25]. Therefore, annual land cover monitoring studies should utilize images from the same season, which will optimize the comparability between the images. For example, the CGNCM mission requires land cover monitoring during the summer of each year [2]. This study attempts to analyze the land cover during summer 2012.

Confidence intervals are estimated using a statistical method and used to attain centralized feature values for every class. A normal distribution is then fit to the land cover feature data, which is used to create confidence intervals for each feature class.

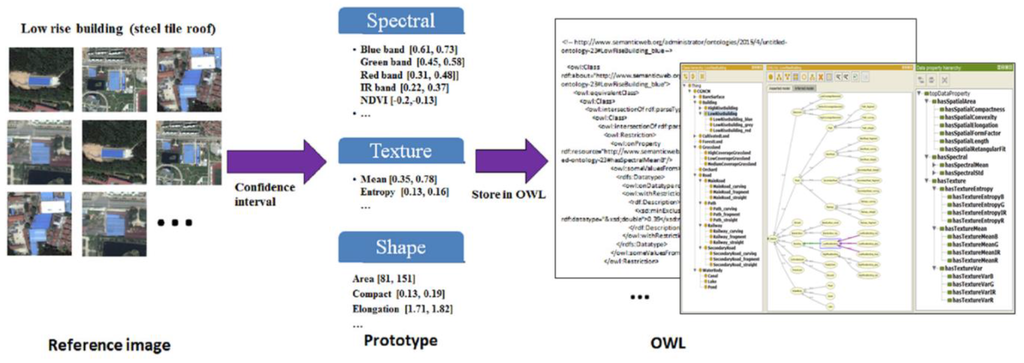

The prototype of one class is the combination of all of the features within a confidence interval. For instance, suppose the high-rise building class includes 500 objects based on the reference image and land cover map. The mean texture value can be calculated for these 500 objects. If the object data fit the normal distribution, then the confidence interval can be determined for the mean high-rise building texture. The confidence intervals of other features (e.g., the spectral mean, standard deviation, minimum, etc.; textural variance, entropy, etc.; and shape area, elongation, roundness, etc.) can be calculated as well. These confidence intervals are used to create the high-rise building prototype. This feature information is stored as data properties in Protégé. The prototype of this class is then ready to use (Figure 3). After the ontology is created and the prototype is stored for a study area, the land cover classes can be automatically extracted at the same time or season every year.

Figure 3.

Creating a prototype for land cover. With the help of the reference image and land cover map, the confidence interval of each feature can be calculated. All of these data are then stored in Protégé 3.4.7.
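The prototype construction can be sketched in Python as follows. The source does not state the confidence level or the exact interval formula, so this sketch assumes a 95% central interval of a normal distribution fitted to the feature values of the reference objects; the function names and sample values are hypothetical.

```python
from statistics import NormalDist, mean, stdev


def feature_interval(values, confidence=0.95):
    """Central interval of a normal distribution fitted to the sample.

    The 95% level and the mean +/- z * sigma form are assumptions.
    """
    mu, sigma = mean(values), stdev(values)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return (mu - z * sigma, mu + z * sigma)


def build_prototype(samples, confidence=0.95):
    """samples: {feature_name: [one value per reference object]} for a class."""
    return {
        name: feature_interval(values, confidence)
        for name, values in samples.items()
    }


# Made-up feature values for a handful of reference objects of one class.
high_rise_samples = {
    "texture_mean_band1": [0.21, 0.24, 0.22, 0.25, 0.23],
    "elongation": [1.4, 1.6, 1.5, 1.7, 1.3],
}
prototype = build_prototype(high_rise_samples)
```

Each resulting `(low, high)` pair corresponds to one data property that would be stored for the class in the OWL file.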

2.2.4. Land Cover Extraction

As in GEOBIA methods, the procedure for relating geographic and image objects relies on a segmentation step and a classification step [26]. Segmentation is an important GEOBIA feature extraction step. This study uses the ENVI EX segmentation tool for this step. The tool uses an edge detection-based segmentation method and details of the method can be found in the ENVI user guide [4]. Edge-based segmentation is suitable for detecting feature edges if objects of interest have sharp edges. Scale level parameters should be effectively set to delineate features.

Since the geographic entity descriptions and corresponding representations in images are scale-dependent, the scale must be considered when conducting geographic analyses [27,28]. Studies have used remote sensing imagery to show that the segmentation accuracy can limit object-oriented extraction [8]. No segmentation method is completely suitable for all land cover classes due to image quality, pre-processing methods, and the object complexity variations [5,8].

The segmentation scale used for land cover extraction in this study was provided by experts. The input parameters of the segmentation algorithm can be adjusted through a trial-and-error process [8]. After manual adjustment, the segmentation scale level parameter was set to 20 for the ZY-3 2.1 m fused image. The scale level value is also stored in an OWL file.

Based on the ontology and prototype from 2012, a land cover extraction tool can be created using ENVI/IDL. An August 2013 image of the same area is used as the input. The program loads the land cover classes and prototype from the ontology, creating a rule-based extraction module (Figure 4).

Figure 4.

Procedure for using the ontology and prototype in land cover extraction. The prototype, which is created from the example data, supplies the feature data ranges to the extraction procedure, and land cover is then automatically extracted from the subsequent image.
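A simplified Python sketch of how prototype ranges might drive rule-based extraction is given below. It is not the ENVI/IDL module used in this study: it replaces fuzzy membership functions with a crisp in-range test and scores each class by the fraction of features that fall inside the prototype range. The scoring rule, the threshold, and all names and values are assumptions made for illustration.

```python
def match_score(features, prototype):
    """Fraction of prototype features whose range contains the object's value."""
    hits = sum(
        1
        for name, (low, high) in prototype.items()
        if name in features and low <= features[name] <= high
    )
    return hits / len(prototype)


def classify(features, prototypes, threshold=0.40):
    """Return the best-matching class, or None if no score reaches threshold."""
    best_class, best_score = None, 0.0
    for cls, proto in prototypes.items():
        score = match_score(features, proto)
        if score > best_score:
            best_class, best_score = cls, score
    return best_class if best_score >= threshold else None


# Made-up prototype ranges for two classes.
prototypes = {
    "pond": {"nir_mean": (0.0, 0.1), "area": (100, 5000)},
    "bare_surface": {"nir_mean": (0.2, 0.4), "area": (500, 20000)},
}
segment = {"nir_mean": 0.05, "area": 1200}
label = classify(segment, prototypes)
```

Objects that match no prototype well enough are left unclassified rather than forced into a class, which keeps the rule set conservative.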

2.2.5. Accuracy Assessment

The land cover map of the study area produced in summer 2013 is used as the ground truth data for assessing the accuracy of the extraction results. This reference land cover map has a scale of 1:25,000 and a resolution of about 2 m per pixel. The average error is less than one pixel. The map also contains eight classes: building, road, bare surface, grassland, cultivated land, forest, orchard, and water bodies. With the help of the ground truth data, the accuracy of the 2013 land cover extraction experiment can be assessed. First, we calculate the confusion matrix of all classes, from which the user’s and producer’s accuracies can be estimated. Then, we compute the overall accuracy and Kappa coefficient for further analysis of the experimental results. In our study, post-classification processing is not applied after the automatic land cover extraction, and only the automatic extraction result is considered in the accuracy assessment. This is because post-classification processing, which is often used in practical applications, is somewhat subjective, and operator-related uncertainty may exist in the processed results.
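The accuracy measures named above follow from the confusion matrix in the standard way, as the Python sketch below shows. The matrix orientation (rows are reference classes, columns are extracted classes) is an assumption, and the two-class matrix is a toy example, not the data of this study.

```python
def accuracy_report(matrix):
    """matrix[i][j]: pixels of reference class i extracted as class j."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    diagonal = [matrix[i][i] for i in range(n)]

    overall = sum(diagonal) / total
    # Chance agreement expected from the marginal totals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    kappa = (overall - expected) / (1 - expected)
    return {
        "overall": overall,
        "kappa": kappa,
        # Producer's accuracy: correct pixels / reference pixels of the class.
        "producers": [d / r if r else 0.0 for d, r in zip(diagonal, row_totals)],
        # User's accuracy: correct pixels / pixels extracted as the class.
        "users": [d / c if c else 0.0 for d, c in zip(diagonal, col_totals)],
    }


toy_matrix = [[40, 10],
              [20, 30]]
report = accuracy_report(toy_matrix)
```

A class with a high producer’s accuracy but a low user’s accuracy is one that is found reliably but also absorbs many pixels from other classes.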

3. Results and Discussion

ENVI/IDL is used for land cover extraction. The segmentation scale is 20 and the merge scale is 90 in this study. These two parameters are used in the subsequent image extraction. The rule-based classifier of ENVI EX 4.8 is used, with the confidence threshold set to 0.40 and the fuzzy tolerance left at its default value of 5%. S-Type was chosen as the default membership function set type.

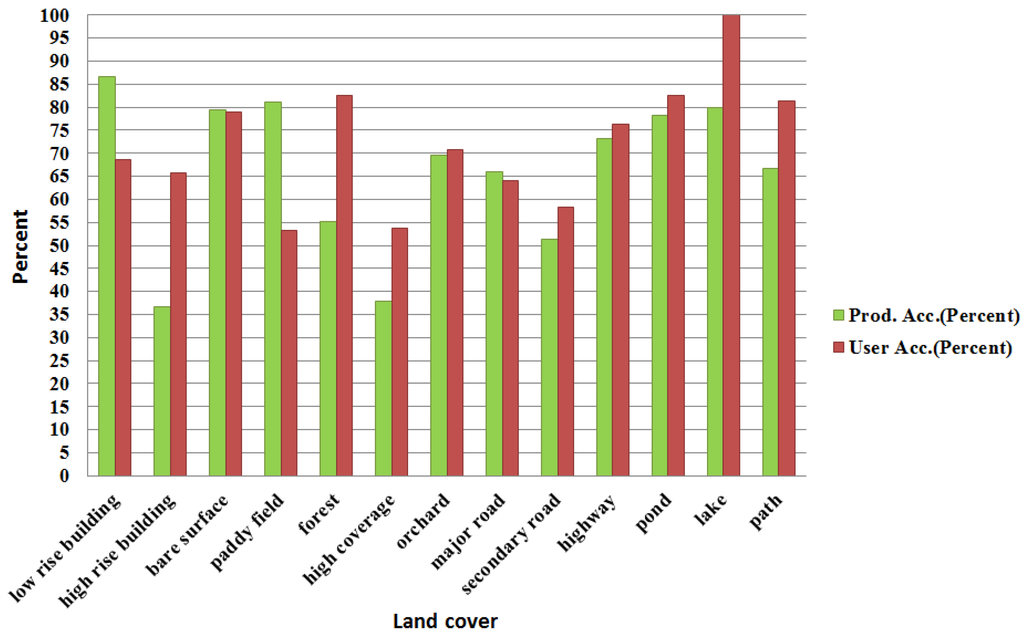

We use the 2013 land cover map as the ground truth, from which a confusion matrix is computed. The Kappa coefficient of the experiment is 0.59, and the overall accuracy is 65.07%. The producer’s and user’s accuracies are shown in Table 1.

Table 1.

Accuracy assessment of land cover extraction.

The low-rise building class exhibits the highest producer’s accuracy of 86.52%, but a relatively low user’s accuracy of 68.63% (Table 1). As shown in Figure 5, this discrepancy is due to confusion between low-rise and high-rise buildings, which display similar features. The majority of the path class is over-segmented. Therefore, low-rise buildings that are partially covered in dust may be incorrectly classified.

Figure 5.

Comparison of land cover extraction results. Green columns represent the producer’s accuracy for land cover extraction, and red columns represent the user’s accuracy.

The paddy field class also displayed high producer and low user accuracies of 81.18% and 53.27%, respectively (Table 1). As illustrated in Figure 5, the paddy field class displays an acceptable producer’s accuracy, but is still mistakenly classified into other classes, such as the orchard, forest, and low-rise building classes. This confusion causes the low paddy field user’s accuracy.

The user accuracies of the bare surface, forest, highway, pond, lake, and path classes are all higher than 75%, suggesting that the majority of the objects in these classes are correctly extracted. The bare surface, pond, and lake classes displayed both producer and user accuracies larger than 75% (Table 1). These results can also be seen in Figure 5. The high-rise building and grassland classes were not correctly extracted, as their producer accuracies are lower than 50%.

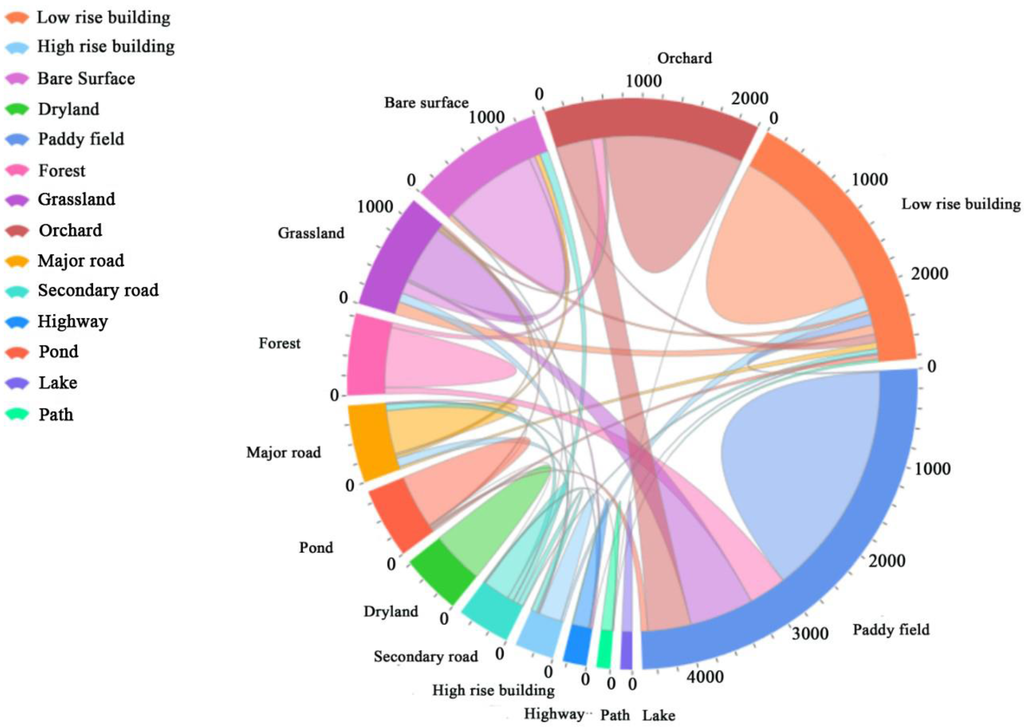

Details of the extraction results can be seen in the confusion matrix in Table 2 and the chord chart in Figure 6. In the chord chart, different colors represent different land cover classes. The length of the arc for each class represents the number of pixels, in thousands. The links between classes represent the pixels of objects that are incorrectly classified into the other class. More than half of the paddy fields are correctly extracted. However, certain portions of the paddy fields are misclassified, mostly as orchard, grassland, and forest, and the paddy field class is most likely to be mistaken for orchard. There are two reasons for this. First, orchards are planted with low trees whose foliage flourishes in summer, and paddy fields are also covered by vegetation in summer. Second, in the 2.1 m image, the textures of the two classes are very close, and their spectral and shape features are similar, so paddy field objects are easily interpreted as orchard. Meanwhile, about 1/6 of the extracted orchards are in fact paddy fields. Most of the extracted low-rise building objects are correct, but low-rise buildings can still easily be classified into a variety of other land cover classes. By comparison, the bare surface, dry land, pond, and lake classes have good extraction results.

Table 2.

Confusion matrix of land cover.

Figure 6.

Chord chart of the confusion matrix for land cover extraction. Different colors represent different land cover classes. The length of the arc for each class represents the number of pixels, in thousands. The links between classes represent the pixels of objects that are incorrectly classified into the other class.

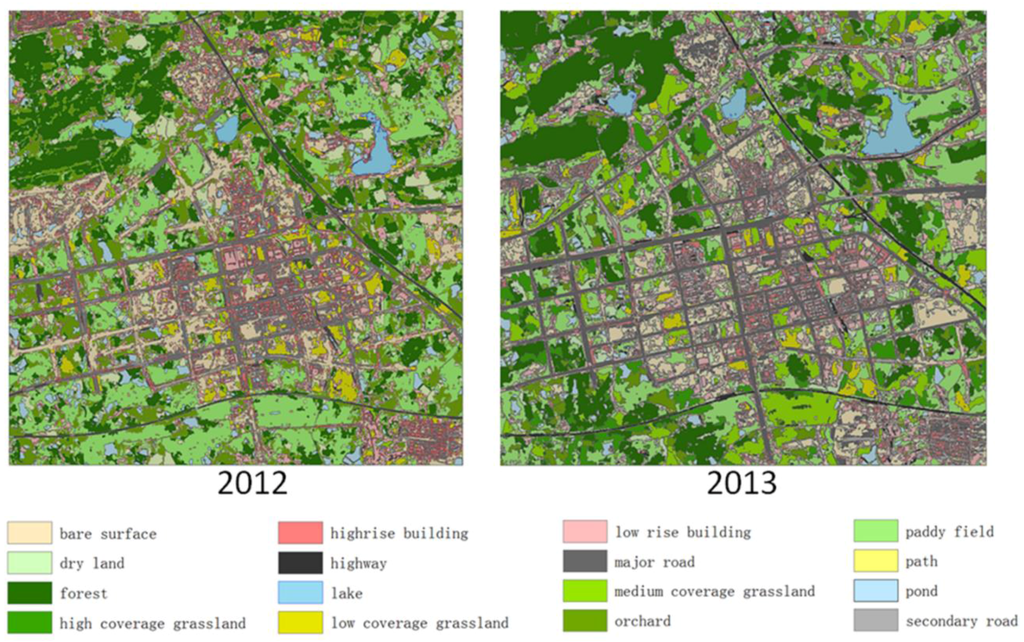

Clear changes can be observed between the 2012 and 2013 land cover images. For example, a larger proportion of bare surface can be seen in 2012, and dirt and dust cover a large area of the Jiangxia District (Figure 7 left). However, most of the buildings and roads are completed in 2013. Thus, some new residential areas and road networks (including main roads, secondary roads and highways) can be seen in Figure 7 (right). In addition, forested areas were planted after the construction was completed. However, some areas are still under construction in the 2013 image. Detailed information about the land cover change between 2012 and 2013 can be seen in Table 3.

Figure 7.

Classification result of the study area. Left is the extraction result for study area in 2012. Right is the result in 2013. It can be seen from the results that certain areas of bare surface become roads or buildings in 2013.

Table 3.

The matrix of land cover changes between 2012 and 2013. The unit is m².
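A change matrix of this kind can be derived by cross-tabulating two co-registered classification rasters, as in the Python sketch below. It assumes that both rasters share the 2.1 m grid of the fused ZY-3 image, so that each pixel covers 2.1 × 2.1 m²; the function name and the toy rasters are hypothetical.

```python
from collections import Counter

PIXEL_AREA_M2 = 2.1 * 2.1  # area of one fused 2.1 m pixel (assumed grid)


def change_matrix(labels_2012, labels_2013, pixel_area=PIXEL_AREA_M2):
    """Return {(class_2012, class_2013): area in m²} for two label rasters."""
    counts = Counter()
    for row_a, row_b in zip(labels_2012, labels_2013):
        counts.update(zip(row_a, row_b))
    return {pair: n * pixel_area for pair, n in counts.items()}


# Toy 2 x 2 rasters (made-up labels).
lc_2012 = [["bare", "bare"],
           ["pond", "bare"]]
lc_2013 = [["road", "bare"],
           ["pond", "building"]]
changes = change_matrix(lc_2012, lc_2013)
```

Entries whose two class names are equal give the unchanged area, and all other entries give the area converted from one class to another.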

The results show that ontology and prototype can be used to efficiently and effectively conduct automatic land cover extractions. The bare surface, pond, and lake classes exhibited high accuracies. The low-rise building, paddy field, forest, orchard, major road, secondary road, and highway classes displayed moderate accuracies.

Although the overall accuracy of 65.07% is not very high, the efficiency and convenience of the proposed method are still evident. With the help of the land cover ontology and prototype, extraction can be completed quickly without extensive trial-and-error parameter testing, expert knowledge preparation, or manual delineation. As mentioned in Section 2.2.5, post-classification processing, which is considered to significantly improve extraction results and is often required in practical work, is not applied after the automatic land cover extraction in our study. In other words, when this ontology-based extraction method is used in practice together with post-classification processing, both greater efficiency and higher accuracy can be expected.

4. Conclusions

Our study proposes an ontology-based image extraction method for land cover. The land cover ontology is first established for the study area, including spectral, texture, and shape properties. A referenced land cover map and a ZY-3 image are then used to create a land cover regional prototype for the study area, which is stored in an OWL file. A land cover extraction experiment is then conducted for the study area for the year after the referenced year. The results of this study show that the use of regional prototypes can help organize land cover extraction knowledge in various study areas, avoiding the variance and subjectivity introduced by different remote sensing experts. Moreover, this process benefits non-expert users conducting land cover analyses because they only need a land cover map, or referenced classification map, and an HRS image; the subsequent extraction work is completed automatically. The ontological approach reuses knowledge, simplifying temporal and spatial analyses.
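The idea of reusing a regional prototype can be pictured with a deliberately simplified sketch. It assumes, purely for illustration, that a prototype is the per-class mean of object feature vectors taken from the reference year, and that objects in a later image are assigned to the class with the nearest prototype. This is not the ontology-based procedure of the study: the function names, the two-dimensional features, and the values are hypothetical, and storage in an OWL file is not shown.

```python
import math
from collections import defaultdict


def build_prototypes(samples):
    """Average the feature vectors of each class.

    samples: iterable of (class_name, feature_vector) pairs taken from
    the reference year (hypothetical input format).
    """
    sums, counts = {}, defaultdict(int)
    for name, vec in samples:
        if name not in sums:
            sums[name] = [0.0] * len(vec)
        for k, value in enumerate(vec):
            sums[name][k] += value
        counts[name] += 1
    return {name: [s / counts[name] for s in total]
            for name, total in sums.items()}


def nearest_prototype(vec, prototypes):
    """Return the class whose prototype is closest in Euclidean distance."""
    return min(prototypes, key=lambda name: math.dist(vec, prototypes[name]))


# Hypothetical reference objects: (class, [spectral feature, texture feature]).
reference_objects = [
    ("pond", [0.10, 0.20]),
    ("pond", [0.14, 0.24]),
    ("forest", [0.60, 0.70]),
    ("forest", [0.64, 0.66]),
]
prototypes = build_prototypes(reference_objects)

# An object segmented from the following year's image (hypothetical values).
label = nearest_prototype([0.15, 0.20], prototypes)
print(label)
```

Because the prototypes are computed once from the reference map and image, later images of the same region can be labelled without further expert input, which is the reuse property emphasized above.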

The main contribution of this study is the attempt to use ontological methods and prototypes for land cover extraction at the regional scale. This method has the advantage of reusing knowledge of the remotely sensed characteristics of land cover, and it provides a more automatic, more efficient, and less expert knowledge-dependent approach to land cover monitoring.

Future studies should include additional factors, such as the NDVI and NDWI indices or additional band ratios, which can improve the land cover class accuracies. In addition, this study only analyzed the summer season, so temporal resolution and variations should be addressed in future studies, covering seasonal, or even monthly, temporal scales. Larger areas will also be analyzed in future studies, including province-scale land cover monitoring based on the combination of regional prototypes. Additional land cover maps and images are required for larger scale studies, and a more automated tool should be developed. A province-scale land cover ontology with regional prototypes would then simplify land cover interpretation and data extraction. In this case, the land cover monitoring task (CGNCM mission) can be completed more efficiently and accurately.
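As a brief illustration of the indices mentioned above, both are normalized differences of two bands. The sketch below assumes reflectance values for the green, red, and near-infrared bands, and it assumes the green/near-infrared formulation of NDWI; the paper does not state which NDWI variant is intended, and the function names and sample values are hypothetical.

```python
def normalized_difference(a, b):
    """Return (a - b) / (a + b), or 0.0 when the denominator is zero."""
    total = a + b
    return (a - b) / total if total != 0 else 0.0


def ndvi(nir, red):
    """Normalized Difference Vegetation Index: (NIR - Red) / (NIR + Red)."""
    return normalized_difference(nir, red)


def ndwi(green, nir):
    """NDWI in the green/NIR form (assumed here): (Green - NIR) / (Green + NIR)."""
    return normalized_difference(green, nir)


# Hypothetical reflectance values for a vegetated pixel and a water pixel.
vegetation_ndvi = ndvi(nir=0.5, red=0.1)
water_ndwi = ndwi(green=0.3, nir=0.1)
print(f"NDVI (vegetation pixel): {vegetation_ndvi:.3f}")
print(f"NDWI (water pixel): {water_ndwi:.3f}")
```

Such indices could be added as further properties of each land cover class alongside the spectral, texture, and shape features already in the ontology.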

Acknowledgments

This research is supported by the National Administration of Surveying, Mapping and Geoinformation, China under the Special Fund for Surveying, Mapping and Geographical Information Scientific Research in the Public Interest (No. 201412014). We also acknowledge the help of the Satellite Surveying and Mapping Application Center, National Administration of Surveying, Mapping and Geo-information (NASG), China, for providing the ZY-3 data.

Author Contributions

This research was mainly performed and prepared by Heng Luo and Lin Li. Heng Luo and Lin Li contributed ideas and conceived and designed the study. Heng Luo wrote the paper. Haihong Zhu supervised the study, and her comments were considered throughout the paper. Xi Kuai and Yu Liu contributed the tools and analyzed the results of the experiment. Zhijun Zhang reviewed and edited the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Andres, S.; Arvor, D.; Pierkot, C. Towards an ontological approach for classifying remote sensing images. In Proceedings of the 8th International Conference on Signal Image Technology & Internet Based Systems (SITIS 2012), Naples, FL, USA, 25 November 2012; pp. 825–832.

- Li, D.; Sui, H.; Shan, J. Discussion on key technologies of geographic national condition monitoring. Geomat. Inf. Sci. Wuhan Univ. 2012, 37, 505–512.

- Zhou, Q.; Sun, B. Analysis of spatio-temporal pattern and driving force of land cover change using multi-temporal remote sensing images. Sci. China Technol. Sci. 2010, 53, 111–119.

- Ahmed, B.; Ahmed, R. Modeling urban land cover growth dynamics using multi-temporal satellite images: A case study of Dhaka, Bangladesh. ISPRS Int. J. Geo-Inf. 2012, 1, 3–31.

- Blaschke, T.; Hay, G.J.; Kelly, M.; Lang, S.; Hofmann, P.; Addink, E.; Feitosa, R.Q.; van der Meer, F.; van der Werff, H.; van Coillie, F.; et al. Geographic object-based image analysis—Towards a new paradigm. ISPRS J. Photogramm. Remote Sens. 2014, 87, 180–191.

- Forestier, G.; Puissant, A.; Wemmert, C.; Gancarski, P. Knowledge-based region labeling for remote sensing image interpretation. Comput. Environ. Urban Syst. 2012, 36, 470–480.

- Baraldi, A.; Boschetti, L. Operational automatic remote sensing image understanding systems: Beyond geographic object-based and object-oriented image analysis (GEOBIA/GEOOIA). Part 1: Introduction. Remote Sens. 2012, 4, 2694–2735.

- Arvor, D.; Durieux, L.; Andres, S.; Laporte, M.A. Advances in geographic object-based image analysis with ontologies: A review of main contributions and limitations from a remote sensing perspective. ISPRS J. Photogramm. Remote Sens. 2013, 82, 125–137.

- Agarwal, P. Contested nature of place: Knowledge mapping for resolving ontological distinctions between geographical concepts. In Geographic Information Science, Proceedings; Egenhofer, M.J., Freksa, C., Miller, H.J., Eds.; Springer-Verlag Berlin: Berlin, Germany, 2004; pp. 1–21.

- Fonseca, F.T.; Egenhofer, M.J.; Agouris, P.; Câmara, G. Using ontologies for integrated geographic information systems. Trans. GIS 2002, 6, 231–257.

- Forestier, G.; Wemmert, C.; Puissant, A. Coastal image interpretation using background knowledge and semantics. Comput. Geosci. 2013, 54, 88–96.

- Durand, N.; Derivaux, S.; Forestier, G.; Wemmert, C.; Gancarski, P.; Boussaid, O.; Puissant, A. Ontology-based object recognition for remote sensing image interpretation. In Proceedings of the 19th IEEE International Conference on Tools with Artificial Intelligence (ICTAI 2007), Patras, Greece, 29–31 October 2007; pp. 472–479.

- Hashimoto, S.; Tadono, T.; Onosato, M.; Hori, M.; Moriyama, T.; IEEE. A Framework of Ontology-Based Knowledge Information Processing for Change Detection in Remote Sensing Data; IEEE: New York, NY, USA, 2011; pp. 3927–3930.

- Jensen, J.R. Remote Sensing of the Environment: An Earth Resource Perspective; Pearson Prentice Hall: Upper Saddle River, NJ, USA, 2009.

- Wang, H.; Li, L.; Mei, Y.; Song, P.C. Concepts' Relationship of Geo-Ontology; Publishing House Electronics Industry: Beijing, China, 2008; pp. 217–221.

- Tonjes, R.; Growe, S.; Buckner, J.; Liedtke, C.E. Knowledge-based interpretation of remote sensing images using semantic nets. Photogramm. Eng. Remote Sens. 1999, 65, 811–821.

- Rejichi, S.; Chaabane, F.; Tupin, F. Expert knowledge-based method for satellite image time series analysis and interpretation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2138–2150.

- Galotti, K.M. Cognitive Psychology in and out of the Laboratory; SAGE: Thousand Oaks, CA, USA, 2013.

- Medin, D.L.; Smith, E.E. Concepts and concept formation. Annu. Rev. Psychol. 1984, 35, 113–138.

- Li, L.; Luo, H.; She, M.Y.; Zhu, H.H. User-oriented image quality assessment of ZY-3 satellite imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4601–4609.

- Song, C.; Woodcock, C.E.; Seto, K.C.; Lenney, M.P.; Macomber, S.A. Classification and change detection using Landsat TM data: When and how to correct atmospheric effects? Remote Sens. Environ. 2001, 75, 230–244.

- Li, L.; Wang, H. Classification of fundamental geographic information based on formal ontology. Geomat. Inf. Sci. Wuhan Univ. 2006, 31, 253–256.

- Puissant, A.; Durand, N.; Sheeren, D.; Weber, C.; Gancarski, P. Urban ontology for semantic interpretation of multi-source images. In Proceedings of the 2nd Workshop Ontologies for Urban Development: Conceptual Models for Practitioners, Castello del Valentino, Turin, Italy, 18 October 2007.

- ITT-Visual Information Solutions. ENVI EX User Guide; ITT-Visual Information Solutions: Boulder, CO, USA, 2010.

- Jansen, L.J.M.; Carrai, G.; Morandini, L.; Cerutti, P.O.; Spisni, A. Analysis of the spatio-temporal and semantic aspects of land-cover/use change dynamics 1991–2001 in Albania at national and district levels. Environ. Monit. Assess. 2006, 119, 107–136.

- Lang, S. Object-based image analysis for remote sensing applications: Modeling reality–dealing with complexity. In Object-Based Image Analysis; Blaschke, T., Lang, S., Hay, G., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 3–27.

- Agarwal, P. Ontological considerations in GIScience. Int. J. Geogr. Inf. Sci. 2005, 19, 501–536.

- Mark, D.; Smith, B.; Egenhofer, M.; Hirtle, S. Ontological foundations for geographic information science. Res. Chall. Geogr. Inf. Sci. 2004, 28, 335–350.

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).