Abstract

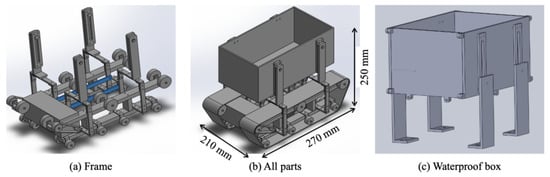

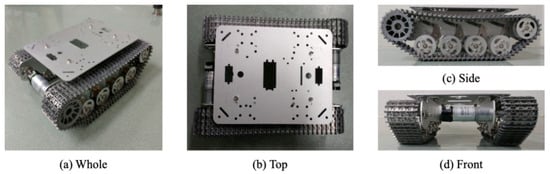

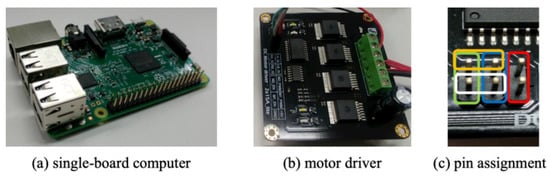

This study was conducted to develop three robot prototypes that navigate mallards to achieve high-efficiency rice-duck farming. We examined two robotic navigation approaches, one based on imprinting and one based on feeding. In the first approach, we applied imprinting to baby mallards, which exhibited following behavior toward our first prototype after imprinting. The experimental observations revealed the importance of imprinting the mallards immediately, within one week after hatching. In the second approach, we placed feed on top of our second prototype. The experimental results showed that adult mallards were wary not only of the robot but also of the feeder. After a habituation period of more than one week relieved this wariness, adult mallards ate feed from the box on the robot. However, they ran away immediately at the slightest movement. Based on these findings, we developed the third prototype as an autonomous mobile robot for mallard navigation in a paddy field. Its body width is less than the spacing between rice stalks. After verifying that the waterproof box enclosing the body was watertight, we conducted an indoor driving test under manual operation. Moreover, we conducted outdoor evaluation tests of running in an actual paddy field. We developed indoor and outdoor image datasets using an onboard monocular camera. For the outdoor image datasets, our segmentation method based on SegNet achieved semantic segmentation for three semantic categories. For the indoor image datasets, our prediction method based on a CNN and LSTM achieved visual prediction for three motion categories.

1. Introduction

With the rapid development of machines and information technologies such as robots, artificial intelligence (AI), and the internet of things (IoT), precision agriculture and smart farming have attracted attention in recent years [1,2,3,4,5,6,7]. Herein, we propose remote farming, a novel agricultural concept that provides fundamental technologies for realizing smart farming. Remote farming emphasizes not only the use of information and communications technology (ICT) to improve production efficiency and reduce costs but also remote robot operation and remote field monitoring. For this study, we intend to develop a small robot that moves autonomously along paths between rice paddies and automatically monitors crop growth, disease infection, and natural enemy attacks.

Existing large agricultural machines demand great effort to ensure safety during locomotion [8]. By contrast, small farming robots can perform close-range crop monitoring and various tasks in complex and narrow areas that large robots cannot easily negotiate. Moreover, a safe robot system reduces damage from collisions with grown crops or with animals such as livestock or working animals [9]. Nevertheless, smaller robots constrain the devices and sensors that can be mounted. For this study, our robot uses a monocular camera to recognize its surroundings and achieve autonomous locomotion as part of a monocular vision-based navigation system [10].

Laser imaging detection and ranging (LiDAR) sensors offer great potential in agriculture for measuring crop volumes and structural parameters [11]. Nevertheless, our robot uses no LiDAR sensor, to reduce the number of parts mounted on the body, the total cost, and the system complexity. Moreover, the field of view (FOV) of LiDAR is narrower than that of a normal camera. The rapid development of image processing methods enhanced with deep-learning (DL) techniques [12] has been accompanied by open-source code libraries released on numerous websites [13]. Therefore, various technologies and approaches for understanding objects, scenes, and their surroundings for autonomous locomotion can be applied to images obtained using an ordinary monocular camera [14].

We consider that time-series FOV images obtained from a frontal monocular camera while moving between rice paddies provide consistent image features, because rice plants line up on both sides of the route. Moreover, we consider that machine learning (ML) algorithms are well suited to processing these image features to accomplish the functions necessary for autonomous locomotion. However, paddy soil conditions change in various ways as the rice plants grow. In a typical Japanese rice crop, a paddy field is flooded to a depth of approximately 300 mm after rice planting in spring. The water depth then decreases as the rice grows in summer, and the field is completely drained for harvesting in autumn.

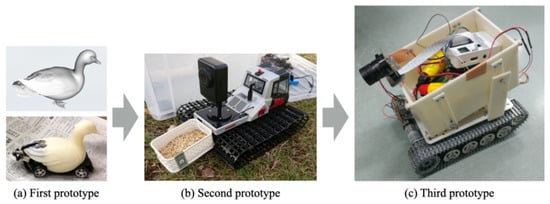

In a paddy field, soil conditions change with the growth of rice plants, the seasons, regional climate characteristics, wind direction and speed, and solar radiation. One important challenge for an autonomous mobile robot in a paddy field is to maintain robustness and adaptability to these environmental changes. We expect that incremental learning of field locomotion images with ML algorithms can effectively improve generalization capability. This study was conducted to develop three robot prototypes that move among rice plants, as depicted in Figure 1. Moreover, this study was conducted to implement and evaluate our proposed method based on time-series learning algorithms for vision-based autonomous locomotion.

Figure 1.

Exterior photos of the respective robot prototypes that move among rice plants for mallard navigation.

The contributions of this paper are as follows:

- investigation of the feasibility of small robots for realizing highly efficient rice-duck farming,

- verification of useful methods to navigate mallards using robot prototypes based on imprinting and feeding,

- construction of image datasets of paths among rice paddies obtained using an onboard monocular camera,

- and demonstration of deep-learning-based segmentation and prediction.

This paper is structured as follows. In Section 2, we briefly review related studies of existing robots for rice-duck farming and of navigation methods. Section 3 presents the first prototype (Figure 1a), used for imprinting-based navigation, and its basic experimental results with baby mallards. Subsequently, Section 4 presents the second prototype (Figure 1b), used for feeding-based navigation, and its basic experimental results with adult mallards. For a fully customized mallard navigation robot, Section 5 and Section 6 respectively present the design of the third prototype (Figure 1c) and the basic results of visual-navigation-based locomotion experiments in an actual paddy field. Finally, Section 7 concludes and highlights future work. We previously presented this basic method, with originally developed sensors, in two proceedings papers [15,16]. This paper describes detailed results and a discussion of the system as a whole.

2. Related Studies

2.1. Rice-Duck Farming

Rice-duck farming is an environmentally friendly rice cultivation method that employs neither chemical fertilizers nor pesticides. Although hybrid ducks are generally used for rice-duck farming, farmers in northern Japan use mallards (Anas platyrhynchos) because of their value as a livestock product. For this study, we specifically examine rice-duck farming using mallards, considering these regional characteristics. Figure 2 depicts rice-duck farming using mallards.

Figure 2.

Rice-duck farming using mallards in northern Japan. To protect the mallards from natural enemies, colored nylon fishing lines are stretched over the paddy field. Paddy rice is eaten by mallards. Mallards often concentrate in a specific area because of their swarming habit. These photographs were taken in Ogata Village, Akita, Japan (40°00′ N, 140°00′ E).

Rice production in Japan has faced increasingly difficult regional competition since the rice acreage reduction policy was abolished in 2018. Rice farmers are required not only to secure food safety for the nation but also to switch to novel rice production styles that consumers demand and that the market consequently rewards. One solution is organic rice production. To reduce the environmental load of agricultural production, organic farming uses no agricultural chemicals, including fertilizers and pesticides. Because it exploits natural production capacity, organic farming has come to be regarded as an effective approach to improving the value of products.

Since ancient times, mallards have been domesticated as poultry for human consumption. Mallards are used not only for rice-duck farming but also for meat, because their meat has little odor. Mallards eat the leaves, stems, seeds, and shells of plants. Providing weeding and pest control, mallards in a paddy field eat aquatic weeds such as Echinochloa esculenta, Cyperus microiria, and Juncus effusus; aquatic insects such as Lissorhoptrus oryzophilus, Sogatella furcifera, Nilaparvata lugens, and Laodelphax striatellus; and river snails. The constant paddling of mallards in a paddy field also has positive effects: the resulting turbid water suppresses photosynthesis of weeds below the surface, which prevents weed growth. Moreover, mallards not only supply nutrients to the growing rice through their feces but also stimulate the rice through contact as they move. A paddy field offers mallards abundant water and life [17]. Moreover, the grown paddy rice can provide refuge from natural enemies.

Organic farming using hybrid ducks or mallards is commonly known as rice-duck farming. Ducks provide weed control and pest control in paddy fields. For example, rice produced by rice-duck farming sells for up to three times the price of rice from conventional farming using agricultural chemicals and fertilizers. Therefore, rice-duck farming is expected to improve the economic stability of farmers. Generally, organic farming without pesticides requires more labor than conventional farming for weed control and cultivation management. In underpopulated regions, especially rural areas with an aging population and labor shortages, rice-duck farming is extremely attractive because ducks substitute for human labor. However, one important shortcoming is that a flock of ducks tends to gather in a specific area, which then becomes a spring pond. No rice grows in such a spring pond because the seedlings are pushed down. Another shortcoming is that weed control is ineffective outside the areas in which ducks actively move. We consider that robotics technologies, especially small robots [18,19], have the potential to overcome these shortcomings. The aim of this study was therefore to develop an autonomous mobile robot that guides mallards; to this end, we developed three robot prototypes that navigate mallards to achieve highly efficient rice-duck farming.

2.2. Rice-Ducking Robots

Yasuda et al. [20] developed a brush-roller paddy weeding robot that floats over a paddy field as an air-cushion vehicle (ACV) using a hovercraft mechanism. For the brush roller, they used a tension member coated with glass fiber and polypropylene. The brush roller, rotating behind the robot, weeded as far as the roots of the rice plants. The blowers that feed air into the skirts and the brush roller were driven by dedicated motors, and a gasoline-engine generator supplied power because the robot had no battery. For locomotion in a large paddy field, they provided not only manual operation but also GPS-based automatic piloting. Their prototype, intended for practical use, could weed 10,000 m² in up to 4 h. However, miniaturization remained important future work because the body (1900 mm long, 1860 mm wide, and 630 mm high) was large, especially for loading onto the light trucks that small-scale farmers in Japan use for transportation. Furthermore, the brush roller using the tension member damaged the rice: the rice yield decreased by up to 30%. Although they considered increasing the number of planted seedlings as an alternative cultivation approach to offset the damage to paddy rice, the production cost and rice quality remained subjects for future work.

To control weed growth by soil agitation, the Gifu Prefectural Research Institute of Information Technology, Japan, developed a small weeding robot named iGAMO [21]. This robot was improved in collaboration with an agricultural machine manufacturer for practical use and dissemination [22]. The main body is 580 mm long, 480 mm wide, and 520 mm high. Two crawlers separated by a 150 mm gap allow the robot to straddle a row of rice plants. The robot achieved autonomous locomotion from rice plant distribution information detected using a near-infrared (IR) camera and two position-sensitive device (PSD) depth sensors. Two 1.41 N·m motors driven by a 180 Wh battery provided continuous operation of up to 3 h with a working efficiency of 1000 m²/h. Moreover, they improved the weeding efficiency using not only the stirring of the crawlers but also metal chains that scrape the soil surface. They compared their robot with a conventional weeding machine and with actual hybrid ducks in several rice paddy fields. The experimental results revealed the amounts of residual weeds and differences in yield and rice grade. Sori et al. [23] proposed a rice paddy weeding robot with paddle wheels instead of crawlers. The main body is 428 mm long, 558 mm wide, and 465 mm high. The two paddle wheels allow the robot to straddle a row of rice plants. The experimental results revealed that their robot increased the number of tillers compared with no weeding.
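The figures quoted for the two weeding robots can be put on a common footing with simple arithmetic. The sketch below derives only ratios of the stated numbers: an average area rate for the hovercraft robot of Yasuda et al. [20] (a lower bound, because 10,000 m² took up to 4 h), and the area per charge and average power draw of iGAMO, assuming that its 180 Wh battery is fully used over the 3 h of operation and ignoring conversion losses. The function names are illustrative, not taken from either study.

```python
# Worked arithmetic on the specifications quoted for Yasuda et al. [20] and
# iGAMO [21,22]. All outputs are simple ratios of the stated figures.

def area_rate_m2_per_h(area_m2: float, hours: float) -> float:
    """Average weeding rate in square meters per hour."""
    return area_m2 / hours

def mean_power_w(battery_wh: float, hours: float) -> float:
    """Average power draw, assuming the whole battery is used over `hours`."""
    return battery_wh / hours

# Hovercraft robot: 10,000 m^2 in up to 4 h, so this is a lower bound on its rate.
hovercraft_rate = area_rate_m2_per_h(10_000, 4)

# iGAMO: 1000 m^2/h for up to 3 h on a 180 Wh battery.
igamo_area_per_charge = 1000 * 3
igamo_mean_power = mean_power_w(180, 3)   # assumption: battery fully drained, no losses

print(f"hovercraft robot: at least {hovercraft_rate:.0f} m^2/h")
print(f"iGAMO: {igamo_area_per_charge} m^2 per charge, about {igamo_mean_power:.0f} W on average")
```

Under these assumptions, the hovercraft robot covers at least 2500 m²/h, whereas iGAMO covers about 3000 m² per charge at an average draw of roughly 60 W.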

Nakai et al. [24] described a small weed-suppression robot. The main body is 400 mm long, 190 mm wide, and 250 mm high. The robot can travel along a 300 mm wide passage, which is the standard rice plant interval in Japan. The robot carries a three-axis manipulator with an iron brush that improves weeding efficiency, combined with crawler-based weeding. For autonomous locomotion, they achieved stable movement in rough paddy fields using a laser range finder (LRF). However, hybrid ducks provide not only weeding but also pest predation and excrement, the latter of which provides nutrients for the rice. Weeding robots provide no such effects. Moreover, hybrid ducks do not move around a paddy field as farmers intend, because they have no awareness of or responsibility for agricultural work. Therefore, improving weeding efficiency, pest control, and nutrient supply from excrement remain challenging tasks. An approach that combines robots and green ducks is positioned as an excellent solution for outdoor cultivation in conventional farming.

Yamada et al. [25] proposed an autonomous mobile robot that navigated hybrid ducks. They conducted an imprinting experiment on baby hybrid ducks using the robot. The robot body is similar in size to an average parent hybrid duck. The robot used crawlers for locomotion in the paddy field. In the imprinting experiment, they placed a baby hybrid duck, 48 h after hatching, in a square box with 300 mm sides and applied 45 min of visual stimulation, repeated six times. They confirmed that the baby hybrid ducks acted according to the robot's behavior patterns. This result demonstrated that imprinting using a robot was possible for baby hybrid ducks. The robot need not resemble parent hybrid ducks in appearance: imprinting occurred on plain quadrangular objects with no pattern. Moreover, they conducted guidance experiments with up to four baby hybrid ducks seven days after hatching. The experimental results revealed the effectiveness of imprinting, comparing feed as a bait reward with the imprinted stimulus itself as a direct reward. Nevertheless, no experiment was conducted in actual paddy fields. In an artificial environment 1500 mm long and 2000 mm wide, the robot merely moved back and forth over an acrylic board. No significant effect of duck calls was found.

Moreover, Yamada et al. [26] noted that, under farmers' practical conditions, about one week has already passed since the baby hybrid ducks hatched. They therefore attempted imprinting on ducklings that had passed the critical period of imprinting and also conducted guidance experiments with feeding. This experiment was conducted in a paddy field. The robot carried a camera, a speaker for sound playback, and two pyroelectric IR sensors at the rear. In addition, a feed port for feed learning was provided at the tail end of the robot; it opened and closed automatically according to the sensor conditions. The pyroelectric IR sensors covered 0.3 m to 0.5 m for near measurements and 0.5 m to 1.5 m for remote measurements to detect objects, including obstacles. Although they performed six 45-min imprinting sessions with two hybrid ducks, they observed no approach behavior toward the autonomous mobile robot after the critical period. However, when a parent bird's call was played from the stationary robot, feed-learned hybrid ducks approached the robot's feed outlet. The results also revealed, as an effect of feed learning, that feed-learned individuals were more likely to follow the robot than unlearned individuals. They inferred that the hybrid ducks learned that the robot was harmless because they had contacted it several times. However, the movement ranges of the robot and hybrid ducks in the evaluation experiment were only about 1.50 m². Compared with an actual paddy field, this experiment demonstrated guidance only within a limited range. The robot guided two hybrid ducks while stopped and one hybrid duck while moving. Therefore, the study did not demonstrate feed-based guidance of hybrid ducks, which exhibit group behavior as a basic habit.

2.3. Animal–Robot Interaction

Animal–robot interaction (ARI) is an extension of human–robot interaction (HRI) and human–robot relations based on ethorobotics, which relies on evolutionary, ecological, and ethological concepts for developing social robots [27,28]. One important factor for ARI is that developers should have full knowledge of animal behaviors and emotions so that a robot can understand the animal's needs sufficiently for natural interaction [29]. Moreover, robot behaviors, such as sudden movements, should be designed with careful consideration of animal ethology [29]. Recently, ARI studies and technologies have formed a relatively novel research field of bio-robotics and are opening up new opportunities for multidisciplinary studies, including biological investigations and bio-inspired engineering design [30]. Numerous ARI studies have been conducted, especially on zebrafish [31,32,33,34,35,36,37], the green bottle fly (Lucilia sericata) [38], squirrels, crabs, honeybees, rats, and other animal species, including studies on interactive bio-robotics [39]. For waterfowl, two representative studies are as follows.

Vaughan et al. [40] developed a mobile sheepdog robot that maneuvers a flock of ducks to a specified goal position. After verifying its basic characteristics through simulations, they evaluated the navigation of 12 ducks to an arbitrary goal position in a physical experimental arena 7 m in diameter. Nevertheless, they reported no specifications, such as the locomotion performance of their robot.

Henderson et al. [41] navigated domestic ducks (Anas platyrhynchos domesticus) using two stimuli: a small mobile vehicle and a walking human. They navigated 37 adult ducks in six flocks on a donut-shaped experimental course. The experimental comparison revealed differences in navigation capability between humans and robots, using the mean latency to return to food as an index.

2.4. Autonomous Locomotion and Navigation

Numerous state-of-the-art robots, including flying robots such as unmanned aerial vehicles (UAVs), have been proposed for autonomous locomotion and navigation. Chen et al. [42] proposed a stable legged walking control strategy based on multi-sensor information feedback for a large-load parallel hexapod wheel-legged robot. They developed a mobile robot with six legs and six wheels for complex terrain environments. The results revealed that their proposed active compliance controllers based on impedance control reduced the contact impact between the foot end and the ground, improving the stability of the robot body. Moreover, they achieved anti-sliding ability by introducing swing-leg retraction, which provided stable walking in complex terrain environments.

Li et al. [43] developed a wheel-legged robot with a flexible lateral control scheme using a cubature Kalman algorithm. They proposed a sliding mode controller enhanced with fuzzy compensation and a preview angle to improve tracking accuracy and robustness. The simulation and experimental results demonstrated that their proposed method achieved satisfactory performance in high-precision trajectory tracking and stability control of their mobile robot.

Chen et al. [44] proposed an exact formulation based on mixed-integer linear programming that fully searches the solution space and produces optimal flight paths for autonomous UAVs. They also designed an original clustering-based algorithm that classifies regions into clusters and obtains approximately optimal point-to-point paths for UAVs. Experimental results with randomly generated regions demonstrated the efficiency and effectiveness of their approach for the coverage path planning problem of autonomous heterogeneous UAVs over a bounded number of regions.

In a paddy field, mallards often concentrate in a specific area because of their swarming habit. Because mallards do not disperse, weeds persist in some areas; mallards do not weed a whole paddy field uniformly. Moreover, a stepping pond forms where mallards have eaten all the paddy rice. As a different approach, some farmers use feed to navigate mallards, but this approach imposes a heavy burden on humans, especially in large paddy fields. Therefore, farmers must weed such areas using a weeding machine. For this study, we considered three navigation approaches for mallards: imprinting, pheromone tracking, and feeding.

Imprinting is a unique behavior observed in nidifugous birds such as ducks, geese, and chickens [45]. Imprinting is an approach-and-follow response to a stimulus first encountered during a short period after hatching. Moreover, imprinting is reinforced by running after and following sounds or moving shadows. For this study, we specifically examined imprinting-based navigation, using a small robot as an imprinting target for baby mallards.

Pheromones are chemicals that are produced inside the body and secreted outside it, where they promote changes in the behavior and development of conspecific individuals [46]. Pheromones are used mainly by insects to communicate with conspecific individuals. Although no pheromone is available to navigate mallards, we considered indirect navigation using insects that mallards favor. Specifically, we devised an indirect method that navigates mallards using insects gathered by a pheromone trap attached to a robot. Although baby mallards attempt to eat insects, adult birds have no interest in them, so we expect the efficiency of this approach to decrease as mallards develop. For this study, we conducted no experiments on pheromone-based navigation because of the difficulty of procedures using insects and the weak overall effects.

In rice-duck farming, breeders use feed to gather ducks. Breeders give ducks only minimal feed because ducks stop eating weeds if given too much. We expect feeding-based navigation to be effective for adult mallards because imprinting can only be performed during the baby mallard period. Yamada et al. [26] tested the effects of feed learning and navigation for hybrid ducks. However, they did not test feed learning for mallard navigation. For this study, we specifically examined a method of navigating mallards using feed combined with a small robot.

Toward self-driving cars, research and development on autonomous locomotion have been conducted actively [47] in the field of automated driving using multiple sensors [48]. Murase et al. [49] applied a convolutional neural network (CNN) in their proposed method for automated automobile driving to improve control precision and accuracy. Their CNN model was trained with on-board camera images and vehicle speeds as system inputs and the amounts of steering, acceleration, and brake operations as system outputs. The experimental results demonstrated the effectiveness of combining time-series images and CNNs for automated driving. Although the method proved useful under weather and other changes, its evaluation on video datasets remained limited to simulation.

Kamiya et al. [50] attempted to estimate car motion patterns in video images from a first-person view (FPV) [51] using a recurrent convolutional neural network (RCNN) [52]. They compared the estimation accuracies of three RCNN models trained with three input patterns: color images, dynamic vector images obtained from optical flow features, and both. Evaluation experiments targeting four behavior prediction patterns, namely moving forward, turning right, turning left, and moving backward, demonstrated the usefulness of switching input features to suit the characteristics of the driving scenario.

Xu et al. [53] proposed a generic vehicle motion model with an end-to-end trainable architecture that predicts a distribution over future vehicle ego-motion from instantaneous monocular camera observations and previous vehicle states. They evaluated their model on the Berkeley DeepDrive Video dataset (BDDV) [54] to predict four driving actions: straight, stop, turn left, and turn right. Their proposed model achieved higher accuracy than existing state-of-the-art DL-based prediction models.

As described above, various studies using DL-based methods for understanding, estimating, and predicting semantics from video images for automated driving have been actively conducted. Nevertheless, no report in the relevant literature has described a feasible means of realizing small farming robots that move autonomously in a field based on DL and visual processing. Moreover, studies of mobile robots that infer behavior patterns from time-series images in fields with complex surface conditions have made little progress. Therefore, exploring the applicability of DL technologies to a small robot that moves autonomously in a paddy field remains a challenge [55].

For this study, we assume that our mobile robot prototypes operate in a paddy field filled with water to a depth of 200 mm. In a paddy field, the robot encounters locomotion difficulties because the ground is muddy, rough, and underwater. The robot must travel between rice plants along passages up to approximately 300 mm wide. Moreover, stems and leaves spread as the rice plants grow. Therefore, we restrict the robot body to approximately the size of a sheet of A4 paper.

6. Autonomous Locomotion Experiment

6.1. Outdoor Experiment

This experiment was conducted outdoors in an actual paddy field to verify the basic locomotion capabilities of our robot and to evaluate the semantic segmentation accuracy for FOV time-series images obtained using the onboard camera.

6.1.1. Locomotion among the Rice Plants

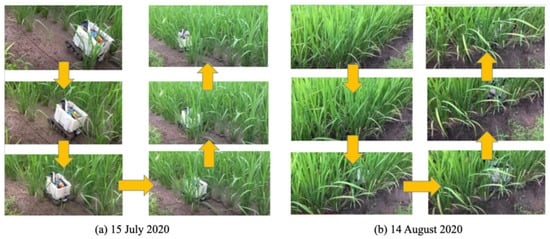

Figure 14 depicts our robot's locomotion on two dates. The time-series images in Figure 14a depict the paddy condition two months after rice planting, when the rice plants had grown to approximately 300 mm. Because the rice plants were sparse, the robot moved easily among them.

Figure 14.

Robot locomotion on two days.

Figure 14b depicts the paddy condition three months after rice planting, when the rice plants had grown to approximately 500 mm. The robot is only partially visible through small gaps in the rice plants. The radius of each bunch of rice plants is approximately 30 mm. The mean margin was 140 mm wide because the robot was 200 mm wide and the passage was 300 mm wide. This margin did not allow the robot to meander even slightly. In this experiment, forward movement was the most common locomotion pattern. When the robot drifted to the left or right, the operation was switched to moving backward rather than steering in the opposite direction back to the proper path.

Under these conditions, we obtained time-series FOV images, as depicted in Figure 15, using the onboard monocular camera (Raspberry Pi High Quality Camera; Raspberry Pi Foundation) with a CMOS imaging sensor (IMX477R; Sony Corp., Tokyo, Japan). The maximum image resolution is 4056 × 3040 pixels. To reduce processing costs and memory size, we obtained images at a resolution of 1016 × 760 pixels.
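The reduction from the sensor's maximum resolution to 1016 × 760 pixels is roughly a factor of four along each axis, so each frame carries about one sixteenth of the pixels. The sketch below makes that arithmetic explicit. It assumes uncompressed 8-bit RGB frames (3 bytes per pixel) purely for the memory estimate, because the storage format used on the robot is not stated.

```python
# Pixel-count and memory comparison between the maximum sensor resolution and
# the resolution used for the FOV datasets.

FULL = (4056, 3040)     # maximum resolution stated for the camera
REDUCED = (1016, 760)   # resolution used for the FOV time-series images
BYTES_PER_PIXEL = 3     # assumption: uncompressed 8-bit RGB

def pixels(size):
    """Number of pixels in a (width, height) frame."""
    width, height = size
    return width * height

full_px = pixels(FULL)
reduced_px = pixels(REDUCED)
scale_w = FULL[0] / REDUCED[0]   # horizontal reduction factor
scale_h = FULL[1] / REDUCED[1]   # vertical reduction factor
ratio = full_px / reduced_px     # how many times fewer pixels per frame

print(f"full:    {full_px:,} px, {full_px * BYTES_PER_PIXEL / 1e6:.1f} MB per frame")
print(f"reduced: {reduced_px:,} px, {reduced_px * BYTES_PER_PIXEL / 1e6:.1f} MB per frame")
print(f"scale factors: {scale_w:.2f} x {scale_h:.2f}, pixel ratio {ratio:.1f}")
```

Under the RGB assumption, a full-resolution frame occupies about 37.0 MB uncompressed versus about 2.3 MB at the reduced resolution, a ratio of roughly 16.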

Figure 15.

Time-series FOV images obtained using an onboard monocular camera.

6.1.2. Segmentation Results

Kirillov et al. [56] divided image segmentation tasks into two types: semantic segmentation and instance segmentation. Semantic segmentation treats thing classes as stuff, that is, as amorphous and uncountable regions. Instance segmentation detects each object and delineates it with a bounding box or segmentation mask. For this study, our segmentation targets comprise the ground, paddy, and other categories, all of which belong to stuff. Numerous semantic segmentation methods have been proposed, such as PSPNet, ICNet, and PSANet by Zhao et al. [57,58,59], ESPNet by Mehta et al. [60], MaskLab and DeepLabv3+ by Chen et al. [61,62], AdaptSegNet by Tsai et al. [63], Auto-DeepLab by Liu et al. [64], Gated-SCNN by Takikawa et al. [65], RandLA-Net by Hu et al. [66], and PolarNet by Zhang et al. [67]. For this study, we used SegNet [68] to segment the time-series FOV images because this classical method is well established for segmenting road scene images such as those in the CamVid database [69].
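SegNet's decoder differs from many encoder-decoder networks mainly in how it upsamples: each decoder stage reuses the max-pooling indices stored by the corresponding encoder stage, so that boundary locations are restored without learning the upsampling. The sketch below illustrates only that mechanism on one small single-channel map in pure Python. The convolution layers and final softmax classifier of the real network are omitted, and the helper names are hypothetical; this is not the training setup used in this study.

```python
# Pooling-index mechanism of a SegNet-style decoder, on a single 2D channel.
from typing import List, Tuple

Grid = List[List[float]]
Indices = List[List[Tuple[int, int]]]

def max_pool_2x2_with_indices(x: Grid) -> Tuple[Grid, Indices]:
    """2x2 max pooling with stride 2; also returns where each maximum came from."""
    h, w = len(x), len(x[0])
    pooled, indices = [], []
    for i in range(0, h - h % 2, 2):
        row_vals, row_idx = [], []
        for j in range(0, w - w % 2, 2):
            window = [(x[a][b], (a, b)) for a in (i, i + 1) for b in (j, j + 1)]
            value, pos = max(window, key=lambda t: t[0])
            row_vals.append(value)
            row_idx.append(pos)
        pooled.append(row_vals)
        indices.append(row_idx)
    return pooled, indices

def max_unpool_2x2(pooled: Grid, indices: Indices, out_h: int, out_w: int) -> Grid:
    """Write each pooled value back to its remembered position; zeros elsewhere.
    In SegNet, trainable convolutions then densify this sparse map."""
    out = [[0.0] * out_w for _ in range(out_h)]
    for r, row in enumerate(pooled):
        for c, value in enumerate(row):
            a, b = indices[r][c]
            out[a][b] = value
    return out

feature_map = [
    [1.0, 3.0, 2.0, 0.0],
    [4.0, 2.0, 1.0, 5.0],
    [0.0, 1.0, 7.0, 2.0],
    [6.0, 2.0, 3.0, 1.0],
]
pooled, idx = max_pool_2x2_with_indices(feature_map)
restored = max_unpool_2x2(pooled, idx, 4, 4)
print(pooled)     # [[4.0, 5.0], [6.0, 7.0]]
print(restored)   # maxima back in their original cells, zeros elsewhere
```

Storing only the argmax positions keeps the decoder lightweight, which is one reason SegNet is often used for road-scene segmentation on modest hardware.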

Table 2 lists the numbers of images assigned to training, validation, and testing. We annotated all pixels of 1160 images. The annotation labels comprise three types: ground pixels, paddy pixels, and other pixels.

Table 2.

Numbers of images assigned to training, validation, and testing [images].

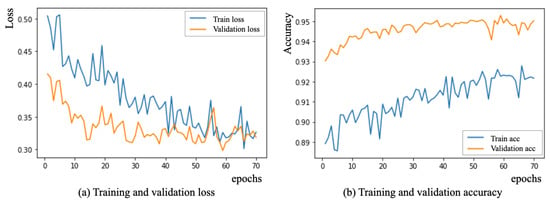

Figure 16 depicts the loss and accuracy curves for the learning processes. Both training and validation losses converged as the number of epochs increased. Similarly, both training and validation accuracies converged. Comparing the two, the validation accuracy is higher than the training accuracy. We attribute this reversal to the ratio of training images to validation images listed in Table 2.

Figure 16.

Loss and accuracy curves in the learning processes.

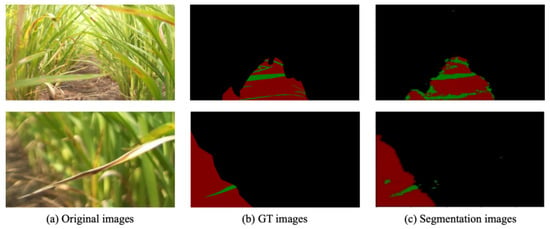

Figure 17 depicts two examples of segmentation results with SegNet. The left, center, and right panels respectively represent the original images, ground truth (GT) images, and segmentation images. Locally, the images include several false positive and false negative pixels, especially near category boundaries. Overall, the segmentation images are consistent with the GT images.

Figure 17.

Segmentation results with SegNet.

Table 3 presents detailed segmentation results for each category. The accuracies for the ground, paddy, and other pixels are 99.1%, 92.4%, and 44.8%, respectively. The mean accuracy over all pixels is 97.0%, which corresponds to an average weighted by the number of pixels in each category; the unweighted average of the three category accuracies is 78.8%. The other pixels have only a slight effect on the overall accuracy because they occupy merely 1.5% (=110,838/7,257,600) of the total pixels.
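To make the difference between a pixel-weighted accuracy and an unweighted class average concrete, the sketch below computes both from a confusion matrix. The matrix values are hypothetical toy counts, not the data of Table 3, and the function name is illustrative.

```python
from typing import Dict, List


def segmentation_metrics(confusion: List[List[int]], labels: List[str]) -> Dict[str, float]:
    """confusion[i][j] = number of pixels whose GT class is i and whose predicted class is j."""
    metrics: Dict[str, float] = {}
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(len(labels)))
    class_acc = []
    for i, name in enumerate(labels):
        gt_pixels = sum(confusion[i])  # all pixels that truly belong to class i
        acc = confusion[i][i] / gt_pixels if gt_pixels else 0.0  # per-class accuracy
        metrics[f"acc_{name}"] = acc
        class_acc.append(acc)
    metrics["pixel_accuracy"] = correct / total                  # weighted by pixel count
    metrics["class_average"] = sum(class_acc) / len(class_acc)   # each class counts equally
    return metrics


labels = ["ground", "paddy", "other"]
toy_confusion = [  # hypothetical counts: rows = GT class, columns = predicted class
    [90, 8, 2],
    [5, 85, 10],
    [1, 3, 6],
]
m = segmentation_metrics(toy_confusion, labels)
```

With these toy counts, the rare and poorly segmented other class pulls the class average (about 0.78) far below the pixel accuracy (about 0.86).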

Table 3.

Segmentation results [pixels].

6.2. Indoor Experiment

This experiment was conducted indoors on an imitation paddy field. It evaluates how accurately the locomotion behavior of the robot can be predicted for navigation by visually processing time-series FOV images captured with the onboard camera.

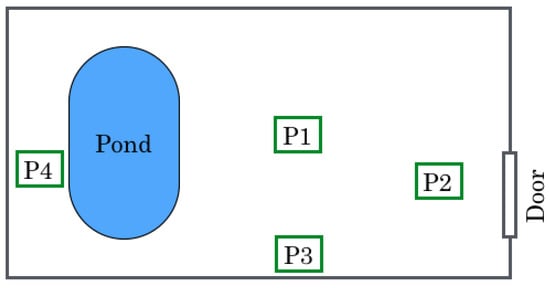

6.2.1. Experiment Setup

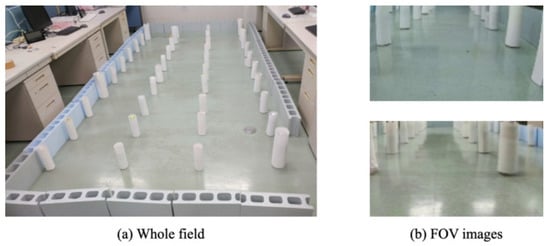

Figure 18 depicts the field built to imitate a paddy field. The field is 2.0 m wide and 4.4 m long. The white objects are imitation rice plants made from plastic bottles covered with white paper. The intervals between the objects in the longitudinal and lateral directions are 0.6 m and 0.4 m, respectively, i.e., twice the corresponding intervals of 0.3 m and 0.2 m in an actual paddy field.

Figure 18.

Experiment field resembling a paddy field for obtaining FOV time-series images from our robot.

Unlike an actual muddy field, this experimental field provided steady locomotion because of the sufficiently high friction between the floor surface and the robot crawlers. Consequently, images annotated as moving forward tended to dominate. To obtain a sufficient number of images annotated as right and left turns, we offset the initial heading of the robot from the direction of travel and shifted its initial position away from the centerline between the rows of rice plants.

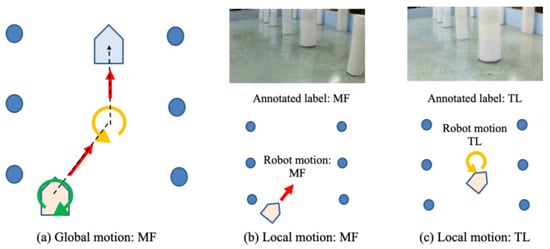

Considering the robot speed and the annotation workload, we reduced the image sampling rate from 60 fps to 10 fps. We annotated all images manually. Table 4 presents the number of obtained images and the details of the three behavior categories: move forward (MF), turn right (TR), and turn left (TL).
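The text does not state how frames were dropped when reducing the sampling rate. Assuming uniform decimation, converting 60 fps to 10 fps keeps one frame out of every six. A minimal sketch with a hypothetical helper name:

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def subsample_frames(frames: Sequence[T], src_fps: int = 60, dst_fps: int = 10) -> List[T]:
    """Keep every (src_fps // dst_fps)-th frame; assumes src_fps is a multiple of dst_fps."""
    if dst_fps <= 0 or src_fps % dst_fps != 0:
        raise ValueError("src_fps must be a positive integer multiple of dst_fps")
    step = src_fps // dst_fps  # 60 fps -> 10 fps keeps one frame out of every 6
    return list(frames[::step])


one_second = list(range(60))         # stand-in for the frame numbers of 1 s of 60 fps video
kept = subsample_frames(one_second)  # frame numbers 0, 6, 12, ..., 54
```

Under the same assumption, the 5 fps condition of E2 corresponds to a step of 12 frames relative to the 60 fps source.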

Table 4.

Numbers of obtained images for the three behavior categories.

We obtained 4626 images in total: 2419 MF, 1347 TR, and 860 TL images. MF accounts for the largest share (52.3%) and TL for the smallest (18.6%). At each end of the experimental field, the robot turned right to move to the next path between the rows of rice plants. These turns increased the number of TR images relative to that of TL images.
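The class ratios quoted above follow directly from the counts. The sketch below also derives inverse-frequency class weights, a common way to counter such imbalance in a classification loss; these weights are a hypothetical option and were not used in the experiments reported here.

```python
from typing import Dict


def class_statistics(counts: Dict[str, int]) -> Dict[str, Dict[str, float]]:
    """Return each class's share of the data and a hypothetical inverse-frequency weight."""
    total = sum(counts.values())
    n_classes = len(counts)
    stats: Dict[str, Dict[str, float]] = {}
    for name, n in counts.items():
        stats[name] = {
            "ratio": n / total,
            # Inverse-frequency weight: every class would get 1.0 if the data were balanced.
            "weight": total / (n_classes * n),
        }
    return stats


counts = {"MF": 2419, "TR": 1347, "TL": 860}  # image counts reported in the text
stats = class_statistics(counts)
```

With these counts, TR accounts for 29.1% of the images, and a TL image would receive about 2.8 times the weight of an MF image.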

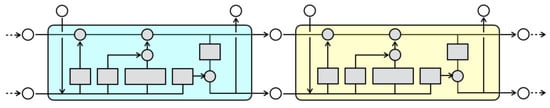

Table 5 shows the fundamental parameters of our network model, which combines a CNN with a long short-term memory (LSTM) [70] network. Figure 19 depicts the network structure of the LSTM, in which repeating modules are connected in cascade.

Table 5.

Set values of fundamental network parameters (E1).

Figure 19.

Network structure of LSTM.
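The cascade of repeating modules can be illustrated with a minimal LSTM cell in pure Python, using the widely used formulation with a forget gate. The same cell is applied step by step over a look-back window of feature vectors (for example, CNN features of consecutive FOV frames). The weights are random and untrained, and all names and sizes here are hypothetical rather than the configuration in Table 5.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = List[float]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class LSTMCell:
    """Minimal LSTM cell with input, forget, and output gates (random, untrained weights)."""

    GATES = ("input", "forget", "output", "candidate")

    def __init__(self, input_size: int, hidden_size: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.hidden_size = hidden_size
        # One weight row per hidden unit over [x_t, h_{t-1}, 1]; the last column is the bias.
        width = input_size + hidden_size + 1
        self.w = {
            g: [[rng.uniform(-0.1, 0.1) for _ in range(width)] for _ in range(hidden_size)]
            for g in self.GATES
        }

    def _affine(self, gate: str, z: Vector) -> Vector:
        return [sum(wi * zi for wi, zi in zip(row, z)) for row in self.w[gate]]

    def step(self, x: Vector, h: Vector, c: Vector) -> Tuple[Vector, Vector]:
        z = list(x) + list(h) + [1.0]
        i = [sigmoid(a) for a in self._affine("input", z)]
        f = [sigmoid(a) for a in self._affine("forget", z)]
        o = [sigmoid(a) for a in self._affine("output", z)]
        g = [math.tanh(a) for a in self._affine("candidate", z)]
        # The cell state carries long-term memory; the hidden state is its gated output.
        c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
        h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
        return h_new, c_new

    def run(self, sequence: Sequence[Vector]) -> Vector:
        """Apply the same cell over a look-back window and return the last hidden state."""
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        for x in sequence:
            h, c = self.step(x, h, c)
        return h


cell = LSTMCell(input_size=4, hidden_size=3)
window = [[0.1, 0.2, 0.3, 0.4], [0.0, -0.1, 0.2, 0.5]]  # hypothetical features of 2 frames
h_last = cell.run(window)
```

A longer look-back window simply means more applications of the same cell before the final hidden state is passed to the classifier.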

The filter size is 5 × 5 for the first convolutional layer and 3 × 3 for the second through fifth convolutional layers. The pooling width of each pooling layer is 2 × 2. The number of hidden layers is a dominant parameter of the LSTM.

Letting and respectively represent the learning coefficient and the batch size, and letting be the look-back parameter, the dimension of the LSTM, i.e., the length of its long-term memory, is controlled by . To optimize the network, we employed the Adam algorithm [71], a stochastic gradient descent (SGD) method based on adaptive estimates of low-order moments. The default values of , , and were set, respectively, to 0.01, 2 [72], and 50 [73]. The ratio of training data to validation data is 80:20.
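Adam keeps exponential moving averages of the gradient (first moment) and of its square (second moment), corrects their initialization bias, and scales each step accordingly. The scalar sketch below minimizes a toy quadratic. It assumes that the default value 0.01 listed above is the learning coefficient and uses the β1 = 0.9, β2 = 0.999, and ε = 10^-8 defaults suggested by Kingma and Ba [71], which may differ from the settings in this study; the function name is hypothetical.

```python
import math
from typing import Callable


def adam_minimize(grad: Callable[[float], float], x0: float, lr: float = 0.01,
                  beta1: float = 0.9, beta2: float = 0.999, eps: float = 1e-8,
                  steps: int = 3000) -> float:
    """Scalar Adam: each step is scaled by bias-corrected first and second moment estimates."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g      # first moment: moving mean of gradients
        v = beta2 * v + (1 - beta2) * g * g  # second moment: moving mean of squared gradients
        m_hat = m / (1 - beta1 ** t)         # bias correction for the zero initialization
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


# Toy objective f(x) = (x - 3)^2 with gradient 2(x - 3); the minimum is at x = 3.
x_min = adam_minimize(lambda x: 2 * (x - 3), x0=0.0)
```

Because the update divides by the root of the second moment, the early steps have a magnitude close to the learning coefficient regardless of the gradient scale.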

The parameter values that maximize behavior prediction accuracy vary among datasets. We therefore conducted the following nine experiments, labeled E1–E9, with different combinations of parameters and setting values. Considering the computational burden and time, we set the number of training epochs to 100 for all experiments.

- E1: The initial network model with the default parameter values.
- E2: The sampling frame rate is changed from 10 fps to 5 fps.
- E3: is changed from 2 to 5.
- E4: is changed from 50 to 10.
- E5: The input-layer depth is changed from 3 to 6 dimensions to accept SegNet segmentation images in addition to the original images.
- E6: A dropout layer that disables 25% of the connections between the first and second convolutional layers is added between them.
- E7: A new dropout layer that disables 25% of the connections is added to each layer after the second convolutional layer.
- E8: A new pathway is added to bypass the third and fourth convolutional layers.
- E9: The pooling width of the pooling layers is changed to 4 × 4.
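E6 and E7 add dropout layers that disable 25% of the connections during training. The dropout variant of the framework used is not stated; the following sketch assumes the common inverted form, which zeroes each activation with probability 0.25 and rescales the survivors so that the expected activation is unchanged and no rescaling is needed at inference. The function name is hypothetical.

```python
import random
from typing import List, Optional


def dropout(activations: List[float], rate: float = 0.25, training: bool = True,
            rng: Optional[random.Random] = None) -> List[float]:
    """Inverted dropout: zero each unit with probability `rate`, scale survivors by 1/(1-rate)."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)  # at inference time the layer is the identity
    if rng is None:
        rng = random.Random()
    keep = 1.0 - rate
    return [a / keep if rng.random() >= rate else 0.0 for a in activations]


out = dropout([1.0] * 100_000, rate=0.25, rng=random.Random(42))
zero_fraction = out.count(0.0) / len(out)  # close to 0.25
mean_activation = sum(out) / len(out)      # close to 1.0 thanks to the rescaling
```

Zeroing a unit removes all of its outgoing connections for that training step, which is how "disabling 25% of the connections" is usually realized.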

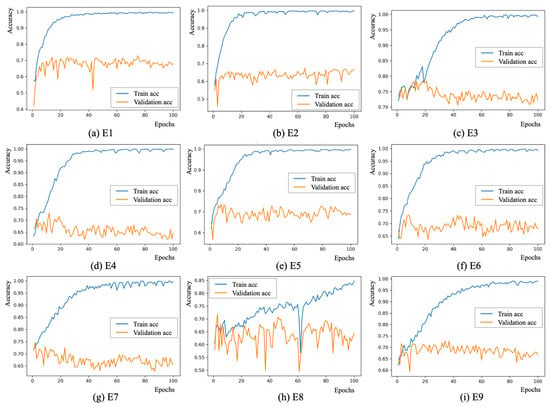

6.2.2. Training Results

Figure 20 depicts the transitions of the training and validation accuracies. For the training datasets, the global accuracy improved steadily. In particular, E1, E2, E4, E5, and E6 converged at around the 20th epoch, and E3, E7, and E9 at around the 40th epoch. In contrast, E8 converged slowly, with a marked drop at around the 60th epoch. Convergence on the validation datasets is poorer than on the training datasets: the validation accuracy curves saturate at around 0.70.

Figure 20.

Transitions of training and validation accuracies.

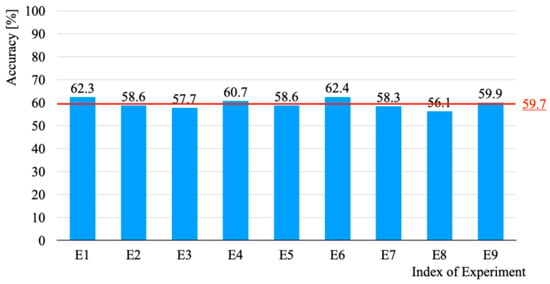

6.2.3. Locomotion Prediction Results

Figure 21 presents the prediction results of each experiment. E6 achieved the highest accuracy (62.4%), whereas E8 yielded the lowest (56.1%), a difference of 6.3 percentage points. The accuracies of E1, E4, E6, and E9 exceeded the mean accuracy of 59.7% over the nine experiments.

Figure 21.

Locomotion prediction results for nine experimental conditions.

Table 6 presents the prediction accuracy for each locomotion pattern. MF achieved the highest accuracy (81.6%), whereas TL had the lowest (25.8%).

Table 6.

Locomotion prediction accuracy.

6.2.4. Analysis and Discussion

The uneven numbers of images are attributable to a limitation of the data collection method related to the locomotion mechanism of the robot. Figure 22 portrays an example of a turning path. Although the robot is turning left globally, MF is selected locally and briefly because the robot must return to the correct path. The upper-middle panel depicts an FOV image of the robot moving towards the rice plants, which is annotated as MF instead of TL. The accumulation of such situations increased the number of images annotated as MF.

Figure 22.

Inconsistency between the actual purpose of robot motion and annotated labels.

To predict global locomotion patterns, we consider it necessary for the robot, as an autonomous agent [75], to understand the scene context [74]. Moreover, more detailed annotation labels could be assigned, such as “moving forward along the center of a route between rice plants” and “adjusting locomotion toward the center of a route”. However, assigning such complex labels manually is unrealistic. Therefore, we consider implementing an automatic annotation function that recognizes the scene context.

7. Conclusions

This paper presented small robots toward the realization of remote farming. Aiming at highly efficient rice-duck farming, we experimentally examined methods to navigate mallards with robot prototypes based on imprinting and feeding. The experimental results revealed that baby mallards with imprinting followed our first prototype. We considered that adult mallards require more than two days to become accustomed to the robot and the feeder because they are strongly wary of unknown objects. Although we did not achieve mallard navigation in an actual field, we found that mallards ate feed from the feeder on our second prototype. Based on this preliminary result, we developed our third prototype as a fully customized mobile robot for mallard navigation. We conducted an indoor driving test to assess manual operation and outdoor evaluation tests of running on an actual paddy field. We developed indoor and outdoor image datasets using an onboard monocular camera. For the outdoor image datasets, our segmentation method based on SegNet achieved a mean segmentation accuracy of 97.0% for three semantic categories. For the indoor image datasets, our prediction method based on a CNN and an LSTM achieved a mean prediction accuracy of 59.7% for three motion categories over nine experimental conditions.

As future work, we plan to quantify the imprinting effects of the time that the robot and mallards share during rearing. We also plan to conduct navigation experiments in a paddy field with baby mallards after imprinting, using several types of feed. For autonomous locomotion, we will implement motor control with a feedback mechanism, and we intend to combine autonomous locomotion with navigation based on imprinting and feeding. We also need to analyze the trajectories of baby mallards statistically. To avoid overfitting caused by overtraining, we will reconsider the loss function so that the training and validation processes converge. We intend to improve the segmentation and prediction accuracies toward fully autonomous locomotion in actual paddy fields of various types. Finally, we would like to analyze the experimentally obtained results statistically based on the concept of ARI.

Author Contributions

Conceptualization, S.Y.; methodology, H.W.; software, H.M.; validation, S.N.; formal analysis, K.S.; investigation, S.Y.; resources, Y.N.; data curation, H.W.; writing—original draft preparation, H.M.; writing—review and editing, H.M.; visualization, S.N.; supervision, K.S.; project administration, Y.N.; funding acquisition, H.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Japan Society for the Promotion of Science (JSPS) KAKENHI Grant Numbers 17K00384 and 21H02321.

Institutional Review Board Statement

The study was conducted according to the guidelines approved by the Institutional Review Board of Akita Prefectural University (Kendaiken–91; 27 April 2018).

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets presented in this study are available on request from the corresponding author.

Acknowledgments

We would like to show our appreciation to Takuma Watanabe and Mizuho Kurosawa, who are graduates of Akita Prefectural University, for their great cooperation in the experiments.

Conflicts of Interest

The authors declare that they have no conflicts of interest. The funders had no role in the design of the study, in the collection, analyses, or interpretation of data, in the writing of the manuscript, or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| ABS | Acrylonitrile butadiene styrene |
| ACVs | Air-cushion vehicles |
| AI | Artificial intelligence |
| ARI | Animal–robot interaction |
| BDDV | Berkeley DeepDrive video dataset |
| CNN | Convolutional neural network |
| DL | Deep learning |
| FOV | Field of view |
| FPV | First-person view |
| GPIO | General-purpose input and output |
| GT | Ground truth |
| HRI | Human–robot interaction |
| ICT | Information and communications technology |
| IoT | Internet of things |
| IP | Internet protocol |
| IR | Infrared |
| LiDAR | Laser imaging detection and ranging |
| LRF | Laser range finder |
| LSTM | Long short-term memory |
| MF | Move forward |
| PWM | Pulse width modulation |
| RCNN | Recurrent convolutional neural network |
| SGD | Stochastic gradient descent |
| TL | Turn left |
| TR | Turn right |
| UAV | Unmanned aerial vehicles |

References

- Wolfert, S.; Ge, L.; Verdouw, C.; Bogaardt, M.J. Big Data in Smart Farming: A Review. Agric. Syst. 2017, 153, 69–80.

- Farooq, M.S.; Riaz, S.; Abid, A.; Abid, K.; Naeem, M.A. Survey on the Role of IoT in Agriculture for the Implementation of Smart Farming. IEEE Access 2019, 7, 156237–156271.

- Abbasi, M.; Yaghmaee, M.H.; Rahnama, F. Internet of Things in agriculture: A survey. In Proceedings of the Third International Conference on Internet of Things and Applications (IoT), Isfahan, Iran, 17–18 April 2019; pp. 1–12.

- Gia, T.N.; Qingqing, L.; Queralta, J.P.; Zou, Z.; Tenhunen, H.; Westerlund, T. Edge AI in Smart Farming IoT: CNNs at the Edge and Fog Computing with LoRa. In Proceedings of the IEEE AFRICON 2019, Accra, Ghana, 25–27 September 2019; pp. 1–6.

- Alreshidi, E. Smart Sustainable Agriculture (SSA) Solution Underpinned by Internet of Things (IoT) and Artificial Intelligence (AI). Int. J. Adv. Comput. Sci. Appl. 2019, 10, 93–102.

- Dahane, A.; Benameur, R.; Kechar, B.; Benyamina, A. An IoT Based Smart Farming System Using Machine Learning. In Proceedings of the International Symposium on Networks, Computers and Communications, Montreal, QC, Canada, 20–22 October 2020; pp. 1–6.

- Balafoutis, A.T.; Evert, F.K.V.; Fountas, S. Smart Farming Technology Trends: Economic and Environmental Effects, Labor Impact, and Adoption Readiness. Agronomy 2020, 10, 743.

- Yaghoubi, S.; Akbarzadeh, N.A.; Bazargani, S.S.; Bamizan, M. Autonomous Robots for Agricultural Tasks and Farm Assignment and Future Trends in Agro Robots. Int. J. Mech. Mechatron. Eng. 2017, 13, 1–6.

- Noguchi, N.; Barawid, O.C. Robot Farming System Using Multiple Robot Tractors in Japan Agriculture. In Proceedings of the 18th World Congress of the International Federation of Automatic Control, Milano, Italy, 28 August–2 September 2011; pp. 633–637.

- Chang, C.K.; Siagian, C.; Itti, L. Mobile Robot Monocular Vision Navigation Based on Road Region and Boundary Estimation. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Algarve, Portugal, 7–12 October 2012; pp. 1043–1050.

- Yandun, F.; Narvaez, F.Y.; Reina, G.; Torres-Torriti, M.; Kantor, G.; Cheein, F.A. A Survey of Ranging and Imaging Techniques for Precision Agriculture Phenotyping. IEEE/ASME Trans. Mechatron. 2017, 22, 2428–2439.

- Niitani, Y.; Ogawa, T.; Saito, S.; Saito, M. ChainerCV: A Library for Deep Learning in Computer Vision. In Proceedings of the 25th ACM International Conference on Multimedia, Mountain View, CA, USA, 23–27 October 2017; pp. 1217–1220.

- Bradski, G.; Kaehler, A.; Pisarevsky, V. Learning-Based Computer Vision with Intel’s Open Source Computer Vision Library. Intel Technol. J. 2005, 9, 119–130.

- Madokoro, H.; Woo, H.; Nix, S.; Sato, K. Benchmark Dataset Based on Category Maps with Indoor–Outdoor Mixed Features for Positional Scene Recognition by a Mobile Robot. Robotics 2020, 9, 40.

- Madokoro, H.; Yamamoto, S.; Woo, H.; Sato, K. Mallard Navigation Using Unmanned Ground Vehicles, Imprinting, and Feeding. In Proceedings of the International Joint Conference on JSAM and SASJ and 13th CIGR VI Technical Symposium Joining FWFNWG and FSWG Workshops, Sapporo, Japan, 3–6 September 2019; pp. 1–8.

- Watanabe, T.; Madokoro, H.; Yamamoto, S.; Woo, H.; Sato, K. Prototype Development of a Mallard Guided Robot. In Proceedings of the 19th International Conference on Control, Automation and Systems, Jeju, Korea, 15–18 October 2019; pp. 1566–1571.

- Pernollet, C.A.; Simpson, D.; Gauthier-Clerc, M.; Guillemain, M. Rice and Duck, A Good Combination? Identifying the Incentives and Triggers for Joint Rice Farming and Wild Duck Conservation. Agric. Ecosyst. Environ. 2015, 214, 118–132.

- Ball, D.; Ross, P.; English, A.; Patten, T.; Upcroft, B.; Fitch, R.; Sukkarieh, S.; Wyeth, G.; Corke, P. Robotics for Sustainable Broad-Acre Agriculture. In Field and Service Robotics; Springer: Cham, Switzerland, 2015; Volume 105, pp. 439–453.

- Velasquez, A.E.B.; Higuti, V.A.H.; Guerrero, H.B.; Becker, M. HELVIS: A Small-scale Agricultural Mobile Robot Prototype for Precision Agriculture. In Proceedings of the 13th International Conference on Precision Agriculture, St. Louis, MO, USA, 31 July–3 August 2016; Volume 1, pp. 1–18.

- Yasuda, K.; Takakai, F.; Kaneta, Y.; Imai, A. Evaluation of Weeding Ability of Brush-Roller Type Paddy Weeding Robot and Its Influence on the Rice Growth. J. Weed Sci. Technol. 2017, 62, 139–148.

- Mitsui, T.; Kagiya, T.; Ooba, S.; Hirose, T.; Kobayashi, T.; Inaba, A. Development of a Small Rover (AIGAMO ROBOT) to Assist Organic Culture in Paddy Fields: Field Experiment Using Robot for Weeding in 2007. In Proceedings of the JSME Annual Conference on Robotics and Mechatronics, Nagoya, Japan, 5–7 June 2008; pp. 1–3.

- Fujii, K.; Tabata, K.; Yokoyama, T.; Kutomi, S.; Endo, Y. Development of a Small Weeding Robot “AIGAMO ROBOT” for Paddy Fields. Tech. Rep. Gifu Prefect. Res. Inst. Inf. Technol. 2015, 17, 48–51.

- Sori, H.; Inoue, H.; Hatta, H.; Ando, Y. Effect for a Paddy Weeding Robot in Wet Rice Culture. J. Robot. Mechatron. 2018, 30, 198–205.

- Nakai, S.; Yamada, Y. Development of a Weed Suppression Robot for Rice Cultivation: Weed Suppression and Posture Control. Int. J. Electr. Comput. Energetic, Electron. Commun. Eng. 2014, 8, 1879–1883.

- Yamada, Y.; Yamauchi, S. Study on Imprinting and Guidance of a Duck Flock by an Autonomous Mobile Robot (Effectiveness of Visual and Auditory Stimuli). J. Jpn. Soc. Des. Eng. 2018, 53, 691–704.

- Yamada, Y.; Yamauchi, S.; Tsuchida, T. Study on Imprinting and Guidance of a Duck Flock by an Autonomous Mobile Robot (Effectiveness of Imprinting after Critical Period and Effectiveness of Feeding and Auditory Stimulus). J. Jpn. Soc. Des. Eng. 2018, 53, 855–868.

- Miklósi, Á.; Korondi, P.; Matellán, V.; Gácsi, M. Ethorobotics: A New Approach to Human-Robot Relationship. Front. Psychol. 2017, 8, 958.

- Korondi, P.; Korcsok, B.; Kovács, S.; Niitsuma, M. Etho-Robotics: What Kind of Behaviour Can We Learn from the Animals? In Proceedings of the 11th IFAC Symposium on Robot Control, Salvador, Brazil, 26–28 August 2015; pp. 244–255.

- Kim, J.; Choi, S.; Kim, D.; Kim, J.; Cho, M. Animal-Robot Interaction for Pet Caring. In Proceedings of the IEEE International Symposium on Computational Intelligence in Robotics and Automation, Daejeon, Korea, 15–18 December 2009; pp. 159–164.

- Romano, D.; Donati, E.; Benelli, G.; Stefanini, C. A Review on Animal–Robot Interaction: From Bio-Hybrid Organisms to Mixed Societies. Biol. Cybern. 2019, 113, 201–225.

- Romano, D.; Stefanini, C. Individual Neon Tetras (Paracheirodon Innesi, Myers) Optimise Their Position in the Group Depending on External Selective Contexts: Lesson Learned from a Fish-Robot Hybrid School. Biosyst. Eng. 2021, 204, 170–180.

- Macri, S.; Karakaya, M.; Spinello, C.; Porfiri, M. Zebrafish Exhibit Associative Learning for an Aversive Robotic Stimulus. Lab Anim. 2020, 49, 259–264.

- Karakaya, M.; Macri, S.; Porfiri, M. Behavioral Teleporting of Individual Ethograms onto Inanimate Robots: Experiments on Social Interactions in Live Zebrafish. iScience 2020, 23, 101418.

- Clément, R.J.; Macri, S.; Porfiri, M. Design and Development of a Robotic Predator as a Stimulus in Conditioned Place Aversion for the Study of the Effect of Ethanol and Citalopram in Zebrafish. Behav. Brain Res. 2020, 378, 112256.

- Polverino, G.; Karakaya, M.; Spinello, C.; Soman, V.R.; Porfiri, M. Behavioural and Life-History Responses of Mosquitofish to Biologically Inspired and Interactive Robotic Predators. J. R. Soc. Interface 2019, 16, 158.

- Butail, S.; Ladu, F.; Spinello, D.; Porfiri, M. Information Flow in Animal-Robot Interactions. Entropy 2014, 16, 1315–1330.

- Spinello, C.; Yang, Y.; Macri, S.; Porfiri, M. Zebrafish Adjust Their Behavior in Response to an Interactive Robotic Predator. Front. Robot. AI 2019, 6, 38.

- Romano, D.; Benelli, G.; Stefanini, C. Opposite Valence Social Information Provided by Bio-Robotic Demonstrators Shapes Selection Processes in the Green Bottle Fly. J. R. Soc. Interface 2021, 18, 176.

- Datteri, E. The Logic of Interactive Biorobotics. Front. Bioeng. Biotechnol. 2020, 8, 637.

- Vaughan, R.; Sumpter, N.; Henderson, J.; Frost, A.; Cameron, S. Experiments in Automatic Flock Control. Robot. Auton. Syst. 2000, 31, 109–117.

- Henderson, J.V.; Nicol, C.J.; Lines, J.A.; White, R.P.; Wathes, C.M. Behaviour of Domestic Ducks Exposed to Mobile Predator Stimuli. 1. Flock Responses. Br. Poult. Sci. 2001, 42, 433–438.

- Chen, Z.; Wang, S.; Wang, J.; Xu, K.; Lei, T.; Zhang, H.; Wang, X.; Liu, D.; Si, J. Control Strategy of Stable Walking for a Hexapod Wheel-Legged Robot. ISA Trans. 2021, 108, 367–380.

- Li, J.; Wang, J.; Peng, H.; Hu, Y.; Su, H. Fuzzy-Torque Approximation-Enhanced Sliding Mode Control for Lateral Stability of Mobile Robot. IEEE Trans. Syst. Man Cybern. Syst. 2021, 1–10.

- Chen, J.; Du, C.; Zhang, Y.; Han, P.; Wei, W. A Clustering-Based Coverage Path Planning Method for Autonomous Heterogeneous UAVs. IEEE Trans. Intell. Transp. Syst. 2021, 1–11.

- Hess, E.H. Imprinting. Science 1959, 130, 133–141.

- Karlson, P.; Butenandt, A. Pheromones (Ectohormones) in Insects. Annu. Rev. Entomol. 1959, 4, 39–58.

- Yurtsever, E.; Lambert, J.; Carballo, A.; Takeda, K. A Survey of Autonomous Driving: Common Practices and Emerging Technologies. IEEE Access 2020, 8, 58443–58469.

- Wang, Z.; Wu, Y.; Niu, Q. Multi-Sensor Fusion in Automated Driving: A Survey. IEEE Access 2020, 8, 2847–2868.

- Murase, T.; Hirakawa, T.; Yamashita, T.; Fujiyoshi, H. Self-State-Aware Convolutional Neural Network for Autonomous Driving. In Proceedings of the IEICE Technical Report of Pattern Recognition and Media Understanding, Kumamoto, Japan, 12–13 October 2017; pp. 85–90.

- Kamiya, R.; Kawaguchi, T.; Fukui, H.; Ishii, Y.; Otsuka, K.; Hagawa, R.; Tsukizawa, S.; Yamashita, K.; Yamauchi, T.; Fujiyoshi, H. Self-Motion Identification Using Convolutional Recurrent Neural Network. In Proceedings of the 22nd Symposium on Sensing via Image Information, Yokohama, Japan, 8–10 June 2016.

- Kanade, T.; Hebert, M. First-Person Vision. Proc. IEEE 2012, 100, 2442–2453.

- Lai, S.; Xu, L.; Liu, K.; Zhao, J. Recurrent Convolutional Neural Networks for Text Classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015; pp. 2267–2273.

- Xu, H.; Gao, Y.; Yu, F.; Darrell, T. End-to-end Learning of Driving Models from Large-scale Video Datasets. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2174–2182.

- Yu, F.; Chen, H.; Wang, X.; Xian, W.; Chen, Y.; Liu, F.; Madhavan, V.; Darrell, T. BDD100K: A Diverse Driving Dataset for Heterogeneous Multitask Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–18 June 2020; pp. 2633–2642.

- Kamilaris, A.; Prenafeta-Boldú, F.A. Deep Learning in Agriculture: A Survey. Comput. Electron. Agric. 2018, 147, 70–90.

- Kirillov, A.; He, K.; Girshick, R.; Rother, C.; Dollár, P. Panoptic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 9396–9405.

- Zhang, P.; Liu, W.; Lei, Y.; Wang, H.; Lu, H. RAPNet: Residual Atrous Pyramid Network for Importance-Aware Street Scene Parsing. IEEE Trans. Image Process. 2020, 29, 5010–5021.

- Zhao, H.; Qi, X.; Shen, X.; Shi, J.; Jia, J. ICNet for Real-Time Semantic Segmentation on High-Resolution Images. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 405–420.

- Zhao, H.; Zhang, Y.; Liu, S.; Shi, J.; Loy, C.; Lin, D.; Jia, J. PSANet: Point-wise Spatial Attention Network for Scene Parsing. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 267–283.

- Mehta, S.; Rastegari, M.; Caspi, A.; Shapiro, L.; Hajishirzi, H. ESPNet: Efficient Spatial Pyramid of Dilated Convolutions for Semantic Segmentation. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 552–568.

- Chen, L.; Hermans, A.; Papandreou, G.; Schroff, F.; Wang, P.; Adam, H. MaskLab: Instance Segmentation by Refining Object Detection with Semantic and Direction Features. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4013–4022.

- Chen, L.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Tsai, Y.; Hung, W.; Schulter, S.; Sohn, K.; Yang, M.; Chandraker, M. Learning to Adapt Structured Output Space for Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7472–7481.

- Liu, C.; Chen, L.-C.; Schroff, F.; Adam, H.; Hua, W.; Yuille, A.L.; Fei-Fei, L. Auto-DeepLab: Hierarchical Neural Architecture Search for Semantic Image Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 82–92.

- Takikawa, T.; Acuna, D.; Jampani, V.; Fidler, S. Gated-SCNN: Gated Shape CNNs for Semantic Segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 5228–5237.

- Hu, Q.; Yang, B.; Xie, L.; Rosa, S.; Guo, Y.; Wang, Z.; Trigoni, N.; Markham, A. RandLA-Net: Efficient Semantic Segmentation of Large-Scale Point Clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 16–18 June 2020; pp. 11108–11117.

- Zhang, Y.; Zhou, Z.; David, P.; Yue, X.; Xi, Z.; Gong, B.; Foroosh, H. PolarNet: An Improved Grid Representation for Online LiDAR Point Clouds Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Washington, DC, USA, 16–18 June 2020; pp. 9601–9610.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder–Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Brostow, G.J.; Fauqueur, J.; Cipolla, R. Semantic Object Classes in Video: A High-Definition Ground Truth Database. Pattern Recognit. Lett. 2009, 30, 88–97.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

- Saud, A.S.; Shakya, S. Analysis of Look Back Period for Stock Price Prediction with RNN Variants: A Case Study of Banking Sector of NEPSE. Procedia Comput. Sci. 2020, 167, 788–798.

- Byeon, W.; Breuel, T.M.; Raue, F.; Liwicki, M. Scene Labeling With LSTM Recurrent Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3547–3555.

- Joubert, O.R.; Rousselet, G.A.; Fize, D.; Fabre-Thorpe, M. Processing Scene Context: Fast Categorization and Object Interference. Vis. Res. 2007, 47, 3286–3297.

- Naseer, M.; Khan, S.; Porikli, F. Indoor Scene Understanding in 2.5/3D for Autonomous Agents: A Survey. IEEE Access 2019, 7, 1859–1887.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).