Abstract

Cyber–physical systems are created at the intersection of physical processes, networking, and computation. Applications that implement cyber–physical interactions operate under limited resources, so efficiency must be optimized across all entities: communication, computing, and control. This gives rise to the emerging area of “co-design”, which addresses the challenge of designing applications or systems at the intersection of control, communication, and compute when these domains can and should no longer be considered fully independent. This article presents a co-design framework that provides a structured way of addressing the co-design problem. It specifies different co-design degrees that group application design approaches according to their criticality/dependability needs and relate these to the knowledge, insights, and required interactions with the communication and computation infrastructure. The applicability of the framework is illustrated for the example of autonomous mobile robots. The example shows how different co-design degrees exploit the relationships among the domains and permit the identification of technical solutions that achieve improved resource efficiency, increased robustness, and improved performance compared to traditional application design approaches. The framework is relevant both for concrete near-term application design and implementation and for more futuristic concept development.

1. Introduction

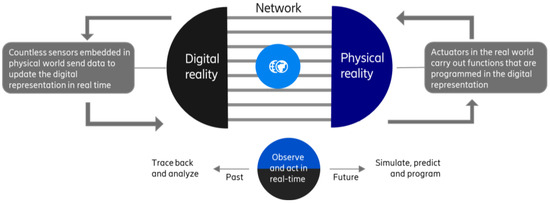

The ongoing development towards a reality in which physical objects are connected and digitally engaged to execute their assigned activities and processes has given rise to the term cyber–physical systems (CPSs). Cyber–physical systems are created at the intersection of physical processes, networking, and computation. They integrate components of various kinds whose main purpose is to control a physical process and, through feedback, adapt themselves to new conditions in real time [1]. Whether in the consumer world (e.g., mixed reality), the industrial world (e.g., autonomous mobility), or both (e.g., massive digital twins), cyber–physical interactions are becoming an integral part of our lives, and this development is expected to grow [2]. Figure 1 illustrates the cyber–physical continuum, in which the physical and digital worlds have merged. It is now possible to move freely between the connected physical world of senses, actions, and experiences and its programmable digital representation. This is achieved by countless sensors embedded in the physical world, which send data to update the digital representation in real time. At the same time, actuators in the physical world carry out functions that are programmed in the digital representation. Observations, together with tracing back and analyzing the past, are key to acting in real time and also to simulating, predicting, and programming the future. For example, a robot operating in the physical world may be iteratively updated, redesigned, or optimized by moving freely between observations of its operations and the testing and prediction of those operations in the digital reality.

Figure 1.

The cyber–physical continuum.

For applications developed to implement such types of control, access to and interaction with computation and communication are expected to play an increasingly important role [3]. The vision is that digitalization will be embraced across industries and society at large creating an increasingly digitalized, programmable, and intelligent world embracing the cyber–physical continuum [2].

Future innovative applications developed for cyber–physical systems are envisioned to make smarter decisions than today’s applications, e.g., by making heavy use of Machine Learning (ML) and Artificial Intelligence (AI), and by executing some or all parts of their functionality in a cloud compute environment [4]. These interactions require the control applications to have a detailed understanding of their requirements and technical needs regarding communication and computing, and to request the corresponding communication and computational resources.

All of the above calls for a shift towards seamless interworking among communication, computing, and control entities within a cyber–physical system, enabled by the exchange of capabilities and demands among them. Such interworking is essential to meet stringent requirements on availability, latency, and reliability for application use cases, going beyond ‘best effort’ service. Furthermore, in the face of limited resources, all entities (communication, computing, and control) must be optimized for efficiency. With the ever-increasing number of applications entering the cyber–physical continuum, developing a better understanding of how to optimize efficiency across different domains under limited resources therefore becomes increasingly important. A key observation in this regard is that, unlike in previous approaches, the domains of communication, compute, and control can no longer be considered independently if higher performance is to be achieved [3,5,6]. Therefore, a new approach of “co-designing” cyber–physical systems across the domains of communication, compute, and control is starting to take shape, which is expected to enable better performance, scalability, and resilience. Three different actors take part in co-design: communication platform(s) (e.g., networks), computing platform(s) (e.g., edge, cloud, fog), and the control domain (e.g., application developers). Each of these actors plays a role in the co-design. However, the ambition of co-design is not to solve the efficiency optimization problem for all domains with a joint, centralized design. Instead, the approach is to address the optimization problem by exposing and exploiting insights from the different domains.

This article contributes to the area of co-design by proposing a co-design framework that provides a structured approach to adopting co-design principles.

2. Research Aims and Objectives

This section outlines the definition of co-design, its approach and ambition, and the contributions of this article to this area.

2.1. Definition of Communication–Compute–Control Co-Design and Its Ambition

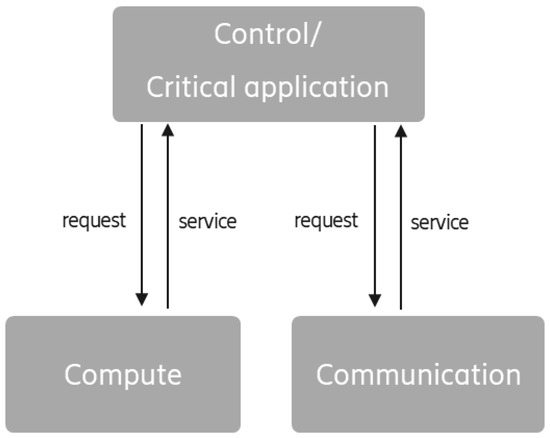

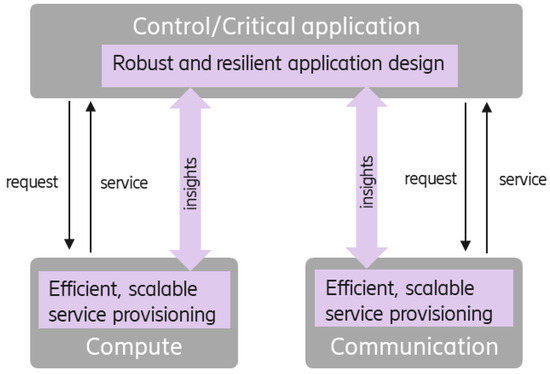

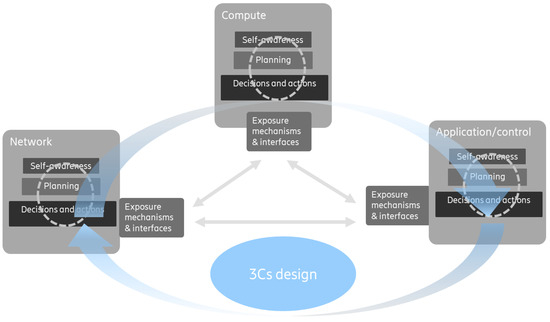

Communication–Compute–Control (3C) co-design is an application or system design method that understands and makes use of the relations among (wireless) communication, (cloud) compute, and (control) applications to enhance application and/or overall system performance. The objective of the co-design approach is to exploit these relationships to find technical solutions that achieve improved resource efficiency, increased robustness, and improved performance compared to traditional approaches. Another ambition of the co-design methodology is to relax the requirements on each of the 3Cs components compared to independent design, thereby enabling applications that would otherwise (1) not be feasible to implement due to overly stringent requirements or (2) require a very costly infrastructure. Figure 2 and Figure 3 illustrate the traditional approach and the ambition of the co-design approach, respectively. Unlike the simple publish–subscribe communication paradigm of information exchange [7], in advanced co-design approaches, insights from the compute and communication entities are also shared in response to the requested service.

Figure 2.

Traditional design approach.

Figure 3.

3Cs co-design approach.

2.2. Contributions of This Article

The key contribution of this article is the development and description of a framework for 3Cs co-design, which takes a structured approach towards the adoption of the co-design principles. By defining different “degrees” of co-design, it is shown that a co-design approach can be applied at different levels, where the level depends on the requirements of the application. In particular, it is shown how the challenge of co-design can be broken down into meaningful steps that still allow for exploiting the advantage of shared knowledge and insights. By exemplifying the framework with an industrial use case, the benefits and challenges of the different co-design degrees are illustrated. The identification of key building blocks and tradeoffs allows for a better understanding of ongoing and required next steps for research and development.

The framework presented in this article is formulated on a conceptual level for a general type of application. Here, “application” can be considered as an abstract function that realizes a set of tasks for a given use case. The focus is on possible interactions of this abstract application domain with the network and compute domains to realize the desired outcome.

2.3. Structure of the Article

This article is structured as follows: Section 1 and Section 2 present the topic and provide an introduction, including details on the scope and contributions of the work. Section 3 outlines the state of the art, observed trends, as well as related and prior work. Section 4 introduces the framework of co-design developed in this work, together with an example that illustrates the applicability of the framework. Section 5 outlines the building blocks of co-design, and Section 6 discusses tradeoffs, important considerations, as well as possible next steps. In Section 7, the work is summarized and concluded.

3. State of the Art, Observed Trends in Industry and Prior Work

This section describes the state of the art in industry and ongoing trends that highlight the dependencies among communication, compute, and control and motivate the need for studying 3Cs co-design. Furthermore, prior and related work is reviewed.

3.1. State of the Art and Trends in Industry

3.1.1. Trend Towards Wireless Communication for CPSs

While most of today’s control systems continue to rely on wired communication, there is a growing inclination towards adopting wireless technology in control systems. For instance, wireless communication is becoming an integral part of modern smart manufacturing [4]. Examples include (1) remote wireless control of devices and heavy machinery [8], which unlocks the potential for advanced services where cables cannot reach, and (2) wireless condition monitoring, which enables services, e.g., for moving parts [9]. Generally, compared to connecting devices and machines with cables, wireless connectivity allows for a higher degree of flexibility and can significantly reduce setup and maintenance time and costs [10]. Non-critical applications can easily be connected using wireless technology. However, real-time control systems that impose very strict requirements on the wireless network are a challenge for wireless connectivity solutions, since wireless channels are naturally subject to stochastic variations. Numerous efforts have been made to design wireless communication systems that closely match the performance of wired systems in terms of data rate, latency, reliability, etc. [11], in order to support critical real-time applications. However, the past ten years have shown that communication cables in control applications can be replaced by wireless links as a “simple replacement”, without any changes to the application, only if the wireless link performs just like a wired link (e.g., negligible delay, jitter, and packet loss). The reason is that applications designed to work only over wired connections typically assume close-to-ideal network characteristics and lack the robustness or flexibility to adjust to the statistical variations that result from wireless connectivity [12].

Wireless solutions that perform just like a wired link, however, require extensive network resources, which becomes impractical, costly, or even infeasible in several deployments [13]. Although this may suggest that wireless communication is less suitable for critical control systems, there is, on the contrary, a growing interest in considering wireless communication for these systems as well. One key reason is the trend towards cloudification and cloud-native application design, as described in the following subsection. Another key reason is the insight, from the control perspective, that the strictness of the determinism requirements of control systems is, to a significant extent, an implementation choice made during application design. Modifying the implementation of a control system can change, and in particular reduce, the degree of determinism it requires [3]. Moreover, the strictness of requirements may vary, e.g., depending on the scenario or over time. This flexibility, combined with the flexibility of today’s and tomorrow’s wireless networks, opens up new opportunities in which co-design will play a key role [3]. Reconsidering design choices for control applications to accommodate wireless communication places a significant demand on industrial application developers. However, reconsidering these design choices is also a necessary step to accommodate the trend towards cloudification and cloud-native application design for CPSs.

3.1.2. Trend Towards Cloudification and Cloud-Native Application Design for CPSs

Cloud computing has gained significant traction over the last years. Cloud-native software development practices enable businesses to think beyond on-premise boundaries, attain enterprise mobility, increase scalability, etc. [14,15]. Moreover, existing solutions have been shown to be limiting when it comes to exploiting the potential of artificial intelligence (AI) and machine learning (ML). For instance, the computation capacity of today’s customized hardware is usually inadequate for processing large datasets, executing advanced mechanisms, and training and executing AI models in real time and at scale. Therefore, there is an ongoing move in industry towards the cloudification of applications [16]. Consequently, with the desire to execute applications from the cloud, there is a rising focus on offloading functionality from physical systems and transitioning to cyber–physical systems (i.e., a move from embedded control to networked control), which is driving industry digitalization and the benefits it can bring [10]. Cloud computing facilitates low-cost, scalable access to vast computing power in the digital domain, enabling optimizations driven by data and machine learning (in control, predictive maintenance, fault root-cause analysis, and system planning via digital twins) [17]. Offloading also provides simplification benefits (footprint/size, weight, power consumption, complexity, and maintainability) for the physical systems. This drives the interest in offloaded and networked control, often in combination with wireless connectivity [1,17,18]. Since cloud execution of monolithic applications is considered inefficient, the move is instead towards cloud-native applications [19]. This transition requires, as a first step, decomposing the control program into functions that support efficient resource usage and, as a next step, developing fully cloud-native control applications [19].

For critical real-time applications, challenges arise not only for the communication platform (as described in the previous subsection) but also for the computing platform: real-time control applications typically have well-defined timeliness constraints. Therefore, processing must be completed within the defined time constraints, or it is considered a failure [20,21]. Thus, cloud execution must be deterministic, and this requirement applies to all layers that make up the cloud stack. Such determinism is not available in today’s cloud computing environments. In summary, both communication and computing need to change in order to exploit technologies such as AI and ML [22].
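The timeliness rule above (a result delivered after its deadline counts as a failure) can be made concrete with a small sketch. It is a minimal illustration under stated assumptions: `run_with_deadline`, `StepResult`, `slow_step`, and the 10 ms deadline are hypothetical names and values, and the check only detects an overrun after the step returns. A deterministic cloud stack would instead have to enforce the deadline through scheduling on every layer.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class StepResult:
    output: Optional[float]  # control output, or None if the deadline was missed
    elapsed_s: float         # measured execution time in seconds
    deadline_met: bool


def run_with_deadline(step: Callable[[], float], deadline_s: float) -> StepResult:
    """Run one control step and classify it against a hard deadline.

    The overrun is only detected after the step returns; the step is not
    preempted. A late output is discarded, reflecting the rule that
    processing outside the time constraint counts as a failure.
    """
    start = time.perf_counter()
    output = step()
    elapsed = time.perf_counter() - start
    if elapsed > deadline_s:
        return StepResult(output=None, elapsed_s=elapsed, deadline_met=False)
    return StepResult(output=output, elapsed_s=elapsed, deadline_met=True)


def slow_step() -> float:
    # Hypothetical 20 ms computation, e.g., a step delayed by contention in the cloud.
    time.sleep(0.02)
    return 0.5


if __name__ == "__main__":
    print(run_with_deadline(lambda: 0.5, deadline_s=0.010).deadline_met)  # True
    print(run_with_deadline(slow_step, deadline_s=0.010).deadline_met)    # False
```

Discarding the late output, rather than applying it, reflects the point that in real-time control a correct but late value is still a failure.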

3.1.3. Trend Towards Flexible Interaction and Interoperability

The trends towards wireless communication and cloud computing/cloud-native application design highlight how new players are entering the CPS ecosystem. At the same time, enterprises do not want vendor dependency and lock-in. More and more end-users therefore express a need for flexibility and vendor independence, which in turn increases the need for open interfaces and explicit integration of systems and sub-systems (from multiple vendors and suppliers) between all entities. This has given rise to an emerging platform business as well as new types of service offerings (e.g., software-as-a-service (SaaS) [23], Communication Platform as a Service (CPaaS), Global network API platform [24], and robot-as-a-service (RaaS) [25]). Unified, vendor-independent interfaces are increasingly requested. In the smart manufacturing ecosystem in particular, OPC UA [26] is starting to establish itself as a standardized information model and protocol framework that can be used to model physical elements, functions, and processes in a standardized and vendor-agnostic fashion. Likewise, concepts like the Asset Administration Shell (AAS) [27] aim to standardize digital representations and interactions of assets to enable interoperability of Industry 4.0 components in a much wider scope than today’s CPSs achieve. Intent-based automation [28] is also starting to gain traction here. The idea of intent-based automation is to enable autonomy such that a system can adapt its behavior and generate new solutions instead of following human-defined recipes and policies. Flexibility, interoperability, and automation are integral parts of all of the mentioned approaches. They all contribute to a demand on application developers to incorporate increased dynamicity and adaptability into their application design.

Initiatives like CAMARA [29], the GSMA Open Gateway [30], and the GSMA Operator Platform [31] further support this trend by providing standardized Application Programming Interfaces (APIs) and frameworks that enhance network capability access and flexibility across diverse ecosystems.

3.2. Related and Prior Work

The topic of Communication–Compute–Control co-design has recently gained more interest and importance. However, it has been studied before from different angles and in sub-domains, in which substantial prior work exists. This section summarizes prior work relevant to our contributions. First, the most relevant prior work from the compute domain and the networked control domain is presented. Then, prior work from practical studies is summarized. Finally, first approaches to 3Cs co-design at the concept level, similar to this publication, are discussed. For each area, the additional contributions of this article are outlined.

3.2.1. Prior Work from the Compute Domain

A disruptive conceptual architecture called Cloud Fog Automation (CFA) is introduced in [32] and further promoted in [15]. CFA promotes a transition towards cloud and fog computing, with a unified architecture that can integrate the Information Technology (IT) and Operation Technology (OT) domains. Realizing CFA involves offloading higher-layer control functions into the cloud infrastructure and migrating lower-layer processes from purpose-specific hardware to virtualized fog computing infrastructure. Here, fog computing refers to the deployment of programmable compute capabilities on devices closer to the data source, which enables more efficient data processing and analysis at the edge of the network. Cloudifying higher-layer functions is becoming common practice in enterprise applications today [15]. In addition, there is active research in the area of cloud robotics, which recently emerged as a collaborative technology between cloud computing and robotics, enabled by progress in wireless networking and cloud technologies [33].

The device-edge-cloud continuum paradigm described in [34] aims to make use of a distributed cloud-edge infrastructure to run applications on heterogeneous hardware and at different locations, wherever they perform best. Cloud-native technologies such as containerization, a lightweight virtualization alternative [35], and dynamic orchestration have proven to be advantageous over Virtual Machines (VMs) [36]. The vision of CFA inspired the RoboKube framework, which aims to accelerate CFA practice in the robotics domain and to seamlessly deploy, migrate, and scale applications across the device, edge, and cloud continuum [37] with wireless connectivity [18].

All of the above-mentioned cloud-related works incorporate the co-design methodology in some way, since they optimize the cloud deployment of the application. However, these studies do not include a high-level, systematic framework.

3.2.2. Prior Work from Networked Control Systems (NCSs) and Wireless NCSs Domain

The idea of making control applications adapt to their environment for increased robustness or better performance has been gaining interest in the literature. Several articles center on Networked Control Systems (NCSs) and Wireless NCSs (WNCSs). NCS research concentrates on how controllers, sensors, and actuators are connected over a network and on the consequences thereof, e.g., the effects of network characteristics on control performance. NCSs have been used in several areas, such as industrial automation, automotive, and teleoperation, as discussed in a survey by Zhang et al. [38]. The authors of [39,40] and the references therein investigate how network imperfections affect the control performance of NCSs. This effect is even more pronounced in WNCSs, where the control system components communicate over wireless connections and thereby face non-zero delay and message error probability in data transmissions [12]. Thus, WNCS research introduces novel control design mechanisms to tolerate delay and message losses [13,41,42]. A comprehensive overview of WNCSs, focusing on the communication perspective, is also offered in [43]. Its authors highlight the need for a joint design within WNCSs to build a well-performing WNCS. This includes the joint design of communication and control, edge-assisted network design, and goal-oriented communication. The mechanisms proposed for NCSs and WNCSs typically either handle co-design as a joint optimization problem or operate at a low level, focusing on stochastic fluctuations and relying on control-theoretic approaches.

3.2.3. Prior Work from Practical Studies

There are a few practical studies on co-design that also take real scenarios into account. For example, [44] discusses a case study in which a latency-aware wireless control framework is presented and employed in a real ball-and-beam control system. The authors discuss the differences between the co-designed system and the independent design approach and show improved robustness of the system. In [45], another case study with cloud-controlled Autonomous Guided Vehicles (AGVs) shows how co-design can reduce the consumption of wireless network resources. The proposed solution resulted in a lower probability of system instability and a higher number of admissible AGVs. Lyu et al. [46] developed a network hardware-in-the-loop (NHiL) framework to evaluate the impact of the wireless network on robot control. They then improve the design by employing correlation analysis between communication and control performance. The proposed method eliminates the substantial effort and cost of building and testing the entire physical robot system in real life.

While these works are very valuable for taking a realistic approach, they investigate very specific co-design algorithms and do not discuss a generic co-design framework.

3.2.4. Prior Work on Concept Level

A recent conceptual work on this topic discusses the necessity and potential of co-designing the communication and control systems. It highlights the importance of tight interaction between them and identifies opportunities, technical challenges, and open issues [3]. Compared to this work, our contribution is a structured, general framework for co-design, and we additionally extend the scope to include the cloud domain.

A significant part of the existing literature focuses on formulating a joint optimization problem to solve the co-design of communication and control. The authors of [45] analyzed and summarized the existing co-design methods and identified two kinds of problems: (i) control optimization problem with communication constraints and (ii) communication optimization problem with control constraints. Some parameters influence both the control and communication systems at the same time, which is the key to communication–control co-design. The example of a cloud-controlled AGV is provided, where the control parameters are optimized to achieve a higher coding rate threshold and lower probability of system instability.
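The two problem classes identified in [45] can be stated schematically. The notation below is introduced here only for illustration and is not taken from [45]. The symbols $\theta_c$ and $\theta_n$ denote control and communication parameters, $J_c$ and $J_n$ a control cost and a communication resource cost, and $g_n$ and $g_c$ communication and control constraints (e.g., a latency bound or a stability condition).

```latex
\begin{aligned}
&\text{(i)}\quad  \min_{\theta_c}\; J_c(\theta_c;\,\theta_n) \quad \text{s.t.} \quad g_n(\theta_c;\,\theta_n) \le 0,\\
&\text{(ii)}\quad \min_{\theta_n}\; J_n(\theta_n;\,\theta_c) \quad \text{s.t.} \quad g_c(\theta_n;\,\theta_c) \le 0.
\end{aligned}
```

In (i) the communication parameters after the semicolon are treated as given, and in (ii) the control parameters are. The coupling described above shows up as a parameter that enters both a control term and a communication term. One example is a control parameter in (i) that also changes whether the communication constraint $g_n$ can be met.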

The solutions mentioned above formulate the problem analytically and typically target a complex, optimal solution. In contrast, a key contribution of the work described in this article is to split the co-design problem into smaller, pragmatic steps that are tractable and can be adapted to the actual application needs, since not all kinds of applications require the same level of co-design.

The dependability and programmability of communication and compute systems have been identified as key properties that infrastructures need in order to support critical real-time applications [47,48]. Dependability is a collective term that comprises the characteristics of availability, reliability, and maintainability [49]. The framework described in this article builds on the concept of dependability and applies it to services and application design. A dependable industrial service is designed such that it can be trusted to provide the desired value/outcome with a quantifiable probability. Similarly, a dependable application is designed to bound uncertainties (e.g., communication latency) in order to deliver a trusted outcome. A tabular summary of the most relevant prior art is given in Table 1.
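One way to make a “quantifiable probability” concrete is to estimate it from measurements. Given end-to-end latency samples, the fraction that stays within the application’s latency bound estimates the probability that the bound holds. The lower end of a Wilson score interval gives a conservative figure for small sample counts. This is a minimal sketch under stated assumptions. The samples are treated as independent and identically distributed, and the sample values and the 20 ms bound are made up for illustration. The function names are hypothetical.

```python
import math
from typing import Sequence


def fraction_within_bound(latencies_ms: Sequence[float], bound_ms: float) -> float:
    """Empirical probability that a latency sample does not exceed the bound."""
    if not latencies_ms:
        raise ValueError("need at least one latency sample")
    hits = sum(1 for x in latencies_ms if x <= bound_ms)
    return hits / len(latencies_ms)


def wilson_lower_bound(p_hat: float, n: int, z: float = 1.96) -> float:
    """Lower limit of the Wilson score interval for a binomial proportion.

    With z = 1.96 this is the lower end of a two-sided ~95% interval, i.e.,
    a conservative estimate of the true probability given n samples.
    """
    z2 = z * z
    denom = 1.0 + z2 / n
    centre = p_hat + z2 / (2 * n)
    margin = z * math.sqrt(p_hat * (1 - p_hat) / n + z2 / (4 * n * n))
    return (centre - margin) / denom


if __name__ == "__main__":
    # Illustrative values only, not measurements.
    samples = [8.1, 9.4, 12.0, 7.7, 25.3, 10.2, 9.9, 11.5, 8.8, 13.1]
    p = fraction_within_bound(samples, bound_ms=20.0)
    print(p)  # 0.9
    print(round(wilson_lower_bound(p, len(samples)), 3))
```

With only ten samples, the conservative estimate is much lower than the observed 90%. This illustrates why a dependable service needs enough evidence behind the probability it promises.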

Table 1.

Tabular summary of related works.

4. The Framework of Communication–Compute–Control-Optimized Application Design

4.1. Co-Design Framework

In this section, the framework of Communication–Compute–Control design is explained. It provides a structured way of grouping application design approaches according to their dependability needs. It then relates these needs to the knowledge, insights, and interactions with the communication and computation infrastructure that could support enhanced application design. The underlying principle is that the more intelligent an application becomes, the higher the levels of criticality/dependability it can accomplish when executing its tasks. There are two main ways of achieving higher application intelligence: (1) via smarter algorithm design, e.g., innovative new approaches from the control domain; and (2) by obtaining relevant information and exploiting additional knowledge from other sources. This work focuses strongly on the latter approach, which aims for improved application scalability and robustness. In this approach, the application intelligence is enhanced by exploiting additional knowledge from the network/compute infrastructure. A framework can then be developed by grouping this additional knowledge into different degrees, ranging from simple to advanced insights, together with the different mechanisms for obtaining this knowledge. This conceptual structure can then guide application developers in choosing the right type of insight and interaction with the network/compute infrastructure, depending on the requirements and technical needs of the application. Moreover, the framework allows for a structured identification of challenges, research questions, and required improvements related to the different building blocks involved. The framework is illustrated in Table 2. For each degree, the application design approach is detailed together with the required interaction/interfacing with the network/compute infrastructure.

Each degree is mapped to an application approach according to the criticality/dependability of the application. The knowledge, insights, and interactions that the application exploits start from the lowest degree (no insights and no interaction). With every degree, the application has more detailed knowledge/insights about the network/compute infrastructure (general awareness, specific knowledge, etc.), obtained through increasingly sophisticated interaction mechanisms. At the same time, with every degree, an application that exploits this additional knowledge can not only guarantee increasing levels of dependability but also ensure increasing levels of resource efficiency.

Table 2.

Different degrees of 3Cs application design.

The reason for taking this approach is that the decision on what type of 3Cs design is needed should continue to primarily lie in the hands of the application developer. The application developer knows, understands, and controls the application needs. At the same time, in order for the application developers to make the best use of communication and compute infrastructure, easy-to-use interfaces providing insightful information about these infrastructures are important. The means of interaction to support the application developer should be simple and suit the envisioned purpose.

The framework as depicted in Table 2 focuses on the application and on the information, insights, and interaction that the network and computing domains expose to the application. However, with increasing degrees of co-design, the network and compute domains can also directly or indirectly gain more insights about the application. This additional knowledge allows those domains to adjust, reconfigure, or optimize themselves in order to support the application. Note that conventional non-co-design methods correspond to degree 0.
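As a reading aid, the degrees described in the subsections below can be encoded as a small data structure. This is a minimal sketch that covers only degrees 0 to 2. The `Criticality` scale, the field names, and the `suggest_degree` rule of thumb are hypothetical simplifications of the textual descriptions and are not part of Table 2.

```python
from dataclasses import dataclass
from enum import IntEnum


class Criticality(IntEnum):
    """Hypothetical coarse criticality scale (not defined by the framework)."""
    NON_CRITICAL = 0
    MODERATE_OR_CRITICAL = 1


@dataclass(frozen=True)
class CoDesignDegree:
    level: int
    name: str
    knowledge_used: str  # additional knowledge the application exploits
    interaction: str     # how that knowledge is obtained


DEGREES = {
    0: CoDesignDegree(0, "Unawareness", "none", "none"),
    1: CoDesignDegree(1, "Awareness",
                      "general characteristics of network/compute",
                      "design-time assumptions, no interface to the infrastructure"),
    2: CoDesignDegree(2, "Interface exposure",
                      "current or predicted operational status of network/compute",
                      "APIs exposed by the network/compute domains"),
}


def suggest_degree(criticality: Criticality,
                   resources_may_be_constrained: bool) -> CoDesignDegree:
    """Hypothetical rule of thumb distilled from the descriptions of degrees 0-2."""
    if criticality >= Criticality.MODERATE_OR_CRITICAL:
        return DEGREES[2]  # critical tasks need specific, exposed insights
    if resources_may_be_constrained:
        return DEGREES[1]  # non-critical, but must cope with constraints
    return DEGREES[0]      # resource access can practically always be assumed


if __name__ == "__main__":
    print(suggest_degree(Criticality.NON_CRITICAL, True).name)  # Awareness
```

Keeping the decision in a function owned by the application mirrors the framework's stance that the choice of degree stays with the application developer.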

4.1.1. Degree 0: Unawareness

In this degree, the application is designed with no awareness of the characteristics of either the network or the computing infrastructure it makes use of.

- Additional knowledge utilized by the application: None.

- Type of application: This type of application design is justified, e.g., for applications with unlimited access to computing resources, as well as for non-critical wireless applications whose use of computing and communication resources is so limited that near-perfect access to these resources can almost always be assumed.

While this approach benefits from simplicity, it suffers from the fact that no notion of dependability can be achieved. Any type of resource constraint may lead to an unsuccessful operation of the application.

4.1.2. Degree 1: Awareness

In this degree, the application is designed with awareness of the general characteristics of the networking and computing infrastructure. By exploiting this knowledge, the application can be designed more intelligently than degree-0 applications. For example, the application may be aware that delay, jitter, or loss of application data may occur in wireless networks and when using external computing infrastructure. While basic applications may simply accept that the link is imperfect, more advanced applications could adapt to certain changes, such as changes in latency.

This leads to the following characteristics for the degree-1 design:

- Additional knowledge utilized by the application: Understanding of general characteristics of network/compute, e.g., awareness that resource constraints may occur;

- Type of application: Typical applications for which degree-1 design is suitable are non-critical applications that are using only very limited compute or network resources or that can be run with resource allocations following the default best-effort paradigm.

This degree retains the benefit of simplicity, yet the application is designed to deal with some performance uncertainties in communication and compute and to adapt its operation accordingly. This is a basic step towards increased application flexibility and adaptability.

4.1.3. Degree 2: Interface Exposure

In this degree, the application is designed to actively use interfaces, e.g., Application Programming Interfaces (APIs), to obtain more specific information and insights about the network and computing infrastructure. This information gives the application knowledge beyond the general awareness of the communication and computing infrastructure available in degree 1. By exploiting this more specific knowledge, the application can now be designed to also perform critical tasks with higher levels of required dependability. This leads to the following characteristics for the degree-2 design:

- Additional knowledge utilized by the application: information on the current or predicted operational status of the network/compute domains, including, e.g., upcoming resource constraints or resulting performance variations;

- Typical application: This type of application design is most suitable for applications solving moderate/critical tasks with a moderate degree of guaranteed accomplishment.

This application design approach benefits from more detailed insights about network/compute, enabling more advanced handling of varying conditions without having to request more advanced services from network/compute, which may come with additional costs. Applications can benefit from some resilience or fallback behavior, which can be activated in, e.g., resource-constrained situations.

4.1.4. Degree 3: (Mutual) Interaction

In this degree, the application is designed not only to use exposure interfaces to obtain information from the network and compute domains (as in degree 2), but also to trigger specific service requests towards the network and/or the compute entity. These requests typically demand changes in the services provided by the network/compute infrastructure, potentially related to network/compute configuration aspects. A typical request could demand communication resources (e.g., via Quality-of-Service (QoS) requests) and computing resources with strict requirements on timeliness and reliability, as opposed to best-effort performance. A further enhancement of this type of service request would involve expressing service needs via intents. This leads to the following characteristics for the degree-3 design:

- Additional knowledge utilized by the application: More detailed insights into the performance that the specific network/compute infrastructure can provide. Moreover, the application can now rely on service assurance from the communication/compute infrastructure and can work with a quantifiable assessment of its dependability;

- Typical application: This type of application design is most suitable for accomplishing tasks with high levels of required guaranteed accomplishment and/or applications that require significant amounts of resources and/or applications that need to operate with high levels of resource efficiency. By ensuring access to communication and/or compute resources via service requests, the highest degree of guaranteed task accomplishment can be achieved.

This application design approach has the advantage that the application developer can rely on dependable communication and compute services via explicit requests. With adaptability implemented in the application, resources are only requested when needed. Moreover, if the network and compute infrastructure implement dynamic and flexible resource allocation, resources can be utilized more efficiently and potentially a higher number of applications can be supported.

4.1.5. Degree 4: Negotiation

In this most advanced co-design degree, it is envisioned that an application is designed using knowledge about its context and its intents towards an overarching common goal to which it contributes. Communication and compute resource allocation could then apply negotiation principles to determine an optimal distribution and timing of resources to achieve the overarching goal. This degree can benefit considerably from the concept of intents. This leads to the following characteristics for the degree-4 design:

- Additional knowledge utilized by the application: Increased understanding of relations and resource dependencies towards other applications in a wider context.

- Typical application: Applications in this degree could be part of a larger context for which a higher-level task/application coordination exists, for instance in the form of digital twins. The negotiation process would then take place at, or relate to, a higher level, e.g., between some notion of network, compute, and system digital twins.

This is the most advanced application design and requires complex knowledge and interactions.

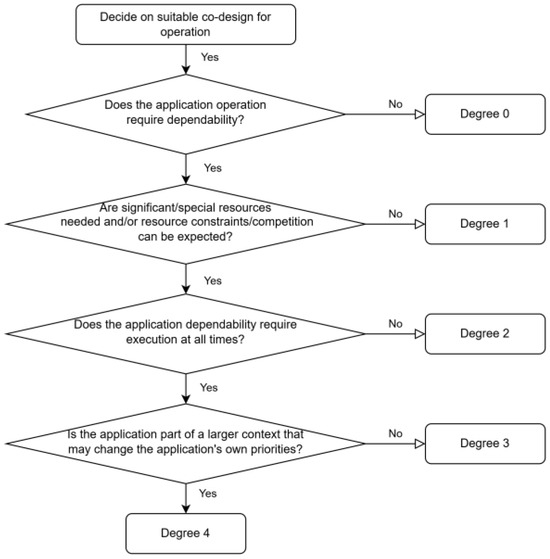

4.1.6. Summary

Figure 4 depicts a flow-chart summarizing the basic steps for determining a suitable degree of co-design depending on the application’s needs and the environment/context in which it operates. Note that this is a very simplified view that depicts only the most important steps. Table 3 summarizes the advantages and challenges of the different degrees for the application.

Figure 4.

Simplified view on co-design degree choice.

Table 3.

Advantages and challenges of co-design degrees from an application perspective.

If an application has changing needs with respect to dependability, the same flowchart can also be applied to determine the degrees suitable for different operation modes of the application, depending on current needs. By restricting the implementation of operations that do not require advanced levels of dependability to the lower co-design degrees, the overall complexity of an application can be kept low.
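To make the degree choice more tangible, the following Python sketch encodes a simplified decision rule of the kind summarized in Figure 4. It is not a reproduction of the figure: the three inputs (whether resource constraints are expected, a coarse criticality label, and whether a higher-level coordination context exists) and the enum names are assumptions chosen for illustration.

```python
from enum import IntEnum


class Degree(IntEnum):
    """Co-design degrees 0-4 as named in Section 4.1."""
    UNAWARENESS = 0
    AWARENESS = 1
    INTERFACE_EXPOSURE = 2
    MUTUAL_INTERACTION = 3
    NEGOTIATION = 4


def choose_degree(resource_constraints_expected: bool,
                  criticality: str,
                  coordinated_context: bool = False) -> Degree:
    """Hypothetical, simplified degree choice (not a copy of Figure 4).

    criticality is an assumed coarse label: "none", "moderate", or "high".
    """
    if criticality not in ("none", "moderate", "high"):
        raise ValueError(f"unknown criticality: {criticality!r}")
    if not resource_constraints_expected:
        # Resources can practically be taken for granted.
        return Degree.UNAWARENESS
    if criticality == "none":
        # Best effort suffices; the application tolerates imperfections.
        return Degree.AWARENESS
    if criticality == "moderate":
        # Exposed status/predictions enable fallback behavior.
        return Degree.INTERFACE_EXPOSURE
    # High criticality: request assured services, and negotiate if a
    # higher-level coordination across applications exists.
    if coordinated_context:
        return Degree.NEGOTIATION
    return Degree.MUTUAL_INTERACTION
```

Applied per operation mode, as suggested above, an AMR might map a low-priority mission to `choose_degree(True, "moderate")` (degree 2) and a high-priority mission to `choose_degree(True, "high")` (degree 3), which mirrors the example in Section 4.2.6.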

4.2. Examples of Use Case Realizations Using Co-Design with Different Degrees

To illustrate the applicability of the framework, we explore in the following subsections, using Autonomous Mobile Robots (AMRs) as an example, how a 3Cs co-design approach can benefit from the different degrees of co-design. Each degree represents a different level of complexity, integration, and responsiveness between the AMR, the compute platform, and the network.

4.2.1. AMR Use Case Scenario and Assumptions

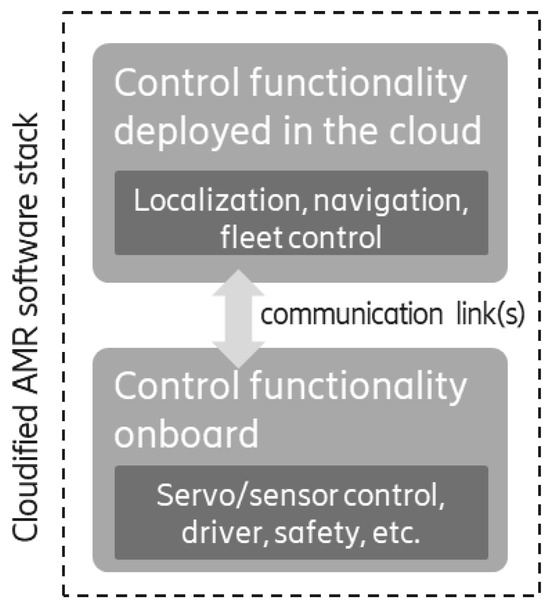

For this use case, we consider deploying an AMR following the principles of cloud-fog-automation within the device-cloud continuum. The software stack is composed mainly of three blocks, each cloudified to enable seamless deployment and integration across device, edge, or cloud environments, depending on the prevailing conditions. Additional tasks, e.g., object detection, object tracking, or other heavy AI processing, are usually added. The three blocks are:

- Localization: This component consists of a set of entities that provide accurate localization, e.g., Simultaneous Localization and Mapping (SLAM). It enables the robot to determine its position within the environment and to detect obstacles;

- Navigation: Building on the localization system, the navigation component comprises entities responsible for moving the robot within the environment. This includes trajectory and motion planning, allowing the robot to travel between points A and B;

- Hardware-dependent functions: This component contains entities that are specific to the hardware running on the robot and cannot be offloaded to the edge or cloud, such as servo control, sensors, and safety and emergency mechanisms.

These components are tightly interconnected and have varying communication and computation requirements.

Figure 5 illustrates the architecture of the AMR. In the example, the computationally heavy parts of the application, here navigation and localization, are offloaded to the edge or cloud. The hardware-dependent functions, e.g., for controlling the drives, are embedded into the AMR platform. A safe fallback solution not requiring any network or compute resources allows the robot to stop safely if resources are not available.

Figure 5.

AMR architecture.

The scenario is as follows: an AMR operating in an outdoor public area is tasked to move from point A to point B. Its performance, measured by the time the AMR takes to complete the task, is a critical factor in determining the Quality of Experience (QoE), as it directly impacts user satisfaction and the perceived efficiency of the system. Since the AMR is placed in a public area, it operates in an unstructured environment where humans, cars, etc., are moving around, creating changes and obstacles in the environment and impacting network conditions. The AMR is required to navigate this environment, finding the fastest path from A to B despite the dynamic changes.

The following assumptions are made:

- The AMR is navigating in an environment with a high density of connected cars, sensors, devices, humans, etc., competing for both communication and computing resources (see Section 2 on trends towards wireless communication).

- The AMR is implemented in a cloud-native way, using container orchestration platforms to simplify the deployment and management of the application in the device-cloud continuum (see Section 2 on trends towards cloudification).

In the following, the same scenario is played out for the different co-design degrees, showing how increasing insights and increasingly advanced interactions benefit the use case.

Co-design with unawareness, degree 0, corresponds to a reference case, where the localization and navigation in their default functionality are simply offloaded to the edge cloud, relying on the network capability as it is.

4.2.2. AMR Co-Design with Awareness, Degree 1

The AMR is designed with an understanding that its operation requires network and computing resources and is aware that resource constraints may occur. Since in this degree the AMR is assumed not to be designed with the capability to make specific performance requests towards network and compute, it can only operate in best-effort mode. If resources become constrained, the AMR halts its mission and stands still, waiting until resources are freed up again. This may happen several times on its trajectory from A to B, potentially leading to unpredictable task completion times. The awareness can, however, be exploited to make the robot control algorithms more resilient against short glitches due to temporary network or cloud issues, e.g., by continuing along the current trajectory for a short period of time even when the connection is lost.
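A minimal sketch of such glitch tolerance follows, assuming that the offloaded navigation sends velocity commands and that a fixed grace period (the hypothetical parameter `grace_s`) is acceptable from a safety perspective. Within the grace period the last received command is kept; afterwards the robot falls back to the local safe stop and waits, as in best-effort degree-1 operation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VelocityCommand:
    linear: float   # m/s
    angular: float  # rad/s


STOP = VelocityCommand(0.0, 0.0)


class GlitchTolerantController:
    """Keeps executing the last offloaded command during short outages.

    grace_s is a hypothetical tuning parameter; beyond it the robot uses
    the local safe stop until commands arrive again.
    """

    def __init__(self, grace_s: float = 0.3):
        self.grace_s = grace_s
        self._last_cmd: Optional[VelocityCommand] = None
        self._last_rx: Optional[float] = None

    def on_command(self, cmd: VelocityCommand, now: float) -> None:
        # Called whenever a command arrives from the edge/cloud navigation.
        self._last_cmd = cmd
        self._last_rx = now

    def output(self, now: float) -> VelocityCommand:
        if self._last_cmd is None or self._last_rx is None:
            return STOP  # nothing received yet
        if now - self._last_rx <= self.grace_s:
            return self._last_cmd  # short glitch: continue trajectory
        return STOP  # longer outage: halt and wait for resources
```

A real controller would typically also ramp the speed down during the grace period; holding the last command keeps the sketch short.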

4.2.3. AMR Co-Design with Interface Exposure, Degree 2

As in co-design degree 1, the AMR knows its requirements and technical needs towards the network and compute infrastructure and operates in best-effort mode. However, with a degree-2 design the AMR is enabled to request information about network and compute and thus obtain additional insights, e.g., the robot control application can obtain in advance information from the network/compute infrastructure about predicted resource constraints over time or in certain areas, or receive insights into the expected range of computing times or communication latencies [50,51]. With this information, the AMR can pro-actively adjust its trajectory and plan ahead. This allows the AMR to operate more robustly and smoothly (with less frequent stops) from A to B. Furthermore, the task completion time becomes more predictable. Since operation still relies on best-effort support from the network/compute infrastructure, however, critical applications cannot be guaranteed optimal support, as the AMR has to adapt to the availability of compute and communication resources.
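The pro-active planning enabled by exposed predictions can be illustrated as choosing, among candidate routes, the one with the lowest expected completion time. In this sketch, the per-segment constraint probabilities and expected standstill durations are hypothetical stand-ins for the information a network/compute exposure interface might provide, and the expected-value criterion is only one simple choice among many.

```python
from typing import Dict, List, Sequence


def expected_travel_time(route: Sequence[str],
                         drive_time_s: Dict[str, float],
                         p_constrained: Dict[str, float],
                         stop_time_s: Dict[str, float]) -> float:
    """Expected completion time of a route, segment by segment.

    p_constrained[seg]: predicted probability that resources are constrained
    while the AMR traverses seg (hypothetical exposed information).
    stop_time_s[seg]: expected standstill duration if that happens.
    Segments without predictions are treated as unconstrained.
    """
    return sum(drive_time_s[seg]
               + p_constrained.get(seg, 0.0) * stop_time_s.get(seg, 0.0)
               for seg in route)


def plan_route(candidates: List[List[str]],
               drive_time_s: Dict[str, float],
               p_constrained: Dict[str, float],
               stop_time_s: Dict[str, float]) -> List[str]:
    # Choose the candidate route with the lowest expected completion time.
    return min(candidates,
               key=lambda route: expected_travel_time(
                   route, drive_time_s, p_constrained, stop_time_s))
```

With drive times of 20 s, 30 s, and 20 s via a plaza (predicted constraint probability 0.6, 40 s expected standstill) versus 20 s, 45 s, and 20 s via a park (probability 0.05, 40 s standstill), the expected times are about 94 s and 87 s, so the longer but less congested route is chosen.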

4.2.4. AMR Co-Design with Mutual Interaction, Degree 3

As in co-design degree 2, the AMR knows its requirements and technical needs towards network and compute and is designed to use information exposed by the network and computing infrastructure. On top of that, with a degree-3 design, it is enabled to make specific requests towards the network and compute infrastructure detailing its needs for dependable services. If these dependable services are granted, the network and compute infrastructure manage their resources such that the requested resource usage of the AMR can be fulfilled, at least with high likelihood, even during resource fluctuations. As a result, the AMR can move from best-effort operation to dependable operation, and the task completion time becomes predictable. The AMR can be implemented with different operation modes that have different needs and requirements towards dependable services from the network/compute infrastructure. In a resource-constrained scenario, the AMR can then choose a suitable operation mode and adapt its operation pro-actively by switching between operation modes.
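The mode selection described above might look as follows. The three operation modes, their latency and data-rate requirements, and the `request_service` callback (a placeholder for whatever QoS request interface the infrastructure offers) are hypothetical. The modes are ordered from most to least demanding, and the last one is the local safe stop that needs no network or compute service.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class OperationMode:
    name: str
    max_latency_ms: Optional[float]  # None: no network/compute service needed
    uplink_mbps: float
    speed_mps: float


# Hypothetical modes, ordered from most to least demanding.
MODES: List[OperationMode] = [
    OperationMode("full_speed", 20.0, 15.0, 1.5),
    OperationMode("reduced_speed", 50.0, 5.0, 0.5),
    OperationMode("safe_stop", None, 0.0, 0.0),  # local fallback
]


def select_mode(request_service: Callable[[OperationMode], bool]) -> OperationMode:
    """Request a dependable service for each mode until one is granted.

    request_service is a placeholder for a QoS request interface; it returns
    True if the infrastructure grants the service needed by the mode.
    """
    for mode in MODES:
        if mode.max_latency_ms is None:
            return mode  # resource-free fallback, nothing to request
        if request_service(mode):
            return mode
    raise RuntimeError("no operation mode available")
```

If, for example, the infrastructure can only guarantee 30 ms latency and a 10 Mbps uplink, `select_mode` returns the `reduced_speed` mode; if nothing is granted, the AMR ends up in `safe_stop`.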

4.2.5. AMR Co-Design with Negotiations, Degree 4

The AMR is designed with the same level of awareness and capabilities as in degree 3, but on top of this, in a degree-4 design, the AMR is aware of its context. In the case of resource constraints, the AMR actively participates in a negotiation process with other involved actors in the system, e.g., by suggesting alternative operation modes that it could switch to in order to free up resources. For this purpose, the AMR can express its intents in an abstract format and can flexibly adjust its behavior, e.g., by changing priorities, traffic profile, etc. Thereby, the AMR contributes to solving problems that are larger than its own scope.
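One way to picture the negotiation is a coordinator that collects, from each actor, a list of alternative operation modes with their resource demand and utility, and then asks the actor whose downgrade costs the least utility per freed resource unit to switch modes, repeating until the shared capacity suffices. This greedy, centralized procedure is a deliberately simple stand-in chosen for illustration, not a negotiation protocol proposed in this article; the actor names, demands, and utilities are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Actor:
    name: str
    # Offered modes as (resource demand, utility), most preferred first.
    modes: List[Tuple[float, float]]
    current: int = 0

    def demand(self) -> float:
        return self.modes[self.current][0]


def negotiate(actors: List[Actor], capacity: float) -> Dict[str, int]:
    """Greedy sketch: while total demand exceeds capacity, downgrade the
    actor whose next mode loses the least utility per unit of freed
    resource. Returns the chosen mode index per actor."""
    while sum(a.demand() for a in actors) > capacity:
        best = None
        best_cost = float("inf")
        for a in actors:
            if a.current + 1 >= len(a.modes):
                continue  # already at its least demanding mode
            d0, u0 = a.modes[a.current]
            d1, u1 = a.modes[a.current + 1]
            freed = d0 - d1
            if freed <= 0:
                continue  # downgrade would not free any resources
            cost = (u0 - u1) / freed
            if cost < best_cost:
                best, best_cost = a, cost
        if best is None:
            raise RuntimeError("no allocation fits the capacity")
        best.current += 1
    return {a.name: a.current for a in actors}
```

With an AMR offering modes (10, 10), (4, 7), (0, 0) and a camera offering (8, 9), (3, 3), (0, 0) under a capacity of 12 units, the AMR's downgrade costs 0.5 utility per freed unit versus 1.2 for the camera, so only the AMR switches to its second mode.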

4.2.6. AMR Co-Design with Different Degrees for Different Segments

As already indicated for the degree-3 design, AMRs may also be designed to implement more than one degree of co-design. This is suitable, for instance, when the operations of the AMR have different levels of criticality at different times; an AMR executing low-priority missions may at times receive high-priority missions. When executing high-priority missions, the AMR switches to co-design degree-3 routines, while during low-priority missions, operation at degree 2 is sufficient, thereby freeing up resources for other users in the system.

4.2.7. Discussion

The AMR examples for the different co-design degrees have highlighted the interactions required of the AMR in each degree, as well as the outcome that can be expected for the execution of the AMR’s operation. The example furthermore demonstrates how increased flexibility and adaptability in application design, together with co-design degree 3 (or 4), ensure a more resilient execution of the application, while resources are used more efficiently.

AMRs are only one example where co-design is expected to provide major benefits; the framework is applicable to any dependable application requiring both communication and compute resources for its execution. For instance, in [49], dependable and adaptive service design for 6G networks is explored for the examples of exoskeletons, XR for industrial workers, adaptive manufacturing systems, and smart farming use cases.

Practical evaluations and case studies are ongoing or the subject of future work. A first evaluation that classifies as co-design degree 3 is provided in [52], where the operation of time-critical applications for 5G-enabled unmanned aerial vehicles (UAVs) is studied to determine how their operation can be improved by the possibility to dynamically switch between QoS data flows with different priorities.

5. Building Blocks of 3Cs Co-Design

The framework described in this article has identified flexibility, adaptability, and interaction as key ingredients to enable co-design. In this section the building blocks for achieving a next level of flexibility, adaptability and interaction are outlined together with pointers towards first solutions, contributing technologies, etc. Moreover, research gaps are identified.

Figure 6 illustrates the building blocks of co-design across the three domains and their relationship on a conceptual level. For each of the domains, the same blocks have been identified as highly relevant: (1) self-awareness, (2) evolved exposure mechanisms and interfaces, and (3) planning, decisions, and actions. Each domain (i.e., network, compute, and application) in the co-design approach can enhance its own capabilities by developing and improving its own building blocks independently of the other domains. However, for applications with mission-critical requirements, the benefits of co-design are more pronounced when all three domains evolve jointly. Exposure mechanisms and interfaces make interactions between the domains possible. Finally, decisions and actions are determined in each domain and across domains, aiming for an overarching 3Cs co-design.

Figure 6.

Building blocks for Communication–Compute–Control co-design and the need for interaction and exchange between communication, compute and control entities.

5.1. Self-Awareness, Decisions, and Actions

One building block towards improved understanding of capabilities and towards co-design is self-awareness. Self-awareness in this context refers to a domain’s understanding of its own capabilities as well as its needs and requirements. Today’s applications, compute, and network infrastructure already have a certain level of self-awareness. Co-design benefits are foreseen from further increasing this self-awareness, e.g., in its granularity over time, space, etc. In general, for increased self-awareness and control of all three domains, internal observability is a key capability for developing advanced insights. Self-awareness is moreover a key prerequisite for developing advanced decision-making algorithms within a specific domain. To this end, various approaches can be applied, e.g., model-based approaches, data-driven approaches (leveraging machine learning), or a combination thereof. Increased self-awareness together with external insights obtained via exposure interfaces will allow for more advanced decision-making, both within and across the domains. In the following, the self-awareness building block needed for co-design is explained in more detail for each domain, together with its possible impact on the building block of decisions, actions, and algorithms.

5.1.1. Application Self-Awareness, Decisions, and Actions

Self-awareness of an application entails that the application can clearly define, in a service specification, the performance requirements towards the network and compute platforms and the level of guarantee/dependability that needs to be provided to achieve its own desired quality of experience. The service specification(s) need to be sufficiently granular with respect to the characteristics of the application’s communication traffic and compute demand, as well as its performance requirements and their criticality over time. The service specification should enable the communication and compute domains to assess if and how such a service request can be supported at the required performance level and with an availability level that matches the criticality of the performance need.

Advanced self-awareness would then allow for a more dynamic application design in terms of decisions, actions, and algorithms. Increasing the flexibility and responsiveness of an application towards changing network/compute conditions can provide application resilience and is expected to become increasingly important in this context. As an example, advanced self-awareness could entail that an application is designed to make service requests towards the network and compute platforms not only for the worst-case scenario with respect to network and compute performance (i.e., targeting the minimum performance requirements) but also for a “normal” or “preferred” operation mode. That is, the application is aware of its changing needs towards network and compute performance based on its own operational state, which may change over time. The application may support one or several specific operation modes, all of which should be well understood by the application, e.g., in terms of performance requirements and expected execution guarantees, as well as the traffic characteristics and compute needs of each operation mode.
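As an illustration of a service specification with more than one operation mode, the sketch below captures a “preferred” and a “minimum” mode together with traffic characteristics, compute demand, and the availability at which each requirement must hold. All field names and values are hypothetical; no standardized format is implied.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class ModeRequirement:
    mode: str                  # e.g. "preferred" or "minimum"
    max_latency_ms: float      # end-to-end latency bound
    availability: float        # fraction of time the bound must hold
    uplink_mbps: float         # traffic characteristic: data rate
    packet_interval_ms: float  # traffic characteristic: periodicity
    compute_vcpus: float       # compute demand
    max_exec_time_ms: float    # bounded execution time per request


@dataclass
class ServiceSpecification:
    application: str
    modes: List[ModeRequirement] = field(default_factory=list)

    def to_json(self) -> str:
        # Serialize nested dataclasses, e.g., for transfer over an exposure API.
        return json.dumps(asdict(self), indent=2)


# Hypothetical values for the offloaded AMR navigation component.
AMR_NAVIGATION_SPEC = ServiceSpecification(
    application="amr-navigation",
    modes=[
        ModeRequirement("preferred", 20.0, 0.9999, 15.0, 10.0, 4.0, 15.0),
        ModeRequirement("minimum", 50.0, 0.99999, 5.0, 50.0, 2.0, 40.0),
    ],
)
```

In this sketch the minimum mode asks for looser performance but higher availability, reflecting that the fallback behavior must hold more reliably than the preferred one.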

Awareness, planning, and prediction of an application’s service specifications into the future are expected to become increasingly important in this context. In particular, when facing resource constraints from network and/or compute, re-planning and re-assessment may be required more frequently.

5.1.2. Network Self-Awareness, Decisions, and Actions

For network services providing more than best-effort guarantees, the network needs to be able to specify which services can be offered (in terms of supported performance levels) together with an assessment of the dependability (availability, reliability, maintainability) of such services. This means it should understand which performance levels (e.g., related to latency, jitter, data rate, etc.) it can guarantee at which availability level. Application developers should cater for an application design that can benefit from such insights, one that provides not only high performance to the application but also the resilience to adapt to changing network characteristics. The network needs to continuously monitor and assess its own capabilities to obtain awareness of when, where, and under which conditions (e.g., traffic load) the specified connectivity services can be guaranteed. The network should not only be aware of its capabilities at any specific moment in time during its operation, but should also be able to plan and predict its capabilities into the future. To provide dependable network connectivity, in addition to an anticipated (e.g., average) performance level, the level of guarantee that can be associated with a performance level needs to be assessed, at least in order of magnitude (i.e., quantile prediction). If a bottleneck is identified, the network should be able to assess which actions could be taken to mitigate it.
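The quantile idea can be sketched with an empirical estimate over measured latencies: a latency bound is offered at a given availability level only if the corresponding quantile of the observations stays within the bound. This is a minimal sketch assuming stationary historical samples and the nearest-rank quantile definition; predicting capabilities into the future would replace the samples by a forecast distribution.

```python
import math
from typing import Sequence


def latency_quantile(samples_ms: Sequence[float], q: float) -> float:
    """Empirical q-quantile of latency samples (nearest-rank method)."""
    if not samples_ms or not 0.0 < q <= 1.0:
        raise ValueError("need at least one sample and 0 < q <= 1")
    ordered = sorted(samples_ms)
    rank = math.ceil(q * len(ordered))  # 1-based rank
    return ordered[rank - 1]


def can_guarantee(samples_ms: Sequence[float], bound_ms: float,
                  availability: float) -> bool:
    # Offer the bound at this availability level only if the matching
    # quantile of the observed latency distribution stays within it.
    return latency_quantile(samples_ms, availability) <= bound_ms
```

For 100 samples of which 95 are 5 ms and 5 are 30 ms, a 10 ms bound can be offered at 90% availability but not at 99%, even though the average latency (6.25 ms) is well below the bound.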

5.1.3. Compute Self-Awareness, Decisions, and Actions

For compute services providing guarantees, the compute infrastructure needs to be able to specify which services can be offered together with an assessment of the dependability (availability, reliability, maintainability) of these services. If more detailed capabilities can be guaranteed (e.g., related to fault tolerance and bounded compute execution times), these should be visible to application developers. The compute infrastructure needs to continuously monitor and assess its own capabilities to obtain awareness of when, where, and under which conditions the specified compute services can be guaranteed. The compute infrastructure should also extend its self-awareness so that it can plan and predict its capabilities into the future. If the bottleneck or root cause of a limitation is identified, the compute infrastructure should be able to assess which actions could be taken to improve the compute infrastructure.

5.2. Exposure Mechanisms and Interfaces

Exposure mechanisms and interfaces play a crucial role in the information exchange between the 3Cs domains and constitute one of the identified building blocks towards co-design. Exposure refers to the capability of one domain to provide information about its capabilities and characteristics to external consumers via some API. It also allows service requests or configuration requests to be received. The need for standardized interfaces and mechanisms has been identified, and work on them is ongoing. For example, regarding interfaces towards the network infrastructure, [53] describes the need of industrial automation systems to obtain information about network capabilities and to request connectivity services. In [54,55], it has been demonstrated how an industrial automation system can obtain characteristics from a 5G network, configure connectivity relations between industrial automation assets via 5G, and request connectivity services with appropriate QoS according to the needs of the applications running between the industrial automation assets.

While many parameters and characteristics could potentially be exchanged between application, compute, and network to increase knowledge and insights among the 3Cs domains, the information exchanged should be limited to what is actually used and provides value; that is, a purpose-driven information exchange is desirable. Typical purpose-driven information elements sent from the application to the network and compute infrastructure are requests, e.g.:

- Requests for specific connectivity and compute services or a change in these;

- Requests for network and compute information and requests for subscribing to a specific information channel from network and compute elements, e.g., notifications about changes in network and compute conditions.

With increased self-awareness, every domain can choose to expose more information, as well as more advanced and potentially more insightful information, to other domains. However, not every insight obtained in a domain is relevant to the other domains at all times. Therefore, information and insights should also be shared in a purpose-driven way. Suitable data exchange patterns include, e.g., publish–subscribe and request–reply patterns.
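A purpose-driven publish–subscribe exchange can be sketched as a broker in which each subscriber attaches a predicate describing what it actually needs, so that only relevant insights are delivered. The in-process `ExposureBroker` class, the topic name, and the event fields are hypothetical and stand in for a real exposure interface or messaging system.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List, Tuple

Event = Dict[str, Any]
Subscription = Tuple[Callable[[Event], bool], Callable[[Event], None]]


class ExposureBroker:
    """Minimal in-process publish-subscribe broker (illustrative only)."""

    def __init__(self) -> None:
        self._subs: Dict[str, List[Subscription]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Event], None],
                  predicate: Callable[[Event], bool] = lambda e: True) -> None:
        # The predicate expresses the consumer's purpose, so that only
        # relevant insights are delivered to it.
        self._subs[topic].append((predicate, callback))

    def publish(self, topic: str, event: Event) -> int:
        # Deliver the event to matching subscribers; return how many.
        delivered = 0
        for predicate, callback in self._subs.get(topic, []):
            if predicate(event):
                callback(event)
                delivered += 1
        return delivered


# Usage: the AMR only wants to hear about high predicted latency.
received: List[Event] = []
broker = ExposureBroker()
broker.subscribe("network/latency", received.append,
                 predicate=lambda e: e["p99_ms"] > 50.0)
broker.publish("network/latency", {"area": "plaza", "p99_ms": 20.0})  # filtered
broker.publish("network/latency", {"area": "plaza", "p99_ms": 80.0})  # delivered
```

Here the AMR is notified only when the predicted 99th-percentile latency in an area exceeds 50 ms, rather than on every update from the network.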

5.3. Example Flow of Interactions

Given the building blocks of co-design illustrated in Figure 6 and described in the previous sections, an example flow of interactions for degree-3 co-design is outlined below.

- Self-awareness: All entities (network, application, compute) evaluate their own needs and requirements at suitable time intervals, keeping an updated view. Each entity performs this independently.

- Exposure: Each entity exposes its self-awareness information via a suitable method, giving the other entities the possibility to consume this knowledge.

- Planning and decision making: Each entity acts based on its self-awareness and by consuming exposed information and potential requests from the other entities. If there are alternative actions or conflicts, or once a decision on an action has been made, an iterative process is foreseen, e.g., starting again with updating self-awareness.

7. Summary and Conclusions

This article has introduced a framework that provides a structured approach for analyzing and developing applications in the cyber–physical continuum. The analysis of the state of the art and of observed trends in industry and academia (Section 3) has shown both the need for, and several ongoing trends and developments towards, a highly integrated design of CPSs. The increasing adoption of wireless communication for CPSs, as well as trends towards cloudification and cloud-native implementations, are creating the prerequisites for a more integrated application design for CPSs. Combined with the general Industry 4.0 vision of flexible interaction and improved interoperability, these trends call for tighter interaction between different domains and ecosystems. Therefore, the term Communication–Compute–Control co-design has emerged as a collective term for CPS design approaches that consider and exploit insights from all three domains.

The prior work analysis (Section 3) showed that contributions to the area of 3Cs co-design come from the different domains, that the need for an overarching concept has been identified, and that first steps have been taken in this direction, both in conceptual work and in practical studies. However, an overarching framework is missing, which this article aims to address. The proposed 3Cs co-design framework is described in detail in Section 4, explaining the different degrees of co-design into which an application can be categorized depending on its needs and requirements. The co-design approach is exemplified for an autonomous mobile robot co-designed with different degrees, showing how the different degrees affect both the application design and the outcome of the use case. The main building blocks of co-design have been outlined in Section 5. A discussion on tradeoffs and important parameters that affect choices when co-designing applications has been presented in Section 6, which also describes key research and development questions for future work.

To conclude, 3Cs co-design is a promising research and development field aiming for better application performance, improved resource efficiency, and more resilient design of CPSs, which requires interdisciplinary efforts. Its impact stretches all the way from concrete near-term application implementation design to futuristic concept development. There are varying degrees of complexity in co-design due to the different domain interactions, and the many tradeoffs that need to be handled. However, with a structured approach, it is possible to break down complexity and move one step closer to the vision of co-designed applications for CPSs.

Author Contributions

Conceptualization, methodology, validation, writing: L.G., J.S., J.A., A.H.H., N.R. and C.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the European Union’s Horizon 2020 Research and Innovation Program through the H2020 Project DETERMINISTIC6G under Grant 101096504.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors Leefke Grosjean and Aitor Hernandez Herranz were employed by the company Ericsson, Sweden. The authors Joachim Sachs and Junaid Ansari were employed by the company Ericsson GmbH, Germany. The author Norbert Reider was employed by the company Ericsson Telecommunications Ltd., Hungary. The author Christer Holmberg was employed by the company Oy L M Ericsson Ab, Finland. The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Alur, R. Principles of Cyber-Physical Systems; The MIT Press: Cambridge, MA, USA, 2015; ISBN 978-026-254-892-2. [Google Scholar]

- Karapantelakis, A.; Sahlin, B.; Balakrishnan, B.; Roeland, D.; Rune, G.; Wikström, G.; Wiemann, H.; Arkko, J.; Vandikas, K.; Coldrey, M.; et al. Co-Creating a Cyber Physical World with 6G. 2024. Available online: https://www.ericsson.com/en/reports-and-papers/white-papers/co-creating-a-cyber-physical-world (accessed on 3 December 2024).

- Zhao, G.; Imran, M.; Pang, Z.; Chen, Z.; Li, L. Toward Real-Time Control in Future Wireless Networks: Communication-Control Co-Design. IEEE Commun. Mag. 2019, 57, 138–144.

- Noor-A-Rahim, M.; John, J.; Firyaguna, F.; Sherazi, H.; Kushch, S.; Vijayan, A.; O’Connell, E.; Pesch, D.; O’Flynn, B.; O’Brien, W.; et al. Wireless Communications for Smart Manufacturing and Industrial IoT: Existing Technologies, 5G and Beyond. Sensors 2023, 23, 73.

- Yu, R.; Xue, G. Principles and Practices for Application-Network Co-Design in Edge Computing. IEEE Netw. 2023, 37, 137–144.

- Guo, F.; Peng, M.; Li, N.; Sun, Q.; Li, X. Communication-Computing Built-in-Design in Next-Generation Radio Access Networks: Architecture and Key Technologies. IEEE Netw. 2024, 38, 100–108.

- Tarkoma, S. Publish/Subscribe Systems: Design and Principles; John Wiley & Sons: Hoboken, NJ, USA, 2012; ISBN 978-111-995-154-4.

- 5G Use Cases, Ericsson. 2023. Available online: https://www.ericsson.com/4ac5b1/assets/local/cases/customer-cases/2016/documents/5g-use-cases-ericsson.pdf (accessed on 3 December 2024).

- Ansari, J.; Lange, D.; Overbeck, D. Report on 5G Capabilities for Enhanced Industrial Manufacturing Processes, Deliverable 3.4, 5G-SMART. 2022. Available online: https://5gsmart.eu/wp-content/uploads/5G-SMART-D3.4-v1.0.pdf (accessed on 3 December 2024).

- Grosjean, L.; Landernäs, K.; Sayrac, B.; Dobrijevic, O.; König, N.; Harutyunyan, D.; Patel, D.; Monserrat, J.F.; Sachs, J. 5G-Enabled Smart Manufacturing—A booklet by 5G-SMART. 2022. Available online: https://5gsmart.eu/wp-content/uploads/2022-5G-SMART-Booklet.pdf (accessed on 3 December 2024).

- Jonsson, M.; Kunert, K. Towards Reliable Wireless Industrial Communication with Real-Time Guarantees. IEEE Trans. Ind. Inform. 2009, 5, 429–442.

- Park, P.; Coleri, E.S.; Fischione, C.; Lu, C.; Johansson, K.H. Wireless Network Design for Control Systems: A Survey. IEEE Commun. Surv. Tutor. 2018, 20, 978–1013.

- Willig, A. Recent and Emerging Topics in Wireless Industrial Communications: A Selection. IEEE Trans. Ind. Inform. 2008, 4, 102–124.

- Deng, S.; Zhao, H.; Huang, B.; Zhang, C.; Chen, F.; Deng, Y.; Yin, J.; Dustdar, S.; Zomaya, A.Y. Cloud-Native Computing: A Survey from the Perspective of Services. Proc. IEEE 2024, 112, 12–46.

- Jin, J.; Yu, K.; Kua, J.; Zhang, N.; Pang, Z.; Han, Q. Cloud-Fog Automation: Vision, Enabling Technologies, and Future Research Directions. IEEE Trans. Ind. Inform. 2024, 20, 1039–1054.

- Gil, G.; Corujo, D.; Pedreiras, P. Cloud Native Computing for Industry 4.0: Challenges and Opportunities. In Proceedings of the 26th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Västerås, Sweden, 7–10 September 2021; pp. 1–4.

- Yu, W.; Liang, F.; He, X.; Hatcher, W.; Lu, C.; Lin, J.; Yang, X. A Survey on the Edge Computing for the Internet of Things. IEEE Access 2018, 6, 6900–6919.

- Liu, Y.; Hernandez, A. Enabling 5G QoS configuration capabilities for IoT applications on container orchestration platform. In Proceedings of the 14th IEEE International Conference on Cloud Computing Technology and Science (CloudCom), Napoli, Italy, 4–6 December 2023; pp. 63–68.

- Skarin, P.; Tärneberg, W.; Årzén, K.; Kihl, M. Towards Mission-Critical Control at the Edge and Over 5G. In Proceedings of the IEEE International Conference on Edge Computing (EDGE), San Francisco, CA, USA, 2–7 July 2018; pp. 50–57.

- Abeni, L.; Andreoli, R.; Gustafsson, H.; Mini, R.; Cucinotta, T. Fault Tolerance in Real-Time Cloud Computing. In Proceedings of the 26th IEEE International Symposium on Real-Time Distributed Computing (ISORC), Nashville, TN, USA, 23–25 May 2023; pp. 170–175.

- Afrin, M.; Jin, J.; Rahman, A.; Wan, J.; Hossain, E. Resource Allocation and Service Provisioning in Multi-Agent Cloud Robotics: A Comprehensive Survey. IEEE Commun. Surv. Tutor. 2021, 23, 842–870.

- Peres, R.; Jia, X.; Lee, J.; Sun, K.; Colombo, A.; Barata, J. Industrial Artificial Intelligence in Industry 4.0—Systematic Review, Challenges and Outlook. IEEE Access 2020, 8, 220121–220139.

- Erl, T.; Monroy, E. Cloud Computing: Concepts, Technology, Security, and Architecture, 2nd ed.; Pearson Education: London, UK, 2023; ISBN 978-013-805-225-6.

- Boberg, C.; Kakhadze, G.; Axelsson, M. From CPaaS to a Global Network API Platform, Enabling CSPs to Monetize on 5G. 2024. Available online: https://www.ericsson.com/en/reports-and-papers/white-papers/global-network-api-platform-to-monetize-5g (accessed on 3 December 2024).

- Buerkle, A.; Eaton, W.; Al-Yacoub, A.; Zimmer, M.; Kinnell, P.; Henshaw, M.; Coombes, M.; Chen, W.-H.; Lohse, N. Towards industrial robots as a service (IRaaS): Flexibility, usability, safety and business models. Robot. Comput.-Integr. Manuf. 2023, 81, 102484.

- OPC Foundation, OPC Unified Architecture (UA), Part 1: Overview and Concepts. 2022. Available online: https://reference.opcfoundation.org/Core/Part1/v105/docs/ (accessed on 3 December 2024).

- Plattform Industrie 4.0, Details of the Asset Administration Shell—Part 1. 2022. Available online: https://www.plattform-i40.de/IP/Redaktion/EN/Downloads/Publikation/Details_of_the_Asset_Administration_Shell_Part1_V3.pdf (accessed on 3 December 2024).

- TM Forum, Intent-Based Automation. Available online: https://www.tmforum.org/opendigitalframework/intent-based-automation/ (accessed on 3 December 2024).

- Linux Foundation, CAMARA—The Telco Global API Alliance. Available online: https://camaraproject.org/ (accessed on 3 December 2024).

- GSMA, Open Gateway. Available online: https://www.gsma.com/solutions-and-impact/gsma-open-gateway/ (accessed on 3 December 2024).

- GSMA, Operator Platform. Available online: https://www.gsma.com/solutions-and-impact/technologies/networks/operator-platform-hp/ (accessed on 3 December 2024).

- Lee, K.; Candell, R.; Bernhard, H.-P.; Cavalcanti, D.; Pang, Z.; Val, I. Reliable, High-Performance Wireless Systems for Factory Automation. NIST Interagency/Internal Report (NISTIR)–8317. 2020. Available online: https://nvlpubs.nist.gov/nistpubs/ir/2020/NIST.IR.8317.pdf (accessed on 3 December 2024).

- Saha, O.; Dasgupta, P. A Comprehensive Survey of Recent Trends in Cloud Robotics Architectures and Applications. Robotics 2018, 7, 47.

- Savaglio, C.; Fortino, G.; Zhou, M.; Ma, J. Device-Edge-Cloud Continuum: Paradigms, Architectures and Applications; Springer: Berlin/Heidelberg, Germany, 2023; ISBN 978-303-142-193-8.

- Liu, Y.; Lan, D.; Pang, Z.; Karlsson, M.; Gong, S. Performance evaluation of containerization in edge-cloud computing stacks for industrial applications: A client perspective. IEEE Open J. Ind. Electron. Soc. 2021, 2, 153–168.

- Bentaleb, O.; Belloum, A.S.; Sebaa, A.; El-Maouhab, A. Containerization technologies: Taxonomies, applications and challenges. J. Supercomput. 2022, 78, 1144–1181.

- Liu, Y.; Hernandez, A.; Sundin, R.C. Robokube: Establishing a new foundation for the cloud native evolution in robotics. In Proceedings of the 10th International Conference on Automation, Robotics and Applications (ICARA), Athens, Greece, 22–24 February 2024; pp. 23–27.

- Zhang, X.M.; Han, Q.-L.; Ge, X.; Ding, D.; Ding, L.; Yue, D.; Peng, C. Networked control systems: A survey of trends and techniques. IEEE/CAA J. Autom. Sin. 2020, 7, 1–17.

- Hespanha, J.P.; Naghshtabrizi, P.; Xu, Y.G. A survey of recent results in networked control systems. Proc. IEEE 2007, 95, 138–162.

- Gupta, R.A.; Chow, M.Y. Networked control system: Overview and research trends. IEEE Trans. Ind. Electron. 2010, 57, 2527–2535.

- Gungor, V.C.; Hancke, G.P. Industrial wireless sensor networks: Challenges, design principles, and technical approaches. IEEE Trans. Ind. Electron. 2009, 56, 4258–4265.

- Wildhagen, S.; Pezzutto, M.; Schenato, L.; Allgöwer, F. Self-triggered MPC robust to bounded packet loss via a min-max approach. In Proceedings of the 61st IEEE Conference on Decision and Control (CDC), Cancún, Mexico, 6–9 December 2022; pp. 7670–7675.

- Wang, Y.; Wu, S.; Lei, C.; Jiao, J.; Zhang, Q. A Review on Wireless Networked Control System: The Communication Perspective. IEEE Internet Things J. 2024, 11, 7499–7524.

- Lyu, H.; Bengtsson, A.; Nilsson, S.; Pang, Z.; Isaksson, A.; Yang, G. Latency-Aware Control for Wireless Cloud Fog Automation: Framework and Case Study. IEEE Trans. Autom. Sci. Eng. 2023, 1–11.

- Qiao, Y.; Fu, Y.; Yuan, M. Communication–Control Co-Design in Wireless Networks: A Cloud Control AGV Example. IEEE Internet Things J. 2023, 10, 2346–2359.

- Lyu, H.; Pang, Z.; Bhimavarapu, K.; Yang, G. Impacts of Wireless on Robot Control: The Network Hardware-in-the-Loop Simulation Framework and Real-Life Comparisons. IEEE Trans. Ind. Inform. 2023, 19, 9255–9265.

- Sharma, G.P.; Patel, D.; Sachs, J.; De Andrade, M.; Farkas, J.; Harmatos, J.; Varga, B.; Bernhard, H.-P.; Muzaffar, R.; Atiq, M.K.; et al. Towards Deterministic Communications in 6G Networks: State of the Art, Open Challenges and the Way Forward. IEEE Access 2023, 11, 106898–106923.

- DETERMINISTIC6G. First Report on DETERMINISTIC6G Architecture. Deliverable D1.2, April 2024. Available online: https://deterministic6g.eu/images/deliverables/DETERMINISTIC6G-D1.2-v1.0.pdf (accessed on 5 December 2024).

- DETERMINISTIC6G. Report on Deterministic Service Design. Deliverable D1.3, January 2025. Available online: https://deterministic6g.eu/images/deliverables/DETERMINISTIC6G-D1.3-v1.0.pdf (accessed on 19 February 2025).

- DETERMINISTIC6G. First Report on 6G Centric Enabler. Deliverable D2.1, December 2023. Available online: https://deterministic6g.eu/images/deliverables/DETERMINISTIC6G-D2.1-v2.0.pdf (accessed on 5 December 2024).

- DETERMINISTIC6G. Digest on First DetCom Simulator Framework Release. Deliverable D4.1, December 2023. Available online: https://deterministic6g.eu/images/deliverables/DETERMINISTIC6G-D4.1-v1.0.pdf (accessed on 19 February 2025).

- Damigos, G.; Saradagi, A.; Sandberg, S.; Nikolakopoulos, G. Environmental Awareness Dynamic 5G QoS for Retaining Real Time Constraints in Robotic Applications. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024; pp. 12069–12075.

- 5G-ACIA, Exposure of 5G Capabilities for Connected Industries and Automation Applications. 2021. Available online: https://5g-acia.org/whitepapers/exposure-of-5g-capabilities-for-connected-industries-and-automation-applications-2/ (accessed on 5 December 2024).

- Seres, G.; Schulz, D.; Dobrijevic, O.; Karaağaç, A.; Przybysz, H.; Nazari, A.; Chen, P.; Mikecz, M.L.; Szabó, Á.D. Creating programmable 5G systems for the Industrial IoT. Ericsson Technol. Rev. 2022, 10, 2–12.

- Karaagac, A.; Dobrijevic, O.; Schulz, D.; Seres, G.; Nazari, A.; Przybysz, H.; Sachs, J. Managing 5G Non-Public Networks from Industrial Automation Systems. In Proceedings of the 19th IEEE International Conference on Factory Communication Systems (WFCS), Pavia, Italy, 26–28 April 2023; pp. 1–8.

- Industry IoT Consortium. The Industrial Internet of Things Connectivity Framework. 2022. Available online: https://www.iiconsortium.org/iicf/ (accessed on 3 February 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).