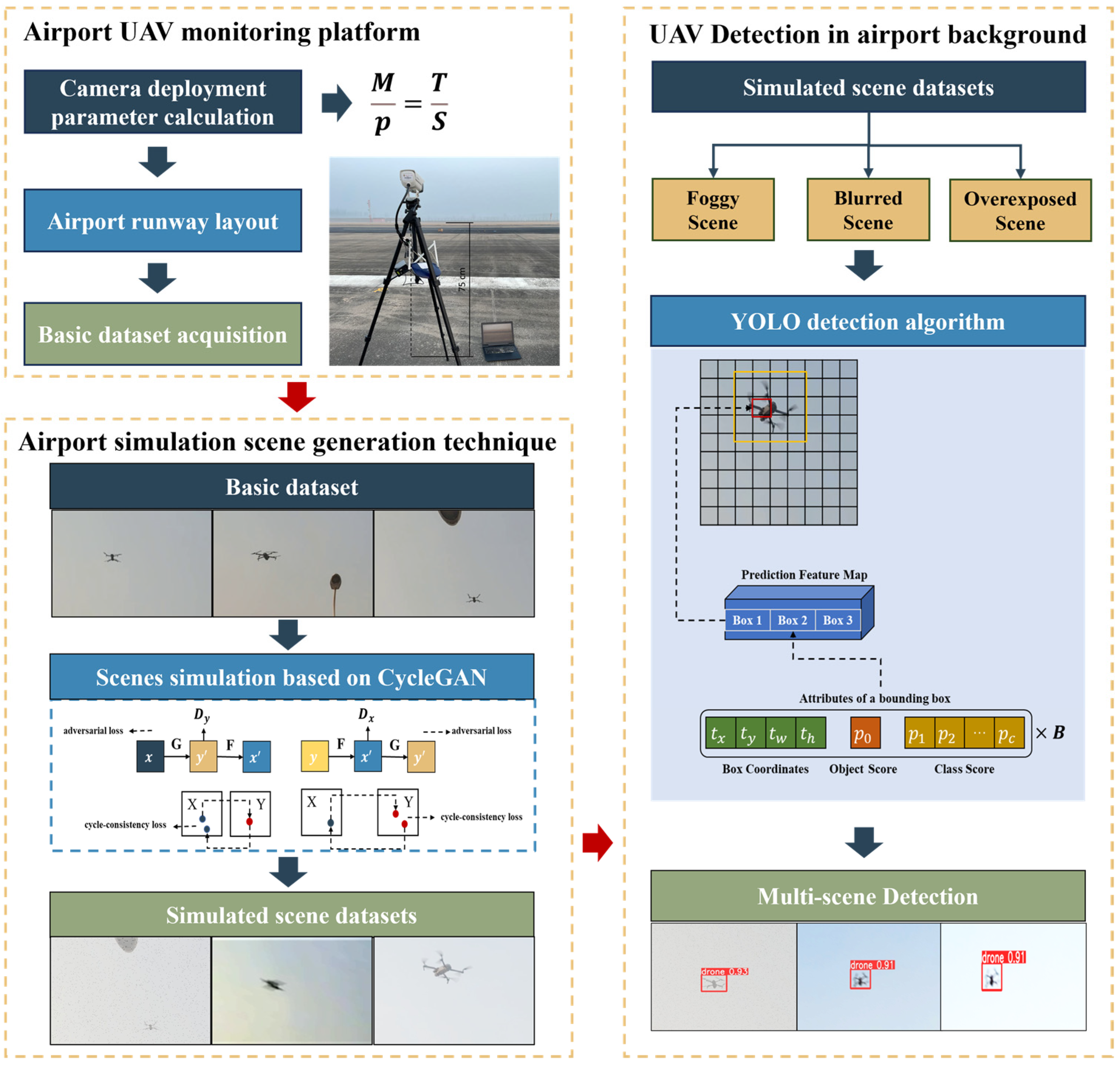

A Novel Anti-UAV Detection Method for Airport Safety Based on Style Transfer Learning and Deep Learning

Abstract

1. Introduction

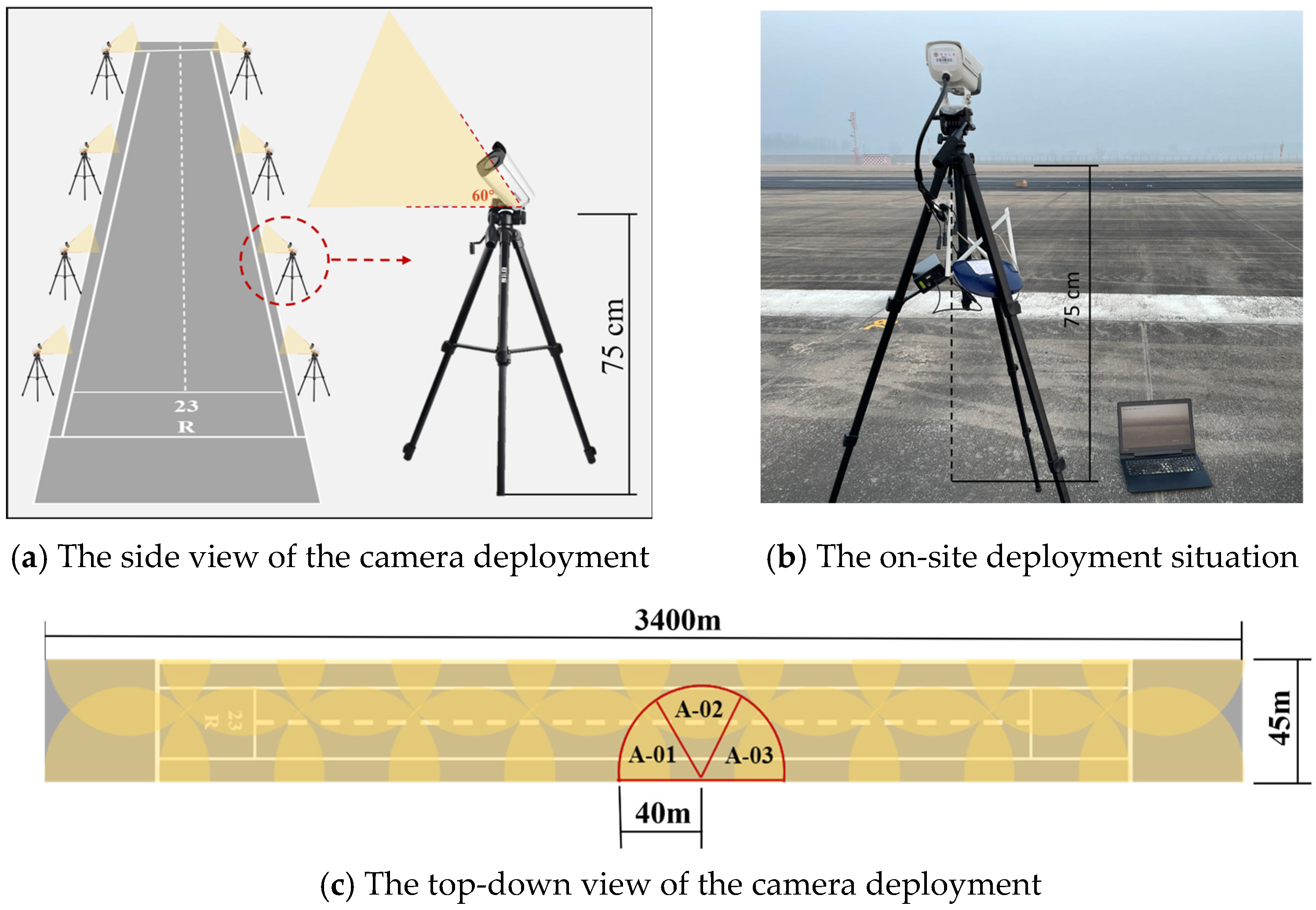

2. Establishment of a UAV Monitoring Platform

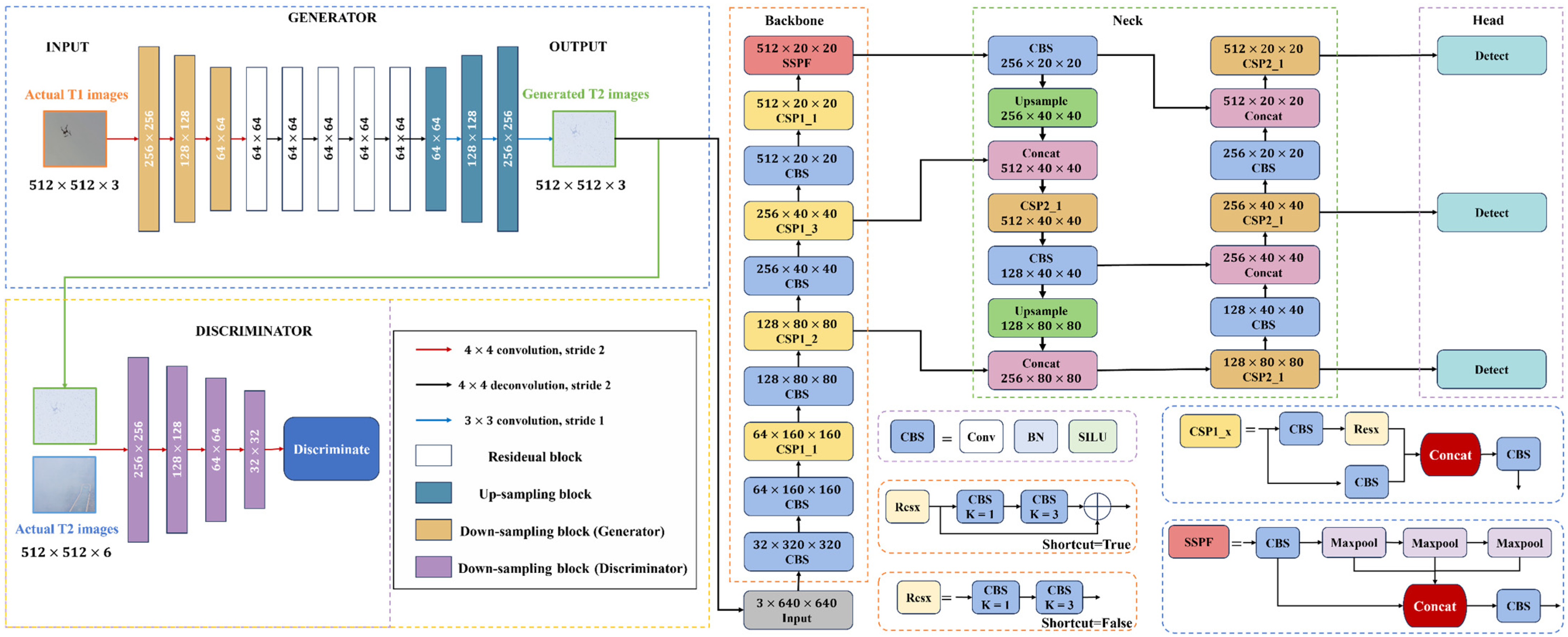

3. Airport Simulation Scene Generation Technique Based on CycleGAN

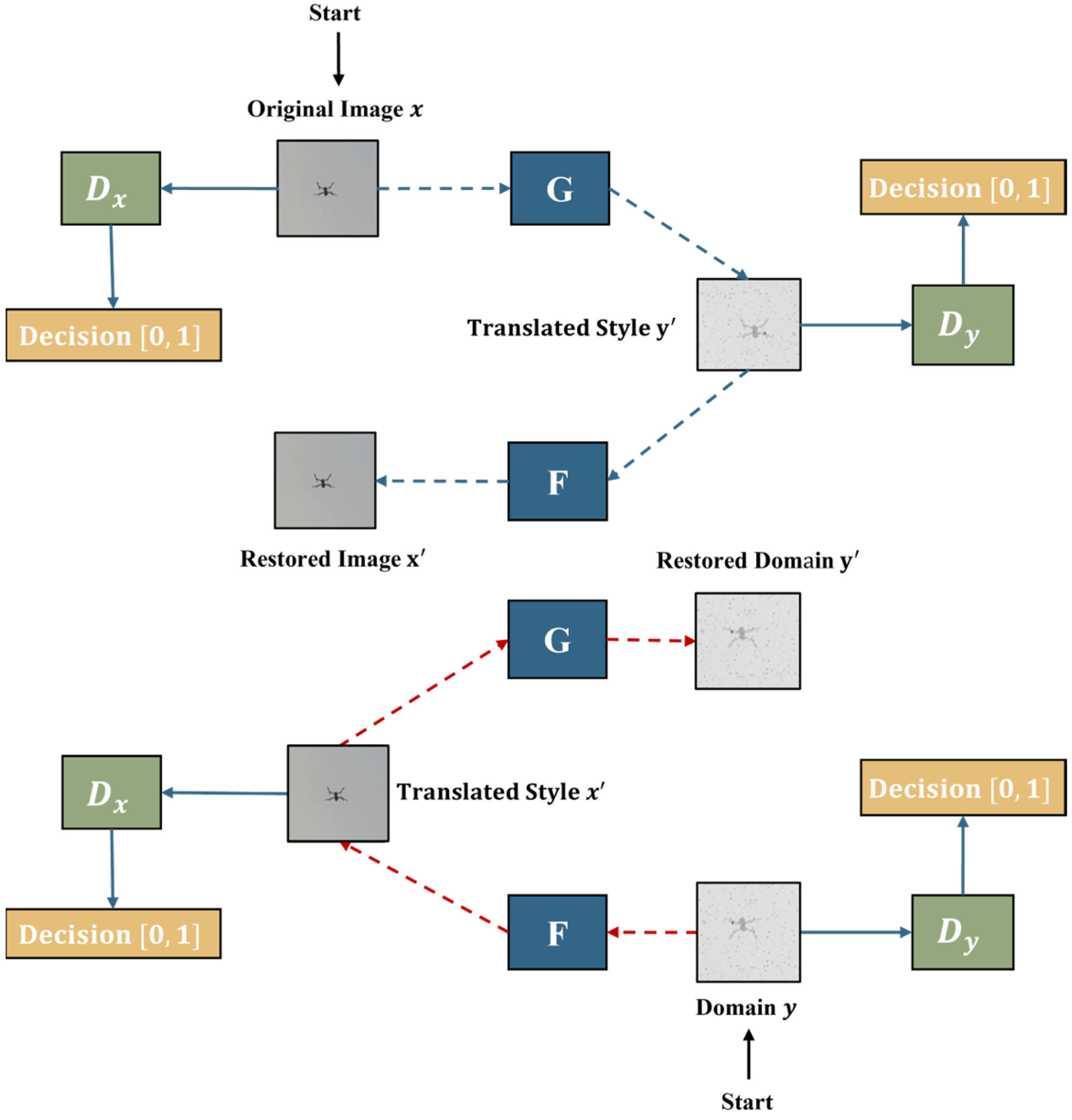

3.1. Airport Simulation Scene Generation Based on CycleGAN

3.1.1. Principle of CycleGAN

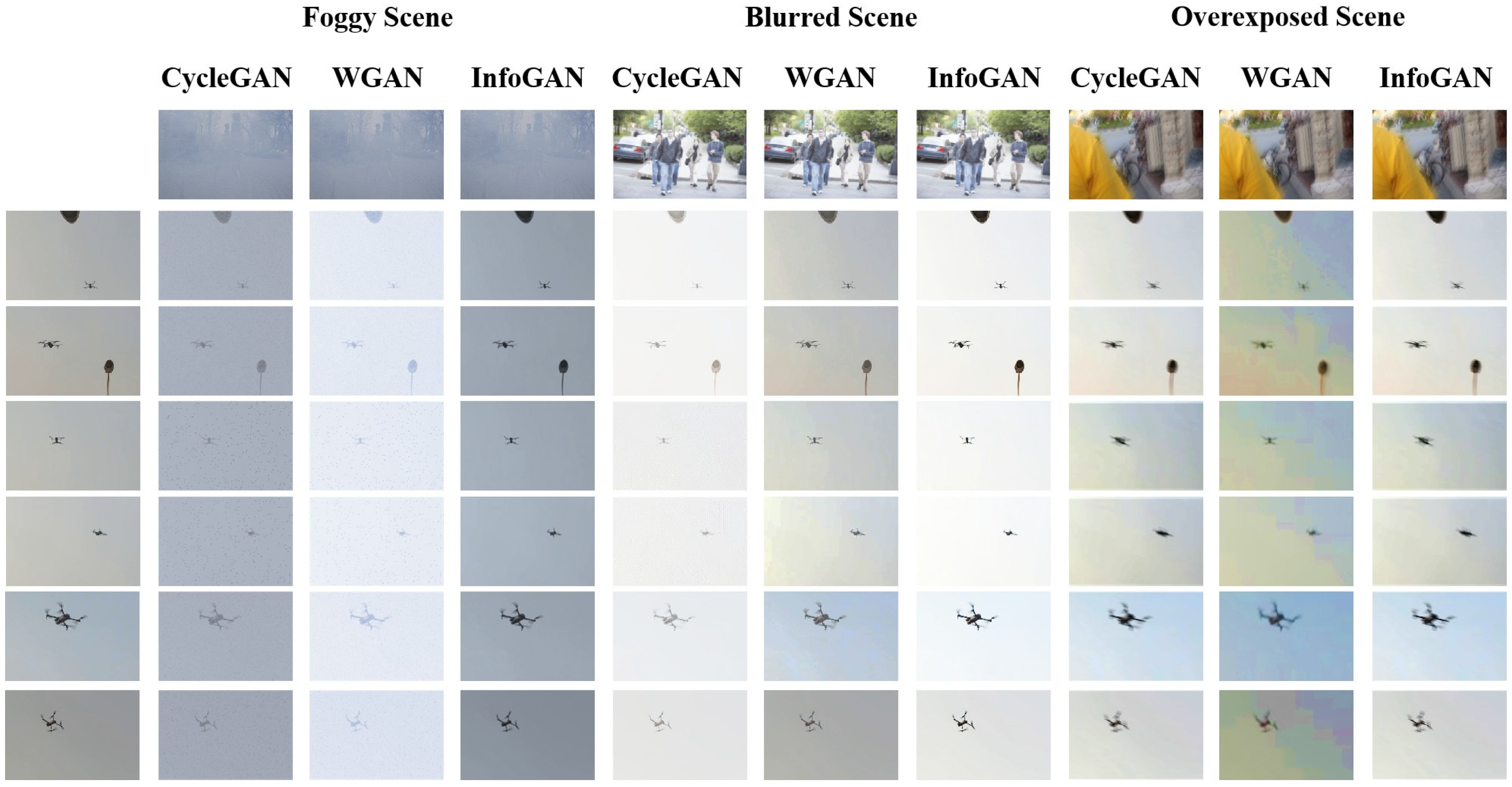
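For reference, the objective of the original CycleGAN [19] is given below in that paper's notation: generators $G: X \to Y$ and $F: Y \to X$, discriminators $D_X$ and $D_Y$, and a cycle-consistency weight $\lambda$ (set to 10 in [19]). Reading $X$ as clear airport UAV images and $Y$ as a fog, blur, or overexposure domain is an illustrative assumption; the exact losses and weights used in this study may differ.

```latex
\begin{aligned}
\mathcal{L}_{\mathrm{GAN}}(G, D_Y, X, Y) &= \mathbb{E}_{y \sim p_{\mathrm{data}}(y)}\left[\log D_Y(y)\right] + \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\left[\log\left(1 - D_Y(G(x))\right)\right] \\
\mathcal{L}_{\mathrm{cyc}}(G, F) &= \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\left[\lVert F(G(x)) - x \rVert_1\right] + \mathbb{E}_{y \sim p_{\mathrm{data}}(y)}\left[\lVert G(F(y)) - y \rVert_1\right] \\
\mathcal{L}(G, F, D_X, D_Y) &= \mathcal{L}_{\mathrm{GAN}}(G, D_Y, X, Y) + \mathcal{L}_{\mathrm{GAN}}(F, D_X, Y, X) + \lambda\, \mathcal{L}_{\mathrm{cyc}}(G, F) \\
G^{*}, F^{*} &= \arg\min_{G, F} \max_{D_X, D_Y} \mathcal{L}(G, F, D_X, D_Y)
\end{aligned}
```

The cycle-consistency term is what allows unpaired clear and degraded images to be used: translating an image to the other domain and back must reproduce the original, which discourages the generators from discarding scene content. In practice, [19] replaces the log-likelihood adversarial loss with a least-squares loss for more stable training.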

3.1.2. Generation of Three Simulated Scene Datasets

3.2. Quality Evaluation of Simulated Scene Datasets

4. Anti-UAV Detection Experiment Setup

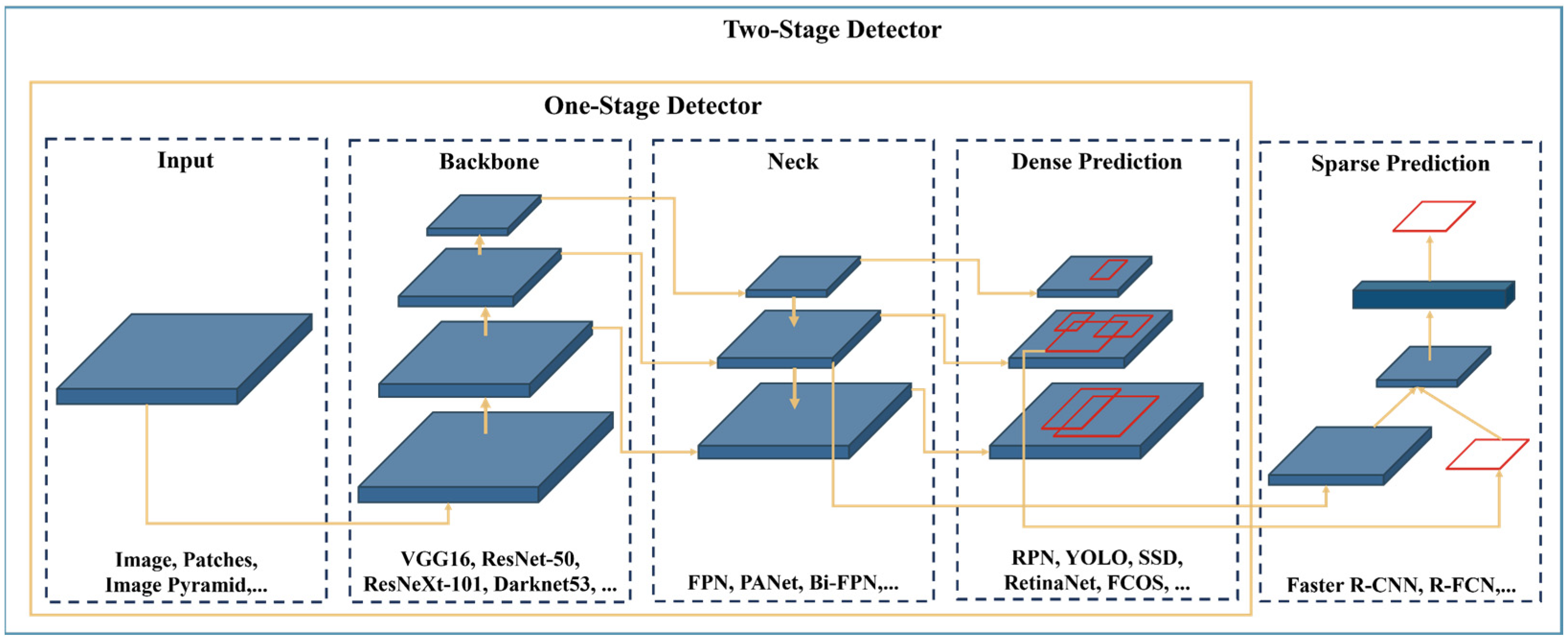

4.1. Deep Learning Object Detection Algorithms

4.2. Test Environment and Parameter Setting

4.3. Evaluation Metrics
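AP, precision, and recall are the metrics reported in the results tables. As a hedged illustration, the sketch below computes single-class AP as the area under the precision-recall curve with all-point interpolation (the PASCAL VOC 2010+ convention). It assumes each detection has already been matched to ground truth as a true or false positive, typically by an IoU threshold such as 0.5; neither the interpolation scheme nor the threshold used in this study is stated here, and the function name `average_precision` is hypothetical.

```python
from typing import List, Tuple


def average_precision(scored: List[Tuple[float, bool]], num_gt: int) -> float:
    """All-point interpolated AP for a single class (hypothetical helper).

    scored: (confidence, is_true_positive) for each detection; matching to
            ground truth (e.g. IoU >= 0.5, an assumption) is done beforehand.
    num_gt: total number of ground-truth objects in the evaluation set.
    """
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    # Rank detections by descending confidence.
    ranked = sorted(scored, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Prepend a point at recall 0 so the first segment is counted.
    mrec = [0.0] + recalls
    mpre = [0.0] + precisions
    # Interpolation: make precision non-increasing from right to left.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas; segments where recall does not change add zero.
    ap = 0.0
    for i in range(1, len(mrec)):
        ap += (mrec[i] - mrec[i - 1]) * mpre[i]
    return ap


# Two ground-truth UAVs; detections listed in arbitrary order.
print(average_precision([(0.7, True), (0.9, True), (0.8, False)], num_gt=2))
```

AP summarizes the whole confidence ranking, whereas the precision and recall columns in the results table describe a single operating point, so the two can rank models differently.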

5. Results and Analysis

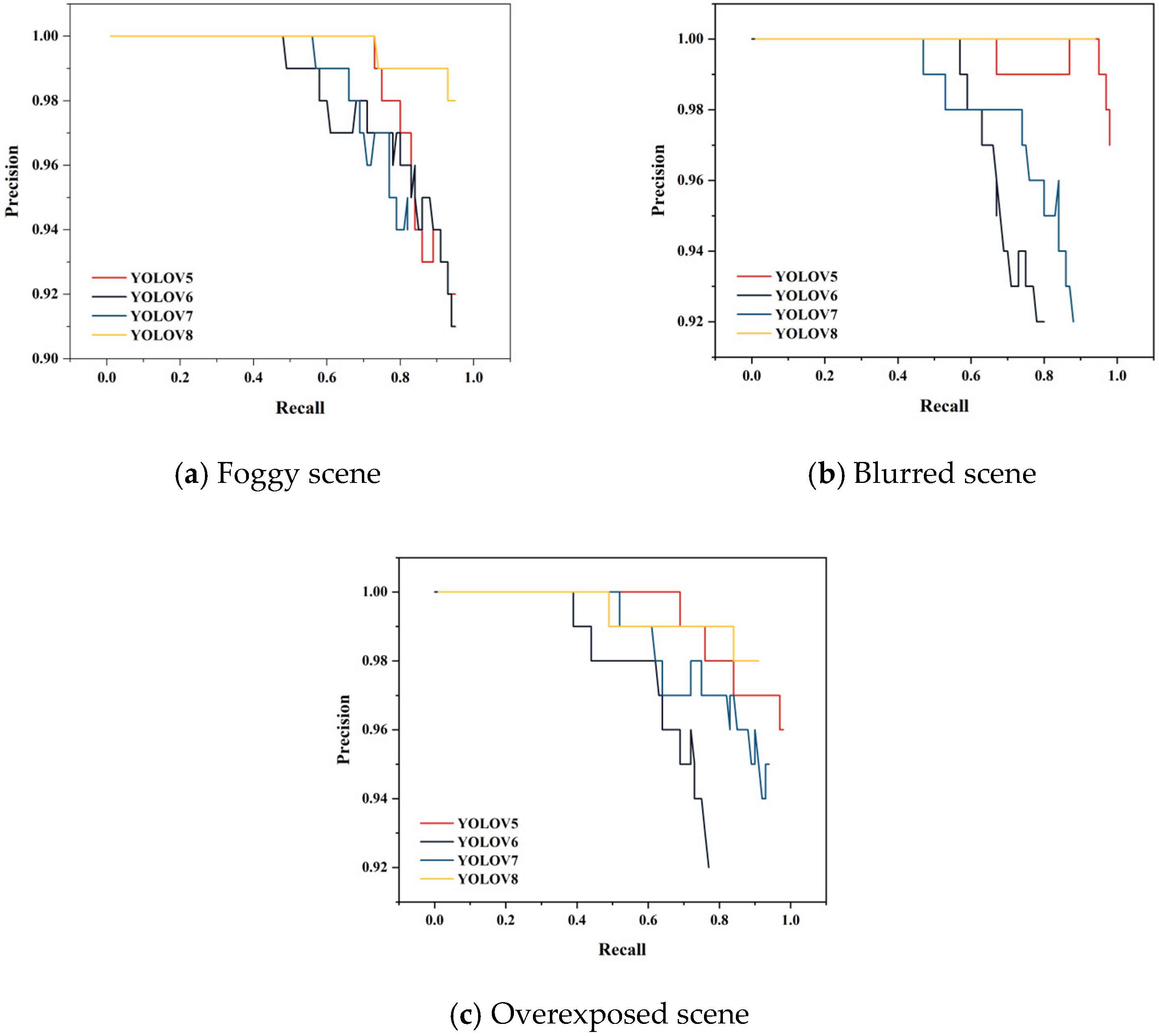

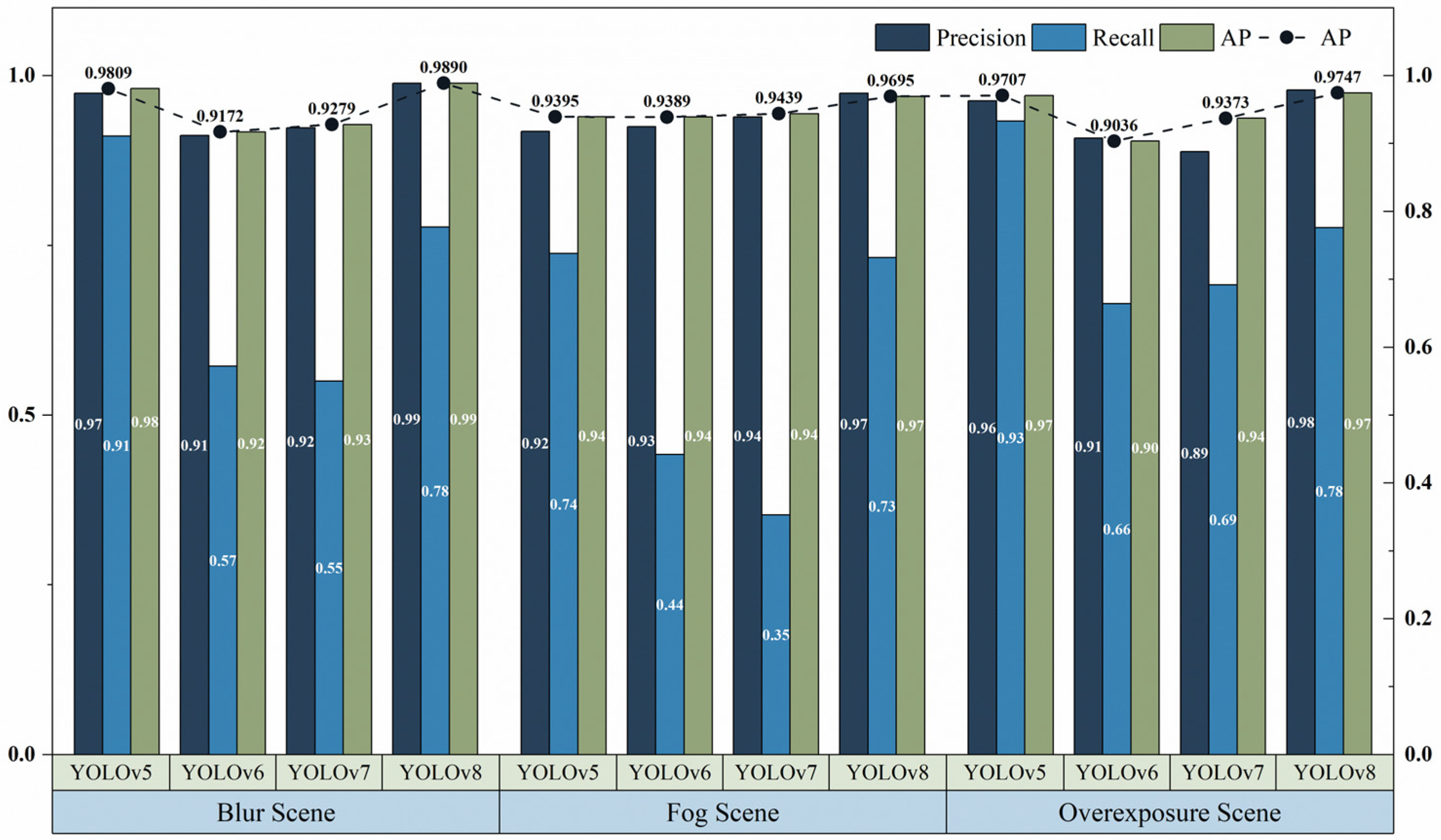

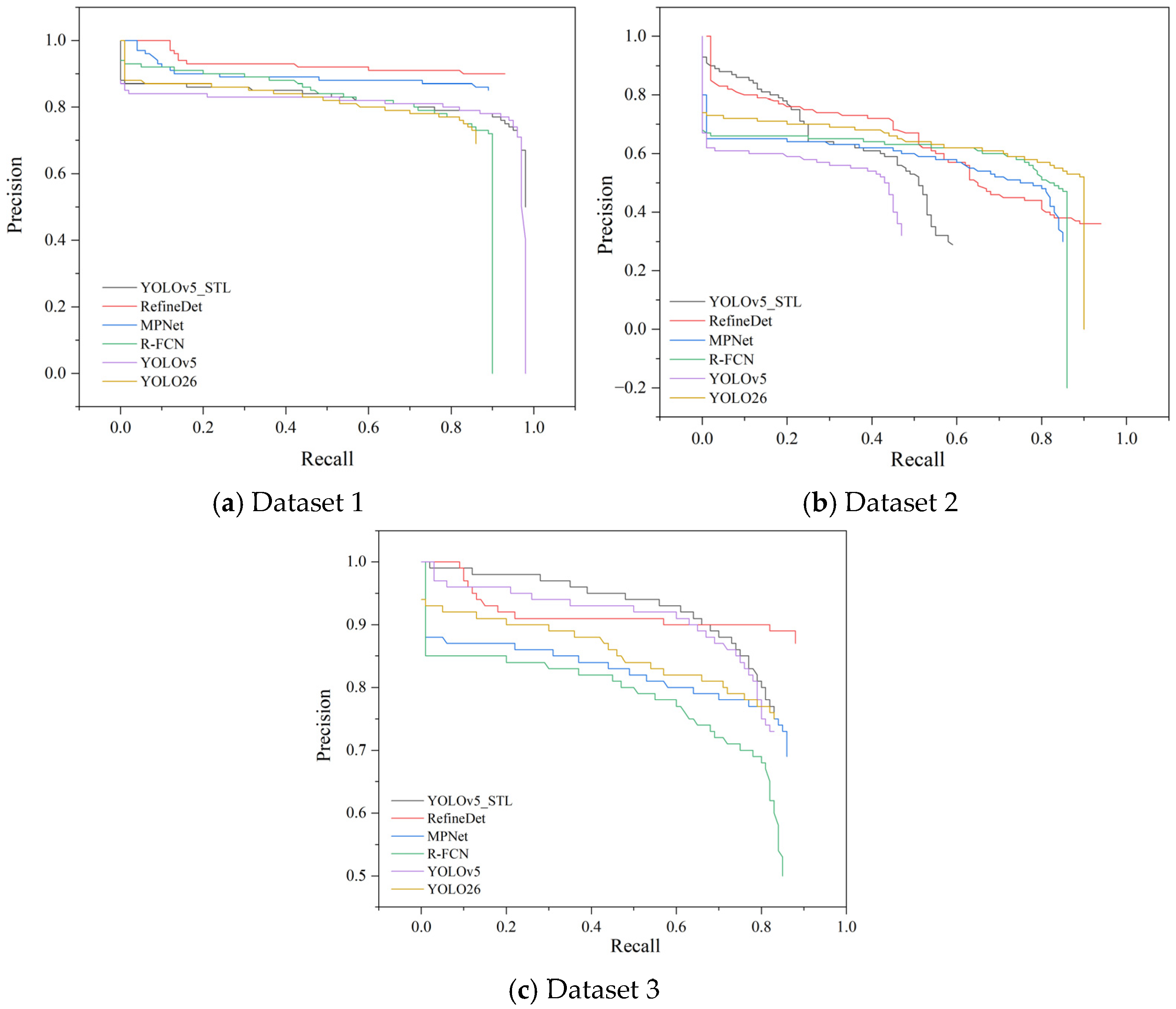

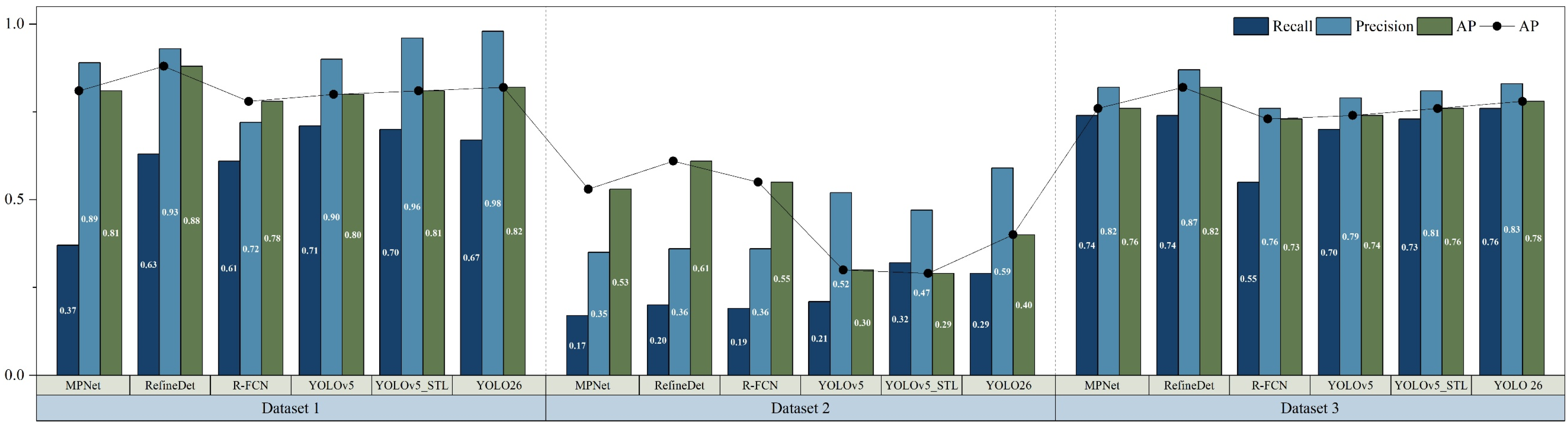

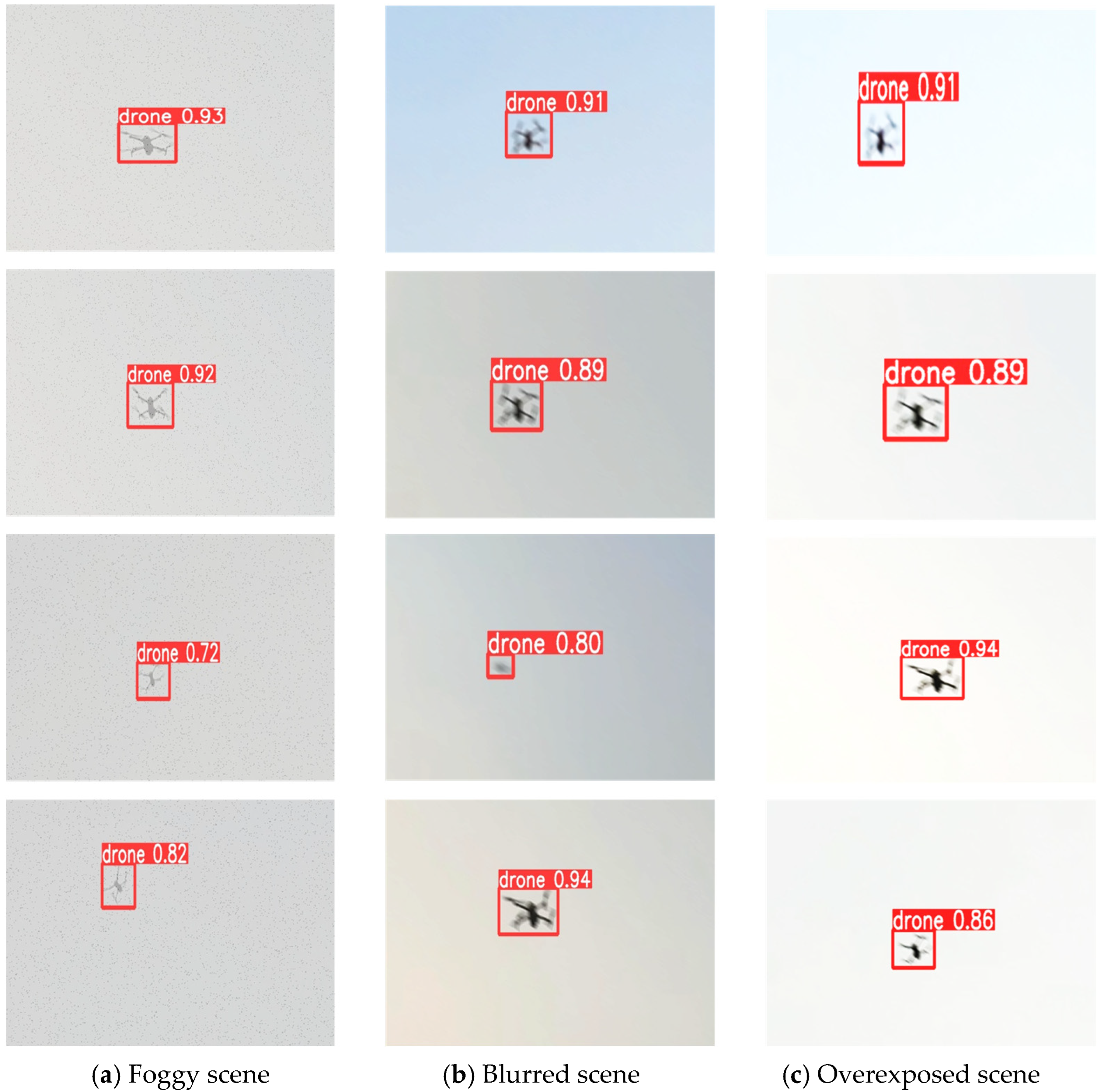

5.1. Performance Comparison and Analysis of Four Detection Models

5.2. Validation on Open Datasets and Field Test Data

6. Conclusions

- (1) The UAV monitoring platform proposed in this study demonstrated its UAV surveillance capability, and the proposed deployment scheme, which ensures full coverage of the anti-UAV detection range, proved to be an effective data acquisition approach.

- (2) Using a generative adversarial network, this study established the first simulated UAV dataset covering various airport backgrounds, which can be used for anti-UAV detection training.

- (3) YOLOv5 exhibited the best overall prediction performance, with the highest recall rates and relatively fast inference, achieving AP values of 93.95%, 98.09%, and 97.07% in the fog, blur, and overexposure scenes, respectively.

- (4) YOLOv5_STL demonstrated superior detection performance on open datasets, showing that the proposed approach is effective for augmenting the training dataset. This augmentation improves the model's generalization capability and its adaptability to diverse scenarios.

- (5) In on-site testing, YOLOv5_STL achieved an AP of 95.37%, which meets airport surveillance requirements.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Zhu, J.; Zhong, J.; Ma, T.; Huang, X.; Zhang, W.; Zhou, Y. Pavement distress detection using convolutional neural networks with images captured via UAV. Autom. Constr. 2022, 133, 103991. [Google Scholar] [CrossRef]

- Siddiqi, M.A.; Iwendi, C.; Jaroslava, K.; Anumbe, N. Analysis on security-related concerns of unmanned aerial vehicle: Attacks, limitations, and recommendations. Math. Biosci. Eng. 2022, 19, 2641–2670. [Google Scholar] [CrossRef] [PubMed]

- Zhou, Y.; Rui, T.; Li, Y.; Zuo, X. A UAV patrol system using panoramic stitching and object detection. Comput. Electr. Eng. 2019, 80, 106473. [Google Scholar] [CrossRef]

- Shakhatreh, H.; Sawalmeh, A.H.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned Aerial Vehicles (UAVs): A Survey on Civil Applications and Key Research Challenges. IEEE Access 2019, 7, 48572–48634. [Google Scholar] [CrossRef]

- Cheng, H.; Li, Y.; Zhang, R.; Zhang, W. Airport-FOD3S: A Three-Stage Detection-Driven Framework for Realistic Foreign Object Debris Synthesis. Sensors 2025, 25, 4565. [Google Scholar] [CrossRef]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the 27th IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916. [Google Scholar] [CrossRef]

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Proceedings of the 29th Annual Conference on Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 7–12 December 2015; Volume 28. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37. [Google Scholar]

- Zhao, J.; Zhang, J.; Li, D.; Wang, D. Vision-based anti-UAV detection and tracking. IEEE Trans. Intell. Transp. Syst. 2022, 23, 25323–25334. [Google Scholar] [CrossRef]

- Shi, Q.; Li, J. Objects detection of UAV for anti-UAV based on YOLOv4. In Proceedings of the 2020 IEEE 2nd International Conference on Civil Aviation Safety and Information Technology (ICCASIT), Weihai, China, 14–16 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1048–1052. [Google Scholar]

- Hu, Y.; Wu, X.; Zheng, G.; Liu, X. Object detection of UAV for anti-UAV based on improved YOLO v3. In Proceedings of the 2019 Chinese Control Conference (CCC), Guangzhou, China, 27–30 July 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 8386–8390. [Google Scholar]

- Zhu, J.; Rong, J.; Kou, W.; Zhou, Q.; Suo, P. Accurate recognition of UAVs on multi-scenario perception with YOLOv9-CAG. Sci. Rep. 2025, 15, 27755. [Google Scholar] [CrossRef]

- Zhong, J.; Huyan, J.; Zhang, W.; Cheng, H.; Zhang, J.; Tong, Z.; Jiang, X.; Huang, B. A deeper generative adversarial network for grooved cement concrete pavement crack detection. Eng. Appl. Artif. Intell. 2023, 119, 105808. [Google Scholar] [CrossRef]

- Li, B.; Guo, H.; Wang, Z. Data augmentation using CycleGAN-based methods for automatic bridge crack detection. Structures 2024, 62, 106321. [Google Scholar] [CrossRef]

- Arezoomandan, S.; Klohoker, J.; Han, D.K. Data augmentation pipeline for enhanced UAV surveillance. In Proceedings of the International Conference on Pattern Recognition, Hammamet City, Tunisia, 25–27 September 2025; Springer: Berlin/Heidelberg, Germany, 2025; pp. 366–380. [Google Scholar]

- Zhu, J.-Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232. [Google Scholar]

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 based on transformer prediction head for object detection on drone-captured scenarios. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 2778–2788. [Google Scholar]

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976. [Google Scholar]

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475. [Google Scholar]

- Arjovsky, M.; Chintala, S.; Bottou, L. Wasserstein GAN. arXiv 2017, arXiv:1701.07875. [Google Scholar]

- Chen, X.; Duan, Y.; Houthooft, R.; Schulman, J.; Sutskever, I.; Abbeel, P. InfoGAN: Interpretable representation learning by information maximizing generative adversarial nets. Adv. Neural Inf. Process. Syst. 2016, 29. [Google Scholar] [CrossRef]

- Wang, S. A hybrid SMOTE and Trans-CWGAN for data imbalance in real operational AHU AFDD: A case study of an auditorium building. Energy Build. 2025, 348, 116447. [Google Scholar] [CrossRef]

- Johnson, J.; Alahi, A.; Li, F.-F. Perceptual Losses for Real-Time Style Transfer and Super-Resolution. In Proceedings of the 14th European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; Volume 9906, pp. 694–711. [Google Scholar] [CrossRef]

- Shafiq, M.; Gu, Z. Deep Residual Learning for Image Recognition: A Survey. Appl. Sci. 2022, 12, 8972. [Google Scholar] [CrossRef]

- Isola, P.; Zhu, J.-Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134. [Google Scholar]

- Li, C.; Wand, M. Precomputed Real-Time Texture Synthesis with Markovian Generative Adversarial Networks. In Proceedings of the 14th European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; Lecture Notes in Computer Science. Springer: Berlin/Heidelberg, Germany, 2016; Volume 9907, pp. 702–716. [Google Scholar] [CrossRef]

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. In Proceedings of the 30th IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 105–114. [Google Scholar] [CrossRef]

- Jin, Y.; Yan, W.; Yang, W.; Tan, R.T. Structure representation network and uncertainty feedback learning for dense non-uniform fog removal. In Asian Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2022; pp. 155–172. [Google Scholar]

- Afifi, M.; Derpanis, K.G.; Ommer, B.; Brown, M.S. Learning multi-scale photo exposure correction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 9157–9167. [Google Scholar]

- Park, D.; Kim, J.; Chun, S.Y. Down-scaling with learned kernels in multi-scale deep neural networks for non-uniform single image deblurring. arXiv 2019, arXiv:1903.10157. [Google Scholar]

- Huang, S.-C.; Yeh, C.-H. Image contrast enhancement for preserving mean brightness without losing image features. Eng. Appl. Artif. Intell. 2013, 26, 1487–1492. [Google Scholar] [CrossRef]

- Li, L.; Liu, Z.; Li, Y. Modeling and Simulation of Image Quality Evaluation. Comput. Simul. 2012, 29, 284–287. [Google Scholar]

- Kurban, R.; Durmus, A.; Karakose, E. A comparison of novel metaheuristic algorithms on color aerial image multilevel thresholding. Eng. Appl. Artif. Intell. 2021, 105, 104410. [Google Scholar] [CrossRef]

- Ye, S.-N.; Su, K.-N.; Xiao, C.-B.; Duan, J. Image quality assessment based on structural information extraction. Acta Electron. Sin. 2008, 36, 856. [Google Scholar]

- Wu, X.; Sahoo, D.; Hoi, S.C.H. Recent advances in deep learning for object detection. Neurocomputing 2020, 396, 39–64. [Google Scholar] [CrossRef]

- Li, K.; Wang, X.; Lin, H.; Li, L.; Yang, Y.; Meng, C.; Gao, J. Survey of One-Stage Small Object Detection Methods in Deep Learning. J. Front. Comput. Sci. Technol. 2022, 16, 41–58. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Zhang, X.; Sun, J. Object Detection Networks on Convolutional Feature Maps. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1476–1481. [Google Scholar] [CrossRef]

- Dai, J.; Li, Y.; He, K.; Sun, J. R-FCN: Object detection via region-based fully convolutional networks. Adv. Neural Inf. Process. Syst. 2016, 29. [Google Scholar] [CrossRef]

- Zhang, S.; Wen, L.; Bian, X.; Lei, Z.; Li, S.Z. Single-shot refinement neural network for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4203–4212. [Google Scholar]

- Zagoruyko, S.; Lerer, A.; Lin, T.Y.; Pinheiro, P.O.; Gross, S.; Chintala, S.; Dollár, P. A multipath network for object detection. arXiv 2016, arXiv:1604.02135. [Google Scholar] [CrossRef]

- Pawełczyk, M.Ł.; Wojtyra, M. Real World Object Detection Dataset for Quadcopter Unmanned Aerial Vehicle Detection. IEEE Access 2020, 8, 174394–174409. [Google Scholar] [CrossRef]

- Svanström, F.; Englund, C.; Alonso-Fernandez, F. Real-time drone detection and tracking with visible, thermal and acoustic sensors. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Virtual, 10–15 January 2021; IEEE: Piscataway, NJ, USA, 2020; pp. 7265–7272. [Google Scholar]

- Zhang, Z.; Wang, J.; Li, S.; Jin, L.; Wu, H.; Zhao, J.; Zhang, B. Review and Analysis of RGBT Single Object Tracking Methods: A Fusion Perspective. ACM Trans. Multimed. Comput. Commun. Appl. 2023, 20, 259. [Google Scholar] [CrossRef]

- Sapkota, R.; Cheppally, R.H.; Sharda, A.; Karkee, M. Yolo26: Key architectural enhancements and performance benchmarking for real-time object detection. arXiv 2025, arXiv:2509.25164. [Google Scholar] [CrossRef]

| Metric | Image Source | Fog | Blur | Overexposure |
|---|---|---|---|---|
| Mean PSNR | Initial UAV images | 20.5428 | 21.0485 | 23.6723 |
| | Model I CycleGAN [19] | 18.7436 | 18.5922 | 20.8549 |
| | Model II WGAN [23] | 17.7436 | 16.5922 | 15.5922 |
| | Model III InfoGAN [24] | 18.4273 | 18.7983 | 19.9579 |
| Mean SSIM | Initial UAV images | 0.7515 | 0.7355 | 0.7183 |
| | Model I CycleGAN [19] | 0.9655 | 0.7983 | 0.9281 |
| | Model II WGAN [23] | 0.7721 | 0.6491 | 0.6278 |
| | Model III InfoGAN [24] | 0.9232 | 0.8283 | 0.9027 |
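The PSNR values in the quality-evaluation table follow the standard definition $\mathrm{PSNR} = 10\log_{10}(\mathrm{MAX}^2/\mathrm{MSE})$, in dB. The sketch below assumes 8-bit images (MAX = 255) flattened to pixel sequences and averages per-image PSNR over a set of image pairs; which image each generated sample was compared against is not specified here, and the helper names `psnr` and `mean_psnr` are hypothetical. SSIM relies on local windowed statistics and is usually taken from a library, for example scikit-image's `skimage.metrics.structural_similarity`, rather than written by hand.

```python
import math
from typing import Iterable, Sequence, Tuple


def psnr(reference: Sequence[float], test: Sequence[float], max_value: float = 255.0) -> float:
    """PSNR in dB between two equally sized images given as flat pixel sequences."""
    if len(reference) != len(test) or len(reference) == 0:
        raise ValueError("images must be non-empty and the same size")
    # Mean squared error over all pixels (and channels, if interleaved).
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def mean_psnr(pairs: Iterable[Tuple[Sequence[float], Sequence[float]]],
              max_value: float = 255.0) -> float:
    """Average of per-image PSNR values (one common convention, assumed here)."""
    scores = [psnr(ref, img, max_value) for ref, img in pairs]
    return sum(scores) / len(scores)


print(psnr([0, 0, 0, 0], [0, 0, 0, 10]))  # MSE = 25
```

Averaging per-image PSNR gives a slightly different number from a single PSNR computed on the pooled MSE of the whole dataset, so the convention matters when comparing mean values across studies.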

| Model | Anchor | Data Augmentation | Backbone | Neck | Prediction | Params (M) |
|---|---|---|---|---|---|---|
| YOLOv5 [20] | Anchor-based | Mosaic | CSPDarknet-53, SPP, Focus, CSP | FPN + PAN | Classification loss: BCE; bounding-box regression loss: CIoU | 21.2 |
| YOLOv6 [21] | Anchor-free | Mosaic, disabled for the final 10 epochs | CSPDarknet-53 | Rep-PAN (RepBlock replaces the CSP block of YOLOv5) | Classification loss: VFL; regression loss: DFL + CIoU; efficient decoupled head | 34.9 |
| YOLOv7 [22] | Anchor-based | Mosaic | Darknet-53 | SPP, PAN | CIoU loss, DIoU-NMS, auxiliary head | 36.9 |
| YOLOv8 | Anchor-free | Mosaic, disabled for the final 10 epochs | Darknet-53 | PAFPN | Classification loss: VFL; regression loss: DFL + CIoU; DIoU-NMS | 25.9 |
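The model comparison lists CIoU in the bounding-box regression loss of all four detectors. A minimal scalar sketch of the Complete-IoU loss, $\mathcal{L}_{\mathrm{CIoU}} = 1 - \mathrm{IoU} + \rho^2/c^2 + \alpha v$, is given below, where $\rho$ is the distance between box centres, $c$ is the diagonal of the smallest box enclosing both boxes, $v = \frac{4}{\pi^2}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^2$ measures aspect-ratio mismatch, and $\alpha = v / ((1 - \mathrm{IoU}) + v)$. It works on single boxes in corner format `(x1, y1, x2, y2)` for illustration only; it is not the YOLOv5 source code, which operates on batched tensors, and the name `ciou_loss` is hypothetical.

```python
import math
from typing import Tuple

# Box in corner format (x1, y1, x2, y2), assuming x2 > x1 and y2 > y1.
Box = Tuple[float, float, float, float]


def ciou_loss(pred: Box, target: Box, eps: float = 1e-9) -> float:
    """Complete-IoU loss: 1 - IoU + rho^2 / c^2 + alpha * v."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1

    # Intersection over union.
    iw = max(0.0, min(px2, tx2) - max(px1, tx1))
    ih = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = iw * ih
    union = pw * ph + tw * th - inter
    iou = inter / (union + eps)

    # Squared distance between the two box centres.
    rho2 = ((px1 + px2) / 2 - (tx1 + tx2) / 2) ** 2 + ((py1 + py2) / 2 - (ty1 + ty2) / 2) ** 2
    # Squared diagonal of the smallest box enclosing both boxes.
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)
    c2 = cw ** 2 + ch ** 2 + eps

    # Aspect-ratio consistency term and its trade-off weight.
    v = (4 / math.pi ** 2) * (math.atan(tw / th) - math.atan(pw / ph)) ** 2
    alpha = v / ((1 - iou) + v + eps)

    return 1 - iou + rho2 / c2 + alpha * v


# Same-size boxes offset by one unit horizontally: IoU = 1/3, rho^2 = 1, c^2 = 13.
print(ciou_loss((0, 0, 2, 2), (1, 0, 3, 2)))
```

Unlike a plain IoU loss, the centre-distance term still provides a signal when the boxes do not overlap: two disjoint predictions both have IoU 0, but the one farther from the target receives a larger loss.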

| Model | Scene | Average Precision (AP) | True Positives (TP) | False Negatives (FN) | False Positives (FP) | Precision Rate | Recall Rate | FPS |
|---|---|---|---|---|---|---|---|---|
| YOLOv5 [20] | Fog | 93.95% | 169 | 60 | 15 | 0.918 | 0.738 | 119 |
| | Blur | 98.09% | 226 | 22 | 6 | 0.974 | 0.911 | 94 |
| | Overexposure | 97.07% | 209 | 15 | 8 | 0.963 | 0.933 | 94 |
| YOLOv6 [21] | Fog | 93.89% | 148 | 187 | 12 | 0.925 | 0.442 | 82 |
| | Blur | 91.72% | 150 | 168 | 11 | 0.912 | 0.572 | 89 |
| | Overexposure | 90.36% | 178 | 90 | 18 | 0.908 | 0.664 | 94 |
| YOLOv7 [22] | Fog | 94.39% | 155 | 284 | 10 | 0.939 | 0.353 | 88 |
| | Blur | 92.79% | 155 | 127 | 13 | 0.923 | 0.550 | 100 |
| | Overexposure | 93.73% | 182 | 81 | 23 | 0.888 | 0.692 | 93 |
| YOLOv8 | Fog | 96.95% | 188 | 69 | 5 | 0.974 | 0.732 | 86 |
| | Blur | 98.9% | 195 | 56 | 2 | 0.989 | 0.777 | 84 |
| | Overexposure | 97.47% | 184 | 53 | 4 | 0.979 | 0.776 | 85 |
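The Precision Rate and Recall Rate columns are single operating-point values derived from the counts in the same row: $\mathrm{Precision} = TP/(TP+FP)$ and $\mathrm{Recall} = TP/(TP+FN)$. The sketch recomputes them for the YOLOv5 rows; `precision_recall` and `yolov5_counts` are illustrative names, and the counts are copied from the table.

```python
from typing import Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Return (precision, recall) from detection counts at one operating point."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# YOLOv5 counts from the results table, in column order (TP, FN, FP).
yolov5_counts = {
    "Fog": (169, 60, 15),
    "Blur": (226, 22, 6),
    "Overexposure": (209, 15, 8),
}

for scene, (tp, fn, fp) in yolov5_counts.items():
    p, r = precision_recall(tp, fp, fn)
    print(f"{scene}: precision={p:.3f}, recall={r:.3f}")
# Fog: precision=0.918, recall=0.738
# Blur: precision=0.974, recall=0.911
# Overexposure: precision=0.963, recall=0.933
```

Applying the same formulas to every row reproduces the tabulated values to within third-decimal rounding, except for the YOLOv6 blur row: 150 TP, 11 FP, and 168 FN give a precision of about 0.932 and a recall of about 0.472 rather than 0.912 and 0.572, so one of those entries may have been mis-transcribed.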

| Dataset Number | Dataset |
|---|---|
| Dataset 1 | Drone Dataset: Amateur Unmanned Air Vehicle Detection [44] |
| Dataset 2 | Drone Detection Dataset [45] |
| Dataset 3 | Anti-UAV Dataset [46] |

| Method | Solution | Improvement Type |
|---|---|---|
| R-FCN [41] | Single feature map | Multi-scale feature learning |
| RefineDet [42] | Variants of FPN | Multi-scale feature learning |
| MPNet [43] | Local context | Context-based detection |
| YOLO26 [47] | Multi-scale aggregation | P3 branch |
| YOLOv5_STL | Style Transfer Learning | Data augmentation |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, R.; Song, Y.; Zhang, R.; Lei, Y.; Cheng, H.; Zhong, J. A Novel Anti-UAV Detection Method for Airport Safety Based on Style Transfer Learning and Deep Learning. Electronics 2025, 14, 4620. https://doi.org/10.3390/electronics14234620
