Abstract

Phishing remains a persistent and evolving cyber threat, constantly adapting its tactics to bypass traditional security measures. The advent of Machine Learning (ML) and Neural Networks (NN) has significantly enhanced the capabilities of automated phishing detection systems. This comprehensive review systematically examines the landscape of ML- and NN-based approaches for identifying and mitigating phishing attacks. Our analysis, based on a rigorous search methodology, focuses on articles published between 2017 and 2024 across relevant subject areas in computer science and mathematics. We categorize existing research by phishing delivery channels, including websites, electronic mail, social networking, and malware. Furthermore, we delve into the specific machine learning models and techniques employed, such as various algorithms, classification and ensemble methods, neural network architectures (including deep learning), and feature engineering strategies. This review provides insights into the prevailing research trends, identifies key challenges, and highlights promising future directions in the application of machine learning and neural networks for robust phishing detection.

1. Introduction

In recent years, the need to ensure comprehensive cybersecurity on a global scale has become increasingly evident. The growing sophistication and volume of cyber threats have prompted research institutions and industry stakeholders worldwide to focus on enhancing the efficiency of threat detection systems. This includes the design and deployment of more advanced and effective countermeasures. Within this context, phishing attacks remain one of the most pervasive and adaptive forms of cybercrime, and their evolution is closely tied to the rapid expansion of digital communication platforms and services. The global landscape suggests that further changes in phishing techniques are inevitable, driven by the continuous growth in attack volume and the diversity of delivery channels.

A widely accepted definition of phishing is provided by the Anti-Phishing Working Group (APWG) [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32], an international coalition that coordinates the global response to phishing and cybercrime. This definition captures phishing’s core characteristics and is frequently cited in research and industry reports. According to APWG,

Definition 1. “Phishing is a crime employing both social engineering and technical subterfuge to steal consumers’ personal identity data and financial account credentials. Social engineering schemes prey on unwary victims by fooling them into believing they are dealing with a trusted, legitimate party, such as by using deceptive email addresses and messages, bogus web sites, and deceptive domain names. These are designed to lead consumers to counterfeit Web sites that trick recipients into divulging financial data such as usernames and passwords. Technical subterfuge schemes plant malware onto computers to steal credentials directly, often using systems that intercept consumers’ account usernames and passwords or misdirect consumers to counterfeit Web sites” [32].

A general overview of early phishing detection methods is presented in Table 1. Each method provided incremental improvements but suffered from high false negative rates, limited adaptability, or high computational costs.

Table 1.

Evolution of phishing detection methods (2000–2016).

The earliest scientific publications on phishing indexed in Scopus (https://www.scopus.com) appeared in 2006, marking the formal beginning of academic research in this field. Detection methods have since evolved rapidly. Starting around 2016, these methods began to be widely replaced or supplemented by Machine Learning (ML) and Neural Network (NN) approaches. This shift reflects the need for more adaptive, data-driven systems capable of addressing zero-day attacks and evolving threat patterns. The present article examines this transformation in depth, providing a structured analysis of research published between 2017 and 2024, identifying key methodological trends, evaluating technical implementations, and mapping global contributions. By synthesizing existing knowledge, it aims to clarify the current state of the field, highlight gaps in research, and suggest potential directions for future development.

In the current literature, there is no deployment-oriented synthesis across the four delivery channels through which phishing is propagated (Websites, Electronic Mail, Malware, and Social Networking) that comparably examines data quality, leakage risk between training and test sets, time-aware validation, model selection procedures, and system-level metrics.

This article addresses this gap by introducing a unified assessment of selected studies in Table 2. The table defines fields that normalize evidence and track common validity threats, including leakage and temporal drift, and these fields are linked to per-channel deployment checklists that translate the literature into actionable guidance. We complement this synthesis with a coherent categorization of the corpus and a quantitative summary that organize studies by delivery channel, classes of ML and neural network methods, methodological practices, and geographic distribution. Finally, cross-tabulations show the diversity of technique and methodology profiles observed across phishing delivery channels.

Table 2.

Structured appraisal rubric for included studies.

2. Materials and Methods

This article presents a review of the literature on phishing detection methods using ML and NN. The aim was to collect, organize, and analyze studies published between 2017 and 2024. The scope includes phishing delivery channels, ML models and techniques, and research methodologies.

2.1. Data Retrieval and Corpus Construction

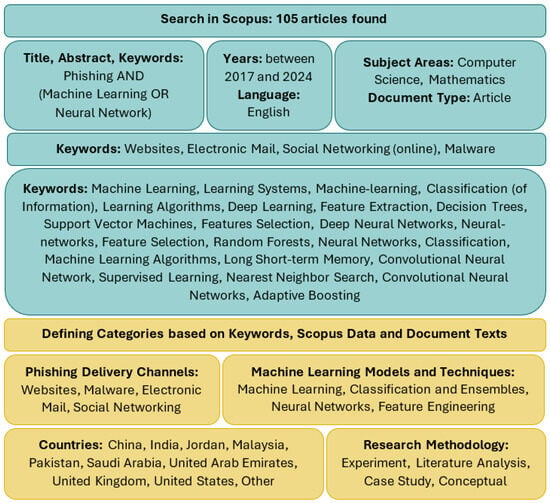

To ensure a focused review, bibliographic data were retrieved from the Scopus database. A structured search strategy was developed to capture research on phishing detection using machine learning or neural networks (Figure 1). The search query was formulated to match occurrences of the term phishing combined with either machine learning or neural network in the title, abstract, or keywords fields. The search was limited to journal articles published between 2017 and 2024, written in English, and indexed under the Computer Science or Mathematics subject areas. The time frame was set between 2017 and 2024 because earlier years showed very limited coverage of this topic in Scopus, with only sporadic publications indexed before 2017. The end year was set to 2024, since 2025 is still in progress and does not yet provide a complete set of annual research outputs. Publications from unrelated subject areas, such as medicine, economics, or the arts, were excluded using Scopus filters. To focus on detection methods tailored to individual delivery channels (Websites, Electronic Mail, Social Networking (online), and Malware), an additional “Limit to” filter was applied.

Figure 1.

Overview of the search strategy and thematic scope for data retrieval. The Scopus query focused on phishing detection using Machine Learning (ML) and Neural Networks (NN) between 2017 and 2024, filtered by subject area, document type, language, and index keywords reflecting delivery channels and learning techniques. A total of 105 articles met the final criteria. Source: Scopus, search performed 21 July 2025.

To allow replication of the dataset, we provide the exact wording of the query:

TITLE-ABS-KEY (“Phishing” AND (“Machine Learning” OR “Neural Network”))

AND PUBYEAR > 2016 AND PUBYEAR < 2025

AND (EXCLUDE (SUBJAREA, “CENG”) OR EXCLUDE (SUBJAREA, “ARTS”) OR EXCLUDE (SUBJAREA, “NEUR”) OR EXCLUDE (SUBJAREA, “ECON”) OR EXCLUDE (SUBJAREA, “ENVI”) OR EXCLUDE (SUBJAREA, “BUSI”) OR EXCLUDE (SUBJAREA, “MEDI”) OR EXCLUDE (SUBJAREA, “PHYS”) OR EXCLUDE (SUBJAREA, “ENER”) OR EXCLUDE (SUBJAREA, “MATE”) OR EXCLUDE (SUBJAREA, “ENGI”) OR EXCLUDE (SUBJAREA, “MULT”) OR EXCLUDE (SUBJAREA, “PHAR”) OR EXCLUDE (SUBJAREA, “EART”) OR EXCLUDE (SUBJAREA, “CHEM”) OR EXCLUDE (SUBJAREA, “BIOC”) OR EXCLUDE (SUBJAREA, “SOCI”) OR EXCLUDE (SUBJAREA, “DECI”))

AND (LIMIT-TO (DOCTYPE, “ar”))

AND (LIMIT-TO (LANGUAGE, “English”))

AND (LIMIT-TO (EXACTKEYWORD, “Websites”))

OR LIMIT-TO (EXACTKEYWORD, “Electronic Mail”)

OR LIMIT-TO (EXACTKEYWORD, “Social Networking (online)”)

OR LIMIT-TO (EXACTKEYWORD, “Malware”)

Finally, we further refined the keywords to capture studies involving specific machine learning models and techniques:

AND (LIMIT-TO (EXACTKEYWORD, “Machine Learning”))

OR LIMIT-TO (EXACTKEYWORD, “Learning Systems”)

OR LIMIT-TO (EXACTKEYWORD, “Machine-learning”)

OR LIMIT-TO (EXACTKEYWORD, “Classification (of Information)”)

OR LIMIT-TO (EXACTKEYWORD, “Learning Algorithms”)

OR LIMIT-TO (EXACTKEYWORD, “Deep Learning”)

OR LIMIT-TO (EXACTKEYWORD, “Feature Extraction”)

OR LIMIT-TO (EXACTKEYWORD, “Decision Trees”)

OR LIMIT-TO (EXACTKEYWORD, “Support Vector Machines”)

OR LIMIT-TO (EXACTKEYWORD, “Features Selection”)

OR LIMIT-TO (EXACTKEYWORD, “Deep Neural Networks”)

OR LIMIT-TO (EXACTKEYWORD, “Neural-networks”)

OR LIMIT-TO (EXACTKEYWORD, “Feature Selection”)

OR LIMIT-TO (EXACTKEYWORD, “Random Forests”)

OR LIMIT-TO (EXACTKEYWORD, “Neural Networks”)

OR LIMIT-TO (EXACTKEYWORD, “Classification”)

OR LIMIT-TO (EXACTKEYWORD, “Machine Learning Algorithms”)

OR LIMIT-TO (EXACTKEYWORD, “Long Short-term Memory”)

OR LIMIT-TO (EXACTKEYWORD, “Convolutional Neural Network”)

OR LIMIT-TO (EXACTKEYWORD, “Supervised Learning”)

OR LIMIT-TO (EXACTKEYWORD, “Nearest Neighbor Search”)

OR LIMIT-TO (EXACTKEYWORD, “Convolutional Neural Networks”)

OR LIMIT-TO (EXACTKEYWORD, “Adaptive Boosting”)

Only articles containing at least one term from a predefined list of 23 machine learning-related keywords (e.g., support vector machines, deep neural networks, feature selection) were retained. This step resulted in a final set of 105 articles.
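The retention rule above can be sketched as a simple set-membership test. This is a minimal illustration, not the authors' script: the names `ML_KEYWORDS`, `split_keywords`, and `has_ml_keyword` are hypothetical, only a subset of the 23 keywords is listed, and case-insensitive matching on a semicolon-separated keyword field is an assumption about the export format.

```python
# Hypothetical subset of the 23 index keywords listed in the query above.
ML_KEYWORDS = {
    "machine learning",
    "deep learning",
    "support vector machines",
    "feature selection",
    "random forests",
}


def split_keywords(field: str) -> set[str]:
    """Split a semicolon-separated keyword field into lowercase terms."""
    return {term.strip().lower() for term in field.split(";") if term.strip()}


def has_ml_keyword(index_keywords: str, allowed: set[str] = ML_KEYWORDS) -> bool:
    """Return True when at least one keyword exactly matches the allowed list."""
    return bool(split_keywords(index_keywords) & allowed)


# Illustrative records only; they are not taken from the corpus.
records = [
    {"title": "Study A", "index_keywords": "Phishing; Websites; Random Forests"},
    {"title": "Study B", "index_keywords": "Phishing; Electronic Mail"},
]
retained = [r for r in records if has_ml_keyword(r["index_keywords"])]
```

Matching whole terms rather than substrings mirrors the exact-keyword behavior of the filter, so a near-miss spelling would not be retained.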

Based on the index keywords applied in this initial filtering step, the first thematic grouping was established under the category Phishing Delivery Channels, comprising four distinct types: Websites, Malware, Electronic Mail, and Social Networking (Section 3). Subsequently, we used index-keyword filtering to define a second thematic grouping, Machine Learning Models and Techniques, encompassing machine learning, neural networks, classification and ensemble methods, and feature engineering. Additionally, authors’ countries of affiliation were identified from Scopus metadata. The Research Methodology category was derived by manual content analysis of the articles.

The metadata of the selected publications were exported to a Comma-Separated Values (CSV) file containing details such as title, authors, year of publication, and other bibliographic fields. This file was then imported into a PostgreSQL 16.2 database to enable query-based analysis, data mining, and aggregation via Structured Query Language (SQL). The process was fully automated using a Python 3.12.2 script, which also generated tables and graphs to support further analysis. The data were exported on 21 July 2025. Throughout the remainder of this article, we refer to this dataset as the corpus to avoid confusion with other datasets used in the study.
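The import-and-aggregate step described above might look as follows. This sketch uses Python's built-in `sqlite3` module as a stand-in for PostgreSQL so that it runs without a database server. The column names (`Title`, `Year`, `Index Keywords`), the table name `corpus`, and the sample rows are assumptions for illustration, not the authors' schema or data.

```python
import csv
import io
import sqlite3

# Tiny inline stand-in for the exported CSV; column names are assumptions.
SAMPLE_CSV = """Title,Year,Index Keywords
Study A,2019,Phishing; Websites
Study B,2023,Phishing; Malware
Study C,2023,Phishing; Electronic Mail
"""


def load_corpus(csv_text: str) -> sqlite3.Connection:
    """Load CSV rows into an in-memory SQLite table named corpus."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE corpus (title TEXT, year INTEGER, index_keywords TEXT)"
    )
    rows = [
        (row["Title"], int(row["Year"]), row["Index Keywords"])
        for row in csv.DictReader(io.StringIO(csv_text))
    ]
    conn.executemany("INSERT INTO corpus VALUES (?, ?, ?)", rows)
    return conn


def publications_per_year(conn: sqlite3.Connection) -> list[tuple[int, int]]:
    """Aggregate the corpus by publication year with plain SQL."""
    query = "SELECT year, COUNT(*) FROM corpus GROUP BY year ORDER BY year"
    return conn.execute(query).fetchall()


conn = load_corpus(SAMPLE_CSV)
per_year = publications_per_year(conn)
```

The same `GROUP BY` query would carry over to PostgreSQL largely unchanged; only the connection and bulk-loading code would differ.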

All relevant replication materials, including the raw scopus.csv export (Table S1), the thesaurus_mapping.csv file (Table S2), and the apwg_data.csv dataset (Table S3), are provided in the Supplementary Materials to enable full replication of the analysis.

2.2. Supplementary Data Sources

To provide a broader empirical context for the review, this study incorporates statistical data published by the Anti-Phishing Working Group (APWG) in its Phishing Activity Trends Reports [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32]. These quarterly reports are recognized as one of the most authoritative global sources on phishing activity, offering aggregated metrics such as the number of unique phishing websites, the volume of phishing email campaigns, and the number of targeted brands. Incorporating APWG data documents changes in the volume of phishing attacks over time, enabling interpretation of research trends alongside real-world developments in the threat landscape.

For this study, APWG data for 2017–2024 were obtained from official reports on the organization’s website (https://apwg.org/trendsreports (accessed on 8 August 2025)). In particular, the data were manually extracted from the listed quarterly reports and processed using a Python 3.12.2 script. In later sections, these figures are used to divide the study period into two distinct intervals, highlighting a clear shift in the phishing dynamics, with a relatively stable phase followed by a period of sharp, sustained growth in activity.

2.3. Bibliometric Analysis Procedure

To gain a comprehensive understanding of research directions and thematic structures in phishing detection using machine learning and neural networks, we conducted a bibliometric analysis. This approach enables the identification of key concepts, their interconnections, and emerging trends within the scientific literature. The objective was to identify and visualize the most significant research themes and their relationships.

The analysis was conducted using VOSviewer (version 1.6.20, https://www.VOSviewer.com), which generated a co-occurrence map of keywords derived from Scopus bibliographic data. The dataset used for this purpose comprised the 105 documents described in Section 2.1, exported from Scopus in CSV format. Index keywords were considered, with a thesaurus file applied that introduced minimal intervention—limited solely to resolving spelling differences—in order to preserve the most faithful observation of the dataset. A minimum occurrence threshold of 5 was set, and fractional counting was applied to measure link strengths. This configuration ensured a balanced and reliable representation of keyword relationships in the analyzed corpus.
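To make the configuration above concrete, the following sketch computes keyword co-occurrence link strengths with an occurrence threshold and a fractional weighting. It is not VOSviewer's implementation. The weighting assumed here gives each keyword pair in a document a weight of 1/(n − 1), where n is the number of retained keywords in that document. Both that scheme and the choice to count only keywords above the threshold are assumptions made for illustration.

```python
from collections import Counter
from itertools import combinations


def cooccurrence(
    docs: list[set[str]], min_occurrences: int = 5
) -> dict[tuple[str, str], float]:
    """Return fractionally counted co-occurrence link strengths."""
    # Count in how many documents each keyword appears.
    occurrences = Counter(kw for doc in docs for kw in doc)
    kept = {kw for kw, n in occurrences.items() if n >= min_occurrences}

    links: dict[tuple[str, str], float] = {}
    for doc in docs:
        terms = sorted(doc & kept)
        if len(terms) < 2:
            continue  # a single keyword cannot form a link
        # Assumed fractional scheme: each pair in this document gets 1/(n - 1).
        weight = 1.0 / (len(terms) - 1)
        for pair in combinations(terms, 2):
            links[pair] = links.get(pair, 0.0) + weight
    return links


# Illustrative keyword sets; a low threshold keeps the toy example non-empty.
docs = [
    {"phishing", "websites", "deep learning"},
    {"phishing", "websites"},
]
links = cooccurrence(docs, min_occurrences=1)
```

Under this weighting, documents with long keyword lists contribute less per link than documents with short lists, which is the usual motivation for fractional over full counting.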

2.4. Review Protocol and Publication Quality

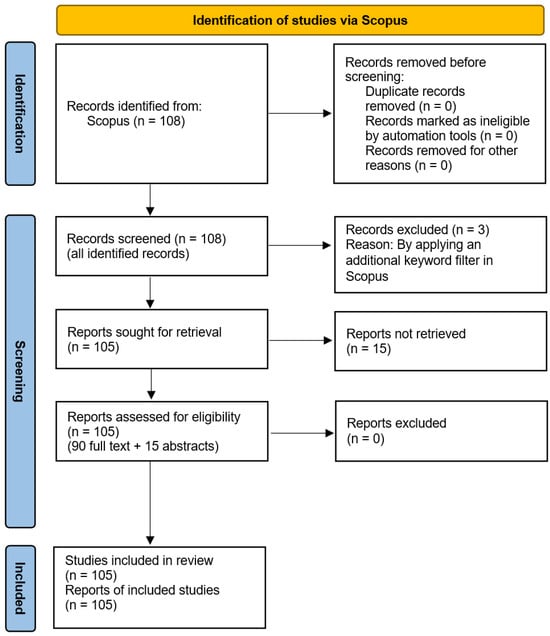

This systematic review followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) framework. The process was carried out in three main stages (Figure 2):

Figure 2.

Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) flow diagram illustrating the identification, screening, eligibility assessment, and inclusion of studies retrieved from Scopus.

- In the identification stage, a comprehensive search was conducted in the Scopus database. The search strategy used a defined set of keywords applied to titles, abstracts, or keywords in order to capture relevant publications. Filters were applied to restrict the results to English-language articles within the defined time frame (2017–2024). Records from unrelated subject areas were removed. A total of 108 records were identified.

- In the screening stage, all 108 records identified in the previous step were examined. Three records were excluded after applying an additional keyword filter in Scopus. This left 105 records for further retrieval.

- In the eligibility assessment, 90 full-text articles and 15 abstracts were reviewed. The inclusion of abstracts helped maintain methodological consistency and increased the sample size, which was essential for conducting a reliable quantitative analysis. Although abstracts provide less detail than full texts, they contain key information on the scope of the study, the applied methods, and the main findings, making them a valuable source of data in a systematic review.

The quality of the included publications (full texts and abstracts) was ensured by selecting only peer-reviewed articles indexed in Scopus. The selection covered major publishers such as Springer, Elsevier, the Institute of Electrical and Electronics Engineers (IEEE), and the Multidisciplinary Digital Publishing Institute (MDPI), as well as other recognized peer-reviewed journals including the Institution of Engineering and Technology (IET), Hindawi (Wiley), and the International Journal of Advanced Computer Science and Applications (IJACSA). The final set of 105 publications represented both recent studies with few citations and highly cited works, showing the coexistence of emerging approaches and established research.

Each publication was independently assessed by two authors, with disagreements resolved through discussion to reach a consensus. This process enabled accurate multi-labeling of hybrid publications, as reflected in the tables in Section 4. The evaluation considered topic relevance to phishing detection, publication completeness, and methodological clarity. The verification was consistent with the results obtained from the search process.

2.5. Study Quality and Risk-of-Bias Assessment

To ensure the credibility and reliability of the review, each included study was systematically assessed for methodological quality and potential sources of bias. A structured appraisal rubric was developed to evaluate common threats to validity in machine learning-based phishing detection research (Table 2). The evaluation considered the following main aspects: data quality, class balance, external sources used (blacklists/metadata), risk of data leakage, validation method, model selection procedure, evaluation metrics, and handling of class imbalance. This process ensured a consistent basis for comparing studies and made it possible to identify common weaknesses.

The column Data quality reports how the dataset was constructed and from which sources it was obtained (single-source, combined; repository names as applicable), then records the acquisition window or snapshot used and any preprocessing steps that affect inclusion such as duplicate removal, unreachable links, or Uniform Resource Locator (URL) sanitation; the entry concludes with one overall item count for the entire dataset. This scope keeps provenance and basic quality controls together. Note on “Total items”: Even when per-source counts are listed, a single overall total is often unavailable or unreliable because sources commonly overlap and must be deduplicated; authors may not specify the exact snapshot or time window used for each source; and preprocessing steps such as URL validation, removal of duplicates, and filtering unreachable or malformed entries change the final size. Unless the paper reports the post-processing size of the dataset actually used for training and testing, this field is recorded as Not reported.

The Class balance column begins with a short status (e.g., Balanced, Imbalanced, or Not reported), then shows the distribution between phishing and benign classes. If the authors report per-split distributions, the column presents the Train, Validation, and Test splits. If only an overall distribution is reported, the column reflects that. If the information is missing, the cell states Not reported.

The column External sources used (blacklists/metadata) states whether a study relied on external sources either for labels or for input metadata, which helps normalize evidence across papers and assess comparability and leakage risk. Cells follow a fixed pattern: “Labels: ... Metadata: ...”. Labels indicate the origin of ground-truth class assignments, for example PhishTank or OpenPhish, preferably with a snapshot date or version if provided. Metadata refers only to external signals obtained beyond the URL string itself, for example, Registration Data Access Protocol (RDAP) registration data, Domain Name System (DNS) records such as Address (A) and Name Server (NS) records with properties like time to live (TTL), and Transport Layer Security (TLS) certificate information including Certificate Transparency (CT) evidence. These sources are transformed into numeric or categorical features, such as domain age, registrar, record counts, TTL values, issuer fields, and presence in CT logs, and then used as model inputs. Features derived solely from the URL string are not external metadata in this column. In such cases, the cell reads “Metadata: none”.

The column Risk of data leakage indicates the likelihood that the reported results may have been affected by an unintended overlap between training and test data. A Low rating indicates that the dataset was clearly separated between training and test sets, with no evidence of overlap. A Medium rating indicates that multiple datasets were combined and/or the separation procedure was insufficiently described, leaving the possibility of overlap between training and test sets. A High rating indicates that studies either provided insufficient information or used procedures that strongly suggest a risk of overlap between the training and test sets.
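One way to check for the train/test overlap that this column rates is to compare normalized URLs across the two sets. The sketch below is an illustration under stated assumptions. The helper names `normalize_url` and `split_overlap` are hypothetical. The normalization rules (lowercase host, ignored scheme and port, stripped trailing slash) are one possible choice among many and are not taken from any reviewed study.

```python
from urllib.parse import urlsplit


def normalize_url(url: str) -> str:
    """Canonicalize a URL so trivially different spellings compare equal."""
    parts = urlsplit(url.strip())
    # hostname is lowercased and excludes any port; the scheme is ignored.
    host = (parts.hostname or "").lower()
    path = parts.path.rstrip("/") or "/"
    query = f"?{parts.query}" if parts.query else ""
    return f"{host}{path}{query}"


def split_overlap(train_urls: list[str], test_urls: list[str]) -> set[str]:
    """Return normalized URLs that occur in both the training and test sets."""
    train_keys = {normalize_url(u) for u in train_urls}
    test_keys = {normalize_url(u) for u in test_urls}
    return train_keys & test_keys


# Illustrative lists; the first entries differ only in case and trailing slash.
train = ["HTTP://Example.com/login/", "http://a.test/x"]
test = ["http://example.com/login", "http://b.test/y"]
overlap = split_overlap(train, test)
```

An empty result is consistent with a Low rating only for exact and near-exact duplicates; it does not rule out subtler leakage such as many URLs from one campaign or domain appearing on both sides of the split.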

The column Validation method specifies how each study divided the dataset into training, validation, and test sets. The most common strategy is a hold-out split. In this approach, the dataset is divided once into fixed parts, for example, 80/20 (80% for training and 20% for testing) or 60/20/20 (60% for training, 20% for validation, and 20% for testing). A variant is the random split, where the partitioning is performed randomly. If class proportions are preserved within each subset, this is termed a stratified random split. Another common approach is k-fold cross-validation (CV), in which the dataset is split into k folds, and the model is trained and tested k times, each time using a different fold as the test set; when k is specified, it is written as, for example, 10-fold CV. A more rigorous design, nested CV, uses an inner loop for hyperparameter tuning and an outer loop for performance estimation, thereby reducing bias from model selection. In the table, the terminology follows the authors’ descriptions; when not explicitly stated, the generic term hold-out split is used to denote a fixed partition of the dataset. Because URLs go offline and labels age, time-based splits are necessary to estimate performance under drift rather than on mixed-era samples.
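The difference between k-fold CV and a time-based split can be sketched in a few lines of plain Python. The function names, the interleaved (unshuffled) fold assignment, and the sample dates below are illustrative assumptions. In practice, an established library routine would normally be used instead.

```python
from datetime import date


def k_fold_indices(n_items: int, k: int) -> list[tuple[list[int], list[int]]]:
    """Return (train, test) index lists; each item is tested exactly once."""
    # Interleaved assignment: item i goes to fold i % k (no shuffling here).
    folds = [list(range(i, n_items, k)) for i in range(k)]
    splits = []
    for i, test_idx in enumerate(folds):
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        splits.append((train_idx, test_idx))
    return splits


def time_based_split(samples: list[tuple[date, str]], cutoff: date):
    """Train on samples first seen before the cutoff, test on the rest."""
    train = [s for s in samples if s[0] < cutoff]
    test = [s for s in samples if s[0] >= cutoff]
    return train, test


# Hypothetical first-seen dates and URLs.
samples = [
    (date(2023, 1, 10), "http://old.test/a"),
    (date(2023, 6, 2), "http://mid.test/b"),
    (date(2024, 2, 20), "http://new.test/c"),
]
train, test = time_based_split(samples, cutoff=date(2024, 1, 1))
folds = k_fold_indices(5, 2)
```

In the k-fold variant, older and newer samples are mixed across folds, whereas the time-based split guarantees that every test sample is newer than every training sample.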

The column Model selection procedure describes how the final model and its hyperparameters were chosen. Not reported means that the procedure was not described.

The column Evaluation/system metrics presents, for each study, the performance criteria used to assess predictive quality and, where available, quantitative characteristics of computational cost. The evaluation part enumerates metric families such as Accuracy, Precision, Recall, F1-score, Receiver Operating Characteristic Area Under the Curve (ROC AUC), and Matthews Correlation Coefficient (MCC). The System metrics part reports numerical efficiency and resource indicators provided by the authors, including training and inference time, per-request latency, throughput, and memory or model size, with values and units exactly as stated in the source. When a study does not include runtime, latency, memory, or throughput figures, this part indicates that such cost or time metrics were not reported.
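For reference, the threshold-based metrics named above can be computed directly from the four confusion-matrix counts using their standard definitions. The function name and the example counts in this sketch are illustrative. ROC AUC is omitted because it requires ranked scores rather than counts.

```python
import math


def classification_metrics(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    """Compute standard metrics from confusion-matrix counts (phishing = positive)."""
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if precision + recall
        else 0.0
    )
    # MCC is reported as 0.0 when any marginal total is zero.
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "mcc": mcc,
    }


# A classifier that labels everything benign on a 95/5 imbalanced test set.
always_benign = classification_metrics(tp=0, fp=0, tn=95, fn=5)
```

In this illustrative case, accuracy is high while recall and MCC are zero, which is why accuracy alone is a weak basis for comparing studies on imbalanced phishing data.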

Based on the approaches discussed in recent studies on imbalanced learning [140,141,142], the authors adopted a three-level categorization to assess how class imbalance was handled in the reviewed publications. The column Handling of class imbalance reflects whether and how the studies addressed the problem of unequal class distribution in phishing datasets. A Not addressed rating indicates that the study relied primarily on accuracy or omitted any discussion of class imbalance. Partially addressed (metrics only) means that the authors reported appropriate evaluation metrics such as Precision, Recall, F1-score, MCC, or AUC, but did not apply explicit balancing techniques. Adequately addressed (metrics and techniques) refers to studies that combined suitable metrics with explicit methods such as Synthetic Minority Over-sampling Technique (SMOTE), undersampling, or class weighting to mitigate the effects of imbalance.
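Of the balancing techniques mentioned, class weighting is the simplest to illustrate. The sketch below uses the common inverse-frequency heuristic, n_samples / (n_classes × class_count). The function name and the 90/10 label distribution are illustrative and not drawn from any reviewed study.

```python
from collections import Counter


def balanced_class_weights(labels: list[str]) -> dict[str, float]:
    """Weight each class inversely to its frequency in the training labels."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * n) for cls, n in counts.items()}


# Hypothetical imbalanced training set: 90 benign and 10 phishing samples.
labels = ["benign"] * 90 + ["phishing"] * 10
weights = balanced_class_weights(labels)
```

With these weights, errors on the minority phishing class count more heavily in a weighted loss, so a model is penalized for ignoring that class. Resampling methods such as SMOTE or undersampling pursue the same goal by changing the data rather than the loss.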

Table 2 was compiled from full-text analysis of all included articles, based on a predefined appraisal rubric. Two authors independently coded each study, and any disagreements were resolved through discussion until consensus was reached. The table was prepared manually in a word processor rather than generated by software. To support replication, a concise legend placed directly below Table 2 explains the meaning and coding rules for every column, and the Supplementary Materials include the Scopus export that lists all publications considered in the review.

2.6. Summary

This study combines a constructed Scopus corpus of 105 journal articles on phishing detection using machine learning or neural networks (2017–2024) with statistical data from the APWG to compare research trends with real-world attack dynamics. The corpus was compiled using a structured, replicable query restricted to relevant subject areas, delivery channels, and a predefined set of 23 machine-learning keywords. APWG quarterly reports provide authoritative global metrics on phishing activity, enabling contextual interpretation of bibliometric results. Keyword co-occurrence analysis using VOSviewer identified key research themes and their interconnections, forming the basis for the thematic analysis in subsequent sections.

During the preparation of this work, the authors used ChatGPT (GPT-4.5, GPT-5, and GPT-5 Thinking; OpenAI, https://chat.openai.com) to refine the language.

3. Deployment Checklists by Phishing Delivery Channel

Section 3.1, Section 3.2, Section 3.3 and Section 3.4 translate our review findings into actionable deployment checklists for each phishing delivery channel. For each channel, we summarize privacy controls, data collection risks, fail-safe behavior, model updates or rollbacks, and explainability for analyst triage, with each item anchored in the evidence fields captured in Table 2. This framing clarifies what the reported results imply for engineering and operations across contexts.

Across all channels, privacy controls follow a common baseline. Limit collection and retention to what is necessary for detection, prefer on-device feature extraction, remove direct identifiers when telemetry leaves a device, keep raw artifacts only for short, defined windows, and document any third-party inputs using the exact Table 2 columns External sources used (blacklists/metadata) and Data quality. Channel sections provide representative examples from Table 2 rather than an exhaustive catalog. Further detailed rules and recommendations on privacy controls are available in legal sources [143] and technical frameworks [144]. This article focuses on translating the evidence encoded in Table 2 into deployable, channel-specific controls with representative examples.
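As one illustration of on-device feature extraction, the sketch below derives a few numeric lexical features from a URL so that the raw string, which may embed identifiers such as an email address or token, need not leave the device. The feature set and the function name `url_features` are hypothetical, and the subdomain count is deliberately naive because it ignores multi-label public suffixes.

```python
from urllib.parse import urlsplit


def url_features(url: str) -> dict[str, int]:
    """Derive numeric lexical features; no part of the raw URL is returned."""
    parts = urlsplit(url)
    host = parts.hostname or ""
    return {
        "url_length": len(url),
        "host_length": len(host),
        # Naive count: dots in the host minus one, floored at zero.
        "num_subdomains": max(host.count(".") - 1, 0),
        "num_digits": sum(ch.isdigit() for ch in url),
        "has_at_symbol": int("@" in url),
        "uses_https": int(parts.scheme == "https"),
        "num_query_params": len(parts.query.split("&")) if parts.query else 0,
    }


# Hypothetical URL whose query string contains a direct identifier.
features = url_features(
    "https://login.example.com/reset?user=alice@example.org&token=123"
)
```

Only the resulting integers would be sent as telemetry in this design. Whether such derived features are sufficiently de-identified in a given deployment still depends on the applicable legal and technical requirements.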

Data collection risks are consistently addressed using the study-level evidence recorded in Table 2. Deployment should mirror the controls in Table 2 by documenting snapshot windows, applying deduplication and liveness or crawl-validity checks, preventing cross-split overlap, and stating post-processing class balance. When sources are continuously updated, use time-aware splits to reduce temporal leakage and reflect the order of arrival in production. These practices map to Table 2 fields (Data quality, Risk of data leakage, Class balance, and Validation method), and address limitations noted in the corpus regarding outdated data, overlap, and drift.

Fail-safe behavior and safe defaults use the same vocabulary as the Evaluation/system metrics field in Table 2. Where latency, throughput, memory, or runtime are reported, use them to set timeouts, backoff, caching, and degradation paths for partial features or service unavailability. When cost metrics are not reported in a source study, record Not reported in Table 2, and define explicit operational budgets for deployment.

Model updates and rollbacks adhere to the validation and selection practices documented in Table 2. Version data snapshots, models, feature schemas, and any External sources used; gate promotions with shadow or forward-chaining tests consistent with the recorded Validation method; and keep a last-known-good bundle for rapid rollback. Where the Model selection procedure was not nested or not reported, treat pre-deployment checks and canary thresholds as mandatory safeguards.
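The versioning, gating, and rollback steps above can be sketched as a small in-memory registry. Everything here is hypothetical: the `Bundle` fields, the `Registry` class, and the single-score promotion gate are assumptions for illustration. A production system would persist bundles and gate on shadow or forward-chaining results rather than one number.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Bundle:
    """Everything that must be versioned together for one deployable model."""
    model_version: str
    feature_schema: str
    data_snapshot: str
    external_sources: tuple[str, ...] = ()


@dataclass
class Registry:
    """Keeps promoted bundles in order so the previous one can be restored."""
    history: list[Bundle] = field(default_factory=list)

    @property
    def active(self) -> Optional[Bundle]:
        return self.history[-1] if self.history else None

    def promote(self, candidate: Bundle, candidate_score: float,
                baseline_score: float, min_gain: float = 0.0) -> bool:
        """Promote only if the candidate beats the baseline by min_gain."""
        if candidate_score - baseline_score < min_gain:
            return False
        self.history.append(candidate)
        return True

    def rollback(self) -> Optional[Bundle]:
        """Drop the active bundle and return the last-known-good one."""
        if len(self.history) > 1:
            self.history.pop()
        return self.active


# Hypothetical versions and scores.
registry = Registry()
v1 = Bundle("model-1", "schema-1", "snapshot-2024-01", ("PhishTank",))
v2 = Bundle("model-2", "schema-1", "snapshot-2024-06", ("PhishTank",))
registry.promote(v1, candidate_score=0.90, baseline_score=0.0)
rejected = registry.promote(v2, candidate_score=0.85, baseline_score=0.90)
```

Bundling the model with its feature schema, data snapshot, and external sources means a rollback restores a consistent set rather than a model that no longer matches its inputs.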

Explainability for triage provides concise, case-level reasons consistent with the features actually used by the model in each channel. Store the top-contributing indicators with the prediction, link them to the model version and data snapshot ID, and retain only the minimal artifacts needed for audit. Channel sections surface the kinds of indicators reported in the studies and tie them to the Evaluation/system metrics evidence.
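A case-level explanation record of this kind might be structured as follows. The sketch assumes a simple linear scoring model, where each feature's contribution is its value times its weight. The feature names, weights, and the `Explanation` and `explain_linear` names are hypothetical. For non-linear models, a different attribution method would be needed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Explanation:
    """Prediction plus the minimal context an analyst needs for triage."""
    score: float
    top_indicators: list[tuple[str, float]]
    model_version: str
    data_snapshot: str


def explain_linear(features: dict[str, float], weights: dict[str, float],
                   model_version: str, data_snapshot: str,
                   top_k: int = 3) -> Explanation:
    """Rank features by absolute contribution (value * weight) to a linear score."""
    contributions = {
        name: value * weights.get(name, 0.0) for name, value in features.items()
    }
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return Explanation(
        score=sum(contributions.values()),  # raw linear score, not a probability
        top_indicators=ranked[:top_k],
        model_version=model_version,
        data_snapshot=data_snapshot,
    )


# Hypothetical feature values and weights.
explanation = explain_linear(
    features={"has_at_symbol": 1.0, "num_subdomains": 3.0, "uses_https": 1.0},
    weights={"has_at_symbol": 2.0, "num_subdomains": 0.5, "uses_https": -0.25},
    model_version="model-1",
    data_snapshot="snapshot-2024-01",
    top_k=2,
)
```

Storing only the top indicators alongside the version identifiers keeps the audit trail small while still letting an analyst reproduce why a given case was flagged.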

Finally, the channel subsections present evidence-backed examples drawn from Table 2. They are representative rather than exhaustive and can be extended in future revisions by first documenting additional signals in Table 2 and then incorporating them into the corresponding checklists.

3.1. Deployment Checklist for the Phishing Delivery Channel: Websites

This checklist is anchored in the evidence fields in Table 2 for the Websites channel (Data quality, Risk of data leakage, Class balance, Validation method, Model selection procedure, External sources used (blacklists/metadata)) [34,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94,95].

3.1.1. Privacy Controls

For website detectors that process URLs, Hypertext Markup Language (HTML), or rendered snapshots, Table 2 documents feature families such as URL lexical tokens [92], Document Object Model (DOM)-, HTML-, or render-derived features [91], and third-party metadata where applicable, including WHOIS domain registration records, DNS records, and TLS certificate fields [36,37,90]. Use the table’s column names when documenting provenance in the External sources used (blacklists/metadata) field and data handling in the Data quality field [34,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94,95].

3.1.2. Data Collection Risks

Make data collection reproducible and contamination-aware. Table 2 records snapshot dates (when reported), deduplication, liveness or crawl-validity checks, class balance, and overlap between training and test URL lists; mirror these controls by documenting snapshot windows, enforcing deduplication and liveness checks, and preventing cross-split overlap [36,37,75,92]. Typical risks identified in Table 2 include merged sources without clear separation [36,37], missing deduplication in hold-out or CV settings [92], and mismatched labeling in mixed live and archival sets [75].

3.1.3. Failsafe Behavior and Safe Defaults

Align operational safeguards with the Evaluation/system metrics field in Table 2. Where cost figures exist, set timeouts and degradation paths accordingly; examples include prototype/extension response times [37] and per-request detection/classification times [92]. If metrics are not reported, state this explicitly and record results using the same vocabulary [37,92]. Common patterns include time limits for rendering [37,91,94] and fallback to URL-only features when HTML is unavailable [43,44,92].
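The rendering time limit and URL-only fallback mentioned above can be combined in a small wrapper. This is a sketch under stated assumptions: `score_with_fallback`, both scorer functions, the scores they return, and the time budgets are hypothetical placeholders rather than values reported in the cited studies.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout


def score_with_fallback(url, full_scorer, url_only_scorer, timeout_s=0.5):
    """Try the full pipeline within a time budget; degrade to URL-only scoring."""
    pool = ThreadPoolExecutor(max_workers=1)
    future = pool.submit(full_scorer, url)
    try:
        return future.result(timeout=timeout_s), "full"
    except FutureTimeout:
        # The slow task keeps running in its thread; only the caller moves on.
        return url_only_scorer(url), "degraded:timeout"
    except Exception:
        return url_only_scorer(url), "degraded:error"
    finally:
        pool.shutdown(wait=False)


def slow_full_scorer(url: str) -> float:
    """Hypothetical scorer that simulates a slow page render."""
    time.sleep(0.3)
    return 0.9


def url_only_scorer(url: str) -> float:
    """Hypothetical lightweight scorer based on the URL string alone."""
    return 0.4


result = score_with_fallback(
    "http://example.test", slow_full_scorer, url_only_scorer, timeout_s=0.05
)
```

Returning the degradation reason together with the score lets outcomes be logged per path, so the share of degraded decisions can be tracked as a system metric.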

3.1.4. Model Updates and Rollback

Keep versioned, dated snapshots of models, feature schemas, and any External sources used (blacklists/metadata) as recorded in Table 2. Gate promotions using the Validation method actually reported (e.g., 10-fold or 5-fold CV; hold-outs) and keep decisions consistent with the documented Model selection procedure (e.g., GridSearchCV, Bayesian optimization, or Not reported) [36,37,88,92]; pin versions or snapshots of external sources where applicable [75,90].

3.1.5. Explainability for Triage

Provide concise case-level rationales consistent with feature families used by the Websites studies. Table 2 indicates which studies report feature importance or instance-level diagnostics; for example, random forest importance reports and per-instance cues in website classifiers [75]. Surface influential URL tokens, key DOM/HTML elements, and simple visual cues when those features are used by the model; link explanations to the model version and data snapshot ID [75].

3.2. Deployment Checklist for the Phishing Delivery Channel: Malware

This checklist is anchored in the evidence fields of Table 2 for the Malware channel [36,55,66,69,92,96,97,98,99,100,101,102,103,104,105,106,107,108,109,110,111,112,113,114,115,116].

3.2.1. Privacy Controls

Representative signals documented for this channel include dynamic Application Programming Interface (API) call sequences captured prior to encryption in the RISS ransomware dataset [98], Android static and dynamic features, such as declared permissions and selected API call counts, reported for Drebin [99], and network-level aggregates used in the included studies, for example, NetFlow statistics from CTU-13 [101]. Prefer transmitting derived features documented in Table 2 rather than raw binaries or packet captures [98,99,101].

3.2.2. Data Collection Risks

Table 2 indicates typical risks for Malware studies that deployments should mirror and mitigate [98,99,100,101,104]. Examples include merged sources or mixed benign/malicious collections without deduplication or temporal isolation [99,104], single-scenario NetFlow evaluations without flow-correlation isolation [101], and random or k-fold splits without nesting of model selection [98,101,104]. Use time-aware splits where feeds evolve, avoid cross-split near-duplicates, and document snapshot windows.
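A time-aware split that also drops test samples already seen during training can be sketched as follows. The function name `time_aware_split`, the `(first_seen, item)` record layout, and the `key` callable used to detect duplicates are illustrative assumptions; near-duplicate detection in practice would need a domain-specific key such as a file hash or a fuzzy hash.

```python
from datetime import date


def time_aware_split(samples, cutoff, key=lambda item: item):
    """Split (first_seen, item) records at a cutoff date.

    Items first seen before the cutoff go to train; later items go to test,
    except those whose key already occurs in train (cross-split duplicates).
    """
    train = [item for seen, item in samples if seen < cutoff]
    train_keys = {key(item) for item in train}
    test = [
        item
        for seen, item in samples
        if seen >= cutoff and key(item) not in train_keys
    ]
    return train, test
```

Recording the cutoff date together with the resulting split sizes gives a simple way to document the snapshot window.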

3.2.3. Failsafe Behavior and Safe Defaults

System-cost reporting is often sparse for Malware entries in Table 2, with the Evaluation/system metrics field frequently marked Not reported [98,99,101]. Define explicit timeouts, backoff, and safe defaults, and record degradation paths when features or services are unavailable, then log outcomes using the same metric vocabulary used for evaluation.

3.2.4. Model Updates and Rollback

Version models, feature schemas, and any External sources used (blacklists/metadata) listed in Table 2, and keep immutable, dated snapshots [98,99]. Gate promotions using the same Validation method recorded for this channel and keep decisions consistent with the documented Model selection procedure [98,101,104]. Monitor and log field behavior using the vocabulary of the Evaluation/system metrics field, noting explicitly when system metrics are not reported in the source studies [98,99,101]. Pin versions and refresh cadence for external sources following the table’s “Labels: …; Metadata: …” pattern [98,99,101,104,108].

3.2.5. Explainability for Triage

Provide case-level rationales mapped to the feature families recorded for the Malware channel in Table 2. For ransomware pre-encryption detectors, surface the most influential dynamic API call sequences prior to encryption, as reported for the RISS dataset [98]. For Android malware, show top-contributing static permissions and selected API-call counts consistent with Drebin-based analyses [99]. For traffic-driven detectors, report aggregates aligned with the literature, for example, NetFlow statistics in CTU-13 [101] and DNS-derived fields, such as TTL distributions and query types, in ISOT botnet experiments [104]. Keep summaries concise and restricted to inputs documented in Table 2 for this channel.

3.3. Deployment Checklist for the Phishing Delivery Channel: Electronic Mail

This checklist is anchored in the evidence fields of Table 2 for the Electronic Mail channel (Data, Data quality, Risk of data leakage, Class balance, Validation method, Model selection procedure, Evaluation/system metrics, External sources used (blacklists/metadata)) [45,50,69,75,92,117,118,119,120,121,122,123,124,125,126,127,128,129,130,131,132,133,134].

3.3.1. Privacy Controls

Use signals that studies actually derive from messages: header and body features and attributes of embedded URLs [119,121,122,123]. Representative inputs in Table 2 include header irregularities and sender–recipient patterns, tokenized subject/body features, and URL-level vectors [117,119,121,122,123]. Keep references aligned with the table’s Data quality and External sources used (blacklists/metadata) fields.

3.3.2. Data Collection Risks

Table 2 highlights the risks of merged corpora without thorough deduplication or time-aware separation, and of random splits or k-fold CV that allow leakage across folds [117,119,121,122,123]. Examples include 10-fold CV without deduplication or timestamp isolation [117], and multi-corpus merges with benign-only deduplication and no cross-split deduplication [119]. Mirror the controls in Table 2 by documenting snapshot windows and preventing cross-split overlap.

3.3.3. Failsafe Behavior and Safe Defaults

For many e-mail entries, System metrics are Not reported or limited to training-time figures [117,121]. Use the Evaluation/system metrics vocabulary from Table 2 when recording costs in deployment, and note explicitly when a source study provides no system metrics.

3.3.4. Model Updates and Rollback

Align promotions with the Validation method and Model selection procedure used in the channel studies. Table 2 records random hold-out and k-fold protocols for merged datasets [119,121]; where sources evolve over time, prefer date-aware checks consistent with these entries, and keep snapshot references for comparability.

3.3.5. Explainability for Triage

Provide short rationales tied to the feature families evidenced in the e-mail rows. Surface the most influential header or body indicators and URL attributes when these features are part of the model [117,121,122,123]. Keep explanations consistent with the inputs and metric families used in Table 2 for this channel.

3.4. Deployment Checklist for the Phishing Delivery Channel: Social Networking

This checklist is anchored in the evidence fields of Table 2 for the Social Networking channel (Data quality, Risk of data leakage, Class balance, Validation method, Model selection procedure, Evaluation/system metrics, External sources used (blacklists/metadata)) [85,100,103,135,136,137,138,139].

3.4.1. Privacy Controls

Limit data collection and storage to the signal families actually used by studies in this channel: domain reputation of linked URLs in Twitter spam detection [100]; account- and content-level features for malicious-user detection [103]; profile-level features for Instagram fake-account detection [135]; and behavioral signals relevant to Sybil and multi-account deception [138,139]. Document how these signals are derived and retained, and avoid processing raw personal content beyond what these feature sets require.

3.4.2. Data Collection Risks

Guard against leakage when datasets are merged and randomly split. Table 2 flags a high leakage risk in a study that combined Twitter and Instagram accounts using an 80/20 random hold-out without identity-level separation or deduplication [103]. Use identity- or account-level isolation and avoid random splits in such settings.

When URL or domain features are part of the feature set, ensure grouping and deduplication policies prevent cross-split overlap of identical or near-duplicates, consistent with the risk patterns highlighted for this channel [100,103].
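Identity-level isolation can be approximated by assigning every record of an account to the same split, for instance by hashing the account identifier. The sketch below is an assumption-laden illustration: `assign_split` and `split_by_account` are hypothetical names, the `(account_id, payload)` record layout is invented for the example, and the 20 percent test fraction is a target that the hash only approximates.

```python
import hashlib


def assign_split(account_id: str, test_fraction: float = 0.2) -> str:
    """Map an account deterministically to 'train' or 'test' via a hash bucket."""
    digest = hashlib.sha256(account_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # value in [0, 1)
    return "test" if bucket < test_fraction else "train"


def split_by_account(records, test_fraction=0.2):
    """Split (account_id, payload) records so no account spans both splits."""
    splits = {"train": [], "test": []}
    for account_id, payload in records:
        splits[assign_split(account_id, test_fraction)].append((account_id, payload))
    return splits
```

Because the assignment depends only on the identifier, re-running the split on an updated dataset keeps previously seen accounts on the same side, which also helps when comparing model versions over time.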

3.4.3. Failsafe Behavior and Safe Defaults

When features or feeds are partially unavailable, degrade gracefully by relying on feature families evidenced in Table 2 for this channel, for example domain reputation [100], account or profile features [103,135], and behavioral cues for Sybil or multi-account deception [138,139].

3.4.4. Model Updates and Rollback

Align update checks with the Validation method and Model selection procedure fields recorded for Social Networking entries. For example, mirror the reported hold-out or cross-validation setup during pre-promotion tests, and assess changes using the evaluation metrics reported in Table 2 (Accuracy, Precision, Recall, F1) [103]. Keep versioned, dated snapshots of models and feature schemas so you can revert if metrics regress.

3.4.5. Explainability for Triage

Surface the most influential signals that correspond to Table 2 features for this channel: report reputation indicators for linked domains in tweet-borne spam [100], profile- and content-level attributes used by malicious-user and Instagram fake-account detectors [103,135], and behavioral patterns relevant to Sybil or multi-account deception [138,139]. Keep summaries concise and consistent with the feature families Table 2 documents for Social Networking.

3.5. Summary

Each checklist item maps to Table 2 fields for the corresponding channel, so readers can trace operational guidance back to the reported validation methods, model selection procedures, leakage risks, and system metrics.

4. Discussion

This section presents a comprehensive analysis of research on phishing detection using Machine Learning (ML) and Neural Networks (NN). The analysis is based on the curated Scopus corpus described in Section 2. The results are organized to present both the conceptual landscape and the methodological distribution of studies published between 2017 and 2024. The discussion begins with a keyword co-occurrence analysis. This step highlights dominant topics and their interconnections within the dataset. The section then examines the relationship between global phishing activity and research engagement. A categorization framework is applied to classify publications by delivery channel, applied ML/NN techniques, and research methodology. Subsequent sections investigate international contributions. This is followed by an analysis of methodological patterns across channels. The structure enables identification of dominant approaches, persistent gaps, and emerging areas of interest. This multi-layered structure provides a foundation for interpreting how technical and methodological trends align with evolving phishing threats.

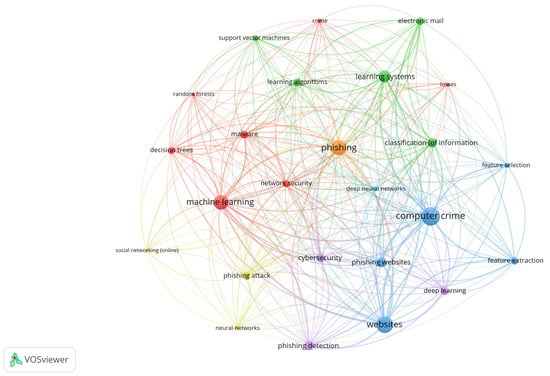

4.1. Keyword Co-Occurrence Map: Dataset, Parameters, and Metrics

This subsection provides a quantitative overview of the keyword landscape in the Scopus corpus exported on 21 July 2025. We use VOSviewer to construct a co-occurrence map from bibliographic data (Figure 3), focusing on index keywords and normalizing terms with a thesaurus file. A minimum occurrence threshold of five was applied; 49 of 737 keywords met this criterion. Fractional counting was used and the 25 most relevant terms were selected for visualization. We report three standard VOSviewer metrics: occurrences (how many publications in this corpus include a given keyword), co-occurrence (how often two keywords appear together in the same publication, with contributions down-weighted for records listing many keywords) and total link strength (the overall strength of a keyword’s connections to all other keywords in the map) [145]. The purpose of Section 4.1 is to complement the qualitative review by identifying the dominant topics and the strongest interrelations strictly within this dataset and configuration.

Figure 3.

Network visualization of relationships between keywords generated using VOSviewer software [146].

In the analyzed map, the most frequent keywords (Occurrences) are computer crime (n = 68), websites (n = 61), phishing (n = 55), machine learning (n = 50), learning systems (n = 39), phishing websites (n = 31), classification (of information) (n = 30), phishing detection (n = 29), cybersecurity (n = 27), and malware (n = 25). The ranking by total link strength matches the ranking by occurrences (same ordering and values): computer crime (68), websites (61), phishing (55), machine learning (50), learning systems (39), phishing websites (31), classification (of information) (30), phishing detection (29), cybersecurity (27), and malware (25). The co-occurrence analysis shows that frequency and connectivity coincide: the most frequent terms are also the most strongly connected to the rest of the vocabulary. No rare yet structurally central terms emerge, and there are no very frequent but weakly connected terms. As a result, the network exhibits a compact conceptual core dominated by a small set of general keywords; we do not observe bridging niche terms that would tie distant topical areas, and the diversity of cross-topic relations is correspondingly limited. These counts refer exclusively to publications in this corpus.
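The three metrics used in this subsection (occurrences, co-occurrence link weight, and total link strength) can be illustrated with a small sketch. It assumes the fractional counting scheme commonly described for VOSviewer, in which a record listing n keywords contributes 1/(n − 1) to each keyword pair; the thesaurus normalization, the occurrence threshold, and the relevance filter are not reproduced, and the function name `cooccurrence` and the toy records are invented for the example.

```python
from collections import defaultdict
from itertools import combinations


def cooccurrence(records, fractional=True):
    """Compute occurrences, pairwise link weights, and total link strength (TLS).

    records: iterable of keyword lists, one list per publication.
    Assumed fractional rule: each pair in a record with n keywords gets 1/(n - 1).
    """
    occurrences = defaultdict(int)
    links = defaultdict(float)
    for keywords in records:
        kws = sorted(set(keywords))
        for kw in kws:
            occurrences[kw] += 1
        if len(kws) < 2:
            continue  # a single keyword forms no link
        weight = 1.0 / (len(kws) - 1) if fractional else 1.0
        for a, b in combinations(kws, 2):
            links[(a, b)] += weight
    tls = defaultdict(float)
    for (a, b), weight in links.items():
        tls[a] += weight
        tls[b] += weight
    return dict(occurrences), dict(links), dict(tls)
```

Under this assumed weighting, every record with at least two keywords adds exactly 1 to the total link strength of each of its keywords, so total link strength and occurrences can coincide when all links of a keyword are counted. This may help explain why the two rankings reported here carry identical values, although the exact computation performed by the software is not verified in this sketch.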

We analyze the color-coded clusters in the VOSviewer co-occurrence map to see how keywords group together and how strongly they are connected within this corpus. For each cluster, we report the top keywords by Occurrences and by Total Link Strength to establish both frequency and connectivity. We describe internal connectivity by reporting the number of links that key terms in the cluster have with other terms and by identifying the strongest edges within the cluster and to neighboring clusters. We also check alignment with the delivery channels introduced in Section 3 (Websites, Malware, Electronic Mail, Social Networking), ensuring that the quantitative structure matches the substantive organization of the review. Finally, we add practical significance—what the observed patterns suggest for data, features, model placement, or evaluation—stating such implications cautiously when the evidence is indirect.

Across clusters, we look for signals that shape the narrative: whether bridging terms appear (rare but central keywords that connect distant areas) or are absent; whether the map shows cohesion or separation (a compact core versus dispersed topics); and whether frequency and connectivity are consistent (that is, whether Occurrences and TLS identify the same or different sets of key terms).

Cluster 1 (red, machine-learning-centric)

Within this cluster, the dominant keywords are machine learning (n = 50), malware (n = 25), decision trees (n = 21), network security (n = 21), crime (n = 10), losses (n = 9), and random forests (n = 9). Internally, the subgraph is fully connected: every term links to every other term in the cluster, with the strongest internal edges observed for decision trees–machine learning (≈3.31), machine learning–malware (≈3.09), and malware–network security (≈1.66). Externally, this cluster is tightly integrated with the network’s core concepts: machine learning forms high-weight links to phishing (≈5.66) and websites (≈4.59), and it also connects to phishing detection (≈2.63) and electronic mail (≈2.12). Degree counts underline this connectivity: machine learning and malware are linked to 24 of the 24 other selected terms (Links = 24, i.e., 24 is the maximum possible number of links in this map given the selected parameters), and network security links to 23. Taken together, these patterns indicate that the cluster aligns with multiple delivery channels from Section 3—most directly with Malware (present inside the cluster) and, via strong cross-links, with Websites and Electronic Mail—so its role is methodological and cross-channel rather than tied to a single medium. Practically, this suggests keeping robust classical ML baselines (e.g., decision trees, random forests) alongside newer models, reporting results per channel where possible (malware/web/email) and checking for data leakage between related samples, since the same ML methods are widely reused across contexts in this corpus.

Cluster 2 (green; learning-oriented + e-mail)

In this cluster the dominant keywords are learning systems (n = 39), classification (of information) (n = 30), learning algorithms (n = 24), electronic mail (n = 23), and support vector machines (n = 16). Internally, the strongest links are learning algorithms–learning systems (~2.76), electronic mail–learning systems (~2.46), classification–electronic mail (~2.09), and classification–learning systems (~2.08); remaining pairs (e.g., with SVM) also connect but with lower weights (~1.20, ~1.10, ~0.98). By degree, classification (of information) connects to 24 other selected terms (Links = 24), while learning systems and learning algorithms connect to 23, and electronic mail and SVM to 22.

Externally, this cluster is well connected to the network core. The highest-weight cross-cluster edges include computer crime–learning systems (~5.82), learning systems–websites (~3.67), learning systems–phishing (~3.01), electronic mail–phishing (~2.89), classification–websites (~2.86), classification–phishing (~2.69), and several links to machine learning and malware (~2.46–2.36). Read together, these patterns indicate that Cluster 2 captures the learning/classification spine of the literature with a clear attachment to the Electronic Mail channel, while remaining strongly coupled to the web-centric and general “abuse” terminology at the network’s core.

Practical significance (cautious): The prominence of classification/learning alongside electronic mail suggests prioritizing well-specified e-mail feature sets (headers/body/attachments) and stable baselines (e.g., SVM) in evaluations, with metrics reported under class imbalance. The dense links from learning systems to websites/phishing suggest reporting per-channel results (email vs. web) and checking for data leakage, since the same learning setups recur across channels in this corpus.

Cluster 3 (blue; web-centric detection focus)

This cluster centers around keywords related to websites and detection strategies. The dominant terms are websites (n = 61), phishing websites (n = 31), phishing detection (n = 29), phishing (n = 55), detection rate (n = 12), and false positive rate (n = 7). Internally, the strongest edges are between websites–phishing (~5.75), websites–phishing detection (~4.38), and phishing–phishing websites (~3.36). This subgraph is densely connected, with websites and phishing each linked to 24 of the 24 other selected terms (Links = 24), and phishing detection to 22. These degree counts confirm that the cluster is tightly embedded in the network’s conceptual core.

Cross-cluster connectivity is also strong: websites links to machine learning (~4.59), learning systems (~3.67), classification (~2.86), and electronic mail (~2.55), among others. Phishing detection also bridges to the machine-learning-centric cluster through links to decision trees, support vector machines, and random forests. This high degree of integration indicates that website-based phishing remains a dominant testbed for evaluating learning algorithms, particularly for classification tasks and metrics such as detection rates and false positive rates.

The practical implication of this structure is twofold. First, it suggests that many detection systems, especially those benchmarked in this literature, have been trained and tested on datasets derived from phishing websites. Second, because these website-centered terms are highly connected to general learning methods and metrics, results from such studies may not generalize to other delivery channels (e.g., e-mail or malware). Therefore, reporting performance per delivery channel becomes essential. Without such disaggregation, conclusions drawn from web-based benchmarks may be incorrectly extrapolated to e-mail or malware contexts, despite the structural and behavioral differences between them. This is particularly important in studies that reuse similar learning pipelines across multiple types of data; separation helps avoid conflating distinct detection challenges and feature spaces.

Cluster 4 (yellow; neural networks + social networking)

This cluster groups together the keywords phishing attack (n = 22), neural networks (n = 10), and social networking (online) (n = 8). It forms a distinct but peripheral area on the map, with relatively low frequencies and total link strengths. The strongest internal links are phishing attack–neural networks (~1.19) and phishing attack–social networking (online) (~0.79), while neural networks and social networking are weakly connected to each other and to the rest of the network. All three terms exhibit lower external integration compared to the main ML-related nodes.

Despite these limitations, phishing attack is linked to 24 other terms on the map, including phishing (~1.67), phishing detection (~1.27), machine learning (~1.86), and multiple learning methods such as decision trees (~0.62), support vector machines (~0.37), and deep learning (~1.06). These connections confirm that phishing attack functions as the conceptual hub of the cluster and serves as a bridge to the core ML vocabulary.

The presence of neural networks and social networking (online) in this cluster suggests that these publications investigate phishing attacks on social media platforms using neural architectures. However, the relative isolation of social networking (Links = 16) and the weak integration of neural networks (Links = 20) imply that this direction is still underrepresented in the dataset. Stronger ties between phishing attack and central terms like phishing detection and machine learning confirm topical alignment, but the low co-occurrence weights suggest that this area remains niche.

Practical significance (cautious): The limited size and sparse connectivity of this cluster imply that the use of neural networks for detecting phishing on social networking platforms is still emerging. The strong dependence on phishing attack as a bridging term and the weak ties of neural networks and social networking (online) to the broader ML ecosystem highlight a potential gap in the literature. This suggests a need for more studies applying neural network architectures in social media contexts, with attention to platform-specific features and evolving threat models.

Cluster 5 (purple; phishing detection + cybersecurity + deep learning)

This cluster comprises phishing detection (n = 29), cybersecurity (n = 27) and deep learning (n = 24). Internal links are moderate: phishing detection–cybersecurity (2.13), phishing detection–deep learning (2.08) and cybersecurity–deep learning (0.92). Each keyword connects to almost every other node in the network (cybersecurity = 24 links; phishing detection = 23; deep learning = 23), exhibiting high network centrality rather than dense intra-cluster cohesion. Such “connector-hub” behavior (high degree centrality with weaker internal density) matches patterns described in bibliometric network theory [145].

Strong cross-cluster ties reinforce this bridging role: deep learning–websites (2.85) and cybersecurity–websites (2.07) link to the web-centric cluster; deep learning–phishing (2.51) and phishing detection–machine learning (2.63) anchor the group to classical ML topics; phishing detection also couples to decision trees (1.01) and support vector machines (0.55). Connections to electronic mail (1.22, 1.17, 0.52, respectively) show that research framed by this cluster spans multiple delivery channels outlined in Section 3.

Practical significance (cautious). The mixture of deep-learning terms with classical models and several attack channels (Websites, Electronic Mail, Social Networking) suggests that neural architectures are typically evaluated alongside, not in isolation from, traditional algorithms. Comparative studies that disclose full model configurations and report channel-specific metrics remain essential for reproducibility and for quantifying the incremental benefit of deep models.

Cluster 6 (teal; deep neural networks)

This cluster is a single-node group, containing only deep neural networks (n = 12); consequently, no internal edges exist. However, the term links to 23 of the other 24 keywords (Links = 23), giving it a total-link-strength of 12.00 and marking it as a narrowly defined yet well-connected node in the overall map.

The strongest outward links are to websites (1.23), computer crime (1.23), learning systems (1.10), phishing (1.08) and deep learning (0.67). Additional edges above 0.60 connect to learning algorithms (0.65), phishing attack (0.65) and phishing websites (0.62). These values—lower than the top weights in Clusters 1–5—confirm that deep neural networks function as a bridge term referenced across web-centric, crime-focused and learning-method studies rather than as the nucleus of a cohesive sub-topic.

Practical significance (cautious). The single-node status reveals a vocabulary split: some papers prefer the generic label deep learning, others the more specific deep neural networks. Keeping the terms distinct preserves fidelity to the source dataset. Subsequent sections will discuss results under broader headings, but in this section the two labels remain separate to reflect the Scopus classification exactly.

Cluster 7 (orange; phishing core term)

This cluster is a single-node group containing only phishing (n = 55). Because no companion keywords belong to the same cluster, there are no internal edges. Even so, phishing links to every other selected keyword (Links = 24) and has the highest total-link-strength in the map (TLS = 55), confirming its role as the conceptual hub of the entire network.

The strongest outward edges tie phishing to websites (6.77), computer crime (6.02) and machine learning (5.66). Additional high-weight links include learning systems (3.01), electronic mail (2.89), classification (of information) (2.69), phishing websites (2.65), deep learning (2.51), phishing detection (2.33) and malware (2.25). This pattern shows that the term acts as an all-purpose connector across every attack channel and methodological family represented in the corpus.

Practical significance (cautious). The single-node status illustrates how a broad, domain-wide keyword can dominate co-occurrence metrics, potentially masking finer distinctions among delivery channels or model types. Retaining phishing as a standalone label preserves fidelity to the Scopus dataset; however, later analytical sections will treat this core term as an overarching context, while narrower keywords (e.g., phishing websites, phishing detection) provide channel- and task-specific detail.

To keep the keyword map aligned with the goals of this review, we applied a minimum-occurrence threshold of five, fractional counting and a “top-25 most relevant terms” filter. These settings reduce visual noise and stabilize co-occurrence statistics, ensuring that the visualization highlights the core vocabulary and its strongest relationships.

Within the resulting map, frequency and connectivity coincide: computer crime, websites, phishing, and machine learning are simultaneously the most frequent (n ≈ 50–68) and the most strongly linked (TLS ≈ 50–68). No rare yet structurally central keywords appear, and no very frequent but weakly connected ones emerge. Consequently, the network exhibits a compact conceptual core dominated by a small set of broadly framed terms, with color-coded clusters aligning closely to the delivery channels defined in Section 3.

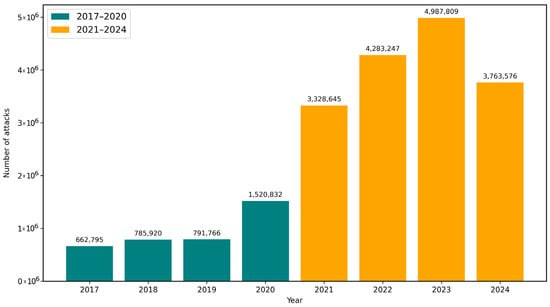

4.2. Trends in Global Phishing Activity

Table 2 presents a numbered review of scientific articles published between 2017 and 2024 that focus on machine learning and neural networks for phishing detection from different perspectives. To contextualize the evolution of these methods, our primary metric is the number of unique phishing websites detected in each quarter, which serves as a reliable indicator of the overall scale and evolution of phishing attacks over time. We follow the APWG reporting convention for “unique phishing websites” as documented in the quarterly reports; year-to-year definitional notes are enumerated in Supplement Table S1 and were taken into account during aggregation. Based on an analysis of phishing attack data reported by APWG [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32] (Figure 4) between 2017 and 2024, we divided the period into two intervals to reflect significant changes in attack dynamics. The series comprises 32 quarterly observations (2017 Q1–2024 Q4) derived from the extraction sheet provided in the Supplement. The raw APWG data in CSV format (apwg_data.csv) and the Python script used for analysis (trend_break_analysis.py, Table S4) are included in the Supplementary Materials.

Figure 4.

Number of reported phishing attacks worldwide between 2017 and 2024, based on Anti-Phishing Working Group (APWG) Phishing Activity Trends Reports.

For the APWG quarterly data, a two-segment model identifies a statistically significant structural break in Q3 2020 (F = 18.1192, p = 0.00020), indicating a sharp increase in phishing activity. Although this analysis reveals several statistically significant breaks around the 2020–2021 period (including 2020 Q1, 2020 Q2, 2020 Q4, 2021 Q1, and 2021 Q2), the strongest statistical evidence for a fundamental shift in trend is located in the second half of 2020. These results consistently justify the division of the timeline into two phases.
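The idea behind a two-segment structural break test can be sketched with a Chow-type F statistic that compares a single linear trend against two separately fitted segments. This is not the supplementary script trend_break_analysis.py, whose contents are not reproduced here; the function names, the minimum segment length, and the use of a plain time index as the regressor are assumptions, and no p-value or bootstrap interval is computed.

```python
def fit_rss(xs, ys):
    """Residual sum of squares of an ordinary least-squares line y = a + b*x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))


def chow_f(ys, break_index):
    """Chow-type F statistic for a break before position break_index.

    Assumes the split model has nonzero residual variance.
    """
    xs = list(range(len(ys)))
    k = 2  # parameters per segment: intercept and slope
    rss_pooled = fit_rss(xs, ys)
    rss_split = fit_rss(xs[:break_index], ys[:break_index]) + fit_rss(
        xs[break_index:], ys[break_index:]
    )
    return ((rss_pooled - rss_split) / k) / (rss_split / (len(ys) - 2 * k))


def best_break(ys, min_seg=3):
    """Candidate break with the largest F, keeping min_seg points per segment."""
    candidates = range(min_seg, len(ys) - min_seg + 1)
    return max(candidates, key=lambda i: chow_f(ys, i))
```

Scanning all candidate positions and taking the maximum F inflates significance relative to a single pre-specified break, which is one reason break-date uncertainty is usually assessed separately, for example by resampling.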

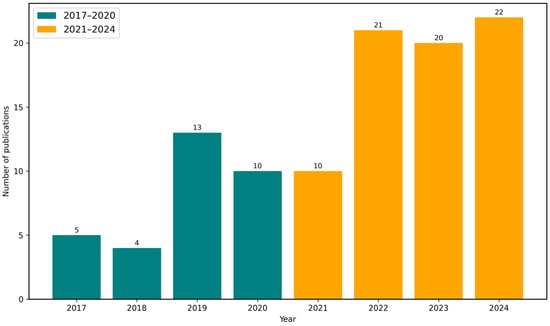

Figure 5 presents the annual distribution of publications in the analyzed corpus between 2017 and 2024. For annual publication counts, while joinpoint tests are underpowered with eight points, a Poisson block comparison shows a 2.28-fold higher publication rate in 2021–2024 versus 2017–2020 (95 percent CI 1.51–3.46).
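A block comparison of this kind reduces to a rate ratio between two periods with a Wald interval on the log scale. The sketch below shows the arithmetic only; the counts 32 and 73 are placeholders chosen so that they total the 105 publications in the corpus and are not taken from Table 3, and the function name `rate_ratio_wald` is hypothetical.

```python
import math


def rate_ratio_wald(count_a, count_b, exposure_a=1.0, exposure_b=1.0, z=1.96):
    """Rate ratio (b versus a) for two Poisson counts with a Wald interval.

    The standard error of the log rate ratio is sqrt(1/count_a + 1/count_b).
    """
    ratio = (count_b / exposure_b) / (count_a / exposure_a)
    se_log = math.sqrt(1.0 / count_a + 1.0 / count_b)
    lower = math.exp(math.log(ratio) - z * se_log)
    upper = math.exp(math.log(ratio) + z * se_log)
    return ratio, lower, upper


# Placeholder counts (assumption): 32 publications in 2017-2020, 73 in 2021-2024,
# each period covering four years of exposure.
ratio, lower, upper = rate_ratio_wald(32, 73, exposure_a=4, exposure_b=4)
print(f"rate ratio {ratio:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

Because both periods span four years, the exposure terms cancel and the ratio depends only on the two counts; with unequal periods the exposures would need to reflect the number of years in each block.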

Figure 5.

Number of publications per year between 2017 and 2024, based on corpus.

Consistently, the APWG quarterly series exhibits a structural break in 2021 Q2, with a 95 percent confidence interval spanning 2021 Q1 to 2021 Q3 (F = 11.65, p = 0.00021); a monthly reanalysis identifies April 2021 with comparable significance (F = 30.78, p < 1 × 10⁻¹⁰). Incidence rate ratios (IRR) were estimated with a Poisson generalized linear model using a post-2020 indicator; 95 percent confidence intervals are Wald intervals, and a Negative Binomial sensitivity analysis produces similar point estimates. Breakpoints were estimated using piecewise linear regression with a Chow-type comparison against a single-trend model and residual bootstrap for the break-date uncertainty; joinpoint regression is reported as a sensitivity check. Consequently, separating the timeline into two distinct phases, pre-2021 (moderate growth) and post-2021 (high-intensity attacks), enables more accurate trend analysis and contextual interpretation of technological advancements in detection methods, particularly those leveraging machine learning models and neural network architectures.

4.3. Categorization Framework for Analyzed Publications

Table 3 presents a quantitative review of scientific articles published between 2017 and 2024, showing the number of publications across predefined categories and features related to machine learning and neural networks for phishing detection.

Table 3.

Publications across all categories by time period (2017–2020, 2021–2024).

The categorization applied for the analysis of publications is structured into three main dimensions: Phishing Delivery Channels, Machine Learning Models and Techniques, and Research Methodology (Table 3). This approach allows for a systematic examination of studies based on both the nature of phishing threats and the technical solutions proposed for detection.

The first category, Phishing Delivery Channels, includes four primary vectors through which phishing attacks are executed: Websites, Malware, Electronic Mail, and Social Networking. These channels represent the main media exploited by attackers, enabling the differentiation of research based on the attack surface. Grouping by delivery channel is essential because defensive strategies and detection mechanisms often vary significantly depending on the context (e.g., email-based phishing vs. website-based phishing).

The second category, Machine Learning Models and Techniques, focuses on the machine learning and neural network approaches utilized for phishing detection: Machine Learning, Neural Networks, Classification and Ensembles, and Feature Engineering. This categorization enables evaluation of the specific algorithms, learning paradigms, and feature selection strategies applied in the studies. It is justified by the need to understand not only which algorithms are employed but also how feature engineering contributes to detection performance, as it often plays a critical role in phishing detection systems.

The third category, Research Methodology, addresses the methodological basis of the studies: Experiment, Literature Analysis, Case Study, and Conceptual. This classification reflects the level of empirical validation and scientific rigor of the research. Experimental studies typically provide quantitative performance metrics, while conceptual papers may introduce theoretical frameworks or new models without extensive testing.

This multidimensional classification provides a comprehensive lens for analyzing research from three perspectives: the problem domain (delivery channels), the applied solution (machine learning methods), and the scientific approach (research methodology). It ensures comparability across studies and highlights trends, strengths, and gaps in the existing literature.

It is important to note that the total number of publications within individual categories does not sum up to 105 (or 100%), as some studies were classified under multiple categories. This overlap occurs because a single publication may address several delivery channels, apply different machine learning techniques, or combine various research methodologies. Consequently, a strictly mutually exclusive classification was not possible, and the categorization should be interpreted as a representation of thematic coverage rather than distinct groups.
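The effect of non-exclusive coding on category totals can be illustrated with a short sketch. The publication identifiers and their channel assignments below are hypothetical and serve only to show why per-category counts can sum to more than the number of publications.

```python
from collections import Counter

# Hypothetical coding sheet (assumption): each publication may carry
# more than one delivery-channel label.
publications = {
    "P1": {"Websites"},
    "P2": {"Websites", "Electronic Mail"},
    "P3": {"Websites", "Malware"},
    "P4": {"Social Networking"},
}

# Count every (publication, channel) assignment once.
channel_counts = Counter(
    channel for channels in publications.values() for channel in channels
)

total_publications = len(publications)
total_assignments = sum(channel_counts.values())
```

In this toy example, four publications produce six channel assignments, so the per-channel counts describe thematic coverage rather than a partition of the corpus.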

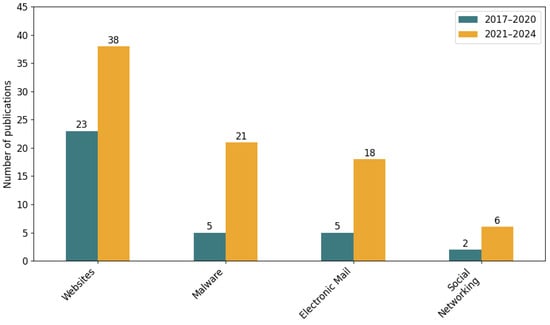

The distribution of publications across phishing delivery channels (Figure 6) indicates a clear research focus on web-based phishing. A total of 61 studies (approximately 58% of the analyzed sample) addressed the detection of phishing on websites. In comparison, 26 publications (≈25%) explored malware-related phishing, while 23 studies (≈22%) concentrated on phishing through electronic mail. Only 8 studies (≈8%) investigated threats originating from social networking platforms. This pattern remained relatively stable across the examined periods, confirming the persistent dominance of website-based phishing as the primary research area.

Figure 6.

Number of publications per phishing delivery channel by period (2017–2020 vs. 2021–2024).

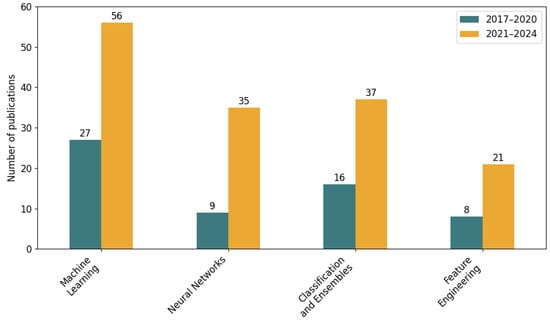

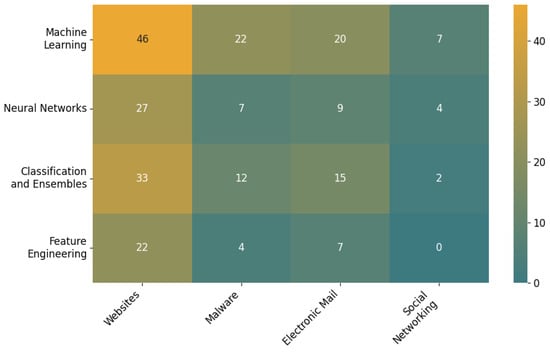

The Machine Learning Models and Techniques category (Figure 7) encompasses various approaches and components applied in phishing detection research. The Machine Learning subcategory includes studies that utilize general supervised [83,85,97,101,104,111,113,126], semi-supervised [109,124], unsupervised [97] or mixed [85,131] learning models for phishing detection.

Figure 7.

Number of publications per Machine Learning Models and Techniques subcategory.

The Neural Networks subcategory covers research employing deep learning [41,44,49,54,64,66], convolutional neural networks (CNN) [40,50] or artificial neural networks [65,68] to classify phishing threats.

The Classification and Ensembles subcategory refers to approaches that combine multiple classifiers (e.g., Random Forest, boosting) to improve prediction performance [42,51,64,71].

The Feature Engineering subcategory involves techniques for selecting, extracting, and optimizing input features to enhance model accuracy and reduce complexity [38,44,48,73].

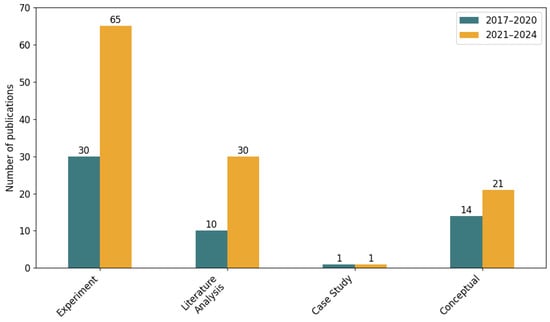

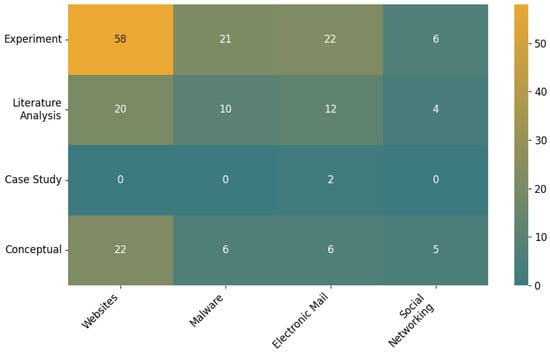

The Research Methodology category (Figure 8) refers to the approach adopted by authors to conduct their research. It includes experimental research [66,68,70], where models such as machine learning algorithms or neural networks are implemented and tested on datasets to evaluate performance. Literature analysis [71,138,139] involves reviewing and synthesizing existing research to identify trends and techniques. The Case Study subcategory involves practical research conducted in real-world environments, such as developing phishing email detection models using actual company data [134] or implementing real-time spear phishing detection within organizational networks to validate effectiveness in operational settings [120]. Conceptual research [66,72] introduces new frameworks, models, or theoretical concepts without extensive experimental validation.

Figure 8.

Number of publications per research methodology by period.

4.4. International Research Contributions in Phishing Detection

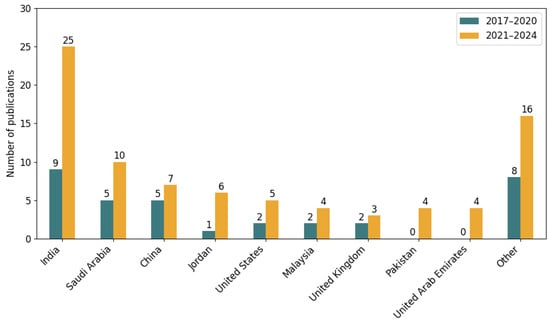

The dataset presents the distribution of phishing detection research publications using ML and NN across countries between 2017 and 2024 (Figure 9). The timeline is split into two subperiods, 2017–2020 and 2021–2024, allowing observation of temporal trends in research activity.

Figure 9.

Publications by country and period (2017–2020 vs. 2021–2024).

During 2017–2020, the total output across all countries was 32 publications. This number more than doubled in the subsequent period (2021–2024), reaching 73 publications, indicating a clear acceleration in global research efforts. In total, 105 publications were identified for the full period.

India leads the ranking with 34 publications (32.38% of all records). The country shows strong growth, increasing from 9 publications in the first period to 25 in the second, suggesting a significant expansion of academic and institutional engagement in ML- and NN-based phishing detection research.

Saudi Arabia holds the second position with 15 publications (14.29%), also showing a positive trend—from 5 to 10 publications between the two periods. China follows with 12 publications (11.43%), maintaining steady growth from 5 to 7 publications.

Jordan and the United States each contributed 7 publications (6.67%), with Jordan showing a sharp increase (from 1 to 6), while the United States exhibited a more gradual rise (from 2 to 5). Malaysia’s output grew from 2 to 4 publications, for a total of 6 (5.71%). The United Kingdom produced 5 publications (4.76%) over the period, with a modest increase from 2 to 3.

Notably, Pakistan and the United Arab Emirates contributed no publications in the first period but entered the field in 2021–2024 with 4 publications each (3.81%). This emergence may reflect a recent strategic focus or the establishment of new research programs.

The “Other” category, encompassing all remaining countries, accounts for 24 publications (22.86%), increasing from 8 to 16 publications.
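The country shares quoted above follow directly from the reported counts. The sketch below recomputes them against the 105-publication corpus; the counts are taken from the text, while the variable and function names are illustrative.

```python
CORPUS_SIZE = 105
FIRST_PERIOD_TOTAL = 32   # 2017-2020, as reported in the text
SECOND_PERIOD_TOTAL = 73  # 2021-2024, as reported in the text

# (publications in 2017-2020, publications in 2021-2024), from the text.
counts = {
    "India": (9, 25),
    "Saudi Arabia": (5, 10),
    "China": (5, 7),
    "Jordan": (1, 6),
    "United States": (2, 5),
    "Malaysia": (2, 4),
    "United Kingdom": (2, 3),
    "Pakistan": (0, 4),
    "United Arab Emirates": (0, 4),
    "Other": (8, 16),
}


def country_share(first, second, corpus_size=CORPUS_SIZE):
    """Return (total publications, percentage of the corpus to two decimals)."""
    total = first + second
    return total, round(100 * total / corpus_size, 2)


shares = {country: country_share(*periods) for country, periods in counts.items()}
growth_ratio = round(SECOND_PERIOD_TOTAL / FIRST_PERIOD_TOTAL, 2)
sum_of_country_totals = sum(total for total, _ in shares.values())
```

With these inputs, India's share evaluates to 32.38% and the period-over-period growth ratio to about 2.28. The per-country totals add up to 118 rather than 105; one possible reading, which the text does not state, is that a publication with authors from several countries is counted once per country.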

The data reveal a substantial increase in global research activity on phishing detection using Machine Learning and Neural Networks, with publication output more than doubling between the first and second periods (from 32 to 73). This upward trend confirms the growing importance of the topic in the international cybersecurity agenda. Notably, the entry of Pakistan and the United Arab Emirates in the later years suggests the emergence of new regional initiatives and the possible influence of targeted funding schemes. India stands out as the leading contributor, combining the highest publication volume with consistent growth, which points to a strong academic and industrial foundation in learning-based research for cybersecurity. The growth of the “Other” group (from 8 to 16 publications) indicates a gradual broadening of participation, with more countries contributing to the field despite lower individual outputs. In addition, the substantial presence of Saudi Arabia, Jordan, and the United Arab Emirates highlights the Middle East as an emerging region of interest, reflecting increasing investment in learning-based security solutions.

4.5. Technical and Methodological Approaches to Phishing Detection by Channel

The purpose of this section is to quantify and interpret how research approaches are distributed across phishing delivery channels (Table 4). Using numerical data, descriptive statistics, and visual representations, this section identifies dominant research strategies, notes methodological trends, and highlights underexplored intersections that offer opportunities for further study. Shares are calculated within each channel (not against the 105-publication corpus). Percentages therefore reflect the proportion of occurrences within each channel, and counts are shown in parentheses. Totals across channels or categories may exceed 105 because individual publications can be coded to multiple categories and, in some cases, to multiple channels.

Table 4.

Publications per phishing delivery channel, cross-tabulated with the other categories.

Websites. Among the Machine Learning Models and Techniques topics (Figure 10), Machine Learning accounts for 36% (46 documents), Neural Networks for 21% (27), Classification and Ensembles for 26% (33), and Feature Engineering for 17% (22). This mix indicates a balanced focus between model-centric work and feature-driven design for web data. In methodology (Figure 11), experimental studies dominate at 58% (58 documents), with literature analysis at 20% (20) and conceptual contributions at 22% (22). No case studies are recorded, 0% (0). The prevalence of experiments suggests dataset-based evaluation pipelines for website phishing detection, while the share of conceptual work indicates ongoing refinement of problem framing and architectures.

Figure 10.

Cross-tabulation of machine learning models and techniques applied to phishing detection across different delivery channels.

Figure 11.

Cross-tabulation of research methodologies used in phishing detection studies across different delivery channels.

Malware. For the Machine Learning Models and Techniques topics (Figure 10), Machine Learning accounts for 49% (22 documents), Classification and Ensembles for 27% (12), Neural Networks for 16% (7), and Feature Engineering for 9% (4). The pattern emphasizes general machine-learning solutions and ensemble strategies, while explicit feature-engineering reports are less common. Methodologically, experiments again lead with 57% (21), followed by literature analysis at 27% (10) and conceptual work at 16% (6); case studies are not present, 0% (0). This distribution points to sustained empirical testing, with secondary emphasis on evidence synthesis and problem conceptualization.

Electronic Mail. Topic shares are Machine Learning 39% (20), Classification and Ensembles 29% (15), Neural Networks 18% (9), and Feature Engineering 14% (7). The profile is more evenly spread across learning approaches than in malware. Methodologically, experiments constitute 52% (22), literature analysis 29% (12), conceptual work 14% (6), and case studies 5% (2). Notably, case studies appear only in this channel, indicating efforts to situate findings in concrete organizational or campaign contexts [120,134].

Social Networking (online). Topic shares are Machine Learning 54% (7), Neural Networks 31% (4), and Classification and Ensembles 15% (2). The emphasis falls on learning-driven approaches, with a comparatively high share for Neural Networks. In methodology, experiments account for 40% (6), conceptual work 33% (5), and literature analysis 27% (4). The relatively greater weight of conceptual contributions suggests this channel is still consolidating tasks, data representations, and evaluation standards. Interpretations for this channel should be made with caution due to a small base of five unique publications.

Machine Learning Models and Techniques topics concentrate on the websites phishing delivery channel (Figure 10). Unlike the within-channel shares above, the following percentages are expressed relative to the 105-publication corpus. For the Machine Learning subcategory, the distribution is Websites 44% (46), Malware 21% (22), Electronic Mail 19% (20), and Social Networking 7% (7). For Neural Networks, the distribution is Websites 26% (27), Malware 7% (7), Electronic Mail 9% (9), and Social Networking 4% (4). For Classification and Ensembles, the distribution is Websites 31% (33), Malware 11% (12), Electronic Mail 14% (15), and Social Networking 2% (2). For Feature Engineering, the distribution is Websites 21% (22), Malware 4% (4), Electronic Mail 7% (7), and Social Networking 0% (0).
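The percentages in this section rest on two different denominators: the per-channel paragraphs divide by the number of coded occurrences within a channel, whereas the technique-by-channel distribution divides by the 105-publication corpus. The sketch below recomputes both from the counts reported in the text; the function names are illustrative, and rounding to whole percentages is assumed.

```python
CORPUS_SIZE = 105

# Occurrence counts as reported in the text: technique -> channel -> count.
counts = {
    "Machine Learning": {
        "Websites": 46, "Malware": 22, "Electronic Mail": 20, "Social Networking": 7,
    },
    "Neural Networks": {
        "Websites": 27, "Malware": 7, "Electronic Mail": 9, "Social Networking": 4,
    },
    "Classification and Ensembles": {
        "Websites": 33, "Malware": 12, "Electronic Mail": 15, "Social Networking": 2,
    },
    "Feature Engineering": {
        "Websites": 22, "Malware": 4, "Electronic Mail": 7, "Social Networking": 0,
    },
}


def within_channel_share(technique, channel):
    """Share of a technique among all technique occurrences coded to a channel."""
    channel_total = sum(row[channel] for row in counts.values())
    return round(100 * counts[technique][channel] / channel_total)


def corpus_share(technique, channel):
    """Share of the same count relative to the whole corpus."""
    return round(100 * counts[technique][channel] / CORPUS_SIZE)


ml_web_within = within_channel_share("Machine Learning", "Websites")
ml_web_corpus = corpus_share("Machine Learning", "Websites")
```

The same count of 46 website studies using Machine Learning therefore appears as 36% within the Websites channel (46 of 128 occurrences) and as 44% of the corpus (46 of 105 publications).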

The results indicate a clear channel hierarchy. Websites concentrate the majority of work across topics and methods. Malware and electronic mail receive moderate but steady attention. Social networking remains underrepresented, including no instances of feature engineering, 0% (0). Case studies are almost absent and occur only in electronic mail, where they represent about 2% of the corpus (2). These gaps highlight opportunities for deeper empirical and design-oriented studies in social networking and for more case-based evaluations across all channels.

In response to this gap, recent research explores transformer-based models for detecting fake or inauthentic profiles on social platforms. For instance, [147] introduces an encoder-only, attention-guided Transformer that captures profile and behavioral signals using positional encodings and multi-head self-attention. The attention weights emphasize attributes such as follower count, number of favorites, and total posts. Hyperparameters are optimized using a Tree-structured Parzen Estimator. This method reduces dependence on manual feature engineering and offers built-in explainability to support triage workflows. We reference [147] to highlight the relevance of social-media impersonation, which enables pretext creation and dissemination in phishing campaigns.
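To make the attention mechanism mentioned above more concrete, the following sketch computes single-head scaled dot-product self-attention over three attribute tokens. It is a deliberately simplified illustration and not the architecture of [147]: the token embeddings are hypothetical values, queries, keys, and values are the raw tokens (no learned projection matrices), and positional encodings, multiple heads, and hyperparameter search are omitted.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Single-head scaled dot-product self-attention with identity projections.

    Returns the attended outputs and the attention weight matrix, where
    weights[i][j] is how strongly token i attends to token j.
    """
    dim = len(tokens[0])
    outputs, weights = [], []
    for query in tokens:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        row = softmax(scores)
        weights.append(row)
        outputs.append(
            [sum(w * value[i] for w, value in zip(row, tokens)) for i in range(dim)]
        )
    return outputs, weights


# Hypothetical 2-dimensional embeddings (assumption) for three profile attributes.
attribute_names = ["follower_count", "favorites", "total_posts"]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

outputs, weights = self_attention(tokens)
```

Each row of `weights` sums to one, so it can be read as a distribution over attributes. Inspecting such rows is the kind of signal that attention-based explanations draw on, although attention weights in a trained multi-head model are more involved than in this sketch.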

A complementary research direction involves agentic Large Language Model (LLM) pipelines that leverage social-media streams as sources of cyber threat intelligence. Retrieval-augmented agents can collect suspicious posts and profiles, contextualize them using open-source reports, and integrate entities and tactics into a knowledge graph to assist analysts in triage and attribution. This line of work also motivates the development of multimodal defenses against deepfakes and chatbot-driven social engineering, alongside time-sensitive evaluation methods for rapidly evolving campaigns [148].

Another related approach adapts reasoning-centric, multimodal link analysis—originally developed for email security—to social-network content. Recent findings on phishing-email URLs demonstrate improved accuracy when models receive layered metadata for each link, including domain information, certificate details, regulatory filings, browser context, and Optical Character Recognition (OCR) of rendered previews, and when they generate explanations prior to predictions. Applying this framework to social-network posts involves combining post text, account metadata, rendered previews, and explanation-first prompting, thereby enhancing robustness and operator trust [149].