Abstract

The rapid response of emergency vehicles is crucial but often hindered because sirens lose effectiveness in modern traffic due to soundproofing, noise, and distractions. Automatic in-vehicle detection can help, but existing solutions struggle with efficiency, interpretability, and embedded suitability. This paper presents a hardware-constrained Simulink implementation of a yelp siren detector designed for embedded operation. Building on a MATLAB-based proof-of-concept validated in an idealized floating-point setting, the present system reflects practical implementation realities. Key features include the use of a realistically modeled digital-to-analog converter (DAC), filter designs restricted to standard E-series component values, interrupt service routine (ISR)-driven processing, and fixed-point data type handling that mirror microcontroller execution. For benchmarking, the dataset used in the earlier proof-of-concept to tune system parameters was also employed to train three representative machine learning classifiers (k-nearest neighbors, support vector machine, and neural network), serving as reference classifiers. To assess generalization, 200 test signals were synthesized with AudioLDM using real siren and road noise recordings as inputs. On this test set, the proposed system outperformed the reference classifiers and, when compared with state-of-the-art methods reported in the literature, achieved competitive accuracy while preserving low complexity.

1. Introduction

In time-critical emergencies the speed and efficiency of response of the corresponding authorities are paramount. Minimizing the time it takes for emergency services to reach a scene and transport individuals to medical facilities directly influences the outcome of these interventions. To achieve this, emergency vehicles often resort to maneuvers that would be prohibited for civilian drivers. However, ensuring public safety requires that other road users be adequately and promptly alerted to their presence.

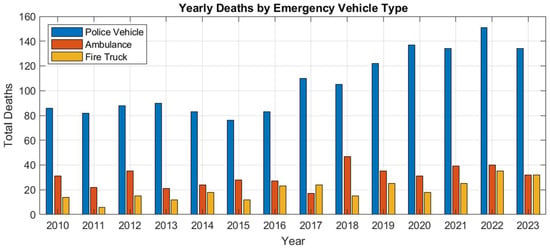

Despite the use of visual and acoustic warning systems, emergency vehicles can still go unnoticed, especially in noisy traffic environments. Statistics from the United States indicate that in 2021, 198 fatalities were associated with accidents involving emergency vehicles [1,2]. A longitudinal analysis covering 2010–2023 (Figure 1) reveals that police vehicles were disproportionately involved in these fatal incidents.

Figure 1.

Yearly fatalities from crashes involving police vehicles, ambulances, and fire trucks (2010–2023).

This paper centers on the acoustic warning component of emergency vehicles: the siren. Sirens emit distinctive sounds designed to alert nearby drivers to yield. The primary types include Yelp (rapid modulated tones), Wail (gradually sweeping frequencies), Phaser (fluctuating modulations for uniqueness), and Hi-Lo (alternating tones).

However, the growing focus on vehicle soundproofing, aimed at improving in-cabin comfort, diminishes the audibility of sirens. This is further exacerbated by drivers playing loud music. Such conditions render traditional sirens less effective, particularly for hearing-impaired drivers, who rely less on auditory cues. In response, alternative alerting systems (e.g., dashboard lights, haptic feedback, or automatic volume adjustment) are necessary to ensure that emergency warnings remain perceptible to all drivers.

Current research in this domain is gaining momentum. Major industry players like Bosch are exploring AI-based siren detection systems, although their deployment is hindered by the high volume of training data required [3]. Other initiatives, such as iHear [4] and Waymo [5], also aim to tackle this challenge, but their solutions lack commercial maturity or public validation. In contrast, the system proposed in this paper emphasizes practicality, efficiency, and real-world applicability, addressing key gaps in the existing landscape.

Traditional siren detection systems predominantly rely on digital signal processing (DSP) methods [6,7,8,9,10,11,12,13,14,15,16,17,18], which are computationally intensive and costly. Recent approaches based on machine learning [19,20,21,22,23,24,25] and deep learning [26,27,28] offer improved recognition capabilities but come with trade-offs: high energy demands, complex models that are difficult to interpret, and challenges in real-time operation, all of which are critical issues in safety-related applications.

Beyond yelp-based solutions, recent research has revisited traditional DSP principles to address other siren types. For instance, the authors of [29] proposed a Goertzel filter combined with a sliding window to improve wail signal detection in noisy environments. This lightweight approach demonstrated high accuracy while maintaining low computational overhead, making it particularly suited for embedded systems and real-time deployment. Such studies reinforce the relevance of interpretable, resource-efficient techniques in complementing data-driven solutions.

Anand et al. [30] recently proposed an AI-based framework for emergency siren detection and localization, incorporating ResNet, U-Net, and Transformer models to address issues such as variable source distance and Doppler effects. This reflects a broader trend in the field, where increasingly sophisticated methods are being explored to cope with complex real-world conditions. Such developments highlight that siren detection remains an active research area, with new challenges emerging as vehicle and traffic environments evolve.

A notable departure from conventional approaches is found in [31], which explores the use of analog circuitry for siren signal detection. This method offers an appealing compromise between performance and efficiency, with a flexible design that can be realized either through mostly analog circuits or simplified digital equivalents, making it a scalable and practical solution.

Building on this foundation, earlier developments in [31,32,33] introduced a mathematical framework for yelp signal detection based on envelope extraction, implemented via a cascade of filters and thresholding logic. These works also provided theoretical modeling that helped establish a structured, analog-inspired approach to the problem.

Our subsequent contribution in [33] extended these ideas by proposing a cost-effective and low-complexity yelp siren detector grounded in the analog signal processing principles developed in the previous works. The system demonstrated strong performance with minimal computational requirements, making it suitable for integration into vehicles, including those intended for accessibility support. However, the implementation described in [33] was carried out in an idealized MATLAB simulation environment, where parameters could take effectively continuous values thanks to the floating-point representation. While this flexibility facilitated optimization and proof-of-concept validation, it does not reflect the constraints typically encountered in real hardware implementations.

This work advances the system in [33] into a detailed, hardware-constrained Simulink model of the yelp detection system, designed with realistic digital and analog constraints in place. First, the Simulink model limits the system parameters to values consistent with actual electronic component availability and embedded design practices. For example, the band-pass filter’s center frequency is selected based on standard E-series resistor and capacitor values, ensuring compatibility with typical analog hardware. Similarly, the threshold applied to the comparator is generated through a digitally modeled DAC with 10-bit resolution, introducing quantization effects absent in the earlier floating-point model. Second, the control flow of the system is restructured around ISRs, mirroring how a microcontroller would operate the detection algorithm in practice. Each stage, from threshold adaptation to decision-making, is discretely modeled to match embedded scheduling constraints and execution timing. The result is a functional digital model that reflects the eventual embedded system with high fidelity. Automatic code generation can be used to obtain the C code based on the developed model.

In our previous study [33], all available labeled signals were used to tune the system parameters for maximum TPR (true positive rate) on yelp sirens and minimum FPR (false positive rate) on road noise. Consequently, no additional data remained for an unbiased test. To maintain a fair comparison when benchmarking our Simulink system against machine learning methods, we followed the same strategy: three machine learning classifiers, namely k-Nearest Neighbors (KNN), Support Vector Machine (SVM), and Neural Network (NN), were trained on the complete set of signals used in the previous work. However, to evaluate the models’ generalization capabilities, we needed a new set of test signals. Given the scarcity of publicly available datasets containing labeled siren recordings, we turned to generative AI. Using AudioLDM [34], we synthesized 100 new yelp siren signals and 100 new road noise signals, leveraging its audio-to-audio generation capability to expand the dataset in a controlled and targeted manner. The inputs for this generation process were representative samples from both classes (yelp sirens and road noise) drawn from the dataset used in our previous work. Since all those signals had already been exhausted in system optimization and training, this reuse through generative modeling was a key step in producing novel, class-representative signals without relying on external or inconsistent data sources. This approach ensures a high degree of continuity and relevance between the training and test sets, reinforcing the validity of our performance comparison.

This allowed us to test how both the Simulink implementation and the trained machine learning (ML) classifiers perform on previously unseen data. Despite not relying on data-driven training, our system outperformed the best of the three reference machine learning models. This result reinforces the benefits of interpretable, resource-efficient architectures that are directly compatible with embedded deployment.

The main contributions of this work can be summarized as follows:

- Hardware-constrained, deployment-ready implementation: we developed a fixed-point Simulink model for yelp siren detection that incorporates realistic hardware constraints, including DAC quantization, ISR-driven execution, and E-series component limitations, while maintaining high detection performance. This advances the system from a theoretical prototype toward a higher Technology Readiness Level (TRL), supporting embedded deployment feasibility.

- Creation and release of a test dataset: a novel benchmark dataset was synthesized using AudioLDM and real siren and road noise recordings. It is publicly released to enable reproducible evaluation and stimulate further research.

- Full model transparency for reproducibility and extension: the Simulink model and its parameters are documented in detail, allowing researchers to replicate our experiments and build upon the proposed design.

- Comprehensive performance evaluation and benchmarking: the system was rigorously evaluated against three classical ML algorithms and compared with multiple state-of-the-art works, demonstrating competitive or superior accuracy under resource-constrained conditions.

This work therefore not only bridges the gap between algorithm design and hardware implementation but also strengthens the case for low-complexity, interpretable signal processing systems in safety-critical automotive applications. The remainder of this paper is structured as follows: Section 2 describes the Simulink model architecture, the dataset generation process using AudioLDM, and the machine learning baselines used for benchmarking. Section 3 presents a detailed performance evaluation comparing the proposed system to ML classifiers. Section 4 discusses the implications of these results, and Section 5 concludes with key takeaways and future directions.

2. Materials and Methods

This section details the design and implementation of the proposed Simulink-based siren detection system, the methodology for generating an independent test set using AudioLDM, and the configuration of benchmark machine learning models for performance comparison.

2.1. Simulink Implementation

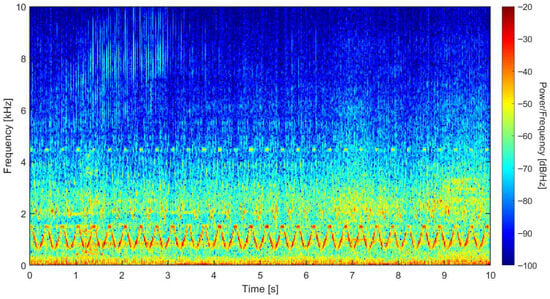

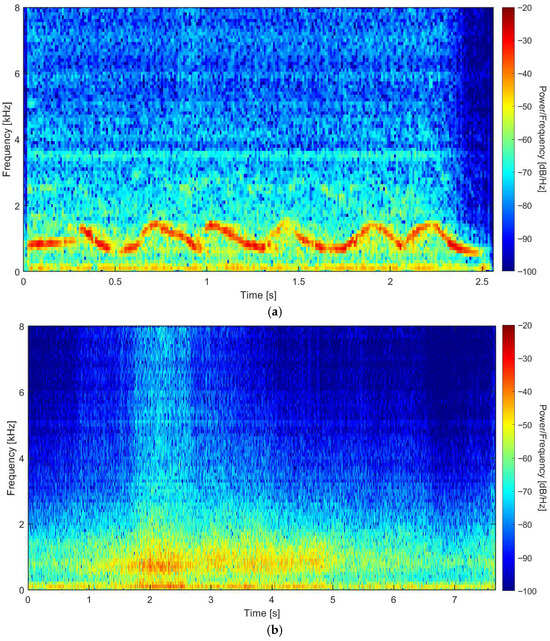

The yelp siren signal shows a periodic variation in its fundamental frequency. Therefore, its instantaneous frequency crosses any fixed frequency value lying between its minimum and maximum a certain number of times per second. This crossing rate is a robust indicator of the presence of the siren signal. To highlight these aspects, a spectrogram of a yelp signal is shown in Figure 2.

Figure 2.

Spectrogram of a yelp siren signal.

The yelp siren is one of the most widely used and easily identifiable sounds emitted by emergency vehicles across the globe. Its widespread use is largely due to its ability to quickly draw attention and clearly signal urgency in traffic environments. Acoustically, the yelp siren is a frequency-modulated signal whose frequency continuously shifts between two defined limits. This modulation creates a pattern of rising and falling tones at a specific rate, typically measured in cycles per minute (CPM). The distinctive auditory signature of the yelp siren arises from this dynamic variation. Key parameters that characterize the yelp signal include its upper and lower CPM values, the minimum and maximum fundamental frequencies, and the overall sweep range. Although these parameters are critical for understanding the signal’s behavior, there are no universally fixed standard values. A detailed summary of these technical characteristics is available in [35].

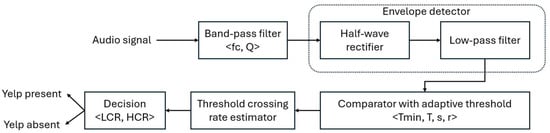

To detect this property, the following operations are implemented. The input signal is first filtered using a band-pass filter. This is equivalent to choosing a frequency value and observing how many times per second the signal’s instantaneous frequency crosses it. The filtered signal is then rectified and passed through a low-pass filter to obtain its envelope. The envelope is compared against a threshold to detect periods of high energy. The system employs an adaptive threshold to maintain robustness across varying signal strength conditions. The comparison produces a binary signal whose transitions are counted over a specified time interval. Finally, the number of transitions per second is compared to two thresholds, resulting in a detection decision. A siren is considered present if the threshold crossing rate stays between the two limit values for at least half a second. The threshold crossing rate is updated every 100 ms. This principle of detection is illustrated in Figure 3.

Figure 3.

Block diagram illustrating the principle of low-complexity yelp siren detection.

The main parameters are defined as follows. The center frequency and quality factor of the band-pass filter are denoted by fc and Q, respectively. The adaptive threshold is represented by T, with Tmin specifying its minimum allowable value. The threshold can only increase or decrease in discrete increments determined by the step size s, while the adaptation rate, denoted by r, controls how often these changes can occur. The parameters LCR and HCR define the lower and upper bounds of the threshold crossing rate, which are used to determine the presence of a siren. A detection is confirmed when the threshold crossing rate lies within the closed interval [LCR, HCR]. While this paper focuses primarily on implementation constraints, the algorithms describing the functional principles of the system have been detailed in our previous work [33].

The system architecture follows the design proposed in our previous study [33]. It includes band-pass filtering, envelope detection, adaptive thresholding via DAC, threshold crossing rate estimation, and a decision stage. In Simulink, the DAC was modeled numerically, and the model was structured to enable code generation using Embedded Coder. While the detection principle was demonstrated in [33], this paper evaluates a considerably more realistic system. The real DAC dictates the variation steps of the comparator’s threshold, and the center frequency of the band-pass filter depends strongly on the component values available in the standard E series. The main advantages of the proposed approach are its low cost and reduced complexity. Although technical solutions may exist to reduce the disadvantages caused by real components, their use would undermine these advantages. The Simulink model is presented in detail below.

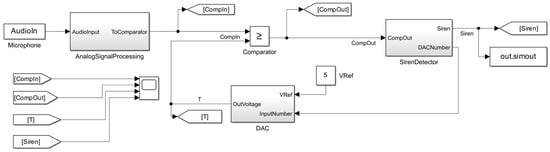

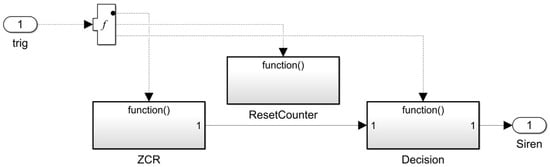

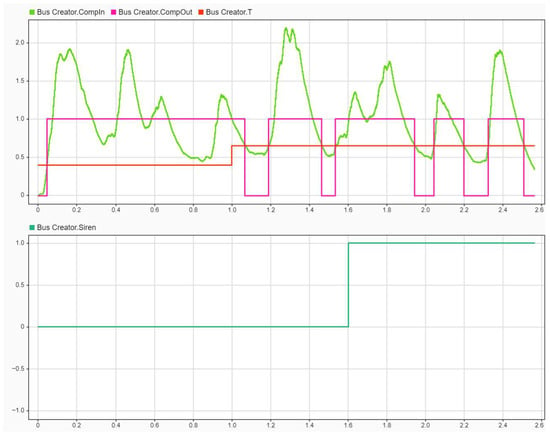

The topmost level of the system, presented in Figure 4, is a block diagram highlighting the major stages of signal processing. For block diagrams describing the principle and the unconstrained processing, readers are encouraged to consult our previous work [33]. Four signals (CompIn, CompOut, T, Siren) were captured for evaluation using a Simulink Scope block, which plays the role of an oscilloscope. They are shown in Figure 14.

Figure 4.

The topmost level of the Simulink siren detection system.

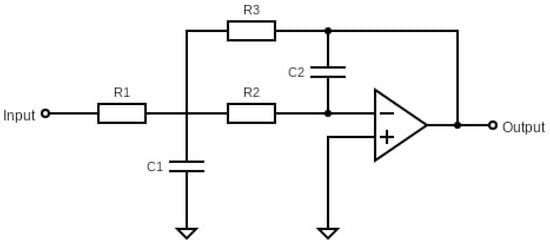

The model of the analog signal processing part incorporates the band-pass filter, the rectifier, and the low-pass filter. The band-pass filter serves to detect high energy at a certain frequency, which occurs when the fundamental frequency of the siren signal matches the center frequency of the filter. The filter output is difficult to process directly because, when the siren is present, it consists of bursts of a signal whose frequency is very close to the center frequency of the filter. The envelope of this signal is therefore a much better quantity to process further. To obtain the envelope, the output of the filter is rectified and then filtered with a low-pass filter. The band-pass filter has a center frequency of 802.793 Hz and a quality factor of 5.017, which can be obtained using a multiple feedback filter configuration, as shown in Figure 5. The component values are: R1 = 3.74 kΩ, R2 = 11.3 kΩ, R3 = 3.74 kΩ, C1 = 0.62 µF, C2 = 1.5 nF. The resistance values are from the E96 series, and the capacitance values are from the E24 series.

Figure 5.

Analog band-pass filter–multiple feedback configuration.
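As a quick numerical cross-check of the component values quoted above, the short sketch below recomputes the center frequency and quality factor. The two design relations used here are an assumption: the text does not state them, and they are simply one second-order multiple feedback form that reproduces the quoted figures to within rounding.

```python
import math

# Component values quoted in the text (E96 resistors, E24 capacitors).
R1 = 3.74e3    # ohms
R2 = 11.3e3    # ohms
R3 = 3.74e3    # ohms
C1 = 0.62e-6   # farads
C2 = 1.5e-9    # farads

# ASSUMPTION: these relations are not given in the text. They are one
# second-order multiple feedback form that matches the quoted fc and Q.
root = math.sqrt(R2 * R3 * C1 * C2)
f_c = 1.0 / (2.0 * math.pi * root)
q = root / (C2 * (R2 + R3 + R2 * R3 / R1))

print(f"fc = {f_c:.3f} Hz, Q = {q:.3f}")
```

Because every component is restricted to an E-series value, fc and Q cannot be set independently to arbitrary targets, which is why the quoted values are not round numbers.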

The low-pass filter is a simple first-order Butterworth filter with a cutoff frequency of 2 Hz. The block diagram of this subsystem (AnalogSignalProcessing) is shown in Figure 6.

Figure 6.

The modeling of the analog signal processing block (AnalogSignalProcessing subsystem).

While we aim to use analog band-pass and low-pass filters in the final physical implementation, the Simulink model employs digital equivalents to enable a complete Model-in-the-Loop (MIL) simulation. These digital filters are configured to match the characteristics of the corresponding analog designs (e.g., center frequency, Q factor, and cutoff frequency), ensuring that simulation results accurately reflect the behavior of the real hardware-constrained system.
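The envelope stage described above (rectifier followed by a first-order low-pass filter) can be sketched as a digital equivalent in a few lines. This is a minimal illustration, not the Simulink block itself: the 8000 Hz sample rate, the full-wave rectifier, and the bilinear-transform discretization are assumptions made for the example, and the function name `envelope` is hypothetical.

```python
import math

def envelope(samples, fs=8000.0, cutoff_hz=2.0):
    """Full-wave rectify, then apply a first-order low-pass filter.

    ASSUMPTIONS: fs, the full-wave rectifier, and the bilinear-transform
    discretization are illustrative choices, not taken from the model.
    """
    k = math.tan(math.pi * cutoff_hz / fs)
    b = k / (1.0 + k)            # feed-forward coefficient (b0 == b1)
    a = (k - 1.0) / (k + 1.0)    # feedback coefficient
    out = []
    x_prev = 0.0
    y_prev = 0.0
    for x in samples:
        x_rect = abs(x)
        # y[n] = b*(x[n] + x[n-1]) - a*y[n-1], unity gain at DC
        y = b * (x_rect + x_prev) - a * y_prev
        out.append(y)
        x_prev, y_prev = x_rect, y
    return out

# Usage: a steady 800 Hz tone settles to a nearly constant envelope level.
tone = [math.sin(2.0 * math.pi * 800.0 * n / 8000.0) for n in range(32000)]
level = envelope(tone)[-1]
```

With a 2 Hz cutoff the output settles within a few tenths of a second, so the envelope follows the slow bursts produced by the band-pass filter while suppressing the carrier.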

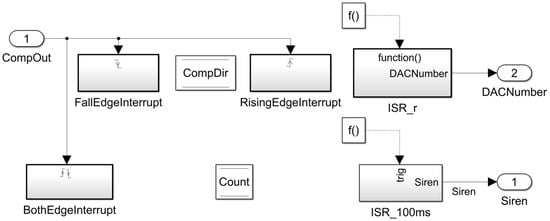

The main part of the processing, carried out by the microcontroller, is contained in the siren detector (SirenDetector) subsystem. It contains the detection algorithm, implemented in such a way as to facilitate automatic code generation using Embedded Coder. All processing is triggered by interrupt sources: rising and falling edges of the comparator output signal, and two time-based interrupts, ISR_r and ISR_100ms. The ISR_r subsystem is triggered r times per second. As per our previous work [33], the optimal value for r is 3. ISR_100ms is triggered every 100 ms. The siren detector subsystem is detailed in Figure 7.

Figure 7.

Contents of the siren detector subsystem.

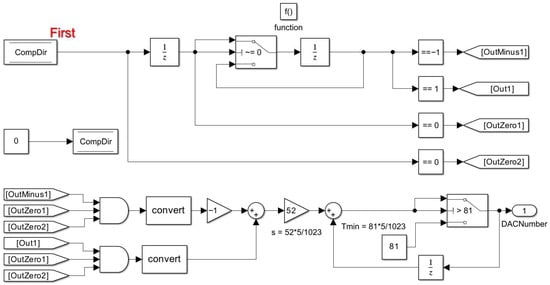

Details of the ISR_r subsystem are illustrated in Figure 8. This component handles the adaptive regulation of the comparator’s threshold, a key feature that mitigates sensitivity to varying input signal volumes. Without such regulation, loud signals such as sirens received at close range could cause the band-pass envelope to consistently exceed the threshold, eliminating threshold crossings and thereby preventing valid detections. To avoid this, the subsystem monitors the envelope’s behavior over successive intervals. If the envelope remains above the threshold for two consecutive r intervals (i.e., 2/3 s for r = 3), the threshold is increased by a step s. Conversely, if the envelope remains below the threshold for two consecutive r intervals and the current threshold is above the system’s minimum limit (Tmin), it is decreased by s. In our previous work [33], the optimal minimum threshold was found to be approximately 0.3952, while the optimal step size s was calculated as 0.2555. However, the current implementation models realistic constraints. The minimum threshold value is now limited by the resolution of the DAC; in this system, it is approximately 0.395 V, given the reference voltage of 5 V and the 10-bit resolution. Likewise, the step size s is determined by the DAC’s quantization step, and the usable step size here is approximately 0.254 V. Both values are highlighted in Figure 8.

Figure 8.

Details of the ISR_r subsystem.
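The effect of the DAC on Tmin and s, and the adaptation rule itself, can be illustrated with a small sketch. It assumes that one least significant bit equals the reference voltage divided by 2^10 and that ideal values are rounded to the nearest code; the helper names (`to_code`, `adapt`), the way the two interval counters are supplied, and the clamping at full scale are hypothetical choices for illustration, not details of the Simulink model.

```python
V_REF = 5.0     # DAC reference voltage, from the text
N_BITS = 10     # DAC resolution, from the text
# ASSUMPTION: one LSB is V_REF / 2**N_BITS (some DACs divide by 2**N_BITS - 1).
LSB = V_REF / (1 << N_BITS)
MAX_CODE = (1 << N_BITS) - 1

def to_code(volts):
    """Nearest DAC code for an ideal floating-point voltage."""
    return round(volts / LSB)

T_MIN_CODE = to_code(0.3952)   # ideal minimum threshold from the earlier work
STEP_CODE = to_code(0.2555)    # ideal step size from the earlier work

def adapt(code, above_count, below_count):
    """One ISR_r tick: return the updated threshold code.

    above_count / below_count are the numbers of consecutive r intervals in
    which the envelope stayed above / below the threshold (hypothetical
    bookkeeping; the model may track this differently).
    """
    if above_count >= 2:
        return min(code + STEP_CODE, MAX_CODE)      # clamp is an assumption
    if below_count >= 2 and code > T_MIN_CODE:
        return max(code - STEP_CODE, T_MIN_CODE)
    return code

print(T_MIN_CODE * LSB, STEP_CODE * LSB)  # quantized Tmin and s, in volts
```

Under these assumptions the quantized values land within about a millivolt of the 0.395 V and 0.254 V figures quoted above, which shows how the DAC grid replaces the continuous optimum with the nearest realizable level.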

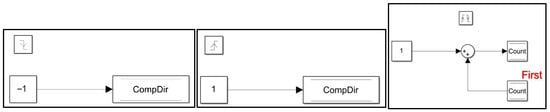

Finally, the system tracks the output behavior of the comparator through a signal named CompDir, which encodes the direction of the most recent transition. Specifically, a rising edge at the comparator output sets CompDir to 1, a falling edge sets it to −1, and the absence of any transition results in CompDir being equal to 0. These cases are handled by the FallEdgeInterrupt and RisingEdgeInterrupt subsystems, as shown in Figure 9. The number of threshold crossings in each 100 ms interval is counted using the BothEdgeInterrupt subsystem, which simply increments the Count global variable representing the number of threshold crossings. Note that this variable is reset to 0 every 100 ms, as explained later in the paper.

Figure 9.

Details of the FallEdgeInterrupt (left), RisingEdgeInterrupt (center) and BothEdgeInterrupt (right) subsystems.
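The three edge-triggered handlers can be mimicked in software as follows. This is only a sketch of the bookkeeping described above: the class and the `feed` dispatcher are hypothetical stand-ins for the hardware edge interrupts, and the point at which CompDir returns to 0 is not modeled here because the text does not specify it.

```python
class EdgeHandlers:
    """State shared by the edge handlers (signal names follow the text)."""

    def __init__(self):
        self.comp_dir = 0   # 1 after a rising edge, -1 after a falling edge
        self.count = 0      # threshold crossings in the current 100 ms slot

    def rising_edge(self):      # counterpart of RisingEdgeInterrupt
        self.comp_dir = 1

    def falling_edge(self):     # counterpart of FallEdgeInterrupt
        self.comp_dir = -1

    def both_edges(self):       # counterpart of BothEdgeInterrupt
        self.count += 1


def feed(handlers, prev_out, new_out):
    """Hypothetical dispatcher: call the handlers for one comparator sample."""
    if new_out != prev_out:
        if new_out > prev_out:
            handlers.rising_edge()
        else:
            handlers.falling_edge()
        handlers.both_edges()
    return new_out


# Usage: replay a short comparator output sequence (0 = low, 1 = high).
h = EdgeHandlers()
prev = 0
for out in [0, 1, 1, 0, 1]:
    prev = feed(h, prev, out)
```

On a microcontroller the dispatcher would not exist; the pin-change hardware would invoke the handlers directly, which keeps the per-edge work to a single assignment and a single increment.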

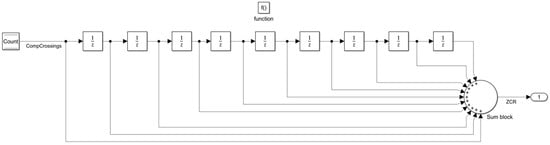

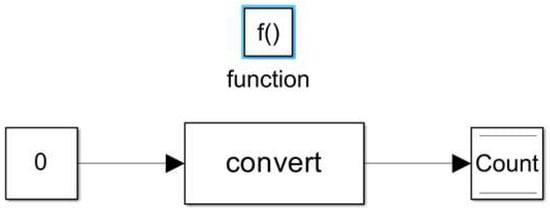

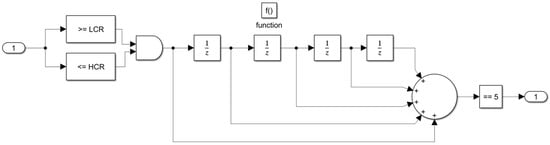

Details of the ISR_100ms subsystem are illustrated in Figure 10. This subsystem is executed every 100 ms and distributes the trigger signal to three sequential processing blocks: ZCR (Zero-Crossing Rate), ResetCounter, and Decision. The ZCR block estimates the rate of comparator threshold crossings over the last second. It receives the number of threshold crossings that occurred during the current 100 ms interval and stores this value in a sliding buffer that holds the last ten such measurements. Using these ten stored values, the system updates the crossing rate once every 100 ms, effectively maintaining a rolling estimate of activity over a one-second window. These operations can be observed in detail in Figure 11. The ResetCounter block is responsible for resetting the crossing counter at the end of each 100 ms period, so that the count for the next interval always starts from zero (Figure 12). Finally, the Decision block uses the one-second crossing rate computed by ZCR to evaluate whether a yelp siren is present. The decision logic compares the current crossing rate to two predefined thresholds (denoted by HCR and LCR, optimally determined in [33] as 13 and 4, respectively). If the rate remains within these bounds for five consecutive 100 ms intervals (i.e., for a continuous duration of 0.5 s), the block asserts a detection. This approach provides decision updates every 100 ms with a confirmation latency of 0.5 s, which is suitable for in-vehicle alerting. The subsystem is shown in detail in Figure 13.

Figure 10.

The contents of the ISR_100ms subsystem.

Figure 11.

The contents of the ZCR subsystem.

Figure 12.

The contents of the ResetCounter subsystem.

Figure 13.

The contents of the Decision subsystem.
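The rolling one-second estimate and the five-interval confirmation rule can be sketched as below. The bounds 4 and 13, the ten-slot buffer, and the five-tick confirmation come from the text; the class name, the zero-initialized buffer, and the choice to clear the detection as soon as the rate leaves the interval are assumptions made for this illustration.

```python
from collections import deque

LCR, HCR = 4, 13    # crossing-rate bounds reported in the text
WINDOW = 10         # ten 100 ms slots cover one second
CONFIRM = 5         # five consecutive in-range updates, i.e., 0.5 s


class Isr100ms:
    """Software sketch of the ZCR and Decision steps (hypothetical class)."""

    def __init__(self):
        # ASSUMPTION: the buffer starts filled with zeros.
        self.slots = deque([0] * WINDOW, maxlen=WINDOW)
        self.in_range_run = 0
        self.siren = False

    def tick(self, count):
        """Run once per 100 ms with the crossings counted in that interval."""
        self.slots.append(count)        # oldest slot drops out automatically
        rate = sum(self.slots)          # crossings over the last second
        if LCR <= rate <= HCR:          # inclusive bounds, as in the text
            self.in_range_run += 1
        else:
            self.in_range_run = 0
        # ASSUMPTION: detection is cleared when the run is broken.
        self.siren = self.in_range_run >= CONFIRM
        return rate, self.siren


# Usage: the caller plays the role of ResetCounter by starting each
# interval with a fresh count.
isr = Isr100ms()
history = [isr.tick(1) for _ in range(8)]
```

Keeping ten small integer counts and a running tally is consistent with the integer-only, loop-bounded style the generated code is reported to have, although the actual generated code may be organized differently.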

To better illustrate the system’s behavior under realistic signal conditions, Figure 14 presents representative waveforms obtained from the Simulink simulation. The figure highlights how the adaptive threshold applied to the comparator evolves in response to the incoming signal envelope. As described above, the threshold increases when the signal maintains high energy for a sustained period, in accordance with the adjustment rules defined in the ISR_r subsystem. This adaptive behavior prevents the system from saturating in response to loud inputs while preserving its sensitivity to the characteristic periodic modulations present in yelp sirens. As a result, the oscillations of the comparator output become detectable. This information feeds directly into the subsequent decision-making process, enabling the system to reliably determine whether a siren signal is present. The measurement points at which the displayed signals were recorded can be identified in Figure 4.

Figure 14.

Adaptive threshold, comparator output and detection behavior for sample input.

To improve clarity and support reproducibility, the main system parameters used in the proposed model are summarized in Table 1. These values include filter characteristics, DAC-imposed constraints, and decision thresholds.

Table 1.

Key system parameters used in the proposed Simulink model.

The C code generated from the Simulink model using Embedded Coder demonstrates extremely low resource requirements. The implementation uses only 52 bytes of global RAM (random access memory), no local static RAM, and a maximum stack depth of 9 bytes. The code consists of 278 functional lines and is entirely based on integer operations, with no dynamic memory allocation or runtime-dependent loops. These characteristics ensure deterministic execution and confirm the suitability of the design for microcontrollers with limited resources while meeting real-time processing requirements.

2.2. Dataset Generation with AudioLDM

To demonstrate the performance of the proposed system in its realistically modeled version, we compared it with three machine learning algorithms. In our prior work [33], all suitable signals (yelp and road noise), selected from [36], had already been used to determine optimal detection parameters, exhausting the pool of data for further unbiased evaluation. To ensure a fair and robust comparison between our deterministic Simulink model and the trained machine learning classifiers, we needed additional signals that matched the statistical profile of the original dataset but had not been encountered by any of the models during training or design. Due to the limited availability of publicly accessible datasets containing annotated siren and road noise recordings, we faced a significant challenge in obtaining new test data that would be representative yet independent of the signals previously used for system tuning.

To address this, we employed AudioLDM, a generative audio-to-audio solution. It enables generation conditioned on example signals rather than text prompts, which makes it especially suitable for our use case: expanding a signal domain using class-consistent exemplars. Using this approach, we randomly selected 100 yelp siren signals and 100 road noise recordings from the previous dataset and used them as reference inputs to generate 100 new variants for each class. The duration of each generated signal was selected randomly from 2.5, 5, 7.5, or 10 s. These newly synthesized signals had not been seen by either the Simulink system or the machine learning algorithms and thus provided a clean and unbiased test set for evaluating generalization performance. Because the generation process preserved the statistical and perceptual features of the source signals, the augmented dataset remained faithful to the original task definition. This ensured continuity in signal characteristics and contributed to a controlled and realistic performance evaluation framework. The size of this test set (200 signals) corresponds to approximately 18% of the original dataset (1121 signals: 253 yelp sirens and 868 road noise recordings), aligning with common evaluation practices.

This test set allowed us to assess how each system behaves when exposed to unseen yet class-representative data. This is an essential step in validating the real-world applicability of both rule-based and learning-based models. Figure 15 shows examples of spectrograms for yelp and road noise signals obtained using AudioLDM. The features of yelp and road noise signals can be observed.

Figure 15.

Example spectrograms of generated yelp (a) and road noise (b) signals using AudioLDM.

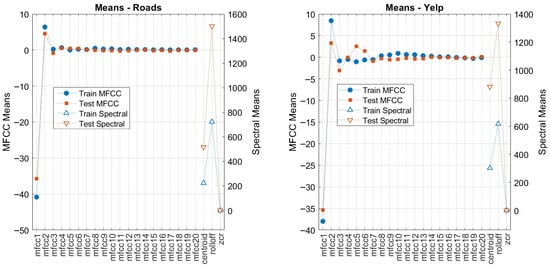

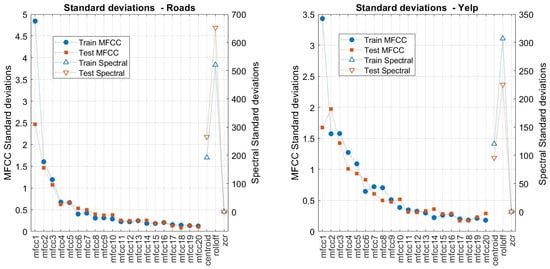

To further validate the representativeness of the generated signals, we performed an objective feature distribution analysis comparing the original training dataset and the synthesized test set. For both classes (yelp sirens and road noise), we extracted 23 features (20 Mel-frequency cepstral coefficients (MFCCs) plus spectral centroid, spectral roll-off, and zero-crossing rate) and computed the mean and standard deviation of each feature across the two sets. The results showed strong structural similarity, with correlation coefficients above 0.99 between the feature-wise means and standard deviations of the two sets (see Table 2). Figure 16 and Figure 17 illustrate these relationships, confirming that the generated data preserves the statistical characteristics of real signals despite minor scale differences. This consistency reinforces the validity of the augmented test set for benchmarking.

Table 2.

Correlation coefficients between feature-wise means and standard deviations for training and test sets.

Figure 16.

Feature-wise mean comparison between training and test sets for road noise (left) and yelp sirens (right).

Figure 17.

Feature-wise standard deviation comparison between training and test sets for road noise (left) and yelp sirens (right).
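The feature-wise comparison can be reproduced in outline with the sketch below, which computes per-feature means and standard deviations for two sets of feature vectors and then correlates them. It assumes a Pearson correlation coefficient and the sample standard deviation, neither of which is stated explicitly in the text, and the tiny three-feature arrays are made-up placeholders rather than data from the study.

```python
import math
from statistics import mean, stdev


def feature_stats(rows):
    """Per-feature mean and sample standard deviation of feature vectors."""
    cols = list(zip(*rows))
    return [mean(c) for c in cols], [stdev(c) for c in cols]


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Placeholder data: rows are signals, columns are features (NOT real values).
train = [[1.0, 10.0, 100.0], [3.0, 14.0, 104.0], [2.0, 12.0, 99.0]]
test = [[1.5, 11.0, 101.0], [2.5, 13.0, 103.0], [2.0, 12.5, 98.0]]

train_mean, train_std = feature_stats(train)
test_mean, test_std = feature_stats(test)
r_mean = pearson(train_mean, test_mean)   # one coefficient for the means
r_std = pearson(train_std, test_std)      # one for the standard deviations
```

In the study this would be applied per class to the 23-dimensional feature vectors, yielding one coefficient for the means and one for the standard deviations of each class.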

The representativeness of the generated signals can also be assessed by analyzing the performance of all evaluated models on this test set, with results provided in Section 3. Furthermore, the dataset has been made publicly available to support transparency, reproducibility, and independent validation (see Data Availability Statement).

2.3. Feature Extraction and Machine Learning

To provide a robust benchmark for the performance of our Simulink-based detection system, we implemented and evaluated three classical machine learning algorithms: KNN, SVM, and NN. These models serve as a performance reference point, enabling us to assess how well our deterministic system compares against learning-based approaches trained on the same class distributions. Additionally, the use of these well-known classifiers emphasizes a key motivation of this study: to explore whether simpler, interpretable systems can match the performance of more complex data-driven alternatives.

The KNN classifier was trained using a single neighbor (k = 1), effectively making it a nearest neighbor decision rule. This configuration assigns a test sample the label of its closest training instance in feature space, allowing the model to be highly sensitive to local structure. The city block distance metric (also known as Manhattan or L1 distance) was used to measure proximity between feature vectors. Compared to Euclidean distance, the city block metric can be more robust to high-dimensional feature spaces by reducing the impact of large coordinate differences. All features were standardized prior to classification to ensure that differences in scale did not bias the distance computation.
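The nearest neighbor rule described above is simple enough to write out directly. The sketch below standardizes the features, measures city block distance, and returns the label of the single closest training sample. It is a plain-Python illustration rather than the toolbox implementation used in the study; the function names, the use of the population standard deviation, and the two-feature toy data are assumptions.

```python
from statistics import mean, pstdev


def fit_scaler(rows):
    """Per-feature mean and standard deviation of the training set."""
    cols = list(zip(*rows))
    mu = [mean(c) for c in cols]
    # ASSUMPTION: population std; constant features fall back to 1.0.
    sigma = [pstdev(c) or 1.0 for c in cols]
    return mu, sigma


def scale(row, mu, sigma):
    """Standardize one feature vector."""
    return [(v - m) / s for v, m, s in zip(row, mu, sigma)]


def predict_1nn(train_rows, train_labels, query):
    """Label of the closest training sample under city block (L1) distance."""
    mu, sigma = fit_scaler(train_rows)
    q = scale(query, mu, sigma)

    def cityblock(row):
        return sum(abs(a - b) for a, b in zip(scale(row, mu, sigma), q))

    best = min(range(len(train_rows)), key=lambda i: cityblock(train_rows[i]))
    return train_labels[best]


# Toy usage with made-up two-feature samples.
rows = [[0.0, 0.0], [10.0, 10.0]]
labels = ["noise", "yelp"]
print(predict_1nn(rows, labels, [1.0, 2.0]))
```

Note that a 1-NN classifier must keep the whole training set in memory at inference time, which is one reason its footprint differs so much from that of the proposed detector.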

The SVM classifier with a quadratic kernel was used to model non-linear decision boundaries in the feature space. The quadratic kernel, corresponding to a second-degree polynomial, allows the classifier to capture pairwise feature interactions while maintaining relatively low model complexity. To ensure stable convergence and to prevent dominance by features with large variance, feature standardization was applied prior to training. The regularization parameter (box constraint) was set to 1, providing a balance between maximizing the margin and minimizing classification error on the training set.
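
To make the statement about pairwise feature interactions concrete, the sketch below compares a quadratic kernel with an explicit feature map for two-dimensional inputs. It assumes the common polynomial form (1 + x·y)², which is not stated in the text, and the names `quadratic_kernel` and `explicit_map` are hypothetical.

```python
import math

def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

def quadratic_kernel(x, y):
    """Second-degree polynomial kernel, assuming the form (1 + x.y)^2."""
    return (1.0 + dot(x, y)) ** 2

def explicit_map(x):
    """Feature map whose inner product equals the kernel for 2-D inputs."""
    x1, x2 = x
    r2 = math.sqrt(2.0)
    # Constant, linear terms, squared terms, and the pairwise product x1*x2.
    return [1.0, r2 * x1, r2 * x2, x1 * x1, x2 * x2, r2 * x1 * x2]

x, y = [0.5, -1.0], [2.0, 0.25]
k_implicit = quadratic_kernel(x, y)
k_explicit = dot(explicit_map(x), explicit_map(y))
```

Both values agree, which shows that the kernel implicitly works in a space containing the cross term x1·x2 without ever constructing it.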

A shallow feedforward neural network was used for classification. The architecture consisted of a single fully connected hidden layer with 25 neurons, using the ReLU (Rectified Linear Unit) activation function. This choice introduces non-linearity while avoiding saturation effects that can occur in sigmoid or tanh activations, making it well-suited for relatively low-dimensional feature spaces. The network was trained using a maximum of 1000 iterations, with early stopping disabled. The regularization parameter (λ) was set to 0, meaning no L2 penalty was applied to the weights during training. All input features were standardized to zero mean and unit variance prior to training, a standard preprocessing step that improves convergence and numerical stability in gradient-based optimization.
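
The size of such a network can be checked with a short forward-pass sketch. The 23 inputs and 25 ReLU hidden units follow the text; the two-output softmax layer and the random initialization are assumptions made only for illustration, and no training is performed.

```python
import math
import random

# N_CLASSES = 2 with a softmax output is an assumption (siren vs. road noise).
N_FEATURES, N_HIDDEN, N_CLASSES = 23, 25, 2

def init_layer(n_in, n_out, rng):
    """Small random weights and zero biases for one fully connected layer."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def dense(x, weights, biases):
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]

def relu(v):
    return [max(0.0, a) for a in v]

def softmax(v):
    m = max(v)  # subtract the maximum for numerical stability
    e = [math.exp(a - m) for a in v]
    s = sum(e)
    return [a / s for a in e]

def forward(x, hidden, output):
    """Standardized features -> hidden ReLU layer -> class probabilities."""
    return softmax(dense(relu(dense(x, *hidden)), *output))

rng = random.Random(0)
hidden = init_layer(N_FEATURES, N_HIDDEN, rng)
output = init_layer(N_HIDDEN, N_CLASSES, rng)

# Weights plus biases of both layers.
n_params = N_FEATURES * N_HIDDEN + N_HIDDEN + N_HIDDEN * N_CLASSES + N_CLASSES
probs = forward([0.0] * N_FEATURES, hidden, output)
```

Under these assumptions the model has 652 trainable parameters, which gives a rough sense of its storage needs compared with a rule-based detector.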

The machine learning models were trained on all available labeled signals from our previous dataset (253 yelp siren signals and 868 road noise recordings selected from [36], selection detailed in [33]) using 5-fold cross-validation to ensure robustness. Audio signals were first resampled to 8000 Hz to standardize the input. Feature extraction was performed using the audioFeatureExtractor object available in the Audio Toolbox of MATLAB R2024b.
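
As a rough illustration of the 5-fold procedure, the sketch below partitions the 1121 signal indices (253 + 868) into five validation folds. The shuffling seed, the non-stratified split, and the function name `k_fold_indices` are assumptions; the exact partitioning used in MATLAB is not specified here.

```python
import random

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_indices, validation_indices) for each of k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        validation = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, validation

N_SIGNALS = 253 + 868  # yelp siren signals + road noise recordings
splits = list(k_fold_indices(N_SIGNALS, 5))
fold_sizes = [len(validation) for _, validation in splits]
```

Each signal appears in exactly one validation fold, so every model is validated once on every signal while never being trained on the signal it is validated on.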

This extractor was configured to generate a total of 23 features per audio sample: 20 Mel-frequency cepstral coefficients (MFCCs), which are known to effectively capture the spectral envelope and timbral characteristics of audio signals, along with three time-domain and spectral descriptors: spectral centroid, spectral roll-off point, and zero-crossing rate. These features are widely recognized in audio classification for their discriminative power and sensitivity to both tonal structure and noise patterns. Importantly, the relatively large number of descriptors ensures that the machine learning models have sufficient representational capacity to achieve high training accuracy. Moreover, the feature extraction and classification are performed using the entire audio signal as input. This means the machine learning models have access to the full temporal context of the signal before making a decision, a luxury not available to our Simulink-based system. In contrast, the proposed system processes the signal in real-time, sample by sample, and generates a siren detection decision as soon as the internal conditions are satisfied. This streaming nature makes the classification task inherently more difficult for the Simulink model.
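
The difference between whole-signal classification and streaming operation can be sketched with a toy detector that updates a windowed zero-crossing rate sample by sample and reports the first index at which the rate falls inside a band. This is not the detection logic of the proposed system: the window length, the bounds, and the function names are hypothetical and chosen only to show an early, sample-driven decision.

```python
import math
from collections import deque

def first_detection_index(samples, window, low, high):
    """Index of the first sample where the windowed zero-crossing rate
    lies in [low, high], or None if that never happens."""
    flags = deque()  # 1 if a sign change occurred at that transition, else 0
    count = 0
    prev = None
    for n, x in enumerate(samples):
        if prev is not None:
            flag = 1 if (x >= 0) != (prev >= 0) else 0
            flags.append(flag)
            count += flag
            if len(flags) > window:
                count -= flags.popleft()
            # Decide as soon as a full window is available.
            if len(flags) == window and low <= count / window <= high:
                return n
        prev = x
    return None

def tone(freq_hz, n_samples, fs=8000, phase=0.3):
    """Sine test signal; the phase offset avoids samples that are exactly zero."""
    return [math.sin(2 * math.pi * freq_hz * n / fs + phase) for n in range(n_samples)]

# Hypothetical band: a 1000 Hz tone at 8000 Hz has a rate of 2 * 1000 / 8000 = 0.25.
hit = first_detection_index(tone(1000, 400), window=80, low=0.2, high=0.3)
miss = first_detection_index(tone(100, 400), window=80, low=0.2, high=0.3)
```

The detector returns after 80 transitions for the 1000 Hz tone and never fires for the 100 Hz tone, without ever seeing the remainder of either signal.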

By contrasting our deterministic Simulink model with these statistically trained models using the same test dataset, we aim to underscore that carefully designed, low-complexity systems can offer competitive performance without relying on extensive feature sets or learning stages. We assessed the computational characteristics of each model to better understand their suitability for deployment in embedded or resource-constrained environments. Table 3 summarizes the prediction speed, training time, and memory footprint for each classifier.

Table 3.

Performance characteristics of the KNN, neural network (NN), and support vector machine (SVM) models.

3. Results

This section reports the experimental findings, beginning with the validation and test accuracy of the machine learning classifiers on the original dataset, followed by an assessment of how these classifiers and the proposed Simulink model perform on the test set generated with AudioLDM, and concluding with an accuracy-based comparison of all models against selected systems from the literature.

3.1. Simulink Model and Machine Learning Models Performance

To benchmark the proposed Simulink model against learning-based approaches, we trained three widely used classifiers: KNN, SVM, and a shallow NN, on 23 acoustic features extracted from the original dataset (selection of signals from [36] detailed in [33]). The features and training details were as presented in Section 2.3. An additional 10% of the dataset was held out as a local test set to assess generalization within the original data distribution. The resulting validation and test accuracies are summarized in Table 4.

Table 4.

Validation and test accuracy of the ML models.

To assess generalization beyond the original dataset, all models were subsequently evaluated on 200 unseen signals generated with AudioLDM: 100 yelp siren and 100 road noise samples. These signals were derived from the same class distributions but were never used during training, validation, or system design. Table 5 presents the true positive and false positive rates obtained on this synthetic test set.

Table 5.

Detection performance comparison between Simulink and ML models.

While all classifiers performed well on familiar data, their accuracy dropped (most notably for KNN and NN) when evaluated on the AudioLDM-generated signals. This reflects the challenge of generalizing to previously unseen but realistically synthesized input. In contrast, the Simulink model, which uses a minimal, fixed feature set, maintained a high detection rate with only two false positives. Given its real-time operation, deterministic structure, and hardware-oriented design, this result underscores the system’s robustness and its practical suitability for embedded deployment.

It is important to note that the performance results in Table 5 differ from those reported in Table 4 because the two tables evaluate under different conditions. Table 4 reflects validation on real recordings drawn from the same distribution as the training set, while Table 5 measures generalization on a new test set generated using AudioLDM. This test set preserves the statistical structure of the original data (correlation >0.99 for feature-wise means and standard deviations) while introducing parameter variability that challenges model adaptability. As a result, models like SVM maintain strong performance, whereas KNN and NN exhibit a greater drop, indicating limited flexibility to unseen variations.
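
The similarity check referred to above (correlation between feature-wise statistics of the two sets) can be sketched as follows. The feature matrices are small made-up examples, not values from the datasets, and the helper names are hypothetical; the same function applies to feature-wise standard deviations.

```python
import math

def feature_means(rows):
    """Mean of each feature (column) over all signals (rows)."""
    return [sum(col) / len(col) for col in zip(*rows)]

def pearson(a, b):
    """Pearson correlation coefficient between two equal-length vectors."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

# Illustrative feature matrices: rows are signals, columns are features.
train_features = [[1.0, 10.0, 100.0], [3.0, 14.0, 120.0]]
test_features = [[2.0, 11.0, 105.0], [2.2, 13.4, 118.0]]

r_means = pearson(feature_means(train_features), feature_means(test_features))
```

A coefficient close to 1 indicates that the per-feature averages of the two sets follow the same profile, even if individual signals differ.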

Performance evaluation in this study was carried out at the signal level. In practice, this means that each audio recording in the test set was treated as a single unit and classified into one of two categories: either it contained a siren, or it did not. We deliberately adopted this formulation because the datasets available for this work do not provide onset and offset annotations of siren events. Without such temporal markers, it is not possible to compute frame-level or event-level measures that depend on precise timing information. By focusing on signal-level decisions, the evaluation remains consistent across all signals in the dataset and allows us to report results in terms of overall accuracy, which is the most common measure in related studies.
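
Signal-level scoring reduces to counting one decision per recording. The sketch below uses the counts reported for the Simulink model in this paper (100 siren and 100 road noise signals, 97% true positive rate, two false positives); the resulting accuracy is derived arithmetically here rather than quoted from a table, and the function name is hypothetical.

```python
def signal_level_metrics(labels, decisions):
    """Compute TPR, FPR, and accuracy from one boolean decision per signal."""
    tp = sum(1 for y, d in zip(labels, decisions) if y and d)
    fn = sum(1 for y, d in zip(labels, decisions) if y and not d)
    fp = sum(1 for y, d in zip(labels, decisions) if not y and d)
    tn = sum(1 for y, d in zip(labels, decisions) if not y and not d)
    return {
        "tpr": tp / (tp + fn),
        "fpr": fp / (fp + tn),
        "accuracy": (tp + tn) / len(labels),
    }

# True = siren present. 97 of 100 sirens detected, 2 of 100 noise signals flagged.
labels = [True] * 100 + [False] * 100
decisions = [True] * 97 + [False] * 3 + [True] * 2 + [False] * 98
metrics = signal_level_metrics(labels, decisions)
```

With a balanced test set, accuracy is simply the average of the true positive rate and the true negative rate, here (0.97 + 0.98) / 2 = 0.975.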

3.2. Accuracy-Based Comparison with Existing Systems

To provide a broader perspective on how our model performs relative to other systems reported in the literature, we carried out a comparative analysis based on overall accuracy. We chose accuracy because it is the most frequently reported performance measure in studies on this topic, which makes it the most suitable for direct comparison. For consistency, we evaluated both our Simulink model and the three machine learning reference models (described in Section 2.3) using the same 200-signal test set. This ensures that all results are obtained under identical conditions. For the cited works, the accuracy values were taken directly from the original publications. A summary of these comparisons, combining our experimental results with those reported in the literature, is shown in Table 6, which illustrates how our approach relates to existing methods.

Table 6.

Accuracy comparison with selected systems.

4. Discussion

The performance evaluation highlights a clear advantage of the proposed Simulink-based system over classical machine learning classifiers, especially under realistic testing conditions. While all models performed comparably on in-distribution data, their performance diverged significantly when evaluated on the AudioLDM-generated test set. The Simulink model achieved a 97% true positive rate (TPR) and a 2% false positive rate (FPR), outperforming the best learning-based model (SVM) by 6 percentage points in TPR while maintaining a low FPR.

This result demonstrates that the deterministic structure, low complexity, and streaming design of the Simulink model do not compromise accuracy but rather support it under generalization stress. Notably, the Simulink model operates without access to the entire signal upfront, in contrast to the ML models that classify based on complete feature vectors. This indicates a strong robustness of the rule-based system to temporal uncertainty, making it ideal for real-time embedded applications.

The high performance of SVM among ML models aligns with existing literature favoring kernel-based methods for audio classification tasks with limited data. However, the NN and especially KNN models showed considerable degradation when faced with unseen data, highlighting their limited generalization. These findings reinforce the claim that a well-constrained, interpretable system, carefully engineered with real-world implementation constraints, can match or even surpass data-driven models when evaluated fairly.

Moreover, the use of AudioLDM for generating the test dataset represents a novel contribution. It allows the generation of realistic, class-representative test signals while avoiding data leakage from the training distribution. This strategy enables reliable assessment of out-of-distribution generalization, something rarely addressed in prior siren detection studies.

In terms of computational efficiency, the Simulink system also offers advantages: no training phase, fixed memory footprint, and full compatibility with automatic code generation for embedded deployment. These characteristics significantly lower the barrier to integration in automotive systems where energy and latency constraints are strict. Thus, the results demonstrate that principled signal design, rather than pure data-driven optimization, can yield superior practical outcomes.

A small fraction of false positives (2%) occurred during evaluation, primarily caused by short bursts of noise that temporarily brought the zero-crossing rate within the detection bounds. Such cases are rare and pose little practical danger, since a false alert merely prompts the driver to stay alert; nevertheless, future work will focus on mitigating them.

5. Conclusions

This study presented a realistic Simulink implementation of a yelp siren detection system tailored for embedded deployment. Compared to traditional machine learning approaches, the proposed architecture achieved superior generalization performance on a novel test set generated using AudioLDM. The Simulink model combines hardware-friendly constraints, adaptive thresholding, and real-time processing, all while maintaining high accuracy (97% TPR, 2% FPR).

Unlike ML models that depend on global feature representations and large training datasets, the deterministic structure of the Simulink system proved inherently robust, interpretable, and efficient. This makes it particularly attractive for safety-critical applications in smart vehicles or driver-assistance systems.

Future work will include real-world hardware deployment on low-resource microcontrollers, accompanied by detailed profiling of latency, memory usage, and power consumption. Additional directions involve extending detection to other siren types (e.g., wail, hi-lo) and integrating the system into multi-modal frameworks that combine visual and acoustic cues to enhance driver alerting in complex traffic environments. Further improvements in detection robustness and overall system performance will also be pursued.

Author Contributions

Conceptualization, E.V.D. and R.A.D.; methodology, E.V.D.; software, E.V.D. and R.A.D.; validation, E.V.D. and R.A.D.; formal analysis, E.V.D.; investigation, E.V.D.; resources, E.V.D.; data curation, E.V.D.; writing—original draft preparation, E.V.D. and R.A.D.; writing—review and editing, E.V.D., R.A.D. and R.R.; visualization, E.V.D.; supervision, R.A.D. and R.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The training data presented in this study are available at https://doi.org/10.1038/s41597-022-01727-2, reference number [36]. The list of exact signals that were used in the training process is available at https://doi.org/10.1016/j.aej.2024.09.073, reference number [33]. The test data presented in the study are openly available in DatasetCreatedWithAudioLDM at https://github.com/ValentinaDumitrascu/DatasetCreatedWithAudioLDM (accessed on 1 August 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Injury Facts. Emergency Vehicles. Available online: https://injuryfacts.nsc.org/motor-vehicle/road-users/emergency-vehicles/ (accessed on 10 July 2025).

2. Taxman, Pollock, Murray & Bekkerman, LLC. Emergency Vehicle Accidents Statistics USA-2023. 2023. Available online: https://tpmblegal.com/emergency-vehicle-accidents-statistics/ (accessed on 10 July 2025).

3. Bosch Global. Embedded Siren Detection. Available online: https://www.bosch.com/stories/embedded-siren-detection/ (accessed on 10 July 2025).

4. New Atlas. Hear System Promises Simple Inexpensive Siren Detection for Drivers. 2020. Available online: https://newatlas.com/automotive/ihear-siren-detection-system/ (accessed on 10 July 2025).

5. Waymo. Recognizing the Sights and Sounds of Emergency Vehicles. Available online: https://waymo.com/blog/2017/07/recognizing-sights-and-sounds-of (accessed on 10 July 2025).

6. Liaw, J.-J.; Wang, W.-S.; Chu, H.-C.; Huang, M.-S.; Lu, C.-P. Recognition of the Ambulance Siren Sound in Taiwan by the Longest Common Subsequence. In Proceedings of the 2013 IEEE International Conference on Systems, Man, and Cybernetics, Washington, DC, USA, 13–16 October 2013; pp. 3825–3828.

7. Marchegiani, L.; Posner, I. Leveraging the Urban Soundscape: Auditory Perception for Smart Vehicles. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 6547–6554.

8. Beritelli, F.; Casale, S.; Russo, A.; Serrano, S. An Automatic Emergency Signal Recognition System for the Hearing Impaired. In Proceedings of the 2006 IEEE 12th Digital Signal Processing Workshop & 4th IEEE Signal Processing Education Workshop, Teton National Park, WY, USA, 24–27 September 2006; pp. 179–182.

9. Nellore, K.; Hancke, G.P. Traffic Management for Emergency Vehicle Priority Based on Visual Sensing. Sensors 2016, 16, 1892.

10. Schröder, J.; Goetze, S.; Grützmacher, V.; Anemüller, J. Automatic Acoustic Siren Detection in Traffic Noise by Part-Based Models. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 493–497.

11. Chakrabarty, D.; Elhilali, M. Abnormal Sound Event Detection Using Temporal Trajectories Mixtures. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 216–220.

12. Miyazaki, T.; Kitazono, Y.; Shimakawa, M. Ambulance Siren Detector Using FFT on dsPIC. In Proceedings of the 1st IEEE/IIAE International Conference on Intelligent Systems and Image Processing 2013 (ICISIP2013), Kitakyushu, Japan, 24 September 2013.

13. Anacur, C.A.; Saracoglu, R. Detecting of Warning Sounds in the Traffic Using Linear Predictive Coding. Int. J. Intell. Syst. Appl. Eng. 2019, 7, 195–200.

14. Ebizuka, Y.; Kato, S.; Itami, M. Detecting Approach of Emergency Vehicles Using Siren Sound Processing. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 4431–4436.

15. Fatimah, B.; Preethi, A.; Hrushikesh, V.; Singh, B.A.; Kotion, H.R. An Automatic Siren Detection Algorithm Using Fourier Decomposition Method and MFCC. In Proceedings of the 2020 11th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kharagpur, India, 1–3 July 2020; pp. 1–6.

16. Mulimani, M.; Koolagudi, S.G. Segmentation and Characterization of Acoustic Event Spectrograms Using Singular Value Decomposition. Expert Syst. Appl. 2019, 120, 413–425.

17. Mielke, M.; Schäfer, A.; Brück, R. Integrated Circuit for Detection of Acoustic Emergency Signals in Road Traffic. In Proceedings of the 17th International Conference Mixed Design of Integrated Circuits and Systems (MIXDES), Wrocław, Poland, 24–26 June 2010; IEEE: Toruń, Poland, 2010; pp. 562–565.

18. Meucci, F.; Pierucci, L.; Del Re, E.; Lastrucci, L.; Desii, P. A Real-Time Siren Detector to Improve Safety of Guide in Traffic Environment. In Proceedings of the 2008 16th European Signal Processing Conference, Lausanne, Switzerland, 25–29 August 2008; pp. 1–5.

19. Mittal, U.; Chawla, P. Acoustic Based Emergency Vehicle Detection Using Ensemble of Deep Learning Models. Procedia Comput. Sci. 2023, 218, 227–234.

20. Marchegiani, L.; Newman, P. Listening for Sirens: Locating and Classifying Acoustic Alarms in City Scenes. IEEE Trans. Intell. Transp. Syst. 2022, 23, 17087–17096.

21. Chinvar, D.C.; Rajat, M.; Bellubbi, R.L.; Sampath, S.; Guddad, K. Ambulance Siren Detection Using an MFCC Based Support Vector Machine. In Proceedings of the 2021 IEEE International Conference on Mobile Networks and Wireless Communications (ICMNWC), Tumakuru, India, 3–4 December 2021; pp. 1–5.

22. Carmel, D.; Yeshurun, A.; Moshe, Y. Detection of Alarm Sounds in Noisy Environments. In Proceedings of the 2017 25th European Signal Processing Conference (EUSIPCO), Kos Island, Greece, 28 August–2 September 2017; pp. 1839–1843.

23. Boddapati, V.; Petef, A.; Rasmusson, J.; Lundberg, L. Classifying Environmental Sounds Using Image Recognition Networks. Procedia Comput. Sci. 2017, 112, 2048–2056.

24. Murphy, K.P. Probabilistic Machine Learning: Advanced Topics; The MIT Press: Cambridge, MA, USA, 2023; ISBN 978-0-262-04843-9.

25. Géron, A. Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow: Concepts, Tools, and Techniques to Build Intelligent Systems; O’Reilly Media: Beijing, China; Boston, MA, USA; Farnham, UK; Sebastopol, CA, USA; Tokyo, Japan, 2023; ISBN 978-1-0981-2597-4.

26. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; The MIT Press: Cambridge, MA, USA, 2016; ISBN 978-0-262-03561-3.

27. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the Third International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

28. Tran, V.-T.; Tsai, W.-H. Acoustic-Based Emergency Vehicle Detection Using Convolutional Neural Networks. IEEE Access 2020, 8, 75702–75713.

29. Choudhury, K.; Nandi, D. Improvement of Wail Siren Signal Detection in Noisy Environment Using Goertzel Filter with Sliding Window. Results Eng. 2025, 27, 106828.

30. Banchero, L.; Vacalebri-Lloret, F.; Mossi, J.M.; Lopez, J.J. Enhancing Road Safety with AI-Powered System for Effective Detection and Localization of Emergency Vehicles by Sound. Sensors 2025, 25, 793.

31. Dobre, R.A.; Niţă, V.A.; Ciobanu, A.; Negrescu, C.; Stanomir, D. Low Computational Method for Siren Detection. In Proceedings of the 2015 IEEE 21st International Symposium for Design and Technology in Electronic Packaging (SIITME), Brașov, Romania, 22–25 October 2015; pp. 291–295.

32. Dobre, R.A.; Negrescu, C.; Stanomir, D. Improved Low Computational Method for Siren Detection. In Proceedings of the 2017 IEEE 23rd International Symposium for Design and Technology in Electronic Packaging (SIITME), Constanța, Romania, 26–29 October 2017; pp. 318–323.

33. Dobre, R.-A.; Dumitrascu, E.-V. High-Performance, Low Complexity Yelp Siren Detection System. Alex. Eng. J. 2024, 109, 669–684.

34. Liu, H.; Lee, K.; Yang, Y.; Wang, Y.; Kong, Q.; Plumbley, M. AudioLDM: Text-to-Audio Generation with Latent Diffusion Models. arXiv 2023, arXiv:2301.12503. Available online: https://arxiv.org/abs/2301.12503 (accessed on 10 July 2025).

35. Wagner, R.P. Guide to Test Methods, Performance Requirements, and Installation Practices for Electronic Sirens Used on Law Enforcement Vehicles; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2000; NCJ 181622.

36. Asif, M.; Usaid, M.; Rashid, M.; Rajab, T.; Hussain, S.; Wasi, S. Large-Scale Audio Dataset for Emergency Vehicle Sirens and Road Noises. Sci. Data 2022, 9, 599.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).