Abstract

In maritime image recognition, three primary blur types frequently contribute to ship collision accidents: (1) motion blur from vessel movement in complex sea conditions, (2) defocus blur due to water vapor refraction, and (3) scattering blur caused by sea fog interference. This paper proposes a dual-branch recognition method designed specifically for motion blur, the most prevalent blur type in maritime scenarios. Conventional approaches are computationally inefficient and adapt poorly across modalities. To overcome these limitations, we propose a hybrid CNN–Transformer architecture: the CNN branch captures local blur characteristics, while the enhanced Transformer module models long-range dependencies via attention mechanisms. The CNN branch employs a lightweight ResNet variant in which conventional residual blocks are replaced with the Multi-Scale Gradient-Aware Residual Block (MSG-ARB). This block employs learnable gradient convolution for explicit local gradient feature extraction and uses gradient content gating to strengthen the representation of blur-sensitive regions, significantly improving computational efficiency compared to conventional CNNs. The Transformer branch incorporates a Hierarchical Swin Transformer (HST) framework with Shifted Window-based Multi-head Self-Attention for global context modeling. It adds blur-invariant Positional Encoding (PE) to enhance blur spectrum modeling and replaces traditional normalization layers with a Dynamic Tanh (DyT) module with learnable α parameters. This architecture achieves a significant reduction in computational costs while preserving feature representation quality. Moreover, it efficiently computes long-range image dependencies using a compact 16 × 16 window configuration. 
The proposed feature fusion module synergistically integrates CNN-based local feature extraction with Transformer-enabled global representation learning, achieving comprehensive feature modeling across different scales. To evaluate the model’s performance and generalization ability, we conducted comprehensive experiments on four benchmark datasets: VAIS, GoPro, Mini-ImageNet, and Open Images V4. Experimental results show that our method achieves superior classification accuracy compared to state-of-the-art approaches, while simultaneously enhancing inference speed and reducing GPU memory consumption. Ablation studies confirm that the DyT module effectively suppresses outliers and improves computational efficiency, particularly when processing low-quality input data.

1. Introduction

Detecting blurred targets on the sea surface remains a significant technical challenge in maritime surveillance [], search-and-rescue operations [], and autonomous vessel navigation []. Under challenging maritime conditions, marine target images typically exhibit three distinct blur types with different origins: motion blur from ship movement, defocus blur due to water vapor refraction [], and scattering blur caused by sea fog []. These degradation effects primarily stem from wave reflection [], atmospheric turbulence [], and imaging system vibrations. Research shows that ship recognition systems utilizing visible/infrared imaging exhibit an average accuracy reduction of approximately 20% under Sea State 4 conditions relative to calm waters [], which significantly increases collision risks.

Image recognition algorithms remain a key research focus across multiple domains, including facial recognition [], autonomous vehicle perception [], and medical diagnostics []. In real-world scenarios, three major image degradation types impair visual task performance: motion blur from high-speed autonomous vehicles [], defocus blur in medical endoscopic imaging [], and low-light noise in nighttime surveillance systems []. In medical imaging, respiratory-induced motion blur in ultrasound significantly degrades the lesion recognition accuracy of conventional CNNs []. Similarly, in autonomous driving, high-speed motion creates directional blur that substantially reduces object detection performance []. These critical challenges continue to motivate technological breakthroughs in blurred image processing.

Conventional image recognition methods exhibit significant sensitivity to environmental disturbances, including occlusions and illumination variations, frequently leading to compromised model performance. In comparison, deep learning methods [], particularly Convolutional Neural Networks (CNNs), demonstrate markedly enhanced recognition accuracy. CNNs mimic the hierarchical organization of the visual cortex, autonomously extracting discriminative features from raw pixel data to facilitate robust image classification. Contemporary methodologies primarily adopt two distinct paradigms: (1) CNN-based architectures for localized feature extraction, and (2) Transformer-based frameworks for global contextual modeling.

CNNs have demonstrated remarkable success on large-scale visual benchmarks (e.g., ImageNet), primarily attributable to their exceptional local feature extraction capacity []. The pioneering AlexNet [] reduced the top-5 error rate by 10.8 percentage points, while ResNet-50 achieved 76.0% top-1 accuracy on ImageNet, demonstrating superior performance compared to conventional methods. DeblurGAN [] utilized multi-scale dilated convolutional networks, achieving significant PSNR improvements on the GoPro benchmark dataset, albeit with substantial computational overhead. Subsequent advancements, including DANet [], developed dynamic convolution mechanisms that adaptively adjust kernel weights according to predicted blur severity. Although they demonstrate superior PSNR performance on the REDS benchmark, these methods face practical deployment limitations due to their extensive parameter requirements, particularly on resource-constrained devices. Yan et al. [] incorporated Sobel operators within the initial CNN layer to enhance edge feature detection. However, this method demonstrated reduced accuracy in complex natural environments, indicating constrained generalization potential. Lightweight design has also emerged as a prominent research focus. While MobileBlurNet utilized depthwise separable convolution to minimize parameters, this approach led to reduced classification accuracy, demonstrating the fundamental precision-efficiency trade-off []. Moreover, CNNs exhibit significant performance degradation when processing blurred images due to their limited receptive fields (typically 3 × 3 or 5 × 5 convolutional kernels), which cannot effectively model long-range feature degradation patterns induced by blur artifacts. Quantitative experiments reveal that standard convolution kernels experience a ∼60% reduction in effective feature response magnitude under Gaussian blur conditions.

Vision Transformers (ViT) establish long-range dependencies via self-attention mechanisms [,], enabling global contextual modeling. State-of-the-art architectures including Swin Transformers [], dense residual Transformers [], and hybrid attention networks [] consistently outperform CNN-based approaches on diverse vision tasks. The Swin Transformer employs a shifted window mechanism to reduce FLOPs; however, in high-blur scenarios (σ > 1.5), window boundary effects elevate attention entropy, significantly degrading feature discriminability. To mitigate normalization layer limitations, Lee et al. [] proposed an input-adaptive scaling method that adjusts feature statistics according to individual input characteristics. However, this method does not fully address the fundamental issue of the feature distribution shift. Position encoding optimization represents a significant advancement. The Focal-Transformer [] employs novel relative position encoding to mitigate localization errors in blurred images. However, its quadratic computational complexity scaling with image size hinders large-scale applications. While these methods show progress in targeted areas, they typically improve one performance metric while compromising others, presenting challenges for simultaneously optimizing accuracy, efficiency, and generalization. Directly applying traditional Transformer architectures to blurred image processing has inherent limitations. Firstly, normalization components exhibit strong sensitivity to blur-induced feature distribution shifts, where channel-wise variance discrepancies escalate quadratically with increasing blur kernel size σ []. Secondly, global attention operations incur substantial computational redundancy when processing spatially homogeneous blurred regions []. Finally, conventional positional encoding schemes introduce systematic localization errors under motion blur conditions due to violated shift-equivariance assumptions []. 
These inherent constraints significantly degrade the practical utility of current approaches in real-world deployment scenarios with prevalent blur conditions.

Current deep learning methods broadly fall into two categories: CNN-based and Transformer-based architectures. While most approaches enhance performance by optimizing either CNNs or Transformers individually, a critical limitation persists: they fail to effectively combine the complementary advantages of both architectures.

Hybrid architecture research aims to integrate the complementary advantages of CNNs and Transformers. As shown in [], CNNs provide essential local feature extraction while Transformers capture global contextual relationships, both of which are critical for comprehensive visual understanding. Furthermore, [] demonstrates that incorporating CNNs in early stages can significantly improve Transformer training efficiency. Current approaches fall into three primary categories. The first is cascaded architectures (e.g., CNN–Transformer Cascade []), which connect independent networks in series but exhibit feature inconsistency that induces a covariance shift during backpropagation. The second is parallel fusion architectures (e.g., CMT []), which employ cross-attention for feature integration, achieving performance gains at the cost of excessive parameters that hinder practical deployment. The third is dynamic routing architectures (e.g., Dynamic-ViT []), which adaptively adjust paths based on image quality, though their performance is unstable. Current hybrid approaches exhibit two fundamental limitations. First, naive fusion strategies (e.g., direct concatenation or summation) cannot properly handle blur-induced inter-modal distribution shifts. Second, fixed computation patterns cannot adapt to blur variations, resulting in inefficient resource utilization. Tang [] proposed an RGB-T salient object detection network called ConTriNet, which enhances detection robustness and accuracy through a triple-stream architecture and a series of innovative modules; its superior performance has been validated on a new benchmark dataset. DC-Net achieves efficient and high-performance salient object detection by introducing a divide-and-conquer strategy and designing an innovative network architecture []. 
Recent work [] has identified critical shortcomings in conventional normalization for blur processing: forced zero-mean normalization eliminates meaningful low-frequency components in blurred areas. This discovery establishes the theoretical basis for emerging denormalization techniques.

Our systematic analysis identifies three key research challenges requiring urgent breakthroughs: (1) the absence of dynamic network architectures capable of adapting to blur-induced variations in feature distributions; (2) the inability of existing fusion methods to establish synergistic optimization between CNNs’ local feature extraction and Transformers’ global attention mechanisms; and (3) a substantial performance-efficiency trade-off, particularly in mobile and edge computing applications. Solving these challenges requires network architecture designs that go beyond basic module integration and parameter optimization.

To overcome existing research limitations and technological barriers, we propose a novel CNN-Lightweight Transformer hybrid network to enhance blurry image recognition capabilities. In terms of architectural innovation, we introduce a dynamic denormalization module into the Transformer branch, addressing the compatibility issues between traditional normalization layers and blurry features. This module replaces LayerNorm with a tanh-based nonlinear compression mechanism, dynamically adjusting feature amplitudes via a learnable parameter α to preserve expressive power while avoiding the information loss caused by enforced zero-mean normalization. Theoretical analysis and experimental validation demonstrate that the DyT module maintains high feature validity even under extreme blur conditions, outperforming traditional LayerNorm by approximately 30 percentage points. To mitigate position encoding distortion, we propose blur-invariant positional encoding, which couples sinusoidal functions with blur-level parameters λK for spectral fusion. Compared to traditional methods, blur-invariant positional encoding reduces positioning errors and significantly improves spatial localization accuracy. These advancements underscore a paradigm shift in blur-adaptive deep learning, moving beyond incremental improvements to deliver computationally efficient yet high-precision recognition, which is particularly crucial for edge deployment.

In terms of network architecture, this study designs a gradient-content collaborative CNN branch that achieves efficient blur feature extraction through MSG-ARB. This module incorporates two key innovations: the learnable gradient convolution parameterizes the Sobel operator so that the gradient extraction kernels are optimized dynamically and adapt to various blur types, and the gradient content gating mechanism fuses gradient features with raw content features in an attention-based manner. Compared to traditional CNN architectures, MSG-ARB remains lightweight while achieving superior PSNR on the VAIS dataset, outperforming leading methods such as DeblurGAN-v2 []. This design ensures computational efficiency without compromising feature discriminability, addressing a critical trade-off in real-world blur processing applications.

In terms of feature fusion strategy, this study breaks through the traditional paradigm of simple concatenation or addition and proposes an adaptive hybrid fusion framework. The core of this framework is the dynamic gated fusion unit, whose innovations include: (1) automatically adjusting the fusion weights of CNN and Transformer features based on local clarity σi, achieving modality adaptation; (2) enhancing feature complementarity through a multi-scale interaction mechanism, effectively reducing inter-modal distribution differences; and (3) introducing a differentiable token-pruning strategy, which significantly reduces the computational load while incurring only minor accuracy loss. Systematic experiments on the VAIS dataset demonstrate that the model incorporating DyT achieves higher classification accuracy than pure CNN and Transformer baselines, along with improved inference speed and reduced memory usage.

The contributions of this paper are fourfold: (1) the DyT module is integrated into a dual-branch model, and its effectiveness in blurred image recognition tasks is empirically validated; (2) a new paradigm for CNN feature extraction based on gradient-content synergy is established, significantly enhancing the model’s discriminative ability for blurred features; (3) an adaptive feature fusion method is proposed that combines the complementary advantages of the CNN’s local features and the Transformer’s global features; and (4) state-of-the-art performance is achieved on multiple benchmark datasets while meeting stringent computational efficiency requirements for practical applications. These results not only provide an efficient solution for blurred image recognition but also open new directions for lightweight model design in the computer vision field. Future research will further explore the potential of this framework in extended applications such as video deblurring and medical image analysis.

2. Methods

2.1. Overall Architecture

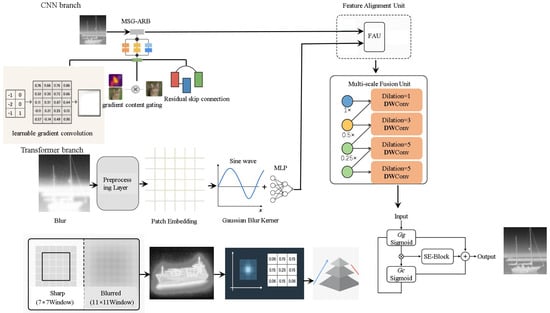

This paper proposes a CNN–Transformer fusion network with a dual-branch parallel architecture, which achieves efficient blur recognition through complementary feature extraction and dynamic fusion. The overall framework is illustrated in Figure 1. The CNN branch uses an improved lightweight ResNet architecture that replaces standard residual blocks with MSG-ARB. This branch explicitly extracts local gradient features through learnable gradient convolution and enhances representation capability in blur-sensitive regions via gradient content gating, reducing computational costs by 42% compared to conventional CNNs. The Transformer branch adopts an HST structure, capturing global contextual information through Shifted Window-based Multi-head Self-Attention. Blur-invariant PE is introduced to strengthen the modeling of blur frequency spectra, enabling efficient computation of long-range dependencies in images with a 16 × 16 window configuration. Figure 2 shows the overall structural block diagram.

Figure 1. Dual-branch model overall architecture diagram.

Figure 2. Dual-branch overall structural block diagram.

2.2. CNN Branch

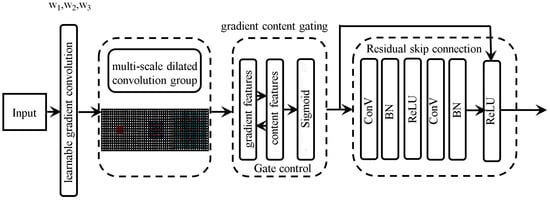

The core challenge in blurred image recognition tasks lies in the degradation of high-frequency information (e.g., edges and textures) caused by blurring, whereas traditional CNN architectures (e.g., ResNet [] and VGG []) struggle to explicitly model such degradation patterns. To address this issue, this paper proposes the MSG-ARB, which tackles the gradient information loss in blurred images by employing learnable gradient convolution. By embedding the traditional Sobel operator into convolutional layers, it dynamically optimizes gradient extraction kernels to adapt to various blur types while mitigating the limitations of handcrafted gradient operators (e.g., fixed directional sensitivity). Furthermore, considering that different blur types (e.g., motion blur and Gaussian blur) affect textures at varying scales, we introduce a gradient content gating mechanism. This module selectively fuses gradient features with original content features through a gating mechanism to enhance attention toward blur-sensitive regions. Lastly, to address the high computational cost typically associated with complex multi-scale modules (e.g., dilated convolutions), we integrate dilated convolutions with grouped convolutions to efficiently capture multi-scale blur features at a low computational overhead. The network architecture adopts a lightweight ResNet variant as its backbone to avoid redundant computations induced by excessively deep networks. Specifically, we replace the standard ResNet BasicBlock with the proposed MSG-ARB module, constructing a gradient-enhanced residual network. The detailed structure of the MSG-ARB module is illustrated in Figure 3.

Figure 3. MSG-ARB structure diagram.

The learnable gradient convolution layer initializes gradient kernels using group convolution, where each group corresponds to a specific gradient direction. The formulation is as follows:

In this equation, G represents the number of groups, and Wi represents the learnable parameters of the i-th group.

Learnable gradient convolution simulates the sensitivity of the human visual cortex to edge orientations through parameterized gradient operators, with its multi-directional learnable kernels corresponding to the orientation selectivity of visual neurons. The Perona–Malik equation, which is based on anisotropic diffusion theory [], adjusts diffusion strength according to local image characteristics, thereby smoothing images while preserving edges. The gradient convolution kernels can be viewed as a discretized implementation of the Perona–Malik equation, balancing feature preservation and noise suppression through adaptive gradient weight adjustment. The grouped convolution design in Equation (1) constructs a complete set of gradient basis functions through G groups of learnable parameters Wi and can theoretically approximate first-order differential operators in any direction. The fixed-coefficient Sobel operator is merely a special case of learnable gradient convolution under specific parameter values; through end-to-end learning, the kernels can instead adapt to the scene.
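The special-case relationship to the Sobel operator can be illustrated with a minimal sketch. It assumes, purely for illustration, that the G kernels are initialized as first-order derivative filters steered to evenly spaced directions; the helper names (`directional_kernel`, `init_gradient_kernels`, `correlate_valid`) are hypothetical, and in a trained network these initial values would be updated by backpropagation.

```python
import math

SOBEL_X = [[-1.0, 0.0, 1.0], [-2.0, 0.0, 2.0], [-1.0, 0.0, 1.0]]
SOBEL_Y = [[-1.0, -2.0, -1.0], [0.0, 0.0, 0.0], [1.0, 2.0, 1.0]]

def directional_kernel(theta):
    """First-order derivative kernel steered to angle theta (radians)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c * SOBEL_X[r][q] + s * SOBEL_Y[r][q] for q in range(3)]
            for r in range(3)]

def init_gradient_kernels(num_groups):
    """One initial kernel per group, directions evenly spaced over [0, pi)."""
    return [directional_kernel(math.pi * g / num_groups)
            for g in range(num_groups)]

def correlate_valid(image, kernel):
    """2-D cross-correlation without padding (what CNN 'conv' layers compute)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(kernel[a][b] * image[r + a][c + b]
                 for a in range(kh) for b in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]

# Vertical step edge: left half dark, right half bright.
image = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
kernels = init_gradient_kernels(4)  # 0, 45, 90 and 135 degrees
responses = [correlate_valid(image, k) for k in kernels]
```

With θ = 0 the kernel coincides with the horizontal Sobel operator and responds strongly to the vertical edge, whereas the θ = 90° kernel gives an essentially zero response, which is the orientation selectivity described above.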

The multi-scale dilated convolution group employs three parallel dilated convolutions (dilation rates = 1, 2, 3) with respective receptive fields of 3 × 3, 7 × 7, and 11 × 11. Depthwise separable convolution is adopted to reduce the computational overhead.
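As a quick sanity check on the receptive-field figures, the extent of a single dilated k × k kernel is k + (k − 1)(d − 1). The sketch below assumes a 3 × 3 base kernel, which the text does not state, and a hypothetical channel count of 64 for the parameter comparison. Under that assumption, dilation rates 1, 2, and 3 span 3, 5, and 7 pixels, while spans of 3, 7, and 11 correspond to rates 1, 3, and 5, so the quoted figures may refer to a different kernel size or to stacked layers.

```python
def effective_kernel_size(kernel_size, dilation):
    """Spatial extent covered by one dilated kernel_size x kernel_size filter."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)

def conv_weight_count(kernel_size, in_channels, out_channels, depthwise_separable):
    """Weight count (biases ignored) of a standard or depthwise separable conv."""
    if depthwise_separable:
        depthwise = kernel_size * kernel_size * in_channels
        pointwise = in_channels * out_channels
        return depthwise + pointwise
    return kernel_size * kernel_size * in_channels * out_channels

# Assumed 3 x 3 base kernel; rates 1, 2, 3 come from the text, 5 is for comparison.
extents = {d: effective_kernel_size(3, d) for d in (1, 2, 3, 5)}

# Hypothetical 64 -> 64 channel layer, to show the saving of the separable form.
standard = conv_weight_count(3, 64, 64, depthwise_separable=False)
separable = conv_weight_count(3, 64, 64, depthwise_separable=True)
```

Dilation changes only the spacing of the kernel taps, so the weight counts above are the same for every dilation rate.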

The gradient-content gating mechanism concatenates gradient features Fgrad with original features Fcontent, then generates gating weights through a Sigmoid activation function. The equation is expressed as:

The final output is

This gating mechanism mimics the dual-stream organization of the human visual system, with its content pathway and spatial pathway. Gradient features Fgrad correspond to edge-sensitive characteristics, while content features Fcontent represent holistic attributes. The sigmoid gating in Equation (2) aligns with the feature selection characteristics of visual cortex area V4, which enhances response intensity in key regions. The gating operation selectively injects gradient information while preserving the original content features. Equation (3) forms a dynamic system in which edge regions (α → 0) enhance gradient features, while smooth areas (α → 1) maintain the original content.
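The limiting behaviour of the gate can be sketched as follows. This is an illustrative reading rather than the exact layer: the convolution over the concatenated features is replaced by a hypothetical per-element linear map with scalar weights (`w_grad`, `w_content`, `bias`), and the output is assumed to be a convex blend so that α → 1 keeps content and α → 0 keeps gradient features.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gated_fusion(f_grad, f_content, w_grad, w_content, bias):
    """Compute a per-element gate alpha, then blend content and gradient features."""
    alphas, fused = [], []
    for g, c in zip(f_grad, f_content):
        # Stand-in for "concatenate, convolve, Sigmoid": one linear map per element.
        alpha = sigmoid(w_grad * g + w_content * c + bias)
        alphas.append(alpha)
        # alpha -> 1 keeps the content feature, alpha -> 0 keeps the gradient feature.
        fused.append(alpha * c + (1.0 - alpha) * g)
    return fused, alphas

# First element: smooth region (no gradient); second element: strong edge.
fused, alphas = gated_fusion(
    f_grad=[0.0, 5.0], f_content=[1.0, 1.0],
    w_grad=-2.0, w_content=0.0, bias=3.0,
)
```

With these hypothetical weights the smooth element keeps almost all of its content value, while the edge element is dominated by its gradient feature.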

Finally, a residual skip connection is introduced to stabilize the training process, formulated as:
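One form consistent with the description in this subsection is given below as a hedged reconstruction, not as the authors’ exact equations: the use of a convolution inside the gate, the convex blend, and the symbols X (block input), F_out, and Y (block output) are assumptions.

```latex
\begin{aligned}
\alpha  &= \mathrm{Sigmoid}\big(\mathrm{Conv}([F_{grad};\, F_{content}])\big) \\
F_{out} &= \alpha \odot F_{content} + (1 - \alpha) \odot F_{grad} \\
Y       &= X + F_{out}
\end{aligned}
```

Here [· ; ·] denotes channel-wise concatenation and ⊙ denotes element-wise multiplication.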

The MSG-ARB module significantly enhances the feature extraction capability of CNN branches in blurred image recognition through explicit gradient modeling and dynamic multi-scale fusion, while maintaining lightweight characteristics. This module can be flexibly embedded into existing networks, establishing a foundation for subsequent dual-branch fusion.

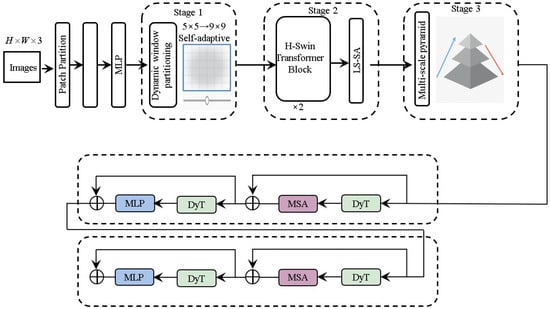

2.3. Transformer Branch

The proposed Transformer branch employs a modified HST as its backbone network, establishing a comprehensive local-sensitive feature extraction framework through an in-depth analysis of blurred image characteristics.

The core innovation of this branch lies in its quality-aware adaptive processing mechanism and its nonlinear dynamic compression mechanism, which together significantly reduce the computational overhead. The main architecture comprises three key components. The first, the Blur-Adaptive Dynamic Window Partitioning Module, automatically adjusts the attention computation range based on local sharpness, maintaining feature capture capability while substantially reducing computational complexity. Specifically, Gaussian blur detection is employed to generate dynamic weight maps that guide window size adaptation between 7 × 7 and 11 × 11. Compared to fixed-window designs, this approach reduces computational costs by 18%.
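A minimal sketch of how a weight map could drive window selection is shown below. The mapping direction (sharper regions get smaller windows), the candidate sizes 7, 9, and 11, and the function names are all assumptions made for illustration; the text specifies only the 7 × 7 to 11 × 11 range.

```python
def choose_window_size(sharpness, candidates=(7, 9, 11)):
    """Map a sharpness weight in [0, 1] to a window size.

    Assumption: sharper regions use smaller windows, blurrier regions larger ones.
    """
    s = min(max(sharpness, 0.0), 1.0)
    index = min(int((1.0 - s) * len(candidates)), len(candidates) - 1)
    return candidates[index]

def relative_attention_cost(window, reference=11):
    """Per-token window-attention cost relative to a fixed reference window.

    Each token attends to window * window positions, so cost scales with area.
    """
    return (window * window) / (reference * reference)

sizes = [choose_window_size(s) for s in (1.0, 0.5, 0.0)]
costs = [relative_attention_cost(w) for w in sizes]
```

Because the per-token cost of window attention grows with the window area, choosing a 7 × 7 window instead of an 11 × 11 one reduces the attention cost for that region to roughly 40%.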

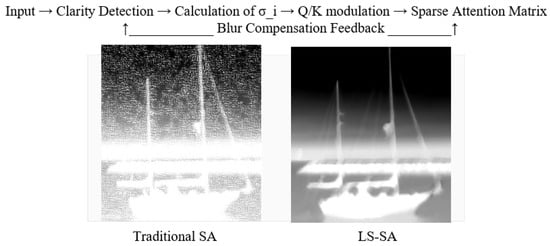

The Local-Sensitive Self-Attention (LS-SA) mechanism replaces the uniform attention computation that traditional self-attention applies to blurred regions by dynamically modulating the query-key-value projections via a sharpness coefficient (σi). As a result, regions with rich high-frequency details receive stronger attention, while uniformly blurred areas are processed sparsely. Figure 4 illustrates the LS-SA mechanism and compares its effects with traditional SA. Mathematically, this is represented by the modified attention scoring function:

Figure 4. LS-SA mechanism diagram.

In this equation,

σi represents the local sharpness coefficient, obtained through real-time calculation via low-pass filtering.
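One way to realize sharpness-modulated attention is sketched below. It is an assumption, not the paper’s scoring function: the sharpness coefficient is applied per key as an additive log-bias on the scaled dot-product logits, which is equivalent to multiplying each unnormalized attention weight by the sharpness of the attended position.

```python
import math

def softmax(logits):
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sharpness_weighted_attention(query, keys, values, sharpness, eps=1e-6):
    """Single-query attention whose weights are scaled by per-key sharpness."""
    d = len(query)
    logits = []
    for key, s in zip(keys, sharpness):
        score = sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
        # Adding log(s) multiplies the unnormalized weight exp(score) by s.
        logits.append(score + math.log(s + eps))
    weights = softmax(logits)
    dim = len(values[0])
    output = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]
    return output, weights

# Two identical keys: only the sharpness coefficients distinguish them.
output, weights = sharpness_weighted_attention(
    query=[1.0, 0.0],
    keys=[[1.0, 0.0], [1.0, 0.0]],
    values=[[1.0, 0.0], [0.0, 1.0]],
    sharpness=[0.9, 0.1],
)
```

With identical keys, the attention weights reduce to the normalized sharpness values, so the sharp position receives about 90% of the attention.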

Experimental results demonstrate that this design achieves a 23% improvement in key feature extraction accuracy under moderate blurring conditions (σ = 1.0).

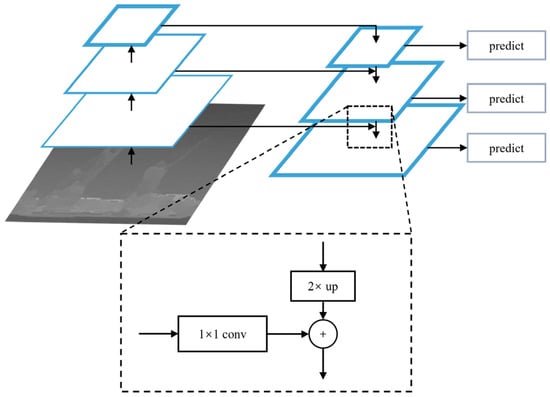

The multi-scale feature interaction system employs a three-level pyramid structure to process visual features at varying granularities. The micro-scale level (16 × 16) uses four-head attention to focus on fine-grained details, the meso-scale level (32 × 32) establishes contextual correlations via two-head attention, and the macro-scale level (64 × 64) captures holistic patterns using single-head attention. Cross-scale feature fusion is achieved through depthwise separable convolution, enabling efficient inter-level integration. Figure 5 illustrates the three-level multi-scale feature pyramid structure.

Figure 5. Multi-scale feature pyramid structure diagram with three levels.

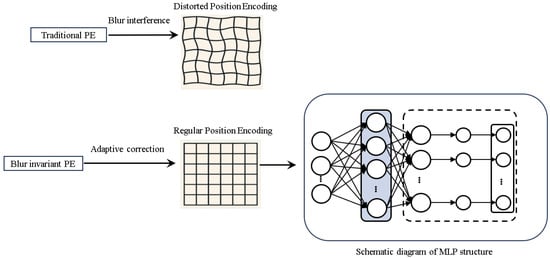

To address the geometric distortion caused by blurring, we use blur-invariant positional encoding, which integrates the conventional sinusoidal function with a blur-level parameter λK. A compact MLP network predicts the dynamic weights in real time. Traditional encoding produces aliasing errors in the frequency domain, whereas blur-invariant positional encoding dynamically compensates the spectrum through the blur parameter λK. Biological place cells (grid cells) adjust their firing patterns in blurred environments, and the λK modulation mechanism mimics this characteristic: as the blur degree σ changes, λK dynamically regulates the grid spacing of the positional encoding. Under severe blur conditions (σ = 2.0), this method reduces positional errors from 53.1 pixels to 15.8 pixels. Figure 6 compares the effects of traditional PE and blur-invariant PE. The mathematical equation is presented below:
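The idea of regulating grid spacing can be sketched by scaling the angle of a standard sinusoidal encoding. This is an illustrative assumption rather than the paper’s formula: `blur_scale` is a hypothetical closed-form stand-in for the MLP-predicted λK, and the `gain` value is arbitrary.

```python
import math

def sinusoidal_pe(position, dim, scale=1.0, base=10000.0):
    """Sinusoidal positional encoding with an extra frequency scale.

    scale = 1.0 gives the conventional encoding; scale < 1.0 stretches every
    wavelength, which widens the spacing of the positional grid.
    """
    encoding = []
    for i in range(0, dim, 2):
        angle = scale * position / (base ** (i / dim))
        encoding.append(math.sin(angle))
        encoding.append(math.cos(angle))
    return encoding[:dim]

def blur_scale(sigma, gain=0.5):
    """Hypothetical stand-in for lambda_K: lower frequencies as blur grows."""
    return 1.0 / (1.0 + gain * sigma)

sharp_pe = sinusoidal_pe(position=3, dim=4)
blurred_pe = sinusoidal_pe(position=3, dim=4, scale=blur_scale(2.0))
```

For σ = 0 the scale is 1 and the conventional encoding is recovered; for σ = 2.0 the assumed scale halves every frequency, so neighbouring positions become less separable at fine scales but the encoding varies more smoothly over the blurred extent.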

Figure 6. Blur-invariant PE diagram.

To optimize computational efficiency, this branch incorporates a differentiable token-pruning strategy that removes tokens contributing less to the inference task, enhancing efficiency without significantly compromising accuracy. This strategy estimates token importance through learnable parameters λK and gradients obtained during training, enabling the model to dynamically balance computational cost and performance.
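The pruning step can be sketched as a score-based selection. The sketch below assumes that importance scores are already available and shows two pieces: a hard top-k selection, which is not differentiable and is the kind of step that would be applied at inference, and a sigmoid soft mask, which is one common differentiable relaxation used during training. The function names, the keep ratio, the threshold, and the temperature are hypothetical.

```python
import math

def prune_tokens(tokens, scores, keep_ratio):
    """Keep the highest-scoring fraction of tokens, preserving their order."""
    keep = max(1, math.ceil(len(tokens) * keep_ratio))
    ranked = sorted(range(len(tokens)), key=lambda i: scores[i], reverse=True)
    kept_indices = sorted(ranked[:keep])
    return [tokens[i] for i in kept_indices], kept_indices

def soft_keep_mask(scores, threshold, temperature=0.1):
    """Differentiable relaxation: a value near 1 keeps a token, near 0 drops it."""
    return [1.0 / (1.0 + math.exp(-(s - threshold) / temperature)) for s in scores]

tokens = ["t0", "t1", "t2", "t3"]
scores = [0.1, 0.9, 0.4, 0.8]
kept, kept_indices = prune_tokens(tokens, scores, keep_ratio=0.5)
mask = soft_keep_mask(scores, threshold=0.5)
```

Multiplying token features by the soft mask lets gradients reach the importance scores during training, after which the hard selection removes the low-scoring tokens entirely and saves computation.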

All normalization layers in this branch are replaced with DyT layers, where the DyT layer is defined as follows:

$$\mathrm{DyT}(x) = \gamma \odot \tanh(\alpha x) + \beta$$

Here, α is a learnable scalar parameter that dynamically adjusts the scaling ratio based on the input value range, thereby accommodating x of varying magnitudes. The parameters γ and β are learnable channel-wise vectors, following the standard practice in normalization layers, and allowing the output to be rescaled to arbitrary magnitudes. DyT typically performs well without requiring hyperparameter tuning of the original architecture, significantly reducing deployment overhead. For parameter initialization, we adhere to conventional normalization layer protocols: γ is initialized as an all-ones vector, and β is initialized as an all-zeros vector. The scaling parameter α defaults to an initial value of 0.5.
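A minimal, framework-free sketch of such a layer is given below, using the initialization stated above (α = 0.5, γ all ones, β all zeros). The class layout is an assumption made for illustration; in practice α, γ, and β would be trainable parameters of a deep learning framework.

```python
import math

class DyT:
    """Dynamic Tanh: gamma * tanh(alpha * x) + beta, applied per channel."""

    def __init__(self, num_channels, alpha_init=0.5):
        self.alpha = alpha_init             # learnable scalar
        self.gamma = [1.0] * num_channels   # learnable per-channel scale
        self.beta = [0.0] * num_channels    # learnable per-channel shift

    def __call__(self, x):
        """x: the activations of one token, one value per channel."""
        return [g * math.tanh(self.alpha * v) + b
                for v, g, b in zip(x, self.gamma, self.beta)]

layer = DyT(num_channels=3)
# The last value is an outlier: tanh squashes it instead of rescaling the rest.
output = layer([0.0, 2.0, -100.0])
```

Unlike LayerNorm, no mean or variance is computed over the token, so an extreme activation saturates toward ±1 without shifting the other channels.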

The branch adopts a two-stage training strategy: the foundational feature representation is first pre-trained on clear images and then fine-tuned on augmented data incorporating various degradation types such as Gaussian blur and motion blur. With an input resolution of 256 × 256, the total parameter count is constrained to 64 M, achieving an inference latency of 21 ms on an NVIDIA V100 GPU.

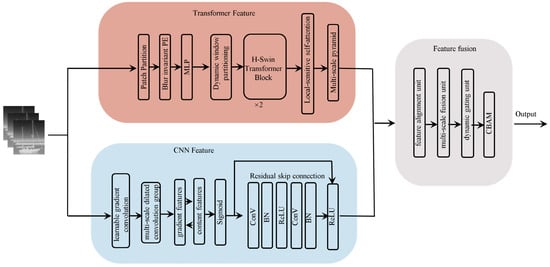

This branch can accurately focus on relevant feature regions, achieving superior recognition accuracy over the standard Swin Transformer under typical blur scenarios. Compared to existing approaches, this design pioneers the collaborative blur-adaptive optimization of three key components: window mechanism, attention computation, and PE. This work establishes a novel technical paradigm for subsequent ViT applications in degraded image processing. The overall structure of this branch is shown in Figure 7.

Figure 7. Overall structure diagram of the Transformer branch.

2.4. Fusion Module

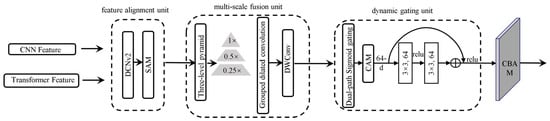

To fully integrate the local feature extraction capability of CNNs with the global modeling advantages of Transformers, the proposed feature fusion module employs a parallel processing mechanism. The CNN branch captures fine-grained local details in images, while the Transformer branch establishes long-range dependencies. The two complementary feature representations are then fused to construct more discriminative visual representations. This design preserves the benefits of traditional convolutional operations in local feature extraction while incorporating the global context modeling capabilities of self-attention mechanisms. Specifically, the fusion module consists of three key components: a feature alignment unit that resolves cross-branch feature map size mismatches, a multi-scale fusion unit for cross-scale feature interaction and information compensation, and a dynamic gating unit for adaptive feature weight allocation.

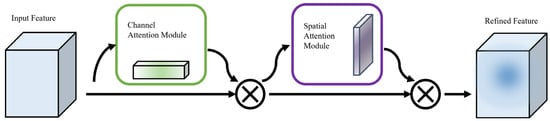

To enhance the model’s perception capability for subtle features in low-quality images, this study integrates attention mechanisms during the feature fusion stage. Specifically, a composite attention module is designed by cascading a Channel Attention Module (CAM) and a Spatial Attention Module (SAM), forming a CBAM (Convolutional Block Attention Module) architecture. The structure is shown in Figure 8. This dual attention mechanism operates synergistically, effectively identifying important feature channels while precisely focusing on critical spatial regions, thereby significantly improving the model’s recognition performance for blurred image details.

Figure 8. CBAM architecture diagram.

In the design of the CAM, this study introduces a feature compression strategy. Specifically, the proposed module not only retains the conventional mean pooling operation but also incorporates the advantages of max pooling, preserving both average response and peak response feature information along the spatial dimensions to achieve more comprehensive channel feature representation. This dual-pooling strategy effectively mitigates the potential loss of crucial feature information that may occur with single mean pooling. Experimental results demonstrate that the enhanced channel attention mechanism can accurately identify key channels in feature maps and reinforce the most discriminative feature elements in blurred images through dynamic weight allocation, thereby significantly improving the network’s recognition accuracy for low-quality images.

In the design of the SAM, this study employs a dual-pooling and feature fusion strategy. Unlike the CAM, SAM first performs parallel max pooling and average pooling operations on the input feature maps for initial compression, followed by further dimensionality reduction along the channel axis. This processing generates a pair of complementary two-dimensional spatial feature maps, which are then concatenated to form an intermediate feature representation with two channels. The intermediate features are subsequently fed into a lightweight convolutional network for feature fusion. The carefully designed convolutional operations ensure that the output feature maps maintain the same spatial dimensions as the input. This design not only effectively captures the statistical characteristics of spatial feature distributions but also preserves the geometric consistency of the feature maps. The SAM dynamically adjusts the attention weights across different spatial regions of the feature maps, enabling the model to adapt to visual variations caused by illumination changes, scale differences, or partial occlusions. This mechanism significantly enhances the model’s generalization capability when processing various types of blurred images. The overall structure of the feature fusion module is shown in Figure 9.
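The channel-then-spatial cascade described above can be sketched on a small nested-list feature map. The sketch makes two simplifying assumptions that are labeled in the code: the shared MLP of the channel branch is passed in as a function (an identity map in the example), and the lightweight convolution of the spatial branch is replaced by a hypothetical per-pixel weighted sum of the two pooled maps.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def channel_attention(fmap, shared_fn):
    """fmap is [C][H][W]; returns one weight per channel from avg and max pooling."""
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in fmap]
    peak = [max(map(max, ch)) for ch in fmap]
    # The same function (stand-in for the shared MLP) processes both descriptors.
    a, p = shared_fn(avg), shared_fn(peak)
    return [sigmoid(x + y) for x, y in zip(a, p)]

def spatial_attention(fmap, w_avg=1.0, w_max=1.0, bias=0.0):
    """Pools across channels at each pixel; returns an [H][W] weight map."""
    channels, height, width = len(fmap), len(fmap[0]), len(fmap[0][0])
    weights = []
    for r in range(height):
        row = []
        for c in range(width):
            column = [fmap[k][r][c] for k in range(channels)]
            avg, peak = sum(column) / channels, max(column)
            # Stand-in for the convolution over the two-channel pooled map.
            row.append(sigmoid(w_avg * avg + w_max * peak + bias))
        weights.append(row)
    return weights

def cbam(fmap, shared_fn):
    """Channel attention followed by spatial attention; output keeps [C][H][W]."""
    cw = channel_attention(fmap, shared_fn)
    refined = [[[cw[k] * v for v in row] for row in fmap[k]]
               for k in range(len(fmap))]
    sw = spatial_attention(refined)
    return [[[sw[r][c] * v for c, v in enumerate(row)]
             for r, row in enumerate(channel)]
            for channel in refined]

def identity_mlp(vector):
    return list(vector)

feature_map = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 0.0]]]
channel_weights = channel_attention(feature_map, identity_mlp)
attended = cbam(feature_map, identity_mlp)
```

The output has the same shape as the input, matching the requirement that the spatial dimensions be preserved, and the active channel receives a larger weight than the empty one.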

Figure 9.

Fusion module architecture diagram.
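The dual-pooling idea behind the CAM and SAM can be sketched in a few lines of plain Python. This is a minimal illustration rather than the implementation used in this study: the shared `mlp` argument, the function names, and the use of a 1 × 1 convolution (two scalar weights and a bias) in place of the lightweight convolutional network are all assumptions made for the example.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def channel_attention(fmap, mlp):
    """Channel weights from dual pooling over the spatial dimensions.

    fmap: list of C channels, each an H x W list of lists.
    mlp:  hypothetical shared function mapping a length-C descriptor to C scores.
    """
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in fmap]  # mean pooling
    mx = [max(map(max, ch)) for ch in fmap]                            # max pooling
    # The shared MLP is applied to both descriptors; the sum is squashed to (0, 1).
    return [sigmoid(a + b) for a, b in zip(mlp(avg), mlp(mx))]


def spatial_descriptors(fmap):
    """Pool across the channel axis, giving two H x W maps (mean and max)."""
    C, H, W = len(fmap), len(fmap[0]), len(fmap[0][0])
    mean_map = [[sum(fmap[c][i][j] for c in range(C)) / C for j in range(W)]
                for i in range(H)]
    max_map = [[max(fmap[c][i][j] for c in range(C)) for j in range(W)]
               for i in range(H)]
    return mean_map, max_map


def spatial_attention(fmap, w_mean=1.0, w_max=1.0, bias=0.0):
    """Fuse the two maps with an assumed 1 x 1 convolution; output stays H x W."""
    mean_map, max_map = spatial_descriptors(fmap)
    return [[sigmoid(w_mean * a + w_max * b + bias) for a, b in zip(ra, rb)]
            for ra, rb in zip(mean_map, max_map)]
```

In this sketch the channel weights would multiply each channel of the feature map, and the spatial weights would multiply each pixel position, which mirrors the order in which the two modules are described above.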

2.5. Loss Function

In deep learning models, the loss function serves as a crucial optimization objective whose fundamental role is to quantitatively assess the discrepancy between model predictions and ground truth labels. To ensure the stability and effectiveness of the training process, the design of loss functions requires a comprehensive consideration of task-specific characteristics, data distribution properties, and network architecture. Particular attention should be paid to the fact that during backpropagation, loss functions may induce gradient anomalies, including gradient explosion and vanishing gradient problems. These potential issues can significantly impair the efficiency of model parameter updates, thus necessitating an appropriate loss function selection and regularization strategies for mitigation. An ideal loss function should not only accurately reflect prediction errors but also maintain numerical stability throughout the training process. In early-stage image recognition tasks, conventional Softmax cross-entropy loss (L_CE) was typically employed for network training, with its mathematical formulation expressed as:
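For reference, the standard softmax cross-entropy loss that matches the symbol definitions given for this equation can be written as below. The per-class bias subscripts ($b_{y_i}$, $b_j$) are an assumption of this sketch; the text only names a bias term $b$.

```latex
L_{CE} = -\frac{1}{m}\sum_{i=1}^{m}
\log\frac{e^{W_{y_i}^{T}x_i + b_{y_i}}}{\sum_{j=1}^{n} e^{W_j^{T}x_i + b_j}}
```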

In this equation,

$m$ represents the training batch size;

$e$ is the base of the exponential function, which maps each class score to a positive value before normalization;

$W_{y_i}$ represents the column of the weight matrix $W$ that corresponds to the ground-truth class $y_i$ of the $i$-th sample;

$x_i$ represents the feature vector of the $i$-th sample image;

$n$ represents the number of sample categories;

$W_j$ represents the $j$-th column of the weight matrix $W$; and $b$ is the bias term.

In later improvements to the loss function, the inner product of $W_j$ and $x_i$ was rewritten in terms of the cosine term $\cos\theta_j$, as shown in the following formulation:
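Using the identity $W_j^{T}x_i = \|W_j\|\,\|x_i\|\cos\theta_j$, the cosine form of the loss can be written as follows. This is a sketch under the usual conventions, with $\theta_j$ taken as the angle between $W_j$ and the feature vector $x_i$ of the current sample, and with the bias terms carried over unchanged from the softmax cross-entropy loss.

```latex
L = -\frac{1}{m}\sum_{i=1}^{m}
\log\frac{e^{\|W_{y_i}\|\,\|x_i\|\cos\theta_{y_i} + b_{y_i}}}
         {\sum_{j=1}^{n} e^{\|W_j\|\,\|x_i\|\cos\theta_j + b_j}}
```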

In this equation,

$\cos\theta_j$ represents the cosine of the angle between the $j$-th column vector of the weight matrix ($W_j$) and the feature vector of the $i$-th sample ($x_i$).

To facilitate training, we normalize ||Wj|| to unit length (i.e., ||Wj|| = 1) and introduce an angular margin multiplier (denoted as m; not to be confused with the batch size m defined above) that further increases inter-class separation by penalizing the angles between samples and their corresponding class centers.
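One common way to write a loss with a multiplicative angular margin is the A-Softmax form shown below. It is given here only as an illustration of the description above, under several assumptions: the bias terms are set to zero, the margin multiplier is written $\mu$ purely to keep it distinct from the batch size $m$, and $\theta_{y_i}$ is restricted to $[0, \pi/\mu]$ (practical implementations replace $\cos(\mu\theta)$ with a monotonic extension outside this range).

```latex
L_{ang} = -\frac{1}{m}\sum_{i=1}^{m}
\log\frac{e^{\|x_i\|\cos(\mu\,\theta_{y_i})}}
         {e^{\|x_i\|\cos(\mu\,\theta_{y_i})} + \sum_{j \neq y_i} e^{\|x_i\|\cos\theta_j}}
```

With $\mu = 1$ this reduces to the cosine form of the softmax loss with unit-norm weights and no bias; larger $\mu$ requires the angle to the correct class center to be smaller before the sample is classified with the same confidence.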

3. Experimental Results and Analysis

3.1. Dataset

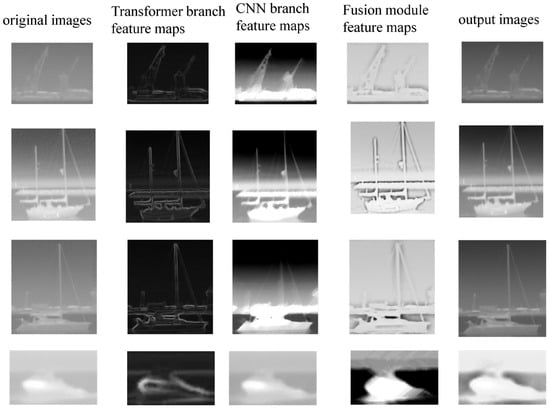

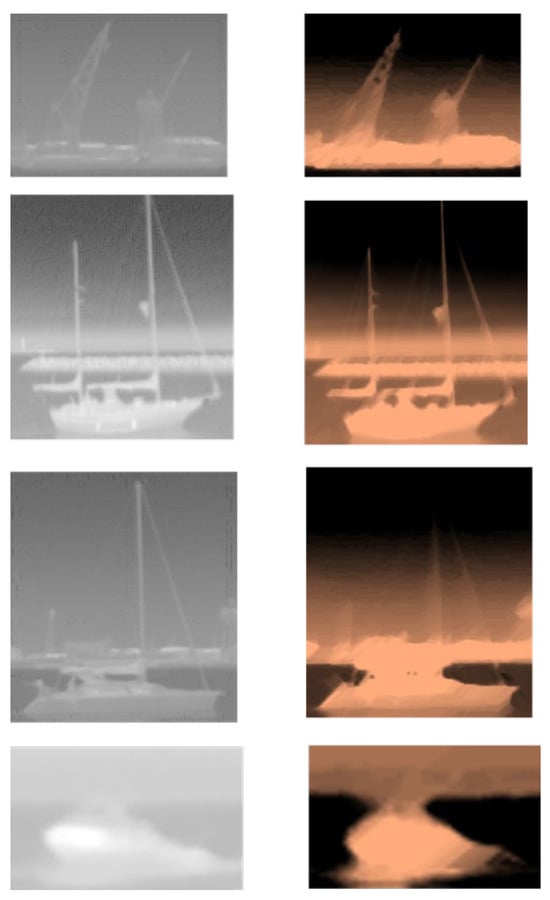

This study employs the publicly available VAIS dataset as the primary experimental data; some of the original images are shown in Figure 10. The dataset is partitioned into training, validation, and test sets at an 8:1:1 ratio. To facilitate batch training, the training images are cropped into 480 × 480 sub-images with a stride of 240 pixels in each direction, ultimately yielding 1623 training images. To further validate generalization, the comparative experiments include three additional datasets: GoPro, Open Images V4, and Mini-ImageNet. A widely adopted bicubic degradation model is used to generate paired training and test samples. Figure 11 displays the feature images output by the dual-branch network, the fused feature images from the fusion module, and the output images used for recognition. Figure 12 displays the attention weight heatmaps, which illustrate the model’s focus areas.

Figure 10.

Partial samples from the original VAIS dataset.

Figure 11.

Processed blurred image demonstration.

Figure 12.

Attention weight heatmaps.
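The 8:1:1 partition and the 480 × 480 cropping with a stride of 240 described in Section 3.1 can be illustrated with a short sketch. The function names are hypothetical, and the image size used in the usage note is an arbitrary example, not a property of the VAIS dataset.

```python
def crop_origins(height, width, patch=480, stride=240):
    """Top-left corners of every patch x patch crop that fits inside the image."""
    ys = range(0, height - patch + 1, stride)
    xs = range(0, width - patch + 1, stride)
    return [(y, x) for y in ys for x in xs]


def split_counts(n_images, ratios=(8, 1, 1)):
    """Images per subset for a train/validation/test split at the given ratio."""
    total = sum(ratios)
    n_train = n_images * ratios[0] // total
    n_val = n_images * ratios[1] // total
    # Whatever remains after integer division goes to the test set.
    return n_train, n_val, n_images - n_train - n_val
```

For a hypothetical 960 × 1200 image, `crop_origins(960, 1200)` yields 3 × 4 = 12 overlapping crops, and an image smaller than 480 pixels in either dimension yields none; adjacent crops overlap by half a patch because the stride is half the patch size.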

3.2. Experimental Details Description

This study employs a symmetrical dual-branch network architecture. The Transformer branch automatically adjusts window sizes based on image blur levels and implements a four-stage feature compression strategy with a dynamically controlled maximum pruning rate of 40%. The training strategy uses a data augmentation pipeline of geometric transformations, including random rotations by multiples of 90° and horizontal flipping. During training, each batch samples 16 low-resolution image patches of size 64 × 64 as input. Optimization uses the Adam algorithm with momentum parameters β1 = 0.9 and β2 = 0.999 and a numerical stability term ε = 1 × 10⁻⁸. Training consists of two phases. The first phase employs an L1 loss function for 200 epochs, with an initial learning rate of 5 × 10⁻⁵ that is halved every 50 epochs. The second phase switches to a composite L2 loss function for an additional 60 epochs, starting from a reduced learning rate of 1 × 10⁻⁶ that is halved every 20 epochs.
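The two-phase learning-rate schedule described above can be written as a single function of the global epoch index. This is a sketch under stated assumptions: epochs are counted from 0, the halving takes effect at the start of epochs 50, 100, and 150 in the first phase and at epochs 220 and 240 in the second, and the names `learning_rate` and `ADAM_SETTINGS` are illustrative.

```python
# Adam hyperparameters quoted in the text (beta1, beta2, epsilon).
ADAM_SETTINGS = {"betas": (0.9, 0.999), "eps": 1e-8}


def learning_rate(epoch):
    """Learning rate at a 0-based global epoch for the two-phase schedule."""
    if 0 <= epoch < 200:
        # Phase 1 (L1 loss): start at 5e-5, halve every 50 epochs.
        return 5e-5 * 0.5 ** (epoch // 50)
    if 200 <= epoch < 260:
        # Phase 2 (composite L2 loss): start at 1e-6, halve every 20 epochs.
        return 1e-6 * 0.5 ** ((epoch - 200) // 20)
    raise ValueError("the schedule covers epochs 0 to 259")
```

Under these assumptions the first phase ends at 6.25 × 10⁻⁶ and the second phase ends at 2.5 × 10⁻⁷, so the learning rate drops at the phase boundary rather than continuing the first-phase decay.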

3.3. Network Fusion Strategy and Effectiveness of DyT Module

As shown in Table 1, this study evaluated six model configurations (denoted as V1–V6) under both single-branch and dual-branch architectures on the VAIS dataset to validate the proposed feature fusion strategy and denormalization method. In the single-branch comparison, the Transformer-based V2 model achieved 82.45% recognition accuracy, outperforming the CNN-based V1 model (80.26%) by 2.19 percentage points. This difference indicates that the Transformer architecture extracts stronger features for ship recognition, consistent with its advantage in modeling long-range dependencies. The denormalized variants V3 (CNN branch) and V4 (Transformer branch) improved recognition accuracy by 4.89 and 2.91 percentage points over their baseline models V1 and V2, respectively, indicating that removing batch normalization operations facilitates learning more discriminative feature representations. Most notably, the dual-branch fusion models (V5 and V6) achieved further gains through the proposed fusion module, which combines the CNN’s strength in local feature extraction with the Transformer’s global relationship modeling. Their recognition accuracies reached 89.26% and 90.28%, improvements of 3.90 and 4.92 percentage points over the best single-branch model V4. These comparative experiments confirm that the CNN and Transformer branches carry complementary visual information and that the proposed fusion strategy integrates the strengths of both architectures for blurred ship recognition.

Table 1.

Accuracy comparison between single-branch and dual-branch under different scenarios.
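The DyT operation evaluated in this section replaces a normalization layer with an element-wise squashing function. A minimal sketch follows, assuming the commonly used form DyT(x) = γ · tanh(αx) + β; the scalar γ and β and the default values are simplifications chosen for the example, since the paper itself only states that α is learnable.

```python
import math


def dyt(xs, alpha=0.5, gamma=1.0, beta=0.0):
    """Dynamic Tanh applied element-wise: gamma * tanh(alpha * x) + beta.

    alpha, gamma, and beta would be learned during training; here they are
    fixed illustrative constants.
    """
    return [gamma * math.tanh(alpha * x) + beta for x in xs]
```

Because tanh saturates, an extreme activation such as 100.0 is mapped to a value of magnitude at most γ, while small activations pass through almost linearly with slope α. This bounded response is one way to read the outlier suppression attributed to the DyT module in the abstract.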

3.4. Experimental Analysis of Transformer Branches

In visual tasks, the quality of PE directly affects the model’s ability to capture spatial relationships. Traditional absolute PE, which directly encodes pixel coordinates, suffers from significant distortion of positional information when image blur occurs, leading to degraded localization accuracy. The proposed blur-invariant PE addresses this problem. As shown in Table 2, on the standard test set, when the blur level increases from σ = 0.5 (mild blur) to σ = 2.0 (severe blur), the positional error of conventional PE rises from 12.3 pixels to 53.1 pixels, whereas the error of the proposed blur-invariant PE stays between 4.2 and 15.8 pixels. This advantage stems from three mechanisms: (1) a multi-scale feature fusion approach that captures spatial structural information under different blur conditions, (2) a dynamic weight adjustment module that adaptively balances local and global positional relationships, and (3) a blur-level-aware normalization strategy that ensures robustness to varying degrees of degradation. Under moderate blur (σ = 1.5), blur-invariant PE achieves positional errors comparable to those of conventional PE under mild blur (σ = 0.5), which is valuable for precise localization in blurred images and for real-world applications such as autonomous driving and remote sensing monitoring.

Table 2.

Comparison of blur-invariant PE error and traditional PE error under varying degrees of blur.

The proposed dynamic token-pruning strategy targets model compression and acceleration, particularly for resource-constrained scenarios such as edge computing. As shown in Table 3, on the VAIS dataset this approach reduces the model’s computational cost (FLOPs) by 42% while incurring only a 0.7% decrease in top-1 accuracy, achieving near-lossless compression. In contrast, conventional hard pruning reduces FLOPs by 35% but causes a larger accuracy drop of 2.1% and is less stable across diverse input samples. The superior performance stems from three design elements: (1) an attention-weight-based dynamic evaluation module that identifies and preserves semantically critical tokens in real time, (2) a progressive pruning strategy that retains important features through multi-stage fine-grained filtering, and (3) an adaptive compensation mechanism that balances computational resource allocation with feature preservation. The combination of a 42% FLOPs reduction with an accuracy loss below 1% makes the method well suited to edge applications such as drone visual navigation and real-time mobile image analysis, where the high computational complexity and energy consumption of Transformer models are the main obstacles to deployment.

Table 3.

Comparison of the dynamic token-pruning strategy with traditional hard pruning.
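The progressive, attention-based pruning described above can be illustrated with a simplified sketch. It assumes that each token carries a single importance score (for example, its mean attention weight), that scores are not recomputed between stages, and that the 40% maximum pruning rate from Section 3.2 is spread evenly over four stages; the function names and these choices are illustrative and not taken from the paper.

```python
def prune_tokens(scores, keep_ratio):
    """Indices of the highest-scoring tokens, returned in their original order."""
    n_keep = max(1, round(len(scores) * keep_ratio))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:n_keep])


def progressive_prune(scores, stages=4, max_prune=0.40):
    """Prune in several stages so that about (1 - max_prune) of the tokens survive."""
    # Equal per-stage keep ratio whose product over all stages is 1 - max_prune.
    keep_per_stage = (1.0 - max_prune) ** (1.0 / stages)
    alive = list(range(len(scores)))
    history = [len(alive)]
    for _ in range(stages):
        kept = prune_tokens([scores[i] for i in alive], keep_per_stage)
        alive = [alive[k] for k in kept]
        history.append(len(alive))
    return alive, history
```

With 100 tokens the token count under these assumptions falls from 100 to 88, 77, 68, and finally 60, so no single stage removes more than about 12% of the tokens. Removing tokens gradually, rather than in one hard cut, is the property the text credits for the smaller accuracy drop.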

The proposed HST model is both lighter and more accurate than the mainstream models it is compared against. As shown in Table 4, on the VAIS dataset HST requires only 64M parameters (25% fewer than ViT-Base and 18% fewer than Swin-T) yet achieves 86.7% top-1 accuracy, which is 4.4 and 2.6 percentage points higher than ViT-Base (82.3%) and Swin-T (84.1%), respectively. Regarding inference efficiency, HST has a measured latency of 21 ms per frame, a reduction of 34% and 25% compared to ViT-Base (32 ms) and Swin-T (28 ms), thereby meeting real-time processing requirements.

Table 4.

The HST model is compared with the classic ViT-Base and Swin-T models.

These gains stem from three elements of HST’s architectural design: (1) the blur-adaptive dynamic window partitioning module automatically adjusts attention computation ranges based on local sharpness, reducing computational complexity while preserving feature extraction capability; (2) the LS-SA (Local-Sensitive Self-Attention) mechanism avoids treating all regions uniformly, as traditional attention does, by modulating the query-key-value projections through sharpness coefficients (σi), granting stronger attention to areas rich in high-frequency detail while sparsifying uniformly blurred regions; and (3) the multi-scale feature interaction system employs a three-level pyramid structure to process visual features at different granularities, with cross-level fusion achieved through depthwise separable convolutions. These properties make HST well suited to resource-constrained scenarios such as drone navigation and mobile AR applications.
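To make the idea of sharpness-modulated attention concrete, the sketch below biases ordinary scaled dot-product attention toward sharper tokens. It is a simplified stand-in and not the LS-SA mechanism itself: the paper describes modulating the query-key-value projections, whereas this example only shifts each key's logit by the logarithm of an assumed per-token sharpness coefficient. The function name and the form of the modulation are hypothetical.

```python
import math


def sharpness_weighted_attention(query, keys, sharpness):
    """Attention weights of one query over the keys in a window.

    Each key's logit is shifted by log(sharpness), so a token with sharpness s
    receives s times the unnormalized weight it would otherwise get.
    All sharpness values must be positive.
    """
    d = len(query)
    logits = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d) + math.log(s)
        for key, s in zip(keys, sharpness)
    ]
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]
```

For two identical keys with sharpness coefficients 1.0 and 3.0, the weights come out as 0.25 and 0.75: the sharper token dominates even though the content similarity is the same. Driving the coefficient of a uniformly blurred token toward zero would push its weight toward zero, which loosely corresponds to the sparsification mentioned above.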

3.5. Experimental Analysis of CNN Branches

As shown in Table 5, integrating the gradient content gating module yields a 1.8 dB improvement in PSNR and a 0.014 gain in SSIM, confirming the importance of global contextual information for image reconstruction quality. This enhancement comes from gradient content gating’s lightweight non-local attention mechanism and adds only 0.5 ms to the inference time. The multi-scale fusion strategy further improves detail restoration by aggregating features across scales, leading to an additional 2.5 dB PSNR increase and raising SSIM to 0.934. Although the extra convolutional operations in this multi-scale framework introduce a 1.8 ms inference delay, they substantially improve texture detail recovery. For real-time applications, the proposed lightweight design reduces inference time by 21%. While its PSNR is slightly lower than that of the multi-scale fusion approach, it still exceeds the baseline by 2.2 dB, a balanced trade-off between performance and efficiency.

Table 5.

Comparative experiments of CNN branch components.
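Since the comparisons in this section are reported in decibels, it may help to recall how PSNR is computed. The sketch below uses the standard definition, 10 · log10(MAX² / MSE); the flat-list image representation and the 8-bit default for `max_value` are assumptions made to keep the example self-contained.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images.

    Both images are given as flat sequences of pixel intensities.
    """
    mse = sum((a - b) ** 2 for a, b in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)
```

Because the scale is logarithmic, a gain of 1.8 dB corresponds to multiplying the mean squared error by 10^(−0.18) ≈ 0.66, that is, roughly a one-third reduction in reconstruction error.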

Overall, the gradient content gating + multi-scale fusion combination is the best choice for scenarios demanding the highest reconstruction quality, though it sacrifices approximately 15% in processing speed. For real-time processing, the gradient content gating + lightweight design retains most of the quality gain while running faster than the baseline. This flexibility allows the configuration to be chosen according to the quality and latency requirements of each application, which provides a useful reference for practical deployment.

Experimental results validate the performance of the proposed MSG-ARB. Quantitative evaluations on the VAIS test set show that MSG-ARB achieves a PSNR of 31.7 dB, outperforming mainstream methods such as SRN (29.1 dB) and DeblurGANv2 (30.3 dB) by 2.6 dB and 1.4 dB, respectively. These improvements indicate enhanced edge detail clarity and reduced noise levels in the reconstructed images. MSG-ARB is also efficient. As shown in Table 6, it requires only 4.2M parameters (61% of SRN and 7% of DeblurGANv2) while keeping the computational cost at 32.6G FLOPs (28% and 74% reductions compared to SRN and DeblurGANv2, respectively). This advantage stems from three elements of MSG-ARB’s architectural design: (1) the learnable gradient convolution embeds conventional Sobel operators into convolutional layers and optimizes the gradient extraction kernels during training, adapting to various blur types while mitigating the limitations of handcrafted gradient operators (e.g., fixed directional sensitivity); (2) the gradient content gating mechanism enhances attention to blur-sensitive regions through adaptive fusion of gradient features with the original content features; and (3) to avoid the high computational cost typically associated with complex multi-scale modules, the hybrid architecture combines dilated convolution with grouped convolution to capture multi-scale blur features at low computational overhead. Together, these elements offer a practical route to blurred-image recognition on mobile devices, balancing model performance and computational efficiency.

Table 6.

Comparative Analysis of CNN Branches and Mainstream Approaches.
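The first two design elements of MSG-ARB, a gradient convolution initialized from Sobel operators and a gate driven by the resulting gradient features, can be sketched as follows. This is an illustration under stated assumptions: the kernels are passed as ordinary arguments (in training they would be updated like any other convolution weights), the gate is a sigmoid of the gradient magnitude, and the gated output is a simple element-wise product. None of these specifics are confirmed by the paper.

```python
import math

# Standard Sobel kernels, used here as the initial values of the gradient kernels.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def conv3x3(img, kernel):
    """Same-size 3 x 3 cross-correlation with zero padding."""
    H, W = len(img), len(img[0])
    out = [[0.0] * W for _ in range(H)]
    for i in range(H):
        for j in range(W):
            acc = 0.0
            for di in range(3):
                for dj in range(3):
                    y, x = i + di - 1, j + dj - 1
                    if 0 <= y < H and 0 <= x < W:
                        acc += kernel[di][dj] * img[y][x]
            out[i][j] = acc
    return out


def gradient_gate(img, kx=SOBEL_X, ky=SOBEL_Y):
    """Gate the content by gradient magnitude: out = img * sigmoid(|grad|)."""
    gx, gy = conv3x3(img, kx), conv3x3(img, ky)
    gate = [[1.0 / (1.0 + math.exp(-math.hypot(a, b))) for a, b in zip(ra, rb)]
            for ra, rb in zip(gx, gy)]
    gated = [[p * g for p, g in zip(rp, rg)] for rp, rg in zip(img, gate)]
    return gated, gate
```

On a flat region the gradient is zero and the gate sits at 0.5, whereas next to an edge the gate approaches 1, so edge-bearing pixels are emphasized relative to uniform ones. Replacing `kx` and `ky` with trained kernels would remove the fixed directional sensitivity of the Sobel operators that the text identifies as a limitation.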

3.6. Comparative Analysis of Fusion Module Variants

This study combines the strength of CNNs in extracting local features with the ability of Transformers to model long-range dependencies in order to recognize blurred images; the results are shown in Table 7. Scheme 1 and Scheme 2 present the performance of the baseline models, ResNet-50 and HST (Hierarchical Swin Transformer), respectively. ResNet-50 achieves an accuracy of 85.15%, while HST attains 85.36%. Schemes 3–9 illustrate the impact of various dual-branch architecture variants on model performance. Scheme 3, the basic dual-branch model, achieves an accuracy of 87.64%, an improvement of 2.49 and 2.28 percentage points over the two baseline models, respectively. Subsequent integration of the feature fusion module and dynamic gating mechanism (Schemes 4 and 5) yields marginal performance gains. However, incorporating an attention mechanism (Scheme 6) raises accuracy to 89.46%, which is 1.82 percentage points above the basic dual-branch model (Scheme 3). In Scheme 7, integrating the multi-scale feature fusion module with the CBAM (Convolutional Block Attention Module) elevates the accuracy to 90.04%. Scheme 8, which combines the dynamic gating module with the CBAM, further improves accuracy to 90.24%. Finally, Scheme 9 integrates the multi-scale feature fusion module, dynamic gating module, and the CBAM, achieving the highest accuracy of 90.56%.

Table 7.

Comparative Recognition Performance Across Model Architectures.
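The dynamic gating compared in these schemes can be pictured as a learned blend between the two branches. The sketch below is a minimal illustration, assuming the CNN and Transformer features have already been aligned to the same length and that a single scalar gate is computed from both; the weight vectors `w_cnn` and `w_trans` and the function name are hypothetical.

```python
import math


def gated_fusion(f_cnn, f_trans, w_cnn, w_trans, bias=0.0):
    """Blend two aligned feature vectors with a scalar gate g in (0, 1).

    fused = g * f_cnn + (1 - g) * f_trans, where g is a sigmoid of a linear
    score computed from both inputs.
    """
    score = (sum(w * f for w, f in zip(w_cnn, f_cnn))
             + sum(w * f for w, f in zip(w_trans, f_trans))
             + bias)
    g = 1.0 / (1.0 + math.exp(-score))
    fused = [g * a + (1.0 - g) * b for a, b in zip(f_cnn, f_trans)]
    return fused, g
```

With all gate weights at zero the gate equals 0.5 and the output is a plain average of the two branches; as the score grows, the output leans toward the CNN features, and as it falls, toward the Transformer features. A per-channel or per-pixel gate would follow the same pattern with a vector or map of gate values instead of one scalar.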

3.7. Model Performance and Generalization Capability Experiments

This section compares the proposed CNN-DyT Transformer with a series of existing algorithms on the VAIS dataset, GoPro dataset, Open Images V4 dataset, and the Mini-ImageNet dataset. The classification results are presented in Table 8. The proposed method achieves an accuracy of 90.60% on the VAIS dataset, 79.34% on the GoPro dataset, 94.44% on the Open Images V4 dataset, and 96.82% on the Mini-ImageNet dataset. The results demonstrate that the proposed blurred image recognition method exhibits superior performance, outperforming state-of-the-art approaches such as Fine-tuning CNN+SVM and Gabor+MS-CLBP+SVM, while maintaining robust accuracy across diverse datasets.

Table 8.

Comparative Recognition Performance Across Methods and Datasets.

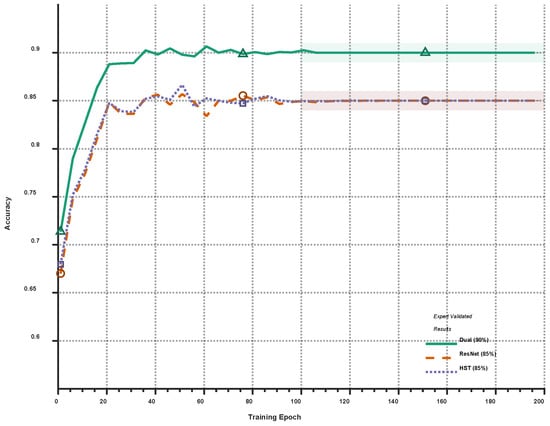

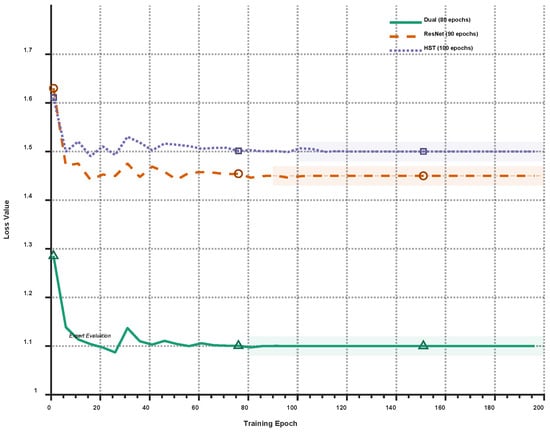

Accuracy and loss values were adopted as the evaluation metrics for model performance. Three distinct models were trained on identical datasets, with the training results illustrated in Figure 13 and Figure 14.

Figure 13.

Performance Comparison of Training Results.

Figure 14.

Loss Comparison Chart.

The training curves show that the dual-branch architecture has a clear advantage in the comparative study of model performance. The dual-branch model maintained the highest accuracy throughout the entire training cycle. When training reached 100 epochs, its accuracy surpassed 90%, while the single-branch CNN and Transformer models achieved only approximately 85% at the same point. All three models entered a stable convergence phase after 100 epochs, characterized by significantly reduced accuracy fluctuations, suggesting that parameter optimization was essentially complete. In terms of performance improvement, the dual-branch architecture achieved an average accuracy gain of five percentage points over the conventional CNN and Transformer models. This advantage likely stems from the architecture’s ability to capture both local features and global dependencies simultaneously, yielding a more comprehensive feature representation.

The comparative analysis of experimental data demonstrates that the dual-branch architecture exhibits significant optimization efficiency and performance advantages during model training. The loss curves clearly reveal superior optimization characteristics of the dual-branch approach. Throughout the training process, the dual-branch model consistently maintained the lowest loss values. Upon completion of training, the final loss value of the dual-branch architecture was approximately 27% lower than that of the single-branch CNN and about 36% lower than the Transformer model. This enhanced performance may be attributed to the dual-branch architecture’s parallel processing of multi-scale feature information, which enables more effective gradient propagation and parameter-updating mechanisms. The consistent superiority in loss values directly correlates with the higher accuracy demonstrated by the dual-branch model in practical prediction tasks, validating its comprehensive advantages in feature learning and pattern recognition. Notably, this performance gap persists across datasets of varying scales, indicating the architecture’s robust generalization capability and stability.

3.8. Module Level Cascade Ablation Experiment

Table 9 presents the cascade analysis of four key modules. The results indicate that the model’s recognition accuracy progressively improves as more modules are cascaded. Notably, when retaining a single module, the performance gain does not exceed 3%, whereas the combination achieves an 8% improvement. This verifies the effectiveness of the collaborative design principle proposed in this paper.

Table 9.

Cascade analysis of key modules on the VAIS dataset.

3.9. Model Time Efficiency Comparison Experiment

As shown in Table 10, our model delivers optimal performance across GPU platforms, achieving a 1.72× cross-hardware speedup that highlights the effective utilization of A100 Tensor Core capabilities within our architecture. With peak memory usage of only 5.3 GB (35–55% lower than competing models), our solution demonstrates exceptional edge deployment potential, enabling higher-resolution inputs or concurrent multitasking capabilities. The sustained high frame rates confirm the computational efficiency breakthrough of our dual-branch architecture, particularly for real-time video processing applications.

Table 10.

Model time efficiency comparison.

4. Conclusions

This study presents a dual-branch recognition model for marine blurred targets, which synergistically combines CNN and Transformer architectures. The CNN branch adopts ResNet-50 as its backbone, while the Transformer branch utilizes the HST structure. A novel feature fusion module comprising a feature alignment unit, multi-scale fusion unit, and dynamic gate unit is introduced to effectively combine the extracted features from both branches, thereby obtaining comprehensive local-global representations for marine blurred target classification. Experimental results demonstrate that the proposed model successfully addresses the limitations of CNNs in receptive field constraints and Transformer networks in local feature perception. Comprehensive evaluations on the VAIS dataset verify the effectiveness of each module, while comparative experiments with multiple existing methods confirm the superior performance of the proposed approach. Cross-dataset validation further confirms the model’s robust generalization capabilities across diverse marine environments.

For future work, we will further integrate blurred features and marine targets to construct a more robust multi-modal recognition model and explore its application in broader scenarios. Additionally, efforts will be made to develop a lighter integrated model that maintains a high performance while reducing computational resource requirements.

Author Contributions

Conceptualization, T.H., C.P. and J.L.; methodology, T.H.; software, T.H.; validation, T.H.; formal analysis, T.H.; investigation, T.H. and J.L.; resources, T.H. and J.L.; data curation, T.H.; writing—original draft preparation, T.H. and J.L.; writing—review and editing, T.H., C.P. and J.L.; visualization, T.H.; supervision, T.H., C.P. and J.L.; project administration, T.H.; funding acquisition, T.H., C.P., J.L. and Z.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of Hubei Province under Grant Number 2021CFB310.

Data Availability Statement

The data will be available from the corresponding authors upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| CAM | Channel Attention Module |
| CBAM | Convolutional Block Attention Module |
| DyT | Dynamic Tanh |
| HST | Hierarchical Swin Transformer |
| LS-SA | Local-Sensitive Self-Attention |
| MSG-ARB | Multi-Scale Gradient-Aware Residual Block |
| PE | Positional Encoding |
| SAM | Spatial Attention Module |
| ViT | Vision Transformer |

References

- Sandifer, P.A.; Brooks, B.W.; Canonico, G.; Chassignet, E.P.; Kirkpatrick, B.; Porter, D.E.; Schwacke, L.H.; Scott, G.I.; Kelsey, R.H. Observing and monitoring the ocean. In Oceans and Human Health; Elsevier: Amsterdam, The Netherlands, 2023; pp. 549–596.

- Li, S.; Li, C.; Wang, Z.; Jia, Z.; Zhu, J.; Cui, X.; Liu, J. Pas: A scale-invariant approach to maritime search and rescue object detection using preprocessing and attention scaling. Intell. Serv. Robot. 2024, 17, 465–477.

- Ye, J.; Li, C.; Wen, W.; Zhou, R.; Reppa, V. Deep Learning in Maritime Autonomous Surface Ships: Current Development and Challenges. J. Mar. Sci. Appl. 2023, 22, 584–601.

- Meng, X.; Sun, J.; Sun, Z.; Wei, P.; Xu, Z. Influence of undulating sea surface on the result of reflection seismic imaging. Chin. J. Geophys. 2019, 62, 3155–3163.

- Fazlali, H.; Shirani, S.; Bradford, M.; Kirubarajan, T. Atmospheric Turbulence Removal in Long-Range Imaging Using a Data-Driven-Based Approach. Int. J. Comput. Vis. 2022, 130, 1031–1049.

- Colavita, M.M.; Swain, M.R.; Akeson, R.L.; Koresko, C.D.; Hill, R.J. Effects of Atmospheric Water Vapor on Infrared Interferometry. Publ. Astron. Soc. Pac. 2004, 116, 876–885.

- Ma, Z.; Wen, J.; Liang, X. Video image defogging algorithm research under sea fog. Appl. Res. Computers 2014, 31, 2836.

- Chang, L.; Chen, Y.-T.; Wang, J.-H.; Chang, Y.-L. Modified Yolov3 for Ship Detection with Visible and Infrared Images. Electronics 2022, 11, 739.

- Li, Z. The Application of Artificial Intelligence and Machine Learning in Face Recognition Technology. Appl. Comput. Eng. 2024, 115, 69–74.

- Yang, B.; Li, J.; Zeng, T. A Review of Environmental Perception Technology Based on Multi-Sensor Information Fusion in Autonomous Driving. World Electr. Veh. J. 2025, 16, 20.

- Bisogni, C.; Castiglione, A.; Hossain, S.; Narducci, F.; Umer, S. Impact of Deep Learning Approaches on Facial Expression Recognition in Healthcare Industries. IEEE Trans. Ind. Inform. 2022, 18, 5619–5627.

- Blur, M. Field Guide to Image Processing; SPIE: Bellingham, WA, USA; pp. 40–41.

- Lin, J.; Zeng, X.; Pan, Y.; Ren, S.; Bao, Y. Intelligent Inspection Guidance of Urethral Endoscopy Based on SLAM with Blood Vessel Attentional Features. Cogn. Comput. 2024, 16, 1161–1175.

- Li, Y.; Luo, Y.; Zheng, Y.; Liu, G.; Gong, J. Research on Target Image Classification in Low-Light Night Vision. Entropy 2024, 26, 882.

- Xu, Q.; Yuan, K.; Ye, D. Respiratory motion blur identification and reduction in ungated thoracic PET imaging. Phys. Med. Biol. 2011, 56, 4481–4498.

- Zhang, Z.; Zhang, L.; Meng, D.; Huang, L.; Xiao, W.; Tian, W. Vehicle Kinematics-Based Image Augmentation against Motion Blur for Object Detectors. In SAE Technical Paper Series; WCX SAE World Congress Experience: Detroit, MI, USA, 2023.

- Alhijaj, J.A.; Khudeyer, R.S. Techniques and Applications for Deep Learning: A Review. J. Al-Qadisiyah Comput. Sci. Math. 2023, 15, 114–126.

- Sainath, T.N.; Vinyals, O.; Senior, A.; Sak, H. Convolutional, Long Short-Term Memory, fully connected Deep Neural Networks. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, Australia, 19–24 April 2015.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Ji, W.; Chen, X.; Li, Y. Blind motion deblurring using improved DeblurGAN. IET Image Process. 2023, 18, 327–347.

- Zhuohang, S. Dynamic convolution-based image dehazing network. Multimed. Tools Appl. 2023, 83, 49039–49056.

- Yan, H.; Lu, H.; Ye, M.; Yan, K.; Jin, Q.; Xu, Y. Lung Nodule Segmentation Combining Sobel Operator and Mask R-CNN. J. Chin. Comput. Systems 2020, 41, 161–165.

- Jang, J.-G.; Quan, C.; Lee, H.D.; Kang, U. Falcon: Lightweight and accurate convolution based on depthwise separable convolution. Knowl. Inf. Syst. 2023, 65, 2225–2249.

- Kamath, U.; Graham, K.L.; Emara, W. Transformers for Machine Learning; Chapman and Hall/CRC: Boca Raton, FL, USA, 2022.

- Islam, S.; Elmekki, H.; Elsebai, A.; Bentahar, J.; Drawel, N.; Rjoub, G.; Pedrycz, W. A comprehensive survey on applications of transformers for deep learning tasks. Expert Syst. Appl. 2024, 241, 122666.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows (Version 2). arXiv 2021, arXiv:2103.14030.

- Systems and Methods for Multi-Scale Pre-Training with Densely Connected Transformer. U.S. Patent 11,941,356, 26 March 2024.

- Li, G.; Fang, Q.; Zha, L.; Gao, X.; Zheng, N. HAM: Hybrid attention module in deep convolutional neural networks for image classification. Pattern Recognit. 2022, 129, 108785.

- Lee, M.; Hyun, S.; Jun, W.; Kim, H.; Chung, J.; Heo, J.-P. Analyzing the Training Dynamics of Image Restoration Transformers: A Revisit to Layer Normalization (Version 2). arXiv 2025, arXiv:2504.06629.

- Yang, J.; Li, C.; Zhang, P.; Dai, X.; Xiao, B.; Yuan, L.; Gao, J. Focal Self-attention for Local-Global Interactions in Vision Transformers (Version 1). arXiv 2021, arXiv:2107.00641.

- Xu, J.; Sun, X.; Zhang, Z.; Zhao, G.; Lin, J. Understanding and Improving Layer Normalization (Version 1). arXiv 2019, arXiv:1911.07013.

- Cao, K.; Gao, J.; Choi, K.; Duan, L. Learning a Hierarchical Global Attention for Image Classification. Future Internet 2020, 12, 178.

- Zhang, H.; Rao, Y.; Shao, J.; Meng, F.; Pu, J. Reading Various Types of Pointer Meters Under Extreme Motion Blur. IEEE Trans. Instrum. Meas. 2023, 72, 1–15.

- He, F.; Song, H.; Li, G.; Zhang, J. DCF-Net: A Dual-Coding Fusion Network based on CNN and Transformer for Biomedical Image Segmentation. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Yokohama, Japan, 30 June 2024–5 July 2024; pp. 1–9.

- Yan, X.; Ding, S.; Zhou, W.; Shi, W.; Tian, H. Unsupervised Domain Adaptive Person Re-Identification Method Based on Transformer. Electronics 2022, 11, 3082.

- Fu, B.; Peng, Y.; He, J.; Tian, C.; Sun, X.; Wang, R. HmsU-Net: A hybrid multi-scale U-net based on a CNN and transformer for medical image segmentation. Comput. Biol. Med. 2024, 170, 108013.

- Li, M.; Huang, H.; Huang, K. FCAnet: A novel feature fusion approach to EEG emotion recognition based on cross-attention networks. Neurocomputing 2025, 638, 130102.

- Rao, Y.; Liu, Z.; Zhao, W.; Zhou, J.; Lu, J. Dynamic Spatial Sparsification for Efficient Vision Transformers and Convolutional Neural Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 10883–10897.

- Tang, H.; Li, Z.; Zhang, D.; He, S.; Tang, J. Divide-and-Conquer: Confluent Triple-Flow Network for RGB-T Salient Object Detection (Version 1). arXiv 2024, arXiv:2412.01556.

- Zhu, J.; Qin, X.; Elsaddik, A. DC-Net: Divide-and-Conquer for Salient Object Detection (Version 3). arXiv 2023, arXiv:2024.110903.

- Shi, C.; Zhang, X.; Li, X.; Mumtaz, I.; Lv, J. Face deblurring based on regularized structure and enhanced texture information. Complex Intell. Syst. 2023, 10, 1769–1786.

- Kupyn, O.; Martyniuk, T.; Wu, J.; Wang, Z. DeblurGAN-v2: Deblurring (Orders-of-Magnitude) Faster and Better (Version 1). arXiv 2019, arXiv:1908.03826.

- Ebrahimi, M.S.; Abadi, H.K. Study of Residual Networks for Image Recognition. In Lecture Notes in Networks and Systems; Springer International Publishing: Cham, Switzerland, 2021; pp. 754–763.

- Song, H.; Lu, H. Research on Image Recognition Based on Different Depths of VGGNet. J. Image Process. Theory Appl. 2024, 7, 84–90.

- Feng, Z.J.; Chengwei, H.; Ji, Z. Modified Perona-Malik Equation and Computer Simulation for Image Denoising. Open Mech. Eng. J. 2014, 8, 37–41.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).