Abstract

Low-Earth-orbit (LEO) satellite networks are vulnerable to malicious jamming and co-channel interference, and the problem is compounded by dynamic topologies, resource constraints, and complex electromagnetic environments. Traditional anti-jamming approaches lack adaptability, centralized intelligent methods incur high overhead, and distributed intelligent methods fail to achieve global optimization. To address these limitations, this paper proposes a value decomposition network (VDN)-based multi-agent deep reinforcement learning (DRL) approach to anti-jamming spectrum access that uses a centralized training and distributed execution architecture. After offline centralized training on the ground, the model is deployed on each satellite for real-time spectrum-access decision-making. The simulation results show that the proposed method balances training cost against anti-jamming performance: it reaches approximately 97% of the user satisfaction of the fully centralized baseline with minimal link overhead, which supports its suitability for resource-constrained LEO satellite networks.

1. Introduction

Low-Earth-orbit (LEO) satellites offer significant advantages, including low-latency communication, high data rates, and wide geographical coverage [,]. These attributes make them a powerful supplement to terrestrial networks [,] and give them broad application prospects []. In recent years, the global deployment of LEO constellations (e.g., Starlink, OneWeb, and Kuiper) has accelerated in both pace and scale []. However, the inherent exposure of satellites and their reliance on open wireless channels make them susceptible to external malicious jamming and internal co-channel interference. Consequently, developing effective anti-jamming techniques is critical to ensuring communication security. Achieving this goal is challenging because LEO networks have dynamic topologies [], limited resources [], and complex electromagnetic conditions [].

Extensive research has been dedicated to anti-jamming technologies. Overall, the existing approaches can be divided into two categories: traditional anti-jamming technologies and intelligent anti-jamming technologies.

1.1. Traditional Anti-Jamming Techniques

Direct-sequence spread spectrum (DSSS) and frequency-hopping spread spectrum (FHSS) constitute the foundational techniques for anti-jamming communications []. The authors of [] proposed the Turbo iterative acquisition algorithm based on factor graph modeling of time-varying Doppler rate to address the challenges of DSSS signal acquisition in highly mobile satellite communications. Ref. [] proposed random differential DSSS based on the traditional DSSS technology, which can effectively alleviate reactive jamming attacks. FHSS uses pre-shared pseudorandom sequences to switch communication frequencies. Ref. [] proved that the performance of FHSS signals is superior to that of DSSS signals, and FHSS signals are suitable for application in military satellite communication. The authors of [] achieved a stable connection and good anti-jamming ability by applying adaptive frequency hopping technology. Ref. [] proposed dynamic spectrum-access technology (DSA) based on cognitive database control, and it achieved co-channel sharing between non-terrestrial networks and terrestrial networks. The authors of [] presented anti-jamming technologies in the time and transform domains and further investigated spatial-domain techniques, space–time processing, and adaptive beamforming based on array antennas. However, these methods typically rely on predefined rules or static configurations. Although effective in stable environments, their fixed strategies lack adaptability in high-mobility LEO satellite networks with dynamic uncertainties.

1.2. Intelligent Anti-Jamming Techniques

Intelligent anti-jamming techniques explore the environment through trial and error, which enables them to learn robust and adaptive anti-jamming decisions under highly dynamic and uncertain conditions []. Their adaptive learning capabilities, ability to handle complex high-dimensional state spaces, and distributed/collaborative learning paradigms overcome traditional bottlenecks and address anti-jamming challenges in satellite communications [,]. The authors of [] proposed a decentralized federated learning-assisted deep Q-network (DQN) algorithm to determine collaborative anti-jamming strategies for UAV pairs. In [], an energy-saving and anti-jamming communication framework for a UAV cluster based on multi-agent cooperation was constructed. Ref. [] introduced a deep reinforcement learning (DRL)-based dynamic spectrum-access method with accelerated convergence, in which soft labels replace conventional reward signals to reduce the number of iterations. Ref. [] proposed an intelligent dynamic-spectrum anti-jamming communication approach based on DRL that improves through trial and error to adapt to the dynamic nature of jamming. Ref. [] proposed a collaborative multi-agent layered Q-learning (MALQL)-based anti-jamming communication algorithm to reduce the high dimensionality of the action space. Ref. [] proposed a multi-agent proximal policy optimization (MAPPO) algorithm to optimize a collaborative strategy for multiple UAVs regarding transmission power and flight trajectory. The authors of [] designed a deep recurrent Q-network (DRQN) algorithm and an intelligent anti-jamming decision algorithm, which improved throughput and convergence performance. However, centralized intelligent anti-jamming architectures incur high computational overhead and complexity, while distributed intelligent anti-jamming architectures fail to converge to globally optimal solutions. Consequently, neither approach is suitable for resource-constrained LEO satellite networks.

To address the aforementioned issues, this paper proposes a value decomposition network (VDN)-based multi-agent DRL method that applies a centralized training and distributed execution architecture. The method optimizes a global objective during training and makes decisions from local observations during execution, which improves performance relative to independent distributed learning and reduces overhead relative to fully centralized operation. The main contributions of this paper are as follows:

- A VDN-based multi-agent DRL method is proposed to solve the anti-jamming spectrum-access problem. This method adopts an “offline centralized training–online distributed execution” architecture. After offline training on the ground, the model is deployed onto satellites to enable real-time anti-jamming spectrum-access decisions based on local observations.

- During training, the parameter-sharing mechanism is employed to reduce communication overhead significantly. During execution, the incremental update mechanism is employed to enhance model adaptability.

- The simulation results support the proposed method’s effectiveness. It balances performance and cost better than fully centralized training and independent distributed training.

The rest of the paper is organized as follows. The system model and problem construction are outlined in Section 2. Section 3 presents the proposed multi-agent deep reinforcement learning algorithm. Section 4 presents the simulation results and discussion. Section 5 concludes this paper. The main notations employed in the paper are shown in Table 1.

Table 1.

Main notations.

2. System Model and Problem Construction

2.1. System Model

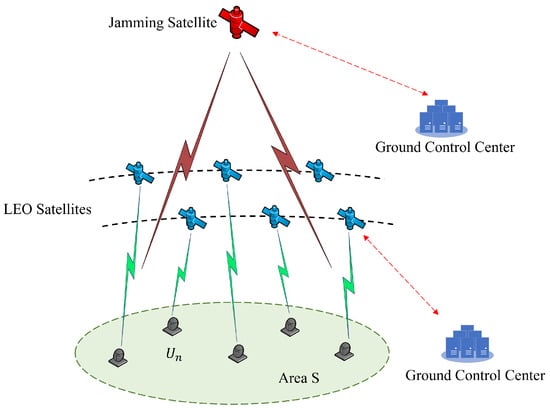

As shown in Figure 1, the scenario consists of a jamming satellite and an LEO constellation. Region S is the area jointly covered by the jamming satellite and the LEO satellites, and users are randomly distributed within region S. The jamming satellite applies randomized frequency suppression jamming to the satellite downlinks, with frequency variations that follow a Markov probability matrix. Jamming strategies are formulated by the ground control center and distributed to the jamming satellite. The LEO satellites are equipped with multibeam phased array antennas that can dynamically adjust the beam frequency to counter jamming so that user communication remains uninterrupted.

Figure 1.

Anti-jamming communication scenario.

The set of LEO satellites is denoted as ; the set of users is denoted as . Each satellite has B beams, and each beam serves one user. The set of available channels for each beam is denoted as , and each channel bandwidth is . The jamming channel set is , and the beam power of the jamming satellite and LEO satellites are denoted as and , respectively.

At each time slot, the jamming satellite switches channels according to the Markov probability matrix, and the LEO satellites decide the spectrum-access scheme for the downlinks based on real-time environment sensing and historical data analysis. This paper focuses on dynamic spectrum-access strategies for LEO satellite downlinks in malicious jamming environments, aiming to reduce interference and improve user satisfaction.
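The jammer behavior described above can be illustrated with a minimal sketch, assuming the jammed channel is a first-order Markov chain whose row-stochastic transition matrix is known to the simulator. The function names and the 3-channel matrix `P` are hypothetical and are not taken from the paper's simulation settings.

```python
import random

def next_jammed_channel(current, transition, rng):
    """Sample the jammer's next channel from row `current` of the Markov matrix."""
    row = transition[current]
    return rng.choices(range(len(row)), weights=row, k=1)[0]

def simulate_jammer(transition, start, num_slots, seed=0):
    """Return the jammed channel index for each of `num_slots` time slots."""
    rng = random.Random(seed)
    channel = start
    trace = [channel]
    for _ in range(num_slots - 1):
        channel = next_jammed_channel(channel, transition, rng)
        trace.append(channel)
    return trace

# Hypothetical 3-channel transition matrix; each row sums to 1.
P = [
    [0.1, 0.6, 0.3],
    [0.3, 0.1, 0.6],
    [0.6, 0.3, 0.1],
]
trace = simulate_jammer(P, start=0, num_slots=10)
print(trace)
```

A matrix with a single 1 in each row turns the same code into a deterministic sweep jammer, which is one way to reuse the sketch for the sweep-jamming experiment.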

2.2. Propagation Model

According to ITU-R S.672 [], the gain of jamming satellite transmitting antenna is

where is the maximum gain of jamming satellite transmitting antenna, and is the off-axis angle of the center of the jamming beam in the direction. is half the 3 dB beamwidth , and denotes the value obtained when the fourth line of Equation (1) is equal to 0.

According to ITU-R S.1528 [], the gain of LEO satellite transmitting antenna is

where is the maximum gain of the LEO satellite transmitting antenna, and is the off-axis angle of the link in the direction. is half the 3 dB beamwidth , is the cross point of the main beam and the near-in side-lobe mask, and dBi is the near-in side-lobe level. Parameters Y and Z are denoted as

According to ITU-R S.465 [], the gain of user receiving antenna is

where is the maximum gain of user receiving antenna, and is the off-axis angle of the link in the direction. The parameter is denoted as

where D is the circular equivalent diameter of antenna, and is the wavelength.
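As an illustration of the user receiving pattern, the following sketch implements the side-lobe envelope commonly quoted from Recommendation ITU-R S.465: 32 − 25 log φ dBi between the minimum off-axis angle and 48°, and −10 dBi beyond 48°. Returning the peak gain inside the minimum angle is an assumption made here for completeness, and the function name and arguments are hypothetical; the paper's own expression should take precedence where it differs.

```python
import math

def s465_gain_dbi(phi_deg, d_over_lambda, g_max_dbi):
    """Earth-station receive gain (dBi) at off-axis angle phi_deg (degrees)."""
    # Minimum angle from which the side-lobe envelope applies.
    if d_over_lambda >= 50:
        phi_min = max(1.0, 100.0 / d_over_lambda)
    else:
        phi_min = max(2.0, 114.0 * d_over_lambda ** -1.09)
    phi = abs(phi_deg)
    if phi < phi_min:
        return g_max_dbi  # assumption: peak gain inside the main beam
    if phi < 48.0:
        return 32.0 - 25.0 * math.log10(phi)
    return -10.0

for angle in (0.0, 5.0, 10.0, 60.0):
    print(angle, round(s465_gain_dbi(angle, d_over_lambda=100.0, g_max_dbi=40.0), 2))
```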

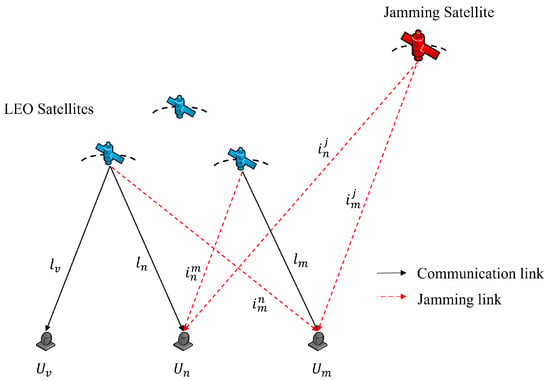

As shown in Figure 2, users are disrupted in two ways:

Figure 2.

Interference model.

➀ Malicious jamming from jamming satellite:

where the channel gain is

and the function is

where is antenna gain of the jamming satellite in the direction, is antenna gain of user in the jamming satellite direction, and is distance of the link .

➁ Co-channel interference from other beams of the LEO satellites:

where the channel gain is

and is antenna gain of the link in the direction, is antenna gain of the link in the direction, and is distance of the link .

Consequently, the transmission rate of user is

where is the system noise, is the channel gain, and its expression is

where is distance of the link .
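The relationship between the link gains, the two interference terms, and the transmission rate can be sketched as follows. The sketch assumes a free-space link gain (transmit gain times receive gain times the squared ratio of wavelength to 4πd) and a Shannon-type rate in which malicious jamming and co-channel interference add to the noise power. All function names and numerical values are hypothetical and serve only as an example.

```python
import math

def db_to_linear(x_db):
    """Convert a decibel quantity to a linear ratio."""
    return 10.0 ** (x_db / 10.0)

def link_gain(g_tx_dbi, g_rx_dbi, distance_m, wavelength_m):
    """Linear channel gain: antenna gains times free-space path loss."""
    path = (wavelength_m / (4.0 * math.pi * distance_m)) ** 2
    return db_to_linear(g_tx_dbi) * db_to_linear(g_rx_dbi) * path

def user_rate(bandwidth_hz, p_sig_w, g_sig, noise_w, jam_w=0.0, cochannel_w=0.0):
    """Shannon rate with jamming and co-channel power added to the noise."""
    sinr = p_sig_w * g_sig / (noise_w + jam_w + cochannel_w)
    return bandwidth_hz * math.log2(1.0 + sinr)

# Hypothetical link: 20 GHz carrier, 600 km slant range, 50 MHz channel.
wavelength = 3e8 / 20e9
g = link_gain(35.0, 30.0, 600e3, wavelength)
clean = user_rate(50e6, 10.0, g, noise_w=2e-13)
jammed = user_rate(50e6, 10.0, g, noise_w=2e-13, jam_w=5e-12)
print(f"clean {clean / 1e6:.1f} Mbit/s, jammed {jammed / 1e6:.1f} Mbit/s")
```

In this form, a jammer only affects a user when the received jamming power on the user's selected channel is nonzero, which is the effect that spectrum access tries to avoid.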

In order to capture the user’s individualized needs and preferences, perceived satisfaction [] is introduced with the following expression:

where is the spectrum selection of user at time slot t, is the transmission rate threshold, and the parameter c modulates the slope of the demand utility curve, reflecting the sensitivity of satisfaction to deviations from the rate threshold. Higher satisfaction values indicate that the user is better served by the communication service, whereas lower values signify that the system fails to adequately meet service requirements.
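A minimal sketch of such a satisfaction measure is given below, assuming a sigmoid utility centered on the rate threshold with slope c. The sigmoid form is an assumption chosen because it matches the description (a threshold and a slope parameter); the function name and the Mbit/s example values are hypothetical.

```python
import math

def perceived_satisfaction(rate, rate_threshold, c):
    """Sigmoid utility in (0, 1); equals 0.5 when rate == rate_threshold."""
    z = c * (rate - rate_threshold)
    z = max(-60.0, min(60.0, z))  # keep exp() well inside floating-point range
    return 1.0 / (1.0 + math.exp(-z))

# Rates in Mbit/s with a 10 Mbit/s threshold; a larger c gives a sharper transition.
for rate in (6.0, 10.0, 14.0):
    print(rate, round(perceived_satisfaction(rate, 10.0, 0.5), 3),
          round(perceived_satisfaction(rate, 10.0, 2.0), 3))
```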

2.3. Problem Construction

This paper aims to determine optimal spectrum-access strategies for LEO satellites to maximize average user satisfaction over T periods while maximizing anti-jamming performance. The optimization problem can be formulated as follows:

In this optimization problem, indicates that the transmission rate must be greater than a threshold to ensure normal communication, indicates that the time horizon is either finite or infinite, and indicates that the spectrum accessed by the user at time slot t must be within the orthogonal frequency band. The model not only takes into account the immediate user needs but also optimizes the overall user satisfaction over a longer period of time.

The optimization problem P is a non-convex nonlinear program []. According to [,], this problem is generally NP-hard, and it is difficult to find the optimal solution. Although convex optimization, heuristic, or game-theoretic approaches may attain suboptimal solutions, they typically require global information collection. This makes them impractical for resource-constrained LEO satellites with strict real-time requirements []. Since the dynamic spectrum-access problem satisfies the characteristics of the Markov decision process (MDP), the multi-agent DRL solution is introduced in detail subsequently.

3. The Proposed Multi-Agent Deep Reinforcement Learning Method

This section first presents the multi-agent MDP [] formulation for multi-user anti-jamming spectrum access. Subsequently, the process of VDN-based multi-agent DRL is detailed.

3.1. Multi-Agent MDP Problem Formulation

In this paper, each satellite operates as an agent that observes local environmental states and selects an action in each time slot. Because all agents collaboratively maximize the long-term cumulative reward, this is a fully cooperative multi-agent task modeled as an MDP, mathematically expressed as , where is the set of environment states, is the set of actions, is the state transition probability function, is the reward function, and is the discount factor, whose value ranges from 0 to 1. Specifically, key parameters for the multi-user scenario are formally defined as follows:

- State: The state of the environment is the global information of all users, which can be expressed as follows: where each contains self-satisfaction, current jamming, and current co-channel interference.

- Action: The action is the spectrum-access strategy for all users at time slot t, which can be expressed as follows: where is the spectrum-access strategy of user n.

- Reward: The agents obtain immediate rewards after taking actions, which can be expressed as follows:

- State–action value function: The state–action value function is the discounted reward obtained by taking action in state , which can be expressed as follows: where is the action set of agent n over period T, , is the long-term reward, and is the expectation. The agent’s goal is to learn the optimal strategy that maximizes the long-term reward.
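The long-term reward referred to in the definitions above is the discounted sum of immediate rewards. A minimal sketch of that computation is shown below; the function name and the sample reward sequence are hypothetical.

```python
def discounted_return(rewards, gamma):
    """Sum of gamma**k * rewards[k], accumulated backwards for numerical simplicity."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g

# Three slots with reward 1 each and discount factor 0.5: 1 + 0.5 + 0.25.
print(discounted_return([1.0, 1.0, 1.0], 0.5))  # 1.75
```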

3.2. Multi-Agent DRL Algorithm Design

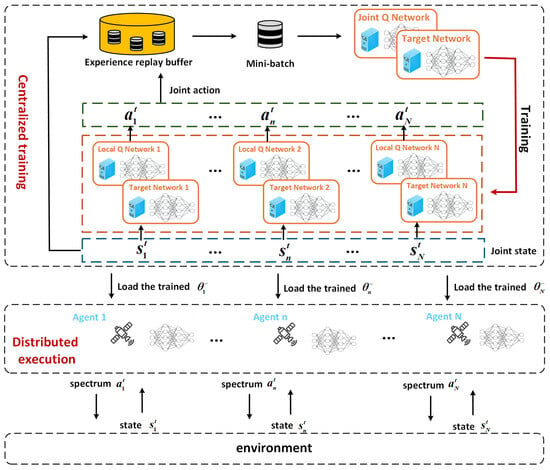

Centralized multi-agent DRL methods require global information and incur high computational overhead. Conversely, distributed multi-agent DRL approaches relying on local observations fail to converge to optimal solutions. To overcome these limitations, the VDN [] algorithm is adopted for multi-satellite anti-jamming spectrum access. This approach implements a centralized training and distributed execution framework. Global information is utilized during offline training, while local observations are required during online execution. The overall structure of the proposed algorithm is shown in Figure 3.

Figure 3.

The overall architecture of the proposed algorithm.

3.2.1. The Offline Centralized Training Phase

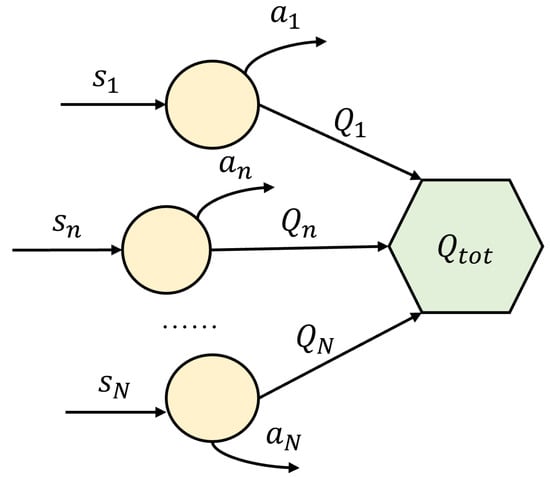

The core idea of the VDN algorithm is to decompose the joint action-value function of a multi-agent system into the sum of the local action-value functions of the individual agents, and it is denoted as

where is the joint state, is the joint action, and is the parameter of the local Q-network of agent n. This decomposition allows each agent to independently select actions based on its local observations during the execution phase, while the strategy is optimized through a global temporal difference (TD) objective during the centralized training phase. During the training process, agent n selects its action according to the ε-greedy strategy, and it is denoted as

The VDN network architecture is shown in Figure 4. After approximating the joint Q-value , the VDN updates the network parameters by minimizing the global reward-based TD error, and the loss function is defined as

where denotes the mini-batch set sampled from the replay buffer, and is target value denoted as

where is global immediate reward, is discount factor, and and denote the next joint state and joint action, respectively.
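The quantities defined in this subsection (ε-greedy selection, the summed joint value, the TD target, and the mini-batch loss) can be sketched in plain Python as follows. The sketch operates on lists of already-computed local Q-values instead of neural networks, and in practice the next-step values would typically come from a separate target network; all function names are hypothetical.

```python
import random

def epsilon_greedy(q_values, epsilon, rng):
    """With probability epsilon explore uniformly, otherwise pick the argmax."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=q_values.__getitem__)

def q_total(local_qs, actions):
    """VDN: joint value is the sum of each agent's local Q for its chosen action."""
    return sum(q[a] for q, a in zip(local_qs, actions))

def td_target(reward, gamma, next_local_qs):
    """Global reward plus the discounted sum of per-agent greedy next values."""
    return reward + gamma * sum(max(q) for q in next_local_qs)

def vdn_loss(batch, gamma):
    """Mean squared TD error over a mini-batch of (qs, actions, reward, next_qs)."""
    errors = [
        (td_target(r, gamma, next_qs) - q_total(qs, acts)) ** 2
        for qs, acts, r, next_qs in batch
    ]
    return sum(errors) / len(errors)

# Two agents, two channels each; values are illustrative only.
batch = [([[1.0, 2.0], [0.0, 3.0]], (1, 0), 1.0, [[0.0, 1.0], [2.0, 0.0]])]
print(vdn_loss(batch, gamma=0.9))
```

Because the joint value is a plain sum, maximizing it over the joint action is equivalent to letting each agent maximize its own local value, which is what makes distributed execution possible.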

Figure 4.

The structure of the VDN.

The gradient of the loss function is backpropagated through to each local Q function , which in turn updates its parameters . This optimization process ensures that the local Q-network parameters of each agent are updated from the perspective of global returns. In order to improve learning efficiency, reduce computational and storage overheads, and facilitate collaboration among the agents, the algorithm employs a parameter-sharing mechanism.

After the centralized training phase is completed, the optimized model parameters are uploaded to the satellite agents to support subsequent distributed autonomous operation. Algorithm 1 describes the centralized training process in detail.

Algorithm 1 Offline Centralized Training Algorithm
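The centralized training idea can be illustrated with a toy tabular stand-in; it is not a reproduction of Algorithm 1. The sketch assumes a sweep jammer, a global reward equal to the share of agents that are neither jammed nor colliding, and one shared Q-table keyed by (agent index, jammed channel) in place of a shared neural network. Every name and hyperparameter here is hypothetical.

```python
import random
from collections import defaultdict

def train_vdn_tabular(num_agents=2, num_channels=4, episodes=200, slots=20,
                      alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    # One shared table for all agents (parameter sharing); the agent index is
    # part of the key so that agents can still behave differently.
    q = defaultdict(lambda: [0.0] * num_channels)
    for _ in range(episodes):
        jam = rng.randrange(num_channels)
        for _ in range(slots):
            obs = [(n, jam) for n in range(num_agents)]
            acts = []
            for o in obs:
                if rng.random() < epsilon:
                    acts.append(rng.randrange(num_channels))
                else:
                    row = q[o]
                    acts.append(max(range(num_channels), key=row.__getitem__))
            # Global reward: share of agents neither jammed nor colliding.
            ok = sum(1 for a in acts if a != jam and acts.count(a) == 1)
            reward = ok / num_agents
            next_jam = (jam + 1) % num_channels  # toy sweep jammer
            next_obs = [(n, next_jam) for n in range(num_agents)]
            # VDN: one TD error on the summed Q-values, applied to each local entry.
            q_tot = sum(q[o][a] for o, a in zip(obs, acts))
            target = reward + gamma * sum(max(q[o]) for o in next_obs)
            delta = target - q_tot
            for o, a in zip(obs, acts):
                q[o][a] += alpha * delta
            jam = next_jam
    return q

q_table = train_vdn_tabular()
for key in sorted(q_table):
    print(key, [round(v, 2) for v in q_table[key]])
```

The update step is the tabular analogue of backpropagating the global TD error through the sum to each local Q function.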

3.2.2. The Online Distributed Execution Phase

Each satellite agent loads the trained agent network . It then determines its spectrum access by independently selecting the action that maximizes its local Q value, based only on its local observations.

An incremental parameter update mechanism is introduced to adapt to the dynamic satellite network environment. Each agent continuously collects execution experiences and stores them in a local replay buffer. Periodically, new experiences from this buffer are used to refine the pretrained network parameters through local loss computation and stochastic gradient descent. This online adaptation accommodates environmental dynamics while avoiding complete retraining. Algorithm 2 describes the distributed execution process in detail.

Algorithm 2 Online Distributed Execution Algorithm
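The execution-phase behavior (greedy local action selection plus periodic incremental updates from a local replay buffer) can be sketched as follows. The sketch is a tabular stand-in for the local Q-network and is not a reproduction of Algorithm 2; the class name, the buffer size, and the learning rate are hypothetical.

```python
import random
from collections import deque

class SatelliteAgent:
    """Executes a pretrained local Q-function and refines it from local experience."""

    def __init__(self, q_table, num_channels, buffer_size=256, alpha=0.01, gamma=0.9):
        self.q = q_table  # pretrained values: observation -> list of Q per channel
        self.num_channels = num_channels
        self.buffer = deque(maxlen=buffer_size)  # local replay buffer
        self.alpha = alpha
        self.gamma = gamma

    def _row(self, obs):
        return self.q.setdefault(obs, [0.0] * self.num_channels)

    def act(self, obs):
        """Greedy channel choice from the local observation only."""
        row = self._row(obs)
        return max(range(self.num_channels), key=row.__getitem__)

    def remember(self, obs, action, reward, next_obs):
        self.buffer.append((obs, action, reward, next_obs))

    def incremental_update(self, batch_size, rng):
        """Refine the pretrained values with a small batch of recent experience."""
        batch = rng.sample(list(self.buffer), min(batch_size, len(self.buffer)))
        for obs, action, reward, next_obs in batch:
            target = reward + self.gamma * max(self._row(next_obs))
            row = self._row(obs)
            row[action] += self.alpha * (target - row[action])

agent = SatelliteAgent({0: [0.2, 0.8]}, num_channels=2)
channel = agent.act(0)
agent.remember(0, channel, 1.0, 0)
agent.incremental_update(batch_size=8, rng=random.Random(0))
print(channel, agent.q[0])
```

A small learning rate keeps the incremental step close to the pretrained solution, which matches the stated aim of adapting without complete retraining.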

3.2.3. Complexity Analysis

The computational complexity of the proposed method is analyzed from two key phases: centralized training and distributed execution.

Centralized Training Phase: Each agent’s local Q-network performs forward propagation with complexity , where is the number of layers in forward propagation, and D is the dimension of hidden layers in the neural network. For N agents, the total forward complexity per time slot is . For each mini-batch, backpropagation of the TD error involves computing gradients across all layers, with per-sample complexity , where is the number of layers in backpropagation. For a mini-batch of size , the total backpropagation complexity per episode is . Combining forward and backward operations over T time slots, the training complexity is . The parameter-sharing mechanism reduces the parameter space from to , significantly lowering computational costs.

Distributed Execution Phase: Each satellite agent requires only per time slot to compute and select actions locally. The incremental update further adapts to environmental dynamics with minimal overhead , where is the size of the local replay buffer. Therefore, the overall complexity of per time slot is .

4. Simulation Results and Performance Analysis

4.1. Simulation Parameters

In this section, the performance of the proposed multi-agent DRL algorithm is evaluated. The simulated scenario consists of one jamming satellite and an LEO Walker constellation, with ten users randomly distributed within the jamming satellite’s beam coverage. Detailed simulation parameters are documented in Table 2. All the simulations are executed on a computer equipped with a 2.7 GHz Intel Core Ultra 9 CPU, 32 GB RAM, and an NVIDIA GeForce RTX 5070 Ti GPU. Algorithm framework parameters are summarized in Table 3.

Table 2.

Parameters of constellation.

Table 3.

Parameters of algorithm framework.

In this paper, we compare the proposed method with the following three different methods:

- Centralized Training Execution (CTE) []: The CTE method employs a fully centralized architecture during both training and execution phases. It requires continuous global information interaction and incurs high communication overhead.

- Non-Cooperative Independent Learning (NIL) []: In the NIL method, each agent independently optimizes strategies without coordination mechanisms or information sharing.

- Random Action Selection (RAS): The RAS method is a benchmark strategy without learning ability, and each agent randomly selects actions.

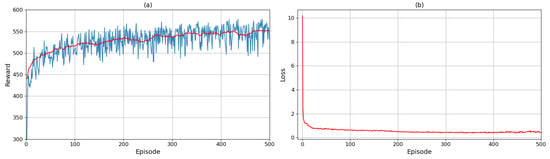

4.2. Convergence Analysis

Figure 5 illustrates the convergence of the proposed method through episode reward and loss. The reward values in Figure 5a increase during training and stabilize after 200 episodes, while the loss values in Figure 5b exhibit progressive reduction. These observations validate the proposed method’s convergence.

Figure 5.

An illustration of (a) reward and (b) loss during the training process.

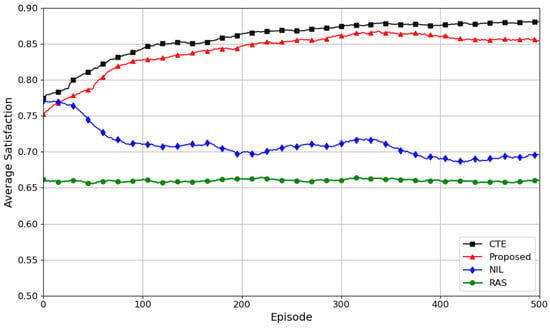

Figure 6 compares convergence among different methods, with the vertical axis denoting the satisfaction index. The CTE method demonstrates the best convergence performance due to its global information awareness; however, its practical application is significantly constrained by satellite network communication and computational resources. The proposed method achieves approximately 97% of CTE’s convergence performance without excessive link overhead, which supports its effectiveness. The NIL method trains each agent fully independently, but its convergence performance is significantly reduced by the lack of a cooperation mechanism. The RAS method has the worst performance because it has no learning ability.

Figure 6.

Convergence comparison of different methods.

4.3. Performance Analysis

4.3.1. Jamming Avoidance

Table 4 and Table 5, respectively, present the channel selection of users and the jammer during initial and convergence episodes. Each row corresponds to a distinct time slot. As shown in Table 4, the users exhibit random channel selection during the initial exploration, resulting in significant channel overlap with jammer transmissions. In contrast, Table 5 demonstrates users’ effective avoidance of jammed channels after convergence. This comparative analysis confirms the proposed algorithm’s efficacy in achieving near-optimal jamming avoidance.
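One way to quantify the overlap visible in such channel-selection tables is the fraction of user-slot pairs that land on the jammed channel. The sketch below assumes each table row lists the users' channels for one time slot alongside the jammer's channel; the function name and the sample data are hypothetical and do not reproduce Table 4 or Table 5.

```python
def jammed_fraction(user_channels, jammer_channels):
    """user_channels[t][n]: channel of user n in slot t; jammer_channels[t]: jammed channel."""
    hits = 0
    total = 0
    for row, jam in zip(user_channels, jammer_channels):
        hits += sum(1 for ch in row if ch == jam)
        total += len(row)
    return hits / total if total else 0.0

# Two slots, two users: one of four selections coincides with the jammer.
print(jammed_fraction([[0, 1], [2, 2]], [0, 1]))  # 0.25
```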

Table 4.

Channel selection in the initial episode.

Table 5.

Channel selection in the convergence episode.

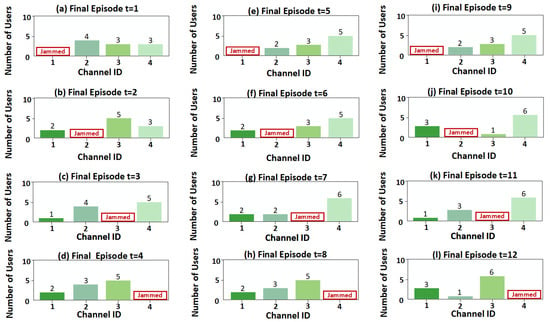

Figure 7 illustrates the users’ channel selection over 12 time slots after algorithm convergence under sweep jamming. The proposed method remains effective under sweep jamming: in each slot, the users avoid the channel selected by the jammer.

Figure 7.

The channel selection under sweep jamming.

4.3.2. User Satisfaction

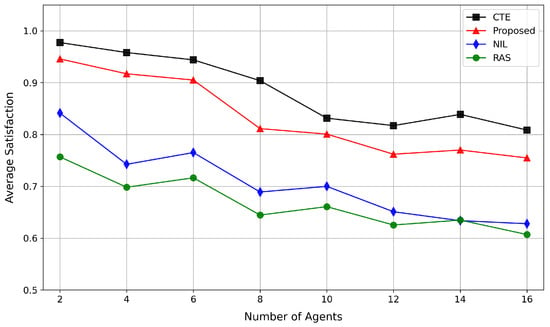

Figure 8 illustrates the impact of the number of agents on user satisfaction. As the number of agents increases, average satisfaction decreases for all the methods. The CTE method achieves the highest satisfaction, but its computational cost rises significantly as the number of users grows. The NIL method reduces cost but suffers frequent spectrum collisions because of insufficient information exchange, so its satisfaction is lower. The proposed method maintains satisfaction between those of CTE and NIL, and its collaborative decisions reduce both the impact of malicious jamming and the computational cost. Thus, the proposed method is more suitable for spectrum management in dynamic LEO networks.

Figure 8.

Impact of number of agents on user satisfaction.

4.3.3. Network Fairness

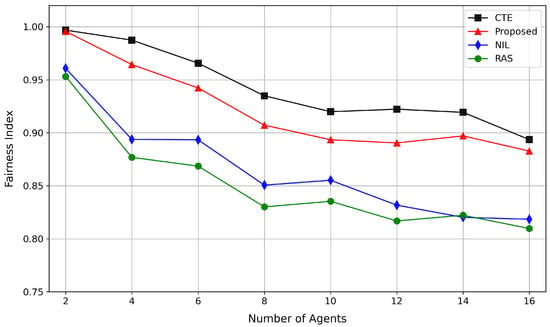

Inspired by [], to better demonstrate the effectiveness of the proposed method, the user fairness index is introduced as follows:

where N is the number of users, and is the satisfaction of user . The closer the fairness index is to 1, the better the network fairness.
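A widely used index with this property is Jain's fairness index, the squared sum of the per-user values divided by N times the sum of their squares. The sketch below assumes that form; whether it coincides exactly with the index used here cannot be confirmed from the text, and the function name and sample values are hypothetical.

```python
def jain_fairness(values):
    """Jain's index: (sum x)^2 / (N * sum x^2); equals 1 when all values are equal."""
    total = sum(values)
    squares = sum(v * v for v in values)
    if squares == 0:
        return 0.0  # convention chosen here for the all-zero case
    return total * total / (len(values) * squares)

print(jain_fairness([0.9, 0.9, 0.9, 0.9]))  # equal satisfaction
print(jain_fairness([1.0, 0.0, 0.0, 0.0]))  # one user served: 1/N
```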

Figure 9 illustrates the impact of number of agents on network fairness. The fairness index exhibits a declining trend across all the methods with an increasing number of agents. Among them, the CTE method demonstrates the best fairness index, while the proposed method’s fairness index is slightly inferior to CTE. In contrast, due to a lack of coordination among agents, NIL exhibits a relatively poor fairness index. RAS achieves the worst fairness index owing to its lack of learning ability.

Figure 9.

Impact of number of agents on network fairness.

5. Conclusions

A VDN-based multi-agent DRL method is proposed for anti-jamming spectrum access in LEO satellite networks. To enhance training efficiency and minimize communication overhead, a centralized training with distributed execution architecture is adopted. Following offline centralized training, the network is distributedly deployed on LEO satellites to enable real-time spectrum-access decision-making. Parameter sharing is employed to accelerate convergence and reduce complexity, while incremental updates enhance model adaptability. The simulation results demonstrate improvements in jamming avoidance and user satisfaction, along with reduced computational overhead. Therefore, this approach provides an effective solution for dynamic anti-jamming spectrum access in resource-constrained LEO satellite networks. Future work will explore multi-domain joint anti-jamming technologies and integrate them with physical layer security techniques to further enhance resilience against evolving jamming threats.

Author Contributions

Conceptualization, F.C. and L.J.; Methodology, L.J. and W.C.; Validation, W.C.; Writing—original draft preparation, W.C.; Writing—review and editing, H.Z. and Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China under Grant 62471491.

Data Availability Statement

The original contributions presented in this study are included in the article material. Further inquiries can be directed to the corresponding authors.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Al-Hraishawi, H.; Chougrani, H.; Kisseleff, S.; Lagunas, E.; Chatzinotas, S. A survey on nongeostationary satellite systems: The communication perspective. IEEE Commun. Surv. Tutorials 2022, 25, 101–132. [Google Scholar] [CrossRef]

- Xiao, Z.; Yang, J.; Mao, T.; Xu, C.; Zhang, R.; Han, Z.; Xia, X.G. LEO satellite access network (LEO-SAN) toward 6G: Challenges and approaches. IEEE Wirel. Commun. 2022, 31, 89–96. [Google Scholar] [CrossRef]

- Luo, X.; Chen, H.H.; Guo, Q. LEO/VLEO satellite communications in 6G and beyond networks–technologies, applications, and challenges. IEEE Netw. 2024, 38, 273–285. [Google Scholar] [CrossRef]

- Wang, Q.; Chen, X.; Qi, Q. Energy-efficient design of satellite-terrestrial computing in 6G wireless networks. IEEE Trans. Commun. 2023, 72, 1759–1772. [Google Scholar] [CrossRef]

- Kodheli, O.; Lagunas, E.; Maturo, N.; Sharma, S.K.; Shankar, B.; Montoya, J.F.M.; Duncan, J.C.M.; Spano, D.; Chatzinotas, S.; Kisseleff, S.; et al. Satellite communications in the new space era: A survey and future challenges. IEEE Commun. Surv. Tutor. 2020, 23, 70–109. [Google Scholar] [CrossRef]

- Al-Hraishawi, H.; Chatzinotas, S.; Ottersten, B. Broadband non-geostationary satellite communication systems: Research challenges and key opportunities. In Proceedings of the 2021 IEEE International Conference on Communications Workshops (ICC Workshops), Montreal, QC, Canada, 14–23 June 2021; pp. 1–6. [Google Scholar]

- Li, W.; Jia, L.; Chen, Y.; Chen, Q.; Yan, J.; Qi, N. A game-theoretic approach for satellites beam scheduling and power control in a mega hybrid constellation spectrum sharing scenario. IEEE Internet Things J. 2025, 12, 20626–20639. [Google Scholar] [CrossRef]

- Kim, D.; Jung, H.; Lee, I.H.; Niyato, D. Multi-Beam Management and Resource Allocation for LEO Satellite-Assisted IoT Networks. IEEE Internet Things J. 2025, 12, 19443–19458. [Google Scholar] [CrossRef]

- Yang, Q.; Laurenson, D.I.; Barria, J.A. On the use of LEO satellite constellation for active network management in power distribution networks. IEEE Trans. Smart Grid 2012, 3, 1371–1381. [Google Scholar] [CrossRef]

- Hasan, M.; Thakur, J.M.; Podder, P. Design and implementation of FHSS and DSSS for secure data transmission. Int. J. Signal Process. Syst. 2016, 4, 144–149. [Google Scholar] [CrossRef]

- Wang, J.; Jiang, C.; Kuang, L. Turbo iterative DSSS acquisition in satellite high-mobility communications. IEEE Trans. Veh. Technol. 2021, 70, 12998–13009. [Google Scholar] [CrossRef]

- Alagil, A.; Liu, Y. Randomized positioning dsss with message shuffling for anti-jamming wireless communications. In Proceedings of the 2019 IEEE conference on dependable and secure computing (DSC), Hangzhou, China, 18–20 November 2019; pp. 1–8. [Google Scholar]

- Lu, R.; Ye, G.; Ma, J.; Li, Y.; Huang, W. A numerical comparison between FHSS and DSSS in satellite communication systems with on-board processing. In Proceedings of the 2009 2nd International Congress on Image and Signal Processing, Tianjin, China, 17–19 October 2009; pp. 1–4. [Google Scholar]

- Mast, J.; Hänel, T.; Aschenbruck, N. Enhancing adaptive frequency hopping for bluetooth low energy. In Proceedings of the 2021 IEEE 46th Conference on Local Computer Networks (LCN), Edmonton, AB, Canada, 4–7 October 2021; pp. 447–454. [Google Scholar]

- Kokkinen, H.; Piemontese, A.; Kulacz, L.; Arnal, F.; Amatetti, C. Coverage and interference in co-channel spectrum sharing between terrestrial and satellite networks. In Proceedings of the 2023 IEEE Aerospace Conference, Big Sky, MT, USA, 4–11 March 2023; pp. 1–9. [Google Scholar]

- Yan, D.; Ni, S. Overview of anti-jamming technologies for satellite navigation systems. In Proceedings of the 2022 IEEE 6th Information Technology and Mechatronics Engineering Conference (ITOEC), Chongqing, China, 4–6 March 2022; Volume 6, pp. 118–124. [Google Scholar]

- Wang, X.; Wang, S.; Liang, X.; Zhao, D.; Huang, J.; Xu, X.; Dai, B.; Miao, Q. Deep Reinforcement Learning: A Survey. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 5064–5078. [Google Scholar] [CrossRef]

- Nguyen, T.T.; Nguyen, N.D.; Nahavandi, S. Deep Reinforcement Learning for Multiagent Systems: A Review of Challenges, Solutions, and Applications. IEEE Trans. Cybern. 2020, 50, 3826–3839. [Google Scholar] [CrossRef]

- Jia, L.; Qi, N.; Su, Z.; Chu, F.; Fang, S.; Wong, K.K.; Chae, C.B. Game theory and reinforcement learning for anti-jamming defense in wireless communications: Current research, challenges, and solutions. IEEE Commun. Surv. Tutorials 2024, 27, 1798–1838. [Google Scholar] [CrossRef]

- Yin, Z.; Li, J.; Wang, Z.; Qian, Y.; Lin, Y.; Shu, F.; Chen, W. UAV Communication Against Intelligent Jamming: A Stackelberg Game Approach With Federated Reinforcement Learning. IEEE Trans. Green Commun. Netw. 2024, 8, 1796–1808. [Google Scholar] [CrossRef]

- Wu, Z.; Lin, Y.; Zhang, Y.; Shu, F.; Li, J. Multi-agent collaboration based UAV clusters multi-domain energy-saving anti-jamming communication. Sci. Sin. Inf. 2023, 53, 2511. [Google Scholar] [CrossRef]

- Li, Y.; Xu, Y.; Li, G.; Gong, Y.; Liu, X.; Wang, H.; Li, W. Dynamic spectrum anti-jamming access with fast convergence: A labeled deep reinforcement learning approach. IEEE Trans. Inf. Forensics Secur. 2023, 18, 5447–5458. [Google Scholar] [CrossRef]

- Li, W.; Chen, J.; Liu, X.; Wang, X.; Li, Y.; Liu, D.; Xu, Y. Intelligent dynamic spectrum anti-jamming communications: A deep reinforcement learning perspective. IEEE Wirel. Commun. 2022, 29, 60–67. [Google Scholar] [CrossRef]

- Yin, Z.; Lin, Y.; Zhang, Y.; Qian, Y.; Shu, F.; Li, J. Collaborative Multiagent Reinforcement Learning Aided Resource Allocation for UAV Anti-Jamming Communication. IEEE Internet Things J. 2022, 9, 23995–24008. [Google Scholar] [CrossRef]

- Bai, H.; Wang, H.; Du, J.; He, R.; Li, G.; Xu, Y. Multi-Hop UAV Relay Covert Communication: A Multi-Agent Reinforcement Learning Approach. In Proceedings of the 2024 International Conference on Ubiquitous Communication (Ucom), Xi’an, China, 5–7 July 2024; pp. 356–360. [Google Scholar]

- Zhang, F.; Niu, Y.; Zhou, Q.; Chen, Q. Intelligent anti-jamming decision algorithm for wireless communication under limited channel state information conditions. Sci. Rep. 2025, 15, 6271.

- ITU-R S.672; Satellite Antenna Radiation Patterns for Geostationary Orbit Satellite Antennas Operating in the Fixed-Satellite Service. International Telecommunication Union (ITU): Geneva, Switzerland, 1997.

- ITU-R S.1528; Satellite Antenna Radiation Patterns for Non-Geostationary Orbit Satellite Antennas Operating in the Fixed-Satellite Service Below 30 GHz. International Telecommunication Union (ITU): Geneva, Switzerland, 2001.

- ITU-R S.465; Reference Radiation Pattern for Earth Station Antennas in the Fixed-Satellite Service for Use in Coordination and Interference Assessment in the Frequency Range from 2 to 31 GHz. International Telecommunication Union (ITU): Geneva, Switzerland, 2010.

- Li, W.; Jia, L.; Chen, Q.; Chen, Y. A game theory-based distributed downlink spectrum sharing method in large-scale hybrid satellite constellations. IEEE Trans. Commun. 2024, 72, 4620–4632.

- Islam, M.; Sharmin, S.; Nur, F.N.; Razzaque, M.A.; Hassan, M.M.; Alelaiwi, A. High-throughput link-channel selection and power allocation in wireless mesh networks. IEEE Access 2019, 7, 161040–161051.

- Lin, Z.; Ni, Z.; Kuang, L.; Jiang, C.; Huang, Z. Dynamic beam pattern and bandwidth allocation based on multi-agent deep reinforcement learning for beam hopping satellite systems. IEEE Trans. Veh. Technol. 2022, 71, 3917–3930.

- Luo, Z.Q.; Zhang, S. Dynamic spectrum management: Complexity and duality. IEEE J. Sel. Top. Signal Process. 2008, 2, 57–73.

- Lin, Z.; Ni, Z.; Kuang, L.; Jiang, C.; Huang, Z. Satellite-terrestrial coordinated multi-satellite beam hopping scheduling based on multi-agent deep reinforcement learning. IEEE Trans. Wirel. Commun. 2024, 23, 10091–10103.

- Busoniu, L.; Babuska, R.; De Schutter, B. A comprehensive survey of multiagent reinforcement learning. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2008, 38, 156–172.

- Sunehag, P.; Lever, G.; Gruslys, A.; Czarnecki, W.M.; Zambaldi, V.; Jaderberg, M.; Lanctot, M.; Sonnerat, N.; Leibo, J.Z.; Tuyls, K.; et al. Value-decomposition networks for cooperative multi-agent learning. arXiv 2017, arXiv:1706.05296.

- Tampuu, A.; Matiisen, T.; Kodelja, D.; Kuzovkin, I.; Korjus, K.; Aru, J.; Aru, J.; Vicente, R. Multiagent cooperation and competition with deep reinforcement learning. PLoS ONE 2017, 12, e0172395.

- Aref, M.A.; Jayaweera, S.K.; Machuzak, S. Multi-agent reinforcement learning based cognitive anti-jamming. In Proceedings of the 2017 IEEE Wireless Communications and Networking Conference (WCNC), San Francisco, CA, USA, 19–22 March 2017; pp. 1–6.

- Zhang, Y.; Jia, L.; Qi, N.; Xu, Y.; Wang, M. Anti-jamming channel access in 5G ultra-dense networks: A game-theoretic learning approach. Digit. Commun. Netw. 2023, 9, 523–533.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).