Intrusion Detection on AWS Cloud through Hybrid Deep Learning Algorithm

Abstract

1. Introduction

2. Literature Survey

| Categorization | Methodology | Features | Challenges | Common Issues |
|---|---|---|---|---|
| Machine Learning | DT-NN [44] | Achieved good accuracy while selecting features | Overfitting of the DT | Used old datasets (KDD-CUP 99 and NSL-KDD) |
| Machine Learning | ANN + SVM [45] | Lower time and space complexity on the training dataset | Prediction of the specific attack type is not accurate | |
| Deep Learning | CNN [53] | Good accuracy | Detects only DDoS-based attacks | |
| Deep Learning | GRU-RNN [18] | Precision, F1-score, and recall are at a good level | Lower accuracy and higher overhead | |
| Deep Learning | AE + DNN [30] | Good precision with faster prediction | Accuracy and F1-score are on the lower side | |
| Deep Learning | LSTM [38] | Good level of accuracy | Bandwidth is on the lower side | |
| Flood-based Attack Detection | CRESOM-SDNMS [46] | Metaheuristic approach | Accuracy is on the lower side | |
| Flood-based Attack Detection | CS_DDoS [54] | | | |
| Flood-based Attack Detection | FSOMDM [48] | Good at controlling malicious data traffic | High false positive rate | |
| Flood-based Attack Detection | LEDEM [25] | Good level of accuracy | Performance decreases as data input speed increases | |
| Flood-based Attack Detection | ICRPU [47] | Good accuracy and intrusion detection | FAR is on the higher side | |
| FRC-based Attack Detection | T-distribution with flow confidence technique [49] | Precision and recall are on the higher side | Lower attack detection | |

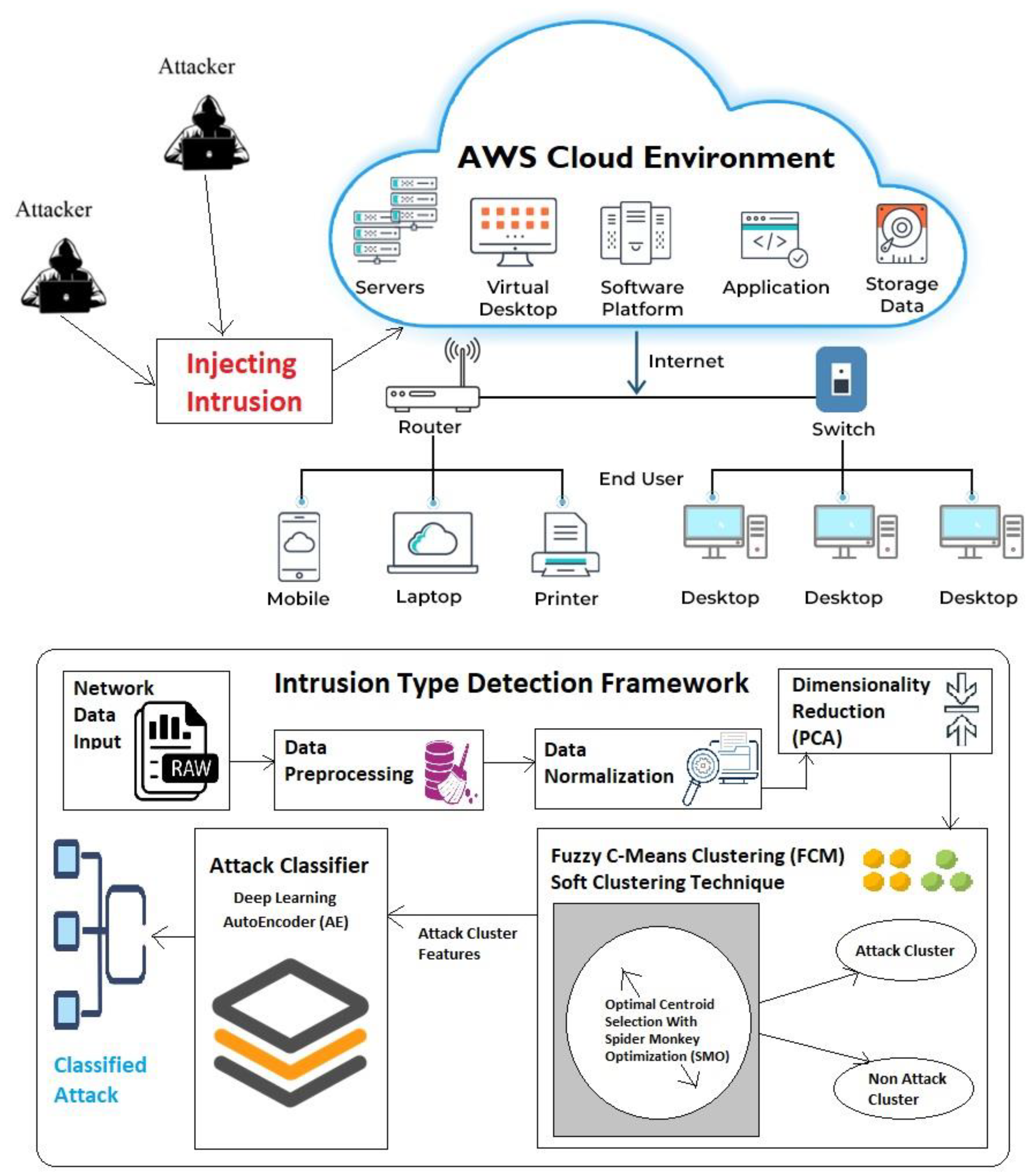

3. Proposed Model

4. Data Initialization Module

4.1. Data Pre-Processing

4.2. Feature Normalization

4.3. Dimensionality Reduction

4.4. Mean

4.5. Standard Deviation

4.6. Covariance

4.7. Eigenvalue and Eigenvectors of a Matrix
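
The quantities named in Sections 4.4 to 4.7 (mean, standard deviation, covariance, eigenvalues and eigenvectors) are the usual building blocks of principal component analysis (PCA). The following is a minimal sketch of how they combine, not the authors' implementation: the function name `pca_reduce`, the choice to standardize features before computing the covariance, and the synthetic demo data are all assumptions made for illustration.

```python
import numpy as np

def pca_reduce(X, n_components):
    """Project the rows of X onto the top principal components (illustrative sketch)."""
    X = np.asarray(X, dtype=float)
    mean = X.mean(axis=0)                    # per-feature mean
    std = X.std(axis=0, ddof=1)              # per-feature sample standard deviation
    std[std == 0.0] = 1.0                    # leave constant features unscaled
    Z = (X - mean) / std                     # standardized features (assumption)
    cov = np.cov(Z, rowvar=False)            # feature-by-feature covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)   # eigh: symmetric input, ascending eigenvalues
    order = np.argsort(eigvals)[::-1][:n_components]
    components = eigvecs[:, order]           # eigenvectors with the largest eigenvalues
    explained = eigvals[order] / eigvals.sum()
    return Z @ components, components, explained

# Synthetic demo data (hypothetical): 200 samples, 5 features, one nearly redundant.
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(200, 5))
X_demo[:, 3] = 2.0 * X_demo[:, 0] + 0.01 * rng.normal(size=200)

reduced, components, explained = pca_reduce(X_demo, n_components=2)
print(reduced.shape)
```

Sorting the eigenvalues in descending order and keeping only the leading eigenvectors is what performs the dimensionality reduction; `explained` reports the share of total variance each retained component carries.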

5. Cluster Formation Module

5.1. Fuzzy C-Means Algorithm
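
As a point of reference for this section, the textbook fuzzy c-means (FCM) iteration alternates between updating cluster centers from membership-weighted samples and updating memberships from distances to those centers. The sketch below shows that standard form only; it does not include the SMO coupling described later, and the function name `fuzzy_c_means`, the fuzzifier `m = 2`, the stopping tolerance, and the two-blob demo data are assumptions.

```python
import numpy as np

def fuzzy_c_means(X, n_clusters, m=2.0, max_iter=100, tol=1e-5, seed=0):
    """Standard FCM: returns (centers, memberships). Illustrative sketch."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    # Random initial memberships; each sample's memberships sum to 1.
    U = rng.random((X.shape[0], n_clusters))
    U /= U.sum(axis=1, keepdims=True)
    for _ in range(max_iter):
        W = U ** m
        # Centers are membership-weighted means of the samples.
        centers = (W.T @ X) / W.sum(axis=0)[:, None]
        # Euclidean distance from every sample to every center.
        dist = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
        dist = np.fmax(dist, 1e-12)          # avoid division by zero
        inv = dist ** (-2.0 / (m - 1.0))
        U_new = inv / inv.sum(axis=1, keepdims=True)
        converged = np.abs(U_new - U).max() < tol
        U = U_new
        if converged:
            break
    return centers, U

# Hypothetical demo: two well-separated 2-D blobs.
rng = np.random.default_rng(1)
blob_a = rng.normal(loc=0.0, scale=0.3, size=(50, 2))
blob_b = rng.normal(loc=5.0, scale=0.3, size=(50, 2))
centers, memberships = fuzzy_c_means(np.vstack([blob_a, blob_b]), n_clusters=2)
print(np.round(centers, 2))
```

Unlike hard clustering, every sample keeps a degree of membership in each cluster, which is what leaves room for an optimizer to refine the cluster assignment afterwards.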

5.2. Spider Monkey Optimization

5.3. Algorithm of SMO

5.4. Cluster Merging Point (CMP) Algorithm of FCM and SMO

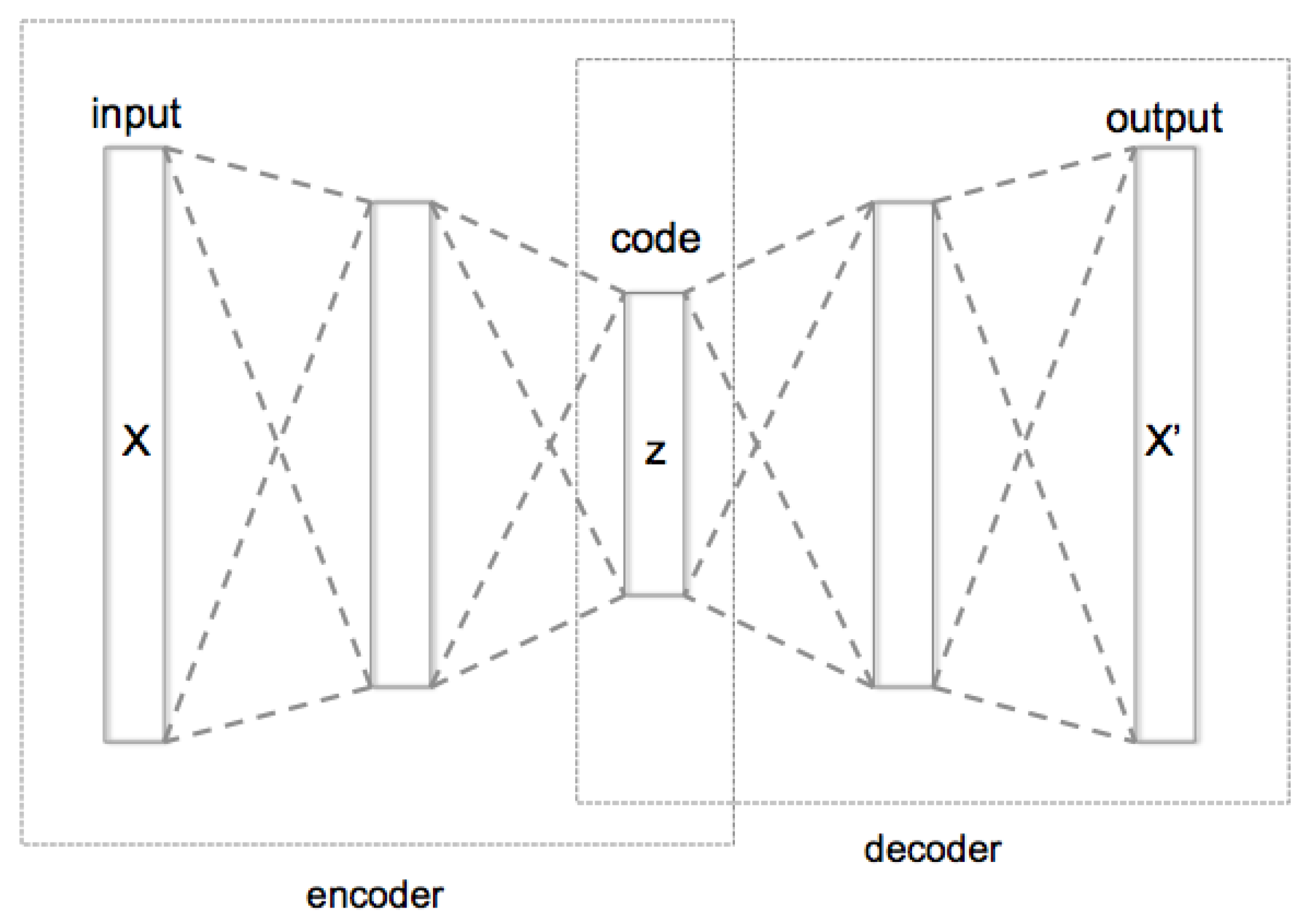

6. Attack Classification Module

7. Dataset and Environment

8. Result and Analysis

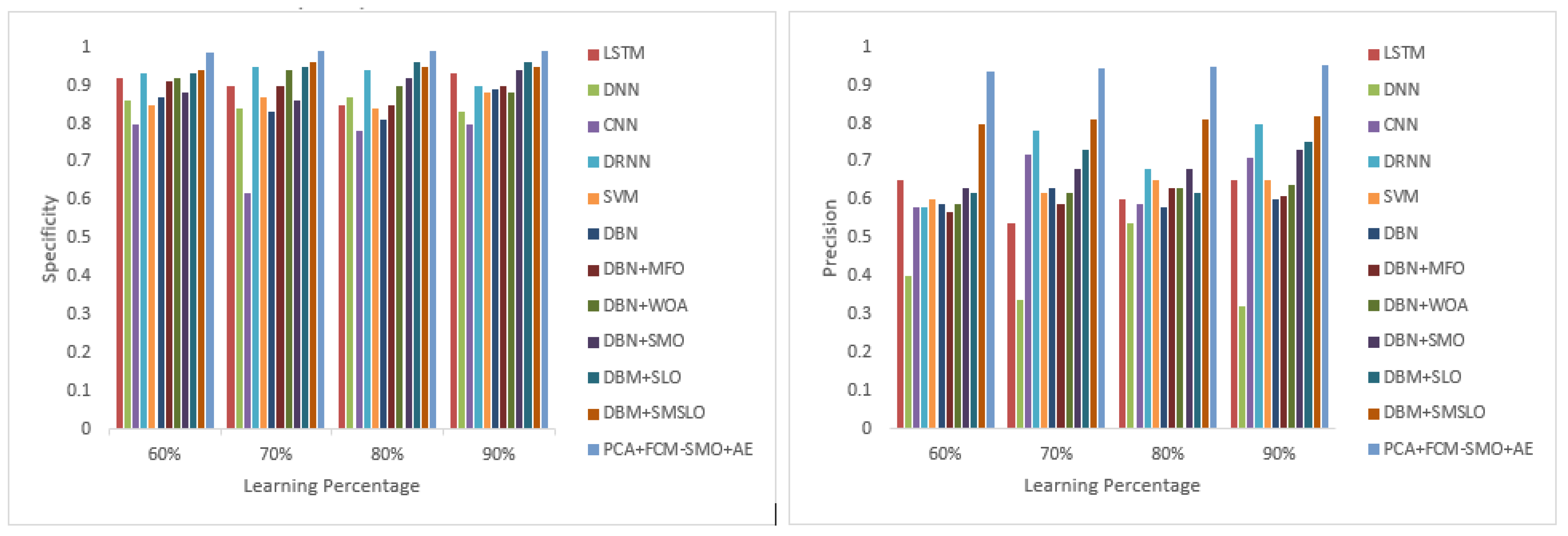

8.1. Positive Measures

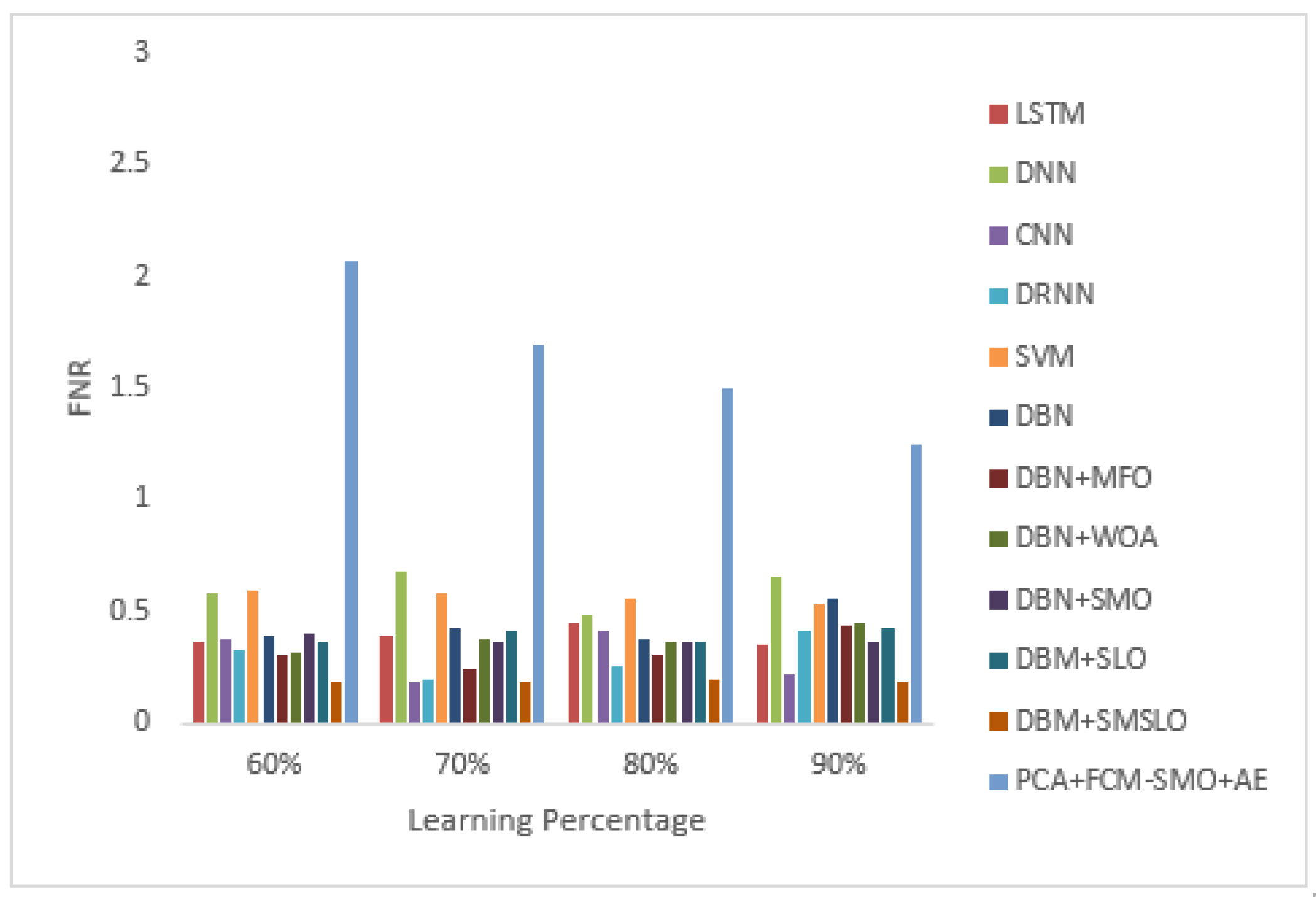

8.2. Negative Measures

8.3. Other Measures

9. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Xing, K.; Srinivasan, S.S.R.; Rivera, M.J.; Li, J.; Cheng, X. Attacks and Countermeasures in Sensor Networks: A survey. In Network Security; Springer: Boston, MA, USA, 2010; pp. 251–272.
- Kumar, C.A.; Vimala, R. Load balancing in cloud environment exploiting hybridization of chicken swarm and enhanced raven roosting optimization algorithm. Multimed. Res. 2020, 3, 45–55.
- Thomas, R.; Rangachar, M. Hybrid optimization based DBN for face recognition using low-resolution images. Multimed. Res. 2018, 1, 33–43.
- Veeraiah, N.; Krishna, B. Intrusion detection based on piecewise fuzzy c-means clustering and fuzzy naive bayes rule. Multimed. Res. 2018, 1, 27–32.
- Preetha, N.N.; Brammya, G.; Ramya, R.; Praveena, S.; Binu, D.; Rajakumar, B. Grey wolf optimisation-based feature selection and classification for facial emotion recognition. IET Biom. 2018, 7, 490–499.
- Phan, T.; Park, M. Efficient distributed denial-of-service attack defense in SDN-Based cloud. IEEE Access 2019, 7, 18701–18714.
- Ministry of Home Affairs. India Released Facts on Cyber Crime Cases Registered. Available online: https://www.pib.gov.in/PressReleasePage.aspx?PRID=1694783 (accessed on 21 May 2021).
- A Study Report Published as a News by University of North Georgia. Available online: https://ung.edu/continuing-education/news-and-media/cybersecurity.php (accessed on 21 May 2021).
- 50 Cloud Security Stats You Should Know in 2022. Available online: https://expertinsights.com/insights/50-cloud-security-stats-you-should-know/ (accessed on 28 August 2022).
- Amazon Leads $200-Billion Cloud Market. Available online: https://www.statista.com/chart/18819/worldwide-market-share-of-leading-cloud-infrastructure-service-providers/ (accessed on 28 August 2022).
- Roy, A.; Razia, S.; Parveen, N.; Rao, A.S.; Nayak, S.R.; Poonia, R.C. Fuzzy rule based intelligent system for user authentication based on user behaviour. J. Discret. Math. Sci. Cryptogr. 2020, 23, 409–417.
- Mohan, V.M.; Satyanarayana, K.V.V. The Contemporary Affirmation of Taxonomy and Recent Literature on Workflow Scheduling and Management in Cloud Computing. Glob. J. Comput. Sci. Technol. 2016, 16, 13–21.
- Zhijun, W.; Wenjing, L.; Liang, L.; Meng, Y. Low-rate DoS attacks, detection, defense, and challenges: A survey. IEEE Access 2020, 8, 43920–43943.
- Kumar, R.R.; Shameem, M.; Khanam, R.; Kumar, C. A hybrid evaluation framework for QoS based service selection and ranking in cloud environment. In Proceedings of the 15th IEEE India Council International Conference (INDICON), Coimbatore, India, 16–18 December 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–6.
- Sharma, K.; Ghose, M.K. Wireless Sensor Networks: An Overview on Its Security Threats. IJCA Spec. Issue Mob. Ad-Hoc Netw. MANETs 2010, 1495, 42–45.
- Mohan, V.M.; Satyanarayana, K. Multi-Objective Optimization of Composing Tasks from Distributed Workflows in Cloud Computing Networks, Advances in Intelligent Systems and Computing Volume 1090. In Proceedings of the 3rd International Conference on Computational Intelligence and Informatics ICCII (2018), Hyderabad, India, 28–29 December 2018.
- Lalitha, V.L.; Raju, D.S.H.; Krishna, S.V.; Mohan, V.M. Customized Smart Object Detection: Statistics of Detected Objects Using IoT; IEEE: Piscataway, NJ, USA, 2021.
- Kumar, R.R.; Tomar, A.; Shameem, M.; Alam, M.D. Optcloud: An optimal cloud service selection framework using QoS correlation lens. Comput. Intell. Neurosci. 2022, 2022, 2019485.
- CSE-CIC-IDS2018 on AWS. Available online: https://www.unb.ca/cic/datasets/ids-2018.html (accessed on 28 August 2022).
- IDS 2018 Intrusion CSVs (CSE-CIC-IDS2018). Available online: https://www.kaggle.com/datasets/solarmainframe/ids-intrusion-csv?resource=download (accessed on 28 August 2022).
- Somani, G.; Gaur, M.; Sanghi, D.; Conti, M.; Rajarajan, M. Scale inside-out: Rapid mitigation of cloud DDoS attacks. IEEE Trans. Dependable Secur. Comput. 2018, 15, 959–973.
- Balajee, R.M.; Mohapatra, H.; Venkatesh, K. A comparative study on efficient cloud security, services, simulators, load balancing, resource scheduling and storage mechanisms. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Tamil Nadu, India, 26–28 March 2021; Volume 1070, p. 012053.
- Balajee, R.M.; Venkatesh, K. A Survey on Machine Learning Algorithms and finding the best out there for the considered seven Medical Data Sets Scenario. Res. J. Pharm. Technol. 2019, 12, 3059–3062.
- Rajeswari, S.; Sharavanan, S.; Vijai, R.; Balajee, R.M. Learning to Rank and Classification of Bug Reports Using SVM and Feature Evaluation. Int. J. Smart Sens. Intell. Syst. 2017, 1, 10.
- Ravi, N.; Shalinie, S.M. Learning-driven detection and mitigation of DDoS attack in IoT via SDN-Cloud architecture. IEEE Internet Things J. 2020, 7, 3559–3570.
- Virupakshar, K.; Asundi, M.; Narayan, D. Distributed Denial of Service (DDoS) Attacks Detection System for OpenStack-based Private Cloud. Procedia Comput. Sci. 2020, 167, 2297–2307.
- Agrawal, N.; Tapaswi, S. Defense mechanisms against DDoS attacks in a cloud computing environment: State-of-the-art and research challenges. IEEE Commun. Surv. Tutor. 2019, 21, 3769–3795.
- Khan, A.A.; Shameem, M. Multicriteria decision-making taxonomy for DevOps challenging factors using analytical hierarchy process. J. Softw. Evol. Process. 2020, 32, e2263.
- Mohapatra, S.S.; Kumar, R.R.; Alenezi, M.; Zamani, A.T.; Parveen, N. QoS-Aware Cloud Service Recommendation Using Metaheuristic Approach. Electronics 2022, 11, 3469.
- Bhardwaj, A.; Mangat, V.; Vig, R. Hyperband tuned deep neural network with well posed stacked sparse autoencoder for detection of DDoS attacks in cloud. IEEE Access 2020, 8, 181916–181929.
- Balajee, R.M.; Kannan, M.K.J.; Mohan, V.M. Automatic Content Creation Mechanism and Rearranging Technique to Improve Cloud Storage Space. In Inventive Computation and Information Technologies; Springer: Singapore, 2022; pp. 73–87.
- Voleti, L.; Balajee, R.M.; Vallepu, S.K.; Bayoju, K.; Srinivas, D. A secure image steganography using improved LSB technique and Vigenere cipher algorithm. In Proceedings of the 2021 International Conference on Artificial Intelligence and Smart Systems (ICAIS), Coimbatore, India, 25–27 March 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1005–1010.
- AlKadi, O.; Moustafa, N.; Turnbull, B.; Choo, K. Mixture localization-based outliers models for securing data migration in cloud centers. IEEE Access 2019, 7, 114607–114618.
- Devagnanam, J.; Elango, N. Optimal resource allocation of cluster using hybrid grey wolf and cuckoo search algorithm in cloud computing. J. Netw. Commun. Syst. 2020, 3, 31–40.
- Mishra, P.; Varadharajan, V.; Pilli, E.; Tupakula, U. VMGuard: A VMI-Based Security Architecture for Intrusion Detection in Cloud Environment. IEEE Trans. Cloud Comput. 2020, 8, 957–971.
- Dong, S.; Abbas, K.; Jain, R. A survey on distributed denial of service (DDoS) attacks in SDN and cloud computing environments. IEEE Access 2019, 7, 80813–80828.
- Thirumalairaj, A.; Jeyakarthic, M. An intelligent feature selection with optimal neural network based network intrusion detection system for cloud environment. Int. J. Eng. Adv. Technol. 2020, 9, 3560–3569.
- Roy, R. Rescheduling based congestion management method using hybrid Grey Wolf optimization-grasshopper optimization algorithm in power system. J. Comput. Mech., Power Syst. Control 2019, 2, 9–18.
- Anand, S. Intrusion detection system for wireless mesh networks via improved whale optimization. J. Netw. Commun. Syst. (JNACS) 2020, 3, 9–16.
- Balajee, R.M.; Hiren, K.M.; Rajakumar, B.R. Hybrid machine learning approach based intrusion detection in cloud: A metaheuristic assisted model. Multiagent Grid Syst. 2022, 18, 21–43.
- Kumar, R.R.; Shameem, M.; Kumar, C. A computational framework for ranking prediction of cloud services under fuzzy environment. Enterp. Inf. Syst. 2021, 16, 167–187.
- Tang, T.; McLernon, D.; Mhamdi, L.; Zaidi, S.; Ghogho, M. Intrusion Detection in SDN-Based Networks: Deep Recurrent Neural Network Approach. In Deep Learning Applications for Cyber Security; Springer: Cham, Switzerland, 2019; pp. 175–195.
- Bakshi, A.; Dujodwala, Y.B. Securing cloud from DDoS attacks using intrusion detection system in virtual machine. In Proceedings of the 2010 Second International Conference on Communication Software and Networks, Singapore, 26–28 February 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 260–264.
- Fontaine, J.; Kappler, C.; Shahid, A.; De Poorter, E. Log-based intrusion detection for cloud web applications using machine learning. In Proceedings of the International Conference on P2P, Parallel, Grid, Cloud and Internet Computing, Online, 20 October 2019; pp. 197–210.
- Aboueata, N.; Alrasbi, S.; Erbad, A.; Kassler, A.; Bhamare, D. Supervised machine learning techniques for efficient network intrusion detection. In Proceedings of the 28th International Conference on Computer Communication and Networks (ICCCN), Valencia, Spain, 29 July–1 August 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–8.
- Harikrishna, P.; Amuthan, A. SDN-based DDoS attack mitigation scheme using convolution recursively enhanced self organizing maps. Sādhanā 2020, 45, 1–12.
- Bharot, N.; Verma, P.; Sharma, S.; Suraparaju, V. Distributed denial-of-service attack detection and mitigation using feature selection and intensive care request processing unit. Arab. J. Sci. Eng. 2018, 43, 959–967.
- Pillutla, H.; Arjunan, A. Fuzzy self organizing maps-based DDoS mitigation mechanism for software defined networking in cloud computing. J. Ambient. Intell. Humaniz. Comput. 2019, 10, 1547–1559.
- Bhushan, K.; Gupta, B.B. Network flow analysis for detection and mitigation of Fraudulent Resource Consumption (FRC) attacks in multimedia cloud computing. Multimed. Tools Appl. 2019, 78, 4267–4298.
- Baid, U.; Talbar, S. Comparative study of k-means, gaussian mixture model, fuzzy c-means algorithms for brain tumor segmentation. In Proceedings of the International Conference on Communication and Signal Processing 2016 (ICCASP 2016), Online, 26–27 December 2016; pp. 583–588.
- Khare, N.; Devan, P.; Chowdhary, C.L.; Bhattacharya, S.; Singh, G.; Singh, S.; Yoon, B. SMO-DNN: Spider monkey optimization and deep neural network hybrid classifier model for intrusion detection. Electronics 2020, 9, 692.
- Masadeh, R.; Mahafzah, B.A.; Sharieh, A. Sea lion optimization algorithm. Int. J. Adv. Comput. Sci. Appl. 2019, 10.
- Kim, J.; Kim, J.; Kim, H.; Shim, M.; Choi, E. CNN-based network intrusion detection against denial-of-service attacks. Electronics 2020, 9, 916.
- Sahi, A.; Lai, D.; Li, Y.; Diykh, M. An efficient DDoS TCP flood attack detection and prevention system in a cloud environment. IEEE Access 2017, 5, 6036–6048.

| Abbreviation | Description |

|---|---|

| ANN | Artificial Neural Network |

| CNN | Convolutional Neural Network |

| DNN | Deep Neural Network |

| CRESOM | Convolution Recursively Enhanced Self-Organizing Map |

| AE | AutoEncoder |

| CS | Classifier System |

| FCM | Fuzzy C-Means |

| DBN | Deep Belief Network |

| SMO | Spider Monkey Optimization |

| DDoS | Distributed Denial of Service |

| DoS | Denial of Service |

| PCA | Principal Component Analysis |

| DL | Deep learning |

| DRNN | Deep Recurrent Neural Network |

| DT | Decision Trees |

| SLO | Sea Lion Optimization |

| FRC | Fraudulent Resource Consumption |

| FNR | False negative rate |

| FDR | False discovery rate |

| FPR | False positive rate |

| FSOMDM | Fuzzy Self-Organizing Maps-based DDoS Mitigation |

| SVM | Support Vector Machine |

| GRU | Gated Recurrent Unit |

| ICRPU | Intensive Care Request Processing Unit |

| IDS | Intrusion Detection System |

| LEDEM | Learning-Driven Detection Mitigation System |

| LSTM | Long Short-Term Memory |

| MSE | Mean Square Error |

| NN | Nearest Neighbor |

| RBM | Restricted Boltzmann Machine |

| SD | Standard Deviation |

| SDNMS | Software Defined Networking-based Mitigation Scheme |

| FAR | False Alarm Rate |

| Learning Percentage | Testing Data Considered |

|---|---|

| 60% | 40% |

| 70% | 30% |

| 80% | 20% |

| 90% | 10% |
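
Each learning percentage above pairs a training share with its complementary test share. A minimal sketch of such a split follows, assuming a simple shuffled hold-out partition; the actual partitioning procedure is not specified here, and the function name `split_by_learning_percentage`, the fixed seed, and the placeholder records are hypothetical.

```python
import random

def split_by_learning_percentage(records, learning_pct, seed=42):
    """Shuffle records and split them into (train, test) by learning percentage."""
    if not 0 < learning_pct < 100:
        raise ValueError("learning_pct must be strictly between 0 and 100")
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)        # reproducible shuffle
    cut = len(shuffled) * learning_pct // 100    # number of training records
    return shuffled[:cut], shuffled[cut:]

# Placeholder data standing in for dataset rows (hypothetical).
records = list(range(1000))
split_sizes = {}
for pct in (60, 70, 80, 90):
    train, test = split_by_learning_percentage(records, pct)
    split_sizes[pct] = (len(train), len(test))
print(split_sizes)
# {60: (600, 400), 70: (700, 300), 80: (800, 200), 90: (900, 100)}
```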

| Feature | Description |

|---|---|

| Compute Instance | AWS EC2 |

| Data Storage | .csv files in EBS Storage |

| Instance VPC | Default VPC by AWS |

| Region | ap-south-1 |

| Subnet | ap-south-1a |

| Elastic Block Storage Memory | 8 GB |

| Instance Architecture | 64-bit |

| OS | Linux |

| Security Group | All traffic, IPv4, allow from anywhere |

| Client Terminal | PuTTY, with PuTTYgen for key conversion from .pem to .ppk |

| FTP Software to transfer dataset | FileZilla |

| FTP Connection | SSH on port 22 |

| Attacker Environment | Attack Type | Tools Used for Attack | Victim Environment | Duration |
|---|---|---|---|---|
| Kali Linux | Brute-force attack | FTP-Patator, SSH-Patator | Ubuntu 16.04 (Web Server) | One day |
| Kali Linux | DoS attack | Hulk, GoldenEye, Slowloris, Slowhttptest | Ubuntu 16.04 (Apache) | One day |
| Kali Linux | DoS attack | Heartleech | Ubuntu 12.04 (OpenSSL) | One day |
| Kali Linux | Web attack | Damn Vulnerable Web App (DVWA), in-house Selenium framework (XSS and brute-force) | Ubuntu 16.04 (Web Server) | Two days |
| Kali Linux | Infiltration attack | First level: Dropbox download on a Windows machine. Second level: Nmap and port scan | Windows Vista and Macintosh | Two days |
| Kali Linux | Botnet attack | Ares (developed in Python): remote shell, file upload/download, capturing screenshots and key logging | Windows Vista, 7, 8.1, 10 (32-bit) and 10 (64-bit) | One day |
| Kali Linux | DDoS + PortScan | Low Orbit Ion Cannon (LOIC) for UDP, TCP or HTTP requests | Windows Vista, 7, 8.1, 10 (32-bit) and 10 (64-bit) | Two days |

| Technique Shortform | Reference Paper Number | Technique Full Name |

|---|---|---|

| SVM classifier | [2] | Support Vector Machine |

| LSTM | [42] | Long Short-Term Memory |

| DNN | [6] | Deep Neural Network |

| DRNN | [43] | Deep Recurrent Neural Network |

| CNN | [41] | Convolutional Neural Network |

| DBN | [1] | Deep Belief Network |

| DBN + WOA | [1] | Deep Belief Network with Whale Optimization Algorithm |

| DBN + MFO | [1] | Deep Belief Network with Moth Flame Optimization |

| DBN + SLO | [1] | Deep Belief Network with Sea Lion Optimization |

| DBN + SMO | [1] | Deep Belief Network with Spider Monkey Optimization |

| DBN + SMSLO | [1] | Deep Belief Network with Spider Monkey optimization and Sea Lion Optimization |

| Measure | DDoS Attack | DoS Attack | Brute-Force Attack | Botnet Attack |

|---|---|---|---|---|

| Learning Percentage: 60% and Test Data: 40% | ||||

| Predicted Positive | 3,464,454 | 414,564 | 219,911 | 164,846 |

| Predicted Negative | 7,356,524 | 10,406,413 | 10,601,067 | 10,656,132 |

| TP | 3,115,042 | 370,988 | 216,017 | 160,210 |

| TN | 7,110,782 | 9,729,695 | 9,956,390 | 10,031,606 |

| FP | 349,412 | 43,576 | 3894 | 4636 |

| FN | 245,742 | 676,718 | 644,677 | 624,526 |

| Learning Percentage: 70% and Test Data: 30% | ||||

| Predicted Positive | 4,003,406 | 480,911 | 257,899 | 191,118 |

| Predicted Negative | 8,621,068 | 12,143,564 | 12,366,575 | 12,433,356 |

| TP | 3,691,901 | 435,110 | 250,951 | 188,314 |

| TN | 8,410,036 | 11,472,976 | 11,739,360 | 11,852,630 |

| FP | 311,504 | 45,801 | 6949 | 2805 |

| FN | 211,032 | 670,588 | 627,215 | 580,726 |

| Learning Percentage: 80% and Test Data: 20% | ||||

| Predicted Positive | 4,570,926 | 547,518 | 295,964 | 219,795 |

| Predicted Negative | 9,857,045 | 13,880,452 | 14,132,007 | 14,208,176 |

| TP | 4,236,896 | 500,409 | 287,717 | 215,674 |

| TN | 9,631,536 | 13,209,304 | 13,472,902 | 13,631,057 |

| FP | 334,029 | 47,110 | 8247 | 4121 |

| FN | 225,509 | 671,148 | 659,105 | 577,119 |

| Learning Percentage: 90% and Test Data: 10% | ||||

| Predicted Positive | 5,127,458 | 612,425 | 329,867 | 245,981 |

| Predicted Negative | 11,104,009 | 15,619,042 | 15,901,600 | 15,985,486 |

| TP | 4,776,398 | 564,726 | 322,994 | 242,118 |

| TN | 10,891,912 | 15,016,893 | 15,204,678 | 15,494,678 |

| FP | 351,060 | 47,698 | 6872 | 3864 |

| FN | 212,097 | 602,149 | 696,922 | 490,808 |
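
The per-class counts above (TP, TN, FP, FN) are sufficient to derive the standard binary evaluation measures. The sketch below applies the textbook definitions to the DDoS column of the 60%/40% split; the function name `binary_metrics` and the dictionary layout are hypothetical, and the code assumes every denominator is non-zero.

```python
from math import sqrt

def binary_metrics(tp, tn, fp, fn):
    """Standard confusion-matrix measures (assumes non-zero denominators)."""
    sensitivity = tp / (tp + fn)   # true positive rate (recall)
    specificity = tn / (tn + fp)   # true negative rate
    precision = tp / (tp + fp)     # positive predictive value
    return {
        "Specificity": specificity,
        "Precision": precision,
        "Sensitivity": sensitivity,
        "Accuracy": (tp + tn) / (tp + tn + fp + fn),
        "MCC": (tp * tn - fp * fn)
               / sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)),
        "F-Measure": 2 * precision * sensitivity / (precision + sensitivity),
        "NPV": tn / (tn + fn),     # negative predictive value
        "FPR": fp / (fp + tn),     # equals 1 - specificity
        "FDR": fp / (fp + tp),     # equals 1 - precision
        "FNR": fn / (fn + tp),     # equals 1 - sensitivity
    }

# DDoS column, learning percentage 60% and test data 40%.
ddos_60 = binary_metrics(tp=3_115_042, tn=7_110_782, fp=349_412, fn=245_742)
for name, value in ddos_60.items():
    print(f"{name}: {value:.3f}")
```

Because FPR, FDR, and FNR are the complements of specificity, precision, and sensitivity respectively, each of them lies between 0 and 1 under these definitions.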

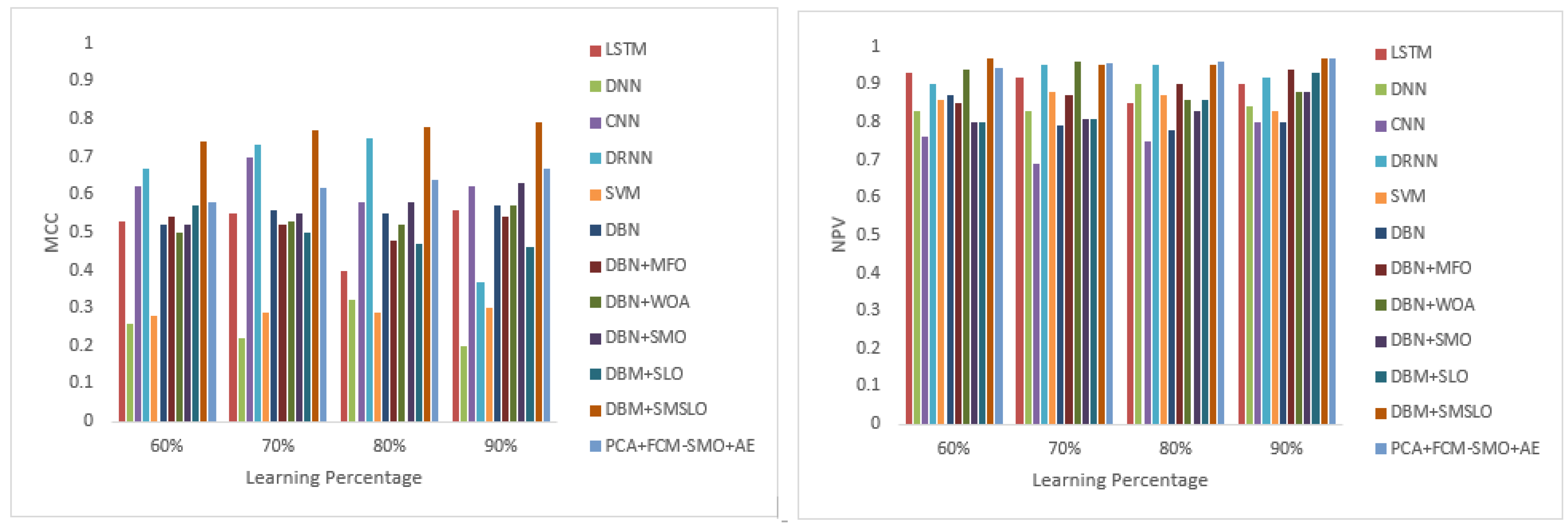

| Measure | LSTM | DNN | CNN | DRNN | SVM | DBN | DBN + MFO | DBN + WOA | DBN + SMO | DBN + SLO | DBN + SMSLO | PCA + FCM-SMO + AE |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Learning Percentage: 60% and Test Data: 40% | ||||||||||||

| Specificity | 0.920 | 0.860 | 0.800 | 0.930 | 0.850 | 0.870 | 0.910 | 0.920 | 0.880 | 0.930 | 0.940 | 0.987 |

| Precision | 0.650 | 0.400 | 0.580 | 0.580 | 0.600 | 0.590 | 0.570 | 0.590 | 0.630 | 0.620 | 0.800 | 0.937 |

| Sensitivity | 0.660 | 0.420 | 0.500 | 0.590 | 0.360 | 0.620 | 0.610 | 0.620 | 0.620 | 0.660 | 0.810 | 0.434 |

| Accuracy | 0.870 | 0.730 | 0.660 | 0.880 | 0.780 | 0.850 | 0.820 | 0.850 | 0.840 | 0.850 | 0.910 | 0.940 |

| MCC | 0.530 | 0.260 | 0.620 | 0.670 | 0.280 | 0.520 | 0.540 | 0.500 | 0.520 | 0.570 | 0.740 | 0.581 |

| F-Measure | 0.655 | 0.410 | 0.537 | 0.585 | 0.450 | 0.605 | 0.589 | 0.605 | 0.625 | 0.639 | 0.805 | 0.593 |

| NPV | 0.930 | 0.830 | 0.760 | 0.900 | 0.860 | 0.870 | 0.850 | 0.940 | 0.800 | 0.800 | 0.970 | 0.946 |

| FPR | 0.090 | 0.130 | 0.500 | 0.080 | 0.150 | 0.110 | 0.090 | 0.110 | 0.080 | 0.090 | 0.060 | 0.013 |

| FDR | 0.380 | 0.590 | 0.410 | 0.350 | 0.560 | 0.390 | 0.340 | 0.410 | 0.360 | 0.420 | 0.180 | 0.063 |

| FNR | 0.370 | 0.580 | 0.380 | 0.330 | 0.600 | 0.390 | 0.300 | 0.320 | 0.400 | 0.360 | 0.180 | 2.062 |

| Learning Percentage: 70% and Test Data: 30% | ||||||||||||

| Specificity | 0.900 | 0.840 | 0.620 | 0.950 | 0.870 | 0.830 | 0.900 | 0.940 | 0.860 | 0.950 | 0.960 | 0.990 |

| Precision | 0.540 | 0.340 | 0.720 | 0.780 | 0.620 | 0.630 | 0.590 | 0.620 | 0.680 | 0.730 | 0.810 | 0.946 |

| Sensitivity | 0.660 | 0.360 | 0.760 | 0.750 | 0.420 | 0.620 | 0.580 | 0.620 | 0.610 | 0.660 | 0.830 | 0.468 |

| Accuracy | 0.850 | 0.800 | 0.520 | 0.920 | 0.740 | 0.820 | 0.780 | 0.850 | 0.850 | 0.840 | 0.930 | 0.951 |

| MCC | 0.550 | 0.220 | 0.700 | 0.730 | 0.290 | 0.560 | 0.520 | 0.530 | 0.550 | 0.500 | 0.770 | 0.618 |

| F-Measure | 0.594 | 0.350 | 0.739 | 0.765 | 0.501 | 0.625 | 0.585 | 0.620 | 0.643 | 0.693 | 0.820 | 0.626 |

| NPV | 0.920 | 0.830 | 0.690 | 0.950 | 0.880 | 0.790 | 0.870 | 0.960 | 0.810 | 0.810 | 0.950 | 0.956 |

| FPR | 0.080 | 0.170 | 0.750 | 0.070 | 0.130 | 0.080 | 0.110 | 0.090 | 0.060 | 0.070 | 0.050 | 0.010 |

| FDR | 0.380 | 0.680 | 0.170 | 0.200 | 0.570 | 0.330 | 0.220 | 0.700 | 0.360 | 0.470 | 0.190 | 0.054 |

| FNR | 0.390 | 0.680 | 0.180 | 0.200 | 0.580 | 0.430 | 0.240 | 0.380 | 0.360 | 0.410 | 0.190 | 1.691 |

| Learning Percentage: 80% and Test Data: 20% | ||||||||||||

| Specificity | 0.850 | 0.870 | 0.780 | 0.940 | 0.840 | 0.810 | 0.850 | 0.900 | 0.920 | 0.960 | 0.950 | 0.991 |

| Precision | 0.600 | 0.540 | 0.590 | 0.680 | 0.650 | 0.580 | 0.630 | 0.630 | 0.680 | 0.620 | 0.810 | 0.949 |

| Sensitivity | 0.520 | 0.480 | 0.500 | 0.700 | 0.440 | 0.610 | 0.590 | 0.610 | 0.630 | 0.630 | 0.800 | 0.488 |

| Accuracy | 0.830 | 0.790 | 0.590 | 0.900 | 0.770 | 0.830 | 0.800 | 0.840 | 0.850 | 0.790 | 0.920 | 0.956 |

| MCC | 0.400 | 0.320 | 0.580 | 0.750 | 0.290 | 0.550 | 0.480 | 0.520 | 0.580 | 0.470 | 0.780 | 0.638 |

| F-Measure | 0.557 | 0.508 | 0.541 | 0.690 | 0.525 | 0.595 | 0.609 | 0.620 | 0.654 | 0.625 | 0.805 | 0.645 |

| NPV | 0.850 | 0.900 | 0.750 | 0.950 | 0.870 | 0.780 | 0.900 | 0.860 | 0.830 | 0.860 | 0.950 | 0.960 |

| FPR | 0.150 | 0.130 | 0.630 | 0.050 | 0.140 | 0.080 | 0.080 | 0.090 | 0.070 | 0.080 | 0.050 | 0.009 |

| FDR | 0.460 | 0.480 | 0.410 | 0.250 | 0.570 | 0.310 | 0.340 | 0.410 | 0.420 | 0.380 | 0.200 | 0.051 |

| FNR | 0.450 | 0.490 | 0.420 | 0.260 | 0.560 | 0.380 | 0.300 | 0.370 | 0.360 | 0.360 | 0.200 | 1.503 |

| Learning Percentage: 90% and Test Data: 10% | ||||||||||||

| Specificity | 0.930 | 0.830 | 0.800 | 0.900 | 0.880 | 0.890 | 0.900 | 0.880 | 0.940 | 0.960 | 0.950 | 0.991 |

| Precision | 0.650 | 0.320 | 0.710 | 0.800 | 0.650 | 0.600 | 0.610 | 0.640 | 0.730 | 0.750 | 0.820 | 0.954 |

| Sensitivity | 0.600 | 0.340 | 0.640 | 0.800 | 0.500 | 0.630 | 0.620 | 0.630 | 0.660 | 0.650 | 0.800 | 0.522 |

| Accuracy | 0.850 | 0.720 | 0.630 | 0.820 | 0.790 | 0.860 | 0.800 | 0.860 | 0.900 | 0.840 | 0.960 | 0.963 |

| MCC | 0.560 | 0.200 | 0.620 | 0.370 | 0.300 | 0.570 | 0.540 | 0.570 | 0.630 | 0.460 | 0.790 | 0.669 |

| F-Measure | 0.624 | 0.330 | 0.673 | 0.800 | 0.565 | 0.615 | 0.615 | 0.635 | 0.693 | 0.696 | 0.810 | 0.675 |

| NPV | 0.900 | 0.840 | 0.800 | 0.920 | 0.830 | 0.800 | 0.940 | 0.880 | 0.880 | 0.930 | 0.970 | 0.967 |

| FPR | 0.090 | 0.180 | 0.640 | 0.090 | 0.140 | 0.090 | 0.120 | 0.080 | 0.070 | 0.080 | 0.040 | 0.009 |

| FDR | 0.340 | 0.650 | 0.210 | 0.400 | 0.580 | 0.410 | 0.380 | 0.640 | 0.380 | 0.490 | 0.190 | 0.046 |

| FNR | 0.350 | 0.650 | 0.220 | 0.410 | 0.540 | 0.560 | 0.440 | 0.450 | 0.360 | 0.430 | 0.190 | 1.250 |

| Average Value Results | ||||||||||||

| Specificity | 0.900 | 0.850 | 0.750 | 0.930 | 0.860 | 0.850 | 0.890 | 0.910 | 0.900 | 0.950 | 0.950 | 0.990 |

| Precision | 0.610 | 0.400 | 0.650 | 0.710 | 0.630 | 0.600 | 0.600 | 0.620 | 0.680 | 0.680 | 0.810 | 0.947 |

| Sensitivity | 0.610 | 0.400 | 0.600 | 0.710 | 0.430 | 0.620 | 0.600 | 0.620 | 0.630 | 0.650 | 0.810 | 0.478 |

| Accuracy | 0.850 | 0.760 | 0.600 | 0.880 | 0.770 | 0.840 | 0.800 | 0.850 | 0.860 | 0.830 | 0.930 | 0.953 |

| MCC | 0.510 | 0.250 | 0.630 | 0.630 | 0.290 | 0.550 | 0.520 | 0.530 | 0.570 | 0.500 | 0.770 | 0.626 |

| F-Measure | 0.608 | 0.399 | 0.623 | 0.710 | 0.510 | 0.610 | 0.600 | 0.620 | 0.654 | 0.664 | 0.810 | 0.635 |

| NPV | 0.900 | 0.850 | 0.750 | 0.930 | 0.860 | 0.810 | 0.890 | 0.910 | 0.830 | 0.850 | 0.960 | 0.957 |

| FPR | 0.103 | 0.153 | 0.630 | 0.073 | 0.140 | 0.090 | 0.100 | 0.093 | 0.070 | 0.080 | 0.050 | 0.010 |

| FDR | 0.390 | 0.600 | 0.300 | 0.300 | 0.570 | 0.360 | 0.320 | 0.540 | 0.380 | 0.440 | 0.190 | 0.053 |

| FNR | 0.390 | 0.600 | 0.300 | 0.300 | 0.570 | 0.440 | 0.320 | 0.380 | 0.370 | 0.390 | 0.190 | 1.627 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

R M, B.; M K, J.K. Intrusion Detection on AWS Cloud through Hybrid Deep Learning Algorithm. Electronics 2023, 12, 1423. https://doi.org/10.3390/electronics12061423