Abstract

Measuring ontology matching is a critical issue in knowledge engineering and supports knowledge sharing and knowledge evolution. Recently, linguistic scientists have defined semantic relatedness as being more significant than semantic similarities in measuring ontology matching. Semantic relatedness is measured using synonyms and hypernym–hyponym relationships. In this paper, a systematic approach for measuring ontology semantic relatedness is proposed. The proposed approach is developed with a clear and fully described methodology, with illustrative examples used to demonstrate the proposed approach. The relatedness between ontologies has been measured based on class level by using lexical features, defining semantic similarity of concepts based on hypernym–hyponym relationships. For evaluating our proposed approach against similar works, benchmarks are generated using five properties: related meaning features, lexical features, providing technical descriptions, proving applicability, and accuracy. Technical implementation is carried out in order to demonstrate the applicability of our approach. The results demonstrate an achieved accuracy of 99%. The contributions are further highlighted by benchmarking against recent related works.

1. Introduction

Recently, ontology has been described as one of the most widespread modern knowledge representation techniques, which provides a source of precise knowledge; an ontology can be considered as a formal definition of concepts along with relationships between these concepts. In the knowledge engineering domain, the primary aims of ontologies are knowledge representation and knowledge sharing. In the past two decades, ontologies have been used prominently for representing information in a machine-readable and processable way [1].

More recently, ontologies have been used for exchanging business and scientific data via authenticated and standardized knowledge representation structures, replacing older techniques. In this context, there have been many efforts to develop and implement ontologies from scientific and commercial perspectives. The quality of an ontology is highly dependent on both the knowledge that it represents and the format that is used. Knowledge concerning identical concepts could be represented by different syntaxes. Hence, the existence of multiple ontologies with different syntaxes yet identical same semantics is logical and justifiable.

On the other hand, according to [2], the ontology should have a continuous maintenance process to keep it contemporary as a response to the updates and challenges in the domain it models. In addition, usually, users are aided with a specific ontology to accomplish their tasks, for instance, searching data, and at some point, they will need to expand it to adapt to the dynamic nature of their requirements. In addition, [3,4] highlight that finding correspondences between entities of two or more given ontologies, known as “ontology matching,” is a challenging task.

Measuring the similarity between two ontologies is a potential area for innovation and improvement in the ontology industry. Applying intelligent solutions for automatically calculating similarities between ontologies is a challenging task with applications in many real-world applications that require the integration of semantic information [5], such as sentiment analysis of Twitter posts [6,7].

According to [8], semantic similarity could be measured using synonyms, hyponyms, and hypernyms. A hypernym is a generalization of a more specific term; for instance, color is a hypernym of red. A hyponym is a term that is more specific than a given more general term, i.e., a term whose meaning is included in that of another term; for instance, red is a hyponym of color. A synonym of a term is one that has the same meaning; two concepts are considered to be synonymous if they are having the same meaning; for instance, red is a synonym for ruby.

The linguistic-semantic technique is widely used for measuring similarities between ontologies, i.e., matching lexical features between ontologies [9]. The linguistic-semantic technique is concerned with measuring similarities between lexical units and finding taxonomic relations. In knowledge engineering, taxonomic relations in an ontology are denoted by the hierarchical arrangement of concepts based on hypernym/hyponym relations. The development of taxonomic relations serves as the foundation of ontology construction [10].

In the knowledge engineering domain, measuring semantic relations, i.e., semantic relatedness between ontologies can be achieved based on lexical ontology units. In this context, there are numerous research works that investigate measuring ontology similarities, yet, measuring semantic relatedness demands more attention [11]. Semantic relatedness between words in ontologies is the main conceptualization for measuring ontology similarities [12] and is analyzed and defined by examined hypernym/hyponym relations [13].

In this paper, we propose a new approach for defining semantic relatedness between ontologies based on measuring synonym, hyponym, and hypernym components. Illustrative running examples are used for presenting and explaining our approach. Logic rules have been used effectively for representing semantic components of an ontology [14]; therefore, we use logic rules for representing our assumptions and definitions. For proving its applicability, we implement our approach in Python. The accuracy of our approach is evaluated using precision and recall metrics. Finally, we use standard benchmarking for highlighting our contributions, based on comparisons with previous similar works.

2. Related Works

In this section, we review related works that are used lexical relations, particularly the hypernym–hyponym relationship.

Ref. [15] designed a method for evaluating the inter-relationship between ontology terms using a graph structure of the seed ontology. Reference [16] proposed a method for calculating a ratio between hypernym and hyponym between ontologies by using the Levenshtein distance algorithm; the aim was to define semantic similarity value. Reference [5] illustrates the importance of discovering hypernym relationships between ontologies for measuring semantic similarities. The study [5] developed an automatic approach for discovering hyponym relationships across ontology elements. However, the approach interferes with discovering synonym relationships and thus leads to curtailment of the accuracy. Reference [17] proposed a probabilistic method for contextual advertising based on ontology. This method assigns weights to the candidate instances based on the probability that it is a hypernym of a concept, reflecting the importance of measuring hypernym relationships. Reference [18] addressed the problem of matching foundational ontologies by defining the hypernym relation carried out in the definitions layout, i.e., ontology meta-data. Reference [19] developed an ontology-based approach for labeling influential topics of scientific articles by using synonym, hypernym, and homonym relationships. The study [20] used hypernym and homonym relationships to define entity matching in ontology-based attribute graphs.

Ref. [21] developed a method for calculating the availability of information content in an ontology based on hypernyms-hyponyms taxonomic knowledge. Reference [1] developed a semantic matching framework for the integration of large knowledge bases using the linguistic-semantic technique, using the WordNet electronic dictionary as a data source, and evaluating using the precision and recall technique. Reference [22] developed ad hoc algorithms using probabilistic association to analyze lexical units; these algorithms generate a directed acyclic graph for finding hypernyms. Reference [10] designed a method for extracting taxonomic relations from a domain text by using a combination of the web approach and word sense disambiguation, using search engines for collecting targeted patterns, and matching each pattern with its corresponding hypernym from Wordnet.

Ref. [23] developed an approach for extending a learning ontology-based model by adding missing concepts. The missing concepts are defined by examining associated ontologies and evaluating similarities based on linguistic patterns such as hyponym-hypernym measurement. Reference [24] used the relationship of synonyms, hypernyms, and homonyms for the explanation of ontology queries. The results demonstrated the importance of discovering the synonym, hypernym, and homonym relationships. Reference [25] proposed a method for enhancing query sentences for ontology-oriented search by defining hypernym and homonym relationships. Reference [26] proposed a method to deal with semantic heterogeneity where data are organized into two different layers by defining hypernym and homonym relationships. Reference [27] proposed a model for ontology matching for large-scale ontologies in big data, which is based on probability, logical, and similarities between lexical units.

Ref. [28] addressed the challenge of the continuous changing of the ISO 27001-based security ontology by defining the hypernyms and hyponyms for security concepts. The hypernyms and hyponyms were extracted from DBpedia. Reference [29] used the WordNet lexical database to define hypernym relation similarities by comparing vocabularies one by one. Reference [30] proposed a model for automatically extracting Arabic text by parsing sentences meaning which can be used for developing ontologies. This method used hypernym and hyponym relationships to develop a query triple-answering method. Reference [12] proposed a semantic evaluation dataset for defining semantic similarity and relatedness. This study confirms the importance of word relatedness in measuring semantic similarity. Reference [31] developed an ontology for IT Operations that is applicable to IT dynamic applications. This ontology has been evaluated by measuring its similarity based on linguistic patterns, i.e., by hyponym-hypernym measurement. Reference [32] used synonym, antonym, hypernym, and hyponym relationships for extracting and analyzing Quran concepts. Reference [33] developed an approach to enhance the measurement of semantic relationships between hypernym-based concepts and homogeneous concepts. The results proved the usefulness of integrating multiple classifications in defining ontologies matching. Reference [34] defined three disciplines for ontology matching which are databases, natural language processing, and machine learning. The research proved high potentiality for developing automation tools in ontology matching based on Natural language processing (NLP). Table 1 summarizes the considered related works in terms of purposes and methodologies used. The work in [35] used standard algorithms for measuring semantic relatedness between ontologies. The approach in [35] associated semantic similarity with relatedness, while our approach provides a flexible approach that measures synonym and homonym relatedness separately.

Table 1.

Summary of related works.

The aforementioned discussion of selected related works indicates a research gap, specifically a decisive demand for considering semantic relatedness in measuring ontology similarities. In addition, there is a need for defining relatedness with a specific percentage threshold.

3. Methodology

In this section, our methodology to measure the matching between two ontologies is explained in detail, accompanied by illustrated examples. An ontology is described in terms of classes and associated attributes [36]. Hence, our methodology has been developed based on measuring the matching between classes of targeted ontologies. In other words, the methodology starts the measuring process from the low level of ontology, which is the class. In the following, assumptions and definitions have been described using mathematical logic. The letter “O” denotes an ontology, the letter “C” denotes a class, and the letters “DP” denote a data property.

Assumption 1.

∀ O, Ǝ C: ontology(O) ∧class (C) ∧ contain(O, C) ⟹ True

Assumption 2.

∀ C, Ǝ DP: class (C) ∧ data_property(DP) ∧ contain(C, DP) ⟹ True

Assumption 1 states that any ontology has at least one class; Assumption 2 states that any class is described by at least one data property. Our methodology is based on calculating similarities between ontologies’ classes, therefore, any ontology that does not include at least one class, or includes a class without data properties cannot be considered in our approach.

Definition 1.

∀ C1, C2, O1, O2: class(C1) ∧ class(C2) ∧ ontology (O1) ∧ ontology (O2) ∧ C1 ∈ O1 ∧ C2 ∈ O2 ∧ similarity (C1, C2) ⟹ similarity (O1, O2)

Definition 1 states that where class C1 belongs to ontology O1 and class C2 belongs to ontology O2, the similarity between ontologies O1 and O2 is defined as the similarity between classes C1 and C2.

Definition 2.

∀ O1, O2: ontology (O1) ∧ ontology (O2) ⟹ similarity (O1, O2) ≠ similarity (O2, O1)

Definition 2 states that the similarity between ontology “A” and ontology “B” is not equal to the similarity between ontology “B” and ontology “A”.

To measure the similarity between two classes, or between a class and a subclass, the following steps should be executed in order.

- Prepare the properties of each class by applying the normalization process which is:

- Remove stopping words; e.g.: “of”, “in”, or “the”;

- Remove class signs; e.g.: suppose the class name is “car” and the property is “car model” then the data property will be “model”;

- Apply stemming for each data property.

- Apply cartesian product to compare the properties of the two targeted classes. The comparison becomes true if and only if one of the following applies:

- The properties are synonyms of each other;

- If one data property has two words and another data property has one word, then the comparison becomes true if there is at least one word similar. For instance, the class “car” has the data property “plate number” and the class “vehicle” has the data property “number”, these two data properties could be considered similar;

- If the two data properties have two or more words: (1) remove similar words; (2) if the remaining words are synonyms of each other, then similarity becomes true. For instance, consider the data property “close date” and the data property “date open”, then the word “date” will be eliminated; the word “close” is not a synonym of the word “open”, thus these two data properties are not similar. As another example, consider the data property “open date” and the data property “access date”, the word “date” will be eliminated, and the word “open” is a synonym of the word “access”, thus the two data properties are similar.

This proposed approach follows corpus-based approaches where similarity is counted based on the number of overlapping data properties between the two ontologies [37].

We now give illustrated examples of the proposed methodology.

Example 1.

Consider the following two classes: “Scientific article” and “research” which belong to two different ontologies, Table 2 shows the data properties of these two classes.

Table 2.

Data properties of classes scientific article and research.

Before applying the proposed methodology, the data properties of each class are modeled as a vector. We now implement the steps of the proposed methodology:

- 3.

- Remove the stopping words.

The first step is removing the stopping words. Table 3 shows the data properties after removing the stopping words. For instance, the data property “decision of article” has become “decision article”.

Table 3.

Data properties after removing the stopping words.

- 4.

- Remove the class signs.

The second step is removing the class signs. For instance, the property “article author” has become “author”. Table 4 shows data properties after removing the class signs. Table 4 is developed based on results from Table 3, i.e., after the stopping words have been removed in step 1.

Table 4.

Data properties after removing the class signs.

- 5.

- Stemming.

The third step is stemming for all data properties. For instance, the data property “identifier” from class “paper” will be stemmed to be “identify”. Table 5 shows data properties after applying stemming. Table 5 is developed based on results from Table 4, i.e., after removing the class signs.

Table 5.

Data properties after applying stemming.

- 6.

- Apply cartesian product to properties.

The fourth step is to apply cartesian product to the properties. In this step, each property from the class “Scientific article” has been placed with each property from the class “research”. For instance, the data property “author” from class “Scientific article” has been placed against each property class “research”, the result becomes: ((author, research); (author, date submission); (author, title); (author, decision); (author, paper identify), (author, field)). Table 6 shows the cartesian product of the properties, developed based on the results from Table 5, i.e., after stemming the data properties.

Table 6.

Cartesian product of properties.

- 7.

- Comparison

The preceding steps illustrate the normalization process and the fifth step is compares each pair of properties to discover similarities. The similarity is calculated based on the conditions detailed in step 2 of the approach. Table 7 shows the positive results of the comparison process with the appropriate causal reason. Table 7 is developed based on results from Table 6, i.e., the comparison is computed between each cartesian pair.

Table 7.

The positive results of comparison process.

Definition 3 defines the calculation for similarity percentage between classes, where similarity could be due to either synonyms or hypernyms.

Definition 3.

∀ C1, C2, No, T1, T2: class(C1) ∧ class(C2) ∧ (No = number of similar data properties between C1 and C2) ∧ (T1 = total number of data properties of C1) ∧ (T2 = total number of data properties of C2) ⟹ (similarity(C1, C2) = (No % T1)) ∧ (similarity (C2, C1) = (No % T2)).

In Definition 3, the similarity between two classes A and B is taken from Definition 2: similarity (A, B) = number of similar synonym data properties between classes A and B, divided by the total number of data properties in class A. As stated in Definition 2, the similarity between two classes A and B is not equal to the similarity between B and A. Therefore, the similarity between B and A is calculated as: similarity (B, A) = number of similar data properties between A and B, divided by the total number of data properties in class B.

Using Definition 3, we can now calculate synonym similarity between the two classes “scientific article” and “research”. The results from Table 6 show a total of five similar synonym data properties between class “Scientific Article” and class “research”. In other words, there are five cartesian pairs that are similar. Class “scientific article” has six data properties, five of which are similar to data properties from class “research”; therefore, synonyms_similarity (scientific article, research) = 83.33%. Class “research” has seven data properties, five of them are similar to data properties from class “scientific article”; therefore, synonyms_similarity (research, scientific article) = 71.43%.

In the modern linguistic-semantic technique, semantic relatedness is described by finding taxonomic relations. A taxonomic relation is defined by synonyms, hypernyms, and hyponyms.

Given that hypernyms and hyponyms could be considered two sides of the same coin, we have calculated hypernym relatedness.

Hence, defining similarity based on similar synonyms is not sufficient. We now demonstrate checking for hypernym relation means of an illustrated example.

Example 2.

Consider two classes, A and B. Table 8 shows the data properties of these two classes.

Table 8.

Data properties of classes A and B from example 2.

Table 9 shows the result of hypernym similarity of data properties between classes A and B.

Table 9.

Result of hypernym similarity of data properties between classes A and B.

Table 10 shows the result of hypernym similarity of data properties between classes B and A.

Table 10.

Result of hypernym similarity of data properties between classes B, and A.

Using Definition 3, we calculate hypernym similarity between classes A and B. Class A has eight data properties, with two of them being hypernyms for data properties from Class B; therefore, hypernym_similarity (A, B) = 25%.

Class B has nine data properties, with four of them being hypernyms for data properties from class A; therefore, hypernym_similarity (B, A) = 44.44%.

In the following section, the applicability of our proposed approach has been presented.

Appendix A shows an example of a relatedness measurement between ontology with one class with ontology with hierarchically organized classes. Appendix B shows an example of a relatedness measurement between two published ontologies.

4. Implementation

In computing, examining the applicability of a proposed solution is critical in proving the success, and acceptability of the approach. In this section, the applicability of our proposed approach is demonstrated by means of implementation and benchmarking.

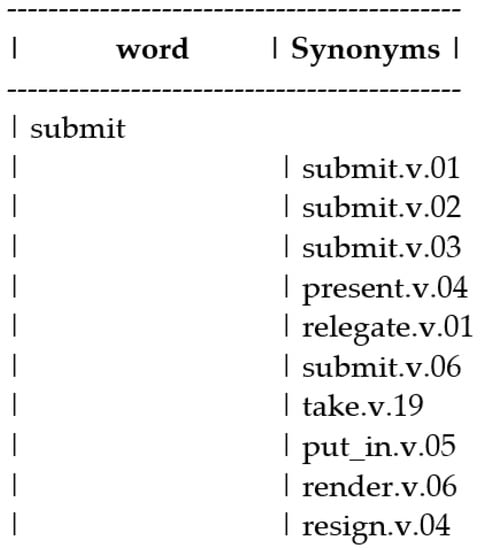

The WordNet lexical database [38], has been used to obtain a set of synonyms and hyponyms for every given word. Figure 1 shows the output from finding all synonyms of the word “submit”.

Figure 1.

All synonyms of the word “submit”, as extracted from WordNet.

To implement the calculation of synonyms similarly (as per Example 1), the following steps were taken:

- Select one word from the cardinality pair;

- Extract all synonyms of the selected word from WordNet;

- Check all extracted synonyms against the other word in the cardinality pair. The comparison flag of the cardinality pair becomes true if one word in the cardinality pair is a synonym of another word in the cardinality pair. The full explanation of this scenario has been presented in Example 1 in Section 3.

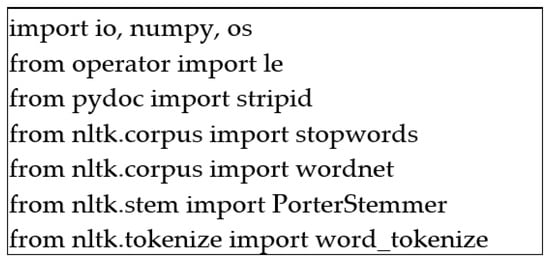

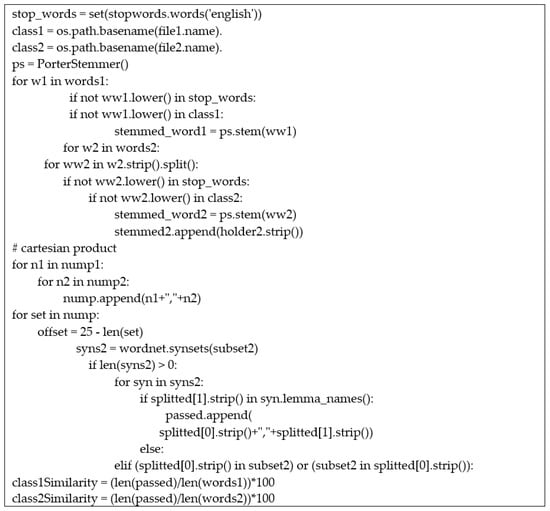

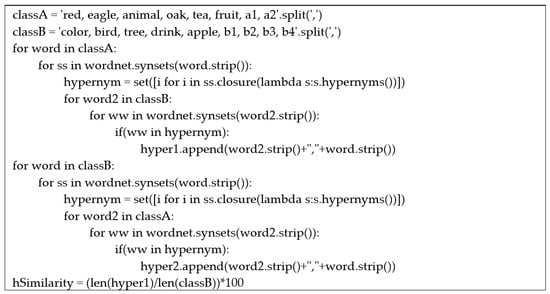

Figure 2 shows the external Python libraries that have been used in the implementation. Figure 3 shows the algorithm, written in Python, for calculating synonym similarities between two classes.

Figure 2.

The external libraries that have been used in implementation.

Figure 3.

Algorithm of calculating synonyms between two classes.

The algorithm in Figure 3 has been used for implementing Example 1 using the Python programming language and the NLTK library.

The following steps are followed for implementing hypernym similarity:

- Select one word from the cardinality pair;

- Extract all hypernyms of the selected word from WordNet;

- Check all the extracted hypernyms against the other word in the cardinality pair. The comparison status of the cardinality pair becomes true if one word in the cardinality pair is a hypernym of another word in the cardinality pair. The full explanation of this scenario has been presented in Example 2 in Section 3.

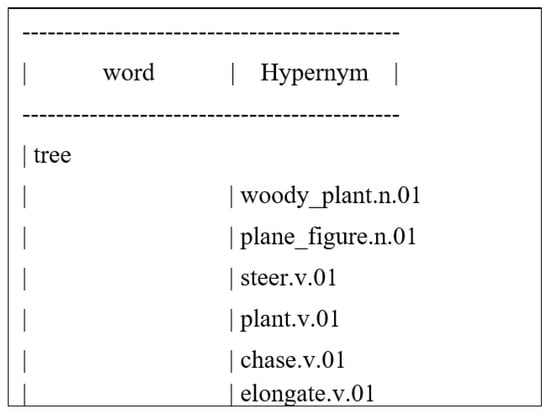

Figure 4 shows all hypernyms of the word “tree” as extracted from WordNet.

Figure 4.

All hypernyms of a word “tree” as extracted from WordNet.

Figure 5 shows the algorithm, written in Python, for calculating hypernym similarity between classes “A” and “B” and between classes “B” and “A”.

Figure 5.

Algorithm for calculating hypernym similarity between classes “A” and “B” and classes “B” and “A”.

The algorithm in Figure 5 implements Example 2 using the Python programming language and the NLTK library. The implementation of these two algorithms demonstrates the theoretical and practical applicability of our proposed approach.

5. Evaluation

In the following section, accuracy has been validated by using precision, and recall parameters.

In the knowledge engineering domain, in addition to the applicability, accuracy must also be tested and validated for any proposed solution. We consider two facts for validating the accuracy of our proposed approach which are: (1) In this type of research, the accuracy of results is evaluated by measuring the systems’ outputs against reference sets [39]. (2) In semantic similarities systems, measuring the accuracy using precision and recall parameters has been used as a standard approach [40]. Hence, we have considered the two mentioned previously facts for measuring the accuracy of our proposed approach. Regarding the first fact, WordNet has been chosen as a reference set. Regarding the second fact, precision and recall values are calculated.

Our evaluation procedure, which follows our proposed methodology, is as follows:

- Create twenty classes by combining groups of five words in each class randomly. The words are collected by student volunteers’, and each volunteer creates four classes.

- Randomly divide the twenty classes into ten groups, two classes per group. The classes in each group are measured for similarity using our proposed methodology.

- Generate the cartesian pair for each group (two classes) as has been explained in the methodology.

- Calculate precision, and recall as follows:Precision = TP/(TP + FP)where:Recall = TP/(TP + FN)TP = True positiveFP = False positiveTN = True negativeFN = False negative

In this evaluation, we have ten groups of classes, each group having two classes, each class having five words. The synonym and hypernym similarities have been measured for each group. The size of the cartesian products for each group is twenty-five. For the ten groups, we therefore measured 250 cartesian pairs in total.

Table 11 shows the results of TP, FP, TN, and FN.

Table 11.

The results of TP, FP, TN, and FN.

- The last step in this evaluation procedure is calculating the accuracy of our proposed approach. Accuracy represents the number of correctly classified data instances over the total number of data instances. Hence, we use the following equation to define accuracy:Accuracy = TP + TN/(TP + TN + FP + FN).

Using this equation, accuracy is 99%.

6. Discussion and Conclusions

The applicability and accuracy of our proposed approach have been demonstrated and proved. The following section contains the discussion and conclusion. In the knowledge engineering domain, knowing the similarity between ontologies is a significant issue and cornerstone in the processes of knowledge evolution and knowledge sharing. In the literature, various techniques have been used for determining the similarity between ontologies, such as deep learning, support vector machine, and computational linguistic methods. Among these, computational linguistic methods are considered the most practical technique. In language computational methods, measuring synonym similarity is the main technique for ontology matching. Recently, scientists in linguistic semantics are considering semantic relatedness as a more accurate metric for semantic matching.

Semantic relatedness can be measured by calculating both synonym and hypernym similarities. Therefore, measuring semantic relatedness between ontologies is providing deep insight into ontologies’ relevancy. In this paper, a systematic approach for measuring ontology semantic relatedness has been introduced.

In this research, we have combined concepts from linguistics and knowledge engineering sciences for measuring relatedness between ontologies. To the best of our knowledge, this is the first research that provides a flexible relatedness measurement methodology between ontologies at the class level.

The proposed approach provides a flexible measurement methodology. The relatedness between two ontologies is measured on a class-based level, i.e., at a low level of the ontologies. Synonym and hypernym similarities are measured separately using the standard WordNet lexical database. This paper provides a methodology with open technical details, which draws a clear picture of how our approach has been developed. First, a formalization of the tokenization of class data properties is described, followed by the creation of the cartesian pair of the two classes being compared. WordNet is then used as a reference model for calculating synonym and hypernym similarities. The applicability of our proposed approach has been demonstrated by implementation using the Python programming language. The accuracy of our proposed approach is demonstrated by calculating the recall and precision parameters, which show the accuracy of our model to be 99%. To the best of our knowledge, this is the first approach to introduce ontology semantic relatedness with full technical details. The benefits of using computational linguistics for measuring ontology matching have been proven over other methodologies; hence, we have adopted computational linguistic approaches, so that our contributions can be compared with others. The contributions of our proposed approach are now highlighted based on a comparison with previous related works.

Scientific benchmarking is used for evaluating our proposed approach with similar works, which consists of five properties: related meaning features, lexical features, providing technical descriptions, proving applicability, and accuracy. We now give further explanation of each parameter:

- Related meaning features: defining semantic similarities of concepts based on synonyms similarity relations.

- Lexical features: defining semantic similarities of concepts based on hypernym–hyponym relations.

- Providing technical descriptions: where technical explanations of the proposed method have been presented, where the reader can develop a deep understanding of the theory and be able to test and evaluate it.

- Proving applicability: providing a programming implementation for the proposed theory.

- Accuracy: where the accuracy of the proposed theory has been tested and presented.

Table 12 shows the results of the scientific benchmarking of our proposed approach with related works. The “√” symbol denotes that this research has considered the property, and “X” signifies that the property has not been considered.

Table 12.

The results of the benchmark comparison.

By considering Table 11, it is clear that our proposed approach has successfully satisfied all the benchmark parameters.

In this paper, we have used WordNet as a reference model. In future work, we are planning to use more than one standard reference, such as DBpedia. This way, the similarities are extracted based on more than one standard reference.

Author Contributions

Conceptualization, A.O.E. and Y.H.A.; methodology, A.O.E. and Y.H.A.; software, A.O.E. and Y.H.A.; validation, A.O.E. and Y.H.A.; formal analysis, A.O.E. and Y.H.A.; investigation, A.O.E. and Y.H.A.; resources, A.O.E.; data curation, Y.H.A.; writing—original draft preparation, A.O.E.; writing—review and editing, A.O.E. and Y.H.A.; visualization, A.O.E.; supervision, A.O.E.; project administration, A.O.E. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Deanship of Scientific Research at University of Tabuk, Saudi Arabia (Research No. S-0187-1442).

Data Availability Statement

Not applicable.

Acknowledgments

The authors extend their appreciation to the Deanship of Scientific Research at University of Tabuk for funding this work through Research no. S-0187-1442.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

As detailed in Section 5, “The relatedness between two ontologies is measured on a class-based level, i.e., at a low level of the ontologies”. Therefore, we now consider the provided example:

Library classification:

Book with properties {author, title, number of pages, topic}.

Newspaper with properties {date, title, number, size of paper, editor}.

Scientific paper with properties {journal title, authors, affiliations, title, page number, PACS}.

This will be divided into four classes:

Class 1: Publication with properties {publication year, country, city, publishing house}.

Class 2: Book with properties {author, title, number of pages, topic}.

Class 3: Newspaper with properties {date, title, number, size of paper, editor}.

Class 4: Scientific paper with properties {journal title, authors, affiliations, title, page number, PACS}.

For instance, consider we need to compare “library” ontology with “Scientific article “ontology from Example 1. Then, the compression will be conducted based on class level. In the following, our proposed algorithm has been followed to measure the relatedness between “library” ontology and “Scientific article” ontology.

| Ontology/Class | Data Properties |

| Scientific Article | Article author; submission date; article title, decision of the article; article identifier; domain |

| Library.Publication | Publication year, country, city, publishing house |

| Library.Book | Author, title, number of pages, topic |

| Library.Newspaper | Date, title, number, size of paper, editor |

| Library.Scientific paper | Journal title, authors, affiliations, title, page number, PACS |

Appendix A.1. Step 1: Remove the Stopping Words

| Ontology/Class | Data Properties after Removing Stop Words |

| Scientific Article | Article author; submission date; article title, decision article; article identifier; domain |

| Library.Publication | Publication year, country, city, publishing house |

| Library.Book | Author, title, number pages, topic |

| Library.Newspaper | Date, title, number, size paper, editor |

| Library.Scientific paper | Journal title, authors, affiliations, title, page number, PACS |

Appendix A.2. Step 2: Remove the Class Sign

| Ontology/Class | Data Properties after Removing Class Sign |

| Scientific Article | Author; submission date; title, decision; identifier; domain |

| Library.Publication | Year, country, city, publishing house |

| Library.Book | Author, title, number pages, topic |

| Library.Newspaper | Date, title, number, size, editor |

| Library.Scientific paper | Journal title, authors, affiliations, title, page number, PACS |

Appendix A.3. Step 3: Stemming

| Ontology/Class | Data Properties after Stemming |

| Scientific Article | Author; submit date; title, decide; identify; domain |

| Library.Publication | Year, country, city, publish house |

| Library.Book | Author, title, number page, topic |

| Library.Newspaper | Date, title, number, size, editor |

| Library.Scientific paper | Journal title, author, affiliation, title, page number, PACS |

Appendix A.4. Step 4: Apply Cartesian Product to the Properties

Appendix A.4.1. Apply Cartesian Product to the Properties between “Scientific Article” and “Library.Publication” Classes

| (author, year); (author, country); (author, city); (author, publish house); |

| (submit date, year); (submit date, country); (submit date, city); (submit date, publish house); |

| (title, year); (title, country); (title, city); (title, publish house); |

| (decide, year); (decide, country); (decide, city); (decide, publish house); |

| (identify, year); (identify, country); (identify, city); (identify, publish house); |

| (domain, year); (domain, country); (domain, city); (domain, publish house); |

Appendix A.4.2. Apply Cartesian Product to the Properties between “Scientific Article” and “Library.Book” Class

| (author, author); (author, title); (author, number page); (author, topic) |

| (submit date, author); (submit date, title); (submit date, number page); (submit date, topic) |

| (title, author); (title, title); (title, number page); (title, topic) |

| (decide, author); (decide, title); (decide, number page); (decide, topic) |

| (identify, author); (identify, title); (identify, number page); (identify, topic) |

| (domain, author); (domain, title); (domain, number page); (domain, topic) |

Appendix A.4.3. Apply Cartesian Product to the Properties between “Scientific Article” and “Library.Newspaper” Class

| (author, date); (author, title);(author, number);(author, size); (author, editor) |

| (submit date, date); (submit date, title);( submit date, number);( submit date, size); (submit date, editor) |

| (title, date); (title, title);(title, number);(title, size); (title, editor) |

| (decide, date); (decide, title);( decide, number);( decide, size); (decide, editor) |

| (identify, date); (identify, title);( identify, number);( identify, size); (identify, editor) |

| (domain, date); (domain, title);( domain, number);( domain, size); (domain, editor) |

Appendix A.4.4. Apply Cartesian Product to the Properties between “Scientific Article” and “Library. Scientific Paper” Class

| (author, journal title); (author, author); (author, affiliation); (author, title); (author, page number); (author, PACS); |

| (submit date, journal title); (submit date, author); (submit date, affiliation); (submit date, title); (submit date, page number); (submit date, PACS); |

| (title, journal title); (title, author); (title, affiliation); (title, title); (title, page number); (title, PACS); |

| (decide, journal title); (decide, author); (decide, affiliation); (decide, title); (decide, page number); (decide, PACS); |

| (identify, journal title); (identify, author); (identify, affiliation); (identify, title); (identify, page number); (identify, PACS); |

| (domain, journal title); (domain, author); (domain, affiliation); (domain, title); (domain, page number); (domain, PACS); |

Appendix A.5. Similarity

There is no synonyms similarity between “scientific article” and “library.publication” classes.

Synonyms_Similarity (scientific article, library.publication) = 0

Synonyms similarity between “scientific article” and “library.book” classes

| (author, author) | Yes |

| (title, title) | Yes |

Synonyms_Similarity (scientific article, library.book) = 33.33%

Synonyms similarity between “scientific article” and “library.newspaper” classes

| (submit date, date) | Yes |

| (title, title) | Yes |

Synonyms_Similarity (scientific article, library.newspaper) = 33.33%

Synonyms similarity between “scientific article” and “library. scientific paper” classes

| (author, author) | Yes |

| (title, journal title) | Yes |

| (title, title) | Yes |

Synonyms_Similarity (scientific article, library.newspaper) = 50%

Result of hypernym similarity of data properties between classes “scientific article” and “library.publication”

| (submit date, year) | Yes |

Hypernym_Similarity (scientific article, library.publication) = 16.66 %

Result of hypernym similarity of data properties between classes “scientific article” and “library. book”

| (title, topic) | Yes |

| (domain, topic) | Yes |

Hypernym_Similarity (scientific article, library.book) = 33.33%

Result of hypernym similarity of data properties between classes “scientific article” and “library.newspaper”

Hypernym_Similarity (scientific article, library. newspaper) = 0

Result of hypernym similarity of data properties between classes “scientific article” and “library.scientific paper”

Hypernym_Similarity (scientific article, library.scientific paper) = 0

As a conclusion, the relatedness between the “scientific article” and “library” ontologies has been measured based on class level.

Appendix B

To measure relatedness between two published ontologies, we have considered these two published ontologies:

First ontology is ‘People Ontology’, which is published in:

https://spec.edmcouncil.org/fibo/ontology/FND/AgentsAndPeople/People/ (10 January 2023).

The data properties are:

(adult, age of majority, birth certificate, date of birth, date of death, death certificate,

driver’s license, identity document, incapacitated adult, working, name,

passport number, person name, place of birth, residence, relative).

The second ontology is ‘Person Ontology’, which is published at

Person Class

The data properties are:

(identifier, date of birth, date of death, mother, father, spouse, Job, associated Income, driving id, address, phone, name).

After applying our proposed algorithm:

| Cardinality pair | Synonyms Similarity |

| (identify, identity document) | Yes |

| (date birth, date birth) | Yes |

| (date death, date death) | Yes |

| (name, name) | Yes |

| Cardinality pair | Hypernym Similarity |

| (father, relatives) | Yes |

| (mother, relatives) | Yes |

| (spouse, relatives) | Yes |

| (drive id, drive license) | Yes |

| (Address, residence) | Yes |

| (Job, working) | Yes |

The result of relatedness between person and people ontologies based on person classes in both is:

synonyms_similarity (person.person, people.person) = 33.33%

hypernym_similarity (person.person, people.person) = 50%

References

- Rinaldi, A.M.; Russo, C.; Madani, K. A Semantic Matching Strategy for Very Large Knowledge Bases Integration. Int. J. Inf. Technol. Web Eng. 2020, 15, 1–29. [Google Scholar] [CrossRef]

- Zablith, F.; Antoniou, G.; D’Aquin, M.; Flouris, G.; Kondylakis, H.; Motta, E.; Sabou, M. Ontology Evolution: A Process-Centric Survey. Knowl. Eng. Rev. 2015, 30, 45–75. [Google Scholar] [CrossRef]

- Portisch, J.; Hladik, M.; Paulheim, H. Background Knowledge in Schema Matching: Strategy vs. Data. In Proceedings of the International Semantic Web Conference, Cham, Switzerland, 24 October 2021. [Google Scholar] [CrossRef]

- Detges, U. Cognitive Semantics in the Romance Languages. In Oxford Research Encyclopedia of Linguistics; Oxford University Press: Oxford, UK, 2022. [Google Scholar] [CrossRef]

- Huitzil, I.; Bobillo, F.; Mena, E.; Bobed, C.; Bermúdez, J. Some Reflections on the Discovery of Hyponyms Between Ontologies. In Proceedings of the 21st International Conference on Enterprise Information Systems, Heraklion, Greece, 1 January 2019. [Google Scholar] [CrossRef]

- Kontopoulos, E.; Berberidis, C.; Dergiades, T.; Bassiliades, N. Ontology-Based Sentiment Analysis of Twitter Posts. Expert Syst. Appl. 2013, 40, 4065–4074. [Google Scholar] [CrossRef]

- Vidanagama, D.U.; Silva, A.T.P.; Karunananda, A.S. Ontology Based Sentiment Analysis for Fake Review Detection. Expert Syst. Appl. 2022, 206, 117869. [Google Scholar] [CrossRef]

- Raad, J.; Cruz, C. A Survey on Ontology Evaluation Methods. In Proceedings of the International Conference on Knowledge Engineering and Ontology Development, Part of the 7th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management, Lisbon, Portugal, 12 November 2015. [Google Scholar] [CrossRef]

- Maksimov, N.; Lebedev, A. Knowledge Ontology System. Procedia Comput. Sci. 2021, 190, 540–545. [Google Scholar] [CrossRef]

- Doan, P.H.; Arch-Int, N.; Arch-Int, S. A Semantic Framework for Extracting Taxonomic Relations from Text Corpus. Int. Arab. J. Inf. Technol. 2020, 17, 325–337. [Google Scholar] [CrossRef]

- Harispe, S.; Ranwez, S.; Janaqi, S.; Montmain, J. Semantic Similarity from Natural Language and Ontology Analysis; Synthesis Lectures on Human Language Technologies; Springer: Heidelberg, Germany, 2015; Volume 8, pp. 1–256. [Google Scholar] [CrossRef]

- Salaev, U.; Kuriyozov, E.; Gómez-Rodríguez, C. SimRelUz: Similarity and Relatedness Scores as a Semantic Evaluation Dataset for Uzbek Language. Available online: http://arxiv.org/abs/2205.06072 (accessed on 20 December 2022).

- Stevenson, S.; Merlo, P. Beyond the Benchmarks: Toward Human-Like Lexical Representations. Front. Artif. Intell. 2022, 5, 796741. [Google Scholar] [CrossRef]

- Elfaki, A.; Aljaedi, A.; Duan, Y. Mapping ERD to Knowledge Graph. In Proceedings of the 2019 IEEE World Congress on Services (SERVICES), Milan, Italy, 8–13 July 2019; Volume 2642, pp. 110–114. [Google Scholar] [CrossRef]

- Zhao, G.; Zhang, X. Domain-Specific Ontology Concept Extraction and Hierarchy Extension. In Proceedings of the 2nd International Conference on Natural Language Processing and Information Retrieval, Bangkok, Thailand, 7 September 2018. [Google Scholar] [CrossRef]

- Wang, T.; Montarnal, A.; Truptil, S.; Benaben, F.; Lauras, M.; Lamothe, J. A Semantic-checking based Model-driven Approach to Serve Multi-organization Collaboration. Procedia Comput. Sci. 2018, 126, 136–145. [Google Scholar] [CrossRef]

- Chen, J.Y.; Zheng, H.T.; Jiang, Y.; Xia, S.T.; Zhao, C.Z. A Probabilistic Model for Semantic Advertising. Knowl. Inf. Syst. 2019, 59, 387–412. [Google Scholar] [CrossRef]

- Kamel, M.; Schmidt, D.; Trojahn, C.; Vieira, R. Hypernym Relation Extraction for Establishing Subsumptions: Preliminary Results on Matching Foundational Ontologies. CEUR Workshop Proc. 2019, 2536, 36–40. [Google Scholar]

- Kim, H.H.; Rhee, H.Y. An Ontology-Based Labeling of Influential Topics Using Topic Network Analysis. J. Inf. Process. Syst. 2019, 15, 1096–1107. [Google Scholar] [CrossRef]

- Ma, H.; Chiang, F.; Alipourlangouri, M.; Wu, Y.; Pi, J. Ontology-Based Entity Matching in Attributed Graphs. Proc. VLDB Endow. 2019, 12, 1195–1207. [Google Scholar] [CrossRef]

- Batet, M.; Sánchez, D. Leveraging Synonymy and Polysemy to Improve Semantic Similarity Assessments Based on Intrinsic Information Content. Artif. Intell. Rev. 2020, 53, 2023–2041. [Google Scholar] [CrossRef]

- Dong, H.; Wang, W.; Coenen, F.; Huang, K. Knowledge Base Enrichment by Relation Learning from Social Tagging Data. Inf. Sci. 2020, 526, 203–220. [Google Scholar] [CrossRef]

- Jabla, R.; Khemaja, M.; Buendia, F.; Faiz, S. Automatic Ontology-Based Model Evolution for Learning Changes in Dynamic Environments. Appl. Sci. 2021, 11, 10770. [Google Scholar] [CrossRef]

- Afuan, L.; Ashari, A.; Suyanto, Y. A New Approach in Query Expansion Methods for Improving Information Retrieval. JUITA J. Inform. 2021, 9, 93–103. [Google Scholar] [CrossRef]

- Duy Công Chiến, T. An Approach to Extending Query Sentence for Semantic Oriented Search on Knowledge Graph. J. Sci. Technol. 2021, 50, 284–291. [Google Scholar] [CrossRef]

- Giunchiglia, F.; Zamboni, A.; Bagchi, M.; Bocca, S. Stratified Data Integration. arXiv 2021, arXiv:2105.09432. [Google Scholar]

- Mountasser, I.; Ouhbi, B.; Hdioud, F.; Frikh, B. Semantic-based Big Data integration framework using scalable distributed ontology matching strategy. Distrib. Parallel Databases 2021, 39, 891–937. [Google Scholar] [CrossRef]

- Sanagavarapu, L.M.; Iyer, V.; Reddy, R. A Deep Learning Approach for Ontology Enrichment from Unstructured Text. Cybersecurity & High-Performance Computing Environments: Integrated Innovations, Practices, and Applications. Available online: http://arxiv.org/abs/2112.08554 (accessed on 22 December 2022).

- Ocker, F.; Vogel-Heuser, B.; Paredis, C.J.J. A Framework for Merging Ontologies in the Context of Smart Factories. Comput. Ind. 2022, 135, 103571. [Google Scholar] [CrossRef]

- Saber, Y.M.; Abdel-Galil, H.; El-Fatah Belal, M.A. Arabic Ontology Extraction Model from Unstructured Text. J. King Saud Univ. Comput. Inf. Sci. 2022, 34, 6066–6076. [Google Scholar] [CrossRef]

- Uceda-Sosa, R.; Mihindukulasooriya, N.; Kumar, A.; Bansal, S.; Nagar, S. Domain Specific Ontologies from Linked Open Data (lod). In Proceedings of the 5th Joint International Conference on Data Science & Management of Data (9th ACM IKDD CODS and 27th COMAD) (CODS-COMAD 2022), Bangalore, India, 8–10 January 2022; pp. 105–109. [Google Scholar] [CrossRef]

- Rahman, A.A.; Rasid, S.H.M. Triple-Relational Latent Lexicology-Phonology-Semantic ‘MMRLLEXICOLPHONOSEMC’ Analysis Model for Extracting Qura’nic Concept. Int. J. Adv. Res. Islam. Stud. Educ. 2022, 2, 99–114. [Google Scholar]

- Chen, M.; Wu, C.; Yang, Z.; Liu, S.; Chen, Z.; He, X. A Multi-Strategy Approach for the Merging of Multiple Taxonomies. J. Inf. Sci. 2022, 48, 283–303. [Google Scholar] [CrossRef]

- Trojahn, C.; Vieira, R.; Schmidt, D.; Pease, A.; Guizzardi, G. Foundational Ontologies Meet Ontology Matching: A Survey. Semant. Web 2022, 13, 685–704. [Google Scholar] [CrossRef]

- Jin, Y.; Zhou, H.; Yang, H.; Shen, Y.; Xie, Z.; Yu, Y.; Hang, F. An approach to measuring semantic similarity and relatedness between concepts in an ontology. In Proceedings of the 23rd International Conference on Automation and Computing (ICAC), Huddersfield, UK, 7–8 September 2017; pp. 1–6. [Google Scholar]

- Vrandečić, D.; Sure, Y. How to design better ontology metrics. In Proceedings of the European Semantic Web Conference, Berlin/Heidelberg, Germany, 3 June 2007. [Google Scholar] [CrossRef]

- Walter, S.; Unger, C.; Cimiano, P. A Corpus-Based Approach for the Induction of Ontology Lexica. In Proceedings of the International Conference on Application of Natural Language to Information Systems, Berlin/Heidelberg, Germany, 19 June 2013. [Google Scholar] [CrossRef]

- Miller, G.A. WordNet: A Lexical Database for English. Commun. ACM 1995, 38, 39–41. [Google Scholar] [CrossRef]

- McNeill, F.; Bental, D.; Gray, A.J.G.; Jedrzejczyk, S.; Alsadeeqi, A. Generating Corrupted Data Sources for the Evaluation of Matching Systems. CEUR Workshop Proc. 2019, 2536, 41–45. [Google Scholar]

- Alqarni, H.A.; AlMurtadha, Y.; Elfaki, A.O. A Twitter Sentiment Analysis Model for Measuring Security and Educational Challenges: A Case Study in Saudi Arabia. J. Comput. Sci. 2018, 14, 360–367. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).