Abstract

Gait is commonly defined as the movement pattern of the limbs over a hard substrate, and it serves as a source of identification information for various computer-vision and image-understanding techniques. A variety of factors, such as clothing, viewing-angle shifts, walking style, and occlusion, significantly affect gait-recognition systems and make the scene complex to handle. In this article, we propose a system that effectively handles problems associated with viewing-angle shifts and walking styles in a real-time environment. The proposed novel framework includes the following steps: (a) real-time video capture, (b) feature extraction using transfer learning on the ResNet101 deep model, and (c) feature selection using the proposed kurtosis-controlled entropy (KcE) approach, followed by a correlation-based feature fusion step. The most discriminant features are then classified using several state-of-the-art machine learning classifiers. The simulations use the CASIA B dataset as well as a real-time captured dataset, on which the accuracy is 95.26% and 96.60%, respectively. The results show that the proposed framework outperforms several known techniques.

1. Introduction

Human gait recognition (HGR) [1] is a biometric application that recognizes humans at a distance from the behavioral characteristics of their walk. Other biometrics, such as handwriting, face [2], iris [3], ear [4], and electroencephalography (EEG) [5], are also useful for identifying an individual in a defined vicinity [6]. Gait recognition is critical in security systems. In this modern technological era, innovative and up-to-date biometric applications are required, and gait is an ideal approach for identifying individuals. The primary advantage of gait recognition over other biometric techniques is that it can produce desirable results even from low-resolution videos [7]. Gait is shaped by characteristics of the individual, such as walking style and speed, and by covariates such as clothing variation, carrying conditions, and viewing angle [8].

Individual body gestures or walking styles are used to derive human gait features because each subject has a unique walking style. A subject's walking style varies depending on the situation and the type of clothing they are wearing [9]. Additionally, when an individual holds a bag, the features change [10]. Gait-recognition approaches are divided into two categories: model-based and model-free, the latter also known as holistic [11]. The model-based method incurs high computational costs. In contrast, model-free techniques rely on preprocessing and segmentation, through which suspicious activity can be detected [12]. The main challenge is to recognize the same person in different environments, because a person's body language varies across situations (for example, carrying a bag or moving fast) [13].

Many gait-recognition techniques in the literature use machine learning (ML) [14,15]. ML is an important research area utilized in several applications, such as human action recognition [16,17,18], image processing [19,20], and, recently, COVID-19 diagnostics [21]. A typical gait-recognition method involves a few essential steps: preprocessing the original video frames, segmenting the region of interest (ROI), extracting features from the ROI, and classifying the extracted features using classification algorithms [22]. In the preprocessing step, researchers enhance the contrast of the video sequences and then use thresholding techniques to segment the ROI. This step is critical in traditional approaches because features are extracted only from these segmented regions. This procedure, however, is complicated and unreliable.

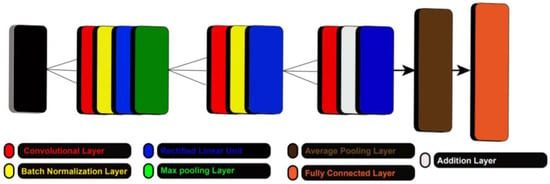

Deep learning has had considerable success in human gait recognition in recent years. The convolutional neural network (CNN) is a type of deep learning model that is used for several tasks, such as gait recognition [23], action recognition [24], medical imaging [25], and others [26,27]. A simple CNN model consists of a few important layers, such as convolutional, pooling, batch normalization, ReLU, global average pooling (GAP), fully connected, and classification layers [28,29]. Figure 1 depicts a simple architecture. The convolutional layer applies a group of learned filters to extract important image features, such as edges and shapes. The ReLU layer, also known as the activation layer, performs a non-linear transformation. The batch-normalization layer stabilizes training and reduces the number of training epochs required, whereas the pooling layers reduce the spatial dimensions of the feature maps, which lowers computation and helps control overfitting. The fully connected layers compute the image's deep features, also known as high-level features, which are classified in the final step using the softmax function [30,31].

Figure 1.

A simple CNN architecture for gait recognition.

Our major contributions in this work are:

- A database captured in a real-time outdoor environment using more than 50 subjects. The captured videos contain a high level of noise and background complexity.

- Refinement of extracted video frames using a 3D box-filtering approach to suppress noise, followed by fine-tuning of the ResNet101 model. The transfer-learning-based model is trained on real-time captured video frames and then used to extract features.

- A kurtosis-based heuristic approach is proposed to select the best features, which are then fused into one vector using a correlation-based approach.

- Classification using a multiclass one-against-all SVM (OaA-SVM) and comparison of the performance of the proposed method on different feature sets.

This paper is organized as follows: previous techniques are discussed in Section 2. Section 3 describes the proposed method, including frame refinement, deep learning, feature selection, and classification. Results of the proposed technique are discussed in Section 4. Finally, the conclusions and future directions are presented in Section 5.

2. Related Work

Several deep-learning-based methods have been proposed for HGR [32], and most of them focus on selecting important features and on feature fusion. Their experiments used several gait datasets, such as CASIA A, CASIA B, and CASIA C [33]. In this work, we focus on a real-time recorded dataset and the CASIA B dataset. CASIA B is a well-known dataset and is widely used for gait recognition.

Wang et al. [34] presented a novel gait-recognition method using a convolutional LSTM approach named Conv-LSTM. First, they built the gait energy image (GEI) frame by frame and then expanded its volume to relax the gait-cycle constraint. Next, they performed an experiment to analyze the cross-covariance of one subject. Finally, they designed a Conv-LSTM model for gait recognition. Experiments on the CASIA B and OU-ISIR datasets achieved accuracies of 93% and 95%, respectively.

Arshad et al. [6] presented a new approach for HGR in which two deep neural networks were used with the FEcS selection method. Two pre-trained models, VGG19 and AlexNet, were used for feature extraction, and the extracted features were refined in a later step using entropy and skewness vectors. Four datasets were used for the experiments: CASIA A, CASIA B, CASIA C, and AVAMVG, on which they achieved accuracies of 99.8%, 99.7%, 93.3%, and 92.2%, respectively.

Mehmood et al. [22] presented an automated deep-learning-based system for HGR under multiple angles. Four key steps were performed: preprocessing, feature extraction, feature selection, and classification. They extracted deep-learning features and applied the firefly algorithm for feature optimization. Experiments on the widely available CASIA B dataset achieved notable accuracy.

Anusha et al. [35] presented a technique for HGR based on multiple features, extracting low-level features from spatial, texture, and gradient information. Five databases were used for the experiments: CASIA A, CASIA B, OU-ISIR D, CMU MoBo, and the KTH video dataset. All datasets were tested at different angles, and the technique achieved improved performance.

Sharif et al. [14] presented a method for HGR based on accurate ROI segmentation and multilevel feature fusion. Several clothing and carrying conditions were considered in the experiments, and accuracies of 98.6%, 93.5%, and 97.3% were achieved. Liao et al. [13] presented a PoseGait model for HGR that addresses drastic variations in the appearance of individuals. 3D models were used to capture data from different angles, with each 3D pose defined by the 3D coordinates of the human body joints.

Wu et al. [36] presented a graph-based approach for multiview HGR. A spiderweb graph was used to capture the gait data of a single view and concurrently connect it to the gait data of the other views. Memory and capsule modules were used for the trajectory view of each gait and for STF extraction. Experiments on three challenging gait datasets, SDUgait, OU-MVLP, and CASIA B, achieved accuracies of 98.54%, 96.91%, and 98.77%, respectively. Arshad et al. [37] focused on feature selection to improve HGR performance, using Bayesian-model and quartile-deviation approaches to enhance the feature vectors.

In conclusion, previous studies focused primarily on selecting the most relevant features. They used CNNs to extract features and then combined the results with data from a few other channels. However, they did not address real-time gait recognition, owing to the complexity of the system design. In this paper, we focus on real-time gait recognition in an outdoor setting.

3. Proposed Methodology

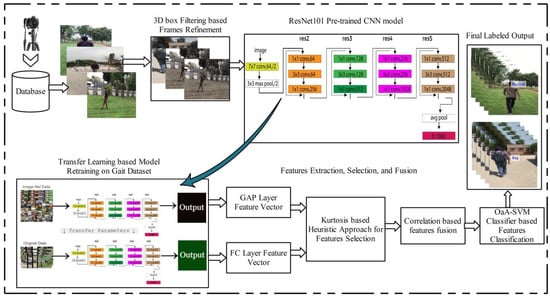

The proposed method for real-time human gait recognition is presented in this section with detailed mathematical modeling and visual results. The proposed framework involves the following steps: video preprocessing, deep-feature extraction using transfer learning, kurtosis-based feature selection, correlation-based feature fusion, and, finally, one-against-all SVM (OaA-SVM)-based classification. The main architecture diagram is shown in Figure 2. The proposed method is executed in sequence and, at the end of execution, returns a labeled output and numerical results in the form of recall rate, precision, accuracy, etc. The details of each step are given in the following subsections.

Figure 2.

Proposed architecture of real-time human gait recognition using deep learning.

3.1. Video Preprocessing

Enhancing the quality of the visual information in an image is one of the most prominent tasks in digital image processing. It helps remove noise and cluttered information from the image and makes it more readable [38]. In machine learning, an algorithm requires good information about an object to learn a good model. In this work, we first process the videos, then convert them into frames and label each frame according to its actual class. Then, we resize images into a dimension of , where R denotes row pixels, denotes column pixels, and represent number of channels, respectively. We set and .
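The frame-extraction and resizing step can be sketched as follows, assuming each video is already decoded into a NumPy array of shape (T, H, W, C). The 224 × 224 output size is only an assumed default (the standard ImageNet input size for ResNet101), not the value used in this work. Nearest-neighbour resizing stands in for whatever interpolation the original pipeline used, and `resize_nearest` and `video_to_labeled_frames` are hypothetical helper names.

```python
import numpy as np

def resize_nearest(frame, out_h, out_w):
    """Nearest-neighbour resize of an (H, W, C) frame to (out_h, out_w, C)."""
    h, w = frame.shape[:2]
    # Map every output row/column back to the source pixel it samples from.
    rows = (np.arange(out_h) * h / out_h).astype(int)
    cols = (np.arange(out_w) * w / out_w).astype(int)
    return frame[rows[:, None], cols[None, :]]

def video_to_labeled_frames(video, label, out_h=224, out_w=224):
    """Split a (T, H, W, C) video into resized frames that all carry the video's class label.

    out_h and out_w are assumed defaults, not the values used in the paper.
    """
    frames = np.stack([resize_nearest(f, out_h, out_w) for f in video])
    labels = np.full(len(frames), label)   # every frame inherits the video label
    return frames, labels
```

Labeling at the video level works here because each recorded video contains a single gait class (for example, normal walking at one angle).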

The real-time videos are recorded at four different angles, and each angle includes two gait classes: walking while carrying a bag and normal walking without a bag. The four angles are 90°, 180°, 270°, and 360°. The original frames also contain noise captured during video recording; to suppress it, we apply a 3D box filter. The details of 3D box filtering are given in Algorithm 1.

| Algorithm 1: Data Augmentation Process. |
|---|
| Input: Original video frame . |
| Output: Improved video frame . |
| Step 1: Load all video frames . |
| for: |
| Step 2: Calculate filter size. |
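Algorithm 1 does not state how the filter size is calculated. The sketch below shows what a 3D box (mean) filter does to a stack of frames, assuming a fixed 3 × 3 × 3 window over (time, rows, columns), edge replication at the borders, and no mixing across colour channels; `box_filter_3d` is a hypothetical name.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def box_filter_3d(frames, size=(3, 3, 3)):
    """Smooth a video with a 3D box (mean) filter.

    frames: array of shape (T, H, W) or (T, H, W, C).
    size: odd window extents along (time, rows, cols); 3x3x3 is an assumed default.
    """
    if any(s % 2 == 0 for s in size):
        raise ValueError("window extents must be odd so the output stays aligned")
    x = frames.astype(np.float64)
    pad = [(s // 2, s // 2) for s in size]
    if x.ndim == 4:
        pad.append((0, 0))                  # never pad or mix colour channels
    padded = np.pad(x, pad, mode="edge")    # replicate border frames/pixels
    # Windows shape: (T, H, W, [C,] kt, ky, kx); average over the window axes.
    windows = sliding_window_view(padded, size, axis=(0, 1, 2))
    return windows.mean(axis=(-3, -2, -1))
```

Because the window also spans neighbouring frames, isolated per-frame noise is averaged with the same pixel location in the previous and next frames, at the cost of slight motion blur.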

The effects of filtering are also shown in the main flow of Figure 2, where frames are given before and after filter processing. The improved frames are used in the next step for model learning.

3.2. Convolutional Neural Network

Deep learning emerged recently in the field of computer vision and has since spread to other fields. A convolutional neural network (CNN) is a deep learning model, and a CNN-based approach won the ImageNet image-classification competition in 2012 [39]. In deep learning, images are passed directly to the model without a separate segmentation step; for this reason, it is sometimes called image-based ML. A simple CNN consists of convolutional, ReLU, dense, pooling, and softmax layers, as shown in Figure 1. The input image is passed to the network at a fixed size. The convolutional layer convolves its input with learnable kernels (weights) and adds bias terms, and back-propagation is used to train these weights. Mathematically, the convolutional operation is formulated as follows:

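$$X^{(l)}_{j} = \sum_{i=1}^{C} X^{(l-1)}_{i} * K^{(l)}_{ij} + b^{(l)}_{j}$$

where $X^{(l)}_{j}$ is the $j$-th output feature map of convolutional layer $l$, the input $X^{(l-1)}$ is composed of $C$ channels $X^{(l-1)}_{1}, \ldots, X^{(l-1)}_{C}$, $*$ denotes the convolution operation, $K^{(l)}_{ij}$ is the convolutional kernel connecting input channel $i$ to output map $j$, and $b^{(l)}_{j}$ is the bias. To increase the nonlinearity of the network, a ReLU layer is applied, defined as follows:

$$\mathrm{ReLU}(x) = \max(0, x)$$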

Another layer, max pooling, is employed to reduce the dimension of the extracted features. It is formulated as follows:

$$P_{u,v} = \max_{0 \le m,\, n < k} X_{s u + m,\; s v + n}$$

where $P$ denotes the max-pooling output, $X$ denotes the input feature map, and the filter size $k$ and stride $s$ are static parameters fixed before training. An important layer in a CNN is the fully connected layer, in which every neuron is connected to all activations of the previous layer and the output is a 1D vector. Finally, the extracted features are classified in the softmax layer.
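The layer operations described above can be sketched in NumPy for a single image. This is an illustrative forward pass only, assuming a channels-first (C, H, W) layout, a square k × k kernel, and a 2 × 2 pooling window with stride 2 as placeholder defaults. Like most deep learning frameworks, `conv2d` computes a cross-correlation (the kernel is not flipped).

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv2d(x, kernels, bias):
    """Valid multi-channel convolution (cross-correlation, as in CNN frameworks).

    x: (C, H, W) input; kernels: (F, C, k, k); bias: (F,).
    Returns F feature maps of shape (H - k + 1, W - k + 1).
    """
    k = kernels.shape[-1]
    patches = sliding_window_view(x, (k, k), axis=(1, 2))   # (C, H', W', k, k)
    # Sum over input channels c and kernel positions (i, j) for each filter f.
    return np.einsum("chwij,fcij->fhw", patches, kernels) + bias[:, None, None]

def relu(z):
    """ReLU(z) = max(0, z), applied element-wise."""
    return np.maximum(0.0, z)

def max_pool2d(x, k=2, s=2):
    """Max pooling of each channel of x (C, H, W) with a k x k window and stride s."""
    windows = sliding_window_view(x, (k, k), axis=(1, 2))[:, ::s, ::s]
    return windows.max(axis=(-2, -1))

def softmax(z):
    """Class probabilities from a 1D score vector (shifted for numerical stability)."""
    e = np.exp(z - z.max())
    return e / e.sum()
```

For example, `conv2d` with sixteen 3 × 3 kernels followed by `relu` and `max_pool2d` maps a (3, 32, 32) input to a (16, 15, 15) tensor.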

3.3. Deep Feature Extraction

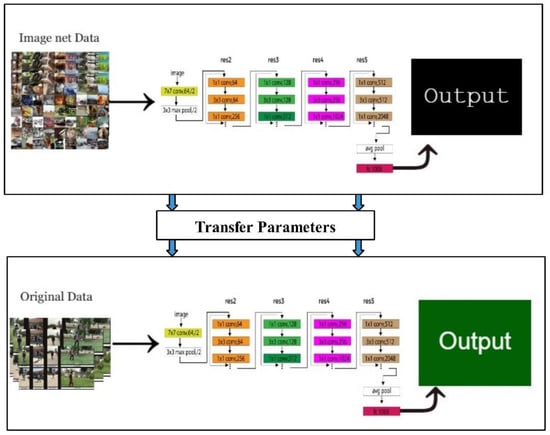

Feature extraction is the process of reducing an original image to a small set of informative values, called features. Features typically describe characteristics such as the color, shape, texture, and location of an object. In the proposed technique, we utilize a pre-trained deep learning network named ResNet101. The original model consists of 101 layers and was trained on the ImageNet dataset. Its visual flow is shown in Figure 3. Note that the filter size of the first convolutional layer is , the stride is 2, and the number of channels is . Next, a max-pooling layer is applied with filter size and stride . The network is organized into five stages (an initial convolutional stage followed by four stages of residual blocks), and finally, a global average pooling layer and an FC layer are added, followed by a softmax layer.
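For reference, the residual blocks in ResNet101 follow the standard residual-learning formulation: each block learns a residual mapping $\mathcal{F}$ that is added to its input through a shortcut connection. With $\mathbf{x}$ and $\mathbf{y}$ the input and output of a block, $\{W_i\}$ the block's weights, and $W_s$ a projection used only when the input and output dimensions differ:

$$\mathbf{y} = \mathcal{F}(\mathbf{x}, \{W_i\}) + \mathbf{x}, \qquad \mathbf{y} = \mathcal{F}(\mathbf{x}, \{W_i\}) + W_s\,\mathbf{x}$$

In ResNet101, $\mathcal{F}$ is a bottleneck of 1 × 1, 3 × 3, and 1 × 1 convolutions; the identity shortcut lets gradients flow directly through very deep stacks of such blocks, which is what makes a 101-layer network trainable.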

Figure 3.

Original ResNet101 pre-trained CNN model for image classification.

Training Model using Transfer Learning: In this work, we use the ResNet101 pre-trained CNN model for feature extraction [40]. We trained this model using transfer learning, as shown in Figure 4 [41]. The parameters of the original pre-trained ResNet101 model are transferred and then trained on the real-time captured gait database. We then remove the last layer from the modified ResNet101 and extract features from the global average pooling (GAP) and FC layers. Formally, given a source domain $\mathcal{D}_S$ with learning task $\mathcal{T}_S$ and a target domain $\mathcal{D}_T$ with learning task $\mathcal{T}_T$, TL aims to improve the learning of the target predictive function $f_T(\cdot)$ in $\mathcal{D}_T$ using the knowledge in $\mathcal{D}_S$ and $\mathcal{T}_S$, where $\mathcal{D}_S \neq \mathcal{D}_T$ or $\mathcal{T}_S \neq \mathcal{T}_T$. After this process, we obtain two feature vectors from GAP and FC layers of dimensions and , respectively.
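A minimal NumPy sketch of how the two feature vectors relate to the final layers, assuming the activations of the last residual stage are already available for each frame. In the standard ResNet101, a 224 × 224 input produces a 7 × 7 × 2048 map at this point, so GAP yields 2048 values per frame; the FC width `N` equals the number of classes of the re-trained head. `gap_and_fc_features`, `W_fc`, and `b_fc` are hypothetical names, and in a real run the inputs would come from the fine-tuned network.

```python
import numpy as np

def gap_and_fc_features(feature_maps, W_fc, b_fc):
    """Derive GAP and FC feature vectors from last-stage activations.

    feature_maps: (n, H, W, K) activations of the last residual stage for n frames.
    W_fc: (N, K) and b_fc: (N,) are the weights of the re-trained FC layer.
    Returns GAP features (n, K) and FC features (n, N).
    """
    gap = feature_maps.mean(axis=(1, 2))   # global average pooling over H and W
    fc = gap @ W_fc.T + b_fc               # FC-layer activations (before softmax)
    return gap, fc
```

The GAP vector keeps one value per channel and is independent of the target classes, whereas the FC vector is already projected onto the gait classes, which is why the two vectors carry complementary information for the later fusion step.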

Figure 4.

Transfer-learning-based model retraining on real-time captured video frames for HGR.

3.4. Kurtosis-Controlled, Entropy-Based Feature Selection

Feature selection (FS) is the process of selecting the most prominent features from the original feature vector. In FS, feature values are not modified; features are selected in their original form [42]. The main motivation behind this step is to retain the most informative content of an image and discard redundant and irrelevant data. In this work, we propose a new feature-selection technique named kurtosis-controlled entropy (KcE), which uses an OaA-SVM as its fitness function. Its operation is given in Algorithms 2 and 3 for the two feature vectors.

Description: Consider two extracted deep feature vectors denoted by and , where the dimension of each vector is and , respectively. In the first step, the features are initialized and processed until all features have passed through the system. In the next step, kurtosis is computed for all features in each pair, with a window size of and a stride of 1. The resulting vector is evaluated in the next step by a fitness function, for which we use a one-vs.-all SVM classifier that classifies the features and returns an error rate.

This process continues while the error rate decreases and stops when the error increases in the next iteration. In this way, we obtain two selected feature vectors of dimension , as described in Algorithms 2 and 3.
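The selection loop can be sketched as follows. This is one possible reading of the description, not the authors' implementation: it assumes kurtosis is computed per feature across samples (the windowed computation is not reproduced), ranks features by kurtosis, grows the selected set in fixed steps, and stops as soon as the error rises. To keep the sketch dependency-free, the OaA-SVM fitness function is replaced by the training error of a nearest-centroid classifier. `excess_kurtosis`, `centroid_error`, `kurtosis_select`, and `step` are hypothetical names.

```python
import numpy as np

def excess_kurtosis(X):
    """Per-feature excess kurtosis of X with shape (n_samples, n_features)."""
    d = X - X.mean(axis=0)
    m2 = (d ** 2).mean(axis=0)
    m4 = (d ** 4).mean(axis=0)
    return m4 / np.maximum(m2 ** 2, 1e-12) - 3.0   # 0 for a Gaussian feature

def centroid_error(X, y):
    """Stand-in fitness function: training error of a nearest-centroid classifier."""
    classes = np.unique(y)
    centroids = np.stack([X[y == c].mean(axis=0) for c in classes])
    dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return float((classes[dists.argmin(axis=1)] != y).mean())

def kurtosis_select(X, y, step=10):
    """Grow a kurtosis-ranked feature subset until the fitness error increases."""
    order = np.argsort(-excess_kurtosis(X))        # highest kurtosis first
    best_idx = order[:step]
    best_err = centroid_error(X[:, best_idx], y)
    for m in range(2 * step, X.shape[1] + 1, step):
        idx = order[:m]
        err = centroid_error(X[:, idx], y)
        if err > best_err:                          # error went up: stop here
            break
        best_idx, best_err = idx, err
    return np.sort(best_idx), best_err
```

Because only one kurtosis value per feature is computed and the subset grows monotonically, the number of fitness evaluations is at most the number of features divided by `step`, which is far fewer than a population-based metaheuristic typically needs.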

Finally, we fuse the two selected vectors using a correlation-based approach. In this approach, features of the two vectors are paired as and . The correlation coefficient of each pair is then computed, and highly correlated feature pairs are added to the fused vector. Mathematically, it is defined as follows:

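$$\rho(x, y) = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^{2}}\,\sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^{2}}}$$

where $\rho(x, y)$ is the correlation coefficient between two paired features $x$ and $y$, $x$ is a feature of the first selected vector, $y$ is the paired feature of the second selected vector, $x_i$ and $y_i$ are their values for the $i$-th of $n$ samples, and $\bar{x}$ and $\bar{y}$ are their respective mean values.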

In this approach, we select for the fused vector only those features whose correlation is positive (greater than 0, ideally close to 1); that is, only positively correlated feature pairs are kept. This procedure is performed for all features in both vectors, and the output is a fused feature vector of length , where denotes the length of the fused feature vector.
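The correlation-based fusion can be sketched as below, assuming the two selected vectors have the same number of features, are paired index by index, and that both members of each positively correlated pair are kept. `pearson_columns` and `correlation_fuse` are hypothetical helper names; the threshold of 0 mirrors the rule of keeping only positively correlated features.

```python
import numpy as np

def pearson_columns(A, B):
    """Pearson correlation between column i of A and column i of B, for every i."""
    a = A - A.mean(axis=0)
    b = B - B.mean(axis=0)
    num = (a * b).sum(axis=0)
    den = np.sqrt((a ** 2).sum(axis=0) * (b ** 2).sum(axis=0))
    return num / np.maximum(den, 1e-12)   # guard against constant features

def correlation_fuse(F1, F2, threshold=0.0):
    """Keep index-paired features whose correlation exceeds threshold and concatenate both members.

    F1, F2: (n_samples, d) selected feature matrices from the two deep layers.
    Returns the fused matrix and the indices of the kept pairs.
    """
    m = min(F1.shape[1], F2.shape[1])
    r = pearson_columns(F1[:, :m], F2[:, :m])
    keep = np.flatnonzero(r > threshold)
    fused = np.hstack([F1[:, keep], F2[:, keep]])
    return fused, keep
```

The correlation is computed across samples, so it measures whether the two layers respond to the same variation in the data; a negatively correlated pair is discarded even if each feature is individually informative.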

Finally, the features in the fused vector are classified using OaA-SVM.

| Algorithm 2: Features selection for deep learning model 1. |
|---|
| Input: Feature vector of dimension . |
| Output: Selected feature of dimension . |
| Step 1: Features initialization. |
| for // |
| Step 2: Compute kurtosis of each feature pair. |
| Step 4: Perform fitness function. |
| end for |

| Algorithm 3: Features selection for deep learning model 2. |
|---|
| Input: Feature vector of dimension. |
| Output: Selected feature of dimension. |
| Step 1: Features initialization. |
| for // |
| Step 2: Compute kurtosis of each feature pair. |
| Step 4: Perform fitness function. |
| end for |

3.5. Recognition

The one-against-all SVM (OaA-SVM) is utilized in this work for feature classification [43]. The OaA-SVM approach trains one binary classifier per gait class to separate that class from all other gait classes, and an unknown pattern is assigned to the class whose classifier returns the maximum output.

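Here, we have an $N$-class problem with training samples $D = \{(x_1, y_1), \ldots, (x_n, y_n)\}$, where $x_i \in \mathbb{R}^{b}$, $i = 1, \ldots, n$, is a $b$-dimensional feature vector and $y_i \in \{1, \ldots, N\}$ is the corresponding class label. This approach constructs $N$ binary SVM classifiers: the $k$-th classifier treats all training samples of class $k$ as positive and all remaining samples as negative. Each SVM yields the decision function

$$f_k(x) = w_k^{\top} x + b_k$$

Here, $f_k$ is the decision function of the $k$-th classifier, $w_k$ represents the weight vector, $x$ denotes the input features, and $b_k$ denotes the bias. The weights are obtained by solving the minimization problem

$$\min_{w_k,\, b_k,\, \xi} \; \frac{1}{2}\lVert w_k \rVert^{2} + C \sum_{i=1}^{n} \xi_i \quad \text{s.t.} \quad y_i^{(k)} \left( w_k^{\top} x_i + b_k \right) \ge 1 - \xi_i, \quad \xi_i \ge 0$$

where $C > 0$ is the penalty parameter that trades margin width against training errors, $\xi_i$ are slack variables, and $y_i^{(k)} = +1$ if $y_i = k$ and $y_i^{(k)} = -1$ otherwise. A sample $x$ is classified as belonging to class $\arg\max_{k} f_k(x)$.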
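A minimal one-against-all linear SVM can be sketched in NumPy as below. It is not the MATLAB classifier used in this work: each binary SVM is trained by full-batch Pegasos-style subgradient descent on $\frac{\lambda}{2}\lVert w_k \rVert^2 + \frac{1}{n}\sum_i \max(0, 1 - y_i^{(k)} f_k(x_i))$, which corresponds to the usual soft-margin penalty $C = 1/(\lambda n)$, and the bias is folded into $w_k$ through a constant feature (so it is lightly regularized). `train_ova`, `predict_ova`, `lam`, and `epochs` are hypothetical names and defaults.

```python
import numpy as np

def train_binary_svm(X, t, lam=0.01, epochs=1000):
    """Linear soft-margin SVM with labels t in {-1, +1}, trained by Pegasos-style steps."""
    X_aug = np.hstack([X, np.ones((X.shape[0], 1))])   # constant feature acts as the bias
    w = np.zeros(X_aug.shape[1])
    n = X_aug.shape[0]
    for step in range(1, epochs + 1):
        eta = 1.0 / (lam * step)                        # Pegasos step size
        viol = t * (X_aug @ w) < 1.0                    # samples violating the margin
        # Subgradient of lam/2 * ||w||^2 + mean hinge loss.
        grad = lam * w - (t[viol][:, None] * X_aug[viol]).sum(axis=0) / n
        w = w - eta * grad
    return w

def train_ova(X, y, lam=0.01, epochs=1000):
    """One binary SVM per class: class k -> +1, all other classes -> -1."""
    classes = np.unique(y)
    W = np.stack([train_binary_svm(X, np.where(y == k, 1.0, -1.0), lam, epochs)
                  for k in classes])
    return classes, W

def predict_ova(X, classes, W):
    """Assign each sample to the class whose decision function f_k(x) is largest."""
    X_aug = np.hstack([X, np.ones((X.shape[0], 1))])
    return classes[np.argmax(X_aug @ W.T, axis=1)]
```

For the two-class experiment (normal walk versus walking with a bag), the same code trains two mirror-image classifiers; one-against-all only becomes distinct from a single binary SVM when there are three or more classes, as in the angle and CASIA B experiments.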

Visual results are shown in Figure 5, in which the classifier returns labeled outputs such as walking with a bag and normal walking. The numerical results are described in Section 4.

Figure 5.

Proposed system predicted labeling results.

4. Results

4.1. Datasets

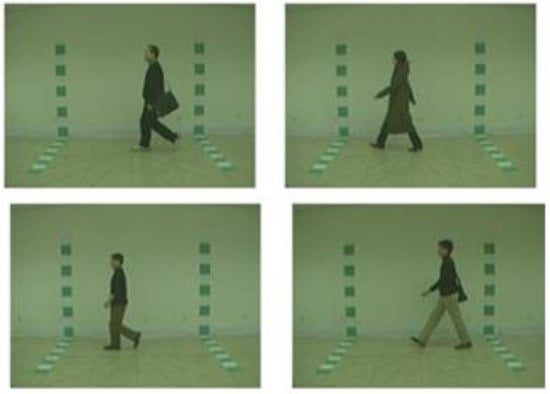

The results of the proposed automated gait-recognition system are presented in this section. The proposed framework is tested on a real-time captured dataset and on the CASIA B dataset. The real-time dataset includes a total of 50 students; for each student, we recorded 8 videos at 4 angles (90°, 180°, 270°, and 360°). For each angle, two videos are recorded: one while carrying a bag and one without a bag. A few samples are shown in Figure 6. For the CASIA B dataset [44], we consider only the 90° angle for evaluation. This dataset includes three covariate conditions: normal walking, walking with a bag, and walking while wearing a coat, as shown in Figure 7.

Figure 6.

Sample image frames of real-time video sequences.

Figure 7.

Sample frames collected from CASIA B dataset [44].

4.2. Experimental Setup

We used 70% of the video frames for training the proposed framework and the remaining 30% for testing. The training hyperparameters were as follows: a learning rate of 0.001, 200 epochs, a mini-batch size of 64, the Adam optimizer, sigmoid activation, a dropout factor of 0.5, momentum of 0.7, cross-entropy loss, and a piecewise learning-rate schedule. Several classifiers were used for validation, such as SVM, K-nearest neighbor (KNN), decision trees, and Naïve Bayes. The selected classifiers were evaluated using precision, recall, F1-score, AUC, and classification time. All results were computed using K-fold cross-validation with K = 10. The simulations were conducted in MATLAB R2020a on a dedicated desktop computer with a 256 GB SSD, 16 GB of RAM, and a graphics card with 16 GB of memory.
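The 10-fold cross-validation protocol can be sketched as a simple index generator, assuming the samples are shuffled once and split into K nearly equal folds without stratification; `k_fold_indices` and the fixed `seed` are hypothetical.

```python
import numpy as np

def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_idx, test_idx) pairs for k nearly equal folds after one shuffle."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        test = folds[i]
        # Every other fold is used for training in this round.
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train, test
```

Each sample appears in exactly one test fold, so averaging the per-fold scores uses every frame for evaluation once. Frames from the same video are highly similar, so splitting by video rather than by frame would give a stricter estimate.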

4.3. Real-Time Dataset Results

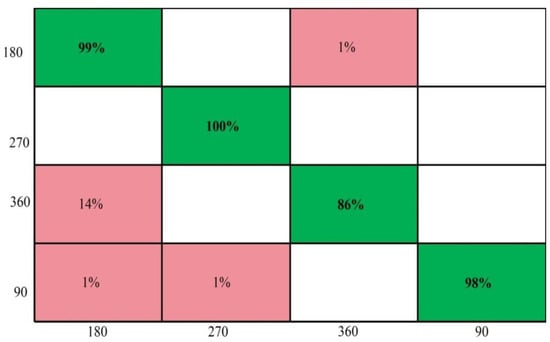

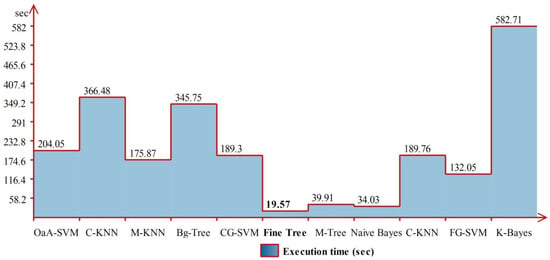

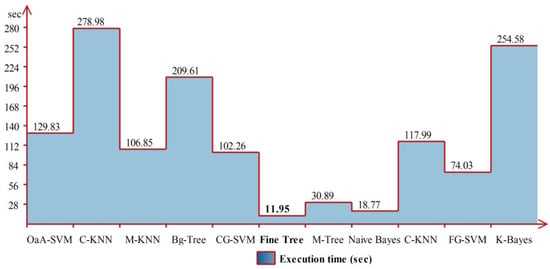

In this section, we present the results of the proposed method on real-time video sequences. The results are computed in three scenarios: normal walking at all selected angles, walking while carrying a bag at all four angles, and, finally, classification of normal walking versus walking while carrying a bag. Table 1 shows the results of normal walking at the four selected angles of 90°, 180°, 270°, and 360°. The OaA-SVM classifier achieved superior performance compared to the other listed classification algorithms, with an accuracy of 95.75%, a precision rate of 95.25%, an F1-score of 95.98%, an AUC of 1.00, and an FPR of 0.0125. Medium KNN also performs well, achieving an accuracy of 95%, with recall and precision rates of 95% and 95.50%, respectively. The worst accuracy in this experiment, 48.75%, is obtained by the Kernel Naïve Bayes classifier. This wide spread in accuracy shows that the choice of classifier strongly affects performance on the selected features. The performance of OaA-SVM can be further verified in Figure 8: the prediction accuracy is highest for the 270° angle, whereas the correct prediction rate for 360° is 86%. The recognition time is also tabulated in Table 1 and shows that the Fine Tree classifier executed much faster than the other listed classifiers, with an execution time of 19.465 s. The variation in execution time is also shown in Figure 9.
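The reported precision, recall, F1-score, and FPR can be derived from a confusion matrix such as the one in Figure 8. The sketch below assumes macro averaging (per-class values averaged with equal weight), which the text does not state explicitly. `macro_metrics` is a hypothetical helper, and AUC is omitted because it requires classifier scores rather than a confusion matrix.

```python
import numpy as np

def macro_metrics(cm):
    """Macro-averaged metrics from a confusion matrix.

    cm[i, j] = number of samples of true class i predicted as class j.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp            # predicted as class c but belonging elsewhere
    fn = cm.sum(axis=1) - tp            # belonging to class c but predicted elsewhere
    tn = cm.sum() - tp - fp - fn
    precision = tp / np.maximum(tp + fp, 1e-12)
    recall = tp / np.maximum(tp + fn, 1e-12)
    f1 = 2 * precision * recall / np.maximum(precision + recall, 1e-12)
    fpr = fp / np.maximum(fp + tn, 1e-12)
    return {
        "accuracy": tp.sum() / cm.sum(),
        "precision": precision.mean(),
        "recall": recall.mean(),
        "f1": f1.mean(),
        "fpr": fpr.mean(),
    }
```

For a two-class matrix [[8, 2], [1, 9]], this gives an accuracy of 0.85, a macro recall of 0.85, and a macro FPR of 0.15.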

Table 1.

Proposed gait-recognition results using real-time captured dataset for normal walk on selected angles.

Figure 8.

Confusion matrix of OaA-SVM for normal walk on different selected angles.

Figure 9.

Variation in computational time of the selected classifiers on the real-time dataset.

Table 2 shows the results for walking while carrying a bag at the 90°, 180°, 270°, and 360° angles. The OaA-SVM classifier attained better accuracy than the other classification algorithms, with an accuracy of 96.5%, a precision rate of 97%, an F1-score of 96.7%, an AUC of 1.00, and an FPR of 0.01. The KNN variants Cubic KNN and Medium KNN give the second-best accuracy of 95.1%. The Naïve Bayes classifier achieves an accuracy of 83.7%, with recall and precision rates of 83.7% and 86.7%, respectively. The Kernel Naïve Bayes, FG-SVM, and Coarse KNN classifiers do not perform well, achieving accuracies of 48.6%, 61.3%, and 63%, respectively.

Table 2.

Proposed gait-recognition results using real-time captured dataset for walking while carrying a bag at selected angles.

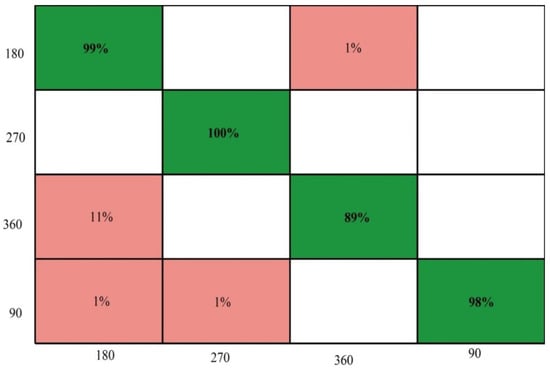

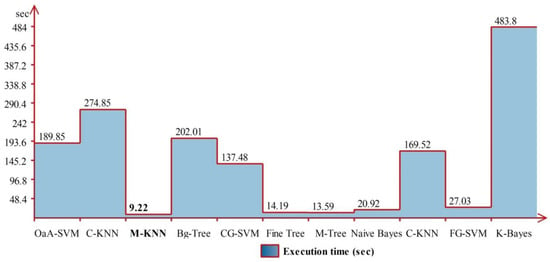

The performance of OaA-SVM can be further verified through Figure 10, which shows that the prediction accuracy for the 270° angle is the highest (100%), whereas the correct prediction rate for 360° is 89%. The execution time of the recognition process is also tabulated in Table 2 and shows that the Medium Tree classifier executed much faster than the other listed classifiers. The execution time is also plotted in Figure 11, which shows large variation due to differences in classifier complexity.

Figure 10.

Confusion matrix of OaA-SVM for walking while carrying a bag at different selected angles.

Figure 11.

Variation in computational time of the selected classifiers for the 4 selected angles on the real-time dataset.

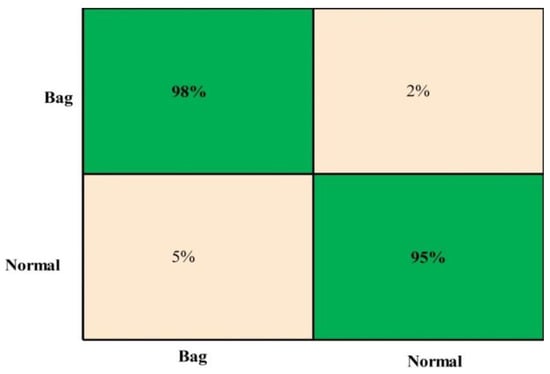

Table 3 presents the classification results for normal walking versus walking while carrying a bag. The purpose of this experiment is to analyze the performance of the proposed algorithm on a binary classification problem. Table 3 shows that the OaA-SVM attained an accuracy of 96.4%, with recall rate, precision rate, F1-score, AUC, and FPR values of 96.5%, 96.5%, 96.5%, 1.00, and 0.03, respectively. Medium KNN gives the second-best classification accuracy of 96.1%, with recall and precision rates of 96% and 96%, respectively. The Naïve Bayes, Coarse KNN, Kernel Naïve Bayes, and FG SVM classifiers do not perform well, achieving accuracies of 67.8%, 79.3%, 73%, and 79.6%, respectively. The confusion matrix in Figure 12 shows that the correct prediction rate of both classes is above 90%. The execution time for this experiment is plotted in Figure 13, which shows that Fine Tree has a lower execution time than the other algorithms.

Table 3.

Recognition results for all datasets with kurtosis function on 600 predictions on original deep-feature vector extracted from ResNet 101.

Figure 12.

Confusion matrix of OaA-SVM for normal walking and walking while carrying a bag classification.

Figure 13.

Execution time of proposed method on real-time dataset (normal walking and walking while carrying a bag).

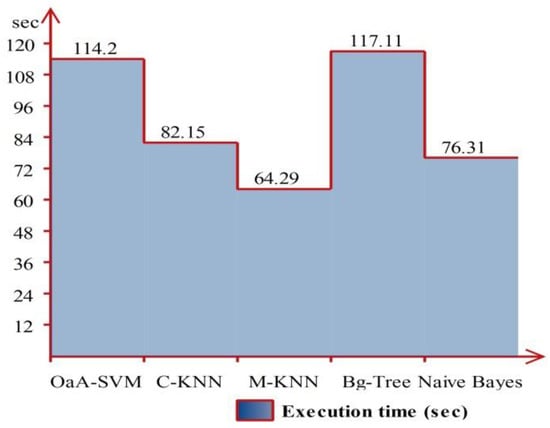

4.4. CASIA B Dataset Results at a 90° Angle

CASIA B dataset results are presented in this subsection. We selected only the 90° angle because most recent studies use this angle. Numerical results are given in Table 4. The OaA-SVM gives the best results among all implemented classifiers. The results are computed with different feature vectors: the original global average pooling (GAP) layer features, the fully connected (FC) layer features, and the proposed approach. For GAP-layer features, the achieved accuracy is 90.22% and the recall rate is 90.10%, whereas the FC layer gives an accuracy of 88.64%. The proposed method gives an accuracy of 95.26%, with an execution time of 176.4450 s. Cubic KNN attains an accuracy of 93.60%, the second-best performance after the OaA-SVM classifier. Table 5 presents the confusion matrix of the OaA-SVM classifier using the proposed scheme: the correct prediction rate is 94% for normal walking, 95% for walking while wearing a coat (W-Coat), and 97% for walking while carrying a bag. In addition, the computational time of all classifiers is plotted in Figure 14, which shows that Medium KNN has the lowest computational cost among all classifiers.

Table 4.

Proposed recognition results on CASIA B dataset, where selected angle is 90°.

Table 5.

Confusion matrix of OaA-SVM for CASIA B dataset.

Figure 14.

Execution time of proposed method for CASIA B dataset (90° angle).

4.5. Discussion

A detailed discussion of the results and a comparison are given in this section. As shown in Figure 2, the proposed method has a few important steps: video preprocessing, deep-learning feature extraction, feature selection through the kurtosis approach, fusion of the selected features through the correlation approach, and, finally, OaA-SVM-based feature classification. The proposed method is evaluated on two datasets: one recorded in a real-time environment, and CASIA B. The real-time dataset results are given in Table 1, Table 2 and Table 3. Table 1 presents results for normal walking at four different angles, Table 2 presents results for walking while carrying a bag, and Table 3 presents results for the binary classification problem. The OaA-SVM outperformed the other classifiers in all three experiments, as shown in Table 1, Table 2 and Table 3. Additionally, we used the CASIA B dataset to validate the proposed technique; the results are listed in Table 4 and verified through Table 5.

We also performed experiments on different feature sets to validate the proposed heuristic feature-selection approach. For this purpose, we selected feature vectors of 300, 400, 500, 600, and 700 features, as well as the complete feature vector. Results for both datasets are given in Table 6, which shows that the 600-feature set gives the best results. Accuracy increases initially, but beyond 600 features it degrades by approximately 1%, and with the complete feature vector it is almost 4% lower. Based on this table, the proposed technique performs best with 600 features.

Table 6.

Analysis of the proposed feature-selection framework on numerous feature sets. *The OaA-SVM is employed as the classifier for this table.

The comparison of the proposed method with existing techniques is listed in Table 7. This table includes only techniques evaluated on the CASIA B dataset; the real-time dataset is not included because it was captured for this work, so no published results exist for a fair comparison. Among the techniques in Table 7, the best previously reported accuracy on this dataset was 93.40% [6]. The proposed method improves on this by almost 2 percentage points, reaching 95.26%.

Table 7.

Comparison with existing techniques for CASIA B dataset.

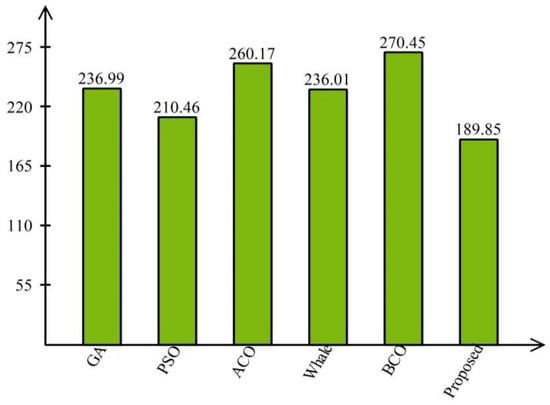

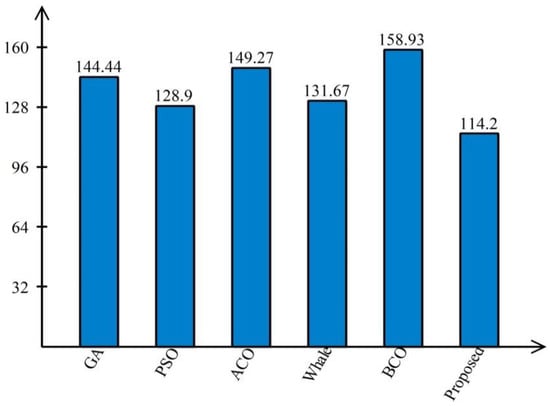

Researchers commonly employ metaheuristic techniques for feature selection, such as the genetic algorithm (GA), particle swarm optimization (PSO), ant colony optimization (ACO), and BCO [47]. These techniques consume considerable time during the selection process. In contrast, the proposed feature-selection approach is based on a single kurtosis value per feature and runs faster than GA, PSO, BCO, Whale, and ACO on both selected datasets, as shown by the times plotted in Figure 15 and Figure 16. These figures show that the proposed feature-selection approach is more suitable for gait recognition than the metaheuristic techniques in terms of computational time.

Figure 15.

Time comparison for the real-time dataset.

Figure 16.

Time comparison on the CASIA B dataset.

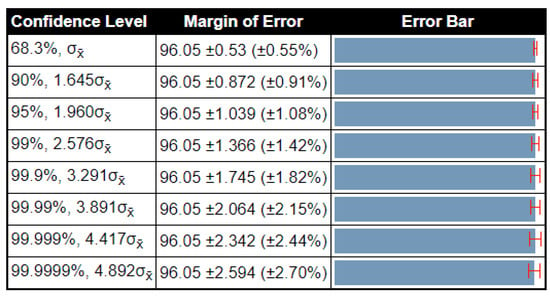

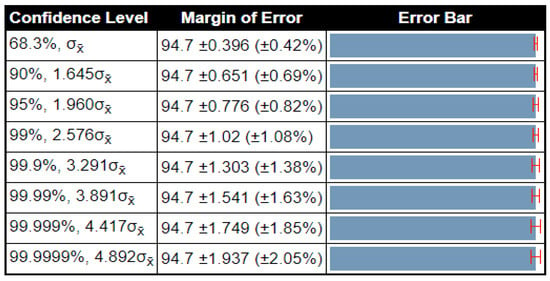

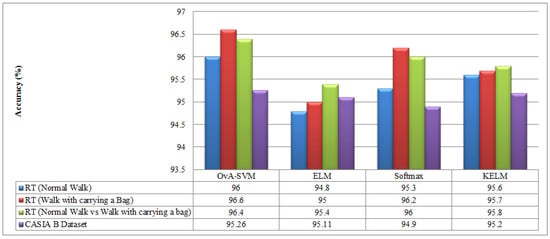

A detailed analysis is also conducted through confidence intervals. For this purpose, the proposed framework is executed 500 times on both datasets. For the real-time dataset, we obtained three accuracy values: maximum (96.8%), minimum (95.3%), and average (96.05%). Based on these values, the standard deviation and standard error of the mean (SEM) are 0.75 and 0.5303, respectively. Similarly, for the CASIA B dataset, the standard deviation and SEM are 0.56 and 0.3959, respectively. The margin of error is computed from these values and plotted in Figure 17 and Figure 18, which show that the accuracy of the proposed framework remains consistent across the selected number of iterations. Moreover, the performance of the proposed framework (OaA-SVM) is compared with a few other classifiers, such as Softmax, ELM, and KELM, as illustrated in Figure 19, where the proposed framework shows better performance.
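The reported dispersion values can be reproduced with a few lines of arithmetic. Treating the maximum and minimum accuracies as two observations, the population standard deviation of 96.8% and 95.3% is 0.75, and dividing by √2 gives the reported SEM of 0.5303; likewise, 0.56/√2 ≈ 0.396 matches the reported 0.3959 for CASIA B. The sketch assumes a 95% confidence level (z = 1.96) for the margin of error, since the level is not stated; `spread_summary` is a hypothetical name.

```python
import math

def spread_summary(values, z=1.96):
    """Mean, population SD, SEM = SD / sqrt(n), and margin of error z * SEM.

    z = 1.96 (95% confidence) is an assumed default.
    """
    n = len(values)
    mean = sum(values) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    sem = sd / math.sqrt(n)
    return mean, sd, sem, z * sem
```

With `spread_summary([96.8, 95.3])`, the margin of error is about 1.04 percentage points, so the interval is roughly 96.05 ± 1.04%.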

Figure 17.

Confidence-interval-based analysis of the proposed method on the real-time dataset.

Figure 18.

Confidence-interval-based analysis of proposed method on CASIA B dataset.

Figure 19.

Comparison of the OvA-SVM classifier with other classification algorithms for gait recognition.

The hyperparameters were selected as follows. Researchers commonly use stochastic gradient descent [48] as the optimization function. However, because of the complex structure of the selected pre-trained ResNet101 model (residual blocks), the Adam optimizer [49] is expected to work better. A common initial learning rate is 0.01. Because the dimensionality of the selected datasets is high, a learning rate of 0.001 was used instead. The mini-batch size depends on the available hardware. Our desktop computer did not have enough resources to train with a mini-batch size of 128, so a value of 64 was selected. The maximum number of epochs is usually 70–100. Because this work uses a large number of video frames, better training accuracy was obtained after 150 epochs. Training results noted during the training process are given in Tables 8 and 9 below.
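To make the optimizer choice concrete, a minimal scalar Adam update is sketched below in plain Python. It follows Kingma and Ba [49] and uses their suggested defaults: β1 = 0.9, β2 = 0.999, ε = 1e-8, and a step size of 0.001. The toy objective f(x) = (x − 3)² and the function names are hypothetical and unrelated to the actual ResNet101 training.

The sketch shows one useful property. Adam divides the bias-corrected first moment by the square root of the second moment, so the first step has a magnitude of approximately the learning rate regardless of the gradient's scale. The learning rate of 0.001 therefore sets the approximate scale of each weight's per-step change.

```python
import math


def adam_minimize(grad, x0, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8, steps=1):
    """Minimize a scalar function with Adam, given its gradient function `grad`."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g      # running mean of gradients (first moment)
        v = beta2 * v + (1 - beta2) * g * g  # running mean of squared gradients (second moment)
        m_hat = m / (1 - beta1 ** t)         # bias correction for the zero initialization
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


def toy_grad(x):
    """Gradient of the hypothetical toy objective f(x) = (x - 3)^2."""
    return 2.0 * (x - 3.0)


# The first step has magnitude ~lr even though the gradient at x = 0 is -6.
x1 = adam_minimize(toy_grad, 0.0, lr=0.001, steps=1)
print(round(x1, 6))  # 0.001
```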

Table 8.

Training accuracy with the selected hyperparameters on the real-time collected dataset.

Table 9.

Training accuracy with the selected hyperparameters on the CASIA B dataset.

5. Conclusions

Human gait recognition is an active research domain and an important biometric application. Gait allows a person's walking style to be characterized from video sequences. The main applications of human gait recognition are video surveillance, crime prevention, and biometrics. In this work, a deep-learning-based method is presented for human gait recognition, both in a real-time environment and on publicly available offline datasets. Transfer learning is applied for feature extraction, and robust features are then selected using a heuristic approach. A correlation formulation is used to fuse the best selected features. Finally, a multiclass one-against-all SVM (OvA-SVM) performs the final classification. The method is evaluated on a real-time captured database and on the CASIA B dataset, where it achieves accuracies of 96.60% and 95.26%, respectively.
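The one-against-all (OvA) decision rule behind the final classifier can be sketched as follows. This is a minimal NumPy illustration under several assumptions. The binary classifiers are linear SVMs trained by full-batch subgradient descent on the L2-regularized hinge loss. The hyperparameters (`lam`, `lr`, `epochs`), the function names, and the toy 2-D data are all hypothetical. It is not the authors' implementation, which operates on the fused deep features.

```python
import numpy as np


def train_binary_linear_svm(X, y, lam=0.01, lr=0.01, epochs=1000):
    """Full-batch subgradient descent on lam/2*||w||^2 + mean hinge loss; y in {-1, +1}."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        active = margins < 1  # only margin violators contribute to the hinge subgradient
        grad_w = lam * w - (y[active][:, None] * X[active]).sum(axis=0) / len(y)
        grad_b = -y[active].sum() / len(y)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def train_ova(X, labels, **kwargs):
    """One-against-all: train one binary SVM per class (that class vs. all others)."""
    classes = np.unique(labels)
    models = [train_binary_linear_svm(X, np.where(labels == c, 1.0, -1.0), **kwargs)
              for c in classes]
    W = np.stack([w for w, _ in models])
    b = np.array([bias for _, bias in models])
    return classes, W, b


def predict_ova(X, classes, W, b):
    """Assign each sample to the class whose binary SVM gives the largest decision score."""
    scores = X @ W.T + b
    return classes[np.argmax(scores, axis=1)]


# Toy data: three well-separated clusters of five points each.
offsets = np.array([[0, 0], [0.5, 0], [0, 0.5], [-0.5, 0], [0, -0.5]])
centers = np.array([[5, 0], [0, 5], [-5, -5]])
X = np.vstack([c + offsets for c in centers]).astype(float)
labels = np.repeat([0, 1, 2], 5)
classes, W, b = train_ova(X, labels)
print((predict_ova(X, classes, W, b) == labels).mean())
```

With K classes, OvA trains K binary models and predicts by taking the arg max of their decision scores. This keeps training linear in the number of classes.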

We conclude that the preprocessing step performed before model learning reduces the error rate. This step is also effective at selecting the best features, although some features that are essential for classification are discarded. Kurtosis-controlled entropy (KcE) is a new heuristic feature-selection technique that runs in less time than metaheuristic techniques. Another new technique, correlation-formulation-based fusion, is used in this work to fuse the best features. We compared the results of this method with existing methods such as PCA and LDA. The newly proposed selection technique gives better results with a shorter computational time on real-time video sequences. Moreover, the correlation-formulation-based fusion increased the information captured about the human walking style, which in turn improved gait-recognition accuracy.

The main drawback of this work is that the correlation-formulation-based feature fusion step adds some redundant features. These features increase the computational time and slightly reduce recognition accuracy. In future work, we will improve the fusion approach and enlarge the database with data from more subjects.
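A simple, hypothetical diagnostic for this kind of redundancy is to flag pairs of fused features whose absolute Pearson correlation exceeds a threshold, and to keep only one feature from each such pair. The NumPy sketch below does this greedily. The 0.95 threshold and the function name are illustrative assumptions and are not part of the proposed fusion method. The sketch also assumes that no column is constant, because a constant column yields undefined correlations.

```python
import numpy as np


def drop_redundant(features: np.ndarray, threshold: float = 0.95) -> np.ndarray:
    """Greedily keep columns whose |Pearson r| with every already-kept column is <= threshold."""
    corr = np.abs(np.corrcoef(features, rowvar=False))  # d x d correlation matrix
    kept = []
    for j in range(features.shape[1]):
        if all(corr[j, k] <= threshold for k in kept):
            kept.append(j)
    return np.array(kept, dtype=int)


# Column 1 is exactly 2 * column 0, so it is redundant; column 2 is only moderately correlated.
X = np.array([[1, 2, 5], [2, 4, 3], [3, 6, 4], [4, 8, 1]], dtype=float)
print(drop_redundant(X))  # [0 2]
```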

Author Contributions

Conceptualization, M.I.S., M.A.K. and M.N.; methodology, M.I.S., M.A.K. and M.N.; software, M.I.S., M.A.K. and M.N.; validation, A.A., S.A. and A.B.; formal analysis, A.A., S.A. and A.B.; investigation, A.A., S.A. and A.B.; resources, A.A., S.A. and A.B.; data curation, M.A.K. and R.D.; writing—original draft preparation, M.A.K., M.I.S. and M.N.; writing—review and editing, R.D. and A.A.; visualization, R.D. and S.A.; supervision, R.D.; project administration, R.D. and A.B.; funding acquisition, R.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data used in this study are available from: https://drive.google.com/file/d/1g0UBj35Gu0HyjGhdid1NMobn2mPk8PyN/view?usp=sharing (accessed on 20 December 2021).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Saleem, F.; Khan, M.A.; Alhaisoni, M.; Tariq, U.; Armghan, A.; Alenezi, F.; Choi, J.-I.; Kadry, S. Human Gait Recognition: A Single Stream Optimal Deep Learning Features Fusion. Sensors 2021, 21, 7584.

2. Bendjillali, R.I.; Beladgham, M.; Merit, K.; Taleb-Ahmed, A. Improved Facial Expression Recognition Based on DWT Feature for Deep CNN. Electronics 2019, 8, 324.

3. Adjabi, I.; Ouahabi, A.; Benzaoui, A.; Taleb-Ahmed, A. Past, Present, and Future of Face Recognition: A Review. Electronics 2020, 9, 1188.

4. Olanrewaju, L.; Oyebiyi, O.; Misra, S.; Maskeliunas, R.; Damasevicius, R. Secure ear biometrics using circular kernel principal component analysis, Chebyshev transform hashing and Bose–Chaudhuri–Hocquenghem error-correcting codes. Signal Image Video Process. 2020, 14, 847–855.

5. Rodrigues, J.D.C.; Filho, P.P.R.; Damasevicius, R.; de Albuquerque, V.H.C. EEG-based biometric systems. In Neurotechnology: Methods, Advances and Applications; The Institution of Engineering and Technology: London, UK, 2020; pp. 97–153. Available online: https://www.researchgate.net/publication/340455635_Neurotechnology_Methods_advances_and_applications (accessed on 17 January 2022).

6. Arshad, H.; Khan, M.A.; Sharif, M.I.; Yasmin, M.; Tavares, J.M.R.S.; Zhang, Y.-D.; Satapathy, S.C. A multilevel paradigm for deep convolutional neural network features selection with an application to human gait recognition. Expert Syst. 2020, 20, 1–21.

7. Sokolova, A.; Konushin, A. Pose-based deep gait recognition. IET Biom. 2018, 8, 134–143.

8. Wang, L.; Li, Y.; Xiong, F.; Zhang, W. Gait Recognition Using Optical Motion Capture: A Decision Fusion Based Method. Sensors 2021, 21, 3496.

9. Kim, H.; Kim, H.-J.; Park, J.; Ryu, J.-K.; Kim, S.-C. Recognition of Fine-Grained Walking Patterns Using a Smartwatch with Deep Attentive Neural Networks. Sensors 2021, 21, 6393.

10. Hwang, T.-H.; Effenberg, A.O. Head Trajectory Diagrams for Gait Symmetry Analysis Using a Single Head-Worn IMU. Sensors 2021, 21, 6621.

11. Khan, M.H.; Li, F.; Farid, M.S.; Grzegorzek, M. Gait recognition using motion trajectory analysis. In Proceedings of the International Conference on Computer Recognition Systems, Polanica Zdroj, Poland, 22–24 May 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 73–82.

12. Manssor, S.A.; Sun, S.; Elhassan, M.A. Real-Time Human Recognition at Night via Integrated Face and Gait Recognition Technologies. Sensors 2021, 21, 4323.

13. Liao, R.; Yu, S.; An, W.; Huang, Y. A model-based gait recognition method with body pose and human prior knowledge. Pattern Recognit. 2019, 98, 107069.

14. Sharif, M.; Attique, M.; Tahir, M.Z.; Yasmim, M.; Saba, T.; Tanik, U.J. A Machine Learning Method with Threshold Based Parallel Feature Fusion and Feature Selection for Automated Gait Recognition. J. Organ. End User Comput. 2020, 32, 67–92.

15. Priya, S.J.; Rani, A.J.; Subathra, M.S.P.; Mohammed, M.A.; Damaševičius, R.; Ubendran, N. Local Pattern Transformation Based Feature Extraction for Recognition of Parkinson’s Disease Based on Gait Signals. Diagnostics 2021, 11, 1395.

16. Khan, S.; Khan, M.A.; Alhaisoni, M.; Tariq, U.; Yong, H.-S.; Armghan, A.; Alenezi, F. Human Action Recognition: A Paradigm of Best Deep Learning Features Selection and Serial Based Extended Fusion. Sensors 2021, 21, 7941.

17. Khan, M.A.; Zhang, Y.-D.; Khan, S.A.; Attique, M.; Rehman, A.; Seo, S. A resource conscious human action recognition framework using 26-layered deep convolutional neural network. Multimed. Tools Appl. 2021, 80, 35827–35849.

18. Khan, M.A.; Javed, K.; Khan, S.A.; Saba, T.; Habib, U.; Khan, J.A.; Abbasi, A.A. Human action recognition using fusion of multiview and deep features: An application to video surveillance. Multimed. Tools Appl. 2020, 1–27.

19. Zebari, D.A.; Ibrahim, D.A.; Zeebaree, D.Q.; Haron, H.; Salih, M.S.; Damaševičius, R.; Mohammed, M.A. Systematic Review of Computing Approaches for Breast Cancer Detection Based Computer Aided Diagnosis Using Mammogram Images. Appl. Artif. Intell. 2021, 1–47.

20. Kassem, M.; Hosny, K.; Damaševičius, R.; Eltoukhy, M. Machine Learning and Deep Learning Methods for Skin Lesion Classification and Diagnosis: A Systematic Review. Diagnostics 2021, 11, 1390.

21. Kumar, V.; Singh, D.; Kaur, M.; Damaševičius, R. Overview of current state of research on the application of artificial intelligence techniques for COVID-19. PeerJ Comput. Sci. 2021, 7, e564.

22. Mehmood, A.; Khan, M.A.; Sharif, M.; Khan, S.A.; Shaheen, M.; Saba, T.; Riaz, N.; Ashraf, I. Prosperous Human Gait Recognition: An end-to-end system based on pre-trained CNN features selection. Multimed. Tools Appl. 2020, 11, 1–21.

23. Anusha, R.; Jaidhar, C.D. Clothing invariant human gait recognition using modified local optimal oriented pattern binary descriptor. Multimed. Tools Appl. 2020, 79, 2873–2896.

24. Khan, M.A.; Akram, T.; Sharif, M.; Muhammad, N.; Javed, M.Y.; Naqvi, S.R. Improved strategy for human action recognition; experiencing a cascaded design. IET Image Process. 2020, 14, 818–829.

25. Kadry, S.; Rajinikanth, V.; Taniar, D.; Damaševičius, R.; Valencia, X.P.B. Automated segmentation of leukocyte from hematological images—A study using various CNN schemes. J. Supercomput. 2021, 1–21.

26. Tanveer, M.; Rashid, A.H.; Ganaie, M.; Reza, M.; Razzak, I.; Hua, K.-L. Classification of Alzheimer’s disease using ensemble of deep neural networks trained through transfer learning. IEEE J. Biomed. Health Inform. 2021.

27. Khan, M.Z.; Saba, T.; Razzak, I.; Rehman, A.; Bahaj, S.A. Hot-Spot Zone Detection to Tackle Covid19 Spread by Fusing the Traditional Machine Learning and Deep Learning Approaches of Computer Vision. IEEE Access 2021, 9, 100040–100049.

28. Rehman, A.; Naz, S.; Razzak, M.I.; Hameed, I.A. Automatic Visual Features for Writer Identification: A Deep Learning Approach. IEEE Access 2019, 7, 17149–17157.

29. Alyasseri, Z.A.A.; Al-Betar, M.A.; Abu Doush, I.; Awadallah, M.A.; Abasi, A.K.; Makhadmeh, S.N.; Alomari, O.A.; Abdulkareem, K.H.; Adam, A.; Damasevicius, R.; et al. Review on COVID-19 diagnosis models based on machine learning and deep learning approaches. Expert Syst. 2021, e12759.

30. Castro, F.M.; Marín-Jiménez, M.J.; Guil, N.; De La Blanca, N.P. Multimodal feature fusion for CNN-based gait recognition: An empirical comparison. Neural Comput. Appl. 2020, 32, 14173–14193.

31. Rawat, W.; Wang, Z. Deep convolutional neural networks for image classification: A comprehensive review. Neural Comput. 2017, 29, 2352–2449.

32. Bari, A.S.M.H.; Gavrilova, M.L. Artificial Neural Network Based Gait Recognition Using Kinect Sensor. IEEE Access 2019, 7, 162708–162722.

33. Zheng, S.; Zhang, J.; Huang, K.; He, R.; Tan, T. Robust view transformation model for gait recognition. In Proceedings of the 2011 18th IEEE International Conference on Image Processing, Brussels, Belgium, 11–14 September 2011; pp. 2073–2076.

34. Wang, X.; Yan, W.Q. Human Gait Recognition Based on Frame-by-Frame Gait Energy Images and Convolutional Long Short-Term Memory. Int. J. Neural Syst. 2019, 30, 1950027.

35. Anusha, R.; Jaidhar, C.D. Human gait recognition based on histogram of oriented gradients and Haralick texture descriptor. Multimed. Tools Appl. 2020, 79, 8213–8234.

36. Zhao, A.; Li, J.; Ahmed, M. SpiderNet: A spiderweb graph neural network for multi-view gait recognition. Knowl. Based Syst. 2020, 206, 106273.

37. Arshad, H.; Khan, M.A.; Sharif, M.; Yasmin, M.; Javed, M.Y. Multi-level features fusion and selection for human gait recognition: An optimized framework of Bayesian model and binomial distribution. Int. J. Mach. Learn. Cybern. 2019, 10, 3601–3618.

38. Ferroukhi, M.; Ouahabi, A.; Attari, M.; Habchi, Y.; Taleb-Ahmed, A. Medical Video Coding Based on 2nd-Generation Wavelets: Performance Evaluation. Electronics 2019, 8, 88.

39. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

40. Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, inception-resnet and the impact of residual connections on learning. arXiv 2016, arXiv:1602.07261.

41. Torrey, L.; Shavlik, J. Transfer learning. In Handbook of Research on Machine Learning Applications and Trends: Algorithms, Methods, and Techniques; IGI Global: Hershey, PA, USA, 2010; pp. 242–264.

42. Naheed, N.; Shaheen, M.; Khan, S.A.; Alawairdhi, M.; Khan, M.A. Importance of Features Selection, Attributes Selection, Challenges and Future Directions for Medical Imaging Data: A Review. Comput. Model. Eng. Sci. 2020, 125, 315–344.

43. Liu, Y.; Zheng, Y.F. One-against-all multi-class SVM classification using reliability measures. In Proceedings of the 2005 IEEE International Joint Conference on Neural Networks, Montreal, QC, Canada, 31 July–4 August 2005; pp. 849–854.

44. Arai, K.; Andrie, R. Gait recognition method based on wavelet transformation and its evaluation with chinese academy of sciences (casia) gait database as a human gait recognition dataset. In Proceedings of the 2012 Ninth International Conference on Information Technology-New Generations, Las Vegas, NV, USA, 16–18 April 2012; pp. 656–661.

45. Arora, P.; Hanmandlu, M.; Srivastava, S. Gait based authentication using gait information image features. Pattern Recognit. Lett. 2015, 68, 336–342.

46. Castro, F.M.; Marín-Jiménez, M.J.; Mata, N.G.; Muñoz-Salinas, R. Fisher motion descriptor for multiview gait recognition. Int. J. Pattern Recognit. Artif. Intell. 2017, 31, 1756002.

47. Agrawal, P.; Abutarboush, H.F.; Ganesh, T.; Mohamed, A.W. Metaheuristic Algorithms on Feature Selection: A Survey of One Decade of Research (2009–2019). IEEE Access 2021, 9, 26766–26791.

48. Amari, S.-I. Backpropagation and stochastic gradient descent method. Neurocomputing 1993, 5, 185–196.

49. Kingma, D.P.; Ba, J.L. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015—Conference Track Proceedings, San Diego, CA, USA, 7–9 May 2015.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).