Abstract

Cache attacks exploit hardware vulnerabilities inherent to modern processors and pose a new threat to Internet of Things (IoT) devices. Intuitively, different cache parameter configurations directly affect attack effectiveness, but existing research on this issue is neither systematic nor comprehensive. This paper focuses on evaluating how different cache parameter configurations affect access-driven attacks. We build a flexible, configurable simulation and verification environment based on the Chipyard framework. To reduce interference from other factors, we establish a baseline for each category of parameter evaluation. We propose a novel evaluation model, called Key Score Scissors Differential (KSSD), and use it to evaluate common private and shared cache parameters under the local and cross-core attack models, respectively: private cache replacement policy, private cache capacity, cache line size, private cache associativity, shared cache capacity, and shared cache associativity. Ours is the first evaluation of the shared cache under a cross-core attack model. The resulting quantitative metrics provide a reliable indication of the information leakage level under a given cache configuration, which is useful to attackers, defenders, and evaluators. Finally, we compare our results with the existing literature and explain in detail where the findings disagree.

1. Introduction

With the rapid development of IoT technology in recent years, the Internet of Everything (IoE) has gradually become a reality. IoT devices often collect sensitive information, such as biometric characteristics and health monitoring data. In addition, they are frequently connected to the cloud, which analyzes and stores their data to reduce cost and ease expansion. As networks grow and new attack methods emerge, IoT devices face a considerable cybersecurity threat [1].

IoT attacks can generally be classified into four categories [2,3,4,5]: physical attacks, cyber-attacks, software attacks, and side-channel attacks. Side-channel attacks exploit information leaked by a system (especially a cryptosystem), such as sound, power consumption, and timing, to break the system, typically by recovering its key [6,7]. Cache attacks are side-channel attacks that pose a significant threat to IoT devices [8,9]; they mainly exploit vulnerabilities inherent to modern processors, which sets them apart from pure software attacks. Nevertheless, like software attacks, they require only malicious code and no additional hardware, and they are difficult to mitigate without degrading performance.

Attackers and security policy developers need a comprehensive, in-depth understanding of cache attacks, including attacker capabilities, the attack environment, and attack targets. Intuitively, different cache configurations, such as the replacement policy and the cache line size, can directly affect attack effectiveness.

However, intuition and experience alone are not enough; a quantitative metric is needed as a guide. This is our primary motivation: (1) the impact of different cache configurations is difficult to determine, let alone quantify, through intuition; (2) a cache parameter may influence attack results positively or negatively, which calls for detailed experimental evaluation; and (3) the same cache configuration may lead to different conclusions under different attack methods.

Several studies have addressed this issue [10,11,12,13,14,15], but they share at least two shortcomings. First, they are not dedicated to analyzing cache parameters and only include experiments with a few cache configurations, so the evaluation is not comprehensive. Second, their evaluations lack a baseline and use different architectures, or processors built with different chip processes, which may introduce interference from other factors such as prefetching. The study in [16] is the closest to our concept; however, it uses time-driven attacks as its primary method for evaluating cache parameters and does not consider cross-core attacks when evaluating the shared cache. Although access-driven attacks are a mainstream attack method, no comparable study exists for them. Motivated by these observations, we perform a systematic and detailed evaluation of common cache parameters using access-driven attacks.

Specifically, the main contributions of this paper are as follows:

- Using the Chipyard framework, we built a simulation and verification environment for RISC-V processors that allows cache parameters to be configured flexibly. We also defined a reference baseline for each parameter evaluation to make comparisons as fair and credible as possible. The Chipyard framework can also be used to design physical chips, and several chips based on it have been validated in silicon [17,18]. Compared with generic simulators such as gem5 [19], the framework gives cycle-accurate results, which increases confidence in our findings.

- To the best of our knowledge, we are the first to perform a detailed and comprehensive evaluation of cache parameters based on access-driven attacks, covering private cache replacement policy, private cache capacity, cache line size, private cache associativity, shared cache capacity, and shared cache associativity.

- We evaluated the private cache and shared cache parameters under the local attack model and the cross-core attack model, respectively. We are the first to evaluate the shared cache under the cross-core attack model, with a focus on the impact of noise payload on cross-core attacks.

- We implemented an access-driven attack on the AES algorithm under different cache configurations. We propose a novel, figurative quantitative evaluation metric called Key Score Scissors Differential (KSSD), which clearly shows how different configurations of each class of cache parameters affect the results of an access-driven cache attack. In addition, we normalize the KSSD to make comparisons easier.

- We summarize the main findings of this paper, compare them with the existing literature, and explain the inconsistencies.

2. Background and Related Work

2.1. Cache Basics

A cache is a small, fast SRAM located between the CPU and main memory [20] that stores copies of recently used data. When a cache miss occurs, the CPU looks for the data in the next level of the storage hierarchy. Because the processor’s memory accesses exhibit temporal and spatial locality, the cache hit rate is very high, which largely alleviates the “memory wall” problem. This cache-memory hierarchy is a conventional means of improving performance, and most modern processors have more than one cache level, each with a different size and function. Taking a two-level cache as an example, the L1 cache, which we refer to as the private cache, is exclusive to each core. The L2 cache, in contrast, can be shared by all cores, which is why we call it the shared cache; its capacity is also much larger than that of the private cache. The last-level cache is usually referred to as the LLC. Hit and miss latencies are processor-specific, but access latency differs significantly between levels of the hierarchy. By comparing the probe time measured during an attack against a time threshold, an attacker can determine whether an access was a hit or a miss.

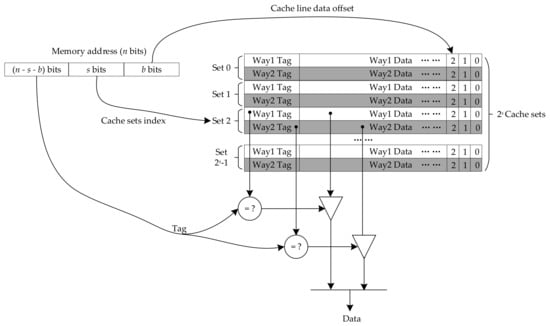

Figure 1 shows a two-way set-associative cache. The access address is divided into three fields, tag, index, and offset; the related cache parameters are described below.

Figure 1.

Example of a two-way set-associative cache.

Cache Line Size (CLS). Data are exchanged between the cache and main memory in units of one cache line. The lowest b bits of the memory access address give the byte offset within the cache line, and $\mathrm{CLS} = 2^{b}$. Private and shared cache line sizes are denoted PCLS and SCLS, respectively, although the two are usually kept identical.

Number of Cache Sets (NCS). Cache can be divided into multiple sets. The s bits in the middle of the memory access address are the index used to find the cache set, and $\mathrm{NCS} = 2^{s}$. The numbers of sets for the private cache and shared cache are represented by number of private cache sets (NPCS) and number of shared cache sets (NSCS), respectively.

Cache Mapping. There are three types of cache mapping: direct mapping, fully associative mapping, and set-associative mapping. In direct mapping, each memory address can be mapped to only one fixed cache line. In fully associative mapping, by contrast, each memory address can be mapped to any cache line. In set-associative mapping, each memory address maps to one fixed cache set but can occupy any cache line within that set. Direct mapping can therefore be seen as one-way set-associative mapping, and fully associative mapping as set-associative mapping with a single cache set. Direct mapping has the simplest structure but is prone to cache thrashing, whereas fully associative mapping minimizes cache thrashing but has the highest design complexity. Set-associative mapping combines the advantages of both, balancing design complexity against cache thrashing, and is used by most processors today.

Cache Associativity (CA). Cache associativity is the number of cache lines within each cache set, which is also the number of cache ways. Private cache associativity (PCA) and shared cache associativity (SCA) represent the associativity of private cache and shared cache.

Cache Capacity (CC). Cache capacity, i.e., the total size of the cache, is derived from CC = CLS × NCS × CA, while PCC and SCC denote private cache capacity and shared cache capacity, respectively.
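As a worked instance of this formula, the configurations described in Sections 3.1 and 4.1.1 imply (this is our inference, since the baseline in Table 3 is not reproduced here) 64 B lines, 64 sets, and 4 ways for the baseline private cache, and 64 B lines, 2048 sets, and 8 ways for the largest shared cache:

$$
\begin{aligned}
\mathrm{PCC} &= \mathrm{PCLS} \times \mathrm{NPCS} \times \mathrm{PCA} = 64\,\mathrm{B} \times 64 \times 4 = 16\,\mathrm{KB},\\
\mathrm{SCC} &= \mathrm{SCLS} \times \mathrm{NSCS} \times \mathrm{SCA} = 64\,\mathrm{B} \times 2048 \times 8 = 1\,\mathrm{MB}.
\end{aligned}
$$

Halving the number of sets while keeping CLS and CA fixed halves the capacity, which is how the capacity sweeps in Sections 3.1.2 and 3.1.5 are constructed.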

Replacement Policy (RP). Because the cache capacity is several orders of magnitude smaller than main memory, cache lines inevitably compete with each other and are replaced during processor operation. In practice, the random replacement policy and the least recently used (LRU) replacement policy are commonly used, and LRU is implemented in two forms: true LRU (TLRU) and pseudo-LRU (PLRU). The replacement policies of the private cache and shared cache are denoted private cache replacement policy (PCRP) and shared cache replacement policy (SCRP), respectively.

Multi-level Cache Inclusiveness. A multi-level cache follows one of three inclusion policies: inclusive, exclusive, and non-inclusive non-exclusive (NINE). Taking a two-level cache as an example, in an inclusive cache the contents of the L1 cache are a subset of the L2 cache; a NINE cache does not enforce inclusion; and an exclusive cache is a special case of a NINE cache in which the two levels are mutually exclusive.

Addressing Mode. Both the index and tag fields can use a physical or virtual address when accessing the cache. Three addressing modes are commonly used, namely virtually indexed virtually tagged (VIVT), physically indexed physically tagged (PIPT), and virtually indexed physically tagged (VIPT).

Because this paper uses many abbreviations, Table 1 lists the frequently used ones for readability.

Table 1.

List of frequently used abbreviations.

2.2. Cache Attacks

Cache attacks are typically classified into three categories [21,22,23]: time-driven attacks [24,25], trace-driven attacks [26,27], and access-driven attacks [28,29,30]. Time-driven attacks collect the overall time of each encryption or decryption and infer the key using statistical analysis, which requires large amounts of sample data and makes the attack inefficient. Trace-driven attacks capture the sequence of cache hits and misses for each access during encryption or decryption; they are more difficult to implement in practice, because these sequences are hard to obtain through timing, and traces obtained through power consumption or electromagnetic emanation are susceptible to noise. In access-driven attacks, the attacker initializes the cache to a specific state and, after the encryption or decryption, probes it again to obtain a “snapshot” of the victim’s memory accesses. Access-driven attacks have become mainstream in recent years because of their higher efficiency; the best-known ones are Evict + Time [29], Prime + Probe [28,29,31,32], Flush + Reload [33,34,35], and Evict + Reload [31,36,37,38]. Among them, Evict + Time is less efficient and is rarely used nowadays. Prime + Probe does not require memory sharing between the attacker and the victim and is the most widely applicable. Flush + Reload requires a cache flush instruction and memory sharing between the attacker and the victim, and its advantage is that its attack granularity is a single cache line. Evict + Reload is typically used when no cache flush instruction is available or the attacker lacks permission to use it; like Flush + Reload, it has cache-line granularity.

Regardless of the specific method, an access-driven attack consists of three main steps from the attacker’s perspective [39].

Step 1 (Initialization): The attacker initializes specific cache sets (lines), either by filling them with its own data or by invalidating them with the flush instruction. This paper refers to this process uniformly as cache initialization.

Step 2 (Wait): The attacker waits for the victim to perform sensitive data operations. Because the attacker and the victim share the cache, the victim may disturb the cache state that the attacker has initialized.

Step 3 (Measurement): The attacker probes the state of the cache and, from the access times, infers which cache sets (lines) the victim has accessed, thereby obtaining the victim’s memory access pattern.

Repeat these steps if multiple attacks are required.

2.3. AES Algorithm

Researchers commonly use AES [40] as the target when assessing system security because of its high security and widespread use. AES evolved from the Rijndael algorithm and has versions with 128-bit, 192-bit, and 256-bit keys; without loss of generality, we focus only on AES with a 128-bit key. AES is an iterative block cipher based on finite field operations, with ten rounds. Every round except the last consists of four operations: substitute bytes, shift rows, mix columns, and add round key; the last round omits the mix columns transformation. Before the first round, the plaintext is XORed with the original key, which is known as key whitening. Encryption requires ten round keys, each expanded from the initial key through nonlinear transformations. Notably, the key expansion algorithm is reversible, so the original key can be recovered as soon as any round key is recovered.

Common cryptographic libraries, such as OpenSSL [41] and Bouncy Castle [31], use a fast software implementation to improve performance, as recommended in the original Rijndael report [40] for software implementations on 32-bit (or 64-bit) processors. The fast implementation combines the byte substitution, row shifting, and column mixing steps into precomputed results stored in five lookup tables, T0, T1, T2, T3, and T4, all of which can be derived from the original S-box. Equation (1) shows the computation for the first nine rounds; each encryption makes 36 accesses to each of the T0–T3 tables. The last round uses a dedicated T4 table, which is accessed 16 times per encryption, as shown in Equation (2).

There are several variants of fast implementations based on T-tables [42,43,44]. Despite the differences in implementation procedures, the attack principles are similar. This paper does not intend to analyze the security of the AES algorithm, so we use the implementation described in the original report [40].

2.4. Chipyard Framework

Moore’s law has begun to break down, and domain-specific architecture has become a new focus of computer architecture research; Hennessy and Patterson [45] described this as a new golden age of computer architecture. Such differentiated architectures inevitably bring new difficulties and challenges in system integration, verification, and related areas. Chipyard is an open-source hardware platform for chip design, simulation and verification, and back-end implementation, developed at the University of California, Berkeley, CA, USA, following agile development principles [17,18]. It is built on the Chisel hardware construction language, the FIRRTL intermediate representation, and the Rocket Chip SoC ecosystem. Many chips designed with the Chipyard framework have been silicon-proven.

2.5. Related Work

Several studies have examined the impact of cache parameters on attack results, but most were not dedicated to this question, so we review only the parts related to cache configuration. As early as 2007, Tiri et al. [10] proposed that the effectiveness of cache attacks is directly related to the cache architecture and attacked different AES implementations on two different processors; however, they compared only two cache line sizes. Domnitser et al. [11] mainly investigated cache associativity in a simulation environment, using conditional probability models for the encryption and decryption of AES and Blowfish, respectively. However, their experiments used only the LRU replacement policy and did not consider random replacement, so the effect of cache associativity on the attack was not fully captured. Demme et al. [12] proposed a generic side-channel leakage metric, the side-channel vulnerability factor (SVF), which in theory applies to any side-channel leakage, not only cache leakage. However, they also ignored the random replacement policy when evaluating cache associativity, and computing the SVF requires a “God’s view” to obtain the so-called “oracle trace”. Zhang et al. [13] discussed the strengths and weaknesses of the SVF [12] and derived from it the cache side-channel vulnerability (CSV) metric, which focuses on cache attacks. Nevertheless, the experimental environments of the two studies are not fully consistent, so it is difficult to judge whether their results are comparable. Doychev et al. [14] proposed a generic framework for the automated static analysis of cache vulnerabilities, which establishes upper bounds on the leakage to the corresponding side-channel adversaries; however, the results are purely theoretical and were not validated by actual attacks. Using their previously proposed three-step model, Deng et al. [15] evaluated cache vulnerabilities on a cloud platform, using Evict + Time attacks and taking the number of vulnerabilities found under each configuration as the evaluation criterion. The work of Yu et al. [16] is the closest to ours: it focuses on evaluating cache configurations and covers most cache parameters. However, it uses the more “outdated” Bernstein attack, a classical timing attack, and it evaluates the shared cache with a local attack model similar to that used for private cache attacks, without considering cross-core attacks.

Table 2 summarizes the evaluation model, attack algorithm, attack method, experimental environment, and cache parameters of each study, together with a brief summary of each paper. Section 4.3 explains the conclusions that are inconsistent with ours.

Table 2.

Summary of existing studies on the impact of cache configuration on cache attacks.

3. Methods

3.1. Cache Parameter Setting and Basic Consideration

Parameter evaluation is divided into two parts. The first is the private cache, whose main parameters are the private cache replacement policy, private cache capacity, cache line size, and private cache associativity. The second is the shared cache, whose main parameters are the shared cache capacity and shared cache associativity. Since cache capacity is a function of the cache line size, the number of cache sets, and the cache associativity, we vary only the number of cache sets when evaluating cache capacity, to reduce interference from other factors.

When evaluating shared cache capacity and associativity, we use a dual-core architecture with two cache levels: each core has its own private cache, and the L2 cache is shared by both cores. The local core cannot directly affect the adjacent core’s private cache, but it can affect the shared cache of both cores. Numerous studies [33,46,47,48,49] have demonstrated that cross-core attacks can be carried out through the shared cache (usually the LLC), provided that inclusion is maintained between cache levels. The local core can evict data from the shared cache; because of inclusion, when data are evicted from the shared cache, the corresponding copies in the private caches are invalidated, which effectively evicts them from the adjacent core’s private cache.

In addition, we set a baseline for each type of parameter evaluation, ensuring that the other parameters remained unchanged as much as possible to avoid other interferences. The specific settings and fundamental considerations for each parameter are described below.

3.1.1. Private Cache Replacement Policy

With the exception of Flush + Reload, access-driven attacks require more evictions to initialize the cache under the random replacement policy, which therefore makes attacks more difficult. This paper focuses on the more general case in which no cache flush instruction is available, so the number of evictions is taken into account in the evaluation. We evaluate three replacement policies: random, TLRU, and PLRU.

3.1.2. Private Cache Capacity

A smaller PCC increases competition for the cache among different programs. The PCC is configured as 16 KB, 8 KB, 4 KB, 2 KB, 1 KB, and 512 B, corresponding to 64, 32, 16, 8, 4, and 2 private cache sets, respectively.

3.1.3. Cache Line Size

An attacker cannot distinguish between data mapped to the same cache line, so the CLS determines the resolution of the attack. In our experimental environment, changing the PCLS changes the SCLS as well, so the CLS is always the same at all cache levels. The environment also limits the size of one cache way to at most one page, i.e., $2^{s+b} \le 4\,\mathrm{KB}$, to avoid cache aliases in the VIVT and VIPT addressing modes. We therefore evaluate the CLS in two cases, with 16 and 64 private cache sets, using the configurations 256B-16Sets, 128B-16Sets, 64B-16Sets, 64B-64Sets, 32B-64Sets, and 16B-64Sets. The 64 B line size is evaluated in both cases to determine the additional impact of the number of sets.

3.1.4. Private Cache Associativity

Direct mapping corresponds to one-way set-associative mapping. Since full associativity is practically infeasible for large caches, we approximate it with a sufficiently large associativity (32); the remaining cases correspond to set-associative mapping.

Associativity can be evaluated in two ways. The first keeps the private cache capacity constant, so the number of private cache sets changes along with the PCA. When the number of private cache sets becomes small, sensitive data are mapped to multiple cache lines within a set, and it becomes impossible to tell whether an effect is due to the number of private cache sets or to the PCA. The second keeps the number of private cache sets constant while changing the PCA, so the total cache capacity changes as well. As long as the capacity remains large enough, its impact on the attack is minimal, which effectively reduces the number of variables; this paper therefore adopts the second method.

The PCA is also closely related to the replacement policy. Under the LRU replacement policy, a cache set can be cleared with a number of evictions equal to the associativity, whereas under the random replacement policy more evictions are needed for the same effect. To expose the impact of associativity as much as possible, we use the random replacement policy when evaluating associativity. In theory, the ideal eviction effect can always be achieved with enough evictions, but the metric proposed in this paper would then not be applicable. We therefore set the number of evictions in each configuration equal to the PCA.

3.1.5. Shared Cache Capacity

A smaller SCC increases competition between the two cores for the shared cache, so the noise payload is taken into account when evaluating this parameter. The SCC is configured by changing the number of shared cache sets while keeping the associativity fixed. Since the SCC is generally several times larger than the private cache capacity, the SCCs evaluated in this paper are 1 MB, 512 KB, 256 KB, 128 KB, 64 KB, 32 KB, and 16 KB, corresponding to 2048, 1024, 512, 256, 128, 64, and 32 shared cache sets, respectively.

3.1.6. Shared Cache Associativity

As with the PCA, a larger SCA makes eviction much more difficult for the attacker. However, a larger SCA also reduces competition between the two cores for the shared cache, and it is difficult to determine which of these two effects dominates. When changing the SCA, we keep the number of shared cache sets unchanged and evaluate it both with and without noise payload, using three configurations: 8-way, 16-way, and 32-way.

3.2. Experimental Setup

Based on [17,50], we developed an experimental environment with a simple microarchitecture to eliminate disturbances caused by mechanisms such as prefetching and out-of-order execution. Using the Verilator tool, a dedicated cycle-accurate simulator can be generated for each configuration. The attack conditions and environment are summarized below.

- All experiments were performed with changes based on the baseline configuration shown in Table 3.

Table 3. Baseline configuration.

- To avoid additional interference from the operating system, we use a bare-metal programming environment.

- AES is implemented in C using the fast software implementation described in Section 2.3. The toolchain and compiler options are `riscv64-unknown-elf-gcc -mcmodel=medany -static -std=gnu99 -O2`.

- We attack the last round of AES. The attacker and the victim share the T4 table, or the attacker knows where the T4 table is located in the cache. The T4 table is aligned to the cache line size.

- The attacker can read the RISC-V processor’s built-in cycle counter to obtain the number of clock cycles executed so far, enabling accurate timing.

- Throughout the experiments, we use a two-level inclusive cache hierarchy. In simulation, DRAM is modeled with the SimDRAM model and has a size of 256 MB; the CPU, bus, and DRAM run at 100 MHz. The private cache is evaluated on a single-core architecture, and the shared cache on a dual-core architecture.

- Each configuration is attacked 50 times in total. The attacker can probe memory only once after each group of plaintexts is encrypted.

This paper aims to describe how different cache parameters affect attack results. The attack assumptions are not its focus, and all of them are within acceptable limits.

3.3. Attack Models

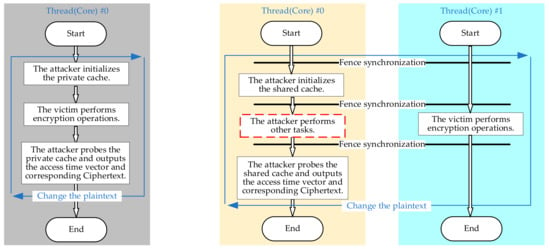

We present two attack models, the local attack model and the cross-core attack model, for evaluating private and shared cache parameters, respectively. Both use synchronous attacks, i.e., the attacker can trigger the victim’s encryption, as shown in Figure 2. In the local attack model, the attacker and the victim reside in the same thread, and interference from other programs is not considered. In the cross-core attack model, the attacker and the victim run in two threads, and the attacker’s thread may run other tasks, which we call the noise payload.

Figure 2.

Local attack model (left) and cross-core attack model (right).

3.4. Evaluation Model

In this paper, we attack the last round of the AES algorithm. The attacker mainly monitors which T4 table entries the victim accesses in the last round. Because data mapped to the same cache line are cached or replaced together, they cannot be distinguished. Moreover, the victim accesses the T4 table 16 times in the last round, and the attacker can probe the cache only after the encryption completes, so these 16 accesses are also indistinguishable. Both factors reduce the attack resolution. Each attack can only narrow each key byte down to multiple candidates rather than identify the correct key byte directly, so multiple attacks are required to recover the correct key bytes.

We use a scoring method: after each attack, the score of each candidate key byte is increased by one. Ideally, the correct key byte’s score increases by one after every encryption, whereas an incorrect candidate’s score increases only some of the time, so the correct key byte stands out once the number of encryptions is large enough. When the noise is large, however, it is difficult to recover the key within a limited number of attacks.

To evaluate attack results, this paper proposes a novel figurative metric, KSSD, which characterizes the difference between the highest score among the candidate key bytes and the scores of the other 255 candidates. Since, in theory, all 16 key bytes are equally hard to attack, the final KSSD is the average of the KSSDs of the 16 key bytes. This metric is a meaningful indicator of the attack result and, from another perspective, of the degree of information leakage from the cache: a larger KSSD indicates more leakage. Algorithm 1 shows how the KSSD is calculated.

| Algorithm 1 Calculating the KSSD after each attack |
|---|
| Input: ciphertext, time vector for accessing the T4 table; Output: KSSD[1:n]. |
| 1. Φhit(1:n) ← Ø, score[0:15][0:255] ← 0, KSSD[1:n] ← 0; |
| 2. for m ← 1 to n do |
| 3. for i ← 0 to 15 do |
| 4. Infer the set Φhit(m) of T4 table entries accessed by the victim; |
| 5. Calculate the set of key byte candidates; |
| 6. for j ← 0 to 255 do |
| 7. if j is in the candidate set from line 5 then |
| 8. score[i][j]++; |
| 9. end if |
| 10. end for |
| 11. end for |
| 12. Calculate the KSSD[m]; |
| 13. end for |
| 14. return KSSD[1:n] |

The algorithm uses a divide-and-conquer approach and attacks the key byte by byte. Line 1 initializes the set Φhit, the array score[][], and the array KSSD[]. Line 4 then uses the probe-time vector obtained in the third step of the attack process and the time threshold to determine which T4 table entries the victim accessed; these entries form the set Φhit(m), where m is the attack index. Line 5 computes the set of candidate key bytes from Φhit(m) and Equation (2), where i denotes the i-th byte; the formula is given in Equation (3). As the subscript indicates, only byte i%4 of each T4 table entry is involved in the computation, with the bytes of an entry numbered byte0 to byte3 from left to right. Lines 6–10 increase the score of each candidate key byte by one. Line 12 computes KSSD[m] after each group of plaintexts is encrypted, using Equation (4), where max() returns the maximum of the given values. Line 14 outputs the final result.

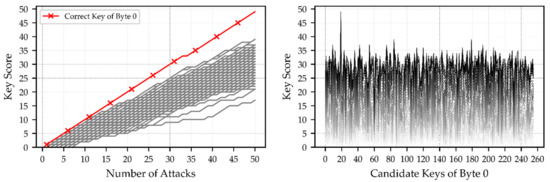

4. Results and Discussion

Figure 3 shows, from two perspectives, the scores of byte 0 of the last-round key obtained with the method described in Section 3. Figure 3 (left) plots how the scores of the correct key byte and of the other candidates grow. As the number of attacks increases, the correct key byte becomes increasingly distinguishable, and its score curve separates from those of the other candidates like the blades of an opening pair of scissors, which is the origin of the name key score scissors differential. Figure 3 (right) shows a clear peak, whose abscissa is the most probable value of the correct key byte.

Figure 3.

Scores of key byte 0 after each attack, shown from two perspectives.

4.1. Evaluation

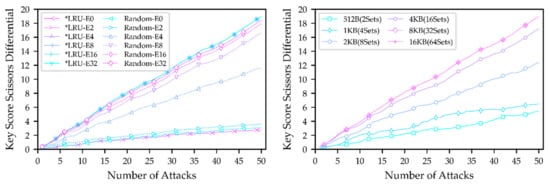

4.1.1. Private Cache Replacement Policy

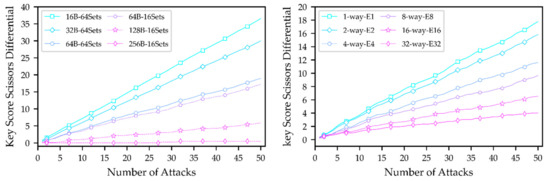

Because this parameter is evaluated with a four-way set-associative cache, how well a cache set is initialized depends strongly on the number of evictions performed. We test 0, 2, 4, 8, 16, and 32 evictions, indicated in the legend by the suffix E*, where * is the number of evictions. Figure 4 (left) presents the evaluation results. PLRU and TLRU yield the same results in all cases; therefore, both are denoted *LRU in the following text.

Figure 4.

Evaluation of private cache replacement policy (left) and private cache capacity (right).

Under *LRU, the attack results fall into two extremes. When the number of evictions is smaller than the associativity, all eviction counts produce the same result: under *LRU, the relevant T4 table entries are necessarily cached in the lines that have not yet been evicted, so the initialized cache state is unaffected and the attacker obtains no useful information. When the number of evictions is greater than or equal to the associativity, the entire cache set is guaranteed to be evicted. As long as the victim accesses the T4 table during execution, the attacker detects the access and obtains key-related information; in this regime, too, the results are the same for all eviction counts.

Under the random replacement policy, evicting every line of a cache set cannot be guaranteed in theory, but as the number of evictions increases, the result gradually approaches that of *LRU-E4. Because the line replaced by each eviction is chosen at random, even fewer evictions than the associativity have some probability of evicting the lines holding T4 table entries, so the KSSD is higher than under *LRU. When the number of evictions is greater than or equal to the associativity, the KSSD is always smaller than under *LRU, and it gradually approaches the *LRU value as the number of evictions grows, because more evictions initialize the cache more thoroughly. Therefore, the protective effect of random replacement diminishes as the number of evictions increases, although it still raises the time complexity of the attack to some extent.

The above conclusions assume that the cache capacity is large relative to the T4 table, i.e., that Equation (5)-1 holds. If the cache capacity is small, self-eviction occurs and affects the evaluation results.
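To make the two regimes concrete, the following Python sketch models a single four-way set whose lines initially belong to the victim and then inserts the attacker's lines one by one. It is a simplified model under stated assumptions: true LRU stands in for *LRU, random replacement picks each replaced way independently and uniformly, and the function names are hypothetical.

```python
from collections import OrderedDict


def lru_survivors(ways, evictions):
    """Victim lines left in one set after `evictions` attacker fills (true LRU).

    The set starts full of victim lines, ordered oldest first; each attacker
    fill evicts the least recently used line and becomes the MRU line.
    """
    cache = OrderedDict((("victim", w), None) for w in range(ways))
    for e in range(evictions):
        cache.popitem(last=False)             # evict the LRU line
        cache[("attacker", e)] = None         # new line is most recently used
    return sum(1 for owner, _ in cache if owner == "victim")


def random_survival(ways, evictions):
    """Probability that one given victim line survives `evictions` fills when
    each fill replaces a uniformly chosen way (independent choices)."""
    return ((ways - 1) / ways) ** evictions


if __name__ == "__main__":
    for k in (0, 2, 4, 8, 16, 32):           # the E* settings of Figure 4
        print(f"E{k}: LRU survivors={lru_survivors(4, k)}, "
              f"random survival={random_survival(4, k):.4f}")
```

Under the LRU model, the number of surviving victim lines drops from 4 to 0 exactly when the eviction count reaches the associativity, whereas under random replacement the survival probability of a given line, ((W - 1)/W)^k for W ways and k evictions, decays geometrically but never reaches zero, matching the two extremes and the gradual approach described above.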

4.1.2. Private Cache Capacity

The evaluation of private cache capacity is divided into three cases, as shown in Equation (5).

Here, T4-size denotes the size of the T4 table, which is 1 KB. The evaluation results are shown in Figure 4 (right). When the PCC is 16 KB or 8 KB, the number of private cache sets (NPCS) is 64 or 32, satisfying Equation (5)-1. In this case, no cache set holds more than one line of the T4 table, and changing the NPCS hardly affects the KSSD. When the NPCS is 16 or 8, the corresponding PCC is 4 KB or 2 KB, satisfying Equation (5)-2. Because the cache also holds other data, a single cache way can no longer accommodate the complete T4 table, so some cache sets hold more than one T4 line. This reduces the space left for other programs, so the initialized cache state is easily destroyed "unintentionally" by other applications, which adds noise to the system and lowers the KSSD. When the NPCS is reduced further to 4 or 2, the corresponding PCC is 1 KB or 512 B, satisfying Equation (5)-3. The whole private cache can then no longer hold the complete T4 table, so T4 lines overlap in their set mappings, the victim suffers self-eviction while accessing the T4 table, and the KSSD drops further.
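The NPCS values quoted above follow from NPCS = PCC / (associativity × line size). The sketch below reproduces them, assuming the four-way, 64 B-line configuration used elsewhere in this section, and additionally reports how many T4 lines fall into one set under a hypothetical contiguous, line-aligned table layout. It does not encode Equation (5) itself and ignores the other data competing for the cache; the helper names are illustrative.

```python
T4_SIZE = 1024  # bytes, from the text (256 entries x 4 bytes)


def npcs(pcc_bytes, ways=4, line_size=64):
    """Number of private cache sets = capacity / (associativity * line size)."""
    return pcc_bytes // (ways * line_size)


def t4_lines_per_set(num_sets, line_size=64):
    """Most T4 lines that map to a single set, assuming the table is
    contiguous and line-aligned (an idealized, hypothetical layout)."""
    t4_lines = T4_SIZE // line_size
    return -(-t4_lines // num_sets)           # ceiling division


if __name__ == "__main__":
    for pcc in (16384, 8192, 4096, 2048, 1024, 512):
        sets = npcs(pcc)
        per_set = t4_lines_per_set(sets)
        note = "self-eviction unavoidable" if per_set > 4 else ""
        print(f"PCC={pcc:>5} B  NPCS={sets:>2}  T4 lines/set={per_set}  {note}")
```

In this idealized layout, T4 lines begin sharing a set at 8 sets (2 KB), fill an entire four-way set at 4 sets (1 KB), and exceed the associativity at 2 sets (512 B), where the victim's self-eviction becomes unavoidable.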

4.1.3. Cache Line Size

Figure 5 (left) shows the evaluation results for the CLS. The smaller the CLS, the larger the KSSD and the more information is leaked, because a smaller CLS increases the attack resolution: each cache line then covers fewer T4 table entries. As the CLS increases, the KSSD decreases, and at 256 B the attacker can barely obtain any useful information. We also compare the additional effect of different numbers of private cache sets using two configurations (64B-64Sets and 64B-16Sets). The results show no significant difference between them; with 16 private cache sets, less space is left for other cached data, which slightly increases noise interference. Thus, although two different numbers of private cache sets were used to evaluate this parameter, this choice does not significantly affect the conclusion of the CLS evaluation.
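The link between CLS and attack resolution can be quantified with a short calculation. Each T4 entry is 4 bytes (1 KB / 256 entries), so a line of CLS bytes covers CLS/4 entries, and every detected line contributes that many key-byte candidates. The sketch below additionally assumes the classical simplification that the 16 last-round T4 lookups of one encryption use independent, uniformly random indices and that the probe is noise-free; `cls_resolution` is a hypothetical helper, and the 32 B setting is included only for illustration.

```python
T4_ENTRIES = 256
ENTRY_SIZE = 4   # bytes per T4 entry: 1 KB table / 256 entries
LOOKUPS = 16     # assumed: 16 last-round T4 lookups per encryption


def cls_resolution(cls):
    """Return (entries per line, T4 lines, P[a given T4 line is untouched],
    expected candidates scored per key byte per attack) for line size `cls`.

    Assumes independent, uniformly random lookup indices and a noise-free
    probe that observes every touched line.
    """
    per_line = cls // ENTRY_SIZE
    lines = T4_ENTRIES // per_line
    p_untouched = (1 - per_line / T4_ENTRIES) ** LOOKUPS
    # Each touched line contributes `per_line` candidates, so the expected
    # number of scored candidates is 256 * P[line touched].
    expected_candidates = T4_ENTRIES * (1 - p_untouched)
    return per_line, lines, p_untouched, expected_candidates


if __name__ == "__main__":
    for cls in (32, 64, 128, 256):
        per_line, lines, p, cand = cls_resolution(cls)
        print(f"CLS={cls:>3} B: {per_line} entries/line, {lines} lines, "
              f"P(untouched)={p:.3f}, candidates/attack={cand:.1f}")
```

Under these assumptions, a given T4 line is untouched in roughly 36% of encryptions with 64 B lines but only about 1% with 256 B lines, so with 256 B lines nearly all 256 candidates receive a point in every attack and the correct key byte barely stands out.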

Figure 5.

Evaluation of cache line size (left) and private cache associativity (right).

4.1.4. Private Cache Associativity

Figure 5 (right) shows the results of the PCA evaluation. When the PCA is 1, the cache is direct-mapped, and the attack is most effective because the random replacement policy has no effect. As the PCA increases, the KSSD gradually decreases, because it becomes harder for the attacker to bring the cache into a controlled state, and incomplete evictions introduce additional noise. In the current evaluation, the number of evictions equals the associativity. If the same number of evictions were used for all configurations instead, the differences between the results would widen further.
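Under random replacement, the probability that a given old line survives k evictions in a W-way set is ((W - 1)/W)^k. The Python sketch below evaluates it for evictions equal to the PCA, as in this evaluation, and for a hypothetical common count of 16 evictions, to illustrate both the gradual decrease in KSSD and why a fixed eviction count would widen the differences. It models only how cleanly the set is evicted, not the attack itself.

```python
def survival(ways, evictions):
    """P[a given old line survives `evictions` fills] under uniformly random
    replacement in a `ways`-way set (independent replacement choices)."""
    return ((ways - 1) / ways) ** evictions


if __name__ == "__main__":
    FIXED = 16  # hypothetical common eviction count for all configurations
    for ways in (1, 2, 4, 8, 16):
        print(f"PCA={ways:>2}  evictions=PCA: {survival(ways, ways):.4f}  "
              f"evictions={FIXED}: {survival(ways, FIXED):.6f}")
```

With evictions equal to the PCA, the leftover probability rises from 0 for a direct-mapped cache to about 0.36 for 16 ways; with 16 evictions for every configuration, low-associativity sets become almost perfectly clean while the 16-way set is unchanged, so the spread between configurations grows.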

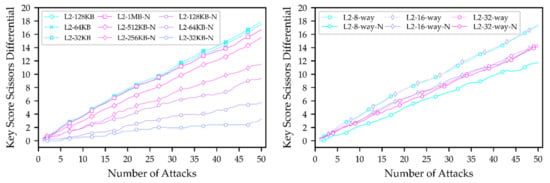

4.1.5. Shared Cache Capacity

Figure 6 (left) shows the SCC evaluation results. Without a noise payload on the attacker thread, the SCC has almost no effect on the results, because the shared cache is large compared with the T4 table, so changing its size has little impact. However, when the SCC is small, the attacking program's own data also occupy part of the shared cache, evicting some T4 table entries and slightly lowering the KSSD. Reducing the SCC further should, in theory, lower the KSSD further, but such a small shared cache would be impractical.

Figure 6.

Evaluation of shared cache capacity (left) and shared cache associativity (right).

Results obtained with the noise payload running concurrently on the attacker thread are shown as solid lines in Figure 6 (left), with the suffix -N in the legend. In this case, the SCC has a much larger effect: the smaller the capacity, the less leakage. Because the noise payload accesses all parts of the cache uniformly, reducing the capacity increases the number of noise accesses per cache set. The originally cached T4 table entries are then more likely to be evicted, which increases noise interference and lowers the KSSD.
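The effect of noise on the shared cache can be illustrated with a back-of-the-envelope model. Assuming (hypothetically) that the noise payload causes a fixed number of shared-cache fills per attack window, spread evenly over all sets, and that each fill replaces a uniformly random way, a cached T4 line survives with probability about (1 - 1/W)^(fills per set). The capacities, eight-way associativity, and noise level below are illustrative values, not the configurations of Figure 6, and the function name is hypothetical.

```python
def t4_survival_under_noise(capacity, noise_fills, ways=8, line_size=64):
    """Approximate P[a cached T4 line survives the noise] at a given capacity.

    Hypothetical assumptions: noise fills are spread evenly over all sets,
    each fill replaces a uniformly random way, and the average per-set
    count is used as if it were exact.
    """
    sets = capacity // (ways * line_size)
    fills_per_set = noise_fills / sets
    return (1 - 1 / ways) ** fills_per_set


if __name__ == "__main__":
    NOISE_FILLS = 4096            # hypothetical noise fills per attack window
    for kib in (128, 256, 512):   # hypothetical shared cache capacities
        p = t4_survival_under_noise(kib * 1024, NOISE_FILLS)
        print(f"SCC={kib} KiB: T4 line survives with p={p:.3f}")
```

Halving the capacity doubles the fills per set, so in this toy setting the survival probability of a T4 line falls from about 0.59 at 512 KiB to about 0.12 at 128 KiB, in line with the lower KSSD observed for smaller SCCs under noise.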

4.1.6. Shared Cache Associativity

For all SCA configurations, we set the number of evictions to 64; the results are shown in Figure 6 (right), where the suffix -N denotes the noise payload. As with PCA, under the random replacement policy, the larger the associativity, the less leakage. Because the shared cache is inclusive of both the instruction and data caches of both cores, it is more susceptible to unintentional eviction by other data, which makes the results of the L2-way-8 and L2-way-16 configurations comparable. When the attack thread runs the noise payload concurrently, however, the system's noise tolerance increases with SCA. These two factors counteract each other and weaken the influence of associativity, to a degree that depends on the intensity of the noise payload. In summary, without noise interference, the larger the associativity, the smaller the KSSD; conversely, the smaller the associativity, the more sensitive and vulnerable the cache is to noise interference.
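The two opposing effects of SCA can be illustrated with the same kind of model, assuming random replacement in the shared cache. With the 64 evictions used here, a larger associativity leaves a higher probability that an old line survives the attacker's evictions (a less clean initial state), while at a fixed capacity it also raises the chance that a cached T4 line survives evenly spread noise fills. The capacity, noise level, and function names in the sketch are hypothetical.

```python
EVICTIONS = 64  # eviction count used for all SCA configurations in the text


def dirty_prime(ways, evictions=EVICTIONS):
    """P[a given old line survives the attacker's evictions]; higher means a
    less clean initial state (uniformly random replacement assumed)."""
    return (1 - 1 / ways) ** evictions


def noise_tolerance(ways, capacity, noise_fills, line_size=64):
    """P[a cached T4 line survives evenly spread noise fills] at fixed capacity."""
    sets = capacity // (ways * line_size)
    return (1 - 1 / ways) ** (noise_fills / sets)


if __name__ == "__main__":
    CAP, NOISE = 256 * 1024, 4096  # hypothetical capacity and noise level
    for ways in (2, 4, 8, 16):
        print(f"L2-way-{ways}: dirty prime p={dirty_prime(ways):.2e}, "
              f"noise survival p={noise_tolerance(ways, CAP, NOISE):.3f}")
```

Both probabilities grow with associativity: the first reduces leakage, while the second improves noise tolerance, which is one way to see why the two factors partly cancel when the noise payload is active.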

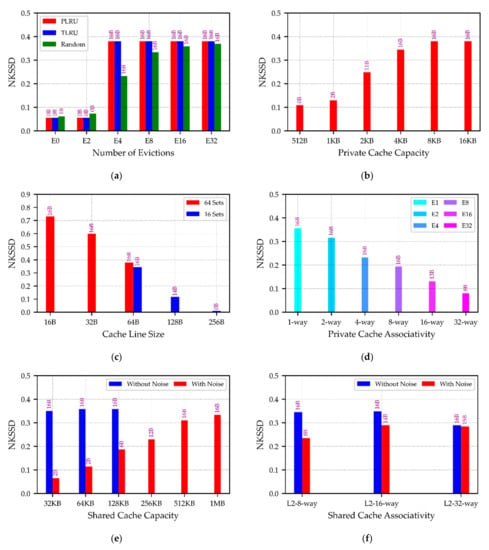

4.2. Normalization

Ideally, after 50 attacks, the correct key byte scores 50 and every other candidate scores 0, which gives the maximum KSSD of 50. Achieving this would require the attacker to observe every memory access of the victim without any noise interference, which is unrealistic. In the worst case, the KSSD is 0, and the attacker gains no information. Experimentally, the KSSD grows roughly linearly with the number of attacks. To ease analysis and comparison, the KSSD of each configuration after n attacks is normalized; the result, called the normalized key score scissors differential (NKSSD), is NKSSD = KSSD[n]/n, where n is the number of attacks (n = 50 in this paper). The normalized results are shown in Figure 7.
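The normalization is a one-line computation. The sketch below also includes a least-squares slope through the origin, a hypothetical cross-check that relies on the near-linear growth of the KSSD noted above; it is not part of the method described in this paper.

```python
def nkssd(kssd):
    """NKSSD = KSSD[n] / n, where kssd = [KSSD[1], ..., KSSD[n]]."""
    return kssd[-1] / len(kssd)


def slope_through_origin(kssd):
    """Least-squares slope of KSSD[m] versus m for a line through the origin.
    Hypothetical cross-check of the near-linear growth, not the paper's method."""
    ms = range(1, len(kssd) + 1)
    return sum(m * k for m, k in zip(ms, kssd)) / sum(m * m for m in ms)


if __name__ == "__main__":
    ideal = list(range(1, 51))   # KSSD[m] = m: the ideal attack
    print(nkssd(ideal), slope_through_origin(ideal))
```

For the ideal attack (KSSD[m] = m), both quantities equal 1, i.e., the maximum KSSD of 50 after 50 attacks corresponds to NKSSD = 1.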

Figure 7.

NKSSD in different configurations. Panels (a–f) show the NKSSD for the private cache replacement policy, private cache capacity, cache line size, private cache associativity, shared cache capacity, and shared cache associativity under different configurations. A higher bar indicates a larger NKSSD and more leakage from the cache. The number above each bar is the number of key bytes that can be inferred after 50 attacks. In general, the larger the NKSSD, the more key bytes can be inferred; even when all key bytes can be recovered, the NKSSD can still be used to compare the degree of leakage of different cache configurations.

4.3. Discussion

Table 4 summarizes the main findings of this paper and compares them with previous studies. Several key points are discussed in this section.

Table 4.

Comparison of the main findings of this paper with previous literature studies.

4.3.1. Comparison with Other Literature Findings Based on Timing-Driven Attacks

Few studies evaluate cache parameters under timing-driven attacks, and [10] does not evaluate against a baseline, so it is of limited reference value. We therefore compare mainly with [16]. Because the attack methods differ, agreement or disagreement between conclusions does not by itself show which is right or wrong; we explain the differences mainly in terms of the underlying principles. The study in [16] is based on Bernstein's attack, in which a higher cache miss rate makes the attack more effective. This explains why the evaluation of the replacement policy leads to the opposite conclusion. Regarding cache line size, timing-driven attacks exploit the total encryption time, whereas access-driven attacks depend on timing differences between individual accesses, so the conclusion on cache line size in [16] is reasonable for its setting. For private cache capacity and associativity, larger values lower the cache miss rate and reduce leakage, so [16] reaches conclusions consistent with ours. Shared cache capacity and associativity are still evaluated under the local attack model in [16], so those findings are not comparable to ours.

4.3.2. Comparison with Other Literature Findings Based on Access-Driven Attacks

Here, we focus on the inconsistent findings. For the private cache replacement policy, [14] concludes from static analysis that PLRU leaks more information than TLRU. Our conclusions are drawn from actual attacks under more specific attack conditions, whereas [14] is closer to a theoretical analysis; we therefore attribute the discrepancy to the limitations of our attack model, which does not fully reveal the differences between TLRU and PLRU. For all other parameters evaluated in both studies, our findings agree with [14].

The study in [12] reaches conclusions different from ours for both cache line size and private cache associativity. We consider its conclusion on cache line size questionable: both theoretical analysis and experimental validation (including other studies) show that cache line size significantly affects attack effectiveness. For private cache associativity, [12] was evaluated under the LRU replacement policy, under which we also find that private cache associativity has a limited effect. Moreover, once other factors are taken into account, one might also conclude that private cache associativity has no clear-cut effect, so we do not regard this as a fundamental contradiction.

4.3.3. Flush + Reload Attacks

Evaluating cache associativity with the Flush + Reload attack may lead to the opposite conclusion from other access-driven attacks, because Flush + Reload operates directly on individual cache lines (by flushing them) during initialization. The attack is therefore unaffected by the random replacement policy and by cache associativity during the initialization phase. Instead, higher associativity increases noise tolerance, which favors the attack. This paper considers the general case and does not include specific experiments on Flush + Reload attacks; on this point, we agree with the conclusion of [14].

4.3.4. Time Complexity

Different configurations require different attack times; for example, higher associativity requires more evictions and thus a longer attack. However, access-driven attacks are efficient enough that attack time is not a significant factor, so this paper gives it little consideration, although [12] also uses it as an evaluation metric. In contrast, time complexity must be considered under the timing-driven attack model.

4.3.5. Relativity

Because the attack results depend on many factors and the KSSD varies with the attack conditions, KSSD values are meaningfully comparable only against a common baseline. For example, the NKSSD obtained with a 2 KB PCC in the private cache capacity evaluation is larger than that obtained with a 128 B CLS in the cache line size evaluation, yet fewer key bytes are recovered in the former case than in the latter.

Moreover, the KSSD can still measure the level of leakage even when all 16 key bytes are recovered, an advantage over the equivalent key length method proposed in [16].

4.3.6. Correlation

In general, the KSSD correlates positively with the number of key bytes that can be recovered. In very noisy systems, however, the highest score used to compute the KSSD may not belong to the correct key byte, which distorts the KSSD to some degree. There are two remedies: increasing the number of encryptions, or replacing the highest score in Equation (4) with the score of the correct key byte. The second method requires the attacker (evaluator) to know the correct key bytes; the result is then called an a posteriori KSSD.
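The difference between the ordinary (a priori) and the a posteriori KSSD for a single key byte can be sketched as follows. Equation (4) is assumed here to be the reference score minus the mean of the other 255 scores, and the scores are hypothetical, chosen so that a wrong candidate narrowly outscores the correct one; `byte_kssd` is an illustrative name.

```python
from statistics import mean


def byte_kssd(scores, correct=None):
    """Per-byte KSSD under an ASSUMED Equation (4): reference score minus the
    mean of the other 255 scores. correct=None uses the highest score
    (a priori); passing the true key byte gives the a posteriori KSSD."""
    ref = max(range(256), key=scores.__getitem__) if correct is None else correct
    others = [s for j, s in enumerate(scores) if j != ref]
    return scores[ref] - mean(others)


if __name__ == "__main__":
    # Hypothetical noisy scores: a wrong candidate (0x3A) edges out the
    # true key byte (0x2B).
    scores = [10] * 256
    scores[0x3A], scores[0x2B] = 30, 28
    print(round(byte_kssd(scores), 2), round(byte_kssd(scores, correct=0x2B), 2))
```

In this example, the a priori value is computed from the wrong candidate and slightly overstates the leakage about the true key byte; the a posteriori value uses the correct byte's score and is the more faithful measure when the key is known.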

4.3.7. Limitations

The specific attack model and environment used in this paper have limitations, so the findings may not generalize to all algorithms or to novel attacks. For example, our evaluation model shows no difference between the TLRU and PLRU replacement policies, whereas novel attacks may distinguish them: Xiong et al. [51] constructed a covert channel based on LRU states in which TLRU and PLRU behave differently.

In addition, this paper does not evaluate the instruction cache.

5. Conclusions

This paper investigates the impact of different cache configurations on access-driven attacks. Using a highly parameterized simulation and verification environment, we systematically and comprehensively evaluate six common cache parameters: private cache replacement policy, private cache capacity, cache line size, private cache associativity, shared cache capacity, and shared cache associativity. The study in [16] covers extensive work under timing-driven attacks. Because access-driven attacks are the most common type, this paper is the first to evaluate cache parameters specifically under access-driven attacks; it thus complements and contrasts with [16], and the evaluation results can serve as a reference for attackers, defenders, and evaluators. We propose an intuitive evaluation metric, the KSSD, and show it to be a reasonable measure of cache vulnerability under different cache configurations. We also compare our findings with those of previous studies and explain the discrepancies. In particular, we are the first to evaluate the shared cache under a cross-core attack scenario.

This work evaluates only part of the microarchitecture; systematically evaluating the vulnerability of the whole microarchitecture remains an extremely challenging direction for future research.

Author Contributions

Conceptualization, P.G. and Y.Y.; Methodology, P.G., B.Y., L.Z. and C.Z.; Software, P.G., C.Z. and L.Z.; Validation, P.G., Y.Y. and T.S.; Formal Analysis, P.G. and L.C.; Investigation, P.G., T.S. and L.C.; Resources, P.G., B.Y. and C.Z.; Data Curation, P.G., Y.Y. and B.Y.; Writing—Original Draft Preparation, P.G., Y.Y., L.Z. and C.Z.; Writing—Review and Editing, P.G., Y.Y., B.Y., C.Z. and L.Z.; Visualization, P.G. and T.S.; Supervision, Y.Y. and L.C.; Project Administration, Y.Y. and L.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

All data included in this study are available upon request from pfguo_hit@126.com.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Noor, M.B.M.; Hassan, W.H. Current Research on Internet of Things (IoT) Security: A Survey. Comput. Netw. 2019, 148, 283–294.

2. Aloseel, A.; He, H.; Shaw, C.; Khan, M.A. Analytical Review of Cybersecurity for Embedded Systems. IEEE Access 2021, 9, 961–982.

3. Panchal, A.C.; Khadse, V.M.; Mahalle, P.N. Security Issues in IIoT: A Comprehensive Survey of Attacks on IIoT and Its Countermeasures. In Proceedings of the 2018 IEEE Global Conference on Wireless Computing and Networking (GCWCN), Lonavala, India, 23–24 November 2018; pp. 124–130.

4. Deogirikar, J.; Vidhate, A. Security Attacks in IoT: A Survey. In Proceedings of the 2017 International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC), Palladam, India, 10–11 February 2017; pp. 32–37.

5. Higgins, C.; McDonald, L.; Ijaz Ul Haq, M.; Hakak, S. IoT Hardware-Based Security: A Generalized Review of Threats and Countermeasures. In Security and Privacy in the Internet of Things; Awad, A.I., Abawajy, J., Eds.; Wiley: Hoboken, NJ, USA, 2021; pp. 261–296. ISBN 978-1-119-60774-8.

6. Koeune, F.; Standaert, F.-X. A Tutorial on Physical Security and Side-Channel Attacks. In Foundations of Security Analysis and Design III: FOSAD 2004/2005 Tutorial Lectures; Aldini, A., Gorrieri, R., Martinelli, F., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; pp. 78–108. ISBN 978-3-540-31936-8.

7. Standaert, F.-X. Introduction to Side-Channel Attacks. In Secure Integrated Circuits and Systems; Verbauwhede, I.M.R., Ed.; Integrated Circuits and Systems; Springer US: Boston, MA, USA, 2010; pp. 27–42. ISBN 978-0-387-71827-9.

8. Takarabt, S.; Schaub, A.; Facon, A.; Guilley, S.; Sauvage, L.; Souissi, Y.; Mathieu, Y. Cache-Timing Attacks Still Threaten IoT Devices. In Codes, Cryptology and Information Security; Carlet, C., Guilley, S., Nitaj, A., Souidi, E.M., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2019; Volume 11445, pp. 13–30. ISBN 978-3-030-16457-7.

9. Polychronou, N.F.; Thevenon, P.-H.; Puys, M.; Beroulle, V. Securing IoT/IIoT from Software Attacks Targeting Hardware Vulnerabilities. In Proceedings of the 2021 19th IEEE International New Circuits and Systems Conference (NEWCAS), Toulon, France, 13–16 June 2021; pp. 1–4.

10. Tiri, K.; Acıiçmez, O.; Neve, M.; Andersen, F. An Analytical Model for Time-Driven Cache Attacks. In Fast Software Encryption; Biryukov, A., Ed.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2007; Volume 4593, pp. 399–413. ISBN 978-3-540-74617-1.

11. Domnitser, L.; Abu-Ghazaleh, N.; Ponomarev, D. A Predictive Model for Cache-Based Side Channels in Multicore and Multithreaded Microprocessors; Springer: Berlin/Heidelberg, Germany, 2010; pp. 70–85.

12. Demme, J.; Martin, R.; Waksman, A.; Sethumadhavan, S. Side-Channel Vulnerability Factor: A Metric for Measuring Information Leakage. SIGARCH Comput. Archit. News 2012, 40, 106–117.

13. Zhang, T.; Liu, F.; Chen, S.; Lee, R.B. Side Channel Vulnerability Metrics: The Promise and the Pitfalls. In Proceedings of the 2nd International Workshop on Hardware and Architectural Support for Security and Privacy, Tel-Aviv, Israel, 23–24 June 2013; Association for Computing Machinery: New York, NY, USA, 2013.

14. Doychev, G.; Köpf, B.; Mauborgne, L.; Reineke, J. CacheAudit: A Tool for the Static Analysis of Cache Side Channels. ACM Trans. Inf. Syst. Secur. 2015, 18, 1–32.

15. Deng, S.; Matyunin, N.; Xiong, W.; Katzenbeisser, S.; Szefer, J. Evaluation of Cache Attacks on Arm Processors and Secure Caches. IEEE Trans. Comput. 2021, 1.

16. Yu, X.; Xiao, Y.; Cameron, K.; Yao, D. (Daphne). Comparative Measurement of Cache Configurations’ Impacts on Cache Timing Side-Channel Attacks. In Proceedings of the 12th USENIX Workshop on Cyber Security Experimentation and Test (CSET 19), Santa Clara, CA, USA, 12 August 2019; USENIX Association: Santa Clara, CA, USA, 2019.

17. Amid, A.; Biancolin, D.; Gonzalez, A.; Grubb, D.; Karandikar, S.; Liew, H.; Magyar, A.; Mao, H.; Ou, A.; Pemberton, N.; et al. Chipyard: Integrated Design, Simulation, and Implementation Framework for Custom SoCs. IEEE Micro 2020, 40, 10–21.

18. Dörflinger, A.; Albers, M.; Kleinbeck, B.; Guan, Y.; Michalik, H.; Klink, R.; Blochwitz, C.; Nechi, A.; Berekovic, M. A Comparative Survey of Open-Source Application-Class RISC-V Processor Implementations. In Proceedings of the 18th ACM International Conference on Computing Frontiers, Virtual Event, Italy, 11–13 May 2021; ACM: New York, NY, USA, 2021; pp. 12–20.

19. Binkert, N.; Beckmann, B.; Black, G.; Reinhardt, S.K.; Saidi, A.; Basu, A.; Hestness, J.; Hower, D.R.; Krishna, T.; Sardashti, S.; et al. The Gem5 Simulator. SIGARCH Comput. Archit. News 2011, 39, 1–7.

20. Davis, A.; Schuette, M.; Franklin, D.; Thomasian, A.; Mekhiel, N.; Hu, Y.; Baker, R.J.; Smith, J. Cache, DRAM, Disk. In Memory Systems; Elsevier: Amsterdam, The Netherlands, 2008; pp. i–ii. ISBN 978-0-12-379751-3.

21. Ge, Q.; Yarom, Y.; Cock, D.; Heiser, G. A Survey of Microarchitectural Timing Attacks and Countermeasures on Contemporary Hardware. J. Cryptogr. Eng. 2018, 8, 1–27.

22. Savaş, E.; Yılmaz, C. A Generic Method for the Analysis of a Class of Cache Attacks: A Case Study for AES. Comput. J. 2015, 58, 2716–2737.

23. Lou, X.; Zhang, T.; Jiang, J.; Zhang, Y. A Survey of Microarchitectural Side-Channel Vulnerabilities, Attacks, and Defenses in Cryptography. ACM Comput. Surv. 2021, 54, 1–37.

24. Bernstein, D. Cache-Timing Attacks on AES; The University of Illinois at Chicago: Chicago, IL, USA, 2005.

25. Spreitzer, R.; Plos, T. On the Applicability of Time-Driven Cache Attacks on Mobile Devices. In International Conference on Network and System Security; Lopez, J., Huang, X., Sandhu, R., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 656–662.

26. Acıiçmez, O.; Koç, Ç.K. Trace-Driven Cache Attacks on AES (Short Paper); Springer: Berlin/Heidelberg, Germany, 2006; pp. 112–121.

27. Kizhvatov, I. Error-Tolerance in Trace-Driven Cache Collision Attacks; University of Luxembourg: Luxembourg, 2011.

28. Neve, M.; Seifert, J.-P. Advances on Access-Driven Cache Attacks on AES; Springer: Berlin/Heidelberg, Germany, 2007; pp. 147–162.

29. Tromer, E.; Osvik, D.A.; Shamir, A. Efficient Cache Attacks on AES, and Countermeasures. J. Cryptol. 2010, 23, 37–71.

30. Zhang, L.; Ding, A.A.; Fei, Y.; Jiang, Z.H. Statistical Analysis for Access-Driven Cache Attacks Against AES. IACR Cryptol. ePrint Arch. 2016, 2016, 970.

31. Lipp, M.; Gruss, D.; Spreitzer, R.; Maurice, C.; Mangard, S. ARMageddon: Cache Attacks on Mobile Devices. In Proceedings of the 25th USENIX Conference on Security Symposium, Austin, TX, USA, 10–12 August 2016; USENIX Association: Berkeley, CA, USA, 2016; pp. 549–564.

32. Kayaalp, M.; Abu-Ghazaleh, N.; Ponomarev, D.; Jaleel, A. A High-Resolution Side-Channel Attack on Last-Level Cache. In Proceedings of the 53rd Annual Design Automation Conference, Austin, TX, USA, 5–9 June 2016; ACM: New York, NY, USA, 2016; pp. 1–6.

33. Yarom, Y.; Falkner, K. FLUSH+RELOAD: A High Resolution, Low Noise, L3 Cache Side-Channel Attack. In Proceedings of the 23rd USENIX Security Symposium (USENIX Security 14), San Diego, CA, USA, 20–22 August 2014; USENIX Association: San Diego, CA, USA, 2014; pp. 719–732.

34. Liu, F.; Yarom, Y.; Ge, Q.; Heiser, G.; Lee, R.B. Last-Level Cache Side-Channel Attacks Are Practical. In Proceedings of the 2015 IEEE Symposium on Security and Privacy, San Jose, CA, USA, 17–21 May 2015; pp. 605–622.

35. Gulmezoglu, B.; Inci, M.S.; Irazoqui, G.; Eisenbarth, T.; Sunar, B. Cross-VM Cache Attacks on AES. IEEE Trans. Multi-Scale Comp. Syst. 2016, 2, 211–222.

36. Green, M.; Rodrigues-Lima, L.; Zankl, A.; Irazoqui, G.; Heyszl, J.; Eisenbarth, T. AutoLock: Why Cache Attacks on ARM Are Harder than You Think. In Proceedings of the 26th USENIX Conference on Security Symposium, Vancouver, BC, Canada, 16–18 August 2017; USENIX Association: Berkeley, CA, USA, 2017; pp. 1075–1091.

37. Gruss, D.; Spreitzer, R.; Mangard, S. Cache Template Attacks: Automating Attacks on Inclusive Last-Level Caches. In Proceedings of the 24th USENIX Security Symposium (USENIX Security 15), Washington, DC, USA, 12–14 August 2015; USENIX Association: Berkeley, CA, USA, 2015; pp. 897–912.

38. Lipp, M. Cache Attacks and Rowhammer on ARM. Master’s Thesis, Graz University of Technology, Graz, Austria, 2016.

39. Deng, S.; Xiong, W.; Szefer, J. Analysis of Secure Caches Using a Three-Step Model for Timing-Based Attacks. J. Hardw. Syst. Secur. 2019, 3, 397–425.

40. Daemen, J.; Rijmen, V. The Design of Rijndael: AES—The Advanced Encryption Standard; Information Security and Cryptography; Springer: Berlin/Heidelberg, Germany, 2002; ISBN 978-3-642-07646-6.

41. Zhao, X.-J.; Wang, T.; Guo, S.-Z.; Zheng, Y.-Y. Access Driven Cache Timing Attack Against AES. J. Softw. 2011, 22, 572–591.

42. Bonneau, J.; Mironov, I. Cache-Collision Timing Attacks Against AES. In Proceedings of the Cryptographic Hardware and Embedded Systems—CHES 2006; Goubin, L., Matsui, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 201–215.

43. Wan, Y.; Luo, X.; Qi, Y.; He, J.; Wang, Q. Access-Driven Cache Attack Resistant and Fast AES Implementation. Int. J. Embed. Syst. 2018, 10, 32.

44. Sepúlveda, J.; Gross, M.; Zankl, A.; Sigl, G. Beyond Cache Attacks: Exploiting the Bus-Based Communication Structure for Powerful On-Chip Microarchitectural Attacks. ACM Trans. Embed. Comput. Syst. 2021, 20, 1–23.

45. Hennessy, J.L.; Patterson, D.A. A New Golden Age for Computer Architecture. Commun. ACM 2019, 62, 48–60.

46. Maurice, C.; Neumann, C.; Heen, O.; Francillon, A. C5: Cross-Cores Cache Covert Channel. In International Conference on Detection of Intrusions and Malware, and Vulnerability Assessment; Almgren, M., Gulisano, V., Maggi, F., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 46–64.

47. Weiß, M.; Weggenmann, B.; August, M.; Sigl, G. On Cache Timing Attacks Considering Multi-Core Aspects in Virtualized Embedded Systems. In International Conference on Trusted Systems; Yung, M., Zhu, L., Yang, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 151–167.

48. Irazoqui, G. Cross-Core Microarchitectural Attacks and Countermeasures. Ph.D. Thesis, Worcester Polytechnic Institute, Worcester, MA, USA, 2017.

49. Kaur, J.; Das, S. A Survey on Cache Timing Channel Attacks for Multicore Processors. J. Hardw. Syst. Secur. 2021, 5, 169–189.

50. Asanović, K.; Avizienis, R.; Bachrach, J.; Beamer, S.; Biancolin, D.; Celio, C.; Cook, H.; Dabbelt, D.; Hauser, J.; Izraelevitz, A.; et al. The Rocket Chip Generator; EECS Department, University of California: Berkeley, CA, USA, 2016.

51. Xiong, W.; Katzenbeisser, S.; Szefer, J. Leaking Information Through Cache LRU States in Commercial Processors and Secure Caches. IEEE Trans. Comput. 2021, 70, 511–523.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).