Abstract

A four-dimensional virtual patient is a simulation model that integrates multiple types of dynamic data. This study aimed to review the techniques used to create virtual four-dimensional dental patients. Searches up to November 2022 were performed using the PubMed, Web of Science, and Cochrane Library databases. The studies included were based on the superimposition of two or more digital information types involving at least one dynamic technique. Methodological assessment of the risk of bias was performed according to the Joanna Briggs Institute Critical Appraisal Checklist. Methods, programs, information, registration techniques, applications, outcomes, and limitations of the virtual patients were analyzed. Twenty-seven full texts were reviewed (17 case reports and 10 non-randomized controlled experimental studies), covering 75 patients and 3 phantoms. Few studies showed a low risk of bias. Dynamic data included real-time jaw motion, simulated jaw position, and dynamic facial information. Three to five types of information were integrated to create virtual patients based on diverse superimposition methods. Thirteen studies showed acceptable dynamic techniques/models/registration accuracy, whereas 14 studies only introduced the feasibility. The superimposition of stomatognathic data from different information collection devices is feasible for creating dynamic virtual patients. Further studies should focus on analyzing the accuracy of four-dimensional virtual patients and developing a comprehensive system.

1. Introduction

Digital workflows are becoming more accurate in dental medicine because of technological innovations. Transferring intraoral and extraoral data to a virtual environment is the first step in digital treatment. Currently, digital information can be captured in different ways, including desktop scanners (DS), intraoral scanners (IOS), facial scanners (FS), cone beam computed tomography (CBCT), computed tomography (CT), cephalometry, and photography. These methods can produce different file formats, such as standard tessellation language (STL), Wavefront object (OBJ), polygon file format (PLY), and digital imaging and communications in medicine (DICOM). A three-dimensional (3D) virtual patient can be created after the alignment and fusion of various data formats, including information about a real patient’s teeth, soft tissues, and bones [1]. Thus, if real patients are indisposed, dental treatment plans could still be realized in virtual patients, reducing chair time and the number of patient appointments.

Currently, investigators focus on static virtual patients, with improved gains through new materials, automation, and quality control [2,3]. However, static simulated patients cannot reflect real-time changes. The stomatognathic system comprises the skull, maxilla, mandible, temporomandibular joint (TMJ), teeth, and muscles, and changes in one part will cause synergistic changes in the others [4]. Therefore, integrating TMJ and mandible movement, occlusal dynamics, and soft tissue dynamic information (such as muscle movement and facial expression) will be required to construct four-dimensional (4D) virtual patients [5]. The fourth dimension, time, expresses motion; thus, 4D patients with temporal information help clinicians understand the dynamic interactions of anatomical components during functional activities such as chewing, speech, and swallowing.

The first step in creating a 4D virtual patient is digitalizing the motion data. The virtual facebow (VF) and virtual articulator (VA) combination can facilitate positional relationship replication between the skull and jaws, simulating mandible movements [6]. In addition, a jaw motion analyzer (JMA) moves the digitized dentitions along paths in the computer, helping to visualize kinematic occlusion collisions and the condyle trajectory [7,8]. Information such as the smile line, lip movements, and facial expressions is essential to ensure functional outcomes and aesthetic performance and to construct a pleasant smile [9]. Finally, in various computer-assisted design and computer-aided manufacturing (CAD/CAM) programs, 4D simulated patients with complex movements are built. Currently, 4D virtual patient types vary according to clinical needs. Few studies have comprehensively analyzed the existing 4D dental virtual model construction techniques.

Therefore, this systematic review aims to summarize the current scientific knowledge in the dental dynamic virtual patient field to guide subsequent related research.

2. Materials and Methods

2.1. Eligibility Criteria

This systematic review followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [10,11,12] (Table S1). The present review was not registered because it belongs to the literature reviews that use a systematic search, which PROSPERO does not accept. The focus was on the technique, accuracy, and application of dynamic multi-modal data fusion to create four-dimensional virtual patients in dentistry. The criteria for study selection were (1) the possibility of creating a 4D dental dynamic virtual patient, analyzing at least one patient or phantom; (2) possible integration of two or more digital methods, at least one of which captures dynamic information; and (3) availability of the methods and devices used. Review articles, opinion articles, interviews, charts, and non-English articles were excluded from this systematic review. The PICOS terms were defined as follows: population (P), four-dimensional virtual patient; intervention (I), dynamic digitization technology; comparison (C), omitted because the current review was not expected to include randomized controlled trials or relevant controlled trials; outcome (O), dental applications or accuracy analysis; and setting (S), multi-modal data fusion.

2.2. Information Sources

The literature search was conducted by reviewing three online databases for eligible studies: PubMed, Medline (Web of Science), and Cochrane Library. The references of the full-text articles were additionally screened manually for other relevant studies. A four-round “snowball procedure” was carried out to identify other published articles that met the review’s eligibility criteria (Figure S1). The “snowball procedure” is a multi-round forward screening, performed after the full-text screening, that searches the reference lists of the included papers for eligible papers. Once a new study/reference is included, its references are called snowball papers and undergo a new round of snowball screening. This procedure ends only when no snowball papers can be included in the last round [13].
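The snowball procedure described above can be sketched as a simple loop. This is only an illustration under stated assumptions: `references_of` and `is_eligible` are hypothetical stand-ins for looking up a reference list and for the reviewers' eligibility decision, and the paper identifiers are toy values, not data from this review.

```python
from typing import Callable, Iterable, List, Set


def snowball(
    seeds: Iterable[str],
    references_of: Callable[[str], Iterable[str]],
    is_eligible: Callable[[str], bool],
) -> List[Set[str]]:
    """Return the set of newly included papers for each snowball round.

    seeds: papers included after full-text screening.
    references_of: hypothetical lookup of a paper's reference list.
    is_eligible: hypothetical stand-in for the reviewers' decision.
    """
    screened: Set[str] = set(seeds)   # every paper already assessed
    frontier: Set[str] = set(seeds)   # papers whose references are not yet screened
    rounds: List[Set[str]] = []
    while frontier:
        candidates = {ref for paper in frontier for ref in references_of(paper)}
        candidates -= screened        # never screen the same paper twice
        screened |= candidates
        included = {ref for ref in candidates if is_eligible(ref)}
        rounds.append(included)       # the last round is empty: nothing new included
        frontier = included
    return rounds


# Toy citation graph (hypothetical identifiers).
toy_refs = {"A": ["B", "C"], "B": ["D"], "C": [], "D": ["A"]}
toy_rounds = snowball(["A"], lambda p: toy_refs.get(p, []), lambda p: p != "C")
```

In this toy run, the loop stops in the third round because the only reference of paper D has already been screened, which mirrors the stopping rule stated above.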

2.3. Search Strategy

The first search in the database was performed on 5 August 2022. The search strategy was assembled using Medical Subject Headings (MeSH) terms and free-text words. Search terms were grouped according to the PICOS principle (Table 1). Weekly literature tracking was then conducted separately in the three databases using the above search terms to obtain the latest relevant literature.

Table 1.

Overview of Electronic Search Strategy.

2.4. Study Records

After the first duplicate check in NoteExpress, the articles were imported into the Rayyan website [14] for the second duplicate check. Titles and abstracts were screened independently by two reviewers (YY and QL) on Rayyan [15], a tool to filter titles and abstracts effectively and to collaborate on the same review. Disagreements were resolved by discussion. For controversial articles labeled as maybe, reviewers discussed including them after reading the full text. After screening, full-text articles of selected titles and abstracts were acquired and read intensively by two reviewers to determine eligible articles. All authors discussed the remaining controversial articles to obtain a consensus. Snowball articles were included from the full-text references and were selected following the same principle.

2.5. Data Extraction

The following parameters were extracted from the selected full-text articles after the selection process: Author(s) and year of publication, study design, sample size, methods (including file format), manufacturer software programs, information, type of superimposition, scope, outcomes, and limitations.

2.6. Evaluation of Quality

The Joanna Briggs Institute (JBI) Critical Appraisal Checklist for quasi-experimental studies [16] and case reports [17] was used for the non-randomized controlled experimental studies/case reports. Two reviewers (YY and QL) independently assessed the methodological quality of the included studies. For every question in the checklist, except Q3 in the Checklist for Quasi-Experimental Studies, a yes answer means the question is low risk. Studies that met 80–100%/60–79%/40–59%/0–39% of the criteria were considered to have a low/moderate/substantial/high risk of bias, respectively [13]. In cases of disagreement, the decision was made by discussion among all authors.
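The percentage bands above amount to a small lookup. The sketch below assumes that the bands are applied as lower bounds (so that, for example, 79.5% counts as moderate) and that only applicable checklist items enter the denominator; neither detail is stated in the text, and the function name is illustrative.

```python
def overall_risk(low_risk_items: int, applicable_items: int) -> str:
    """Map the share of checklist items judged low risk to an overall risk level.

    Bands follow the text: 80-100% low, 60-79% moderate,
    40-59% substantial, 0-39% high. Treating the bands as lower
    bounds for non-integer percentages is an assumption.
    """
    if applicable_items <= 0 or not 0 <= low_risk_items <= applicable_items:
        raise ValueError("need applicable_items > 0 and 0 <= low_risk_items <= applicable_items")
    pct = 100 * low_risk_items / applicable_items
    if pct >= 80:
        return "low"
    if pct >= 60:
        return "moderate"
    if pct >= 40:
        return "substantial"
    return "high"
```

For example, a study meeting 7 of 9 applicable items (about 78%) would be classed as moderate risk under these assumptions.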

3. Results

3.1. Search

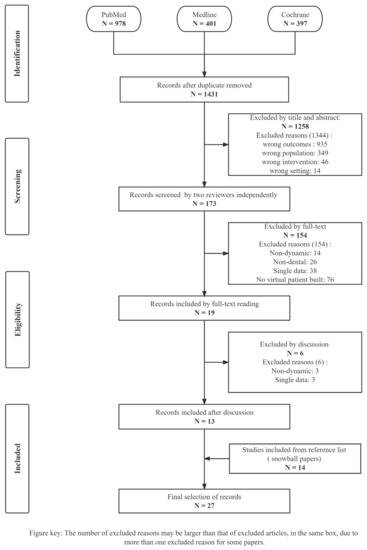

The systematic search was completed on 30 November 2022. The PRISMA flow diagram is shown in Figure 1, and the snowball procedure is illustrated in Figure S1. The search yielded 1776 titles, from which 173 titles and abstracts were identified. Subsequently, 19 full texts were selected by two reviewers, and 550 references underwent four rounds of citation checks using the snowball procedure, yielding 14 additional articles. Six controversial articles were excluded from the 19 full-text articles based on the outcomes of the discussion between all authors. The reasons for exclusion were as follows: single dynamic data (n = 1), non-dynamic patients (n = 4), and no virtual patient built (n = 1). Finally, 27 articles (13 from the full-text selection and 14 from the snowball procedure) were included in the systematic review; the reasons for excluding other papers are shown in Figure 1.

Figure 1.

PRISMA flow chart. This diagram describes the identification, screening, exclusion reasons, and inclusion of the 27 included articles. The number of exclusion reasons exceeds the number of excluded articles only at the screening phase, where 1258 articles were excluded for a total of 1344 reasons.

3.2. Description of Studies

The characteristics of the included studies are presented in Table 2. The included publications were dated from 2007 to 2022, without intentional time restriction, since the 4D virtual patient is a recently proposed technology. The review included 17 case reports and 10 non-randomized controlled experimental studies. No randomized controlled trials (RCTs) were found. Most of the 27 studies had only one subject/phantom, except for 2 [18,19]. In total, 75 patients and 3 phantoms were included in creating 4D virtual patient models.

Table 2.

Information on the 27 included studies used to compose dynamic simulated dental patients.

Two or more methods, including static information and dynamic information collection devices, can acquire different formats of 3D data. The present article focuses on dynamic data (Table 3); 10 studies acquired real-time jaw-motion data, 4 analyzed the dynamic facial information, 13 simulated the jaw position, and 2 examined the coordinated movement of the masticatory system. Three to five types of information acquired from the above data were integrated to create 4D virtual patients. Additionally, the included studies focused on different clinical scopes: prosthetic dentistry (n = 19) (including implant dentistry (n = 4)), maxillofacial surgery (n = 8), and orthodontics (n = 5).

Table 3.

Summary of the dynamic data.

3.3. Risk of Bias in Included Studies

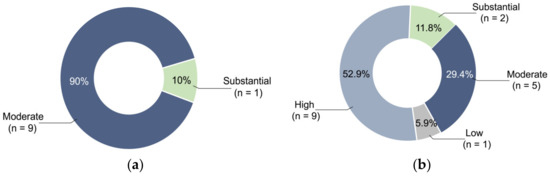

Table S2 describes the risk of bias assessment in the 10 non-randomized controlled experimental studies. All had a low risk of bias for Q1, Q4, Q7, and Q8 of the JBI Critical Appraisal Checklist. For Q2, Q3, and Q9, 80%, 90%, and 60% of the studies had a low risk of bias, respectively. For the overall risk, 90% of the studies showed moderate risk and 10% showed substantial risk (Figure 2a). Table S3 describes the risk of bias assessment of the 17 case reports. All these had a low risk of bias for Q5, Q6, Q7, and Q9 of the JBI Critical Appraisal Checklist. Except for four studies that indicated low risk, Q4 was not applicable in most studies where specific diseases were not crucial during the construction of the simulated model. However, for Q1, Q2, and Q3, 76.5%, 69.5%, and 52.9% of the case reports, respectively, showed a high risk of bias. For the overall risk, 52.9%, 29.4%, 11.8%, and 5.9% showed high, moderate, substantial, and low risk, respectively (Figure 2b).

Figure 2.

Percentage of different risk levels: (a) For the overall risk in non-randomized controlled experimental studies, 90% showed moderate risk and 10% showed substantial risk. (b) For the overall risk in case reports, 52.9%, 29.4%, 11.8%, and 5.9% showed high, moderate, substantial, and low risk, respectively.

3.4. Outcomes

3.4.1. Dynamic Data Collection Methods of 4D Virtual Patients

1. Mandibular movement (jaw motion)

Ten studies acquired real-time jaw-motion data. Only two mentioned the dynamic file format (Moving Picture Experts Group 4 (MP4) [20]/text file (TXT) [20,38]). One study captured videos of mandibular movements using a target-tracking camera [20]. Two studies introduced FS and targets to record jaw movements and the accumulated movement paths [21,31]. The kinematics of the occlusion/condyle can be simulated by combining jaw tracking with DS/CBCT. The accuracy of the FS + targets method, evaluated by the distance between the targets, showed a value of 4.1–6.9 mm (a minor error compared with that of laboratory scanners [78 mm]). Three studies used an electromagnetic (EM) system to track the positions of the maxillary bone segment (MBS) and the reference in physical space [25], the mandibular proximal segment (MPS) and condyle position [26], or mandibular repositioning and occlusal correction [18]. One study used an optical analyzer to track the positions of light-emitting diodes mounted on a facebow and the jaw movements [4]. Three studies acquired mandibular movements [29,35,38], condylar motions [29,38], and collision detection [38] using an ultrasonic system. The ultrasound Arcus Digma system has an accuracy of 0.1 mm and 1.5°, and its pulse running time was converted to 3D coordinate values and saved as TXT files [38].

2. Dynamic faces

One study [23] used a smartphone facial tracking app to track lip dynamics. Three studies acquired semi-dynamic faces using FS and image processing software. Semi-dynamic facial information refers to dynamic bits of facial details [42] or faces at different times [34,43] instead of real-time facial changes.

3. Positional relationships of the dentition, jaws, skull, and TMJ (jaw position)

Thirteen studies obtained positional relationships to help simulate jaw movements on the VA. First, the facial reference system helped to locate the natural head position (NHP), a reliable reference for aligning the skull and the VA hinge axis [22,30]. The common VF techniques transfer the maxillary dentition to the FS, either guided by an intraoral transfer element (IOTE) (i.e., facebow fork) or a scan body, or without an IOTE/scan body [19,22,24,32,33,37,40,42]. A modified IOTE combined with LEGO blocks, trays, and impressions enabled a convenient transfer procedure [32,33]. The VF showed an average trueness of 1.14 mm and a precision of 1.09 mm based on the FS of a smartphone [19]. There is a difference between the virtually transferred maxillary position and its real position (a trueness of 0.138 mm/0.416 mm and a precision of 0.022 mm/0.095 mm were obtained using the structured white light (SWL)/structure-from-motion (SFM) scanning method). This difference is mainly caused by the registration error, which may be reduced by different alignment methods and IOTEs [37]. A special VF transfers the relationship by anatomical points/marker planes, reducing the errors by omitting the traditional facebow transfer [36]. The FS can be replaced by photographs [30,41]/CBCT [27,28]; however, the accuracy was not calculated. Once the VF transfer is completed, VA systems are available for jaw motion simulation.

4. Coordinated movement of the masticatory system

Two studies used finite element (FE) software to analyze mandibular movements, masticatory muscle performance, occlusal force [38], and stress distribution of TMJ [39].
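The trueness and precision values reported for the VF in this subsection can be read with the following minimal sketch. It assumes one common convention (trueness as the mean absolute deviation of repeated measurements from a reference value, precision as the sample standard deviation of those measurements); the included studies may have used other definitions, and the numbers in the usage lines are invented for illustration.

```python
from statistics import mean, stdev
from typing import Sequence, Tuple


def trueness_and_precision(measured_mm: Sequence[float], reference_mm: float) -> Tuple[float, float]:
    """Summarize repeated measurements against a reference value.

    Trueness: mean absolute deviation from the reference (assumed convention).
    Precision: sample standard deviation of the repeated measurements.
    """
    if len(measured_mm) < 2:
        raise ValueError("at least two repeated measurements are required")
    trueness = mean([abs(m - reference_mm) for m in measured_mm])
    precision = stdev(measured_mm)
    return trueness, precision


# Invented example: four repeated distance measurements of a 10.0 mm reference.
demo_trueness, demo_precision = trueness_and_precision([10.2, 9.8, 10.4, 9.6], 10.0)
```

A lower value is better for both quantities: trueness describes systematic closeness to the reference, whereas precision describes repeatability.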

3.4.2. 4D Superimposition Techniques of Virtual Patients

The main components of stomatognathic information include static information, such as the skeletal components (SK) of the skull, jaws, and TMJ and the dentition (DENT), and dynamic information, such as mandibular movement (MM), jaw position (JP), occlusal analyses (OA), and the motion of TMJ soft tissues and muscles. Moreover, the soft tissues of the face (SF) can be in either a dynamic or a static form. Three to five of these components were combined to create virtual models based on 4D superimposition techniques. These models were created using various software systems.

1. Five types of information superimpositions

- SK + DENT + MM + SF + TMJ soft tissues/SK + DENT + MM + OA + muscles

Two studies [29,38] created models including the skeletal components from CT (DICOM format), dentitions from DS (STL format), and jaw movement from the jaw motion tracker (JMT) (TXT format [38]). Olszewski et al. [29] added facial and TMJ soft tissues from MRI to the above data. The superimposition of the virtual model and real scenes was based on an algorithm and fiducial markers. The module’s accuracy requires further validation before clinical application. Dai et al. [38] included masticatory muscles and occlusal analyses in their model. The regional registration method was used to superimpose the dentition in the occlusion. Their FE model showed an accuracy (≤0.5 mm) similar to that of the T-Scan.

2. Four types of information superimpositions

- SF + DENT + OA + MM/JP:

Two studies integrated facial tissues from FS, dentition from DS [21]/IOS [19], occlusal contact analyzed by the CAD software, and mandibular movement tracking from FS + targets [21]/jaw position from VF + VA [19]. The alignment of the maxillary cast, scan body, and facial tissues was based on the points of the teeth [21]. The superimposition of FS and CBCT was based on the facial surface. The deviation of their models was 1 mm in linear distance and 1° in angulation [19].

- SK + SF + DENT + JP:

Six studies [24,27,28,32,33,34] constructed virtual patients mounted on the VA with faces from the FS (OBJ [24]/STL [27]/PLY [28] format)/photographs [34], bones from CBCT/CT (DICOM format), and dentition/prosthesis from the IOS (STL format). The models of three studies [24,27,28] were set in centric relation occlusion (CRO) at the vertical dimension of occlusion (VDO). The prosthetic outcomes demonstrated a good fit, occlusion, and esthetics. Three studies created models based on points and fiducial markers [24,32,33]. One study [32] aligned the models with CBCT using the Iterative Closest Point (ICP) algorithm. The errors in tooth registration were less than 1 mm, whereas those of the nasion, alares, and tragions were 0.83 mm, 0.77 mm, and 1.70 mm, respectively. Further research is required to reduce this discrepancy and distortion. Granata et al. [42] created a virtual patient with faces in smiling, open-mouth, and maximum intercuspation (MICP) positions from FS (OBJ format), dental arches from IOS (PLY/STL format), and bones and jaw position from CBCT with the occlusal registration device Digitalbite (DGB). The superimposition was based on fiducial markers and a best-fitting algorithm.

- SK + SF + DENT + MM:

Kwon et al. [31] introduced a virtual patient with skeletons from CBCT, dentitions from DS, and a face combined with mandibular motions using FS + targets. The alignment was based on triangulated mesh points and a transformation matrix. The tracking system stability and reproduction were acceptable compared with routine VF transfer. Noguchi et al. [43] created virtual models integrating the mandible, TMJs, and outline of the soft tissue from cephalometry and dentition from DS and traced their movement. The projection-matching technique is based on the contour line of the projection image, and the registration error is the same as that in conventional cephalometry.

- SK + DENT + MM + TMJ soft tissues:

Savoldelli et al. [39] combined the bone components of jaws and TMJs, dental arches from multi-slice CT, and soft tissues of TMJs when the jaw was opened 10 mm. Then, the joint discs’ boundary conditions and stress distribution were analyzed, showing a high level of accuracy (stress levels of the model [5.1 MPa] were within the range of reported stress [0.85–9.9 MPa]).

3. Three types of information superimpositions

- SK + DENT + MM:

Zambrana et al. [20] constructed a virtual patient with jaws and TMJs from CBCT (DICOM format), dentition with maxillomandibular relationship from IOS (STL format), and mandibular movements from a target tracking video (MP4 format). Registration was based on the surface and points. Five studies combined bones from CT [4]/multidetector CT (MDCT) [25,26]/CBCT [35]/cephalograms [18], dentition from DS [4,25,26]/CBCT [35], and positions of MBS [25]/MPS [26]/mandibular movement [4,18,35] from the JMT. Integrations [4,18,25,26,35] were based on fiducial markers, and some were combined with the least-squares method [4]/ICP algorithm [25,26]. The technique of Fushima et al. [18] showed high accuracy (standard deviations [SD] <0.1 mm). The two methods showed no significant difference between the actual and measured positions [25]. The condylar landmark results showed high accuracy (differences between MPS models and those between CT models were 1.71 ± 0.63 mm and 1.89 ± 0.22 mm, respectively) [26]. He et al. [35] analyzed the condyle position, which was more accurate than CBCT, with records showing high precision over three days.

- SF/SK + DENT + JP:

One study [36] combined bones from CBCT with mandibular position from VA and VF and dentition in CRO/MICP from IOS to simulate a patient. Six studies [22,23,30,37,40,41] described methods to create models with faces from FS [22,23,37,40]/2D photographs [30,41], dentition models from IOS [22,23,30,41]/DS [37], and mandibular position using VF and VA. Kois et al. [30] used the “Align Mesh” tool to align data. The alignment of the casts, scan body, and facial scan, or the alignment of the facebow forks, was based on points [22,41]. IOS and CBCT/FS were registered based on points and the ICP algorithm [36,37] or fiducial markers of the scan body [23,40].

4. Software programs to create the virtual patients

An open-source program (Blender 3D; Blender Foundation, Amsterdam, Netherlands) can import MP4 dynamic information directly. The MP4 file can be converted into TXT format using a direct linear transform (DLT) algorithm, which facilitates the integration [20]. Reference points can be marked using Python to align the models acquired from other CAD software programs [19]. In this program, STL/PLY files can be converted to the OBJ format to facilitate the fusion of different formats [23].
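To illustrate what converting between mesh formats involves, the following minimal sketch turns ASCII STL text into Wavefront OBJ text in plain Python. It is not the converter used in Blender or in the cited studies; the function name is illustrative, binary STL is not handled, and facet normals are simply dropped.

```python
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]


def ascii_stl_to_obj(stl_text: str) -> str:
    """Convert ASCII STL text to OBJ text, merging identical vertices."""
    index_of: Dict[Vertex, int] = {}   # vertex -> 1-based OBJ index
    vertices: List[Vertex] = []
    faces: List[List[int]] = []
    current: List[int] = []            # vertex indices of the facet being read
    for line in stl_text.splitlines():
        parts = line.split()
        if len(parts) == 4 and parts[0] == "vertex":
            v = (float(parts[1]), float(parts[2]), float(parts[3]))
            if v not in index_of:
                vertices.append(v)
                index_of[v] = len(vertices)
            current.append(index_of[v])
        elif parts[:1] == ["endfacet"]:
            if len(current) != 3:
                raise ValueError("each STL facet must have exactly three vertices")
            faces.append(current)
            current = []
    lines = [f"v {x!r} {y!r} {z!r}" for x, y, z in vertices]
    lines += [f"f {a} {b} {c}" for a, b, c in faces]
    return "\n".join(lines) + "\n"


# Two triangles sharing an edge (invented geometry).
demo_stl = """solid demo
facet normal 0 0 1
outer loop
vertex 0 0 0
vertex 1 0 0
vertex 0 1 0
endloop
endfacet
facet normal 0 0 1
outer loop
vertex 1 0 0
vertex 1 1 0
vertex 0 1 0
endloop
endfacet
endsolid demo
"""
demo_obj = ascii_stl_to_obj(demo_stl)
```

STL stores every triangle with its own three vertices, whereas OBJ stores a shared vertex list plus faces that index into it, which is why the sketch merges duplicate vertices.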

Exocad (Exocad; exocad GmbH, Darmstadt, Germany) is the most common multifunction CAD software used to build virtual patients. It can align the IOS/DS with the FS, with or without CBCT, guided by the scan body [22,40]/IOTE [19]/gothic arch tracer, wax [27,40]/DGB [42]; position the NHP; transfer the facebow; and finally integrate the model into the VA. Exocad can also analyze the occlusal discrepancy between the maximum intercuspal position (MIP) and centric relation (CR) [21,24] or design a tooth-supported template [28]. Finally, restorations can be created based on the VDO and the occlusal plane [34]. Other software programs for constructing the 4D virtual patients are presented in Table 4.

Table 4.

Summary of the software to create 4D patients.

FE modeling and analysis software can convert 4D virtual models created by other software into numerical models and analyze dynamic/static components. The FE software commonly used for 4D virtual models is ANSYS (ANSYS Inc., Canonsburg, PA, USA) [38]/FORGE (Transvalor, Glpre 2005, Antibes, France) [39]. Processing software such as AMIRA (Visage Imaging, Inc., San Diego, CA, USA) is often used to obtain surface and volume meshes. The volumes of the anatomical components were input to the FE software and meshed into elements; subsequently, the quality of the mesh and nodes was verified. The accuracy of an FE model is determined using geometric models.

3.4.3. Clinical Applications of 4D Virtual Patients

4D virtual patients have been built for application in different clinical scopes.

1. Application in prosthetic dentistry and dental implant surgery

In this field, the 4D virtual patient mainly involves locating the jaw positions and condylar axes, obtaining functional data, simulating the mandible and condyle trajectories, and analyzing occlusion. Traditional restoration processes focusing only on static occlusion may lead to poor occlusal function and TMJ disorders. However, for digital workflows, VF techniques locate the position of jaws and condyles [19,22,23,24,27,28,30,32,33,37,40,41,42], and VA [22,24,27,28,30,36,40] or JMA [20,31,35] helps simulate or record patient-specific mandibular and TMJ kinematics. These procedures obtain the correct MICP, CRO, and VDO for coordinated dental implants and restorations in a stable position [24,27,28,36]. The dynamic occlusal analysis allows the detection of occlusal interference during eccentric movements to design anatomic prostheses [4,19,21]. Additionally, the condylar motion trajectory and mandibular movement pathway can help diagnose and treat TMJ diseases and facilitate occlusal reconstruction [20,31]. Furthermore, adding facial information to the virtual patient helps harmonize the prosthesis with the face, ensuring an aesthetic effect [23,30,34,41,42]. CAD systems perform the above digital analysis and design and finally interface with CAM for guide template and restoration fabrication. The innovative workflows of 4D virtual models are particularly suitable for complex implant rehabilitation [24,27,28] and complete denture restoration [40], resulting in excellent restorative outcomes and patient satisfaction.

2. Application in maxillofacial surgery

Traditional orthognathic surgery planning is effective but time-consuming, and many factors, such as the occlusal recording and mounting, affect the accuracy. Computer-assisted orthognathic surgery is an interdisciplinary subject that combines signal engineering, medical imaging, and orthognathic surgery to improve efficiency. In addition to facial esthetics, reducing mandibular spin is essential to obtaining stable skeletal and occlusal outcomes and preventing temporomandibular disorders. Therefore, studies have improved accuracy and minimized spin using 4D virtual models in surgical systems.

The included articles mainly covered planning systems [4,18,29,35,36,43] and navigation systems [25,26]. The ACRO system integrates modules for planning, assisting surgery, and bringing information from virtual planning to the operating room [29]. The Aurora system (Aurora, Northern Digital Inc., Waterloo, ON, Canada) uses augmented reality (AR) to locate bone segments and condylar positions [25,26]. The 4D analysis system TRI-MET (Tokyo-Shizaisha, Tokyo, Japan) was used to simulate mandibular motion, condylar to articular fossa distance, and occlusal contact [4]. The mandibular motion tracking system (ManMoS), a communication tool for operational trial and error, predicts changes in occlusion and repeatedly determines mandibular position [18]. The SICAT system (SICAT, Bonn, Germany) can show the motion of the incisors and condyles during mandibular movement, thereby avoiding additional radiation exposure [35]. The simulation system based on cephalometry allows the location of 3D bone changes without CBCT, reducing radiation and errors in manual pointing [43].

3. Application in orthodontics

Most of the included papers have cross-disciplinary applications. The VF, VA, and JMA, which locate the mandible and condyles, are also crucial for orthodontics. They help reconstruct stable and balanced occlusion in the optimum position and prevent recurrence and TMJ symptoms. The simulated position of the incisors, jaws, and soft tissue provides a visual treatment objective. As mentioned above, analysis of pre- and post-operative tissue changes [34], orthodontic-orthognathic planning [4,43], and dynamic facial information of virtual patients are also applicable to aesthetic orthodontic plans and outcomes.

Traditional orthodontic treatment often focuses on dentition and bone problems in three dimensions but ignores the improvement of mastication efficiency and TMJ health. Quantifying masticatory function is essential for occlusal evaluation and orthodontic tooth movement. The applications of dynamic patient models directly mentioned for orthodontics mainly involve the analysis of masticatory muscles, stress distribution in the articular disc, mandibular movements, and occlusion [38,39]. FE methods can simulate dynamic masticatory models, including muscle forces to the teeth, to determine the magnitude and direction of the bite force. High-resolution FE models analyzed the stress distribution and symmetry of the TMJ and the boundary conditions of the mandible during the closure process. Further studies are expected to enable the prediction of various stress loads on the TMJ disc in the context of mandibular trauma, surgery, or dysfunction.

4. Discussion

With the development of computer-aided design/computer-aided manufacturing (CAD/CAM) technology, virtual patient construction, incorporating multi-modal data, has been widely used in multiple fields of dentistry. Building a 4D virtual dental patient using dynamic information is of great interest. The present review revealed the methods, manufacturer software programs, information, registration techniques, scopes, accuracy, and limitations of existing approaches to dynamic virtual patient construction. Data from multiple sources and formats were captured using various methods and programs. Specific alignment methods integrate various types of information to build 4D virtual patients in different clinical settings.

4.1. Dynamic Data Collection Methods

The JMT system, FS + targets, and target tracking camera were used to acquire real-time jaw motion data. The EM JMT system uses a magnetic sensor to track jaw motion, bone segment, and condyle positions and a receiver to detect movement; this approach is popular in minimally invasive surgeries [18,25,26]. Nevertheless, electromagnetic interference can affect the device’s accuracy [44]. An optical JMT system can display condyles and mandibular movement trajectories. The limitations of this method are its strict operating conditions and the motion restriction caused by a sizeable facebow [4,20]. Ultrasonic JMT systems convert acoustic signals from the transmitter into spatial information to record movements, which may be vulnerable to environmental conditions [29,35,38]. FS or photographs combined with targets can simultaneously capture jaw motion and the face and show a small error [21,31,41]. The mobile phone’s camera, connected to a marker board, captures movements inexpensively and conveniently, but its accuracy has not been tested [20]. These methods capture much information, such as mandibular movements and kinematics of the condyles, in all degrees of freedom (including excursive movements, maximum mouth opening, protrusion, and lateral excursions). However, few studies have reported real-time jaw motion accuracy and file format.
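How recorded jaw motion in six degrees of freedom can animate a static mesh may be sketched as follows. The pose layout (three rotation angles in degrees followed by three translations in millimetres), the rotation order R = Rz·Ry·Rx, and all names are assumptions made for illustration; none of the tracking systems above is known to export data in exactly this form.

```python
import math
from typing import Iterable, List, Sequence, Tuple

Point = Tuple[float, float, float]
Pose = Tuple[float, float, float, float, float, float]  # rx, ry, rz (deg), tx, ty, tz (mm)


def rotation_matrix(rx_deg: float, ry_deg: float, rz_deg: float) -> List[List[float]]:
    """Rotation matrix R = Rz @ Ry @ Rx (assumed convention)."""
    rx, ry, rz = (math.radians(a) for a in (rx_deg, ry_deg, rz_deg))
    cx, sx = math.cos(rx), math.sin(rx)
    cy, sy = math.cos(ry), math.sin(ry)
    cz, sz = math.cos(rz), math.sin(rz)
    return [
        [cz * cy, cz * sy * sx - sz * cx, cz * sy * cx + sz * sx],
        [sz * cy, sz * sy * sx + cz * cx, sz * sy * cx - cz * sx],
        [-sy, cy * sx, cy * cx],
    ]


def animate(points: Sequence[Point], poses: Iterable[Pose]) -> List[List[Point]]:
    """Return one rigidly transformed copy of `points` per recorded pose (time frame)."""
    frames: List[List[Point]] = []
    for rx, ry, rz, tx, ty, tz in poses:
        r = rotation_matrix(rx, ry, rz)
        frames.append([
            (
                r[0][0] * x + r[0][1] * y + r[0][2] * z + tx,
                r[1][0] * x + r[1][1] * y + r[1][2] * z + ty,
                r[2][0] * x + r[2][1] * y + r[2][2] * z + tz,
            )
            for x, y, z in points
        ])
    return frames


# Invented example: one point, a rest frame and a rotated, lowered frame.
demo_frames = animate([(0.0, -10.0, 0.0)], [(0, 0, 0, 0, 0, 0), (90, 0, 0, 0, 0, -2)])
```

Each frame is a rigid transform of the same static geometry, so time enters the model only through the sequence of poses.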

Integrating kinematic digital VF with VA makes capturing jaw movements with acceptable accuracy possible [19,37]. Most VA assembly procedures include a digital impression of dentition, occlusal recording, VF transfer of the maxilla position to the skull, and mounting the models to VA. Various software programs [19,22,24,27,28,30,33,34,36,37,40,41,42] include the VA procedure. Although this approach does not present motion in real time and requires more information, it is compatible with common file formats. The FE method accurately simulates the masticatory system [38,39]. In addition, studies on the fusion of dynamic facial information in 4D virtual patients are lacking.

4.2. 4D Superimposition Techniques

The 4D superimposition technique integrates three to five types of information to create dynamic patients. The technology’s superimposition methods, software, and outcomes varied among studies.

4.2.1. Superimposition Methods

Image fusion and virtual patient creation are based on selecting corresponding markers for the superimposition of data from multiple sources. The construction of a simulated model may involve multiple alignment processes. The alignment methods in the included articles were mainly based on points [20,21,22,24,32,33,36,37,40,41], fiducial markers [4,18,22,23,24,25,26,27,29,31,32,33,35,40,41,42], surfaces [19,20], and anatomical structures [39,43]. Registration based on additionally attached fiducial markers is also a point-based registration method. Some algorithms, such as the ICP [21,26,27,32,33,36,37], best-fit [19,22,29,41,42], and least squares [4] methods, were used to assist the registration processes. The specific alignment techniques used in each study are presented in Table 2.
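As an illustration of point-based registration, the sketch below computes the least-squares rigid transform between two sets of paired marker coordinates using the standard SVD-based (Kabsch) solution. It is a generic textbook formulation, not the implementation used by any included study or commercial program; the marker coordinates and the function name `rigid_fit` are hypothetical. ICP-type algorithms repeat a step of this kind after re-estimating the point correspondences by nearest-neighbor search.

```python
import numpy as np

def rigid_fit(source, target):
    """Least-squares rigid transform (R, t) mapping paired source points
    onto target points, solved with an SVD (Kabsch method)."""
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    src_c, tgt_c = source.mean(axis=0), target.mean(axis=0)
    H = (source - src_c).T @ (target - tgt_c)      # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))         # guard against reflections
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_c - R @ src_c
    return R, t

# Hypothetical fiducial markers (mm) located in one dataset ...
markers_a = np.array([[0.0, 0.0, 0.0],
                      [30.0, 0.0, 0.0],
                      [0.0, 25.0, 0.0],
                      [0.0, 0.0, 20.0]])

# ... and the same markers in a second dataset, simulated here by a known
# rotation about z plus a translation so the result can be checked.
angle = np.deg2rad(15.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([5.0, -2.0, 10.0])
markers_b = markers_a @ R_true.T + t_true

R, t = rigid_fit(markers_a, markers_b)
residuals = markers_a @ R.T + t - markers_b
rms_error = np.sqrt((residuals ** 2).sum(axis=1).mean())
print(R, t, rms_error)
```

With noise-free synthetic markers the residual is zero up to floating-point error; with measured markers, the residual gives a first indication of how well the two datasets agree at the marker positions.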

Real-time mandibular movement data integration was mainly based on fiducial markers [4,18,26,27,29,35], but the specific integration principles were not described. The alignment step affects the accuracy of the final virtual model. However, only two studies evaluated the accuracy of alignment methods [32,43]. Therefore, further studies should introduce registration methods for the dynamic data of 4D patients and quantify the accuracy and optimization methods for every alignment step.
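One way to quantify the accuracy of a single alignment step, given here as an assumption rather than as the metric used by any included study, is the root-mean-square distance between $N$ corresponding points after registration. With $p_i$ a point in the moving dataset, $q_i$ its counterpart in the reference dataset, and $(R, t)$ the estimated rotation and translation:

$$
\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N} \left\lVert R\,p_i + t - q_i \right\rVert^{2}}
$$

Evaluated on the markers used for the fit, this value reflects only how well those markers agree; evaluating it on independent landmarks that were not used for the fit gives a more informative estimate of the error at clinically relevant sites.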

4.2.2. Software Programs

The 4D virtual model ensures that a comprehensive model contains the required data, while FE analysis software divides the complex model into simple units connected by nodes, which allows simple algorithms to analyze and interpret complex data. Although few FE software programs analyzed 4D models, several FE-related programs were used to analyze 3D models. For example, Hypermesh (Altair, Troy, MI, USA) is an important preprocessing software, and Abaqus (Abaqus Inc., Providence, RI, USA) is a common FE analysis software that interprets geometric models [45,46].
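The idea of dividing a model into simple units connected by nodes can be illustrated with a deliberately simplified one-dimensional example: a uniform bar split into two-node elements, fixed at one end and loaded at the other. This is a generic teaching sketch under assumed material values, not a model of the masticatory system and not the workflow of Hypermesh or Abaqus; the function names `assemble_bar` and `solve_fixed_free` are hypothetical.

```python
import numpy as np

def assemble_bar(n_elements, length, EA):
    """Global stiffness matrix for a uniform 1D bar split into equal
    two-node elements (EA = Young's modulus x cross-sectional area)."""
    n_nodes = n_elements + 1
    k = EA / (length / n_elements)               # stiffness of one element
    k_e = k * np.array([[1.0, -1.0], [-1.0, 1.0]])
    K = np.zeros((n_nodes, n_nodes))
    for e in range(n_elements):                  # element e joins nodes e and e+1
        K[e:e + 2, e:e + 2] += k_e
    return K

def solve_fixed_free(K, tip_force):
    """Nodal displacements with node 0 fixed and an axial force on the last node."""
    f = np.zeros(K.shape[0])
    f[-1] = tip_force
    u = np.zeros(K.shape[0])
    u[1:] = np.linalg.solve(K[1:, 1:], f[1:])    # drop the constrained node
    return u

# Assumed values, chosen only so the result is easy to check by hand:
# tip displacement should equal F * L / EA = 5 * 10 / 200 = 0.25.
K = assemble_bar(n_elements=4, length=10.0, EA=200.0)
u = solve_fixed_free(K, tip_force=5.0)
print(u)
```

Three-dimensional FE programs follow the same pattern of element-level matrices assembled through shared nodes, with far more elements, more degrees of freedom per node, and more elaborate material models.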

Currently, commercial software is available for dynamic dental virtual models; however, the underlying principles are not specified, and the accuracy needs further improvement. Various computer software packages have made it convenient to use diverse clinical information. However, creating patient models by superimposing multi-modal data is still new. Data in diverse file formats may be incompatible, and their integration may be inaccurate. Other limitations are the tedious processes, the need for different hardware and software to acquire and analyze data, and the high costs.

4.2.3. Outcome of the Technology

Most recent studies on 4D patients have included only one patient or phantom for feasibility exploration, and further studies with larger sample sizes are required. Of the included studies, fourteen did not evaluate the reliability of their results [4,20,21,22,23,24,27,28,30,34,36,40,41,42], six assessed the accuracy of different dynamic techniques [19,29,31,33,35,37], five evaluated the accuracy of the simulated model [18,25,26,38,39], and two reported the registration accuracy [32,43]. Owing to the differences in the sources, formats, integration methods, and uses of 4D virtual patients, a unified standard is still needed to assess their accuracy.

4.3. Clinical Applications

Depending on the clinical needs, 4D virtual patients integrate static virtual patients with dynamic information. The present review focuses on the application of the dynamic information of the virtual models: establishing occlusion in a stable mandibular and condylar position is of great benefit for restoration design, implant planning, orthodontic treatment, and orthognathic surgery. In addition to the analysis of masticatory function and occlusal interference in the functional state, real-time jaw and joint movements are also useful for the cause analysis and treatment of TMJ disorders and for intraoperative navigation in implantology and orthognathic surgery. The dynamic face of the virtual models facilitates smile design. Overall, dynamic virtual patient models facilitate pre-treatment planning, intraoperative assessment, and stable, healthy, and aesthetic treatment outcomes for actual patients. Virtual patient construction achieves an intuitive presentation, facilitating communication and clinical decision-making in dentistry.

There are some limitations to this systematic review. First, the included studies were either non-randomized experimental studies or case reports, and few showed a low overall risk of bias. Therefore, the level of clinical evidence is low. Appropriate statistical methods are needed to evaluate non-randomized experimental studies. The case reports lacked demographic characteristics, medical histories, and current clinical conditions. Second, the total sample size was 78, which needs further expansion. Third, there was considerable variation in subjects, interventions, outcomes, study design, and statistical methods across the included studies. Owing to this high heterogeneity, we did not conduct a meta-analysis and only qualitatively discussed the techniques and applications. Fourth, a few included articles were a series of related studies by the same authors, which may increase the bias of the results. Although these articles met all inclusion criteria, such cases should be avoided in future studies. Finally, more of the included papers were obtained by snowballing than by electronic searches, and the weekly literature tracking yielded no suitable articles. Thus, our search strategy needs to be improved.

High-quality clinical studies, such as randomized controlled trials (RCTs), should be conducted on 4D virtual patients with an appropriate design, a sufficient sample size, and less heterogeneity. Registration methods for dynamic data should be introduced and optimized. Future investigators should evaluate the accuracy of the currently available techniques for creating 4D dynamic virtual patients. Furthermore, a unified evaluation standard conducive to quantitative analysis should be established. In addition, integrating all of the required information within one system should also be considered.

5. Conclusions

Based on the included articles, the following conclusions were drawn:

- Dynamic data collection methods of 4D virtual patients include the JMT, FS + targets, and target tracking camera to acquire real-time jaw motion, VF and VA to simulate jaw position, facial tracking systems, and FE programs to analyze the coordinated movement of the masticatory system.

- Superimposition of the skeleton, TMJs, soft tissue, dentition, mandibular movement/position, and occlusion from different static/dynamic information collection devices in various file formats is feasible for 4D dental patients.

- Four-dimensional virtual patient models facilitate pre-treatment planning, intraoperative assessment, and stable, healthy, and aesthetic treatment outcomes in different clinical scopes of dentistry.

- There is a lack of well-designed and less heterogeneous studies in the field of 4D virtual patients.

- Further studies should focus on evaluating the accuracy of the existing software, techniques, and final models of dental dynamic virtual patients and developing a comprehensive system that combines all necessary data.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/jfb14010033/s1, Figure S1: Snowball procedure; Table S1: PRISMA 2020 checklist; Table S2: Joanna Briggs Institute Critical Appraisal Checklist for Quasi-Experimental Studies (non-randomized experimental studies); Table S3: Joanna Briggs Institute Critical Appraisal Checklist for case reports.

Author Contributions

Conceptualization, S.Y. and Y.Y.; methodology, formal analysis, and investigation, Y.Y. and Q.L.; resources and data curation, S.Y. and W.H.; writing—original draft preparation, review visualization, and editing, Y.Y.; supervision, project administration, W.H.; funding acquisition, S.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Research Initiation Program of Southern Medical University (grant number PY2018N094) and the Guangdong Medical Research Foundation (grant number A2020458). The APC was funded by Stomatological Hospital, Southern Medical University, the Guangdong Basic and Applied Basic Research Foundation (grant numbers 2021A1515111140 and 2021B1515120059), and the Research and Cultivation Program of Stomatological Hospital, Southern Medical University (grant number PY2018021).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors thank An Li for his great advice on this review.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

desktop scanners (DS); intraoral scanners (IOS); facial scanners (FS); cone beam computed tomography (CBCT); computed tomography (CT); virtual facebow (VF); virtual articulator (VA); jaw motion analyzer (JMA); electromagnetic (EM) system; intraoral transfer element (IOTE); structured white light (SWL); structure-from-motion (SFM); finite element (FE) software; reverse engineering (RE) software; augmented reality (AR); computer-assisted design (CAD); computer-aided manufacturing (CAM); mandibular motion tracking system (ManMoS); standard tessellation language (STL); object code (OBJ); polygon (PLY); digital imaging and communications in medicine (DICOM); moving picture experts group 4 (MP4); text file (TXT); skeletal components (SK); dentition (DENT); mandibular movement (MM); jaw position (JP); occlusal analyses (OA); soft tissues of the face (SF); three-dimensional (3D); four-dimensional (4D); temporomandibular joint (TMJ); maxillary bone segment (MBS); mandibular proximal segment (MPS); centric relation (CR); centric relation occlusion (CRO); vertical dimension of occlusion (VDO); maximum intercuspation (MICP); natural head position (NHP); iterative closest point (ICP) algorithm; direct linear transform (DLT) algorithm; preferred reporting items for systematic reviews and meta-analyses (PRISMA); medical subject headings (MeSH); Joanna Briggs Institute (JBI); standard deviations (SD).

References

1. Joda, T.; Bragger, U.; Gallucci, G. Systematic literature review of digital three-dimensional superimposition techniques to create virtual dental patients. Int. J. Oral Maxillofac. Implant. 2015, 30, 330–337.

2. Joda, T.; Gallucci, G.O. The virtual patient in dental medicine. Clin. Oral Implant. Res. 2015, 26, 725–726.

3. Perez-Giugovaz, M.G.; Park, S.H.; Revilla-Leon, M. 3D virtual patient representation for guiding a maxillary overdenture fabrication: A dental technique. J. Prosthodont. 2021, 30, 636–641.

4. Terajima, M.; Endo, M.; Aoki, Y.; Yuuda, K.; Hayasaki, H.; Goto, T.K.; Tokumori, K.; Nakasima, A. Four-dimensional analysis of stomatognathic function. Am. J. Orthod. Dentofac. 2008, 134, 276–287.

5. Mangano, C.; Luongo, F.; Migliario, M.; Mortellaro, C.; Mangano, F.G. Combining intraoral scans, cone beam computed tomography and face scans: The virtual patient. J. Craniofac. Surg. 2018, 29, 2241–2246.

6. Lepidi, L.; Galli, M.; Mastrangelo, F.; Venezia, P.; Joda, T.; Wang, H.L.; Li, J. Virtual articulators and virtual mounting procedures: Where do we stand? J. Prosthodont. 2021, 30, 24–35.

7. Ruge, S.; Quooss, A.; Kordass, B. Variability of closing movements, dynamic occlusion, and occlusal contact patterns during mastication. Int. J. Comput. Dent. 2011, 14, 119–127.

8. Mehl, A. A new concept for the integration of dynamic occlusion in the digital construction process. Int. J. Comput. Dent. 2012, 15, 109.

9. Schendel, S.A.; Duncan, K.S.; Lane, C. Image fusion in preoperative planning. Facial Plast. Surg. Clin. 2011, 19, 577–590.

10. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ Br. Med. J. 2021, 372, n71.

11. Shamseer, L.; Moher, D.; Clarke, M.; Ghersi, D.; Liberati, A.; Petticrew, M.; Shekelle, P.; Stewart, L.A. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015: Elaboration and explanation. BMJ Br. Med. J. 2015, 354, i4086.

12. The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) Website. Available online: http://www.prisma-statement.org (accessed on 26 November 2022).

13. Li, A.; Thomas, R.Z.; van der Sluis, L.; Tjakkes, G.H.; Slot, D.E. Definitions used for a healthy periodontium-A systematic review. Int. J. Dent. Hyg. 2020, 18, 327–343.

14. The Rayyan Website. Available online: https://rayyan.ai/reviews (accessed on 26 November 2022).

15. Ouzzani, M.; Hammady, H.; Fedorowicz, Z.; Elmagarmid, A. Rayyan-a web and mobile app for systematic reviews. Syst. Rev. Lond. 2016, 5, 210.

16. Tufanaru, C.; Munn, Z.; Aromataris, E.; Campbell, J.; Hopp, L. Chapter 3: Systematic reviews of effectiveness. In JBI Manual for Evidence Synthesis; Aromataris, E., Munn, Z., Eds.; JBI: Adelaide, SA, Australia, 2020.

17. Moola, S.; Munn, Z.; Tufanaru, C.; Aromataris, E.; Sears, K.; Sfetcu, R.; Currie, M.; Qureshi, R.; Mattis, P.; Lisy, K.; et al. Chapter 7: Systematic reviews of etiology and risk. In JBI Manual for Evidence Synthesis; Aromataris, E., Munn, Z., Eds.; JBI: Adelaide, SA, Australia, 2020.

18. Fushima, K.; Kobayashi, M.; Konishi, H.; Minagichi, K.; Fukuchi, T. Real-time orthognathic surgical simulation using a mandibular motion tracking system. Comput. Aided Surg. 2007, 12, 91–104.

19. Li, J.; Chen, Z.; Decker, A.M.; Wang, H.L.; Joda, T.; Mendonca, G.; Lepidi, L. Trueness and precision of economical smartphone-based virtual facebow records. J. Prosthodont. 2022, 31, 22–29.

20. Zambrana, N.; Sesma, N.; Fomenko, I.; Dakir, E.I.; Pieralli, S. Jaw tracking integration to the virtual patient: A 4D dynamic approach. J. Prosthet. Dent. 2022; in press.

21. Kim, J.-E.; Park, J.-H.; Moon, H.-S.; Shim, J.-S. Complete assessment of occlusal dynamics and establishment of a digital workflow by using target tracking with a three-dimensional facial scanner. J. Prosthodont. Res. 2019, 63, 120–124.

22. Revilla-Leon, M.; Zeitler, J.M.; Kois, J.C. Scan body system to translate natural head position and virtual mounting into a 3-dimensional virtual patient: A dental technique. J. Prosthet. Dent. 2022; in press.

23. Revilla-Leon, M.; Zeitler, J.M.; Blanco-Fernandez, D.; Kois, J.C.; Att, W. Tracking and recording the lip dynamics for the integration of a dynamic virtual patient: A novel dental technique. J. Prosthodont. 2022, 31, 728–733.

24. Lepidi, L.; Galli, M.; Grammatica, A.; Joda, T.; Wang, H.L.; Li, J. Indirect digital workflow for virtual cross-mounting of fixed implant-supported prostheses to create a 3D virtual patient. J. Prosthodont. 2021, 30, 177–182.

25. Kim, S.-H.; Lee, S.-J.; Choi, M.-H.; Yang, H.J.; Kim, J.-E.; Huh, K.-H.; Lee, S.-S.; Heo, M.-S.; Hwang, S.J.; Yi, W.-J. Quantitative augmented reality-assisted free-hand orthognathic surgery using electromagnetic tracking and skin-attached dynamic reference. J. Craniofac. Surg. 2020, 31, 2175–2181.

26. Lee, S.-J.; Yang, H.J.; Choi, M.-H.; Woo, S.-Y.; Huh, K.-H.; Lee, S.-S.; Heo, M.-S.; Choi, S.-C.; Hwang, S.J.; Yi, W.-J. Real-time augmented model guidance for mandibular proximal segment repositioning in orthognathic surgery, using electromagnetic tracking. J. Cranio Maxillofac. Surg. 2019, 47, 127–137.

27. Li, J.; Chen, Z.; Dong, B.; Wang, H.L.; Joda, T.; Yu, H. Registering maxillomandibular relation to create a virtual patient integrated with a virtual articulator for complex implant rehabilitation: A clinical report. J. Prosthodont. 2020, 29, 553–557.

28. Li, J.; Att, W.; Chen, Z.; Lepidi, L.; Wang, H.; Joda, T. Prosthetic articulator-based implant rehabilitation virtual patient: A technique bridging implant surgery and reconstructive dentistry. J. Prosthet. Dent. 2021; in press.

29. Olszewski, R.; Villamil, M.B.; Trevisan, D.G.; Nedel, L.P.; Freitas, C.M.; Reychler, H.; Macq, B. Towards an integrated system for planning and assisting maxillofacial orthognathic surgery. Comput. Methods Programs Biomed. 2008, 91, 13–21.

30. Kois, J.C.; Kois, D.E.; Zeitler, J.M.; Martin, J. Digital to analog facially generated interchangeable facebow transfer: Capturing a standardized reference position. J. Prosthodont. 2022, 31, 13–22.

31. Kwon, J.H.; Im, S.; Chang, M.; Kim, J.-E.; Shim, J.-S. A digital approach to dynamic jaw tracking using a target tracking system and a structured-light three-dimensional scanner. J. Prosthodont. Res. 2019, 63, 115–119.

32. Lam, W.Y.; Hsung, R.T.; Choi, W.W.; Luk, H.W.; Pow, E.H. A 2-part facebow for CAD-CAM dentistry. J. Prosthet. Dent. 2016, 116, 843–847.

33. Lam, W.; Hsung, R.; Choi, W.; Luk, H.; Cheng, L.; Pow, E. A clinical technique for virtual articulator mounting with natural head position by using calibrated stereophotogrammetry. J. Prosthet. Dent. 2018, 119, 902–908.

34. Shao, J.; Xue, C.; Zhang, H.; Li, L. Full-arch implant-supported rehabilitation guided by a predicted lateral profile of soft tissue. J. Prosthodont. 2019, 28, 731–736.

35. He, S.; Kau, C.H.; Liao, L.; Kinderknecht, K.; Ow, A.; Saleh, T.A. The use of a dynamic real-time jaw tracking device and cone beam computed tomography simulation. Ann. Maxillofac. Surg. 2016, 6, 113–119.

36. Park, J.H.; Lee, G.-H.; Moon, D.-N.; Kim, J.-C.; Park, M.; Lee, K.-M. A digital approach to the evaluation of mandibular position by using a virtual articulator. J. Prosthet. Dent. 2021, 125, 849–853.

37. Amezua, X.; Iturrate, M.; Garikano, X.; Solaberrieta, E. Analysis of the influence of the facial scanning method on the transfer accuracy of a maxillary digital scan to a 3D face scan for a virtual facebow technique: An in vitro study. J. Prosthet. Dent. 2021, 128, 1024–1031.

38. Dai, F.; Wang, L.; Chen, G.; Chen, S.; Xu, T. Three-dimensional modeling of an individualized functional masticatory system and bite force analysis with an orthodontic bite plate. Int. J. Comput. Assist. Radiol. 2016, 11, 217–229.

39. Savoldelli, C.; Bouchard, P.-O.; Loudad, R.; Baque, P.; Tillier, Y. Stress distribution in the temporo-mandibular joint discs during jaw closing: A high-resolution three-dimensional finite-element model analysis. Surg. Radiol. Anat. 2012, 34, 405–413.

40. Perez-Giugovaz, M.G.; Mostafavi, D.; Revilla-Leon, M. Additively manufactured scan body for transferring a virtual 3-dimensional representation to a digital articulator for completely edentulous patients. J. Prosthet. Dent. 2021, 128, 1171–1178.

41. Solaberrieta, E.; Garmendia, A.; Minguez, R.; Brizuela, A.; Pradies, G. Virtual facebow technique. J. Prosthet. Dent. 2015, 114, 751–755.

42. Granata, S.; Giberti, L.; Vigolo, P.; Stellini, E.; Di Fiore, A. Incorporating a facial scanner into the digital workflow: A dental technique. J. Prosthet. Dent. 2020, 123, 781–785.

43. Noguchi, N.; Tsuji, M.; Shigematsu, M.; Goto, M. An orthognathic simulation system integrating teeth, jaw and face data using 3D cephalometry. Int. J. Oral Maxillofac. Surg. 2007, 36, 640–645.

44. Stella, M.; Bernardini, P.; Sigona, F.; Stella, A.; Grimaldi, M.; Fivela, B.G. Numerical instabilities and three-dimensional electromagnetic articulography. J. Acoust. Soc. Am. 2012, 132, 3941–3949.

45. Chen, G.; Lu, X.; Yin, N. Finite-element biomechanical-simulated analysis of the nasolabial fold. J. Craniofac. Surg. 2020, 31, 492–496.

46. Dai, J.; Dong, Y.; Xin, P.; Hu, G.; Xiao, C.; Shen, S.; Shen, S.G. A novel method to determine the potential rotational axis of the mandible during virtual three-dimensional orthognathic surgery. J. Craniofac. Surg. 2013, 24, 2014–2017.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).