Abstract

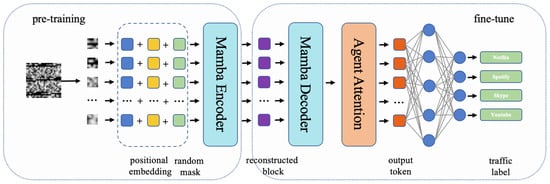

With the widespread use of encryption protocols on network data, fast and effective encrypted traffic classification can improve the efficiency of traffic analysis. A resampling method combining Wasserstein GAN and random selection is proposed to solve the dataset imbalance problem; it uses Wasserstein GAN for oversampling and random selection for undersampling to achieve class balance. Based on Mamba, a model with an ultra-low parameter count, we propose an encrypted traffic classification model, ET-Mamba, which has a pre-training phase and a fine-tuning phase. During the pre-training phase, positional embedding is used to represent the blocks of the traffic grayscale image, and random masking is used to strengthen the learning of the intrinsic correlation among the blocks. During the fine-tuning phase, the agent attention mechanism is adopted for feature extraction to achieve global information modeling at a low computational cost, and the SmoothLoss function is designed to address the insufficient generalization ability of the cross-entropy loss function during training. The experimental results show that the proposed model significantly reduces the number of parameters and outperforms other models in terms of classification accuracy on non-VPN datasets.

1. Introduction

In this digital era, network traffic is growing rapidly and becoming an important resource for network security research. With the improvement in privacy protection awareness and the wide application of encryption technology, the proportion of encrypted traffic is increasing, which increases the difficulty of traffic monitoring and identification. Encrypted traffic classification can determine the traffic category, which is conducive to the further monitoring and identification of encrypted traffic.

We propose an encrypted traffic classification model, Encrypted Traffic Mamba (ET-Mamba), based on Mamba [1], a model with an ultra-low parameter count. The main innovations of this model are as follows:

(1) A resampling method combining Wasserstein GAN [2] and random selection is designed to compose a new dataset with more balanced categories. By generating minority class samples and randomly selecting a portion of the majority class samples, the resampling method prevents the classifier from learning the categories with more samples while ignoring the categories with fewer samples.

(2) A framework for encrypted traffic classification is proposed based on the encoder and decoder of Mamba. The framework has a pre-training stage, which includes the Mamba encoder, and a fine-tuning stage, which includes the Mamba decoder.

(3) During the pre-training phase, the positional embedding for the blocks of the traffic grayscale image is used for feature representation, and the random masking of coding is used to reinforce the learning ability of contextual associations. During the fine-tuning phase, the agent attention mechanism is adopted for feature extraction to achieve global information modeling at a low computational cost.

(4) The SmoothLoss function is designed to reduce the sensitivity of the model to noise by introducing label smoothing combined with L2 regularization to suppress parameter overfitting and to improve the generalization ability and stability of the model.

The structure of the remainder of the paper is as follows: Section 2 provides an overview of the latest approaches for encrypted traffic classification. Section 3 provides our method and module design. Section 4 introduces our comparative experiment and ablation experiment design. Section 5 introduces our study summary, shortcomings, and prospects.

2. Related Work

In the past two decades, port-based classification [3], deep packet inspection [4], and machine learning methods [5] have made significant progress. However, due to the ever-growing complexity of the Internet, new encryption protocols, and advanced defense strategies, these methods are becoming less effective, and traditional models can no longer deal effectively with encrypted traffic classification. Thus, some researchers have begun to use deep learning to classify encrypted traffic.

Deep learning has increasingly replaced traditional machine learning for encrypted traffic classification. Wang et al. [6] were the first to apply deep learning to this task: the first 784 bytes of traffic of the original packet were used as model inputs, a one-dimensional convolutional neural network (CNN) was used for feature extraction, and the classification accuracy improved significantly over that of traditional machine learning methods. The recurrent neural network (RNN) was chosen to construct a model [7] because of its advantage in sequence modeling; it can extract latent temporal features from encrypted traffic and improve the classification results. Building on an analysis of why convolutional neural networks can deal with the encrypted traffic classification problem, long short-term memory (LSTM) networks also achieved good classification results [8], although with a deeper model. A graph neural network (GNN) model has been proposed [9] that combines the raw bytes of a packet with the relationships between packets, improving accuracy by taking full advantage of the non-Euclidean properties and temporal relationships of network data. Some combined models have also been proposed, such as CNN combined with RNN [10] and CNN combined with Transformer [11]. Among large models, ET-BERT [12] is a BERT-based encrypted traffic classification model that pre-trains deep contextual datagram-level traffic representations from large-scale unlabeled data and then needs only simple fine-tuning on labeled data for the classification task; it obtained good classification results but has a large number of parameters. NetMamba [13], proposed by Wang, is an efficient linear-time state-space model (SSM) built on a specially selected and improved unidirectional Mamba structure. Nevertheless, despite promising outcomes, the Mamba model remains at an early stage of development, and widespread adoption and large-scale applied research have yet to materialize. In addition, NetMamba does not consider the impact of imbalanced data.

Class imbalance is common in encrypted traffic classification. An ensemble learning approach has been used to deal with it [14], combining the prediction results of multiple classifiers to improve the overall classification performance. Another treatment combines cross-entropy loss and focal loss to widen the loss gap between categories by adjusting the weights so that the model focuses more on minority class samples [15]. An Imbalanced Generative Adversarial Network (IGAN) has been used to generate minority class samples to solve the class imbalance problem [16].

Most current deep learning-based methods for encrypted traffic identification and classification work by increasing the network depth or fusing multiple neural network models. Although this can achieve good results, most of these models are structurally complex, have many parameters, and require huge computational overhead. Existing methods for resolving class imbalance in encrypted traffic datasets fall into two groups, each with a drawback. The first assigns higher weights to minority class samples through ensemble learning or loss functions, which is likely to result in high model complexity or a risk of overfitting. The second uses generative adversarial networks to generate minority class samples without reducing the majority class samples, and the generated samples vary in quality.

3. Methods

3.1. Balancing in Data Processing

The USTC-TFC2016 [17], ISCX-VPN2016 [18], and ISCX-Tor2016 [19] public datasets are used in our study of encrypted traffic classification. To convert the raw traffic data into an input format that the deep learning model can accept, each data packet is transformed into a 28 × 28-pixel grayscale image [20]. Firstly, based on the five-tuple information (source IP address, source port number, destination IP address, destination port number, and protocol), SplitCap is used to split the pcap files in the original dataset by session. Secondly, the split traffic contains many duplicate files, which have identical hash values; the FindDuplicate software (v1.04) is used to calculate the hash values of the files, and the duplicates with the same hash values are deleted. Thirdly, the UDP header is fixed at 8 bytes, while the basic TCP header is 20 bytes; thus, twelve 0 bytes are added to the UDP header so that both headers have the same length. Considering that most data packets are shorter than 784 bytes, the first 784 bytes of each packet are converted into a grayscale image: packets shorter than 784 bytes are zero-padded to 784 bytes, and longer packets are truncated to 784 bytes. Finally, the MAC address of the data link layer and the IP address of the IP layer are replaced with zeros.
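The truncation and padding step can be illustrated with a minimal sketch. It assumes the packet is already available as raw bytes; the function name `packet_to_image` is hypothetical, and session splitting, deduplication, UDP header padding, and address zeroing are not shown.

```python
import numpy as np

IMAGE_SIDE = 28
IMAGE_BYTES = IMAGE_SIDE * IMAGE_SIDE  # 784 bytes, one byte per pixel


def packet_to_image(packet: bytes) -> np.ndarray:
    """Truncate or zero-pad a packet to 784 bytes and reshape it to 28 x 28."""
    clipped = packet[:IMAGE_BYTES]                                # truncate long packets
    padded = clipped + b"\x00" * (IMAGE_BYTES - len(clipped))     # zero-pad short packets
    return np.frombuffer(padded, dtype=np.uint8).reshape(IMAGE_SIDE, IMAGE_SIDE)


short_image = packet_to_image(b"\x01\x02\x03")       # 3-byte packet, padded
long_image = packet_to_image(bytes([7]) * 2000)      # 2000-byte packet, truncated
print(short_image.shape, long_image.shape)
```

Each byte maps directly to one pixel intensity in the range 0 to 255, so no scaling is applied in this sketch.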

All three datasets are category-imbalanced, as seen in Table 1, Table 2 and Table 3. In the USTC-TFC2016 dataset, the FaceTime file size is 2 MB, while the Weibo file size is 1.6 GB. In the ISCX-VPN2016 dataset, the email file size is 21 MB, while the file is 17.6 GB. In the ISCX-Tor dataset, the email file size is 16 MB, while the file is 13 GB. This significant imbalance in the sample categories causes the model to learn the categories with more samples and ignore the categories with fewer samples during training, which decreases the generalization ability and classification accuracy of the model.

Table 1.

Sample file sizes in the ISCX-VPN dataset.

Table 2.

Sample file sizes in the ISCX-Tor dataset.

Table 3.

Sample file sizes in the USTC-TFC dataset.

This study proposes a resampling method combining Wasserstein GAN and random selection for resolving the sample category imbalance in a dataset (Figure 1), and this method includes an oversampling process based on the Wasserstein GAN network and an undersampling process based on the random selection method. In the oversampling process, the minority class samples are fed into the Wasserstein GAN, and the virtual samples of the corresponding classes are generated, thus expanding the number of minority class samples; in the undersampling process, a portion of the samples are randomly selected from the majority class samples. After resampling, the number of samples in each category is 10,000, and the samples in each category are divided according to training/validation/testing = 8:1:1.

Figure 1.

Resampling flowchart for Wasserstein GAN combined with random selection.
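The resampling logic can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: `placeholder_generator` is a hypothetical stand-in for a trained Wasserstein GAN generator (it only re-draws existing rows), and the class sizes in the usage lines are made up. The target of 10,000 samples per class and the 8:1:1 split come from the text.

```python
import numpy as np

TARGET_PER_CLASS = 10_000  # per-class size stated in the text


def resample_class(samples, generate, rng, target=TARGET_PER_CLASS):
    """Balance one class: random undersampling if too large, oversampling otherwise."""
    count = len(samples)
    if count >= target:
        keep = rng.choice(count, size=target, replace=False)  # random selection
        return samples[keep]
    synthetic = generate(target - count)  # would be WGAN output in practice
    return np.concatenate([samples, synthetic], axis=0)


def split_8_1_1(samples, rng):
    """Shuffle one class and split it into train/validation/test = 8:1:1."""
    order = rng.permutation(len(samples))
    n_train = len(samples) * 8 // 10
    n_val = len(samples) // 10
    return (samples[order[:n_train]],
            samples[order[n_train:n_train + n_val]],
            samples[order[n_train + n_val:]])


def placeholder_generator(pool, rng):
    """Hypothetical generator interface: returns n rows shaped like real samples."""
    return lambda n: pool[rng.integers(0, len(pool), size=n)]


rng = np.random.default_rng(0)
majority = rng.integers(0, 256, size=(12_000, 784), dtype=np.uint8)
minority = rng.integers(0, 256, size=(1_500, 784), dtype=np.uint8)

balanced_major = resample_class(majority, placeholder_generator(majority, rng), rng)
balanced_minor = resample_class(minority, placeholder_generator(minority, rng), rng)
train, val, test = split_8_1_1(balanced_minor, rng)
print(len(balanced_major), len(balanced_minor), len(train), len(val), len(test))
```

Keeping the generator behind a plain callable lets the same balancing code run with any oversampling source.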

3.2. ET-Mamba Model

3.2.1. Model Design

The overall framework of the encrypted traffic classification model ET-Mamba proposed in this study, based on the Mamba architecture, is shown in Figure 2; it includes a pre-training phase and a fine-tuning phase. The model built in the pre-training phase uses positional embedding for raw feature representation and a Mamba encoder for feature extraction; the model built in the fine-tuning phase uses the Mamba decoder and the agent attention mechanism for feature extraction. During training, pre-training is carried out first to obtain the hidden space representation of the encrypted traffic; then, the hidden space representation is fine-tuned to enhance the feature representation of the encrypted traffic and improve the model performance. Meanwhile, the SmoothLoss function is designed to address the insufficient generalization ability of the cross-entropy loss function during training.

Figure 2.

Framework of ET-Mamba.

The input to the model is a grayscale image of the encrypted traffic data [20] with a size of 28 × 28 pixels. The input image is sliced using a convolution with a 4 × 4 kernel and a stride of 4, which divides the image into 49 image blocks that retain the local features of the original data. Positional encoding helps the model to understand the arrangement of the image blocks and the relative positional relationships between them, which is the key to capturing the spatial structural features among the image blocks. The model encodes each image block by positional embedding to ensure that the image blocks retain their spatial position information during the encoding process. For the model to accurately understand the correlations between image blocks, a random masking approach is introduced in the pre-training phase: a portion of the image blocks is randomly masked, and the model is trained to predict the masked blocks. The random masking approach forces the model to focus on the local features of the image blocks and the global relationships between them, thus enabling the model to perform effective recognition despite incomplete or missing data. After positional embedding and random masking, the grayscale image block of encrypted traffic can be represented as Equation (1)

In the above equation, $x_i$ is the $i$th image block, $p_i$ is the positional embedding representation of the $i$th image block, which typically uses sequential positional encoding, and $M$ is a randomly generated mask used to mask out parts of the image block.

The resulting value $z$ is encoded using a Mamba encoder to obtain the hidden space representation $h$ according to Equation (2)

In the above equation, $\mathrm{Encoder}$ is the mapping function of the Mamba encoder, which generates the latent space representation $h$ based on the input $z$ and the parameters $\theta_{enc}$ of the Mamba encoder. The core encoding part is composed of multiple Mamba blocks. Each block integrates RMSNorm normalization to improve numerical stability, residual connections to ease the training of deep networks, and the DropPath stochastic depth mechanism to enhance generalization ability. Together, these components ensure the extraction of deep features from $z$ and finally generate a meaningful hidden space representation $h$, achieving an efficient mapping from the input to latent features.

The goal of the fine-tuning phase is to utilize the latent space representation $h$ learned during the pre-training phase, that is, the reconstruction block, to further optimize the model for the specific downstream task of traffic classification. During the fine-tuning process, the reconstruction block is fed into the Mamba decoder, which converts it into feature vectors $F$, as shown in Equation (3)

where $\mathrm{Decoder}$ is the mapping function of the decoder that generates the output $F$ based on the reconstruction block $h$ and the decoder parameters $\theta_{dec}$. The parameters $\theta_{dec}$ include the learnable parameters of each core component of the decoder: the weight and bias of the linear layer, which is responsible for mapping the encoder output to the decoder target dimension; the weights and biases of the SSM layer and the feedforward network; and the normalization parameters used to normalize the feature distribution.

The further feature extraction of $F$ using the agent attention mechanism allows the model to dynamically focus on the parts of $F$ that are relevant to a particular task and suppress irrelevant information to extract a more accurate feature representation, as in Equation (4)

where $\alpha_i$ is calculated and generated by a specific query vector to measure the importance of each information unit in the decoder output to a specific task, $F_i$ is the output of the decoder, and $n$ represents the number of feature items involved in the attention calculation.

The fully connected layer of the model maps the feature representations weighted by the agent attention to the probability distribution over the categories, as in Equation (5)

where $W$ is the weight matrix of the fully connected layer, $b$ is the bias term, and $\hat{y}$ represents the probability distribution over the categories. Softmax is commonly used for multi-class classification problems. It converts a real-valued vector into a probability distribution; that is, each element of the vector is converted into a value between 0 and 1, and all elements sum to 1.

3.2.2. Random Masking

The purpose of random masking is to artificially introduce information loss, preventing the model from relying on the simple extrapolation of adjacent information and thereby forcing the model to deeply explore the intrinsic features of encrypted traffic. After positional encoding, 1/7 of the encrypted traffic blocks are randomly masked. Here, the original encrypted traffic image size is 28 × 28, and after convolution with a kernel size of 4 × 4 and a stride of 4, the image is processed into 49 encrypted traffic image blocks of 4 × 4, so masking 1/7 of the blocks means masking 7 blocks. The specific mask generation operation is described in Equation (6)

After completing the positional embedding, the Shuffle function randomly rearranges the encrypted traffic image blocks. In the actual transmission of encrypted traffic data, there may be certain patterns or sequences. If the model directly learns this inherent order, it may rely too heavily on these surface patterns and fail to explore the intrinsic features of the data. The Shuffle function randomly shuffles the order of the data blocks, disrupting these simple patterns and increasing the diversity and complexity of the data. The model must then learn from the content, features, and complex relationships between data blocks, which encourages it to learn more universal and robust feature representations and improves its generalization ability.

After random rearrangement using the Shuffle function, a set of visible encrypted traffic blocks and a set of masked encrypted traffic blocks are selected from the rearranged image blocks. The first 42 blocks are selected as the visible set, and the remaining seven blocks are masked with all zeros to form completely black image blocks. These masked blocks simulate data loss during model training, and the model must attempt to reconstruct the masked parts from the information in the visible blocks. This is similar to actual network environments, where some data may be lost or unavailable. By learning how to recover complete information from partially visible data, the model can better cope with complex and changing network environments and improve its performance in tasks such as encrypted traffic classification. Moreover, by adjusting the ratio and selection method of visible and masked blocks, the learning performance of the model can be further optimized, achieving a good performance balance across different datasets and task scenarios.
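The block geometry and the shuffle-then-mask procedure can be made concrete with a short sketch. It is an assumption-laden illustration: the paper slices the image with a learned 4 × 4 convolution, whereas `to_patches` below simply cuts out the raw 4 × 4 pixel blocks that such a convolution would see, and both function names are hypothetical.

```python
import numpy as np

PATCH = 4          # kernel size and stride from the text
NUM_VISIBLE = 42   # 49 blocks minus the 7 masked ones


def to_patches(image):
    """Cut a 28 x 28 image into 49 non-overlapping 4 x 4 blocks in row-major order."""
    side = image.shape[0] // PATCH                       # 7 blocks per side
    blocks = image.reshape(side, PATCH, side, PATCH)     # (7, 4, 7, 4)
    return blocks.transpose(0, 2, 1, 3).reshape(side * side, PATCH * PATCH)


def shuffle_and_mask(patches, rng):
    """Shuffle the block order, keep the first 42 visible, zero out the remaining 7."""
    order = rng.permutation(len(patches))
    visible_idx = order[:NUM_VISIBLE]
    masked_idx = order[NUM_VISIBLE:]
    masked = patches.copy()
    masked[masked_idx] = 0                               # all-zero (black) blocks
    return masked, visible_idx, masked_idx


rng = np.random.default_rng(0)
image = rng.integers(1, 256, size=(28, 28), dtype=np.uint8)  # nonzero pixels only
patches = to_patches(image)
masked, visible_idx, masked_idx = shuffle_and_mask(patches, rng)
print(patches.shape, len(visible_idx), len(masked_idx))
```

Returning the index sets alongside the masked blocks keeps the original positions available, which a reconstruction objective needs in order to compare predictions with the hidden blocks.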

3.2.3. Mamba Encoder and Decoder

The structure of the Mamba encoder and decoder of the model is shown in Figure 3. Its core design combines normalization (Norm), a convolution operation (Conv), and the selective state-space model (SSM) for the effective modeling of the stride tokens. The stride tokens are first normalized to ensure numerical stability, and the normalized tokens then pass through two branches. In the first branch, the normalized stride tokens are transformed into the feature space by a linear layer to suit the subsequent convolution operation and state-space modeling. The convolution module then captures the pattern relationships between local features and their neighboring steps, the activation function introduces nonlinear expressive capability, and the SSM module models the long-range dependence of the sequence data to further enhance global feature capture. In the second branch, the normalized stride tokens are processed by a linear layer and an activation function and then interact with the output of the SSM via element-wise multiplication, thus integrating the global information with the original features of the stride tokens. The combined representation is further processed by a linear layer and added to the original stride tokens through a residual connection, which preserves the original information of the stride tokens and improves the stability of gradient propagation. The design of the Mamba encoder and decoder thus reflects the balanced modeling of local and global features.

Figure 3.

Encoder/decoder of Mamba.

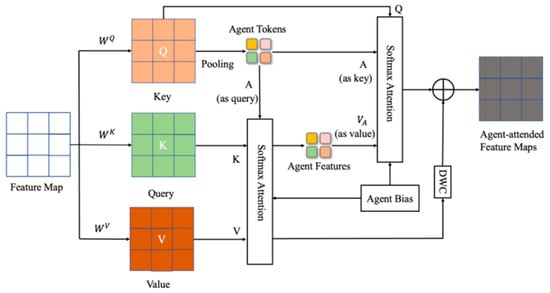
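The data flow through one block can be traced with a simplified NumPy sketch. Several things here are assumptions rather than the paper's design: the selective SSM is replaced by a fixed diagonal linear recurrence (`diagonal_ssm`), the normalization has no learnable gain, SiLU is assumed as the activation, and all dimensions and parameter names are hypothetical.

```python
import numpy as np


def rms_norm(x, eps=1e-6):
    """Scale each token to unit root-mean-square (no learnable gain here)."""
    return x / np.sqrt(np.mean(x * x, axis=-1, keepdims=True) + eps)


def silu(x):
    return x / (1.0 + np.exp(-x))


def causal_conv1d(x, kernel):
    """Depthwise causal convolution over the sequence axis; x: (L, E), kernel: (K, E)."""
    k, length = kernel.shape[0], x.shape[0]
    padded = np.concatenate([np.zeros((k - 1, x.shape[1])), x], axis=0)
    return sum(kernel[j] * padded[j:j + length] for j in range(k))


def diagonal_ssm(x, decay, b, c):
    """Stand-in recurrence h_t = decay * h_{t-1} + b * x_t, y_t = c * h_t.
    The real selective SSM makes these parameters depend on the input."""
    h = np.zeros(x.shape[1])
    out = np.empty_like(x)
    for t in range(x.shape[0]):
        h = decay * h + b * x[t]
        out[t] = c * h
    return out


def mamba_like_block(tokens, p):
    normed = rms_norm(tokens)                                    # Norm
    main = silu(causal_conv1d(normed @ p["w_in"], p["conv"]))    # linear, Conv, activation
    main = diagonal_ssm(main, p["decay"], p["b"], p["c"])        # long-range branch
    gate = silu(normed @ p["w_gate"])                            # second branch
    return tokens + (main * gate) @ p["w_out"]                   # gating, linear, residual


rng = np.random.default_rng(0)
L, D, E, K = 49, 16, 32, 4
params = {
    "w_in": rng.normal(0, 0.1, (D, E)),
    "w_gate": rng.normal(0, 0.1, (D, E)),
    "w_out": rng.normal(0, 0.1, (E, D)),
    "conv": rng.normal(0, 0.1, (K, E)),
    "decay": rng.uniform(0.5, 0.99, E),
    "b": rng.normal(0, 1.0, E),
    "c": rng.normal(0, 1.0, E),
}
tokens = rng.normal(size=(L, D))
out = mamba_like_block(tokens, params)
print(out.shape)
```

The recurrence runs in time linear in the sequence length, which is the property that distinguishes this family of blocks from quadratic Softmax attention.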

3.2.4. Agent Attention Module

Softmax attention [21] possesses strong expressive power and can effectively capture dependencies in sequence data, but its computational cost rises significantly as the length of the input sequence increases. Linear attention [22] reduces the computational complexity by matrix multiplication with input sequences through learnable weight matrices, but it usually relies on fixed-size weight matrices or inner-product operations during computation and is unable to handle variable-length sequences. The agent attention mechanism [23] combines the advantages of Softmax attention and linear attention. Firstly, preliminary information extraction is carried out by linear attention, which quickly obtains preliminary representations of the input sequences without increasing the computational complexity; then, these preliminary representations are weighted and averaged using Softmax attention, so that input sequences of arbitrary lengths can be handled flexibly. We use the agent attention mechanism for feature extraction in the fine-tuning stage to achieve a better balance between performance and computational efficiency.

As shown in Figure 4, the agent attention mechanism introduces the concept of an “agent”. A linear transformation is performed on the input feature map $X$ using Equation (7), resulting in the agent’s query $Q$, key $K$, and value $V$. Further, using Equation (8), the agent token $A$ is calculated.

where $W_Q$, $W_K$, and $W_V$ are learnable weight matrices for the agents, and $d$ is the feature dimension of the element.

Figure 4.

Agent attention mechanism.

In the information aggregation process, first, the similarity matrix $S$ between the agent token $A$ and the key $K$ is calculated, and then, the Softmax calculation is performed on the similarity matrix $S$ to obtain the agent attention weight matrix $W_A$, as shown in Equation (9):

Finally, the agent attention weight matrix $W_A$ is used to compute a weighted sum of the value $V$, giving the aggregated information $V_A = W_A V$. During the information broadcasting process, the similarity matrix $S'$ between the agent token $A$ and the query $Q$ is calculated. A Softmax operation is performed on the similarity matrix $S'$, resulting in the new attention weight matrix $W'_A$. The Softmax used here is the same as that in Equation (5). Then, the aggregated information $V_A$ is treated as the value, and a weighted sum of $V_A$ using the new attention weights $W'_A$ is calculated to obtain the agent attention output $O$, as shown in Equation (10)

The ability of the agent attention mechanism to focus more on the interactions of a specific agent or subset of features enhances the model’s ability to capture key information in the input sequence and reduces the computational complexity of the attention mechanism.
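The aggregation and broadcasting steps can be sketched in NumPy as follows. The sketch makes assumptions that the text does not confirm: agent tokens are formed by averaging contiguous groups of queries, similarities are scaled by the square root of the feature dimension, and the function and parameter names are hypothetical.

```python
import numpy as np


def softmax(scores):
    shifted = scores - scores.max(axis=-1, keepdims=True)  # for numerical stability
    e = np.exp(shifted)
    return e / e.sum(axis=-1, keepdims=True)


def agent_attention(x, w_q, w_k, w_v, num_agents):
    q, k, v = x @ w_q, x @ w_k, x @ w_v                   # each (n, d)
    d = q.shape[-1]
    # Assumption: each agent token is the mean of one contiguous group of queries.
    groups = np.array_split(q, num_agents, axis=0)
    agents = np.stack([g.mean(axis=0) for g in groups])   # (m, d)
    # Aggregation: agents attend to keys and collect values.
    agg_weights = softmax(agents @ k.T / np.sqrt(d))      # (m, n)
    v_agent = agg_weights @ v                             # (m, d)
    # Broadcasting: queries attend to agents and read the aggregated values.
    bc_weights = softmax(q @ agents.T / np.sqrt(d))       # (n, m)
    return bc_weights @ v_agent                           # (n, d)


rng = np.random.default_rng(0)
n, d_in, d = 49, 16, 8
w_q, w_k, w_v = (rng.normal(0, 0.1, (d_in, d)) for _ in range(3))
x = rng.normal(size=(n, d_in))
out = agent_attention(x, w_q, w_k, w_v, num_agents=7)
print(out.shape)
```

With n tokens and m agents, both similarity matrices have n × m entries, so the cost grows linearly in n for fixed m instead of quadratically as in full Softmax attention.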

3.2.5. SmoothLoss Function

In classification tasks, cross-entropy [24] is usually used as a loss function. However, the cross-entropy loss function may lead to the insufficient generalization ability of the model when dealing with complex and diverse data. We propose an improved cross-entropy loss function, SmoothLoss, which combines label smoothing and L2 regularization [25] to improve the accuracy and generalization ability of the model.

The symbols are defined as follows:

$z$: the model output, with a shape of $N \times C$, where $N$ is the batch size and $C$ is the number of classes.

$y$: target labels, with a shape of $N$, with each value being an integer class index.

$p$: the model output after Softmax processing, representing the probability of each category.

$\theta$: model parameters.

Label smoothing employs Equation (11) to smooth the true labels $y$, resulting in the smoothed labels $\tilde{y}$, which reduces the overconfidence of the model and helps improve its generalization ability.

In the above equation, $y^{onehot}$ is the label vector after one-hot encoding, and $\varepsilon$ is the smoothing parameter, set to 0.1.

The cross-entropy loss for the smoothed labels can be represented as Equation (12)

In the above equation, $p_{ij}$ is the probability that the $i$th sample belongs to the $j$th category after Softmax, and $\tilde{y}_{ij}$ represents the smoothed label value of the $i$th sample in the $j$th category.

Furthermore, L2 regularization prevents overfitting by penalizing the sum of squares of the model parameters $\theta$, which is defined as Equation (14)

In the above equation, $\lambda$ is the weight of regularization.

Combining the above two equations, the total loss function can be represented as Equation (15)
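A loss of this kind can be sketched in NumPy as below. It assumes the common uniform variant of label smoothing, in which the smoothed label is (1 − ε) times the one-hot label plus ε/C, a batch-mean cross-entropy, and an L2 penalty summed over all parameter arrays. The smoothing value 0.1 comes from the text; the regularization weight `l2_weight` is a hypothetical default.

```python
import numpy as np


def smooth_loss(logits, targets, params, epsilon=0.1, l2_weight=1e-4):
    """Label-smoothed cross-entropy plus an L2 penalty on the parameters."""
    n, c = logits.shape
    one_hot = np.eye(c)[targets]                                   # (N, C)
    smoothed = (1.0 - epsilon) * one_hot + epsilon / c             # smoothed labels
    shifted = logits - logits.max(axis=1, keepdims=True)           # stable log-softmax
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    cross_entropy = -(smoothed * log_probs).sum(axis=1).mean()
    l2_penalty = l2_weight * sum(float(np.sum(w * w)) for w in params)
    return cross_entropy + l2_penalty


logits = np.array([[4.0, 0.5, -1.0], [0.2, 3.0, 0.1]])
targets = np.array([0, 1])
weights = [np.ones((3, 2)), np.ones(2)]
print(smooth_loss(logits, targets, weights))
```

Because every class keeps a small nonzero target, the loss cannot be driven to zero by making one logit arbitrarily large, which is how smoothing discourages overconfident predictions.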

4. Experiments

4.1. Comparison Experiments

To evaluate the performance of the ET-Mamba classification model, the accuracy (Ac), precision (Pr), recall (Re), and F1 score metrics were assessed using the USTC-TFC2016 [17], ISCX-VPN2016 [18], and ISCX-Tor2016 [19] datasets to compare its performance with that of the mainstream encrypted traffic classification methods such as DeepPacket [26], FS-Net [27], PERT [28], TSCRNN [29], and ET-BERT [12].
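The four metrics can be computed from a confusion matrix, as in the sketch below. The text does not state how per-class precision, recall, and F1 are averaged; macro averaging is assumed here, and the function name and the toy labels are hypothetical.

```python
import numpy as np


def classification_metrics(y_true, y_pred, num_classes):
    """Accuracy plus macro-averaged precision, recall, and F1 score."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    cm = np.zeros((num_classes, num_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)            # rows: true class, columns: predicted
    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)
    actual = cm.sum(axis=1)
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return {
        "Ac": tp.sum() / cm.sum(),
        "Pr": precision.mean(),
        "Re": recall.mean(),
        "F1": f1.mean(),
    }


metrics = classification_metrics([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], num_classes=3)
print(metrics)
```

Macro averaging weights every class equally, which matters for imbalanced traffic data because a weak minority class is not hidden by a dominant majority class.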

A server with an Intel i5-10400F processor, 32 GB of RAM, and an Nvidia GTX 1080 Ti graphics card was used for the experiments. The batch size used for the experimental parameters was 64, Adam was used as the optimizer, and the initial learning rate was set to 0.0001. The learning rate of 0.001 is the default value of the Adam optimizer, which balances the convergence speed and stability. A batch size of 64 causes a trade-off between memory efficiency, gradient stability, and generalization ability while adapting to hardware parallel computing [30].

The results of the comparison experiments are shown in Table 4, Table 5 and Table 6. The ET-Mamba model outperforms the other methods in all evaluation metrics on the USTC-TFC dataset, with an accuracy, precision, and recall of 99.84%, 99.85%, and 99.84%, respectively, which are 0.69%, 0.70%, and 0.72% higher than those of ET-BERT and 0.74%, 0.75%, and 0.74% higher than those of PERT. On the ISCX-VPN dataset, ET-Mamba outperforms the other models except ET-BERT, with an accuracy, precision, and recall of 98.19%, 98.23%, and 98.19%, respectively; its accuracy and precision are 0.71% and 0.68% lower than those of ET-BERT, while its recall is 0.39% higher. This gap arises because ET-BERT is pre-trained without supervision on a large amount of unlabeled VPN data. The ET-Mamba model outperforms the other models in all metrics on the ISCX-Tor dataset, with an accuracy, precision, and recall of 99.65%, 99.66%, and 99.65%, which are 0.44%, 0.43%, and 0.44% higher than those of ET-BERT and 2.59%, 2.56%, and 2.55% higher than those of TSCRNN, respectively.

Table 4.

Comparison of our model with other models on the USTC-TFC dataset.

Table 5.

Comparison of our model with other models on the ISCX-VPN dataset.

Table 6.

Comparison of our model with other models on the ISCX-Tor dataset.

Overall, the ET-Mamba model performs well on multiple datasets and effectively improves the accuracy of encrypted traffic classification.

The processing speed of test data can also reflect the efficiency of the model’s operation. We tested the run time of the test data on three datasets. The average run time is shown in Table 7. Experiments show that the run time of our model is shorter than that of other comparison methods.

Table 7.

The average run time of the test data on three datasets.

4.2. Ablation Experiments

The impact of modules such as data resampling, agent attention module, and SmoothLoss function on the performance of the baseline model Mamba is systematically analyzed through ablation experiments. The experiments use USTC-TFC2016, ISCX-VPN2016, and ISCX-Tor2016 datasets to ablate the modules one by one or jointly and evaluate them using the metrics of accuracy (Ac), precision (Pr), recall (Re), and F1 score, and the experimental results are shown in Table 8, Table 9 and Table 10.

Table 8.

Ablation experiments of our model on the USTC-TFC dataset.

Table 9.

Ablation experiments of our model on the ISCX-VPN dataset.

Table 10.

Ablation experiments of our model on the ISCX-Tor dataset.

As seen in Table 8, Table 9 and Table 10, after replacing the cross-entropy loss function with the SmoothLoss function in the baseline model Mamba, the accuracy improved by 1.15% on the USTC-TFC2016 dataset, by 0.90% on the ISCX-VPN2016 dataset, and by 0.90% on the ISCX-Tor2016 dataset, indicating the effectiveness of the SmoothLoss function in optimizing the loss. The introduction of the agent attention module improved the accuracy by 0.75% on the USTC-TFC2016 dataset, 0.97% on the ISCX-VPN2016 dataset, and 1.77% on the ISCX-Tor2016 dataset, which reflects the effectiveness of the agent attention mechanism in enhancing the model’s feature extraction ability. After the introduction of data resampling, the accuracy improved by 0.93% on the USTC-TFC2016 dataset, 0.44% on the ISCX-VPN2016 dataset, and 0.86% on the ISCX-Tor2016 dataset, which illustrates the effectiveness of the data balancing process. When two modules are introduced in combination, the performance of the model improves significantly compared to one module alone, showing the complementarity and synergy between the modules. The best performance is achieved when all three modules are combined. The ablation experiments show that the different modules play complementary roles in improving the generalization ability and classification accuracy of the model and can provide significant performance enhancement in a variety of encrypted traffic classification scenarios.

4.3. Generalization Experiment

To test the generalization ability of the ET-Mamba model, we conducted experiments on the ISCX-VPN2016 and ISCX-Tor2016 datasets. The model weights trained on the ISCX-VPN2016 dataset are used to test the ISCX-Tor2016 test set, and vice versa. The USTC-TFC dataset is not used for the generalization experiments because its label names and number of sample categories differ from those of ISCX-VPN2016 and ISCX-Tor2016.

We selected the Ac and F1 metrics, and the experimental results are shown in Table 11. The weights trained on ISCX-VPN2016 still perform well on the ISCX-Tor2016 dataset: Ac is 0.06% lower than on the ISCX-VPN2016 test set itself, and F1 is 0.57% lower. The weights trained on ISCX-Tor2016 also perform well on the ISCX-VPN2016 dataset: Ac is 0.47% lower than on the ISCX-Tor2016 test set itself, and F1 is 0.25% lower. These results show that the ET-Mamba model has good generalization ability and can accurately classify datasets that it has not seen before.

Table 11.

Generalization experiment on the ISCX-VPN dataset and ISCX-Tor dataset.

5. Conclusions

We propose an encrypted traffic classification model based on the Mamba architecture to improve the performance of encrypted traffic classification. A resampling method combining Wasserstein GAN and random selection is proposed to resolve the dataset imbalance problem. The blocks of the traffic grayscale image are represented by positional embedding, and the agent attention mechanism is used in feature extraction to achieve global information modeling at a low computational cost; the random masking approach is used to pre-train the model to learn the intrinsic correlation between the blocks of the traffic grayscale image. The SmoothLoss function is designed to address the insufficient generalization ability of the cross-entropy loss function during training. The model achieves high classification accuracy on three datasets, USTC-TFC2016, ISCX-VPN2016, and ISCX-Tor2016, with an accuracy of 99.85%, 98.19%, and 99.65%, respectively; the number of model parameters is as low as 1.8 M, which demonstrates that the model proposed in this study has high classification performance in encrypted traffic classification.

The characteristics of real encrypted traffic continue to change as malware evolves, so traditional models that depend on historical data are prone to failure. The existing public datasets (ISCX-VPN, ISCX-Tor, USTC-TFC, etc.) are limited in coverage, which makes it difficult to identify rare encrypted traffic types. In future work, we plan to fine-tune the model dynamically through transfer learning to improve its ability to recognize new traffic, and to collect more complex traffic types to build a more comprehensive encrypted traffic dataset.

Author Contributions

Conceptualization, J.X., L.C. and W.X.; methodology, L.C., J.X. and L.H.; software, L.C., L.D. and C.W.; validation, J.X., L.C. and L.H.; investigation, J.X., L.C. and W.X.; resources, L.H.; data curation, L.C. and J.X.; writing—original draft preparation, L.C. and J.X.; writing—reviewing and editing, L.H.; supervision, L.H.; project administration, J.X. and W.X.; funding acquisition, L.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (grant number: 62362040).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The USTC-TFC2016 dataset can be accessed publicly at https://github.com/davidyslu/USTC-TFC2016 (accessed on 14 April 2025), the ISCX-VPN2016 dataset can be accessed publicly at https://www.unb.ca/cic/datasets/vpn.html (accessed on 14 April 2025), and the ISCX-Tor2016 dataset can be accessed publicly at https://www.unb.ca/cic/datasets/tor.html (accessed on 14 April 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

- Arjovsky, M.; Chintala, S.; Bottou, L. Wasserstein generative adversarial networks. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 214–223.

- Bar-Yanai, R.; Langberg, M.; Peleg, D. Realtime classification for encrypted traffic. In Proceedings of the International Symposium on Experimental Algorithms, Naples, Italy, 20–22 May 2010; Springer: Berlin/Heidelberg, Germany, 2010; pp. 373–385.

- Rezaei, S.; Liu, X. Deep learning for encrypted traffic classification: An overview. IEEE Commun. Mag. 2019, 57, 76–81.

- Huang, Y.F.; Lin, C.B.; Chung, C.M. Research on qos classification of network encrypted traffic behavior based on machine learning. Electronics 2021, 10, 1376.

- Wang, W.; Zhu, M.; Wang, J.; Zeng, X.; Yang, Z. End-to-end encrypted traffic classification with one-dimensional convolution neural networks. In Proceedings of the IEEE International Conference on Intelligence and Security Informatics, Beijing, China, 22–24 July 2017; pp. 43–48.

- Ren, X.; Gu, H.; Wei, W. Tree-RNN: Tree structural recurrent neural network for network traffic classification. Expert Syst. Appl. 2021, 167, 114363.

- Mei, Y.; Luktarhan, N.; Zhao, G.; Yang, X. An Encrypted Traffic Classification Approach Based on Path Signature Features and LSTM. Electronics 2024, 13, 3060.

- Huoh, T.L.; Luo, Y.; Zhang, T. Encrypted network traffic classification using a geometric learning model. In Proceedings of the 2021 IFIP/IEEE International Symposium on Integrated Network Management, Bordeaux, France, 17–21 May 2021; pp. 376–383.

- Zou, Z.; Ge, J.; Zheng, H.; Wu, Y.; Han, C.; Yao, Z. Encrypted traffic classification with a convolutional long short-term memory neural network. In Proceedings of the 2018 IEEE 20th International Conference on High Performance Computing and Communications; IEEE 16th International Conference on Smart City; IEEE 4th International Conference on Data Science and Systems, Exeter, UK, 28–30 June 2018; pp. 329–334.

- Wang, K.; Gao, J.; Lei, X. MTC: A Multi-Task Model for Encrypted Network Traffic Classification Based on Transformer and 1D-CNN. Intell. Autom. Soft Comput. 2023, 37, 619.

- Lin, X.; Xiong, G.; Gou, G.; Li, Z.; Shi, J.; Yu, J. Et-bert: A contextualized datagram representation with pre-training transformers for encrypted traffic classification. In Proceedings of the ACM Web Conference, Lyon, France, 25–29 April 2022; pp. 633–642.

- Wang, T.; Xie, X.; Wang, W.; Wang, C.; Zhao, Y.; Cui, Y. Netmamba: Efficient network traffic classification via pre-training unidirectional mamba. In Proceedings of the 2024 IEEE 32nd International Conference on Network Protocols (ICNP), Charleroi, Belgium, 28–31 October 2024; pp. 1–11.

- Almomani, A. Classification of virtual private networks encrypted traffic using ensemble learning algorithms. Egypt. Inform. J. 2022, 23, 57–68.

- Qin, J.; Liu, G.; Duan, K. A New Imbalanced Encrypted Traffic Classification Model Based on CBAM and Re-Weighted Loss Function. Appl. Sci. 2022, 12, 9631.

- Rao, Y.N.; Suresh Babu, K. An imbalanced generative adversarial network-based approach for network intrusion detection in an imbalanced dataset. Sensors 2023, 23, 550.

- Wang, W.; Zhu, M.; Zeng, X.; Ye, X.; Sheng, Y. Malware traffic classification using convolutional neural network for representation learning. In Proceedings of the 2017 International Conference on Information Networking, Da Nang, Vietnam, 11–13 January 2017; pp. 712–717.

- Draper-Gil, G.; Lashkari, A.H.; Mamun, M.S.I.; Ghorbani, A.A. Characterization of encrypted and vpn traffic using time-related. In Proceedings of the 2nd International Conference on Information Systems Security and Privacy, Rome, Italy, 19–21 February 2016; pp. 407–414.

- Lashkari, A.H.; Gil, G.D.; Mamun, M.S.I.; Ghorbani, A.A. Characterization of tor traffic using time based features. In Proceedings of the International Conference on Information Systems Security and Privacy, Funchal, Portugal, 19–21 February 2017; pp. 253–262.

- Shapira, T.; Shavitt, Y. Flowpic: Encrypted internet traffic classification is as easy as image recognition. In Proceedings of the IEEE INFOCOM 2019-IEEE Conference on Computer Communications Workshops, Paris, France, 29 April–2 May 2019; pp. 680–687.

- Heinsen, F.A. Softmax Attention with Constant Cost per Token. arXiv 2024, arXiv:2404.05843.

- Katharopoulos, A.; Vyas, A.; Pappas, N.; Fleuret, F. Transformers are rnns: Fast autoregressive transformers with linear attention. In Proceedings of the International Conference on Machine Learning, Virtual, 12–18 July 2020; pp. 5156–5165.

- Han, D.; Ye, T.; Han, Y.; Xia, Z.; Song, S.; Huang, G. Agent attention: On the integration of softmax and linear attention. arXiv 2023, arXiv:2312.08874.

- Mao, A.; Mohri, M.; Zhong, Y. Cross-entropy loss functions: Theoretical analysis and applications. In Proceedings of the International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; pp. 23803–23828.

- El Moutaouakil, K.; Roudani, M.; Ouhmid, A.; Zhilenkov, A.; Mobayen, S. Decomposition and Symmetric Kernel Deep Neural Network Fuzzy Support Vector Machine. Symmetry 2024, 16, 1585.

- Lotfollahi, M.; Jafari Siavoshani, M.; Shirali Hossein Zade, R.; Saberian, M. Deep packet: A novel approach for encrypted traffic classification using deep learning. Soft Comput. 2020, 24, 1999–2012.

- Liu, C.; He, L.; Xiong, G.; Cao, Z.; Li, Z. Fs-net: A flow sequence network for encrypted traffic classification. In Proceedings of the IEEE INFOCOM 2019-IEEE Conference on Computer Communications, Paris, France, 29 April–2 May 2019; pp. 1171–1179.

- He, H.Y.; Yang, Z.G.; Chen, X.N. PERT: Payload encoding representation from transformer for encrypted traffic classification. In Proceedings of the 2020 ITU Kaleidoscope: Industry-Driven Digital Transformation, Hanoi, Vietnam, 7–11 December 2020; pp. 1–8.

- Lin, K.; Xu, X.; Gao, H. TSCRNN: A novel classification scheme of encrypted traffic based on flow spatiotemporal features for efficient management of IIoT. Comput. Netw. 2021, 190, 107974.

- Goyal, P.; Dollár, P.; Girshick, R. Accurate, large minibatch sgd: Training imagenet in 1 hour. arXiv 2017, arXiv:1706.02677.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).