SL: A Reference Smartness Level Scale for Smart Artifacts

Abstract

1. Introduction

2. Related Work

2.1. Artifacts Tagged as Smart Artifacts

2.2. Existing Works on Classifications Based on Smartness

3. Definition of Smart Artifact

3.1. “Smart Object”, “Smart Thing” and “Smart Artifact”

3.2. The Connectable Role

3.3. Our Definition of “Smart Artifact”

“A smart artifact is a traceable everyday artifact that is directly or indirectly digitally augmented and connected in order to improve its capabilities or expose new functions.”

3.4. The Role of Users’ Mobile Devices

4. Smartness Level of Device Capabilities

4.1. Problem Statement

4.2. The Model

5. Fitting Smart Artifacts

5.1. Research Work and Prototypes

5.2. Real-World Smart Artifacts

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AAL | Ambient Assisted Living |
| AI | Artificial Intelligence |
| AmI | Ambient Intelligence |
| API | Application Programming Interface |
| B2B | Business-to-Business |
| CO2 | Carbon Dioxide |
| ICT | Information and Communications Technology |
| ID | Identification |
| IoT | Internet of Things |
| IPSO | Internet Protocol for Smart Objects |
| LED | Light-Emitting Diode |
| RF | Radio Frequency |
| RFID | Radio Frequency Identification |
| SL | Smartness Level |
| VOC | Volatile Organic Compounds |

References

- Weiser, M. The Computer for the 21st Century. Sci. Am. 1991, 265, 94–104.

- Cassens, J.; Wegener, R. Ambient Explanations: Ambient Intelligence and Explainable AI. In Proceedings of the 15th European Conference, AmI 2019, Rome, Italy, 13–15 November 2019.

- Calvaresi, D.; Cesarini, D.; Sernani, P.; Marinoni, M.; Dragoni, A.; Sturm, A. Exploring the ambient assisted living domain: A systematic review. J. Ambient. Intell. Humaniz. Comput. 2017, 8, 239–257.

- Alter, S. Making Sense of Smartness in the Context of Smart Devices and Smart Systems. Inf. Syst. Front. 2020, 22, 381–393.

- Kindberg, T.; Barton, J. Towards a real-world wide web. In Proceedings of the 9th Workshop on ACM SIGOPS European Workshop: Beyond the PC: New Challenges for the Operating System, Kolding, Denmark, 17–20 September 2000; pp. 195–200.

- Pentland, A. Smart rooms, desks and clothes. In Proceedings of the 1997 IEEE International Conference on Acoustics, Speech, and Signal Processing, Munich, Germany, 21–24 April 1997; Volume 1, pp. 171–174.

- Holmquist, L.; Mattern, F.; Schiele, B.; Alahuhta, P.; Beigl, M.; Gellersen, H. Smart-Its Friends: A Technique for Users to Easily Establish Connections between Smart Artefacts. In Proceedings of the UbiComp’01: 3rd International Conference on Ubiquitous Computing, Atlanta, GA, USA, 30 September–2 October 2001; pp. 116–122.

- Helal, S. The Monkey, the Ant, and the Elephant: Addressing Safety in Smart Spaces. Computer 2020, 53, 73–76.

- Khan, T. A Solar-Powered IoT Connected Physical Mailbox Interfaced with Smart Devices. IoT 2020, 1, 128–144.

- Manpreet, S.K.; Manish, K.; Bobby, K.; Priyam, T.; Harpreet, K.C. Designing and Implementation of Smart Umbrella. Int. J. Sci. Res. Comput. Sci. Eng. Inf. Technol. 2019, 5, 13–17.

- Han, Y.; Lee, C.; Kim, Y.; Jeon, S.; Seo, D.; Jung, I. Smart umbrella for safety directions on Internet of Things. In Proceedings of the 2017 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 8–10 January 2017; pp. 84–85.

- Behr, C.J.; Kumar, A.; Hancke, G.P. A smart helmet for air quality and hazardous event detection for the mining industry. In Proceedings of the 2016 IEEE International Conference on Industrial Technology (ICIT), Taipei, Taiwan, 14–17 March 2016; pp. 2026–2031.

- Zhang, Z.; Zheng, H.; Rempel, S.; Hong, K.; Han, T.; Sakamoto, Y.; Irani, P. A smart utensil for detecting food pick-up gesture and amount while eating. In Proceedings of the AH ’20: 11th Augmented Human International Conference, Winnipeg, MB, Canada, 27–29 May 2020; pp. 1–8.

- Zhou, L.; Wang, A.; Zhang, Y.; Sun, S. A smart catering system base on Internet-of-things technique. In Proceedings of the 2015 IEEE 16th International Conference on Communication Technology (ICCT), Hangzhou, China, 18–20 October 2015; pp. 433–436.

- Park, M.; Song, Y.; Lee, J.; Paek, J. Design and Implementation of a smart chair system for IoT. In Proceedings of the 2016 International Conference on Information and Communication Technology Convergence (ICTC), Jeju, Korea, 19–21 October 2016; pp. 1200–1203.

- Liu, K.C.; Hsieh, C.Y.; Huang, H.Y.; Chiu, L.T.; Hsu, S.J.P.; Chan, C.T. Drinking Event Detection and Episode Identification Using 3D-Printed Smart Cup. IEEE Sens. J. 2020, 20, 13743–13751.

- Rijsdijk, S.; Hultink, E. How Today’s Consumers Perceive Tomorrow’s Smart Products. J. Prod. Innov. Manag. 2009, 26, 24–42.

- Cena, F.; Console, L.; Matassa, A.; Torre, I. Multi-dimensional intelligence in smart physical objects. Inf. Syst. Front. 2019, 21, 383–404.

- Pérez Hernández, M.E.; Reiff-Marganiec, S. Classifying Smart Objects using capabilities. In Proceedings of the 2014 International Conference on Smart Computing, Hong Kong, China, 3–5 November 2014; pp. 309–316.

- Püschel, L.; Röglinger, M.; Schlott, H. What’s in a Smart Thing? Development of a Multi-layer Taxonomy. In Proceedings of the ICIS, Dublin, Ireland, 11–14 December 2016.

- Langley, D.J.; van Doorn, J.; Ng, I.C.L.; Stieglitz, S.; Lazovik, A.; Boonstra, A. The Internet of Everything: Smart things and their impact on business models. J. Bus. Res. 2021, 122, 853–863.

- Kortuem, G.; Kawsar, F.; Sundramoorthy, V.; Fitton, D. Smart objects as building blocks for the Internet of things. IEEE Internet Comput. 2010, 14, 44–51.

- Siegemund, F.; Krauer, T. Integrating Handhelds into Environments of Cooperating Smart Everyday Objects. In Proceedings of the EUSAI, Eindhoven, The Netherlands, 8–10 November 2004.

- Ventura, D.; Monteleone, S.; Torre, G.L.; Delfa, G.C.L.; Catania, V. Smart EDIFICE—Smart EveryDay interoperating future devICEs. In Proceedings of the 2015 International Conference on Collaboration Technologies and Systems (CTS), Atlanta, GA, USA, 1–5 June 2015; pp. 19–26.

- Porter, M.E.; Heppelmann, J.E. How Smart, Connected Products Are Transforming Companies. Harv. Bus. Rev. 2015, 93, 53–71.

- Kaisler, S.H.; Money, W.H.; Cohen, S.J. Smart Objects: An Active Big Data Approach. In Proceedings of the HICSS, Hilton Waikoloa Village, HI, USA, 3–6 January 2018.

- OMA SpecWorks. Available online: https://omaspecworks.org (accessed on 14 May 2021).

- Samaniego, M.; Deters, R. Internet of Smart Things—IoST: Using Blockchain and CLIPS to Make Things Autonomous. In Proceedings of the 2017 IEEE International Conference on Cognitive Computing (ICCC), Honolulu, HI, USA, 25–30 June 2017; pp. 9–16.

- Moraes do Nascimento, N.; de Lucena, C.J.P. Engineering cooperative smart things based on embodied cognition. In Proceedings of the 2017 NASA/ESA Conference on Adaptive Hardware and Systems (AHS), Pasadena, CA, USA, 24–27 July 2017; pp. 109–116.

- Madakam, S. Internet of things: Smart things. Int. J. Future Comput. Commun. 2015, 4, 250–253.

- Caswell, D.; Debaty, P. Creating Web Representations for Places. In Proceedings of the Handheld and Ubiquitous Computing; Thomas, P., Gellersen, H.W., Eds.; Springer: Berlin/Heidelberg, Germany, 2000; pp. 114–126.

- Mattern, F.; Floerkemeier, C. Vom Internet der Computer zum Internet der Dinge. Informatik-Spektrum 2010, 33, 107–121.

- Kees, A.; Oberländer, A.M.; Röglinger, M.; Rosemann, M. Understanding the internet of things: A conceptualisation of business-to-thing (B2T) interactions. In Proceedings of the European Conference on Information Systems, Münster, Germany, 26–29 May 2015; Volume 23.

- SAE International. Levels of Driving Automation. Available online: https://www.sae.org (accessed on 14 May 2021).

- Bailey, K. Typologies and Taxonomies: An Introduction to Classification Techniques; Quantitative Applications in the Social Sciences 102; SAGE Publications: Thousand Oaks, CA, USA, 1994.

- Google Nest. Available online: https://www.nest.com (accessed on 22 October 2021).

- EvaDrop, World’s First Smart Shower. Available online: https://evadrop.com (accessed on 4 October 2021).

- Smart Temporal Thermometer. Available online: https://www.withings.com (accessed on 8 October 2021).

- Smart Indoor Air Monitor. Available online: https://uk.getawair.com (accessed on 8 October 2021).

- Breathometer. Available online: https://www.breathometer.com/mint (accessed on 8 October 2021).

- Your Gym and Personal Trainer to Go. Available online: https://en.straffr.com (accessed on 8 October 2021).

| Work/Characteristics | Approach | Method | Aim | Sample Output |

|---|---|---|---|---|

| [4] | Classification matrix | Four smart capabilities: information processing, internal regulation, action in the world and knowledge acquisition expanded to 23 dimensions. Although the authors defined five different levels of smartness (i.e., not smart at all, scripted execution, formulaic adaptation, creative adaptation and unscripted or partially scripted invention, the 23 dimensions are evaluated with two smart levels: somewhat smart and extremely smart. | Guiding making a device or system smarter. | A table for each category and related dimensions wherein each dimension is characterized in terms of somewhat smart and extremely smart. |

| [18] | Framework | Six capabilities: knowledge management, reasoning, learning what, learning how, human–object interaction/object–object interaction and social relations. For each smart physical object, each capability is assigned with a qualitative level of smartness. A descriptive conclusion is created according to the assigned levels of smartness. | Guiding designing and comparing different smart physical objects. | “Smart physical object with interaction capabilities (smart and innovative input modalities) with limited reasoning capabilities enabling context awareness and adaptive reminder”, quoted from [18]. |

| [19] | Classification model | Five levels of capabilities: Level 1 (essential), level 2 (networked), level 3 (enhanced), level 4 (aware) and level 5 (IoT complete). For each capability level, a set of capabilities is defined. The capability level is reached when a smart object implements the specified capabilities. | Helping to determine what a smart object is able to do by itself and what requirements can be covered externally by applications, services, platforms and other objects. | This projects defines capability levels and not smartness levels. |

| [20] | Multi-layer taxonomy | Ten capability dimensions (sensing, acting, direction, multiplicity, partner, thing compatibility, data source, data usage, offline functionality and main purpose); for each smart thing, a support percentage is assigned to each dimension. In the end, a hit ratio is calculated. | The authors presented calculated hit ratios for different smart things per thing and per dimension. | This project is not focused on smartness level. Instead, the multi-layer taxonomy is used to calculate hit ratios for individual smart things and for dimensions when considering multiple smart things. |

| [21] | Taxonomy | Matrix that relates four capabilities (reactive, adaptive, autonomous and cooperative) with three connectivity levels: closed system, open system with restricted protocol and open system with full interoperability. Twelve different unlabeled cells are defined (apparently defining 12 different smartness levels) to highlight the different implications of a smart thing for business models. | Describing the business model implications according to different level-of-smartness smart things. | “Business models based on delegating decision-making to smart things”, quoted from [21]. |

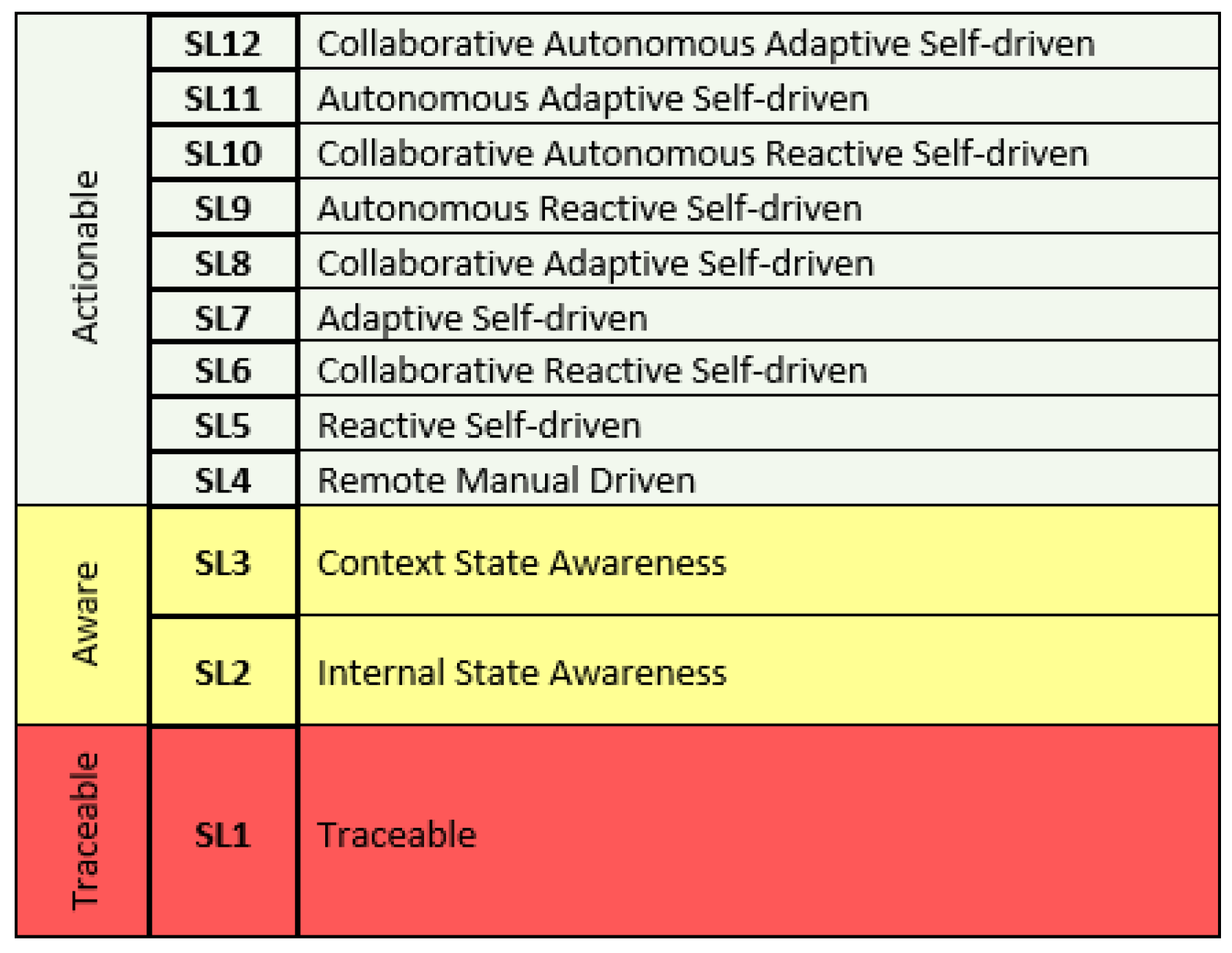

| Our proposal | Uni-dimensional typology | Twelve levels of smartness (SL1..SL12) associated with sets of capabilities: traceable, internal state awareness, context state awareness, remote manual driven, reactive self-driven, collaborative reactive self-driven, adaptive self-driven, collaborative adaptive self-driven, autonomous reactive self-driven, collaborative autonomous reactive self-driven, autonomous adaptive self-driven and collaborative autonomous adaptive self-driven. A math model based on capability sets and capability weights is provided to extract the smartness level of each smart artifact. | Assigning a smartness level and guiding smart artifact development in terms of the requirements to achieve a specific smartness level. | The smart chair has an SL3 smartness level. |
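The “Our proposal” row states that the smartness level is extracted from capability sets and capability weights, but the model itself is not reproduced here. The Python sketch below is only an illustration under a stated assumption: each capability's weight is taken to be the number of the level it defines, and an artifact's SL is the highest weight among its capabilities, provided it is traceable. The names `Capability` and `smartness_level` are hypothetical, and the chair's capability set is an assumed example chosen to match the SL3 sample output, not data from the cited prototype.

```python
from __future__ import annotations

from enum import IntEnum


class Capability(IntEnum):
    """The twelve SL capabilities. Assumption: each value doubles as the
    capability's weight and equals the level it defines."""

    TRACEABLE = 1
    INTERNAL_STATE_AWARENESS = 2
    CONTEXT_STATE_AWARENESS = 3
    REMOTE_MANUAL_DRIVEN = 4
    REACTIVE_SELF_DRIVEN = 5
    COLLABORATIVE_REACTIVE_SELF_DRIVEN = 6
    ADAPTIVE_SELF_DRIVEN = 7
    COLLABORATIVE_ADAPTIVE_SELF_DRIVEN = 8
    AUTONOMOUS_REACTIVE_SELF_DRIVEN = 9
    COLLABORATIVE_AUTONOMOUS_REACTIVE_SELF_DRIVEN = 10
    AUTONOMOUS_ADAPTIVE_SELF_DRIVEN = 11
    COLLABORATIVE_AUTONOMOUS_ADAPTIVE_SELF_DRIVEN = 12


def smartness_level(capabilities: set[Capability]) -> int:
    """Hypothetical max-weight rule, not the authors' published model.

    Returns 0 when traceability is missing, since every smart artifact must
    be at least traceable; otherwise returns the highest capability weight.
    """
    if Capability.TRACEABLE not in capabilities:
        return 0
    return int(max(capabilities))


# Assumed capability set for a smart chair rated SL3 in the table.
chair = {
    Capability.TRACEABLE,
    Capability.INTERNAL_STATE_AWARENESS,
    Capability.CONTEXT_STATE_AWARENESS,
}
print(smartness_level(chair))  # 3
```

With a max-weight rule, capabilities need not be acquired in order (an artifact could be remotely driven without reporting its context, for example); whether the published model imposes cumulative requirements is not stated in this section.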

| Smartness Level | Required Capability | Criteria |
|---|---|---|
| SL1 | Traceability | The smart artifact must include a unique identification, even if it relies on the surrounding infrastructure. Bar codes, QR codes, beaconing and RFID are examples of identification technologies that make artifacts traceable. Every smart artifact must have at least the traceability capability. |
| SL2 | Internal state awareness | The smart artifact is able to report its internal state, ranging from simple values such as battery level, temperature and vibration to more complex internal diagnosis reports. |
| SL3 | Context state awareness | The smart artifact is able to report on its surrounding context, in addition to its internal state. |
| SL4 | Remotely, manually driven | The smart artifact can be driven manually and remotely, either partially or totally. |
| SL5 | Reactively self-driven | The smart artifact is able to react by itself to its internal or external context, with respect to its main function, under user supervision. |
| SL6 | Collaboratively, reactively self-driven | The smart artifact is able to react by itself to its internal or external context and through collaboration with other smart artifacts, with respect to its main function, under user supervision. |
| SL7 | Adaptively self-driven | The smart artifact is able to react and adapt itself by learning from past data and events, with respect to its main function, under user supervision. |
| SL8 | Collaboratively, adaptively self-driven | The smart artifact is able to react and adapt itself by learning from past data and events and through collaboration with other smart artifacts, with respect to its main function, under user supervision. |
| SL9 | Autonomously, reactively self-driven | The smart artifact is able to react by itself to its internal or external context, with respect to its main function, without requiring user supervision. |
| SL10 | Collaboratively, autonomously and reactively self-driven | The smart artifact is able to react by itself to its internal or external context and through collaboration with other smart artifacts, with respect to its main function, without requiring user supervision. |
| SL11 | Autonomously, adaptively self-driven | The smart artifact is able to react and adapt itself by learning from past data and events, with respect to its main function, without requiring user supervision. |
| SL12 | Collaboratively, autonomously and adaptively self-driven | The smart artifact is able to react and adapt itself by learning from past data and events and through collaboration with other smart artifacts, with respect to its main function, without requiring user supervision. |
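The criteria for SL5 to SL12 vary along three yes/no dimensions: whether the artifact collaborates with other smart artifacts, whether it adapts by learning from past data and events (rather than only reacting), and whether it acts without user supervision. Under this reading, which is an interpretation of the table rather than something the criteria state explicitly, the level is 5 plus 1 for collaboration, 2 for adaptation and 4 for autonomy. The Python sketch below encodes that reading; `self_driven_level` and `requirements_for` are hypothetical helper names.

```python
from __future__ import annotations

from itertools import product


def self_driven_level(collaborative: bool, adaptive: bool, autonomous: bool) -> int:
    """Map the three self-driven dimensions to SL5-SL12 (assumed encoding)."""
    return 5 + int(collaborative) + 2 * int(adaptive) + 4 * int(autonomous)


def requirements_for(level: int) -> dict[str, bool]:
    """Invert the encoding: which dimensions a target level requires."""
    if not 5 <= level <= 12:
        raise ValueError("only SL5-SL12 are self-driven levels")
    offset = level - 5
    return {
        "collaborative": bool(offset & 1),
        "adaptive": bool(offset & 2),
        "autonomous": bool(offset & 4),
    }


# Enumerate all eight combinations; the levels come out in order 5..12.
for autonomous, adaptive, collaborative in product((False, True), repeat=3):
    level = self_driven_level(collaborative, adaptive, autonomous)
    print(
        f"SL{level}:",
        "autonomous" if autonomous else "supervised",
        "adaptive" if adaptive else "reactive",
        "collaborative" if collaborative else "standalone",
    )
```

For example, `requirements_for(10)` reports collaborative and autonomous but not adaptive, which matches the SL10 row (collaboratively, autonomously and reactively self-driven). An inverse of this kind is one way to express the stated aim of guiding development toward a target smartness level.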


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Costa, N.; Rodrigues, N.; Seco, M.A.; Pereira, A. SL: A Reference Smartness Level Scale for Smart Artifacts. Information 2022, 13, 371. https://doi.org/10.3390/info13080371


