Abstract

Underwater image enhancement (UIE) is a key technology for underwater robot navigation, marine resource development, and ecological environment monitoring. Because water absorbs and scatters light of different wavelengths to different degrees, raw underwater images are usually degraded. In recent years, UIE methods based on deep neural networks have made significant progress, but problems remain, such as insufficient recovery of local details and difficulty in effectively capturing multi-scale contextual information. To address these problems, this paper proposes a Multi-Scale Contextual Fusion Residual Network (MCFR-Net) for underwater image enhancement. First, we propose an Adaptive Feature Aggregation Enhancement (AFAE) module, which adaptively strengthens key regions of the input image and improves feature expressiveness by fusing multi-scale convolutional features with a self-attention mechanism. Second, we design a Residual Dual Attention Module (RDAM), which captures and strengthens features in key regions by applying self-attention twice with residual connections, while effectively retaining the original information. Third, we design a Multi-Scale Feature Fusion Decoding (MFFD) module that obtains rich context at multiple scales, improving the model’s understanding of both details and global features. Extensive experiments on four datasets show that MCFR-Net effectively improves the visual quality of underwater images and outperforms many existing methods on both full-reference and no-reference metrics. Compared with existing methods, MCFR-Net captures local details and global context more comprehensively and shows clear advantages in visual quality and generalization performance. It provides a new technical route and benchmark for subsequent research in underwater vision processing and is of both academic and practical value.

1. Introduction

Underwater imaging plays a crucial role in ocean exploration [1], environmental monitoring [2], autonomous underwater navigation [3], underwater archaeology [4], and other applications [5,6,7]. However, underwater imaging is affected by factors such as water turbidity and illumination changes, which cause reduced contrast, color distortion, and blurring [8,9,10]. Unlike in terrestrial environments, absorption and scattering in water severely degrade the visual quality of acquired images, which hinders high-level computer vision tasks such as object recognition and scene understanding. Therefore, developing high-performance UIE methods is crucial for these downstream tasks.

UIE methods can be divided into three types: physical model-based, non-physical model-based, and deep learning-based methods. Physical model-based methods construct mathematical models of light propagation in water to compensate for absorption and scattering, and achieve color correction by estimating the parameters of the imaging degradation. These methods are highly interpretable and can restore underwater image quality to a certain extent. However, they rely on precise environmental parameters, and the optical properties of complex waters are often difficult to obtain accurately, which limits their applicability. Non-physical model-based methods rely mainly on image processing techniques, such as pixel-level transformations, contrast enhancement, and color correction, to improve visual quality. They are computationally efficient, easy to implement, and produce visually clear results. However, because they do not model underwater optical degradation, they often fail to recover the true scene and may even introduce artifacts. In recent years, deep learning has significantly advanced UIE by automatically extracting multi-level features through its powerful representation-learning capabilities. However, existing methods still recover local details insufficiently and struggle to capture rich contextual information at different scales of underwater images, which limits the visual quality and detail of the enhanced images.

To address the image enhancement challenges posed by complex underwater scenes, we propose a Multi-Scale Contextual Fusion Residual Network (MCFR-Net). Built on the classical U-shaped encoder–decoder framework, MCFR-Net combines an Adaptive Feature Aggregation Enhancement (AFAE) module, a Residual Dual Attention Module (RDAM), and a Multi-Scale Feature Fusion Decoding (MFFD) module to fully extract and exploit multi-scale information and improve the visual quality of underwater images. Specifically, the AFAE module uses Kernel Fusion Self-Attention (KFSA) to adaptively strengthen the feature representation of key regions in the image and to keep local features consistent with the global context. The RDAM further enhances the representation of critical regions and suppresses noise and feature degradation by applying KFSA twice with residual connections. The MFFD module fuses multi-scale features through channel mapping and scale alignment, and then reconstructs multi-resolution detail features in parallel.

The main contributions of this paper are summarized as follows.

- We propose an Adaptive Feature Aggregation Enhancement (AFAE) module, which aggregates multi-scale convolutional and self-attention features through Kernel Fusion Self-Attention (KFSA), enhancing the local and global feature representation capabilities of MCFR-Net.

- We propose a Residual Dual Attention Module (RDAM), which enhances the feature representation of key regions and retains the original information by applying KFSA twice and residual connections, thereby improving the stability and robustness of MCFR-Net.

- We design a Multi-Scale Feature Fusion Decoding (MFFD) module to achieve channel mapping and scale alignment of multi-scale features, and fuse and reconstruct multi-resolution detail features.

2. Related Work

2.1. Traditional Methods

Early UIE methods mainly adjust image pixel values according to the characteristics of underwater images, such as color distortion and low contrast. Physical model-based UIE methods restore the color and contrast of underwater images with mathematical models that simulate light propagation, scattering, and absorption in water. Chiang et al. [11] proposed the Wavelength Compensation and Dehazing (WCID) algorithm, which uses the dark channel prior to estimate scene depth, removes the interference of artificial light sources, and compensates for light scattering and color attenuation simultaneously. Drews et al. [12] proposed the Underwater Dark Channel Prior (UDCP) method, which enhances the visual quality of underwater images and estimates accurate depth information by applying the dark channel prior to the green and blue channels. Li et al. [13] proposed a method based on blue–green channel dehazing and red channel correction, which removes haze in the blue and green channels and corrects the red channel to mitigate the effects of light absorption and scattering. These methods perform well in color correction and contrast enhancement, but they rely on specific physical models and prior assumptions, which makes them difficult to adapt to complex underwater environments and limits their generalization capability.

Non-physical model-based UIE methods mainly employ pixel-level adjustment, statistical transformation, and frequency-domain analysis. Through color correction, contrast enhancement, and multi-scale transformation, they improve the visibility and color fidelity of the image and mitigate the impact of the underwater environment on image quality. Priyadharsini et al. [14] proposed a contrast enhancement approach based on the Stationary Wavelet Transform (SWT), which combines Laplacian filtering with a masking technique to reduce the impact of blurred regions. Huang et al. [15] proposed a relative global histogram stretching method with adaptive parameter acquisition, which integrates contrast correction and color correction to adaptively optimize the histogram stretching process and improve the quality of images captured in shallow water. However, methods based on non-physical models ignore the propagation characteristics of light in water and are unstable under high turbidity or extreme illumination. In addition, these methods rely predominantly on empirical adjustments, which makes it difficult to restore true colors and details accurately and restricts their adaptability to complex environments.

2.2. Deep Learning Methods

Recently, the development of deep learning has significantly accelerated progress in UIE. UIE methods based on convolutional neural networks (CNNs) learn features automatically and generalize well enough to adapt to diverse environments. Wang et al. [16] proposed UIE-Net, which performs color correction and dehazing through end-to-end training and introduces a pixel perturbation strategy to suppress texture noise and improve image clarity. Li et al. [17] proposed Water-Net, which removes color casts and haze and improves the visual quality of real single underwater images. Sun et al. [18] proposed a CNN model based on an encoder–decoder structure that adapts well to complex underwater environments. Qi et al. [19] proposed SGUIE-Net, which integrates semantic attention and multi-scale perception; it uses high-level semantics to guide the enhancement process and improves the clarity and detail of underwater images, albeit at a relatively high computational cost. Li et al. [20] proposed the Ucolor network, which integrates multi-color-space embedding with a medium transmission-guided decoder to remove color casts and enhance low-contrast regions. Fu et al. [21] proposed SCNet, which applies spatial and channel normalization strategies on top of U-Net [22] to improve the generalization ability and robustness of the model. Transformer-based UIE methods can effectively capture global semantic correlations in images and show clear advantages in perceiving overall structure. Lei et al. [23] proposed a deep unfolding network that combines a color prior guidance module with a cross-stage feature transformer, tightly integrating physical model constraints with Transformer-based global modeling. Khan et al. [24] designed a multi-domain query cascaded attention mechanism that improves global modeling through the interaction of multi-scale features in the spatial and frequency domains. Hu et al. [25] proposed a dual attention fusion structure that combines Swin Transformer blocks and residual convolutions in parallel, achieving a joint representation of non-local and local features through adaptive channel attention. However, existing deep learning methods still fall short in local detail recovery, key region representation, and multi-scale context modeling, which limits enhancement quality in complex underwater environments.

3. Proposed Model

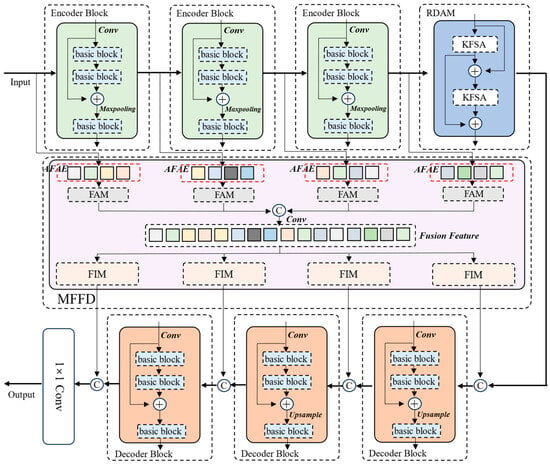

We propose MCFR-Net, an efficient underwater image enhancement network, as shown in Figure 1. MCFR-Net adopts the classic U-shaped structure and uses basic blocks in the encoding and decoding stages to achieve stable and efficient feature extraction and transformation. First, the input image passes through multiple encoder blocks, each consisting of a stack of basic blocks, to progressively extract features. The features extracted at each scale pass through the AFAE module, which uses KFSA to aggregate local and global features and thus effectively enhances the feature representation of key regions. Next, the RDAM further strengthens the representation of key regions through two KFSA operations with residual connections, retaining the original information and improving the stability and robustness of the features. After that, the MFFD module performs channel mapping and scale alignment of the enhanced features at each scale, then concatenates and fuses them along the channel dimension. The fused features pass through Feature Integration Modules (FIMs) that reconstruct multi-resolution details in parallel, and the result is restored to the original resolution through stepwise decoding and upsampling.

Figure 1.

The architecture of the proposed MCFR-Net.

3.1. Basic Block

The basic block is one of the core components of MCFR-Net; it efficiently extracts local features of underwater images and enhances their nonlinear expressiveness. The basic block consists of three parts: a convolution, instance normalization, and a PReLU activation function. First, the convolution extracts spatial features within a local receptive field while preserving the channel dimension, providing the basic feature representation for subsequent processing. Then, instance normalization standardizes each channel of each sample, which reduces fluctuations in the feature distribution caused by uneven underwater illumination and improves the robustness of the model across underwater conditions. Finally, PReLU applies a learnable slope to negative inputs, which preserves information in the negative-value region and further improves the nonlinear modeling ability of the network.
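The data flow of the basic block (convolution, then instance normalization, then PReLU) can be illustrated with a short NumPy forward pass. This is a minimal sketch, not the authors' implementation: the 3 × 3 kernel, "same" zero padding, the omission of learnable affine parameters in instance normalization, the example slope of 0.25, and all function names are assumptions made for illustration.

```python
import numpy as np


def conv2d_same(x, w, b):
    """Naive 'same'-padded 2D convolution (cross-correlation, as in DL frameworks).

    x: (C_in, H, W) feature map; w: (C_out, C_in, k, k) with odd k; b: (C_out,).
    """
    k = w.shape[-1]
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    h, wd = x.shape[1:]
    out = np.zeros((w.shape[0], h, wd))
    for i in range(k):
        for j in range(k):
            # Weighted sum over input channels for kernel offset (i, j).
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + h, j:j + wd])
    return out + b[:, None, None]


def instance_norm(x, eps=1e-5):
    """Normalize each channel of one sample over its spatial positions."""
    mean = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def prelu(x, a):
    """PReLU: identity for x >= 0, per-channel slope a for x < 0."""
    return np.where(x >= 0, x, a[:, None, None] * x)


def basic_block(x, w, b, a):
    """Hypothetical basic block: conv -> instance norm -> PReLU."""
    return prelu(instance_norm(conv2d_same(x, w, b)), a)


rng = np.random.default_rng(0)
x = rng.standard_normal((3, 16, 16))           # a 3-channel 16x16 input
w = 0.1 * rng.standard_normal((8, 3, 3, 3))    # 3 -> 8 channels, 3x3 kernel
y = basic_block(x, w, np.zeros(8), np.full(8, 0.25))
print(y.shape)  # (8, 16, 16)
```

Because instance normalization computes its statistics per sample and per channel, its output does not depend on the other images in a batch, which matches the motivation given above for handling uneven illumination image by image.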

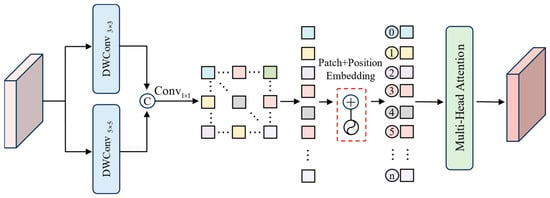

3.2. Adaptive Feature Aggregation Enhancement

Traditional feature extraction methods are often unable to balance the local details and overall structure simultaneously in complex underwater environments, which leads to the loss of key features or the introduction of artifacts. To solve this problem, we design an AFAE module, which integrates local multi-scale features and global contexts through KFSA modules to effectively improve the feature recovery ability of underwater images.

As shown in Figure 2, the AFAE module first maps the input feature maps to an intermediate feature space using a 1 × 1 convolution, batch normalization, and a SiLU activation function. It then adopts a multi-path feature fusion strategy consisting of an unprocessed residual branch that retains the original features and three enhancement branches processed by KFSA.

Figure 2.

The structure of the AFAE module.

As shown in Figure 3, KFSA first uses two depthwise convolutions with different kernel sizes to extract local spatial features from the input feature map in parallel:

Figure 3.

The structure of the KFSA module.

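$$F_1 = \mathrm{DWConv}_{k_1}(X), \qquad F_2 = \mathrm{DWConv}_{k_2}(X)$$

Here, $X$ is the input feature map and $\mathrm{DWConv}_{k}(\cdot)$ denotes a depthwise convolution with kernel size $k$, where $k_1 \neq k_2$.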

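Then, the two convolutional features $F_1$ and $F_2$ are concatenated along the channel dimension and passed through a 1 × 1 convolution for channel fusion, batch normalization, and a ReLU activation function, yielding the multi-scale fused features $F_f$:

$$F_f = \mathrm{ReLU}\Big(\mathrm{BN}\big(\mathrm{Conv}_{1\times 1}(\mathrm{Concat}(F_1, F_2))\big)\Big)$$

Here, $\mathrm{BN}(\cdot)$ denotes batch normalization and $\mathrm{Concat}(\cdot)$ denotes channel concatenation.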
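The multi-scale front end of KFSA can be sketched in NumPy to make the data flow concrete. This is a hypothetical illustration, not the authors' code: the 3 × 3 and 5 × 5 depthwise kernel sizes, training-mode batch normalization without learnable scale and shift, and every function name are assumptions, since the text does not specify them.

```python
import numpy as np


def depthwise_conv(x, w):
    """'Same'-padded depthwise convolution: one k x k kernel per channel.

    x: (B, C, H, W); w: (C, k, k) with odd k.
    """
    _, _, h, wd = x.shape
    k = w.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (0, 0), (p, p), (p, p)))
    out = np.zeros(x.shape)
    for i in range(k):
        for j in range(k):
            out += w[None, :, i, j, None, None] * xp[:, :, i:i + h, j:j + wd]
    return out


def conv1x1(x, w):
    """Pointwise convolution mixing channels; w: (C_out, C_in)."""
    return np.einsum("oc,bchw->bohw", w, x)


def batch_norm(x, eps=1e-5):
    """Training-mode batch norm without affine terms: per-channel stats over (B, H, W)."""
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def multi_scale_fusion(x, w_small, w_large, w_fuse):
    """Two depthwise branches -> concat -> 1x1 conv -> BN -> ReLU."""
    f1 = depthwise_conv(x, w_small)             # smaller receptive field
    f2 = depthwise_conv(x, w_large)             # larger receptive field
    cat = np.concatenate([f1, f2], axis=1)      # (B, 2C, H, W)
    return np.maximum(batch_norm(conv1x1(cat, w_fuse)), 0.0)


rng = np.random.default_rng(0)
x = rng.standard_normal((2, 4, 8, 8))           # batch of 2, 4 channels
ff = multi_scale_fusion(
    x,
    rng.standard_normal((4, 3, 3)),             # assumed 3x3 branch
    rng.standard_normal((4, 5, 5)),             # assumed 5x5 branch
    rng.standard_normal((6, 8)),                # 8 concatenated -> 6 channels
)
print(ff.shape)  # (2, 6, 8, 8)
```

Depthwise kernels keep the two branches cheap, since each channel is filtered independently; channel mixing is deferred to the single 1 × 1 convolution after concatenation.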

Subsequently, the fused feature map $F_f$ is mapped to the required dimension by an additional channel projection $\mathrm{Proj}(\cdot)$, and a fixed positional encoding $P$ is added to introduce spatial position information:

$$F_p = \mathrm{Proj}(F_f) + P$$

Here, $F_p$ is the feature map with positional encoding.

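Then, the position-encoded feature map $F_p$ is flattened into a sequence along the spatial dimensions, global context features are captured by standard multi-head self-attention (MHSA), and the result is reshaped back to the original feature-map size:

$$F_g = \mathrm{Reshape}\Big(\mathrm{MHSA}\big(\mathrm{Flatten}(F_p)\big)\Big), \qquad \mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d}}\right)V$$

where $Q$, $K$, and $V$ are linear projections of the flattened sequence and $d$ is the per-head dimension.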
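The projection, positional encoding, flattening, and attention steps can be sketched as follows. This is a hypothetical NumPy illustration: the 1-D sinusoidal encoding over flattened positions (from the original Transformer) stands in for the unspecified fixed positional encoding, and the four heads, random weights, and function names are arbitrary choices for illustration.

```python
import numpy as np


def sinusoidal_encoding(n_pos, dim):
    """Fixed 1-D sine/cosine position encoding (Vaswani et al.); dim must be even."""
    pos = np.arange(n_pos)[:, None]
    i = np.arange(dim // 2)[None, :]
    angle = pos / (10000 ** (2 * i / dim))
    pe = np.zeros((n_pos, dim))
    pe[:, 0::2] = np.sin(angle)
    pe[:, 1::2] = np.cos(angle)
    return pe


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)     # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_attention(seq, wq, wk, wv, wo, n_heads):
    """Scaled dot-product self-attention over a (N, D) token sequence."""
    n, d = seq.shape
    dh = d // n_heads

    def split(m):                                # (N, D) -> (heads, N, dh)
        return m.reshape(n, n_heads, dh).transpose(1, 0, 2)

    q, k, v = split(seq @ wq), split(seq @ wk), split(seq @ wv)
    attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(dh))    # (heads, N, N)
    out = (attn @ v).transpose(1, 0, 2).reshape(n, d)          # merge heads
    return out @ wo


def global_context(f_fused, w_proj, wq, wk, wv, wo, n_heads=4):
    """(C, H, W) fused features -> (D, H, W) global-context features."""
    _, h, w = f_fused.shape
    seq = np.einsum("dc,chw->hwd", w_proj, f_fused).reshape(h * w, -1)  # project, flatten
    seq = seq + sinusoidal_encoding(h * w, seq.shape[1])             # fixed positions
    out = multi_head_attention(seq, wq, wk, wv, wo, n_heads)
    return out.reshape(h, w, -1).transpose(2, 0, 1)                  # back to (D, H, W)


rng = np.random.default_rng(0)
C, D, H, W = 6, 8, 4, 5
ws = [0.1 * rng.standard_normal((D, D)) for _ in range(4)]          # Wq, Wk, Wv, Wo
fg = global_context(rng.standard_normal((C, H, W)), rng.standard_normal((D, C)), *ws)
print(fg.shape)  # (8, 4, 5)
```

Adding the encoding after flattening is equivalent to adding a position map before flattening, as long as the same row-major ordering is used to reshape the output back to (D, H, W).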

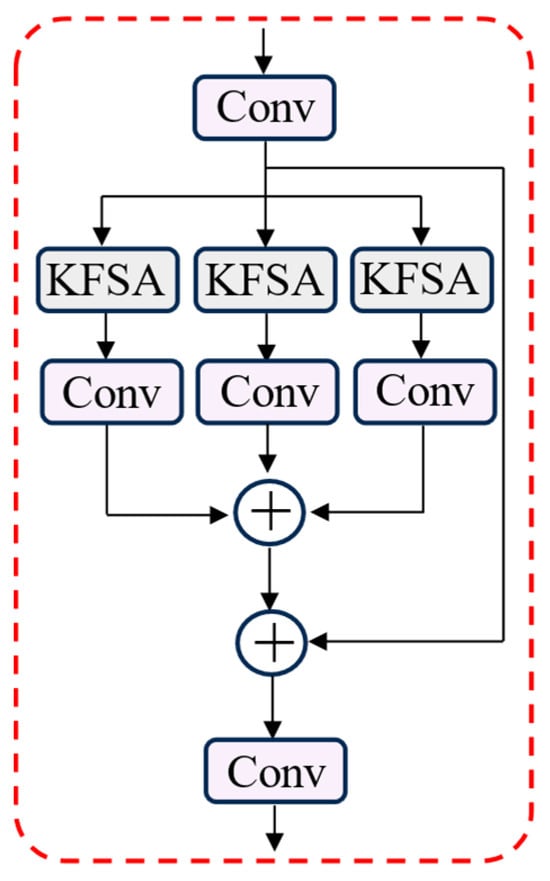

3.3. Residual Dual Attention Module

To improve the stability of underwater image enhancement and the ability to express key regions, we design the RDAM, which dynamically enhances the feature representation by applying KFSA twice and retains the original features through the residual connections.

Specifically, the input feature map $X$ first passes through KFSA to obtain the initial enhanced feature map $F_e$, which is then fused with $X$ through a residual connection to obtain the intermediate feature map $F_m$:

$$F_e = \mathrm{KFSA}(X), \qquad F_m = F_e + X$$

Next, KFSA is applied to $F_m$ again for secondary enhancement to further refine the representation of key regions, and the final output feature map $Y$ is obtained by fusing the result with the initial enhanced features:

$$Y = \mathrm{KFSA}(F_m) + F_e$$

This structure makes full use of KFSA’s ability to capture local and global context, suppressing interference from background regions while strengthening key regions. In addition, the successive residual connections prevent the feature drift and information loss that excessive adjustment could cause, so the network remains stable and robust while enhancing the visual quality of the image.
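The RDAM wiring can be sketched as below, assuming that both "fusion" steps are element-wise additions (as a residual connection suggests). Each KFSA pass is treated as an opaque callable because the text does not say whether the two passes share weights; the names `rdam`, `kfsa_a`, and `kfsa_b` are hypothetical.

```python
from typing import Callable

import numpy as np

Block = Callable[[np.ndarray], np.ndarray]


def rdam(x: np.ndarray, kfsa_a: Block, kfsa_b: Block) -> np.ndarray:
    """Residual dual attention wiring; kfsa_a / kfsa_b stand in for the two KFSA passes."""
    f_e = kfsa_a(x)            # first KFSA pass: initial enhanced features
    f_m = f_e + x              # residual connection to the input
    return kfsa_b(f_m) + f_e   # second pass, fused with the first-pass output


# Toy check with a linear stand-in for KFSA: f_e = 0.5x, f_m = 1.5x, y = 0.75x + 0.5x.
x = np.ones((2, 3, 3))
y = rdam(x, lambda t: 0.5 * t, lambda t: 0.5 * t)
print(y[0, 0, 0])  # 1.25
```

If both attention passes returned zeros, the output would also be zero, while the intermediate map would still equal the input; the input itself is preserved only in $F_m$, not in $Y$, so "retaining the original information" here relies on the first residual connection feeding the second pass.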

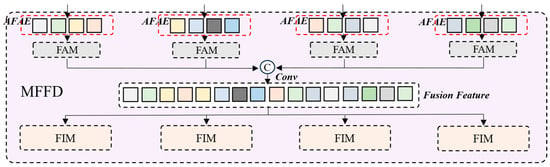

3.4. Multi-Scale Feature Fusion Decoding

In the encoder–decoder architecture, it is difficult to effectively fuse features at different levels. Specifically, high-level features are rich in semantic information but lack details. In contrast, low-level features contain fine textures but are susceptible to degradation. A simple skip connection method does not fully integrate multi-scale information, resulting in problems such as missing details or unbalanced information in different regions of the enhancement result.

We propose an efficient MFFD module, shown in Figure 4, which fuses the features of all encoder layers globally through multi-dimensional feature fusion. This module stabilizes the feature representation during decoding and helps the enhanced image retain a complete global structure and rich local details. The MFFD module first preprocesses the encoded features at different levels with AFAE modules to enhance their semantic expressiveness. Each enhanced feature is then fed into a Feature Alignment Module (FAM), as follows:

Figure 4.

The proposed MFFD module.

$$\hat{F}_i = \mathrm{Interp}\big(\mathrm{Conv}_{1\times 1}(F_i)\big), \qquad F_{cat} = \mathrm{Concat}(\hat{F}_1, \hat{F}_2, \ldots, \hat{F}_n)$$

Here, $F_i$ denotes the $i$-th feature map fed into the FAM, $\mathrm{Interp}(\cdot)$ denotes bilinear interpolation to a common spatial size, and $n$ is the number of encoder levels. The FAM first unifies the channel dimension with a 1 × 1 convolution and then aligns the spatial size with bilinear interpolation, which resolves the mismatch in size and channel dimension between multi-layer features and ensures that features from different levels can be fused smoothly. In this way, multi-scale information is fused before entering the decoder, so that the decoder can use richer global information during feature reconstruction, which improves the stability of decoding.
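The FAM alignment and the subsequent concatenation can be sketched in NumPy as follows. The encoder feature shapes, the common channel count of 8, the 32 × 32 target resolution, and the function names are illustrative assumptions; the text does not state which resolution the features are aligned to. The bilinear resize follows the half-pixel ("align corners = False") convention with border clamping.

```python
import numpy as np


def bilinear_resize(x, out_h, out_w):
    """Bilinear resize of a (C, H, W) map using half-pixel centres
    (the 'align_corners=False' convention) with clamping at the borders."""
    _, h, w = x.shape
    ys = np.clip((np.arange(out_h) + 0.5) * h / out_h - 0.5, 0, h - 1)
    xs = np.clip((np.arange(out_w) + 0.5) * w / out_w - 0.5, 0, w - 1)
    y0, x0 = np.floor(ys).astype(int), np.floor(xs).astype(int)
    y1, x1 = np.minimum(y0 + 1, h - 1), np.minimum(x0 + 1, w - 1)
    wy, wx = (ys - y0)[:, None], (xs - x0)[None, :]
    rows0, rows1 = x[:, y0], x[:, y1]                 # (C, out_h, W)
    top = rows0[:, :, x0] * (1 - wx) + rows0[:, :, x1] * wx
    bottom = rows1[:, :, x0] * (1 - wx) + rows1[:, :, x1] * wx
    return top * (1 - wy) + bottom * wy


def fam(f, w_1x1, out_hw):
    """Hypothetical FAM: 1x1 conv to a common channel count, then bilinear alignment."""
    f = np.einsum("oc,chw->ohw", w_1x1, f)
    return bilinear_resize(f, *out_hw)


def fuse_levels(features, weights, out_hw):
    """Align every encoder level and concatenate along channels (F_cat)."""
    return np.concatenate([fam(f, w, out_hw) for f, w in zip(features, weights)], axis=0)


rng = np.random.default_rng(0)
shapes = [(16, 32, 32), (32, 16, 16), (64, 8, 8)]           # assumed encoder outputs
feats = [rng.standard_normal(s) for s in shapes]
weights = [rng.standard_normal((8, s[0])) for s in shapes]  # each level -> 8 channels
f_cat = fuse_levels(feats, weights, (32, 32))
print(f_cat.shape)  # (24, 32, 32)
```

Applying the 1 × 1 convolution before resizing means the interpolation runs on the reduced channel count, which is cheaper when deep encoder levels have many channels.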

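After feature fusion, the concatenated features $F_{cat}$ pass through multiple Feature Integration Modules (FIMs) in parallel to extract detailed features at different scales. Each FIM adjusts the channel dimension with a 3 × 3 convolution, extracts structural information with two basic blocks, and resizes the features to its target resolution. The output of the $j$-th FIM is expressed as follows:

$$O_j = \mathrm{Resize}_j\Big(\mathrm{BB}\big(\mathrm{BB}(\mathrm{Conv}_{3\times 3}(F_{cat}))\big)\Big)$$

Here, $\mathrm{BB}(\cdot)$ denotes a basic block and $\mathrm{Resize}_j(\cdot)$ rescales the features to the resolution of the $j$-th decoder stage.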

Each FIM output is concatenated with the decoder features of the same resolution. This design effectively fuses global and local information, preserves the continuity of details as the decoder reconstructs the image layer by layer, improves the structural integrity and visual consistency of the enhanced image, and makes the enhancement more stable and natural under different water conditions.

3.5. Loss Function

To reduce pixel-level errors and enhance the structural consistency of the image, we use a linear combination of loss functions so that the enhanced underwater image is not only accurate at the pixel level but also maintains good perceptual quality and structural integrity. The total loss consists of an $L_1$ loss and an SSIM loss:

$$\mathcal{L}_{total} = \lambda_1 \mathcal{L}_{1} + \lambda_2 \mathcal{L}_{SSIM}$$

Here, $\lambda_1$ and $\lambda_2$ are weights that balance the pixel error and structural similarity.

The $L_1$ loss measures the pixel-level difference between the enhanced image and the target image:

$$\mathcal{L}_{1} = \frac{1}{N} \sum_{p=1}^{N} \big| \hat{I}(p) - I(p) \big|$$

Here, $\hat{I}$ and $I$ denote the enhanced image and the target image, respectively, and $N$ is the total number of pixels. The $L_1$ loss is more robust to outliers than the mean squared error, which prevents excessively large gradients and improves training stability.

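The SSIM loss measures the structural similarity between the enhanced image $\hat{I}$ and the target image $I$:

$$\mathrm{SSIM}(\hat{I}, I) = \frac{(2\mu_{\hat{I}}\mu_{I} + C_1)(2\sigma_{\hat{I}I} + C_2)}{(\mu_{\hat{I}}^2 + \mu_{I}^2 + C_1)(\sigma_{\hat{I}}^2 + \sigma_{I}^2 + C_2)}, \qquad \mathcal{L}_{SSIM} = 1 - \mathrm{SSIM}(\hat{I}, I)$$

Here, $\mu_{\hat{I}}$ and $\mu_{I}$ are the means of $\hat{I}$ and $I$, respectively, $\sigma_{\hat{I}}^2$ and $\sigma_{I}^2$ are their variances, $\sigma_{\hat{I}I}$ is their covariance, and $C_1$ and $C_2$ are small constants that stabilize the division. SSIM accounts for luminance, contrast, and structure, and is therefore more effective at improving the perceptual quality of the enhanced image than optimizing the pixel error alone.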

Combining the $L_1$ loss with the SSIM loss ensures that the optimization reproduces pixel details accurately while maintaining the overall structure of the image, yielding a more natural and stable enhancement across different underwater environments.
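The combined objective can be sketched in NumPy as below. This is an illustration under stated assumptions, not the training code: SSIM is computed from whole-image statistics instead of the usual local (for example, 11 × 11 Gaussian) windows, the constants $C_1 = (0.01 \cdot \text{data\_range})^2$ and $C_2 = (0.03 \cdot \text{data\_range})^2$ follow the common choice from the original SSIM paper, and $\lambda_1 = \lambda_2 = 1$ are placeholders because the text does not give the weights.

```python
import numpy as np


def l1_loss(pred, target):
    """Mean absolute error over all pixels (and channels)."""
    return float(np.mean(np.abs(pred - target)))


def ssim_global(x, y, data_range=1.0):
    """SSIM from whole-image statistics (a simplification of windowed SSIM)."""
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    return float(((2 * mx * my + c1) * (2 * cov + c2))
                 / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2)))


def total_loss(pred, target, lam1=1.0, lam2=1.0):
    """lam1 * L1 + lam2 * (1 - SSIM); the weights here are placeholders."""
    return lam1 * l1_loss(pred, target) + lam2 * (1.0 - ssim_global(pred, target))


rng = np.random.default_rng(0)
target = rng.random((3, 32, 32))                       # image in [0, 1]
noisy = np.clip(target + 0.1 * rng.standard_normal(target.shape), 0, 1)
print(total_loss(target, target))   # close to zero for identical images
print(total_loss(noisy, target))    # positive for a degraded prediction
```

In a real training loop the same expression would be written with an autodiff framework so that gradients flow through both terms; the NumPy version only shows how the two terms are weighted and combined.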

4. Experimental Setup and Results

In this section, we first give a detailed explanation of the training of MCFR-Net. Subsequently, we present the validation datasets. To comprehensively evaluate the performance of the method, we select ten underwater image enhancement algorithms, including four traditional methods and six deep learning methods, and conduct qualitative and quantitative evaluations, respectively. Finally, the actual contribution and effectiveness of each component are verified by multiple ablation experiments on MCFR-Net.

4.1. Implementation Details

To train MCFR-Net, we use the UIEB dataset together with synthetic degraded underwater images. The UIEB dataset consists of 950 real underwater images, of which 890 have corresponding reference images and the remaining 60 are challenging images without references. We randomly select 800 image pairs from UIEB for the training set. The synthetic images simulate 10 water types: open-ocean types (I, IA, IB, II, III) and coastal types (1, 3, 5, 7, 9). We randomly select 240 pairs from each type, for a total of 2400 pairs, so 3200 image pairs are used to train MCFR-Net in total. Experiments are run on an Intel (Santa Clara, CA, USA) Xeon E5-2680 v4 processor with 32 GB of DDR4 memory and an NVIDIA (Santa Clara, CA, USA) GeForce RTX 4090 GPU. For optimization, we use the Adam optimizer with a learning rate of 0.0002 and a batch size of 4.
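The training-set size stated above, and the size of the held-out R90 split described in Section 4.2, follow directly from these counts:

$$N_{\text{train}} = \underbrace{800}_{\text{UIEB pairs}} + \underbrace{10 \times 240}_{\text{synthetic pairs}} = 800 + 2400 = 3200$$

$$N_{\text{R90}} = \underbrace{890}_{\text{UIEB pairs with references}} - \underbrace{800}_{\text{used for training}} = 90$$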

4.2. Datasets

We comprehensively evaluate MCFR-Net on four datasets: R90 [17], C60 [17], U45 [26], and EUVP [27]. R90 consists of the 90 UIEB image pairs not used for training, and C60 consists of the 60 challenging underwater images in UIEB. U45 contains 15 raw underwater images for each of three typical degradation types: bluish, greenish, and hazy. EUVP includes underwater images in three categories (dark deep-sea scenes, marine life, and marine environments), covering diverse underwater scenes. We select 330 EUVP images as a validation subset.

4.3. Compared Methods

For a comprehensive and fair evaluation, we compare our model with ten representative methods: four traditional methods (UDCP [12], UIBLA [28], GDCP [29], and the white balance (WB) algorithm) and six deep learning-based methods (WaterNet [17], FUnIE [27], Ucolor [20], U-shape [30], AoSR [31], and DGD [32]). Each method is run using its publicly released code.

4.4. Evaluation Metrics

We use three full-reference and four no-reference metrics to evaluate MCFR-Net quantitatively. For the R90 dataset, which has reference images, we use the mean squared error (MSE), Peak Signal-to-Noise Ratio (PSNR), and Structural Similarity Index Measure (SSIM) as full-reference criteria. A lower MSE indicates a smaller pixel-level error between the output image and the reference image. A higher PSNR likewise indicates less pixel-level distortion relative to the reference, while a higher SSIM indicates that the two images are more similar in structure. For the C60, U45, and EUVP datasets, we use the no-reference metrics entropy, UCIQE [33], UIQM [34], and NIQE [35]. Entropy reflects the amount of information in the image; a higher value indicates richer details and textures. UCIQE evaluates visual quality as a linear combination of chroma, saturation, and contrast; a higher score indicates better perceived quality. UIQM combines colorfulness, sharpness, and contrast measures of underwater images; a higher value indicates better quality. NIQE requires no reference image and measures distortion through statistical features of natural scenes; a lower value indicates that the image is closer to natural-scene statistics.
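For reference, MSE, PSNR, and entropy can be computed as in the following NumPy sketch. It assumes 8-bit images (peak value 255) and, for entropy, a single grayscale channel; how color images were converted for the reported entropy scores is not stated, so that step is left to the caller. UCIQE, UIQM, and NIQE are omitted because their published definitions involve many more constants and model parameters.

```python
import numpy as np


def mse(x, y):
    """Mean squared error; cast to float first so uint8 subtraction cannot wrap."""
    return float(np.mean((x.astype(np.float64) - y.astype(np.float64)) ** 2))


def psnr(x, y, max_val=255.0):
    """PSNR in dB: 10 * log10(MAX^2 / MSE); infinite for identical images."""
    err = mse(x, y)
    return float("inf") if err == 0 else float(10.0 * np.log10(max_val ** 2 / err))


def entropy(gray):
    """Shannon entropy (bits) of the 256-bin histogram of an 8-bit grayscale image."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    p = hist[hist > 0] / hist.sum()
    return float(-(p * np.log2(p)).sum())


a = np.zeros((4, 4), dtype=np.uint8)
b = np.full((4, 4), 10, dtype=np.uint8)
print(mse(a, b), psnr(a, b))   # 100.0 and about 28.13 dB
half = np.array([[0, 255], [0, 255]], dtype=np.uint8)
print(entropy(half))  # 1.0
```

The entropy of an 8-bit image is bounded by 8 bits, reached only when all 256 gray levels are equally frequent, which gives a sense of scale for the entropy values reported in the tables.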

4.5. Comparisons on R90

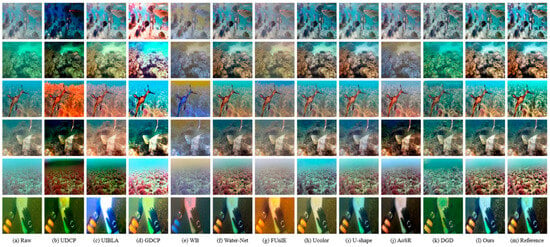

For the R90 dataset, as shown in Figure 5, the enhancement results of the methods differ significantly. Among the traditional methods, UDCP makes images noticeably darker. Although UIBLA alleviates the water-induced blur to some extent, it does not noticeably improve overall image quality. GDCP improves brightness but introduces obvious color distortion. WB produces a prominent, unnatural color cast, especially in the third-row image. Among the deep learning-based methods, WaterNet and U-shape perform better in color and detail recovery. FUnIE introduces an orange texture. Ucolor restores overall color more accurately but deviates in some details. AoSR and DGD have a limited enhancement effect: AoSR does not noticeably reduce detail blur, and DGD does not noticeably correct color distortion. In contrast, the images enhanced by MCFR-Net are closer to the reference images, especially in the first, second, and fifth rows, and their visual quality is clearly better than that of the other methods.

Figure 5.

Qualitative comparison of our MCFR-Net with other methods on the R90 dataset. From left to right: the original underwater image; the results of UDCP, UIBLA, GDCP, WB, WaterNet, FUnIE, Ucolor, U-shape, AoSR, and DGD; the result of the proposed MCFR-Net; and the reference image.

To further evaluate the proposed method, we use MSE, PSNR, and SSIM to measure the quality of the enhanced images. In Table 1, the best score is marked in red and the second best in blue. MCFR-Net achieves the best results on all metrics, indicating that it recovers images effectively and preserves image details and structure well. Although Ucolor and U-shape also achieve good PSNR and SSIM values, their MSE values are relatively high. In addition, methods such as UDCP and GDCP have large MSE values and perform poorly on PSNR and SSIM, indicating insufficient restoration accuracy and quality.

Table 1.

Quantitative comparison of our MCFR-Net and other methods on the R90 dataset.

4.6. Comparisons on C60

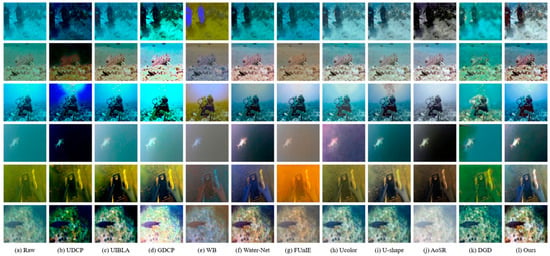

Figure 6 shows the results of different methods on the C60 dataset. Although UDCP alleviates image blur, it introduces dark tones. UIBLA and GDCP remove haze from underwater images, but they do not effectively improve overall contrast. WB shows a more obvious and unnatural color cast, as in the first-row images. WaterNet corrects color deviation, but its effect on brightness is limited. FUnIE and AoSR effectively alleviate color casts but introduce additional color distortion. Ucolor and U-shape restore color well, but bring little improvement to blurred details and low contrast. DGD brings limited improvement to overall image quality. Compared with these methods, MCFR-Net successfully corrects the color cast and significantly improves brightness and contrast, and its enhanced results look natural and clear.

Figure 6.

Qualitative comparison between our proposed MCFR-Net and other methods on the C60 dataset. From left to right: the original underwater image; the results of UDCP, UIBLA, GDCP, WB, WaterNet, FUnIE, Ucolor, U-shape, AoSR, and DGD; and the result of the proposed MCFR-Net.

To further evaluate the proposed method, we use the no-reference metrics entropy, UIQM, UCIQE, and NIQE to measure the quality of the enhanced images. As shown in Table 2, MCFR-Net ranks first among all compared methods in entropy and UIQM, and second in UCIQE. Its entropy of 7.46 is the highest, which indicates that our method effectively recovers detailed information in underwater images. Although MCFR-Net does not achieve the best UCIQE and NIQE scores, its results on these metrics remain competitive, showing that it recovers details and improves the quality and structure of underwater images while keeping them natural. Overall, the results show that our method effectively improves image quality and that the enhanced images contain more visual information.

Table 2.

Quantitative comparison of our MCFR-Net and other methods on the C60 dataset.

4.7. Comparisons on U45

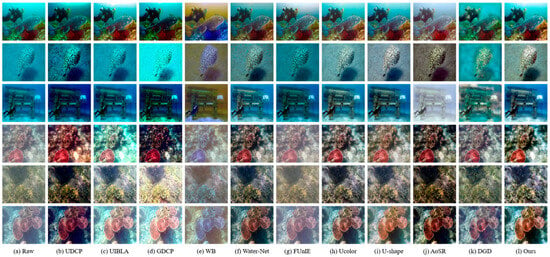

For the U45 dataset, as shown in Figure 7, the enhancement results of the methods differ significantly. UDCP, UIBLA, and GDCP fail to remove the blue tone effectively. WB introduces a yellow hue, creating a new color deviation. WaterNet, FUnIE, Ucolor, U-shape, and AoSR correct color to some extent but do not effectively reduce image blur. DGD does little to improve image color and contrast, and its visual quality is not significantly improved, as seen in the second- and third-row images. In contrast, MCFR-Net not only stably corrects the color deviation of underwater images but also significantly improves overall brightness and contrast. Its enhanced images contain more details, and their visual quality is better than that of the other compared methods.

Figure 7.

Qualitative comparison between our proposed MCFR-Net and other methods on the U45 dataset. From left to right: the original underwater image; the results of UDCP, UIBLA, GDCP, WB, WaterNet, FUnIE, Ucolor, U-shape, AoSR, and DGD; and the result of the proposed MCFR-Net.

We again use entropy, UIQM, UCIQE, and NIQE to measure the quality of the enhanced images. As shown in Table 3, MCFR-Net achieves the highest entropy, UIQM, and UCIQE scores and provides more image information than WaterNet and Ucolor, indicating a significant advantage in detail recovery. Although its NIQE score is not the best, it remains competitive, indicating that our method maintains good visual quality.

Table 3.

Quantitative comparison of our MCFR-Net and other methods on the U45 dataset.

4.8. Comparisons on EUVP

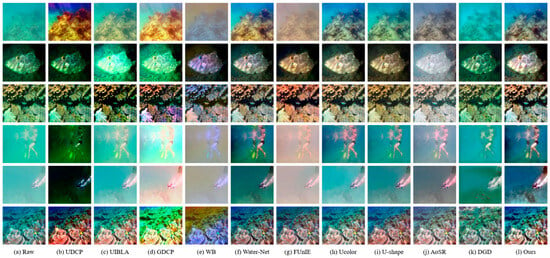

Figure 8 shows the results of different methods on the EUVP dataset. UDCP and WB fail to resolve the color deviation and introduce abnormal tones. Although UIBLA and GDCP enhance image contrast, they over-brighten the images. WaterNet, Ucolor, U-shape, and AoSR correct color deviation to a certain extent but cannot effectively reduce image blur. FUnIE dehazes poorly, most noticeably in the fourth and fifth rows. In addition, DGD performs poorly: its enhanced images remain blurred and show obvious color distortion. Compared with these methods, MCFR-Net reduces image blur more effectively, and its enhanced images have realistic colors and significantly improved contrast, as shown in the second-, fourth-, and fifth-row images.

Figure 8.

Qualitative comparison of our MCFR-Net with other methods on the EUVP dataset. From left to right: the original underwater image, the results of UDCP, UIBLA, GDCP, WB, WaterNet, FUnIE, Ucolor, U-shape, AoSR, and DGD, and the results of our proposed MCFR-Net.

Table 4 reports the no-reference metrics (entropy, UIQM, UCIQE, and NIQE) on the EUVP dataset. MCFR-Net achieves the best entropy, UIQM, and UCIQE scores, which indicates that it preserves image details and improves visual quality better than the compared methods. MCFR-Net also performs well on NIQE, indicating that it effectively improves the perceptual quality of the images. In summary, when handling underwater scenes, MCFR-Net improves image quality in several respects and better balances sharpness against overall visual quality.
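For reference, UCIQE and UIQM are weighted linear combinations of component measures, as defined by Yang and Sowmya and by Panetta et al., respectively. The coefficients below are those reported in the original papers; the component computations differ in detail between public implementations. Higher entropy, UIQM, and UCIQE indicate better quality, whereas a lower NIQE is better.

$$
\mathrm{UCIQE} = 0.4680\,\sigma_c + 0.2745\,\mathrm{con}_l + 0.2576\,\mu_s
$$

$$
\mathrm{UIQM} = 0.0282\,\mathrm{UICM} + 0.2953\,\mathrm{UISM} + 3.5753\,\mathrm{UIConM}
$$

Here $\sigma_c$ is the standard deviation of chroma, $\mathrm{con}_l$ the contrast of luminance, and $\mu_s$ the mean saturation, all computed in CIELab space; UICM, UISM, and UIConM measure colorfulness, sharpness, and contrast, respectively.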

Table 4.

Quantitative comparison of our MCFR-Net and other methods on the EUVP dataset.

4.9. Parameters and FLOPs

In this section, we evaluate the inference time, number of parameters, and FLOPs of all methods. U45 is used as the test dataset, and all experiments are performed on an Intel Xeon Silver 4110 CPU @ 2.10 GHz. The results are listed in Table 5. Although MCFR-Net requires more computation and a longer inference time than FUnIE, it achieves better visual restoration and better quantitative scores. Compared with Ucolor, MCFR-Net reduces computational and storage overhead while retaining its performance advantage.
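Parameter and FLOP counts of convolutional layers follow directly from the layer shapes, and CPU latency is usually measured as an average over repeated calls. The sketch below uses hypothetical helpers (`conv2d_cost`, `mean_latency`); it is not the profiling tool used for Table 5. It counts multiply-accumulate operations (MACs). Many papers report MACs under the name FLOPs, while others count one MAC as two FLOPs, so the convention should be checked before comparing numbers across papers.

```python
import time
from typing import Callable, Tuple

def conv2d_cost(c_in: int, c_out: int, k: int, h_out: int, w_out: int,
                bias: bool = True, groups: int = 1) -> Tuple[int, int]:
    """Return (parameters, MACs) for one k x k 2-D convolution layer."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    weights = k * k * (c_in // groups) * c_out
    params = weights + (c_out if bias else 0)
    # Every output position of every output channel needs k*k*(c_in/groups) MACs.
    macs = weights * h_out * w_out
    return params, macs

def mean_latency(model: Callable, x, warmup: int = 3, runs: int = 10) -> float:
    """Average wall-clock seconds per call; warm-up calls are excluded from timing."""
    for _ in range(warmup):
        model(x)
    start = time.perf_counter()
    for _ in range(runs):
        model(x)
    return (time.perf_counter() - start) / runs

if __name__ == "__main__":
    # Example: a 3 -> 64 channel 3x3 convolution producing a 256 x 256 output.
    params, macs = conv2d_cost(3, 64, 3, 256, 256)
    print(params, macs, 2 * macs)  # 1792 113246208 226492416
```

Summing `conv2d_cost` over all layers gives a network-level estimate; attention and normalization layers would need their own formulas.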

Table 5.

Comparison of FLOPs, parameters, and inference time on the U45 dataset.

4.10. Ablation Experiments

In this section, we conduct ablation experiments to demonstrate the effectiveness of each module of MCFR-Net. The specific experiments are as follows:

- Analysis of the effect of the Adaptive Feature Aggregation Enhancement module, denoted as w/o AFAE.

- Analysis of the effect of the Residual Dual Attention Module, denoted as w/o RDAM.

- Analysis of the effect of the Multi-Scale Feature Fusion Decoding module, denoted as w/o MFFD.

- Analysis of the baseline with all the above modules removed, denoted as Baseline.

Table 6 presents the quantitative results on the R90 dataset. The full MCFR-Net performs best on all metrics, which reflects the effectiveness of combining the individual modules. Removing AFAE increases the MSE and decreases the PSNR, while the SSIM remains unchanged. This is mainly because the AFAE module reduces pixel-level error through adaptive local feature enhancement: the PSNR is sensitive to pixel-level changes and therefore shifts more, whereas the SSIM focuses on structural information and is less affected. Removing the RDAM likewise increases the MSE and decreases the PSNR, with the SSIM unchanged. This indicates that the RDAM mainly improves image details and pixel accuracy by strengthening the feature expression of key regions and has relatively little impact on overall structure, so the PSNR changes more noticeably than the SSIM. Removing the MFFD module increases the MSE significantly and lowers the SSIM substantially, while the PSNR remains nearly stable. This indicates that the MFFD module captures and reconstructs rich contextual information through its multi-scale fusion strategy, which is important for the structural consistency and visual quality of the image, whereas the PSNR is less sensitive to structural information and so changes little. Finally, all metrics of the baseline model, with every module removed, degrade significantly, which further verifies the effectiveness and necessity of the proposed module combination for the UIE task.
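For a single image, PSNR is a deterministic function of MSE, PSNR = 10 log10(MAX² / MSE), so a markedly higher MSE with an almost unchanged PSNR may seem contradictory. One possible explanation, assuming both metrics are averaged per image over the test set (the averaging protocol is not stated in this section), is that the logarithm compresses large errors: an MSE increase concentrated in a few images raises the mean MSE far more than it lowers the mean PSNR. The per-image values below are invented toy numbers for illustration, not the values in Table 6.

```python
import numpy as np

def mse(pred: np.ndarray, ref: np.ndarray) -> float:
    """Mean squared error between two images of the same shape."""
    diff = pred.astype(np.float64) - ref.astype(np.float64)
    return float(np.mean(diff ** 2))

def psnr_from_mse(m: float, max_val: float = 255.0) -> float:
    """PSNR in dB; max_val = 255 for 8-bit images."""
    return float(10.0 * np.log10(max_val ** 2 / m))

# Toy per-image MSEs for two hypothetical model variants (illustrative only).
variant_a = [4.0, 4.0, 4.0, 4.0]    # uniform, moderate error on every image
variant_b = [1.0, 1.0, 1.0, 40.0]   # small errors except one badly restored image

for name, mses in (("A", variant_a), ("B", variant_b)):
    mean_mse = np.mean(mses)
    mean_psnr = np.mean([psnr_from_mse(m) for m in mses])
    print(f"{name}: mean MSE = {mean_mse:.2f}, mean PSNR = {mean_psnr:.2f} dB")
```

Here variant B has the higher mean MSE (10.75 vs. 4.00) yet also the higher mean PSNR (about 44.13 dB vs. 42.11 dB), showing that the two dataset-level averages need not move together.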

Table 6.

Image quality assessment of the ablation experiments on the R90 dataset.

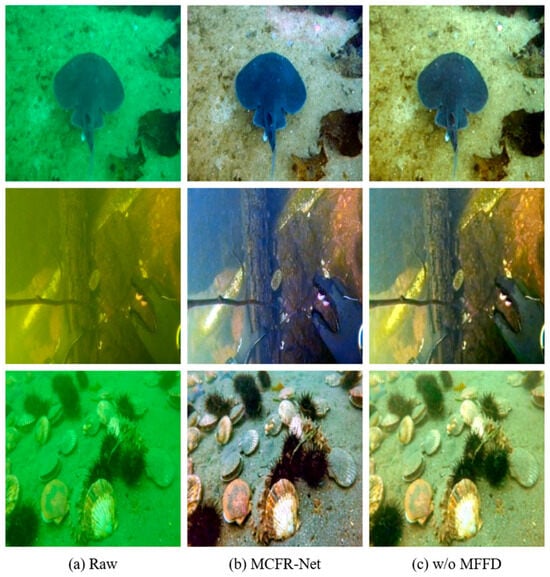

Figure 9 shows the ablation of the MFFD module. The first column shows the original images, the second column the MCFR-Net results, and the third column the results after removing the MFFD module. The original images exhibit obvious color distortion, low contrast, and missing details. Without the MFFD module, contrast improves, but the overall detail remains unsatisfactory. For example, the edge of the stingray in the first row is still blurred, the structural details of the underwater object in the second row are unclear, and the shells and sea urchins in the third row are blurred. In contrast, the complete MCFR-Net produces clearer edges and more realistic colors. This shows that the MFFD module effectively fuses multi-scale features and significantly improves the detail and overall visual quality of the image.

Figure 9.

Ablation experiments of the MFFD module. (a) Original underwater images. (b) Results of MCFR-Net. (c) Results without using the MFFD module.

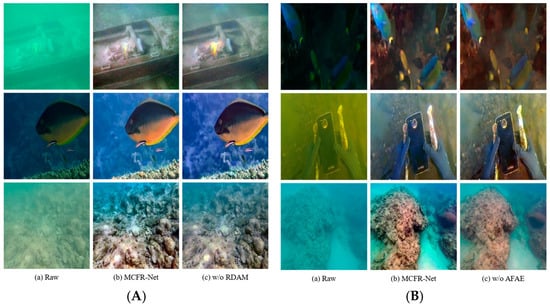

Figure 10 illustrates the ablation of the RDAM and the AFAE module. As shown in Figure 10A, after the RDAM is removed, key targets are less clearly distinguished from the background and their details are weakened. For example, the seabed rocks and corals in the third row of the third column lack detail and clarity. In contrast, the complete MCFR-Net produces clearer and more detailed images, indicating that the RDAM significantly improves the detail expression and color stability of key regions. As shown in Figure 10B, after the AFAE module is removed, the overall image quality still improves over the original samples, but detail clarity is inferior to that of the complete MCFR-Net. This confirms that the AFAE module plays a key role in enhancing local details.

Figure 10.

Ablation studies of the RDAM and the AFAE module. (A) Ablation study of the RDAM. (B) Ablation study of the AFAE module.

Based on the above qualitative analysis, the MFFD, RDAM, and AFAE modules each fulfill their role in MCFR-Net and jointly improve the overall visual quality and detail restoration of underwater images through multi-scale feature fusion, attention enhancement of key regions, and adaptive enhancement of local details, respectively.

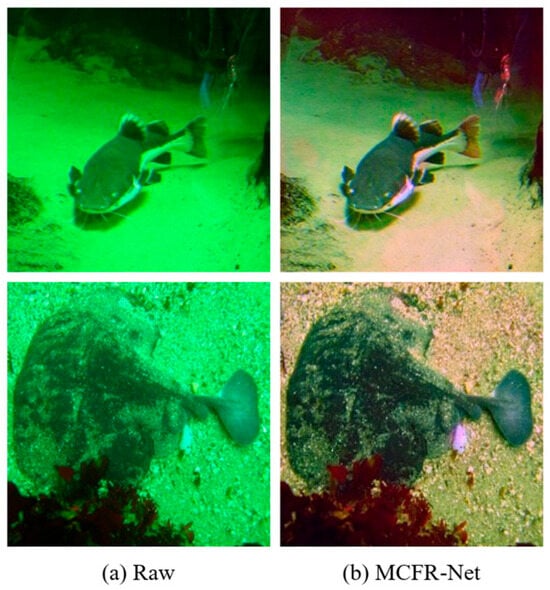

4.11. Failure Cases

MCFR-Net has limitations on some underwater images with severe color deviation, as shown in Figure 11. The first column shows the original underwater images and the second column the corresponding enhanced results. MCFR-Net introduces local color distortion during enhancement, such as a purple cast on the fish body in the first row of the second column. In future research, we will therefore introduce a color correction algorithm to improve restoration under severe color cast conditions.

Figure 11.

Failure cases. (a) Original underwater images. (b) Results of MCFR-Net.

5. Conclusions

To counter the degradation of underwater images caused by light absorption and scattering, this paper proposes MCFR-Net, which significantly improves the detail and visual quality of underwater images. The proposed method includes an Adaptive Feature Aggregation Enhancement (AFAE) module, a Residual Dual Attention Module (RDAM), and a Multi-Scale Feature Fusion Decoding (MFFD) module. The AFAE module aggregates multi-scale convolutional and self-attention features through KFSA, which strengthens the interaction between local details and global features and addresses the weakness of traditional methods in local detail recovery. The RDAM enhances the feature representation of key regions while retaining the original information by applying KFSA twice together with a residual connection, which improves the stability of the feature expression. In addition, the MFFD module captures rich context through scale alignment and multi-scale channel mapping, improving the network’s ability to understand and recover both details and overall structure. Extensive experiments on different datasets show that MCFR-Net outperforms existing traditional methods and deep learning models in both subjective visual quality and objective quantitative metrics, which gives it theoretical value and practical significance for underwater visual processing. In the future, we will further optimize the network structure to improve efficiency and expand practical application scenarios.
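The residual pattern described for the RDAM can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation: KFSA is not reproduced in this section, so plain single-head scaled dot-product self-attention stands in for it; the two attention passes are assumed to run in sequence; and an H × W × C feature map is assumed to be flattened into H·W tokens of C channels. The names `residual_dual_attention` and `random_weights` are hypothetical.

```python
import numpy as np

def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention; x has shape (tokens, channels).
    Stand-in for KFSA, whose exact form is not given here."""
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v

def residual_dual_attention(x, first, second):
    """Hypothetical RDAM-style block: two attention passes, then add the input back."""
    y = self_attention(x, *first)
    y = self_attention(y, *second)
    return x + y  # residual connection preserves the original features

def random_weights(rng, c):
    """Hypothetical (Wq, Wk, Wv) projections for illustration."""
    return tuple(rng.standard_normal((c, c)) * 0.1 for _ in range(3))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    h, w, c = 4, 4, 8
    feat = rng.standard_normal((h, w, c))
    tokens = feat.reshape(h * w, c)  # flatten H x W x C into (H*W, C) tokens
    out = residual_dual_attention(tokens, random_weights(rng, c), random_weights(rng, c))
    print(out.reshape(h, w, c).shape)
```

Because the input is added back after both attention passes, the block degrades gracefully: if the attention branch contributes nothing, the original features pass through unchanged.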

Author Contributions

Methodology, C.L.; software, C.L.; formal analysis, C.L.; resources, C.L. and Y.F.; data curation, C.L.; writing—original draft preparation, C.L.; writing—review and editing, C.L., L.H. and X.S.; funding acquisition, X.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Foundation of the National Natural Science Foundation of China under Grant 62276118.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zhang, W.; Zhuang, P.; Sun, H.H.; Li, G.; Kwong, S.; Li, C. Underwater image enhancement via minimal color loss and locally adaptive contrast enhancement. IEEE Trans. Image Process. 2022, 31, 3997–4010.

- Jiang, Z.; Li, Z.; Yang, S.; Fan, X.; Liu, R. Target oriented perceptual adversarial fusion network for underwater image enhancement. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 6584–6598.

- Zhuang, P.; Wu, J.; Porikli, F.; Li, C. Underwater image enhancement with hyper-laplacian reflectance priors. IEEE Trans. Image Process. 2022, 31, 5442–5455.

- Liu, C.; Shu, X.; Xu, D.; Shi, J. GCCF: A lightweight and scalable network for underwater image enhancement. Eng. Appl. Artif. Intell. 2024, 128, 107462.

- Hong, L.; Shu, X.; Wang, Q.; Ye, H.; Shi, J.; Liu, C. CCM-Net: Color compensation and coordinate attention guided underwater image enhancement with multi-scale feature aggregation. Opt. Lasers Eng. 2025, 184, 108590.

- Liu, Y.; Shao, Z.; Hoffmann, N. Global attention mechanism: Retain information to enhance channel-spatial interactions. arXiv 2021, arXiv:2112.05561.

- Liu, C.; Shu, X.; Pan, L.; Shi, J.; Han, B. Multiscale underwater image enhancement in RGB and HSV color spaces. IEEE Trans. Instrum. Meas. 2023, 72, 1–14.

- Jahidul Islam, M. Understanding Human Motion and Gestures for Underwater Human-Robot Collaboration. arXiv 2018, arXiv:1804.02479.

- Lu, H.; Li, Y.; Serikawa, S. Underwater image enhancement using guided trigonometric bilateral filter and fast automatic color correction. In Proceedings of the 2013 IEEE International Conference on Image Processing, Melbourne, VIC, Australia, 15–18 September 2013; pp. 3412–3416.

- Zhang, S.; Wang, T.; Dong, J.; Yu, H. Underwater image enhancement via extended multi-scale Retinex. Neurocomputing 2017, 245, 1–9.

- Chiang, J.Y.; Chen, Y.C. Underwater image enhancement by wavelength compensation and dehazing. IEEE Trans. Image Process. 2011, 21, 1756–1769.

- Drews, P.L.; Nascimento, E.R.; Botelho, S.S.; Campos, M.F.M. Underwater depth estimation and image restoration based on single images. IEEE Comput. Graph. Appl. 2016, 36, 24–35.

- Li, C.; Guo, J.; Guo, C.; Cong, R.; Gong, J. A hybrid method for underwater image correction. Pattern Recognit. Lett. 2017, 94, 62–67.

- Priyadharsini, R.; Sree Sharmila, T.; Rajendran, V. A wavelet transform based contrast enhancement method for underwater acoustic images. Multidimens. Syst. Signal Process. 2018, 29, 1845–1859.

- Huang, D.; Wang, Y.; Song, W.; Sequeira, J.; Mavromatis, S. Shallow-water image enhancement using relative global histogram stretching based on adaptive parameter acquisition. In MultiMedia Modeling: 24th International Conference, MMM 2018, Bangkok, Thailand, 5–7 February 2018, Proceedings, Part I 24; Springer International Publishing: Cham, Switzerland, 2018; pp. 453–465.

- Wang, Y.; Zhang, J.; Cao, Y.; Wang, Z. A deep CNN method for underwater image enhancement. In Proceedings of the 2017 IEEE International Conference on Image Processing, Beijing, China, 17–20 September 2017; pp. 1382–1386.

- Li, C.; Guo, C.; Ren, W.; Cong, R.; Hou, J.; Kwong, S.; Tao, D. An underwater image enhancement benchmark dataset and beyond. IEEE Trans. Image Process. 2019, 29, 4376–4389.

- Sun, X.; Liu, L.; Dong, J. Underwater image enhancement with encoding-decoding deep CNN networks. In Proceedings of the 2017 IEEE SmartWorld, Ubiquitous Intelligence & Computing, Advanced & Trusted Computed, Scalable Computing & Communications, Cloud & Big Data Computing, Internet of People and Smart City Innovation, San Francisco, CA, USA, 4–8 August 2017; pp. 1–6.

- Qi, Q.; Li, K.; Zheng, H.; Gao, X.; Hou, G.; Sun, K. SGUIE-Net: Semantic attention guided underwater image enhancement with multi-scale perception. IEEE Trans. Image Process. 2022, 31, 6816–6830.

- Li, C.; Anwar, S.; Hou, J.; Cong, R.; Guo, C.; Ren, W. Underwater image enhancement via medium transmission-guided multi-color space embedding. IEEE Trans. Image Process. 2021, 30, 4985–5000.

- Fu, Z.; Lin, X.; Wang, W.; Huang, Y.; Ding, X. Underwater image enhancement via learning water type desensitized representations. In Proceedings of the ICASSP 2022–2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 2764–2768.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Lei, Y.; Yu, J.; Dong, Y.; Gong, C.; Zhou, Z.; Pun, C.M. UIE-UnFold: Deep unfolding network with color priors and vision transformer for underwater image enhancement. In Proceedings of the 2024 IEEE 11th International Conference on Data Science and Advanced Analytics (DSAA), San Diego, CA, USA, 6–10 October 2024; pp. 1–10.

- Khan, R.; Mishra, P.; Mehta, N.; Phutke, S.S.; Vipparthi, S.K.; Nandi, S.; Murala, S. Spectroformer: Multi-domain query cascaded transformer network for underwater image enhancement. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2024; pp. 1454–1463.

- Hu, X.; Liu, J.; Li, H.; Liu, H.; Xue, X. An effective transformer based on dual attention fusion for underwater image enhancement. PeerJ Comput. Sci. 2024, 10, e1783.

- Li, H.; Li, J.; Wang, W. A fusion adversarial underwater image enhancement network with a public test dataset. arXiv 2019, arXiv:1906.06819.

- Islam, M.J.; Xia, Y.; Sattar, J. Fast underwater image enhancement for improved visual perception. IEEE Robot. Autom. Lett. 2020, 5, 3227–3234.

- Peng, Y.T.; Cosman, P.C. Underwater image restoration based on image blurriness and light absorption. IEEE Trans. Image Process. 2017, 26, 1579–1594.

- Peng, Y.T.; Cao, K.; Cosman, P.C. Generalization of the dark channel prior for single image restoration. IEEE Trans. Image Process. 2018, 27, 2856–2868.

- Peng, L.; Zhu, C.; Bian, L. U-shape transformer for underwater image enhancement. IEEE Trans. Image Process. 2023, 32, 3066–3079.

- Lu, Y.; Yang, D.; Gao, Y.; Liu, R.W.; Liu, J.; Guo, Y. AoSRNet: All-in-one scene recovery networks via multi-knowledge integration. Knowl.-Based Syst. 2024, 294, 111786.

- Gonzalez-Sabbagh, S.; Robles-Kelly, A.; Gao, S. DGD-cGAN: A dual generator for image dewatering and restoration. Pattern Recognit. 2024, 148, 110159.

- Yang, M.; Sowmya, A. An underwater color image quality evaluation metric. IEEE Trans. Image Process. 2015, 24, 6062–6071.

- Panetta, K.; Gao, C.; Agaian, S. Human-visual-system-inspired underwater image quality measures. IEEE J. Ocean. Eng. 2015, 41, 541–551.

- Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a “completely blind” image quality analyzer. IEEE Signal Process. Lett. 2012, 20, 209–212.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).