Abstract

Methods based on light field information have shown promising results in depth estimation and underwater image restoration. However, there is still room for improvement in depth estimation accuracy and image restoration quality. Previous work on underwater image restoration employed an image formation model (IFM) that ignored the distance dependence of the light attenuation coefficient and its difference from the scattering coefficient in underwater environments, leading to unavoidable color deviation and distortion in the restored images. In addition, the heavy blur and associated distortions in underwater images make depth extraction and estimation very challenging. In this paper, we refine the light propagation model and propose a method to estimate the attenuation and backscattering coefficients of the underwater IFM. We simplify these coefficients into distance-dependent functions and model the relationship between distance and the darkest channel to estimate the water coefficients, effectively suppressing color deviation and distortion in the restoration results. Furthermore, to increase depth estimation accuracy, we propose using blur cues to construct a refocusing cost in the depth direction, which reduces the impact of low signal-to-noise ratios on depth extraction and enhances the accuracy and robustness of depth estimation. Experimental comparisons show that our method achieves more accurate depth estimation and restorations closer to the real scenes than state-of-the-art methods.

1. Introduction

Underwater image restoration and depth estimation are crucial research areas with broad applications in underwater scene reconstruction, heritage conservation, and seabed surveying, among others. However, underwater optical imaging is often compromised by water quality and lighting conditions, which can cause color distortion and image blur. To address these challenges, numerous solutions have been explored, including polarization [1], light fields (LF), and stereoscopic imaging techniques [2]. Among these, LF imaging has attracted attention for underwater use because the micro-lens array of an LF camera captures both spatial and angular information in a single shot. This makes it possible to extract accurate depth information from a single camera position, which can substantially improve restoration quality in underwater environments.

A considerable amount of research has utilized light field data to enhance underwater image restoration [3,4,5,6]. These algorithms typically rely on the Image Formation Model (IFM) proposed by Narasimhan and Nayar [7], which assumes constant and identical attenuation and scattering coefficients. However, in real underwater environments, the attenuation coefficient varies with distance and differs from the scattering coefficient, leading to inaccuracies in the IFM that result in noticeable color biases in the restored images.

In underwater depth estimation, the clarity of target scenes is often compromised by backscatter from dense water bodies, resulting in blurred images. This renders ineffective most traditional refocusing algorithms that rely on correspondence cues, as well as dark channel prior methods for long-range depth estimation, especially in areas with strong backscatter noise. Recently, researchers such as Lu [5] and Huang [8] have proposed deep neural networks for estimating underwater image depth. However, like other deep learning approaches to underwater depth estimation, these methods suffer from a scarcity of adequate paired training data and consequently perform poorly in underwater environments for which no training pairs are available.

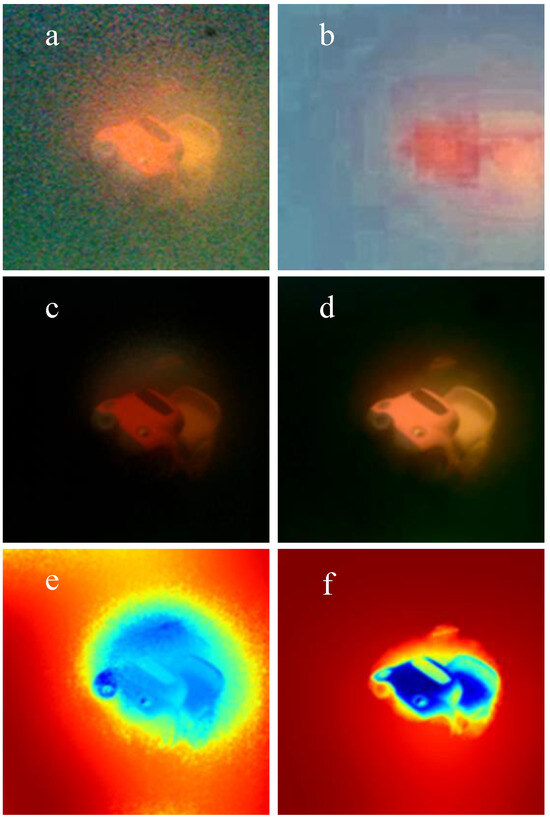

In this paper, we introduce a method for estimating the intrinsic parameters of an underwater image formation model and propose a blur-cue-based depth estimation method for underwater light field images. Inspired by [9], we use distance as a cue to estimate the inherent parameters of the water body; compared with [9], however, our method further reduces the number of parameters the model requires while maintaining accuracy. Furthermore, exploiting the positive correlation between underwater image depth and blurriness, we use blur cues as a cost component for light field refocusing, improving the accuracy of depth estimation in underwater scenes. As illustrated in Figure 1, our approach achieves higher depth estimation accuracy and better image restoration in underwater settings. The contributions of this paper are as follows:

Figure 1.

Comparison of depth estimation and image restoration. (a) Central view of an underwater light field image captured with a light field camera. (b) Restored image using Huang's deep learning-based method [8]. (c) Restored image using Tian's light field refocusing method [3]. (d) Restored image using our method. (e) Depth estimation by Tian [3]. (f) Depth estimation using our method.

- We introduce an underwater light propagation model for image restoration and develop a method to estimate the attenuation and backscatter parameters of the water body;

- We propose an underwater image depth estimation method that uses the relationship between blurriness and scene depth as a cue for estimating the depth of underwater light field images, thereby improving the accuracy of underwater scene depth estimation;

- Through extensive experiments in a water tank, we demonstrate that our method achieves higher depth estimation accuracy and better restoration than previous methods.

2. Related Work

The problem of underwater image degradation has been approached from various research angles, such as hardware-based and software-based restoration techniques. Image distortions caused by forward attenuation are generally addressed using software approaches, including the establishment of attenuation functions [10,11,12], image enhancement [13], or deep learning methods [8]. However, software solutions face limitations in handling information loss caused by scattering noise, and deep learning approaches struggle to acquire high-quality data pairs for training.

To mitigate backscatter noise, Schechner and Karpel [1] utilized the polarization properties of light in the medium, capturing images at different angles with polarizers to retrieve effective image information. Hitam et al. [14] explored range-gated imaging, using pulsed lasers and fast-gated cameras to precisely control the imaging process and reduce backscatter. Additionally, Roser and colleagues [2] employed stereoscopic imaging techniques to determine object distances by comparing the disparity between images captured from different angles. These methods have achieved notable results, but harsh underwater conditions can significantly reduce the imaging quality, thus impacting the performance of traditional sensors and environmental sensing accuracy.

In recent years, as light field cameras have entered the consumer market, light field imaging has attracted increasing attention. Unlike traditional cameras, light field cameras capture not only the intensity of light but also its angular information, which allows scene depth to be estimated from a single capture. Tao et al. [15] combined defocus cues from digital refocusing with correspondence cues to estimate scene depth. Georgiev [16] further applied light field imaging to super-resolution.

However, there has been limited research on using light field cameras for underwater image restoration. Tian [3] and Lu [4] were the first to apply light field methods to underwater image restoration, combining the dark channel prior (DCP) [17] with digital refocusing cues to estimate depth maps and restore images; their methods showed better robustness and restoration than monocular strategies. Skinner [18] applied 2D dehazing methods to each sub-aperture image and processed the results with guided image filtering to obtain 4D dehazed underwater light field images. Feng [19] built an underwater imaging system based on Mie scattering theory for underwater 3D reconstruction and image restoration. Lu [20] introduced deep convolutional neural fields into image restoration to address the desaturation problem in light field images. To tackle the difficulty of obtaining underwater image data, Ye [21] proposed a deep learning-based method to transform images taken in air into underwater images. However, owing to limited training data, the effectiveness of deep learning methods remains unsatisfactory. Although these methods have achieved good results, they neglect the influence of the image formation model parameters during restoration. Moreover, the accuracy of light field depth estimation still needs improvement because of the noise caused by backscattering.

Despite the superior performance of light field cameras compared to traditional cameras, there remain significant limitations. In this paper, we employ blur cues from light field refocusing combined with traditional priors to achieve more accurate depth maps. We then introduce a refined underwater optical imaging model and estimate more precise parameters for light attenuation and scattering to improve the quality of underwater image restoration.

The remainder of this paper is organized as follows. Section 3 describes the proposed method, Section 4 presents the experimental setup and extensive performance comparisons, and Section 5 provides discussion and conclusions. Within Section 3, Section 3.1 describes the underwater image formation model and its parameters; Section 3.2 explains how the scattering and attenuation coefficients in the model are estimated; Section 3.3 presents our underwater light field depth estimation method; and Section 3.4 describes the refocusing-based restoration of light field images.

3. Method

3.1. Underwater Image Formation Model

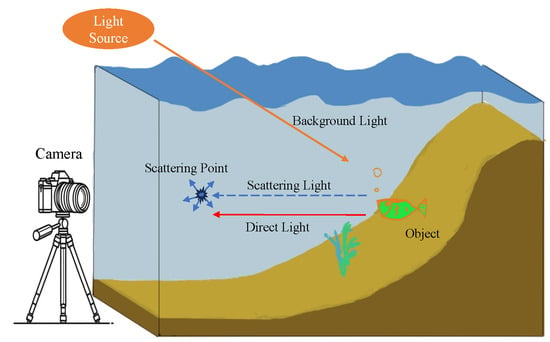

Figure 2 depicts the underwater image formation model, in which the camera captures underwater image information comprising attenuated direct signals and scattered signals from the water body. The imaging model is represented as follows:

$$I_c = D_c + B_c \tag{1}$$

where $c \in \{R, G, B\}$ denotes the color channel, $I_c$ is the distorted image captured by the camera, $D_c$ is the direct signal, i.e., the target information attenuated during propagation through the water, and $B_c$ is the backscatter, i.e., the signal from light scattered by particles suspended in the water, which degrades and blurs the image.

Figure 2.

Schematic diagram of underwater imaging and light propagation in scattering media.

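The extended form of Equation (1) is given as follows [9]:

$$I_c = J_c\, e^{-\beta_c^{D} z} + B_c^{\infty}\left(1 - e^{-\beta_c^{B} z}\right) \tag{2}$$

where $z$ denotes the distance from the camera to the target, $B_c^{\infty}$ represents the background (veiling) light contributed by the external light source, $J_c$ represents the undegraded scene image, $\beta_c^{D}$ is the beam attenuation coefficient, and $\beta_c^{B}$ is the backscattering coefficient of the water body.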
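To make Equation (2) concrete, the sketch below synthesizes a degraded image from a clean one and then inverts the model when every coefficient and the distance map are known. It is a minimal illustration, assuming per-channel coefficients that are constant over the image (unlike the distance-dependent $\beta_c^D$ discussed below); the function names and all numeric values are hypothetical.

```python
import numpy as np


def degrade(J, z, beta_D, beta_B, B_inf):
    """Apply Eq. (2): I_c = J_c exp(-beta_D z) + B_inf (1 - exp(-beta_B z)).

    J      : (H, W, 3) undegraded scene, values in [0, 1]
    z      : (H, W) camera-to-target distance in metres
    beta_D : (3,) attenuation coefficients per channel (1/m)
    beta_B : (3,) backscattering coefficients per channel (1/m)
    B_inf  : (3,) background (veiling) light per channel
    Returns the captured image I and its direct and backscatter parts.
    """
    zc = z[..., None]                                    # broadcast over channels
    direct = J * np.exp(-beta_D * zc)                    # D_c
    backscatter = B_inf * (1.0 - np.exp(-beta_B * zc))   # B_c
    return direct + backscatter, direct, backscatter


def restore(I, z, beta_D, beta_B, B_inf):
    """Invert Eq. (2) when the distance map and all coefficients are known."""
    zc = z[..., None]
    direct = I - B_inf * (1.0 - np.exp(-beta_B * zc))    # subtract B_c
    return direct * np.exp(beta_D * zc)                  # undo attenuation


rng = np.random.default_rng(0)
J = rng.random((4, 5, 3))
z = rng.uniform(0.2, 1.0, size=(4, 5))
beta_D = np.array([0.6, 0.3, 0.2])    # hypothetical: red attenuates fastest
beta_B = np.array([0.5, 0.4, 0.3])    # hypothetical
B_inf = np.array([0.10, 0.30, 0.40])  # hypothetical blue-green veiling light
I, D, B = degrade(J, z, beta_D, beta_B, B_inf)
J_hat = restore(I, z, beta_D, beta_B, B_inf)
```

In practice none of these coefficients is known in advance, which is the problem the coefficient estimation in Section 3.2 addresses.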

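The attenuation coefficient $\beta_c^{D}$ in the attenuation term depends on the wavelength $\lambda$, the depth $d$, and the distance $z$. Similarly, the backscattering coefficient $\beta_c^{B}$ depends on the wavelength and the background light $B^{\infty}$. These coefficients can be expressed as follows [22]:

$$
\begin{aligned}
\beta_c^{D} &= \ln\left[\frac{\int_{\lambda_1}^{\lambda_2} S_c(\lambda)\,\rho(\lambda)\,E(d,\lambda)\,e^{-\beta(\lambda) z}\,d\lambda}{\int_{\lambda_1}^{\lambda_2} S_c(\lambda)\,\rho(\lambda)\,E(d,\lambda)\,e^{-\beta(\lambda)(z+\Delta z)}\,d\lambda}\right] \bigg/ \Delta z \\
\beta_c^{B} &= -\ln\left[1 - \frac{\int_{\lambda_1}^{\lambda_2} S_c(\lambda)\,B^{\infty}(\lambda)\left(1 - e^{-\beta(\lambda) z}\right)d\lambda}{\int_{\lambda_1}^{\lambda_2} S_c(\lambda)\,B^{\infty}(\lambda)\,d\lambda}\right] \bigg/ z
\end{aligned}
\tag{3}
$$

where $\rho(\lambda)$ represents the reflectance of the object's surface, $E(d,\lambda)$ is the intensity of the incident light at depth $d$, $S_c(\lambda)$ is the spectral response of the camera, $\beta(\lambda)$ is the physical attenuation coefficient, $\Delta z$ is a small increment in distance, and $\lambda_1$ and $\lambda_2$ bound the visible wavelength range (400 to 700 nm).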

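The background light $B_c^{\infty}$ is the light received from a point at infinite distance ($z \to \infty$), given by the following:

$$B_c^{\infty} = \int_{\lambda_1}^{\lambda_2} S_c(\lambda)\,B^{\infty}(\lambda)\,d\lambda, \qquad B^{\infty}(\lambda) = \frac{b(\lambda)\,E(d,\lambda)}{\beta(\lambda)}$$

where $b(\lambda)$ is the scattering coefficient of the water.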
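A short numerical check shows why $\beta_c^D$ cannot be treated as a constant. Evaluating the first expression of Equation (3) with synthetic spectra gives an effective coefficient that falls as $z$ grows, because the wavelengths that survive longer paths are those with smaller $\beta(\lambda)$. All spectra below are hypothetical placeholders, not measured data, and the integrals use a plain trapezoidal rule.

```python
import numpy as np

lam = np.linspace(400.0, 700.0, 301)  # visible band [lambda_1, lambda_2] in nm


def trapezoid(f, x):
    """Trapezoidal integral of samples f over the grid x."""
    return float(np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(x)))


# Hypothetical spectra, for illustration only
S_c = np.exp(-0.5 * ((lam - 600.0) / 30.0) ** 2)  # red-channel camera response
rho = np.full_like(lam, 0.5)                       # flat grey reflectance
E = np.ones_like(lam)                              # flat illumination at depth d
beta = 0.05 + 0.6 * (lam - 400.0) / 300.0          # attenuation grows toward red (1/m)


def beta_D(z, dz=1e-3):
    """Wideband attenuation coefficient from the first line of Eq. (3)."""
    w = S_c * rho * E
    num = trapezoid(w * np.exp(-beta * z), lam)
    den = trapezoid(w * np.exp(-beta * (z + dz)), lam)
    return np.log(num / den) / dz


for dist in (0.1, 0.5, 1.0, 2.0):
    print(f"z = {dist:.1f} m  beta_D = {beta_D(dist):.4f} 1/m")
```

The effective coefficient is a weighted average of $\beta(\lambda)$ whose weights shift toward weakly attenuated wavelengths with distance, so it always lies between the smallest and largest $\beta(\lambda)$ and decreases with $z$.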

3.2. Coefficients Estimation

According to Equation (2), to restore underwater images effectively, it is essential to determine or estimate the parameters associated with light attenuation and scattering during propagation. As Equation (3) shows, $\beta_c^{D}$ and $\beta_c^{B}$ depend on the spectral quantities appearing in their respective expressions. The physical attenuation coefficient $\beta(\lambda)$ and the other optical properties of the water (determined by the optical water type), the depth $d$ at which photos are taken, the reflectance $\rho$ of each target in the scene, and the camera response $S_c$ can be considered constant once an image has been captured, but they are seldom known precisely at capture time. $\beta_c^{D}$ is strongly influenced by the distance $z$, whereas $\beta_c^{B}$ is highly correlated with the type of water body and the illumination. Below we detail the methods for estimating these coefficients.

3.2.1. $B_c^{\infty}$ and $\beta_c^{B}$ Estimation

To estimate the backscattering parameters, it is crucial to isolate other influencing factors. Our approach is inspired by He's dark channel prior (DCP) [23], in which the dark channel (the minimum of the RGB values in a local patch) of a hazy image indicates the relative distance between the camera and the target, and its maximum values indicate the background-light region. Unlike DCP, however, we treat the target distance range as known rather than estimating it.

The backscatter term $B_c$ depends on the distance $z$ and the reflectance $\rho$, and the captured image $I_c$ tends toward $B_c$ as the distance increases or the target reflectance decreases. Therefore, where the reflectance $\rho \to 0$ or the illumination $E \to 0$ (in shadow regions), the direct signal vanishes and the captured pixel intensity $I_c$ tends toward the backscatter intensity $B_c$. As in the DCP method, we search for the lowest 1% of RGB triplets in the image, denoted $\Omega$, where $\rho \to 0$ or $E \to 0$, so that $\hat{B}_c(\Omega) \approx I_c(\Omega)$. At the same time, to obtain a more accurate scattering parameter as a function of distance, we take the target distance as a known parameter. The backscatter in region $\Omega$ can then be expressed as follows:

$$\hat{B}_c(\Omega) = B_c^{\infty}\left(1 - e^{-\beta_c^{B} z}\right) + J_c'\, e^{-\beta_c^{D'} z}$$

where $z$ is the known distance and $J_c' e^{-\beta_c^{D'} z}$ is a small residual term, retained because of the dense scattering in water. Using nonlinear least squares, we then estimate the parameters $B_c^{\infty}$, $\beta_c^{B}$, $J_c'$, and $\beta_c^{D'}$. Since the forward (direct) signal in $\Omega$ is minimal, $\beta_c^{D'}$ can be temporarily treated as a constant.
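The dark-pixel selection and nonlinear least-squares fit described above can be sketched with `scipy.optimize.curve_fit`. This is a hedged illustration rather than the authors' code: binning the pixels by distance before taking the darkest 1%, ranking triplets by R+G+B, the initial guess, and the parameter bounds are all our assumptions, chosen to keep the fit well behaved on images scaled to [0, 1].

```python
import numpy as np
from scipy.optimize import curve_fit


def backscatter_model(z, B_inf, beta_B, J_res, beta_D_res):
    """B_inf (1 - e^{-beta_B z}) + J'_c e^{-beta_D' z} for the dark-pixel set."""
    return B_inf * (1.0 - np.exp(-beta_B * z)) + J_res * np.exp(-beta_D_res * z)


def select_dark_pixels(I, z, n_bins=10, fraction=0.01):
    """Darkest `fraction` of RGB triplets (ranked by R+G+B) in each distance bin.

    Binning by distance is an assumption; it keeps the samples spread over
    the whole distance range so that the fit is constrained everywhere.
    """
    z_flat, rgb = z.ravel(), I.reshape(-1, 3)
    edges = np.linspace(z_flat.min(), z_flat.max(), n_bins + 1)
    bins = np.clip(np.digitize(z_flat, edges) - 1, 0, n_bins - 1)
    keep = []
    for b in range(n_bins):
        members = np.flatnonzero(bins == b)
        if members.size == 0:
            continue
        k = max(1, int(fraction * members.size))
        keep.append(members[np.argsort(rgb[members].sum(axis=1))[:k]])
    keep = np.concatenate(keep)
    return z_flat[keep], rgb[keep]


def fit_backscatter(z_dark, I_dark_c):
    """Estimate (B_inf, beta_B, J'_c, beta_D') for one color channel."""
    p0 = [I_dark_c.max(), 1.0, 0.01, 1.0]                 # assumed initial guess
    bounds = ([0.0, 0.0, 0.0, 0.0], [1.0, 5.0, 1.0, 5.0])  # assumed bounds
    params, _ = curve_fit(backscatter_model, z_dark, I_dark_c, p0=p0, bounds=bounds)
    return params


# Usage on synthetic samples generated from hypothetical parameters:
z_s = np.linspace(0.3, 2.0, 200)
samples = backscatter_model(z_s, 0.4, 1.2, 0.02, 0.8)
print(fit_backscatter(z_s, samples))
```

On real data one would call `select_dark_pixels` on the captured image and known distance map, then fit each channel of the returned triplets separately.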

3.2.2. $\beta_c^{D}$ Estimation

According to [9], $\beta_c^{D}$ is strongly related to the distance $z$, and its dependence on range $z$ is described by a dual-exponential form:

$$\beta_c^{D}(z) = a\, e^{b z} + c\, e^{d z}$$

where $a$, $b$, $c$, and $d$ are constants.
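Fitting the dual-exponential form to discrete per-distance estimates of $\beta_c^D$ is again a small nonlinear least-squares problem. The sketch below assumes such $(z, \beta_c^D)$ samples are already available for one channel; the initial guess (one fast-decaying and one slow-decaying term) is our assumption. Sums of exponentials are ill-conditioned, so the fitted curve is more trustworthy than the individual constants.

```python
import numpy as np
from scipy.optimize import curve_fit


def dual_exp(z, a, b, c, d):
    """beta_c^D(z) = a e^{b z} + c e^{d z}."""
    return a * np.exp(b * z) + c * np.exp(d * z)


def fit_beta_D(z_samples, beta_samples):
    """Fit the four constants to discrete (z, beta_c^D) estimates of one channel."""
    # Assumed start: one fast-decaying and one slowly decaying term.
    p0 = [beta_samples.max(), -1.0, beta_samples.min(), -0.1]
    params, _ = curve_fit(dual_exp, z_samples, beta_samples, p0=p0, maxfev=20000)
    return params


# Usage with hypothetical noise-free samples:
z_s = np.linspace(0.2, 2.0, 40)
samples = dual_exp(z_s, 0.5, -1.5, 0.3, -0.05)
print(fit_beta_D(z_s, samples))
```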

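Assuming $B_c^{\infty}$ and $\beta_c^{B}$ have been obtained, the backscatter $B_c$ can be removed from the original image $I_c$, and $\beta_c^{D}$ can be estimated from the direct signal $D_c = I_c - B_c$. According to Equations (2) and (3), the restored image can be expressed as follows:

$$J_c = D_c\, e^{\beta_c^{D}(z)\, z}$$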

In our experimental setup, where absolute distances are short, we assume that the corresponding image captured in clear water undergoes minimal scattering and attenuation, and we use it as the reference image $J_c^{\text{ref}}$ for restoration. Rewriting the formula, we obtain the following:

By minimizing this expression, we obtain discrete data related to range z.
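The restoration step $J_c = D_c e^{\beta_c^D(z) z}$ follows directly from Equation (2). The exact objective minimized against the clear-water reference $J_c^{\text{ref}}$ is not reproduced here, so the second function below is a hypothetical stand-in: within each distance bin it fits one value of $\beta_c^D$ by least squares on $\log(J_c^{\text{ref}}/D_c) = \beta_c^D z$, which has a closed-form solution. The bin count and the small `eps` guard are illustrative choices.

```python
import numpy as np


def restore_direct(D_c, z, beta_D_of_z):
    """J_c = D_c exp(beta_c^D(z) z) for a distance-dependent coefficient."""
    return D_c * np.exp(beta_D_of_z(z) * z)


def beta_D_per_bin(D_c, J_ref_c, z, n_bins=8, eps=1e-6):
    """Hypothetical per-distance estimate of beta_c^D from a clear-water reference.

    In each bin this minimises sum((log J_ref - log D - beta z)^2), whose
    minimiser is sum(z r) / sum(z^2) with r = log(J_ref) - log(D).
    """
    r = np.log(J_ref_c + eps) - np.log(D_c + eps)
    edges = np.linspace(z.min(), z.max(), n_bins + 1)
    bins = np.clip(np.digitize(z, edges) - 1, 0, n_bins - 1)
    centres, betas = [], []
    for b in range(n_bins):
        m = bins == b
        if not np.any(m):
            continue
        centres.append(z[m].mean())
        betas.append(np.sum(z[m] * r[m]) / np.sum(z[m] ** 2))
    return np.array(centres), np.array(betas)
```

The discrete (distance, coefficient) pairs returned by `beta_D_per_bin` are the kind of data to which the dual-exponential form of Section 3.2.2 can then be fitted.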

3.3. Depth Estimation

In densely scattering media such as water, the random scattering of light during propagation leads to restored images with relatively low signal-to-noise ratios, causing traditional defocus and correspondence cues to perform poorly. To address this, we propose using blur cues and incorporating a depth threshold method to reduce the influence of noise on depth estimation, thereby improving accuracy. Below, we detail our approach.

3.3.1. Construction of the Blur Cue Cost

We use a two-plane parameterization to represent the four-dimensional light field $L(x, y, u, v)$, where $(x, y)$ and $(u, v)$ are the spatial and angular coordinates, respectively. Following Ng et al. [24], we shear the light field data to different depths as follows:

$$L_\alpha(x, y, u, v) = L_0\left(x + u\left(1 - \frac{1}{\alpha}\right),\; y + v\left(1 - \frac{1}{\alpha}\right),\; u,\; v\right)$$

where $\alpha$ is the current focus depth ratio, $L_0$ is the captured light field, and $L_\alpha$ is the light field refocused at depth ratio $\alpha$, with the central view located at $(u, v) = (0, 0)$.
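The shearing step can be sketched as a shift-and-average refocus. This is a minimal illustration, assuming the light field is stored as an array `lf[u, v, y, x]` of sub-aperture views with the central view at the middle index, and using bilinear interpolation (`scipy.ndimage.shift` with `order=1`) for sub-pixel shifts; the array layout, function name, and border mode are our choices, not part of the original method.

```python
import numpy as np
from scipy import ndimage


def refocus(lf, alpha, mode="nearest"):
    """Shear-and-average refocusing of a 4D light field.

    lf    : (U, V, H, W) sub-aperture views; lf[iu, iv] is an (H, W) image
    alpha : focus depth ratio; alpha = 1 keeps the captured focal plane
    """
    U, V, H, W = lf.shape
    cu, cv = (U - 1) / 2.0, (V - 1) / 2.0   # central view sits at (u, v) = (0, 0)
    k = 1.0 - 1.0 / alpha
    out = np.zeros((H, W))
    for iu in range(U):
        for iv in range(V):
            u, v = iu - cu, iv - cv
            # L_alpha(x, y) = L_0(x + u k, y + v k). ndimage.shift returns
            # input[p - s], so the shift is the negated offset (rows = y, cols = x).
            out += ndimage.shift(lf[iu, iv], (-v * k, -u * k), order=1, mode=mode)
    return out / (U * V)
```

Sweeping `alpha` over a range of values produces the focal stack from which the depth cues below are computed.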

Because attenuation makes a target pixel blurrier the farther it is from the camera, and because the blurriness of a target pixel after light field refocusing is inversely proportional to its true distance, we use blurriness as a cost cue for depth estimation. We employ local frequency analysis based on the Discrete Cosine Transform (DCT) [25] to characterize the region around a pixel; the absence of high-frequency components quantifies the image's blurriness. The DCT of the $N \times N$ region around a pixel is given as follows:

$$F(k, l) = c(k)\,c(l)\sum_{x=0}^{N-1}\sum_{y=0}^{N-1} f(x, y)\cos\left[\frac{(2x+1)k\pi}{2N}\right]\cos\left[\frac{(2y+1)l\pi}{2N}\right]$$

where $k, l$ are frequency variables ranging from 0 to $N-1$, $f(x, y)$ are the pixel values in the region, and $c(k)$, $c(l)$ are normalization factors defined as follows:

$$c(k) = \begin{cases} \sqrt{1/N}, & k = 0 \\ \sqrt{2/N}, & k \neq 0 \end{cases}$$

The blurriness at that location is then expressed as follows:

For the refocused light field image $L_\alpha$ at depth ratio $\alpha$, the blur cost is expressed as follows:

This method systematically utilizes the inherent blurriness of the underwater images caused by scattering to establish a robust depth estimation framework. By integrating blur as a quantitative measure into the cost function for depth estimation, we can enhance the accuracy of light field-based depth estimation in challenging underwater conditions.
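One way to turn the DCT analysis into a scalar blurriness score is sketched below. The exact score is not reproduced in this text, so the measure used here (the share of non-DC energy that lies in the lowest frequencies, $k + l$ below a cutoff) is a hypothetical choice. `scipy.fft.dctn` with `norm="ortho"` computes the orthonormal 2D DCT-II; the block size and cutoff are illustrative.

```python
import numpy as np
from scipy.fft import dctn


def dct_blurriness(patch, cutoff=2):
    """Hypothetical blur score in [0, 1] for a square patch (1 = no detail).

    Uses the orthonormal 2D DCT-II and returns the fraction of AC energy in
    the lowest frequencies (k + l < cutoff); it rises as high frequencies vanish.
    """
    F = dctn(np.asarray(patch, dtype=float), type=2, norm="ortho")
    energy = F ** 2
    energy[0, 0] = 0.0                 # ignore the DC (mean brightness) term
    total = energy.sum()
    if total <= 1e-12:                 # flat patch: no detail at all
        return 1.0
    k, l = np.indices(F.shape)
    return float(energy[(k + l) < cutoff].sum() / total)


def blur_cost_map(image, N=8, cutoff=2):
    """Blur score for each non-overlapping N x N block of a refocused image."""
    H, W = image.shape
    out = np.empty((H // N, W // N))
    for i in range(H // N):
        for j in range(W // N):
            out[i, j] = dct_blurriness(image[i * N:(i + 1) * N, j * N:(j + 1) * N], cutoff)
    return out
```

A checkerboard, whose energy sits entirely at high frequencies, scores near 0, while a smooth ramp, dominated by the first AC coefficient, scores near 1.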

3.3.2. Single Image Preprocessing

Because raw underwater images are highly blurred, it is crucial to recover more texture detail through preprocessing before depth estimation. Having acquired the parameters of the light propagation model in Section 3.2, we now need only an initial depth estimate to retrieve more detailed textures from the underwater images.

Inspired by the methods of Peng [11] and Carlevaris-Bianco [26], we combine the characteristics of both approaches to estimate depth during image preprocessing.

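First, considering the relationship between imaging distance and blurriness, Peng [11] introduced an image formation model based on a blurriness prior, in which the depth-related pixel blurriness is computed as follows:

$$P_{\text{init}}(x) = \frac{1}{n}\sum_{i=1}^{n}\left|I_{\text{gray}}(x) - G^{r_i, r_i}(x)\right|, \qquad P_r(x) = \max_{y \in \Omega(x)} P_{\text{init}}(y) \tag{16}$$

where $I_{\text{gray}}$ is the grayscale version of the input image $I$, $G^{r_i, r_i}$ is $I_{\text{gray}}$ filtered by a spatial Gaussian filter of size $r_i \times r_i$ with variance $\sigma^2 = r_i^2$, where $r_i = 2^i n + 1$ with $n$ set to 4, and $\Omega(x)$ is a local patch centered at $x$.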
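The multi-scale blurriness prior can be sketched with SciPy's Gaussian and maximum filters. This is an illustration under stated assumptions: the `truncate` argument of `gaussian_filter` is set so that each kernel spans exactly $r_i \times r_i$ pixels with $\sigma = r_i$, and the size of the patch $\Omega(x)$ (7 pixels here) is not given in the text and is our placeholder.

```python
import numpy as np
from scipy import ndimage


def blurriness_map(I_gray, n=4, patch=7):
    """Multi-scale blurriness cue after Peng's prior.

    P_init averages |I_gray - G_{r_i}(I_gray)| over r_i = 2^i n + 1, i = 1..n,
    where G_{r_i} is an r_i x r_i Gaussian with sigma = r_i. P_r is the local
    maximum of P_init over a patch x patch window. Large values mean that
    smoothing removed much detail, i.e. the region is sharp (likely near).
    """
    I_gray = np.asarray(I_gray, dtype=float)
    P_init = np.zeros_like(I_gray)
    for i in range(1, n + 1):
        r = 2 ** i * n + 1
        # truncate * sigma = (r - 1) / 2, so the kernel is exactly r x r
        smoothed = ndimage.gaussian_filter(
            I_gray, sigma=r, truncate=(r - 1) / (2.0 * r), mode="nearest"
        )
        P_init += np.abs(I_gray - smoothed)
    P_init /= n
    return ndimage.maximum_filter(P_init, size=patch)
```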

Next, we estimate depth from the wavelength-dependent absorption of light in water: red light is attenuated more strongly than blue and green light. Carlevaris-Bianco [26] proposed using the difference between the maximum intensity of the red channel and that of the green and blue channels as a depth cue:

$$D_{\text{mip}}(x) = \max_{y \in \Omega(x)} I_r(y) - \max_{y \in \Omega(x)} \max\{I_g(y), I_b(y)\} \tag{17}$$

where $I_r$, $I_g$, and $I_b$ are the red, green, and blue channels of $I$.

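We combine the distance cues from Equations (16) and (17) to estimate an initial depth map, expressed as follows:

$$d_D(x) = 1 - F_s\big(D_{\text{mip}}(x)\big), \qquad d_B(x) = 1 - F_s\big(P_r(x)\big)$$

where $F_s(\cdot)$ is a stretching normalization function. Then, depending on the average value of the input's red channel, we choose between the light absorption-based and the image blurriness-based estimates of the underwater scene depth:

$$d(x) = \theta\, d_D(x) + (1 - \theta)\, d_B(x) \tag{19}$$

where $\theta = S(\operatorname{avg}(I_r), v)$, $\operatorname{avg}(\cdot)$ is the mean function, $v$ is the red-level threshold around which the estimate switches, and $S$ is the sigmoid function defined as follows:

$$S(a, v) = \frac{1}{1 + e^{-s(a - v)}}$$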

where s controls the slope of the activation function, thereby controlling the amplitude of the output change when the input values have slight differences. We experimented with different values and found that the model performs best when .

Finally, based on Equation (19), we can obtain the initial depth estimation map. By substituting the depth results into the process described in Section 3.1, we can obtain the preliminary restored underwater image.
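The red-channel cue, the stretching normalization, and the sigmoid-weighted blend can be sketched as follows. The sigmoid threshold `v` and slope `s` are left as required arguments because their values are not given here, the placeholder values in the commented usage line are purely illustrative, and the 7-pixel neighbourhood is likewise an assumption. The blurriness map `P_r` is taken as an input.

```python
import numpy as np
from scipy import ndimage


def max_intensity_difference(I, patch=7):
    """D_mip: local max of red minus local max of green/blue."""
    red_max = ndimage.maximum_filter(I[..., 0], size=patch)
    gb_max = ndimage.maximum_filter(np.maximum(I[..., 1], I[..., 2]), size=patch)
    return red_max - gb_max


def stretch(v):
    """Min-max stretching normalisation F_s to [0, 1]."""
    lo, hi = v.min(), v.max()
    return (v - lo) / (hi - lo) if hi > lo else np.zeros_like(v, dtype=float)


def sigmoid(a, v, s):
    """S(a, v) = 1 / (1 + exp(-s (a - v)))."""
    return 1.0 / (1.0 + np.exp(-s * (a - v)))


def initial_depth(I, P_r, v, s, patch=7):
    """Blend absorption-based and blur-based depth (larger value = farther).

    I    : (H, W, 3) RGB image in [0, 1]
    P_r  : (H, W) blurriness map
    v, s : sigmoid threshold and slope (hypothetical, must be tuned)
    """
    d_D = 1.0 - stretch(max_intensity_difference(I, patch))  # strong red => near
    d_B = 1.0 - stretch(P_r)                                  # sharp => near
    theta = sigmoid(I[..., 0].mean(), v, s)                   # weight from mean red level
    return theta * d_D + (1.0 - theta) * d_B


# Usage (placeholder v and s): d0 = initial_depth(img, P_r, v=0.1, s=10.0)
```

When the red channel is well preserved the sigmoid pushes the weight toward the absorption cue; in red-starved images the blur cue takes over.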

3.3.3. Depth Cue Fusion and Depth Estimation

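In addition to the blur-based depth cue, to enhance the robustness of depth estimation we introduce Tao's [15] defocus cost as a supplementary metric. The defocus response at a spatial point is computed as follows:

$$D_\alpha(x, y) = \frac{1}{|W_D|}\sum_{(x', y') \in W_D}\left|\Delta_{x,y}\,\bar{L}_\alpha(x', y')\right| \tag{21}$$

where $W_D$ is the window around the current pixel and $|W_D|$ its size, $\Delta_{x,y}$ is the Laplacian operator in both the horizontal and vertical directions, and $\bar{L}_\alpha$ is the mean over all angular positions for that pixel, expressed as follows:

$$\bar{L}_\alpha(x, y) = \frac{1}{N_{u,v}}\sum_{(u', v')} L_\alpha(x, y, u', v')$$

where $N_{u,v}$ is the number of angular positions.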
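Tao's defocus response reduces to a Laplacian of the angular mean followed by a window average; `scipy.ndimage.laplace` computes the sum of the horizontal and vertical second derivatives. The sketch assumes the sheared views for one value of $\alpha$ are stacked as an `(N, H, W)` array; the window size is an illustrative default.

```python
import numpy as np
from scipy import ndimage


def defocus_response(views_alpha, window=9):
    """Defocus response for one refocus ratio alpha.

    views_alpha : (N, H, W) sheared sub-aperture views L_alpha(., ., u', v')
    window      : side length of the averaging window W_D (illustrative)
    """
    mean_view = views_alpha.mean(axis=0)             # angular mean L-bar_alpha
    lap = np.abs(ndimage.laplace(mean_view))         # |d2/dx2 + d2/dy2|
    return ndimage.uniform_filter(lap, size=window)  # average over W_D
```

A well-focused (sharp) angular mean produces a larger response than a defocused one, so the response peaks at the $\alpha$ matching the true depth.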

Combining the depth cost cues from Equations (15) and (21), we establish the final depth relationship as follows:

By inputting the restored light field image into this formula, we obtain the depth estimation map for the underwater light field image. This comprehensive approach effectively merges various depth cues to produce a more accurate and robust estimation of underwater depths.
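The exact combination of the blur cost and the defocus response is not reproduced here, so the following is only a hypothetical fusion rule illustrating the usual winner-take-all structure of a depth sweep. Each cue is min-max normalized over the $\alpha$ axis, a low blur cost and a high defocus response both favour a given $\alpha$, and the weight `w` is an assumed parameter.

```python
import numpy as np


def fuse_depth(alphas, blur_costs, defocus_resp, w=0.5):
    """Hypothetical winner-take-all fusion over a focal sweep.

    alphas       : (A,) focus depth ratios that were swept
    blur_costs   : (A, H, W) blur cost per alpha (lower = more in focus)
    defocus_resp : (A, H, W) defocus response per alpha (higher = more in focus)
    w            : assumed weight of the blur cue
    """
    def norm(c):
        lo = c.min(axis=0, keepdims=True)
        hi = c.max(axis=0, keepdims=True)
        return (c - lo) / np.maximum(hi - lo, 1e-12)

    score = w * (1.0 - norm(blur_costs)) + (1.0 - w) * norm(defocus_resp)
    return np.asarray(alphas)[np.argmax(score, axis=0)]  # best alpha per pixel
```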

3.4. Underwater LF Image Restoration

Although attenuation and scattering have been compensated in the light field images, random scattering of light by particles in the water often still leaves noise in the restored images, especially in highly turbid water. To address this, we exploit the fact that the noise patterns formed at the same object point differ across sub-aperture views. By averaging multiple light field views with a light field refocusing algorithm, we can further suppress noise and reduce the impact of backscatter noise.
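The benefit of averaging sub-aperture views can be checked numerically. Under the simplifying assumption that the views are already aligned at the object's depth and carry independent, zero-mean noise (real backscatter noise is only partly independent across views), averaging $N$ views reduces the noise standard deviation by a factor of about $\sqrt{N}$. The noise level and the 7 × 7 view count below are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(6)
clean = rng.random((128, 128))
sigma, n_views = 0.1, 49  # hypothetical noise level and a 7 x 7 set of views
views = clean + rng.normal(0.0, sigma, size=(n_views, 128, 128))

single_err = np.std(views[0] - clean)          # noise left in one view
avg_err = np.std(views.mean(axis=0) - clean)   # noise left after averaging
print(single_err, avg_err, sigma / np.sqrt(n_views))
```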

4. Experimental Results

This section introduces the experimental setup, displays results from our experimental apparatus, compares them against various state-of-the-art algorithms, and discusses the limitations of our approach.

4.1. Experimental Methodology

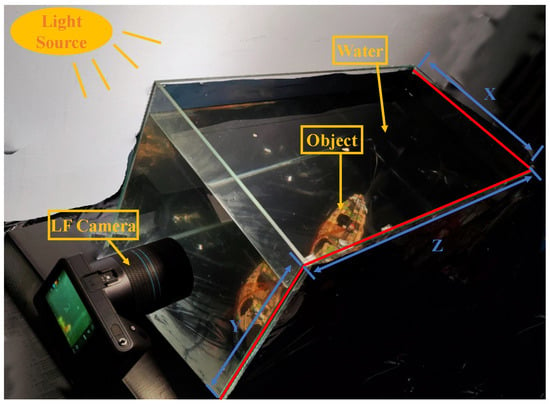

In this study, we conducted experiments in a dark environment using a water tank measuring 0.7 × 0.4 × 0.4 m. All sides of the tank except the imaging face were covered with opaque black film to prevent external light reflections. White LED lights served as the light source, and a Lytro Illum camera was positioned 2 cm from the tank. Figure 3 illustrates the experimental setup. The tank size was dictated by the physical space available in our laboratory and by practical operability; a larger tank would better simulate light propagation paths in real environments, but none was available to us.

Figure 3.

Our experimental setup.

In the experiment, we gradually added milk to the water tank, totaling 4 mL. This incremental addition method allowed us to precisely control the turbidity of the water, thereby simulating different levels of scattering effects.

We chose milk as the scattering medium because it can evenly disperse in water, providing consistent optical scattering effects. Although other studies have used substances like red wine, milk is more suitable for simulating suspended particles and turbidity in water. Additionally, milk is easy to control and measure, and its optical properties better meet the requirements of our experiment.

4.2. Results Comparisons

In this experiment, we compared our method against recent image restoration and depth estimation techniques: prior-based single-image methods [10,11,27], a light field (LF)-based method [3], and deep learning-based methods [8,28]. The LF-based method was not specifically optimized for this task during replication, so its replicated results may differ from those obtained in practice. All selected methods have publicly available source code or were provided by their authors, which allowed us to reproduce their results accurately and compare them fairly.

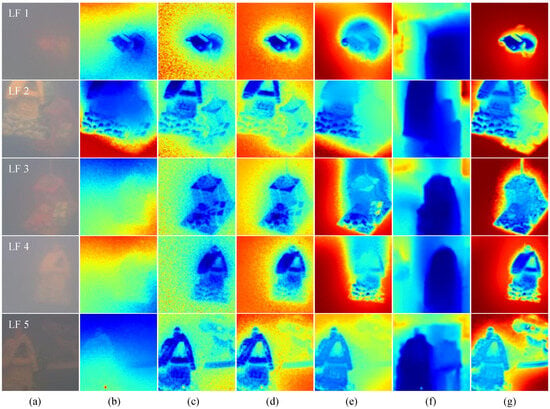

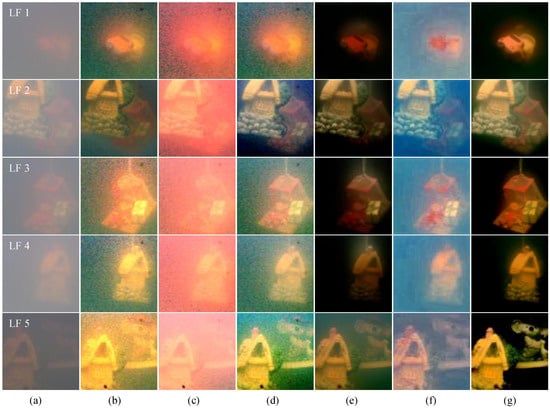

The original images used in Figures 4 and 5, labeled LF1 to LF5, were collected in a medium-turbidity environment created by adding 4 mL of milk. To verify robustness to distance, the targets in these five scenes were placed randomly at different positions in the tank, including scenes with a single target and scenes with targets at different front and back positions.

Figure 4.

Comparison of depth estimation results. (a) Original image. (b) UDCP [27]. (c) ULAP [10]. (d) IBLA [11]. (e) Tian’s method [3]. (f) UW-Net [28]. (g) Our method.

Figure 5.

Comparison of restoration results. (a) Original image. (b) UDCP [27]. (c) ULAP [10]. (d) IBLA [11]. (e) Tian’s method [3]. (f) Semi-UIR [8]. (g) Our method.

Figure 4 compares the depth estimation results of our method and the other methods for the original images in column (a). In columns (b–c), methods based on blur and light attenuation priors perform relatively stably but are strongly affected by the heavy blur in background regions. In column (d), the light field-based depth estimation method performs well in blurred background regions, second only to our method; however, it is not robust in the halo regions caused by light scattering around the target. Column (e) shows good detail in the target area but performs poorly in noisy regions. Column (f) performs worst, because the scarcity of underwater training data limits its generalization. Our method, in column (g), recovers more target depth detail and gives smoother, more accurate results in noisy background regions. Qualitatively, our method therefore shows advantages over the other methods in depth accuracy in areas affected by background-light noise and in target detail.

Figure 5 compares the restoration results of our method and the other methods for the original images in column (a). Columns (b–c) show that the prior-based methods produce noticeable red color casts, and because their depth maps are estimated incorrectly, the target remains noticeably blurred. Columns (d) and (f) show a degree of blue-green cast, and the image in column (f) is relatively blurred. Column (e) performs well in the background region but still exhibits some red cast in the target area. Our method, in column (g), effectively removes backscatter from both the target and the background and reduces noise. In addition, light field refocusing yields better clarity, and the colors are more consistent with human visual perception. Qualitatively, therefore, our method achieves better restoration than the other methods.

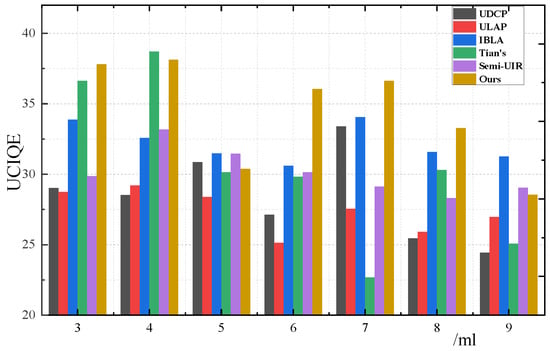

Several objective metrics exist for underwater images. The underwater color image quality evaluation (UCIQE) [29] is a widely used no-reference metric designed specifically for underwater images; it combines measures of chroma, saturation, and contrast to capture the degradation caused by light attenuation, scattering, and color shifts. A higher score indicates better image quality. Table 1 lists the scores for the results in Figure 5; our method achieves better overall performance across the five scenes than the other methods.
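The UCIQE score is a weighted sum of three terms computed in CIELab space: the standard deviation of chroma, the luminance contrast, and the mean saturation, with the weights 0.4680, 0.2745, and 0.2576 reported in [29]. The sketch below assumes sRGB input in [0, 1] with a D65 white point, computes contrast as the spread between the 1st and 99th luminance percentiles, and defines saturation as chroma divided by lightness. Published implementations differ in these choices and in how each term is rescaled, so scores from this sketch are comparable only with each other, not with Table 1.

```python
import numpy as np

# sRGB (D65) to XYZ matrix; the white point is taken as its row sums.
_M = np.array([[0.4124564, 0.3575761, 0.1804375],
               [0.2126729, 0.7151522, 0.0721750],
               [0.0193339, 0.1191920, 0.9503041]])
_WHITE = _M.sum(axis=1)


def srgb_to_lab(rgb):
    """Convert sRGB values in [0, 1] to CIELab (L in [0, 100])."""
    rgb = np.asarray(rgb, dtype=float)
    lin = np.where(rgb <= 0.04045, rgb / 12.92, ((rgb + 0.055) / 1.055) ** 2.4)
    xyz = lin @ _M.T / _WHITE
    delta = 6.0 / 29.0
    f = np.where(xyz > delta ** 3, np.cbrt(xyz), xyz / (3 * delta ** 2) + 4.0 / 29.0)
    L = 116.0 * f[..., 1] - 16.0
    a = 500.0 * (f[..., 0] - f[..., 1])
    b = 200.0 * (f[..., 1] - f[..., 2])
    return L, a, b


def uciqe(rgb, c=(0.4680, 0.2745, 0.2576)):
    """Unnormalised UCIQE-style score of an (H, W, 3) sRGB image."""
    L, a, b = srgb_to_lab(rgb)
    chroma = np.hypot(a, b)
    sigma_c = chroma.std()                                   # chroma spread
    con_l = np.percentile(L, 99) - np.percentile(L, 1)       # luminance contrast
    sat = np.where(L > 0, chroma / np.maximum(L, 1e-6), 0.0)  # saturation proxy
    return c[0] * sigma_c + c[1] * con_l + c[2] * sat.mean()
```

A uniform grey image scores essentially zero, since it has no chroma, no contrast, and no saturation.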

Table 1.

Comparison of restoration results using the UCIQE scores corresponding to Figure 5.

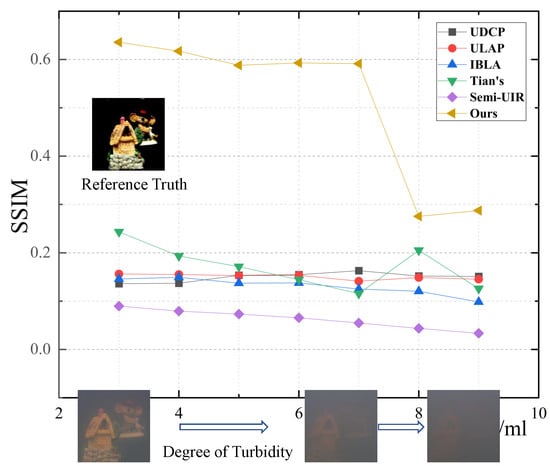

We also tested the robustness of our method under varying degrees of turbidity, evaluating restoration performance at different turbidity levels with UCIQE and the structural similarity index (SSIM) [30]. SSIM, which mimics the human visual system by measuring the structural similarity between two images, is a widely used full-reference index; we used images captured in clear water as the reference. As shown in Figures 6 and 7, our method outperforms the others across the tested turbidity levels. However, our method has a limitation: once the turbidity exceeds a certain level, the quality of our restored images deteriorates rapidly.
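For reference, the standard SSIM index [30] can be computed from Gaussian-weighted local statistics. The sketch below assumes greyscale images scaled to [0, 1] and uses the common defaults (an 11 × 11 Gaussian window with σ = 1.5, $K_1 = 0.01$, $K_2 = 0.03$). For color images one would typically average the per-channel scores, which is a convention rather than something specified here.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def ssim(x, y, data_range=1.0, sigma=1.5):
    """Mean SSIM of two greyscale images using an 11 x 11 Gaussian window."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    C1 = (0.01 * data_range) ** 2
    C2 = (0.03 * data_range) ** 2

    def local_mean(img):
        # truncate=3.5 with sigma=1.5 gives a kernel radius of 5, i.e. 11 x 11
        return gaussian_filter(img, sigma, truncate=3.5)

    mu_x, mu_y = local_mean(x), local_mean(y)
    var_x = local_mean(x * x) - mu_x ** 2
    var_y = local_mean(y * y) - mu_y ** 2
    cov_xy = local_mean(x * y) - mu_x * mu_y
    num = (2 * mu_x * mu_y + C1) * (2 * cov_xy + C2)
    den = (mu_x ** 2 + mu_y ** 2 + C1) * (var_x + var_y + C2)
    return float(np.mean(num / den))
```

An image compared with itself scores exactly 1, and added noise lowers the score because it weakens the local covariance relative to the local variances.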

Figure 6.

Comparison of restoration results across turbidity levels using the SSIM index. For clarity, data are shown starting from the third addition of an equal amount of milk. Apart from our results and Tian's results, all views are the central view of the LF. All images show the same target, with turbidity as the only variable.

Figure 7.

Comparison of restoration effects across various turbidity levels using the UCIQE index. Note that all image data is the same as in Figure 6.

In summary, we qualitatively compared recent methods with our approach in terms of depth maps and image restoration. Our depth maps show better accuracy and texture detail than those of the other methods. In addition, owing to the all-in-focus property of the light field, our method controls noise in the final restored images considerably better than single-image approaches. Quantitatively, we used the no-reference UCIQE to compare the restoration results of the different models and the full-reference SSIM to assess restoration under different turbidity levels. In both respects, our method performed better than the existing methods we tested.

5. Discussion and Conclusions

In this paper, we proposed a method for underwater depth estimation and image restoration in near-field illumination environments. Our contributions include introducing a novel approach that utilizes blurriness and backscatter as depth estimation cues, proposing an image restoration scheme based on state-of-the-art underwater imaging models in near-field illumination and dense scattering environments, and a method to improve the accuracy of light field depth estimation in dense noisy environments. Through comparisons with existing methods, our approach demonstrates improved accuracy in depth estimation, image restoration, and stability compared to color prior-based methods and several state-of-the-art light field-based restoration methods. However, our results also have some errors and limitations, such as inaccuracies in depth estimation in shadow regions and a significant decline in restoration performance in highly turbid water. Future research will further optimize our method and validate its effectiveness under more experimental conditions. Our next step is to establish a database of underwater light field images to analyze the impact of different lighting conditions and water parameters on imaging.

Author Contributions

Conceptualization, B.X. and X.G.; methodology, B.X. and H.H.; software, B.X. and X.G.; validation, B.X. and H.H.; formal analysis, B.X. and X.G.; investigation, B.X. and H.H.; resources, X.G.; data curation, H.H.; writing—original draft preparation, B.X. and X.G.; writing—review & editing, H.H. and X.G.; visualization, B.X. and H.H.; supervision, H.H.; project administration, H.H. and X.G. All authors have read and agreed to the published version of the manuscript.

Funding

The support provided by China Scholarship Council (CSC) during a visit of Bo Xiao to University of Technology Sydney is acknowledged. This research by authors Xiujing Gao and Hongwu Huang was supported by Fujian Provincial Department of Science and Technology Major Special Projects (2023HZ025003), Key Scientific and Technological Innovation Projects of Fujian Province (2022G02008), Education and Scientific Research Project of Fujian Provincial Department of Finance (GY-Z220233), and Fujian University of Technology (GY-Z23027).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to the protection of intellectual property.

Acknowledgments

The first author thanks their supervisor, Stuart Perry, for his careful guidance and valuable advice on this research. We also thank Stuart Perry for the invaluable support we received from him during the various phases of the preparation of this manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Schechner, Y.; Karpel, N. Clear underwater vision. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004; Volume 1, p. I.

- Roser, M.; Dunbabin, M.; Geiger, A. Simultaneous underwater visibility assessment, enhancement and improved stereo. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 3840–3847.

- Tian, J.; Murez, Z.; Cui, T.; Zhang, Z.; Kriegman, D.; Ramamoorthi, R. Depth and Image Restoration from Light Field in a Scattering Medium. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2420–2429.

- Lu, H.; Li, Y.; Uemura, T.; Kim, H.; Serikawa, S. Low illumination underwater light field images reconstruction using deep convolutional neural networks. Future Gener. Comput. Syst. 2018, 82, 142–148.

- Lu, H.; Li, Y.; Zhang, Y.; Chen, M.; Serikawa, S.; Kim, H. Underwater Optical Image Processing: A Comprehensive Review. Mob. Netw. Appl. 2017, 22, 1204–1211.

- Tian, Y.; Liu, B.; Su, X.; Wang, L.; Li, K. Underwater Imaging Based on LF and Polarization. IEEE Photonics J. 2019, 11, 1–9.

- Narasimhan, S.; Nayar, S. Contrast restoration of weather degraded images. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 713–724.

- Huang, S.; Wang, K.; Liu, H.; Chen, J.; Li, Y. Contrastive Semi-Supervised Learning for Underwater Image Restoration via Reliable Bank. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 18145–18155.

- Akkaynak, D.; Treibitz, T. A Revised Underwater Image Formation Model. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6723–6732.

- Song, W.; Wang, Y.; Huang, D.; Tjondronegoro, D. A Rapid Scene Depth Estimation Model Based on Underwater Light Attenuation Prior for Underwater Image Restoration. In Proceedings of the Advances in Multimedia Information Processing—PCM 2018; Lecture Notes in Computer Science; Hong, R., Cheng, W.H., Yamasaki, T., Wang, M., Ngo, C.W., Eds.; Springer: Cham, Switzerland, 2018; pp. 678–688. [Google Scholar] [CrossRef]

- Peng, Y.T.; Cosman, P.C. Underwater Image Restoration Based on Image Blurriness and Light Absorption. IEEE Trans. Image Process. 2017, 26, 1579–1594. [Google Scholar] [CrossRef]

- Drews, P.L.; Nascimento, E.R.; Botelho, S.S.; Montenegro Campos, M.F. Underwater Depth Estimation and Image Restoration Based on Single Images. IEEE Comput. Graph. Appl. 2016, 36, 24–35. [Google Scholar] [CrossRef]

- Li, C.; Guo, C.; Ren, W.; Cong, R.; Hou, J.; Kwong, S.; Tao, D. An Underwater Image Enhancement Benchmark Dataset and Beyond. IEEE Trans. Image Process. 2020, 29, 4376–4389. [Google Scholar] [CrossRef]

- Hitam, M.S.; Awalludin, E.A.; Jawahir Hj Wan Yussof, W.N.; Bachok, Z. Mixture contrast limited adaptive histogram equalization for underwater image enhancement. In Proceedings of the 2013 International Conference on Computer Applications Technology (ICCAT), Sousse, Tunisia, 20–22 January 2013; pp. 1–5. [Google Scholar] [CrossRef]

- Tao, M.W.; Hadap, S.; Malik, J.; Ramamoorthi, R. Depth from Combining Defocus and Correspondence Using Light-Field Cameras. In Proceedings of the 2013 IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013; pp. 673–680. [Google Scholar] [CrossRef]

- Georgiev, T.; Chunev, G.; Lumsdaine, A. Superresolution with the focused plenoptic camera. In Proceedings of the Computational Imaging IX; International Society for Optics and Photonics; Bouman, C.A., Pollak, I., Wolfe, P.J., Eds.; SPIE: Bellingham, WA, USA, 2011; Volume 7873, p. 78730X. [Google Scholar] [CrossRef]

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 1956–1963. [Google Scholar] [CrossRef]

- Skinner, K.A.; Johnson-Roberson, M. Underwater Image Dehazing With a Light Field Camera. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- Ouyang, F.; Yu, J.; Liu, H.; Ma, Z.; Yu, X. Underwater Imaging System Based on Light Field Technology. IEEE Sens. J. 2021, 21, 13753–13760. [Google Scholar] [CrossRef]

- Lu, H.; Li, Y.; Kim, H.; Serikawa, S. Underwater light field depth map restoration using deep convolutional neural fields. Artif. Intell. Robot. 2018, 2018, 305–312. [Google Scholar]

- Ye, T.; Chen, S.; Liu, Y.; Ye, Y.; Chen, E.; Li, Y. Underwater Light Field Retention: Neural Rendering for Underwater Imaging. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, New Orleans, LA, USA, 19–20 June 2022; pp. 488–497. [Google Scholar]

- Akkaynak, D.; Treibitz, T. Sea-Thru: A Method for Removing Water From Underwater Images. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 1682–1691. [Google Scholar] [CrossRef]

- He, K.; Sun, J.; Tang, X. Guided Image Filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1397–1409. [Google Scholar] [CrossRef]

- Ng, R.; Levoy, M.; Brédif, M.; Duval, G.; Horowitz, M.; Hanrahan, P. Light Field Photography with a Hand-held Plenoptic Camera; Stanford University Computer Science Tech Report; Stanford University: Stanford, CA, USA, 2005. [Google Scholar]

- Ahmed, N.; Natarajan, T.; Rao, K. Discrete Cosine Transform. IEEE Trans. Comput. 1974, C-23, 90–93. [Google Scholar] [CrossRef]

- Carlevaris-Bianco, N.; Mohan, A.; Eustice, R.M. Initial results in underwater single image dehazing. In Proceedings of the OCEANS 2010 MTS/IEEE SEATTLE, Seattle, WA, USA, 20–23 September 2010; pp. 1–8. [Google Scholar] [CrossRef]

- Drews, P., Jr.; do Nascimento, E.; Moraes, F.; Botelho, S.; Campos, M. Transmission Estimation in Underwater Single Images. In Proceedings of the 2013 IEEE International Conference on Computer Vision Workshops, Sydney, NSW, Australia, 2–8 December 2013; pp. 825–830. [Google Scholar] [CrossRef]

- Gupta, H.; Mitra, K. Unsupervised Single Image Underwater Depth Estimation. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 624–628. [Google Scholar] [CrossRef]

- Yang, M.; Sowmya, A. An Underwater Color Image Quality Evaluation Metric. IEEE Trans. Image Process. 2015, 24, 6062–6071. [Google Scholar] [CrossRef]

- Wang, Z.; Bovik, A.; Sheikh, H.; Simoncelli, E. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).