Abstract

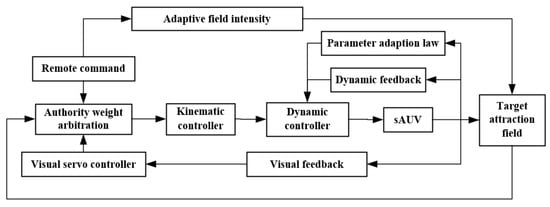

Human intelligence has the advantage in making high-level decisions in the remote control of underwater vehicles, while autonomous control is superior for accurate and fast close-range pose adjustment. Combining the advantages of both remote and autonomous control, this paper proposes a visual-aided shared-control method for a semi-autonomous underwater vehicle (sAUV) to conduct flexible, efficient and stable underwater grasping. The proposed method utilizes an arbitration mechanism that assigns authority weights to the human command and the automatic controller according to the attraction field (AF) generated by the target objects. The AF intensity is adjusted according to the human intention inferred from the remote commands, and the remote-operation command is fused with a visual servo controller. The shared controller is designed based on the kinematic and dynamic models, and model parameter uncertainties are also addressed. Efficient and stable control performance is validated by both simulation and experiment. Faster and more accurate dynamic positioning in front of the target object is achieved using the shared-control method. Compared to the pure remote operation mode, the shared-control mode significantly reduces the average time consumption of grasping tasks for both skilled and unskilled operators.

1. Introduction

Unmanned underwater vehicles (UUVs) are essential tools for underwater observation tasks [1,2]. They are commonly categorized by autonomy level, from remotely operated vehicles (ROVs) with limited autonomy to autonomous underwater vehicles (AUVs) with high autonomy [3,4,5]. Most AUVs are used only for relatively simple tasks such as observation, exploration or monitoring, whereas more complex tasks such as underwater grasping and manipulation can hardly be performed without human intervention [6]. Such tasks rely on UUVs equipped with manipulators [7,8,9], which are mostly remotely operated, since human intelligence is indispensable for understanding complicated mission requirements and controlling multi-degree-of-freedom (DOF) motion.

The harsh underwater environment creates many difficulties for perception and positioning sensors. Some common robot sensors are unavailable underwater, and some underwater sensors are bulky and expensive, which strongly limits the autonomy of AUVs. For example, robust underwater velocity and position measurement over a wide range relies heavily on acoustic devices [10,11]. Water turbidity and the lack of visible reference objects and features also limit vision-based positioning and perception methods [12,13,14]. In many cases, AUVs are specifically tailored to certain types of underwater missions [15,16]: their structures, electronic devices and autonomy programs have to be specially designed according to the mission requirements, which limits their applicability to a wider range of scenarios.

With intervention or remote control by human operators, ROVs are generally more versatile in underwater exploration and manipulation [17]. Low-cost, miniaturized ROVs are becoming increasingly popular in ocean exploration as alternatives to expensive AUVs. Human operators can make decisions and carry out complex underwater missions with only limited sensor information. New control methods are also being investigated, and practical issues such as communication rate [18] and winch performance [19] have been considered in ROV control problems.

However, the performance of an ROV depends heavily on the operator's skills. Furthermore, prolonged concentrated work is not only physically demanding but also carries a high risk of accidents. Therefore, shared autonomy approaches have been proposed in the literature to leverage the capabilities of autonomous systems to complement the skills of human operators [20]. Human intelligence is superior in making flexible high-level decisions, dealing with unexpected events, and comprehending varying and unstructured environments, while machine autonomy offers high stability, accuracy and fast response [21,22].

Many attempts have been made to apply shared-control algorithms to land robots [23,24,25], but the shared control of UUVs has not yet been fully investigated. A shared autonomy approach for a low-cost sAUV is developed in [20]. It contains a variable-level assistant control mode with online estimation of the user's skill level, and a planner that generates paths avoiding tether entanglement of the sAUV. The method reduces the training requirements and attentional burden of underwater inspection tasks, but the accurate control of close-range object grasping has not been fully discussed. An ROV teleoperation method based on human body motion mapping is proposed in [26], where the ROV hydrodynamic forces are converted into haptic feedback, and the human body motions are modeled and mapped into gesture controls. The control precision is improved and the mental load is reduced, but automatic control has not yet been fused into the system to form a shared-control scheme.

In order to improve the working efficiency and reduce the operational burden on humans in underwater grasping tasks, this paper develops a practical visual-aided shared-control method for a low-cost prototype sAUV. The primary contributions of this paper are three-fold.

- The proposed method takes full advantage of human commands for high-level guidance and of visual servo autonomy for close-range dynamic positioning, to construct a stable, flexible and efficient shared controller for underwater grasping tasks.

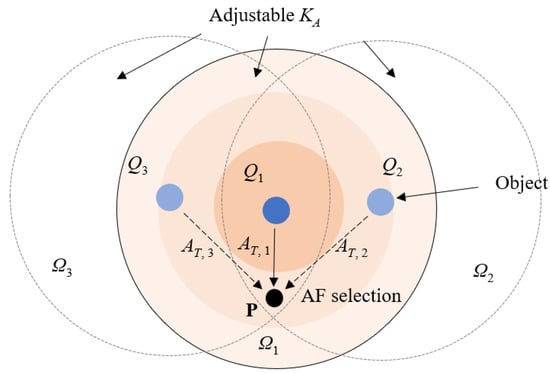

- A variable AF of the target objects is proposed, whose field intensity is adjusted by the human intention extracted from the remote commands. An arbitration mechanism is then adopted to assign authority weights to the human command and the automatic controller according to the AF intensity.

- The shared controller is realized by fusing reference velocities at the kinematic level; the fused reference is then tracked by a dynamic controller that accounts for model parameter uncertainties. Both the simulation and experiment demonstrate a clear increase in grasping efficiency and stability.
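The kinematic-level fusion described in these contributions can be illustrated with a minimal sketch. The Gaussian-shaped field, its width `sigma`, and the direct use of the clipped AF intensity as the automatic controller's authority weight `alpha` are assumptions made for illustration; the actual AF and arbitration laws are those designed in Section 3. The per-object factors `q` play the role of the importance factors qi used later in the simulation.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def af_intensity(p: Vec3, targets: Sequence[Vec3], q: Sequence[float],
                 k_a: float = 1.0, sigma: float = 0.5) -> float:
    """Hypothetical attraction-field (AF) intensity at vehicle position p.

    Target i contributes q_i * exp(-d_i**2 / sigma**2), where d_i is the distance
    to the target. The strongest contribution is scaled by the attraction
    coefficient k_a and clipped to [0, 1] so it can serve as an authority weight.
    """
    strongest = 0.0
    for target, q_i in zip(targets, q):
        d2 = sum((a - b) ** 2 for a, b in zip(p, target))
        strongest = max(strongest, q_i * math.exp(-d2 / sigma ** 2))
    return min(1.0, k_a * strongest)


def fuse_velocities(v_human: Sequence[float], v_auto: Sequence[float],
                    alpha: float) -> List[float]:
    """Kinematic-level fusion: alpha is the authority weight of the automatic controller."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return [(1.0 - alpha) * h + alpha * a for h, a in zip(v_human, v_auto)]


# An operator pushes forward at 0.4 m/s while the visual servo asks for 0.05 m/s.
targets = [(0.0, 0.0, 0.0)]
for position in [(-2.0, 0.0, 0.0), (-0.2, 0.0, 0.0)]:
    alpha = af_intensity(position, targets, q=[1.0])
    print(position, round(alpha, 3), fuse_velocities([0.4, 0.0, 0.0], [0.05, 0.0, 0.0], alpha))
```

Far from the target the field is negligible and the operator's command passes through almost unchanged; close to the target the automatic reference dominates. Lowering `k_a` is one simple way to hand authority back to the operator.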

The rest of this paper is organized as follows. Section 2 introduces the hardware construction and modeling of the prototype sAUV. Section 3 introduces the detailed design procedure of the shared controller, including both the adjustable AF concept and the model-based dynamic controller considering model uncertainties. Section 4 provides the simulation and experiment results, and Section 5 concludes the paper.

2. System Construction and Modeling

2.1. System Construction

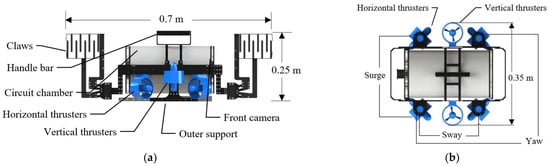

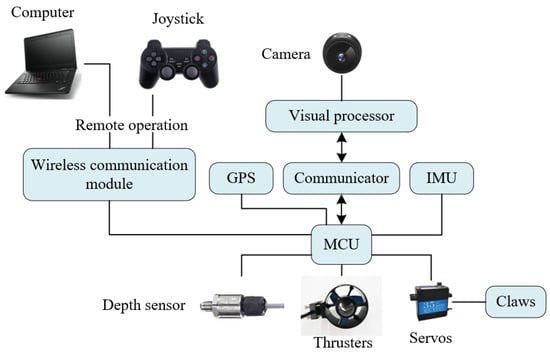

The prototype miniaturized sAUV is shown in Figure 1, and its functional diagram is illustrated in Figure 2. The miniaturized, low-cost prototype is approximately 0.7 m long including the claws, 0.35 m wide, and 0.25 m high. It is equipped with six thrusters that provide five-DOF motion for dexterous underwater grasping tasks, although it is passively stable in roll and pitch unless a special pose adjustment is required. The sAUV is wirelessly controlled and supports three control modes: full autonomy, remote control and shared control. It can receive remote-control signals from a joystick and an onshore computer.

Figure 1.

Structure of the prototype sAUV: (a) Side view with claws; (b) Top view without claws.

Figure 2.

Functional diagram of the sAUV.

Due to budget and weight limits, no acoustic devices are installed in this prototype version. The sAUV uses a forward-looking camera for observation and object searching, and the real-time vision program runs on an independent visual processor. All the other sensors and modules are connected to a microcontroller unit (MCU), which serves as the central brain of the electrical system. The inertial measurement unit (IMU) provides Euler angles in the earth-fixed north-east-down (NED) frame. The wireless communication module handles two-way communication between the sAUV and the external operators, i.e., the joystick and the onshore computer. High-speed communication is established between the MCU and the visual processor to run the shared autonomous control algorithm. When the visual measurement is available, the joystick provides the reference velocities and poses as remote commands; otherwise, the translational motions are directly controlled by the input thrust forces. The control modes are switched according to the task requirements.
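The joystick's two roles described above (reference velocities when vision is available, direct thrust otherwise) can be sketched as a small dispatch function. The function name, the normalized stick range, and the scale factors `v_max` and `f_max` are hypothetical; only the switching rule itself comes from the text.

```python
from typing import Sequence, Tuple


def interpret_translation_sticks(sticks: Sequence[float], visual_available: bool,
                                 v_max: float = 0.4, f_max: float = 20.0
                                 ) -> Tuple[str, Tuple[float, ...]]:
    """Map normalized joystick deflections for (surge, sway, heave) to commands.

    With a visual measurement, the deflections become reference velocities (m/s)
    for the closed-loop controller; without one, they become open-loop thrust
    forces (N). v_max and f_max are hypothetical scale factors.
    """
    clipped = tuple(max(-1.0, min(1.0, s)) for s in sticks)  # guard against over-range input
    if visual_available:
        return "velocity", tuple(v_max * s for s in clipped)
    return "thrust", tuple(f_max * s for s in clipped)


print(interpret_translation_sticks([1.0, -0.5, 0.0], visual_available=True))
print(interpret_translation_sticks([1.0, -0.5, 0.0], visual_available=False))
```

Tagging each command with its type lets the MCU route it either to the velocity controller or straight to thrust allocation without ambiguity.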

2.2. Kinematic Model

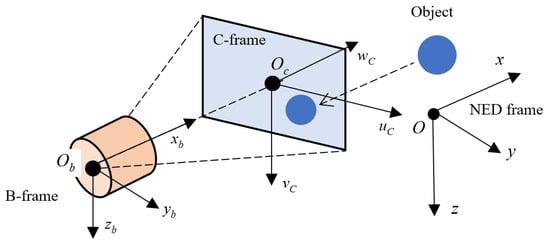

Kinematic and dynamic models of the sAUV are established following the classic models introduced by T.I. Fossen [27]. The positions and poses are expressed in the NED frame, and the sAUV velocities are expressed in the body-fixed coordinate frame (B-frame). The schematic of the kinematic model is shown in Figure 3.

Figure 3.

Schematic of the kinematic model.

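The six-DOF kinematic model is written as:

$$\dot{\boldsymbol{\eta}} = \boldsymbol{J}(\boldsymbol{\eta})\,\boldsymbol{\nu} \tag{1}$$

where $\boldsymbol{\eta} = [x, y, z, \phi, \theta, \psi]^T$ is the position and Euler angle vector in the NED frame, and $\boldsymbol{\nu} = [u, v, w, p, q, r]^T$ is the velocity vector in the B-frame. Given that the sAUV is passively stable in roll and pitch, a decoupled four-DOF model is adopted, consisting of the horizontal-plane motion and an independent vertical motion, which gives the simplified kinematic model:

$$
\begin{aligned}
\dot{x} &= u\cos\psi - v\sin\psi \\
\dot{y} &= u\sin\psi + v\cos\psi \\
\dot{z} &= w \\
\dot{\psi} &= r
\end{aligned}
\tag{2}
$$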

The automatic visual servo control is performed when the target object is recognized by the camera fixed on the front of the sAUV. The rotation matrix from the B-frame to the camera-fixed coordinate frame (C-frame) is given by:

The center position of an object in the C-frame is:

where , , denotes the rotation matrix of the current pose, and is the object position in the NED frame. A position-based visual error function is utilized for the convenience of the controller design. Assuming that the object is expected to be located at in the C-frame in a grasping task, the visual error is then defined as:

The visual error in (5) can be directly obtained from the image captured by the camera. Since the sAUV is self-stabilized in pitch and roll, φ = θ = 0 can be assumed, and the expanded form of (5) becomes:

Using the kinematic model in (2), the time derivative of (6) can be written as:

For most object-grasping tasks, an object can be grabbed from an arbitrary direction once the translational visual error is eliminated by appropriate motion control. Hence, only the translational component of the visual error is considered in this paper, while the Euler angles are measured by the IMU and controlled independently. Equation (7) is equivalent to the kinematic model but is more convenient for the visual servo controller design.
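The chain from the simplified kinematics (2) to a translational visual error can be sketched numerically. The camera mounting (camera x along body y, camera y along body z, optical axis along body x, camera at the body origin) and the sign convention e = desired minus measured are assumptions, since the exact forms of (3) to (6) are not reproduced in this text.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

# Assumed B-frame to C-frame rotation: camera x = body y (starboard),
# camera y = body z (down), camera z = body x (forward, optical axis).
R_BC = ((0.0, 1.0, 0.0),
        (0.0, 0.0, 1.0),
        (1.0, 0.0, 0.0))


def eta_dot(psi: float, u: float, v: float, w: float, r: float) -> Tuple[float, float, float, float]:
    """Simplified kinematics (2) with roll = pitch = 0: returns (x_dot, y_dot, z_dot, psi_dot)."""
    c, s = math.cos(psi), math.sin(psi)
    return (c * u - s * v, s * u + c * v, w, r)


def object_in_camera(p_vehicle: Vec3, psi: float, p_object: Vec3) -> Vec3:
    """Object position in the C-frame: rotate the NED offset into the B-frame, then into C."""
    dx, dy, dz = (po - pv for po, pv in zip(p_object, p_vehicle))
    c, s = math.cos(psi), math.sin(psi)
    body = (c * dx + s * dy, -s * dx + c * dy, dz)  # R_z(psi)^T applied to the NED offset
    return tuple(sum(R_BC[i][j] * body[j] for j in range(3)) for i in range(3))


def visual_error(p_c: Vec3, p_c_desired: Vec3) -> Vec3:
    """Translational visual error (e_u, e_v, e_w); desired minus measured is an assumed convention."""
    return tuple(d - m for d, m in zip(p_c_desired, p_c))


# Initial condition of the simulation in Section 4.1, heading toward the target at the origin.
print(visual_error(object_in_camera((-1.5, -2.0, 1.5), 0.0, (0.0, 0.0, 0.0)), (0.0, 0.0, 0.4)))
```

With these assumptions, e_v equals the depth z whenever the object lies at z = 0, which is consistent with the coincident e_v and z curves reported for Figure 6.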

2.3. Dynamic Model

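The system dynamics in the grasping task are derived from the six-DOF dynamic model [27]:

$$\boldsymbol{M}\dot{\boldsymbol{\nu}} + \boldsymbol{C}(\boldsymbol{\nu})\boldsymbol{\nu} + \boldsymbol{D}(\boldsymbol{\nu})\boldsymbol{\nu} + \boldsymbol{G}(\boldsymbol{\eta}) = \boldsymbol{\tau} \tag{8}$$

where M, C, D, and G are the mass, Coriolis-centripetal, damping, and restoring force terms, respectively, and $\boldsymbol{M} = \boldsymbol{M}_A + \boldsymbol{M}_{RB}$ is the sum of the added-mass and rigid-body mass matrices. τ contains the input forces and torques. Since the sAUV is self-stabilized in pitch and roll, a decoupled four-DOF dynamic model can be obtained by simplifying (8). The higher-order and nonlinear terms in the viscous hydrodynamic force can also be neglected at low speed, which gives: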

The shared controller in the next section is designed based on the kinematic model in (2) and (7), and the dynamic model in (9).
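A minimal numerical sketch of a decoupled, low-speed four-DOF model helps show what the simplification of (8) implies. It assumes one plausible reading of (9): diagonal M, linear damping, and Coriolis and restoring terms dropped (low speed, neutral buoyancy). The numbers are the surge, sway, heave and yaw entries of M and DA from the simulation settings in Section 4.1; reading DA as the linear damping matrix is an assumption.

```python
from typing import List, Sequence

# Surge, sway, heave and yaw entries of M and DA from Section 4.1
# (DA is read here as a linear damping matrix, an assumption).
M4 = (21.0, 23.0, 25.0, 1.8)
D4 = (12.0, 18.0, 20.0, 2.4)


def nu_dot(nu: Sequence[float], tau: Sequence[float],
           m: Sequence[float] = M4, d: Sequence[float] = D4) -> List[float]:
    """Assumed decoupled dynamics, one scalar equation per DOF: m_i * dnu_i/dt + d_i * nu_i = tau_i."""
    return [(t - d_i * n) / m_i for n, t, m_i, d_i in zip(nu, tau, m, d)]


def simulate(tau: Sequence[float], t_end: float = 10.0, dt: float = 0.01) -> List[float]:
    """Forward-Euler response of (u, v, w, r) to a constant input tau, starting from rest."""
    nu = [0.0] * len(tau)
    for _ in range(int(round(t_end / dt))):
        nu = [n + dt * a for n, a in zip(nu, nu_dot(nu, tau))]
    return nu


# A constant 4.8 N surge thrust: steady state 4.8 / 12 = 0.4 m/s, time constant 21 / 12 = 1.75 s.
print(simulate([4.8, 0.0, 0.0, 0.0]))
```

Under this model each DOF is a first-order lag with steady-state velocity tau_i / d_i and time constant m_i / d_i, which is why a velocity-level reference from the kinematic layer can be tracked by a comparatively simple dynamic controller.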

4. Simulation and Experiment

4.1. Simulation

Simulation is conducted in MATLAB using an approximate model of the sAUV. The model parameters applied in the simulation are M = diag(21, 23, 25, 1.3, 2.1, 1.8) and DA = diag(12, 18, 20, 1.5, 2.5, 2.4), while the restoring term G is neglected owing to the passive stability in roll and pitch. The initial model parameter estimates in the controller are chosen to have errors of at least 50%. The controller gains are ku = kv = kw = 0.2, ku = 0.4, kτu = kτv = kτw = 20, and kτr = 10. The importance factors of all the objects are chosen as qi = 1. The initial position of the sAUV is set at P0 = (−1.5, −2, 1.5), with ψ0 = 0 and ψd = 0.5.
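To see why a two-layer scheme can tolerate parameter estimates that are 50% off, consider a surge-only sketch. The gains ku = 0.2 and kτu = 20, the initial x = −1.5 m, the surge mass and damping, and the 50% estimate error are taken from the settings above; the proportional kinematic law and the model-based velocity tracker are hypothetical stand-ins, not the controller designed in Section 3.

```python
from typing import Tuple


def simulate_surge(x0: float = -1.5, x_goal: float = 0.0, t_end: float = 60.0,
                   dt: float = 0.01, m: float = 21.0, d: float = 12.0,
                   est_scale: float = 0.5, k_u: float = 0.2,
                   k_tau: float = 20.0) -> Tuple[float, float]:
    """Surge-only closed loop with deliberately wrong model estimates.

    Kinematic layer: u_ref = k_u * (x_goal - x)   (hypothetical proportional law)
    Dynamic layer:   tau = m_hat * k_tau * (u_ref - u) + d_hat * u
    Plant:           m * du/dt + d * u = tau
    The estimates are m_hat = est_scale * m and d_hat = est_scale * d, i.e. a 50%
    error for the default est_scale.
    """
    m_hat, d_hat = est_scale * m, est_scale * d
    x, u = x0, 0.0
    for _ in range(int(round(t_end / dt))):
        u_ref = k_u * (x_goal - x)
        tau = m_hat * k_tau * (u_ref - u) + d_hat * u
        u += dt * (tau - d * u) / m   # integrate the true surge dynamics
        x += dt * u                   # surge kinematics with psi = 0
    return x, u


x_end, u_end = simulate_surge()
print(f"final position error {abs(x_end):.2e} m, final surge velocity {u_end:.2e} m/s")
```

For this linear stand-in the velocity loop is roughly fifty times faster than the position loop, so the slow closed-loop mode stays close to ku and the position error decays approximately as exp(−0.2 t) even with the underestimated mass and damping.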

The simulation covers three basic operating tasks: automatic visual servoing, shared control with an unskilled operator, and shared control with target switching. These three task units are basic issues that must be addressed in a complete underwater grasping task, and they also reveal the major advantages of the proposed shared controller.

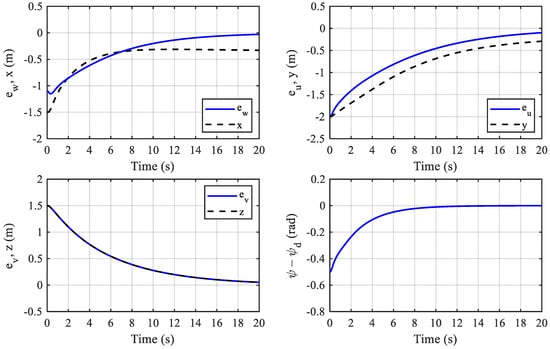

The fully automatic visual servo performance is an important foundation for the shared-control mode and is therefore validated first. In the first task, the sAUV is expected to perform dynamic positioning in front of the target with a desired yaw angle using the visual servo technique. The NED position of the target object is set at Pt = (0, 0, 0), and its desired position in the C-frame is set to (0, 0, 0.4). The simulation results are shown in Figure 6 and Figure 7.

Figure 6.

Pose and visual errors in the automatic control task.

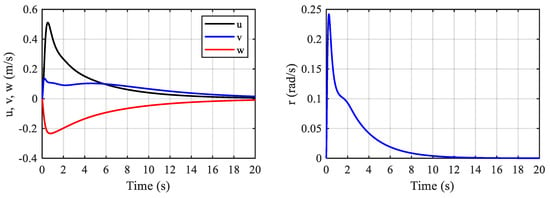

Figure 7.

Translational and rotational velocities in the automatic control task.

It can be observed from Figure 6 that all the visual errors converge smoothly within the simulated time span. Because the desired yaw angle ψd is non-zero, only the curves of ev and z coincide. Figure 7 shows the translational and yaw velocities during the pose-adjustment process. The velocities change smoothly and gradually decrease as the sAUV approaches the target. The maximum translational velocities are close to 0.4 m/s and the maximum yaw velocity is about 0.25 rad/s, which are reasonable values for the prototype.

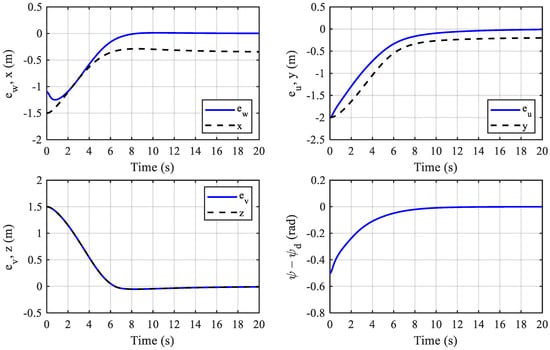

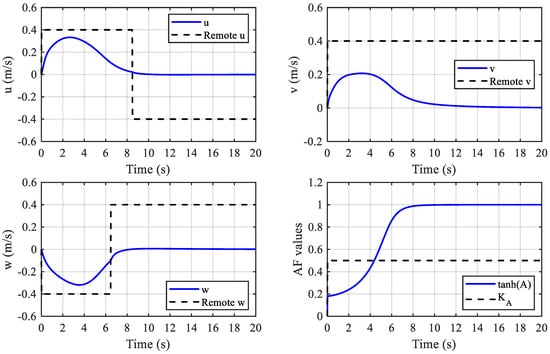

The second task mimics a common situation in which the remote command is provided by an unskilled operator. The operator is assumed to have normal observation ability but very poor operation skills, so that the assistive and corrective ability of the shared-control strategy can be fully revealed. The unskilled operator can recognize the rough direction in which the sAUV deviates from the target object and intends to approach and grasp it with rough speed control. Specifically, the operator provides constant reference velocities in the correct directions whenever the position deviation is recognizable, but cannot adjust the velocity magnitudes according to the deviation distance. As in the previous task, the target object is located at (0, 0, 0) in the NED frame, and its desired C-frame position is (0, 0, 0.4). The corresponding results are shown in Figure 8 and Figure 9.

Figure 8.

Pose and visual errors in the shared-control task.

Figure 9.

Velocities and AF values in the shared-control task.

Compared to Figure 6, the results in Figure 8 show a much faster convergence of the visual errors, owing to the remote-control signals that drive the sAUV toward the target object. Figure 9 compares the actual velocities with the remote-control velocities. During the first 6 to 8 s, the visual errors are large, and the operator provides constant translational velocity commands of 0.4 m/s to eliminate the errors. These initial remote-control signals are correct and helpful, and they accelerate the convergence of the visual errors.

When each translational error becomes small, the corresponding remote-control signals of u and w change to the opposite direction, and all the large remote-control velocities are regarded as wrong commands from the unskilled operator. However, because the AF intensity increases as the sAUV approaches the target, the overall velocities are mainly determined by the automatic controller. The harmful disturbances caused by the rough remote commands are thus eliminated by the shared controller described in (16). Consequently, the results of the second task demonstrate that the shared-control strategy successfully improves the control performance of an unskilled operator.

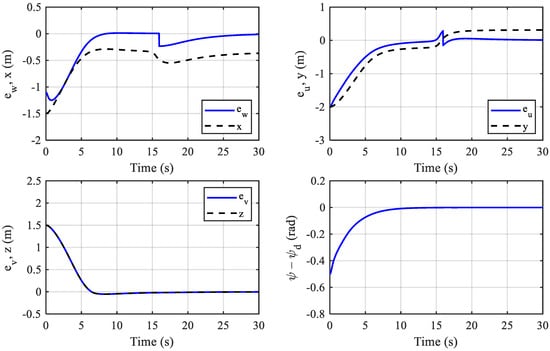

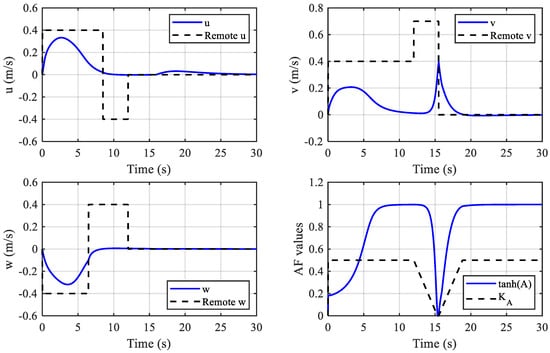

The third task evaluates the target-switching performance when the operator wants to pick a target object far from the current candidate objects. The distracting candidates near the target object are not meant to be picked up immediately, but they generate disturbing AFs during the switching process before the correct target is reached. During the switching process, the operator first provides a constant reference velocity toward the candidate target at (0, 0, 0). When the sAUV is attracted by the candidate target, the operator gives a large escape velocity command in v toward the correct target at Pt2 = (0, 0.5, 0). The desired C-frame position is (0, 0, 0.4) for both the candidate and correct targets. The corresponding results are shown in Figure 10 and Figure 11.

Figure 10.

Pose and visual errors in the target switching task.

Figure 11.

Velocities and AF values in the target switching task.

The visual error and yaw angle curves in Figure 10 show a rapid and smooth target switch. The visual errors ew and eu change abruptly, whereas the position coordinates x and y change smoothly, because the visual errors are recalculated when the active AF switches from the candidate target to the correct target. Similar abrupt changes in the velocities u and v also appear in Figure 11.

Note that after 12 s the operator gives a remote-control signal only in the sway velocity v to switch the target. When the reference velocity deviation of (17) in any direction exceeds the threshold value, the attraction coefficient KA drops rapidly, resulting in a rather low AF intensity near 15 s, as shown in Figure 11. The operator thus has high authority to steer the sAUV quickly to the new target until the reference velocity deviation falls below the recovery threshold. The duration of the low-AF-intensity period is therefore also flexibly controlled by the operator. In general, the results demonstrate good target-switching performance with the proposed shared-control strategy.
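The escape-and-recovery behavior of KA described above is a two-threshold (hysteresis) rule, which can be sketched as a small state machine. The threshold values, the two KA levels, and the instantaneous switch between them are illustrative assumptions; the deviation measure here is simply the largest per-axis difference between the operator's and the automatic reference velocities, standing in for the deviation of (17).

```python
from typing import Sequence


class AttractionCoefficient:
    """Hypothetical hysteresis law for the attraction coefficient K_A.

    If the deviation between the operator's reference velocity and the automatic
    reference velocity exceeds escape_threshold in any axis, K_A drops to k_low.
    It returns to k_high only once the deviation in every axis is below
    recovery_threshold. Thresholds and levels are illustrative assumptions.
    """

    def __init__(self, escape_threshold: float = 0.2, recovery_threshold: float = 0.05,
                 k_high: float = 1.0, k_low: float = 0.05) -> None:
        if not 0.0 <= recovery_threshold < escape_threshold:
            raise ValueError("need 0 <= recovery_threshold < escape_threshold")
        self.escape_threshold = escape_threshold
        self.recovery_threshold = recovery_threshold
        self.k_high = k_high
        self.k_low = k_low
        self.escaping = False  # True while the operator is overriding the field

    def update(self, v_human: Sequence[float], v_auto: Sequence[float]) -> float:
        deviation = max(abs(h - a) for h, a in zip(v_human, v_auto))
        if not self.escaping and deviation > self.escape_threshold:
            self.escaping = True
        elif self.escaping and deviation < self.recovery_threshold:
            self.escaping = False
        return self.k_low if self.escaping else self.k_high


# The operator pushes sideways (v) to leave the candidate target, then eases off.
k_a = AttractionCoefficient()
for v_cmd in (0.0, 0.5, 0.5, 0.1, 0.02):
    print(v_cmd, k_a.update([0.0, v_cmd, 0.0], [0.0, 0.0, 0.0]))
```

Separating the escape and recovery thresholds prevents KA from chattering when the deviation hovers near a single threshold; a rate-limited ramp could replace the step change if a smoother hand-over is preferred.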

4.2. Experiment

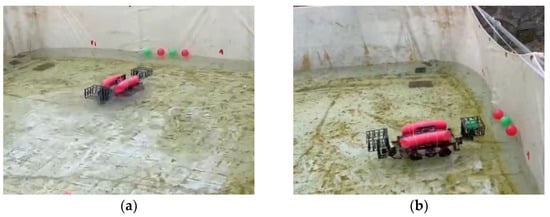

An object-grasping experiment is conducted on the prototype sAUV in a water pool. Due to the limited depth of the pool, the sAUV performs three-DOF planar motion on the water surface, which is still sufficient to verify the shared-control performance. Four plastic balls in two different colors are attached to one side of the pool to serve as target objects, as shown in Figure 12. The sAUV first follows a desired path, stops in front of the target objects, and then grasps two of the objects according to the task requirements. In task one, the sAUV is required to grasp any two of the objects regardless of their colors. In task two, the sAUV is required to grasp the two green objects without damaging the red ones. Task two therefore requires more skillful remote operation.

Figure 12.

Water pool experiment. (a) The sAUV and target objects. (b) Shared control for object grasping.

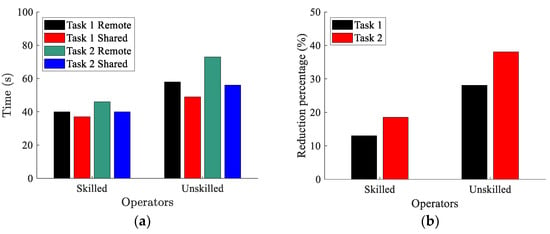

The grasping tasks are conducted by both a skilled and an unskilled operator, in both the pure remote-control mode and the shared-control mode. To demonstrate the general efficiency improvement statistically, each task is repeated 10 times in each mode by each operator, and the average times are compared in Figure 13a,b.

Figure 13.

Comparison of the operating time in the experiment. (a) Operating time for different tasks and operators. (b) Visual servo time reduction percentage with the shared-control mode.

Figure 13a shows that the shared-control strategy reduces the average operation time for both operators. The time saving is moderate for the skilled operator, because the skilled operator's pure remote-control performance is already closer to that of the automatic system. The time saving for the unskilled operator is more significant, because the shared-control strategy effectively corrects the operator's inappropriate commands and provides greater help with the precise pose adjustment before grasping. The time-saving effect is also more significant in task two for both operators, because shared control is more helpful as the manual operation complexity increases.

Figure 13b shows the time reduction of the visual servo process in the grasping experiment, i.e., the total time needed to stop in front of each object before grasping. This process is regarded as the most challenging one for an unskilled operator. The time-saving effect follows a similar trend to Figure 13a but is more significant for the visual servo process. With the help of the shared controller, the time needed by the unskilled operator for the visual servo process of task two is reduced by nearly 40%. Besides the time saving, both operators also report a much easier and more relaxed operating experience in the shared-control mode. In conclusion, the proposed shared-control method significantly improves the efficiency of the grasping tasks.
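The reduction percentage in Figure 13b can be computed from the repeated trials as a relative saving of the mean shared-control time over the mean pure-remote time. This definition is an assumption, as the text does not state the formula explicitly; the values used in any example call should be placeholders, not the measured data.

```python
from statistics import mean
from typing import Sequence


def time_reduction_percent(remote_times: Sequence[float],
                           shared_times: Sequence[float]) -> float:
    """Relative saving (%) of the mean shared-control time over the mean remote-control time."""
    t_remote, t_shared = mean(remote_times), mean(shared_times)
    if t_remote <= 0:
        raise ValueError("mean remote-control time must be positive")
    return 100.0 * (t_remote - t_shared) / t_remote
```

Averaging each mode over its 10 repetitions before taking the ratio keeps a single unusually slow or fast trial from dominating the reported percentage.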

5. Conclusions

A visual-aided shared-control method is proposed and validated in this paper to improve the underwater grasping efficiency of the sAUV. The control algorithm uses the variable AF to adjust the authority weights of the human command and the automatic controller, taking full advantage of human intelligence for decision-making and of the automatic system for precise control. Compared to pure remote operation, the proposed shared controller significantly improves the efficiency of underwater grasping tasks and reduces the operating skill required of the operators.

The proposed method provides a valuable reference for controlling various kinds of underwater vehicles. Future work will proceed with algorithm improvements to consider practical issues in underwater environments, including measurement noise, water turbidity, communication delays and other environmental disturbances. Experiments will also be conducted with more complex tasks. The proposed method is believed to have great potential in real applications of underwater exploration and manipulation.

Author Contributions

Conceptualization, T.W.; methodology, T.W. and Z.S.; software, T.W. and F.D.; validation, T.W. and F.D.; writing—original draft, T.W.; funding acquisition, T.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the National Natural Science Foundation of China (Grant No. 52201398), and the Natural Science Foundation of Jiangsu Province, China (Grant No. BK20220343).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data will be made available on request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Sahoo, A.; Dwivedy, S.K.; Robi, P.S. Advancements in the field of autonomous underwater vehicle. Ocean Eng. 2019, 181, 145–160.

- Wang, T.; Zhao, Q.; Yang, C. Visual navigation and docking for a planar type AUV docking and charging system. Ocean Eng. 2021, 224, 108744.

- Hong, S.; Chung, D.; Kim, J.; Kim, Y.; Kim, A.; Yoon, H.K. In-water visual ship hull inspection using a hover-capable underwater vehicle with stereo vision. J. Field Robot. 2018, 36, 531–546.

- Peng, Z.; Wang, J.; Han, Q. Path-Following Control of Autonomous Underwater Vehicles Subject to Velocity and Input Constraints via Neurodynamic Optimization. IEEE Trans. Ind. Electron. 2019, 66, 8724–8732.

- Xie, T.; Li, Y.; Jiang, Y.; Pang, S.; Wu, H. Turning circle based trajectory planning method of an underactuated AUV for the mobile docking mission. Ocean Eng. 2021, 236, 109546.

- Huang, H.; Tang, Q.; Li, J.; Zhang, W.; Bao, X.; Zhu, H.; Wang, G. A review on underwater autonomous environmental perception and target grasp, the challenge of robotic organism capture. Ocean Eng. 2020, 195, 106644.

- Birk, A.; Doernbach, T.; Müller, C.A.; Luczynski, T.; Chavez, A.G.; Köhntopp, D.; Kupcsik, A.; Calinon, S.; Tanwani, A.K.; Antonelli, G.; et al. Dexterous Underwater Manipulation from Onshore Locations: Streamlining Efficiencies for Remotely Operated Underwater Vehicles. IEEE Robot. Autom. Mag. 2018, 25, 24–33.

- Youakim, D.; Cieslak, P.; Dornbush, A.; Palomer, A.; Ridao, P.; Likhachev, M. Multirepresentation, Multiheuristic A* search-based motion planning for a free-floating underwater vehicle-manipulator system in unknown environment. J. Field Robot. 2020, 37, 925–950.

- Stuart, H.; Wang, S.; Khatib, O.; Cutkosky, M.R. The Ocean One hands: An adaptive design for robust marine manipulation. Int. J. Robot. Res. 2017, 36, 150–166.

- Sarda, E.I.; Dhanak, M.R. A USV-Based Automated Launch and Recovery System for AUVs. IEEE J. Ocean. Eng. 2017, 42, 37–55.

- Palomeras, N.; Vallicrosa, G.; Mallios, A.; Bosch, J.; Vidal, E.; Hurtos, N.; Carreras, M.; Ridao, P. AUV homing and docking for remote operations. Ocean Eng. 2018, 154, 106–120.

- Yazdani, A.M.; Sammut, K.; Yakimenko, O.; Lammas, A. A survey of underwater docking guidance systems. Robot. Auton. Syst. 2020, 124, 103382.

- Zhang, S.; Zhao, S.; An, D.; Liu, J.; Wang, H.; Feng, Y.; Li, D.; Zhao, R. Visual SLAM for underwater vehicles: A survey. Comput. Sci. Rev. 2022, 46, 100510.

- Wang, R.; Wang, X.; Zhu, M.; Lin, Y. Application of a Real-Time Visualization Method of AUVs in Underwater Visual Localization. Appl. Sci. 2019, 9, 1428.

- Palomeras, N.; Peñalver, A.; Massot-Campos, M.; Negre, P.; Fernández, J.; Ridao, P.; Sanz, P.; Oliver-Codina, G. I-AUV Docking and Panel Intervention at Sea. Sensors 2016, 16, 1673.

- Kimball, P.W.; Clark, E.B.; Scully, M.; Richmond, K.; Flesher, C.; Lindzey, L.E.; Harman, J.; Huffstutler, K.; Lawrence, J.; Lelievre, S.; et al. The ARTEMIS under-ice AUV docking system. J. Field Robot. 2018, 35, 299–308.

- Khadhraoui, A.; Beji, L.; Otmane, S.; Abichou, A. Stabilizing control and human scale simulation of a submarine ROV navigation. Ocean Eng. 2016, 114, 66–78.

- Batmani, Y.; Najafi, S. Event-Triggered H∞ Depth Control of Remotely Operated Underwater Vehicles. IEEE Trans. Syst. Man Cybern. Syst. 2021, 51, 1224–1232.

- Zhao, C.; Thies, P.R.; Johanning, L. Investigating the winch performance in an ASV/ROV autonomous inspection system. Appl. Ocean Res. 2021, 115, 102827.

- Lawrance, N.; Debortoli, R.; Jones, D.; Mccammon, S.; Milliken, L.; Nicolai, A.; Somers, T.; Hollinger, G. Shared autonomy for low-cost underwater vehicles. J. Field Robot. 2019, 36, 495–516.

- Li, G.; Li, Q.; Yang, C.; Su, Y.; Yuan, Z.; Wu, X. The Classification and New Trends of Shared Control Strategies in Telerobotic Systems: A Survey. IEEE Trans. Haptics 2023, 16, 118–133.

- Liu, S.; Yao, S.; Zhu, G.; Zhang, X.; Yang, R. Operation Status of Teleoperator Based Shared Control Telerobotic System. J. Intell. Robot. Syst. 2021, 101, 8.

- Selvaggio, M.; Cacace, J.; Pacchierotti, C.; Ruggiero, F.; Giordano, P.R. A Shared-Control Teleoperation Architecture for Nonprehensile Object Transportation. IEEE Trans. Robot. 2022, 38, 569–583.

- Li, M.; Song, X.; Cao, H.; Wang, J.; Huang, Y.; Hu, C.; Wang, H. Shared control with a novel dynamic authority allocation strategy based on game theory and driving safety field. Mech. Syst. Signal Proc. 2019, 124, 199–216.

- Li, R.; Li, Y.; Li, S.E.; Zhang, C.; Burdet, E.; Cheng, B. Indirect Shared Control for Cooperative Driving Between Driver and Automation in Steer-by-Wire Vehicles. IEEE Trans. Intell. Transp. Syst. 2021, 22, 7826–7836.

- Xia, P.; You, H.; Ye, Y.; Du, J. ROV teleoperation via human body motion mapping: Design and experiment. Comput. Ind. 2023, 150, 103959.

- Fossen, T.I. Handbook of Marine Craft Hydrodynamics and Motion Control; Wiley: New York, NY, USA, 2011.

- Muratore, L.; Laurenzi, A.; Hoffman, E.M.; Baccelliere, L.; Kashiri, N.; Caldwell, D.G.; Tsagarakis, N.G. Enhanced Tele-interaction in Unknown Environments Using Semi-Autonomous Motion and Impedance Regulation Principles. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 5813–5820.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).