Abstract

In the functioning of the hand–eye collaboration of an apple picking robot, the accuracy of the hand–eye relationship is a key factor affecting the efficiency and accuracy of the robot’s operation. In order to enhance the low accuracy of traditional hand–eye calibration methods, linear and nonlinear solving methods based on mathematical tools such as quaternions are commonly adopted. To solve the loss of accuracy in decoupling during the linearization solution and to reduce the cumulative error that occurs during nonlinear solutions, a hand–eye calibration method, based on the ICP algorithm, is proposed in this paper. The method initializes the ICP matching algorithm with a solution derived from Tsai–Lenz, and substitutes it for iterative computation, thereby ascertaining a precise hand–eye conversion relationship by optimizing the error threshold and iteration count in the ICP matching process. Experimental results demonstrate that the ICP-based hand–eye calibration optimization algorithm not only circumvents the issues pertaining to accuracy loss and significant errors during solving, but also enhances the rotation accuracy by 13.6% and the translation accuracy by 2.47% compared with the work presented by Tsai–Lenz.

1. Introduction

Agricultural harvesting robots are electromechanical devices that integrate mechanization, automation, and intelligence. They have been researched and applied extensively in agricultural production. These robots are typically composed of components such as AGV automatic guided vehicles, end effectors, 3D vision cameras, and collaborative robotic arms [1,2,3]. During the harvesting process, the 3D vision camera perceives targets and extracts their pose information. Subsequently, after hand–eye calibration, the pose in the camera coordination frame is transformed into a pose relative to the robotic arm’s base coordination frame [4]. The accuracy of hand–eye calibration is a crucial factor for the efficient operation of agricultural harvesting robots. The task of hand–eye calibration primarily involves solving for in to determine the hand–eye calibration relationship. In hand–eye calibration, the equation represents the transformation relationship between the camera frame and the robotic arm frame. A and B denote the transformation matrices of the camera frames and robotic arm frames relations, respectively. is the solution sought in the hand–eye calibration equation . This equation delineates the translation and rotation relationship between the camera and robotic arm coordinate frames. Through solving this equation, precise hand–eye calibration results can be obtained, facilitating accurate spatial localization and control. Hence, the accuracy of X’s solution determines the precision of hand–eye calibration [5]. For the problem of solving , various scholars have employed different mathematical methods for the equation . Common approaches include linear or nonlinear solving methods based on mathematical tools such as dual quaternions, rotation vectors, and so on [6,7,8,9,10,11,12,13,14,15]. The Tsai–Lenz method employs a nonlinear solving approach based on the least squares method for hand–eye calibration equations [16]. It employs a two-step process, initially determining the rotation matrix followed by implementing nonlinear computations for translational errors. Nonetheless, solving for the rotation matrix can potentially result in error accumulation, which subsequently propagates errors affecting translational accuracy throughout the procedure. Heikkila et al. [17] enhanced the approach through introducing a four-step calibration method; this approach surpasses the original two-step method. They directly employed a linear solving method to solve , followed by applying Levenberg–Marquardt optimization for further refinement. Daniilidis et al. [18] proposed a solution method based on dual quaternions. In comparison to the two-step approach, this method constructs a new set of linear equations using quaternions and then employs SVD (singular value decomposition) for the simultaneous solution of . However, there are issues of coupled accuracy loss during the solving process. Qi et al. [19], building upon the dual quaternion algorithm, incorporated the characteristics of data on SO(4) to solve the problem. Compared to the dual quaternion algorithm, their method demonstrates improved stability and practicality. Van et al. [20] proposed a solving method based on the exponential formula product and linearly constrained singular value decomposition least squares algorithm to enhance calibration accuracy. With the progress in 3D camera and LiDAR sensor technologies, the utilization of 3D point cloud data in rigid registration has witnessed substantial advancement [21,22,23]. Point cloud registration involves finding a rigid transformation to align one point cloud as closely as possible with another, encompassing translation and rotation. The iterative closest point (ICP) algorithm and its variants are widely used methods for precise point cloud registration [24,25,26,27,28]. Zhao et al. [29] proposed a method for re-matching fractured rigid fractured surfaces in point clouds based on the ICP algorithm. Zhang et al. [30] introduced a 3D map creation method for motion estimation using a feature-based ICP algorithm with a discrete selection mechanism. Although the ICP matching algorithm is widely employed in point cloud registration, it demands accurate initial matches; otherwise, it may suffer from local convergence issues leading to substantial errors. To address these challenges, this paper presents an ICP iterative point cloud registration and hand–eye calibration method based on the Tsai–Lenz algorithm. This method employs the Tsai–Lenz algorithm to solve in ; X facilitates the conversion of the calibration board’s feature points’ point cloud pose from the camera coordinate system to a pose relative to the robotic arm’s base coordinates. Simultaneously, an ICP matching process is performed with the feature points’ point cloud in the tool center point (TCP) frame of the robotic arm. serves as the initial value for ICP matching. Upon completion of the matching process, the obtained rigid transformation becomes the refined hand–eye transformation.

2. Materials and Methods

2.1. Hand–Eye Calibration Model

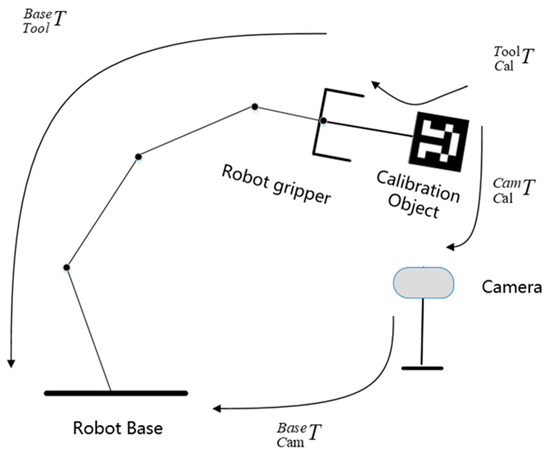

According to the camera installation method, there are two approaches: “eye-in-hand” and “eye-to-hand” [31]. In the “eye-in-hand” scenario, the camera is mounted on the robotic arm’s end effector and moves along with the robot’s motion. In the “eye-to-hand” scenario, the camera remains fixed in position but can observe the robotic arm and the operating area. The “eye-to-hand” visual system maintains a fixed field of view, ensuring that target information is not lost when the robotic arm moves. The calibration objective in this scenario is to establish the transformation relationship between camera frame and robotic arm frame. This paper is based on this installation approach for modeling, as illustrated in Figure 1.

Figure 1.

Hand–eye calibration model.

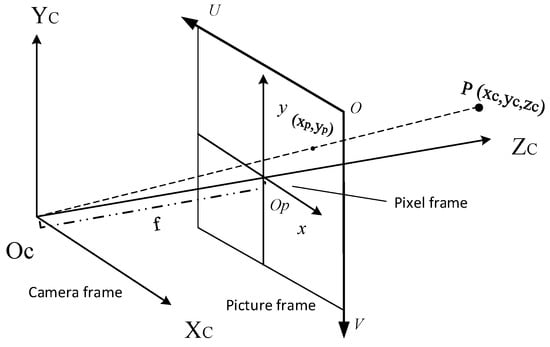

In Figure 1, represents the transformation relationship of the calibration board frame with respect to the end effector frame, represents the transformation relationship of the calibration board frame with respect to the camera frame, represents the transformation relationship of the camera frame with respect to the robotic arm’s base frame, and represents the transformation relationship of the end effector frame with respect to the robotic arm’s base frame. signifies the hand–eye transformation relationship, which corresponds to in the hand–eye calibration equation . During the grasping process of the harvesting robot, the 3D vision camera perceives and captures the pose of an apple. The imaging principle of a point in the camera frame is illustrated in Figure 2.

Figure 2.

Camera imaging model.

Point is imaged in the pixel coordinate frame , and after undergoing the transformation in the image coordinate frame and subsequent projection, it is imaged in the camera coordinate frame . The transformation relationship is represented by Equation (1).

In Equation (1), , represents the row and column of the object’s imaged pattern in the pixel coordinate frame, represents the unit size length on the and axes for each pixel in the optical sensor, represents the camera focal length, and represents the camera intrinsic parameters, which can generally be obtained through the Zhang calibration method. Hand–eye coordination tasks require transforming a point in the camera coordinate frame into a point relative to the robotic arm’s base coordinate frame using the transformation relationship . This enables the robotic arm to perform harvesting operations. can be represented using rotation matrix and translation vector . This relationship is illustrated in Equation (2).

2.2. Hand–Eye Calibration Algorithm Based on ICP

2.2.1. The ICP Matching Algorithm Is Solved at the Initial Value

The Tsai–Lenz method is employed to obtain the initial values for ICP matching. In the hand–eye calibration model shown in Figure 1, and are fixed values. can be obtained through camera calibration, and can be derived from the robotic arm’s forward kinematics equation. The transformation of the camera’s coordinate frame relative to the robotic arm’s base coordinate frame is represented by Equation (3):

is a constant, and through transformation, Equation (4) can be derived.

During the calibration process, the poses of calibration board feature points need to be captured in the camera coordinate frame. Simultaneously, the poses of the robotic arm’s end effector coordinate frame must be recorded at different positions. The recorded calibration relationships for the first set are denoted as , and for the second set as . A total of sets are collected (), and they are collectively expressed in the form of a homogeneous system of equations, as shown in Equation (5).

By combining the equations from the first and second sets, Equation (6) can be derived:

Left-multiplying Equation (6) by and right-multiplying it by , where represents the unknowns; through setting as , we obtain Equation (7):

Further simplifying Equation (5) leads to Equation (8):

Coupling and results in , in Equation , and then integrating Equation (8) with , . Using a two-step approach, we obtain Equation (9):

Using a two-step approach, the rotation matrix is first solved, followed by the nonlinear calculation of the translation vector . To solve for the rotation matrix , we employ the Rodrigues transformation. This transforms it into the Rodrigues parameter form by utilizing the product of the axis vector and the rotation angle , denoted as . Here, represents a unit vector, and represents the rotation angle. The rotational vector relationship is calculated using the Skew matrix, as shown in Equation (10):

In Equation (10), represents the homogeneous coordinate vector of the feature point in the robotic end effector coordinate frame, represents the homogeneous coordinate vector of the feature point in the camera coordinate frame, and denotes the inverse hand–eye transformation relationship. Further calculations are performed to derive the rotational vector ,. The solved value of is then substituted into Equation (11) to determine .

Substitute into Equation (9) to solve for .

2.2.2. Design of Hand–Eye Calibration Method Based on ICP

Just as a rigid body maintains a consistent transformation relationship in space across various positions, the point cloud feature descriptors of a rigid body exhibits specific coordinate expression relationships at different spatial positions. Point cloud registration involves calculating the coordinate expression relationship between corresponding feature points of the target and source point clouds in space, which allows us to determine the spatial coordinate expression relationship between the two-point clouds. The mathematical model is described as follows: In space, there is a source point cloud , and a target point cloud , where represents the number of points in the source and target point clouds, and . In point cloud registration, both the source and target point clouds are samples of the same object, obtained from different angles or positions. The source point cloud is the one we aim to adjust or transform, so that it aligns as closely as possible with the target point cloud through rigid transformations (translation and rotation). This process is known as point cloud registration, as shown in Equation (12), where represents the rotation matrix of the rigid transformation, and is the translation vector.

Point cloud registration typically consists of two processes: coarse registration and fine registration. Coarse registration aims to establish a preliminary correspondence between two-point clouds. This provides a more accurate initial value for fine registration, reducing computation and enhancing matching efficiency. Currently, the ICP algorithm is one widely used method for fine registration. Due to the relatively low accuracy of traditional Tsai–Lenz hand–eye transformation relationships, there still exist positional discrepancies between the transformed calibration board feature points’ point cloud and the points located beneath the robotic arm’s tool. ICP calculates the optimal rigid transformation using the least squares method, iteratively solving to minimize the Euclidean distance between corresponding points of the source and target point clouds. This ensures the maximum possible overlap between the two-point clouds, offering features such as good matching performance and robustness. The steps of hand–eye calibration based on ICP are as follows:

Step (1): Use camera to capture different poses of the calibration board feature point cloud, referred to as point cloud . Simultaneously, move the robotic arm tool to collect sets of point clouds of the calibration board feature points, referred to as point cloud .

Step (2): Set the hand–eye transformation relationship obtained from Tsai–Lenz as the initial value for ICP point cloud matching. Set the matching error threshold as and the maximum number of iterations as .

Step (3): Substitute the collected point cloud data into ICP for matching.

Step (4): Iteratively perform matching until the matching convergence conditions are met.

Step (5): Upon convergence, output the rigid transformation relationship, which represents the improved hand–eye calibration relationship in terms of accuracy.

During the ICP matching process, the Euclidean distance between point cloud and point cloud is given by Equation (13). In the equation, represents the corresponding points in point cloud and point cloud , while represents the maximum number of corresponding point pairs.

With each iteration, a new is generated. The Euclidean distance between the corresponding points in point cloud and point cloud after the ()th iteration’s rigid transformation is denoted as . The rotation matrix under this rigid transformation is represented by , and the translation vector is represented by . This is shown in Equation (14).

The iteration continues until either the specified maximum iteration count is reached, or the error threshold falls below the predetermined threshold, whichever stopping condition occurs first. Assuming the ()th iteration did not converge, the spatial positions of point cloud and point cloud based on the th iteration’s rigid transformation will be continued for the ()th iteration, and the rotation matrix , translation vector , and Euclidean distance for the kth iteration will be output. This is shown in Equations (15) and (16).

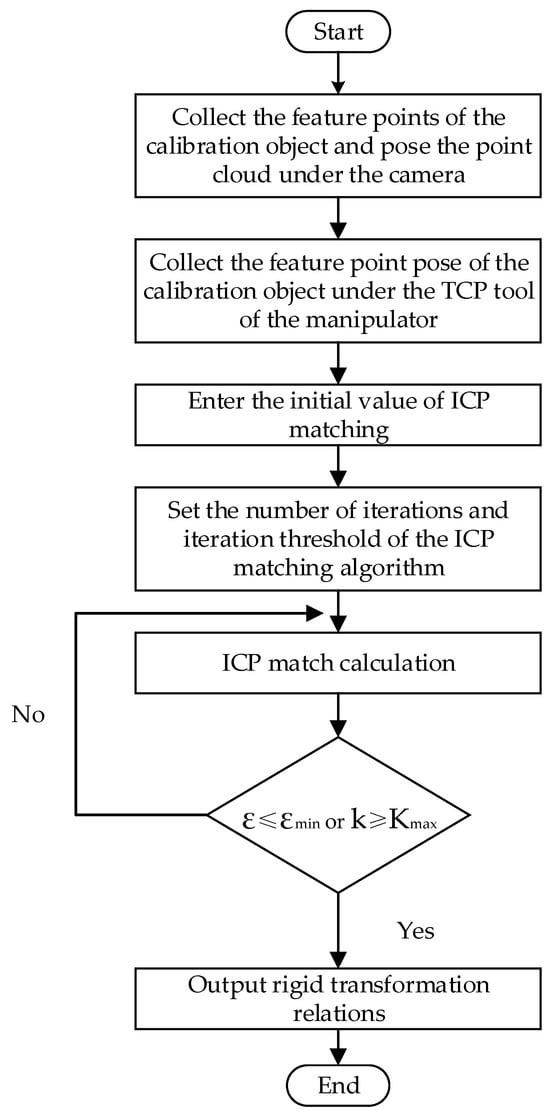

In the computation process, it is imperative to incorporate an error threshold and a specified number of iterations to determine when optimal results should be achieved. Otherwise, the efficiency of point cloud registration will be affected due to the large computational workload. In the point cloud registration process, the point cloud error is . When the corresponding point cloud error is for the ()th iteration, the rigid transformation is deemed optimal, and at this point, the corresponding rigid transformation relationship is outputted. Alternatively, a maximum number of iterations can be set, and when the number of iterations is reached, the computation is stopped, and the rigid transformation relationship , at this point, is outputted. This rigid transformation relationship represents the calibrated relationship between the hand and eye with improved accuracy. The flowchart is shown in Figure 3.

Figure 3.

Based on the ICP hand–eye calibration method.

3. Results and Discussion

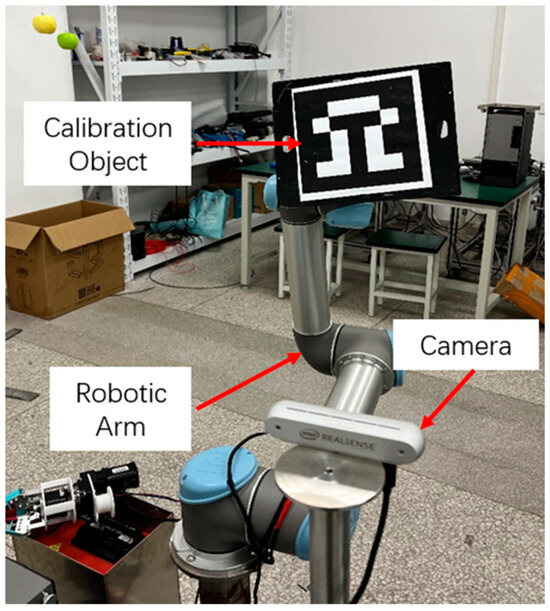

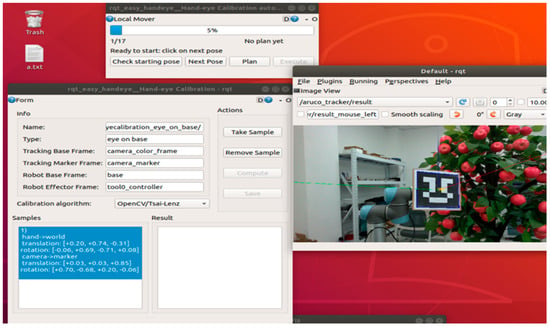

To verify the feasibility of the hand–eye calibration method based on ICP, experiments using an apple-picking robot with an eye-in-hand configuration was conducted. The experimental platform, consisting of both hardware and software components, is shown in Figure 4.

Figure 4.

Hand–eye calibration experiment site.

The visual sensor used is the RealSense D455 camera, and the UR5e six-axis robotic arm is employed. The specific equipment models and specifications are listed in Table 1. Programming software and simulation platforms such as CloudCompare (v.2.3), Matlab (v.R2021b), and ROS (v.melodic.18.04) were utilized for experimental simulations.

Table 1.

Parameters of experimental instruments and equipment.

To obtain the initial values for ICP iteration in the hand–eye calibration, we gathered the poses of the ArUco-582 calibration board’s feature points at various orientations within the camera’s field of view. Simultaneously, we recorded the coordinates of the end effector’s center point under the robotic arm’s base. A total of 20 sets of data were collected. The Tsai–Lenz hand–eye calibration method was used to obtain the initial values for ICP matching, and the calibration process is illustrated in Figure 5.

Figure 5.

The initial value of ICP matching was solved based on the Tsai–Lenz hand–eye calibration method.

The resulting rotation matrix and translation vectors are shown in Table 2.

Table 2.

The rotation matrix and translation vector are obtained based on the Tsai–Lenz hand–eye calibration method.

To examine the impact of ICP matching initial values and matching parameters on the accuracy of the rigid transformation relationship in the matching output, we utilized the aforementioned solutions as the initial values for the ICP matching process. Comparative experiments were carried out by varying the ICP matching initial values, matching error threshold , and maximum iteration count . The rotational error is computed using Formula (17), while Formula (18) is employed to calculate the translational error:

“RARX-RXRB” is a method employed for quantifying errors in hand–eye calibration, commonly utilized to evaluate the disparities between empirically measured values and theoretically estimated values.

RARX: This term denotes the disparity between the actual rotation matrix (R_actual) and the anticipated rotation matrix (R_expected). This matrix encapsulates the rotational association between the camera and the robotic arm’s end effector.

RXRB: This term signifies the contrast between the rotation matrix of the robotic arm’s end effector (R_arm) and the rotation matrix of the robotic arm’s base (R_base). This matrix characterizes the rotational connection of the robotic arm.

Consequently, the “RARX-RXRB” computation quantifies the rotational error between the camera and the robotic arm’s end effector, with the influence of the robotic arm’s rotation factored out. This calculation imparts valuable insights into the precision of hand–eye calibration by assessing the deviation between the actual and anticipated camera positions, while accounting for the impact of the robotic arm’s rotation.

The expression “RXTX-RXTB-TX+TA” constitutes a formula employed for the computation of translational error, involving matrix and vector operations.

RXTX: This term signifies the product of the actual rotation matrix (R_actual) and the actual translational vector (T_actual). It captures the authentic transformational relationship between the camera and the robotic arm’s end effector.

RXTB: This term represents the product of the actual rotation matrix (R_actual) and the anticipated translational vector (T_expected). It characterizes the projected transformational relationship between the camera and the robotic arm’s end effector.

TX: This refers to the actual translational vector (T_actual).

TA: This symbolizes the projected translational vector (T_expected).

Consequently, the computation expressed as “RXTX-RXTB-TX+TA” shows cases the extent of translational error between the factual and envisaged transformational associations. This calculation serves to evaluate the accuracy of the translational correlation between the camera and the robotic arm’s end effector. Lesser error values denote a closer alignment of the actual transformational relationship with the anticipated one, thereby reflecting minimized translational error during the process of hand–eye calibration.

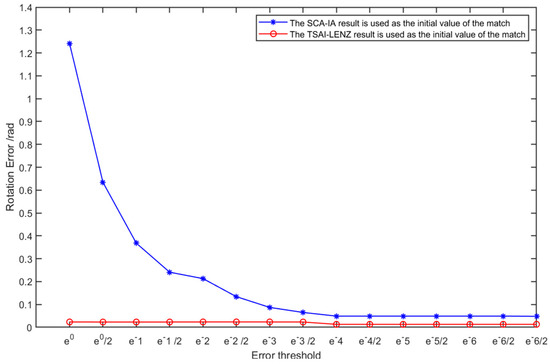

Given that the value of remains constant, experiments were conducted to examine the impact of varying ICP matching error thresholds on the accuracy of rigid transformation relationships in the matching outputs, as shown in Figure 6.

Figure 6.

Under the same premise, the influence of different error thresholds on the rotational accuracy of the rigid transformation relationship of ICP matching output.

From Figure 6, it can be observed that under the condition of the same maximum iteration count , the value of the maximum iteration count is set to 1000. When the result of Tsai–Lenz hand–eye calibration is used as the initial value for ICP matching, and the matching error threshold is set to , the convergence of the rigid transformation matrix remains unchanged. Similarly, when the rigid transformation relationship from the matching output of the SCA-IA algorithm is employed as the initial value for ICP matching, and the matching error threshold is set to , there is no alteration in the convergence of the rigid transformation matrix. Different initial values for ICP matching lead to variations in the accuracy and efficiency of obtaining the rigid transformation relationship. The accuracy of the rigid transformation relationship obtained when utilizing the result of Tsai–Lenz hand–eye calibration as the initial value for ICP matching is higher than the accuracy achieved using the SCA-IA algorithm.

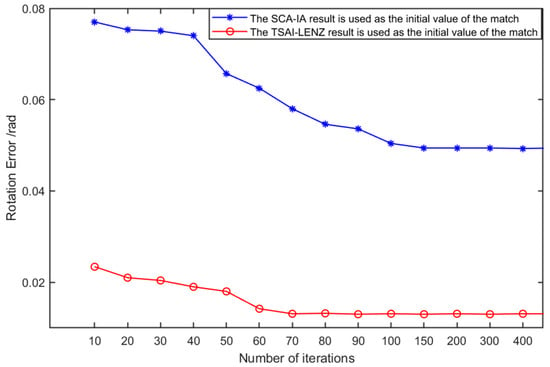

Similarly, under the condition of the same error threshold , the value of the error threshold is set to . Experiments were conducted to investigate the impact of varying ICP matching maximum iteration counts on the accuracy of rigid transformation relationships in the matching outputs, as illustrated in Figure 7.

Figure 7.

Under the same premise of , the influence of different maximum iterations on the rotational accuracy of the rigid relationship of ICP matching output.

As indicated by the findings presented in Figure 7, when employing the outcomes of the Tsai–Lenz hand–eye calibration as the initial inputs for the ICP matching process, and with a designated matching iteration count denoted by set to 70, the convergence behavior of the rigid transformation matrix remains unaffected. At this juncture, the angular precision error registers at approximately 0.013 radians. Conversely, when utilizing the rigid transformation relationships derived from the output of the SCA-IA algorithm as the foundational values for ICP matching, and with the same designated matching iteration count denoted by set to 150, the rigid transformation matrix successfully converges. Notably, the angular precision error at this convergence point approximates 0.075 radians.

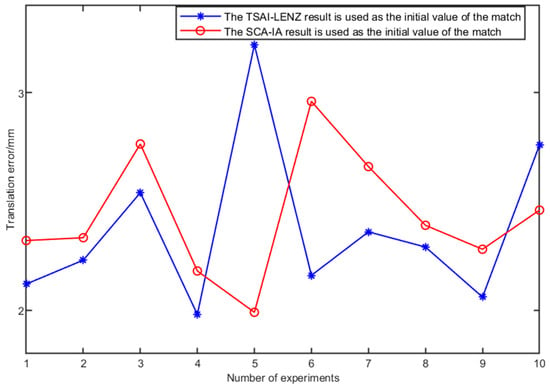

For the purpose of juxtaposing translational errors, a uniform set of ICP matching parameters, denoted by and , was adopted to facilitate a comprehensive analysis of translational discrepancies. A total of 10 calibration experiments were meticulously conducted. The comparative exhibition of experimental data is meticulously presented in Figure 8.

Figure 8.

The influence of different ICP matching initial values on translation accuracy.

Synthesizing the insights from Figure 7 and Figure 8. It becomes apparent that a higher precision in the initialization of ICP matching leads to swifter convergence. Additionally, it enhances the precision of rotational relationships within the resulting rigid transformations. Notably, Figure 8 reveals that the influence of ICP matching initialization on translational error is relatively modest. This observation emanates from ICP matching’s foundation on estimating point cloud centroids, wherein identical point cloud scenarios yield nearly indistinguishable centroid coordinates, thereby yielding minimal disparities in translational error. Incorporating the above-discussed observations leads to the conclusion. This conclusion states that elevated precision in ICP matching initialization enhances matching efficiency. Additionally, it improves the rotational precision of rigid transformation outputs. Consequently, when designating the matching error threshold as and setting the maximum iteration count to 100 iterations, an optimal configuration for rigid transformation relationships can be attained.

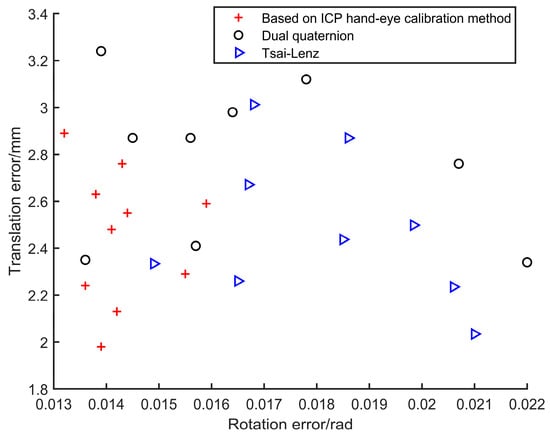

To further substantiate the efficacy of the ICP-based hand–eye calibration method, a comparative study was conducted through 10 experiments against the traditional dual-quaternion method and Tsai–Lenz method. As shown in Figure 9, it becomes evident that, in comparison to the dual-quaternion method and the Tsai–Lenz method, the ICP-based hand–eye calibration method exhibits superior rotational precision. In terms of translational precision, the ICP-based hand–eye calibration method shows comparable results to the Tsai–Lenz method and outperforms the dual-quaternion method.

Figure 9.

Comparison of precision between ICP hand–eye calibration method and other hand–eye calibration methods.

A total of ten comparative experiments were conducted, and the average of the calibration results from these ten trials was computed, as presented in Table 3.

Table 3.

Hand–eye calibration error.

From Table 3, it is evident that under the precondition of employing the Tsai–Lenz algorithm’s hand–eye calibration results as initial values for the ICP matching, the hand–eye calibration method based on ICP matching demonstrates superior rotational accuracy compared to the Tsai–Lenz algorithm. The rotational precision is enhanced by 13.62%, and the translational precision improves by 2.47%. In comparison to the dual quaternion method, the rotational accuracy witnesses a proportional enhancement of 5.03%, accompanied by a 13.89% increase in translational precision.

4. Conclusions

During the traditional hand–eye calibration involving the solution of the equation, there has always been precision loss due to decoupling during the linearization process and the accumulation of substantial nonlinear solution errors. Therefore, this paper introduces a hand–eye calibration method based on ICP point cloud matching to enhance calibration precision. Compared with the traditional Tsai–Lenz and dual quaternion methods, the effectiveness of the proposed method is verified by experiments and simulation experiments. For the issue of low initial precision in ICP point cloud matching, which leads to high computational complexity and substantial error in the matched rigid transformation relationship, this study sets the initial values from Tsai–Lenz as the initial values for ICP matching. Additionally, the matching error threshold in the matching parameters is set as , and the maximum iteration count is set as 70 to optimize both matching efficiency and accuracy. Hand–eye calibration experiments are conducted using a UR5e robotic arm. The results demonstrate that the precision of rotational components can be improved by 13.6%, and translational precision can be enhanced by 2.47% compared to the Tsai–Lenz algorithm. When compared to the dual quaternion method, the rotational precision enhancement is 5.03%, while translational precision improves by 13.89%. This method holds certain significance for enhancing operational efficiency in the context of apple-picking robots.

Author Contributions

Conceptualization, T.Y. and P.L.; methodology, T.Y.; software, T.Y.; validation, P.L., Y.L. and T.J.; formal analysis, T.Y.; investigation, H.Y.; resources, G.C.; data curation, T.Y.; writing—original draft preparation, T.Y.; writing—review and editing, P.L.; visualization, T.J.; supervision, Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (61903184); Natural Science Foundation of Jiangsu Province Youth Fund (BK20181017, BK2019K186); 2021 Provincial Key R&D Plan (Industry Foresight and Common Key Technologies) (BE2021016-5).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors gratefully acknowledge the editors and anonymous reviewers for their constructive comments on our manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Pi, J.; Liu, J.; Zhou, K.; Qian, M. An Octopus-Inspired Bionic Flexible Gripper for Apple Grasping. Agriculture 2021, 11, 1014. [Google Scholar] [CrossRef]

- Ravankar, A.; Ravankar, A.A.; Rawankar, A.; Hoshino, Y. Autonomous and Safe Navigation of Mobile Robots in Vineyard with Smooth Collision Avoidance. Agriculture 2021, 11, 954. [Google Scholar] [CrossRef]

- Fan, P.; Lang, G.; Guo, P.; Liu, Z.; Yang, F.; Yan, B.; Lei, X. Multi-Feature Patch-Based Segmentation Technique in the Gray-Centered RGB Color Space for Improved Apple Target Recognition. Agriculture 2021, 11, 273. [Google Scholar] [CrossRef]

- Li, Y.R.; Lian, W.Y.; Liu, S.H.; Huang, Z.H.; Chen, C.T. Application of hybrid visual servo control in agricultural harvesting. In Proceedings of the International Conference on System Science and Engineering, Taichung, Taiwan, 26–29 May 2022; pp. 84–89. [Google Scholar]

- Ning, T.; Wang, C.; Han, Y.; Jin, Y.; Gao, Y.; Liu, J.; Hu, C.; Zhou, Y.; Li, P. Deep Vision Servo Hand-Eye Coordination Planning Study for Sorting Robots. Symmetry 2022, 14, 152, Erratum in Symmetry 2023, 15, 82. [Google Scholar] [CrossRef]

- Li, G.; Zou, S.; Din, S.; Qi, B. Modified Hand–Eye Calibration Using Dual Quaternions. Appl. Sci. 2022, 12, 12480. [Google Scholar] [CrossRef]

- Li, J.; Liu, F.; Liu, S.; Wang, Z. Optical Remote Sensor Calibration Using Micromachined Multiplexing Optical Focal Planes. IEEE Sens. J. 2017, 17, 1663–1672. [Google Scholar]

- Zou, Y.; Chen, X. Hand–eye calibration of arc welding robot and laser vision sensor through semidefinite programming. Ind. Robot Int. J. 2018, 45, 597–610. [Google Scholar] [CrossRef]

- Grossmann, B.; Krüger, V. Continuous hand-eye calibration using 3D points. In Proceedings of the 2017 IEEE 15th International Conference on Industrial Informatics (INDIN), Emden, Germany, 24–26 July 2017; pp. 311–318. [Google Scholar]

- Xiao, W.; Huang, J.; Song, H. Robot-world and hand–eye calibration based on quaternion: A new method and an extension of classic methods, with their comparisons. Mech. Mach. Theory 2023, 179, 105127. [Google Scholar]

- Park, F.C.; Martin, B.J. Robot sensor calibration: Solving AX = XB on the Euclidean group. IEEE Trans. Rob. Autom. 2002, 10, 717–721. [Google Scholar] [CrossRef]

- Fu, J.; Ding, Y.; Huang, T.; Liu, H.; Liu, X. Hand-eye calibration method based on three-dimensional visual measurement in robotic high-precision machining. Int. J. Adv. Manuf. Technol. 2022, 119, 3845–3856. [Google Scholar] [CrossRef]

- Qiu, S.; Wang, M.; Kermani, M.R. A New Formulation for Hand–Eye Calibrations as Point-Set Matching. IEEE Trans. Instrum. Meas. 2020, 69, 6490–6498. [Google Scholar] [CrossRef]

- Xu, Y.; Rao, L.; Fan, G.; Chen, N. Improved Robust Hand-Eye Calibration Algorithm Based on Data Optimization and Filtering. In Proceedings of the 2020 International Conference on Virtual Reality and Visualization (ICVRV), Recife, Brazil, 13–14 November 2020; pp. 159–164. [Google Scholar]

- Evangelista, D.; Allegro, D.; Terreran, M.; Pretto, A.; Ghidoni, S. An Unified Iterative Hand-Eye Calibration Method for Eye-on-Base and Eye-in-Hand Setups. In Proceedings of the 2022 IEEE 27th International Conference on Emerging Technologies and Factory Automation (ETFA), Stuttgart, Germany, 6–9 September 2022; pp. 1–7. [Google Scholar]

- Tsai, R.Y.; Lenz, R.K. A new technique for fully autonomous and efficient 3D robotics hand/eye calibration. IEEE Trans. Robot. Autom. 1989, 5, 345–358. [Google Scholar] [CrossRef]

- Heikkila, J.; Silven, O. A four-step camera calibration procedure with implicit image correction. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Juan, PR, USA, 17–19 June 1997; pp. 1106–1112. [Google Scholar]

- Daniilidis, K. Hand-eye calibration using dual quaternions. Int. J. Robot. Res. 1999, 18, 286–298. [Google Scholar] [CrossRef]

- Qi, L. Standard Dual Quaternion Optimization and Its Applications in Hand-Eye Calibration and SLAM. Commun. Appl. Math. Comput. 2022. [Google Scholar] [CrossRef]

- Van Toan, N.; Khoi, P.B. A svd-least-square algorithm for manipulator kinematic calibration based on the product of exponentials formula. J. Mech. Sci. Technol. 2018, 32, 5401–5409. [Google Scholar] [CrossRef]

- Xu, A.; Rao, L.; Fan, G.; Chen, N. Fast and High Accuracy 3D Point Cloud Registration for Automatic Reconstruction From Laser Scanning Data. IEEE Access 2023, 11, 42497–42509. [Google Scholar] [CrossRef]

- Fei, B.; Yang, W.; Chen, W.M.; Li, Z.; Li, Y.; Ma, T.; Hu, X.; Ma, L. Comprehensive Review of Deep Learning-Based 3D Point Cloud Completion Processing and Analysis. IEEE Trans. Intell. Transp. Syst. 2022, 23, 22862–22883. [Google Scholar] [CrossRef]

- Mingjun, W.; Fang, Y.; Le, L.; Chaojun, H. Local neighborhood feature point extraction and matching for point cloud alignment. Infrared Laser Eng. 2022, 51, 20210342. [Google Scholar]

- Besl, P.J.; McKay, N.D. A method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256. [Google Scholar] [CrossRef]

- Guo, C.; Liu, F.; Wang, W. Research on Improved ICP Geomagnetic Matching Simulation Algorithm. In Proceedings of the 2022 International Conference on Computers, Information Processing and Advanced Education (CIPAE), Ottawa, ON, Canada, 26–28 August 2022; pp. 443–445. [Google Scholar]

- Nakajima, S. Matching of Laser Range Sensor Data and 3D Surface Scanner Data Robust to Abnormal Values Using Evolutionary ICP Algorithm and Gaussian Function. In Proceedings of the 2013 Ninth International Conference on Intelligent Information Hiding and Multimedia Signal Processing, Beijing, China, 16–18 October 2013; pp. 250–254. [Google Scholar]

- Wang, X.; Li, Y.; Peng, Y.; Ying, S. A Coarse-to-Fine Generalized-ICP Algorithm with Trimmed Strategy. IEEE Access 2020, 8, 40692–40703. [Google Scholar] [CrossRef]

- Kuçak, R.A.; Erol, S.; Erol, B. An Experimental Study of a New Keypoint Matching Algorithm for Automatic Point Cloud Registration. ISPRS Int. J. Geo.-Inf. 2021, 10, 204. [Google Scholar] [CrossRef]

- Zhao, F.Q.; Zhou, M.Q.; Geng, G.H. A Method for Scale-Varying Rigid Body Fragmentation Matching. J. Beijing Inst. Technol. 2019, 39, 95–100. [Google Scholar]

- Zhang, Y.D.; Yuan, B.; Li, X. Research on Three-dimensional Map Building of Indoor Environment based on Improved ICP Algorithm. J. Cent. China Norm. Univ. Nat. Sci. Ed. 2017, 51, 264–272. [Google Scholar]

- Zhang, Z. Flexible camera calibration by viewing a plane from unknown orientations. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; pp. 666–673. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).