Abstract

Middle-censoring is a general censoring mechanism in which exact lifetimes are observed for only a portion of the units; for the others, only the random interval within which the failure occurs is known. In this study, we focus on statistical inference for middle-censored data with competing risks. The latent failure times are assumed to be independent and to follow Burr-XII distributions with distinct parameters. To begin with, we derive the maximum likelihood estimators for the unknown parameters and prove their existence and uniqueness. Additionally, asymptotic confidence intervals are constructed using the observed Fisher information matrix. Furthermore, Bayesian estimates under the squared error loss function and the corresponding highest posterior density intervals are obtained through the Gibbs sampling method. A simulation study is carried out to assess the performance of all proposed estimators. Lastly, an analysis of a real dataset is provided to demonstrate the inferential procedures developed.

Keywords:

middle-censoring; Burr-XII distribution; competing risks; Bayes estimation; Gibbs sampling

MSC:

62N01; 62F15

1. Introduction

1.1. Middle-Censored Data and Competing Risks

Censoring occurs frequently in life test studies and cannot be completely avoided because of various practical constraints. Middle-censoring, a general censoring mechanism, was proposed by Jammalamadaka and Mangalam [1]. In middle-censoring, the precise failure time of the tested component cannot be detected when it occurs within a random interval. That is, the exact lifetimes of some units are observable, while for others we only know the intervals.

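Suppose that there are n identical units in a life test. The lifetimes of these units are denoted by $T_1, \dots, T_n$, and the random censoring interval corresponding to the i-th unit is $(L_i, R_i)$. If $T_i \in (L_i, R_i)$, the actual lifetime of the i-th unit cannot be observed and only the interval is recorded, in which case $\delta_i = 0$. Otherwise, $\delta_i = 1$ denotes that the exact lifetime of the i-th unit is observed directly. After reordering so that the $n_1$ complete observations come first, the data can be described as:

$$\{(T_1, 1), \dots, (T_{n_1}, 1), ((L_{n_1+1}, R_{n_1+1}), 0), \dots, ((L_n, R_n), 0)\}.$$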

Jammalamadaka and Mangalam [1] not only put forward the middle-censoring scheme, but also developed an algorithm for obtaining the self-consistent estimator for the middle-censored data. The maximum likelihood estimator (MLE) in a non-parametric setting was also obtained. In addition, Jammalamadaka and Iyer [2] developed an approximation to the distribution function of the middle-censored data. Then, they also suggested a straightforward alternative estimator for the lifetime distribution function, analyzed its performance under middle-censored data, and established its consistency and weak convergence.

Moreover, several scholars have delved into middle-censored data within the parametric situation. Iyer et al. [3] considered a middle-censoring scheme in the case where the lifetime of the tested unit follows the exponential distribution. The maximum likelihood estimator was derived and its consistency and asymptotic properties were rigorously established. Additionally, the Bayes estimator was derived under a Gamma prior distribution. Davarzani and Parsia [4] studied a discrete set-up with middle-censored data, where the survival time, the left endpoint of the censoring interval, and the length of the interval are all variables modeled by geometric distributions. They derived the MLE of the unknown parameter using the EM algorithm. Bayesian estimator and the corresponding credible intervals were also obtained. Jammalamadaka and Bapat [5] developed the estimation under the middle-censoring paradigm in a multinomial distribution with k distinct possible outcomes. Abuzaid et al. [6] concentrated on the Weibull and exponential distributions to explore the robustness of their parameter estimates and discovered that estimations for middle-censoring exhibited greater robustness when contrasted with those for right censoring.

In practical applications, failure causes are typically pre-existing risk factors that may interact competitively. For example, in engineering reliability testing, environmental stressors such as temperature and humidity often compete to induce component failure. Statistical literature refers to this competitive failure mechanism as ‘competing risks’, emphasizing how multiple antecedent factors compete to be the first to exceed critical thresholds and cause system breakdown.

To consider the different reasons for failure, many studies combine competing risks with middle-censoring. Based on middle-censored data with two independent competing risks, Wang [7] explored various estimations of the parameter for exponential distribution. Ahmadi et al. [8] considered different estimations under middle-censored independent competing risks data with exponential distribution. Both the fix-point method and the EM algorithm were used to calculate the MLE. In addition, the Bayesian estimate under the Gamma prior was derived using both the Lindley’s approximation method and Gibbs sampling. The reconstruction of the censoring data was also explored. Wang et al. [9] investigated the dependent competing risks model based on middle-censored data by making use of the Marshall–Olkin bivariate Weibull distribution. They derived MLEs, midpoint approximation estimates, and asymptotic confidence intervals (ACIs) for the unknown parameters. They also considered the Bayesian estimates under the Gamma–Dirichlet prior by the acceptance–rejection sampling method. Davarzani et al. [10] analyzed a dependent middle-censoring model with the Marshall–Olkin bivariate exponential distribution.

In addition, other risk models have been explored. Sankaran and Prasad [11] proposed a proportional hazards regression framework to analyze middle-censored lifetime data with Weibull distribution. Rehman and Chandra [12] studied the cumulative incidence function by modeling the cause-specific hazard as a Weibull distribution for middle-censored survival data. Sankaran and Prasad [13] investigated an Exponentiated–Exponential regression model for middle-censored survival time analysis.

1.2. Burr-XII Distribution

The Burr-XII distribution is a flexible and versatile distribution that is useful for modeling a wide range of data types. Its parameters allow it to exhibit different kurtosis and tail properties and thus adapt to different shapes, and its hazard rate can be non-monotone. In addition, both the cumulative distribution function and the reliability function of the Burr-XII distribution have closed-form expressions, which simplifies computing percentiles and the likelihood function based on censored data and makes the distribution a valuable tool in statistical modeling and analysis [14].

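A random variable X is said to follow the Burr-XII distribution if its probability density function (PDF) and cumulative distribution function (CDF) are given by the following expressions, respectively:

$$f(x; a, b) = a b x^{b-1} (1 + x^{b})^{-(a+1)}, \quad x > 0,$$

$$F(x; a, b) = 1 - (1 + x^{b})^{-a}, \quad x > 0,$$

where $a > 0$ and $b > 0$ are the two shape parameters of the Burr-XII distribution.

In addition, the reliability and hazard rate functions take the form:

$$S(t) = (1 + t^{b})^{-a}, \qquad h(t) = \frac{a b t^{b-1}}{1 + t^{b}}, \quad t > 0.$$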

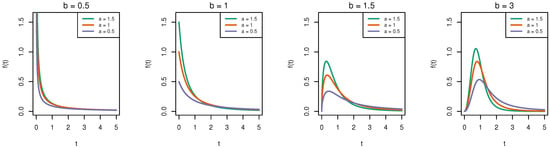

In the density plots in Figure 1, it can be observed that when $b \le 1$ the density curve is strictly decreasing, whereas when $b > 1$ the density curve is unimodal, and in that case a larger a corresponds to a higher peak.

Figure 1.

PDF plots of Burr-XII distribution under various parameter configurations.

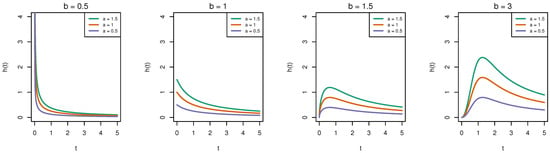

In addition, Figure 2 shows the hazard rate function plots. When $b \le 1$, the hazard rate function decreases as t increases, whereas when $b > 1$ the plot has a single peak. The peak is higher when the value of parameter a is larger.

Figure 2.

Hazard rate function plots of Burr-XII distribution under various parameter configurations.
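Because the density, distribution, reliability, and hazard functions of this subsection all have closed forms, they can be evaluated in a few lines. The following Python sketch assumes the standard two-parameter Burr-XII form with first parameter a and second parameter b; the function names are illustrative and are not taken from the paper.

```python
import math


def burr_pdf(x, a, b):
    """Density a*b*x^(b-1)*(1+x^b)^-(a+1) for x > 0."""
    return a * b * x ** (b - 1) * (1 + x ** b) ** (-(a + 1))


def burr_cdf(x, a, b):
    """Distribution function 1 - (1+x^b)^-a."""
    return 1 - (1 + x ** b) ** (-a)


def burr_sf(x, a, b):
    """Reliability (survival) function (1+x^b)^-a."""
    return (1 + x ** b) ** (-a)


def burr_hazard(x, a, b):
    """Hazard rate, i.e. density divided by reliability."""
    return a * b * x ** (b - 1) / (1 + x ** b)


def burr_quantile(p, a, b):
    """Inverse CDF for 0 <= p < 1, usable for percentiles or sampling."""
    return ((1 - p) ** (-1 / a) - 1) ** (1 / b)
```

Evaluating `burr_pdf` on a grid for a value of b below and above 1 reproduces the decreasing and unimodal shapes described for Figure 1, and `burr_hazard` does the same for Figure 2.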

Recently, owing to its versatility in applied contexts like reliability analysis, quality assurance, and quantum systems, the Burr-XII distribution has attracted significant interest from several scholars.

Many studies have examined the Burr-XII distribution under various life tests and censoring schemes. Soliman [15] derived both the MLE and Bayesian estimation approaches for the Burr-XII distribution parameters under progressive Type-II censoring. Yan et al. [16] studied the MLE and Bayes estimation within an improved adaptive Type-II progressive censoring framework under the Burr-XII distribution. Du and Gui [17] investigated the Burr-XII distribution in competing risks modeling under adaptive Type-II progressive censoring. Bayesian point estimates under various loss functions and the corresponding highest posterior density credible intervals were obtained through MCMC simulations.

Although a large body of statistical inference exists for the Burr-XII distribution under censored data, it mainly focuses on Type-II censoring or progressive censoring schemes, and studies on the Burr-XII distribution under middle-censoring are currently limited. Abuzaid [18] analyzed middle-censored data from the Burr-XII distribution: MLEs of the two parameters were obtained, and Bayesian inference under a Gamma prior was carried out using Lindley’s approximation technique. However, that study did not consider the causes of failure. Building on it, in this paper we introduce independent competing risks to study middle-censored data under the Burr-XII distribution.

To our knowledge, no existing studies have comprehensively investigated the parameter-estimation challenges for Burr-XII distributions considering both middle-censored data and competing risks models. In this paper, we combine the competing risks with middle-censored data to address the parameter-estimation problems for the Burr-XII distribution. Compared to [17], we introduce a new censoring scheme and consider different numbers of failure causes. Different from [18], we introduce a competing risks model to the analysis framework.

In this paper, we use the perspective of latent failure times for a competing risk model suggested by Cox [19]. An analysis of the independent competing risks model is conducted within a middle-censoring scenario. It is assumed that each latent failure time variable follows the Burr-XII distribution. In Section 2, we make some reasonable assumptions for our model. In Section 3, we prove that the MLEs exist uniquely, implementing both optimization techniques and the EM algorithm to obtain them. In addition, we derive ACIs in accordance with the properties of MLEs. In Section 4, we obtain the Bayesian estimators under exponential and Gamma prior distributions using Gibbs sampling, and HPD credible intervals are also constructed. Section 5 presents the simulation results to validate estimator performance and applies the model to a practical dataset to demonstrate the application value of our model.

2. Model Assumption and Notation

Assume that n homogeneous units are placed on a lifetime test. For each unit, there are s failure causes. We suppose that these s latent failure times are mutually independent and follow Burr-XII distributions.

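Thus, define $T_i$ as the exact lifetime of the i-th tested unit, and

$$T_i = \min\{X_{i1}, X_{i2}, \dots, X_{is}\},$$

where $X_{ij}$ represents the random variable corresponding to the j-th latent failure cause. Moreover, $X_{i1}, \dots, X_{is}$ are independent of each other. We assume that the $X_{ij}$ follow Burr-XII distributions with the same second parameter ($b$) and different first parameters ($a_j$). The PDF and CDF of $X_{ij}$ are presented as follows, respectively:

$$f_j(x) = a_j b x^{b-1} (1 + x^{b})^{-(a_j+1)}, \quad x > 0,$$

and

$$F_j(x) = 1 - (1 + x^{b})^{-a_j}, \quad x > 0,$$

where $a_j$ and b are unknown positive parameters to be estimated, $j = 1, \dots, s$.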

As exhibited in Figure 1, the parameter b affects the overall shape of the density curve. For different failure causes of the same product, it is reasonable to assume that the distributions share the same parameter b. The assumption that the potential failure distributions share a common parameter while differing in the other has been widely used in previous studies on competing risks data, such as [20,21].

Additionally, we use to denote the failure cause. if the i-th unit has failed as a result of the j-th failure cause. We suppose that are independent and cannot be observed directly, while and can be observed if the data are not censored. Therefore, taking into account the competing risks data under a middle-censoring framework, we consider a corresponding random censoring interval for the i-th tested unit, and the interval is independent of its actual lifetime . That is,

We suppose and are also independent and exponentially distributed with known parameters.

Through the independence of , the joint PDF and CDF of lifetime with its corresponding cause are concluded as follows:

where and .

Through integration, we derive:

To simplify the notation, we will replace and with and , respectively, in the following text.
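For reference, the closed forms implied by the independence assumption can be sketched as follows. The notation used here ($a_j$ for the first parameter of the j-th cause, $a_* = \sum_{j=1}^{s} a_j$, and $(l, r)$ for a realized censoring interval) is assumed for illustration and may differ from the symbols used in the numbered equations.

```latex
\begin{aligned}
f_{T,C}(t, j) &= f_j(t) \prod_{k \neq j} \bigl[1 - F_k(t)\bigr]
  = a_j\, b\, t^{b-1} (1 + t^{b})^{-(a_* + 1)}, \\
F_{T,C}(t, j) &= \int_0^{t} f_{T,C}(u, j)\, du
  = \frac{a_j}{a_*} \Bigl[1 - (1 + t^{b})^{-a_*}\Bigr], \\
P(l < T < r) &= (1 + l^{b})^{-a_*} - (1 + r^{b})^{-a_*}.
\end{aligned}
```

Under this model the overall lifetime is again Burr-XII distributed with first parameter $a_*$, and the cause is independent of the failure time with probabilities $a_j / a_*$.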

3. Frequentist Estimation

This section begins by deriving the likelihood function and establishing the existence and uniqueness of MLEs of the model parameters. Both optimization-based methods and the EM algorithm are then employed to compute these estimates. ACIs are constructed using the Fisher information matrix.

3.1. Maximum Likelihood Estimators

For simplicity, following the approach in [8], the data are reordered so that the first $n_1$ data items are not censored and the subsequent $n_2$ data items are middle-censored, with $n_1 + n_2 = n$. Thus, our data can be written as:

Let represent the observed sample data in the form mentioned above. Based on the sample data, the likelihood function can be derived through (7) and (8) as follows:

where the symbol ∝ denotes ‘proportional to’,

and , which equals the number of the items that have failed attributed to the j-th latent risk. For simplicity, we use to replace .

Through (9), the log-likelihood function takes the form:

To solve for the MLEs, we set the first-order partial derivatives of function with respect to all unknown parameters and b to 0, respectively. Consequently, the corresponding likelihood equations are given by:

where

and

For , we determine, respectively, the MLEs of and as and , and the MLE of b is expressed as . Based on (11), we can derive that . Thus,

Before obtaining the solution through (11)–(13), the uniqueness and existence of solutions to the likelihood equations require verification.

Theorem 1.

For competing risks and middle-censored data following the Burr-XII distribution, when parameter b is known, the MLEs of the parameters $a_1, \dots, a_s$ always exist and are unique.

Proof.

Through (11)–(13), the likelihood equations are equivalent to the following:

where and . Therefore,

where

When b is a known constant, we just obtain the solution of to obtain . It is obvious that , and that

so is monotonically decreasing with respect to , and takes both positive and negative values, which indicates the existence and uniqueness of the zero point . Therefore, through (13), we can conclude that when b is fixed, for parameters , their MLEs always exist and are unique and finite. □

Theorem 2.

For competing risks and middle-censored data following the Burr-XII distribution, in the case that and b are all unknown, when there exists i such that , the MLEs and all exist and are unique.

Proof.

According to (9), the joint likelihood function is expressed as:

in accordance with the Lagrange mean-value theorem, there is a satisfying .

So we just need to prove that when the data are uncensored, the MLEs of the parameters $a_j$ and b exist and are unique.

Through (24), and for , for convenience, we substitute with , then we can obtain that

Then, is the solution of the equation given as follows:

We can derive the derivative of as follows:

and it is obvious that

where and .

For each , if , and if ,, so when there exists an i such that . Moreover, it is simple to derive that . Thus, . Subsequently, we are able to prove that the function is strictly monotonically decreasing on the interval . Moreover, as b varies from 0 to , the sign of the function shifts from positive to negative. Therefore, the solution of exists and is unique, which indicates that is unique, and through (17), the uniqueness of can be proved. Consequently, the presence and uniqueness of the MLEs of unknown parameters are demonstrated. □

Remark 1.

When using our model to deal with data, to ensure the existence, we just need to appropriately transform the units of physical quantities in the sample data to guarantee that there exists .

Since an explicit solution of the likelihood equations is difficult to obtain, we use iterative algorithms to calculate the MLEs of the unknown parameters. In this paper, we maximize the log-likelihood function using the optim function in R (version 4.3.2).
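The numerical maximization can be illustrated with a small, dependency-free Python sketch. It is not the authors' R code: it assumes that causes are recorded for the exact observations only, writes $a_*$ for the sum of the first parameters, uses the fact that the likelihood equations then give $a_j = a_* m_j / n_1$ (with $m_j$ the number of exact failures from cause j), and replaces optim with a nested golden-section search over $(a_*, b)$. The search brackets and all function names are hypothetical choices.

```python
import math


def profile_loglik(a_star, b, exact_times, intervals):
    """Log-likelihood in (a_star, b), up to a constant, after profiling the a_j split."""
    n1 = len(exact_times)
    ll = n1 * (math.log(a_star) + math.log(b))
    for t in exact_times:
        ll += (b - 1) * math.log(t) - (a_star + 1) * math.log1p(t ** b)
    for left, right in intervals:
        # log[(1 + left^b)^-a_star - (1 + right^b)^-a_star], computed stably
        u_left = math.log1p(left ** b)
        width = math.log1p(right ** b) - u_left
        ll += -a_star * u_left + math.log(-math.expm1(-a_star * width))
    return ll


def golden_max(f, lo, hi, tol=1e-6):
    """Golden-section search for the maximizer of a unimodal f on [lo, hi]."""
    ratio = (math.sqrt(5.0) - 1.0) / 2.0
    c = hi - ratio * (hi - lo)
    d = lo + ratio * (hi - lo)
    fc, fd = f(c), f(d)
    while hi - lo > tol:
        if fc < fd:
            lo, c, fc = c, d, fd
            d = lo + ratio * (hi - lo)
            fd = f(d)
        else:
            hi, d, fd = d, c, fc
            c = hi - ratio * (hi - lo)
            fc = f(c)
    return 0.5 * (lo + hi)


def fit_mle(exact, intervals, n_causes, a_bounds=(1e-3, 50.0), b_bounds=(1e-2, 20.0)):
    """exact: [(time, cause)] with causes 1..n_causes; intervals: [(left, right)]."""
    times = [t for t, _ in exact]
    counts = [sum(1 for _, c in exact if c == j) for j in range(1, n_causes + 1)]

    def best_a(b):
        # For fixed b the objective is concave in a_star, so a 1-D search suffices.
        return golden_max(lambda a: profile_loglik(a, b, times, intervals), *a_bounds)

    b_hat = golden_max(lambda b: profile_loglik(best_a(b), b, times, intervals), *b_bounds)
    a_star = best_a(b_hat)
    a_hat = [a_star * m / len(times) for m in counts]
    return a_hat, b_hat
```

The outer search over b assumes that the profile is unimodal on the chosen bracket, which should be checked for the data at hand; a general-purpose optimizer such as optim avoids that assumption.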

3.2. EM Algorithm

Given that explicit expressions for the MLEs are unavailable, the EM algorithm is also employed to find them. The EM algorithm, which was first formulated by Dempster et al. [22], is typically used to address incomplete data scenarios.

Define as the exact survival time of the unit that falls within the interval [,], ,...,.

Based on the complete data (), we can derive the log-likelihood function, which is expressed as:

then by setting the derivatives of with respect to and b to 0, we can obtain:

For conciseness, we define the conditional expectations as follows:

where the expressions of and can be seen in (7) and (8).

In each iteration, to perform the E-step, we need to calculate the expectations using the parameter values obtained from the previous iteration by numerical integration methods. For notational convenience, we introduce the symbols as follows:

- ,

- ,

- ,

where . And for those uncensored data, we define:

where .
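The E-step expectations above are conditional expectations of functions of a lifetime that is only known to lie in its censoring interval. A minimal Python sketch of such a computation is given below, using composite Simpson's rule as the numerical integration method. It assumes that the overall lifetime has reliability function $(1 + t^{b})^{-a_*}$ with $a_*$ the sum of the first parameters; the names `conditional_expectation`, `a_star`, and `panels` are illustrative.

```python
import math


def lifetime_pdf(x, a_star, b):
    """Density of the overall lifetime: Burr-XII with parameters (a_star, b)."""
    return a_star * b * x ** (b - 1) * (1 + x ** b) ** (-(a_star + 1))


def conditional_expectation(g, left, right, a_star, b, panels=200):
    """Approximate E[g(T) | left < T < right]; requires left > 0 and even `panels`."""
    h = (right - left) / panels

    def simpson(f):
        total = f(left) + f(right)
        for k in range(1, panels):
            total += (4 if k % 2 else 2) * f(left + k * h)
        return total * h / 3

    numerator = simpson(lambda x: g(x) * lifetime_pdf(x, a_star, b))
    # The normalizing constant P(left < T < right) is available in closed form.
    denominator = (1 + left ** b) ** (-a_star) - (1 + right ** b) ** (-a_star)
    return numerator / denominator
```

For example, `conditional_expectation(math.log, l, r, a_star, b)` approximates the conditional mean of the log-lifetime for a unit censored in `(l, r)` under the current parameter values.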

When performing the M-step during the iteration process, for the purpose of maximizing the pseudo log-likelihood function based on the complete data, through (24), we can derive that

and

In addition, we say the process converges when

and

where represents the tolerance threshold fixed in advance, and proper values of need to be obtained by numerical tests. Based on the above, the sequential steps of the EM algorithm are expressed in Algorithm 1 systematically.

| Algorithm 1 The EM algorithm for obtaining MLEs under middle-censored data with competing risks |
| --- |
| 1: Input: the observed data, the initial parameter values, and the tolerance threshold |
| 2: Initialization: set the iteration counter to zero |
| 3: while not converged do |
| 4: E-step (Expectation step): |
| 5: Under the current parameter estimates, calculate the conditional expectations |
| 6: M-step (Maximization step): |
| 7: Update the parameter estimates by using Equations (25) and (26) |
| 8: Increase the iteration counter by one |
| 9: end while |
| 10: Output: the estimated parameters |

3.3. Asymptotic Confidence Intervals

To obtain the ACIs of the unknown parameters and b, we use the Fisher information matrix introduced in [23], and the matrix is derived by calculating the corresponding second-order partial derivatives. Then, we present the Fisher information matrix as:

where

, and . In addition,

where

For the second-order derivative of b,

where

For simplicity, the Fisher matrix is given for , and the form for other values of s can be obtained similarly. In addition, we denote by in the following matrix. The Fisher information matrix is given by:

Replace the unknown parameters by their MLEs, then the observed Fisher matrix can be given as:

We define as the inverse matrix of , which is expressed as:

Let V denote the matrix with the same form as and denote the j-th diagonal element of the covariance matrix V, . According to the asymptotic properties of MLEs, we subsequently infer that the distributions of for and can be approximately modeled by the standard normal distribution.

For , a specified confidence level parameter, the two-sided ACIs for are formulated as . Similarly, the ACI for b can be constructed as . Here, refers to the upper percentile point of the standard normal distribution.
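The interval construction can be sketched in Python as follows. The sketch assumes that the observed Fisher information matrix (the negative Hessian of the log-likelihood at the MLEs) has already been computed and is passed in as a list of rows; the function names and the `level` argument are illustrative.

```python
import math
from statistics import NormalDist


def invert(matrix):
    """Gauss-Jordan inverse with partial pivoting, for small dense matrices."""
    n = len(matrix)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("information matrix is numerically singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def asymptotic_cis(estimates, observed_info, level=0.95):
    """Two-sided normal-approximation intervals: estimate +/- z * sqrt(variance)."""
    cov = invert(observed_info)
    z = NormalDist().inv_cdf(1 - (1 - level) / 2)
    return [(est - z * math.sqrt(cov[i][i]), est + z * math.sqrt(cov[i][i]))
            for i, est in enumerate(estimates)]
```

Because the parameters are positive, a lower limit produced this way can fall below zero in small samples; how such cases are handled is a design choice that this sketch leaves open.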

4. Bayesian Estimation

Different from frequentist methods, the advantage of Bayesian estimation resides in its capacity to integrate prior knowledge and sample data, enabling more stable parameter inference in scenarios with small samples. In this section, we derive the Bayesian estimates and the corresponding interval estimates in the case where the prior distribution of is related to b.

We suppose that the parameter b is assigned an exponential prior distribution with the mean equal to , while each follows a Gamma prior distribution with the shape parameters and the rate parameter b. Abuzaid [18] also employed a similar assumption. Moreover, are independent of each other. The PDFs of the prior distributions of and b are presented as:

where and are known.

So the joint prior density can be given by:

where .

The squared error loss function, $L(\theta, \hat{\theta}) = (\hat{\theta} - \theta)^2$, is widely used in statistics, where $\theta$ represents the true parameter value and $\hat{\theta}$ is the corresponding estimate. Under squared error loss, the Bayes estimator is the posterior mean, which combines prior knowledge with the observed data, while the posterior distribution supplies the accompanying uncertainty quantification. Accordingly, the Bayesian estimators for $a_j$ and b are their posterior expectations, calculated based on (31).

4.1. Gibbs Sampling

Deriving expectations directly through integration from the PDF of joint posterior distribution is often analytically intractable. Hence, Gibbs sampling is employed to draw samples from the posterior distribution. Before using the Gibbs sampling, we need to confirm some theorems.

Theorem 3.

If are observed (i.e., the data are complete) and is given, the conditional posterior density of b is log-concave.

Proof.

When the data are complete, the joint posterior density function is expressed as:

where refers to the complete data.

Therefore, the conditional posterior density of b is given by:

After some algebra, the second-order derivative of the logarithm of this conditional density with respect to b is:

thus, we can see that the conditional posterior density function of b is log-concave, which indicates that we can employ the adaptive rejection sampling [24] to draw samples from the posterior conditional distribution of b. □
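The log-concavity argument can be retraced as follows. The symbols are assumptions made for this sketch: $\lambda$ is the rate of the exponential prior on b, $\alpha_j$ is the shape parameter of the Gamma prior on $a_j$ (whose rate is b), $a_* = \sum_j a_j$, and $t_1, \dots, t_n$ are the completed lifetimes.

```latex
\begin{aligned}
\log \pi(b \mid a_1, \dots, a_s, \text{data})
  &= \text{const} + \Bigl(n + \sum_{j=1}^{s} \alpha_j\Bigr) \log b
     - b\,(\lambda + a_*) \\
  &\quad + (b - 1) \sum_{i=1}^{n} \log t_i
     - (a_* + 1) \sum_{i=1}^{n} \log\bigl(1 + t_i^{\,b}\bigr), \\
\frac{\partial^2}{\partial b^2} \log \pi(b \mid a_1, \dots, a_s, \text{data})
  &= -\frac{n + \sum_{j=1}^{s} \alpha_j}{b^{2}}
     - (a_* + 1) \sum_{i=1}^{n}
       \frac{t_i^{\,b} (\log t_i)^{2}}{\bigl(1 + t_i^{\,b}\bigr)^{2}} \;<\; 0 .
\end{aligned}
```

Both terms of the second derivative are negative for every $b > 0$, which is the property that adaptive rejection sampling requires.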

Theorem 4.

When the data are complete and b is given, the conditional posterior of follows a Gamma distribution.

Proof.

Through (32), we know that the conditional posterior density functions of are as follows:

where represents vector . Thus, follows the Gamma conditional posterior distribution with the shape parameter and the rate parameter . □

For those censored data, if we have the estimated values of and b, we can use to substitute , where can be sampled from the conditional distribution of . The conditional density function of is given by:

According to the two theorems above, in Algorithm 2, the Gibbs sampling procedure is shown.

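| Algorithm 2 Gibbs sampling method for Bayesian estimation |
| --- |
| 1: Initialize the parameter values: generate the initial value of b from its exponential prior, and generate the initial value of each first parameter from its Gamma prior |
| 2: for each iteration up to N do |
| 3: Sample the censored lifetimes from their conditional distribution given the censoring intervals, then obtain the completed data |
| 4: Sample b from its conditional posterior using the adaptive rejection sampling method proposed in [24] |
| 5: Sample each first parameter from its Gamma conditional posterior |
| 6: end for |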
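The sampling scheme of Algorithm 2 can be sketched in Python under several loudly stated assumptions. First, the adaptive rejection sampling step for b is replaced by a random-walk Metropolis step on log b, which targets the same conditional posterior but is not the method of [24]. Second, causes are assumed to be recorded for exact observations only, so a cause is imputed for each censored unit in every sweep to keep the Gamma conditional. Third, the chain starts at the prior means rather than at prior draws. The names `alpha` (Gamma shape parameters), `lam` (rate of the exponential prior on b), and `step` are hypothetical.

```python
import math
import random


def sample_truncated_burr(left, right, a_star, b, rng):
    """Draw T given left < T < right when P(T > t) = (1 + t**b) ** (-a_star)."""
    # U = log(1 + T**b) is exponential with rate a_star; invert its truncated CDF.
    u_left = math.log1p(left ** b)
    width = math.log1p(right ** b) - u_left
    mass = -math.expm1(-a_star * width)
    u = u_left - math.log1p(-rng.random() * mass) / a_star
    return math.expm1(u) ** (1 / b)


def log_cond_b(b, times, a, alpha, lam):
    """Log conditional posterior of b for completed data, up to a constant."""
    a_star = sum(a)
    return ((len(times) + sum(alpha)) * math.log(b) - b * (lam + a_star)
            + (b - 1) * sum(math.log(t) for t in times)
            - (a_star + 1) * sum(math.log1p(t ** b) for t in times))


def gibbs(exact, censored, alpha, lam, n_iter, burn_in, step=0.3, seed=1):
    """exact: [(time, cause)] with causes 1..s; censored: [(left, right)]."""
    rng = random.Random(seed)
    s = len(alpha)
    b = 1.0 / lam                     # prior mean of b (assumed starting point)
    a = [al / b for al in alpha]      # prior means of the a_j given b
    exact_counts = [sum(1 for _, c in exact if c == j + 1) for j in range(s)]
    draws = []
    for it in range(n_iter):
        a_star = sum(a)
        # Data augmentation: impute censored lifetimes and their causes.
        times = [t for t, _ in exact]
        counts = list(exact_counts)
        for left, right in censored:
            times.append(sample_truncated_burr(left, right, a_star, b, rng))
            counts[rng.choices(range(s), weights=a)[0]] += 1
        # Metropolis step on log(b); the log-scale proposal adds a Jacobian term.
        prop = b * math.exp(step * rng.gauss(0.0, 1.0))
        log_ratio = (log_cond_b(prop, times, a, alpha, lam) + math.log(prop)
                     - log_cond_b(b, times, a, alpha, lam) - math.log(b))
        if math.log(1.0 - rng.random()) < log_ratio:
            b = prop
        # Gamma conditional for each a_j; gammavariate takes (shape, scale).
        rate = b + sum(math.log1p(t ** b) for t in times)
        a = [rng.gammavariate(alpha[j] + counts[j], 1.0 / rate) for j in range(s)]
        if it >= burn_in:
            draws.append((list(a), b))
    return draws


def posterior_means(draws):
    """Bayes estimates under squared error loss: averages of the retained draws."""
    s = len(draws[0][0])
    a_mean = [sum(d[0][j] for d in draws) / len(draws) for j in range(s)]
    b_mean = sum(d[1] for d in draws) / len(draws)
    return a_mean, b_mean
```

If the cause of a censored unit is in fact recorded, the imputation line can be replaced by a direct count update, and the rest of the sweep is unchanged.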

Remark 2.

The notation refers to the exponential distribution with the expected value , and refers to the Gamma distribution with the shape parameter u and the scale parameter .

Subsequently, based on the N samples generated from the posterior distribution, the Bayesian estimates of and b under the squared error loss function could be obtained as follows:

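- $\hat{a}_j^{B} = \frac{1}{N-d} \sum_{k=d+1}^{N} a_j^{(k)}$, $j = 1, \dots, s$,

- $\hat{b}^{B} = \frac{1}{N-d} \sum_{k=d+1}^{N} b^{(k)}$,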

where d is the number of the burn-in sample fixed in advance.

4.2. HPD Credible Intervals

In this subsection, we employ the approach proposed by [25] to obtain the HPD credible intervals. An HPD credible interval contains a specified percentage of the posterior probability mass, and parameter values inside the interval have higher posterior density than those outside it. Based on the Gibbs sampling in Section 4.1, we perform the following steps to obtain HPD credible intervals.

At the start, rearrange the total drawn samples to obtain the order statistics, and they can be written as and , where . For each parameter,

where refers to the floor function and .

Then, traverse all intervals and find the interval with the shortest length:

Therefore, the HPD credible intervals for each unknown parameter can be expressed as follows:

and
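The shortest-interval search described in this subsection can be sketched in Python as follows. The sketch assumes that `samples` holds the retained posterior draws of one parameter and that each candidate interval spans the floor of `level` times the number of draws between its order statistics; the exact index convention of the displayed formulas may differ.

```python
import math


def hpd_interval(samples, level=0.95):
    """Shortest interval between order statistics covering about `level` of the draws."""
    ordered = sorted(samples)
    m = len(ordered)
    span = math.floor(level * m)
    if span < 1 or span >= m:
        raise ValueError("need more samples for the requested level")
    # Slide a window of fixed index width over the sorted draws and keep the shortest.
    start = min(range(m - span), key=lambda j: ordered[j + span] - ordered[j])
    return ordered[start], ordered[start + span]
```

For a skewed posterior the resulting interval is shifted toward the mode and is shorter than the equal-tailed interval with the same nominal level.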

5. Simulation and Data Analysis

This section evaluates the performance of previously developed parameter estimators through Monte Carlo simulation studies. Additionally, a real-world data analysis is presented to validate the proposed methodologies.

5.1. Simulation Study

Without loss of generality, in the simulation, we set s = 2; that is, there are two latent risks.

To begin with, we generate competing risks sample data from the Burr-XII distributions randomly by the inverse transform sampling method. In this simulation, we set , , and . The sample size n takes values of , , and , respectively. In addition, we generate the left endpoints and interval lengths from exponential distributions with mean and , respectively. We consider the following combinations for the parameter pairs : , , and . By comparing actual lifetimes with the corresponding interval, we can obtain the middle-censored data.
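The data-generation step can be sketched in Python as follows. The sketch assumes the Burr-XII distribution function $1 - (1 + x^{b})^{-a}$ for each latent failure time and records the cause for exact observations only; the function names and the arguments `mean_left` and `mean_length` (the means of the two exponential distributions) are illustrative.

```python
import random


def burr_draw(a, b, rng):
    """Inverse transform draw from Burr-XII with first parameter a, second parameter b."""
    v = 1.0 - rng.random()  # uniform on (0, 1], plays the role of 1 - U
    return (v ** (-1.0 / a) - 1.0) ** (1.0 / b)


def simulate_middle_censored(n, a, b, mean_left, mean_length, rng):
    """Return (exact, censored): exact = [(time, cause)], censored = [(left, right)]."""
    exact, censored = [], []
    for _ in range(n):
        latent = [burr_draw(a_j, b, rng) for a_j in a]
        lifetime = min(latent)
        cause = latent.index(lifetime) + 1
        left = rng.expovariate(1.0 / mean_left)            # expovariate takes a rate
        right = left + rng.expovariate(1.0 / mean_length)
        if left < lifetime < right:
            censored.append((left, right))                 # only the interval is kept
        else:
            exact.append((lifetime, cause))
    return exact, censored
```

Calling `simulate_middle_censored(50, [0.5, 1.0], 2.0, 1.0, 1.0, random.Random(1))` produces one sample with two latent risks; the parameter values in this call are placeholders, not the settings of the simulation study.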

Then, we can calculate the MLEs and 95% ACIs for , , and b by employing the optim function or the EM algorithm.

For Bayesian estimation, regarding the informative prior, to guarantee that the expected value of the prior distribution of b coincides with the true value, we set that b has an exponential prior distribution with . In addition, we select the hyper-parameters as and . This method to select hyper-parameters has been used by some scholars such as [8,21].

In practical scenarios, researchers often lack prior knowledge, necessitating the use of non-informative priors in Bayesian methodologies. For parameters , where Gamma distributions are adopted as the prior, it is common practice to set the shape parameter to a decimal value close to 0 in the calculation process. This approach aligns with established methodologies in existing literature, such as references [8,20].

For parameter b, we introduce an improper uniform prior density, defined over the positive real numbers (). Then, the generalized non-informative prior density function of b and the PDF of the joint posterior distribution function can be expressed as

Through the derivation combined (32), (33), (35), and (37), in the process of Gibbs sampling, we just need to set in the procedure. It is worth noting that when using a non-informative prior, the method for generating initial values of , , and needs to be adjusted in the first step of Gibbs sampling to avoid generating 0.

We then derive the Bayesian estimates and the associated HPD credible intervals by Gibbs sampling. The sampling process consists of iterations, during which the first iterations are seen as the burn-in period and are discarded.

After repeating the process above for times, we can compare the performance of all point estimators numerically via bias and mean squared error (MSE). In addition, the outcomes of interval estimators can be assessed by means of average width (AW) and coverage percentages (CP).
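The four performance measures can be computed from the replicated results as in the following Python sketch. It assumes that `estimates` is the list of point estimates of one parameter across replications and that `intervals` is the matching list of (lower, upper) limits; the function names are illustrative.

```python
def bias(estimates, truth):
    """Average estimate minus the true parameter value."""
    return sum(estimates) / len(estimates) - truth


def mse(estimates, truth):
    """Mean squared error of the estimates around the true value."""
    return sum((e - truth) ** 2 for e in estimates) / len(estimates)


def average_width(intervals):
    """Average width (AW) of the interval estimates."""
    return sum(upper - lower for lower, upper in intervals) / len(intervals)


def coverage(intervals, truth):
    """Coverage percentage (CP), as a fraction of intervals containing the truth."""
    return sum(1 for lower, upper in intervals if lower <= truth <= upper) / len(intervals)
```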

In this study, the code was executed in R (version 4.3.2) on a Lenovo XiaoXinPro 16ACH laptop equipped with an 8-core AMD Ryzen 7 5800H processor with Radeon Graphics, 16 GB of RAM running at 3200 MT/s, and a 512 GB solid-state drive.

For the EM algorithm, a suitable value of the tolerance threshold must be selected to balance time cost and accuracy. We run one of the schemes and compare the estimation accuracy (according to the MSEs) under different threshold values to identify an appropriate one. We then carry out the estimation for the other schemes using this value. As shown in Table 1, reducing the threshold below 0.0001 does not significantly improve the MSEs of the parameter estimates. Thus, 0.0001 is chosen as the threshold.

Table 1.

MSEs of the MLE obtained by EM algorithm with different values of .

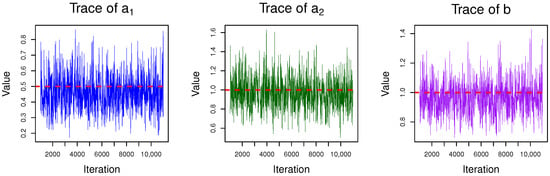

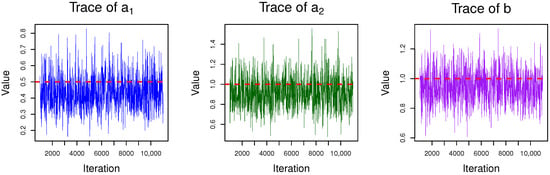

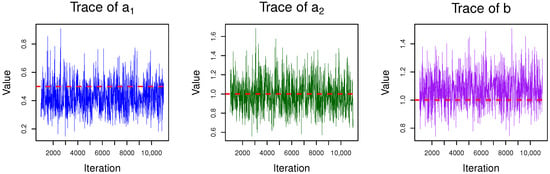

Before conducting Bayesian estimation, it is essential to verify the convergence of the Gibbs sampler to ensure the reliability of posterior inference. To this end, we evaluate convergence across several representative censoring schemes through trace plots. Take the cases in which informative priors are used as examples. In Figure 3, Figure 4 and Figure 5, the trace plots of the three parameters all fluctuate around a stable value without an obvious trend after the burn-in period, indicating that with the given number of iterations (N = 11,000 and d = 1000) the Gibbs sampler has converged when estimating the parameters. The number of realizations (11,000) and the burn-in period (1000) are therefore sufficient for our purposes.

Figure 3.

Trace of parameters when and () = (0.5, 0.5).

Figure 4.

Trace of parameters when and () = (0.5, 1).

Figure 5.

Trace of parameters when and () = (1, 0.5).

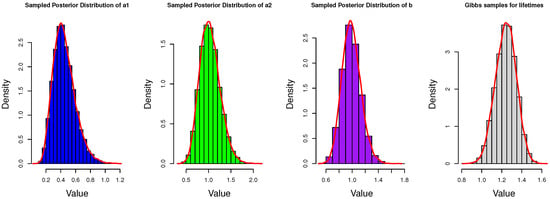

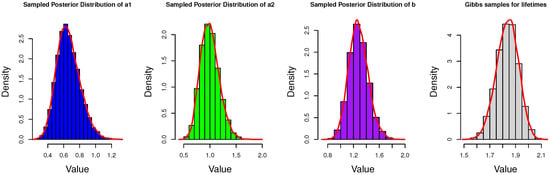

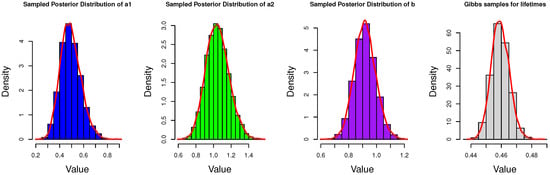

In addition, to show the details of Gibbs sampler and obtain more information of the posterior distributions, we present the histograms of Gibbs samples for unknown parameters and the estimated lifetimes of the censored data. Due to space limitations, only part of the results are presented here in Figure 6, Figure 7 and Figure 8.

Figure 6.

Histograms of Gibbs samples for parameters and censored lifetimes when n = 30 and .

Figure 7.

Histograms of Gibbs samples for parameters and censored lifetimes when n = 50 and .

Figure 8.

Histograms of Gibbs samples for parameters and censored lifetimes when n = 100 and .

Table 2, Table 3 and Table 4 show the results of the point estimators, including the MLEs and Bayesian estimators for $a_1$, $a_2$, and b. The two MLE columns refer to the results obtained by the optim function and by the EM algorithm, respectively. Prior 1 refers to the non-informative prior, while prior 2 refers to the informative prior. In addition, pc refers to the censoring proportion under the corresponding scheme. We consider different censoring schemes by setting different values of the parameters of the censoring-interval distributions.

Table 2.

Biases and MSEs corresponding to different estimates for .

Table 3.

Biases and MSEs corresponding to different estimates for .

Table 4.

Biases and MSEs corresponding to different estimates for b.

(1) Under different values of the censoring-interval parameters, which represent different censoring schemes, the biases and MSEs of each estimator are very similar. This suggests that the estimators are robust to the middle-censoring scheme.

(2) As anticipated, for every estimator, both the bias and the MSE decline as the sample size n becomes larger. This indicates an improvement in their performance.

(3) When it comes to the results of MLE of each parameter, the performance of the two methods, namely the optimization method and the EM algorithm, is quite similar.

(4) When the sample size n is small, Bayesian estimators exhibit lower MSEs than the MLEs, which indicates that the Bayesian method outperforms the classical frequentist method in small-sample scenarios.

(5) As expected, the Bayesian estimators under informative prior distribution perform better than those under non-informative prior distribution.

Table 5, Table 6 and Table 7 present the results of interval estimates of , , and b, where ‘CP’ refers to the coverage percentages and ‘AW’ refers to the average widths of interval estimates. ‘HPD-P1’ and ‘HPD-P2’ represent the HPD credible intervals under non-informative and informative prior distribution, respectively.

Table 5.

Average width and coverage percentage for interval estimation of when = 0.05.

Table 6.

Average width and coverage percentage for interval estimation of when = 0.05.

Table 7.

Average width and coverage percentage for interval estimation of b when = 0.05.

(1) As with the point estimates, the censoring scheme has relatively little influence on the performance of the interval estimators.

(2) As the sample size n grows, the interval widths decrease and the coverage percentages generally rise.

(3) Bayesian interval estimates under informative prior perform better than those under non-informative prior.
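The two criteria used in Tables 5 to 7, coverage percentage (CP) and average width (AW), can be computed from the replicated intervals as sketched below. The function name and the toy intervals are hypothetical and serve only to illustrate the definitions.

```python
def coverage_and_width(intervals, true_value):
    """Return (coverage proportion, average width) for a list of (lower, upper) intervals."""
    n = len(intervals)
    # An interval "covers" when the true parameter value lies inside it.
    covered = sum(1 for lower, upper in intervals if lower <= true_value <= upper)
    average_width = sum(upper - lower for lower, upper in intervals) / n
    return covered / n, average_width


if __name__ == "__main__":
    # Three toy intervals for a parameter whose true value is 2.0.
    cp, aw = coverage_and_width([(0.0, 2.0), (1.0, 3.0), (2.5, 4.0)], 2.0)
    print(f"CP={cp:.3f}, AW={aw:.3f}")
```

A well-calibrated interval procedure has CP close to the nominal level, and among procedures with similar CP the one with the smaller AW is preferred.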

5.2. Real Data Analysis

In this subsection, we use real-world data from [9] to demonstrate the inference framework developed in this study.

The dataset comes from the National Eye Institute diabetic retinopathy study, whose purpose is to examine whether laser treatment delays the onset of blindness in patients with diabetic retinopathy. With respect to the i-th participant, represents the time to blindness of the eye that received laser treatment, while refers to the time to blindness of the untreated eye. Then, the time of first onset of blindness . Therefore, this dataset exhibits the characteristics of competing risks data. The original data with the corresponding causes are given in Table 8.

Table 8.

The original data of the blind time with corresponding cause.

To ensure that the solution exists, we divide the data by 365 so that the time unit is years. Before further analysis, we first need to check whether the Burr-XII distribution is appropriate for this dataset. Separately for failure causes 1 and 2, we use the complete data to compute the MLEs of the unknown parameters and then apply the Kolmogorov–Smirnov test. For cause 1, the MLEs of and are 0.9033 and 2.3703, respectively. The Kolmogorov–Smirnov statistic is 0.1168 with corresponding p-value . For cause 2, the MLEs of and are 0.9237 and 2.4605, respectively. The Kolmogorov–Smirnov statistic is 0.1470 with corresponding p-value 0.4324. Consequently, we conclude that the Burr-XII distribution can be used to fit this dataset.
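The goodness-of-fit check described above can be sketched as follows. This is an illustration rather than the authors' procedure. It assumes the Burr-XII CDF is parametrized as F(x) = 1 - (1 + x^b)^(-alpha), and it uses the asymptotic Kolmogorov distribution for the p-value, which may differ from the exact p-values produced by statistical software. All function names are hypothetical.

```python
import math


def burr12_cdf(x, alpha, b):
    """Burr-XII CDF under the assumed parametrization F(x) = 1 - (1 + x^b)^(-alpha)."""
    if x <= 0:
        return 0.0
    return 1.0 - (1.0 + x ** b) ** (-alpha)


def ks_statistic(data, cdf):
    """One-sample Kolmogorov-Smirnov statistic D = sup |F_n(x) - F(x)|."""
    xs = sorted(data)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs, start=1):
        f = cdf(x)
        # Compare F with the empirical CDF just after and just before x.
        d = max(d, i / n - f, f - (i - 1) / n)
    return d


def ks_pvalue_asymptotic(d, n, terms=100):
    """Asymptotic p-value 2 * sum_{k>=1} (-1)^(k-1) * exp(-2 k^2 n d^2)."""
    lam = math.sqrt(n) * d
    if lam < 0.2:
        return 1.0  # the series is numerically 1 for very small arguments
    total = sum((-1) ** (k - 1) * math.exp(-2.0 * k * k * lam * lam)
                for k in range(1, terms + 1))
    return min(1.0, max(0.0, 2.0 * total))


if __name__ == "__main__":
    # Hypothetical lifetimes (in years) and hypothetical fitted parameters.
    data = [0.3, 0.5, 0.8, 1.1, 1.4, 2.0, 2.6]
    d = ks_statistic(data, lambda x: burr12_cdf(x, alpha=1.0, b=2.0))
    print(d, ks_pvalue_asymptotic(d, len(data)))
```

A large p-value means the test finds no evidence against the fitted Burr-XII model, which is how the reported values are interpreted above.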

In addition, needs to be tested. We use the likelihood ratio test. Based on the sample data, the corresponding p-value is very close to 1, which indicates that the hypothesis “” is reasonable.
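As a rough illustration of the likelihood ratio test, the sketch below computes the test statistic and its p-value from two maximized log-likelihoods. It assumes that the null hypothesis imposes a single restriction, so that the statistic is compared with a chi-square distribution with one degree of freedom. That assumption, the function name and the numerical values are not taken from the original analysis.

```python
import math


def lrt_pvalue_df1(loglik_restricted, loglik_full):
    """Likelihood ratio statistic and p-value for one restriction (assumed df = 1)."""
    stat = 2.0 * (loglik_full - loglik_restricted)
    stat = max(stat, 0.0)  # guard against tiny negative values from numerical optimization
    # For a chi-square variable with 1 degree of freedom, P(X > s) = erfc(sqrt(s / 2)).
    p_value = math.erfc(math.sqrt(stat / 2.0))
    return stat, p_value


if __name__ == "__main__":
    # Hypothetical log-likelihoods: nearly equal fits give a p-value close to 1.
    print(lrt_pvalue_df1(loglik_restricted=-120.3002, loglik_full=-120.3000))
```

When the restricted and unrestricted fits have almost the same log-likelihood, the statistic is near zero and the p-value is near 1, which matches the situation reported above.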

Next, we generate artificial middle-censored data. The left endpoint and the length of each censoring interval are random variables following the exponential distribution with mean 1. The processed data (measured in years) are presented in Table 9.
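The censoring step can be sketched as follows. This is an assumption-laden illustration: a lifetime is treated as censored when it falls strictly inside its random interval, the interval is built from a left endpoint and a length that are both exponential with mean 1, and the names `middle_censor` and `censoring_proportion` are hypothetical.

```python
import random


def middle_censor(lifetimes, rng, mean_left=1.0, mean_length=1.0):
    """Apply middle-censoring to exact lifetimes.

    Returns a list of ("exact", t, t) or ("interval", left, right) tuples.
    """
    observations = []
    for t in lifetimes:
        left = rng.expovariate(1.0 / mean_left)             # left endpoint
        right = left + rng.expovariate(1.0 / mean_length)   # left endpoint + interval length
        if left < t < right:
            # The lifetime falls inside the interval, so only the interval is recorded.
            observations.append(("interval", left, right))
        else:
            observations.append(("exact", t, t))
    return observations


def censoring_proportion(observations):
    """Fraction of units whose lifetime is only known up to an interval."""
    censored = sum(1 for kind, _, _ in observations if kind == "interval")
    return censored / len(observations)


if __name__ == "__main__":
    rng = random.Random(2025)
    lifetimes = [0.2, 0.9, 1.5, 2.7, 4.0]  # hypothetical lifetimes in years
    obs = middle_censor(lifetimes, rng)
    print(obs, censoring_proportion(obs))
```

In a competing risks setting, each lifetime would additionally carry its cause indicator, which this sketch omits.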

Table 9.

The artificial middle-censored data.

Since we have no prior information about , and b, we employ non-informative priors for them.

Using the estimation methods and procedures outlined above, we obtain point and interval estimates for all unknown parameters. The results are shown in Table 10, where ‘’ and ‘’ denote the MLEs obtained by the optim function and the EM algorithm, respectively, and ‘BE’ denotes the Bayesian point estimator. The two methods give the same MLEs, and the HPD intervals are narrower than the ACIs.

Table 10.

The result of different point and interval estimates for .
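Given posterior draws of a parameter, the Bayesian point estimate under squared error loss is the posterior mean, and an HPD interval can be approximated by the shortest interval containing the required share of the sorted draws. The sketch below follows this idea in the spirit of the Chen and Shao approach. The index convention, the function names and the toy draws are assumptions made for illustration.

```python
import math
import statistics


def posterior_mean(samples):
    """Bayes estimate under squared error loss: the mean of the posterior draws."""
    return statistics.fmean(samples)


def hpd_interval(samples, cred_mass=0.95):
    """Shortest interval containing ceil(cred_mass * n) of the sorted draws."""
    xs = sorted(samples)
    n = len(xs)
    k = max(1, math.ceil(cred_mass * n))  # number of draws inside the interval
    # Slide a window of k consecutive order statistics and keep the narrowest one.
    best = min(range(n - k + 1), key=lambda i: xs[i + k - 1] - xs[i])
    return xs[best], xs[best + k - 1]


if __name__ == "__main__":
    # Toy draws standing in for the output of a Gibbs sampler.
    draws = [10, 0, 1, 2, 3, 4, 50, 60, 70, 80]
    print(posterior_mean(draws), hpd_interval(draws, cred_mass=0.5))
```

For a unimodal posterior, this empirical interval concentrates around the mode, which is why HPD intervals tend to be no wider than equal-tailed credible intervals of the same level.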

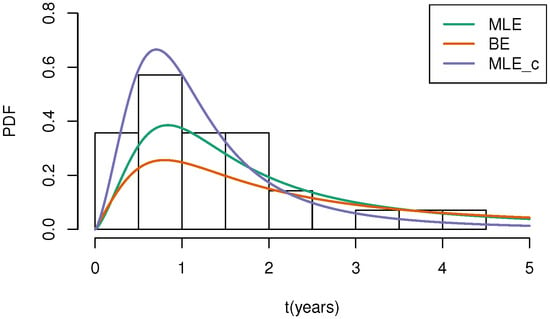

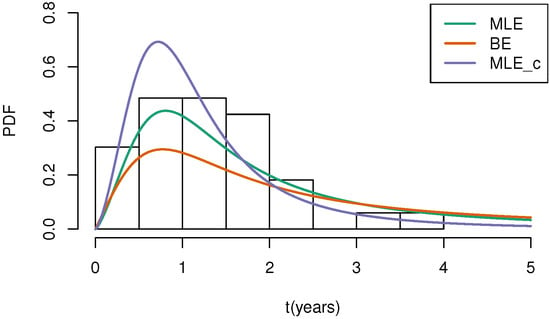

Based on the estimated values of parameters , and b, we can draw plots that combine the fitted probability density curves with the frequency histograms of the complete data for the two causes, respectively.

In Figure 9 and Figure 10, ‘MLE’ denotes the density curve based on the MLEs under middle-censored data, ‘BE’ denotes the curve based on the Bayes estimates under middle-censored data, and ‘MLE_c’ denotes the density curve with parameter estimates derived from the complete data. Figures 9 and 10 show that the estimated density curves are generally consistent with the frequency distribution of the real dataset and that their peaks lie close to the peak of the data, which supports the practical applicability of our model.

Figure 9.

The fitted PDF plots for sample data corresponding to cause 1.

Figure 10.

The fitted PDF plots for sample data corresponding to cause 2.
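To make the comparison of peak locations concrete, the following sketch evaluates a Burr-XII density and its mode. It assumes the parametrization f(x) = alpha * b * x^(b-1) * (1 + x^b)^(-alpha-1) for x > 0, under which the mode is ((b - 1) / (alpha * b + 1))^(1/b) when b > 1 and 0 otherwise. The parameter values used are hypothetical and are not the estimates from Table 10.

```python
def burr12_pdf(x, alpha, b):
    """Burr-XII density under the assumed parametrization."""
    if x <= 0:
        return 0.0
    return alpha * b * x ** (b - 1) * (1.0 + x ** b) ** (-alpha - 1.0)


def burr12_mode(alpha, b):
    """Location of the density peak; the density is decreasing when b <= 1."""
    if b <= 1:
        return 0.0
    return ((b - 1.0) / (alpha * b + 1.0)) ** (1.0 / b)


if __name__ == "__main__":
    alpha, b = 1.0, 2.0  # hypothetical parameter values
    mode = burr12_mode(alpha, b)
    print(mode, burr12_pdf(mode, alpha, b))
```

Evaluating `burr12_pdf` on a grid of x values gives the fitted curve that would be overlaid on the histogram of the complete data.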

6. Conclusions

This study concentrates on statistical inference for middle-censored data from the Burr-XII distribution under an independent competing risks framework. We apply both frequentist and Bayesian approaches to derive parameter estimates.

In the frequentist approach, we present the maximum likelihood estimates and prove their existence and uniqueness. Using the observed Fisher information matrix, we construct the ACIs.

For Bayesian estimation, we use adaptive rejection sampling within Gibbs sampling to compute the Bayesian estimates under the squared error loss function, along with the corresponding HPD credible intervals.

Moreover, a simulation study is performed to assess the performance of the various estimators. The comparison shows that, for small samples, Bayesian estimation with prior information performs better. The interval estimates obtained using the Bayesian method have shorter widths. Finally, we apply our model to a real medical dataset, which confirms that the model is reasonable.

Although the current study provides useful results on estimation for middle-censored data with independent competing risks, there are several areas for future improvement and exploration. One possible direction is to extend the analysis by allowing the value of b in the Burr-XII distribution to vary across failure causes. Another important direction is the consideration of dependent competing risks. In this study, we rely on the basic assumption of independent competing risks, but in real-world scenarios the risks may not always be independent. Exploring the implications of dependent competing risks would offer a more comprehensive analysis under complex conditions.

Author Contributions

Conceptualization: S.L. and W.G.; Methodology: S.L. and W.G.; Software: S.L.; Investigation: S.L.; Writing—Original Draft: S.L.; Writing—Review & Editing: W.G.; Supervision: W.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by Project 202510004172 of the National Training Program of Innovation and Entrepreneurship for Undergraduates. Wenhao’s work was partially supported by the Science and Technology Research and Development Project of China State Railway Group Company, Ltd. (No. N2023Z020).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are openly available in [9].

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Jammalamadaka, S.R.; Mangalam, V. Nonparametric estimation for middle-censored data. J. Nonparametr. Stat. 2003, 15, 253–265.

- Jammalamadaka, S.R.; Iyer, S.K. Approximate self consistency for middle-censored data. J. Stat. Plan. Inference 2004, 124, 75–86.

- Iyer, S.K.; Jammalamadaka, S.R.; Kundu, D. Analysis of middle-censored data with exponential lifetime distributions. J. Stat. Plan. Inference 2008, 138, 3550–3560.

- Davarzani, N.; Parsian, A. Statistical inference for discrete middle-censored data. J. Stat. Plan. Inference 2011, 141, 1455–1462.

- Jammalamadaka, S.R.; Bapat, S.R. Middle censoring in the multinomial distribution with applications. Stat. Probab. Lett. 2020, 167, 108916.

- Abuzaid, A.H.; El-Qumsan, M.K.A.; El-Habil, A.M. On the robustness of right and middle censoring schemes in parametric survival models. Commun. Stat. Simul. Comput. 2015, 46, 1771–1780.

- Wang, L. Estimation for exponential distribution based on competing risk middle censored data. Commun. Stat. Theory Methods 2016, 45, 2378–2391.

- Ahmadi, K.; Rezaei, M.; Yousefzadeh, F. Statistical analysis of middle censored competing risks data with exponential distribution. J. Stat. Comput. Simul. 2017, 87, 3082–3110.

- Wang, Y.; Shi, Y.; Wu, M. Statistical inference for dependence competing risks model under middle censoring. J. Syst. Eng. Electron. 2019, 30, 209–222.

- Davarzani, N.; Parsian, A.; Peeters, R. Statistical Inference on Middle-Censored Data in a Dependent Setup. J. Stat. Res. 2014, 9, 646–657.

- Sankaran, P.G.; Prasad, S. Weibull Regression Model for Analysis of Middle-Censored Lifetime Data. J. Stat. Manag. Syst. 2014, 17, 433–443.

- Rehman, H.; Chandra, N. Inferences on cumulative incidence function for middle censored survival data with Weibull regression. J. Appl. Stat. 2022, 5, 65–86.

- Sankaran, P.G.; Prasad, S. Additive risks regression model for middle censored exponentiated-exponential lifetime data. Commun. Stat. Simul. Comput. 2018, 47, 1963–1974.

- Zimmer, W.J.; Keats, J.B.; Wang, F.K. The Burr XII Distribution in Reliability Analysis. J. Qual. Technol. 1998, 30, 386–394.

- Soliman, A.A. Estimation of Parameters of Life from Progressively Censored Data Using Burr-XII Model. IEEE Trans. Reliab. 2005, 54, 34–42.

- Yan, W.; Li, P.; Yu, Y. Statistical inference for the reliability of Burr-XII distribution under improved adaptive Type-II progressive censoring. Appl. Math. Model. 2021, 95, 38–52.

- Du, Y.; Gui, W. Statistical inference of Burr-XII distribution under adaptive type II progressive censored schemes with competing risks. Results Math. 2022, 77, 81.

- Abuzaid, A.H. The estimation of the Burr-XII parameters with middle-censored data. J. Appl. Probab. Stat. 2015, 4, 101.

- Cox, D.R. The analysis of exponentially distributed life-times with two types of failure. J. R. Stat. Soc. Ser. B Stat. Methodol. 1959, 21, 411–421.

- Qin, X.; Gui, W. Statistical inference of Burr-XII distribution under progressive Type-II censored competing risks data with binomial removals. J. Comput. Appl. Math. 2020, 378, 112922.

- Chacko, M.; Mohan, R. Bayesian analysis of Weibull distribution based on progressive type-II censored competing risks data with binomial removals. Comput. Stat. 2018, 34, 233–252.

- Dempster, A.P.; Laird, N.M.; Rubin, D.B. Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc. Ser. B 1977, 39, 1–22.

- Fisher, R.A. On the mathematical foundations of theoretical statistics. Philos. Trans. R. Soc. Lond. Ser. A Contain. Pap. A Math. Phys. Character 1922, 222, 309–368.

- Gilks, W.R.; Wild, P. Adaptive Rejection Sampling for Gibbs Sampling. Appl. Stat. 1992, 41, 337–348.

- Chen, M.-H.; Shao, Q.-M. Monte Carlo Estimation of Bayesian Credible and HPD Intervals. J. Comput. Graph. Stat. 1999, 8, 69–92.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).