Abstract

This article presents the application of a genetic algorithm to the Euclidean Steiner problem in spaces of dimensionality greater than 2. The Euclidean Steiner problem consists of finding the shortest network that connects a given set of points in a multi-dimensional space, where additional points, called Steiner points, may be introduced. The focus of this research is to compare several settings of the method, including the crossover operator and the sorting of the input data. The paper shows that significant improvement in results can be achieved through proper initialization of the population, which depends on an appropriate sorting of the vertices. Two approaches are proposed, one based on the nearest neighbor method and the other on the construction of a minimum spanning tree.

1. Introduction

The Euclidean Steiner Tree Problem (ESTP) in $\mathbb{R}^n$ concerns finding the shortest network of connections for a set of points P. This network forms a tree, and its length is the sum of the lengths of the edges connecting the vertices. If no additional points can be used to connect the points in P, the problem reduces to finding a minimum spanning tree (MST) for P, which can be solved efficiently with algorithms such as Prim’s algorithm. However, if additional intermediate points, known as Steiner points, may be added, shorter networks (Steiner Minimal Trees, SMT) can be obtained. This is a significantly harder problem, because both the number and the locations of the Steiner points are unknown. It has been proven to be NP-hard both in the Euclidean plane and in higher-dimensional spaces.

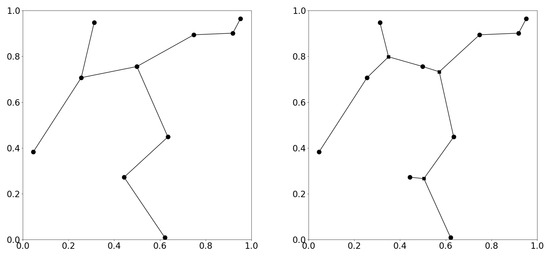

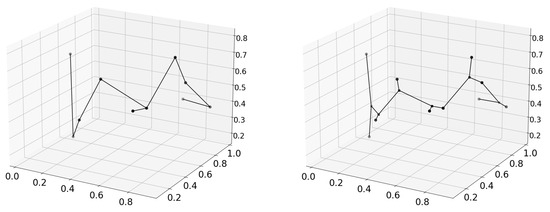

Figure 1 shows an example of a minimal spanning tree and a minimal Steiner tree for ten points in 2D space. A similar example is presented in Figure 2 for ten points in 3D space. In both cases, the total edge length of the SMT is smaller than that of the MST.

Figure 1.

An example of a minimal spanning tree (left) and a minimal Steiner tree (right) in 2D. Additional Steiner points are shown as square markers.

Figure 2.

An example of a minimal spanning tree (left) and a minimal Steiner tree (right) in 3D. Additional Steiner points are shown as square markers.

The ESTP in the Euclidean plane is particularly noteworthy because efficient algorithms exist that can solve instances with thousands of terminals. An example of such an algorithm is GeoSteiner. However, efficient algorithms in 2D rely on properties of the Steiner problem specific to the plane and cannot be used to develop equally efficient algorithms in 3D or in higher-dimensional spaces.

The aim of this study is to exploit the potential of the genetic algorithm to build a search algorithm that explores the space of possible full Steiner topologies (FSTs) and evaluates the so-called relatively minimal trees (RMTs), which are obtained from them by optimizing the positions of the Steiner points. Among the evaluated RMTs, the one with the smallest total edge length is selected as the approximation of the SMT. All necessary definitions and properties of the structures used are given in the following sections. The proposed algorithm is tested on sets of problems in 3D space with sizes $p = 17$ and $p = 100$.

1.1. Definitions

The following definitions and properties of the ESTP are used in this paper. They hold in Euclidean space $\mathbb{R}^n$ for any n.

Points in P are called terminals, and their number is denoted by p. The additional points, forming a set S, are Steiner points. For an optimal solution to an ESTP of size p, the maximum number of Steiner points in the SMT is $p - 2$. The SMT also satisfies the degree conditions: (1) a terminal from P has degree 1, 2, or 3; (2) a Steiner point has degree exactly 3. The angle condition can be formulated as follows: every Steiner point and the three points it is connected to lie in a plane, and the three edges meeting at the Steiner point form angles of 120 degrees. A tree that satisfies the angle condition is called a Steiner tree.

If we fix the number of Steiner points and the way they are connected to the vertices from P, but not the locations of the Steiner points, we obtain a Steiner topology. If a topology uses the maximum number of Steiner points, $p - 2$, it is called a full Steiner topology (FST). A full topology describes a Steiner tree in which each terminal has degree 1.

As mentioned, an FST does not determine the positions of the Steiner points. If these positions are optimized so that the resulting tree has the minimum total edge length, a so-called relatively minimal tree (RMT) for the given topology is obtained. If, during this optimization, Steiner points coincide with terminals or with other Steiner points, the resulting RMT is referred to as a degeneration of the given topology. An RMT always exists for a given topology. A very important property of the problem is that for the SMT of a given ESTP there always exists an FST whose RMT is equal to that SMT.

From the above description, a general approach to the ESTP emerges: for a given set P, construct successive FSTs, find their RMTs, and return the smallest of them as the SMT. Many exact algorithms approach the ESTP in this way while trying to limit the number of FSTs checked; a review of these methods can be found in [1]. This paper takes a different approach. Instead of developing methods to limit the number of FSTs checked, it is accepted from the outset that not all of them will be examined, and a genetic algorithm is used to search the space of FSTs. This matters because the number of possible FSTs grows very rapidly with p.

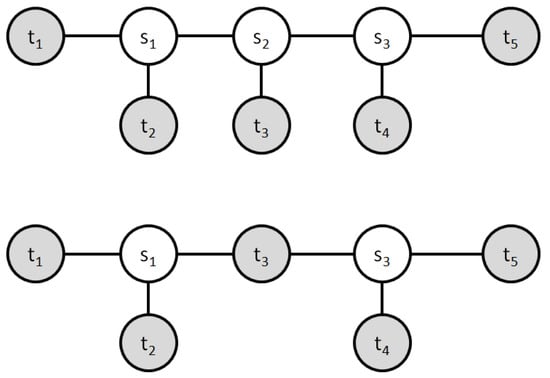

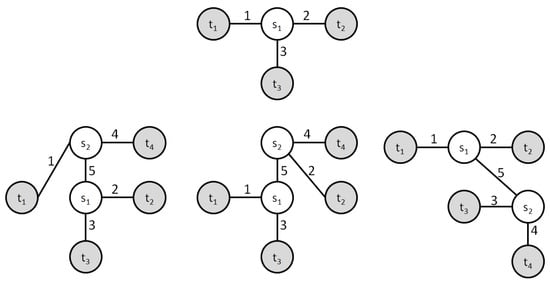

Figure 3 shows an example of an FST for $p = 5$. Note that the coordinates of the vertices are irrelevant here; only their mutual connections matter. Consequently, when constructing an FST, the order in which terminals are added through Steiner points is crucial, a fact exploited by the proposed genetic algorithm. Figure 4 gives more details on the process of building an FST. Even if the order in which terminals are added is fixed, many different topologies can still be constructed, depending on which edge the new terminal is attached to through a new Steiner point. For three terminals, there is only one way to connect them using one Steiner point. As Figure 4 shows, the fourth terminal can then be added to the developing FST in three different ways through a new Steiner point. Starting from the initial three edges, each added Steiner point and terminal increases the number of edges by 2, because the Steiner point splits an existing edge into two and introduces one new edge to the terminal. Therefore, the fifth terminal can be added in five ways. In general, the k-th Steiner point can be added in $2k - 1$ ways, for $k = 2, \dots, p-2$, assuming the first Steiner point connects the first three terminals.
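The incremental construction can be illustrated with a short Python sketch. It is a minimal illustration under our own assumptions, not the authors’ code: terminals are numbered 0, …, p − 1 in the adopted attachment order, Steiner points p, …, 2p − 3, and edges are numbered in creation order, with a split edge keeping its index for the half that retains its first endpoint. The function name `build_fst` and this edge numbering are hypothetical and need not match the convention used in Smith’s implementation.

```python
from typing import List, Sequence, Tuple

Edge = Tuple[int, int]


def build_fst(p: int, choices: Sequence[int]) -> List[Edge]:
    """Build a full Steiner topology (edge list) for terminals 0..p-1.

    Hypothetical convention: Steiner point k (k = 1..p-2) has vertex index
    p + k - 1; choices[k - 2] is the 0-based index of the edge split by
    Steiner point k, which must lie in 0..2k-2.
    """
    if p < 3 or len(choices) != p - 3:
        raise ValueError("need p >= 3 and exactly p - 3 choices")
    # The first Steiner point (index p) joins terminals 0, 1, 2 in the only possible way.
    edges: List[Edge] = [(0, p), (1, p), (2, p)]
    for k, a in enumerate(choices, start=2):
        steiner = p + k - 1   # vertex index of Steiner point k
        terminal = k + 1      # next terminal in the attachment order (0-based)
        if not 0 <= a < len(edges):  # len(edges) == 2k - 1 at this point
            raise ValueError(f"choice {a} out of range for Steiner point {k}")
        u, v = edges[a]
        # Split edge (u, v) with the new Steiner point, then hang the terminal on it.
        edges[a] = (u, steiner)
        edges.append((steiner, v))
        edges.append((terminal, steiner))
    return edges


print(build_fst(4, [1]))  # [(0, 4), (1, 5), (2, 4), (5, 4), (3, 5)]
```

With p = 4, the three admissible choices yield three distinct topologies, in line with Figure 4, and every full topology has 2p − 3 edges, since each of the p − 3 insertions adds two edges to the initial three.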

Figure 3.

An example of a full Steiner topology (top) and its degeneracy (bottom) for five terminals (gray circles). Additional Steiner points are shown as white circles.

Figure 4.

Construction of full Steiner topologies (terminal points shown as gray and Steiner points as white circles). Top: the unique full Steiner topology for three terminals with just one Steiner point. Bottom: the three full Steiner topologies for four terminals. The new terminal is attached through a new Steiner point placed on edge 1 (left), edge 2 (middle), or edge 3 (right).

The number of possible full topologies increases very rapidly with p. Since the k-th Steiner point can be added in $2k - 1$ ways, for $k = 2, \dots, p-2$, this number is

$$N(p) = \prod_{k=2}^{p-2} (2k-1) = (2p-5)!! = \frac{(2p-4)!}{2^{p-2}\,(p-2)!}.$$

This reflects the combinatorial complexity of the problem, illustrating how the space of potential solutions expands with each additional terminal. Starting from $p = 12$, this value exceeds $p!$ (654,729,075 vs. 479,001,600).
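The growth of this count can be checked numerically. The sketch below (hypothetical helper names) multiplies the 2k − 1 choices for k = 2, …, p − 2 and searches for the smallest p at which the count exceeds p!:

```python
from math import factorial, prod


def num_full_topologies(p: int) -> int:
    """Number of full Steiner topologies for p >= 3 terminals: product of (2k - 1), k = 2..p-2."""
    return prod(2 * k - 1 for k in range(2, p - 1))


def first_p_exceeding_factorial(limit: int = 100) -> int:
    """Smallest p >= 3 for which the number of full topologies exceeds p!."""
    for p in range(3, limit):
        if num_full_topologies(p) > factorial(p):
            return p
    raise ValueError("no such p below limit")


print(num_full_topologies(12), factorial(12))  # 654729075 479001600
print(first_p_exceeding_factorial())  # 12
```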

1.2. Related Work

The Steiner Minimal Tree problem has diverse applications in many technological and scientific domains. One of its primary applications is network design, for example, of telecommunications and computer networks, where it helps minimize the total length of the network, reducing costs and increasing the efficiency of the infrastructure. Reference [2] provides a comprehensive overview of the SMT problem in various contexts, including network design. In electronics, the SMT problem is crucial for optimizing circuit layout in VLSI (Very Large-Scale Integration) design [3], where the objective is to minimize the total wire length, which is critical for improving performance and reducing power consumption. In biology, the SMT is used in phylogenetics to infer the shortest evolutionary tree, i.e., the most parsimonious tree connecting different species based on genetic markers [4]. In robotics, Steiner trees can be used for path planning, especially when navigating complex environments where paths need to be as efficient as possible. A good overview of industrial applications of the Steiner problem is provided in [5]. The Steiner problem also appears within other, hybrid optimization problems, such as the Steiner Traveling Salesman Problem [6].

For the ESTP in two dimensions, an exact and efficient algorithm was proposed in [7]. The GeoSteiner program [8] is a successful implementation of this algorithm, which is based on the efficient enumeration of all topologies. However, the methods used in this two-dimensional approach cannot be directly transferred to higher dimensions. In [9], a solution to the ESTP in higher-dimensional spaces was proposed through the enumeration of all topologies, but this approach is inefficient. One of the most important approaches to solving the ESTP exactly in $\mathbb{R}^n$ is the branch-and-bound method presented by Smith in [10]. Its practical usefulness, however, largely depends on how well the lower bound can be estimated at the nodes of the search tree. Other studies have tried to improve Smith’s algorithm, for example, [11]; however, the results presented there were for problems with 18 terminal points, i.e., relatively small-scale problems. Another work proposing improvements to Smith’s algorithm is [12]. In [13], the ESTP in $\mathbb{R}^n$ was formulated as a convex mixed-integer nonlinear program (MINLP), and results were presented for problems with 10 terminal points. A good overview of algorithms for finding exact solutions to the ESTP is provided in [1]. An excellent, up-to-date introduction to the Euclidean Steiner problem and the challenges of higher-dimensional spaces is presented in [14].

The Iterated Local Search algorithm was proposed in [15] as an example of a heuristic approach. The genetic algorithm has been applied to the ESTP in two-dimensional space in [16,17,18,19]. Other evolutionary algorithms, such as memetic algorithms, have also been developed for the ESTP [20]. Another example is the application of a particle swarm optimization algorithm to optimize a network connecting cities in China [21]. Further heuristics, such as Monte Carlo Tree Search, were explored in [22]. The genetic algorithm has also been applied to other variants of the Steiner problem, for instance, the rectilinear Steiner tree problem [23] and the Prize-Collecting Steiner Tree Problem [24]. However, the application of the genetic algorithm to the ESTP in $\mathbb{R}^n$ for $n > 2$ remains unexplored. This publication aims to fill that gap by presenting a new approach based on a genetic algorithm and testing the significance of its various parameters. We do not attempt an exhaustive comparison with other existing heuristics, such as [25], leaving a more comprehensive experimental comparison for future research.

1.3. The Contribution of This Work

This publication addresses a specific gap in the use of genetic algorithms for optimizing connection networks in Euclidean space. Specifically, it presents an evolutionary heuristic tailored to the ESTP in $\mathbb{R}^n$ for $n > 2$ and shows how to represent the problem as a discrete optimization problem. The impact of various factors on the quality of the solutions obtained is examined. In particular, the proposed method of generating the initial population, a heuristic that sorts the vertices according to the order in which Prim’s algorithm adds them to the minimum spanning tree, was found to have a significant influence on the results. Different configurations of the algorithm were tested on sets of problems with 17 and 100 points, and the results were subjected to a statistical significance analysis.

2. Proposed Algorithm

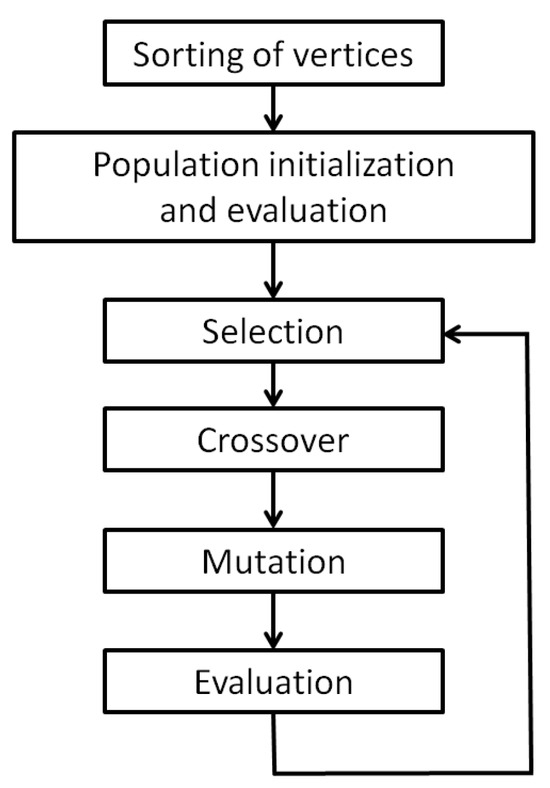

The proposed solution is based on a genetic algorithm, a meta-heuristic often used for challenging optimization problems, including combinatorial ones. It simulates the Darwinian process of natural selection: selective pressure favors the better solutions in the current population, and the selected solutions are used to generate new, potentially better ones by applying suitable operators. These operators typically include a crossover operator, which generates a new solution from two selected ones, and a mutation operator, which creates a new solution by randomly altering an existing one. Repeatedly performing selection, crossover, and mutation, combined with a procedure for evaluating the quality of each new solution, has significant practical potential for locating regions of the search space with high-quality solutions. Being a heuristic, the genetic algorithm does not guarantee finding the optimal solution; in practice, however, it often provides satisfactory solutions within a reasonable time. As a meta-heuristic, it is a general approach that must be adapted to a specific problem. First and foremost, this requires choosing a data structure capable of representing (encoding) a solution of the given optimization problem; this choice also dictates the possible crossover and mutation operations. A schematic representation of the genetic algorithm is shown in Figure 5. The following sections describe the individual steps and the assumptions made to adapt the genetic algorithm to the ESTP in Euclidean space of any dimensionality.

Figure 5.

General scheme of the genetic algorithm.

2.1. Data Structure

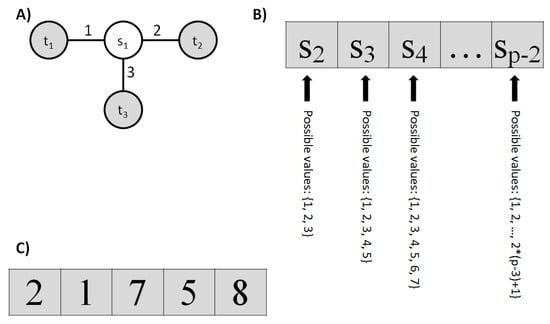

In the ESTP, both the number and the coordinates of the Steiner points must be determined. A naive approach would encode these two pieces of information directly. This, however, ignores earlier algorithmic results for the ESTP, including the ability to compute the RMT of a given FST efficiently. Such an algorithm was given by Smith [10], who also proposed an efficient way of encoding a given FST. This encoding is very simple and follows directly from the process, described above, of building an FST by adding successive terminals, each through a new Steiner point.

Here, we assume that the terminals are attached in some fixed order; how this order is chosen is discussed in Section 2.2. The construction of the FST begins by connecting the first three terminals with the first Steiner point. The fourth terminal can then be attached in three ways, the fifth in five, the sixth in seven; in general, the k-th Steiner point (which attaches the (k+2)-th terminal) can be added in $2k - 1$ ways, for $k = 2, \dots, p-2$. This leads to the proposed data structure: a vector of integers $a = (a_2, a_3, \dots, a_{p-2})$ of length $p - 3$, in which the admissible values of $a_k$ depend on its position, namely $a_k \in \{0, 1, \dots, 2k-2\}$. There is no need to encode the unique way the first Steiner point is connected. During random initialization of the population, each $a_k$ is drawn at random from its admissible range.
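A minimal sketch of this encoding and of the default random initialization follows. The 0-based value range {0, …, 2k − 2} is our reading, consistent with the sample vectors shown in Section 3.1; the helper names `gene_ranges`, `random_chromosome`, and `is_valid` are hypothetical.

```python
import random
from typing import List, Optional, Sequence


def gene_ranges(p: int) -> List[int]:
    """Number of admissible values for a_2, ..., a_{p-2}: Steiner point k has 2k - 1 edges to choose from."""
    return [2 * k - 1 for k in range(2, p - 1)]


def random_chromosome(p: int, rng: Optional[random.Random] = None) -> List[int]:
    """Default (random) initialization: draw a_k uniformly from {0, ..., 2k - 2}."""
    rng = rng or random.Random()
    return [rng.randrange(n) for n in gene_ranges(p)]


def is_valid(chromosome: Sequence[int], p: int) -> bool:
    """Check length p - 3 and that every gene lies in its admissible range."""
    ranges = gene_ranges(p)
    return len(chromosome) == len(ranges) and all(
        0 <= a < n for a, n in zip(chromosome, ranges))


print(random_chromosome(8, random.Random(42)))  # five genes for p = 8
```

Both topology vectors reported for problem 3d17-02 in Section 3.1 pass `is_valid(..., 17)` under this reading.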

In Figure 6, the general data structure used as a chromosome is graphically presented, along with a sample chromosome for the problem involving eight terminal points.

Figure 6.

Scheme of the data structure used as a chromosome. For p points (terminals), there are in general $p - 2$ potential Steiner points, each of which can be connected to the topology being generated in a number of ways that depends on its index. (A) The first Steiner point uniquely connects the first three terminal points. (B) Each subsequent Steiner point, with index k from 2 to $p - 2$, can be added in an increasing number of ways, equal to $2k - 1$. (C) A sample chromosome for a problem with $p = 8$ terminal points. The number of potential Steiner points is thus $p - 2 = 6$. The first of these does not need to be encoded in the chromosome, so the chromosome length is 5. Each position of the chromosome holds a random value from its admissible range.

It is worth emphasizing that the proposed data structure is independent of the dimensionality of the Euclidean space in which the Steiner problem is solved. Its length depends solely on the number of terminal points, and the admissible values depend only on the positions in the vector. The dimensionality affects only the cost of computing Euclidean distances between points, so evaluating solutions becomes more computationally demanding in higher-dimensional spaces.

It is worth noting that adopting such a data structure to represent the solution turns the problem into a combinatorial optimization problem.

2.2. Population Initialization

In describing the data structure, we omitted the order in which terminals are connected, i.e., their permutation. In the default initialization method, this order is random, and each new terminal is attached via any existing edge with equal probability. However, a brief analysis of the characteristic features of the problem allows better initialization methods to be developed. Below, we propose two such methods.

First, in an optimal SMT, a given terminal is more likely to be connected to another terminal (directly or via a Steiner point) if it is close to it, in terms of Euclidean distance, than if it is far away. This observation suggests a simple heuristic for generating the FSTs of the initial population: for each terminal added to the FST under construction, a certain number of its nearest neighbors among the terminals already included in the FST is found first. One of these neighbors is selected at random, and the new terminal is attached through a new Steiner point placed on the edge incident to that neighbor (since every terminal in an FST has degree 1, this edge is unique). In this work, we test 2, 3, 4, and 5 nearest neighbors.
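The nearest neighbor heuristic can be sketched as follows. The sketch assumes a hypothetical edge numbering in which edges 0, 1, 2 are the pendant edges of terminals 0, 1, 2, a split edge keeps its index for the half still attached to its first endpoint, and the two new edges are appended with the new terminal’s pendant edge last. Under this convention a terminal is always the first endpoint of its edge, so the pendant edge of terminal t keeps index t for t < 3 and 2t − 2 for t ≥ 3, and that index is the gene value. The function names and the convention are ours, not necessarily those of the paper.

```python
import math
import random
from typing import List, Optional, Sequence

Point = Sequence[float]


def pendant_edge(t: int) -> int:
    """Index of terminal t's (unique) edge under the assumed numbering convention."""
    return t if t < 3 else 2 * t - 2


def nn_chromosome(points: Sequence[Point], m: int,
                  rng: Optional[random.Random] = None) -> List[int]:
    """Nearest-neighbour initialization (hypothetical helper).

    points must already be in the attachment order. For each terminal t >= 3,
    pick one of its m nearest terminals among 0..t-1 at random and attach t
    through the pendant edge of that neighbour.
    """
    rng = rng or random.Random()
    chromosome = []
    for t in range(3, len(points)):
        placed = sorted(range(t), key=lambda q: math.dist(points[t], points[q]))
        neighbour = rng.choice(placed[:m])
        chromosome.append(pendant_edge(neighbour))
    return chromosome


pts = [(0, 0, 0), (10, 0, 0), (20, 0, 0), (1, 0, 0), (21, 0, 0)]
# Terminal 3 joins terminal 0 (edge 0), terminal 4 joins terminal 2 (edge 2).
print(nn_chromosome(pts, m=1))  # [0, 2]
```

Setting m to 2, 3, 4, or 5 reproduces the variants tested in the paper; larger m trades locality for diversity in the initial population.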

The second proposed heuristic builds on the first but aims to remedy its shortcomings. If the order of terminals is still random, then the nearest neighbors of a given terminal, searched only among the terminals already included in the FST under construction, may not be its nearest neighbors in the entire set P. Therefore, the second heuristic first sorts all terminals in P according to the order in which they are selected by Prim’s algorithm when constructing the minimum spanning tree of P, starting from a randomly chosen point. This ordering is further motivated by the fact that the minimum spanning tree solves the problem of minimal connections when Steiner points cannot be used.
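Prim-based sorting can be sketched with the simple O(p²) array-based variant of Prim’s algorithm, which suits the complete Euclidean graph on P. The function name `prim_order` and the optional `start` argument (used here for reproducibility) are our own:

```python
import math
import random
from typing import List, Optional, Sequence


def prim_order(points: Sequence[Sequence[float]], start: Optional[int] = None,
               rng: Optional[random.Random] = None) -> List[int]:
    """Indices of points in the order Prim's algorithm adds them to the MST.

    O(p^2) array-based version for the complete Euclidean graph; the start
    vertex is random unless given explicitly.
    """
    p = len(points)
    if start is None:
        start = (rng or random.Random()).randrange(p)
    in_tree = [False] * p
    best = [math.inf] * p  # distance from each vertex to the current tree
    best[start] = 0.0
    order: List[int] = []
    for _ in range(p):
        # Add the closest vertex not yet in the tree.
        u = min((v for v in range(p) if not in_tree[v]), key=lambda v: best[v])
        in_tree[u] = True
        order.append(u)
        for v in range(p):
            if not in_tree[v]:
                best[v] = min(best[v], math.dist(points[u], points[v]))
    return order


pts = [(0, 0, 0), (5, 0, 0), (1, 0, 0), (3, 0, 0)]
print(prim_order(pts, start=0))  # [0, 2, 3, 1]
```

The terminals reordered as `[pts[i] for i in prim_order(pts)]` would then be attached in this order during initialization.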

The numerical experiments presented later in the paper indicate that the choice of initialization procedure strongly affects the quality of the results.

2.3. Individual Evaluation

Each individual in the population is evaluated by the quality (total edge length) of the RMT for the FST it encodes. To obtain the RMT, the positions of the Steiner points must be optimized so that the sum of the edge lengths is minimized while the topology remains unchanged. An algorithm for this problem was proposed by Smith [10] and has been known for years; in this paper, we use exactly this algorithm to evaluate a given FST. As its solution to the ESTP, the genetic algorithm returns the best RMT found over all generations.
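Smith’s algorithm itself is not reproduced here. As a rough stand-in for experimentation only, the sketch below approximates the RMT of a fixed topology with a different, simpler technique: Gauss–Seidel sweeps of Weiszfeld-type updates, in which each Steiner point is moved to the distance-weighted average of its three neighbors (a standard Fermat–Weber step that does not increase the total length). The function name, the `eps` guard, and the iteration count are our assumptions; degenerate topologies may converge slowly with this scheme.

```python
import math
from typing import List, Sequence, Tuple

Point = Sequence[float]


def relax_steiner_points(terminals: Sequence[Point], steiner_init: Sequence[Point],
                         edges: Sequence[Tuple[int, int]], iters: int = 500,
                         eps: float = 1e-12) -> Tuple[List[List[float]], float]:
    """Approximate the RMT of a fixed topology (NOT Smith's algorithm).

    Vertices 0..p-1 are terminals (fixed), p.. are Steiner points (movable).
    Returns the final Steiner coordinates and the total edge length.
    """
    p = len(terminals)
    pos = [list(map(float, x)) for x in list(terminals) + list(steiner_init)]
    nbrs: List[List[int]] = [[] for _ in pos]
    for u, v in edges:
        nbrs[u].append(v)
        nbrs[v].append(u)
    for _ in range(iters):
        for s in range(p, len(pos)):
            # Weiszfeld weights 1/d; eps avoids division by zero when a Steiner
            # point collapses onto a neighbour (a degenerate topology).
            w = [1.0 / max(math.dist(pos[s], pos[j]), eps) for j in nbrs[s]]
            total = sum(w)
            pos[s] = [sum(wi * pos[j][c] for wi, j in zip(w, nbrs[s])) / total
                      for c in range(len(pos[s]))]
    length = sum(math.dist(pos[u], pos[v]) for u, v in edges)
    return pos[p:], length


# Unit square with Steiner points 4 and 5, each started at the average of its adjacent terminals.
square = [(0, 0, 0), (0, 1, 0), (1, 0, 0), (1, 1, 0)]
edges = [(0, 4), (1, 4), (4, 5), (2, 5), (3, 5)]
init = [(0, 0.5, 0), (1, 0.5, 0)]
steiner, length = relax_steiner_points(square, init, edges)
print(length)  # close to 1 + sqrt(3), the known optimum for the unit square
```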

2.4. Selection

Tournament selection is used. A specified number of individuals (the tournament size) is drawn from the population with equal probability, and the tournament is won by the best of them, i.e., the individual with the shortest RMT. The procedure is repeated as many times as required, i.e., the population size.
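A minimal sketch of tournament selection, assuming (as the text does not specify) that contestants are drawn with replacement and that a lower total RMT length is better; the function name is hypothetical:

```python
import random
from typing import List, Optional, Sequence, TypeVar

T = TypeVar("T")


def tournament_selection(population: Sequence[T], lengths: Sequence[float],
                         size: int = 3, rng: Optional[random.Random] = None) -> List[T]:
    """Return len(population) winners of tournaments of `size` contestants.

    Contestants are drawn uniformly with replacement (an assumption); the
    winner is the individual whose RMT has the smallest total length.
    """
    rng = rng or random.Random()
    n = len(population)
    winners = []
    for _ in range(n):
        contestants = [rng.randrange(n) for _ in range(size)]
        winners.append(population[min(contestants, key=lambda i: lengths[i])])
    return winners


population = [[0, 1], [2, 3], [1, 0]]
print(tournament_selection(population, [5.0, 7.5, 4.2], rng=random.Random(0)))
```

A larger tournament size increases selective pressure; the value 3 used in Section 3 keeps it moderate.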

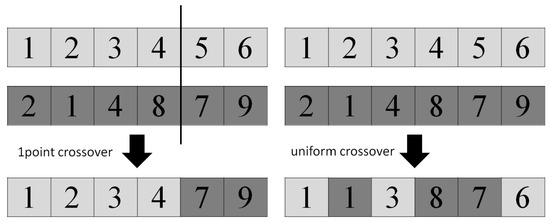

2.5. Crossover

In this paper, two crossover operators are compared: single-point and uniform. In single-point crossover, a single cut point is selected at random, and a new individual is created by exchanging the corresponding parts of two chromosomes (vectors of integers in our case) previously chosen in the selection phase; two complementary offspring can also be created. In uniform crossover, each element of the new solution (offspring) is chosen with equal probability from one of the two parents. Figure 7 illustrates both operators. Both operators ensure that the new solution encodes a valid FST, because every element of the offspring is taken from the same position in one of the parents and thus stays within its admissible range. Crossover occurs with a certain probability, which is a parameter of the genetic algorithm; if it does not occur, the original solution proceeds unchanged to the next step.
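Both operators can be sketched on integer vectors as follows (hypothetical function names). Because each offspring gene is copied from the same position of one parent, it stays within that position’s admissible range; the crossover probability (0.8 in Section 3) would be applied by the caller:

```python
import random
from typing import List, Sequence, Tuple


def one_point_crossover(a: Sequence[int], b: Sequence[int],
                        rng: random.Random) -> Tuple[List[int], List[int]]:
    """Exchange the tails of two parents after a random cut point (two offspring)."""
    if len(a) != len(b):
        raise ValueError("parents must have equal length")
    if len(a) < 2:
        return list(a), list(b)
    cut = rng.randrange(1, len(a))  # cut strictly inside the chromosome
    return list(a[:cut]) + list(b[cut:]), list(b[:cut]) + list(a[cut:])


def uniform_crossover(a: Sequence[int], b: Sequence[int],
                      rng: random.Random) -> List[int]:
    """Take each gene from either parent with probability 1/2 (one offspring)."""
    if len(a) != len(b):
        raise ValueError("parents must have equal length")
    return [x if rng.random() < 0.5 else y for x, y in zip(a, b)]


p1 = [1, 3, 6, 2, 2, 3, 10, 0, 0, 20, 17, 0, 17, 25]
p2 = [1, 4, 5, 2, 2, 4, 10, 0, 0, 19, 18, 0, 18, 26]
rng = random.Random(7)
print(one_point_crossover(p1, p2, rng))
print(uniform_crossover(p1, p2, rng))
```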

Figure 7.

Example results of the two crossover operators: one-point crossover (left) and uniform crossover (right).

2.6. Mutation

The mutation operator, applied with a certain probability, selects a new random value for a specific position in the vector encoding the FST. The value is drawn from the range appropriate for that position, the same one used for random generation of the vector: an integer from $\{0, 1, \dots, 2k-2\}$ is selected for the element $a_k$, where k ranges from 2 to $p - 2$. This procedure also ensures the validity of the resulting FST.
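A sketch of the mutation operator follows. The text does not say whether the mutation probability applies per individual or per gene; here we assume per individual, with one randomly chosen gene redrawn from its range {0, …, 2k − 2} (0-based, our reading). The function name is hypothetical:

```python
import random
from typing import List, Optional, Sequence


def mutate(chromosome: Sequence[int], pm: float,
           rng: Optional[random.Random] = None) -> List[int]:
    """With probability pm, redraw one randomly chosen gene from its own range.

    Gene j (0-based) encodes Steiner point k = j + 2 and takes values 0..2k-2.
    """
    rng = rng or random.Random()
    child = list(chromosome)
    if child and rng.random() < pm:
        j = rng.randrange(len(child))
        k = j + 2
        child[j] = rng.randrange(2 * k - 1)
    return child


parent = [1, 3, 6, 2, 2, 3, 10, 0, 0, 20, 17, 0, 17, 25]
print(mutate(parent, pm=1.0, rng=random.Random(2)))
```

The redrawn value may coincide with the old one, so a mutation does not always change the topology.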

2.7. Computational Complexity

The proposed algorithm is an evolutionary algorithm, so its execution time depends directly on the number of iterations of the main evolutionary loop. The main computational cost arises from finding the RMT for each FST encoded in a chromosome, which involves optimizing the positions of the Steiner points. Compared with this procedure, the costs of other operations, such as crossover and mutation, are negligible. The minimum spanning tree algorithm, applied only once during population initialization, likewise has minimal impact: for p points, a simple array-based implementation of Prim’s algorithm on the complete graph runs in $O(p^2)$ time. Thus, the overall computational cost is approximately O(pop_size · iterations_num) RMT computations, where pop_size is the population size and iterations_num is the number of iterations of the main loop of the genetic algorithm. The complexity of the RMT optimization algorithm itself is discussed in [10].
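As a rough illustration, assume that every individual is evaluated once per generation (no caching of repeated chromosomes) and that the initial population is evaluated as well. With the parameter values listed in Section 3 (population size 80, 70 generations), the number of RMT computations per run is then approximately

$$N_{\mathrm{RMT}} \approx \mathrm{pop\_size} \cdot (\mathrm{iterations\_num} + 1) = 80 \cdot (70 + 1) = 5680.$$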

2.8. Summary

Summarizing the procedure shown in Figure 5, the main steps are: (1) sorting the vertices from P, either randomly or by using Prim’s algorithm to build an MST; (2) initializing the population, either randomly or with the nearest neighbor approach, with or without Prim-based sorting; (3) evaluating each FST in the population with Smith’s algorithm, which provides its RMT; (4) repeating, for a specified number of iterations, an evolutionary loop of selection, crossover, mutation, and evaluation of new individuals. The result of the algorithm is the RMT with the smallest total edge length. In most cases, a degenerate topology is obtained; Steiner points whose coordinates after Smith’s algorithm coincide with terminals or other Steiner points are then discarded.
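The four steps can be tied together in a compact Python skeleton. It is a sketch under stated assumptions, not the authors’ implementation: `evaluate` is a placeholder for the RMT computation (e.g., a call to an implementation of Smith’s algorithm), `init` is a hook for the random, nearest neighbor, or Prim-based initialization, uniform crossover with two offspring and one-gene mutation are used, the population is replaced generationally, and the best individual of any generation is tracked separately. Default parameter values follow Section 3; all names are hypothetical.

```python
import random
from typing import Callable, List, Optional, Sequence, Tuple

Chromosome = List[int]


def run_ga(p: int, evaluate: Callable[[Sequence[int]], float],
           init: Optional[Callable[[random.Random], Chromosome]] = None,
           pop_size: int = 80, generations: int = 70, tournament: int = 3,
           pc: float = 0.8, pm: float = 0.1,
           seed: Optional[int] = None) -> Tuple[Chromosome, float]:
    """Skeleton of the evolutionary loop; `evaluate` stands in for the RMT step.

    Returns the best chromosome found in any generation and its value.
    """
    rng = random.Random(seed)
    ranges = [2 * k - 1 for k in range(2, p - 1)]  # admissible values per gene

    def random_init(r: random.Random) -> Chromosome:
        return [r.randrange(n) for n in ranges]

    init = init or random_init
    pop = [init(rng) for _ in range(pop_size)]  # step (2)
    fit = [evaluate(c) for c in pop]            # step (3)
    i_best = min(range(pop_size), key=fit.__getitem__)
    best, best_fit = list(pop[i_best]), fit[i_best]

    for _ in range(generations):                # step (4)
        # Tournament selection (contestants drawn with replacement).
        parents = [pop[min((rng.randrange(pop_size) for _ in range(tournament)),
                           key=fit.__getitem__)] for _ in range(pop_size)]
        children: List[Chromosome] = []
        for a, b in zip(parents[0::2], parents[1::2]):
            if rng.random() < pc:  # uniform crossover producing two complementary offspring
                mask = [rng.random() < 0.5 for _ in a]
                a, b = ([x if m else y for x, y, m in zip(a, b, mask)],
                        [y if m else x for x, y, m in zip(a, b, mask)])
            children += [list(a), list(b)]
        if len(children) < pop_size:  # odd population: copy the unpaired parent
            children.append(list(parents[-1]))
        for c in children:  # mutation: redraw one gene from its own range
            if ranges and rng.random() < pm:
                j = rng.randrange(len(c))
                c[j] = rng.randrange(ranges[j])
        pop, fit = children, [evaluate(c) for c in children]
        i_best = min(range(pop_size), key=fit.__getitem__)
        if fit[i_best] < best_fit:  # keep the best RMT of any generation
            best, best_fit = list(pop[i_best]), fit[i_best]
    return best, best_fit


best, value = run_ga(10, evaluate=lambda c: float(sum(c)), seed=1)
print(best, value)
```

A toy objective (the sum of the genes) stands in for the RMT length here only so that the skeleton runs on its own.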

The final detail of the algorithm is that before passing a given FST to Smith’s algorithm, the coordinates of the Steiner points are initialized as the average coordinates of the terminals with which they are connected in the given topology.

3. Results

In this section, we present the results of the proposed algorithm for data in $\mathbb{R}^3$. Two sets of problems were generated, one with 17 points and another with 100 points. Each set contains 50 problems, in which the points were drawn from a uniform distribution over a cube in $\mathbb{R}^3$. These sample problems and the obtained solutions can serve as a new benchmark for other researchers; they are available from the author upon request.

First, the results for the basic version of the algorithm will be presented, followed by an examination of the impact of the appropriate preliminary sorting of the set P of points. The influence of the applied crossover operator will also be discussed.

All runs of the genetic algorithm utilized the following parameter values:

- Population size—80;

- Number of generations—70;

- Tournament size—3;

- Crossover probability—0.8;

- Mutation probability—0.1.

These settings are typical values for genetic algorithms. They were validated through test runs of the algorithm and a preliminary comparison with other configurations, which showed that they performed best among the configurations tried, given the computation time limits. A full analysis of their impact is not presented here; they are kept fixed across all computations because other factors, such as the type of crossover and the method of initializing the population, were observed to have a much more significant and interesting influence on the results.

3.1. Results of the Basic Version of the Algorithm

In this section, the results of the basic version of the proposed algorithm are presented; i.e., no method of preliminary sorting of points from set P is applied. During the population initialization, points from P are attached to the generated FST in a random order, the same for each run of the algorithm.

The first set consists of problems with 17 terminal points each. This size was chosen to allow comparison of the genetic algorithm with Smith’s algorithm, which uses a branch-and-bound approach to search the space of FSTs for the optimal solution while pruning those that need not be checked. The details of this approach are described in [10]. A size of 17 is the largest for which optimal solutions to all 50 problems could be found in an acceptable time. For this purpose, an implementation available on GitHub was used (https://github.com/RasmusFonseca/ESMT-Smith).

Table 1 presents the results obtained by the proposed genetic algorithm for 50 problems named 3d17-01 to 3d17-50, for two crossover operators, single-point (1point) and uniform (uniform). For each problem, the columns show the total edge length of the best RMT obtained in 10 runs (min column), the worst value from 10 runs (max column), and the mean value with the standard deviation (mean (std) column). The better result of the two crossover operators is highlighted in bold.

Table 1.

Results of the basic version of the genetic algorithm (initialization with random sorting) for 3d17 problems. The best result for a given problem is indicated in bold font.

The results suggest that the uniform crossover operator is better suited to this problem. The single-point operator found a better best solution (min) in only 13 cases, and a better worst solution (max) also in 13 cases. Its average results over ten runs are better in only five cases. However, these observations concern a relatively small problem size and are verified below on more challenging problems. At this point, it is more interesting to compare the obtained results with those of Smith’s algorithm, which is exact. For this purpose, the results of Smith’s algorithm were compared with the best result achieved for each problem by the genetic algorithm with either crossover operator.

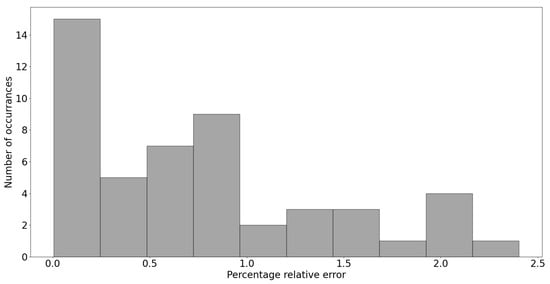

Figure 8 presents a histogram of the relative percentage error of the results obtained by the genetic algorithm compared to Smith’s algorithm. The overwhelming majority of the results have a percentage error less than 1%, with the worst not exceeding 2.5%. Therefore, it can be stated that the genetic algorithm indeed finds solutions close to the optimal one.
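For reference, the relative percentage error for a given problem is presumably computed in the standard way, where $L_{\mathrm{GA}}$ and $L_{\mathrm{Smith}}$ (our notation) denote the total edge lengths of the best GA solution and of the exact solution:

$$\delta = 100\% \cdot \frac{L_{\mathrm{GA}} - L_{\mathrm{Smith}}}{L_{\mathrm{Smith}}}.$$

Since $L_{\mathrm{Smith}}$ is optimal, $\delta \ge 0$; a GA tree 1% longer than the optimum gives $\delta = 1\%$.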

Figure 8.

Histogram of the percentage relative error of the best solution found by the genetic algorithm with respect to the exact solution found by Smith’s algorithm.

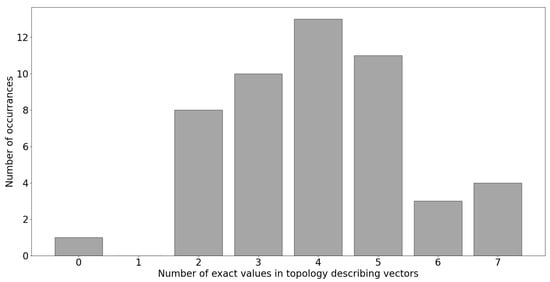

The vectors describing the topologies found by both approaches, GA and Smith’s algorithm, can also be compared. Figure 9 shows, for each possible number of matching positions, how many problems have GA and Smith topology vectors that agree at exactly that many positions.

Figure 9.

Histogram of the number of matching values in the topology vectors found by the genetic algorithm, compared with the solutions found by Smith’s algorithm.

For example, for problem 3d17-02, the topology vector returned by Smith’s algorithm was

1 3 6 2 2 3 10 0 0 20 17 0 17 25

whereas the best solution returned by the genetic algorithm was

1 4 5 2 2 4 10 0 0 19 18 0 18 26

resulting in exact matches in seven positions.
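The comparison can be reproduced with a few lines of Python (hypothetical helper name). Such a position-wise comparison is meaningful only if both vectors use the same terminal order and the same edge-numbering convention:

```python
from typing import Sequence


def matching_positions(u: Sequence[int], v: Sequence[int]) -> int:
    """Count positions at which two topology vectors hold the same value."""
    if len(u) != len(v):
        raise ValueError("vectors must have equal length")
    return sum(x == y for x, y in zip(u, v))


smith = [1, 3, 6, 2, 2, 3, 10, 0, 0, 20, 17, 0, 17, 25]
ga = [1, 4, 5, 2, 2, 4, 10, 0, 0, 19, 18, 0, 18, 26]
print(matching_positions(smith, ga))  # 7
```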

The Pearson correlation coefficient between the percentage relative error and the number of matching values in the topology vectors is −0.125. This indicates only a weak correlation and shows that high-quality solutions can be obtained even for topologies that differ considerably from the optimal ones.

Table 2 presents the results of the basic version of the GA for 50 problems with 100 points. Here, a comparison with Smith’s algorithm is not possible because of its computational demands at this problem size. In every problem, the uniform crossover operator proved better both in terms of the best solution and the average quality of the results. This is an interesting and valuable observation; however, as shown later, for more difficult problems the use of appropriate sorting is more significant than the choice of crossover operator.

Table 2.

Results of the basic version of the genetic algorithm (initialization with random sorting) for 3d100 problems. The best result for a given problem is indicated in bold font.

3.2. Results for the Algorithm with Population Initialization Heuristics

For problems with 17 points, Table 3 presents the best solutions obtained by different versions of the genetic algorithm, while Table 4 presents the average values from ten runs. The columns labeled ‘1point’ and ‘uniform’ correspond to the basic GA versions without any initialization heuristic (results from Table 1 and Table 2). The column ‘nn2-1p’ denotes population initialization using the first heuristic with two nearest neighbors and the single-point crossover operator; ‘nn2-u’ denotes the same with uniform crossover. The other columns are named analogously for the remaining numbers of nearest neighbors used in the population initialization heuristic. The results show a generally positive impact of the heuristic. Considering the minimum values, the basic GA versions gave the best result in only 11 cases, of which only 6 are unique; for average values, in only two cases. However, there is no clear winner among the combinations of number of nearest neighbors and crossover operator.

Table 3.

Results (minimum values) of a genetic algorithm (initialization with random sorting and nearest neighbor selection) for 3d17 problems. The best result for a given problem is indicated in bold font.

Table 4.

Results (mean values) of a genetic algorithm (initialization with random sorting and nearest neighbor selection) for 3d17 problems. The best result for a given problem is indicated in bold font.

For more complex problems with 100 points, the GA that uses two nearest neighbors during population initialization has a clear advantage. Both the best results (Table 5) and the averaged results (Table 6) show that the winning versions are 'nn2-1p' or 'nn2-u'. These results also indicate that the choice of crossover operator has significantly less impact than the proper initialization of the population.

Table 5.

Results (minimum values) of a genetic algorithm (initialization with random sorting and nearest neighbor selection) for 3d100 problems. The best result for a given problem is indicated in bold font.

Table 6.

Results (mean values) of a genetic algorithm (initialization with random sorting and nearest neighbor selection) for 3d100 problems. The best result for a given problem is indicated in bold font.

These results show that taking into account the distances between sequentially connected points when initializing the FSTs is crucial. The second heuristic, described earlier, additionally pre-sorts the points in the order in which they are added to the minimum spanning tree by Prim's algorithm. Intuitively, such sorting should further improve the performance of the algorithm, and the obtained results confirm this. For problems with 17 points, Table 7 shows an improvement in the best results, while Table 8 shows an improvement in the averaged results. The column name 'mst2-1p' indicates that the points were sorted using Prim's algorithm started from a random point, that during the construction of the FST a random point was chosen from the two nearest neighbors, and that single-point crossover was used. Similarly, 'mst2-u' denotes the same configuration with uniform crossover. The names of the other columns have analogous meanings for other numbers of nearest neighbors.
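The point order used by the second heuristic can be obtained with a dense version of Prim's algorithm on the complete Euclidean graph, as in the sketch below. The O(n²) variant without a priority queue is an assumption chosen for simplicity, since the graph is complete; `prim_order` is a hypothetical name, and the returned order is simply the sequence in which the points join the tree.

```python
import math

def prim_order(points, start):
    """Return point indices in the order Prim's algorithm adds them to the MST.

    Dense O(n^2) version for the complete Euclidean graph.
    """
    n = len(points)
    in_tree = [False] * n
    key = [math.inf] * n  # distance from each point to the current tree
    key[start] = 0.0
    order = []
    for _ in range(n):
        # Pick the closest point that is not yet in the tree.
        u = min((i for i in range(n) if not in_tree[i]), key=lambda i: key[i])
        in_tree[u] = True
        order.append(u)
        # Update the distances of the remaining points through u.
        for v in range(n):
            if not in_tree[v]:
                d = math.dist(points[u], points[v])
                if d < key[v]:
                    key[v] = d
    return order
```

Starting from a randomly chosen point, for example `prim_order(points, rng.randrange(len(points)))`, gives a different but spatially coherent order for each individual; such an order would then take the place of the random order used by the first heuristic.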

Table 7.

Results (minimum values) of a genetic algorithm (initialization with Prim based sorting and nearest neighbor selection) for 3d17 problems. The best result for a given problem is indicated in bold font.

Table 8.

Results (mean values) of a genetic algorithm (initialization with Prim based sorting and nearest neighbor selection) for 3d17 problems. The best result for a given problem is indicated in bold font.

Tables 7 and 8 show no clearly best configuration. However, for problems with 100 points (Table 9 for the best solutions found and Table 10 for the averaged values), the greedy choice of a random point among the two nearest neighbors clearly prevails over the other configurations and matters more than the choice of crossover operator. The minimum and maximum standard deviations for problems with 17 points and 100 points are presented in Table 11 and Table 12, respectively.

Table 9.

Results (minimum values) of a genetic algorithm (initialization with Prim based sorting and nearest neighbor selection) for 3d100 problems. The best result for a given problem is indicated in bold font.

Table 10.

Results (mean values) of a genetic algorithm (initialization with Prim based sorting and nearest neighbor selection) for 3d100 problems. The best result for a given problem is indicated in bold font.

Table 11.

Minimum and maximum standard deviation values for 3d17 problems.

Table 12.

Minimum and maximum standard deviation values for 3d100 problems.

There are no clear differences between the two best configurations, 'mst2-1p' and 'mst2-u'. To analyze the obtained results more systematically, the Friedman statistical test was used.

The Friedman test compares the behavior of several algorithms across a set of problems on the basis of ranks. Here, its input consists only of the average result of each algorithm on each problem: within each problem the algorithms are ranked, and the test assesses all problems and algorithms simultaneously to detect differences in the algorithms' average performance. The null hypothesis of the Friedman test is that there are no significant differences among the methods in terms of their mean performance on the given problems. The p-value obtained from the test is the probability of observing differences at least as large as those observed, assuming that the null hypothesis is true. Consequently, low p-values (typically below 0.05 or 0.01) provide grounds for rejecting the null hypothesis. Rejecting it establishes that significant differences exist among the algorithms, but not which algorithms differ. To determine which algorithms perform significantly better or worse, post hoc pairwise comparisons were then conducted using an appropriate test (the Shaffer test in this study). This two-step approach is designed to limit false positive results among the compared pairs of algorithms: Shaffer pairwise comparisons are conducted only if the Friedman test rejects its null hypothesis. A false positive arises when a test erroneously rejects the null hypothesis, suggesting differences between algorithms where none actually exist. Additionally, the Friedman test assigns a rank to each algorithm, ordering them from the best (lower rank values) to the worst performing (higher rank values). Further details about the testing method are available in [26]. All calculations were performed using the KEEL software package [27,28].
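The ranking step of the Friedman test can be illustrated with the following sketch. It ranks the algorithms within each problem (rank 1 for the shortest mean tree length, with tied values sharing the average rank), averages the ranks over the problems, and computes the classical Friedman statistic χ²_F = 12N/(k(k+1)) · [Σ_j R_j² − k(k+1)²/4] for N problems and k algorithms with mean ranks R_j. This is a textbook formulation given for illustration, not the KEEL implementation used in the study, which may apply further corrections or report a related statistic.

```python
def average_ranks(row):
    """Rank values in ascending order (1 = best, i.e. shortest tree);
    tied values share the mean of their ranks."""
    order = sorted(range(len(row)), key=lambda j: row[j])
    ranks = [0.0] * len(row)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and row[order[j + 1]] == row[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for t in range(i, j + 1):
            ranks[order[t]] = mean_rank
        i = j + 1
    return ranks

def friedman(results):
    """results[p][a] = mean tree length of algorithm a on problem p.

    Returns (mean rank of each algorithm, Friedman chi-square statistic).
    """
    n, k = len(results), len(results[0])
    rank_rows = [average_ranks(row) for row in results]
    mean_ranks = [sum(r[a] for r in rank_rows) / n for a in range(k)]
    stat = 12 * n / (k * (k + 1)) * (
        sum(r * r for r in mean_ranks) - k * (k + 1) ** 2 / 4
    )
    return mean_ranks, stat
```

The p-value then follows from the chi-square distribution with k − 1 degrees of freedom, for instance via `scipy.stats.chi2.sf(stat, k - 1)`; the mean ranks are the values by which configurations are ordered in the ranking tables.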

The algorithm ranking for problems with 17 points is presented in Table 13. A vanishingly small p-value (nonzero only at about the tenth decimal place) clearly indicates differences in the performance of the algorithms. Initialization using the MST has a clear advantage over the other approaches. For problems of small size, the uniform crossover operator seems to have an advantage; however, its advantage over single-point crossover is not statistically confirmed. Pairwise comparisons of the algorithms were also performed using Shaffer's post hoc tests. Because of their large number, only the most relevant are presented. Table 14 shows the results of post hoc tests between the best-performing configuration, 'mst2-uniform', and the others. They provide no grounds for claiming a significant advantage of 'mst2-uniform' over 'mst3-uniform', 'mst4-uniform', 'mst5-uniform', and 'mst2-1point'. On the other hand, they indicate a significant advantage over the remaining configurations. Similarly, Table 15 presents the results of post hoc tests comparing 'mst3-uniform' pairwise with the others (except 'mst2-uniform'). They show no grounds for claiming significant differences between 'mst3-uniform' and 'mst4-uniform', 'mst5-uniform', 'mst2-1point', and 'mst3-1point', but strong grounds for claiming that it performs significantly better than the rest.
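For the post hoc step, a sketch of Shaffer's static procedure is given below. It assumes that the unadjusted p-values come from the usual z-statistic on Friedman mean ranks, z = (R_i − R_j)/√(k(k+1)/(6N)). Like Holm's step-down method, the procedure tests hypotheses in order of increasing p-value, but at step i it divides α by t_i, the largest number of hypotheses that can still be true given that i − 1 have been rejected; t_i is taken from Shaffer's recursive set S(k) of attainable counts. The function names are hypothetical, and KEEL's output may differ in details.

```python
import math
from functools import lru_cache
from itertools import combinations

def pairwise_pvalues(mean_ranks, n_problems):
    """Unadjusted two-sided p-values for all pairs of algorithms."""
    k = len(mean_ranks)
    se = math.sqrt(k * (k + 1) / (6.0 * n_problems))
    pvals = {}
    for i, j in combinations(range(k), 2):
        z = abs(mean_ranks[i] - mean_ranks[j]) / se
        pvals[(i, j)] = math.erfc(z / math.sqrt(2.0))  # equals 2 * (1 - Phi(z))
    return pvals

@lru_cache(maxsize=None)
def shaffer_counts(k):
    """Shaffer's S(k): attainable numbers of simultaneously true hypotheses
    among all pairwise comparisons of k algorithms."""
    if k <= 1:
        return frozenset({0})
    counts = set()
    for j in range(1, k + 1):
        counts.update(math.comb(j, 2) + x for x in shaffer_counts(k - j))
    return frozenset(counts)

def shaffer_reject(pvals, k, alpha=0.05):
    """Shaffer's static step-down procedure over all k(k-1)/2 pairs;
    returns the pairs whose null hypotheses are rejected."""
    m = len(pvals)
    attainable = shaffer_counts(k)
    rejected = []
    ranked = sorted(pvals.items(), key=lambda item: item[1])
    for i, (pair, p) in enumerate(ranked, start=1):
        t_i = max(c for c in attainable if c <= m - i + 1)
        if p > alpha / t_i:
            break  # step-down: stop at the first non-rejection
        rejected.append(pair)
    return rejected
```

For k = 3 algorithms, S(3) = {0, 1, 3}, so after the first rejection the second hypothesis is tested at the full level α instead of Holm's α/2; this logical refinement is what makes Shaffer's procedure less conservative than Holm's.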

Table 13.

Friedman test ranking for 3d17 data. p-value computed by the Friedman test: .

Table 14.

Shaffer’s post hoc p-values for 3d17 data for pair-wise comparisons of mst2-uniform vs. other algorithms. Shaffer’s procedure rejects those hypotheses that have an unadjusted p-value (highlighted in bold font).

Table 15.

Shaffer’s post hoc p-values for 3d17 data for pair-wise comparisons of mst3-uniform vs. other algorithms. Shaffer’s procedure rejects those hypotheses that have an unadjusted p-value (highlighted in bold font).

The statistical analysis of the results for problems with 17 points suggests that the MST-based sorting heuristic has the greatest impact. These results also indicate some advantage of uniform crossover. However, the statistical analysis of the results for the more challenging problems with 100 points shows that choosing the appropriate number of nearest neighbors becomes more important and that a greedy approach, that is, a random choice among just the two nearest neighbors, works best. It is worth adding that we do not simply choose the closest neighbor, because we want to ensure diversity in the initial population, which is also important in a genetic algorithm.

Table 16 presents the results of the Friedman test for these problems. A vanishingly small p-value (nonzero only at about the tenth decimal place) clearly indicates differences in the performance of the various versions. Configurations that use MST-based sorting and the smallest numbers of nearest neighbors clearly occupy the top positions of the ranking, while the choice of crossover operator is less significant. Table 17 shows the results of post hoc tests comparing the top-ranked configuration, 'mst2-1point', with the others. They provide no grounds for judging its performance to be significantly better than that of 'mst2-uniform', 'mst3-uniform', 'mst3-1point', 'nn2-1point', and 'nn2-uniform'. Thus, even without MST sorting, the greedy choice of nearest neighbors is significant. On the other hand, there are clear grounds to assert that 'mst2-1point' is significantly better than the other configurations, including those that do not consider the order of points or that make less greedy choices of nearest neighbors. Again, the choice of crossover operator is not crucial.

Table 16.

Friedman test ranking for 3d100 data. p-value computed by the Friedman test: .

Table 17.

Shaffer’s post hoc p-values for 3d100 data for pair-wise comparisons of mst2-1point vs. other algorithms. Shaffer’s procedure rejects those hypotheses that have an unadjusted p-value (highlighted in bold font).

Similarly, Table 18 presents the results of post hoc tests for the second-ranked configuration, ‘mst2-uniform’, compared pairwise with the others (except ‘mst2-1point’). There is no basis to claim that it performs statistically better than ‘mst3-uniform’, ‘mst3-1point’, ‘nn2-1point’, and ‘nn2-uniform’. However, there are grounds to assert that it performs statistically better than the remaining configurations.

Table 18.

Shaffer’s post hoc p-values for 3d100 data for pair-wise comparisons of mst2-uniform vs. other algorithms. Shaffer’s procedure rejects those hypotheses that have an unadjusted p-value (highlighted in bold font).

In summary, the obtained results demonstrate the potential of the genetic algorithm itself for solving the ESTP, but they also highlight the significant role played by the heuristics used to generate the initial population. The second heuristic, which uses the point order given by Prim's algorithm, is particularly recommended. Especially for larger problems, the initialization method has a greater impact on the results than the crossover operator used.

4. Conclusions

In this work, we propose the application of a genetic algorithm for optimizing connection networks in a multi-dimensional Euclidean space. A network with the minimal total edge length connecting a given set of points can be obtained by adding extra points, known as Steiner points. As there are no efficient exact algorithms for Euclidean spaces of more than two dimensions, new heuristic approaches are needed. In this work, the problem has been reduced to a discrete optimization problem: an appropriate data structure encodes the topology of connections between the given points and the Steiner points, and the positions of the Steiner points are optimized using the well-known Smith algorithm. The genetic algorithm is employed specifically to optimize the topology of the solution. The computational experiments demonstrate that the proposed genetic algorithm yields results close in quality to optimal solutions for problems with 17 points, for which optimal solutions can be obtained using the Smith algorithm. For more challenging problems (100 points) in 3D space, the proper initialization of the initial population, which relies on an appropriate sorting of the points, is shown to play a significant role in the quality of the solutions and in convergence. In this regard, the best results are provided by the proposed sorting that uses Prim's algorithm to build a minimum spanning tree for the given set of points. The results obtained with it are better than those from a purely random generation of the initial population, at a high level of statistical significance.
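To make the discrete encoding concrete, the following sketch decodes a topology vector in the spirit of Smith's edge-insertion enumeration of full Steiner topologies [10]: starting from three terminals joined to one Steiner point, terminal i is inserted by splitting one of the 2i − 3 existing edges with a new Steiner point. Whether the author's data structure uses exactly this indexing is an assumption. The Steiner point positions are then relaxed with a simple Weiszfeld-style iteration, in which each Steiner point moves to the distance-weighted mean of its neighbors; this is a deliberately simpler stand-in for illustration and is not Smith's algorithm. All function names are hypothetical.

```python
import math

def decode_topology(genes, n):
    """Decode a topology vector into the edges of a full Steiner topology.

    Terminals are 0..n-1 and Steiner points n..2n-3; genes[i-3] selects which
    of the 2i-3 existing edges terminal i is inserted into (i = 3..n-1).
    Returns (edges, seeds), where seeds[s] lists three nodes used to place s.
    """
    if len(genes) != n - 3:
        raise ValueError("expected n - 3 genes")
    edges = [(0, n), (1, n), (2, n)]
    seeds = {n: (0, 1, 2)}
    for i in range(3, n):
        g = genes[i - 3]
        if not 0 <= g < 2 * i - 3:
            raise ValueError(f"gene for terminal {i} out of range")
        u, v = edges[g]
        s = n + i - 2  # new Steiner point splitting edge (u, v)
        edges[g] = (u, s)
        edges += [(s, v), (i, s)]
        seeds[s] = (u, v, i)
    return edges, seeds

def tree_length(edges, pos):
    """Total Euclidean length of the tree for the given node positions."""
    return sum(math.dist(pos[a], pos[b]) for a, b in edges)

def place_steiner_points(terminals, edges, seeds, iters=200, eps=1e-12):
    """Weiszfeld-style relaxation of Steiner point positions
    (a simple illustrative stand-in, not Smith's algorithm)."""
    pos = {i: tuple(p) for i, p in enumerate(terminals)}
    for s in sorted(seeds):  # seeds only refer to earlier nodes
        pts = [pos[j] for j in seeds[s]]
        pos[s] = tuple(sum(c) / 3 for c in zip(*pts))
    nbrs = {s: [] for s in seeds}
    for a, b in edges:
        if a in nbrs:
            nbrs[a].append(b)
        if b in nbrs:
            nbrs[b].append(a)
    for _ in range(iters):
        for s in sorted(nbrs):
            w = [1.0 / max(math.dist(pos[s], pos[j]), eps) for j in nbrs[s]]
            pos[s] = tuple(
                sum(wj * pos[j][d] for wj, j in zip(w, nbrs[s])) / sum(w)
                for d in range(len(pos[s]))
            )
    return pos
```

For example, `decode_topology([0], 4)` yields five edges in which every terminal has degree 1 and both Steiner points have degree 3, as required of a full Steiner topology. Because the decoding works on indices only, the same code applies unchanged to points of any dimension.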

Results have been presented for points in ℝ³; however, the proposed method can be applied without restriction to data in spaces of any dimension. Due to the publication's volume limitations, only results for ℝ³ are presented in detail, yet calculations conducted for data in higher-dimensional spaces led to analogous conclusions about the operation of the various versions of the tested genetic algorithm, particularly the significance of the initialization step based on Prim's algorithm. This does not detract from the significance of the results, as in practice data in ℝ³ may be the most relevant.

The main conclusions of this publication can be summarized in the following key points:

- Addresses a Specific Gap. This publication addresses a gap in the use of genetic algorithms for optimizing connection networks in multi-dimensional Euclidean space, particularly for the Euclidean Steiner Tree Problem (ESTP) in spaces of dimension greater than two.

- Tailored Heuristic. A specialized evolutionary heuristic is introduced, adapted to ESTP. The problem is reformulated as a discrete optimization task, where Steiner points are added to minimize the total connection length between a given set of points.

- Significance of Initialization. A key finding is the high impact of the initial population generation method. In particular, sorting points based on their addition order in Prim’s MST algorithm significantly improves solution quality compared to purely random initialization.

- Statistical Validation. Different algorithm configurations were tested on problems with 17 and 100 points. The resulting solutions underwent statistical significance analysis, confirming that appropriate parameter settings and initialization strategies lead to better convergence and higher-quality solutions.

- Extension to Higher Dimensions. While the study presents detailed results for ℝ³, the method is applicable to Euclidean spaces of any dimension. Preliminary tests in higher-dimensional spaces confirm analogous improvements in solution quality, supporting the broader utility of the proposed approach.

- Practical Relevance. Given the computational complexity of exact methods for dimensions higher than two, this heuristic offers a practical compromise. It provides near-optimal solutions for moderate-sized problems and significantly benefits from well-chosen genetic algorithm parameters, especially the initialization procedure based on Prim’s MST.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Dataset available on request from the author.

Conflicts of Interest

The author declares no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| GA | Genetic algorithm |
| ESTP | Euclidean Steiner Tree Problem |
| MST | Minimal spanning tree |
| SMT | Steiner Minimal Tree |
| FST | Full Steiner topology |
| RMT | Relative minimal tree |

References

1. Fampa, M.; Lee, J.; Maculan, N. An overview of exact algorithms for the Euclidean Steiner tree problem in n-space. Int. Trans. Oper. Res. 2016, 23, 861–874.

2. Hwang, F.K.; Richards, D.S.; Winter, P. The Steiner Tree Problem, Annals of Discrete Mathematics 53; Elsevier: Amsterdam, The Netherlands, 1992.

3. Kahng, A.B.; Robins, G. On Optimal Interconnections for VLSI; Springer Science & Business Media: Berlin/Heidelberg, Germany, 1994.

4. Cavalli-Sforza, L.L.; Edwards, A.W. Phylogenetic analysis. Models and estimation procedures. Am. J. Hum. Genet. 1967, 19, 233–257.

5. Cheng, X.Z.; Du, D.Z. (Eds.) Steiner Trees in Industry; Combinatorial Optimization; Springer: Boston, MA, USA, 2002; Volume 11.

6. Rodríguez-Pereira, J.; Fernández, E.; Laporte, G.; Benavent, E.; Martínez-Sykora, A. The Steiner Traveling Salesman Problem and its extensions. Eur. J. Oper. Res. 2019, 278, 615–628.

7. Melzak, Z.A. On the problem of Steiner. Can. Math. Bull. 1961, 4, 143–148.

8. Warme, D.; Winter, P.; Zachariasen, M. Exact Algorithms for Plane Steiner Tree Problems: A Computational Study. In Advances in Steiner Trees; Du, D.Z., Smith, J., Rubinstein, J., Eds.; Springer: Boston, MA, USA, 2000; pp. 81–116.

9. Gilbert, E.N.; Pollak, H.O. Steiner Minimal Trees. SIAM J. Appl. Math. 1968, 16, 1–29.

10. Smith, W.D. How to find Steiner Minimal Trees in Euclidean d-space. Algorithmica 1992, 7, 137–177.

11. Fampa, M.; Anstreicher, K.M. An improved algorithm for computing Steiner Minimal Trees in Euclidean d-space. Discret. Optim. 2008, 5, 530–540.

12. Fonseca, R.; Brazil, M.; Winter, P.; Zachariasen, M. Faster exact algorithms for computing Steiner trees in higher dimensional Euclidean spaces. In Proceedings of the Workshop of the 11th DIMACS Implementation Challenge, Providence, RI, USA, 4–5 December 2014.

13. Fampa, M.; Lee, J.; Melo, W. A specialized branch-and-bound algorithm for the Euclidean Steiner tree problem in n-space. Comput. Optim. Appl. 2016, 65, 47–71.

14. Fampa, M. Insight into the computation of Steiner Minimal Trees in Euclidean space of general dimension. Discret. Appl. Math. 2022, 308, 4–19.

15. do Forte, V.L.; Montenegro, F.M.T.; Brito, J.A.d.M.; Maculan, N. Iterated Local Search algorithms for the Euclidean Steiner tree problem in n dimensions. Int. Trans. Oper. Res. 2015, 23, 1185–1199.

16. Jones, J.; Harris, F.C.J. A Genetic Algorithm for the Steiner Minimal Tree Problem. In Proceedings of the ISCA's International Conference on Intelligent Systems, Reno, NV, USA, 19–21 June 1996.

17. Chakraborty, G. Genetic Algorithm Approaches to Solve Various Steiner Tree Problems. In Steiner Trees in Industry; Combinatorial Optimization; Cheng, X., Du, D.Z., Eds.; Springer: Boston, MA, USA, 2001; Volume 11, pp. 29–69.

18. Barreiros, J. A Hierarchic Genetic Algorithm for Computing (near) Optimal Euclidean Steiner Trees. In Proceedings of the GECCO 2003: Bird of a Feather Workshops, Genetic and Evolutionary Computation Conference, Chicago, IL, USA, 11 July 2003; pp. 56–65.

19. Frommer, I.; Golden, B. A Genetic Algorithm for Solving the Euclidean Non-Uniform Steiner Tree Problem. In Extending the Horizons: Advances in Computing, Optimization, and Decision Technologies; Operations Research/Computer Science Interfaces Series; Baker, E., Joseph, A., Mehrotra, A., Trick, M., Eds.; Springer: Boston, MA, USA, 2007; Volume 37, pp. 31–48.

20. Bereta, M. Baldwin effect and Lamarckian evolution in a memetic algorithm for Euclidean Steiner Tree Problem. Memetic Comput. 2019, 11, 35–52.

21. Cao, S.; Chen, J.; Gou, J. Binary Particle Swarm Optimization Algorithm for Euclidean Steiner Tree. In Proceedings of the 2024 4th International Conference on Computer Science and Blockchain (CCSB), Shenzhen, China, 6–8 September 2024; pp. 417–422.

22. Bereta, M. Monte Carlo Tree Search Algorithm for the Euclidean Steiner Tree Problem. J. Telecommun. Inf. Technol. 2017, 4, 71–81.

23. Julstrom, B.A. A scalable genetic algorithm for the rectilinear Steiner problem. In Proceedings of the 2002 Congress on Evolutionary Computation. CEC'02 (Cat. No.02TH8600), Honolulu, HI, USA, 12–17 May 2002.

24. Klau, G.W.; Ljubić, I.; Moser, A.; Mutzel, P.; Neuner, P.; Pferschy, U.; Raidl, G.; Weiskircher, R. Combining a Memetic Algorithm with Integer Programming to Solve the Prize-Collecting Steiner Tree Problem. In Genetic and Evolutionary Computation – GECCO 2004; Deb, K., Ed.; Springer: Berlin/Heidelberg, Germany, 2004; pp. 1304–1315.

25. Pinto, R.V.; Maculan, N. A new heuristic for the Euclidean Steiner Tree Problem in ℝⁿ. Top 2023, 31, 391–413.

26. Derrac, J.; García, S.; Molina, D.; Herrera, F. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 2011, 1, 3–18.

27. Alcalá-Fdez, J.; Sánchez, L.; García, S.; del Jesus, M.J.; Ventura, S.; Garrell, J.M.; Otero, J.; Romero, C.; Bacardit, J.; Rivas, V.M.; et al. KEEL: A software tool to assess evolutionary algorithms for data mining problems. Soft Comput. 2008, 13, 307–318.

28. Alcalá-Fdez, J.; Fernández, A.; Luengo, J.; Derrac, J.; García, S.; Sánchez, L.; Herrera, F. KEEL data-mining software tool: Data set repository, integration of algorithms and experimental analysis framework. J. Mult.-Valued Log. Soft Comput. 2011, 17, 255–287.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).