Abstract

The integration of the Scalable Service-Oriented Middleware over IP (SOME/IP) within Automotive Ethernet enables efficient, service-oriented communication in vehicles. This study presents a video stream transmission library using SOME/IP to transfer pre-recorded video data between virtual Electronic Control Units (ECUs). Implemented with vsomeip, OpenCV, and Protocol Buffers, the system handles video serialization, Ethernet transmission, and reconstruction at the receiver side. Experimental evaluation with front and rear dashboard cameras (2560 × 1440 and 1920 × 1080 px) demonstrated that video resolution and file size directly affect processing duration. Optimized 1920 × 1080 videos achieved total processing times of about 400 ms, confirming the feasibility of near-real-time video transmission. A GUI application was also developed to simulate event-based communication by sending object detection updates after video transfer. The proposed framework provides a scalable and modular architecture that can be adapted to real ECU systems, establishing a foundation for future real-time video communication in automotive networks.

1. Introduction

The evolution of automotive communication protocols has been a cornerstone in the advancement of vehicle technology, defining the way vehicle systems communicate internally and with the external world. In the early days, automotive communication consisted of simple, customized protocols designed for specific tasks within a vehicle. As the complexity and functionalities of vehicles grew and costs of developing non-standard communication systems increased notably, the demand for more standardized and robust communication protocols became evident. In today’s vehicles, there are typically around 70 ECUs (Electronic Control Units) that communicate with each other through approximately 2500 signals []. This led to the development of various automotive-specific protocols, standardized among automotive hardware suppliers to reduce production costs. Some remarkable examples include CAN (Controller Area Network), LIN (Local Interconnect Network), and MOST (Media Oriented Systems Transport), each serving distinct aspects of vehicle communication from basic control functionalities to complex multimedia transmission [].

The introduction of Automotive Ethernet marked a significant milestone in the evolution of automotive communication systems. Traditional automotive networks, while effective for simpler vehicle systems, suffered from limitations in bandwidth and scalability that could not meet the demands of increasingly complex and data-intensive applications such as ADAS (Advanced Driver-Assistance Systems), infotainment, and ultimately, autonomous driving []. Automotive Ethernet emerged as a solution, offering higher data transmission rates, improved scalability, and the flexibility to support a wide range of in-vehicle networks []. Development of new protocols further encouraged Ethernet’s integration into automotive network systems.

Following the integration of Automotive Ethernet, the SOME/IP (Scalable Service-Oriented Middleware over IP) protocol emerged, specifically designed to extend Ethernet’s capabilities in automotive networks. Originally introduced to address the complexity of service-oriented communication and discovery in automotive systems, SOME/IP became essential for applications requiring real-time data exchange, such as ADAS and infotainment systems []. One of its novelties lies in enabling seamless service discovery and interaction across diverse vehicle networks, enhancing the interoperability and efficiency of automotive systems []. The role of service-oriented protocols such as SOME/IP is expected to expand as the transition towards more integrated and intelligent automotive networks progresses, and it may become a cornerstone in the evolution of V2X (Vehicle-to-Everything) communications and the IoV (Internet of Vehicles) [,].

1.1. Previous Work on SOME/IP

The literature on SOME/IP in automotive networks covers a wide range of applications, challenges, and solutions. Seyler et al. investigate the startup delay issues inherent in the SOME/IP protocol. By formalizing the startup process, their paper contributes to understanding and mitigating delays, enhancing the protocol’s efficiency in real-time automotive applications []. Gopu, Kavitha, and Joy discuss the application of SOA (Service-Oriented Architecture) in connecting automotive ECUs through SOME/IP. This exploration emphasizes the protocol’s adaptability and potential in facilitating complex in-vehicle communications []. Nichitelcea and Unguritu focus on the implementation of SOME/IP-SD (Service Discovery) for distributed embedded systems in automotive Ethernet applications. Their work highlights the protocol’s significance in enabling efficient in-vehicle communication and proposes an architecture relying solely on scalable Ethernet networks []. Iorio et al. address the critical aspect of securing SOME/IP communications within vehicles. By proposing security measures tailored to the protocol, their paper draws attention to the importance of cybersecurity in the automotive sector, particularly for service-oriented communication protocols []. Alkhatib et al. explore the use of deep learning models to detect intrusions in automotive networks that use the SOME/IP protocol. The paper emphasizes that increasing connectivity, and the complexity that comes with it, raises security concerns that require sophisticated intrusion detection mechanisms []. Choi, Lee, and Kim analyze challenges in transferring large data sets over SOME/IP-TP. Their work sheds light on the complexities of ensuring efficient and reliable big data transfers in automotive systems []. Zelle et al. analyze the security aspects of SOME/IP automotive services. By identifying vulnerabilities and proposing countermeasures, their paper adds to the critical dialog on fortifying automotive networks against cyber-attacks [].

The literature about SOME/IP so far emphasizes its vital role in the evolution of automotive communication systems, highlighting its potential to enhance real-time data exchange, enable service-oriented architectures, and address security vulnerabilities and data transfer issues. Despite the valuable insights provided by the reviewed literature into the applications of the SOME/IP protocol within automotive networks, a noticeable gap exists regarding its implementation for video data transmission and resource-sharing capabilities. So far, video data transmission and resource-sharing applications have been covered using different approaches. Some examples can be given as follows.

1.2. Previous Work on Video Transfer and Resource Efficiency in Automotive Applications

There has also been notable research on video transfer and resource efficiency in automotive applications. Some of these works are listed as follows. Ibraheem et al. [] introduce an FPGA-based scalable multi-camera GigE Vision IP core aimed at embedded vision systems requiring efficient multi-camera support. The presented core demonstrated the ability to process video streams up to 2048 × 2048 pixels at frame rates between 25 and 345 fps, emphasizing its potential for high-resolution, real-time video processing in resource-constrained environments. Ben Brahim et al. [] propose a QoS-aware video transmission solution for connected vehicles, utilizing a hybrid network of 4G/LTE and IEEE 802.11p technologies. Their research focuses on enhancing V2X video streaming through a system that dynamically selects the best radio access technology to meet quality of service demands. While it explores video streaming capabilities in depth, the study does not address the application of the SOME/IP protocol for video transmission and resource sharing. Aliyu et al. [] present video streaming challenges and opportunities within IoT-enabled (Internet of Things) vehicular environments. The study focuses on vehicles equipped with advanced sensors and infrared cameras for transmitting real-time data. Abou-zeid et al. [] discuss the advancement of C-V2X (Cellular Vehicle-to-Everything) communication technologies to meet the requirements of connected and autonomous vehicles. Focusing on ultra-reliable and low-latency communications, the paper underlines the significance of C-V2X in supporting various vehicular applications, with a particular emphasis on video delivery. Khan et al. [] explore an approach to enhance video streaming quality in vehicular networks through resource slicing by balancing video quality selection and resource allocation. Their method exhibits improved video streaming performance by considering vehicles’ queuing dynamics and channel states. Although not directly tied to the SOME/IP protocol, this study aligns with the broader aim of enhancing video data transmission and resource sharing in automotive systems. Yu and Lee [] examine the effectiveness of real-time video streaming for remote driving over wireless networks, highlighting the importance of reducing latency to enhance remote control responsiveness. Go and Shin [] propose a resilient framework for raw format live video streaming in automated driving systems, leveraging Ethernet-based in-vehicle networks. Their approach is particularly relevant for enhancing the performance of video streaming and resource sharing within automotive communication systems, although it does not explicitly utilize the SOME/IP protocol. Table 1 summarizes some of the most relevant previous works.

Table 1.

Summary of similar previous works.

As shown in the literature, various methods have been employed to manage the transmission of large data packets, such as video data, and to facilitate resource sharing, which is becoming increasingly crucial. Unlike the approaches suggested previously, this paper proposes the use of SOME/IP to tackle both issues. Thus, the focus of this study is on facilitating video data transmission between VMs (Virtual Machines), which simulate the hardware environments of automotive ECUs, through the application of SOME/IP. The main contributions of this research are as follows:

- A video stream transmission library was developed using SOME/IP to transfer video data between virtual Electronic Control Units (ECUs).

- The system, implemented with vsomeip, OpenCV, and Protocol Buffers, handles video serialization, Ethernet transmission, and reconstruction at the receiver side.

- Experimental evaluation with front and rear dashboard cameras (2560 × 1440 and 1920 × 1080 px) demonstrated that video resolution and file size directly affect processing duration.

- Optimized 1920 × 1080 videos achieved total processing times of about 400 ms, confirming the feasibility of near-real-time video transmission.

- A GUI application was also developed to simulate event-based communication by sending object detection updates after video transfer.

- The proposed framework provides a scalable and modular architecture that can be adapted to real ECU systems, establishing a foundation for future real-time video communication in automotive networks.

The remainder of this paper is organized as follows. Section 2.1 summarizes the materials needed to realize this project, and Section 2.2 introduces the methods used to achieve the goals of this study. The most significant results are then presented in the results section. Finally, the conclusions drawn from this work are discussed.

2. Materials and Methods

2.1. Materials

In this project, four open-source libraries were employed to achieve the desired outcomes. Firstly, the vsomeip library, developed by the GENIVI project, was used to handle the SOME/IP protocol between VMs. Secondly, the OpenCV library was utilized for managing video read and write operations during communication. Thirdly, the Protocol Buffers library, developed by Google, was employed to serialize and deserialize video data. This step is crucial in the video transmission process since video frames cannot be sent directly through the SOME/IP protocol. Instead, they must be packaged as standard data types, such as 8-bit unsigned integers, also known as uint8. Finally, the nlohmann/json library was used to define default data paths from which videos should be read or to which they should be written, depending on the use-case scenario.

In the scope of this project, the vsomeip library is integral to managing SOME/IP communications among virtual machines. This library, a product of the GENIVI initiative now maintained by COVESA (Connected Vehicle Systems Alliance), offers an optimized implementation of the SOME/IP protocol designed for automotive software applications []. It enhances the development process of in-car communication frameworks, providing a uniform interface and communication protocol across diverse in-vehicle software entities.

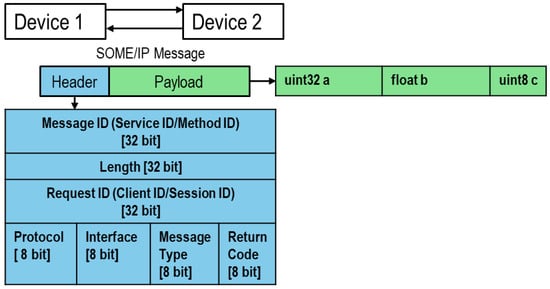

This library aims at efficient data transmission between software modules, which is vital for the real-time data sharing required in automotive control systems where prompt data reception is essential. Thanks to its adaptability to the distinct communication needs of various automotive software, it enables developers to fine-tune the communication dynamics to fit their specific applications. It ensures interoperability, permitting software components from independent developers to interact seamlessly, a necessity in the heterogeneous realm of automotive software development. Designed for scalability, vsomeip can handle communications ranging from compact embedded systems to intricate automotive networks. SOME/IP messages consist of two parts, namely, header and payload. Figure 1 illustrates a SOME/IP communication with a typical message frame.

Figure 1.

SOME/IP message frame.
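To make the header/payload split concrete, the following is a minimal sketch of how a SOME/IP message could be laid out in memory, based on the 16-byte header defined by the SOME/IP specification. It is a hand-written illustration and not part of vsomeip; the struct name `SomeIpHeader`, the helper names, and any ID values passed to it are hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Fixed 16-byte SOME/IP header; multi-byte fields are big-endian on the wire.
struct SomeIpHeader {
    std::uint16_t service_id;
    std::uint16_t method_id;
    std::uint16_t client_id;
    std::uint16_t session_id;
    std::uint8_t protocol_version;
    std::uint8_t interface_version;
    std::uint8_t message_type;
    std::uint8_t return_code;
};

static void put_u16(std::vector<std::uint8_t>& out, std::uint16_t v) {
    out.push_back(static_cast<std::uint8_t>(v >> 8));
    out.push_back(static_cast<std::uint8_t>(v & 0xFF));
}

static void put_u32(std::vector<std::uint8_t>& out, std::uint32_t v) {
    put_u16(out, static_cast<std::uint16_t>(v >> 16));
    put_u16(out, static_cast<std::uint16_t>(v & 0xFFFF));
}

// Builds header + payload. The Length field counts every byte after itself:
// the remaining 8 header bytes plus the payload.
std::vector<std::uint8_t> build_message(const SomeIpHeader& h,
                                        const std::vector<std::uint8_t>& payload) {
    assert(payload.size() <= 0xFFFFFFF7u);  // Length is a 32-bit field
    std::vector<std::uint8_t> out;
    out.reserve(16 + payload.size());
    put_u16(out, h.service_id);   // Message ID, upper half
    put_u16(out, h.method_id);    // Message ID, lower half
    put_u32(out, static_cast<std::uint32_t>(payload.size() + 8));
    put_u16(out, h.client_id);    // Request ID, upper half
    put_u16(out, h.session_id);   // Request ID, lower half
    out.push_back(h.protocol_version);
    out.push_back(h.interface_version);
    out.push_back(h.message_type);
    out.push_back(h.return_code);
    out.insert(out.end(), payload.begin(), payload.end());
    return out;
}
```

In practice the middleware fills these fields itself, so application code normally only supplies the IDs and the payload.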

Within the SOME/IP protocol, there are different methods for communication between ECUs []. The first, Request/Response, is a synchronous communication method in which a device requests a particular service known to be available on another controller, and the service provider executes this service once. The second, known as the Fire & Forget method, works like the first, except that the requester does not track whether the service is carried out. In broadcast/subscription-based event communication, the offered service is executed on the provider side, and the resulting information is sent to all subscribers of this service without waiting for a request each time. The last method, Event Group communication, categorizes events, letting components subscribe to whole sets of related events, which simplifies their management.
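On the wire, these communication patterns are distinguished by the message type byte of the SOME/IP header. The sketch below lists the basic message type values from the SOME/IP specification; the two helper functions are hypothetical and only illustrate how the patterns differ.

```cpp
#include <cassert>
#include <cstdint>

// Basic message type values from the SOME/IP header definition.
enum class MessageType : std::uint8_t {
    Request = 0x00,          // Request/Response: a reply is expected
    RequestNoReturn = 0x01,  // Fire & Forget: no reply is sent
    Notification = 0x02,     // event pushed to subscribers
    Response = 0x80,         // reply to a Request
    Error = 0x81             // error reply to a Request
};

// True only for the message type whose sender waits for an answer.
bool expects_response(MessageType t) {
    return t == MessageType::Request;
}

// True for messages that travel from the service provider to a consumer.
bool sent_by_provider(MessageType t) {
    return t == MessageType::Notification ||
           t == MessageType::Response ||
           t == MessageType::Error;
}
```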

OpenCV, which stands for “Open Source Computer Vision Library,” is an essential tool in the context of computer vision and image processing. It offers a comprehensive set of algorithms and resources that allow developers to work with visual data, supporting a wide variety of applications from recognizing and tracking objects to creating augmented reality experiences. Its open-source status has made OpenCV a popular choice among researchers, engineers, and enthusiasts exploring computer vision [].

One of the key strengths of OpenCV is its versatility in working with different image and video formats, making it easy to use with a variety of data sources. The library includes numerous functions that streamline processes such as image filtering, feature detection, and geometric transformations, making the development of complex vision applications more accessible. The inclusion of a deep learning module within OpenCV expands its potential even further, allowing for the use of pre-trained neural networks for more complex image processing tasks like classification and segmentation.

For this project, OpenCV was utilized for all video processing activities. It handled tasks ranging from frame capture and video encoding to the analysis of individual frames. To optimize video data handling in OpenCV, the MP4 (MPEG-4 Part 14) format was selected for this project. Another major advantage of this library lies in its image processing capabilities, which make it, more often than not, the right choice for image acquisition applications. Although actual image processing remains outside the scope of this project, future expansions of this research could leverage OpenCV for such tasks without needing to seek an alternative library.

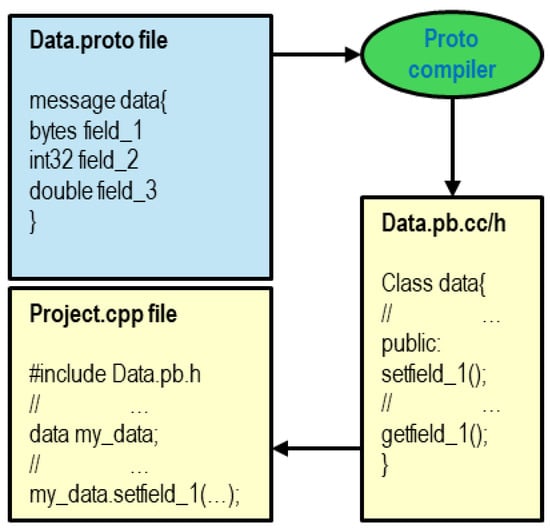

Protocol Buffers, commonly known as Protobuf, stand out as a powerful tool for serializing data, especially useful when efficient exchange is needed across diverse systems. Created by Google, Protobuf is designed to be independent of programming languages, offering a consistent approach for structuring data to ensure clear communication across various systems and languages [].

The core advantage of Protobuf is its straightforward and resource-efficient nature. Developers define their data schema in .proto files, and the Protobuf compiler then turns these definitions into source code for multiple languages. This auto-generated code provides convenient classes for handling data serialization. Protobuf not only minimizes data size, which helps save network bandwidth, but also speeds up the serialization process. The working principle of Protobuf for an exemplary C++ project is illustrated in Figure 2.

Figure 2.

Working principle of protocol buffers in an exemplary code.

For our project, Protobuf was instrumental in structuring complex data like video frames, detailing attributes such as dimensions and frame rates to maintain a uniform format for transferring data. The integration with C++ was seamless, allowing for the smooth serialization of video frames for transmission and equally efficient deserialization upon receipt. This efficient process was vital for enabling the real-time analysis and processing of video data, which is central to the applications built in this project.

In the dynamic world of software development, the ability to efficiently exchange data is critical. The Nlohmann/JSON C++ library meets this demand by offering an efficient way to handle JSON (JavaScript Object Notation). With its human-friendly format, JSON has become the go-to data interchange standard across web services, IoT devices, and many software systems.

The Nlohmann/JSON library streamlines the parsing and crafting of JSON within C++ programs. Its user-friendly syntax lets programmers easily create, access, and alter JSON data. The library effortlessly converts between C++ data types and JSON, providing a smooth and compatible user experience. Thanks to its strong error handling, Nlohmann/JSON is particularly reliable for applications where data correctness is essential [].

In this project, the primary role of the Nlohmann/JSON library was to manage configurations without the need to recompile the entire software project. It enabled our software to dynamically adjust operations such as path definitions for video data by interpreting JSON configuration files. Initially, the Nlohmann/JSON library was also considered for serializing data structures into std::string objects, due to its capability to handle this type. However, it was observed that the library struggles with invalid string characters, which frequently occur in video frames. Consequently, for this specific task, Google’s Protobuf library, which was already introduced earlier, has been employed.

These open-source libraries, integrated into our system, allowed for a flexible architecture that can transmit videos from one environment, such as a VM or control device, to another for further analysis and output. This system grants the secondary environment access to video data it would otherwise not have, enabling effective utilization. The system’s adaptability makes it suitable for a range of uses, from advanced video processing in complex systems to basic video access in simpler ones. It reduces the video and image processing complexity that often requires sophisticated setups, thereby making video data accessible to simpler controller boards designed primarily for data reception and reducing hardware demands. The subsequent section provides more detailed information on how the methods offered by these libraries were implemented.

2.2. Methods

This section will introduce the methods used to facilitate the entire process. First, it will detail the steps required to establish SOME/IP services for effective communication between VMs. Next, the processes of video reading and serialization, followed by deserialization and writing, will be clarified. Lastly, a demo GUI (Graphical User Interface) application will be presented, developed to demonstrate the potential of this project in transmitting detected objects from a video stream. It should be noted that image acquisition and object detection are not the concerns of this project. The demo GUI allows users to manually select objects and transmit them to another VM via SOME/IP, revealing the system’s ability to send regular updates similar to those in a genuine object detection application. In a system equipped with an actual object detection algorithm, the operational procedure would be identical, with the exception of the demo program, which would naturally be replaced by the detection algorithm.

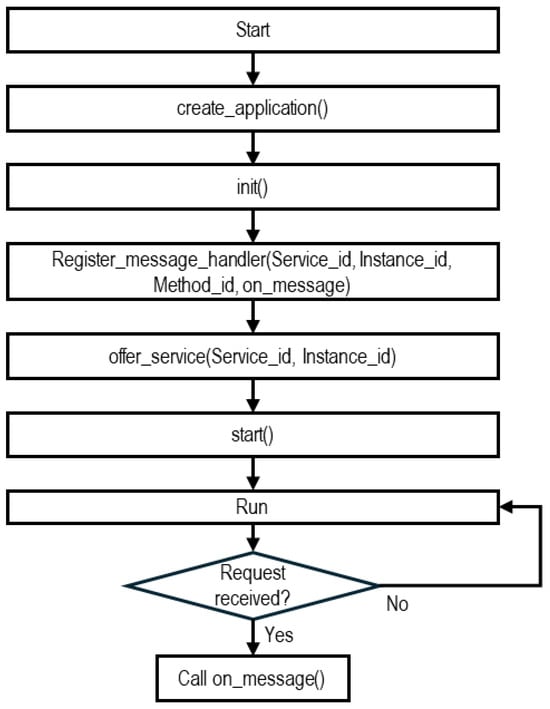

The first step in setting up a SOME/IP runtime environment is to create an application object. In this project, the video source VM is named “Server,” so a SOME/IP runtime object was initialized with this name. This object must be initialized before proceeding with further settings.

Before starting the application object, two additional steps must be completed for a service provider like our Server program. The first is called “register message handler,” a method for SOME/IP objects. This method registers a specific function to be executed when a particular request arrives, identified by a unique service ID, instance ID, and method ID. In the service-oriented architecture of SOME/IP, a service can have multiple instances running on different hosts, each potentially offering a variety of tasks identified by unique method IDs within the scope of the same service. In this project, there is only one task associated with the service ID, and consequently, only one instance ID and one method ID are used.

The second step is to offer the service. This method broadcasts the previously defined service and instance IDs across the network, allowing clients in need of this service to invoke it. Remote clients locate the service using the SOME/IP Service Discovery (SOME/IP-SD) protocol, which searches the network for the specified combination of service and instance IDs. If this service is offered by an ECU on the network, the service provider’s network address is obtained through SOME/IP-SD, and the desired method is then requested from this provider. In response, the provider sends the output of this service. In our project, the request asks for a specific video stored in the Server’s memory, and the response transfers this video byte-wise.

Figure 3 illustrates the flow diagram of setting up the vsomeip environment. After creating and initializing an application, the “register message handler” method is invoked. The last parameter of this method is a user-defined, application-dependent function. Given that only one method is employed in this work, a straightforward name was chosen for service execution. Nonetheless, in scenarios involving multiple methods and instances, this function’s name should ideally correspond to the specific method and instance required. This function is initially triggered when a matching service request is received by the service provider ECU. At this point, the SOME/IP runtime environment automatically executes this function to adequately address the request.

Figure 3.

Flowchart of an exemplary SOME/IP application on the Server side.
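The Server-side sequence described above (register a handler, offer the service, answer matching requests) can be mimicked with a small in-process stand-in. The sketch below is deliberately not the vsomeip API: `MockServer`, `dispatch`, and the `Bytes` alias are hypothetical names, and networking and service discovery are left out entirely. It only shows how a handler is bound to one combination of service, instance, and method IDs and why a request is answered only after the service has been offered.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <map>
#include <set>
#include <tuple>
#include <utility>
#include <vector>

using Bytes = std::vector<std::uint8_t>;
using Handler = std::function<Bytes(const Bytes& request)>;

// Simplified stand-in for a SOME/IP application object (not the vsomeip API).
class MockServer {
public:
    // Binds a handler to one (service, instance, method) combination.
    void register_message_handler(std::uint16_t service, std::uint16_t instance,
                                  std::uint16_t method, Handler handler) {
        handlers_[std::make_tuple(service, instance, method)] = std::move(handler);
    }

    // Marks a service instance as visible to clients.
    void offer_service(std::uint16_t service, std::uint16_t instance) {
        offered_.insert(std::make_pair(service, instance));
    }

    // Dispatches an incoming request; returns false if nothing matches.
    bool dispatch(std::uint16_t service, std::uint16_t instance,
                  std::uint16_t method, const Bytes& request, Bytes& response) const {
        if (offered_.count(std::make_pair(service, instance)) == 0) return false;
        auto it = handlers_.find(std::make_tuple(service, instance, method));
        if (it == handlers_.end()) return false;
        response = it->second(request);  // handler prepares the response payload
        return true;
    }

private:
    std::map<std::tuple<std::uint16_t, std::uint16_t, std::uint16_t>, Handler> handlers_;
    std::set<std::pair<std::uint16_t, std::uint16_t>> offered_;
};
```

A handler registered this way would return the serialized video bytes, which corresponds to filling the payload of the response message in the real application.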

While this function is tailored to the application, it must prepare an appropriate payload in response to the request and dispatch it via the SOME/IP protocol. To this end, payload and response objects are instantiated. The payload object features a method titled “set data” for inputting the data. In this project, vectors of the data type “uint8_t” are used to fill the payload, as this format can be seamlessly converted to the SOME/IP payload data type “vsomeip::byte_t”. Once the payload object is filled with the necessary data, it is attached to the response object using the “set payload” method. Finally, this response object is handed to the active SOME/IP application, which then forwards the response to the client.

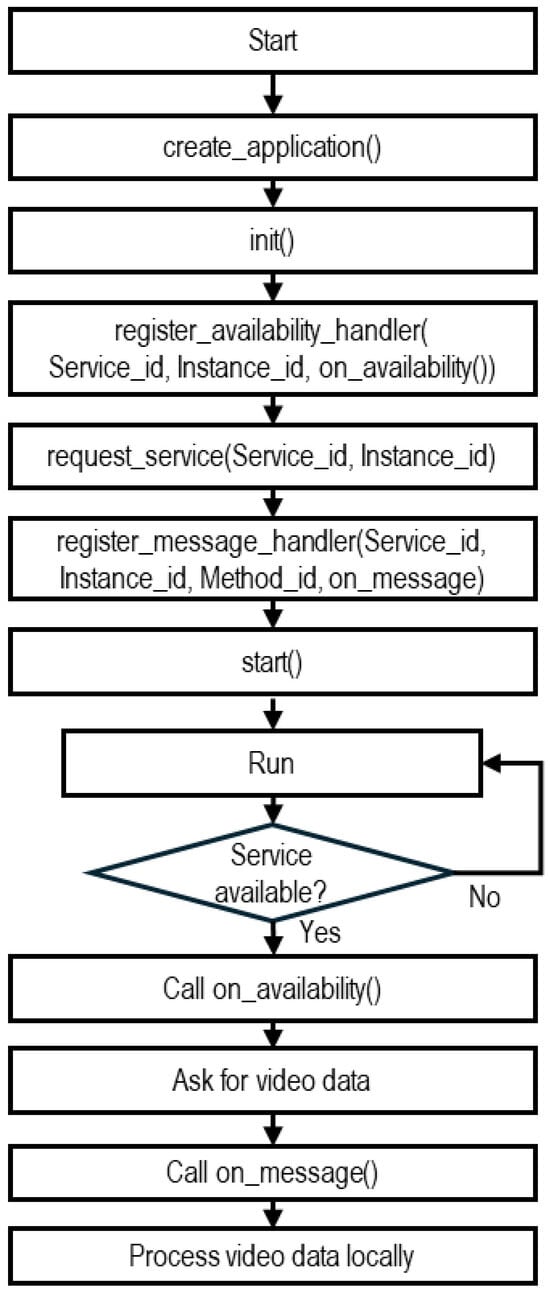

On the receiver side, which we will refer to as the “Client” from now on, similar steps are undertaken to create an application. However, unlike on the Server side, two distinct SOME/IP runtime methods are invoked before the register_message_handler method is triggered. These methods are register availability handler and request service. The former assigns a unique combination of service ID and instance ID to a user-defined and application-dependent function, which is activated as soon as the sought-after service is located anywhere on the network. In our implementation, this function is named “on_availability” due to convention, although more specific names may be necessary in scenarios involving multiple instance IDs, as previously mentioned. The latter method specifies the service and instance IDs in the SOME/IP runtime to initiate the SD protocol as soon as the application starts running. Subsequently, the SOME/IP SD protocol begins searching for this specific ID combination across the network. Finally, the register_message_handler method is invoked similarly to on the Server side. This method triggers an “on message” function to perform specific actions as soon as a packet is received from the Server. In this work, the packet contains video data, and thus this function encompasses the necessary steps for receiving, reading, and then storing this video in the Client’s memory. The workflow on the Client side is illustrated in Figure 4.

Figure 4.

Flowchart of an exemplary SOME/IP application on the Client side.
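The ordering of the Client-side calls can be illustrated in the same spirit. The following sketch is a hypothetical in-process stand-in rather than vsomeip code: `MockClient`, `ClientState`, `notify_availability`, and `deliver` are made-up names that replace the discovery and transport layers. It shows that the request is only issued once the availability handler reports the service, and that the message handler stores whatever payload arrives.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <utility>
#include <vector>

using Bytes = std::vector<std::uint8_t>;

// Simplified stand-in for the Client-side application (not the vsomeip API).
class MockClient {
public:
    using AvailabilityHandler = std::function<void(bool available)>;
    using MessageHandler = std::function<void(const Bytes& payload)>;

    void register_availability_handler(AvailabilityHandler h) { on_availability_ = std::move(h); }
    void register_message_handler(MessageHandler h) { on_message_ = std::move(h); }
    void request_service() { requested_ = true; }

    // Called by the (mock) discovery layer when the Server's offer is seen or lost.
    void notify_availability(bool available) {
        if (requested_ && on_availability_) on_availability_(available);
    }

    // Called by the (mock) transport when a response arrives.
    void deliver(const Bytes& payload) {
        if (on_message_) on_message_(payload);
    }

private:
    AvailabilityHandler on_availability_;
    MessageHandler on_message_;
    bool requested_ = false;
};

// Records what the handlers observed.
struct ClientState {
    bool request_sent = false;
    Bytes received_video;
};

// Wires the handlers in the order described in the text.
void setup_client(MockClient& client, ClientState& state) {
    client.register_availability_handler([&state](bool available) {
        if (available) state.request_sent = true;  // a real client would send the request here
    });
    client.request_service();
    client.register_message_handler([&state](const Bytes& payload) {
        state.received_video = payload;  // a real client would deserialize and store the video
    });
}
```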

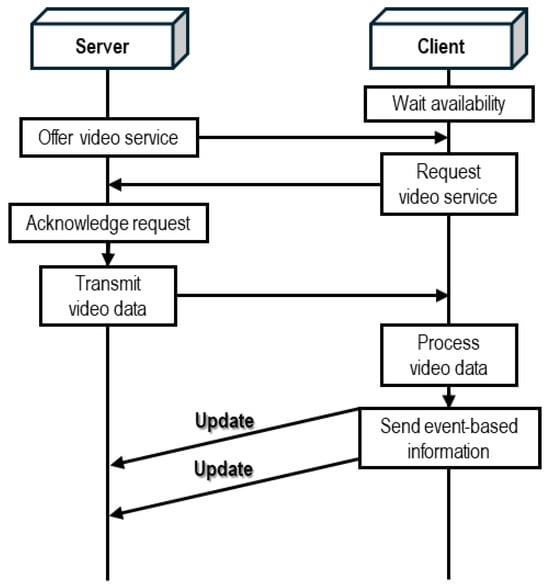

The management of events is handled in a manner quite similar to the simpler request/response methods described above. However, in this case, the Client offers a service that includes event-based updates, and the Server requests this service. Understandably, events should have their own service, instance, and method IDs. In this study, events convey string-based information about detected objects: their type, such as vehicle or human, the number of detected objects, and the time at which these objects are detected. Each time a new object is detected, this information is transmitted as an event update from the Client to the Server. The overall process flow is illustrated in Figure 5.

Figure 5.

Process flow of video and object detection services running on Server and Client.
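Since the event updates are string-based, one possible encoding is a short delimited string that is copied into the byte payload. The sketch below assumes a `type;count;time_ms` layout; this layout, the `DetectionEvent` struct, and the millisecond time unit are illustrative assumptions and not the format used in the paper.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <string>
#include <vector>

struct DetectionEvent {
    std::string type;    // e.g. "vehicle" or "human"
    int count;           // number of detected objects
    long long time_ms;   // detection time in milliseconds (assumed unit)
};

// Joins the three fields with ';' (assumed layout).
std::string encode_event(const DetectionEvent& e) {
    return e.type + ";" + std::to_string(e.count) + ";" + std::to_string(e.time_ms);
}

// Event payloads travel as raw bytes, so the string is copied byte by byte.
std::vector<std::uint8_t> to_payload(const std::string& s) {
    return std::vector<std::uint8_t>(s.begin(), s.end());
}

// Parses a payload back into an event; returns false on malformed input.
bool decode_event(const std::vector<std::uint8_t>& payload, DetectionEvent& out) {
    const std::string s(payload.begin(), payload.end());
    const std::size_t a = s.find(';');
    if (a == std::string::npos) return false;
    const std::size_t b = s.find(';', a + 1);
    if (b == std::string::npos) return false;
    try {
        out.type = s.substr(0, a);
        out.count = std::stoi(s.substr(a + 1, b - a - 1));
        out.time_ms = std::stoll(s.substr(b + 1));
    } catch (const std::exception&) {
        return false;  // non-numeric count or time
    }
    return true;
}
```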

Once a running SOME/IP environment is established, video file operations should be properly implemented in the user-defined functions described in the previous section. To package a video stream in a container, a struct named “VideoData” is defined. This struct includes, among other things, a vector of cv::Mat objects capable of representing a single video frame, and a cv::Size object that holds the video data’s window size. In addition to classes from OpenCV [], a double type variable is used to store the FPS (Frames Per Second) rate. Lastly, a flag is employed to determine whether the FPS rate is read from the input video; this flag is utilized solely to avoid the redundant operation of reading the FPS rate from every single frame. The full extent of the VideoData struct, as well as the function of each member, is summarized in Table 2.

Table 2.

Properties of VideoData Struct.

To read a video file from a known path into a VideoData container and to write the contents of a container back to a video file, a new class named “Video_Object” was designed. Apart from reading and writing, this class also serializes video data into a stream of 8-bit unsigned integers and deserializes an existing stream into a VideoData container. As mentioned before, this step is critical when sending video packets through SOME/IP.

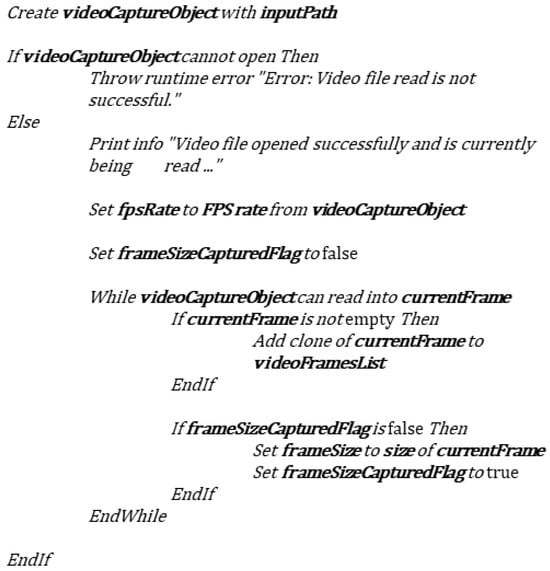

The video reading operation is conducted by the “VideoRead()” method of the Video_Object class. This method begins by creating a “cv::VideoCapture” object, which requires the path of the video file to be known beforehand. First, the method checks whether the file at the specified path can be opened. If the video is successfully opened, the FPS rate is stored in the corresponding container attribute using the “get” method of the VideoCapture class. Subsequently, video frames are read sequentially. The read() method, used within a while-loop, continues to fetch frames as long as it returns true, with each current frame being stored in the VideoFrames attribute. During the first loop iteration, the frame size is recorded, and a flag indicating that the frame size has been captured is set to prevent redundant frame size recordings. The pseudo-code for the VideoRead method is depicted in Figure 6.

Figure 6.

Pseudo-code for VideoRead method of Video_Object class.
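The control flow of the read loop can be sketched without OpenCV by substituting small stand-in types. In the sketch below, `FakeFrame` and `FakeCapture` are hypothetical replacements for `cv::Mat` and `cv::VideoCapture`, and the `VideoData` struct contains only the members mentioned in the text; the member names are assumptions. The function is templated on the capture type so that the same loop structure could, in principle, be applied to any source exposing `isOpened()`, `get()`, and `read()`.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Stand-in frame type; the paper's implementation stores cv::Mat frames.
struct FakeFrame {
    int cols = 0;
    int rows = 0;
};

// Stand-in capture source exposing the three calls the text mentions.
class FakeCapture {
public:
    FakeCapture(bool opened, double fps, std::vector<FakeFrame> frames)
        : opened_(opened), fps_(fps), frames_(std::move(frames)) {}
    bool isOpened() const { return opened_; }
    double get(int /*property_id*/) const { return fps_; }
    bool read(FakeFrame& out) {
        if (next_ >= frames_.size()) return false;  // end of stream
        out = frames_[next_++];
        return true;
    }
private:
    bool opened_;
    double fps_;
    std::vector<FakeFrame> frames_;
    std::size_t next_ = 0;
};

struct VideoData {
    std::vector<FakeFrame> frames;
    int width = 0;
    int height = 0;
    double fps = 0.0;
    bool size_captured = false;
};

// Placeholder property id; with OpenCV one would pass cv::CAP_PROP_FPS.
const int kFpsProperty = 0;

template <typename Capture>
bool video_read(Capture& cap, VideoData& out) {
    if (!cap.isOpened()) return false;   // file could not be opened
    out.fps = cap.get(kFpsProperty);     // read the FPS rate once, before the loop
    FakeFrame frame;
    while (cap.read(frame)) {            // read() returns false at end of stream
        if (!out.size_captured) {        // record frame size on first iteration only
            out.width = frame.cols;
            out.height = frame.rows;
            out.size_captured = true;
        }
        out.frames.push_back(frame);     // with cv::Mat, a deep copy (clone) would be stored
    }
    return true;
}
```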

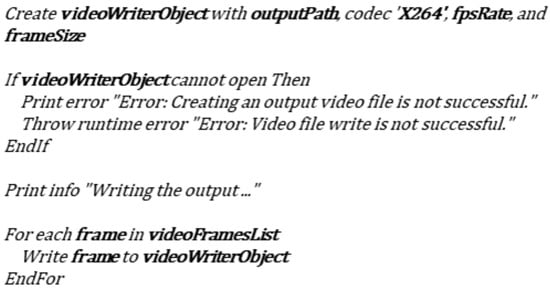

Once the video file is fully read, its contents are stored in a VideoData container. This means that, given access to a properly populated VideoData container, it is possible to regenerate the exact same video stream without any access to the original data. To initiate the write operation of a video file, a data path is first required, with which a “cv::VideoWriter” object from the OpenCV library is initialized. Additional arguments to initialize this object include the codec code, FPS rate, and frame size. The codec code is chosen as X264 in this project, which is compatible with mp4 files. The FPS rate and frame size attributes are read from the VideoData container. Subsequently, a check is performed to ensure the output file can be created. Finally, the “write()” method of the “cv::VideoWriter” class is used to write the stored frames into the output file one by one. Here, an iteration through the frames is conducted. The pseudo-code for the VideoWrite operation is provided in Figure 7.

Figure 7.

Pseudo-code for VideoWrite method of Video_Object class.
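The codec passed to the video writer is identified by a four-character code (FOURCC) packed into a single integer. The sketch below reproduces this packing in plain C++ to show what a code such as X264 looks like numerically; the function name `fourcc` is chosen here for illustration, and OpenCV provides its own helper for the same purpose.

```cpp
#include <cassert>
#include <cstdint>

// Packs four characters into a 32-bit codec code, first character in the lowest byte.
std::uint32_t fourcc(char c1, char c2, char c3, char c4) {
    return  static_cast<std::uint32_t>(static_cast<unsigned char>(c1))
         | (static_cast<std::uint32_t>(static_cast<unsigned char>(c2)) << 8)
         | (static_cast<std::uint32_t>(static_cast<unsigned char>(c3)) << 16)
         | (static_cast<std::uint32_t>(static_cast<unsigned char>(c4)) << 24);
}
```

For example, `fourcc('X', '2', '6', '4')` evaluates to `0x34363258`, because the ASCII codes of the four characters occupy the four bytes from lowest to highest.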

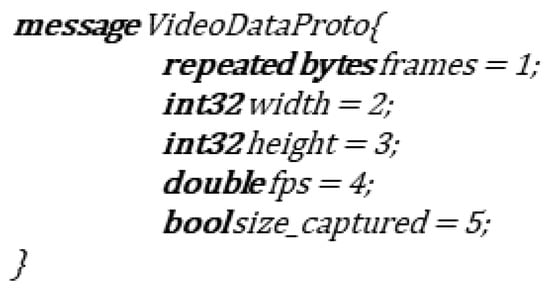

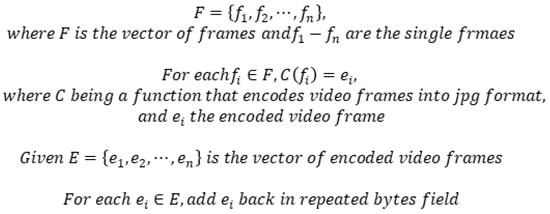

As previously mentioned, complex data structures like our VideoData container must be serialized into sequences of simpler data types, such as bytes, before being incorporated into the payload of a SOME/IP message. Accordingly, methods have been developed for this purpose. Initially, the contents of the VideoData struct must be accurately described in a “.proto” file. This message definition is illustrated in Figure 8. In this schema, each assignment indicates the field position of the corresponding data type; for instance, frames are situated in the first field, width of a frame in the second, and so forth.

Figure 8.

Message format for proto file.

Subsequently, “.pb.cc” and “.pb.h” files are generated using the Protobuf compiler. These source files contain all the necessary classes and methods to populate the defined data types and to read byte sequences into these data types. To convert a VideoData struct into byte sequences, an object of the class generated by the Protobuf compiler is initialized to execute all the necessary operations. The “set” method, specifically generated for our data structure, is used for each content element. The exception to this is the frames, which must be handled differently because they are not a single variable but a vector of frames. For serializing a vector of video frames, a for-loop iterating through the frame vector is employed. In each iteration, an OpenCV function named “cv::imencode” encodes the current frame into a buffer in the form of a vector of “uchar” in JPG (Joint Photographic Experts Group) format. This vector is then appended to the video frames field of the serialized message using the “add_elements” method, where “elements” stands for the name of the vector field in the “.proto” file. The operation of adding video frames to the repeated byte fields of the Proto class member is summarized in Figure 9.

Figure 9.

Operation for adding video frames in repeated byte fields of proto class member.

The final set of operations involves preparing the serialized message as a vector of bytes. Protobuf provides a method named “SerializeToString”, which writes the serialized message into a string object passed to the function. This string is then reinterpreted as a vector of “uint8_t”, because this format is more compatible with the vsomeip library.
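The conversion between the serialized string and the byte vector can be sketched as follows. This is a minimal illustration that uses only the C++ standard library; the helper names `toBytes` and `toString` are hypothetical and are not taken from the project's source code. The sketch copies the bytes rather than reinterpreting them in place, and it preserves embedded zero bytes, which are common in binary payloads.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical helper: copies the bytes of a serialized string (for example,
// one filled by SerializeToString) into a byte vector usable as a payload.
std::vector<std::uint8_t> toBytes(const std::string& serialized) {
    return std::vector<std::uint8_t>(serialized.begin(), serialized.end());
}

// Hypothetical helper for the receiving side: rebuilds the string from the
// received bytes so that it can be handed to a parsing routine.
std::string toString(const std::vector<std::uint8_t>& bytes) {
    return std::string(bytes.begin(), bytes.end());
}
```

Because both helpers work on iterator ranges rather than C-style strings, a round trip through `toBytes` and `toString` returns the original byte sequence unchanged.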

Once the serialization is complete, the entire VideoData container, including the video itself, is represented as a sequence of bytes. At the receiving end, this byte stream must be converted back into meaningful video data; in other words, it must be deserialized. In the function designed for deserializing our VideoData format, almost every operation of the serialization process is carried out in reverse. First, a proto object is initialized to perform the deserialization operations. A string object is initialized by reinterpreting the received vector of “uint8_t”. Then, the “ParseFromString” method is used to load the information into the proto object. To read the video frames, the same logic as for writing them is applied, except that the encode function is replaced by “cv::imdecode”. As the name suggests, this function decodes vectors carrying JPG data back into the video frame format, or more specifically, into the “cv::Mat” format. The decoded video frames are then appended to the video frame vector. All other information carried by the serialized message is read into the appropriate variables using the corresponding get methods.

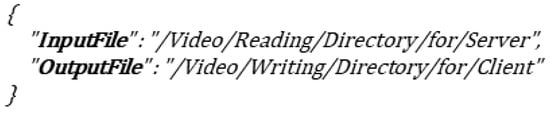

The nlohmann/json library is a header-only C++ library for processing JSON (JavaScript Object Notation) data. JSON is a lightweight data interchange format that is easy for humans to read and write, and easy for machines to parse and generate. The library simplifies the integration of JSON processing into C++ applications by providing an intuitive Application Programming Interface (API) that supports modern C++ features.

The configuration of video directories is managed for both ends using JSON configuration files. This setup specifies the directory from which the video file is sourced on the Server side and the directory in which received video data is stored on the Client side. The format of these configuration files is illustrated in Figure 10. As seen in the figure, the format has been kept as simple as possible, as there is no need for a complex structure for this purpose. It is important to note that the format is identical for both ends, with the only difference being the directories used on the VMs. Understandably, “InputFile” is relevant only for the Server and “OutputFile” for the Client. To make the project as scalable as possible, both attributes are utilized as is in both VMs. To read the entries in the JSON files, an “nlohmann::json” object is created. Configuration files are opened with “std::ifstream” objects and then passed to the JSON objects. Subsequently, the JSON object can return the entries from the given file as string objects. Although this method may seem redundant, it was implemented to enhance the scalability of the project and eliminate the need to recompile the entire project each time the video source changes.

Figure 10.

JSON format of configuration files.

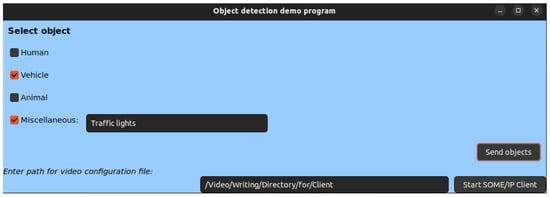

The implementation details of the GUI application are not provided here, since, unlike the other parts of the project, this part serves only as a demonstration. Therefore, only its design is briefly introduced. The application is designed solely for the Client side, where object detection is supposed to take place. The GUI design for pseudo-object detection is illustrated in Figure 11.

Figure 11.

GUI design for pseudo-object detection program.

The GUI was designed to be simple, as it does not play an essential role in the project. However, it was also considered important that it could be replaced by a real object detection algorithm with as little effort as possible. Therefore, the flexibility to select multiple objects was added to the design, a feature typical of real object detection algorithms. In a real algorithm, there may be both pre-defined and undefined objects. For this reason, there is an option to categorize undefined, or at least non-pre-defined, objects under “miscellaneous”. As examples, three pre-defined objects were chosen: human, vehicle, and animal. These were chosen because the likelihood of detecting one of them in a vehicle environment is considerable. For an undefined object, the text field next to the miscellaneous category must be filled in, and the string in this field is then sent as an object. In addition to the user-facing part of the program, a clock runs in the background that counts up to 100 s and then restarts. When the user selects a set of objects and clicks the “Send objects” button, the current timestamp is recorded, and the corresponding detection time is sent along with the selected objects.
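The behavior of the background clock can be sketched as a simple wrap-around computation. The following is an illustrative sketch only: the function name `detectionTime`, the constant `kClockPeriod`, and the millisecond resolution are assumptions, since the text states only that the clock counts up to 100 s and then restarts.

```cpp
#include <cassert>
#include <chrono>

// Assumed period of the background clock: it counts up to 100 s, then restarts.
constexpr std::chrono::milliseconds kClockPeriod{100000};

// Hypothetical helper: maps the time elapsed since the GUI was started onto
// the wrapping clock, yielding the detection time attached to an update.
std::chrono::milliseconds detectionTime(std::chrono::milliseconds elapsed) {
    return elapsed % kClockPeriod;
}
```

With this mapping, a click 250.3 s after start-up would be reported with a detection time of 50.3 s, because the clock has restarted twice by then.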

Given the use case, it would make little sense for objects to be detected and sent before the video transmission is completed. Therefore, a safety mechanism is implemented that prevents objects from being sent before the video has been completely transmitted to the client side.
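One possible way to realize such a safety mechanism is a flag that is set once the video has been received and that is checked before every send attempt. The sketch below is an assumption about how this could look, not the project's actual code; the class `ObjectSendGate` and its members are hypothetical, and the `sent_` vector merely stands in for the real event notification.

```cpp
#include <atomic>
#include <cassert>
#include <string>
#include <vector>

// Hypothetical gate that blocks object updates until the video has arrived.
class ObjectSendGate {
public:
    // To be called once the received video has been written on the client.
    void markVideoReceived() { video_received_.store(true); }

    // Returns true if the objects were accepted for sending, or false if the
    // video transfer has not finished yet (in which case nothing is sent).
    bool trySend(const std::vector<std::string>& objects) {
        if (!video_received_.load()) {
            return false;
        }
        sent_.insert(sent_.end(), objects.begin(), objects.end());
        return true;
    }

    const std::vector<std::string>& sent() const { return sent_; }

private:
    std::atomic<bool> video_received_{false};  // may be set from another thread
    std::vector<std::string> sent_;  // stand-in for the event notification;
                                     // accessed from the GUI thread only
};
```

The flag is atomic because, in a setup like the one described, the video is likely to arrive on a communication thread while the button click is handled on the GUI thread.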

By using the methods described in this section, a successfully running project environment was created using only the libraries introduced in this chapter, their dependencies where applicable, and two virtual machines running the Ubuntu Linux operating system. Some properties of these virtual machines are listed in Table 3 to highlight the system dependencies of this project. In the following section, the results obtained from this work will be summarized.

Table 3.

Some Properties of Virtual Machines.

Each VM has been allocated 4096 MB of RAM and two CPU cores, ensuring sufficient performance for development and simulation tasks. The key features of these virtual machines include the ability to manage SOME/IP communication and video data processing operations, which are critical for the objectives of this project. The chipset is set to PIIX3, the video memory is set to 16 MB, and the VMSVGA graphics controller is used. These settings provide a stable and efficient environment for developing and testing the vsomeip library and related components.

3. Results and Discussion

3.1. Video Transmission

After creating the project using all the introduced tools and methods, a few settings for the vsomeip library need to be configured for inter-device communication. These settings are typically specified in a configuration file named “vsomeip_config.json” by default. Before vsomeip3, simply placing this file in the same location as the executable was sufficient for vsomeip to automatically recognize and apply the configurations within it. However, with vsomeip3—used in this project—the file’s location must be explicitly defined; otherwise, the SOME/IP handling library defaults to its standard configuration file, which does not align with individual project settings. Moreover, vsomeip3 applications must be launched from the same terminal where the configuration file path is specified. Consequently, bash scripts were created to initiate our project, which include both the program’s run command and the configuration file path definition. The most significant attributes from the configuration files are highlighted in Table 4.

Table 4.

Configuration settings of virtual machines.

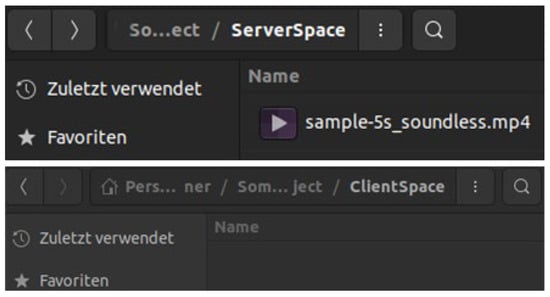

The video file selected to test the project is 6.9 MB in size, presenting a challenging workload for typical automotive communication protocols. This file was sourced from a website offering open-source training videos for machine learning-based systems. Initially, the video is stored solely in the Server’s memory. The second VM, referred to as the Client, has no direct access to this video. The directory structures for both VMs are provided in Figure 12.

Figure 12.

Folder layout before video transmission for Server (upper figure) and Client (lower figure).

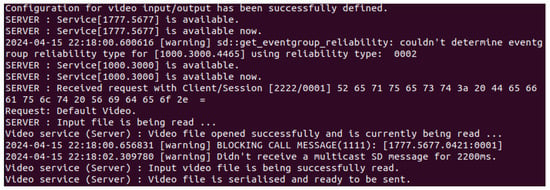

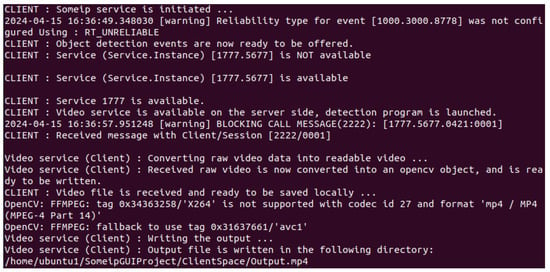

After establishing the initial environmental conditions, applications on both ends are launched. It is important to note that the launch order of the applications does not affect functionality, as both programs remain in a loop until the requested service is provided or a proper request is received. This is made possible by the service availability concept. Applications running on both ends constantly check for the availability of the services they require and remain on hold until those services are available. To monitor the communication between the VMs, console outputs have been configured to log each significant event, such as when the video service becomes available, a request is made, or video transmission is initiated. Figure 13 and Figure 14 illustrate some of the most significant log messages printed on the Server and Client consoles, respectively. Messages prefixed with ‘SERVER’ or ‘CLIENT’ are generated by our user functions called by vsomeip. Logs that begin with ‘Video service’ contain messages from our own video library. Lastly, console messages starting with ‘OpenCV’ are exclusively from the OpenCV library and are not influenced by our implementation.
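The waiting behavior that makes the launch order irrelevant can be illustrated with a small synchronization helper. This sketch uses a condition variable from the C++ standard library instead of a polling loop, which is a substitution made here for brevity; the class `AvailabilityLatch` is hypothetical, and `setAvailable` is assumed to be called from whatever availability callback the middleware provides.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>

// Hypothetical helper: lets an application wait until a service is available.
class AvailabilityLatch {
public:
    // Intended to be called from an availability callback.
    void setAvailable(bool available) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            available_ = available;
        }
        cv_.notify_all();
    }

    // Blocks the caller until the service has been reported as available.
    // Returns immediately if it is available already.
    void waitUntilAvailable() {
        std::unique_lock<std::mutex> lock(mutex_);
        cv_.wait(lock, [this] { return available_; });
    }

    bool isAvailable() {
        std::lock_guard<std::mutex> lock(mutex_);
        return available_;
    }

private:
    std::mutex mutex_;
    std::condition_variable cv_;
    bool available_ = false;
};
```

A client built this way would call `waitUntilAvailable()` before sending its video request, so it does not matter whether the Server or the Client is started first.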

Figure 13.

Console message on the Server side during video file transmission.

Figure 14.

Console message on the Client side during video file transmission.

On the server console, the availability of the server's own services is printed first. Afterwards, the availability of the services offered by the Client, which include event-based object detection updates, is acknowledged. On the client side, the first significant log message indicates that initially no matching service instance offered by the server is found. The subsequent message announces that the service has become available. The following message reports the reception of a message containing video data.

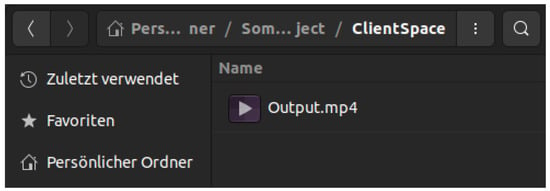

After the video transmission is completed, as indicated by the client-side log message, ‘Output file is written in the following directory: ...’, the same input video that is available on the server end is written to the client’s memory under the name ‘Output.mp4’, as pre-defined in the video configuration file.

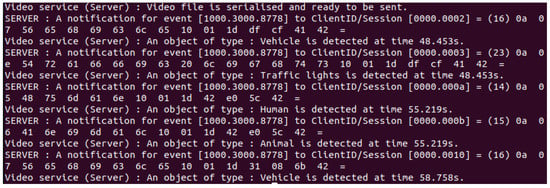

Following this operation, the previously empty directory on the client side, shown in Figure 12, appears as given in Figure 15. So far, only the results from the video transmission operation have been shared. After successful transmission of the requested video file, it is possible to send any number of event updates containing the so-called ‘detected objects’ using the GUI application. To demonstrate this, some arbitrarily chosen objects are sent as event updates to the server. A set of example objects, detailed in Table 5, are transmitted using the GUI application. The log messages that have consequently been printed out on the server’s console are illustrated in Figure 16.

Figure 15.

Folder layout for Client after video transmission is completed.

Table 5.

Exemplary Objects.

Figure 16.

Console message on the Server side during event updates containing object detection information.

In this example, two separate object groups, namely ‘vehicle/traffic lights’ and ‘human/vehicle’, are sent at the same time instant. However, they are sent as separate event instances, as can be seen by comparing their instance IDs in Figure 16. This separation was intentional, to distinguish different detections even though they are captured simultaneously. This feature was also added to make the GUI application resemble a genuine detection algorithm as closely as possible. Depending on the specific needs of a project, it is also possible to group objects detected at the same time into a single event update. In this work, that kind of grouping was not implemented.
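For projects that prefer one event update per time instant, the grouping mentioned above could be sketched as follows. This is not part of the presented work; the `Detection` struct, its fields, and the function `groupByTimestamp` are hypothetical names introduced purely for illustration.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Hypothetical representation of one object detection update.
struct Detection {
    long timestamp_ms;                 // detection time in milliseconds
    std::vector<std::string> objects;  // names of the detected objects
};

// Merges all updates that share a timestamp into a single update.
// Object names are concatenated as-is, so duplicates are kept.
std::vector<Detection> groupByTimestamp(const std::vector<Detection>& updates) {
    std::map<long, std::vector<std::string>> grouped;
    for (const auto& update : updates) {
        auto& bucket = grouped[update.timestamp_ms];
        bucket.insert(bucket.end(), update.objects.begin(), update.objects.end());
    }
    std::vector<Detection> result;
    for (const auto& entry : grouped) {
        result.push_back(Detection{entry.first, entry.second});
    }
    return result;  // ordered by ascending timestamp
}
```

Applied to the two groups of the example, this would yield a single update that lists ‘vehicle’ twice, which shows that a real implementation would also have to decide whether duplicates denote distinct objects.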

3.2. Evaluation of Video Transmission Duration

To evaluate the video data transmission capability of the system, video data was recorded using a GKU D600 dashboard camera [] (GKUVISION, Guangdong, China). The experimental setup used to record the sample video data is shown in Figure 17.

Figure 17.

Dashboard camera setup used in this project to record sample videos. Front (upper figure) and rear (lower figure) dashboard camera.

The front and rear dashboard cameras can record video at 30 and 25 FPS, respectively. The front camera has two resolution settings: 2560 × 1440 and 1920 × 1080. The rear camera can only record at 1920 × 1080.

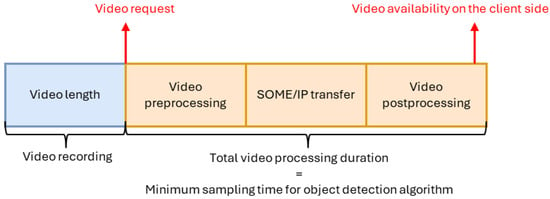

Since a recording can be of any length, the duration of the video section that is processed at a time must be fixed for this project. From this point on, this duration is referred to as the “video section duration.” The time from when the video is requested until the video data is written to the client’s memory determines the sampling period, and thus the sampling frequency, of the object detection algorithm running in the client environment. This time is referred to as the “total video processing duration.” The main components of the total video processing duration are illustrated in Figure 18.
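A duration of this kind can be measured with a monotonic clock around the operation of interest. The sketch below shows the general technique using the C++ standard library; the helper `measure` is hypothetical, and it is assumed that the measurement runs entirely on the client, so that the clocks of the two VMs do not have to be synchronized.

```cpp
#include <cassert>
#include <chrono>
#include <functional>

// Hypothetical helper: runs `work` and returns how long it took, measured
// with a monotonic clock so that system time adjustments have no effect.
std::chrono::milliseconds measure(const std::function<void()>& work) {
    const auto start = std::chrono::steady_clock::now();
    work();
    const auto end = std::chrono::steady_clock::now();
    return std::chrono::duration_cast<std::chrono::milliseconds>(end - start);
}
```

To obtain the total video processing duration in this way, `work` would have to cover everything from sending the request to finishing the write of the output file.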

Figure 18.

Comparison of video length with total video processing duration.

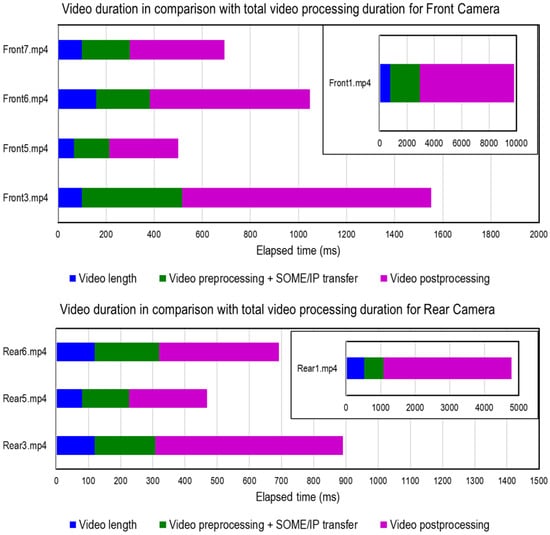

The previously introduced adjustable camera settings, namely the FPS rate and resolution, are used to obtain videos of different durations and sizes. The videos used for comparison are labeled Frontx.mp4 and Rearx.mp4 for the front and rear cameras, respectively. Here, x represents the video number taken from the relevant camera. Some of this video data is shared in Table 6. Figure 19 shows sections taken from the Front1.mp4 and Rear1.mp4 video files.

Table 6.

Details about the video data used in this work.

Figure 19.

Sections taken from the Front1.mp4 and Rear1.mp4 video files.

Figure 20 shows the total video processing durations in comparison with the video length for the front and rear camera video data. The longest processing times are observed for the Front1.mp4 and Rear1.mp4 video sections for the front and rear cameras, respectively. For the Front1.mp4 video, the total processing time is approximately 12 times the length of the video, rendering it unusable for practical purposes. For the Rear1.mp4 video, the total processing time is approximately 4.2 s, which is also highly impractical. To obtain values that can be used in practice, the video duration must be significantly reduced. Additionally, the video resolution of the front camera must be reduced to decrease the video data size. This affects not only the duration of video transmission but also the file reading and writing operations. For the Front5.mp4 and Rear5.mp4 samples, the total video processing duration remains around 400 ms.
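The two quantities used in this comparison are simple ratios and can be written down as follows. The function names are hypothetical; the only figures taken from the text are the factor of roughly 12 for Front1.mp4 and the total processing duration of about 400 ms for the optimized samples.

```cpp
#include <cassert>
#include <cmath>

// Ratio of total processing duration to video section duration. A value
// above 1 means that processing a section takes longer than the section lasts.
double processingRatio(double processing_s, double section_s) {
    return processing_s / section_s;
}

// Update rate in Hz that results if one section is processed per cycle,
// i.e. the reciprocal of the total processing duration.
double updateRateHz(double processing_s) {
    return 1.0 / processing_s;
}
```

For a total processing duration of 0.4 s, `updateRateHz` gives 2.5 Hz, which corresponds to the sampling frequency available to an object detection algorithm on the client.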

Figure 20.

Comparison of video length with total video processing duration for the front and rear cameras.

3.3. Key Points and Limitations of the Study

So far, the results obtained from a virtual ECU system have been presented. In this system, video data is recorded using a set of dashboard cameras and cut to a limited length so that the data can be processed in sections. This data is processed in the first virtual ECU before being sent through SOME/IP. Finally, the received data is written to the memory of the second virtual ECU environment.

The system developed in this study represents an automotive work environment in which a scalable architecture distributes services to different control units. This approach provides flexibility and efficient use of system resources. With this in mind, the study includes some simplifications that limit the system’s capabilities. The most significant simplification is using Linux-based virtual ECUs instead of real ECU hardware. Although real cameras are used to capture video frames, the obtained data is not used directly. Rather, it is divided into sections of varying durations to study plausible section durations. Finally, an object detection algorithm that works with video frames was not implemented since this part remains outside the scope of this work. This could be a good inspiration for future work. For this study, only a placeholder GUI program was used to simulate the event-based communication option of SOME/IP.

As indicated by the results presented earlier in this section, a sampling period of approximately 400 ms, corresponding to a sampling frequency of about 2.5 Hz, is possible if the camera and video section resolutions are chosen carefully. Further reductions in total processing duration are possible by introducing additional parameters to the system, such as an increased compression ratio or a higher FPS rate using a more advanced camera. With further development, the study promises a flexible architectural design that can be applied to real automotive systems.

4. Conclusions

This research developed a video stream transmission library that uses SOME/IP over Automotive Ethernet. The experiments conducted throughout this study demonstrate the system’s capability to handle video data transmissions effectively, underscoring the adaptability of SOME/IP to complex service-oriented communication. This makes it a strong candidate for future automotive network architectures that may require the handling of video data in various scenarios.

As a result of this work, a successful transmission of video data between two VMs was achieved. Moreover, following the transmission of this video file, a service was initiated which is capable of sending regular event updates, simulating the behavior of a real object detection algorithm. The most significant outcomes obtained from this work can be summarized as follows:

- A video file was successfully read from a local source and serialized into a vector of bytes

- The serialized vector of bytes was reinterpreted as SOME/IP payload data and sent through Ethernet to a second device

- The received SOME/IP payload was converted back to a vector of bytes containing data about the video file

- Experimental evaluation with front and rear dashboard cameras demonstrated that video resolution and file size have a direct effect on total processing duration, influencing transmission efficiency over SOME/IP

- It was observed that lower-resolution and shorter-duration videos achieved total processing durations of around 400 ms, confirming that near-real-time operation is achievable with optimized parameters

- These results emphasize the importance of balancing video quality and data size to ensure efficient and practical video transmission performance in service-oriented automotive environments

This study establishes a foundation for future studies, particularly in exploring real-time video transmission capabilities. While the current system does not measure reliability or latency, these aspects represent important directions for future research. Further enhancements could focus on expanding the system to manage multiple video streams and higher data rates, implementing the system on real control units, and transmitting live video instead of pre-recorded videos.

Author Contributions

M.E., investigation, designed the overall methodology, writing—original draft, reviewing, and editing; L.B., investigation, designed the overall methodology, designed and performed the experiments, writing—original draft, reviewing, and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

Author Levent Bilal was employed by the company Intive GmbH. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| ADAS | Advanced Driver-Assistance Systems |
| C-V2X | Cellular Vehicle-to-Everything |
| CAN | Controller Area Network |
| COVESA | Connected Vehicle Systems Alliance |
| ECU | Electronic Control Unit |
| FPS | Frames per Second |
| GUI | Graphical User Interface |
| IoT | Internet of Things |
| IoV | Internet of Vehicles |
| JSON | JavaScript Object Notation |
| LIN | Local Interconnect Network |
| MOST | Media Oriented Systems Transport |
| OpenCV | Open Source Computer Vision Library |
| SD | Service Discovery |
| SOA | Service-Oriented Architecture |
| SOME/IP | Scalable Service-Oriented Middleware over IP |
| V2X | Vehicle-to-Everything |
| VM | Virtual Machine |

References

- Hedge, R.; Mishra, G.; Gurumurthy, K. An Insight into the Hardware and Software Complexity of ECUs in Vehicles. In Proceedings of the International Conference on Advances in Computing and Information Technology, Chennai, India, 15–17 July 2011; Springer: Berlin/Heidelberg, Germany, 2011; pp. 99–106.

- Nolte, T.; Hansson, H.; Bello, L.L. Automotive communications-past, current and future. In Proceedings of the 2005 IEEE Conference on Emerging Technologies and Factory Automation, Catania, Italy, 19–22 September 2005.

- Douss, A.B.C.; Abassi, R.; Sauveron, D. State-of-the-art survey of in-vehicle protocols and automotive Ethernet security and vulnerabilities. Math. Biosci. Eng. 2023, 20, 17057–17095.

- Lo Bello, L.; Patti, G.; Leonardi, L. A perspective on ethernet in automotive communications—Current Status and Future Trends. Appl. Sci. 2023, 13, 1278.

- De Almeida, J.F.B. Rust-Based SOME/IP Implementation for Robust Automotive Software. Ph.D. Dissertation, Universidade do Porto, Porto, Portugal, 2021.

- Nayak, N.; Ambalavanan, U.; Thampan, J.M.; Grewe, D.; Wagner, M.; Schildt, S.; Ott, J. Reimagining Automotive Service-Oriented Communication: A Case Study on Programmable Data Planes. IEEE Veh. Technol. Mag. 2023, 18, 69–79.

- Johansson, R.; Andersson, R.; Dernevik, M. Enabling Tomorrow’s Road Vehicles by Service-Oriented Platform Patterns. In Proceedings of the ERTS 2018, Toulouse, France, 31 January–2 February 2018.

- Ioana, A.; Korodi, A.; Silea, I. Automotive IoT Ethernet-Based Communication Technologies Applied in a V2X Context via a Multi-Protocol Gateway. Sensors 2022, 22, 6382.

- Seyler, J.R.; Streichert, T.; Glaß, M.; Navet, N.; Teich, J. Formal Analysis of the Startup Delay of SOME/IP Service Discovery. In Proceedings of the 2015 Design, Automation & Test in Europe Conference & Exhibition (DATE), Grenoble, France, 9–13 March 2015.

- Gopu, G.L.; Kavitha, K.V.; Joy, J. Service Oriented Architecture based connectivity of automotive ECUs. In Proceedings of the 2016 International Conference on Circuit, Power and Computing Technologies (ICCPCT), Nagercoil, India, 18–19 March 2016.

- Nichitelea, T.-C.; Unguritu, M.-G. Automotive Ethernet Applications Using Scalable Service-Oriented Middleware over IP: Service Discovery. In Proceedings of the 2019 24th International Conference on Methods and Models in Automation and Robotics (MMAR), Miedzyzdroje, Poland, 26–29 August 2019.

- Iorio, M.; Reineri, M.; Risso, F.; Sisto, R.; Valenza, F. Securing SOME/IP for In-Vehicle Service Protection. IEEE Trans. Veh. Technol. 2020, 69, 13450–13466.

- Alkhatib, N.; Ghauch, H.; Danger, J.-L. SOME/IP Intrusion Detection using Deep. In Proceedings of the 2021 IEEE 12th Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON), Vancouver, BC, Canada, 27–30 October 2021.

- Choi, Y.; Seung, J.L.; Yoon, S.K. Analysis of problems in big data transfer using SOME/IP-TP. Turk. Online J. Qual. Inq. 2021, 12, 6617.

- Zelle, D.; Lauser, T.; Kern, D.; Krauß, C. Analyzing and securing SOME/IP automotive services with formal and practical methods. In Proceedings of the 16th International Conference on Availability, Reliability and Security, Vienna, Austria, 17–20 August 2021.

- Ibraheem, O.W.; Irwansyah, A.; Hagemeyer, J.; Porrmann, M.; Rueckert, U. A Resource-Efficient Multi-Camera GigE Vision IP Core for Embedded Vision Processing Platforms. In Proceedings of the 2015 International Conference on ReConFigurable Computing and FPGAs (ReConFig), Riviera Maya, Mexico, 7–9 December 2015.

- Brahim, M.B.; Mir, Z.H.; Znaidi, W.; Filali, F.; Hamdi, N. QoS-Aware Video Transmission over Hybrid Wireless Network for Connected Vehicles. IEEE Access 2017, 5, 8313–8323.

- Aliyu, A.; Abdullah, A.H.; Kaiwartya, O.; Cao, Y.; Lloret, J.; Aslam, N.; Joda, U.M. Towards Video Streaming in IoT Environments: Vehicular Communication Perspective. Comput. Commun. 2018, 118, 93–119.

- Abou-zeid, H.; Pervez, F.; Adioyi, A.; Aljlayl, M.; Yanikomeroglu, H. Cellular V2X Transmission for Connected and Autonomous Vehicles Standardization, Applications, and Enabling Technologies. IEEE Consum. Electron. Mag. 2019, 8, 91–98.

- Khan, H.; Samarakoon, S.; Bennis, M. Enhancing Video Streaming in Vehicular Networks via Resource Slicing. IEEE Trans. Veh. Technol. 2020, 69, 3513–3522.

- Yu, Y.; Lee, S. Remote Driving Control with Real-Time Video Streaming over Wireless Networks: Design and Evaluation. IEEE Access 2022, 10, 64920–64932.

- Go, K.; Shin, D. Resilient Raw Format Live Video Streaming Framework for an Automated Driving System on an Ethernet-Based In-Vehicle Network. IEEE Access 2023, 11, 144364–144376.

- COVESA. Github Vsomeip. COVESA, 29 November 2023. Available online: https://github.com/COVESA/vsomeip (accessed on 25 September 2025).

- Völker, L. Scalable Service-Oriented MiddlewarE over IP (SOME/IP). Available online: https://some-ip.com/papers.shtml (accessed on 25 September 2025).

- Bradski, G.; Kaehler, A. Learning OpenCV: Computer Vision with the OpenCV Library; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2008.

- Google LLC. Protocol Buffers (Protobuf). Google LLC. Available online: https://protobuf.dev/ (accessed on 25 September 2025).

- Lohmann, N. JSON for Modern C++. 1 May 2022. Available online: https://json.nlohmann.me/ (accessed on 25 September 2025).

- OpenCV. OpenCV Documentation. OpenCV. Available online: https://docs.opencv.org/4.9.0/ (accessed on 25 September 2025).

- GKU Tech. GKU D600 4K/2.5K WiFi Dash Cam GKU Tech. Available online: https://www.gkutech.com/en-eu/products/gku-d600-4k-2-5k-wifi-dash-cam (accessed on 12 November 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).