Abstract

Artificial intelligence (AI) is widely expected to transform healthcare, yet its adoption in clinical practice remains limited. This paper examines the perspectives of Italian clinicians and medical physicists on the drivers of and barriers to AI use. Using an online survey of healthcare professionals across different domains, we find that efficiency gains, such as reducing repetitive tasks and accelerating diagnostics, are the strongest incentives for adoption. However, low trust in AI systems, limited explainability, and the limited availability of AI tools are major obstacles. Respondents emphasized that AI should augment, not replace, medical expertise, and called for participatory development processes in which clinicians are actively involved in the design and validation of decision support tools. At the organizational level, the adoption of AI tools is facilitated by innovation-oriented leadership and sufficient resources, while conservative management and economic constraints hinder implementation. Awareness of regulatory frameworks, including the EU AI Act, is moderate, and many clinicians express the need for targeted training to support safe integration. Our findings suggest that the successful adoption of AI in healthcare will depend on building trust through transparency, clarifying legal responsibilities, and fostering organizational cultures that support collaboration between humans and AI. The role of AI in medicine is therefore best understood as a complement to clinical judgment, rather than a replacement.

Keywords:

AI; healthcare; participatory design; AI reliability; robustness; explainability; perceptions; trust

1. Introduction

Healthcare has always been an area open to technological innovation, and AI is no exception [,]. For several decades, there has been extensive discussion of telemedicine and individualized therapy, and the use of AI for personalized medicine and individual treatment plans is now within reach []. Medical AI tools can help personalize care, accelerate drug and vaccine discovery, increase diagnostic precision, detect disease early, support medical research and data analysis, reduce the time doctors spend on routine tasks, and improve doctor–patient relationships [,,,,,]. However, despite these potentially substantial gains, the use of AI in hospitals remains low because of four major types of barriers: algorithmic, social and organizational, institutional, and legal.

Algorithmic barriers refer to the reproduction of inequalities due to biased training data and unfair modelling. Obermeyer et al. [] show how an AI algorithm used to make diagnoses assigned Black patients the same level of health risk as White patients even though they were suffering from significantly worse health conditions.

Social and organizational barriers refer to low trust in AI, which has several causes, such as a fear of job displacement, challenges to professional authority and prestige, a lack of collaboration between AI algorithm developers and medical doctors, the low availability of validation data, and unfulfilled expectations such as the expectation of a reduced workload [,]. Moreover, a generally positive attitude towards AI does not automatically translate into a willingness to adopt it []; rather, it is mediated by personal sociotechnical imaginaries [], as well as by organizational settings and institutional practices [].

Institutional barriers refer to the management and technological resources available within an organization. These resources may either be unavailable or be available but not promoted by management, which prevents their effective use [,].

Legal barriers point to privacy concerns and to the legal implications of AI-assisted diagnostics. They concern the collection and use of data: there is a lack of clear rules on the ownership of medical data and the right to use them, and on the sharing of responsibility between the developer of the algorithm and the doctor who uses it. In addition, it is difficult to use medical data to train machine learning algorithms without violating the right to privacy [].

In this context, the “European Union’s Artificial Intelligence Act” (AI Act (OJ L, 2024/1689, 12.07.2024, ELI: http://data.europa.eu/eli/reg/2024/1689/oj) accessed on 22 September 2025) provides what are de facto the most comprehensive regulatory guidelines governing the life cycle of AI systems. It sets out obligations for providers of “high-risk” (Art. 6, Annex II) AI devices. It focuses mainly on the safety of AI systems, but it is complemented by a revised version of the Product Liability Directive (PLD), which defines the liability framework in the case of “defectiveness” of devices. According to the revised PLD, providers (developers, manufacturers, and other actors in the production chain) are primarily responsible for the design, safety, and transparency of the AI system itself, and can therefore be held accountable for products that do not meet regulatory requirements and for defects in the AI products themselves. Deployers (healthcare professionals and healthcare organizations) are responsible for the safe and effective implementation of AI devices in their facilities and for professional malpractice in using the AI system. This includes failing to exercise clinical judgment, ignoring clear alarms, or using improper data input. However, one of the major shortcomings of the AI Act is that it has not been accompanied by an official “Artificial Intelligence Liability Directive” (AILD), which leaves fault-based liability to individual national laws that may differ significantly in scope and coverage (e.g., the Italian “Gelli Bianco” law regulating professional responsibility (GU L, 2017/24, 08.03.2017, ELI: https://www.gazzettaufficiale.it/eli/id/2017/03/17/17G00041/s) accessed on 22 September 2025) [].

In this paper, we focus on the social and organizational barriers that could prevent the adoption of AI. The low adoption rates of AI in healthcare motivate an important research question on the extent of the actual use of AI systems in the daily practice of medical doctors. To answer this question, we designed an original survey instrument that we administered online to a sample of medical physicists and clinicians working in Italian hospitals in different areas (Radiology, Nuclear Medicine, Neurology, Radiotherapy, and Oncology).

The survey required respondents to share their opinions and perspectives on AI tools (individual level) and to interpret the attitude of their institutions (organizational level) towards AI adoption and use. One main finding that emerges from the analysis of the empirical data is the need for a participatory approach to AI development with medical professionals asking to be actively involved either in the development or in the integration of AI tools in clinical practice.

2. Materials and Methods

We developed an original survey using Qualtrics. We administered it online between January and July 2024 to a sample of clinicians and medical physicists working in Italian hospitals, whom we reached with the help of their respective professional associations operating at the national level (see the “Acknowledgments” section for the full list). We distributed the online questionnaire to specific communities of Italian physicians and medical physicists specializing in imaging, where technological adoption has historically been faster than in other fields []. Respondents vary in age and years of work experience, from young trainees to experienced doctors.

An important caveat concerns the non-representativeness of our sample. This study was conducted with an exploratory goal, as little empirical evidence has been gathered so far. We opted for a snowball sampling technique, a non-probability sampling method that does not aim to collect a representative sample of the population. Our final sample consisted of 193 respondents, 40% female and 60% male, with a mean age of 47 (SD = 14) years. In the data analysis for this work, we excluded respondents who did not answer all the compulsory questions of the questionnaire.

2.1. Structure of the Survey

The survey was organized into five main sections: (1) “Perceptions, attitudes and awareness of AI”, (2) “Socio-technical imaginaries of AI”, (3) “Adoption of AI tools in hospitals”, (4) “AI use in the clinical practice”, (5) “AI potential adoption in the clinical practice”. In the first section, respondents were asked to rate their familiarity with AI and report their expectations and knowledge about AI-based tools and the laws that regulate AI applications. Section 2 elicited respondents’ sociotechnical imaginaries of AI. Section 3 aimed to investigate the extent to which AI was present in the hospital where the respondent worked and the perceived obstacles and barriers that limit the use of AI. Section 4 asked about respondents’ actual use of AI tools in their clinical practice, and the factors driving the decision to use or not to use AI. Section 5 posed a series of questions on the likelihood of the potential use of hypothetical AI tools in a clinical environment.

At the beginning of the questionnaire, we provided respondents with the following definition of artificial intelligence: “Artificial Intelligence (AI) refers to computer systems that are able to perform specific tasks and make decisions without being provided with explicit instructions. These systems can learn from data and are able to make predictions independently of human intervention”. Providing this definition of AI ensured comparability of responses across respondents independently of their previous AI knowledge and the specific AI instruments clinicians may have used. It also excluded answers related to generative AI systems.

The survey contained both mandatory (mostly multiple choice, also in the form of grouped radio buttons or sliders) and optional open-ended questions.

2.2. Descriptive Analysis of Respondents

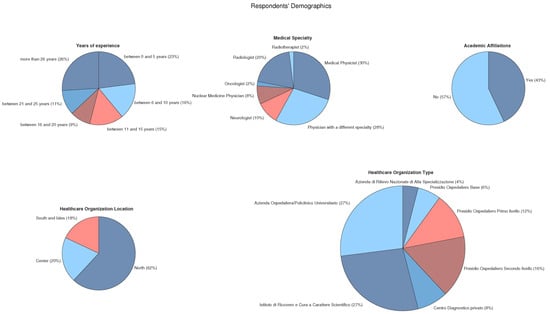

Together, medical physicists and radiologists represented more than half of the sample, since these are the two main fields where AI technologies are either widely used or at least discussed [,] and professionals in these fields are specialized in imaging, where technological adoption has historically been faster than in other fields []. The sample is characterized by a predominance of respondents working for institutions focused on clinical research, i.e., “Azienda Ospedaliera/Policlinico Universitario” (University Hospital) and “Istituto di Ricovero e Cura a Carattere Scientifico” (Scientific Institute for Research, Hospitalization and Healthcare), located in the northern part of Italy. Other relevant statistics are represented in Figure 1.

Figure 1.

Demographic details. Top row: characteristics of respondents who completed the questionnaire. Bottom row: features of respondents’ affiliated institutes. Medical institution categories are as follows: “Azienda di Rilievo Nazionale di Alta Specializzazione (ARNAS)” (Nationally Significant Highly Specialized Hospital), “Presidio Ospedaliero Base” (Basic Hospital Facility), “Presidio Ospedaliero di Primo Livello” (First-Level Hospital Facility), “Presidio Ospedaliero di Secondo Livello” (Second-Level Hospital Facility), “Centro Diagnostico Privato” (Privately Operated Diagnostic Clinic), “Istituto di Ricovero e Cura a Carattere Scientifico (IRCCS)” (Scientific Institute for Research, Hospitalization and Healthcare), and “Azienda Ospedaliera/Policlinico Universitario” (University Hospital).

2.3. Analysis of the Survey Responses

We used bar plots to show the distributions of the responses to the most relevant questions and investigate the emerging data patterns or underlying motivations. Firstly, we explored the imaginaries and attitudes of individual doctors. Secondly, we described the guidelines that medical institutions consider when adopting AI as well as management and related obligations at the organizational level.

3. Results

3.1. Drivers of and Barriers to the Use of AI at the Individual Level

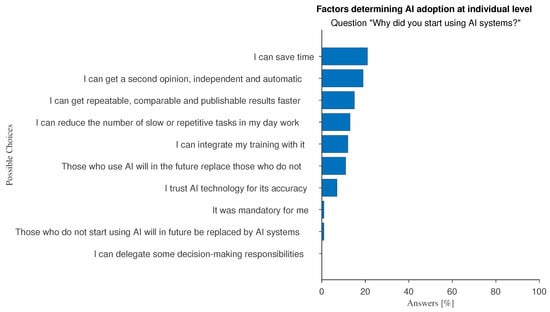

The survey results show that respondents value AI-powered devices for their efficiency, recognizing that AI can help speed up their workflow and reduce repetitive and slow tasks (Figure 2, e.g., answers such as “I can save time”, “I can get repeatable, comparable and publishable results faster”). The optional feedback that respondents could leave in several open-ended questions reinforces these findings: AI is seen as a tool to standardize data, results, and reports. One respondent made an interesting point, reporting that the aim is “to provide patients with the best state-of-the-art technology”.

Figure 2.

Answers to the multiple-choice question: “Why did you start using AI systems?”.

We investigated whether there are significant differences between medical physicists, who have a more technical background, and all other specialists. The answer “I have not received sufficient training” was chosen by 8% of medical physicists and 13% of other specialists. The option “it is not available in the facility where I work” was chosen by 12% of medical physicists and 40% of other specialists. Factors favoring AI adoption at the individual level also differ markedly between the two groups: “Those who use AI will in the future replace those who do not” (26% vs. 12%) and “I can reduce the number of slow or repetitive tasks in my day work” (37% vs. 17%).
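As an illustration of how a difference between two such percentages could be checked formally, the following minimal sketch implements a standard two-sided two-proportion z-test. It is not the analysis performed in this study: the function name is hypothetical, and the counts in the usage line are placeholders, since the group sizes behind the reported percentages are not given here.

```python
import math

def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for the difference between two independent proportions."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled proportion under the null hypothesis that both groups share one rate.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("test is undefined when the pooled proportion is 0 or 1")
    z = (p_a - p_b) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Placeholder counts, NOT taken from the survey: 12 of 100 vs. 40 of 100.
z, p = two_proportion_z_test(12, 100, 40, 100)
print(f"z = {z:.2f}, p = {p:.3g}")
```

With small groups, an exact test (e.g., Fisher's exact test) would usually be preferable to this normal approximation.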

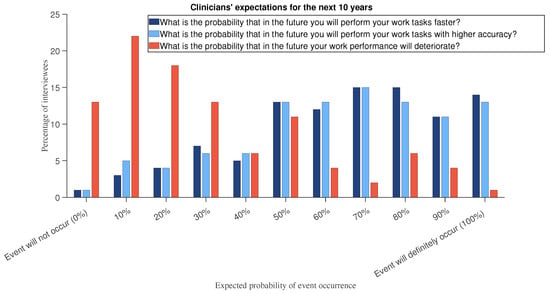

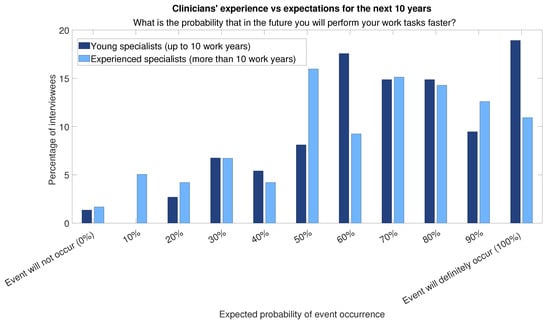

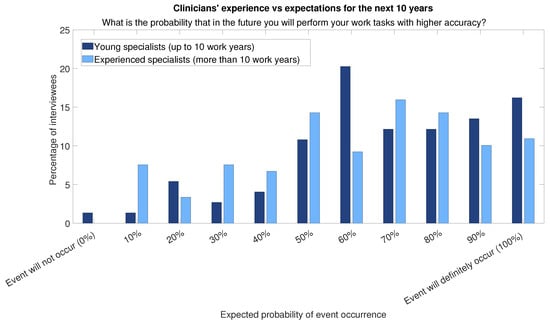

Furthermore, the relevance of AI learning systems is expected to increase if AI in the workplace continues to grow at a fast pace and becomes pervasive (Figure 3). Young doctors (10 years of work experience or less) show more optimism than experienced professionals (more than 10 years of work experience): their histograms in Figure 4 and Figure 5 are shifted towards higher values.

Figure 3.

In a hypothetical scenario question, we asked respondents to assume that “the presence of AI in your workplace will continue to grow at a fast pace to become pervasive”. Then we asked them to answer the following questions: “What is the probability that in the future you will perform your work tasks faster?”, “What is the probability that in the future you will perform your work tasks with higher accuracy?”, and “What is the probability that in the future your work performance will deteriorate?”.

Figure 4.

Answers to the question “What is the probability that in the future you will perform your work tasks faster?”, split into two groups of respondents: those with more than 10 years of experience and those with 10 years of experience or less.

Figure 5.

Answers to the question “What is the probability that in the future you will perform your work tasks with higher accuracy?”, split into two groups of respondents: those with more than 10 years of experience and those with 10 years of experience or less.
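The split by work experience used in Figures 4 and 5 can be sketched as follows. This is only an illustrative reconstruction: it assumes that each answer is a probability on a 0 to 100 scale and that bins are 20 points wide, neither of which is stated in the text, and the function and group names are hypothetical.

```python
from collections import Counter

def histogram_by_experience(responses, threshold_years=10, bin_width=20):
    """Bin probability answers (assumed 0-100) separately for two experience groups.

    `responses` is an iterable of (years_of_experience, probability) pairs.
    """
    groups = {"less_experienced": Counter(), "more_experienced": Counter()}
    for years, probability in responses:
        label = "less_experienced" if years <= threshold_years else "more_experienced"
        # An answer of exactly 100 is placed in the top bin rather than a bin of its own.
        bin_start = min(int(probability // bin_width) * bin_width, 100 - bin_width)
        groups[label][bin_start] += 1
    return groups

# Placeholder answers, NOT survey data.
example = [(3, 90), (10, 100), (25, 40), (30, 15)]
print(histogram_by_experience(example))
```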

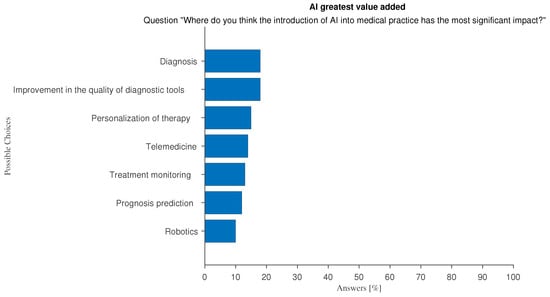

Regarding the perceived main areas of impact of AI (Figure 6), no dominant area emerges. Although respondents offered almost evenly distributed answers across the proposed categories, the first two refer to the diagnostic field (“Diagnosis”, “Improvement in the quality of the diagnostic tools”), which suggests that they believe that AI’s greatest value is in improving the accuracy of diagnosis prediction and early detection. A key factor influencing the use of AI is trust in AI technology, as shown by responses such as “I can get a second opinion, independent and automatic” and “I trust AI technology for its accuracy”.

Figure 6.

Answers to the multiple-choice question: “Where do you think the introduction of AI into medical practice has the most significant impact?”.

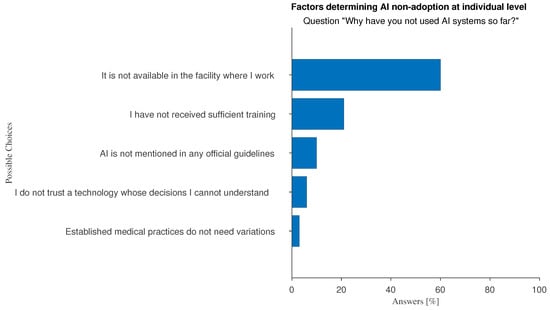

The main perceived barrier to AI adoption is simply a matter of availability: as shown in Figure 7, the most frequent answer is that “[AI] is not available in the facility where I work”. Another important reason is that doctors do not feel they have the necessary AI skills.

Figure 7.

Answers to the multiple-choice question: “Why have you not used AI systems so far?”.

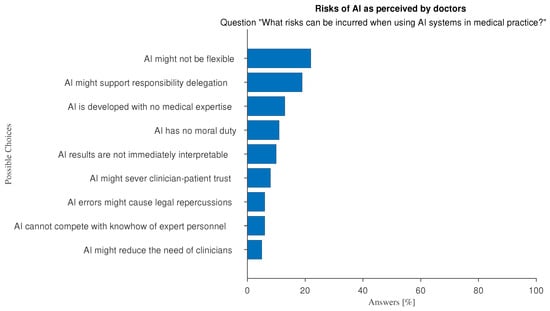

The risks that doctors perceive in the use of AI can be summarized in three main groups (Figure 8): doctors’ competences cannot be matched by AI (e.g., “AI cannot compete with the knowhow of expert personnel”), a participatory design approach is needed (e.g., “AI is developed without any cognizance of clinical practice”; “AI results are not interpretable”), and AI cannot be considered a moral agent (e.g., “AI has no moral duty”; “AI might sever clinician-patient trust”).

Figure 8.

Answers to the multiple-choice question: “What risks can be incurred when using AI systems in medical practice?”.

3.2. Drivers of and Barriers to the Use of AI at the Organizational Level

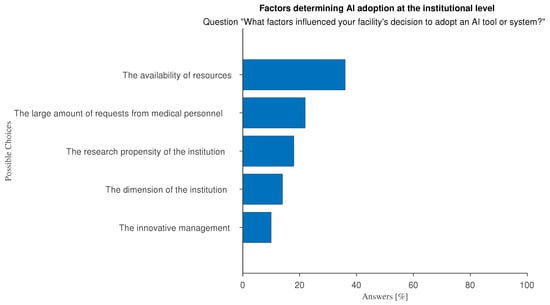

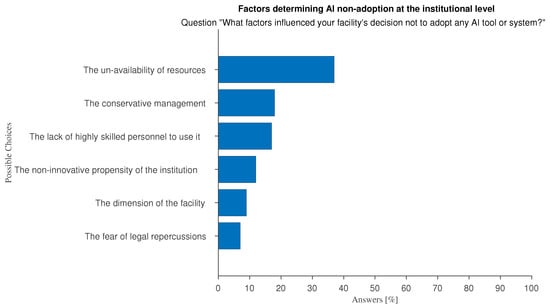

At the organizational level, the main perceived barriers to adopting AI relate to insufficient economic resources and management attitude (Figure 9 and Figure 10): large hospitals with innovation-driven management tend to favor the adoption and use of AI while AI tools are not effectively supported in smaller, less wealthy hospitals.

Figure 9.

Answers to the multiple-choice question: “In your opinion, what factors influenced your organization’s decision to adopt AI systems or tools?”.

Figure 10.

Answers to “In your opinion, what factors influenced your organization’s decision not to adopt AI systems or tools?”.

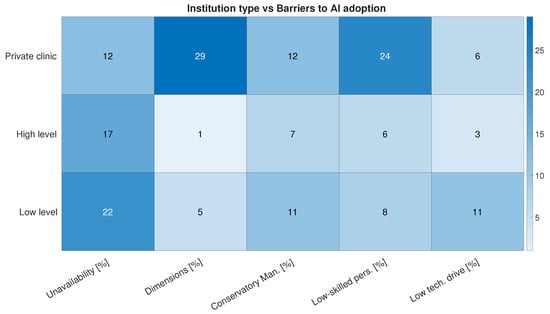

We investigated how these perceptions are correlated with the type of institution doctors work in. Some interesting aspects emerge: the unavailability of resources is by far the primary barrier in public hospitals (with both high and low levels of specialization or research propensity). In private clinics, by contrast, limited organizational size and a lack of skilled personnel are the most frequently perceived negative factors (Figure 11).

Figure 11.

Most frequent answers to the question “In your opinion, what factors influenced your organization’s decision not to adopt AI systems or tools?”, split into three groups of respondents: those working in private medical diagnostic centers, those working in high-level institutions (“Azienda di Rilievo Nazionale di Alta Specializzazione (ARNAS)”, “Istituto di Ricovero e Cura a Carattere Scientifico (IRCCS)”, or “Azienda Ospedaliera/Policlinico Universitario”), and those working in low-level institutions (“Presidio Ospedaliero Base”, “Presidio Ospedaliero di Primo Livello”, or “Presidio Ospedaliero di Secondo Livello”).

Regarding the knowledge of AI regulatory frameworks, we find a moderate lack of awareness: about 50% of the respondents stated that they were unfamiliar with the AI Act and 20% were unaware of the World Health Organization (WHO) 2021 guidelines for the use of AI in medicine.

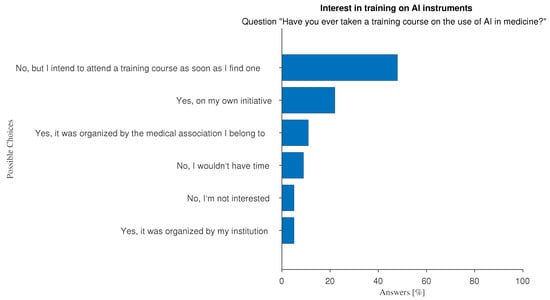

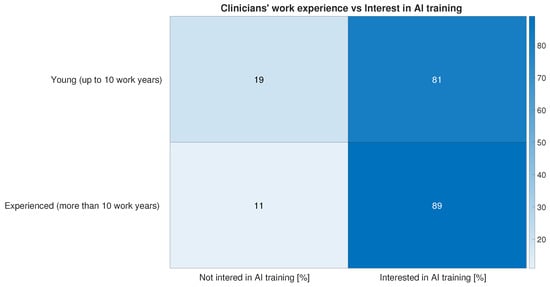

In terms of AI literacy, 13% of survey respondents cite the opportunity to take an AI training course as part of their continuing professional development as a key factor in promoting AI adoption (Figure 2). Moreover, 38% of our sample say they have attended one or more training courses on the use of AI in medicine (Figure 12). The attitude towards training on AI-related subjects and use is not significantly different between young and experienced doctors, as shown in Figure 13.

Figure 12.

Answers to question “Have you ever taken training courses on the use of AI in medicine?”.

Figure 13.

Answers to the question “Have you ever taken training courses on the use of AI in medicine?”, grouped into two categories (“Not interested in AI training” and “Interested in AI training”) and plotted for two groups of respondents: those with more than 10 years of experience and those with 10 years of experience or less.

However, contrary to what is stated in the AI Act (Art. 4), which requires healthcare organizations to ensure the AI literacy of their staff, fewer than half of these courses have been organized by the hospitals where the survey respondents work.

Current EU law, which combines the revised “Product Liability Directive” (PLD) and national legislation, regulates liability in cases of harm to patients caused by AI systems. However, while it is clear in which cases AI device providers are responsible, more nuanced cases can arise when malpractice by healthcare organizations or personnel is involved.

An interesting finding from our survey relates to how responsibility is shared between clinicians and AI in the event of litigation due to legal issues related to AI (Table 1). Although no clear consensus emerged, most of the respondents assigned the primary responsibility to doctors who used AI or to AI developers. However, 51% of respondents (96, obtained by summing the contributions of the rows where more than one role is listed) selected more than one option, favoring shared responsibility between doctors, AI providers, and hospitals where AI is used.

Table 1.

Answers to the multiple-choice question: “Who should be responsible for any litigation due to legal problems caused by AI?”. Answers are split by the medical specialty of the respondents. For clarity, responsibility roles are abbreviated as follows: “Companies that develop the AIs” (“AI Providers”); “Hospitals where AI is used” (“AI Deployers”); “Clinicians in charge as AI user” (“AI users”); “Patients who agreed to the use of AI” (“Patients”). “AI” is omitted when more than one role is involved. Medical professions are abbreviated as follows: “Medical Physicist” (“Med. Phys.”); “Radiologist” (“Radiol.”); “Nuclear Medicine Physician” (“Nucl. Med.”); “Neurologist” (“Neurol.”); “Oncologist” (“Oncol.”); “Radiotherapist” (“Radioth.”); and “Doctor with other specialty” (“Other spec.”).

An additional consideration about shared responsibility can be drawn from Table 1: providers appear in the most frequently selected shared-responsibility patterns, while patients are mostly excluded from this kind of liability.
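The count of respondents favoring shared responsibility is obtained by summing the rows of Table 1 in which more than one role is listed. A minimal sketch of this tally for a multiple-choice question is given below; the function name and the example answers are hypothetical placeholders, not the survey data.

```python
from collections import Counter

def tally_responsibility(answers):
    """Count each combination of selected roles and the share of multi-role answers.

    `answers` is a list in which each element is the set of roles one respondent selected.
    """
    # frozenset makes each combination hashable and independent of selection order.
    patterns = Counter(frozenset(selected) for selected in answers)
    shared = sum(count for roles, count in patterns.items() if len(roles) > 1)
    return patterns, shared, shared / len(answers)

# Placeholder answers, NOT survey data.
example = [
    {"Providers"},
    {"Providers", "Users"},
    {"Users", "Providers"},
    {"Deployers"},
]
patterns, shared, share = tally_responsibility(example)
print(shared, f"{share:.0%}")
```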

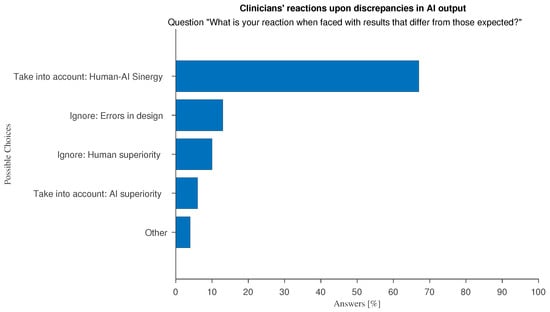

This shared responsibility affects how AI tools are used to support decision-making processes. This raises an important question: how do medical professionals respond when AI-generated results contradict their diagnosis? To answer this question, we asked the respondents about their reaction when faced with unexpected results from AI systems. As shown in Figure 14, most respondents tend to actively investigate the reasons behind the conflicting outputs and decide whether to revise their opinions or disregard the AI output. This attitude is consistent among all doctors regardless of the level of familiarity with AI in their work environment.

Figure 14.

Answers to “What is your reaction when faced with results that differ from those expected?”.

An analysis of the optional free-text feedback associated with these answers shows that the majority of “Other” responses converge on the idea that there is no generally correct answer, and that the underlying causes of discrepancies always deserve to be explored. Another interesting discussion point can be summarized as follows: “I retrace the processing steps that led to the result, for both human and AI methods. If the method is the same, I prioritize the AI method to understand where the human approach has failed and then adjust the human method accordingly”.

Several provisions of the AI Act (Articles 14 and 26 and Recital 27) recommend that high-risk AI systems be designed to enable effective human supervision in order to minimize risks to health, safety, or fundamental rights, even under foreseeable misuse. This emphasizes the importance of transparency and explainability in AI systems.

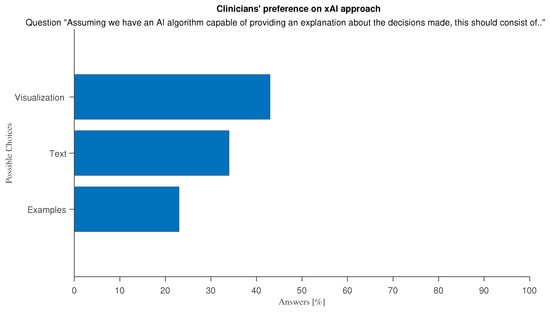

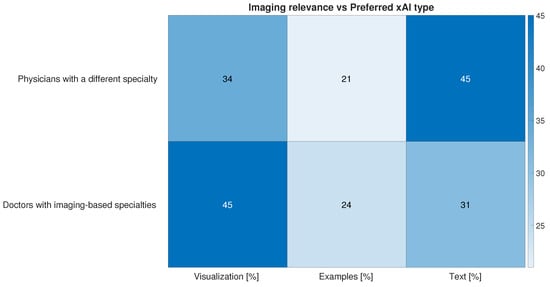

The term “Explainable AI” (xAI) refers to the techniques that aim to make AI tools more transparent to users by providing insights into their internal decision process. This is of the utmost importance in a medical environment, where the final users have to make critical decisions based on the output of automated tools and must therefore be made aware of the internal decision-making process. Explainability is also a way to increase trust in AI tools []. With an eye to software development, we asked doctors which method of explainability would be the most effective. The optional question “Assuming we have an AI algorithm capable of providing an explanation about the decision made, this should consist of […]” let respondents choose among traditional xAI approaches: “Text: AI explains in natural language why it made that decision”, “Visualization: AI shows the variables/regions of the image that the algorithm considered most important in its decision”, and “Examples: AI explains the decision by presenting similar cases and/or counter-examples”. Almost all clinicians selected more than one answer. A slight preference is expressed for the visual approach to xAI (Figure 15). This may be due to an imaging-based bias in our sample, which is made up predominantly of doctors who are used to reporting on imaging data, a medical field where by-example approaches do not always apply. To explore this hypothesis further, we divided respondents into two categories: “Doctors with imaging-based specialties”, comprising Medical Physicists, Radiologists, Nuclear Medicine Physicians, Neurologists, Oncologists, and Radiotherapists, and physicians with a different specialty. Differences in xAI approach preferences are well marked and support our assumption that visualization is the preferred explanation approach for the study of imaging data, while for other tasks natural language explanations are the best fit (Figure 16).

Figure 15.

Answers to optional question “Assuming we have an AI algorithm capable of providing an explanation about the decision made, this should consist of …”.

Figure 16.

Answers to the optional question “Assuming we have an AI algorithm capable of providing an explanation about the decision made, this should consist of …”, split into two groups: physicians with a different specialty and “Doctors with imaging-based specialties”, which contains respondents from all other specialties.

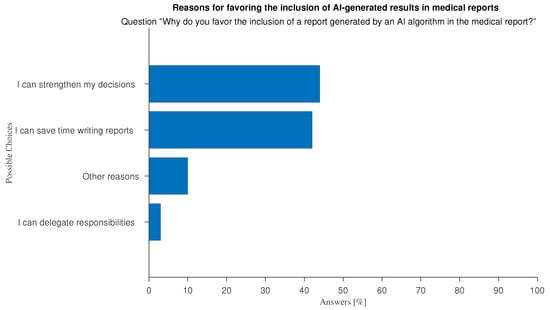

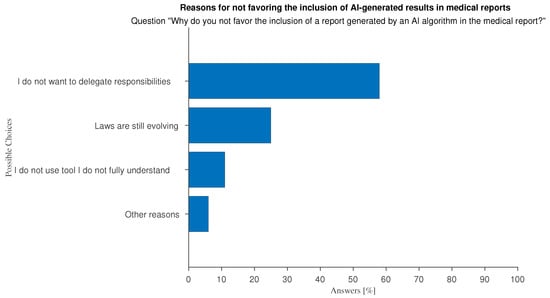

The ethical use of automated systems requires that AI-generated predictions are ultimately used by humans. There is an ongoing debate on whether AI recommendations should be included in medical reports. Reporting is often the final product of the medical profession, particularly with regard to imaging-related tasks. More respondents are inclined to acknowledge the use of AI in their reports (37%) than not (23%), although the largest group is unsure (40%). Motivations for the positive view are almost equally divided between “I can save time writing reports” and “I can strengthen my decisions” (see Figure 17). Negative responses stem from uncertainty surrounding AI regulations on liability and legal responsibility, and from the need to emphasize the importance of human operators (see Figure 18).

Figure 17.

Answers to the question “Why do you welcome the inclusion of AI-generated results in the report to be given to your patients?”.

Figure 18.

Answers to the question “Why do you not favor the inclusion of AI-generated results in the report to be given to your patients?”.

The free-text feedback that we collected on the inclusion of AI-generated predictions in medical reports indicates supplementary arguments, both for and against, that were not explicitly covered by the questionnaire. Among the pros, the most common is the expected clarity and exhaustiveness of AI results. Doctors say they favor the inclusion “to make patients aware of the decisions made and the reasons behind them”, “To make my report more understandable to a general audience”, and because of the “Greater completeness” of the AI results. The con most widely shared by respondents who offered open-ended comments is the impoverishment of their profession: doctors fear that a growing habit of relying on AI may dehumanize medicine and turn it into a computer science discipline, as illustrated by “using AI can bypass responsibility and know-how, slowly leading to learning failure and poor patient management”, “doctors shall keep the responsibility of the correct practice”, and “the de-personalization of the doctor–patient relationship behind an AI interface reduces the quality of the interaction (though not necessarily the trust), creating a mechanistic dynamic in which the doctor might be perceived as an electronic engineer”.

4. Discussion

The results of our survey show that doctors favor a participatory approach to AI design in which medical experts can interact with software developers at the stages of requirement specification, algorithm development, result presentation, and output validation. The development process could involve human decision-makers in data preparation for AI training, in output interpretation, or in both. Support in preparing data for training AI models can be provided by selecting and sorting the data or by interactively and iteratively annotating them (“active learning”), while correcting or ranking model predictions can improve the interpretation of model outputs []. In the most relevant use case for our sample (using AI to support imaging data interpretation or manipulation), the final decision of medical doctors on whether and how to use an auxiliary AI tool will be based on several additional factors that are difficult to quantify: patients’ behaviors, self-assessments, the feelings and suggestions of family members, as well as information unavailable during AI training (e.g., due to privacy reasons or the patients’ medical history). Therefore, a crucial goal is to develop AI tools that can provide clear, effective, and flexible recommendations to inform the complex process of medical decision-making.
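To make the notion of interactive annotation more concrete, the sketch below shows uncertainty sampling, one common active-learning query strategy, in which the cases the model is least sure about are sent to the clinician for labeling first. This is an illustrative assumption on our part and not necessarily the strategy described in the cited work; the function names, case identifiers, and probabilities are hypothetical.

```python
import math

def entropy(probabilities):
    """Shannon entropy of a predicted class distribution (higher means less certain)."""
    return -sum(p * math.log(p) for p in probabilities if p > 0)

def select_for_annotation(predictions, budget):
    """Return the IDs of the `budget` unlabeled cases with the most uncertain predictions.

    `predictions` maps a case ID to the model's predicted class probabilities.
    """
    ranked = sorted(
        predictions,
        key=lambda case_id: entropy(predictions[case_id]),
        reverse=True,
    )
    return ranked[:budget]

# Placeholder model outputs for three unlabeled scans, NOT real data.
example = {
    "scan_a": [0.50, 0.50],
    "scan_b": [0.99, 0.01],
    "scan_c": [0.70, 0.30],
}
print(select_for_annotation(example, budget=2))
```

In an iterative loop, the clinician would label the selected cases, the model would be retrained on the enlarged labeled set, and the selection step would be repeated.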

The demand for participation in the design and development of AI tools, our novel finding, is in line with a key objective of the EU’s regulatory framework, which promotes the development of AI systems “in a way that can be appropriately controlled and overseen by humans” (AI Act, Recital 27). For example, AI Act Article 4 requires healthcare organizations to ensure that their staff are trained in AI. Based on their risk and role, Italian institutions and healthcare associations have started to promote several actions to support the safe and responsible use of AI systems [,]. Doctors are skeptical of AI tools that are not easily interpretable and justifiable. They want to be involved in the design, development, and validation processes: the specialized medical knowledge they provide addresses specific problems and responds to distinct needs.

Therefore, promoting a participatory approach could help overcome the conflicting attitudes of doctors. Some respondents appreciate the time-saving benefits of AI in making diagnoses and prognoses, while others express concerns about the time needed to learn the basics of how AI tools work and how to interpret the explanations they provide. The results show the emergence of a “time paradox” that characterizes the more complex, AI-powered environment in which doctors operate. This complexity is not always reflected in the narrative of performance, time, and efficiency gains, since more time is required for training and learning.

The sociotechnical perspective supporting a participatory approach to AI allows us to recognize that system performance depends on the interplay between technical design and social dynamics [,,] and to consider the agency of individuals and institutions [].

5. Conclusions

The results of the survey that we conducted among Italian healthcare professionals show that there are several obstacles to the effective use of AI in clinical practice at the level of institutions (e.g., financial resources, management attitude, personnel training), developers (e.g., the transparency and explainability of AI software, dataset bias), and lawmakers (e.g., missing regulations on device misuse, the assignment of responsibility). Clinicians, as the end users of AI, also face obstacles (e.g., a lack of trust, participation, supervision, and reliability). While saving time is important, efficiency must not be prioritized at the expense of accuracy and ethics. AI results and outputs have to be reliable and interpretable to help doctors make the most informed decisions. Clinicians consider a participatory approach to AI to be the most effective way of supporting AI adoption at the individual, organizational, and institutional levels. Developing a more participatory design could promote fairer and more democratic governance of AI technologies, especially in the medical field, where both technical and medical expertise are essential.

It is important to point out some limitations of this work. First, as already highlighted in the section on the methodology, our sample is not representative. Since there are no previous studies in this field in Italy, we opted for an exploratory approach without attempting to generalize the results. Second, we distributed the questionnaire through professional medical associations. The selection of associations deliberately favored medical specializations related to imaging, as it is well known in the literature that these are the specializations (including radiology) in which technological innovations are first tested and deployed. Another limitation of the study concerns legal barriers. Although the AI Act applies to all EU countries, each country ratifies and legislates with specific reference to its own legal system. Our concluding remarks therefore refer exclusively to the Italian context, while future research should favor a comparative approach to these issues. Overall, our study represents a first step towards the development and validation of research tools to be implemented in the future on representative samples of Italian doctors and others. We welcome suggestions for further research into how AI technologies could be integrated into existing social and organizational contexts, rather than how they could be used [].

Author Contributions

Conceptualization, L.S., C.B., A.C. and A.R.; methodology, L.S., C.B., F.S. and F.L.; validation, L.S. and C.B.; formal analysis, L.S., C.B., F.S. and F.L.; resources, A.R., A.C. and L.S.; data curation, L.S., C.B., F.S. and F.L.; writing—original draft preparation, C.B. and F.S.; writing—review and editing, A.R., F.L., C.B. and L.S.; visualization, F.S. and F.L.; supervision and project administration, A.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research has been carried out in the framework of the projects PNRR M4C2 I1.3, PE00000013—FAIR—“Future Artificial Intelligence Research”—Spoke 8 “Pervasive AI” and PNRR—M4C2—I1.4, CN00000013—ICSC—“Centro Nazionale di Ricerca in High Performance Computing, Big Data and Quantum Computing”—Spoke 8 “In Silico medicine and Omics Data”, both funded by the European Commission under the NextGeneration EU programme, and of the Artificial Intelligence in Medicine (AIM) project, funded by INFN-CSN5.

Institutional Review Board Statement

This study was approved by the Bioethics Committee of the University of Bologna (IT), Protocol NR. 0075993; Date 13 December 2023.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Acknowledgments

We acknowledge the associations which helped distribute the survey among their subscribers. For radiologists: “Società Italiana di Radiologia Medica e Interventistica” (SIRM), “Associazione Italiana risonanza Magnetica in Medicina” (AIRMM), “Società Italiana Ultrasonologia in Medicina e Biologia” (SIUMB); for nuclear medicine: “Associazione Italiana Medicina Nucleare” (AIMN); for neurologists: “Società Italiana Neurologia” (SIN), “Associazione Italiana di Neuroradiologia Diagnostica e Radiologia” (AINR), “Associazione Neurologica Italiana per la ricerca nelle Cefalee” (ANIRCEF), “Società Italiana per lo Studio delle Cefalee” (SISC); for radiotherapists and radiation oncologists: “Associazione Italiana di Radioterapia Oncologica” (AIRO); for medical physicists: “Associazione Italiana Fisica Medica” (AIFM); and other multidisciplinary associations: “Società Italiana Intelligenza Artificiale in Medicina” (SIIAM) and “Alleanza contro il cancro” (ACC).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Sahiner, B.; Pezeshk, A.; Hadjiiski, L.M.; Wang, X.; Drukker, K.; Cha, K.H.; Summers, R.M.; Giger, M.L. Deep learning in medical imaging and radiation therapy. Med. Phys. 2019, 46, e1–e36.

- Avanzo, M.; Stancanello, J.; Pirrone, G.; Drigo, A.; Retico, A. The Evolution of Artificial Intelligence in Medical Imaging: From Computer Science to Machine and Deep Learning. Cancers 2024, 16, 3702.

- Denny, J.C.; Collins, F.S. Precision medicine in 2030—Seven ways to transform healthcare. Cell 2021, 184, 1415–1419.

- Matheny, M.E.; Whicher, D.; Israni, S.T. Artificial Intelligence in Health Care. A Report From the National Academy of Medicine. JAMA 2019, 6, 509–510.

- Bajwa, J.; Munir, U.; Nori, A.; Williams, B. Artificial intelligence in healthcare: Transforming the practice of medicine. Future Healthc. J. 2021, 2, 188–194.

- Al-Kuwaiti, A.; Nazer, K.; Al-Reedy, A.; Al-Shehri, S.; Al-Muhanna, A.; Subbarayalu, A.V.; Muhanna, D.A.; Al-Muhanna, F.A. A Review of the Role of Artificial Intelligence in Healthcare. J. Pers. Med. 2023, 6, 951.

- Baig, M.; Hobson, C.; GholamHosseini, H.; Ullah, E.; Afifi, S. Generative AI in Improving Personalized Patient Care Plans: Opportunities and Barriers Towards Its Wider Adoption. Appl. Sci. 2024, 23, 899.

- Fahim, Y.A.; Hasani, I.W.; Kabba, S.; Ragab, W.M. Artificial intelligence in healthcare and medicine: Clinical applications, therapeutic advances, and future perspectives. Eur. J. Med. Res. 2025, 30, 848.

- Alum, E.U.; Ugwu, O.P.C. Artificial intelligence in personalized medicine: Transforming diagnosis and treatment. Appl. Sci. 2025, 7, 193.

- Obermeyer, Z.; Powers, B.; Vogeli, C.; Mullainathan, S. Intentional machines: A defence of trust in medical artificial intelligence. Bioethics 2022, 366, 447–453.

- Rockall, A.; Brady, A.P.; Derchi, L.E. The identity and role of the radiologist in 2020: A survey among European Society of Radiology full radiologist members. Insights Imaging 2020, 11, 130.

- Sartori, L.; Cannizzaro, S.; Musmeci, M.; Binelli, C. When the white coat meets the code: Medical professionals and Artificial Intelligence (AI) in Italy negotiating with trust and boundary work. Health Risk Soc. 2025, 27, 7–8.

- Schulz, P.J.; Lwin, M.O. Modeling the influence of attitudes, trust, and beliefs on endoscopists’ acceptance of artificial intelligence applications in medical practice. Front. Public Health 2023, 11, 1301563.

- Sartori, L.; Bocca, G. Minding the gap(s): Public perceptions of AI and socio-technical imaginaries. AI Soc. 2023, 38, 443–458.

- Elish, M.C.; Watkins, E.A. Repairing Innovation: A Study of Integrating AI in Clinical Care. Data Soc. 2020. Available online: https://datasociety.net/wp-content/uploads/2020/09/Repairing-Innovation-DataSociety-20200930-1.pdf (accessed on 19 November 2025).

- Santamato, V.; Tricase, C.; Faccilongo, N.; Iacoviello, M.; Marengo, A. Exploring the Impact of Artificial Intelligence on Healthcare Management: A Combined Systematic Review and Machine-Learning Approach. Appl. Sci. 2024, 14, 10144.

- Alves, M.; Seringa, J.; Silvestre, T.; Magalhães, T. Use of Artificial Intelligence tools in supporting decision-making in hospital management. BMC Health Serv. Res. 2024, 24, 1282.

- Cabello, J.B.; Ruiz Garcia, V.; Torralba, M.; Maldonado Fernandez, M.; Ubeda, M.d.M.; Ansuategui, E.; Ramos-Ruperto, L.; Emparanza, J.I.; Urreta, I.; Iglesias, M.T.; et al. Critical Appraisal Tools For Artificial Intelligence Clinical Studies: A Scoping Review. J. Med. Internet Res. 2025. Preprint.

- Arcila, B.B. AI liability in Europe: How does it complement risk regulation and deal with the problem of human oversight? Comput. Law Secur. Rev. 2024, 54, 106012.

- Dolan, B.; Tillack, A. Pixels, patterns and problems of vision: The adaptation of computer-aided diagnosis for mammography in radiological practice in the U.S. Hist. Sci. 2010, 2, 227–249.

- Avanzo, M.; Trianni, A.; Botta, F.; Talamonti, C.; Stasi, M.; Iori, M. Artificial intelligence and the medical physicist: Welcome to the machine. Appl. Sci. 2021, 11, 1691.

- Obuchowicz, R.; Lasek, J.; Wodzinski, M.; Piorkowski, A.; Strzelecki, M.; Nurzynska, K. Artificial Intelligence-Empowered Radiology-Current Status and Critical Review. Diagnostics 2025, 3, 282.

- Markus, A.F.; Kors, J.A.; Rijnbeek, P.R. The role of explainability in creating trustworthy artificial intelligence for health care: A comprehensive survey of the terminology, design choices, and evaluation strategies. J. Biomed. Inform. 2021, 113, 103655.

- Budd, S.; Robinson, E.C.; Kainz, B. A survey on active learning and human-in-the-loop deep learning for medical image analysis. Med. Image Anal. 2021, 71, 102062.

- Neri, E.; Aghakhanyan, G.; Zerunian, M.; Gandolfo, N.; Grassi, R.; Miele, V.; Giovagnoni, A.; Laghi, A. Explainable AI in radiology: A white paper of the Italian Society of Medical and Interventional Radiology. La Radiol. Medica 2023, 128, 755–764.

- Chen, B.J.; Metcalf, J. Explainer: A sociotechnical approach to AI policy. Data Soc. 2025, 1–14.

- Delgado, F.; Yang, S.; Madaio, M.; Yang, Q. The Participatory Turn in AI Design: Theoretical Foundations and the Current State of Practice. In Proceedings of the Equity and Access in Algorithms, Mechanisms, and Optimization (EAAMO ’23), Boston, MA, USA, 30 October–1 November 2023.

- Sloane, M.; Moss, E.; Awomolo, O.; Forlano, L. Participation is not a Design Fix for Machine Learning. In Proceedings of the Equity and Access in Algorithms, Mechanisms, and Optimization (EAAMO ’22), Arlington, VA, USA, 6–9 October 2022.

- Natale, S.; Biggio, F.; Guzman, A.L.; Ricaurte, P.; Downey, J.; Fassone, R.; Keightley, E.; Ji, D. AI, agency, and power geometries. Media Cult. Soc. 2025, 47, 1057–1073.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).