Abstract

Medication errors pose a significant threat to patient safety. Although Bar-Code Medication Administration (BCMA) has reduced error rates, it is constrained by handheld devices, workflow interruptions, and incomplete safeguards against wrong patients, wrong doses, or drug incompatibility. In this study, we developed and evaluated a next-generation BCMA system by integrating artificial intelligence and mixed reality technologies for real-time safety checks: Optical Character Recognition verifies medication–label concordance, facial recognition confirms patient identity, and a rules engine evaluates drug–diluent compatibility. Computer vision models achieved high recognition accuracy for drug vials (100%), medication labels (90%), QR codes (90%), and patient faces (90%), with slightly lower performance for intravenous fluids (80%). A mixed-methods evaluation was conducted in a simulated environment using the System Usability Scale (SUS), Reduced Instructional Materials Motivation Survey (RIMMS), Virtual Reality Sickness Questionnaire (VRSQ), and NASA Task Load Index (NASA-TLX). The results indicated excellent usability (median SUS = 82.5/100), strong user motivation (RIMMS = 3.7/5), minimal cybersickness (VRSQ = 0.4/6), and manageable cognitive workload (NASA-TLX = 31.7/100). Qualitative analysis highlighted the system’s potential to streamline workflow and serve as a digital “second verifier.” These findings suggest strong potential for clinical integration, enhancing medication safety at the point of care.

1. Introduction

Medication errors are a leading cause of preventable harm and a major driver of healthcare costs. In the United States, they are among the most common sources of preventable harm, accounting for thousands of deaths annually [1,2,3]. In South Korea, national reports similarly identify medication-related incidents as the most frequently reported patient safety events, comprising 49.8% of all incidents reported in 2023 [4], indicating that this challenge is not unique to the United States. Globally, the World Health Organization estimates the annual cost of medication errors at $42 billion, highlighting the need for system-level improvements [3].

Bar-Code Medication Administration (BCMA) [5] is a systematic approach to preventing medication administration errors by adhering to the “five rights”: right patient, right drug, right dose, right route, and right time [6]. BCMA has proven effective across inpatient and emergency settings, with reported reductions in administration errors ranging from 23% to 56% [7]. Despite its effectiveness, BCMA has limitations, including workflow disruptions, an increased risk of omitted safety checks, and infection control concerns associated with shared handheld scanners [8]. These workflow and ergonomic challenges create an “industrial motivation” to develop hands-free, context-aware systems that integrate seamlessly into clinical practice rather than interrupting it.

Emerging technologies, such as artificial intelligence (AI) and mixed reality (MR), offer unique opportunities to address the pain points of conventional BCMA [9,10]. Evidence from other medical domains demonstrates that these technologies can optimize clinical decision-making, improve procedural accuracy, and provide real-time access to patient-related information [11]. Advanced deep learning approaches, such as hybrid methods (e.g., FCIHMRT) [12], are continuously improving the accuracy of medical image analysis, laying the groundwork for complex recognition tasks in clinical settings. Recent work in prehospital pediatric care has highlighted the potential of MR in clinical settings. In 2024, Ankam et al. designed and prototyped an MR application to support paramedics in the preparation and administration of medication [13]. However, the integration of AI and MR into the intrahospital BCMA workflow remains at an early stage. The primary contribution of this work is the design, development, and feasibility evaluation of an integrated, hands-free medication safety support system that spans the entire clinical workflow, from medication preparation to bedside administration. Our system integrates AI-driven OCR, real-time drug compatibility checks, and facial recognition for two-factor patient identification. It is designed to function as a “digital second verifier,” addressing the known workflow limitations of BCMA.

Within this technological landscape, there is an increasing need for next-generation medication safety support systems that intuitively guide clinical actions through user-centered interfaces and proactively prevent complex medication errors. The aims of this study are as follows: (1) to develop an AI-driven vision system that integrates MR technology to support the process of the preparation and administration of medication, and (2) to evaluate the system’s usability, cognitive workload, willingness to use, and the physical comfort of expert participants in simulated clinical environments.

2. Materials and Methods

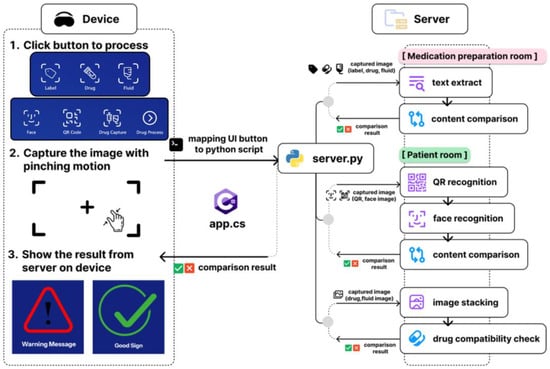

The system employs a distributed architecture comprising four primary components, as shown in Figure 1: a Microsoft HoloLens 2 MR headset, which is widely used in this domain [14]; a Unity-based application framework utilizing the Mixed Reality Toolkit 3 (MRTK3) [15]; a Python (version 3.11) backend server; and cloud-based AI services. This architecture enables the real-time processing of visual inputs while maintaining responsive user interactions through optimized communication protocols.

Figure 1.

System architecture of the project.

2.1. System Architecture

The Unity application deployed on the HoloLens 2 serves as the primary user interface and implements spatial interaction paradigms through MRTK3 components that enable natural gesture-based controls. Visual feedback is delivered as holographic panels within the user’s field of view, ensuring that information remains accessible without occluding critical environmental elements. The application features two primary interaction modes designed to support clinical workflows. The medication preparation room mode uses Optical Character Recognition (OCR)-based recognition of medication labels and intravenous (IV) fluids, assisting with safe drug preparation. The patient room mode enables Quick Response (QR) code scanning (replicating traditional scanner use), facial recognition for patient identification verification, and mixed IV fluid compatibility checks. All functions are operated via intuitive pinch gestures aligned with HoloLens 2 interaction standards.

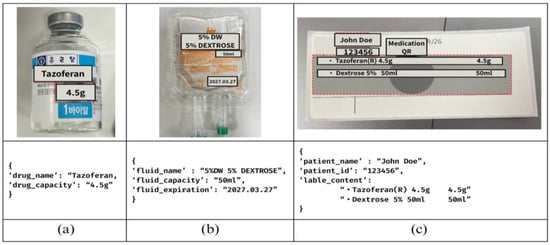

Communication between the HoloLens 2 Unity application and the Python backend occurs through Hypertext Transfer Protocol (HTTP) POST requests, enabling cross-platform compatibility while maintaining real-time performance characteristics, inspired by Strecker et al.’s work [16]. The Python-based backend serves as the central processing hub that receives image data transmitted from the MR device. Each image, which is captured through the user’s interface actions in the C# client app on the HoloLens 2, is routed to the server, where the system assigns it to the corresponding recognition or verification module. The server orchestrates multiple AI-driven tasks, such as OCR, QR decoding, facial recognition, and drug compatibility checks, by invoking dedicated processing pipelines or external application programming interfaces (APIs). This modular coordination allows the device to offload computationally intensive tasks to the server, ensuring real-time analysis and feedback delivery to the user interface. For OCR, the system is integrated with the NAVER OCR API [16], which provides robust text extraction capabilities optimized for various specific document types and lighting conditions commonly encountered in clinical environments, as shown in Figure 2. This specific commercial API was chosen for its reported high accuracy with various texts and its robustness in handling varied lighting conditions and reflective surfaces, which are common characteristics of IV bags and drug vials [17].
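The routing step described above, in which the server assigns each uploaded image to a recognition or verification module, can be illustrated with a minimal sketch. This is not the authors' implementation: the task keys (`"ocr"`, `"qr"`, `"face"`), the handler functions, and the response fields are hypothetical, and the handlers are placeholders that only echo the payload size instead of calling a real OCR service or recognition library.

```python
from typing import Callable, Dict

# A handler takes raw image bytes and returns a JSON-serialisable result.
Handler = Callable[[bytes], dict]


def run_ocr(image: bytes) -> dict:
    # Placeholder: a real handler would forward the image to an external OCR API.
    return {"task": "ocr", "bytes_received": len(image)}


def decode_qr(image: bytes) -> dict:
    # Placeholder: a real handler would decode the wristband QR code.
    return {"task": "qr", "bytes_received": len(image)}


def recognize_face(image: bytes) -> dict:
    # Placeholder: a real handler would compare the face against enrolled encodings.
    return {"task": "face", "bytes_received": len(image)}


# Hypothetical registry mapping the task named in the request to its module.
HANDLERS: Dict[str, Handler] = {
    "ocr": run_ocr,
    "qr": decode_qr,
    "face": recognize_face,
}


def route_image(task: str, image: bytes) -> dict:
    """Dispatch one uploaded image to the module registered for `task`."""
    handler = HANDLERS.get(task)
    if handler is None:
        return {"error": f"unknown task: {task}"}
    if not image:
        return {"error": "empty image payload"}
    return handler(image)


if __name__ == "__main__":
    print(route_image("ocr", b"\x89PNG..."))
    print(route_image("barcode", b"\x89PNG..."))
```

Keeping the registry as a plain dictionary means a new recognition module can be added without touching the dispatch logic, which mirrors the modular coordination described in the text.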

Figure 2.

(a) Drug example, (b) fluid example, (c) medication label example.

Furthermore, facial recognition was implemented using the open-source Python package face_recognition (version 1.3.0) developed by Ageitgey [18], which is available on GitHub and is built on top of the dlib library. This library provides pre-trained deep learning models for face detection, facial landmark identification, and encoding extraction based on the ResNet architecture. It was selected for its well-documented performance, ease of integration into our Python backend, and ability to perform fast, local comparisons. Facial comparisons were performed using 128-dimensional embeddings and the Euclidean distance. All processing was performed locally to preserve data privacy. In the testing and pilot study context, the system maintained a preloaded database of authorized personnel’s facial encodings.
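The comparison of 128-dimensional embeddings by Euclidean distance can be sketched in a few lines. The example below is illustrative only and uses the standard library rather than the face_recognition package itself. The threshold of 0.6 is that package's default tolerance; the threshold actually used in this study is not reported, so it is an assumption here, as are the function names and the enrolled identifiers.

```python
import math
from typing import Dict, Optional, Sequence

# Assumed threshold: 0.6 is the default tolerance of the face_recognition
# package; the value used in the study is not stated in the text.
MATCH_THRESHOLD = 0.6


def face_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two face embeddings of equal length."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    return math.dist(a, b)


def best_match(
    probe: Sequence[float],
    enrolled: Dict[str, Sequence[float]],
    threshold: float = MATCH_THRESHOLD,
) -> Optional[str]:
    """Return the enrolled ID closest to `probe`, or None if none is close enough."""
    best_id: Optional[str] = None
    best_distance = float("inf")
    for person_id, encoding in enrolled.items():
        distance = face_distance(probe, encoding)
        if distance < best_distance:
            best_id, best_distance = person_id, distance
    return best_id if best_distance <= threshold else None


if __name__ == "__main__":
    # Hypothetical 128-dimensional encodings for two enrolled people.
    enrolled = {"patient_a": [0.0] * 128, "patient_b": [1.0] * 128}
    print(best_match([0.01] * 128, enrolled))
    print(best_match([0.5] * 128, enrolled))
```

Returning `None` when no enrolled encoding falls within the threshold lets the caller raise an identity alert instead of silently accepting the nearest face.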

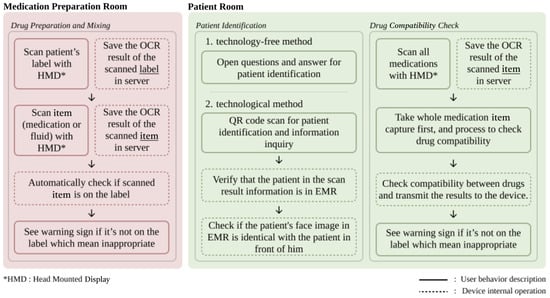

2.2. Clinical Workflow of the System

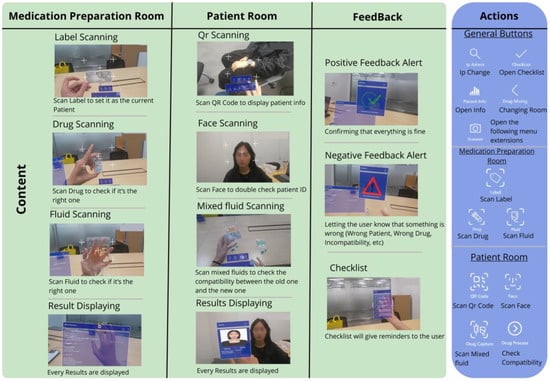

The system integrates two core clinical workflows to support safe medication preparation and administration: the medication preparation room and the patient room (Figure 3).

Figure 3.

General workflow of the system.

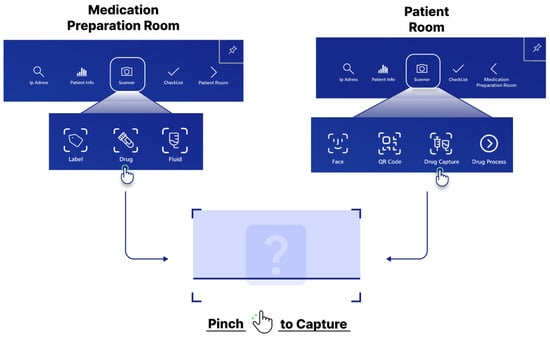

The medication preparation room workflow focuses on verifying medication and fluid compatibility during preparation. In OCR mode, users scan medication labels to retrieve information and schedule prescriptions. Subsequently, they capture images of the drug vials and IV fluids by pinching their fingers after pressing a dedicated button (Figure 4). To clarify the capture process, the system uses the built-in front-facing camera of the HoloLens 2 as the primary sensor; no external sensors are connected. When a user activates a scan function (e.g., “Drug”), the application displays a capture grid. The pinch gesture triggers the capture of a single still image from the camera feed. This image is then immediately sent to the backend server for analysis, as described in Section 2.1. The system performs real-time checks for drug and label matching, drug incompatibility, and concentration consistency using internal logic and external reference databases.

Figure 4.

Screenshot process on the HoloLens 2 application.

The system’s decision module functions as a rule-based advisory engine rather than a predictive model. AI components are employed solely for input recognition, including OCR and facial recognition. The recognized data are then processed through a transparent rule-based workflow that (1) verifies drug–label matching, (2) confirms patient identity, and (3) checks for drug compatibility using predefined databases. This architecture ensures explainable and traceable alerts rather than probabilistic or opaque recommendations.
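The three rule checks listed above can be sketched as a small deterministic function. This is a hedged illustration, not the system's actual logic: the incompatibility table, drug and fluid names, identifiers, and the `Alert` structure are all hypothetical, and a real deployment would draw compatibility data from a curated clinical reference database.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional, Set

# Hypothetical incompatibility table; the entries are placeholders, not clinical data.
INCOMPATIBLE_PAIRS: Set[FrozenSet[str]] = {
    frozenset({"drug_a", "fluid_x"}),
}


@dataclass
class Alert:
    rule: str      # which rule fired, so the alert is traceable
    message: str   # human-readable explanation shown to the user


def check_administration(
    prescribed_drug: str,
    scanned_drug: str,
    wristband_id: str,
    face_id: Optional[str],
    diluent: str,
) -> List[Alert]:
    """Apply the three advisory rules and return every alert that fires."""
    alerts: List[Alert] = []
    # Rule 1: the scanned drug must match the drug on the medication label.
    if scanned_drug != prescribed_drug:
        alerts.append(Alert("drug_label_mismatch",
                            f"scanned {scanned_drug}, label says {prescribed_drug}"))
    # Rule 2: the face match must agree with the wristband QR identity.
    if face_id is None or face_id != wristband_id:
        alerts.append(Alert("patient_identity",
                            "face recognition does not confirm the wristband identity"))
    # Rule 3: the drug and diluent must not be listed as incompatible.
    if frozenset({scanned_drug, diluent}) in INCOMPATIBLE_PAIRS:
        alerts.append(Alert("incompatibility",
                            f"{scanned_drug} is listed as incompatible with {diluent}"))
    return alerts


if __name__ == "__main__":
    for alert in check_administration("drug_a", "drug_a", "p001", "p001", "fluid_x"):
        print(alert.rule, "-", alert.message)
```

Because every alert carries the name of the rule that produced it, the output stays explainable in the sense described above: no score or probability is involved, only explicit comparisons.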

The patient room workflow targets patient identification and bedside medication administration. Clinicians begin by scanning the QR code of a patient’s wristband and then retrieve key medical information displayed on holographic panels. Facial recognition is used to confirm the patient’s identity and provide a two-step verification process. Finally, additional medications and fluids are scanned to assess their compatibility with current treatments and reduce the risk of adverse interactions during care.

A comprehensive feature map covering both the medication preparation and patient room workflows is provided in Figure 5.

Figure 5.

Application features.

2.3. User Evaluation Procedure

2.3.1. Simulation Test

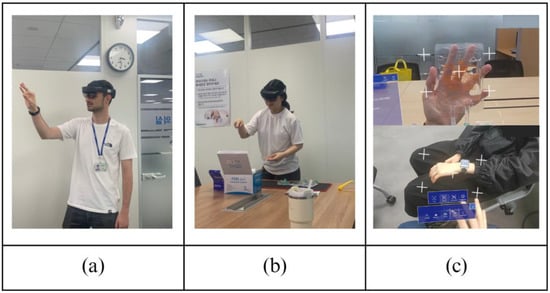

The simulation was conducted in a lab meeting room that was set up to mimic the two core workflows. For the “medication preparation room” task, participants, wearing the HoloLens 2, stood at a table. On the table were a patient’s medication label, several authentic (but empty) drug vials, and IV fluid bags. Participants were instructed to: (1) scan the patient label to set the context and (2) scan each drug vial and fluid bag individually. The system provided real-time visual feedback, warning them if a scanned item did not match the label’s prescription. For the “patient room” task, another researcher or nurse played the role of the patient. This “patient” wore a wristband with a scannable QR code and held a prop representing a previously administered medication. Participants were instructed to: (1) verify the patient’s identity using both the QR code and facial recognition functions and (2) scan their newly prepared medication along with the “previous” medication to perform a drug compatibility check (Figure 6).

Figure 6.

(a) Gesture demo. (b) Medication preparation room with HoloLens 2. (c) First-person view with HoloLens 2.

Each simulation session lasted approximately 45 min and followed a standardized protocol. Each participant first received a 10 min orientation to the HoloLens 2 device, including instructions on headset calibration and basic gesture control. After completing the two simulation tasks, the participants filled in a set of standardized quantitative questionnaires and took part in a semi-structured interview, which together took approximately 15 min.

2.3.2. Instruments Used for User Test

User evaluation was conducted with a mixed cohort of five clinical and technical experts (three experienced nurses and two technical experts) to capture both clinical relevance and usability perspectives. Given the small cohort, the evaluation focused on qualitative feedback, following the strategy used in previous studies [19]. Participants were recruited from local healthcare facilities and technical laboratories to ensure the representation of both primary end users and potential system implementers.

Four validated tools were used to assess different aspects of user experience and system acceptance. First, the System Usability Scale (SUS) provides a standardized usability assessment through ten items that measure perceived ease of use, efficiency, and user satisfaction [20]. Responses were collected using a five-point Likert (1–5) scale and then converted to standardized scores following established SUS scoring protocols. Second, the Reduced Instructional Materials Motivation Survey (RIMMS) was used to assess user motivation [21]. The RIMMS is a validated 12-item instrument based on Keller’s ARCS model, measuring four dimensions: Attention, Relevance, Confidence, and Satisfaction, using a five-point Likert (1–5) scale. Its validity has been demonstrated in technology-enhanced MR learning environments. Subsequently, the Virtual Reality Sickness Questionnaire (VRSQ) measured physiological comfort during extended system use, capturing symptoms across three categories: general body symptoms (discomfort, fatigue, dizziness, and nausea), eye-related symptoms (tired eyes, blurred vision and difficulty focusing), and motion-related symptoms [22]. For each question, the score ranged from zero (none) to six (severe). This assessment is particularly critical in healthcare applications, where physical discomfort can compromise professional performance and patient safety. Finally, the NASA Task Load Index (NASA-TLX) evaluated workload across six dimensions: Mental Demand, Physical Demand, Temporal Demand, Performance, Effort, and Frustration Level [23]. After completing the task, the participants were asked to rate each dimension on a scale of 0 to 10 (standardized to 100 later).
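For readers unfamiliar with the scoring conventions mentioned above, the following sketch shows the standard SUS conversion (odd items contribute the response minus 1, even items contribute 5 minus the response, and the sum is multiplied by 2.5) and a rescaling of NASA-TLX ratings from 0–10 to 0–100. The NASA-TLX function assumes the unweighted "raw TLX" variant, i.e., a plain mean of the six dimensions; the text does not state whether pairwise weighting was applied, so this is an assumption. Function names are illustrative.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Convert ten SUS responses (1-5 Likert) to the standard 0-100 score."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten responses")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if not 1 <= response <= 5:
            raise ValueError("responses must be between 1 and 5")
        if item_number % 2 == 1:
            total += response - 1   # positively worded (odd) items
        else:
            total += 5 - response   # negatively worded (even) items
    return total * 2.5


def raw_tlx(ratings_0_to_10: Sequence[float]) -> float:
    """Unweighted NASA-TLX: mean of six 0-10 ratings, rescaled to 0-100."""
    if len(ratings_0_to_10) != 6:
        raise ValueError("NASA-TLX requires six dimension ratings")
    return sum(ratings_0_to_10) / 6 * 10


if __name__ == "__main__":
    # Hypothetical responses from one participant, for illustration only.
    print(sus_score([4, 2, 4, 2, 5, 1, 4, 1, 4, 1]))
    print(raw_tlx([2, 4, 3, 2, 7, 6]))
```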

In addition, four open-ended questions were included in the post-test survey to capture the participants’ subjective experiences and suggestions regarding the system.

- What aspects of the system did you find most helpful?

- Was there any feature that stood out as particularly useful or innovative?

- How did the system improve the workflow or decision-making process?

- Is there anything else you would like to see added to or improved on the system?

Furthermore, task performance metrics were collected to complement subjective assessments, providing quantitative indicators of system effectiveness in realistic usage scenarios.

2.4. Ethical Considerations

After completing the survey and interview, the participants were compensated with 30,000 KRW each (approximately $22 USD). The user evaluation study was approved by the Institutional Review Board of Samsung Medical Center (No. 2025-06-129).

3. Results

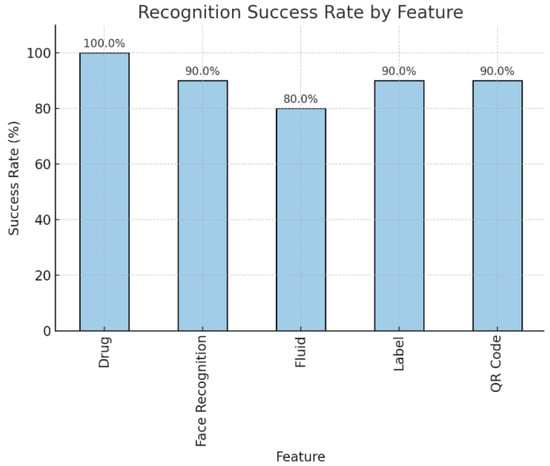

3.1. System Performance Evaluation: Recognition Accuracy

In addition to the user evaluation, a quantitative bench test was conducted to establish the baseline accuracy of the system’s core recognition features. Each recognition feature (Drug, Face Recognition, Fluid, Label, and QR Code) was tested 10 times to calculate a simple success rate. The results of this performance test are presented in Figure 7. The system achieved an overall recognition accuracy of approximately 90%. Accuracy was highest for drug recognition (100%) and consistent across patient face recognition, patient QR codes, and medication labels (90%), whereas fluid recognition was lower at 80% (Figure 7).
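The figures above follow from simple arithmetic over 10 trials per feature. The sketch below reproduces that calculation; the per-feature success counts are inferred from the reported percentages and the stated 10 trials, not taken from raw trial logs, and the overall value is computed as the unweighted mean of the five per-feature rates.

```python
from typing import Dict

TRIALS_PER_FEATURE = 10

# Success counts inferred from the reported percentages (e.g., 80% of 10 trials = 8).
SUCCESSES: Dict[str, int] = {
    "Drug": 10,
    "Face Recognition": 9,
    "Fluid": 8,
    "Label": 9,
    "QR Code": 9,
}


def success_rates(successes: Dict[str, int], trials: int) -> Dict[str, float]:
    """Per-feature success rate in percent."""
    return {feature: 100 * count / trials for feature, count in successes.items()}


def overall_rate(rates: Dict[str, float]) -> float:
    """Unweighted mean of the per-feature rates."""
    return sum(rates.values()) / len(rates)


if __name__ == "__main__":
    rates = success_rates(SUCCESSES, TRIALS_PER_FEATURE)
    for feature, rate in rates.items():
        print(f"{feature}: {rate:.0f}%")
    print(f"Overall: {overall_rate(rates):.0f}%")
```

With only 10 trials per feature, each single failure moves a rate by 10 percentage points, so these values are best read as a coarse baseline.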

Figure 7.

Barplot of the Recognition Success Rate by each feature.

3.2. Results of User Evaluation

3.2.1. Cohort Characteristics

The study included a total of five participants (n = 5). Regarding nursing experience, the cohort comprised 2 Amateur, 2 Beginner, and 1 Intermediate participants. In terms of technical experience, the distribution was 3 Amateur, 1 Advanced, and 1 Expert.

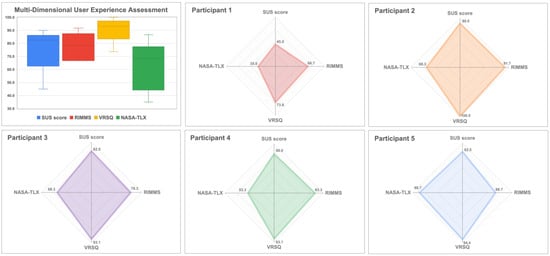

3.2.2. Multi-Dimensional User Experience Assessment

A comprehensive quantitative evaluation of the user experience outcomes is described in Figure 8.

Figure 8.

Multi-dimensional user experience assessment using SUS, NASA-TLX, RIMMS and VRSQ.

The system achieved a median SUS score of 82.5 [80.0–82.5], which corresponds to an “Excellent” rating on the adjectival rating scale [20,24]. The item-level analysis (see Appendix A, Table A1) revealed strengths in the integration of functions (median = 5.0) and ease of learning (median = 4.0). Participants perceived the system as having a low complexity (median = 2.0), reinforcing its intuitive nature.

The overall median RIMMS score was 3.7 [3.7–4.1], indicating a moderate-to-high level of motivation [25]. The subscale analysis (Appendix A, Table A2) showed that the satisfaction (median = 4.3) and confidence (median = 4.0) dimensions were rated the highest, suggesting that users found the experience enjoyable and felt capable of using the system effectively. Relevance scored high (median = 4.0). The Attention dimension scored the lowest (median = 2.7), indicating an opportunity for interface improvements to better capture and maintain user focus.

The overall median VRSQ severity score was very low at 0.4 [0.0–0.0], indicating that the system was well-tolerated physiologically [22]. As shown in Appendix A, Table A3, most symptoms were absent (median = 0.0). The most frequently reported symptoms, although at minimal levels, were fatigue (median = 1.0) and dizziness (median = 1.0), suggesting that the system was comfortable to use over the duration of the session.

The overall median NASA-TLX score was 31.7 [31.7–46.7], indicating a low-to-moderate perceived workload [23]. Subscale analysis (Appendix A, Table A4) revealed that Effort (median = 70.0) and Frustration (median = 60.0) were the primary contributors to workload. By contrast, Mental Demand (median = 20.0) and Physical Demand (median = 40.0) were rated relatively low, indicating that the task was neither physically strenuous nor cognitively complex, although interacting with the interface required some operational effort to manipulate virtual elements.
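The "median [Q1–Q3]" summaries used throughout this section can be computed with the Python standard library, as sketched below. The quartile convention (the `"inclusive"` method of `statistics.quantiles`) is an assumption, since the text does not state which method was used, and different conventions can give different quartiles for a sample of five. The example scores are hypothetical and are not the study data.

```python
import statistics
from typing import Sequence, Tuple


def median_iqr(values: Sequence[float]) -> Tuple[float, float, float]:
    """Return (median, Q1, Q3) using the 'inclusive' quartile convention."""
    if len(values) < 2:
        raise ValueError("at least two values are required")
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return statistics.median(values), q1, q3


def format_summary(values: Sequence[float]) -> str:
    """Format a sample as 'median [Q1–Q3]' with one decimal place."""
    median, q1, q3 = median_iqr(values)
    return f"{median:.1f} [{q1:.1f}–{q3:.1f}]"


if __name__ == "__main__":
    # Hypothetical scores from five participants, for illustration only.
    hypothetical_scores = [72.5, 80.0, 82.5, 85.0, 90.0]
    print(format_summary(hypothetical_scores))
```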

3.2.3. Qualitative Results—Open Questions

- Most Helpful Aspects

The participants consistently emphasized that the system’s ability to facilitate patient identification and medication verification was the most valuable feature. One participant noted, “It allows for intuitive and immediate drug compatibility checks and patient verification,” while another highlighted, “In transfusion tasks, transfusion errors can be fatal… this system may allow one person to handle the task instead of two.” Additionally, the centralization of tools and information within a single interface was complimented for streamlining workflow, with users appreciating that “every tool and information is available at the same place with just a press of a button.”

- Innovative or Useful Features

The OCR functionality was repeatedly described as innovative. One participant stated, “Performing quick and precise OCR on the fluid packets despite their transparency is impressive.” Certain users, less familiar with the concept of the metaverse, described its overall presence as inherently innovative: “I don’t know much about the metaverse, so I’m not sure what exactly is innovative, its very existence just felt innovative to me.” The system’s decision support, including step-by-step guidance and automated compatibility checks, was valued for reducing cognitive load and aiding less-experienced nurses.

- Workflow and Decision-Making

The system was reported to significantly improve workflow efficiency by enabling real-time information access at the patient’s bedside and minimizing unnecessary trips to nurse stations. One participant emphasized, “Portable hospital devices are limited, and their batteries drain quickly, so I often had to go back and forth… With HoloLens, tasks like checking drug information could be handled immediately and directly in front of the patient.” The integrated to-do list and process guidance helped users follow correct procedures and reduce omissions. A seasoned nurse noted that the system “does all the decision making for you,” suggesting that it may enable single nurses to safely perform tasks that typically require double verification.

- Suggestions for Improvement

User feedback primarily focused on interface usability and hardware ergonomics. The participants recommended positioning alert windows closer to the center of the user’s field of view, making them disappear automatically after a few seconds, and incorporating subtle audio cues to ensure alerts were noticed without cluttering the display. One user detailed, “When multiple windows stack up, it becomes confusing… a subtle sound when the window appears would help.” Other suggestions included merging scanning functions, automating checklist updates, and enabling batch scanning of multiple items to accelerate workflow. Some users expressed the need for improved text clarity and size for better readability and highlighted the importance of precise fluid volume recognition for clinical accuracy. Hardware-wise, participants wanted lighter devices and more responsive controls. One participant commented, “The metaverse device felt light. I hope that it will continue to evolve into even lighter models.”

Overall, the participants expressed a positive experience with the system and encouraged future versions to incorporate the suggested enhancements to further improve usability and clinical effectiveness.

4. Discussion

In this study, we developed a next-generation BCMA by leveraging AI and MR technologies and evaluated its feasibility in a simulated setting as a hands-free, contactless solution. Quantitative results demonstrated excellent usability (median SUS = 82.5), strong user motivation (median RIMMS = 3.7), and exceptional physical comfort (median VRSQ = 0.4), which are critical factors for adopting any new technology in a demanding clinical environment.

Quantitative evaluation confirmed high usability and acceptability, with scores reflecting strong perceptions of usefulness and intuitiveness, which is consistent with previous work on technology adoption in healthcare. Participants rated the system as ‘excellent’ on Bangor’s adjective rating scale [24], highlighting its positive reception. Importantly, nurses with limited exposure to MR or metaverse technologies reported that the system was accessible and beneficial to them. This inclusiveness across diverse user backgrounds aligns with prior studies demonstrating the feasibility and usability of HoloLens 2 in clinical education and workflow support [13,26,27]. Nevertheless, targeted HoloLens 2 training could further enhance its adoption.

Compared with existing technologies, the system’s primary advantage over traditional BCMA is its hands-free nature, which directly addresses the workflow, ergonomic, and hygienic limitations of handheld scanners. Ankam et al. [13] proposed an MR-based pediatric medication administration system for prehospital emergency settings using HoloLens 2 and demonstrated a conceptually similar MR-based verification workflow, supporting the feasibility of this design direction. This study extends that work by adding facial recognition as a second identification factor and providing a more comprehensive user experience evaluation that includes motivation and comfort. The motivation score (median RIMMS = 3.7) was consistent with other studies, such as [28], which reported high motivation in immersive learning environments, suggesting that these technologies are inherently engaging. The relatively lower score on the RIMMS “Attention” dimension (median = 2.7) may be linked to the identified cognitive load issues, where managing the interface can divert attention from the primary task.

These findings carry significant clinical implications. The digital “second verifier” concept, highlighted by participants, could transform workflows for high-risk procedures (e.g., blood transfusions, chemotherapy administration) that currently require the physical presence of two clinicians. Such a system may improve efficiency, reduce staff workload, and enhance safety by providing automated verification. Furthermore, by streamlining workflow and minimizing unnecessary movement, it can free valuable clinician time for direct patient care.

Nevertheless, this evaluation identifies key limitations and areas for future research. Qualitative feedback highlights the need to refine alert positioning and audio notifications and optimize checklist integration. Participants noted hardware-related issues, such as the HoloLens 2’s weight, which aligned with the challenge documented in a systematic review [14].

A key finding from our performance test was the identification of a clear failure case. As shown in Section 3.1, fluid recognition had the lowest accuracy (80%). This aligns with qualitative feedback from participants who found scanning fluid packets challenging. We attribute these errors primarily to optical challenges: light glare and reflections on the transparent, curved surfaces of IV bags and text distortion on the non-rigid, flexible packets. Additionally, the printing on some fluid packages was orange, which, when the user was holding the fluid, caused the text to merge with the color of their hands, further complicating recognition. This provides a clear direction for future work, requiring image pre-processing algorithms to mitigate reflections, as well as training models on more diverse image sets to enhance OCR robustness on such materials.

Methodologically, this study has several notable limitations. The sample size was small (N = 5), and thus the findings should be interpreted as preliminary evidence of feasibility and usability rather than generalizable validation. The evaluation was conducted in a simulated environment rather than a real clinical ward, which limits external validity. Furthermore, the system was tested only on a single hardware platform (HoloLens 2) without assessing multi-user scalability, variable lighting conditions, or network latency. The OCR and facial recognition modules were validated on a limited dataset (primarily Korean), leaving potential algorithmic bias unexamined. Future research should include real-world pilot testing with larger and more diverse populations.

The current prototype performs all facial data processing locally, enhancing privacy; however, large-scale deployment would require full compliance with healthcare data protection regulations (e.g., HIPAA, GDPR, and Korean laws in the authors’ case). Robust cybersecurity measures and data integrity safeguards are essential, and a comprehensive data governance and audit framework should be established prior to clinical implementation.

5. Conclusions

Our next-generation BCMA offers a practical approach to the limitations of existing BCMA systems, including reliance on handheld devices, workflow interruptions, and hygienic constraints. The current prototype enables hands-free interaction, integrates relevant information into a centralized view, and provides real-time compatibility checks, thereby functioning as a potential “second verifier.” This study provides preliminary evidence, obtained in a simulated setting, that MR technology aligned with the nurse’s workflow is a feasible and usable means of supporting medication safety. However, additional work is required to realize its potential in daily clinical practice, including ensuring compliance with privacy and security requirements, minimizing latency through on-device processing, and providing standardized user training.

Author Contributions

Conceptualization, W.C.C. and J.Y.; methodology, N.L.V., S.J.K., S.A. and J.S.Y.; software, N.L.V. and S.J.K.; validation, S.A., J.S.Y., S.H.P., J.H.H., M.-J.K., I.K., M.H.S. and W.C.C.; formal analysis, N.L.V. and S.J.K.; data curation, N.L.V. and S.J.K.; writing—original draft preparation, N.L.V. and S.J.K.; writing—review and editing, S.A., J.S.Y., S.H.P., J.H.H., M.-J.K., I.K., M.H.S. and W.C.C.; visualization, N.L.V. and S.J.K.; supervision, W.C.C. and J.Y.; project administration, J.Y.; funding acquisition, J.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by a grant from the Korea Health Technology R&D Project through the Korea Health Industry Development Institute (KHIDI), funded by the Ministry of Health and Welfare, Republic of Korea (grant number: RS-2023-KH134974). The APC was funded by the same source.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Institutional Review Board of Samsung Medical Center (Approval No. 2025-06-129).

Informed Consent Statement

Written informed consent has been obtained from the participants to publish this paper.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

Author Il Kim was employed by the company SurgicalMind Inc. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| API | Application Programming Interface |
| BCMA | Bar-Code Medication Administration |
| GDPR | General Data Protection Regulation |
| HIPAA | Health Insurance Portability and Accountability Act |
| HTTP | Hypertext Transfer Protocol |
| IV Fluids | Intravenous Fluids |
| MR | Mixed Reality |
| MRTK3 | Mixed Reality Toolkit 3 |
| NASA-TLX | NASA Task Load Index |
| OCR | Optical Character Recognition |
| QR code | Quick Response code |
| RIMMS | Reduced Instructional Materials Motivation Survey |
| SUS | System Usability Scale |
| VRSQ | Virtual Reality Sickness Questionnaire |

Appendix A

Table A1.

System Usability Scale (SUS) results.

| Survey Item | Median Score [Q1–Q3] |

|---|---|

| I would like to use this system frequently. | 4.0 [3.0–4.0] |

| The system was unnecessarily complex. | 2.0 [2.0–2.0] |

| The system was easy to use. | 4.0 [4.0–4.0] |

| I need technical support to use this system | 2.0 [1.0–4.0] |

| Functions were well integrated. | 5.0 [4.0–5.0] |

| There was too much inconsistency. | 1.0 [1.0–2.0] |

| Most people would learn to use this quickly. | 4.0 [4.0–5.0] |

| The system was cumbersome to use. | 1.0 [1.0–2.0] |

| I felt confident using the system. | 4.0 [4.0–4.5] |

| I needed to learn a lot before using it. | 1.0 [1.0–2.0] |

| SUS Score (0–100) | 82.5 [80.0–82.5] |
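For readers unfamiliar with how the ten item ratings map onto the overall score, the following is a minimal sketch of the standard SUS scoring rule (Brooke), assuming that rule was applied here. The function name `sus_score` is hypothetical and not taken from the study, and the input is the set of item medians from Table A1, used purely as an illustration.

```python
def sus_score(responses):
    """Return the 0-100 SUS score for one set of ten 1-5 ratings.

    `sus_score` is a hypothetical helper name, not taken from the study.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    total = 0.0
    for item_number, rating in enumerate(responses, start=1):
        if not 1 <= rating <= 5:
            raise ValueError("each rating must lie between 1 and 5")
        if item_number % 2 == 1:
            # Odd items are positively worded: contribution is rating - 1.
            total += rating - 1
        else:
            # Even items are negatively worded: contribution is 5 - rating.
            total += 5 - rating
    # Scale the 0-40 raw sum onto the 0-100 range.
    return total * 2.5


# Illustration only: the item medians from Table A1, in table order.
item_medians = [4.0, 2.0, 4.0, 2.0, 5.0, 1.0, 4.0, 1.0, 4.0, 1.0]
print(sus_score(item_medians))  # 85.0
```

Scoring the item medians gives 85.0 rather than the reported 82.5. This is expected if the reported value is the median of the per-participant scores, which need not equal the score computed from item-level medians.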

Table A2.

Reduced Instructional Materials Motivation Survey (RIMMS) results with items categorized by ARCS components.

| Survey Content (Component) | Median Score [Q1–Q3] |

|---|---|

| Relevance (R) | 4.0 [3.7–4.7] |

| It is clear to me how the content is related to things I already know. | 5.0 [4.0–5.0] |

| The content will be useful to me. | 3.0 [3.0–4.0] |

| The content conveys the impression that its contents are worth knowing. | 4.0 [4.0–5.0] |

| Attention (A) | 2.7 [2.3–3.0] |

| The quality helped to hold my attention. | 4.0 [2.0–4.0] |

| The way the information is arranged helped keep my attention. | 2.0 [2.0–5.0] |

| The variety of digital content helped keep my attention on the lesson. | 3.0 [3.0–4.0] |

| Confidence (C) | 4.0 [3.7–4.3] |

| I was confident that I could understand the content. | 4.0 [4.0–4.0] |

| The good organization helped me be confident that I would learn the material. | 4.0 [4.0–4.0] |

| After a while, I was confident that I would be able to complete the tasks. | 5.0 [2.0–5.0] |

| Satisfaction (S) | 4.3 [3.3–4.3] |

| It was a pleasure to work with such well-designed software. | 4.0 [4.0–5.0] |

| I really enjoyed working with this system. | 4.0 [4.0–4.0] |

| I enjoyed working so much that I think I will continue using it. | 4.0 [4.0–4.0] |

| Overall Mean Score (1–5) | 3.7 [3.7–4.1] |

Table A3.

Virtual Reality Sickness Questionnaire (VRSQ) results categorized by symptom type.

| Symptom | Median Severity [Q1–Q3] |

|---|---|

| General Body Symptoms | |

| General discomfort | 0.0 [0.0–2.0] |

| Fatigue | 1.0 [0.0–2.0] |

| Boredom | 0.0 [0.0–0.0] |

| Drowsiness | 0.0 [0.0–0.0] |

| Dizziness | 1.0 [0.0–2.0] |

| Difficulty concentrating | 0.0 [0.0–1.0] |

| Nausea | 0.0 [0.0–0.0] |

| Eye-Related Symptoms | |

| Tired eyes | 0.0 [0.0–0.0] |

| Sore/aching eyes | 0.0 [0.0–0.0] |

| Eyestrain | 0.0 [0.0–0.0] |

| Blurred vision | 0.0 [0.0–1.0] |

| Difficulty focusing | 0.0 [0.0–2.0] |

| Overall VRSQ Score (0–6) | 0.4 [0.0–0.0] |

Table A4.

NASA-TLX Questionnaire results.

| Criteria | Median [Q1–Q3] |

|---|---|

| Mental demand | 20.0 [10.0–30.0] |

| Physical demand | 40.0 [10.0–40.0] |

| Temporal demand | 30.0 [30.0–30.0] |

| Effort | 70.0 [30.0–70.0] |

| Performance | 30.0 [10.0–30.0] |

| Frustration | 60.0 [40.0–70.0] |

| Overall Workload Score | 31.7 [31.7–46.7] |

References

- Tariq, R.A.; Vashisht, R.; Sinha, A.; Scherbak, Y. Medication dispensing errors and prevention. In StatPearls; StatPearls Publishing: Treasure Island, FL, USA, 2025. Available online: https://www.ncbi.nlm.nih.gov/books/NBK519065/ (accessed on 2 July 2025).

- Kohn, L.T.; Corrigan, J.M.; Donaldson, M.S. To Err Is Human: Building a Safer Health System; National Academies Press: Washington, DC, USA, 2000.

- World Health Organization. Medication Without Harm. Available online: https://www.who.int/initiatives/medication-without-harm (accessed on 2 July 2025).

- Ministry of Health and Welfare; Korea Institute for Healthcare Accreditation. Korean Patient Safety Incident Report 2023; Korea Institute for Healthcare Accreditation: Seoul, Republic of Korea, 2024. Available online: http://www.kops.or.kr (accessed on 2 July 2025).

- Poon, E.G.; Keohane, C.A.; Yoon, C.S.; Ditmore, M.; Bane, A.; Levtzion-Korach, O.; Moniz, T.; Rothschild, J.M.; Kachalia, A.B.; Hayes, J.; et al. Effect of Bar-Code Technology on the Safety of Medication Administration. N. Engl. J. Med. 2010, 362, 1698–1707.

- Son, E.S.; Kim, A.Y.; Lee, Y.W.; Kim, J.S.; Song, H.S.; Kim, S.E.; Lee, A.Y.; Seok, H.J.; Hwang, K.J.; Park, S.B. Medication Errors and Considerations Related to Injectable Drugs. J. Korean Soc. Health-Syst. Pharm. 2003, 20, 220–224.

- Tiu, M.G.; Ballarta, P.J.; De Leon, K.A.; Isulat, T.; Narvaez, R.A.; Salas, M. Impact on Use of Barcode Scanners in Medication Administration: An Integrative Review. Can. J. Nurs. Inform. 2025, 20, 1. Available online: https://cjni.net/journal/?p=14288 (accessed on 4 July 2025).

- Koppel, R.; Wetterneck, T.; Telles, J.L.; Karsh, B.T. Workarounds to Barcode Medication Administration Systems: Their Occurrences, Causes, and Threats to Patient Safety. J. Am. Med. Inform. Assoc. 2008, 15, 408–423.

- Bates, D.W.; Levine, D.; Syrowatka, A.; Kuznetsova, M.; Craig, K.J.T.; Rui, A.; Jackson, G.P.; Rhee, K. The potential of artificial intelligence to improve patient safety: A scoping review. npj Digit. Med. 2021, 4, 54.

- Eckert, M.; Volmerg, J.S.; Friedrich, C.M. Augmented Reality in Medicine: Systematic and Bibliographic Review. JMIR Mhealth Uhealth 2019, 7, e10967.

- Alowais, S.A.; Alghamdi, S.S.; Alsuhebany, N.; Alqahtani, T.; Alshaya, A.I.; Almohareb, S.N.; Aldairem, A.; Alrashed, M.; Bin Saleh, K.; Badreldin, H.A.; et al. Revolutionizing Healthcare: The Role of Artificial Intelligence in Clinical Practice. BMC Med. Educ. 2023, 23, 689.

- Huo, Y.; Gang, S.; Guan, C. FCIHMRT: Feature Cross-Layer Interaction Hybrid Method Based on Res2Net and Transformer for Remote Sensing Scene Classification. Electronics 2023, 12, 4362.

- Ankam, V.S.S.; Hong, G.Y.; Fong, A.C. Design of a Mixed-Reality Application to Reduce Pediatric Medication Errors in Prehospital Emergency Care. Appl. Sci. 2024, 14, 8426.

- Palumbo, A. Microsoft HoloLens 2 in Medical and Healthcare Context: State of the Art and Future Prospects. Sensors 2022, 22, 7709.

- Microsoft. Mixed Reality Toolkit 3 (MRTK3) Overview. Microsoft Learn: Updated 10 February 2025. Available online: https://learn.microsoft.com/ko-kr/windows/mixed-reality/mrtk-unity/mrtk3-overview/ (accessed on 10 July 2025).

- Strecker, J.; García, K.; Bektaş, K.; Mayer, S.; Ramanathan, G. SOCRAR: Semantic OCR through Augmented Reality. In Proceedings of the ACM International Conference, Lisbon, Portugal, 6–8 September 2023; pp. 25–32.

- NAVER Cloud Corporation. CLOVA OCR Overview. NAVER Cloud Platform Guide. Available online: https://guide.ncloud-docs.com/docs/clovaocr-overview (accessed on 2 July 2025).

- Ageitgey. Face_Recognition: The World’s Simplest Facial Recognition API for Python and the Command Line. Available online: https://github.com/ageitgey/face_recognition (accessed on 13 March 2025).

- Lunding, R.; Hubenschmid, S.; Feuchtner, T.; Grønbæk, K. ARTHUR: Authoring Human–Robot Collaboration Processes with Augmented Reality Using Hybrid User Interfaces. Virtual Real. 2025, 29, 73.

- Brooke, J. SUS: A quick and dirty usability scale. Usability Eval. Ind. 1995, 189, 4–7. Available online: https://www.researchgate.net/publication/228593520_SUS_A_quick_and_dirty_usability_scale (accessed on 20 August 2025).

- Loorbach, N.; Peters, O.; Karreman, J.; Steehouder, M. Validation of the Instructional Materials Motivation Survey (IMMS) in a Self-Directed Instructional Setting Aimed at Working with Technology. Br. J. Educ. Technol. 2015, 46, 204–218.

- Kim, H.K.; Park, J.; Choi, Y.; Choe, M. Virtual Reality Sickness Questionnaire (VRSQ): Motion Sickness Measurement Index in a Virtual Reality Environment. Appl. Ergon. 2018, 69, 66–73.

- Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research. Adv. Psychol. 1988, 52, 139–183.

- Bangor, A.; Kortum, P.T.; Miller, J.T. An Empirical Evaluation of the System Usability Scale. Int. J. Hum. Comput. Interact. 2008, 24, 574–594.

- Huang, B.; Hew, K.F. Implementing a Theory-Driven Gamification Model in Higher Education Flipped Courses: Effects on Out-of-Class Activity Completion and Quality of Artifacts. Comput. Educ. 2018, 125, 254–272.

- Johnston, M.; O’Mahony, M.; O’Brien, N.; Connolly, M.; Iohom, G.; Kamal, M.; Shehata, A.; Shorten, G. The Feasibility and Usability of Mixed Reality Teaching in a Hospital Setting Based on Self-Reported Perceptions of Medical Students. BMC Med. Educ. 2024, 24, 701.

- Connolly, M.; Iohom, G.; O’Brien, N.; Volz, J.; O’Muircheartaigh, A.; Serchan, P.; Biculescu, A.; Gadre, K.G.; Soare, C.; Griseto, L.; et al. Delivering Clinical Tutorials to Medical Students Using the Microsoft HoloLens 2: A Mixed-Methods Evaluation. BMC Med. Educ. 2024, 24, 498.

- Cecotti, H.; Huisinga, L.; Peláez, L.G. Fully Immersive Learning with Virtual Reality for Assessing Students in Art History. Virtual Real. 2024, 28, 33.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).