1. Introduction

In modern manufacturing, manual operations continue to play a decisive role in determining production costs and product quality, even in highly automated contexts [1,2]. To analyze and optimize these human-centered workflows, the Methods-Time Measurement (MTM) system has long been a cornerstone methodology [3]. MTM decomposes manual tasks into standardized basic motions, each assigned a time value expressed in Time Measurement Units (TMUs), enabling highly granular productivity studies. While MTM-1 achieves maximum precision through 17 motion categories, MTM-2 offers a more streamlined analysis with nine core movements and is better suited for medium-scale production lines [2,4,5].

Despite its usefulness, conventional MTM analysis is hindered by significant drawbacks. Manual video transcription by experts is slow, expensive, and subject to human error [3,5]. Consequently, time studies are often performed late in the production cycle, when workplaces are already fixed, limiting their potential to drive workplace redesign and proactive optimization [4,5]. These limitations underscore the urgent need for automated, objective, and scalable solutions.

Recent advances in Computer Vision (CV) and Artificial Intelligence (AI) offer a promising pathway to overcome these challenges [6,7]. CV enables machines to perceive and interpret visual data through techniques such as object detection, motion tracking, and pose estimation, making it particularly suitable for analyzing human actions in industrial settings. Within the paradigm of Industry 4.0 and the emerging concept of Industry 5.0, these technologies are critical enablers of intelligent manufacturing, where automation must coexist with adaptability, ergonomics, and data-driven decision-making [1,3]. Previous exploratory efforts using Virtual Reality (VR) and sensor-based MTM transcription showed feasibility [4,5], yet still relied heavily on manual inputs, preventing full automation [5,8].

Section 2 delves deeper into these studies.

This work introduces a CV methodology that combines a Convolutional Neural Network (CNN) for robust feature extraction with a supervised classifier for efficient MTM-2 motion classification. Specifically, we employ a pre-trained MobileNetV2 architecture [9] to detect key anatomical landmarks, from which six angular kinematic features are calculated to characterize joint articulation in the upper limbs. These features, together with $xy$-plane trajectories, provide discriminative descriptors for classifying MTM-2 motions. A novel dataset comprising five representative patterns (Start Position, Reach 15 cm, Reach 30 cm, Place 15 cm, and Place 30 cm) was developed, filling a gap in the literature by offering a benchmark for industrial motion analysis. The K-Nearest Neighbors (KNN) algorithm was selected for its simplicity, adaptability, and proven reliability with the extracted multidimensional biomechanical data.

This study makes three primary contributions to the literature:

It demonstrates a computationally fast, scalable, and cost-effective alternative for real-time transcription of MTM movements.

It incorporates ergonomic risk assessment into motion classification, enabling proactive identification of postural anomalies such as wrist hyperextension.

It lays the foundation for integration into Digital Twins and Manufacturing Execution Systems (MESs), positioning the methodology as a building block for adaptive, human-centered, and sustainable production strategies.

By combining precision, efficiency, and ergonomics, the proposed approach directly supports smart manufacturing goals, particularly by facilitating real-time decision-making and empowering SMEs to adopt Industry 4.0 practices.

It is important to mention that, beyond the analysis presented in this work, the proposed approach is conceived as a perceptual edge node within a broader smart manufacturing architecture. The integration mechanism envisioned in MESs, as well as Digital Twins, could be structured as follows: The edge device (e.g., an industrial PC with a GPU) hosts the CV model, and processes the video sequences to generate two main data outputs: (1) classified MTM-2 motion codes with timestamps, and (2) extracted ergonomic risk metrics (e.g., joint angles, risk indicators). This data is packaged into lightweight JSON messages, structured according to a predefined schema. Messages are then published to a centralized message broker (e.g., MQTT or Kafka) via a factory-wide IoT network. The MES subscribes to this message stream and ingests the motion codes to update task completion times in real time, calculate production efficiency (e.g., OEE), and dynamically adjust work schedules. Simultaneously, the Digital Twin, a virtual replica of the production system, subscribes to the same data stream. It uses the real-time ergonomic and motion data to update its simulation model, enabling proactive what-if analysis, workstation redesign validation, and long-term trend analysis for preventative health-and-safety interventions. This decoupled publish–subscribe architecture ensures scalability and interoperability, allowing the vision system to work with legacy and modern systems without the need for direct point-to-point integration.
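As a rough illustration of the message-packaging step described above, the following minimal sketch builds one JSON payload for a classified motion. The field names, the station identifier, and the topic string are hypothetical, since the text only states that a predefined schema exists, and the joint-angle values are placeholders. Publishing to a broker is deliberately left out; an MQTT or Kafka client would take the resulting string as its payload.

```python
import json
from datetime import datetime, timezone

# Hypothetical topic name; the paper does not specify one.
TOPIC = "factory/line1/bench-01/mtm2"


def build_motion_message(station_id, motion_label, joint_angles_deg, risk_flags):
    """Package one classified motion as a JSON string (illustrative schema)."""
    message = {
        "station_id": station_id,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "motion": {"standard": "MTM-2", "label": motion_label},
        "ergonomics": {
            "joint_angles_deg": joint_angles_deg,
            "risk_flags": risk_flags,
        },
    }
    return json.dumps(message)


# Placeholder values, not measurements from the study.
payload = build_motion_message(
    station_id="bench-01",
    motion_label="Reach 30 cm",
    joint_angles_deg={"right_elbow": 145.0, "right_shoulder": 12.0},
    risk_flags=[],
)
decoded = json.loads(payload)
```

Because both the MES and the Digital Twin would consume the same stream, keeping the motion label and the ergonomic metrics in one message lets each subscriber read only the part it needs.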

The remainder of this paper is structured as follows: Section 2 reviews related work on MTM analysis, ergonomic assessment, and the use of CV in manufacturing. Section 3 introduces the proposed intelligent motion classification methodology, while Section 4 presents the experimental design together with the results and their analysis. Section 5 reports on the validation of the MTM-2 classification using real-time data of classified movements and an analysis of ergonomic risk prevention. Section 6 discusses the main findings and limitations, and Section 7 concludes the paper and proposes directions for future research.

2. Related Work

2.1. Traditional Methods-Time Measurement and Its Challenges

The meticulous quantification and analysis of human motion within industrial contexts have traditionally been key pillars for enhancing operational efficiency and overall productivity [1]. Among the established methodologies, Methods-Time Measurement (MTM) stands as a predetermined motion time system, systematically dissecting manual tasks into a series of fundamental, quantifiable motions, each assigned a precise time value expressed in Time Measurement Units (TMUs) [2,3]. One TMU is equivalent to 0.036 s, allowing for detailed analyses of operations lasting less than a second [1,8].
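The TMU definition above reduces to a single conversion factor. The short sketch below shows the arithmetic in both directions; the function names are illustrative only.

```python
TMU_SECONDS = 0.036  # one TMU in seconds, as stated in the text


def tmu_to_seconds(tmu):
    """Convert a time value in TMUs to seconds."""
    return tmu * TMU_SECONDS


def seconds_to_tmu(seconds):
    """Convert a duration in seconds to TMUs."""
    return seconds / TMU_SECONDS


# Example: a motion observed over 27 video frames at 30 FPS lasts 0.9 s.
observed_seconds = 27 / 30
observed_tmu = seconds_to_tmu(observed_seconds)
```

At 30 FPS a single frame spans roughly 0.033 s, slightly less than one TMU, so frame-level classification gives a timing resolution close to the unit used by MTM.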

The MTM system encompasses a spectrum of standards, each varying in its level of analytical granularity and applicability [1,3,4]. MTM-1 provides the highest degree of detail, identifying 17 basic motions, making it suitable for mass-production scenarios involving rigorous analysis. On the other hand, MTM-2, a derivative of MTM-1, streamlines the analysis by focusing on nine primary movements: Get; Put; Apply Pressure; Regrasp; Crank; Eye Action; Foot Motion; Step; and Bend and Arise [1,2,3]. While less precise than MTM-1, MTM-2 substantially lowers transcription effort, making it more appropriate for smaller production series or medium-scale production lines [1,2,4,5]. Other standards like MTM-UAS and MTM-MEK further reduce complexity for even smaller batches or single productions, often relying on general conditions rather than detailed movement sequences [1,2].

Despite its proven utility in workplace optimization and establishing standard times [5,6], the conventional application of MTM analysis faces substantial inherent limitations. Traditionally, MTM analysis necessitates manual video transcription performed by MTM experts [1,2,3]. This process is inherently time-consuming, cost-prohibitive, and susceptible to human inconsistencies and errors [2,3,6]. For instance, a comparative study revealed that four days were required for the manual MTM-2 transcription of recordings from 21 users, across both real and virtual environments [2]. Furthermore, the traditional approach often mandates the construction of physical workplaces or cardboard mock-ups for the analysis, which hinders early-stage production planning and design iterations, generates waste, and frequently lacks sufficient detail for workers to perform tasks as they would in a real environment [2,3,6]. These constraints underscore the need for automated, more efficient, and objectively verifiable solutions.

2.2. Ergonomic Risk Prevention

Ergonomic Posture Risk Assessment (EPRA) is essential for reducing Work-Related Musculoskeletal Disorders (WMSDs). These disorders pose a serious risk to worker health and lead to significant economic losses in fields like manufacturing and construction [11,12,13,14]. Traditionally, EPRA has depended on self-reporting, cumbersome sensor measurements, or time-consuming observation methods such as RULA, REBA, and OWAS [11,15,16]. While these traditional methods are important, they often lack objectivity, require a lot of time, and do not scale well, which limits consistent and unbiased monitoring in changing work settings [11,12,13,15,17]. The rise of CV and Machine Learning (ML) technologies represents a significant breakthrough in workplace health and safety. These tools enable automated, data-driven, and non-invasive ergonomic assessments that overcome the drawbacks of older methods [11,13,14,15]. The rise of Deep Learning (DL) has accelerated this trend: recent studies have validated CV ergonomic assessment tools in real manufacturing environments, reporting high agreement with expert-based methods like RULA while being significantly faster. A significant remaining challenge in automated EPRA is differentiating transient, task-required postures from sustained, hazardous ones. This work addresses that challenge by integrating kinematic analysis with temporal context and task-specific expectations.

This integration of technology allows for the precise extraction of human movement features using advanced pose estimation techniques, which turn video or image data into detailed joint angles [11,15,18]. Systems that use DL algorithms, such as CNNs and platforms like MediaPipe, provide real-time, accurate 2D and 3D human pose estimation. This is vital for spotting awkward postures and predicting WMSD risk levels [14,15,18]. Furthermore, Yang et al. [15] conducted a systematic review confirming the effectiveness of CNNs and pose estimation models like OpenPose and MediaPipe in automating posture risk assessment, though Kunz et al. [14] noted challenges in processing speed and occlusion handling. The main benefits include greater objectivity, better efficiency, non-invasiveness, and lower costs, making these tools especially useful for continuous monitoring in smart manufacturing and SMEs [11,12,15,18]. However, challenges persist, such as maintaining high accuracy on complex backgrounds, monitoring small joints like the wrist, and addressing sudden changes in risk scores that traditional observation tools often fail to detect. Innovations like fuzzy logic help in proactively identifying specific ergonomic issues [13,14,15,19], offering practical insights for redesigning workstations and implementing preventive measures. To address the issue of computational cost for real-time deployment, Cruciata et al. [17] proposed a lightweight Vision Transformer model for frame-level ergonomic posture classification, demonstrating a viable path for integration into industrial workflows.

2.3. Computer Vision for Smart Manufacturing

The accelerating advancements in CV and Artificial Intelligence (AI) technologies present a promising avenue for overcoming the limitations of conventional MTM analysis. CV, fundamentally, is a field of computer science dedicated to enabling machines to “perceive” and interpret their surrounding world by extracting meaningful information from visual data [7]. This discipline synthesizes principles from image processing, ML, and pattern recognition, making it adept at tasks such as object detection, image classification, edge detection, and object tracking [6,7]. Beyond quality control, CV is increasingly applied to human-centric analysis. Andreopoulos, Gorobetz & Kunz [20] provided a comprehensive survey of CV techniques in manufacturing, highlighting human action recognition as a key emerging application area for ensuring safety and efficiency. The critical challenge of real-time processing in complex environments is being addressed by new architectures. For instance, Liang et al. [21] developed a manufacturing-oriented intelligent vision system based on deep neural networks for robust object recognition and pose estimation, a foundational technology for interaction analysis.

The integration of CV and AI is increasingly pivotal across various industrial domains, particularly within the context of Industry 4.0 initiatives that emphasize digitalization and automation [4,6]. In manufacturing, CV has been successfully applied for quality control, such as in the real-time tracking and detection of internal accessories in refrigeration units on a production line [6]. Similarly, comprehensive reviews have highlighted that CV techniques support the entire product lifecycle, from design and simulation to inspection, assembly, and disassembly, although challenges remain in algorithm implementation, data labeling, and benchmarking [22,23]. Moreover, CV systems are being developed for human action detection in applications such as inventory control, where they can identify and count objects, and even discern human manipulation through hand detection, contributing to enhanced security and real-time record-keeping [6,7]. While these systems face challenges such as object overlapping, occlusion, and sensor focus loss, they demonstrate significant potential for automated monitoring and real-time decision-making [21,24].

Prior research has also explored the application of these technologies, particularly in Virtual Reality (VR) environments, to facilitate MTM analysis [2,3,4,6]. Studies have confirmed the feasibility of conducting MTM analysis in VR, showing that MTM-2 values obtained in virtual settings are comparable to those from real workplaces [1,3]. VR-based approaches reduce the need for physical mock-ups, allowing for more agile workplace alterations and early-stage planning. Some works have even proposed algorithms for automatic MTM transcription, for instance using trackers or decision trees to segment motions into MTM codes [20]. Nevertheless, these approaches face persistent challenges such as slower human motion in VR environments, susceptibility to false positives when unrelated actions occur, and limitations in tracking full-body movements with traditional sensors like Kinect [1,2].

Beyond VR, image-based and AI-driven approaches have shown potential for direct motion classification in real environments. For instance, recurrent neural networks have been used to classify five MTM operations (Reach, Grasp, Bring, Release, Position) based on image processing [25]. DL-based methods have also been applied to detect unsafe acts and ergonomically risky postures in industrial tasks, achieving accuracies above 90% in scenarios such as drilling or collaborative polishing [26]. In parallel, some AI frameworks provide efficient pipelines for pose estimation, enabling motion analysis in both 2D and 3D [27]. These developments suggest that advanced CV architectures, including CNNs, RNNs, and hybrid pipelines, can extend MTM applications beyond VR and into real-world factory floors [28].

Recent efforts have also focused on extending MTM standards themselves, such as the MTM-HWD system, which has been statistically validated as a faster and more reliable alternative to MTM-1 for specific cycle times, with deviations below 5% [10]. These contributions highlight both the adaptability of MTM to modern production contexts and the ongoing need for automated tools that reduce transcription costs and improve consistency.

Prior research has demonstrated the feasibility of applying CV and AI for MTM automation and ergonomic analysis, but most approaches remain limited to controlled or virtual environments, rely on specialized hardware, or focus on narrow sets of tasks. These constraints hinder their adoption in real production settings, particularly within SMEs. Therefore, there is still a need for lightweight, adaptable, and cost-effective frameworks that can reliably classify motion patterns while also supporting ergonomic risk prevention in practical industrial contexts.

The validation of these systems in real-world settings remains a focus. De Feudis et al. [24] evaluated vision-based hand-tool-tracking methods, highlighting their potential for quality assessment and training in human-centered Industry 4.0 applications.

3. Intelligent Motion Classification

The proposed methodology outlines a comprehensive framework for the automated classification of human motion patterns in industrial production processes, primarily leveraging advanced CV and ML techniques. It is designed to overcome the main limitations of traditional manual time studies, including their susceptibility to human error, subjective interpretation, and significant time requirements. By focusing on detailed posture analysis and subsequent algorithmic categorization, the system provides a robust, scalable, and objective alternative for improving operational efficiency. The methodological workflow, grounded in established principles of industrial engineering, is organized into several key phases, with particular emphasis on detection, feature extraction, and classification, supported by CNN and a supervised algorithm.

3.1. Data Acquisition

The foundational phase of this methodology involves the systematic capture of video sequences, conducted under controlled lighting conditions to ensure optimal image quality and consistency throughout the experimentation. To achieve this, a high-resolution camera (Logitech C922 Pro HD, Logitech, Lausanne, Switzerland) was strategically placed 1.2 m above a dedicated workstation, at a 45-degree angle to the operator, providing a clear overhead view of torso and arm movements. Lighting was kept constant at 500 lux to minimize shadows and ensure reliable pose estimation.

The workstation was configured according to standard setup guidelines, with a bench height of 95 cm and tools/components placed at predefined distances (15 cm and 30 cm) from a marked starting position. Its orientation was calibrated to provide a clear view of the operator from the waist upwards, focusing on a designated workbench area. This specific top-down perspective is indispensable for enabling a precise analysis of both the kinematic trajectories and subtle postural variations of the operator. While the initial validation was performed in a controlled laboratory environment, the broader objective remains the development of a solution adaptable to the complexities of real-world industrial production lines, where environmental variables like fluctuating illumination and potential object occlusions necessitate robust image processing capabilities.

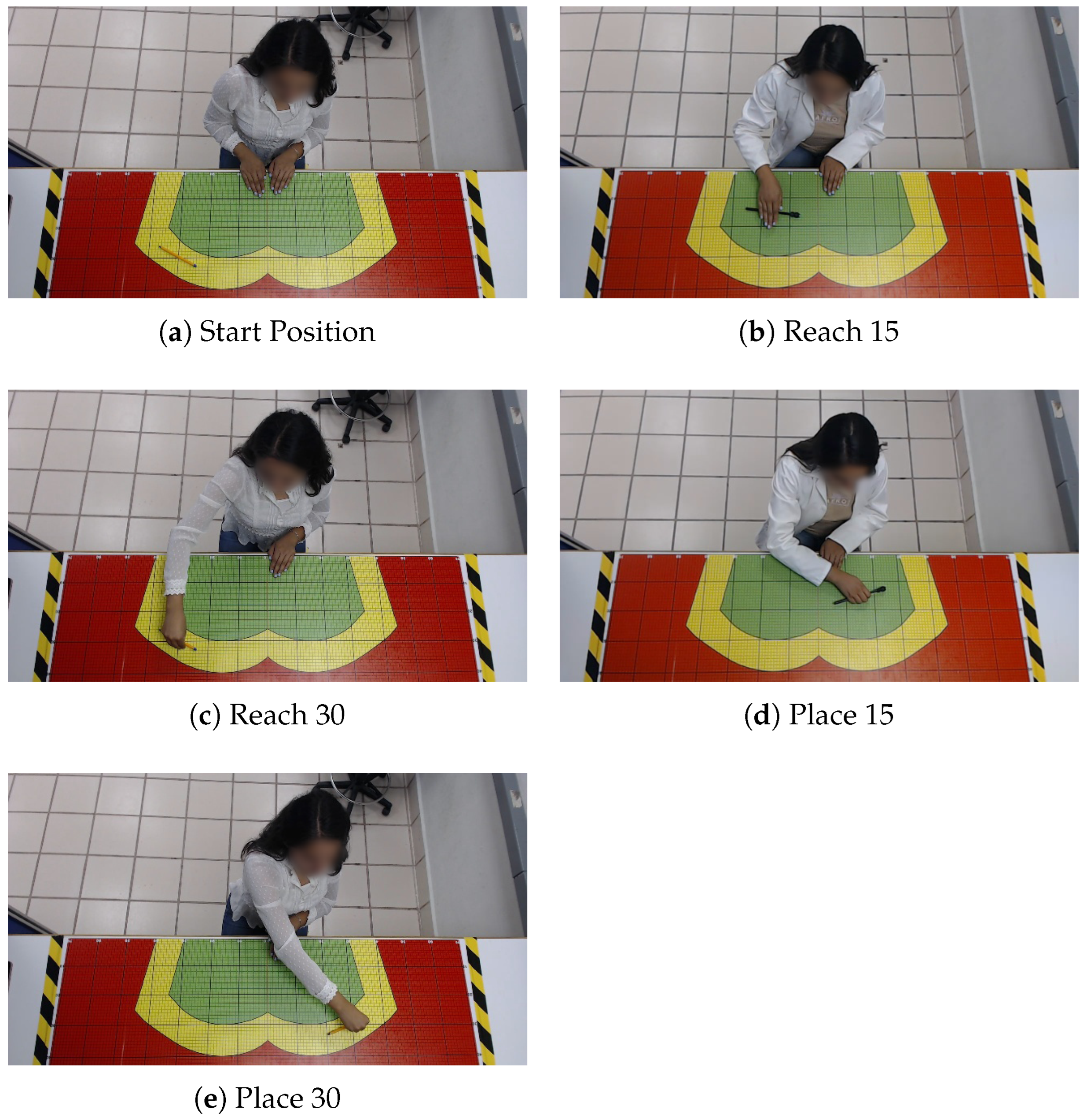

To obtain a variety of movements, two experienced operators (one male and one female) were involved in the capture process, and a benchmark was established during this proof-of-concept phase. The operators were instructed to perform 60 repetitions of each of the five MTM-2 motion patterns (Start Position, Reach 15 cm, Reach 30 cm, Place 15 cm, and Place 30 cm) in a randomized sequence to prevent ordering effects. Each video was recorded at 30 FPS and a resolution of pixels; 60 videos per operator were processed for each motion pattern.

3.2. Feature Extraction

The analytical core of the proposed system lies in its feature extraction pipeline, which transforms raw visual data into quantifiable biomechanical parameters. This stage is crucial for the entire methodology, as the accuracy of subsequent motion classification directly depends on the quality of the extracted features. It also represents one of the main contributions of this work, since the characterization is explicitly aligned with MTM-2 standards.

The process begins with human pose detection using the MobileNetV2 CNN architecture, selected for its proven effectiveness in both hand tracking and full-body pose estimation [9]. The model identifies key landmarks $P_i$, each represented by normalized $(x_i, y_i)$ coordinates relative to the image width $W$ and height $H$. Cartesian coordinates are then obtained by scaling each landmark as $X_i = x_i \cdot W$ and $Y_i = y_i \cdot H$. Based on the motions defined in MTM-2, this study focused on the upper-body joints most directly involved in manual assembly tasks: left shoulder, right shoulder, left elbow, right elbow, left wrist, right wrist, left thumb, tip of the left pinky, and tip of the right pinky, as illustrated in Figure 1.

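After detecting these keypoints, six distinct angular features are derived to characterize the observed motion patterns. Each angle $\theta_j$ is calculated from three key points $(A, B, C)$, with $B$ at the joint vertex. In this way, the feature vector is formed as follows:

$$\mathbf{f} = [\theta_1, \theta_2, \theta_3, \theta_4, \theta_5, \theta_6]$$

where

$\theta_1, \theta_2$: elbow angles (shoulder–elbow–wrist);

$\theta_3, \theta_4$: shoulder angles (hip–shoulder–elbow);

$\theta_5, \theta_6$: wrist angles (elbow–wrist–pinky).

The angles are calculated as follows:

$$\theta_j = \arccos\left(\frac{(A - B) \cdot (C - B)}{\lVert A - B \rVert \, \lVert C - B \rVert}\right)$$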

These crucial angular metrics typically encompass the dynamic relationships and ranges of motion observed at the shoulder, elbow, and wrist joints, which are paramount to characterizing manual assembly tasks. Furthermore, the methodology incorporates the analysis of operator trajectories within the two-dimensional plane, providing comprehensive kinematic information essential for distinguishing between various movements. This granular and objective extraction of articular features is fundamental to accurately classifying movements in accordance with predetermined time-study standards.
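The landmark scaling and the three-point angle computation described in this section can be sketched as follows. This is a minimal illustration using the dot-product form of the angle at the middle point; the original implementation may use an equivalent formulation, and the function names and pixel coordinates are assumptions for the example.

```python
import math


def to_pixels(x_norm, y_norm, width, height):
    """Scale normalized landmark coordinates to pixel coordinates."""
    return x_norm * width, y_norm * height


def joint_angle(a, b, c):
    """Return the angle in degrees at vertex b formed by points a-b-c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        return float("nan")  # degenerate case: two landmarks coincide
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


# Hypothetical shoulder, elbow, and wrist positions in pixels.
shoulder, elbow, wrist = (100.0, 100.0), (100.0, 200.0), (200.0, 200.0)
elbow_angle = joint_angle(shoulder, elbow, wrist)
```

Applying `joint_angle` to the three landmark triplets on each side of the body yields the six-element feature vector used by the classifier.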

Once the feature extraction stage is complete, a dataset is meticulously compiled by manually labeling individual video frames in which specific movements aligned with MTM-2 are performed. The resulting dataset comprises 8919 instances, distributed across five classes and six features. This establishes a robust dataset for training and operating supervised ML algorithms.

3.3. Motion Classification Algorithms

For this work, three candidate lightweight algorithms were tested:

K-Nearest Neighbors (KNN): This operates by inferring class membership through proximity analysis, which calculates the Euclidean distance between query points and training instances, with classification determined by the majority vote among the k nearest neighbors.

Support Vector Machines (SVMs): These employ linear kernel transformations to construct optimal hyperplanes that maximize separation between motion classes in feature space, implementing the structural risk minimization principle.

Shallow Neural Networks (SNNs): These utilize a single-hidden-layer architecture to learn non-linear decision boundaries through iterative weight optimization, employing early stopping to prevent overfitting while capturing complex pattern relationships.

Performance assessment incorporates multiple metrics, including accuracy, computational efficiency, and generalization capability. This standardized evaluation protocol ensures objective algorithm comparison while maintaining reproducibility and methodological rigor throughout the experimental process.

4. Experimental Design

The experimental design is divided into two stages: (1) a comparison is made between KNN, SVM, and SNN to determine the best-performing algorithm, and (2) more in-depth experimentation is performed with the chosen algorithm to analyze its robustness under noisy conditions.

The entire experimental setup and the execution of the algorithmic processes are carried out within a computing environment configured with Windows 11 OS, 16 GB of RAM, and a Ryzen 5 processor. The core software tools employed include Python (3.14.0) for scripting and overall control, OpenCV for robust video processing functionalities, and Scikit-learn for implementing the classifiers.

Figure 2 shows an example of the motion patterns based on the MTM-2, for the upper body.

4.1. Comparison of Classification Algorithms

This section presents a comprehensive comparative analysis of three lightweight classification algorithms: KNN, SVM, and SNN. The analysis follows a double-validation protocol that ensures a rigorously controlled evaluation of predictive performance. Initially, the dataset underwent a stratified 80–20% split, preserving the original class distribution in both subsets and reserving only 20% as the final test set for the final evaluation. Ten-fold stratified cross-validation was applied to the training set (80%), providing a robust estimate of expected performance through multiple internal training-validation iterations that mitigate the risk of overfitting and quantify the error variance. The models were subsequently retrained using the entire training set to maximize their learning capacity, and finally evaluated against the previously isolated test set, thus providing an uncontaminated measure of their generalization ability on genuinely unseen data.
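The double-validation protocol above (a stratified hold-out split followed by stratified k-fold cross-validation on the training portion) can be sketched in plain Python as shown below. The study itself used Scikit-learn, so this standard-library version is only an illustration of the index bookkeeping; the function names and the toy label counts are hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_fraction=0.2, seed=0):
    """Split indices into train/test while preserving class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)


def stratified_folds(labels, indices, n_folds=10, seed=0):
    """Deal the given indices into n_folds folds, class by class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx in indices:
        by_class[labels[idx]].append(idx)
    folds = [[] for _ in range(n_folds)]
    for members in by_class.values():
        rng.shuffle(members)
        for pos, idx in enumerate(members):
            folds[pos % n_folds].append(idx)
    return folds


# Toy labels with an uneven class distribution (not the study's dataset).
labels = ["Start"] * 50 + ["Reach15"] * 30 + ["Place30"] * 20
train_idx, test_idx = stratified_split(labels)
folds = stratified_folds(labels, train_idx)
```

Each fold serves once as the validation set while the other nine are used for fitting; the held-out test indices are never touched until the final evaluation.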

All models were subjected to the same feature scaling methodology using MinMaxScaler to normalize the input features between 0 and 1, ensuring identical input conditions for optimal performance assessment.

The algorithmic configuration was carefully established to represent each model’s fundamental characteristics while maintaining computational fairness. The KNN implementation utilized a single neighbor (k = 1), optimizing for local pattern recognition without distance weighting. The SVM employed a linear kernel configuration, providing a robust linear classifier with default regularization parameters. The SNN architecture consisted of a single hidden layer containing 50 neurons, configured with a maximum of 1000 iterations and early stopping enabled to prevent overfitting while ensuring convergence. All models maintained their respective default parameters for other hyperparameters to ensure comparability and avoid artificial performance enhancement through extensive tuning.
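To make the selected configuration concrete, the sketch below implements min-max scaling followed by a single-neighbor (k = 1) Euclidean classifier in plain Python. It is a stand-in for the Scikit-learn components named in the text, not the authors' code, and the six-angle training rows are invented values used only to exercise the functions.

```python
import math


def fit_min_max(rows):
    """Return per-feature minima and maxima from the training rows."""
    columns = list(zip(*rows))
    return [min(c) for c in columns], [max(c) for c in columns]


def apply_min_max(row, mins, maxs):
    """Scale one feature vector to [0, 1] using the fitted ranges."""
    return [
        (v - lo) / (hi - lo) if hi > lo else 0.0
        for v, lo, hi in zip(row, mins, maxs)
    ]


def predict_1nn(train_rows, train_labels, query):
    """Return the label of the single nearest training row (k = 1)."""
    distances = [math.dist(row, query) for row in train_rows]
    return train_labels[distances.index(min(distances))]


# Hypothetical six-angle feature vectors (degrees), one per class.
train_rows = [
    [170, 172, 10, 12, 175, 178],
    [120, 168, 40, 11, 170, 176],
    [95, 165, 70, 12, 160, 175],
]
train_labels = ["Start Position", "Reach 15 cm", "Reach 30 cm"]

mins, maxs = fit_min_max(train_rows)
scaled_train = [apply_min_max(r, mins, maxs) for r in train_rows]
query = apply_min_max([118, 169, 42, 11, 171, 177], mins, maxs)
predicted = predict_1nn(scaled_train, train_labels, query)
```

With k = 1 there is no model fitting beyond storing the scaled training rows, which is consistent with the very short training time reported for KNN; the cost is paid at inference, where each query is compared against every stored row.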

The performance metrics included stratified cross-validation accuracy to measure generalization capability, test set accuracy to evaluate real-world performance, training computational efficiency to assess operational feasibility, and inference timing to determine real-time applicability. This multi-faceted approach ensured that the comparison captured not only predictive accuracy but also practical implementation considerations crucial for deployment in resource-constrained environments.

Results and Analysis: Stage 1

Table 1 shows the results of the performance comparison between KNN, SVM, and SNN to determine the best-performing algorithm according to the purposes of this study.

The empirical evaluation of classification algorithms revealed distinct performance characteristics across different paradigms. Among the tested algorithms, KNN demonstrated exceptional computational efficiency during both the training and inference phases, achieving a remarkable predictive accuracy on the test data while requiring only 0.0062 s for training. This combination of high accuracy and minimal computational overhead proved decisive for the current application, which demands real-time processing capabilities. Consequently, KNN was selected for implementation in the subsequent experimental phases due to its optimal balance between performance and operational speed, essential for meeting the system’s temporal constraints.

It is important to emphasize that this selection criterion was driven specifically by the real-time requirements of the present application rather than by absolute algorithmic superiority. We acknowledge that alternative approaches offer complementary advantages that may become more relevant as system complexity evolves. The SVM demonstrated robust generalization capabilities with 99.48% cross-validation accuracy, while the SNN showed promising adaptability to more complex pattern recognition tasks despite slightly lower initial performance.

Looking ahead to future implementations, these results showed that both SVM and SNN represent viable alternatives for scenarios where problem complexity may increase beyond the current feature space. This forward-looking design approach provides flexibility to address potential dimensional expansions, increased feature complexity, or more sophisticated pattern recognition challenges.

4.2. Robustness Analysis of KNN Algorithm Under Noisy Conditions

The results of the previous analysis showed that KNN was the best-performing algorithm under ideal laboratory conditions. For this reason, we conducted rigorous stress tests to establish its operating limits in real-world scenarios where sensor noise, measurement errors, and environmental variability are unavoidable, and the results are given in this section. This study aims to systematically quantify KNN’s degradation patterns under controlled noise conditions, providing empirical evidence for its practical limitations and establishing clear failure thresholds.

The robustness evaluation employs a controlled noise-injection methodology where Gaussian noise with zero mean and a progressively increasing standard deviation $\sigma$ (up to $\sigma = 0.50$, in fixed increments) is introduced to the feature vectors. Each noise level undergoes five independent experimental runs to account for stochastic variations. The experimental design maintains consistent stratification across all noise conditions to preserve class distribution integrity, with an 80–20% train–test split protocol repeated for each noise level iteration.

Four critical performance metrics are simultaneously monitored: accuracy (overall classification correctness), precision (class-specific reliability), recall (completeness of class detection), and F1-score (harmonic mean balancing precision and recall). The evaluation incorporates weighted averaging to account for class imbalance, ensuring a comprehensive assessment across all motion categories. Statistical variability is quantified through standard deviation measurements across multiple runs, providing confidence intervals for each performance metric.

The study maintains several control measures: identical random seeds across noise levels for reproducible comparisons, preserved feature scaling using MinMaxScaler after noise injection to prevent data leakage, and consistent KNN hyperparameters ($k = 1$) throughout all experiments to isolate noise effects from parameter optimization.
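The noise-injection procedure can be illustrated with the following minimal sketch. It uses synthetic, well-separated 2D clusters rather than the study's six-angle dataset, omits the re-scaling step, and replaces the 80–20% split with a simple alternating split, so the numbers it produces are not comparable to the reported results. All names and the cluster layout are assumptions made for the example.

```python
import math
import random
import statistics


def make_clusters(rng, per_class=40):
    """Generate three tight synthetic clusters in the unit square."""
    centers = {"A": (0.2, 0.2), "B": (0.8, 0.2), "C": (0.5, 0.8)}
    rows, labels = [], []
    for label, (cx, cy) in centers.items():
        for _ in range(per_class):
            rows.append([cx + rng.uniform(-0.05, 0.05),
                         cy + rng.uniform(-0.05, 0.05)])
            labels.append(label)
    return rows, labels


def add_gaussian_noise(rows, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation sigma."""
    return [[v + rng.gauss(0.0, sigma) for v in row] for row in rows]


def accuracy_1nn(rows, labels):
    """Alternate samples into train/test and score a k = 1 classifier."""
    pairs = list(zip(rows, labels))
    train, test = pairs[0::2], pairs[1::2]
    hits = 0
    for query, truth in test:
        nearest = min(train, key=lambda item: math.dist(item[0], query))
        hits += nearest[1] == truth
    return hits / len(test)


def noise_sweep(sigmas, runs=5, seed=0):
    """Return {sigma: (mean accuracy, standard deviation)} over the runs."""
    results = {}
    for sigma in sigmas:
        scores = []
        for run in range(runs):
            rng = random.Random(seed + run)  # same seeds at every noise level
            rows, labels = make_clusters(rng)
            noisy = add_gaussian_noise(rows, sigma, rng)
            scores.append(accuracy_1nn(noisy, labels))
        results[sigma] = (statistics.mean(scores), statistics.stdev(scores))
    return results


results = noise_sweep([0.0, 0.1, 0.3, 0.5])
```

Reusing the same seeds at every noise level means that differences between levels come from the noise magnitude rather than from a different draw of the underlying data.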

Results and Analysis: Stage 2

In this section, the results of an in-depth evaluation of KNN are presented, focusing on its robustness under noisy conditions. The overall outcomes are summarized in Table 2.

The KNN algorithm demonstrated remarkable robustness against increasing noise levels, maintaining near-perfect performance up to a noise level of 0.10 with only 0.20% accuracy degradation. This stability indicates that the algorithm can effectively handle moderate sensor noise and measurement errors without significant performance loss.

Between noise levels of 0.10 and 0.30, a gradual but steady decline in performance is observed. The accuracy decreased from 99.78% to 94.61%, representing a manageable degradation of approximately 5% over this substantial noise increase. This linear degradation pattern suggests predictable behavior under suboptimal conditions, which is valuable for system reliability planning.

The most significant performance drop occurred between noise levels of 0.30 and 0.50, where accuracy declined from 94.61% to 79.64%, a drop of about 15 percentage points. This steeper degradation indicates that beyond a noise level of 0.30, the algorithm begins to struggle with the increasing signal distortion. However, even at the highest noise level tested (0.50), the algorithm maintained 79.64% accuracy, demonstrating substantial resilience to extreme noise conditions.
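The change in slope can be made explicit with a short calculation on the accuracies quoted above. The helper name `drop_and_slope` is introduced here for illustration only.

```python
# Accuracy (%) at the noise levels quoted in the text.
accuracy = {0.10: 99.78, 0.30: 94.61, 0.50: 79.64}

def drop_and_slope(lo, hi):
    """Return the accuracy drop in percentage points and the drop per 0.01 of noise."""
    drop = accuracy[lo] - accuracy[hi]
    return drop, drop / ((hi - lo) * 100)

moderate = drop_and_slope(0.10, 0.30)  # about 5.17 points, 0.26 points per 0.01
severe = drop_and_slope(0.30, 0.50)    # about 14.97 points, 0.75 points per 0.01
ratio = severe[0] / moderate[0]        # the second interval is roughly 2.9 times steeper
```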

Notably, all four metrics (accuracy, precision, recall, and F1-score) showed nearly identical values and parallel degradation patterns throughout the experiment. This consistency indicates that noise affects all aspects of classification performance equally, without introducing particular biases toward false positives or false negatives.

5. Real-Time Model Validation in MTM-2 Motions

Once the model is trained, it is validated by classifying movements according to MTM-2 in real time. The data obtained by the framework are used to interpret the movements from an ergonomic perspective and to provide a recommendation.

5.1. Neutral Position

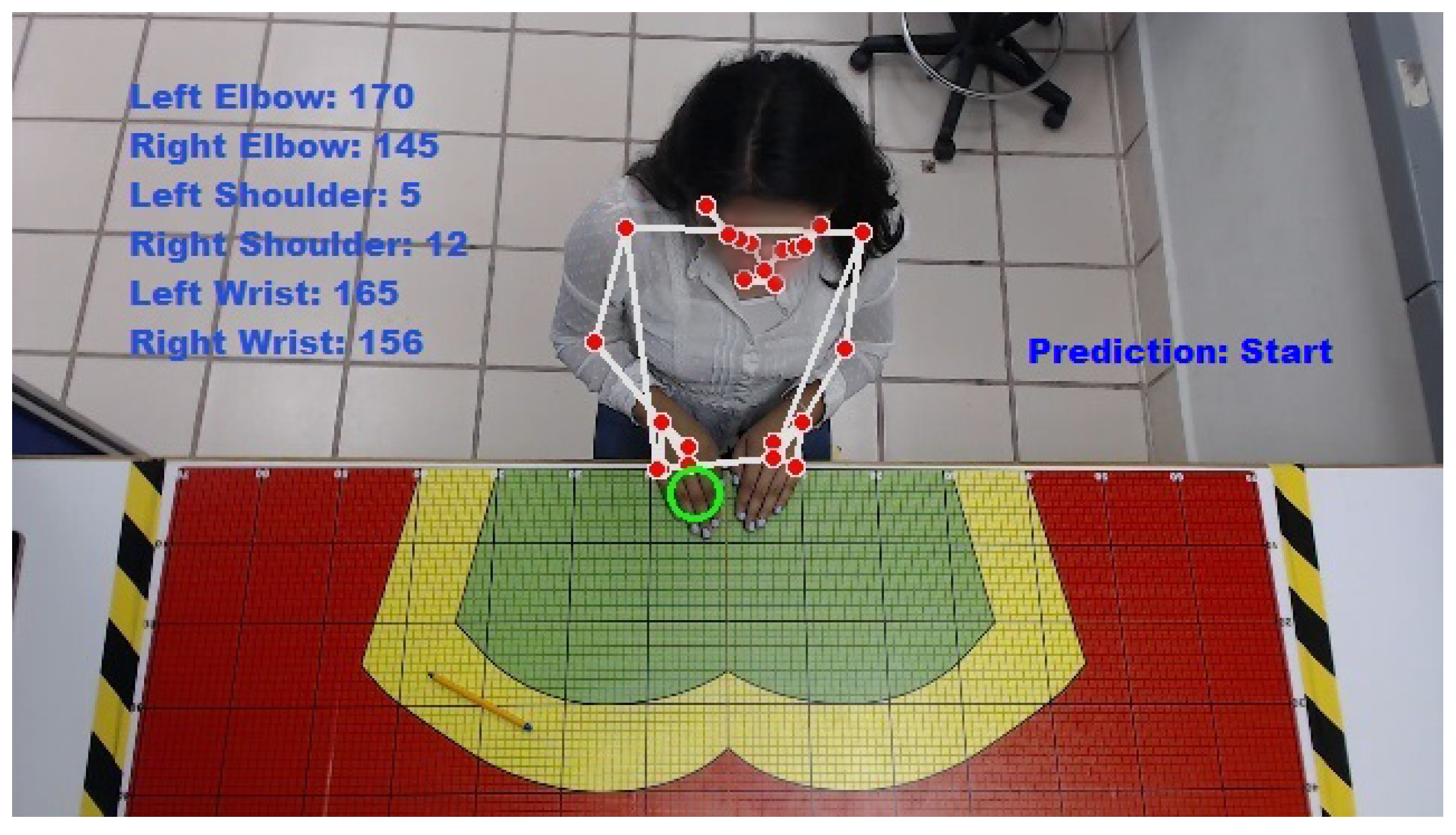

Figure 3 shows an operator in the Start position. The data obtained for the reference neutral posture are presented in Table 3.

An analysis of the neutral starting posture depicted in Figure 3 revealed key insights into the operator’s baseline alignment with MTM-2 assumptions. While most joints were within expected tolerances, a notable deviation was detected in the right elbow, which exhibited a 145° angle, just outside the optimal extension range of 150–180°. This slight flexion suggests that the operator was not in a fully relaxed and standardized initial pose, potentially due to habit or workstation setup. Furthermore, while both shoulder angles were within the 0–20° rest range, a 7° asymmetry was observed between the left (5°) and right (12°) sides. These deviations, though minor, have practical implications for time-study accuracy. A consistently flexed elbow at the start of a Reach motion could lead to a slight but measurable increase in the observed Time Measurement Units (TMUs), as the movement does not start from the most biomechanically efficient position. Similarly, shoulder asymmetry could introduce unpredictable variances in bilateral movements, compromising the consistency of predefined time standards. Therefore, it is recommended to implement an initial calibration step in which the system verifies the neutral posture before commencing analysis, providing immediate feedback to the operator for correction and ensuring that all motions originate from a validated baseline.
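The recommended calibration step could look like the following sketch, which checks the reported neutral-posture angles against the ranges quoted above. The function name, the joint naming scheme, and the 5° asymmetry tolerance (`max_asymmetry`) are assumptions made for illustration; the study does not specify them.

```python
# Acceptable neutral ranges in degrees, as quoted in the text.
NEUTRAL_RANGES = {"elbow": (150.0, 180.0), "shoulder": (0.0, 20.0)}

def check_neutral(angles, max_asymmetry=5.0):
    """Return a list of issues; an empty list means the pose passes.

    `angles` maps names such as "right_elbow" to degrees. `max_asymmetry` is a
    hypothetical tolerance for left/right differences, not a value from the study.
    """
    issues = []
    for name, value in angles.items():
        joint = name.split("_", 1)[1]
        low, high = NEUTRAL_RANGES[joint]
        if not low <= value <= high:
            issues.append(f"{name}: {value:g} deg outside {low:g}-{high:g} deg")
    for joint in NEUTRAL_RANGES:
        left, right = angles.get(f"left_{joint}"), angles.get(f"right_{joint}")
        if left is not None and right is not None and abs(left - right) > max_asymmetry:
            issues.append(f"{joint}: {abs(left - right):g} deg left/right asymmetry")
    return issues

# Values reported for the operator in Figure 3 (left elbow not quoted, so omitted).
observed = {"right_elbow": 145.0, "left_shoulder": 5.0, "right_shoulder": 12.0}
issues = check_neutral(observed)
```

With these inputs the check reports the flexed right elbow and the 7° shoulder asymmetry, which is the feedback an operator would receive before analysis starts.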

5.2. Movement-in-Progress Analysis (Intermediate Phase)

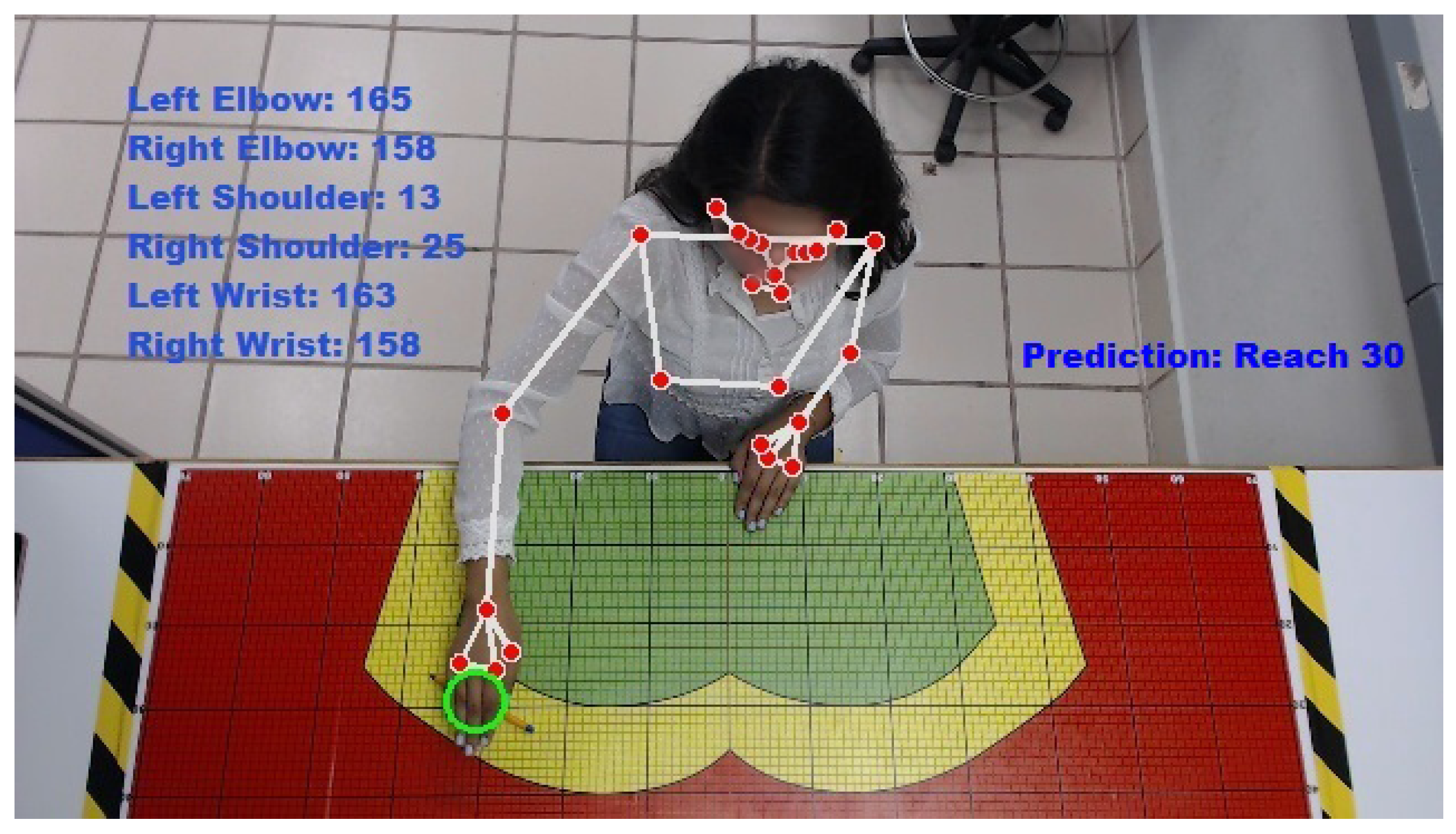

The data for the intermediate movement phase are shown in Table 4 and illustrated in Figure 4.

The analysis of the intermediate movement phase (Table 4 and Figure 4) provides a dynamic view of the kinematic chain in action. The data indicate a clear transition towards a Reach motion, primarily driven by the right arm. The right elbow extended by 13° from its suboptimal neutral position (145°) to 158°, now well within the efficient extension range. Concurrently, the right shoulder showed a pronounced elevation of +16°, a necessary movement for Reach tasks. The left arm, however, played a compensatory role, with the elbow flexing by 5°. This contralateral movement is a natural biomechanical response for maintaining balance during a unilateral reach, but is often unaccounted for in simplified time-motion models. The coordinated motion of both arms underscores the complexity of upper-body kinematics, where a primary action can induce secondary compensatory motions that may contribute to overall fatigue. This highlights the system’s capability to capture not only the primary task but also the ancillary movements that form the complete ergonomic picture.

5.3. MTM-2 and Ergonomic Interpretation

The system differentiates between intentional motion variations and genuine ergonomic risks through a multi-factor analysis that incorporates temporal context and task phase. While the feature extraction operates on individual frames, risk assessment is performed over a sliding window of frames corresponding to the execution of a specific MTM motion element (e.g., the entire Reach or Place action).

A posture is flagged as a genuine risk based on two concurrent conditions:

Magnitude of Deviation: The joint angle must exceed a predefined safety threshold (e.g., wrist extension > 165° according to NIOSH guidelines) consistently, not as a transient state.

Temporal Persistence: The anomalous posture must be held for a significant duration of the motion sequence. A brief, extreme angle during a transition is considered a variation; the same angle maintained throughout the Hold or Place phase of a movement is classified as a risk.

For instance, a wrist hyperextension of 170° during the rapid transit of a Reach might be an acceptable variation. However, if the hyperextension is measured at 168° and persists through the final 60% of the Place motion—where the joint should ideally be moving towards a neutral position—it is categorized as a genuine ergonomic risk. This context-aware interpretation is what allows the system to provide actionable alerts rather than generating false positives on normal movement dynamics. Future iterations will formalize this logic into a state machine that explicitly models the phases of each MTM motion.
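The two-condition rule can be sketched as a function over the per-frame angles of one motion element. The 165° threshold comes from the text; the persistence cut-off `min_fraction=0.5`, the frame values, and the function name are hypothetical choices for illustration, and the sketch is not the state machine planned for future iterations.

```python
def classify_posture(angles, threshold=165.0, min_fraction=0.5):
    """Label a window of per-frame joint angles as 'ok', 'variation', or 'risk'.

    `angles` holds one angle (degrees) per frame of a single MTM motion element.
    `min_fraction` is a hypothetical persistence cut-off, not a value from the study.
    """
    if not angles:
        raise ValueError("empty window")
    over = [a > threshold for a in angles]
    if not any(over):
        return "ok"
    # Longest run of consecutive frames above the threshold.
    longest = run = 0
    for flag in over:
        run = run + 1 if flag else 0
        longest = max(longest, run)
    return "risk" if longest / len(angles) >= min_fraction else "variation"

# Reach: a single 170 degree spike during transit (10 frames).
reach = [150, 155, 160, 170, 162, 158, 155, 152, 150, 150]
# Place: 168 degrees held through the final 60% of the motion (10 frames).
place = [150, 155, 160, 162, 168, 168, 168, 168, 168, 168]
labels = (classify_posture(reach), classify_posture(place))
```

The transient spike in the Reach window is labelled a variation, while the sustained 168° in the Place window is labelled a risk, mirroring the example in the text.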

The kinematic data from the intermediate phase allow for simultaneous MTM-2 and ergonomic interpretation, demonstrating the dual utility of the proposed methodology. The observed right-arm kinematics (full elbow extension toward the workstation and significant shoulder elevation) are highly consistent with the MTM-2 code R20A (Reach 20 cm). However, the ergonomic assessment revealed risk factors embedded within this otherwise standard motion. The right wrist angle of 168° at the workstation exceeds the NIOSH-recommended safe limit of 165°, increasing the risk of Work-Related Musculoskeletal Disorders (WMSDs). Furthermore, the 15° asymmetry in shoulder elevation suggests an uneven load distribution, potentially leading to long-term strain on the dominant side. From a time-study perspective, these inefficiencies manifest quantitatively: the actual motion required 3.2 TMUs, a 14% increase over the theoretical 2.8 TMUs for an optimal R20A. This deviation can be attributed to the suboptimal postural adjustments and the deceleration required to control the hyperextended wrist at the endpoint of the movement. Consequently, the system recommends not only classifying the motion but also flagging it for ergonomic intervention, such as workstation redesign to bring objects closer and eliminate the need for extreme wrist angles.
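The time-study figures can be checked with a short calculation. It uses the standard conversion 1 TMU = 0.036 s; the function name `tmu_deviation` is introduced here for illustration.

```python
TMU_SECONDS = 0.036  # 1 TMU = 0.00001 h = 0.036 s

def tmu_deviation(observed_tmu, standard_tmu):
    """Return (excess in TMU, excess in seconds, relative excess in percent)."""
    excess = observed_tmu - standard_tmu
    return excess, excess * TMU_SECONDS, 100.0 * excess / standard_tmu

# Observed 3.2 TMU against the theoretical 2.8 TMU quoted for R20A.
excess_tmu, excess_s, excess_pct = tmu_deviation(3.2, 2.8)
```

The excess is 0.4 TMU, or about 0.014 s per motion, and the relative excess is about 14.3%, which the text rounds to 14%.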

5.4. Posture Analysis with Joint Anomaly

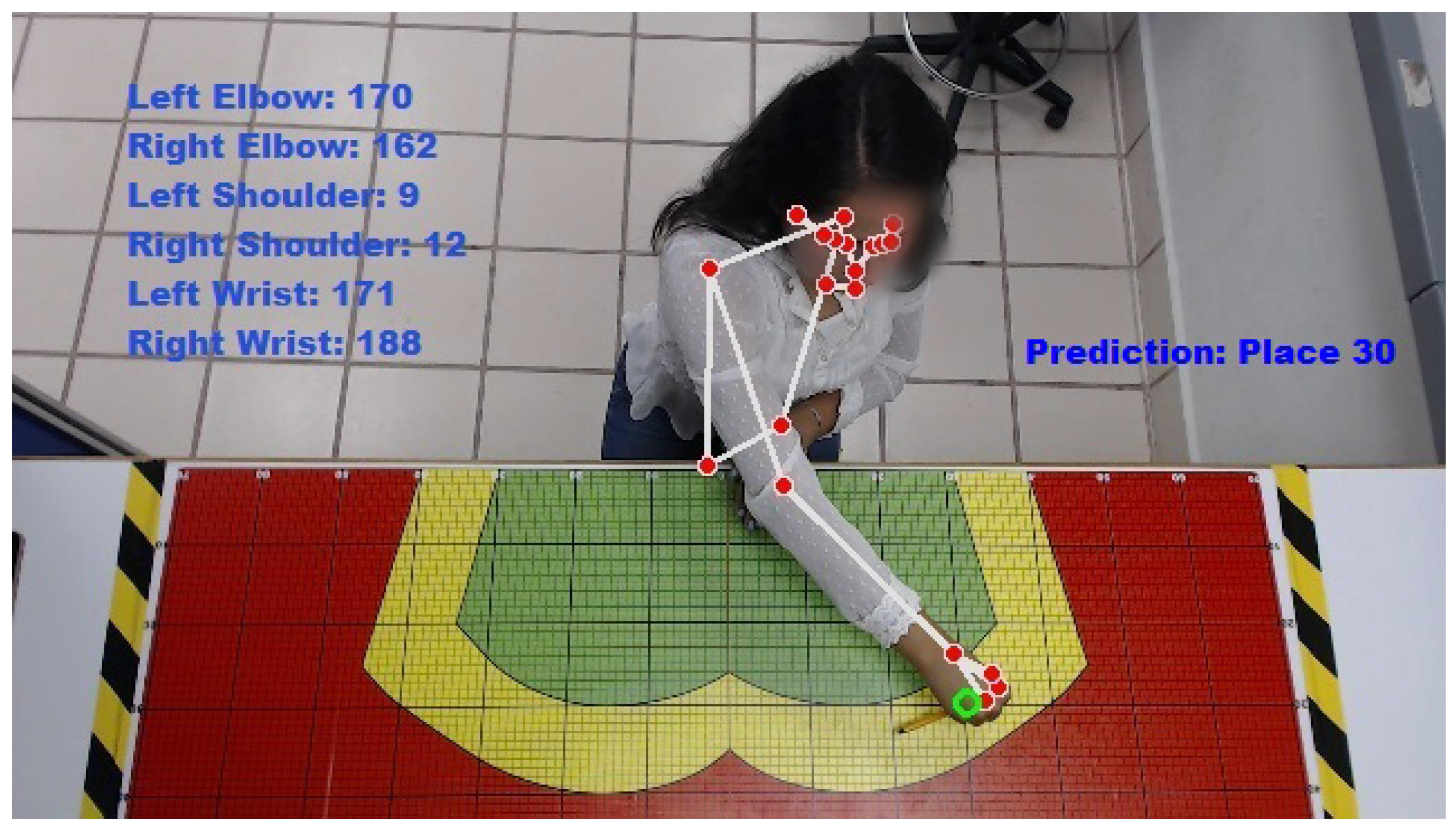

Figure 5 shows the body parts that were recognized as having an anomalous posture, and Table 5 lists the corresponding joint data.

5.5. Biomechanical Interpretation

Figure 5 and Table 5 present a critical use case of the system: detecting a severe ergonomic anomaly that would likely be missed in a manual observation. While most joints, including the left wrist at 171°, were within their normal functional ranges, the right wrist was measured at a severe extension of 188°. This angle exceeds the safe anatomical limit of 180° and represents a critical hyperextension risk, as defined by the NIOSH guidelines. The most probable cause is a forced, unnatural movement to reach a distant or poorly located object without adequate body adjustment. From an MTM-2 perspective, this anomaly disrupts standard classification. The excessive time recorded (5.2 TMUs vs. a theoretical 3.5 TMUs) and the aberrant posture create ambiguity between a P2 (Place with adjustment) and a G2 (Complex grasp) code. This illustrates a scenario where ergonomic risk and motion standard complexity are intrinsically linked. The immediate industrial recommendation would be (1) to redesign the workstation ergonomics to eliminate the need for such extreme reaches, and (2) to consider implementing auxiliary supports or tools to maintain a neutral wrist posture. This case underscores the system’s value in proactively identifying high-risk movements that directly impact both worker health and process efficiency.

6. Discussion and Limitations

This work introduces a novel CV-based methodology, demonstrating its considerable efficacy in the automated classification of motions within industrial settings. Under this experimental design, our findings, particularly the attainment of 100% classification accuracy across the Start, Reach, and Place motions on a substantial test dataset, underscore the system’s strong potential for practical deployment. This performance was achieved by systematically extracting angular kinematic features from key anatomical points (shoulder, elbow, and wrist), which proved to be highly discriminative descriptors of fundamental movements. The optimized KNN model outperformed more complex alternatives such as SVM and SNN. A critical advantage of the proposed system lies in its operational efficiency and noise robustness, which support reliable operation in real-world industrial environments where sensor imperfections and measurement variability are unavoidable.
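One common way to obtain an angular feature from three keypoints is the angle between the two limb segments meeting at the middle joint. The sketch below assumes 2D pixel coordinates and this vector-based definition; the exact angle convention used by the framework is not stated here, so the function and the coordinates are illustrative only.

```python
import math

def joint_angle(a, b, c):
    """Angle at vertex b (degrees) between segments b->a and b->c, for 2D points."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        raise ValueError("coincident keypoints")
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    # Clamp to [-1, 1] to guard against floating-point overshoot before acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))

# Hypothetical pixel coordinates for shoulder, elbow, and wrist.
straight = joint_angle((0, 0), (0, 100), (0, 200))        # fully extended arm
right_angle = joint_angle((0, 0), (0, 100), (100, 100))   # forearm perpendicular
```

This definition yields values between 0° and 180°; reporting angles beyond 180° would require a signed or otherwise extended convention.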

Moreover, once the model is trained, MTM-2 motion classification can be performed in real time, as supported by a per-sample inference time of 1 microsecond. This suggests that the methodology could significantly reduce analysis time compared to manual transcription in a traditional MTM-2 study. This reduction could enhance scalability and enable continuous time studies without interfering with production, offering substantial economic value, especially for SMEs. Beyond efficiency, the system also provides proactive ergonomic insights, automatically detecting critical joint anomalies such as wrist hyperextension beyond 165°, which are known risk factors for musculoskeletal disorders. Additionally, the algorithms showed resilience to minor asymmetries and variations in motion speed, supporting their robustness for dynamic industrial environments.

When contrasting these results with previous studies, a clear advancement is evident. VR-based approaches to MTM transcription, such as those by Gorobets et al. [3] and Andreopoulos et al. [20], achieved accuracies in the range of 85–94% under their experimental designs, but faced limitations due to participants’ slower performance in VR environments and the need for predetermined timings. Our method, applied directly to real video streams, avoids dependencies on hard-coded data sequences or incomplete body tracking (e.g., Kinect-based systems). Furthermore, it addresses the gaps identified in the work of Noamna et al. [5], where the integration of Lean and MTM-2 improved productivity, but MTM analysis remained largely manual. Similarly, Urban et al. [4] observed difficulties in identifying reliable ML models for SMEs. Our methodology demonstrates a validated and specific solution for a key component of production time estimation.

Despite the effectiveness of our method, several limitations must be acknowledged. First, the experiments were conducted under controlled laboratory conditions with a limited set of motion classes, which may overestimate performance compared to real-world environments characterized by greater variability, noise, and complexity. The system also showed sensitivity to abrupt lighting changes and may require calibration for operators with extreme anthropometric differences. While KNN proved highly effective and noise-robust in the present scenario, its scalability is limited: as additional MTM motions or compound actions are introduced, classification boundaries will become more complex, potentially necessitating the adoption of more advanced models such as SVMs or SNNs. This is precisely why the framework was designed to be modular, allowing the substitution of KNN with more sophisticated classifiers as needed. A critical consideration for scalability is the model’s performance when confronted with real-world complexities; specifically, three major challenges can be anticipated: partial occlusions (e.g., by workpieces, machinery, or the operator’s own body), significant variability in lighting conditions, and the introduction of a more extensive repertoire of MTM motions, including compound actions.
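The modularity argument can be illustrated with a small interface sketch: the pipeline depends only on `fit` and `predict`, so one classifier can be swapped for another without touching the rest. All class names, the placeholder `k=3`, the feature values, and the nearest-centroid stand-in are assumptions for illustration and do not reproduce the framework's actual code.

```python
from collections import Counter
from typing import Protocol, Sequence

Features = Sequence[float]

class MotionClassifier(Protocol):
    """Minimal interface a classifier must offer to plug into the pipeline."""
    def fit(self, X: Sequence[Features], y: Sequence[str]) -> None: ...
    def predict(self, x: Features) -> str: ...

def _sq_dist(a: Features, b: Features) -> float:
    return sum((p - q) ** 2 for p, q in zip(a, b))

class KNNClassifier:
    def __init__(self, k: int = 3) -> None:  # k is a placeholder value
        self.k = k
    def fit(self, X, y) -> None:
        self._rows = list(zip(X, y))
    def predict(self, x) -> str:
        nearest = sorted(self._rows, key=lambda r: _sq_dist(r[0], x))[: self.k]
        return Counter(label for _, label in nearest).most_common(1)[0][0]

class CentroidClassifier:
    """Stand-in for an alternative model; here simply nearest class centroid."""
    def fit(self, X, y) -> None:
        groups = {}
        for x, label in zip(X, y):
            groups.setdefault(label, []).append(x)
        self._centroids = {lab: [sum(col) / len(col) for col in zip(*rows)]
                           for lab, rows in groups.items()}
    def predict(self, x) -> str:
        return min(self._centroids, key=lambda lab: _sq_dist(self._centroids[lab], x))

def run_pipeline(model: MotionClassifier, X, y, query) -> str:
    """The pipeline depends only on the interface, not on the concrete model."""
    model.fit(X, y)
    return model.predict(query)

# Hypothetical (shoulder, elbow) angle features in degrees.
X = [(5, 150), (8, 155), (6, 148), (28, 158), (30, 162), (27, 160)]
y = ["Start", "Start", "Start", "Reach", "Reach", "Reach"]
predictions = [run_pipeline(m, X, y, (29, 159))
               for m in (KNNClassifier(), CentroidClassifier())]
```

Both models classify the query as a Reach here; in a real system an SVM or neural model would implement the same two methods.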

While the current feature extraction pipeline, based on robust angular kinematics, provides a degree of invariance to slight changes in viewpoint and appearance, performance may degrade under severe occlusion where key joints are not visible. Similarly, the model’s reliance on a pre-trained pose estimation network makes it susceptible to errors caused by extreme shadows or low-light environments, which could lead to inaccurate keypoint detection. Furthermore, while the KNN algorithm proved optimal for the five distinct classes analyzed, its performance and computational efficiency may not scale linearly with a higher number of more semantically similar motion classes (e.g., distinguishing between Grasp (G1, G2, G3) and Apply Pressure).

Addressing these limitations constitutes the immediate focus of our future work. Our strategy for enhancing generalization involves a multi-faceted approach: (1) curating a significantly more diverse dataset captured in active manufacturing environments, incorporating multiple operators, varying lighting, and controlled occlusion scenarios to improve model robustness; (2) developing a temporal reasoning module, potentially based on Recurrent Neural Networks (RNNs) or transformers, to interpret motion sequences rather than relying solely on static postural frames, which would mitigate errors from temporary occlusions or isolated misdetected frames; and (3) exploring more sophisticated classification algorithms, such as SVMs or SNNs, which may offer better generalization and higher discriminative power for a larger set of classes without a prohibitive increase in computational cost. This modular evolution of the system is aligned with the overarching goal of creating a deployable tool for SMEs.

7. Conclusions

This study demonstrates the feasibility of applying CV and ML to the automated transcription of MTM-2 motions, achieving near-perfect accuracy in controlled laboratory conditions. The use of angular kinematic features extracted from key anatomical points proved to be highly discriminative, enabling efficient and reliable classification of fundamental motions such as Start, Reach, and Place. The optimized KNN model not only surpassed more complex alternatives, but also provided fast inference times, highlighting its potential for practical integration into industrial workflows.

Beyond efficiency gains, the methodology offers added value by incorporating ergonomic monitoring, automatically detecting postural anomalies that may contribute to musculoskeletal risks. These capabilities position the system as both a productivity-enhancing and a worker-oriented tool, with particular promise for adoption in SMEs.

Nonetheless, the current results are constrained by the controlled nature of the experiments and the limited motion repertoire considered. Real-world industrial environments present additional challenges—such as occlusions, lighting variability, and a broader set of MTM motions—that must be systematically addressed. To this end, future work will prioritize the collection of more diverse datasets, the incorporation of temporal reasoning to capture sequential dynamics, and the exploration of more sophisticated classifiers capable of handling larger and more complex motion sets.

This continuous refinement trajectory aligns with the principles of Industry 5.0, fostering collaboration between human operators and AI systems for sustainable process improvement. In this vision, the methodology extends beyond isolated time studies toward a continuous, closed-loop system in which real-time human performance data inform operational decision-making in the MES and strategic optimization in the Digital Twin. The proposed integration architecture, based on message-oriented middleware and standardized data schemas, offers a roadmap for embedding this tool into the digital backbone of factories of the future.

Based on the findings and limitations of this study, several directions for future work are clearly defined. First, the dataset will be expanded to include a wider variety of MTM motions (e.g., Grasp, Apply Pressure) and more participants with diverse anthropometrics to improve model generalizability. Second, the framework’s robustness will be tested in real operational environments with challenging conditions such as variable lighting and partial occlusions. Third, a recurrent neural network (RNN) module will be developed to capture the temporal dimension of ergonomic risk by analyzing motion sequences rather than relying solely on static postures. Finally, the proposed integration with MES platforms via OPC UA or MQTT will be implemented to demonstrate real-time data exchange for production monitoring and dynamic scheduling.
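As an illustration of what a standardized message for MES integration might look like, the sketch below serializes one classified motion into a JSON payload with an MQTT-style topic. The field names, the topic layout, and the station identifier are hypothetical, and the actual publish step (via an MQTT or OPC UA client) is deliberately left out.

```python
import json
from dataclasses import dataclass, asdict

@dataclass
class MotionEvent:
    station_id: str      # hypothetical identifier of the workstation
    mtm_code: str        # e.g. "R20A"
    observed_tmu: float
    standard_tmu: float
    risk_flags: list     # free-form labels for detected ergonomic risks

def to_message(event: MotionEvent) -> tuple:
    """Return a (topic, payload) pair; the topic layout is an assumed convention."""
    topic = f"factory/{event.station_id}/mtm2/motion"
    payload = json.dumps(asdict(event), sort_keys=True)
    return topic, payload

event = MotionEvent("station-01", "R20A", 3.2, 2.8, ["right_wrist_over_165_deg"])
topic, payload = to_message(event)
```

Keeping the payload as plain JSON with sorted keys makes the message easy to validate against a schema on the MES side, whichever transport is eventually chosen.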

In conclusion, the proposed methodology constitutes a validated proof of concept for automated MTM-2 analysis. Its modular design ensures adaptability to more advanced models and broader industrial contexts, paving the way toward scalable, generalizable, and ergonomically informed solutions that bridge human performance and digital intelligence in factories of the future.

Author Contributions

Conceptualization, A.M.-C. and V.C.-L.; methodology, A.M.-C. and V.C.-L.; software, V.C.-L. and R.S.-M.; validation, A.M.-C., R.S.-M. and M.B.B.-R.; formal analysis, A.M.-C., V.C.-L., A.E.-M., R.S.-M. and M.B.B.-R.; investigation, A.M.-C., M.B.B.-R. and A.E.-M.; resources, A.M.-C. and V.C.-L.; data curation, A.M.-C., V.C.-L. and R.S.-M.; writing – original draft preparation, A.M.-C. and V.C.-L.; writing – review and editing, A.M.-C., V.C.-L. and M.B.B.-R.; translation to English, R.S.-M. and A.E.-M.; visualization, A.M.-C.; supervision, A.M.-C. and V.C.-L.; project administration, A.M.-C.; funding acquisition, A.M.-C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the PRODEP program of the National Institute of Technology of Mexico, with the official Grant No. M00/1958/2024, in the framework of the 2024 PRODEP Support for the Strengthening of Academic Bodies (Research Groups).

Institutional Review Board Statement

This study was conducted in accordance with the Declaration of Helsinki (revised in 2013), and the protocol was reviewed and approved by the Academic Committee of the Tecnológico Nacional de México/Instituto Tecnológico Superior de Purísima del Rincón, as documented in Official Grant No. SA/058/2025, dated 19 September 2025. The Committee recommended anonymizing photographs containing human faces to ensure compliance with Articles 23 and 24 of the Declaration of Helsinki and the General Law on the Protection of Personal Data Held by Individuals in Mexico. All images included in the article were modified to remove identifiable features, and written informed consent was obtained from the individuals involved for the use of their images for scientific dissemination purposes.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data that support the findings of this study are available on request from the corresponding author (A.M.-C.).

Acknowledgments

We thank the PRODEP program of the National Institute of Technology of Mexico for the support provided through 2024 PRODEP Support for the Strengthening of Academic Bodies (Research Groups), authorized through Official Grant No. M00/1958/2024.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Gorobets, V.; Holzwarth, V.; Hirt, C.; Jufer, N.; Kunz, A. A VR-based approach in conducting MTM for manual workplaces. Int. J. Adv. Manuf. Technol. 2021, 117, 2501–2510.

2. Andreopoulos, E.; Gorobets, V.; Kunz, A. Automatic MTM-Transcription in Virtual Reality Using the Digital Twin of a Workplace. Res. Sq. 2022.

3. Gorobets, V.; Billeter, R.; Adelsberger, R.; Kunz, A. Automatic transcription of the Methods-Time Measurement MTM-1 motions in VR. Hum. Interact. Emerg. Technol. (IHIET-AI 2024): Artif. Intell. Future Appl. 2024, 120, 250–259.

4. Urban, M.; Koblasa, F.; Mendřický, R. Machine Learning in Small and Medium-Sized Enterprises, Methodology for the Estimation of the Production Time. Appl. Sci. 2024, 14, 8608.

5. Noamna, S.; Thongphun, T.; Kongjit, C. Transformer Production Improvement by Lean and MTM-2 Technique. ASEAN Eng. J. 2022, 12, 29–35.

6. López Castaño, C.A.; Ferrin, C.D.; Castillo, L.F. Visión por Computador aplicada al control de calidad en procesos de manufactura: Seguimiento en tiempo real de refrigeradores. Rev. EIA 2018, 15, 57–71.

7. Baquero, F.B.; Martínez, D.E. Detección de acción humana para el control de inventario utilizando visión por computadora. Ing. Compet. 2024, 26, e-21813230.

8. Gorobets, V.; Andreopoulos, E.; Kunz, A. Automatic Transcription of the Basic MTM-2 Motions in Virtual Reality. In Progress in IS; Springer: Berlin/Heidelberg, Germany, 2024; pp. 263–266.

9. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

10. Finsterbusch, T.; Petz, A.; Faber, M.; Härtel, J.; Kuhlang, P.; Schlick, C.M. A Comparative Empirical Evaluation of the Accuracy of the Novel Process Language MTM-Human Work Design. Adv. Intell. Syst. Comput. 2016, 490, 147–155.

11. Armstrong, D.P.; Moore, C.A.B.; Cavuoto, L.A.; Gallagher, S.; Lee, S.; Sonne, M.W.L.; Fischer, S.L. Advancing Towards Automated Ergonomic Assessment: A Panel of Perspectives. Lect. Notes Netw. Syst. 2022, 223, 585–591.

12. González-Alonso, J.; Martín-Tapia, P.; González-Ortega, D.; Antón-Rodríguez, M.; Díaz-Pernas, F.J.J.; Martínez-Zarzuela, M. ME-WARD: A multimodal ergonomic analysis tool for musculoskeletal risk assessment from inertial and video data in working places. Expert Syst. Appl. 2025, 278, 127212.

13. Wang, Z.; Wang, W.; Chen, J.; Zhang, X.; Miao, Z. Posture Risk Assessment and Workload Estimation for Material Handling by Computer Vision. Int. J. Intell. Syst. 2023, 2023, 2085251.

14. Kunz, M.; Shu, C.; Picard, M.; Vera, D.A.; Hopkinson, P.; Xi, P. Vision-based Ergonomic and Fatigue Analyses for Advanced Manufacturing. In Proceedings of the 2022 IEEE 5th International Conference on Industrial Cyber-Physical Systems (ICPS), Coventry, UK, 24–26 May 2022.

15. Yang, Z.; Song, D.; Ning, J.; Wu, Z. A Systematic Review: Advancing Ergonomic Posture Risk Assessment through the Integration of Computer Vision and Machine Learning Techniques. IEEE Access 2024, 12, 180481–180519.

16. Kasani, A.A.; Sajedi, H. Predict joint angle of body parts based on sequence pattern recognition. In Proceedings of the 2022 16th International Conference on Ubiquitous Information Management and Communication (IMCOM), Seoul, Republic of Korea, 3–5 January 2022.

17. Cruciata, L.; Contino, S.; Ciccarelli, M.; Pirrone, R.; Mostarda, L.; Papetti, A.; Piangerelli, M. Lightweight Vision Transformer for Frame-Level Ergonomic Posture Classification in Industrial Workflows. Sensors 2025, 25, 4750.

18. Kiraz, A.; Özkan Geçici, A. Ergonomic risk assessment application based on computer vision and machine learning; Bilgisayarlı görü ve makine öğrenmesi ile ergonomik risk değerlendirme uygulaması. J. Fac. Eng. Archit. Gazi Univ. 2024, 39, 2473–2484.

19. Agostinelli, T.; Generosi, A.; Ceccacci, S.; Mengoni, M. Validation of computer vision-based ergonomic risk assessment tools for real manufacturing environments. Sci. Rep. 2024, 14, 27785.

20. Andreopoulos, E.; Gorobets, V.; Kunz, A. Automated Transcription of MTM Motions in a Virtual Environment. Lect. Notes Netw. Syst. 2024, 1012, 243–259.

21. Liang, G.; Chen, F.; Liang, Y.; Feng, Y.; Wang, C.; Wu, X. A Manufacturing-Oriented Intelligent Vision System Based on Deep Neural Network for Object Recognition and 6D Pose Estimation. Front. Neurorobot. 2021, 14, 616775.

22. Zhou, L.; Zhang, L.; Konz, N. Computer Vision Techniques in Manufacturing. IEEE Trans. Syst. Man Cybern. Syst. 2023, 53, 105–117.

23. Ettalibi, A.; Elouadi, A.; Mansour, A. AI and Computer Vision-based Real-time Quality Control: A Review of Industrial Applications. Procedia Comput. Sci. 2024, 231, 212–220.

24. De Feudis, I.; Buongiorno, D.; Grossi, S.; Losito, G.; Brunetti, A.; Longo, N.; Di Stefano, G.; Bevilacqua, V. Evaluation of Vision-Based Hand Tool Tracking Methods for Quality Assessment and Training in Human-Centered Industry 4.0. Appl. Sci. 2022, 12, 1796.

25. Rueckert, P.; Birgy, K.; Tracht, K. Image Based Classification of Methods-Time Measurement Operations in Assembly Using Recurrent Neuronal Networks. In Proceedings of the Advances in System-Integrated Intelligence; Valle, M., Lehmhus, D., Gianoglio, C., Ragusa, E., Seminara, L., Bosse, S., Ibrahim, A., Thoben, K.D., Eds.; Springer International Publishing: Cham, Switzerland, 2023; pp. 53–62.

26. Vukicevic, A.M.; Petrovic, M.N.; Knezevic, N.M.; Jovanovic, K.M. Deep Learning-Based Recognition of Unsafe Acts in Manufacturing Industry. IEEE Access 2023, 11, 103406–103418.

27. Urgo, M.; Berardinucci, F.; Zheng, P.; Wang, L. AI-Based Pose Estimation of Human Operators in Manufacturing Environments. In CIRP Novel Topics in Production Engineering: Volume 1; Tolio, T., Ed.; Springer Nature: Cham, Switzerland, 2024; pp. 3–38.

28. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2323.


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).